A review of deep learning-based multi-focus image fusion methods

Abstract

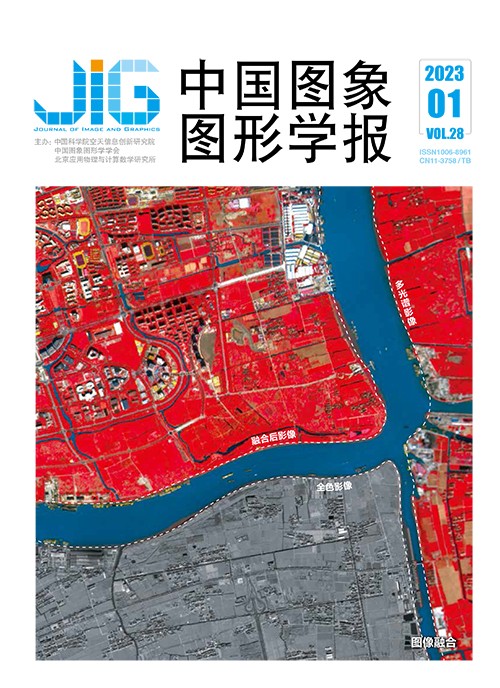

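Multi-focus image fusion is a technique that effectively extends the depth of field of optical lenses in software. By combining the complementary information contained in several partially focused images of the same scene, it generates an all-in-focus fused image that is better suited to human observation or computer processing, and it has wide application value in fields such as digital photography and microscopy imaging. Traditional multi-focus image fusion methods usually require manually designed image transform models, activity level measures and fusion rules, and therefore cannot fully extract and fuse image features. Owing to its powerful feature learning ability, deep learning has been introduced into the study of multi-focus image fusion and has quickly become the mainstream research direction for this problem, with a wide variety of methods being proposed. Given that few surveys on multi-focus image fusion have been published in China, this paper presents a systematic review of deep learning-based multi-focus image fusion methods. Existing methods are divided into two categories, those based on deep classification models and those based on deep regression models, and representative methods in each category are introduced. Then, using 3 multi-focus image fusion datasets and 8 commonly used objective quality evaluation metrics, 25 representative fusion methods are evaluated and compared. Finally, some challenging problems in this research direction are summarized and future research is discussed. This paper aims to help researchers understand the current state of research on multi-focus image fusion and to promote further development of the field.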
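
To make the ideas of an activity level measure and a fusion rule concrete, the following is a minimal sketch of a traditional block-based spatial-domain method. It assumes two registered grayscale source images, uses spatial frequency as the hand-crafted activity measure and applies a choose-max rule per block. The function names, the 8 × 8 block size and the toy data are illustrative assumptions, not taken from any specific method covered by the survey.

```python
import numpy as np


def spatial_frequency(block: np.ndarray) -> float:
    """Spatial frequency (SF), a classic hand-crafted activity level (focus) measure."""
    b = block.astype(np.float64)
    dx = np.diff(b, axis=1)  # differences between horizontal neighbors (row frequency)
    dy = np.diff(b, axis=0)  # differences between vertical neighbors (column frequency)
    rf = np.mean(dx ** 2) if dx.size else 0.0
    cf = np.mean(dy ** 2) if dy.size else 0.0
    return float(np.sqrt(rf + cf))


def block_fusion(img_a: np.ndarray, img_b: np.ndarray, block: int = 8):
    """Fuse two registered grayscale images with a choose-max rule on block SF.

    Returns the fused image and a boolean decision map (True = pixel taken from img_a).
    """
    if img_a.shape != img_b.shape:
        raise ValueError("source images must have the same shape")
    h, w = img_a.shape
    fused = np.empty(img_a.shape, dtype=np.float64)
    decision = np.zeros((h, w), dtype=bool)
    for i in range(0, h, block):
        for j in range(0, w, block):
            a = img_a[i:i + block, j:j + block]
            b = img_b[i:i + block, j:j + block]
            # Fusion rule: keep the block with the higher activity level.
            pick_a = spatial_frequency(a) >= spatial_frequency(b)
            fused[i:i + block, j:j + block] = a if pick_a else b
            decision[i:i + block, j:j + block] = pick_a
    return fused, decision


# Toy usage: a flat (constant) patch stands in for a fully defocused region.
rng = np.random.default_rng(0)
texture = rng.random((16, 16))
src_a = texture.copy()
src_a[:, 8:] = 0.5  # right half "out of focus" in A
src_b = texture.copy()
src_b[:, :8] = 0.5  # left half "out of focus" in B
fused, decision = block_fusion(src_a, src_b, block=8)
print(np.allclose(fused, texture))  # True
```

The boolean decision map is roughly the quantity that the classification model-based deep methods learn to predict, with a learned network taking the place of the hand-crafted measure and rule.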

Keywords

A review of multi-focus image fusion methods based on deep learning

Wang Lei¹, Qi Zhengzheng¹, Liu Yu¹,²
(1. Department of Biomedical Engineering, Hefei University of Technology, Hefei 230009, China; 2. Anhui Province Key Laboratory of Measuring Theory and Precision Instrument, Hefei University of Technology, Hefei 230009, China)

Abstract

Multi-focus image fusion can effectively extend the depth of field (DOF) of optical lenses in software. It fuses a set of partially focused source images of the same scene into an all-in-focus image that is more suitable for human or machine perception. As a result, multi-focus image fusion has high practical significance in many areas, including digital photography, microscopy imaging, integral imaging and thermal imaging. Traditional multi-focus image fusion methods, which generally include transform domain methods (e.g., multi-scale transform-based and sparse representation-based methods) and spatial domain methods (e.g., block-based and pixel-based methods), rely on manually designed transform models, activity level measures and fusion rules. To achieve high fusion performance, these components tend to become increasingly complicated, usually at the cost of computational efficiency. In addition, they are often designed independently of one another with relatively weak association, which greatly limits fusion performance. In the past few years, deep learning has been introduced into the study of multi-focus image fusion and has rapidly become the mainstream of this field, with a variety of deep learning-based fusion methods proposed in the literature. Deep learning models such as convolutional neural networks (CNNs) and generative adversarial networks (GANs) have facilitated the study of multi-focus image fusion. It is therefore of high significance to conduct a comprehensive survey that reviews recent advances in deep learning-based multi-focus image fusion and puts forward prospects for further improvement. Several survey papers on image fusion, including multi-focus image fusion, were published in international journals around 2020. 
However, surveys of multi-focus image fusion are rarely reported in Chinese journals. Moreover, because this field grows very rapidly, with dozens of papers published each year, a more timely survey is highly desirable. Based on these considerations, we present a systematic review of deep learning-based multi-focus image fusion methods. The existing deep learning-based methods are classified into two main categories: 1) deep classification model-based methods and 2) deep regression model-based methods, each of which is further divided into sub-categories. Specifically, according to the pixel processing manner adopted, the classification model-based methods are divided into image block-based methods and image segmentation-based methods; according to the learning manner of the network models, the regression model-based methods are divided into supervised learning-based methods and unsupervised learning-based methods. Representative fusion methods are introduced for each category. In addition, we conduct a comparative study of the performance of 25 representative multi-focus image fusion methods, including 5 traditional transform domain methods, 5 traditional spatial domain methods and 15 deep learning-based methods. To this end, three commonly used multi-focus image fusion datasets, “Lytro”, “MFFW” and “Classic”, are used in the experiments, and eight objective evaluation metrics widely used in multi-focus image fusion are adopted for performance assessment, comprising two information theory-based metrics, two image feature-based metrics, two structural similarity-based metrics and two human visual perception-based metrics. The experimental results verify that deep learning-based methods can achieve very promising fusion results. 
However, it is worth noting that most deep learning-based methods do not perform significantly better than traditional fusion methods. One main reason is the lack of large-scale, realistic training datasets for multi-focus image fusion: the synthetic datasets created for training inevitably differ from real situations, so the potential of deep learning-based methods cannot be fully exploited. Finally, we summarize some challenging problems in the study of deep learning-based multi-focus image fusion and put forward future prospects accordingly, mainly in four aspects: 1) the fusion of focus boundary regions; 2) the fusion of mis-registered source images; 3) the construction of large-scale datasets with real labels for network training; and 4) the improvement of network architectures and model training approaches.
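
The abstract attributes part of the limited gain of deep methods to synthetic training data. A frequently used way to synthesize training pairs, assumed here for illustration rather than taken from any specific surveyed method, is to blur complementary regions of one all-in-focus image. The sketch below uses a spatially uniform Gaussian blur and a hard binary mask; the function names, the blur parameter `sigma` and the toy data are hypothetical, and only NumPy is required.

```python
import numpy as np


def gaussian_kernel(sigma: float) -> np.ndarray:
    """1-D normalized Gaussian kernel truncated at 3 sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    return k / k.sum()


def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur with reflect padding; output has the input's shape."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    padded = np.pad(img.astype(np.float64), r, mode="reflect")
    # Filter along rows, then columns; 'valid' mode trims exactly the padding.
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def make_synthetic_pair(clear: np.ndarray, mask: np.ndarray, sigma: float = 2.0):
    """Build a hypothetical training triple from one all-in-focus image.

    mask is boolean, True where source A should be in focus. One uniform blur
    level is used everywhere, and the mask boundary is a hard step.
    """
    clear = clear.astype(np.float64)
    blurred = gaussian_blur(clear, sigma)
    src_a = np.where(mask, clear, blurred)  # sharp inside the mask, blurred outside
    src_b = np.where(mask, blurred, clear)  # complementary focus
    label = mask.astype(np.float64)  # ground-truth decision map
    return src_a, src_b, label


# Toy usage: random texture as the "all-in-focus" image, left half in focus for A.
rng = np.random.default_rng(1)
clear = rng.random((32, 32))
mask = np.zeros((32, 32), dtype=bool)
mask[:, :16] = True
src_a, src_b, label = make_synthetic_pair(clear, mask, sigma=1.5)
```

Real defocus blur varies with scene depth and changes gradually across focus boundaries, whereas this recipe applies a single blur level with an abrupt mask edge. That simplification is one concrete example of how synthetic pairs can differ from real multi-focus images.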

Keywords

multi-focus image fusion (MFIF); image fusion; deep learning; convolutional neural network (CNN); generative adversarial network (GAN)


Journal of Image and Graphics │ 京ICP备05080539号-4 │ This system is provided by
