深度学习时代图像融合技术进展

摘 要

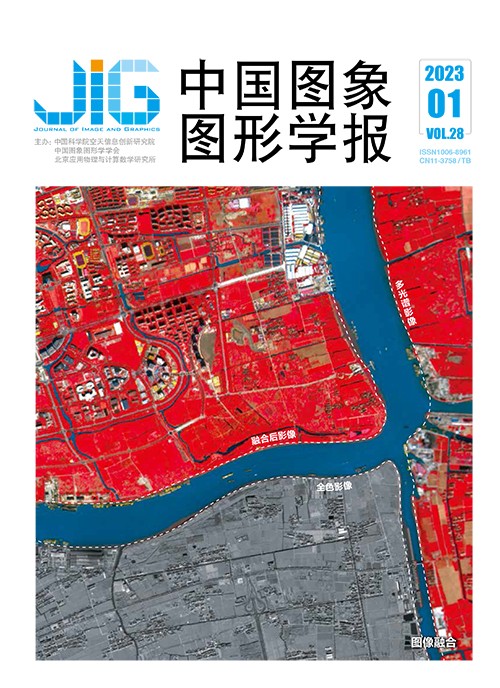

为提升真实场景视觉信号的采集质量,往往需要通过多种融合方式获取相应的图像,例如,多聚焦、多曝光、多光谱和多模态等。针对视觉信号采集的以上特性,图像融合技术旨在利用同一场景不同视觉信号的优势,生成单图像信息描述,提升视觉低、中、高级任务的性能。目前,依托端对端学习强大的特征提取、表征及重构能力,深度学习已成为图像融合研究的主流技术。与传统图像融合技术相比,基于深度学习的图像融合模型性能显著提高。随着深度学习研究的深入,一些新颖的理论和方法也促进了图像融合技术的发展,如生成对抗网络、注意力机制、视觉Transformer和感知损失函数等。为厘清基于深度学习技术的图像融合研究进展,本文首先介绍了图像融合问题建模,并从传统方法视角逐渐向深度学习视角过渡。具体地,从数据集生成、神经网络构造、损失函数设计、模型优化和性能评估等方面总结了基于深度学习的图像融合研究现状。此外,还讨论了选择性图像融合这类衍生问题建模(如基于高分辨率纹理图融合的深度图增强),回顾了一些基于图像融合实现其他视觉任务的代表性工作。最后,根据现有技术的缺陷,提出目前图像融合技术存在的挑战,并对未来发展趋势给出了展望。

关键词

The critical review of the growth of deep learning-based image fusion techniques

Zuo Yifan1, Fang Yuming1, Ma Kede2(1.School of Information Management, Jiangxi University of Finance and Economics, Nanchang 330013, China;2.Department of Computer Science, City University of Hong Kong, Hong Kong 999077, China) Abstract

To capture more effective visual information of the natural scenes, multi-sensor imaging systems have been challenging in multiple configurations or modalities due to the hardware design constraints. It is required to fuse multiple source images into a single high-quality image in terms of rich and feasible perceptual information and few artifacts. To facilitate various image processing and computer vision tasks, image fusion technique can be used to generate a single and clarified image features. Traditional image fusion models are often constructed in accordance with label-manual features or unidentified feature-learned representations. The generalization ability of the models needs to be developed further.Deep learning technique is focused on progressive multi-layer features extraction via end-to-end model training. Most of demonstration-relevant can be learned for specific task automatically. Compared with the traditional methods, deep learning-based models can improve the fusion performance intensively in terms of image fusion. Current image fusion-related deep learning models are often beneficial based on convolutional neural networks (CNNs) and generative adversarial networks (GANs). In recent years, the newly network structures and training techniques have been incorporated for the growth of image fusion like vision transformers and meta-learning techniques. Most of image fusion-relevant literatures are analyzed from specific multi-fusion issues like exposure, focus, spectrum image, and modality issues. However, more deep learning-related model designs and training techniques is required to be incorporated between multi-fusion tasks. To draw a clear picture of deep learning-based image fusion techniques, we try to review the latest image fusion researches in terms of 1) dataset generation, 2) neural network construction, 3) loss function design, 4) model optimization, and 5) performance evaluation. For dataset generation, we emphasize two categories: a) supervised learning and b) unsupervised (or self-supervised) learning. For neural network construction, we distinguish the early or late stages of this construction process, and the issue of information fusion is implemented between multi-scale, coarse-to-fine and the adversarial networks-incorporated (i.e., discriminative networks) as well. For loss function design, the perceptual loss functions-specific method is essential for image fusion-related perceptual applications like multi-exposure and multi-focus image fusion. For model optimization, the generic first-order optimization techniques are covered (e.g., stochastic gradient descent(SGD), SGD+momentum, Adam, and AdamW) and the advanced alternation and bi-level optimization methods are both taken into consideration. For performance evaluation, a commonly-used quantitative metrics are reviewed for the manifested measurement of fusion performance. The relationship between the loss functions (also as a form of evaluation metrics) are used to drive the learning of CNN-based image fusion methods and the evaluation metrics. In addition, to illustrate the transfer feasibility of image fusion-consensus to a tailored image fusion application, a selection of image fusion methods is discussed (e.g., a high-quality texture image-fused depth map enhancement). Some popular computer vision tasks are involved in (such as image denoising, blind image deblurring, and image super-resolution), which can be resolved by image fusion innovatively. Finally, we review some potential challenging issues, including: 1) reliable and efficient ground-truth training data-constructed (i.e., the input image sequence and the predictable image-fused), 2) lightweight, interpretable, and generalizable CNN-based image fusion methods, 3) human or machine-related vision-perceptual calibrated loss functions, 4) convergence-accelerated image fusion models in related to adversarial training setting-specific and the bias-related of the test-time training, and 5) human-related ethical issues in relevant to fairness and unbiased performance evaluation.

Keywords

image fusion deep neural networks stochastic gradient descent(SGD) image quality assessment deep learning

|

中国图象图形学报 │ 京ICP备05080539号-4 │ 本系统由

中国图象图形学报 │ 京ICP备05080539号-4 │ 本系统由