
About the Journal

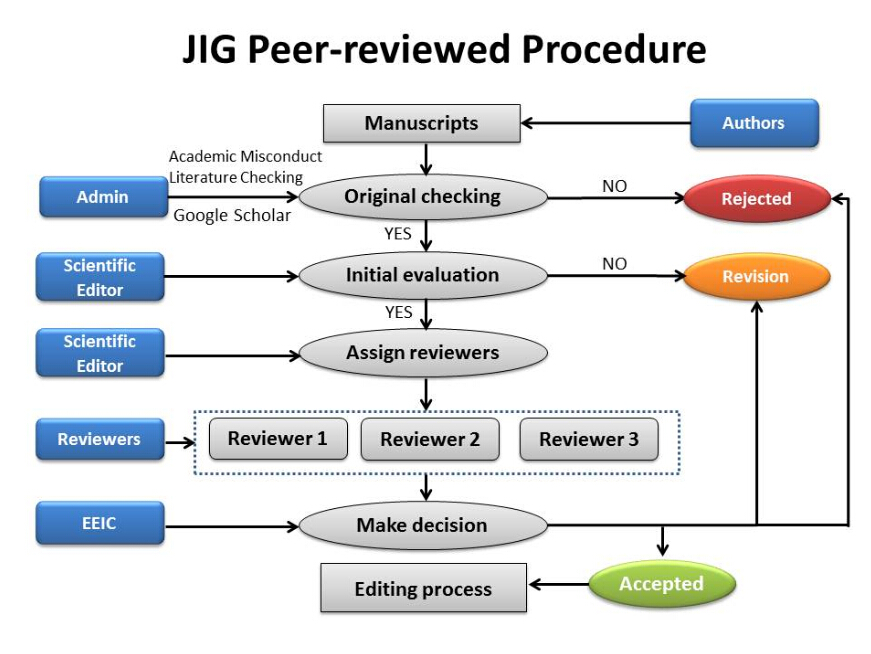

Journal of Image and Graphics (JIG) is a peer-reviewed monthly periodical. JIG has been an open forum and platform since 1996, aiming to present all key aspects, theoretical and practical, of broad interest in computer engineering, technology, and science in China. Its main areas include, but are not limited to, state-of-the-art techniques and high-level research in image analysis and recognition, image interpretation and computer visualization, computer graphics, virtual reality, system simulation, animation, and other hot topics that meet different application requirements in the fields of urban planning, public security, network communication, national defense, aerospace, environmental change, medical diagnostics, remote sensing, surveying and mapping, and others.


Trusted AI

- Generalized adversarial defense against unseen attacks: a survey. Zhou Dawei, Xu Yibo, Wang Nannan, Liu Decheng, Peng Chunlei, Gao Xinbo. doi:10.11834/jig.230423

16-07-2024


Abstract: Deep learning-based models have achieved impressive breakthroughs in various areas in recent years. However, they are vulnerable when their inputs are affected by imperceptible but adversarial noise, which can easily lead to wrong outputs. To tackle this problem, many defense methods have been proposed to mitigate the effect of these threat models on deep neural networks. As adversaries continue to improve their techniques for disrupting model performance, an increasing number of attacks that are unseen by the model during training are emerging. Thus, a defense mechanism that defends against only some specific types of adversarial perturbations is becoming less robust, and the ability of a model to defend generally against various unseen attacks becomes pivotal. Unseen attacks should differ as much as possible from the attacks used in training in terms of theory and attack performance, rather than being parameter adjustments of the same attack method. The core goal is to defend against any attack via efficient training procedures, while the defense is expected to be as independent as possible from the adversarial attacks used during training. Our survey aims to summarize and analyze the existing adversarial defense methods against unseen adversarial attacks. We first briefly review the background of defending against unseen attacks. One of the main reasons that a model is robust against unseen attacks is that it can extract robust features through a specially designed training mechanism, without an explicitly designed defense mechanism that has special internal structures. A robust model can also be achieved by modifying its structure or designing additional modules. Therefore, we divide these methods into two categories: training mechanism-based defense and model structure-based defense. The former mainly seeks to improve the quality of the robust features extracted by the model via its training process. 
1) Adversarial training is one of the most effective adversarial defense strategies, but it can easily overfit to specific types of adversarial noise. Well-designed attacks for training can explicitly improve the model’s ability to explore the perturbation space during training, which directly helps the model learn more representative features than traditional adversarial attacks allow. Adding regularization terms is another way to obtain robust models by improving the robust features learned in the basic training process. Furthermore, we introduce some adversarial training-based methods combined with knowledge from other domains, such as domain adaptation, pre-training, and fine-tuning. Different examples contribute differently to the model’s robustness; thus, example reweighting is also a way to achieve robustness against attacks. 2) Standard training is the most basic training method in deep learning. Data augmentation methods focus on the example diversity of standard training, while adding regularization terms to standard training aims to stabilize the model’s outputs. The pre-training strategy aims to achieve a robust model within a predefined perturbation bound. 3) We also found that contrastive learning is a useful strategy, as its core ideas about feature similarity match well with the goal of acquiring representative robust features. Model structure-based defense, meanwhile, mainly focuses on intrinsic drawbacks of the model’s structure. It is divided into structure optimization for the target network and input data pre-processing, according to how the structures are modified. 1) Structure optimization for the target network aims to enhance the model’s ability to obtain useful information from inputs and features, because the network itself is susceptible to variations in them. 2) Input data pre-processing focuses on eliminating the threats from examples before feeding them into the target network. 
Removing adversarial noise from inputs and detecting adversarial examples in order to reject them are two popular strategies because they are easily modeled and rely less on adversarial training examples than other methods such as adversarial training. Finally, we analyze the research trends in this area and summarize some research in other related domains. 1) Defending well against multiple adversarial perturbations does not ensure that the model is robust against various unseen attacks, but it contributes to improved robustness against one specific type of perturbation. 2) With the development of defense against unseen adversarial attacks, some auxiliary tools such as the accelerating module have been proposed. 3) Defense against unseen common corruptions is beneficial for applications of defense methods because adversarial perturbations cannot represent the whole perturbation space in the real world. To summarize, defending against attacks that are totally different from the attacks used during training offers stronger generalizability. The analysis based on this goal distinguishes this survey from traditional surveys of adversarial defense. We hope that this survey can further motivate research on defending against unseen adversarial attacks.
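
To make the notion of an adversarial perturbation concrete, the following is a minimal sketch, not a method taken from the survey. It assumes a toy linear logistic classifier in place of a deep network and uses the fast gradient sign method (FGSM) purely as a familiar illustrative attack; the abstract itself does not name FGSM, and the weights, input, and `eps` value are hypothetical.

```python
import math

def sigmoid(z):
    """Logistic function mapping a real score to a probability."""
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """Probability of class 1 under a linear logistic model (toy stand-in for a network)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)

def fgsm_perturb(w, b, x, y, eps):
    """Move x by eps in the direction that increases the cross-entropy loss.

    For logistic regression the gradient of the loss with respect to the
    input is (p - y) * w, so no automatic differentiation is needed here.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

# Hypothetical model and input, chosen only for illustration.
w, b = [2.0, -1.0], 0.0
x, y = [0.3, 0.2], 1

x_adv = fgsm_perturb(w, b, x, y, eps=0.25)
clean_p = predict_proba(w, b, x)      # above 0.5: classified as class 1
adv_p = predict_proba(w, b, x_adv)    # below 0.5: the prediction flips
```

In this two-dimensional toy the perturbation is not imperceptible; the point is only that a bounded, gradient-aligned change can flip the output. Adversarial training, as described above, would add such perturbed examples to the training data, which is also why a model can overfit to the particular attack used to generate them.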


- Review on fairness in image recognition. Wang Mei, Deng Weihong, Su Sen. doi:10.11834/jig.230226

16-07-2024


Abstract: In the past few decades, image recognition technology has undergone rapid development and has been integrated into people’s lives, profoundly changing the course of human society. However, recent studies and applications indicate that image recognition systems can show human-like discriminatory bias or make unfair decisions toward certain groups or populations, even performing worse for historically underserved populations. Consequently, there is a growing need to guarantee the fairness of image recognition systems and prevent discriminatory decisions so that people can fully trust these systems and live in harmony with them. This paper presents a comprehensive overview of the cutting-edge research progress toward fairness in image recognition. First, fairness is defined as achieving consistent performance across different groups regardless of peripheral attributes (e.g., color, background, gender, and race), and the reasons for the emergence of bias are illustrated from three aspects. 1) Data imbalance. In existing datasets, some groups are overrepresented and others are underrepresented. Deep models tend to optimize for the overrepresented groups to boost accuracy, while the underrepresented ones are ignored during training. 2) Spurious correlations. Existing methods often capture unintended decision rules from spurious correlations between target variables and peripheral attributes and thus fail to generalize to images with no such correlations. 3) Group discrepancy. A large discrepancy exists between different groups. Performance on some subjects is sacrificed when deep models cannot trade off the specific requirements of various groups. 
Second, datasets (e.g., Colored Modified National Institute of Standards and Technology database (MNIST), Corrupted Canadian Institute for Advanced Research-10 database (CIFAR-10), CelebFaces attributes database (CelebA), biased action recognition (BAR), and racial faces in the wild (RFW)) and evaluation metrics (e.g., equal opportunity and equal odds) used for fairness in image recognition are also introduced. These datasets enable researchers to study the bias of image recognition models in terms of color, background, image quality, gender, race, and age. Third, the debiasing methods designed for image recognition are divided into seven categories. 1) Sample reweighting (or resampling). This method assigns larger weights (or a higher sampling frequency) to the minority groups and smaller weights (or a lower sampling frequency) to the majority ones to help the model focus on the minority groups and reduce the performance difference across groups. 2) Image augmentation. Generative adversarial networks (GANs) are introduced into debiasing methods to translate the images of overrepresented groups to those of underrepresented groups. This method modifies the bias attributes of overrepresented samples while maintaining their target attributes. Therefore, additional samples are generated for underrepresented groups, and the problem of data imbalance is addressed. 3) Feature augmentation. Image augmentation suffers from mode collapse in the training process of GANs; thus, some works augment samples at the feature level. This augmentation encourages the recognition model to produce consistent predictions for the samples before and after perturbing and editing the bias information of features, making it impossible for the model to predict target attributes based on bias information and thus improving model fairness. 4) Feature disentanglement. 
This method, one of the most commonly used for debiasing, removes the spurious correlation between target and bias attributes in the feature space and learns target features that are independent of bias. 5) Metric learning. This method utilizes metric learning (e.g., contrastive learning) to encourage the model to make predictions based on target attributes rather than bias information, by pulling samples of the same target class but different bias classes close together and pushing samples of different target classes but similar bias classes apart in the feature space. 6) Model adaptation. Some works adaptively change the network depth or hyperparameters for different groups according to their specific requirements to address group discrepancy, which improves the performance on underrepresented groups. 7) Post-processing. This method assumes black-box access to a biased model and aims to modify the final predictions output by the model to mitigate bias. The advantages and limitations of these methods are also discussed. Competitive performances and experimental comparisons on widely used benchmarks are summarized. Finally, the following future directions in this field are reviewed and summarized. 1) In existing datasets, bias attributes are limited to color, background, image quality, race, age, and gender. Diverse datasets must be constructed to study highly complex biases in the real world. 2) Most of the recent studies dealing with bias mitigation require annotations of the bias source. However, annotations require expensive labor, and multiple biases may occasionally coexist. Mitigation of multiple unknown biases has yet to be fully explored. 3) A tradeoff dilemma exists between fairness and algorithm performance. Reducing the effect of bias without hampering the overall model performance is challenging. 
4) Causal intervention is introduced into object classification to mitigate bias, while individual fairness is proposed to encourage models to provide the same predictions to similar individuals in face recognition. 5) Fairness on video data has also recently attracted attention.
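
Two of the ideas above, sample reweighting and the equal opportunity metric, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the procedure of any surveyed method: inverse-frequency weighting is one common reweighting choice, equal opportunity is taken here in its usual sense of equal true positive rates across groups, and the function names and toy data are hypothetical.

```python
from collections import Counter

def inverse_frequency_weights(groups):
    """Give each sample a weight inversely proportional to its group size.

    Weights are scaled so that every group carries the same total weight
    and the weights sum to the number of samples.
    """
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return [n / (k * counts[g]) for g in groups]

def true_positive_rate(y_true, y_pred, groups, group):
    """TPR within one group; assumes the group has at least one positive sample."""
    preds = [p for t, p, g in zip(y_true, y_pred, groups) if g == group and t == 1]
    return sum(preds) / len(preds)

def equal_opportunity_gap(y_true, y_pred, groups):
    """Largest difference in TPR between any two groups (0 means equal opportunity)."""
    rates = [true_positive_rate(y_true, y_pred, groups, g) for g in sorted(set(groups))]
    return max(rates) - min(rates)

# Toy data: group "a" is overrepresented, group "b" is underrepresented.
groups = ["a"] * 6 + ["b"] * 2
y_true = [1, 1, 1, 1, 0, 0, 1, 1]
y_pred = [1, 1, 1, 1, 0, 0, 1, 0]

weights = inverse_frequency_weights(groups)
gap = equal_opportunity_gap(y_true, y_pred, groups)
```

Here each minority sample receives three times the weight of a majority sample, and the gap of 0.5 reflects that positives in group "b" are recognized half as often as those in group "a".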


- Large-scale datasets for facial tampering detection with inpainting techniques. Li Wei, Huang Tianqiang, Huang Liqing, Zheng Aokun, Xu Chao. doi:10.11834/jig.230422

16-07-2024


Abstract: Objective: DeepFake technology, born with the continuous maturation of deep learning techniques, primarily utilizes neural networks to create fake faces. This method has enriched people’s lives as computer vision advances and deep learning technologies mature. It has revolutionized the film industry by generating astonishing visuals and reducing production costs. Similarly, in the gaming industry, it has facilitated the creation of smooth and realistic animation effects. However, the malicious use of image manipulation to spread false information poses significant risks to society, casting doubt on the authenticity of digital content in visual media. Forgery techniques encompass four main categories: face reenactment, face replacement, face editing, and face synthesis. Face editing, a commonly employed image manipulation method, involves falsifying facial features by modifying the information related to the five facial regions. As one of the commonly employed methods in facial editing, image inpainting technology utilizes known content from an image to fill in missing areas, aiming to restore the image in a way that aligns as closely as possible with human perception. In the context of facial forgery, image inpainting is primarily used for identity falsification, wherein facial features are altered to achieve the goal of replacing a face. The use of image inpainting for facial manipulation similarly introduces significant disruption to people’s lives. To support research on detection methods for such manipulations, this paper constructs a large-scale dataset for face manipulation detection based on inpainting techniques. Method: This paper specifically focuses on the field of image tampering detection, utilizing two classic datasets: the high-quality CelebA-HQ dataset, comprising 25 000 high-resolution (1 024×1 024 pixels) celebrity face images, and the low-quality FF++ dataset, consisting of 15 000 face images extracted from video frames. 
On the basis of the two datasets, facial feature regions (eyebrows, eyes, nose, mouth, and the entire facial area) are segmented using image segmentation methods. Corresponding mask images are created, and the segmented facial regions are directly obscured on the original image. Two deep neural network-based inpainting methods (image inpainting via conditional texture and structure dual generation (CTSDG) and recurrent feature reasoning for image inpainting (RFR)) along with a traditional inpainting method (struct completion (SC)) are employed. The deep neural network methods require mask images to indicate the areas for inpainting, while the traditional method can directly perform inpainting on segmented facial feature images. The facial regions are inpainted using these three methods, resulting in a large-scale dataset comprising 600 000 images. This extensive dataset incorporates diverse pre-processing techniques and various inpainting methods, and it includes images of different qualities and with different inpainted facial regions. It serves as a valuable resource for training and testing in related detection tasks and establishes a meaningful benchmark dataset for future studies in the domain of face tampering detection. Result: We present comparative experiments conducted on the generated dataset, revealing notable findings. Experimental results indicate a 15% decrease in detection accuracy for images derived from the FF++ dataset under the ResNet-50 benchmark detection network. Under the Xception-Net network, the detection accuracy experiences a 5% decline. Furthermore, significant variations in detection accuracy are observed among different facial regions, with the lowest accuracy recorded in the eye region at 0.91. Generalization experiments suggest that inpainted images from the same source dataset exhibit a certain degree of generalization across different facial regions. 
In contrast, minimal generalization is observed among datasets created from different source data. Consequently, this dataset also serves as valuable research data for studying the generalization of inpainted images across different facial regions. Visualization tools demonstrate that the detection network indeed focuses on the inpainted facial features, affirming its attention to the manipulated facial regions. This work provides new research perspectives for methods of detecting image restoration-based manipulations. Conclusion: The use of image inpainting techniques for tampering introduces a challenging scenario that can deceive conventional tampering detectors to a certain extent. Researching detection methods for this type of tampering is of practical significance. The provided large-scale face tampering dataset, based on inpainting techniques, encompasses high- and low-quality images, employing three distinct inpainting methods and targeting various facial features. This dataset offers a novel source of data for research in this field, enhancing diversity and providing benchmark data for further exploration of image restoration-related forgeries. Given the scarcity of relevant datasets in this domain, we propose the utilization of this dataset as a benchmark for the field of image inpainting tampering detection. This dataset not only supports research in detection methodologies but also contributes to studies on the generalization of such methods. It serves as a foundational resource, filling the gap in the available datasets and facilitating advancements in detection and generalization studies in the domain of image inpainting tampering. This benchmark includes a large-scale inpainting image dataset, totaling 600 000 images. The dataset’s quality is evaluated based on accuracy on manipulation detection networks, generalizability across different inpainting networks and facial regions, and modules such as data visualization.
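
The masking step described in the Method can be sketched as follows. This is only an illustration under stated assumptions: images are represented as nested lists of grayscale values, the mask is a binary grid of the same shape, and `obscure_region` is a hypothetical helper rather than code from the paper. The sketch also checks that the reported dataset size is consistent with two source datasets, five facial regions, and three inpainting methods; that factorization is an inference from the numbers in the abstract, not something the abstract states.

```python
def obscure_region(image, mask, fill=0):
    """Return a copy of image with every pixel where mask is 1 replaced by fill.

    The obscured copy, together with the mask, is what a mask-guided
    inpainting network would receive as input.
    """
    return [
        [fill if m else px for px, m in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]

# Toy 3x3 grayscale image and a mask covering two pixels of the middle row.
image = [[10, 20, 30], [40, 50, 60], [70, 80, 90]]
mask = [[0, 0, 0], [0, 1, 1], [0, 0, 0]]
masked = obscure_region(image, mask)

# Consistency check on the reported dataset size (assumed factorization).
source_images = 25_000 + 15_000   # CelebA-HQ plus FF++ frames
facial_regions = 5                # eyebrows, eyes, nose, mouth, entire face
inpainting_methods = 3            # CTSDG, RFR, SC
total_images = source_images * facial_regions * inpainting_methods
```

The product equals the 600 000 images reported in the abstract, which suggests that every source image was inpainted once per region and per method.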

Abstract:Objective DeepFake technology, born with the continuous maturation of deep learning techniques, primarily utilizes neural networks to create non-realistic faces. This method has enriched people’s lives as computer vision advances and deep learning technologies mature. It has revolutionized the film industry by generating astonishing visuals and reducing production costs. Similarly, in the gaming industry, it has facilitated the creation of smooth and realistic animation effects. However, the malicious use of image manipulation to spread false information poses significant risks to society, casting doubt on the authenticity of digital content in visual media. Forgery techniques encompass four main categories: face reenactment, face replacement, face editing, and face synthesis. Face editing, a commonly employed image manipulation method, involves falsifying facial features by modifying the information related to the five facial regions. As one of the commonly employed methods in facial editing, image inpainting technology involves utilizing known content from an image to fill in missing areas, aiming to restore the image in a way that aligns as closely as possible with human perception. In the context of facial forgery, image inpainting is primarily used for identity falsification, wherein facial features are altered to achieve the goal of replacing a face. The use of image inpainting for facial manipulation similarly introduces significant disruption to people’s lives. To support research on detection methods for such manipulations, this paper produced a large-scale dataset for face manipulation detection based on inpainting techniques.Method This paper specifically focuses on the field of image tampering detection, utilizing two classic datasets: the high-quality CelebA-HQ dataset, comprising 25 000 high-resolution (1 024×1 024 pixels) celebrity face images, and the low-quality FF++ dataset, consisting of 15 000 face images extracted from video frames. 
On the basis of the two datasets, facial feature regions (eyebrows, eyes, nose, mouth, and the entire facial area) are segmented using image segmentation methods. Corresponding mask images are created, and the segmented facial regions are directly obscured on the original image. Two deep neural network-based inpainting methods (image inpainting via conditional texture and structure dual generation (CTSDG) and recurrent feature reasoning for image inpainting (RFR)) along with a traditional inpainting method (struct completion(SC)) were employed. The deep neural network methods require the provision of mask images to indicate the areas for inpainting, while the traditional method could directly perform inpainting on segmented facial feature images. The facial regions were inpainted using these three methods, resulting in a large-scale dataset comprising 600 000 images. This extensive dataset incorporates diverse pre-processing techniques, various inpainting methods, and includes images with different qualities and inpainted facial regions. It serves as a valuable resource for training and testing in related detection tasks, offering a rich dataset for subsequent research in the field, and also establishes a meaningful benchmark dataset for future studies in the domain of face tampering detection.Result We present comparative experiments conducted on the generated dataset, revealing notable findings. Experimental results indicate a 15% decrease in detection accuracy for images derived from the FF++ dataset under the ResNet-50 benchmark detection network. Under the Xception-Net network, the detection accuracy experiences a 5% decline. Furthermore, significant variations in detection accuracy are observed among different facial regions, with the lowest accuracy recorded in the eye region at 0.91. Generalization experiments suggest that inpainted images from the same source dataset exhibit a certain degree of generalization across different facial regions. 
In contrast, minimal generalization is observed among datasets created from different source data. Consequently, this dataset also serves as research data for studying the generalization of inpainted images across different facial regions. Visualization tools demonstrate that the detection network indeed focuses on the inpainted facial features. This work provides new research perspectives for methods of detecting image restoration-based manipulations. Conclusion The use of image inpainting for tampering introduces a challenging scenario that can deceive conventional tampering detectors to a certain extent, so researching detection methods for this type of tampering is of practical significance. The provided large-scale face tampering dataset, based on inpainting techniques, encompasses high- and low-quality images, employs three distinct inpainting methods, and targets various facial features. Given the scarcity of relevant datasets in this domain, we propose this dataset as a benchmark for image inpainting tampering detection. It supports research on detection methods as well as studies of their generalization, and it fills a gap in the available datasets. The benchmark includes a large-scale inpainting image dataset totaling 600 000 images. The dataset’s quality is evaluated based on accuracy on manipulation detection networks, generalizability across different inpainting networks and facial regions, and modules such as data visualization.
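
The masking step described in the Method part of this abstract (build a mask for a segmented facial region, then obscure that region on the original image) can be illustrated with a minimal sketch. This is not the authors' pipeline: the rectangular `box`, the grayscale nested-list image, and the function names `make_region_mask` and `obscure_region` are all assumptions made for illustration, whereas the paper obtains regions from image segmentation.

```python
from typing import List, Tuple

Image = List[List[int]]  # grayscale image as rows of pixel intensities (0-255)


def make_region_mask(height: int, width: int,
                     box: Tuple[int, int, int, int]) -> Image:
    """Binary mask: 1 inside the (top, left, bottom, right) box, 0 elsewhere.

    A rectangle stands in for a segmented facial region (assumption).
    """
    top, left, bottom, right = box
    return [[1 if top <= r < bottom and left <= c < right else 0
             for c in range(width)]
            for r in range(height)]


def obscure_region(image: Image, mask: Image, fill: int = 255) -> Image:
    """Return a copy of the image with masked pixels replaced by `fill`."""
    return [[fill if m else px for px, m in zip(img_row, mask_row)]
            for img_row, mask_row in zip(image, mask)]
```

An inpainting model that needs a mask would receive both the obscured image and the mask, while a method that works directly on the obscured image would receive only the former.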

- Adaptive heterogeneous federated learning Huang Wenke, Ye Mang, Du Bo doi:10.11834/jig.230239

16-07-2024  6392  146

Abstract: Objective The development of deep learning has caused significant changes in numerous research fields and has had profound impacts on societal and industrial sectors, including computer vision, natural language processing, multi-modal learning, and medical analysis. The success of deep learning relies heavily on large-scale data. However, the public and scientific communities have become increasingly aware of the need for data privacy. In the real world, data are commonly distributed among different entities such as edge devices and companies. With the increasing emphasis on data sensitivity, strict legislation has been proposed to govern data collection and utilization. Thus, the traditional centralized training model, which requires data aggregation, is unusable in practical settings. In response to such real-world challenges, federated learning (FL) has emerged as a popular research field because it can train a global model for different participants without centralizing data owned by the distributed parties. FL is a privacy-preserving multiparty collaboration model that adheres to privacy protocols without data leakage. Typically, FL requires clients to share a global model architecture so that the central server can aggregate parameters from participants and then redistribute the global model (averaged parameters). However, this prerequisite largely restricts the flexibility of the client model architecture. In recent years, model-heterogeneous FL has garnered substantial attention because it allows participants to independently design unique models in FL without compromising privacy. Specifically, participants may need to design a special model architecture to ease the communication burden, or may refuse to share the same architecture due to intellectual property concerns. However, existing methods often rely on publicly shared related data or a global model for communication, limiting their applicability. 
In addition, FL is proposed to handle privacy concerns in the distributed learning environment. A pioneering FL method trains a global model by aggregating local model parameters. However, its performance is impeded by decentralized data, which results in a non-i.i.d. distribution (called data heterogeneity). Each participant optimizes toward the local empirical risk minimum, which is inconsistent with the global direction. Therefore, the averaged global model converges slowly and achieves limited performance improvement. Method Model heterogeneity largely impedes the flexibility of local model selection, and data heterogeneity hinders federated performance. To address model and data heterogeneity, this paper introduces an approach called adaptive heterogeneous federated (AHF) learning, which uses a randomly generated input signal, such as random noise and public unrelated samples, to facilitate direct communication among heterogeneous model architectures. This is achieved by aligning the output logit distributions, fostering collaborative knowledge sharing among participants. The primary advantage of AHF is its ability to address model heterogeneity without depending on additional related data collection or shared model design. To further enhance AHF’s effectiveness in handling data heterogeneity, the paper proposes adaptive weight updating on both model and sample levels, which enables AHF participants to acquire rich and diverse knowledge by leveraging dissimilarities in model output on unrelated data while emphasizing the importance of meaningful samples. Result The proposed AHF method is validated through extensive experiments. Random noise inputs are employed in two distinct federated learning tasks: the Digits and Office-Caltech scenarios. 
Specifically, our solution achieves stable generalization performance on the more challenging scenario, Office-Caltech. Notably, when a larger domain gap exists among private data, AHF achieves higher overall generalization performance on these different unrelated data samples and obtains stable improvements on most unseen private data. By contrast, competing methods achieve limited generalization performance in the Office-Caltech scenario. The empirical findings show a marked improvement in within-domain accuracy and superior cross-domain generalization performance compared with existing methods. Conclusion In summary, the AHF learning method presents a straightforward yet efficient foundation for future progress in federated learning and addresses both model and data heterogeneity. AHF lays the groundwork for more resilient and adaptable FL models and serves as a guide for collaborative knowledge sharing in machine learning. Beyond being an FL methodology in its own right, AHF opens up opportunities that arise from the complexities of model and data heterogeneity in the development of machine learning models.
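
The core communication idea in this abstract, aligning output logit distributions on shared random-noise inputs, can be sketched as follows. This is a minimal illustration under stated assumptions, not the AHF implementation: the use of KL divergence as the alignment measure, the toy linear "participant models", and all names (`alignment_loss`, `linear_model`, `noise_batch`) are hypothetical, and the adaptive model-level and sample-level weighting is omitted.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def alignment_loss(own_logits, peer_logits):
    """Mean KL(peer || own) over a batch of shared inputs (assumed measure)."""
    losses = [kl_divergence(softmax(peer), softmax(own))
              for own, peer in zip(own_logits, peer_logits)]
    return sum(losses) / len(losses)


def linear_model(weights):
    """Toy stand-in for a participant model: input vector -> class logits."""
    def forward(x):
        return [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    return forward


rng = random.Random(0)
# Shared random-noise batch: 8 inputs of dimension 4, unrelated to private data.
noise_batch = [[rng.gauss(0.0, 1.0) for _ in range(4)] for _ in range(8)]
model_a = linear_model([[rng.uniform(-1, 1) for _ in range(4)] for _ in range(3)])
model_b = linear_model([[rng.uniform(-1, 1) for _ in range(4)] for _ in range(3)])

logits_a = [model_a(x) for x in noise_batch]
logits_b = [model_b(x) for x in noise_batch]
loss_ab = alignment_loss(logits_a, logits_b)  # what participant A would minimize
```

Only logits on the shared inputs are compared, so the two participants could use entirely different architectures as long as they produce logits over the same classes.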


- Contrastive semi-supervised adversarial training method for hyperspectral image classification networks Shi Cheng, Liu Ying, Zhao Minghua, Miao Qiguang, Pun Chi-Man doi:10.11834/jig.230462

16-07-2024  92  100

Abstract: Objective Deep neural networks have demonstrated significant superiority in hyperspectral image classification tasks. However, the emergence of adversarial examples poses a serious threat to their robustness. Research on adversarial training methods provides an effective defense strategy for protecting deep neural networks. However, existing adversarial training methods often require a large number of labeled examples to enhance the robustness of deep neural networks, which adds to the burden of labeling hyperspectral image examples. In addition, a critical limitation of current adversarial training approaches is that they usually do not capture intermediate layer features in the target network and pay less attention to challenging adversarial samples. This oversight can reduce the generalization ability of the defense model. To further enhance the adversarial robustness of hyperspectral image classification networks with limited labeled examples, this paper proposes a contrastive semi-supervised adversarial training method. Method First, the target model is pre-trained using a small number of labeled examples. Second, for a large number of unlabeled examples, the corresponding adversarial examples are generated by maximizing the feature difference between clean unlabeled examples and adversarial examples on the target model. Adversarial samples generated using intermediate layer features of the network exhibit higher transferability than those generated using only output layer features. In addition, feature-based adversarial sample generation methods do not rely on example labels. Therefore, we generate adversarial examples based on the intermediate layer features of the network. Third, the generated adversarial examples are used to enhance the robustness of the target model. 
To enhance the defense capabilities of the target model against challenging adversarial samples, the robust upper bound and robust lower bound of the target network are defined based on the pre-trained target model. A contrastive adversarial loss is then designed on both the intermediate feature layer and the output layer to optimize the model with respect to these bounds. The defined contrastive loss function consists of three terms: classification loss, output contrastive loss, and feature contrastive loss. The classification loss is designed to maintain the classification accuracy of the target model for clean examples. The output contrastive loss encourages the output layer of the adversarial examples to move closer to the pre-defined output layer robust upper bound and away from the pre-defined output layer robust lower bound. The feature contrastive loss pushes the intermediate layer feature of the adversarial example closer to the pre-defined intermediate robust upper bound and away from the pre-defined intermediate robust lower bound. The proposed output contrastive loss and feature contrastive loss help improve the classification accuracy and generalization ability of the target network against challenging adversarial examples. Adversarial example generation and target network optimization are performed iteratively, and example labels are not required in the training process. By incorporating a limited number of labeled examples in model training, both the output layer and intermediate feature layer are used to enhance the defense ability of the target model against known and unknown attack methods. Result We compared the proposed method with five mainstream adversarial training methods, two supervised adversarial training methods and three semi-supervised adversarial training methods, on the PaviaU and Indian Pines hyperspectral image datasets. 
Compared with the mainstream adversarial training methods, the proposed method demonstrates significant superiority in defending against both known and various unknown attacks. Faced with six unknown attacks, compared with the supervised adversarial training methods AT and TRADES, our method showed an average improvement in classification accuracy of 13.3% and 16%, respectively. Compared with the semi-supervised adversarial training methods SRT, RST, and MART, our method achieved an average improvement in classification accuracy of 5.6% and 4.4%, respectively. Compared with the undefended target model, for example Inception_V3, the defense performance of the proposed method in the face of different attacks improved by 34.63%–92.78%. Conclusion The proposed contrastive semi-supervised adversarial training method can improve the defense performance of hyperspectral image classification networks with limited labeled examples. By maximizing the feature distance between clean examples and adversarial examples on the target model, we can generate highly transferable adversarial examples. To address the limitation of defense generalization ability imposed by the number of labeled examples, we define the concept of robust upper bound and robust lower bound based on the pre-trained target model and design an optimization model according to a contrastive semi-supervised loss function. By extensively leveraging the feature information provided by a few labeled examples and incorporating a large number of unlabeled examples, we can further enhance the generalization ability of the target model. The defense performance of the proposed method is superior to that of the supervised adversarial training methods.
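
The three-term loss described in the Method part of this abstract (classification loss, output contrastive loss, feature contrastive loss) can be sketched as below. The abstract does not give the exact formulas, so this sketch assumes a triplet-style hinge on Euclidean distances for each contrastive term; the margin, the weights `w_out` and `w_feat`, and every function name are hypothetical choices for illustration only.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def contrastive_term(anchor, upper, lower, margin=1.0):
    """Triplet-style hinge (assumed form): zero once the anchor is closer to
    the robust upper bound than to the robust lower bound by `margin`."""
    return max(0.0, euclidean(anchor, upper) - euclidean(anchor, lower) + margin)


def cross_entropy(probs, label, eps=1e-12):
    """Classification loss on a clean labeled example."""
    return -math.log(probs[label] + eps)


def total_loss(clean_probs, label,
               adv_output, out_upper, out_lower,
               adv_feature, feat_upper, feat_lower,
               w_out=1.0, w_feat=1.0):
    """Sum of classification, output contrastive, and feature contrastive terms."""
    return (cross_entropy(clean_probs, label)
            + w_out * contrastive_term(adv_output, out_upper, out_lower)
            + w_feat * contrastive_term(adv_feature, feat_upper, feat_lower))
```

In this sketch only the classification term uses a label; the two contrastive terms depend solely on the adversarial example and the bounds derived from the pre-trained model, which matches the abstract's point that unlabeled examples can drive the adversarial part of training.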


- Universal detection method for mitigating adversarial text attacks through token loss information Chen Yuhan, Du Xia, Wang Dahan, Wu Yun, Zhu Shunzhi, Yan Yan doi:10.11834/jig.230432

16-07-2024  144  83

Abstract: Objective In recent years, adversarial text attacks have become a hot research problem in natural language processing security. An adversarial text attack is a malicious attack that misleads a text classifier by modifying the original text to craft an adversarial text. Classifiers for natural language processing tasks, such as detecting smishing scams (SMS), ad sales, malicious comments, and opinions, can be misled by attacks crafted for those tasks. A perfect text adversarial example needs an imperceptible adversarial perturbation and unaffected syntactic-semantic correctness, which significantly increases the difficulty of the attack. Adversarial attack methods from the image domain cannot be directly applied to text because text is discrete. Existing text attacks can be categorized into two dominant groups: instance-based attacks and learning-based universal non-instance attacks. For instance-based attacks, a specific adversarial example is generated for each input. For learning-based universal non-instance attacks, the universal trigger (UniTrigger) is the most representative attack, which reduces the accuracy of the target model to near zero by generating a fixed attack sequence. Existing detection methods mainly tackle instance-based attacks and have seldom been studied for UniTrigger attacks. Inspired by the logit-based adversarial detector in computer vision, we propose a UniTrigger defense method based on token loss weight information. Method For our proposed loss-based detect universal adversarial attack (LBD-UAA), we use the pre-trained model to transform token sequences into word vector sequences to obtain the representation of token sequences in the semantic space. Then, we remove the target token at each position to be evaluated and feed the remaining token sequence strings into the model. 
In this paper, we use the token loss value (TLV) metric to obtain the weight proportion of each token and build a full-sample sequence lookup table. Token sequences without UniTrigger attacks fluctuate less in the TLV metric than adversarial examples do. Prior knowledge suggests that the fluctuations in the token sequence are the result of adversarial perturbations generated by UniTrigger. Hence, we derive the numerical differences between the TLV full-sequence lookup table and the clean and adversarial samples, and use these differences as the data representation for the samples. Building upon this approach, we set a differential threshold to bound the magnitude of variations. If the magnitude exceeds this threshold, then the input sample is identified as an adversarial instance. Result To demonstrate the efficacy of the proposed approach, we conducted performance evaluations on four widely used text classification datasets: SST-2, MR, AG, and Yelp. SST-2 and MR are short-text datasets, while AG and Yelp encompass a variety of domain-specific news articles and website reviews, making them long-text datasets. First, we generated corresponding trigger sequences by attacking specific categories of the four text datasets through the UniTrigger attack framework. Subsequently, we blended the adversarial samples evenly with clean samples and fed them into LBD-UAA for adversarial detection. Experimental results across the four datasets indicate that this method achieves a maximum detection rate of 97.17%, with a recall rate reaching 100%. Compared with four other detection methods, our proposed approach performs best overall, with a true positive rate of 99.6% and a false positive rate of 6.8%. Even for the challenging MR dataset, it retains a 96.2% detection rate and outperforms the state-of-the-art approaches. 
In the generalization experiments, we performed detection on adversarial samples generated using three attack methods from TextBugger and the PWWS attack. Results indicate that LBD-UAA achieves strong detection performance across the four different word-level attack methods, with average true positive rates for adversarial detection of 86.77%, 90.98%, 90.56%, and 93.89%. This finding demonstrates that LBD-UAA can discriminate instance-specific adversarial samples and generalizes robustly. Moreover, we reduced the false positive rate of short sample detection to 50% by using our proposed differential threshold setting. Conclusion In this paper, we follow the design idea of adversarial detection tasks in the image domain and, for the first time in the general text adversarial domain, introduce a detection method called LBD-UAA, which leverages token weight information from the perspective of token loss measurement, as measured by TLV. We are the first to detect UniTrigger attacks by using token loss weights in the adversarial text domain. This method is tailored for learning-based universal category adversarial attacks and has been evaluated for its defensive capabilities in sentiment analysis and text classification models on two short-text and two long-text datasets. During the experiments, we observed that the numerical feedback from TLV can be used to identify specific locations where perturbation sequences were added to some samples. Future work will focus on using the proposed detection method to eliminate high-risk samples, potentially allowing for the restoration of adversarial samples. We believe that LBD-UAA opens up additional possibilities for exploring future defenses against UniTrigger-type and other text-based adversarial strategies and that it can provide a more effective reference mechanism for adversarial text detection.
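
The thresholding idea in this abstract (measure how much each token contributes to the loss, then flag inputs whose per-token values fluctuate beyond a threshold) can be sketched as follows. This is an illustrative reading rather than the LBD-UAA implementation: the leave-one-out computation, the max-minus-min fluctuation measure, the additive `toy_loss`, and the placeholder trigger tokens are all assumptions, and a real detector would query the victim classifier's loss instead.

```python
from typing import Callable, List


def token_loss_values(tokens: List[str],
                      loss_fn: Callable[[List[str]], float]) -> List[float]:
    """Leave-one-out loss change per token (assumed reading of TLV)."""
    base = loss_fn(tokens)
    return [abs(base - loss_fn(tokens[:i] + tokens[i + 1:]))
            for i in range(len(tokens))]


def fluctuation(values: List[float]) -> float:
    """Spread of the per-token values: max minus min (assumed measure)."""
    return max(values) - min(values) if values else 0.0


def is_adversarial(tokens: List[str],
                   loss_fn: Callable[[List[str]], float],
                   threshold: float) -> bool:
    """Flag the input when the TLV fluctuation exceeds the threshold."""
    return fluctuation(token_loss_values(tokens, loss_fn)) > threshold


# Hypothetical stand-in for a model loss: trigger-like tokens contribute far
# more to the loss than ordinary tokens. Token names are placeholders.
TOY_TOKEN_LOSS = {"trigger_a": 2.5, "trigger_b": 2.0}


def toy_loss(tokens: List[str]) -> float:
    return sum(TOY_TOKEN_LOSS.get(t, 0.1) for t in tokens)


clean = ["a", "fine", "movie"]
attacked = ["trigger_a", "trigger_b"] + clean
```

With a threshold of 1.0, `is_adversarial(attacked, toy_loss, 1.0)` flags the prefixed input while `is_adversarial(clean, toy_loss, 1.0)` does not; the position of the largest values also points at where the trigger was inserted, in line with the abstract's observation about locating perturbation sequences.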

Abstract:Objective In recent years, adversarial text attacks have become a hot research problem in natural language processing security. An adversarial text attack is an malicious attack that misleads a text classifier by modifying the original text to craft an adversarial text. Natural language processing tasks, such as smishing scams (SMS), ad sales, malicious comments, and opinion detection, can be achieved by creating attacks corresponding to them to mislead text classifiers. A perfect text adversarial example needs to have imperceptible adversarial perturbation and unaffected syntactic-semantic correctness, which significantly increases the difficulty of the attack. The adversarial attack methods in the image domain cannot be directly applied to textual attacks due to discrete text limitation. Existing text attacks can be categorized into two dominant groups: instance-based and learning-based universal non-instance attacks. For instance-based attacks, a specific adversarial example is generated for each input. For learning-based universal non-instance attacks, universal trigger (UniTrigger) is the most representative attack, which reduces the accuracy of the objective model to near zero by generating a fixed sequence of attacks. Existing detection methods mainly tackle instance-based attacks but are seldom studied in UniTrigger attacks. Inspired by the logit-based adversarial detector in computer vision, we propose a UniTrigger defense method based on token loss weight information.Method For our proposed loss-based detect universal adversarial attack (LBD-UAA), we generalize the pre-training model to transform token sequences into word vector sequences to obtain the representation of token sequences in the semantic space. Then, we remove the target to compute the token positions and feed the remaining token sequence strings into the model. 
In this paper, we use the token loss value (TLV) metric to obtain the weight proportion of each token to build a full-sample sequence lookup table. The token sequences of non-UniTrigger attacks have less fluctuation than the adversarial examples in the variation of the TLV metric. Prior knowledge suggests that the fluctuations in the token sequence are the result of adversarial perturbations generated by UniTrigger. Hence, we envision deriving the distinct numerical differences between the TLV full-sequence lookup table and clean samples, as well as adversarial samples. Subsequently, we can employ the differential outcomes as the data representation for the samples. Building upon this approach, we can set a differential threshold to confine the magnitude of variations. If the magnitude exceeds this threshold, then the input sample will be identified as an adversarial instance.Result To demonstrate the efficacy of the proposed approach, we conducted performance evaluations on four widely used text classification datasets: SST-2, MR, AG, and Yelp. SST-2 and MR represent short-text datasets, while AG and Yelp encompass a variety of domain-specific news articles and website reviews, making them long-text datasets. First, we generated corresponding trigger sequences by attacking specific categories of the four text datasets through the UniTrigger attack framework. Subsequently, we blended the adversarial samples evenly with clean samples and fed them into the LBD-UAA for adversarial detection. Experimental results across the four datasets indicate that this method achieves a maximum detection rate of 97.17%, with a recall rate reaching 100%. When compared with four other detection methods, our proposed approach achieves an overall outperformance with a true positive rate of 99.6% and a false positive rate of 6.8%. Even for the challenging MR dataset, it retains a 96.2% detection rate and outperforms the state-of-the-art approaches. 
In the generalization experiments, we performed detection on adversarial samples generated using three attack methods from TextBugger and the PWWS attack. Results indicate that LBD-UAA achieves strong detection performance across the four different word-level attack methods, with average true positive rates for adversarial detection of 86.77%, 90.98%, 90.56%, and 93.89%, respectively. This finding demonstrates that LBD-UAA possesses significant discriminative capabilities in detecting instance-specific adversarial samples, showcasing robust generalization performance. Moreover, we successfully reduced the false positive rate of short sample detection to 50% by using our proposed differential threshold setting. Conclusion In this paper, we follow the design idea of adversarial detection tasks in the image domain and, for the first time in the general text adversarial domain, introduce a detection method called LBD-UAA, which leverages token weight information from the perspective of token loss measurement, as measured by TLV. We are the first to detect UniTrigger attacks by using token loss weights in the adversarial text domain. This method is tailored for learning-based universal category adversarial attacks and has been evaluated for its defensive capabilities in sentiment analysis and text classification models on two short-text and two long-text datasets. During the experimental process, we observed that the numerical feedback from TLV can be used to identify specific locations where perturbation sequences were added to some samples. Future work will focus on using the proposed detection method to eliminate high-risk samples, potentially allowing for the restoration of adversarial samples. We believe that LBD-UAA opens up additional possibilities for exploring future defenses against UniTrigger-type and other text-based adversarial strategies and that it can provide a more effective reference mechanism for adversarial text detection.
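
The thresholding idea described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes that a token's TLV is the absolute change in loss when that token is left out, that the "fluctuation" is the spread of the normalized weights, and it replaces the real classifier loss with a toy function. The names `token_loss_values`, `weight_proportions`, `is_adversarial`, `toy_loss`, and the placeholder trigger tokens are all hypothetical.

```python
from typing import Callable, List, Sequence

LossFn = Callable[[Sequence[str]], float]


def token_loss_values(tokens: Sequence[str], loss_fn: LossFn) -> List[float]:
    """Leave-one-out loss change per token (an assumed reading of TLV)."""
    base = loss_fn(tokens)
    values = []
    for i in range(len(tokens)):
        reduced = list(tokens[:i]) + list(tokens[i + 1:])
        values.append(abs(base - loss_fn(reduced)))
    return values


def weight_proportions(values: Sequence[float]) -> List[float]:
    """Normalize the per-token values so that they sum to 1."""
    total = sum(values)
    if total == 0:
        return [0.0 for _ in values]
    return [v / total for v in values]


def is_adversarial(tokens: Sequence[str], loss_fn: LossFn, threshold: float) -> bool:
    """Flag the input when the spread of token weights exceeds the threshold."""
    if not tokens:
        return False
    weights = weight_proportions(token_loss_values(tokens, loss_fn))
    fluctuation = max(weights) - min(weights)
    return fluctuation > threshold


# Toy stand-in for a classifier loss: placeholder "trigger" tokens add a
# large loss, every other token adds a small one. Purely illustrative.
TRIGGER_TOKENS = {"xq1", "xq2", "xq3"}


def toy_loss(tokens: Sequence[str]) -> float:
    return 0.1 * len(tokens) + 2.0 * sum(t in TRIGGER_TOKENS for t in tokens)


clean = ["the", "movie", "was", "great"]
attacked = ["xq1", "xq2", "xq3"] + clean

clean_flag = is_adversarial(clean, toy_loss, threshold=0.2)
attacked_flag = is_adversarial(attacked, toy_loss, threshold=0.2)
```

In this toy setting the clean sentence has uniform token weights, so its spread stays below the threshold, while the prepended trigger tokens dominate the weights of the attacked sentence. With a real model, `loss_fn` would wrap the classifier's loss on the token sequence, and the threshold would have to be tuned on held-out data.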

- Sparse adversarial patch attack based on QR code mask Ye Yixuan, Du Xia, Chen Si, Zhu Shunzhi, Yan Yan doi:10.11834/jig.230453

16-07-2024

Abstract: Objective Convolutional neural networks (CNNs) and other deep networks have revolutionized the field of computer vision, particularly in the area of image recognition, leading to significant advancements in various visual tasks. Recent studies have demonstrated that the performance of deep neural networks is significantly compromised in the presence of adversarial examples. Maliciously crafted inputs can cause a notable decline in the accuracy and reliability of deep learning models. Traditional adversarial attacks based on adversarial patches tend to concentrate a significant amount of perturbation in the masked regions of an image. However, crafting imperceptible perturbations for patch attacks is highly challenging. Such patches consist solely of noise and are visually redundant, serving no practical purpose in the image. To address this issue, this paper proposes a novel approach called quick response (QR) code-based sparse adversarial patch attack. A QR code is a square symbol consisting of alternating dark and light modules, extensively employed in images. It uses a specialized encoding technique to store meaningful information. Utilizing QR codes as adversarial patches not only inherits the robustness of traditional adversarial patches but also increases the likelihood of evading suspicion. A crucial detail to highlight is that global perturbations can disrupt the integrity of the valuable information stored in the QR code. Particularly when attacking robust images, excessive superimposed perturbations can significantly affect the white background of the QR code, ultimately rendering the generated adversarial QR code unscannable and preventing its successful detection and decoding. In this regard, we aim to ensure the integrity of the QR code by limiting the amount of noise.
Inspired by sparse attacks, we integrate the QR code patch with sparse attack techniques to control the sparsity of adversarial perturbations. By doing so, our proposed method effectively limits the number of noise points, minimizing the influence of noise on the QR code pixels and ensuring the robustness of the encoded information. Furthermore, our approach retains attack performance while maintaining a certain level of imperceptibility. Method Building upon the aforementioned analysis, our proposed method follows a step-by-step approach. First, we gather the prediction information of the target model on the input image. Next, we calculate the gradient that steers the prediction result away from the category with the highest probability. Simultaneously, we create a mask to confine the perturbation noise within the colored area of the QR code, thereby preserving the original information. Taking inspiration from recent advances, we employ the Lp-Box alternating direction method of multipliers algorithm to optimize the sparsity of added perturbation points. This optimization aims to ensure that the original information carried by QR codes remains intact even when the adversarial attack succeeds. By mitigating the impact of densely added high-distortion points, our approach achieves a balance between high attack success rates and preserving the inherent recognizability of QR codes. The final result is an adversarial patch that remains imperceptible to human observers. Result Experiments were conducted on the Inception-v3 and ResNet-50 models using the ImageNet dataset. Our method was compared against representative adversarial attacks in non-targeted scenarios, considering the attack success rate and Lp-norm perturbation.
To assess the similarity between adversarial examples and the original image, we utilized several similarity measures (peak signal-to-noise ratio (PSNR), erreur relative globale adimensionnelle de synthèse (ERGAS), structural similarity index measure (SSIM), and spectral angle mapping (SAM)) to calculate the similarity scores and compared them with other attacks. We also evaluated the robustness of our attack after applying several defense algorithms as pre-processing steps. In addition, we investigated the impact of different QR code sizes on the attack success rate and Lp-norm of the perturbation of our method. Experimental results demonstrate that our approach achieves a balance between attack success rate and imperceptible noise in non-targeted scenarios. The adversarial examples generated by our method exhibit the smallest L0 norm of perturbation among all the methods. Although our method may not always achieve the best similarity scores, visual results demonstrate that our crafted adversarial noise is highly imperceptible. Moreover, even after pre-processing with various defense methods, our method continues to outperform other attacks. In the ablation study on QR code sizes for non-targeted attacks, we observed that reducing the QR code size from 55×55 pixels to 50×50 pixels led to a 3.8% decrease in the attack success rate. Conversely, increasing the size to 60×60 pixels resulted in a 2.7% improvement compared with 55×55 pixels. Increasing the size further to 65×65 pixels led to a 1.1% decrease compared with 60×60 pixels, while increasing it to 70×70 pixels resulted in a 6.4% improvement compared with 65×65 pixels.
With regard to the Lp-norm of perturbations, we found a positive correlation between the L1-norm and the number of perturbation points, whereas the L2-norm and L0-norm perturbations exhibited a negative correlation with the number of perturbed points. Conclusion The proposed QR code-based adversarial patch attack is better suited to real attack scenarios. By utilizing sparsity algorithms, we ensure the preservation of the information carried by the QR code itself, resulting in the generation of more deceptive adversarial samples. This approach provides novel insights into the research of highly imperceptible adversarial patches.
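
The masking and sparsity steps described in this abstract can be sketched as follows. This is only an illustration under stated assumptions: a simple top-k selection stands in for the Lp-Box ADMM optimization used in the paper, the "colored area" is taken to be the dark modules of the QR code (mask value 1), and the gradient is a hand-written toy array. The function names `sparse_masked_perturbation` and `lp_norms` and the parameters `k` and `eps` are hypothetical.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]


def sparse_masked_perturbation(
    grad: Grid, mask: List[List[int]], k: int, eps: float
) -> Grid:
    """Perturb at most k masked pixels, chosen by largest |gradient|.

    Top-k selection is a simple stand-in for a sparsity optimizer;
    it is not the Lp-Box ADMM algorithm.
    """
    candidates = [
        (abs(grad[r][c]), r, c)
        for r in range(len(grad))
        for c in range(len(grad[r]))
        if mask[r][c] == 1 and grad[r][c] != 0.0
    ]
    candidates.sort(reverse=True)  # largest gradient magnitude first
    delta = [[0.0] * len(row) for row in grad]
    for _, r, c in candidates[:k]:
        # Step of size eps along the sign of the gradient.
        delta[r][c] = eps * math.copysign(1.0, grad[r][c])
    return delta


def lp_norms(delta: Grid) -> Tuple[int, float, float]:
    """Return the (L0, L1, L2) norms of a perturbation."""
    flat = [v for row in delta for v in row]
    l0 = sum(1 for v in flat if v != 0.0)
    l1 = sum(abs(v) for v in flat)
    l2 = math.sqrt(sum(v * v for v in flat))
    return l0, l1, l2


# Toy 3x3 example: 1 marks a dark QR module that may be perturbed.
mask = [[1, 0, 1],
        [0, 1, 0],
        [1, 0, 1]]
grad = [[0.9, -5.0, 0.1],
        [2.0, -0.7, 3.0],
        [0.2, 4.0, -0.8]]

delta = sparse_masked_perturbation(grad, mask, k=2, eps=0.5)
l0, l1, l2 = lp_norms(delta)
```

Here the largest gradients lie on light modules and are ignored, so only the two strongest dark-module pixels are changed and the L0 norm equals `k`. A real attack would recompute the gradient from the target model at every iteration and would clip the perturbed image to the valid pixel range.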