Multi-image object semantic segmentation by fusing segmentation priors

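Liao Xuan1,2, Miao Jun1,3, Chu Jun1, Zhang Guimei1 (1. Key Laboratory of Nondestructive Testing (Ministry of Education), Nanchang Hangkong University, Nanchang 330063; 2. School of Aeronautical Manufacturing Engineering, Nanchang Hangkong University, Nanchang 330063; 3. Key Laboratory of Lunar and Deep Space Exploration, Chinese Academy of Sciences, Beijing 100012)

Abstract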

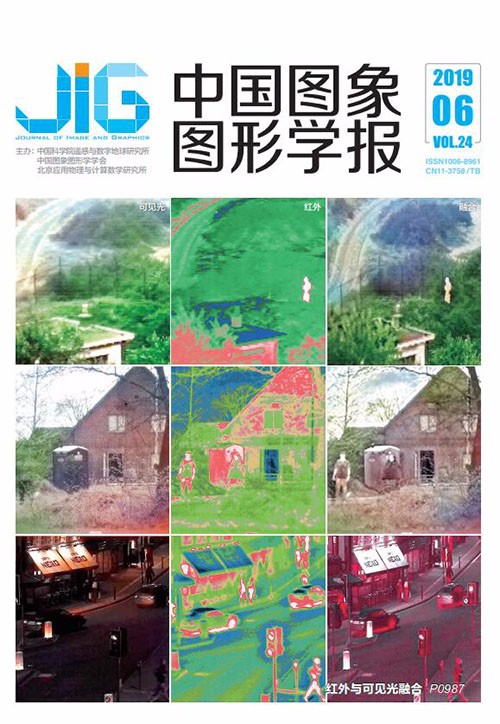

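Objective In object segmentation of image sequences or multi-view images, traditional co-segmentation algorithms are not robust for complex multi-image segmentation, while existing deep learning algorithms tend to produce erroneous and inconsistent object segmentation when the foreground and background are highly ambiguous. To address this, we propose a multi-image segmentation algorithm based on deep features that fuses segmentation priors. Method First, to help the model better learn the detail features of multi-view images in complex scenes, the PSPNet-50 network model is improved by fusing high-resolution detail features from the shallow layers of the network, which reduces the effect on segmentation edge details of the spatial information lost as the network deepens. Then, segmentation priors for one or two images are obtained with an interactive segmentation algorithm, and this small number of priors is fused into the new model; re-training the network resolves the foreground/background segmentation ambiguity and enforces segmentation consistency across the images. Finally, a fully connected conditional random field model is constructed to couple the recognition ability of the deep convolutional neural network with the localization accuracy of fully connected conditional random field optimization, which handles boundary localization better. Result The algorithm was tested on multi-image sets from public datasets. The experimental results show that it not only segments object classes covered by large-scale pre-training better, but also effectively avoids segmenting ambiguous regions for object classes that were not pre-trained. For both simpler image sets with clearly distinct foreground and background and more complex image sets with similar foreground and background colors, the average pixel accuracy (PA) and intersection over union (IOU) both exceed 95%. Conclusion The algorithm is robust for multi-image segmentation across a variety of scenes; by incorporating a small number of priors, the model distinguishes the object from the background more effectively and achieves consistent segmentation of the object.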

Keywords

Multi-image object semantic segmentation by fusing segmentation priors

Liao Xuan1,2, Miao Jun1,3, Chu Jun1, Zhang Guimei1 (1. Key Laboratory of Nondestructive Testing, Nanchang Hangkong University, Nanchang 330063, China; 2. School of Aeronautical Manufacturing Engineering, Nanchang Hangkong University, Nanchang 330063, China; 3. Key Laboratory of Lunar and Deep Space Exploration, National Astronomical Observatories, Chinese Academy of Sciences, Beijing 100012, China)

Abstract

Objective Object segmentation from multiple images locates the positions and extents of common target objects in a scene presented as an image sequence or a set of multi-view images. It is used in many computer vision tasks, such as object detection and tracking, scene understanding, and 3D reconstruction. Early approaches treat object segmentation as histogram matching of color values and apply only to image pairs containing the same or similar objects. Object co-segmentation methods were introduced later. Most of them take a Markov random field (MRF) model as the basic framework and build a cost function, computed from features based on the gray or color values of pixels, that combines the energy within each image and the energy between images. Minimizing this cost function yields a consistent segmentation. However, when the foreground and background colors are similar, co-segmentation struggles to segment the object into consistent regions. In recent years, methods based on various deep learning models have been proposed. Some, such as the fully convolutional network (FCN), use convolutional neural networks to extract high-level semantic features and perform end-to-end, pixel-level image classification. These algorithms achieve higher precision than traditional methods and learn appropriate features for individual classes automatically, without manual feature selection and tuning. Accurately segmenting a single image requires combining spatial information at multiple levels. Multi-image segmentation therefore demands not only fine-grained accuracy in local regions and in each single image but also a balance of local and global information across the images.
When the regions around the foreground and background are ambiguous, or when sufficient prior information about the objects is unavailable, most deep learning methods tend to produce errors and inconsistent segmentations on sequential image sets or multi-view images. Method In this study, we propose a multi-image segmentation method based on deep feature extraction. The method builds on the PSPNet-50 neural network model, in which a residual network extracts features through the first 50 layers of the network. These features are fed into the pyramid pooling module, which applies pooling layers with differently sized pooling filters, and the features from the different levels are then fused. A convolutional layer and an up-convolutional layer then produce the initial end-to-end output. To help the model learn the detail features of multi-view images of complex scenes, we join the output features of the first and fifth parts of the network. The PSPNet-50 model is thus improved by integrating the high-resolution details of the shallow layers, which reduces the effect on segmentation edge details of the spatial information lost as the network deepens. In the training phase, the improved model is first pre-trained on the ADE20K dataset, which gives it strong robustness and generalization. Afterward, prior segmentations of the object in one or two images are obtained with an interactive segmentation approach. This small number of segmentation priors is fused into the new model, and the network is re-trained to resolve the segmentation ambiguity between foreground and background and the segmentation inconsistency across the images.
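
The pyramid pooling step described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the bin sizes (1, 2, 3, 6) are assumed from the commonly published PSPNet configuration, the function names are hypothetical, nearest-neighbor upsampling stands in for the interpolation a real network would use, and the per-branch convolutions are omitted.

```python
import numpy as np

def adaptive_avg_pool(feat, bins):
    """Average-pool a (C, H, W) feature map into a (C, bins, bins) grid."""
    c, h, w = feat.shape
    pooled = np.empty((c, bins, bins), dtype=np.float64)
    for i in range(bins):
        r0 = (i * h) // bins                 # floor(i * h / bins)
        r1 = -((-(i + 1) * h) // bins)       # ceil((i + 1) * h / bins)
        for j in range(bins):
            c0 = (j * w) // bins
            c1 = -((-(j + 1) * w) // bins)
            pooled[:, i, j] = feat[:, r0:r1, c0:c1].mean(axis=(1, 2))
    return pooled

def upsample_nearest(pooled, h, w):
    """Resize a (C, b, b) grid back to (C, H, W) by nearest-neighbor lookup."""
    rows = (np.arange(h) * pooled.shape[1]) // h
    cols = (np.arange(w) * pooled.shape[2]) // w
    return pooled[:, rows][:, :, cols]

def pyramid_pooling(feat, bin_sizes=(1, 2, 3, 6)):
    """Concatenate the input with its pooled-and-upsampled copies (assumed bins)."""
    _, h, w = feat.shape
    branches = [upsample_nearest(adaptive_avg_pool(feat, b), h, w)
                for b in bin_sizes]
    return np.concatenate([feat] + branches, axis=0)

# Usage: a toy 4-channel, 12x12 feature map gains 4 channels per pooling level.
features = np.arange(4 * 12 * 12, dtype=np.float64).reshape(4, 12, 12)
fused = pyramid_pooling(features)
print(fused.shape)
```

Each branch summarizes the feature map at a different spatial scale, so the concatenated output carries both local detail and global context for every pixel.
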
We determine the optimal number of re-training iterations by analyzing, over a large number of experiments, the relationship between the iteration count and the segmentation accuracy. Finally, a fully connected conditional random field (CRF) is constructed to couple the recognition ability of the deep convolutional neural network with the accurate localization ability of the fully connected CRF, so that the object region is located effectively and the object edges are delineated clearly. Result We evaluate our method on multi-image sets from various public datasets showing outdoor buildings and indoor objects, and compare it with other deep learning methods, namely fully convolutional networks (FCN) and the pyramid scene parsing network (PSPNet). Experiments on the multi-view datasets "Valbonne" and "Box" show that our algorithm accurately segments the object region for pre-trained classes while effectively avoiding the segmentation of ambiguous regions for object classes not seen in pre-training. For quantitative evaluation, we compute two commonly used accuracy measures, the average pixel accuracy (PA) and the average intersection over union (IOU). The results show that our algorithm attains high scores both on complex image sets with similar foreground and background and on simple image sets where foreground and background differ clearly. For example, on the "Valbonne" set, our PA and IOU values are 0.9683 and 0.9469, respectively, whereas those of FCN are 0.7027 and 0.6942 and those of PSPNet are 0.8509 and 0.8240. Our scores are thus more than 20 percentage points higher than those of FCN and more than 10 percentage points higher than those of PSPNet. On the "Box" set, our method achieves a PA of 0.9946 and an IOU of 0.9577.
However, FCN and PSPNet cannot find the true object region, because the "Box" class is not among their training classes. Similar improvements are observed on the other datasets, and the average PA and IOU of our method both exceed 0.95. Conclusion Experimental results demonstrate that our algorithm is robust across various scenes and achieves consistent segmentation in multi-view images. Integrating a small number of priors helps the model predict the pixel-level object region accurately and distinguish object regions from the background. The proposed approach consistently outperforms the competing methods on object classes both included in and absent from the pre-training data.
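
The two measures reported in the Result, pixel accuracy (PA) and intersection over union (IOU), can be sketched for a single foreground/background mask pair as follows. The abstract does not give the exact formulas, so this assumes the standard definitions; the function name and the 0/1 mask encoding are illustrative choices, not taken from the paper.

```python
def pixel_accuracy_and_iou(pred, truth):
    """Return (PA, IoU) for two equally sized binary masks (2D lists of 0/1)."""
    tp = fp = fn = tn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1      # object pixel predicted as object
            elif p:
                fp += 1      # background pixel predicted as object
            elif t:
                fn += 1      # object pixel predicted as background
            else:
                tn += 1      # background pixel predicted as background
    total = tp + fp + fn + tn
    pa = (tp + tn) / total                  # fraction of correctly labeled pixels
    union = tp + fp + fn
    iou = tp / union if union else 1.0      # two empty masks agree perfectly
    return pa, iou

# Usage: a 2x3 toy mask with one false positive and one false negative.
predicted = [[1, 1, 0],
             [0, 1, 0]]
reference = [[1, 0, 0],
             [0, 1, 1]]
pa, iou = pixel_accuracy_and_iou(predicted, reference)
print(f"PA={pa:.4f} IoU={iou:.4f}")  # PA=0.6667 IoU=0.5000
```

Averaging these per-image values over every image in a set would give set-level scores of the kind quoted above.
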

Keywords

multi-image; object segmentation; deep learning; convolutional neural networks (CNN); segmentation prior; conditional random field (CRF)


Journal of Image and Graphics (中国图象图形学报) │ 京ICP备05080539号-4
