可解译深度网络的多光谱遥感图像融合

摘 要

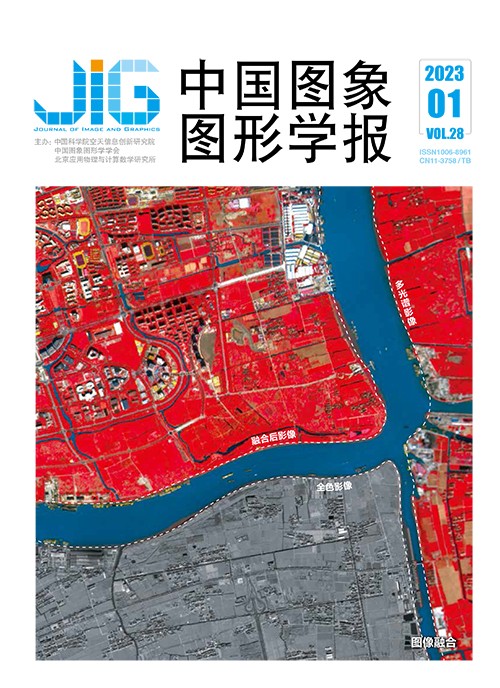

目的 多光谱图像融合是遥感领域中的重要研究问题,变分模型方法和深度学习方法是目前的研究热点,但变分模型方法通常采用线性先验构建融合模型,难以描述自然场景复杂非线性关系,导致成像模型准确性较低,同时存在手动调参的难题;而主流深度学习方法将融合过程当做一个黑盒,忽视了真实物理成像机理,因此,现有融合方法的性能依然有待提升。为了解决上述问题,提出了一种基于可解译深度网络的多光谱图像融合方法。方法 首先构建深度学习先验描述融合图像与全色图像之间的关系,基于多光谱图像是融合图像下采样结果这一认知构建数据保真项,结合深度学习先验和数据保真项建立一种新的多光谱图像融合模型,提升融合模型准确性。采用近端梯度下降法对融合模型进行求解,进一步将求解步骤映射为具有明确物理成像机理的可解译深度网络架构。结果 分别在Gaofen-2和GeoEye-1遥感卫星仿真数据集,以及QuickBird遥感卫星真实数据集上进行了主客观对比实验。相对于经典方法,本文方法的主观视觉效果有了显著提升。在Gaofen-2和GeoEye-1遥感卫星仿真数据集,相对于性能第2的方法,本文方法的客观评价指标全局相对无量纲误差(relative dimensionless global error in synthesis,ERGAS)有效减小了7.58%和4.61%。结论 本文提出的可解译深度网络,综合了变分模型方法和深度学习方法的优点,在有效保持光谱信息的同时较好地增强融合图像空间细节信息。

关键词

Deep network-interpreted multispectral image fusion in remote sensing

Yu Dian1, Li Kun1, Zhang Wei1, Li Duidui2, Tian Xin1, Jiang Hao1(1.Electronic Information School, Wuhan University, Wuhan 430072, China;2.China Centre for Resources Satellite Data and Application, Beijing 100094, China) Abstract

Objective Multispectral image fusion is one of the key tasks in the field of remote sensing (RS). Recent variational model-based and deep learning-based techniques have been developing intensively. However, traditional variational model-based approaches are employed based on linear prior, which is challenged to demonstrate the complicated nonlinear relationship for natural scenarios. Thus, the fusion model is restricted to optimal parameter selection and accurate model design. To resolve these problems, our research is focused on developing a deep network-interpreted for multispectral image and panchromatic image fusion. Method First, we explore a deep prior to describe the relationship between the fusion image and the panchromatic image. Furthermore, a data fidelity term is constructed based on the assumption that the multispectral image is considered to be the down-sampled version of the fusion result. A new fusion model is proposed by integrating the deep prior and the data fidelity term mentioned above. To obtain an accurate fusion result, we first resolve the proposed fusion model by the proximal gradient descent method, which introduces intermediate variables to convert the original optimization problem into several iterative steps. Then, we simplify the iteration function by assuming that the residual for each iteration follows Gaussian distribution. After next, we unroll the above optimization steps into a deep learning network that contains several sub-modules. Therefore, the optimization process of network parameters is driven for a clear physical-based deep fusion network-interpreted via the training data and the proposed physical fusion model both. Moreover, the handcrafted hyper-parameters in the fusion model are also tuned from specific training data, which can resolve the problem of the design of manual parameters in the traditional variational model methods effectively. Specifically, to build an interpretable end-to-end fusion network, we implement the optimization steps in each iteration with different network modules. Furthermore, to deal with the challenging issues of the diversity of sensor spectrum character between different satellites, we use two consecutive 3×3 convolution layers separated with a ReLU nonlinear active layer to represent the optical spectrum transform matrix. For upgrading the intermediate variable-introduced, it is regarded as a denoising problem in related to SwinResUnet. Thanks to the capabilities of extraction of local features and attention of global information, the SwinResUnet incorporates convolutional neural network (CNN) and Swin-Transformer layers into its network architecture. And, a U-Net is adopted as the backbone of SwinResUnet in the deep denoiser, which contains three groups of encoders and decoders with different feature scales. In addition, short connections are established in each group of encoder and decoder for enhancing feature transmission and avoiding gradient explosion. Finally, the L1 norm for reference image and fusion image is used as the cost function. Result The experiments are composed of 3 aspects: 1) simulation experiment, 2) real experiment, and 3) ablation analysis. The Wald's protocol-based simulation experiment fuses images via down-sampled multispectral image (MSI) and panchromatic image (PAN). The real experiment is conducted by fusing original MSI and PAN. The comparison methods include: a) polynomial interpolation, b) gram-schmidt adaptive (GSA) and c) partial replacement-based adaptive component substitution (PRACS) (component substitution methods), d) Indusion and e) additive wavelet luminance proportional (AWLP) (multi-resolution analysis methods), f) simultaneously registration and fusion (SIRF) and g) local gradient constraints (LGC) (variational model optimization methods), h) pansharpening by using a convolutional neural network (PNN), i) deep network architecture for pansharpening (PanNet) and j) interpretable deep network for variational pansharpening (VPNet) (deep learning methods). We demonstrate the superiority of our method in terms of visual effect and quantitative analysis on the simulated Gaofen-2, GeoEye-1 satellite datasets, and the real QuickBird satellite dataset. The quantitative evaluation metrics mainly include: 1) relative dimensionless global error in synthesis (ERGAS), 2) spectral angle mapping, 3) global score Q2n, 4) structural similarity index, 5) root mean square error, 6) relative average spectral error, 7) universal image quality index, and 8) peak signal-to-noise ratio. As there is no reference image for real experiment, we employ some non-reference metrics like quality with no reference (QNR), Ds and Dλ. Visual comparison: the visual effect of the proposed method has a larger improvement over other state-of-the-art methods. Quantitative evaluation: compared with the second-best method, ERGAS can be efficiently reduced by 7.58% and 4.61% on the simulated Gaofen-2 and GeoEye-1 satellite datasets, respectively. Conclusion Our interpretable deep network combines the advantages of variational model-based and deep learning-based approaches, thus achieving a good balance between spatial and spectral qualities.

Keywords

remote sensing(RS) multispectral image(MSI) image fusion deep learning(DL) interpretable network proximal gradient descent(PGD)

|

中国图象图形学报 │ 京ICP备05080539号-4 │ 本系统由

中国图象图形学报 │ 京ICP备05080539号-4 │ 本系统由