A Survey of Deep Learning-Based Image Fusion Methods

Abstract

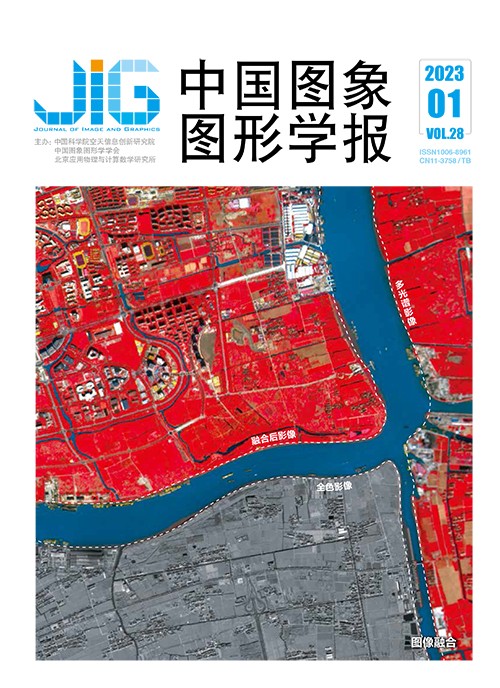

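Image fusion aims to integrate complementary information from different source images into a single fused image that comprehensively characterizes the imaging scene and facilitates subsequent vision tasks. With the rise of deep learning, deep learning-based image fusion algorithms have proliferated. The emergence of techniques such as auto-encoders, generative adversarial networks, and Transformers in particular has brought a qualitative leap in image fusion performance. This paper comprehensively reviews and analyzes state-of-the-art deep fusion algorithms across different fusion scenarios. First, we introduce the basic concepts of image fusion and define the different fusion scenarios. For multi-modal image fusion, digital photography image fusion, and remote sensing image fusion, we describe the basic ideas of each category of methods from the perspectives of network architecture and supervision paradigm, and we discuss their characteristics. Second, we summarize the limitations of each category of algorithms and suggest directions for further improvement. Third, we briefly introduce the datasets commonly used in each fusion scenario and give precise definitions of the various evaluation metrics. For each fusion task, we compare the performance of representative algorithms in terms of qualitative evaluation, quantitative evaluation, and running efficiency. The algorithms, datasets, and evaluation metrics mentioned in this paper are collected at https://github.com/Linfeng-Tang/Image-Fusion. Finally, we present our conclusions and several serious challenges in image fusion research, and we outline possible future research directions.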

Keywords

Deep learning-based image fusion: a survey

Tang Linfeng, Zhang Hao, Xu Han, Ma Jiayi (Electronic Information School, Wuhan University, Wuhan 430072, China)

Abstract

Image fusion aims to integrate complementary information from multi-source images into a single fused image that comprehensively characterizes the imaging scene and facilitates subsequent vision tasks. In recent years, it has attracted growing attention in image processing. This is especially true in artificial intelligence-related applications such as intelligent medical services, autonomous driving, smart photography, security surveillance, and military monitoring. The rise of deep learning has spurred a wave of deep learning-based image fusion algorithms. In particular, the emergence of advanced techniques such as the auto-encoder, the generative adversarial network, and the Transformer has brought a qualitative leap in image fusion performance. However, a comprehensive review and analysis of state-of-the-art deep learning-based image fusion algorithms across different fusion scenarios is still lacking. We therefore present a systematic and critical review of recent developments in image fusion. First, we give a comprehensive and systematic introduction to the image fusion field from three aspects: 1) the development of image fusion technology, 2) the prevailing datasets, and 3) the common evaluation metrics. Then, we conduct extensive qualitative experiments, quantitative experiments, and running-efficiency evaluations of representative image fusion methods on public datasets to compare their performance. Finally, we summarize the review, highlight the challenges facing the image fusion community, and recommend prospects for future research. First of all, from the perspective of fusion scenarios, existing image fusion methods can be divided into three categories: multi-modal image fusion, digital photography image fusion, and remote sensing image fusion.
Specifically, multi-modal image fusion comprises infrared and visible image fusion and medical image fusion. Digital photography image fusion consists of multi-exposure image fusion and multi-focus image fusion. Multi-spectral and panchromatic image fusion (pan-sharpening) is a key representative of remote sensing image fusion. In terms of network architecture, deep learning-based image fusion algorithms can be classified into auto-encoder based (AE-based), convolutional neural network based (CNN-based), and generative adversarial network based (GAN-based) fusion frameworks. The AE-based fusion framework performs feature extraction and image reconstruction with a pre-trained auto-encoder and fuses the deep features via hand-crafted fusion strategies. The CNN-based fusion framework accomplishes feature extraction, fusion, and image reconstruction through carefully designed network structures and loss functions. The GAN-based framework formulates image fusion as an adversarial game between generators and discriminators. In terms of supervision paradigm, deep learning-based fusion methods can also be categorized into three classes: unsupervised, self-supervised, and supervised. Supervised methods leverage ground truth to guide training. Unsupervised approaches instead construct loss functions by constraining the similarity between the fusion result and the source images. Self-supervised algorithms are commonly associated with the AE-based framework. For each fusion scenario, our review explains the main ideas of each method from the perspectives of network architecture and supervision paradigm, and discusses their characteristics. In particular, we summarize the limitations of different fusion algorithms and provide recommendations for further research.
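In the AE-based framework, a hand-crafted fusion strategy sits between a pre-trained encoder and decoder. The following is a minimal sketch of that step under stated assumptions, not the rule of any specific method reviewed here. Feature maps are plain nested lists indexed `[channel][row][col]`, and the encoder and decoder are out of scope. The three rules and every function name are hypothetical choices for illustration: element-wise average, element-wise maximum, and per-pixel weighting by channel-wise L1 activity. Published L1-norm strategies typically also smooth the activity map over a local window, which this sketch omits.

```python
from typing import List

# Hypothetical representation: a feature map is [channel][row][col].
FeatureMap = List[List[List[float]]]


def fuse_average(fa: FeatureMap, fb: FeatureMap) -> FeatureMap:
    """Element-wise mean of two encoder feature maps."""
    return [[[(a + b) / 2 for a, b in zip(ra, rb)]
             for ra, rb in zip(ca, cb)]
            for ca, cb in zip(fa, fb)]


def fuse_max(fa: FeatureMap, fb: FeatureMap) -> FeatureMap:
    """Element-wise maximum: keep the stronger response at each position."""
    return [[[max(a, b) for a, b in zip(ra, rb)]
             for ra, rb in zip(ca, cb)]
            for ca, cb in zip(fa, fb)]


def l1_activity(f: FeatureMap) -> List[List[float]]:
    """Per-pixel activity level: L1 norm of the feature vector across channels."""
    h, w = len(f[0]), len(f[0][0])
    return [[sum(abs(ch[i][j]) for ch in f) for j in range(w)]
            for i in range(h)]


def fuse_l1_weighted(fa: FeatureMap, fb: FeatureMap,
                     eps: float = 1e-12) -> FeatureMap:
    """Weight each source by its relative L1 activity at every pixel."""
    act_a, act_b = l1_activity(fa), l1_activity(fb)
    fused = []
    for ca, cb in zip(fa, fb):
        channel = []
        for i, (ra, rb) in enumerate(zip(ca, cb)):
            row = []
            for j, (a, b) in enumerate(zip(ra, rb)):
                # Soft weight in [0, 1]; eps avoids 0/0 where both maps are zero.
                wa = act_a[i][j] / (act_a[i][j] + act_b[i][j] + eps)
                row.append(wa * a + (1 - wa) * b)
            channel.append(row)
        fused.append(channel)
    return fused
```

Take a one-channel example with `fa = [[[1.0, 2.0], [3.0, 4.0]]]` and `fb = [[[3.0, 0.0], [3.0, 0.0]]]`. Here `fuse_average` gives `[[[2.0, 1.0], [3.0, 2.0]]]`. `fuse_l1_weighted` gives about 2.5 at the top-left pixel, where the second source is three times as active. The fused map would then be passed to the decoder. The weighting rule is a design choice that lets the more active source dominate each pixel without a hard switch.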
Second, we briefly introduce the popular public datasets for each image fusion scenario and provide links for downloading them. Then, we present the common evaluation metrics in the image fusion field from two aspects: generic evaluation metrics and metrics specifically designed for pan-sharpening. The generic metrics can be used to evaluate multi-modal and digital photography image fusion algorithms. They comprise entropy-based, correlation-based, image feature-based, image structure-based, and human visual perception-based metrics. Some of them are also used for the quantitative assessment of pan-sharpening: peak signal-to-noise ratio (PSNR), correlation coefficient (CC), structural similarity index measure (SSIM), and visual information fidelity (VIF). The metrics specifically designed for pan-sharpening consist of no-reference metrics and full-reference metrics. The latter use the full-resolution image as the reference image (i.e., ground truth). Third, we present the qualitative and quantitative results and the average running times of representative methods for each fusion task. Finally, we draw conclusions and highlight the challenges facing the image fusion community. We also discuss future research directions: non-registered image fusion, high-level vision task-driven image fusion, cross-resolution image fusion, real-time image fusion, and color image fusion. Further directions include image fusion based on physical imaging principles, image fusion under extreme conditions, and comprehensive evaluation metrics. The methods, datasets, and evaluation metrics mentioned in this review are collected at https://github.com/Linfeng-Tang/Image-Fusion.
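The sketch below computes three of the generic metrics named above: entropy (EN), correlation coefficient (CC), and PSNR. It works on 8-bit grayscale images flattened into integer lists. It assumes one common convention in the fusion literature, which is an assumption here and not necessarily the exact definitions used in this review. EN is $-\sum_l p_l \log_2 p_l$ over the gray-level histogram of the fused image $F$. CC averages the Pearson correlation of $F$ with each source $A$ and $B$. PSNR uses the mean of $\mathrm{MSE}(A,F)$ and $\mathrm{MSE}(B,F)$ with a peak value of 255. All function names are hypothetical.

```python
import math
from collections import Counter
from typing import Sequence


def entropy(img: Sequence[int]) -> float:
    """EN: Shannon entropy (bits) of the gray-level histogram of img."""
    n = len(img)
    return -sum((c / n) * math.log2(c / n) for c in Counter(img).values())


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; raises ZeroDivisionError if either input is constant."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)


def cc(a: Sequence[float], b: Sequence[float], f: Sequence[float]) -> float:
    """CC (assumed convention): mean correlation of fused image f with each source."""
    return (pearson(a, f) + pearson(b, f)) / 2


def mse(x: Sequence[float], y: Sequence[float]) -> float:
    """Mean squared error between two equally sized flattened images."""
    return sum((p - q) ** 2 for p, q in zip(x, y)) / len(x)


def psnr(a: Sequence[float], b: Sequence[float], f: Sequence[float],
         peak: float = 255.0) -> float:
    """PSNR (assumed convention): fused image vs. both sources, averaged MSE."""
    m = (mse(a, f) + mse(b, f)) / 2
    return math.inf if m == 0 else 10 * math.log10(peak ** 2 / m)
```

For instance, an image whose pixels are split evenly between two gray levels, such as `[0, 0, 255, 255]`, has an entropy of exactly 1 bit. A fused image identical to one source and 10 gray levels away from the other gets an averaged MSE of 50. Real evaluations would run on full images, typically with array libraries. Pure Python is used here only to keep the definitions explicit.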

Keywords

Journal of Image and Graphics │ 京ICP备05080539号-4 │ This system is provided by