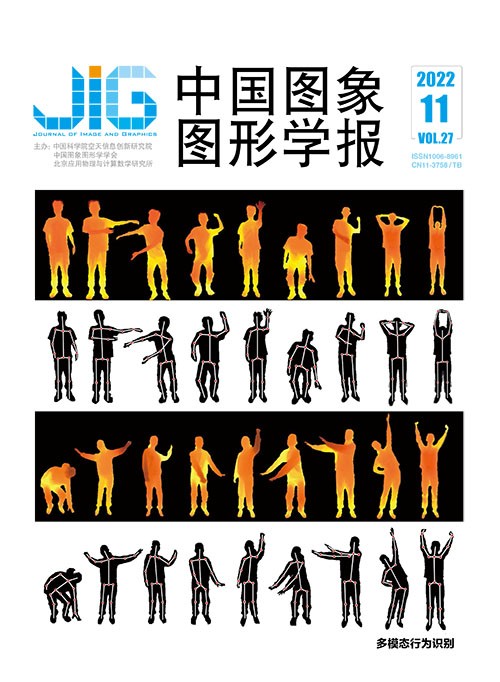

Survey of Action Recognition on Multimodal Data

Abstract

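Action recognition is an important research topic in video understanding within computer vision. Accurately extracting features of human actions from video and recognizing those actions provides important information for fields such as healthcare and security, making it a highly promising direction. From a data-driven perspective, this paper comprehensively reviews the development of action recognition techniques and systematically describes representative methods and models. Action recognition data are divided into RGB, depth, skeleton, and fused modalities. We first introduce the main stages of action recognition and the public datasets for the different data modalities in human action recognition. Then, following the data modality classification, we review handcrafted-feature and deep learning methods for the RGB, depth, and skeleton modalities, as well as multimodal fusion methods, covering the fusion of the RGB and depth modalities and the fusion of other modalities. Traditional handcrafted-feature methods include methods based on spatiotemporal volumes and spatiotemporal interest points (RGB modality), methods based on motion change and appearance (depth modality), and methods based on skeleton features (skeleton modality). Deep learning methods mainly involve convolutional networks, graph convolutional networks, and hybrid networks; we focus on their improvements, characteristics, and innovations. The different action recognition techniques are compared on datasets grouped by modality. Comparing both within and across categories, we identify the strengths, weaknesses, and suitable scenarios of each modality, the differences between handcrafted-feature and deep learning methods, and the advantages of multimodal fusion. Finally, we summarize the problems and challenges that action recognition currently faces and, from the data modality perspective, propose feasible future research directions and priorities.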

Keywords

Review of action recognition based on multimodal data

Wang Shuaichen1, Huang Qian1,2, Zhang Yunfei1,2, Li Xing1, Nie Yunqing1, Luo Guocui1

(1. Key Laboratory of Water Resources Big Data, Ministry of Water Resources (Hohai University), Nanjing 211100, China; 2. School of Computer and Information, Hohai University, Nanjing 211100, China)

Abstract

Action recognition is an important research topic in video understanding within computer vision. Accurately extracting features of human actions from video and recognizing those actions provides valuable information for applications such as healthcare and security. The data used for action recognition can be divided into RGB, depth, skeleton, and fused modalities. From this data-driven perspective, we review the research and development of action recognition algorithms and systematically describe current algorithms and models. First, we introduce the main stages of an action recognition method: video input, feature extraction, classification, and output of results. Next, we introduce popular datasets for each data modality and explain their characteristics in detail: the human motion database (HMDB-51), UCF101, and Something-Something for the RGB modality; MSR-Action3D, MSR Daily Activity, and the UTD multimodal human action dataset (UTD-MHAD) for the depth and skeleton modalities; and NTU RGB + D 60/120, which covers the RGB, depth, and skeleton modalities. Compared with other action recognition reviews, our contributions are as follows: 1) the classification by data modality, method, and dataset is more instructive; 2) data modalities and their fusion are discussed more comprehensively; 3) recent reviews concentrate on deep learning and lack early handcrafted-feature methods, so we analyze the strengths of both handcrafted features and deep learning; and 4) the advantages and disadvantages of the different data modalities, the challenges of action recognition, and future research directions are discussed.
Following the data modality classification, we review handcrafted-feature and deep learning methods for the RGB, depth, and skeleton modalities, as well as multimodal fusion methods, including the fusion of the RGB and depth modalities. For the RGB modality, handcrafted-feature methods are based on spatiotemporal volumes, spatiotemporal interest points, and skeleton trajectories. Deep learning methods include 3D convolutional neural networks and two-stream networks. 3D convolution models the spatial and temporal dimensions jointly, so spatiotemporal features are extracted together. For the depth modality, handcrafted-feature methods rely on motion-change and appearance features, and deep learning methods include the representative point cloud networks. Point cloud networks are adapted from image processing networks and extract action features more effectively by processing point sets. For the skeleton modality, handcrafted methods are based mainly on skeleton features, and deep learning methods mainly use graph convolutional networks. Graph convolutional networks suit the graph structure of skeleton data and facilitate the passing of information between skeleton joints. Next, we summarize the recognition accuracy of representative algorithms and models on the RGB datasets HMDB-51, UCF101, and Something-Something V2, and plot the results of selected handcrafted-feature and deep learning methods as a histogram for a more specific comparison. For the depth modality, we collect the recognition rates of several algorithms and models on the MSR-Action3D dataset and the depth data of NTU RGB + D 60. For the skeleton modality, we compare recognition accuracy on the skeleton data of NTU RGB + D 60 and NTU RGB + D 120. We also chart the number of trainable parameters of models from recent years.
For multimodal fusion, we use the NTU RGB + D 60 dataset, which includes the RGB, depth, and skeleton modalities, and compare the single-modality recognition rate of the same algorithm or model with the accuracy obtained after multimodal fusion. We conclude that the RGB, depth, and skeleton modalities each have strengths and weaknesses that suit different application scenarios. Fusing multiple modalities lets them complement one another to some extent and improves recognition. Handcrafted-feature methods suit small datasets and have lower algorithmic complexity, whereas deep learning suits large datasets and extracts features automatically from large amounts of data. Research has also shifted from deepening networks toward lightweight networks with high recognition rates. Finally, we summarize the current problems and challenges of action recognition: multi-person action recognition, recognition of fast and similar actions, and the collection of depth and skeleton data. We also outline future research directions from the data modality perspective: effective modality fusion, novel network design, and the addition of attention modules.

Keywords


中国图象图形学报 │ 京ICP备05080539号-4 │ 本系统由
