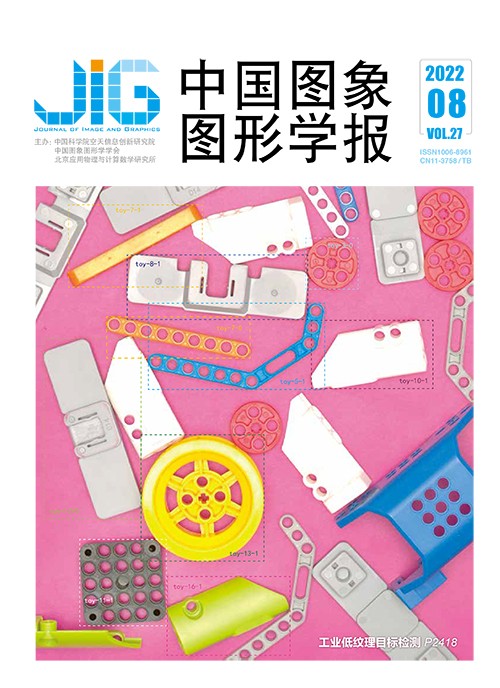

Low-texture object detection for industrial part sorting systems

Abstract

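Objective With the rise of intelligent sorting in industry, object detection has attracted growing attention. However, to meet the need for rapid deployment on industrial sites, an algorithm can only tune its parameters from a small number of object samples. In addition, industrial PCs have limited computing resources, and industrial parts have smooth surfaces that lack distinctive texture. All of these factors work against object detection methods based on deep learning. Line2D is widely regarded as well suited to fast matching of low-texture objects from few samples, but it cannot correctly distinguish two parts that have the same shape and different colors. To address this, we propose CL2D (color Line2D), a more robust framework for fast matching of low-texture objects. Method First, gradient orientation features are used as the object shape descriptor for fast matching on the input image, which yields coarse matching results. Fine matching is then completed through non-maximum suppression and color histogram comparison. Finally, in line with the characteristics of industrial sorting, the grasp point of the object is located by a coordinate transformation. Result To test the algorithm, we present the YNU-BBD 2020 (YNU-building blocks datasets 2020) dataset, built to reflect a real industrial sorting environment. Tests on YNU-BBD 2020 show that CL2D processes high-resolution images on a CPU platform at an average of 2.15 s per image, and that its mAP (mean average precision) is 10% and 7% higher than that of classical algorithms and deep learning algorithms, respectively. Conclusion Targeting the characteristics of industrial part sorting systems, this paper proposes a fast low-texture object detection method that completes detection efficiently on a CPU platform and has significant advantages over existing methods.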
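The fine-matching stage (non-maximum suppression followed by color histogram comparison) can be sketched roughly as below. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `nms`, `cosine_similarity`, and `fine_match`, the box format `(x1, y1, x2, y2)`, and both threshold values are hypothetical, and the color histograms are assumed to be precomputed arrays.

```python
import numpy as np


def nms(boxes, scores, iou_thresh=0.5):
    """Greedy non-maximum suppression.

    boxes: rows of (x1, y1, x2, y2) with positive area (assumed format).
    Returns indices of the kept boxes, highest score first.
    """
    boxes = np.asarray(boxes, dtype=float)
    scores = np.asarray(scores, dtype=float)
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    order = np.argsort(-scores)
    keep = []
    while order.size > 0:
        i, rest = order[0], order[1:]
        keep.append(int(i))
        # Intersection of the best box with every remaining box.
        w = np.minimum(boxes[i, 2], boxes[rest, 2]) - np.maximum(boxes[i, 0], boxes[rest, 0])
        h = np.minimum(boxes[i, 3], boxes[rest, 3]) - np.maximum(boxes[i, 1], boxes[rest, 1])
        inter = np.clip(w, 0, None) * np.clip(h, 0, None)
        iou = inter / (areas[i] + areas[rest] - inter)
        # Drop boxes that overlap the best box too much.
        order = rest[iou <= iou_thresh]
    return keep


def cosine_similarity(h1, h2):
    """Cosine similarity between two histograms (flattened)."""
    h1 = np.asarray(h1, dtype=float).ravel()
    h2 = np.asarray(h2, dtype=float).ravel()
    denom = np.linalg.norm(h1) * np.linalg.norm(h2)
    return float(h1 @ h2 / denom) if denom > 0 else 0.0


def fine_match(boxes, scores, window_hists, template_hist,
               iou_thresh=0.5, color_thresh=0.8):
    """Keep coarse matches that survive NMS and agree in color with the template."""
    kept = nms(boxes, scores, iou_thresh)
    return [i for i in kept
            if cosine_similarity(window_hists[i], template_hist) >= color_thresh]
```

In this sketch a coarse match with the right shape and the wrong color survives suppression and is then rejected by the histogram test, which is the case the abstract says Line2D alone cannot handle.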

Keywords

Texture-less object detection method for industrial components picking system

Yan Ming, Tao Dapeng, Pu Yuanyuan (School of Information and Engineering, Yunnan University, Kunming 650500, China)

Abstract

Objective Texture-less object detection is crucial for industrial component picking systems, in which multiple components are placed on a feeder in random poses and the objective is to use a vision-guided robot arm to grasp each one and place it into a packing box. To ease deployment on industrial sites, the algorithm must adjust its parameters with few samples and run on limited computing resources. Traditional detection methods can work quickly through key-point matching. However, industrial components lack sufficient texture for extracting patch descriptors and building key-point correspondences. Their appearance is dominated by shape, which motivates template matching methods based on object contours. One classical work is Line2D, which needs only a few samples to build templates and runs efficiently on a CPU platform. However, it produces false positives when two components have similar silhouettes.

Method We propose a new method called color Line2D (CL2D). CL2D extracts template information from object images and then runs a sliding-window template matching process on the input image to detect objects. It accounts for both object shape and color. We use the gradient direction feature as the shape descriptor, which is extracted at discrete points on the object contour. Specifically, we compute the gradient orientation at these points on the object image and in the sliding window, calculate the cosine of the angle between each pair of corresponding points, and sum these values to obtain the similarity. We use an HSV color histogram to represent object color as a complement to the shape features, and compare the histograms of the object image and the sliding window by cosine similarity. The overall framework of CL2D is divided into an offline part and an online part. In the offline part, we build a template database that stores the data needed during online matching, which speeds up the online process. The template database is constructed in two steps. First, we annotate the object image to extract template data on the object contour points, foreground area, and grasp point. Second, we compute the color histogram over the foreground area, then rotate the object image and compute the gradient orientations at the contour points to obtain templates for multiple rotation poses of the object. The online part comprises three steps: coarse matching, fine matching, and grasp point localization. First, we match gradient direction templates of different rotation poses against the input image to obtain coarse detection results. Matching is accelerated by gradient direction quantization and pre-computed response maps. Second, we use non-maximum suppression to filter redundant matches and then compare color histograms to determine the detection result. Finally, we localize the object grasp point on the input image by a coordinate transformation. To evaluate texture-less object detection methods, we introduce the YNU-building blocks datasets 2020 (YNU-BBD 2020), which simulates a real industrial scenario.

Result Experimental results show that the algorithm processes 1 920×1 200 resolution images at an average speed of 2.15 s per frame on a CPU platform. Using only one or two samples per object, CL2D achieves 67.7% mean average precision (mAP) on the YNU-BBD 2020 dataset, a relative improvement of about 10% over Line2D and about 7% over deep learning methods trained on synthetic data. Qualitative comparison with classic texture-less object detection methods shows that CL2D has advantages in multi-instance object detection.

Conclusion We propose a texture-less object detection method that integrates color and shape representations. Our method runs on a CPU platform with few samples. It has significant advantages over deep learning methods and classical texture-less object detection methods, and it has the potential to be used in industrial component picking systems.
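The shape score and the grasp point transformation described in the Method part can be illustrated with a short sketch. It is a simplified reading of the abstract, not the authors' code: the function names, the `(row, col)` point layout, and the choice to rotate about a template center are assumptions. Taking the absolute value of the cosine follows the Line2D convention of ignoring gradient polarity, and the quantization and response-map speedups are omitted.

```python
import numpy as np


def gradient_orientation(gray):
    """Per-pixel gradient orientation in radians for a 2-D grayscale array."""
    gy, gx = np.gradient(np.asarray(gray, dtype=float))
    return np.arctan2(gy, gx)


def shape_similarity(points, template_oris, image_ori, offset):
    """Sum of |cos| of orientation differences at the template's contour points.

    points: (row, col) contour points in template coordinates (assumed layout).
    template_oris: gradient orientation of the template at each point.
    image_ori: orientation map of the input image.
    offset: (row, col) position of the sliding window in the image.
    """
    pts = np.asarray(points, dtype=int) + np.asarray(offset, dtype=int)
    window_oris = image_ori[pts[:, 0], pts[:, 1]]
    diff = np.asarray(template_oris, dtype=float) - window_oris
    return float(np.sum(np.abs(np.cos(diff))))


def locate_grasp_point(grasp_xy, center_xy, angle_deg, match_xy):
    """Map a template grasp point into the image.

    Rotates the grasp point about the template center by the matched
    rotation angle, then shifts it to the matched center position.
    The rotation sense depends on the image axis convention in use.
    """
    theta = np.deg2rad(angle_deg)
    rot = np.array([[np.cos(theta), -np.sin(theta)],
                    [np.sin(theta), np.cos(theta)]])
    rel = np.asarray(grasp_xy, dtype=float) - np.asarray(center_xy, dtype=float)
    return rot @ rel + np.asarray(match_xy, dtype=float)
```

A full matcher would evaluate `shape_similarity` for every window offset and every rotation template, which is the cost that the quantization and pre-computed response maps are meant to reduce.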

Keywords


Journal of Image and Graphics │ 京ICP备05080539号-4
