最新刊期

卷 30 , 期 7 , 2025

- “在自动驾驶、机器人技术等领域,点云场景分割技术取得重要进展,专家综述了研究方法和挑战,为未来发展提供方向。”

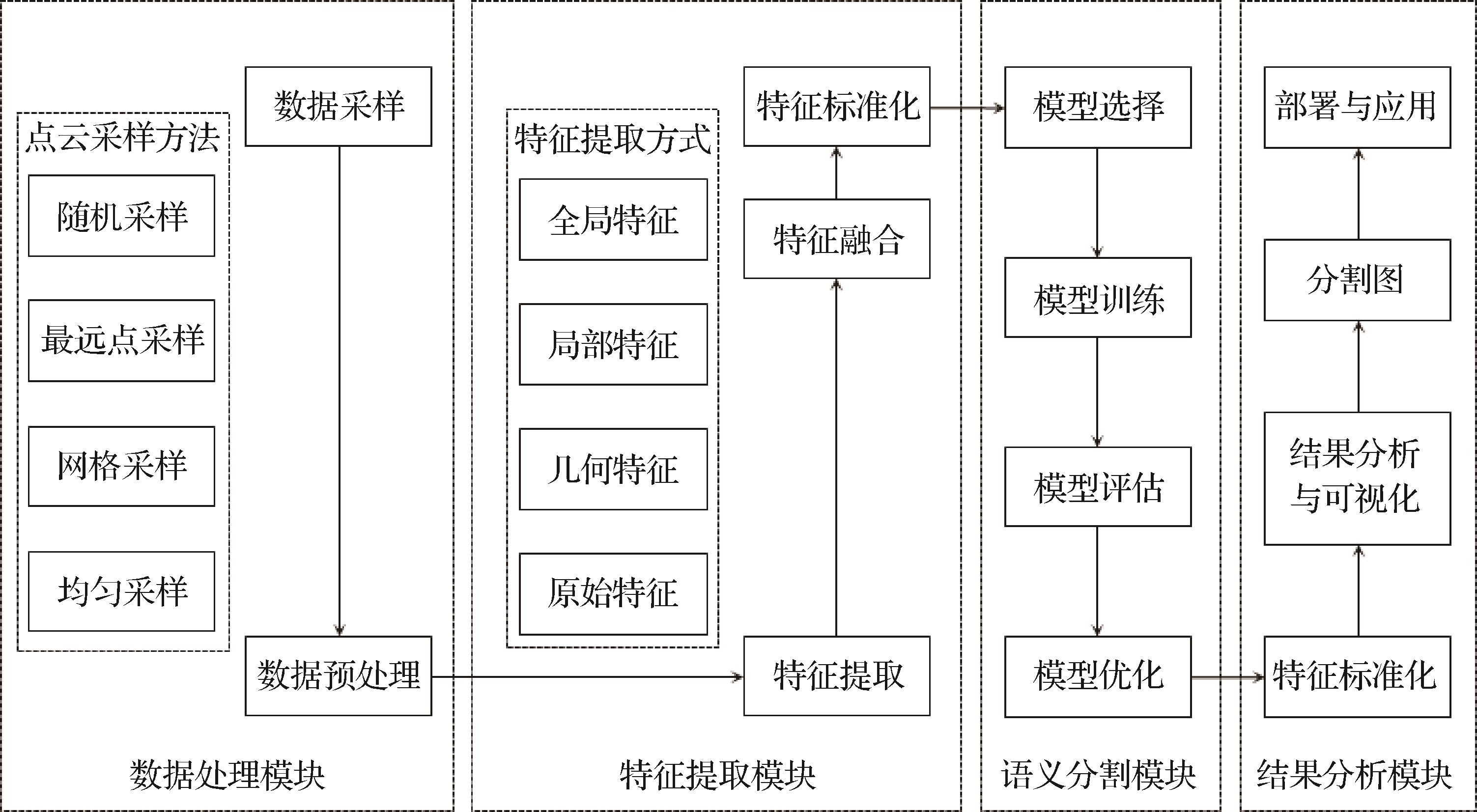

摘要:With the continuous advancement of depth sensing technology, particularly the widespread application of laser scanners across diverse scenarios, 3D point cloud technology is playing a pivotal role in an increasing number of fields. These fields include, but are not limited to, autonomous driving, robotics, geographic information systems, manufacturing, building information modeling, cultural heritage preservation, virtual reality, and augmented reality. As a high-precision, dense, and detailed form of spatial data representation, 3D point clouds can accurately capture various types of information, including the geometric shape, spatial structure, surface texture, and environmental layout of objects. Consequently, the processing, analysis, and comprehension of point cloud data are particularly significant in these applications, particularly point cloud segmentation technology, which serves as the foundation for advanced tasks, such as object recognition, scene understanding, map construction, and dynamic environment monitoring.This study aims to conduct a comprehensive review and in-depth exploration of current mainstream 3D point cloud segmentation methods from multiple perspectives, dissecting the latest advancements in this research domain. In particular, it begins with a detailed analysis and discussion of the fundamentals of 3D point cloud segmentation, covering aspects such as datasets, performance evaluation metrics, sampling methods, and feature extraction techniques. This study summarizes currently publicly available mainstream point cloud datasets, including ShapeNet, Semantic3D, S3DIS, and ScanNet, meticulously dissecting the characteristics, annotation forms, application scenarios, and technical challenges associated with each dataset. In addition, it delves into commonly utilized performance evaluation metrics in the semantic segmentation of point cloud scenes, including overall accuracy, mean class accuracy, and mean intersection over union. These metrics provide effective means for quantifying and comparing model performance, facilitating comprehensive evaluations and improvements across different tasks and scenarios.In the data preprocessing phase, this study systematically summarizes prevalent point cloud sampling strategies. Given that 3D point cloud data typically possess large scales and irregular distributions, a suitable sampling method is essential for reducing computational costs and enhancing model training efficiency. This study introduces strategies, such as farthest point sampling, random sampling, and grid sampling, analyzing their application scenarios, advantages, disadvantages, and specific implementation methods in various tasks. Furthermore, it discusses feature extraction techniques for 3D point clouds, encompassing various methods, including global feature extraction, local feature extraction, and the fusion of global and local features. Through effective feature extraction, more discriminative representations can be provided for subsequent segmentation tasks, aiding the model in improved object recognition and scene understanding.Building on this foundation, this study systematically reviews 3D point cloud segmentation methods from four distinct perspectives: point-based, voxel-based, view-based, and multi-modal fusion methods. First, point-based methods directly process each point within the point cloud, maintaining the high resolution and density of the data while avoiding information loss. Point-based methods are further subdivided into multilayer perceptron (MLP)-based methods, Transformer-based models, graph convolutional network-based methods, and other related approaches. Each category exhibits unique advantages in different application scenarios. For example, MLP is effective for capturing the local features of point clouds, while Transformer-based models excel in handling long-range dependencies and global relationships. Despite the strong performance of point-based methods, their direct processing of a large number of 3D points results in high computational complexity and relatively low efficiency when managing large-scale point cloud scenes.Second, this study presents voxel-based methods, which process point cloud data by partitioning them into regular 3D grids (voxels), effectively reducing data size and simplifying subsequent computations. This approach structures point cloud data, providing relatively stable performance in large-scale scenes. It is particularly applicable to scenarios with large scene sizes but low resolution requirements. However, the inevitable information loss and reduction in spatial resolution during voxelization limit performance in handling fine-grained tasks.Third, the view-based approach processes a 3D point cloud by projecting it onto a 2D plane, leveraging mature 2D image processing techniques and convolutional neural networks. This method transforms the point cloud segmentation task into a traditional image segmentation problem, enhancing processing efficiency, particularly in scenarios where point cloud density is high and rapid processing is required. However, projecting 3D information onto 2D space may lead to the loss of spatial geometric information, resulting in potentially lower accuracy compared with methods that directly handle 3D point clouds in certain applications.Lastly, this study explores multimodal fusion methods, which combine various forms of data, such as point clouds, voxels, and views, fully utilizing the complementarity of different modalities in scene understanding to enhance the accuracy, robustness, and generalization ability of point cloud segmentation.Subsequently, this study conducts a detailed analysis and comparison of the experimental results from different methods. Based on various datasets and performance evaluation metrics, it reveals the strengths and weaknesses of each method in diverse application scenarios. For example, point-based methods excel in fine segmentation tasks and can capture subtle geometric information, while voxel-based and view-based methods offer higher processing efficiency when dealing with large-scale point cloud scenes. Through the comparative analysis of experimental results, this study provides valuable references for point cloud segmentation tasks across different application scenarios.Finally, this study summarizes the major challenges that are currently experienced in the field of 3D point cloud segmentation, including the sparsity and irregularity of point cloud data, the influence of noise and missing points, insufficient generalization ability across diverse scenes, and the demand for real-time processing. This study also anticipates future research directions and proposes measures, such as a deeper understanding of the complex semantic structures of point cloud data through the integration of large language models, the introduction of semi-supervised and unsupervised learning methods to reduce reliance on labeled data, and enhancements in real-time performance and computational efficiency to further advance point cloud segmentation technology. We hope that this comprehensive review can provide a systematic reference for researchers and industrial applications in the field of point cloud technology, facilitating the implementation and development of 3D point cloud technology in a broader range of practical applications.关键词:3D point cloud;point cloud scene semantic segmentation;deep learning;sampling method;feature extraction1060|2426|0更新时间:2025-07-14

摘要:With the continuous advancement of depth sensing technology, particularly the widespread application of laser scanners across diverse scenarios, 3D point cloud technology is playing a pivotal role in an increasing number of fields. These fields include, but are not limited to, autonomous driving, robotics, geographic information systems, manufacturing, building information modeling, cultural heritage preservation, virtual reality, and augmented reality. As a high-precision, dense, and detailed form of spatial data representation, 3D point clouds can accurately capture various types of information, including the geometric shape, spatial structure, surface texture, and environmental layout of objects. Consequently, the processing, analysis, and comprehension of point cloud data are particularly significant in these applications, particularly point cloud segmentation technology, which serves as the foundation for advanced tasks, such as object recognition, scene understanding, map construction, and dynamic environment monitoring.This study aims to conduct a comprehensive review and in-depth exploration of current mainstream 3D point cloud segmentation methods from multiple perspectives, dissecting the latest advancements in this research domain. In particular, it begins with a detailed analysis and discussion of the fundamentals of 3D point cloud segmentation, covering aspects such as datasets, performance evaluation metrics, sampling methods, and feature extraction techniques. This study summarizes currently publicly available mainstream point cloud datasets, including ShapeNet, Semantic3D, S3DIS, and ScanNet, meticulously dissecting the characteristics, annotation forms, application scenarios, and technical challenges associated with each dataset. In addition, it delves into commonly utilized performance evaluation metrics in the semantic segmentation of point cloud scenes, including overall accuracy, mean class accuracy, and mean intersection over union. These metrics provide effective means for quantifying and comparing model performance, facilitating comprehensive evaluations and improvements across different tasks and scenarios.In the data preprocessing phase, this study systematically summarizes prevalent point cloud sampling strategies. Given that 3D point cloud data typically possess large scales and irregular distributions, a suitable sampling method is essential for reducing computational costs and enhancing model training efficiency. This study introduces strategies, such as farthest point sampling, random sampling, and grid sampling, analyzing their application scenarios, advantages, disadvantages, and specific implementation methods in various tasks. Furthermore, it discusses feature extraction techniques for 3D point clouds, encompassing various methods, including global feature extraction, local feature extraction, and the fusion of global and local features. Through effective feature extraction, more discriminative representations can be provided for subsequent segmentation tasks, aiding the model in improved object recognition and scene understanding.Building on this foundation, this study systematically reviews 3D point cloud segmentation methods from four distinct perspectives: point-based, voxel-based, view-based, and multi-modal fusion methods. First, point-based methods directly process each point within the point cloud, maintaining the high resolution and density of the data while avoiding information loss. Point-based methods are further subdivided into multilayer perceptron (MLP)-based methods, Transformer-based models, graph convolutional network-based methods, and other related approaches. Each category exhibits unique advantages in different application scenarios. For example, MLP is effective for capturing the local features of point clouds, while Transformer-based models excel in handling long-range dependencies and global relationships. Despite the strong performance of point-based methods, their direct processing of a large number of 3D points results in high computational complexity and relatively low efficiency when managing large-scale point cloud scenes.Second, this study presents voxel-based methods, which process point cloud data by partitioning them into regular 3D grids (voxels), effectively reducing data size and simplifying subsequent computations. This approach structures point cloud data, providing relatively stable performance in large-scale scenes. It is particularly applicable to scenarios with large scene sizes but low resolution requirements. However, the inevitable information loss and reduction in spatial resolution during voxelization limit performance in handling fine-grained tasks.Third, the view-based approach processes a 3D point cloud by projecting it onto a 2D plane, leveraging mature 2D image processing techniques and convolutional neural networks. This method transforms the point cloud segmentation task into a traditional image segmentation problem, enhancing processing efficiency, particularly in scenarios where point cloud density is high and rapid processing is required. However, projecting 3D information onto 2D space may lead to the loss of spatial geometric information, resulting in potentially lower accuracy compared with methods that directly handle 3D point clouds in certain applications.Lastly, this study explores multimodal fusion methods, which combine various forms of data, such as point clouds, voxels, and views, fully utilizing the complementarity of different modalities in scene understanding to enhance the accuracy, robustness, and generalization ability of point cloud segmentation.Subsequently, this study conducts a detailed analysis and comparison of the experimental results from different methods. Based on various datasets and performance evaluation metrics, it reveals the strengths and weaknesses of each method in diverse application scenarios. For example, point-based methods excel in fine segmentation tasks and can capture subtle geometric information, while voxel-based and view-based methods offer higher processing efficiency when dealing with large-scale point cloud scenes. Through the comparative analysis of experimental results, this study provides valuable references for point cloud segmentation tasks across different application scenarios.Finally, this study summarizes the major challenges that are currently experienced in the field of 3D point cloud segmentation, including the sparsity and irregularity of point cloud data, the influence of noise and missing points, insufficient generalization ability across diverse scenes, and the demand for real-time processing. This study also anticipates future research directions and proposes measures, such as a deeper understanding of the complex semantic structures of point cloud data through the integration of large language models, the introduction of semi-supervised and unsupervised learning methods to reduce reliance on labeled data, and enhancements in real-time performance and computational efficiency to further advance point cloud segmentation technology. We hope that this comprehensive review can provide a systematic reference for researchers and industrial applications in the field of point cloud technology, facilitating the implementation and development of 3D point cloud technology in a broader range of practical applications.关键词:3D point cloud;point cloud scene semantic segmentation;deep learning;sampling method;feature extraction1060|2426|0更新时间:2025-07-14 - “深度伪造技术研究进展,专家系统归纳检测方法,为识别伪造人脸提供解决方案。”

摘要:Deepfake technology refers to the synthesis of images, audio, and videos by using deep learning algorithms. This technology enables the precise mapping of facial features or other physical characteristics from one person onto a subject in another video, achieving highly realistic face-swapping effects. With advancements in algorithms and the increased accessibility of computational resources, the threshold for utilizing deepfake technology has gradually lowered, bringing convenience and numerous social and legal challenges. For example, deepfake technology is used to bring deceased actors back to the screen, providing a novel experience to the audience. Meanwhile, it is frequently exploited to impersonate citizens or leaders for fraudulent activities, produce pornographic content, or create fake news to influence public opinion. Consequently, the importance of deepfake detection technology is increasing, making it a significant focus of current research. To detect images and videos synthesized via deepfake technology, researchers must design models that can uncover subtle traces of manipulation within these media. However, accurately identifying these traces remains challenging due to several factors that complicate the detection process. First, rapid advancements in deepfake technology have made differentiating fake images and videos from authentic content increasingly difficult. As techniques such as generative adversarial networks (GANs) and diffusion models continue to evolve and improve, the texture, lighting, and motion within synthesized media become more seamlessly realistic, imposing significant challenges on detection models that seek to recognize subtle cues of manipulation. Second, forgers can employ a variety of countermeasures to obscure traces of manipulation, such as applying compression, cropping, or noise addition. Furthermore, forgers may create adversarial samples that are specifically crafted to exploit and bypass the vulnerability of detection models, making the identification of deepfake even more complex. Thirdly, the generalizability of deepfake detection methods remains a significant hurdle, because different generative techniques leave behind distinct forensic traces. For example, GAN-generated images frequently exhibit prominent grid-like artifacts in the frequency domain, while images produced through diffusion models typically leave only subtle, less detectable traces in this domain. Therefore, detection models that do not exclusively rely on low-level, technique-specific features but instead focus on capturing deep, generalized features that ensure robustness and applicability across diverse forgery types and detection scenarios are crucial. To address these multifaceted challenges, numerous scholars have proposed a variety of detection methods that are designed to capture nuanced traces left by deepfake manipulations. For example, certain approaches focus on identifying subtle forgery artifacts within the frequency domain of images, capitalizing on the distinct spectral anomalies that forgeries frequently introduce. Other methods prioritize assessing temporal consistency across video frames, because unnatural transitions or frame-level inconsistencies can indicate synthesized content. In addition, some detection strategies focus on evaluating synchronization among different modalities within videos, such as audio and visual elements, to detect inconsistencies that may reveal forgery. At present, several review papers in academia have summarized key research and developments within this domain. However, given the rapid advancements in generative artificial intelligence (AI), fake faces created with diffusion models have recently gained popularity, with scarcely any review that addresses the detection of such forgeries. Furthermore, as generative AI progresses continues advancing toward multimodal integration, deepfake detection methods are similarly evolving to incorporate features from multiple modalities. Nonetheless, the majority of existing reviews lack sufficient focus on multimodal detection approaches, underscoring a gap in the literature that this review seeks to address. To provide an up-to-date overview of face deepfake detection, this review first organizes commonly used datasets and evaluation metrics in the field. Then, it divides detection methods into image-level and video-level face deepfake detection. Based on feature selection approaches, image-level methods are categorized into spatial-domain and frequency-domain methods, while video-level methods are categorized into approaches based on spatiotemporal inconsistencies, biological features, and multimodal features. Each category is thoroughly analyzed with regard to its principles, strengths, weaknesses, and developmental trends. Finally, current research status and challenges in face deepfake detection are summarized, and future research directions are discussed. Compared with other related reviews, the novelty of this review lies in its summary of detection methods that specifically targets text-to-image/video generation and multimodal detection methods. This review is aligned with the latest trends in generative AI, offering a comprehensive and up-to-date summary of recent advancements in face deepfake detection. By examining the latest methodologies, including those developed to address forgeries created through advanced techniques, such as diffusion models and multimodal integration, this review reflects the ongoing evolution of detection technology. It highlights the progress made and the challenges that remain, positioning itself as a valuable resource for researchers who aim to navigate and contribute to the cutting-edge developments in this rapidly advancing field. A comprehensive analysis of face deepfake detection methods reveals that current techniques achieve nearly 100% accuracy within the training datasets, particularly those leveraging advanced models, such as Transformers. However, their performance frequently declines significantly in cross-dataset testing, particularly for spatial-domain and frequency-domain detection methods. This decline suggests that these approaches may fail to capture essential, generalizable features that are robust across varying datasets. By contrast, biological feature-based methods demonstrate superior generalization capabilities, successfully adapting to different contexts. However, they require carefully tailored training data and specific application conditions to reach optimal performance. Meanwhile, multimodal detection methods, which integrate features across multiple modalities, offer enhanced robustness and adaptability due to their layered approach. However, this added complexity frequently results in higher computational costs and increased model intricacy. Given the diversity in feature selection, along with the unique advantages and limitations inherent to each detection approach, no single method has yet provided a fully comprehensive solution to the deepfake detection challenge. This reality underscores the critical need for continued research in this evolving field and highlights the importance of this review in mapping current advancements and identifying future research directions.关键词:DeepFake detection;face forgery detection;face image;face video;spatial domain feature;frequency domain feature;temporal features;multimodal features1326|2408|0更新时间:2025-07-14

摘要:Deepfake technology refers to the synthesis of images, audio, and videos by using deep learning algorithms. This technology enables the precise mapping of facial features or other physical characteristics from one person onto a subject in another video, achieving highly realistic face-swapping effects. With advancements in algorithms and the increased accessibility of computational resources, the threshold for utilizing deepfake technology has gradually lowered, bringing convenience and numerous social and legal challenges. For example, deepfake technology is used to bring deceased actors back to the screen, providing a novel experience to the audience. Meanwhile, it is frequently exploited to impersonate citizens or leaders for fraudulent activities, produce pornographic content, or create fake news to influence public opinion. Consequently, the importance of deepfake detection technology is increasing, making it a significant focus of current research. To detect images and videos synthesized via deepfake technology, researchers must design models that can uncover subtle traces of manipulation within these media. However, accurately identifying these traces remains challenging due to several factors that complicate the detection process. First, rapid advancements in deepfake technology have made differentiating fake images and videos from authentic content increasingly difficult. As techniques such as generative adversarial networks (GANs) and diffusion models continue to evolve and improve, the texture, lighting, and motion within synthesized media become more seamlessly realistic, imposing significant challenges on detection models that seek to recognize subtle cues of manipulation. Second, forgers can employ a variety of countermeasures to obscure traces of manipulation, such as applying compression, cropping, or noise addition. Furthermore, forgers may create adversarial samples that are specifically crafted to exploit and bypass the vulnerability of detection models, making the identification of deepfake even more complex. Thirdly, the generalizability of deepfake detection methods remains a significant hurdle, because different generative techniques leave behind distinct forensic traces. For example, GAN-generated images frequently exhibit prominent grid-like artifacts in the frequency domain, while images produced through diffusion models typically leave only subtle, less detectable traces in this domain. Therefore, detection models that do not exclusively rely on low-level, technique-specific features but instead focus on capturing deep, generalized features that ensure robustness and applicability across diverse forgery types and detection scenarios are crucial. To address these multifaceted challenges, numerous scholars have proposed a variety of detection methods that are designed to capture nuanced traces left by deepfake manipulations. For example, certain approaches focus on identifying subtle forgery artifacts within the frequency domain of images, capitalizing on the distinct spectral anomalies that forgeries frequently introduce. Other methods prioritize assessing temporal consistency across video frames, because unnatural transitions or frame-level inconsistencies can indicate synthesized content. In addition, some detection strategies focus on evaluating synchronization among different modalities within videos, such as audio and visual elements, to detect inconsistencies that may reveal forgery. At present, several review papers in academia have summarized key research and developments within this domain. However, given the rapid advancements in generative artificial intelligence (AI), fake faces created with diffusion models have recently gained popularity, with scarcely any review that addresses the detection of such forgeries. Furthermore, as generative AI progresses continues advancing toward multimodal integration, deepfake detection methods are similarly evolving to incorporate features from multiple modalities. Nonetheless, the majority of existing reviews lack sufficient focus on multimodal detection approaches, underscoring a gap in the literature that this review seeks to address. To provide an up-to-date overview of face deepfake detection, this review first organizes commonly used datasets and evaluation metrics in the field. Then, it divides detection methods into image-level and video-level face deepfake detection. Based on feature selection approaches, image-level methods are categorized into spatial-domain and frequency-domain methods, while video-level methods are categorized into approaches based on spatiotemporal inconsistencies, biological features, and multimodal features. Each category is thoroughly analyzed with regard to its principles, strengths, weaknesses, and developmental trends. Finally, current research status and challenges in face deepfake detection are summarized, and future research directions are discussed. Compared with other related reviews, the novelty of this review lies in its summary of detection methods that specifically targets text-to-image/video generation and multimodal detection methods. This review is aligned with the latest trends in generative AI, offering a comprehensive and up-to-date summary of recent advancements in face deepfake detection. By examining the latest methodologies, including those developed to address forgeries created through advanced techniques, such as diffusion models and multimodal integration, this review reflects the ongoing evolution of detection technology. It highlights the progress made and the challenges that remain, positioning itself as a valuable resource for researchers who aim to navigate and contribute to the cutting-edge developments in this rapidly advancing field. A comprehensive analysis of face deepfake detection methods reveals that current techniques achieve nearly 100% accuracy within the training datasets, particularly those leveraging advanced models, such as Transformers. However, their performance frequently declines significantly in cross-dataset testing, particularly for spatial-domain and frequency-domain detection methods. This decline suggests that these approaches may fail to capture essential, generalizable features that are robust across varying datasets. By contrast, biological feature-based methods demonstrate superior generalization capabilities, successfully adapting to different contexts. However, they require carefully tailored training data and specific application conditions to reach optimal performance. Meanwhile, multimodal detection methods, which integrate features across multiple modalities, offer enhanced robustness and adaptability due to their layered approach. However, this added complexity frequently results in higher computational costs and increased model intricacy. Given the diversity in feature selection, along with the unique advantages and limitations inherent to each detection approach, no single method has yet provided a fully comprehensive solution to the deepfake detection challenge. This reality underscores the critical need for continued research in this evolving field and highlights the importance of this review in mapping current advancements and identifying future research directions.关键词:DeepFake detection;face forgery detection;face image;face video;spatial domain feature;frequency domain feature;temporal features;multimodal features1326|2408|0更新时间:2025-07-14

Review

- “在3D视觉领域,研究者提出了基于外部迭代分数模型的点云去噪方法,有效提高了去噪精度和速度。”

摘要:ObjectivePoint cloud data play an irreplaceable role as important means of environmental sensing and spatial representation in a variety of fields, such as autonomous driving, robotics, and 3D reconstruction. A point cloud is a form of data that represents a discrete set of points on the surface of an object in space by means of 3D coordinates. It is typically captured using 3D imaging sensors, such as LiDAR and depth cameras. These sensors can efficiently acquire spatial information in the environment, providing critical data support for applications, such as environment sensing for self-driving vehicles, autonomous navigation for robots, and 3D reconstruction of complex scenes. However, the process of acquiring point cloud data is frequently subject to multiple influences from the sensor’s performance, environmental conditions, and external disturbances. In such case, the acquired point cloud data may contain a large amount of noise, which is manifested as a deviation of discrete point positions, redundant points, or missing point information. This condition negatively affects subsequent tasks. Therefore, the effective denoising of noisy point cloud data has become a fundamental and extremely important research problem in the field of point clouds. Traditional denoising methods typically rely on geometric processing techniques to identify and remove noisy points by analyzing the geometric properties of the local neighborhood. However, in complex real-world scenarios, traditional methods frequently experience difficulty in achieving satisfactory results when dealing with large-scale data, nonuniformly distributed noise, and fast denoising in dynamic scenarios. For this reason, an increasing number of studies have begun to combine deep learning methods with design denoising algorithms that can efficiently deal with large-scale noisy point cloud data by taking advantage of the 3D structural properties of point clouds. These methods cannot only significantly improve denoising accuracy but also handle multiple types of noise and retain important structural information in a point cloud. At present, deep learning-based methods typically train neural networks to predict point displacements and then move noisy points to a clean surface below. However, such algorithms either have a long iteration time or suffer from over-convergence or a large deviation of displacement when moving points to a clean surface, affecting denoising performance. To improve denoising accuracy and speed, this study proposes point cloud denoising based on an external iterative fractional model.MethodFirst, the input noisy point cloud is chunked and divided into parts to be inputted into the network. Next, the part of the point cloud with noise is inputted into the feature extraction module, through which the features of a point cloud are extracted. Then, the features are inputted into the score estimation unit to obtain the score of the point cloud. Considering that the iteration time of the score model is too long, we add the method of momentum gradient ascent to accelerate the iteration of the point cloud. Finally, the denoised part of the point cloud is spliced together to form a complete denoised point cloud. After the denoised point cloud is obtained, it is used as the input for the next denoising, and the above operations are repeated. The model in this study is set with four external iterations as the total denoising process. Among them, the external iteration is a complete point cloud denoising process that includes a series of complete operations, such as point cloud chunking, feature extraction, and score estimation. By contrast, internal iteration refers to the multiple shifts of noise points by the estimated scores in the score estimation model. In this study, the operation that originally requires a large number of internal iterations is transformed into a limited number of external iterations, accelerating denoising speed.ResultThe experiments are compared with seven models, namely, point clean net(PCN), graph-convolutional point cloud denoising network(GPDNet), DMRDenoise, PDFlow, Pointfilter, Scoredenoise, and IterativePFN, in 1%, 2%, and 3% noise for the point cloud upsampling(PU) datasets 10 K and 50 K point clouds. The model is optimal in the evaluation metrics, Chamfer distance (CD) and point-to-mesh distance (P2M) (lower values are better). A comparison of scene denoising is performed on the RueMadame dataset, which contains only noisy scanned data, and thus, we only consider visual results. After denoising by using our method, the least noise is left in the scene. Thereafter, denoising time comparison is performed on this dataset, and our method reduces time by nearly 30% compared with the model with the third-place performance, which is similar to the time of the model with the second-place performance. We then perform a comparison of generalizability on the PC dataset of 10 K and 50 K point clouds with 3% noise. Our method also has the lowest CD and P2M values. Finally, we perform a series of ablation experiments to demonstrate the effectiveness of our model. All the aforementioned experiments are tested on NVIDIA GeForce RTX 4090 graphics processing unit to ensure their fairness and impartiality.ConclusionThe point cloud denoising model proposed in this study exhibits better denoising accuracy and faster denoising speed compared with other methods. In the future, we hope to apply our network architecture to other tasks, such as point cloud up-sampling and complementation.关键词:point cloud denoising;external iterative;score model;momentum gradient ascent;deep learning370|426|0更新时间:2025-07-14

摘要:ObjectivePoint cloud data play an irreplaceable role as important means of environmental sensing and spatial representation in a variety of fields, such as autonomous driving, robotics, and 3D reconstruction. A point cloud is a form of data that represents a discrete set of points on the surface of an object in space by means of 3D coordinates. It is typically captured using 3D imaging sensors, such as LiDAR and depth cameras. These sensors can efficiently acquire spatial information in the environment, providing critical data support for applications, such as environment sensing for self-driving vehicles, autonomous navigation for robots, and 3D reconstruction of complex scenes. However, the process of acquiring point cloud data is frequently subject to multiple influences from the sensor’s performance, environmental conditions, and external disturbances. In such case, the acquired point cloud data may contain a large amount of noise, which is manifested as a deviation of discrete point positions, redundant points, or missing point information. This condition negatively affects subsequent tasks. Therefore, the effective denoising of noisy point cloud data has become a fundamental and extremely important research problem in the field of point clouds. Traditional denoising methods typically rely on geometric processing techniques to identify and remove noisy points by analyzing the geometric properties of the local neighborhood. However, in complex real-world scenarios, traditional methods frequently experience difficulty in achieving satisfactory results when dealing with large-scale data, nonuniformly distributed noise, and fast denoising in dynamic scenarios. For this reason, an increasing number of studies have begun to combine deep learning methods with design denoising algorithms that can efficiently deal with large-scale noisy point cloud data by taking advantage of the 3D structural properties of point clouds. These methods cannot only significantly improve denoising accuracy but also handle multiple types of noise and retain important structural information in a point cloud. At present, deep learning-based methods typically train neural networks to predict point displacements and then move noisy points to a clean surface below. However, such algorithms either have a long iteration time or suffer from over-convergence or a large deviation of displacement when moving points to a clean surface, affecting denoising performance. To improve denoising accuracy and speed, this study proposes point cloud denoising based on an external iterative fractional model.MethodFirst, the input noisy point cloud is chunked and divided into parts to be inputted into the network. Next, the part of the point cloud with noise is inputted into the feature extraction module, through which the features of a point cloud are extracted. Then, the features are inputted into the score estimation unit to obtain the score of the point cloud. Considering that the iteration time of the score model is too long, we add the method of momentum gradient ascent to accelerate the iteration of the point cloud. Finally, the denoised part of the point cloud is spliced together to form a complete denoised point cloud. After the denoised point cloud is obtained, it is used as the input for the next denoising, and the above operations are repeated. The model in this study is set with four external iterations as the total denoising process. Among them, the external iteration is a complete point cloud denoising process that includes a series of complete operations, such as point cloud chunking, feature extraction, and score estimation. By contrast, internal iteration refers to the multiple shifts of noise points by the estimated scores in the score estimation model. In this study, the operation that originally requires a large number of internal iterations is transformed into a limited number of external iterations, accelerating denoising speed.ResultThe experiments are compared with seven models, namely, point clean net(PCN), graph-convolutional point cloud denoising network(GPDNet), DMRDenoise, PDFlow, Pointfilter, Scoredenoise, and IterativePFN, in 1%, 2%, and 3% noise for the point cloud upsampling(PU) datasets 10 K and 50 K point clouds. The model is optimal in the evaluation metrics, Chamfer distance (CD) and point-to-mesh distance (P2M) (lower values are better). A comparison of scene denoising is performed on the RueMadame dataset, which contains only noisy scanned data, and thus, we only consider visual results. After denoising by using our method, the least noise is left in the scene. Thereafter, denoising time comparison is performed on this dataset, and our method reduces time by nearly 30% compared with the model with the third-place performance, which is similar to the time of the model with the second-place performance. We then perform a comparison of generalizability on the PC dataset of 10 K and 50 K point clouds with 3% noise. Our method also has the lowest CD and P2M values. Finally, we perform a series of ablation experiments to demonstrate the effectiveness of our model. All the aforementioned experiments are tested on NVIDIA GeForce RTX 4090 graphics processing unit to ensure their fairness and impartiality.ConclusionThe point cloud denoising model proposed in this study exhibits better denoising accuracy and faster denoising speed compared with other methods. In the future, we hope to apply our network architecture to other tasks, such as point cloud up-sampling and complementation.关键词:point cloud denoising;external iterative;score model;momentum gradient ascent;deep learning370|426|0更新时间:2025-07-14 - “在软件图形用户界面设计领域,研究者提出了基于Transformer的图标生成方法IconFormer,有效提升了图标设计的效率和创新性。”

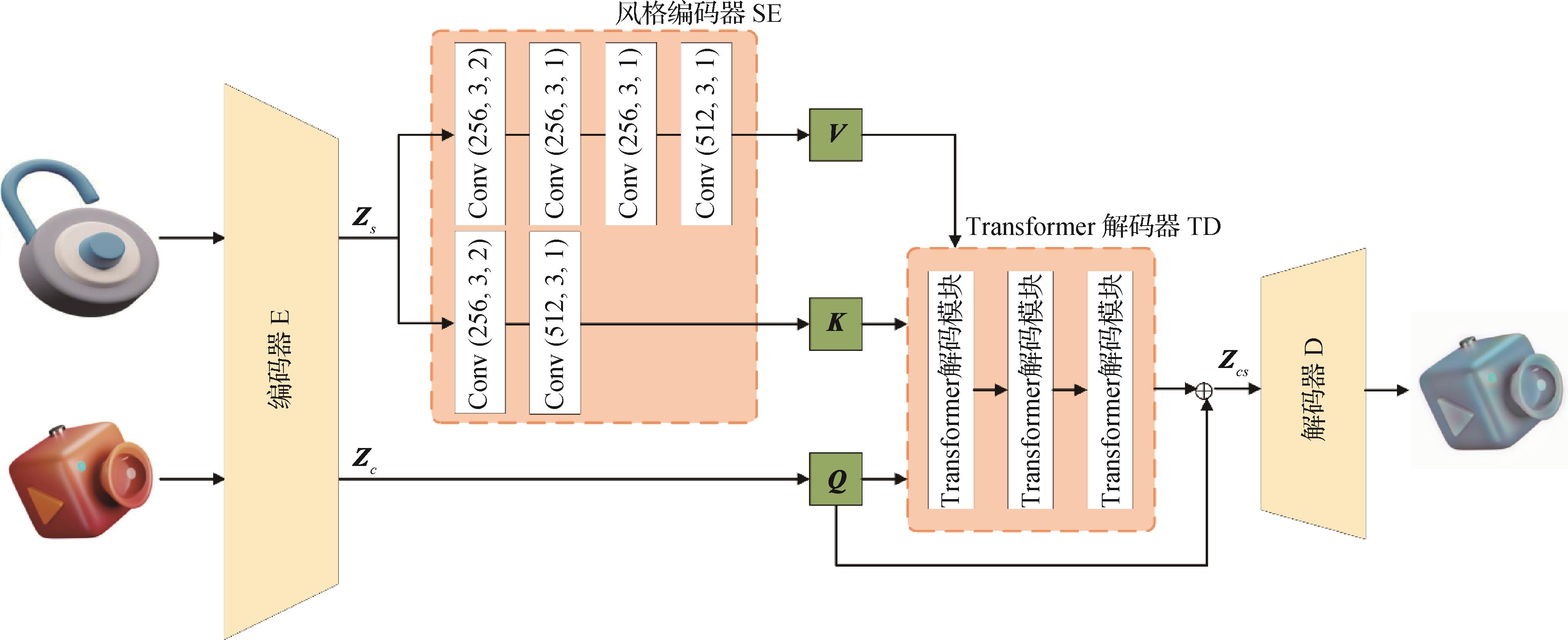

摘要:ObjectiveIcons are essential components for the graphical user interface (GUI) design of software or web sites because they can quickly and directly convey their meaning to users through visual information, improving the usability of software and websites. However, manually creating a large number of icon images with a consistent style and a harmonious color scheme is a labor-exhaustive and time-expensive procedure. Moreover, professional artists are required to do this job. Therefore, researchers have explored methods for automatically generating icons by using deep learning models to improve the efficiency of GUI design in software. Several state-of-the-art icon generation methods have been proposed in recent years. However, some of these methods based on generative adversarial networks suffer from the problem of insufficient diversity in the generated icons, while some of these methods require users to provide initial icon sketches or color prompts as auxiliary inputs, increasing the complexity of the generation process. Therefore, this study proposes a novel icon generation method based on Transformer and convolutional neural network (CNN), with which new icons can be generated based on given pair of content icon and style icon. In this manner, icons can be generated more efficiently and flexibly than in previous methods with better quality. The proposed model in this study, called IconFormer, can effectively establish the relationship between content and style through Transformer and avoid the problems of missing local detail information of the content and insufficient stylization.MethodThis study proposes an icon generation model, called IconFormer, based on deep neural networks. The network architecture is composed of a feature encoder based on VGG, a style encoder based on CNNs, a multilayer Transformer decoder, and a CNN decoder. The style encoder is designed to discover more style information from style features. The Transformer decoder achieves a high degree of integration between content encoding and style encoding. To train and test the proposed icon generation model, this study collects a high-quality dataset that contains 43 741 icon images, comprising icons of different styles, categories, and structures. The icon dataset is organized into pairs, with each pair containing a content icon and a style icon. The dataset is divided into a training set and a testing set, following a ratio of 9∶1. The content and style features are first extracted from the input content icon and style icon with the ImageNet pretrained VGG19 encoder, and then the style features are further encoded into style key K and style value V with the style encoder. Subsequently, the content features as Q, style key K, and style value V are inputted into the multilayer Transformer decoder for feature fusion. Finally, the fused features are decoded into a stylized new icon with the CNN decoder. A new loss function integrated by content loss, style loss, identity loss, and gradient loss is adopted to optimize network parameters.ResultThe proposed IconFormer is evaluated on the icon dataset and compared with previous state-of-the-art methods under the same configuration. These state-of-the-art methods include AdaIN(adaptive instance normalization), ArtFlow, StyleFormer, StyTr2(style transfer transformer), CAP-VSTNet(content affinity preserved versatile style transfer network), and S2WAT(strips window attention Transformer). The experimental results suggest that the icons generated by the proposed IconFormer are more complete in color and structure than those generated by the previous methods. The icons generated by AdaIN, ArtFlow, and StyleFormer demonstrate content loss and insufficient stylization in different extents. StyTr2 cannot effectively distinguish the primary structure from background information of an icon, and most of the background of its generated icons are colorized. The quantitative analysis results show that the proposed IconFormer outperforms previous methods in terms of content and gradient differences. AdaIN results in the highest content difference, indicating that this method exhibits content loss, while ArtFlow presents the highest style difference, indicating that this method cannot effectively stylize content icons. Several ablation experiments are conducted to verify the effectiveness of the feature encoder, style encoder, and loss function definition in the icon generation process. The result shows that the VGG feature extractor, style encoder, and integrated loss function with gradient loss have positive effects on the resulting icons. Additional experiments are conducted to generate a set of icons with a unified style, and the results show that IconFormer is extremely convenient to generate a set of icons with a consistent style, harmonious colors, and high quality.ConclusionThe icon generation model, IconFormer, based on CNNs and Transformer proposed in this study combines the advantages of CNNs and Transformers, and thus can generate new icons with high quality and efficiency, saving time and labor cost for the GUI design of software or websites.关键词:icon generation;image style transfer;convolutional neural network(CNN);Transformer;self-attention mechanism418|376|0更新时间:2025-07-14

摘要:ObjectiveIcons are essential components for the graphical user interface (GUI) design of software or web sites because they can quickly and directly convey their meaning to users through visual information, improving the usability of software and websites. However, manually creating a large number of icon images with a consistent style and a harmonious color scheme is a labor-exhaustive and time-expensive procedure. Moreover, professional artists are required to do this job. Therefore, researchers have explored methods for automatically generating icons by using deep learning models to improve the efficiency of GUI design in software. Several state-of-the-art icon generation methods have been proposed in recent years. However, some of these methods based on generative adversarial networks suffer from the problem of insufficient diversity in the generated icons, while some of these methods require users to provide initial icon sketches or color prompts as auxiliary inputs, increasing the complexity of the generation process. Therefore, this study proposes a novel icon generation method based on Transformer and convolutional neural network (CNN), with which new icons can be generated based on given pair of content icon and style icon. In this manner, icons can be generated more efficiently and flexibly than in previous methods with better quality. The proposed model in this study, called IconFormer, can effectively establish the relationship between content and style through Transformer and avoid the problems of missing local detail information of the content and insufficient stylization.MethodThis study proposes an icon generation model, called IconFormer, based on deep neural networks. The network architecture is composed of a feature encoder based on VGG, a style encoder based on CNNs, a multilayer Transformer decoder, and a CNN decoder. The style encoder is designed to discover more style information from style features. The Transformer decoder achieves a high degree of integration between content encoding and style encoding. To train and test the proposed icon generation model, this study collects a high-quality dataset that contains 43 741 icon images, comprising icons of different styles, categories, and structures. The icon dataset is organized into pairs, with each pair containing a content icon and a style icon. The dataset is divided into a training set and a testing set, following a ratio of 9∶1. The content and style features are first extracted from the input content icon and style icon with the ImageNet pretrained VGG19 encoder, and then the style features are further encoded into style key K and style value V with the style encoder. Subsequently, the content features as Q, style key K, and style value V are inputted into the multilayer Transformer decoder for feature fusion. Finally, the fused features are decoded into a stylized new icon with the CNN decoder. A new loss function integrated by content loss, style loss, identity loss, and gradient loss is adopted to optimize network parameters.ResultThe proposed IconFormer is evaluated on the icon dataset and compared with previous state-of-the-art methods under the same configuration. These state-of-the-art methods include AdaIN(adaptive instance normalization), ArtFlow, StyleFormer, StyTr2(style transfer transformer), CAP-VSTNet(content affinity preserved versatile style transfer network), and S2WAT(strips window attention Transformer). The experimental results suggest that the icons generated by the proposed IconFormer are more complete in color and structure than those generated by the previous methods. The icons generated by AdaIN, ArtFlow, and StyleFormer demonstrate content loss and insufficient stylization in different extents. StyTr2 cannot effectively distinguish the primary structure from background information of an icon, and most of the background of its generated icons are colorized. The quantitative analysis results show that the proposed IconFormer outperforms previous methods in terms of content and gradient differences. AdaIN results in the highest content difference, indicating that this method exhibits content loss, while ArtFlow presents the highest style difference, indicating that this method cannot effectively stylize content icons. Several ablation experiments are conducted to verify the effectiveness of the feature encoder, style encoder, and loss function definition in the icon generation process. The result shows that the VGG feature extractor, style encoder, and integrated loss function with gradient loss have positive effects on the resulting icons. Additional experiments are conducted to generate a set of icons with a unified style, and the results show that IconFormer is extremely convenient to generate a set of icons with a consistent style, harmonious colors, and high quality.ConclusionThe icon generation model, IconFormer, based on CNNs and Transformer proposed in this study combines the advantages of CNNs and Transformers, and thus can generate new icons with high quality and efficiency, saving time and labor cost for the GUI design of software or websites.关键词:icon generation;image style transfer;convolutional neural network(CNN);Transformer;self-attention mechanism418|376|0更新时间:2025-07-14

Image Processing and Coding

- “在目标检测领域,ASAHI算法针对高分辨率图像中小目标检测难题,通过自适应调整切片数量减少冗余计算,提高检测精度和速度。”

摘要:ObjectiveObject detection has attracted considerable attention because of its wide application in various fields. In recent years, the progress of deep learning technology has facilitated the development of object detection algorithms combined with deep convolutional neural networks. In natural scenes, traditional object detectors have achieved excellent results. However, current object detection algorithms still encounter difficulties in small object detection. The reason is that most aerial images refer to complex high-resolution scenes, and some common problems, such as high density, unfixed shooting angle, small size, and high variability of targets, have introduced great challenges to existing object detection methods. Thus, small object detection has emerged as a key area in the field of object detection research. Its broad applications mainly include identification of early small lesions and masses in medical imaging, remote sensing exploration in military operations, and location analysis of small defects in industrial production. Some researchers have obtained high-resolution image features through multiple up-sampling operations, while another set of approaches effectively deals with problems such as high intensity by adding a penalty item in the post-processing stage. Among them, one excellent work is the use of slicing strategy, which slices the image into smaller image blocks to enlarge the receptive field. However, the existing slicing-based methods involve redundant computation that increases the calculation cost and reduces the detection speed.MethodTherefore, a new adaptive slicing method, which is called adaptive slicing-aided hyper inference (ASAHI), is proposed in this study. This approach focuses on the number of slices rather than the traditional slice size. The approach can adaptively adjust the number of slices according to the image resolution to reduce the performance loss caused by redundant calculation. Specifically, in the inference stage, the work first divides the input image into 6 or 12 overlapping patches using the ASAHI algorithm. Then, it interpolates each image patch to maintain the aspect ratio. Next, considering the obvious defects of the slicing strategy in detecting large objects, this method separately performs forward computation on the sliced image patches and the complete input image. Finally, the post-processing stage integrates a faster and efficient Cluster-NMS method and DIoU penalty term to improve accuracy and detection speed in high-density scenes. This method, which is called Cluster-DIoU-NMS(CDN), merges the ASAHI inference and full-image inference results and resizes them back to the original image size. Correspondingly, the dataset constructed in the training stage also includes slice image blocks to support the ASAHI inference. The dataset of slicing images and the pre-training dataset of the entire images together constitute the fine-tuning dataset for the training of this work. Notably, the slicing method used in the fine-tuning dataset can be either the ASAHI algorithm or the conventional sliding window method. In the ASAHI slicing process, this method sets a distinction threshold to control the number of slices . If the length or width of the image exceeds this threshold, then the image will be cut into total 12 slices; otherwise, it will be cut into total 6 slices. Thereafter, the width and height of the slice block are calculated according to the value of , and the coordinate position of the slice is determined. After the abovementioned calculation, the ASAHI algorithm realizes the adaptive adjustment of slice size within a limited range by controlling the number of slices.ResultBroad experiments demonstrate that ASAHI exhibits competitive performance on the VisDrone and xView datasets. The results show that the proposed method achieves the highest mAP50 scores (45.6% and 22.7%) and fast inference speeds (4.88 images per second and 3.58 images per second) on both datasets. In addition, the mAP and mAP75 increase by 1.7% and 1.1%, respectively, on the VisDrone2019-DET-test dataset. Meanwhile, the mAP and mAP75 improve by 1.43% and 0.9%, respectively, on the xView test set. On the VisDrone2019-dt-val dataset, the mAP50 of the experiment exceeds 56.8%. Compared with state of the art, the proposed method achieves the highest mAP (36.0%), mAP75 (28.2%), and mAP50 (56.8%) values, with a highest processing speed of 5.26 images per second, which suggests a better balance performance.ConclusionThe proposed algorithm can effectively handle complex factors, such as high density, different shooting angles, and high variability, in high-resolution scenes. It can also achieve high-quality detection of small objects.关键词:object detection;small object detection;sliced inference;VisDrone;xView213|505|0更新时间:2025-07-14

摘要:ObjectiveObject detection has attracted considerable attention because of its wide application in various fields. In recent years, the progress of deep learning technology has facilitated the development of object detection algorithms combined with deep convolutional neural networks. In natural scenes, traditional object detectors have achieved excellent results. However, current object detection algorithms still encounter difficulties in small object detection. The reason is that most aerial images refer to complex high-resolution scenes, and some common problems, such as high density, unfixed shooting angle, small size, and high variability of targets, have introduced great challenges to existing object detection methods. Thus, small object detection has emerged as a key area in the field of object detection research. Its broad applications mainly include identification of early small lesions and masses in medical imaging, remote sensing exploration in military operations, and location analysis of small defects in industrial production. Some researchers have obtained high-resolution image features through multiple up-sampling operations, while another set of approaches effectively deals with problems such as high intensity by adding a penalty item in the post-processing stage. Among them, one excellent work is the use of slicing strategy, which slices the image into smaller image blocks to enlarge the receptive field. However, the existing slicing-based methods involve redundant computation that increases the calculation cost and reduces the detection speed.MethodTherefore, a new adaptive slicing method, which is called adaptive slicing-aided hyper inference (ASAHI), is proposed in this study. This approach focuses on the number of slices rather than the traditional slice size. The approach can adaptively adjust the number of slices according to the image resolution to reduce the performance loss caused by redundant calculation. Specifically, in the inference stage, the work first divides the input image into 6 or 12 overlapping patches using the ASAHI algorithm. Then, it interpolates each image patch to maintain the aspect ratio. Next, considering the obvious defects of the slicing strategy in detecting large objects, this method separately performs forward computation on the sliced image patches and the complete input image. Finally, the post-processing stage integrates a faster and efficient Cluster-NMS method and DIoU penalty term to improve accuracy and detection speed in high-density scenes. This method, which is called Cluster-DIoU-NMS(CDN), merges the ASAHI inference and full-image inference results and resizes them back to the original image size. Correspondingly, the dataset constructed in the training stage also includes slice image blocks to support the ASAHI inference. The dataset of slicing images and the pre-training dataset of the entire images together constitute the fine-tuning dataset for the training of this work. Notably, the slicing method used in the fine-tuning dataset can be either the ASAHI algorithm or the conventional sliding window method. In the ASAHI slicing process, this method sets a distinction threshold to control the number of slices . If the length or width of the image exceeds this threshold, then the image will be cut into total 12 slices; otherwise, it will be cut into total 6 slices. Thereafter, the width and height of the slice block are calculated according to the value of , and the coordinate position of the slice is determined. After the abovementioned calculation, the ASAHI algorithm realizes the adaptive adjustment of slice size within a limited range by controlling the number of slices.ResultBroad experiments demonstrate that ASAHI exhibits competitive performance on the VisDrone and xView datasets. The results show that the proposed method achieves the highest mAP50 scores (45.6% and 22.7%) and fast inference speeds (4.88 images per second and 3.58 images per second) on both datasets. In addition, the mAP and mAP75 increase by 1.7% and 1.1%, respectively, on the VisDrone2019-DET-test dataset. Meanwhile, the mAP and mAP75 improve by 1.43% and 0.9%, respectively, on the xView test set. On the VisDrone2019-dt-val dataset, the mAP50 of the experiment exceeds 56.8%. Compared with state of the art, the proposed method achieves the highest mAP (36.0%), mAP75 (28.2%), and mAP50 (56.8%) values, with a highest processing speed of 5.26 images per second, which suggests a better balance performance.ConclusionThe proposed algorithm can effectively handle complex factors, such as high density, different shooting angles, and high variability, in high-resolution scenes. It can also achieve high-quality detection of small objects.关键词:object detection;small object detection;sliced inference;VisDrone;xView213|505|0更新时间:2025-07-14 - “最新研究突破了传统户型平面图矢量化技术,通过引导集扩散模型提升墙体矢量化精度,为建筑与家居设计提供可靠技术支持。”

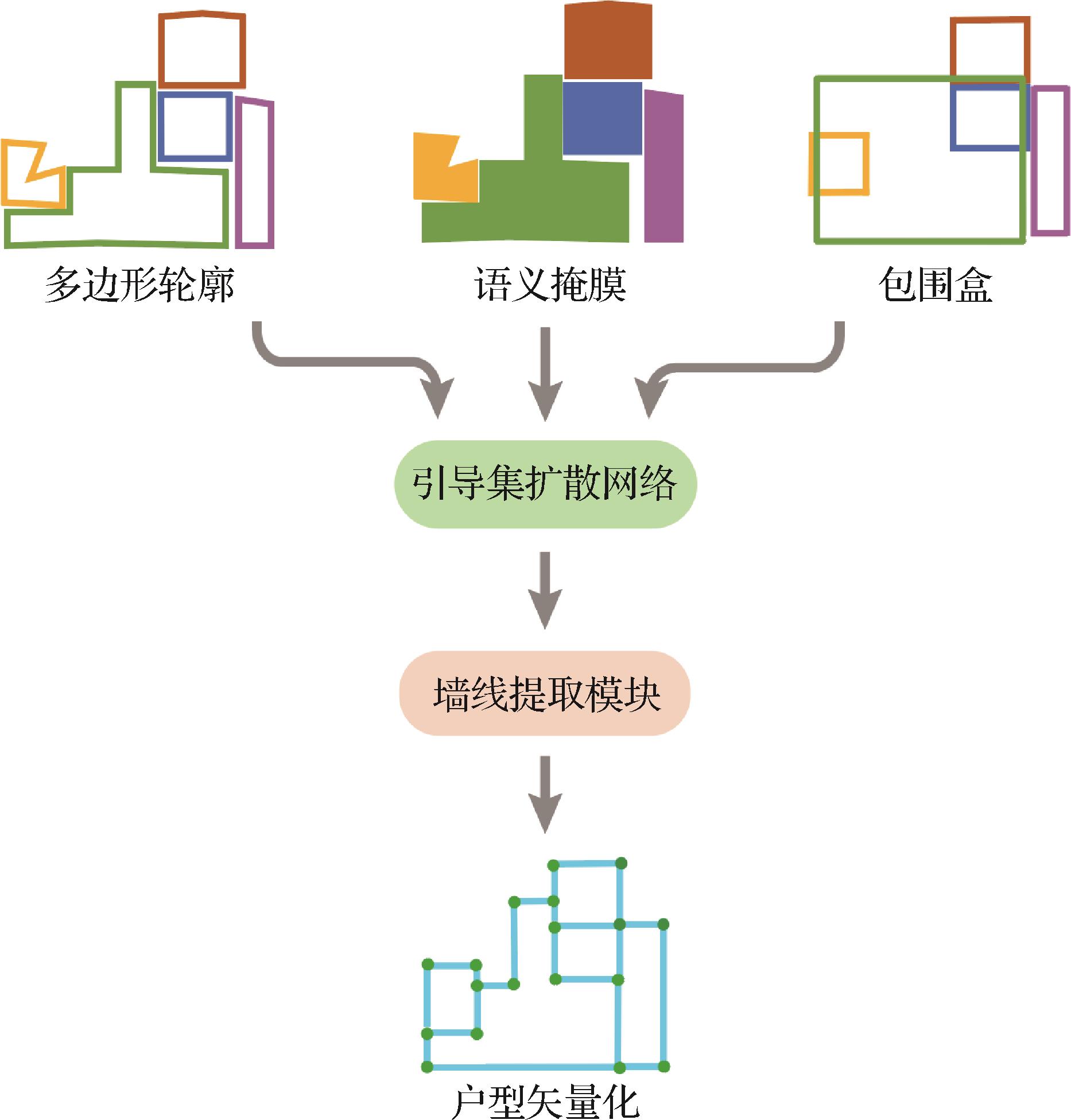

摘要:ObjectiveIndoor floor plan vectorization is a sophisticated technique that aims to extract precise structural information from raster images and convert them into vector representations. This process is essential in fields, such as architectural renovation, interior design, and scene understanding, where the accurate and efficient vectorization of floor plans can considerably enhance the quality and usability of spatial data. The vectorization process has been traditionally performed using a two-stage pipeline. In the first stage, deep neural networks are used to segment the raster image, producing masks that define room regions within the floor plan. These masks serve as the foundation for the subsequent vectorization process. In the second stage, post-processing algorithms are applied to these masks to extract vector information, with focus on elements, such as walls, doors, and other structural components. However, this process also poses challenges. One of the major issues is error accumulation. Inaccuracies in initial mask generation can lead to compounded errors during vectorization. Moreover, post-processing algorithms frequently lack robustness, particularly when dealing with complex or degraded input images, leading to suboptimal vectorization results.MethodTo address these challenges, we propose a novel approach based on diffusion models for the vector reconstruction of indoor floor plans. Diffusion models, which were originally developed for generative tasks, have exhibited considerable promise in producing high-quality output by iteratively refining input data. Our method leverages this capability to enhance the precision of floor plan vectorization. In particular, the algorithm starts with rough masks generated using object detection or instance segmentation models. Although these masks provide a basic outline of room regions, they may lack the accuracy required for precise vectorization. The diffusion model is then employed to iteratively refine the contour points of these rough masks, gradually reconstructing room contours with higher accuracy. This process involves multiple iterations, during which the model adjusts the contour points based on patterns learned from the training data, leading to a more accurate representation of room boundaries. A key innovation of our approach is the introduction of a contour inclination loss function. This loss function is specifically designed to guide the diffusion model in generating more reasonable and structurally sound room layouts. By penalizing unrealistic or impractical contour inclinations, the model is encouraged to produce output that closely resembles real-world room configurations. This process not only improves the visual accuracy of room contours but also enhances the overall quality of the vectorized floor plan. The benefits of our diffusion model-based approach are manifold. First, we can significantly reduce the error accumulation that plagues traditional methods by refining room contours before the vectorization stage. The more accurate the room contours, the more precise the subsequent wall vector extraction process. Second, the use of a diffusion model allows for a more robust handling of complex or noisy input images. In contrast with traditional post-processing algorithms, which may experience difficulty with irregularities in the input data, the diffusion model’s iterative refinement process is better equipped to address such challenges, leading to more reliable vectorization results.ResultWe have thoroughly validated our method on the public CubiCase5K dataset, which is a widely used benchmark in the field of floor plan vectorization. The results of our experiments demonstrate that our approach significantly outperforms existing methods in terms of accuracy and robustness. Notably, we observe a marked improvement in the precision of wall vector extraction, which is crucial for applications in architectural renovation and interior design. The diffusion model’s ability to produce more accurate room contours directly translates into better vectorized representations of floor plans, making our approach an invaluable tool for professionals in these fields.ConclusionIn conclusion, the vectorization of indoor floor plans is a critical task with far-reaching applications, and the limitations of traditional methods have highlighted the need for more advanced techniques. Our diffusion model-based algorithm represents a significant step forward, offering a more accurate and reliable solution for the vector reconstruction of indoor floor plans. By refining room contours through iterative adjustments and incorporating a contour inclination loss function, our method not only addresses the issue of error accumulation but also enhances the overall quality of the vectorized output. Furthermore, our method exhibits broad application prospects in the field of interior design. Modern interior designers increasingly rely on digital tools for design and planning, wherein accurate indoor space representation is essential. With our method, designers can quickly generate high-quality room outlines and apply them to various design scenarios, such as furniture placement, lighting design, and space optimization. This efficient and precise vectorization technology not only saves designers significant time but also improves the feasibility and practicality of design solutions. In the field of building renovation, our approach also offers significant advantages. During the renovation of old buildings, redrawing and optimizing the original floor plan are frequently necessary. This process is often constrained by the quality of the original drawings, particularly in older buildings, where the original plans may have already deteriorated or been lost. Through our diffusion model vectorization method, architects can quickly reconstruct digital versions of these old floor plans and use them as a foundation for renovation designs. This step not only improves the efficiency of the renovation process but also helps preserve the historical character of the building. Lastly, our method demonstrates important application potential in the field of architectural scene understanding. With the rise of smart homes and automated building management systems, accurate interior floor plan data are critical for enabling these intelligent features. Our vectorization method can generate highly accurate representations of indoor spaces, providing reliable foundational data for intelligent systems. Overall, our approach promises to significantly improve the efficiency and effectiveness of floor plan vectorization, paving the way for more accurate architectural designs and enhanced spatial understanding.关键词:deep learning;indoor floor plan;generated reconstruction;diffusion model;floorplan vector technology321|737|0更新时间:2025-07-14

摘要:ObjectiveIndoor floor plan vectorization is a sophisticated technique that aims to extract precise structural information from raster images and convert them into vector representations. This process is essential in fields, such as architectural renovation, interior design, and scene understanding, where the accurate and efficient vectorization of floor plans can considerably enhance the quality and usability of spatial data. The vectorization process has been traditionally performed using a two-stage pipeline. In the first stage, deep neural networks are used to segment the raster image, producing masks that define room regions within the floor plan. These masks serve as the foundation for the subsequent vectorization process. In the second stage, post-processing algorithms are applied to these masks to extract vector information, with focus on elements, such as walls, doors, and other structural components. However, this process also poses challenges. One of the major issues is error accumulation. Inaccuracies in initial mask generation can lead to compounded errors during vectorization. Moreover, post-processing algorithms frequently lack robustness, particularly when dealing with complex or degraded input images, leading to suboptimal vectorization results.MethodTo address these challenges, we propose a novel approach based on diffusion models for the vector reconstruction of indoor floor plans. Diffusion models, which were originally developed for generative tasks, have exhibited considerable promise in producing high-quality output by iteratively refining input data. Our method leverages this capability to enhance the precision of floor plan vectorization. In particular, the algorithm starts with rough masks generated using object detection or instance segmentation models. Although these masks provide a basic outline of room regions, they may lack the accuracy required for precise vectorization. The diffusion model is then employed to iteratively refine the contour points of these rough masks, gradually reconstructing room contours with higher accuracy. This process involves multiple iterations, during which the model adjusts the contour points based on patterns learned from the training data, leading to a more accurate representation of room boundaries. A key innovation of our approach is the introduction of a contour inclination loss function. This loss function is specifically designed to guide the diffusion model in generating more reasonable and structurally sound room layouts. By penalizing unrealistic or impractical contour inclinations, the model is encouraged to produce output that closely resembles real-world room configurations. This process not only improves the visual accuracy of room contours but also enhances the overall quality of the vectorized floor plan. The benefits of our diffusion model-based approach are manifold. First, we can significantly reduce the error accumulation that plagues traditional methods by refining room contours before the vectorization stage. The more accurate the room contours, the more precise the subsequent wall vector extraction process. Second, the use of a diffusion model allows for a more robust handling of complex or noisy input images. In contrast with traditional post-processing algorithms, which may experience difficulty with irregularities in the input data, the diffusion model’s iterative refinement process is better equipped to address such challenges, leading to more reliable vectorization results.ResultWe have thoroughly validated our method on the public CubiCase5K dataset, which is a widely used benchmark in the field of floor plan vectorization. The results of our experiments demonstrate that our approach significantly outperforms existing methods in terms of accuracy and robustness. Notably, we observe a marked improvement in the precision of wall vector extraction, which is crucial for applications in architectural renovation and interior design. The diffusion model’s ability to produce more accurate room contours directly translates into better vectorized representations of floor plans, making our approach an invaluable tool for professionals in these fields.ConclusionIn conclusion, the vectorization of indoor floor plans is a critical task with far-reaching applications, and the limitations of traditional methods have highlighted the need for more advanced techniques. Our diffusion model-based algorithm represents a significant step forward, offering a more accurate and reliable solution for the vector reconstruction of indoor floor plans. By refining room contours through iterative adjustments and incorporating a contour inclination loss function, our method not only addresses the issue of error accumulation but also enhances the overall quality of the vectorized output. Furthermore, our method exhibits broad application prospects in the field of interior design. Modern interior designers increasingly rely on digital tools for design and planning, wherein accurate indoor space representation is essential. With our method, designers can quickly generate high-quality room outlines and apply them to various design scenarios, such as furniture placement, lighting design, and space optimization. This efficient and precise vectorization technology not only saves designers significant time but also improves the feasibility and practicality of design solutions. In the field of building renovation, our approach also offers significant advantages. During the renovation of old buildings, redrawing and optimizing the original floor plan are frequently necessary. This process is often constrained by the quality of the original drawings, particularly in older buildings, where the original plans may have already deteriorated or been lost. Through our diffusion model vectorization method, architects can quickly reconstruct digital versions of these old floor plans and use them as a foundation for renovation designs. This step not only improves the efficiency of the renovation process but also helps preserve the historical character of the building. Lastly, our method demonstrates important application potential in the field of architectural scene understanding. With the rise of smart homes and automated building management systems, accurate interior floor plan data are critical for enabling these intelligent features. Our vectorization method can generate highly accurate representations of indoor spaces, providing reliable foundational data for intelligent systems. Overall, our approach promises to significantly improve the efficiency and effectiveness of floor plan vectorization, paving the way for more accurate architectural designs and enhanced spatial understanding.关键词:deep learning;indoor floor plan;generated reconstruction;diffusion model;floorplan vector technology321|737|0更新时间:2025-07-14 - “在微表情识别领域,专家提出了深度卷积神经网络与浅层3DCNN组成的三分支网络,显著提高了识别精确度,有效解决了样本不足、难以学习多类型特征的问题。”