最新刊期

卷 27 , 期 3 , 2022

-

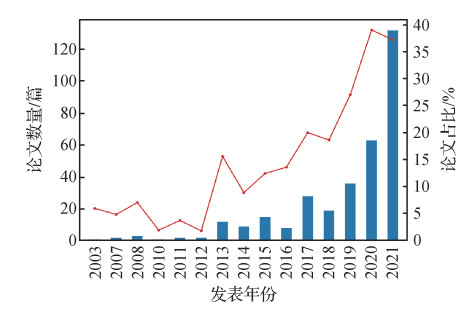

摘要:The development of medical imaging, artificial intelligence (AI) and clinical applications derived from AI-based medical imaging has been recognized in past two decades. The improvement and optimization of AI-based technologies have been significantly applied to various of clinical scenarios to strengthen the capability and accuracy of diagnosis and treatment. Nowadays, China has been playing a major role and making increasing contributions in the field of AI-based medical imaging. More worldwide researchers in the context of AI-based medical imaging have contributed to universities and institutions in China. The number of research papers published by Chinese scholars in top international journals and conferences like AI-based medical imaging has dramatically increased annually. Some AI-based medical imaging international conferences and summits have been successfully held in China. There is an increasing number of traditional medical, internet technology and AI enterprises contributing to the research and development of AI-based medical imaging products. More collaborative medical research projects have been implemented for AI-based medical imaging. The Chinese administrations have also planned relevant policies and issued strategic plans for AI-based medical imaging, and included the intelligent medical care as one of the key tasks for the development of new generation of AI in China in 2030. In order to review China's contribution for AI-based medical imaging, we conducted a 20 years review for AI-based medical imaging forecasting in China. Specifically, we summarized all papers published by Chinese scholars in the top AI-based medical imaging journals and conferences including Medical Image Analysis (MedIA), IEEE Transactions on Medical Imaging (TMI), and Medical Image Computing and Computer Assisted Intervention (MICCAI) in the past 20 years. The detailed quantitative metrics like the number of published papers, authorship, affiliations, author's cooperation network, keywords, and the number of citations were critically reviewed. Meanwhile, we briefly summarized some milestone events of AI-based medical imaging in China, including the renowned international and domestic conferences in AI-based medical imaging held in China, the release of the "The White Paper on Medical Imaging Artificial Intelligence in China", as well as China's contributions during the COVID-19(corona virus desease 2019) pandemic. For instance, the total number of published papers in the past 20 years and the proportion of published papers in 2021 by Chinese affiliations have reached to 333 and 37.29% in MedIA, 601 and 42.26% in TMI, and 985 and 44.26% in MICCAI. In those published papers by Chinese institutes, the proportion of the first and the corresponding Chinese authors is 71.97% in MedIA, 69.64% in TMI, and 77.4% in MICCAI in 2021. The average number of citations per paper by Chinese institutes is 22, 28, and 9 in MedIA, TMI, and MICCAI, respectively. In all published papers by Chinese institutes, the predominant research methods were transformed from conventional approaches to sparse representation in 2012, and to deep learning in 2017, which were close to the latest developmental trend of AI technologies. Besides conventional applications such as medical image registration, segmentation, reconstruction and computer-aided diagnosis, etc., the published papers also focused on healthcare quick response in terms of COVID-19 pandemic. The China-derived data and source codes have been sharing in the global context to facilitate worldwide AI-based medical imaging research and education. Our analysis could provide a reference for international scientific research and education for newly Chinese scholars and students based on the growth of the global AI-based medical imaging. Finally, we promoted technology forecasting on AI-based medical imaging as mentioned below. First, strengthen the capability of deep learning for AI-based medical imaging further, including optimal and efficient deep learning, generalizable deep learning, explainable deep learning, fair deep learning, and responsible and trustworthy deep learning.Next, improve the availability and sharing of high-quality and benchmarked medical imaging datasets in the context of AI-based medical imaging development, validation, and dissemination are harnessed to reveal the key challenges in both basic scientific research and clinical applications. Third, focus on the multi-center and multi-modal medical imaging data acquisition and fusion, as well as integration with natural language such as diagnosis report. Fourth, awake doctors' intervention further to realize the clinical applications of AI-based medical imaging. Finally, conduct talent training, international collaboration, as well as sharing of open source data and codes for worldwide development of AI-based medical imaging.关键词:medical imaging;artificial intelligence(AI);developmental history;international cooperation;quantitative statistics399|718|6更新时间:2024-05-07

摘要:The development of medical imaging, artificial intelligence (AI) and clinical applications derived from AI-based medical imaging has been recognized in past two decades. The improvement and optimization of AI-based technologies have been significantly applied to various of clinical scenarios to strengthen the capability and accuracy of diagnosis and treatment. Nowadays, China has been playing a major role and making increasing contributions in the field of AI-based medical imaging. More worldwide researchers in the context of AI-based medical imaging have contributed to universities and institutions in China. The number of research papers published by Chinese scholars in top international journals and conferences like AI-based medical imaging has dramatically increased annually. Some AI-based medical imaging international conferences and summits have been successfully held in China. There is an increasing number of traditional medical, internet technology and AI enterprises contributing to the research and development of AI-based medical imaging products. More collaborative medical research projects have been implemented for AI-based medical imaging. The Chinese administrations have also planned relevant policies and issued strategic plans for AI-based medical imaging, and included the intelligent medical care as one of the key tasks for the development of new generation of AI in China in 2030. In order to review China's contribution for AI-based medical imaging, we conducted a 20 years review for AI-based medical imaging forecasting in China. Specifically, we summarized all papers published by Chinese scholars in the top AI-based medical imaging journals and conferences including Medical Image Analysis (MedIA), IEEE Transactions on Medical Imaging (TMI), and Medical Image Computing and Computer Assisted Intervention (MICCAI) in the past 20 years. The detailed quantitative metrics like the number of published papers, authorship, affiliations, author's cooperation network, keywords, and the number of citations were critically reviewed. Meanwhile, we briefly summarized some milestone events of AI-based medical imaging in China, including the renowned international and domestic conferences in AI-based medical imaging held in China, the release of the "The White Paper on Medical Imaging Artificial Intelligence in China", as well as China's contributions during the COVID-19(corona virus desease 2019) pandemic. For instance, the total number of published papers in the past 20 years and the proportion of published papers in 2021 by Chinese affiliations have reached to 333 and 37.29% in MedIA, 601 and 42.26% in TMI, and 985 and 44.26% in MICCAI. In those published papers by Chinese institutes, the proportion of the first and the corresponding Chinese authors is 71.97% in MedIA, 69.64% in TMI, and 77.4% in MICCAI in 2021. The average number of citations per paper by Chinese institutes is 22, 28, and 9 in MedIA, TMI, and MICCAI, respectively. In all published papers by Chinese institutes, the predominant research methods were transformed from conventional approaches to sparse representation in 2012, and to deep learning in 2017, which were close to the latest developmental trend of AI technologies. Besides conventional applications such as medical image registration, segmentation, reconstruction and computer-aided diagnosis, etc., the published papers also focused on healthcare quick response in terms of COVID-19 pandemic. The China-derived data and source codes have been sharing in the global context to facilitate worldwide AI-based medical imaging research and education. Our analysis could provide a reference for international scientific research and education for newly Chinese scholars and students based on the growth of the global AI-based medical imaging. Finally, we promoted technology forecasting on AI-based medical imaging as mentioned below. First, strengthen the capability of deep learning for AI-based medical imaging further, including optimal and efficient deep learning, generalizable deep learning, explainable deep learning, fair deep learning, and responsible and trustworthy deep learning.Next, improve the availability and sharing of high-quality and benchmarked medical imaging datasets in the context of AI-based medical imaging development, validation, and dissemination are harnessed to reveal the key challenges in both basic scientific research and clinical applications. Third, focus on the multi-center and multi-modal medical imaging data acquisition and fusion, as well as integration with natural language such as diagnosis report. Fourth, awake doctors' intervention further to realize the clinical applications of AI-based medical imaging. Finally, conduct talent training, international collaboration, as well as sharing of open source data and codes for worldwide development of AI-based medical imaging.关键词:medical imaging;artificial intelligence(AI);developmental history;international cooperation;quantitative statistics399|718|6更新时间:2024-05-07 -

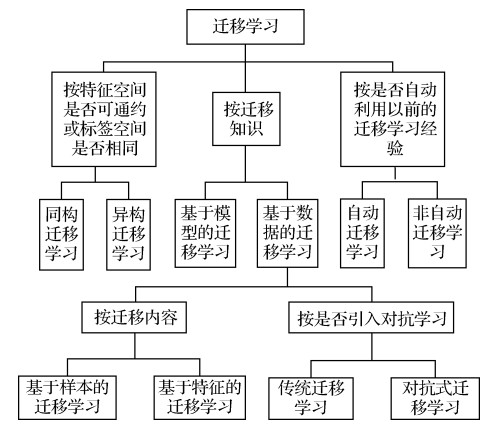

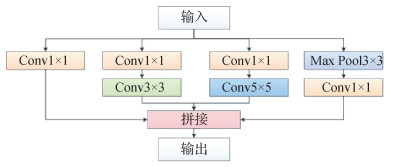

摘要:Medical image classification is a key element of medical image analysis. The method of medical image classification has been evolving in deep learning and transfer learning. A large number of important literatures of medical image classification are analyzed based on transfer learning. As a result, three transfer learning strategies and five modes of medical image classifying are summarized. The transfer learning modes are constructed based on general characteristics which extracted from designed transfer learning processes theoretically. The relevance of the transfer learning strategies and the modes is illustrated as well. These transfer strategies and modes illustrated the application of transfer learning in this field from a higher level of abstraction. The applications, advantages, limitations of these transfer learning strategies and modes are analyzed. The transfer learning in the context of medical image feature extraction and classification is the model-based transfer learning. Most of the migrated models are deep convolution neural network (DCNN). High classifying efficiency is obtained due to ImageNet (a large public image database) training results. To migrate model from the source domain to the target domain, the model needs to be adapted to the tasks of the targeted domain. From the important literature of medical image classification, three transfer model adaptive strategies were sorted out. They are structure fit, parameter option and migrated model based features extraction. The strategies of fitted structure are to modify the structure of the migrated model. Layers can be deleted or added as needed. These layers can be as convolution layer, full connection layer, feature smoothing layer, feature extracting layer, and batch normalizing layer, respectively. The optional strategies of parameter are to re-train the migrated model to adjust the model parameters via using the target domain data. Both the parameters of convolution layer and the parameters of full connection layer can be fitted. The migrated-model-based strategy of features extraction is only used for feature extraction. Image features can be extracted from the convolution layer and the full connection layer. Five transfer learning modes were sorted out based on the literature of medical image classification. They are the DCNN mode, the hybrid mode, the mode of fused feature and classification. The mode of multi-classifier fusing and the mode of transferring are conducted two times all. For instance, the fitting strategies of structure and parameters of convolution layer are used in the DCNN mode to get more accurate features. The transfer learning modes can basically cover all kinds of transfer learning processes in medical image classification researches. The DCNN mode is to use a DCNN to complete image feature extraction and classification. The hybrid model is composed of a DCNN and a traditional classifier. The former is used for feature extracting and the latter is used for classifying. It has the advantage of DCNN feature extraction and advantage of traditional classified classifier. The mode of integrated feature and classification is composed of feature extraction methods and a classifier. Feature extracting methods can be a DCNN or manual features. The mode of multi-classifier fusing is composed of multiple classifiers. The final classifying result is obtained based on multiple classified integrating results. The integrating result is more reliable than the result from single classifier mode (i.e., the DCNN mode and the hybrid mode). In the twice mode of transferring, the initial model is trained in the source domain, then it is migrated to the temporary targeted domain to be trained for the second time, and finally it is migrated to the final targeted domain to be trained for the third time. Compared to first transfer, this mode priority is that the triple migrated model accumulates more knowledge of training. The transfer-learning based issues and of medical image classification potentials are illustrated as below: 1) It is difficult to pick an efficient transfer learning algorithm. Due to the diversity and complexity of medical images, the generalization capability of transfer learning algorithm is to be strengthened. Manual option for a specific image classifying task is often based on continuous high computational cost tests. A low cost automatic transfer learning algorithm is a challenging issue. 2) The modification of transfer model and the setting of mega parameters are lack of theoretical mechanism. The structure and parameters of the migrated model can only be modified through the existing experiences and continuous experiments, and the setting of mega parameters is not different, which lead to the low efficiency of transfer learning. 3) It is challenged to classify rare disease images due to pathological image data samples of rare diseases. The antagonistic transfer learning can generate and enhance target domain data. The heterogeneous transfer learning yield to transfer the knowledge of different modalities or the source domain to target domain. Therefore, the two methods of transfer learning can be further developed in image classification of rare diseases.关键词:medical images;image classification;transfer learning;transfer learning strategies;transfer learning mode333|404|13更新时间:2024-05-07

摘要:Medical image classification is a key element of medical image analysis. The method of medical image classification has been evolving in deep learning and transfer learning. A large number of important literatures of medical image classification are analyzed based on transfer learning. As a result, three transfer learning strategies and five modes of medical image classifying are summarized. The transfer learning modes are constructed based on general characteristics which extracted from designed transfer learning processes theoretically. The relevance of the transfer learning strategies and the modes is illustrated as well. These transfer strategies and modes illustrated the application of transfer learning in this field from a higher level of abstraction. The applications, advantages, limitations of these transfer learning strategies and modes are analyzed. The transfer learning in the context of medical image feature extraction and classification is the model-based transfer learning. Most of the migrated models are deep convolution neural network (DCNN). High classifying efficiency is obtained due to ImageNet (a large public image database) training results. To migrate model from the source domain to the target domain, the model needs to be adapted to the tasks of the targeted domain. From the important literature of medical image classification, three transfer model adaptive strategies were sorted out. They are structure fit, parameter option and migrated model based features extraction. The strategies of fitted structure are to modify the structure of the migrated model. Layers can be deleted or added as needed. These layers can be as convolution layer, full connection layer, feature smoothing layer, feature extracting layer, and batch normalizing layer, respectively. The optional strategies of parameter are to re-train the migrated model to adjust the model parameters via using the target domain data. Both the parameters of convolution layer and the parameters of full connection layer can be fitted. The migrated-model-based strategy of features extraction is only used for feature extraction. Image features can be extracted from the convolution layer and the full connection layer. Five transfer learning modes were sorted out based on the literature of medical image classification. They are the DCNN mode, the hybrid mode, the mode of fused feature and classification. The mode of multi-classifier fusing and the mode of transferring are conducted two times all. For instance, the fitting strategies of structure and parameters of convolution layer are used in the DCNN mode to get more accurate features. The transfer learning modes can basically cover all kinds of transfer learning processes in medical image classification researches. The DCNN mode is to use a DCNN to complete image feature extraction and classification. The hybrid model is composed of a DCNN and a traditional classifier. The former is used for feature extracting and the latter is used for classifying. It has the advantage of DCNN feature extraction and advantage of traditional classified classifier. The mode of integrated feature and classification is composed of feature extraction methods and a classifier. Feature extracting methods can be a DCNN or manual features. The mode of multi-classifier fusing is composed of multiple classifiers. The final classifying result is obtained based on multiple classified integrating results. The integrating result is more reliable than the result from single classifier mode (i.e., the DCNN mode and the hybrid mode). In the twice mode of transferring, the initial model is trained in the source domain, then it is migrated to the temporary targeted domain to be trained for the second time, and finally it is migrated to the final targeted domain to be trained for the third time. Compared to first transfer, this mode priority is that the triple migrated model accumulates more knowledge of training. The transfer-learning based issues and of medical image classification potentials are illustrated as below: 1) It is difficult to pick an efficient transfer learning algorithm. Due to the diversity and complexity of medical images, the generalization capability of transfer learning algorithm is to be strengthened. Manual option for a specific image classifying task is often based on continuous high computational cost tests. A low cost automatic transfer learning algorithm is a challenging issue. 2) The modification of transfer model and the setting of mega parameters are lack of theoretical mechanism. The structure and parameters of the migrated model can only be modified through the existing experiences and continuous experiments, and the setting of mega parameters is not different, which lead to the low efficiency of transfer learning. 3) It is challenged to classify rare disease images due to pathological image data samples of rare diseases. The antagonistic transfer learning can generate and enhance target domain data. The heterogeneous transfer learning yield to transfer the knowledge of different modalities or the source domain to target domain. Therefore, the two methods of transfer learning can be further developed in image classification of rare diseases.关键词:medical images;image classification;transfer learning;transfer learning strategies;transfer learning mode333|404|13更新时间:2024-05-07 -

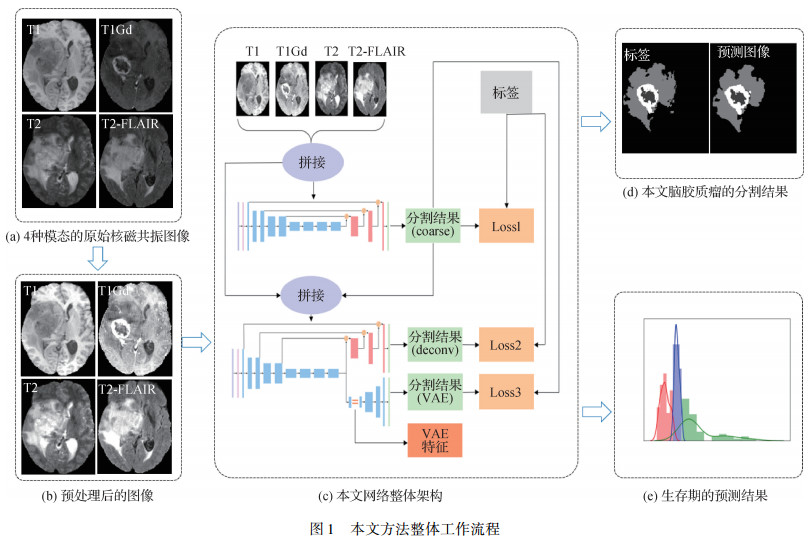

摘要:The generative adversarial network (GAN) consists of a generator based on the data distribution learning and an identified sample's authenticity discriminator. They learn from each other gradually in the process of confrontation. The network enables the deep learning method to learn the loss function automatically and reduces expertise dependence. It has been widely used in natural image processing, and it is also a promising solution for related problems in medical image processing field. This paper aims to bridge the gap between GAN and specific medical field problems and point out the future improvement directions. First, the basic principle of GAN is issued. Secondly, we review the latest medical images research on data augmentation, modality migration, image segmentation, and denoising; analyze the advantage and disadvantage of each method and the scope of application. Next, the current quality assessment is summarized. At the end, the research development, issue, and future improvement direction of GAN on medical image are summarized. GAN theoretical study focus on three aspects of task splitting, introducing conditional constraints and image-to-image translation, which effectively improved the quality of the synthesized image, increased the resolution, and allowed more manipulation across the image synthesis process. However, there are some challenges as mentioned below: 1) Generate high-quality, high-resolution, and diverse images under large-scale complex data sets. 2) The manipulation of synthesized image attributes at different levels and different granularities. 3) The lack of paired training data and the guarantee of image translation quality and diversity. GAN application study in data augmentation, modality migration, image segmentation, and denoising of medical images has been widely analyzed. 1) The network model based on the Pix2pix basic framework can synthesize additional high-quality and high-resolution samples and improve the segmentation and classification performance based on data augmentation effectively. However, there are still problems such as insufficient synthetic sample diversity, basic biological structures maintenance difficulty, and limited 3D image synthesis capabilities. 2) The network model based on the CycleGAN basic framework does not require paired training images. It has been extensively analyzed in modality migration, but may lose the basic structure information. The current research on structure preservation in modality migration limits in the fusion of information, such as edges and segmentation. 3) Both the generator and the discriminator can be fused with the current segmentation model to improve the performance of the segmentation model. The generator can synthesize additional data, and the discriminator can guide model training from a high-level semantic level and make full use of unlabeled data. However, current research mainly focuses on single-modality image segmentation. 4) GAN application in image denoising can reconstruct normal-dose images from low-dose images, reducing the radiation impact suffered by patients. The critical issues of GAN in medical image processing are presented as follows: 1) Most medical image data is three-dimensional, such as MRI (magnetic resonance imaging) and CT (computed tomography), etc. The improvement of the synthesis quality and resolution of the three-dimensional data is a critical issue. 2) The difficulty in ensuring the diversity of synthesized data while keeping its basic geometric structure's rationality. 3) The question on how to make full use of unlabeled and unpaired data to generate high-quality, high-resolution, and diverse images. 4) The improvement of algorithms' cross-modality generalization performance, and the effective migration of different modality data. Future research should focus on the issues as following: 1) To optimize network architecture, objective function, and training methods for 3D data synthesis, improving model training stability, quality, resolution, and diversity of 3D synthesized images. 2) To further promote the prior geometric knowledge integration with GAN. 3) To take full advantage of the GAN's weak supervision characteristics. 4) To extract invariant features via attribute decoupling for good generalization performance and achieve attribute control at different levels, granularities, and needs in the process of modality migration. To conclude, ever since GAN was proposed, its theory has been continuously improved. A considerable evolution in medical image applications has been sorted out, such as data augmentation, modality migration, image segmentation, and denoising. Some challenging issues are still waiting to be resolved, including three-dimensional data synthesis, geometric structure rationality maintenance, unlabeled and unpaired data usage, and multi-modality data application.关键词:generative adversarial network (GAN);medical image;deep learning;data augmentation;modality migration;image segmentation;image denoising192|253|2更新时间:2024-05-07

摘要:The generative adversarial network (GAN) consists of a generator based on the data distribution learning and an identified sample's authenticity discriminator. They learn from each other gradually in the process of confrontation. The network enables the deep learning method to learn the loss function automatically and reduces expertise dependence. It has been widely used in natural image processing, and it is also a promising solution for related problems in medical image processing field. This paper aims to bridge the gap between GAN and specific medical field problems and point out the future improvement directions. First, the basic principle of GAN is issued. Secondly, we review the latest medical images research on data augmentation, modality migration, image segmentation, and denoising; analyze the advantage and disadvantage of each method and the scope of application. Next, the current quality assessment is summarized. At the end, the research development, issue, and future improvement direction of GAN on medical image are summarized. GAN theoretical study focus on three aspects of task splitting, introducing conditional constraints and image-to-image translation, which effectively improved the quality of the synthesized image, increased the resolution, and allowed more manipulation across the image synthesis process. However, there are some challenges as mentioned below: 1) Generate high-quality, high-resolution, and diverse images under large-scale complex data sets. 2) The manipulation of synthesized image attributes at different levels and different granularities. 3) The lack of paired training data and the guarantee of image translation quality and diversity. GAN application study in data augmentation, modality migration, image segmentation, and denoising of medical images has been widely analyzed. 1) The network model based on the Pix2pix basic framework can synthesize additional high-quality and high-resolution samples and improve the segmentation and classification performance based on data augmentation effectively. However, there are still problems such as insufficient synthetic sample diversity, basic biological structures maintenance difficulty, and limited 3D image synthesis capabilities. 2) The network model based on the CycleGAN basic framework does not require paired training images. It has been extensively analyzed in modality migration, but may lose the basic structure information. The current research on structure preservation in modality migration limits in the fusion of information, such as edges and segmentation. 3) Both the generator and the discriminator can be fused with the current segmentation model to improve the performance of the segmentation model. The generator can synthesize additional data, and the discriminator can guide model training from a high-level semantic level and make full use of unlabeled data. However, current research mainly focuses on single-modality image segmentation. 4) GAN application in image denoising can reconstruct normal-dose images from low-dose images, reducing the radiation impact suffered by patients. The critical issues of GAN in medical image processing are presented as follows: 1) Most medical image data is three-dimensional, such as MRI (magnetic resonance imaging) and CT (computed tomography), etc. The improvement of the synthesis quality and resolution of the three-dimensional data is a critical issue. 2) The difficulty in ensuring the diversity of synthesized data while keeping its basic geometric structure's rationality. 3) The question on how to make full use of unlabeled and unpaired data to generate high-quality, high-resolution, and diverse images. 4) The improvement of algorithms' cross-modality generalization performance, and the effective migration of different modality data. Future research should focus on the issues as following: 1) To optimize network architecture, objective function, and training methods for 3D data synthesis, improving model training stability, quality, resolution, and diversity of 3D synthesized images. 2) To further promote the prior geometric knowledge integration with GAN. 3) To take full advantage of the GAN's weak supervision characteristics. 4) To extract invariant features via attribute decoupling for good generalization performance and achieve attribute control at different levels, granularities, and needs in the process of modality migration. To conclude, ever since GAN was proposed, its theory has been continuously improved. A considerable evolution in medical image applications has been sorted out, such as data augmentation, modality migration, image segmentation, and denoising. Some challenging issues are still waiting to be resolved, including three-dimensional data synthesis, geometric structure rationality maintenance, unlabeled and unpaired data usage, and multi-modality data application.关键词:generative adversarial network (GAN);medical image;deep learning;data augmentation;modality migration;image segmentation;image denoising192|253|2更新时间:2024-05-07 -

摘要:Heart diseases diagnosis and treatment has become one of the major public health issues for human beings where non-invasive cardiac imaging is of great significance. Unfortunately, imaging scan time and cardiac image resolution become an intensive contradiction due to the characteristics of living heartbeat. Super-resolution (SR) image reconstruction like low-resolution-images based high-resolution cardiac images reconstruction are obtained based on the determined tolerable error within a short imaging time. Deep learning methods have revealed great vitality in the field of medical image processing nowadays. In terms of its good learning ability and data-driven factors, deep-learning-based SR reconstruction is qualified based on deep residual networks, generative adversarial networks compared with traditional methods. Our research analysis reviews the field via analyzing characteristics of representative methods, summing up cardiac image resources and the scale, summarizing commonly used evaluation indicators, giving performance evaluation and application conclusions of the methods and discussing methods in other fields that can be adapted for SR reconstructing cardiac magnetic resonance (CMR) images. Our analysis is derived from 13 opted literature reviews from Google, database systems and logic programming (DBLP), and CNKI taking deep learning, cardiac and SR reconstruction as search keywords. This review first categorizes all methods by their evaluation datasets, which are open resource or not. Details on the datasets and links to the open-source datasets are also facilitated. By defining standard evaluation indicators based on the reconstructed SR images and their ground truth, namely high-resolution (HR) images, the performance of SR reconstruction methods can be evaluated quantitatively. This demonstration summarizes the 8 evaluation indicators in the cardiac SR reconstruction methods, including structural similarity, cardiovascular diameter measured value, dice coefficient. The evaluation indicators are divided into three categories with respect to the following aspects, those are evaluation of the quality of SR reconstructed images, evaluation of cardiac function and evaluation of the effectiveness of cardiac segmentation. Meanwhile, all 13 literature reviews are only used to increase the spatial resolution of the CMR images. Our research classifies the methods as CMR 2D SR reconstruction, CMR 3D SR reconstruction, and CMR SR reconstruction in other dimensions, depending on the dimension of the cardiac images processing based on the deep learning methods. In general, most CMR 2D SR reconstruction methods can reconstruct high resolution cardiac images in a relative short time span to assure SR reconstruction quality. In contrast, the CMR 3D SR reconstruction methods are involved 3D convolution, which take the spatial structure of the heart into account. The integrated information is analyzed amongst adjacent slices of CMR. Some of these methods achieve the SR reconstruction result qualified than CMR 2D SR reconstruction methods. However, a number of CMR data is small size and most of them are not open. The larger perceptual domain of the methods increases the computation complexity and reduces the temporal performance to some extent. As for CMR SR reconstruction in higher dimensions, corresponding methods meet the requirement of high-resolution image generation and image denoising in clinical analysis. All the selected CMR SR reconstruction methods can also be organized in accordance with network models and high-resolution image degradation methods, such as U-Net, generative adversarial network and long short-term memory network from the aspect of network models. Fourier degradation and different interpolation degradation methods are from the aspect of degradation methods. SR reconstructed high-resolution images can accurately facilitate heart anatomy, blood flow evaluation and heart tissue segmentation level. This research also reviewed the feasibility of adapting other SR reconstruction methods for CMR SR reconstruction as they are currently proposed and applied to images of other in vivo tissues and structures. Our research also tries to find some of the SR reconstruction methods from the field of natural images computer vision to discuss the feasibility that adapting them to CMR SR reconstruction, such as channel attention mechanisms, video SR methods and SR of real scenes. In summary, SR reconstruction of CMR images has its distinctive features than SR reconstruction of natural images like more diverse and purposeful evaluation metrics constraint of local reconstruction quality and to the difficulties of getting training data. It is found that deep-learning-based cardiac SR reconstruction has concerned more in motion artifact suppression, model simplification, and time performance further. In addition, current methods basically rely on the powerful expression ability of the CNN(convolutional neural network), and little clinical prior knowledge is melted to the network to guide its learning. Performance comparison between existing models is relatively less, and there is no representative image repository to evaluate performances of different cardiac SR reconstruction methods.关键词:deep learning;cardiac imaging;super-resolution reconstruction;magnetic resonance imaging;datasets of cardiac images;convolutional neural networks;high-resolution images242|1030|3更新时间:2024-05-07

摘要:Heart diseases diagnosis and treatment has become one of the major public health issues for human beings where non-invasive cardiac imaging is of great significance. Unfortunately, imaging scan time and cardiac image resolution become an intensive contradiction due to the characteristics of living heartbeat. Super-resolution (SR) image reconstruction like low-resolution-images based high-resolution cardiac images reconstruction are obtained based on the determined tolerable error within a short imaging time. Deep learning methods have revealed great vitality in the field of medical image processing nowadays. In terms of its good learning ability and data-driven factors, deep-learning-based SR reconstruction is qualified based on deep residual networks, generative adversarial networks compared with traditional methods. Our research analysis reviews the field via analyzing characteristics of representative methods, summing up cardiac image resources and the scale, summarizing commonly used evaluation indicators, giving performance evaluation and application conclusions of the methods and discussing methods in other fields that can be adapted for SR reconstructing cardiac magnetic resonance (CMR) images. Our analysis is derived from 13 opted literature reviews from Google, database systems and logic programming (DBLP), and CNKI taking deep learning, cardiac and SR reconstruction as search keywords. This review first categorizes all methods by their evaluation datasets, which are open resource or not. Details on the datasets and links to the open-source datasets are also facilitated. By defining standard evaluation indicators based on the reconstructed SR images and their ground truth, namely high-resolution (HR) images, the performance of SR reconstruction methods can be evaluated quantitatively. This demonstration summarizes the 8 evaluation indicators in the cardiac SR reconstruction methods, including structural similarity, cardiovascular diameter measured value, dice coefficient. The evaluation indicators are divided into three categories with respect to the following aspects, those are evaluation of the quality of SR reconstructed images, evaluation of cardiac function and evaluation of the effectiveness of cardiac segmentation. Meanwhile, all 13 literature reviews are only used to increase the spatial resolution of the CMR images. Our research classifies the methods as CMR 2D SR reconstruction, CMR 3D SR reconstruction, and CMR SR reconstruction in other dimensions, depending on the dimension of the cardiac images processing based on the deep learning methods. In general, most CMR 2D SR reconstruction methods can reconstruct high resolution cardiac images in a relative short time span to assure SR reconstruction quality. In contrast, the CMR 3D SR reconstruction methods are involved 3D convolution, which take the spatial structure of the heart into account. The integrated information is analyzed amongst adjacent slices of CMR. Some of these methods achieve the SR reconstruction result qualified than CMR 2D SR reconstruction methods. However, a number of CMR data is small size and most of them are not open. The larger perceptual domain of the methods increases the computation complexity and reduces the temporal performance to some extent. As for CMR SR reconstruction in higher dimensions, corresponding methods meet the requirement of high-resolution image generation and image denoising in clinical analysis. All the selected CMR SR reconstruction methods can also be organized in accordance with network models and high-resolution image degradation methods, such as U-Net, generative adversarial network and long short-term memory network from the aspect of network models. Fourier degradation and different interpolation degradation methods are from the aspect of degradation methods. SR reconstructed high-resolution images can accurately facilitate heart anatomy, blood flow evaluation and heart tissue segmentation level. This research also reviewed the feasibility of adapting other SR reconstruction methods for CMR SR reconstruction as they are currently proposed and applied to images of other in vivo tissues and structures. Our research also tries to find some of the SR reconstruction methods from the field of natural images computer vision to discuss the feasibility that adapting them to CMR SR reconstruction, such as channel attention mechanisms, video SR methods and SR of real scenes. In summary, SR reconstruction of CMR images has its distinctive features than SR reconstruction of natural images like more diverse and purposeful evaluation metrics constraint of local reconstruction quality and to the difficulties of getting training data. It is found that deep-learning-based cardiac SR reconstruction has concerned more in motion artifact suppression, model simplification, and time performance further. In addition, current methods basically rely on the powerful expression ability of the CNN(convolutional neural network), and little clinical prior knowledge is melted to the network to guide its learning. Performance comparison between existing models is relatively less, and there is no representative image repository to evaluate performances of different cardiac SR reconstruction methods.关键词:deep learning;cardiac imaging;super-resolution reconstruction;magnetic resonance imaging;datasets of cardiac images;convolutional neural networks;high-resolution images242|1030|3更新时间:2024-05-07 -

摘要:Lung disease like corona virus disease 2019(COVID-19) and lung cancer endanger the health of human beings. Early screening and treatment can significantly decrease the mortality of lung diseases. Computed tomography (CT) technology can be an effective information collection method for the diagnosis and treatment of lung diseases. CT-based lung lesion region image segmentation is a key step in lung disease screening. High quality lung lesion region segmentation can effectively improve the level of early stage diagnosis and treatment of lung diseases. However, high-quality lung lesion region segmentation in lung CT images has become a challenging issue in computer-aided diagnosis due to the diversity and complexity of lung diseases. Our research reviews the relevant literature recently. First, it is compared and summarized the pros and cons of traditional segmentation methods of lung CT image based on region and active contour. The region-based method uses the similarity and difference of features to guide image segmentation, mainly including threshold method, region growth method, clustering method and random walk method. The active-contour-based method is to set an initial contour line with decreasing energy. The contour line deforms in the internal energy derived from its own characteristics and the external energy originated from image characteristics. Its movement is in accordance with the principle of minimum energy until the energy function is in minimization and the contour line stops next to the boundary of lung region. The active contour method is divided into parametric active contour method and geometric active contour method in terms of the contour curve analysis. Low segmentation accuracy lung CT image segmentation methods are widely used in the early stage diagnosis. Next, the improved model analysis of lung CT image segmentation network structure is based on convolutional neural networks (CNNs), fully convolutional networks (FCNs), and generative adversarial network (GAN). In respect of the CNN-based deep learning segmentation methods, the segmentation methods of lung and lung lesion region can be divided into two-dimensional and three-dimensional methods in terms of the dimension of convolution kernel, the segmentation methods of lung and lung lesion region can also be divided into two-dimensional and three-dimensional methods based on the dimension of convolution kernel for the FCN-based deep learning segmentation methods. In respect of the U-Net based lung CT image segmentation methods, it can be divided into solo network lung CT image segmentation method and multi network lung CT image segmentation method according to the form of U-Net architecture. Due to the CT image containing COVID-19 infection area is very different from the ordinary lung CT imageand the differentiated segmentation characteristics of the two in the same network, the solo network lung CT image segmentation method can be analyzed that whether the data-set contains COVID-19 or not. The multi-network lung CT image segmentation method can be divided into cascade U-Net and dual path U-Net based on the option of serial mode or parallel mode. For the GAN-based lung CT image segmentation methods, it can be divided into GAN models based on network architecture, generator and other methods according to the ways to improve the different architectures of GAN. Deep-learning-based segmentation method has the advantages of high segmentation accuracy, strong transfer learning ability and high robustness. In particular, the auxiliary diagnosis of COVID-19 cases analysis is significantly qualified based on deep learning. Next, the common datasets and evaluation indexes of lung and lung lesion region segmentation are illustrated, including almost 10 lung CT open datasets, such as national lung screening test(NLST) dataset, computer vision and image analysis international early lung cancer action plan database(VIA/I-ELCAP) dataset, lung image database consortium and image database resource initiative(LIDC-IDRI) dataset and Nederlands-Leuvens Longkanker Screenings Onderzoek(NELSON) dataset, and 7 COVID-19 lung CT datasets analysis. It also demonstrates that the related lung CT images datasets is provided based on five large-scale competitions, including TIANCHI dataset, lung nodule analysis 16(LUNA16) dataset, Lung Nodule Database(LNDb) dataset, Kaggle Data Science Bowl 2017(Kaggle DSB) 2017 dataset and Automatic Nodule Detection 2009(ANODE09) dataset, respectively. Our 8 evaluation index is commonly used to evaluate the quality of lung CT image segmentation model, including involved Dice similarity coefficient, Jaccard similarity coefficient, accuracy, precision, false positive rate, false negative rate, sensitivity and specificity, respectively. To increase the number and diversity of training samples, GAN is used to synthesize high-quality adversarial images to expand the dataset. At the end, the prospects, challenges and potentials of CT-based high-precision segmentation strategies are critical reviewed for lung and lung lesion regions. Because the special structure of U-Net can effectively extract target features and restore the information loss derived from down sampling, it does not need a large number of samples for training to achieve high segmentation effect. Therefore, it is necessary to segment lung and lung lesions based on U-Net. The integration of GAN and U-Net is to improve the segmentation accuracy of lung and lung lesion areas. GAN-based network architecture is to extend the dataset for good training quality. The further U-Net application has its priority for qualified segmentation consistently.关键词:computed tomography(CT);medical image segmentation;lung CT image segmentation;lung lesion region;deep learning;corona virus disease 2019(COVID-19)280|226|4更新时间:2024-05-07

摘要:Lung disease like corona virus disease 2019(COVID-19) and lung cancer endanger the health of human beings. Early screening and treatment can significantly decrease the mortality of lung diseases. Computed tomography (CT) technology can be an effective information collection method for the diagnosis and treatment of lung diseases. CT-based lung lesion region image segmentation is a key step in lung disease screening. High quality lung lesion region segmentation can effectively improve the level of early stage diagnosis and treatment of lung diseases. However, high-quality lung lesion region segmentation in lung CT images has become a challenging issue in computer-aided diagnosis due to the diversity and complexity of lung diseases. Our research reviews the relevant literature recently. First, it is compared and summarized the pros and cons of traditional segmentation methods of lung CT image based on region and active contour. The region-based method uses the similarity and difference of features to guide image segmentation, mainly including threshold method, region growth method, clustering method and random walk method. The active-contour-based method is to set an initial contour line with decreasing energy. The contour line deforms in the internal energy derived from its own characteristics and the external energy originated from image characteristics. Its movement is in accordance with the principle of minimum energy until the energy function is in minimization and the contour line stops next to the boundary of lung region. The active contour method is divided into parametric active contour method and geometric active contour method in terms of the contour curve analysis. Low segmentation accuracy lung CT image segmentation methods are widely used in the early stage diagnosis. Next, the improved model analysis of lung CT image segmentation network structure is based on convolutional neural networks (CNNs), fully convolutional networks (FCNs), and generative adversarial network (GAN). In respect of the CNN-based deep learning segmentation methods, the segmentation methods of lung and lung lesion region can be divided into two-dimensional and three-dimensional methods in terms of the dimension of convolution kernel, the segmentation methods of lung and lung lesion region can also be divided into two-dimensional and three-dimensional methods based on the dimension of convolution kernel for the FCN-based deep learning segmentation methods. In respect of the U-Net based lung CT image segmentation methods, it can be divided into solo network lung CT image segmentation method and multi network lung CT image segmentation method according to the form of U-Net architecture. Due to the CT image containing COVID-19 infection area is very different from the ordinary lung CT imageand the differentiated segmentation characteristics of the two in the same network, the solo network lung CT image segmentation method can be analyzed that whether the data-set contains COVID-19 or not. The multi-network lung CT image segmentation method can be divided into cascade U-Net and dual path U-Net based on the option of serial mode or parallel mode. For the GAN-based lung CT image segmentation methods, it can be divided into GAN models based on network architecture, generator and other methods according to the ways to improve the different architectures of GAN. Deep-learning-based segmentation method has the advantages of high segmentation accuracy, strong transfer learning ability and high robustness. In particular, the auxiliary diagnosis of COVID-19 cases analysis is significantly qualified based on deep learning. Next, the common datasets and evaluation indexes of lung and lung lesion region segmentation are illustrated, including almost 10 lung CT open datasets, such as national lung screening test(NLST) dataset, computer vision and image analysis international early lung cancer action plan database(VIA/I-ELCAP) dataset, lung image database consortium and image database resource initiative(LIDC-IDRI) dataset and Nederlands-Leuvens Longkanker Screenings Onderzoek(NELSON) dataset, and 7 COVID-19 lung CT datasets analysis. It also demonstrates that the related lung CT images datasets is provided based on five large-scale competitions, including TIANCHI dataset, lung nodule analysis 16(LUNA16) dataset, Lung Nodule Database(LNDb) dataset, Kaggle Data Science Bowl 2017(Kaggle DSB) 2017 dataset and Automatic Nodule Detection 2009(ANODE09) dataset, respectively. Our 8 evaluation index is commonly used to evaluate the quality of lung CT image segmentation model, including involved Dice similarity coefficient, Jaccard similarity coefficient, accuracy, precision, false positive rate, false negative rate, sensitivity and specificity, respectively. To increase the number and diversity of training samples, GAN is used to synthesize high-quality adversarial images to expand the dataset. At the end, the prospects, challenges and potentials of CT-based high-precision segmentation strategies are critical reviewed for lung and lung lesion regions. Because the special structure of U-Net can effectively extract target features and restore the information loss derived from down sampling, it does not need a large number of samples for training to achieve high segmentation effect. Therefore, it is necessary to segment lung and lung lesions based on U-Net. The integration of GAN and U-Net is to improve the segmentation accuracy of lung and lung lesion areas. GAN-based network architecture is to extend the dataset for good training quality. The further U-Net application has its priority for qualified segmentation consistently.关键词:computed tomography(CT);medical image segmentation;lung CT image segmentation;lung lesion region;deep learning;corona virus disease 2019(COVID-19)280|226|4更新时间:2024-05-07

Review

-

摘要:ObjectiveThe primary routine clinical diagnosis of COVID-19(corona virus disease 2019) is usually conducted based on epidemiological history, clinical manifestations and various laboratory detection methods, including nucleic acid amplification test (NAAT), computed tomography (CT) scan and serological techniques. However, manual detection is costly, time-consuming and leads to the potential increase of the infection risk of clinicians. As a good alternative, artificial intelligence techniques on available data from laboratory tests play an important role in the confirmation of COVID-19 cases. Some studies have been designed for distinguishing between novel coronavirus pneumonia, community-acquired pneumonia and normal people by graph neural network. However, these studies leverage the relationships between features to build a topological structure graph (e.g., connecting the nodes with high similarity), while ignoring the inner relationships between different parts of the lung, and thus limiting the performance of their models. To address this issue, we propose a graph neural network with hierarchical information inherent to the physical structure of lungs for the improved diagnosis of COVID-19. Besides, an attention mechanism is introduced to capture the discriminative features of different severities of infection in the left and right lobes of different patients.MethodFirstly, the topological structure is constructed based on the lungs' physical structure, and different lung segments are regarded as different nodes. Each node in the graph contains three kinds of handcrafted features, such as volume, density and mass feature, which reflect the infection in each lung segment and can be extracted from chest CT images using VB-Net. Secondly, based on graph neural network (GNN) and attention mechanism, we propose a novel structural attention graph neural network (SAGNN), which can perform the graph classification task. The SAGNN first aggregates the features in a given sample graph, and then uses the attention mechanism to effectively fuse the different features to obtain the final graph representation. This representation is then fed into a linear layer with softmax activation function that performs graph classification, so that the corresponding sample graph can be finally classified as a mild case or a severe one. To alleviate the effect of category imbalance on the classification results, we use the focal loss function. We optimize the proposed model via back propagation and learn the representations of graphs.ResultTo verify the effectiveness of the proposed method, we compared SAGNN with several classical machine learning methods and graph classification methods on a real COVID-19 dataset, which includes 358 severe cases and 1 329 mild cases, provided by Shanghai Public Health Clinical Center. The result of comparative experiments was measured using three evaluation metrics including the sensitivity (SEN), the specificity (SPE) and the area under the receiver operating characteristic(ROC) curve (AUC). In the experiments, our model had a good performance, indicating the effectiveness of our model. Based on comparison with the classical machine learning methods and the graph neural network methods, SAGNN outperformed by 14.2%~42.0% and 3.6%~4.8% in terms of SEN, respectively. In terms of AUC, the performance of SAGNN increased by 8.9%~18.7% and 3.1%~3.6%, respectively. In addition, through the ablation experiments of SAGNN, we found that the SAGNN with attention mechanism outperformed by 2.4%, 1.4% and 1.1% in SPE, SEN and AUC than the SAGNN not with attention mechanism, respectively. The SAGNN with focal loss function outperformed by 2.1%, 1.1% and 0.9% in SPE, SEN and AUC than the SAGNN with cross-entropy loss function, respectively.ConclusionIn this work, we propose SAGNN, a new architecture for the diagnosis of severe and mild cases of COVID-19. Experimental results show the superior performance of SAGNN on classification task. Experimental results show that concatenating features of lung segments by their structure is effective. Moreover, we introduce an attention mechanism to distinguish the infection degree of right and left lungs. The focal loss is used to solve the issue of imbalanced group distribution, which further improves the overall network performance. We thus demonstrate the potential of SAGNN as clinical diagnosis support in this highly critical domain of medical intervention. We believe that our architecture provides a valuable case study for the early diagnosis of COVID-19, which is helpful for improvement in the field of computer-aided diagnosis and clinical practice.关键词:corona virus disease 2019(COVID-19) diagnosis;graph neural network(GNN);structural attention mechanism;topology diagram;graph classification109|229|2更新时间:2024-05-07

摘要:ObjectiveThe primary routine clinical diagnosis of COVID-19(corona virus disease 2019) is usually conducted based on epidemiological history, clinical manifestations and various laboratory detection methods, including nucleic acid amplification test (NAAT), computed tomography (CT) scan and serological techniques. However, manual detection is costly, time-consuming and leads to the potential increase of the infection risk of clinicians. As a good alternative, artificial intelligence techniques on available data from laboratory tests play an important role in the confirmation of COVID-19 cases. Some studies have been designed for distinguishing between novel coronavirus pneumonia, community-acquired pneumonia and normal people by graph neural network. However, these studies leverage the relationships between features to build a topological structure graph (e.g., connecting the nodes with high similarity), while ignoring the inner relationships between different parts of the lung, and thus limiting the performance of their models. To address this issue, we propose a graph neural network with hierarchical information inherent to the physical structure of lungs for the improved diagnosis of COVID-19. Besides, an attention mechanism is introduced to capture the discriminative features of different severities of infection in the left and right lobes of different patients.MethodFirstly, the topological structure is constructed based on the lungs' physical structure, and different lung segments are regarded as different nodes. Each node in the graph contains three kinds of handcrafted features, such as volume, density and mass feature, which reflect the infection in each lung segment and can be extracted from chest CT images using VB-Net. Secondly, based on graph neural network (GNN) and attention mechanism, we propose a novel structural attention graph neural network (SAGNN), which can perform the graph classification task. The SAGNN first aggregates the features in a given sample graph, and then uses the attention mechanism to effectively fuse the different features to obtain the final graph representation. This representation is then fed into a linear layer with softmax activation function that performs graph classification, so that the corresponding sample graph can be finally classified as a mild case or a severe one. To alleviate the effect of category imbalance on the classification results, we use the focal loss function. We optimize the proposed model via back propagation and learn the representations of graphs.ResultTo verify the effectiveness of the proposed method, we compared SAGNN with several classical machine learning methods and graph classification methods on a real COVID-19 dataset, which includes 358 severe cases and 1 329 mild cases, provided by Shanghai Public Health Clinical Center. The result of comparative experiments was measured using three evaluation metrics including the sensitivity (SEN), the specificity (SPE) and the area under the receiver operating characteristic(ROC) curve (AUC). In the experiments, our model had a good performance, indicating the effectiveness of our model. Based on comparison with the classical machine learning methods and the graph neural network methods, SAGNN outperformed by 14.2%~42.0% and 3.6%~4.8% in terms of SEN, respectively. In terms of AUC, the performance of SAGNN increased by 8.9%~18.7% and 3.1%~3.6%, respectively. In addition, through the ablation experiments of SAGNN, we found that the SAGNN with attention mechanism outperformed by 2.4%, 1.4% and 1.1% in SPE, SEN and AUC than the SAGNN not with attention mechanism, respectively. The SAGNN with focal loss function outperformed by 2.1%, 1.1% and 0.9% in SPE, SEN and AUC than the SAGNN with cross-entropy loss function, respectively.ConclusionIn this work, we propose SAGNN, a new architecture for the diagnosis of severe and mild cases of COVID-19. Experimental results show the superior performance of SAGNN on classification task. Experimental results show that concatenating features of lung segments by their structure is effective. Moreover, we introduce an attention mechanism to distinguish the infection degree of right and left lungs. The focal loss is used to solve the issue of imbalanced group distribution, which further improves the overall network performance. We thus demonstrate the potential of SAGNN as clinical diagnosis support in this highly critical domain of medical intervention. We believe that our architecture provides a valuable case study for the early diagnosis of COVID-19, which is helpful for improvement in the field of computer-aided diagnosis and clinical practice.关键词:corona virus disease 2019(COVID-19) diagnosis;graph neural network(GNN);structural attention mechanism;topology diagram;graph classification109|229|2更新时间:2024-05-07 -

Segmentation and quantitative analysis of intrapulmonary vasculature in CT images from COPD patients

摘要:ObjectiveChronic obstructive pulmonary disease (COPD) is a worldwide prevalent pulmonary disease. In China, COPD is the third leading cause of death. Pulmonary function tests (PFTs) are widely used to assess COPD severity, but they cannot evaluate the contribution of each disease compartment. Pulmonary vascular remodeling is a remarkable characteristic of COPD. In the past, pulmonary vascular remodeling was regarded as an end-stage feature of COPD. However, more recent studies have found that vascular disease is present in patients with early COPD stage. Pulmonary vascular remodeling has been described as dilation of proximal vessels and pruning or narrowing of distal vessels, thereby increasing vascular resistance. The available tools for the assessment of pulmonary vascular disease remain limited. Computed tomography (CT) is the most widely used imaging modality in COPD patients; it may be utilized to assess the severity of pulmonary vascular diseases. This study aims to develop and validate an automatic method for extracting pulmonary vessels and quantifying pulmonary vascular morphology in CT images.MethodThe extraction of pulmonary vessels is important for automated quantitative analysis of pulmonary vascular morphology. We present an anisotropic variational approach, which incorporates appearance and orientation of pulmonary vessels as prior knowledge for extracting pulmonary vessels. The pipeline of segmentation procedure includes three stages as follows. First, because the lung segmentation can reduce the running time of subsequent stages, we apply a U-Net model, which is a convolution neural network (CNN) trained with high diversity clinical CT images to obtain the left and right lungs. Second, the response of conventional Hessian-based vesselness filters is low at the vessels' edges and bifurcations. To overcome this problem, motivated by the measurement of anisotropy of diffusion tensor, a multiscale Hessian-based vesselness filter is used to highlight pulmonary vessels and generate the axial orientation of tubular structures. This vesselness filter may mitigate the low response of branch points and maintain robust contrast of various images. Third, considering the long and thin characteristic of pulmonary vessels, we incorporate an anisotropic variational regularizer into a continuous maximal flow framework to improve the segmentation performance. This anisotropic regularizer was constructed from the orientation of pulmonary vessels in the form of matrix generated by Eigen vectors of Hessian matrix. The proposed segmentation framework was implemented with parallel computing library. For quantifying the extracted pulmonary vessels, a public clinical data set from the ArteryVein challenge and a simulated data set from the VascuSynth were used to evaluate the performance of pulmonary vessel segmentation. To verify the association between the small vessel volume and COPD, 614 patients with COPD and other pulmonary diseases were investigated with the proposed approach.ResultFor evaluating the pulmonary vessel segmentation method, we tested our segmentation method on simulated vessels with seven levels of Gaussian noise (σ=5, 10, 15, 20, 25, 30, 35) and 10 CT scans from a public clinical data set. The average dice coefficient for the simulated data set is 0.87 (σ=5), 0.80 (σ=10), 0.77 (σ=15), 0.75 (σ=20), 0.73 (σ=25), 0.71 (σ=30), and 0.69 (σ=35). The average dice coefficient for the clinical data set is 0.79. For investigating the pulmonary vessel remodeling in COPD patients, 614 CT scans from 352 patients with COPD and 262 patients with other diseases were used for quantitative analysis, where 281 cases in the COPD group contain GOLD classification information (GOLD 1:16 cases, GOLD 2:108 cases, GOLD 3:108 cases, and GOLD 4:49 cases). The average proportion of small pulmonary vessels (cross section areas < 10 mm2) in the non-COPD and the COPD group was 0.656±0.067 and 0.589±0.074, respectively. The proportions of small vessels in the GOLD1-4 group were 0.612±0.051, 0.600±0.078, 0.565±0.067, and 0.528±0.053.ConclusionWe proposed a pulmonary vessel segmentation method that incorporates the vessels' directions. It can be used in the study of pulmonary vascular remodeling. Experimental results have verified the difference in the proportion of small pulmonary vessel volume between the non-COPD and the COPD group, and the differences also exist in GOLD 1-4 groups.关键词:chronic obstructive pulmonary disease(COPD);pulmonary vasculature segmentation;anisotropic total variation;continuous max flow;quantitative analysis182|130|0更新时间:2024-05-07

摘要:ObjectiveChronic obstructive pulmonary disease (COPD) is a worldwide prevalent pulmonary disease. In China, COPD is the third leading cause of death. Pulmonary function tests (PFTs) are widely used to assess COPD severity, but they cannot evaluate the contribution of each disease compartment. Pulmonary vascular remodeling is a remarkable characteristic of COPD. In the past, pulmonary vascular remodeling was regarded as an end-stage feature of COPD. However, more recent studies have found that vascular disease is present in patients with early COPD stage. Pulmonary vascular remodeling has been described as dilation of proximal vessels and pruning or narrowing of distal vessels, thereby increasing vascular resistance. The available tools for the assessment of pulmonary vascular disease remain limited. Computed tomography (CT) is the most widely used imaging modality in COPD patients; it may be utilized to assess the severity of pulmonary vascular diseases. This study aims to develop and validate an automatic method for extracting pulmonary vessels and quantifying pulmonary vascular morphology in CT images.MethodThe extraction of pulmonary vessels is important for automated quantitative analysis of pulmonary vascular morphology. We present an anisotropic variational approach, which incorporates appearance and orientation of pulmonary vessels as prior knowledge for extracting pulmonary vessels. The pipeline of segmentation procedure includes three stages as follows. First, because the lung segmentation can reduce the running time of subsequent stages, we apply a U-Net model, which is a convolution neural network (CNN) trained with high diversity clinical CT images to obtain the left and right lungs. Second, the response of conventional Hessian-based vesselness filters is low at the vessels' edges and bifurcations. To overcome this problem, motivated by the measurement of anisotropy of diffusion tensor, a multiscale Hessian-based vesselness filter is used to highlight pulmonary vessels and generate the axial orientation of tubular structures. This vesselness filter may mitigate the low response of branch points and maintain robust contrast of various images. Third, considering the long and thin characteristic of pulmonary vessels, we incorporate an anisotropic variational regularizer into a continuous maximal flow framework to improve the segmentation performance. This anisotropic regularizer was constructed from the orientation of pulmonary vessels in the form of matrix generated by Eigen vectors of Hessian matrix. The proposed segmentation framework was implemented with parallel computing library. For quantifying the extracted pulmonary vessels, a public clinical data set from the ArteryVein challenge and a simulated data set from the VascuSynth were used to evaluate the performance of pulmonary vessel segmentation. To verify the association between the small vessel volume and COPD, 614 patients with COPD and other pulmonary diseases were investigated with the proposed approach.ResultFor evaluating the pulmonary vessel segmentation method, we tested our segmentation method on simulated vessels with seven levels of Gaussian noise (σ=5, 10, 15, 20, 25, 30, 35) and 10 CT scans from a public clinical data set. The average dice coefficient for the simulated data set is 0.87 (σ=5), 0.80 (σ=10), 0.77 (σ=15), 0.75 (σ=20), 0.73 (σ=25), 0.71 (σ=30), and 0.69 (σ=35). The average dice coefficient for the clinical data set is 0.79. For investigating the pulmonary vessel remodeling in COPD patients, 614 CT scans from 352 patients with COPD and 262 patients with other diseases were used for quantitative analysis, where 281 cases in the COPD group contain GOLD classification information (GOLD 1:16 cases, GOLD 2:108 cases, GOLD 3:108 cases, and GOLD 4:49 cases). The average proportion of small pulmonary vessels (cross section areas < 10 mm2) in the non-COPD and the COPD group was 0.656±0.067 and 0.589±0.074, respectively. The proportions of small vessels in the GOLD1-4 group were 0.612±0.051, 0.600±0.078, 0.565±0.067, and 0.528±0.053.ConclusionWe proposed a pulmonary vessel segmentation method that incorporates the vessels' directions. It can be used in the study of pulmonary vascular remodeling. Experimental results have verified the difference in the proportion of small pulmonary vessel volume between the non-COPD and the COPD group, and the differences also exist in GOLD 1-4 groups.关键词:chronic obstructive pulmonary disease(COPD);pulmonary vasculature segmentation;anisotropic total variation;continuous max flow;quantitative analysis182|130|0更新时间:2024-05-07 -

摘要:ObjectiveHuman chest computed tomography (CT) image analysis is a key measure for diagnosing human lung diseases. However, the current scanned chest CT images might not meet the requirement of diagnosing lung diseases accurately. Medical image enhancement is an effective technique to improve the image quality and has been used in many clinical applications, such as knee joint disease detection, breast lesion segmentation and corona virus disease 2019(COVID-19) detection. Developing new enhancement algorithms is essential to improve the quality of chest CT images. A simple yet effective chest CT image enhancement algorithm is presented based on basic information preservation and detail enhancement.MethodA good chest CT image enhancement algorithm should well improve the clarity of edges or speckles in the image, while preserving much original structural information. Our human chest CT image enhancement algorithm is developed as follows. First, this algorithm exploits the advanced guided filter to decompose the CT image into multiple layers, including a base layer and multiple different scales of detail layers. Next, an entropy-based weight strategy is adopted to fuse the detail layers, which could well strengthen the informative details and suppress the texture-less layers. Afterwards, the fused detail layer is further strengthened based on an enhancement coefficient. In the end, the enhanced detail layer and the original base layer are integrated to generate the targeted chest CT image. The proposed algorithm could well enhance the details of the chest CT image, as well as transfer much original basic structural information to the enhanced image. Moreover, with the help of our algorithm, the surgeons can inspect more clear medical images without impacting their perception of the pathology information. In order to verify the effectiveness of our proposed algorithm, we have constructed a chest CT image dataset, which is composed of 20 sets/3 209 chest CT images, and then evaluated our algorithm and five state-of-the-art image enhancement algorithms on this large-scale dataset. In addition, the experiments are performed in both qualitative and quantitative ways.ResultTwo qualitative comparison cases demonstrate that our algorithm has mainly strengthened the useful details, while effectively suppressing the background information. As for the five comparison algorithms, histogram equalization(HE) and contrast limited adaptive histogram equalization(CLAHE) usually change the whole image intensities with large variation and degrade the image quality as compared to the original image. Alternative toggle operator(AO) could enhance the chest CT image with much better visual quality than HE and CLAHE, but it has excessively enhanced both image details and background noises. Low-light image enhancement(LIME) and robust retinex model(RRM) usually increase the intensities of the whole image and result in images of inappropriate contrast. The quantitative average standard deviation(STD), structural similarity metric(SSIM), peak signal to noise ratio(PSNR) values of our algorithm are significantly greater than those of the other five comparison algorithms (i.e., increased by 4.95, 0.16, 4.47, respectively) on our constructed chest CT image dataset. To be specific, greater average STD value of our algorithm indicates it has enhanced images with more clear details compared to the other five comparison algorithms. Larger average SSIM and PSNR values of our algorithm validate that it has preserved more basic structural information from the original image than the other five comparison algorithms. Meanwhile, the proposed algorithm only costs about 0.10 seconds to enhance a single CT image, which indicates the proposed algorithm has great potential to be efficiently applied in the real clinical scenarios. Overall, our algorithm achieves the best results amongst all the six image enhancement algorithms in terms of both visual quality and quantitative metrics.ConclusionIn this study, we have developed a simple yet effective human chest CT image enhancement algorithm, which can effectively enhance the textural details of chest CT images while preserving a large amount of original basic structural information. With the help of our enhanced human chest CT images, the surgeons could diagnose lung diseases more accurately. Moreover, the proposed algorithm owns good generalization ability, and is capable of well enhancing CT images scanned from other sites and even other modalities of images.关键词:diagnosis of lung diseases;chest CT image enhancement;image decomposition;basic information preservation;detail enhancement172|252|0更新时间:2024-05-07