最新刊期

卷 30 , 期 12 , 2025

- “在文物领域,人工智能技术正推动着文物防护、保护、研究、管理与传播方式的变革,为文物行业应用发展与未来提供新方向。”

摘要:Cultural relics embody the brilliance of civilization, preserve historical heritage, and uphold the national spirit, serving as vivid manifestations of the confidence and depth of Chinese cultural identity. These artifacts are not merely historical remnants; they are living testaments to a nation’s cultural consciousness and aesthetic achievements. In the Chinese context, such artifacts encompass a wide array of forms——ceramics, bronzes, calligraphy, painting, architecture, and intangible heritage such as folk music and traditional theater——which jointly form a rich, continuous narrative of human development and collective memory. Through their material and symbolic importance, these cultural elements reflect and reinforce a shared sense of belonging and historical continuity. With the rapid development of artificial intelligence (AI), now empowering a broad spectrum of industries and becoming deeply integrated into everyday life, the field of cultural heritage is undergoing a fundamental transformation. This transformation is not only technical but also conceptual, redefining how we understand, protect, and interact with our cultural legacy. AI no longer functions solely as a tool for automation or computation; it now plays a central role in knowledge production, decision-making, and creative processes. These capacities are driving a paradigm shift in cultural heritage work——from reactive, static models to proactive, adaptive systems powered by data and learning. The cultural heritage sector, historically reliant on manual preservation, scholarly interpretation, and traditional dissemination methods, is currently being transformed by advanced algorithms, machine learning models, and intelligent data processing frameworks. The profound capabilities of AI——in areas such as image recognition, natural language processing (NLP), data mining, semantic segmentation, and 3D reconstruction——are increasingly being leveraged to support the digitization, restoration, analysis, management, and public engagement of cultural heritage. These developments, driven by advancements in artificial intelligence, are poised to reshape the entire lifecycle of cultural relics—from their initial discovery and documentation to their long-term preservation and dynamic presentation to the public. The key challenge that currently arises—and forms the central concern of this paper—is the effective, responsible, and innovative application of AI within the cultural heritage field. While the potential of AI is undeniable, its implementation must be carefully aligned with the values, sensitivities, and interdisciplinary nature of cultural preservation. In particular, the complex materiality, symbolic importance, and contextual uniqueness of cultural relics demand AI approaches that are interpretable, ethical, and inclusive of human expertise. Ethical considerations, such as bias in training data, the risks of over-reliance on automated interpretations, and the protection of indigenous knowledge systems, must be at the forefront of AI deployment in cultural domains. This paper explores five critical dimensions of AI applications in the cultural heritage sector: prevention, preservation, research, management, and utilization. The five aspects collectively form a holistic framework for understanding how AI technologies can support the sustained vitality and accessibility of cultural resources. In terms of prevention, AI can play a crucial role in developing early warning systems to identify environmental changes and potential threats to cultural relics. By integrating sensor networks with AI-driven monitoring tools, institutions can proactively detect fluctuations in humidity, temperature, light exposure, and other environmental factors that may contribute to material deterioration. Additionally, predictive models based on historical data can be employed to forecast risks and guide strategic conservation efforts. For instance, machine learning algorithms have been employed in several European museums to predict mold outbreaks in organic cultural relics based on microclimatic data, enabling timely interventions. In terms of preservation, AI contributes to digital restoration, 3D reconstruction, and non-invasive diagnostics. For example, deep learning models can help reconstruct missing parts of fragmented murals or manuscripts by learning visual patterns from intact sections. Additionally, hyperspectral imaging combined with AI analysis can uncover faded texts or underdrawings that are invisible to the human eye. These technologies not only extend the physical lifespan of cultural relics but also introduce innovative approaches to virtual preservation. Some institutions are already using AI in the reconstruction of historical architecture through photogrammetry and simulation of ancient environments for educational use. In the domain of research, AI notably enhances the capabilities of scholars to extract knowledge from vast, heterogeneous datasets. Natural language processing facilitates the digitization and analysis of ancient texts, while computer vision supports the classification of cultural relics based on style, origin, and function. Semantic knowledge graphs and AI-assisted databases promote cross-referencing across disciplines and collections, fostering highly integrated and interdisciplinary research outcomes. These tools are proving essential in digital humanities projects that aim to map large cultural corpora or trace stylistic influences across time and geography. The management of cultural heritage institutions and resources also benefits substantially from AI. Intelligent information systems can optimize inventory tracking, automate metadata tagging, and streamline exhibition logistics. Recommendation systems can be tailored to guide curatorial decisions and enhance user interaction. AI can also help balance conservation needs with public access by dynamically regulating visitor flow in sensitive exhibition areas. Moreover, the integration of blockchain with AI for provenance tracking is emerging as a promising area, enhancing the security and transparency of cultural relic records. In terms of utilization, AI is reshaping how cultural heritage is accessed and experienced—particularly in education, tourism, and public engagement. Virtual museums, intelligent chatbots, augmented reality (AR), and personalized content delivery are making cultural experiences highly interactive and accessible. AI-generated reconstructions and immersive simulations allow audiences to engage with history in immersive ways, expanding the reach of cultural heritage to new demographics and global audiences. Platforms such as Google Arts & Culture, along with various national museum initiatives, are increasingly leveraging AI for context-aware storytelling and multilingual access, making culture more inclusive and dynamic. Beyond practical applications, this paper also examines how traditional research methodologies are evolving in response to AI integration. The paper highlights the epistemological shifts occur as cultural interpretation moves from purely human-centered approaches to hybrid models that combine human expertise with computational inference. While AI presents powerful tools, it also raises critical questions about authenticity, authorship, and cultural sovereignty——especially when applied across diverse cultural contexts and communities. The co-construction of meaning between human curators and intelligent systems may enrich interpretations, but it also demands careful calibration of roles and responsibilities. Overall, the integration of AI into the field of cultural heritage presents an unprecedented opportunity and a profound responsibility. As we navigate this new landscape, balancing technological innovation and cultural sensitivity is essential, ensuring that AI serves as a tool for cultural empowerment, rather than erasure. This paper ultimately offers insights into the current landscape and future trajectory of AI in cultural heritage, advocating for collaborative, interdisciplinary efforts to harness the potential of AI while honoring the depth, diversity, and dignity of the world’s cultural legacies.关键词:cultural heritage;artificial intelligence (AI);deep learning;museums;cultural relic preservation305|524|0更新时间:2025-12-18

摘要:Cultural relics embody the brilliance of civilization, preserve historical heritage, and uphold the national spirit, serving as vivid manifestations of the confidence and depth of Chinese cultural identity. These artifacts are not merely historical remnants; they are living testaments to a nation’s cultural consciousness and aesthetic achievements. In the Chinese context, such artifacts encompass a wide array of forms——ceramics, bronzes, calligraphy, painting, architecture, and intangible heritage such as folk music and traditional theater——which jointly form a rich, continuous narrative of human development and collective memory. Through their material and symbolic importance, these cultural elements reflect and reinforce a shared sense of belonging and historical continuity. With the rapid development of artificial intelligence (AI), now empowering a broad spectrum of industries and becoming deeply integrated into everyday life, the field of cultural heritage is undergoing a fundamental transformation. This transformation is not only technical but also conceptual, redefining how we understand, protect, and interact with our cultural legacy. AI no longer functions solely as a tool for automation or computation; it now plays a central role in knowledge production, decision-making, and creative processes. These capacities are driving a paradigm shift in cultural heritage work——from reactive, static models to proactive, adaptive systems powered by data and learning. The cultural heritage sector, historically reliant on manual preservation, scholarly interpretation, and traditional dissemination methods, is currently being transformed by advanced algorithms, machine learning models, and intelligent data processing frameworks. The profound capabilities of AI——in areas such as image recognition, natural language processing (NLP), data mining, semantic segmentation, and 3D reconstruction——are increasingly being leveraged to support the digitization, restoration, analysis, management, and public engagement of cultural heritage. These developments, driven by advancements in artificial intelligence, are poised to reshape the entire lifecycle of cultural relics—from their initial discovery and documentation to their long-term preservation and dynamic presentation to the public. The key challenge that currently arises—and forms the central concern of this paper—is the effective, responsible, and innovative application of AI within the cultural heritage field. While the potential of AI is undeniable, its implementation must be carefully aligned with the values, sensitivities, and interdisciplinary nature of cultural preservation. In particular, the complex materiality, symbolic importance, and contextual uniqueness of cultural relics demand AI approaches that are interpretable, ethical, and inclusive of human expertise. Ethical considerations, such as bias in training data, the risks of over-reliance on automated interpretations, and the protection of indigenous knowledge systems, must be at the forefront of AI deployment in cultural domains. This paper explores five critical dimensions of AI applications in the cultural heritage sector: prevention, preservation, research, management, and utilization. The five aspects collectively form a holistic framework for understanding how AI technologies can support the sustained vitality and accessibility of cultural resources. In terms of prevention, AI can play a crucial role in developing early warning systems to identify environmental changes and potential threats to cultural relics. By integrating sensor networks with AI-driven monitoring tools, institutions can proactively detect fluctuations in humidity, temperature, light exposure, and other environmental factors that may contribute to material deterioration. Additionally, predictive models based on historical data can be employed to forecast risks and guide strategic conservation efforts. For instance, machine learning algorithms have been employed in several European museums to predict mold outbreaks in organic cultural relics based on microclimatic data, enabling timely interventions. In terms of preservation, AI contributes to digital restoration, 3D reconstruction, and non-invasive diagnostics. For example, deep learning models can help reconstruct missing parts of fragmented murals or manuscripts by learning visual patterns from intact sections. Additionally, hyperspectral imaging combined with AI analysis can uncover faded texts or underdrawings that are invisible to the human eye. These technologies not only extend the physical lifespan of cultural relics but also introduce innovative approaches to virtual preservation. Some institutions are already using AI in the reconstruction of historical architecture through photogrammetry and simulation of ancient environments for educational use. In the domain of research, AI notably enhances the capabilities of scholars to extract knowledge from vast, heterogeneous datasets. Natural language processing facilitates the digitization and analysis of ancient texts, while computer vision supports the classification of cultural relics based on style, origin, and function. Semantic knowledge graphs and AI-assisted databases promote cross-referencing across disciplines and collections, fostering highly integrated and interdisciplinary research outcomes. These tools are proving essential in digital humanities projects that aim to map large cultural corpora or trace stylistic influences across time and geography. The management of cultural heritage institutions and resources also benefits substantially from AI. Intelligent information systems can optimize inventory tracking, automate metadata tagging, and streamline exhibition logistics. Recommendation systems can be tailored to guide curatorial decisions and enhance user interaction. AI can also help balance conservation needs with public access by dynamically regulating visitor flow in sensitive exhibition areas. Moreover, the integration of blockchain with AI for provenance tracking is emerging as a promising area, enhancing the security and transparency of cultural relic records. In terms of utilization, AI is reshaping how cultural heritage is accessed and experienced—particularly in education, tourism, and public engagement. Virtual museums, intelligent chatbots, augmented reality (AR), and personalized content delivery are making cultural experiences highly interactive and accessible. AI-generated reconstructions and immersive simulations allow audiences to engage with history in immersive ways, expanding the reach of cultural heritage to new demographics and global audiences. Platforms such as Google Arts & Culture, along with various national museum initiatives, are increasingly leveraging AI for context-aware storytelling and multilingual access, making culture more inclusive and dynamic. Beyond practical applications, this paper also examines how traditional research methodologies are evolving in response to AI integration. The paper highlights the epistemological shifts occur as cultural interpretation moves from purely human-centered approaches to hybrid models that combine human expertise with computational inference. While AI presents powerful tools, it also raises critical questions about authenticity, authorship, and cultural sovereignty——especially when applied across diverse cultural contexts and communities. The co-construction of meaning between human curators and intelligent systems may enrich interpretations, but it also demands careful calibration of roles and responsibilities. Overall, the integration of AI into the field of cultural heritage presents an unprecedented opportunity and a profound responsibility. As we navigate this new landscape, balancing technological innovation and cultural sensitivity is essential, ensuring that AI serves as a tool for cultural empowerment, rather than erasure. This paper ultimately offers insights into the current landscape and future trajectory of AI in cultural heritage, advocating for collaborative, interdisciplinary efforts to harness the potential of AI while honoring the depth, diversity, and dignity of the world’s cultural legacies.关键词:cultural heritage;artificial intelligence (AI);deep learning;museums;cultural relic preservation305|524|0更新时间:2025-12-18 - “卫星视频单目标跟踪技术在军事和民用领域具有重要应用,面临目标尺寸小、相似目标干扰等挑战。专家总结了典型跟踪方法,为该领域研究提供新方向。”

摘要:In recent years, single-object tracking in satellite videos has gained substantial attention and plays a pivotal role in military and civilian domains. This tracking has found applications in urban-scale disaster relief, public security surveillance, and the monitoring of emergency events, among others. However, due to a combination of factors, such as small target size, interference from similar targets, motion blur, and complex backgrounds, single-object tracking in satellite videos presents numerous challenges. Aiming to promote further exploration in this domain by scholars domestically and internationally, this paper comprehensively reviews and critically analyzes the current state of the art in satellite video-based single-object tracking. Considering challenges and advantages, video satellites offer an expansive field of view. Targets such as vehicles typically occupy only a few to a dozen pixels in satellite videos, with limited distinguishing features or textures. Additionally, satellite videos contain many targets, and the distinguishability between the targets of interest and interfering objects is poor, presenting a high degree of similarity. Moreover, target blurring may occur due to their rapid target movement or satellite platform jitter. When the moving target is inconspicuous and background information overshadows target features, tracking failure is likely to occur. However, compared to ground-based or low-altitude videos, satellite video-based object tracking offers certain advantages. For example, external factors related to the target, such as the camera perspective, are relatively stable, aiding tracking algorithms in maintaining a consistent lock on the target. Most objects in satellite videos are rigid and rarely undergo substantial deformation during tracking. Additionally, the aspect ratios of targets remain approximately consistent across video frames, reducing the potential for algorithmic confusion. The motion of targets is typically straightforward, with trajectories generally following straight lines or smooth curves, enabling the prediction of target positions based on historical motion data. Regarding the development of tracking methods, this paper reviews the evolution of single-object tracking methods for satellite videos and highlights typical tracking paradigms, including generative-based approaches, correlation filter-based methods, and deep learning-based techniques. Deep learning-based tracking methods can be further classified into convolutional neural network (CNN)-based and Transformer-based methods. In contrast to the hand-crafted features employed in correlation filter-based methods, CNNs can extract more comprehensive and robust features, thereby enhancing target tracking performance. In recent years, an increasing number of scholars have applied CNNs to satellite video object tracking tasks. However, when processing high-resolution images, long time-series data, and complex backgrounds, which are common in satellite videos, CNNs exhibit certain limitations. Aiming to address these limitations, Transformers have been gradually introduced into satellite video object tracking. Transformers can capture global spatial information and long-term temporal dependencies, offering a promising alternative for improving tracking accuracy in complex scenarios. Regarding datasets and evaluation metrics, this study compiles existing single-object tracking datasets for satellite videos, along with commonly adopted performance evaluation metrics. Prominent datasets in this field include XDU-BDSTU, video satellite objects(VISO), SatSOT, and the oriented object tracking benchmark(OOTB). Among them, the VISO dataset is the largest in scale, comprising training and test subsets. The XDU-BDSTU dataset features images with a large swath width, making it suitable for long-term tracking tasks. The OOTB dataset provides annotations using rotated bounding boxes, which accurately represents the actual target geometry. The main performance evaluation metrics include precision, success rate, and frame rate, which collectively assess tracking methods in terms of tracking accuracy and speed. Aiming to evaluate the applicability of various tracking algorithms across different scenarios, this paper selects 18 algorithms for performance evaluation and analysis on a self-constructed test set. Experimental results highlight the critical roles of motion estimation, temporal information utilization, and background information exploitation in satellite video object tracking. Specifically, the correlation filter with motion estimation(CFME) algorithm leverages historical motion information of the target to enhance tracking performance, while the Trdimp algorithm incorporates temporal and background information, yielding favorable outcomes. When a vehicle makes a turn, the hand-crafted features employed by the correlation filter-based method CFME lack rotational invariance and are poorly equipped to handle changes in the target’s bounding box due to rotation, resulting in suboptimal tracking performance. Conversely, methods such as Trdimp and Trsiam directly estimate the target’s bounding box, while approaches such as siamese region proposal network(SiamRPN) and SiamRPN++ predefine anchor boxes with different aspect ratios, effectively addressing the challenge of in-plane rotation. Finally, in terms of future perspectives, this paper outlines the anticipated trajectory of single-object tracking algorithms for satellite videos across several key dimensions: standardizing evaluation metrics for tracking results, developing large-scale and high-quality satellite video object tracking datasets, devising models specifically tailored to satellite video tracking challenges, and enabling robust long-term tracking capabilities. In the domain of general video target tracking, commonly used evaluation metrics include those from the OTB and VOT benchmarks. For satellite video target tracking, scholars predominantly adopt the precision and success rate metrics defined by the OTB evaluation framework. In the OTB metrics for general videos, the precision threshold is customarily set to 20 pixels, and the success rate is evaluated based on the area under the curve (AUC) of the overlap score. However, in satellite video target tracking, researchers often adopt varying threshold settings, which hinders the objective evaluation of algorithms under a unified standard. Thus, standardizing evaluation metrics for tracking results is essential for the advancement of satellite video single-object tracking. Before the emergence of large-scale test datasets, most studies in satellite video object tracking verified algorithms using only a few targets, which restricted comprehensive algorithm performance assessment. Moreover, the use of different test dataset across studies has further hindered direct comparisons between algorithms. Consequently, the development of large-scale, high-quality satellite video object tracking datasets is urgently needed, not only for effective model training, but also for model testing and performance benchmarking. Future research could benefit from rapidly assimilating the latest advancements in general video object tracking domain and adapting them to the unique characteristics of satellite videos. Given the rich background information and the continuous, linear nature of target motion trajectories between adjacent frames in satellite videos, these priors can be fully leveraged to explore global spatial and temporal information, thereby enhancing tracking accuracy. Furthermore, techniques such as knowledge distillation, network pruning, and neural architecture search hold considerable potential for autonomously constructing streamlined, low-complexity models specifically tailored to satellite video single-object tracking. These approaches can enable high-precision, real-time target tracking under constrained computation resources. In contrast to ground-based surveillance videos, satellite videos offer broad coverage, making it possible to track trajectories across entire urban areas. However, in such large-scale scenarios, multiple challenges, such as occlusion, interference from similar objects, motion blur, illumination variation, and target rotation, often occur simultaneously. Aiming to address the demands of real-world applications, the development of satellite video tracking algorithms capable of simultaneously addressing these challenges is imperative.关键词:satellite video;single object tracking;correlation filtering;deep learning;Jilin-1 satellite163|161|0更新时间:2025-12-18

摘要:In recent years, single-object tracking in satellite videos has gained substantial attention and plays a pivotal role in military and civilian domains. This tracking has found applications in urban-scale disaster relief, public security surveillance, and the monitoring of emergency events, among others. However, due to a combination of factors, such as small target size, interference from similar targets, motion blur, and complex backgrounds, single-object tracking in satellite videos presents numerous challenges. Aiming to promote further exploration in this domain by scholars domestically and internationally, this paper comprehensively reviews and critically analyzes the current state of the art in satellite video-based single-object tracking. Considering challenges and advantages, video satellites offer an expansive field of view. Targets such as vehicles typically occupy only a few to a dozen pixels in satellite videos, with limited distinguishing features or textures. Additionally, satellite videos contain many targets, and the distinguishability between the targets of interest and interfering objects is poor, presenting a high degree of similarity. Moreover, target blurring may occur due to their rapid target movement or satellite platform jitter. When the moving target is inconspicuous and background information overshadows target features, tracking failure is likely to occur. However, compared to ground-based or low-altitude videos, satellite video-based object tracking offers certain advantages. For example, external factors related to the target, such as the camera perspective, are relatively stable, aiding tracking algorithms in maintaining a consistent lock on the target. Most objects in satellite videos are rigid and rarely undergo substantial deformation during tracking. Additionally, the aspect ratios of targets remain approximately consistent across video frames, reducing the potential for algorithmic confusion. The motion of targets is typically straightforward, with trajectories generally following straight lines or smooth curves, enabling the prediction of target positions based on historical motion data. Regarding the development of tracking methods, this paper reviews the evolution of single-object tracking methods for satellite videos and highlights typical tracking paradigms, including generative-based approaches, correlation filter-based methods, and deep learning-based techniques. Deep learning-based tracking methods can be further classified into convolutional neural network (CNN)-based and Transformer-based methods. In contrast to the hand-crafted features employed in correlation filter-based methods, CNNs can extract more comprehensive and robust features, thereby enhancing target tracking performance. In recent years, an increasing number of scholars have applied CNNs to satellite video object tracking tasks. However, when processing high-resolution images, long time-series data, and complex backgrounds, which are common in satellite videos, CNNs exhibit certain limitations. Aiming to address these limitations, Transformers have been gradually introduced into satellite video object tracking. Transformers can capture global spatial information and long-term temporal dependencies, offering a promising alternative for improving tracking accuracy in complex scenarios. Regarding datasets and evaluation metrics, this study compiles existing single-object tracking datasets for satellite videos, along with commonly adopted performance evaluation metrics. Prominent datasets in this field include XDU-BDSTU, video satellite objects(VISO), SatSOT, and the oriented object tracking benchmark(OOTB). Among them, the VISO dataset is the largest in scale, comprising training and test subsets. The XDU-BDSTU dataset features images with a large swath width, making it suitable for long-term tracking tasks. The OOTB dataset provides annotations using rotated bounding boxes, which accurately represents the actual target geometry. The main performance evaluation metrics include precision, success rate, and frame rate, which collectively assess tracking methods in terms of tracking accuracy and speed. Aiming to evaluate the applicability of various tracking algorithms across different scenarios, this paper selects 18 algorithms for performance evaluation and analysis on a self-constructed test set. Experimental results highlight the critical roles of motion estimation, temporal information utilization, and background information exploitation in satellite video object tracking. Specifically, the correlation filter with motion estimation(CFME) algorithm leverages historical motion information of the target to enhance tracking performance, while the Trdimp algorithm incorporates temporal and background information, yielding favorable outcomes. When a vehicle makes a turn, the hand-crafted features employed by the correlation filter-based method CFME lack rotational invariance and are poorly equipped to handle changes in the target’s bounding box due to rotation, resulting in suboptimal tracking performance. Conversely, methods such as Trdimp and Trsiam directly estimate the target’s bounding box, while approaches such as siamese region proposal network(SiamRPN) and SiamRPN++ predefine anchor boxes with different aspect ratios, effectively addressing the challenge of in-plane rotation. Finally, in terms of future perspectives, this paper outlines the anticipated trajectory of single-object tracking algorithms for satellite videos across several key dimensions: standardizing evaluation metrics for tracking results, developing large-scale and high-quality satellite video object tracking datasets, devising models specifically tailored to satellite video tracking challenges, and enabling robust long-term tracking capabilities. In the domain of general video target tracking, commonly used evaluation metrics include those from the OTB and VOT benchmarks. For satellite video target tracking, scholars predominantly adopt the precision and success rate metrics defined by the OTB evaluation framework. In the OTB metrics for general videos, the precision threshold is customarily set to 20 pixels, and the success rate is evaluated based on the area under the curve (AUC) of the overlap score. However, in satellite video target tracking, researchers often adopt varying threshold settings, which hinders the objective evaluation of algorithms under a unified standard. Thus, standardizing evaluation metrics for tracking results is essential for the advancement of satellite video single-object tracking. Before the emergence of large-scale test datasets, most studies in satellite video object tracking verified algorithms using only a few targets, which restricted comprehensive algorithm performance assessment. Moreover, the use of different test dataset across studies has further hindered direct comparisons between algorithms. Consequently, the development of large-scale, high-quality satellite video object tracking datasets is urgently needed, not only for effective model training, but also for model testing and performance benchmarking. Future research could benefit from rapidly assimilating the latest advancements in general video object tracking domain and adapting them to the unique characteristics of satellite videos. Given the rich background information and the continuous, linear nature of target motion trajectories between adjacent frames in satellite videos, these priors can be fully leveraged to explore global spatial and temporal information, thereby enhancing tracking accuracy. Furthermore, techniques such as knowledge distillation, network pruning, and neural architecture search hold considerable potential for autonomously constructing streamlined, low-complexity models specifically tailored to satellite video single-object tracking. These approaches can enable high-precision, real-time target tracking under constrained computation resources. In contrast to ground-based surveillance videos, satellite videos offer broad coverage, making it possible to track trajectories across entire urban areas. However, in such large-scale scenarios, multiple challenges, such as occlusion, interference from similar objects, motion blur, illumination variation, and target rotation, often occur simultaneously. Aiming to address the demands of real-world applications, the development of satellite video tracking algorithms capable of simultaneously addressing these challenges is imperative.关键词:satellite video;single object tracking;correlation filtering;deep learning;Jilin-1 satellite163|161|0更新时间:2025-12-18 - “在自然语言处理领域,大语言模型取得显著进展,但在视频问答领域仍面临挑战。本文系统回顾了视频问答模型的研究进展,为多模态人工智能发展提供新思路。”

摘要:In recent years, large language models (LLMs) have achieved remarkable progress in natural language processing (NLP), demonstrating exceptional capabilities in language understanding and generation. These advancements have driven widespread applications in tasks such as text generation, machine translation, question answering, text summarization, and text classification. However, despite their impressive performance in handling and generating text, LLMs face notable limitations when handling highly complex multimodal tasks, particularly in the domain of video question answering (Video QA). Video QA is a particularly challenging task that requires models to comprehend and generate responses based on dynamic visual content, which often includes temporal and auditory information. Unlike static images or purely textual contents, video data contains inherent temporal dependencies, where the meaning of events and actions unfolds over time. This temporal dimension adds substantial complexity to the understanding process because models must not only interpret individual frames but also maintain coherent understanding across sequences of frames within the broader video context. Consequently, effective Video QA demands advanced temporal information processing capabilities that many LLMs, primarily designed for static text, often struggle to handle adequately. Moreover, the multimodal nature of video, which often involves the integration of visual, auditory, and occasionally textual cues, further complicates the task. Effective Video QA requires the model to seamlessly fuse information across these different modalities, ensuring accurate interpretation and response to questions regarding video content. This process involves understanding visual scenes, recognizing speech or background sounds, and correlating them with the corresponding textual information. The challenge lies not only in processing each modality independently but also in establishing meaningful connections between them to generate coherent and contextually appropriate responses. This paper presents a comprehensive review of the current state of research on Video QA models based on large language models. The technical characteristics, strengths, and weaknesses of non-real-time and real-time Video QA models are also investigated. Non-real-time Video QA models typically operate on pre-recorded video content, allowing them to access and analyze the entire video sequence before generating responses. These models can leverage global contextual information, making such models particularly effective for tasks that require video content analysis, such as video summarization or detailed scene interpretation. However, they may struggle with efficiency and scalability, particularly when handling long videos or large datasets. In contrast, real-time Video QA models are designed to process video streams as they are received, increasing their suitability for applications requiring immediate responses, such as live video monitoring or interactive video systems. However, these models must maintain a balance between processing speed and accuracy due to their frequently limited access to the full temporal context of the video. The paper discusses the challenges encountered by these models in maintaining performance under real-time constraints, including efficient computation and prediction capability based on partial information. Additionally, the paper explores the commonly used datasets in Video QA research, highlighting their features, limitations, and the types of tasks they are designed to address. The evaluation of Video QA models is also examined, focusing on the metrics and benchmarks used to assess their performance. Understanding the strengths and weaknesses of different datasets is crucial for advancing the field, helping in the identification of gaps in current research and guiding the development of robust and versatile models. Finally, the paper addresses the extensive challenges and bottlenecks in the field of Video QA, including the difficulties in scaling models to handle large and diverse video datasets, the need for efficient multimodal fusion techniques, and the computational demands associated with video data processing in real-time. The discussion is further extended to consider the potential future research directions in Video QA, with particular emphasis on improving the temporal reasoning capabilities of LLMs, enhancing their multimodal integration, and developing efficient model architectures that can operate effectively under resource constraints. Overall, while large language models have presented new possibilities in the field of video interpretation, considerable challenges remain in adapting these models to the specific demands of Video QA. Through the systematic review of the current advancements and the presentation of the key obstacles and future directions, this paper aims to contribute to the ongoing efforts to develop highly capable and intelligent multimodal AI systems. The field must continue innovations in the following areas: temporal modeling, where novel architectures that can effectively capture long-range dependencies in video sequences are needed; multimodal representation learning, where sophisticated approaches for integrating visual, auditory, and textual features could yield substantial improvements. Furthermore, the development of highly efficient training paradigms that can address the computational intensity of video processing while retaining model performance is essential for practical applications. Another critical area for future work focuses on the creation of highly comprehensive and challenging benchmark datasets that effectively reflect real-world scenarios, pushing the boundaries of what current models can achieve. As research in this area progresses, addressing these challenges will be crucial for realizing the full potential of LLMs in video interpretation applications. Achieving this goal will require AI systems that can interpret and reason about dynamic visual content with a level of proficiency comparable to human cognition. The integration of advanced techniques from computer vision, speech processing, and natural language understanding will be pivotal in developing truly multimodal systems capable of managing the complexity and variability in real-world video data. Through continued innovation and interdisciplinary collaboration, the field can overcome current limitations and drive the development of next-generation video understanding technologies with broad applicability across domains such as education, entertainment, surveillance, and human-computer interaction.关键词:large language models(LLMs);video question answering(Video QA);multimodal information fusion;temporal information processing;video understanding168|183|0更新时间:2025-12-18

摘要:In recent years, large language models (LLMs) have achieved remarkable progress in natural language processing (NLP), demonstrating exceptional capabilities in language understanding and generation. These advancements have driven widespread applications in tasks such as text generation, machine translation, question answering, text summarization, and text classification. However, despite their impressive performance in handling and generating text, LLMs face notable limitations when handling highly complex multimodal tasks, particularly in the domain of video question answering (Video QA). Video QA is a particularly challenging task that requires models to comprehend and generate responses based on dynamic visual content, which often includes temporal and auditory information. Unlike static images or purely textual contents, video data contains inherent temporal dependencies, where the meaning of events and actions unfolds over time. This temporal dimension adds substantial complexity to the understanding process because models must not only interpret individual frames but also maintain coherent understanding across sequences of frames within the broader video context. Consequently, effective Video QA demands advanced temporal information processing capabilities that many LLMs, primarily designed for static text, often struggle to handle adequately. Moreover, the multimodal nature of video, which often involves the integration of visual, auditory, and occasionally textual cues, further complicates the task. Effective Video QA requires the model to seamlessly fuse information across these different modalities, ensuring accurate interpretation and response to questions regarding video content. This process involves understanding visual scenes, recognizing speech or background sounds, and correlating them with the corresponding textual information. The challenge lies not only in processing each modality independently but also in establishing meaningful connections between them to generate coherent and contextually appropriate responses. This paper presents a comprehensive review of the current state of research on Video QA models based on large language models. The technical characteristics, strengths, and weaknesses of non-real-time and real-time Video QA models are also investigated. Non-real-time Video QA models typically operate on pre-recorded video content, allowing them to access and analyze the entire video sequence before generating responses. These models can leverage global contextual information, making such models particularly effective for tasks that require video content analysis, such as video summarization or detailed scene interpretation. However, they may struggle with efficiency and scalability, particularly when handling long videos or large datasets. In contrast, real-time Video QA models are designed to process video streams as they are received, increasing their suitability for applications requiring immediate responses, such as live video monitoring or interactive video systems. However, these models must maintain a balance between processing speed and accuracy due to their frequently limited access to the full temporal context of the video. The paper discusses the challenges encountered by these models in maintaining performance under real-time constraints, including efficient computation and prediction capability based on partial information. Additionally, the paper explores the commonly used datasets in Video QA research, highlighting their features, limitations, and the types of tasks they are designed to address. The evaluation of Video QA models is also examined, focusing on the metrics and benchmarks used to assess their performance. Understanding the strengths and weaknesses of different datasets is crucial for advancing the field, helping in the identification of gaps in current research and guiding the development of robust and versatile models. Finally, the paper addresses the extensive challenges and bottlenecks in the field of Video QA, including the difficulties in scaling models to handle large and diverse video datasets, the need for efficient multimodal fusion techniques, and the computational demands associated with video data processing in real-time. The discussion is further extended to consider the potential future research directions in Video QA, with particular emphasis on improving the temporal reasoning capabilities of LLMs, enhancing their multimodal integration, and developing efficient model architectures that can operate effectively under resource constraints. Overall, while large language models have presented new possibilities in the field of video interpretation, considerable challenges remain in adapting these models to the specific demands of Video QA. Through the systematic review of the current advancements and the presentation of the key obstacles and future directions, this paper aims to contribute to the ongoing efforts to develop highly capable and intelligent multimodal AI systems. The field must continue innovations in the following areas: temporal modeling, where novel architectures that can effectively capture long-range dependencies in video sequences are needed; multimodal representation learning, where sophisticated approaches for integrating visual, auditory, and textual features could yield substantial improvements. Furthermore, the development of highly efficient training paradigms that can address the computational intensity of video processing while retaining model performance is essential for practical applications. Another critical area for future work focuses on the creation of highly comprehensive and challenging benchmark datasets that effectively reflect real-world scenarios, pushing the boundaries of what current models can achieve. As research in this area progresses, addressing these challenges will be crucial for realizing the full potential of LLMs in video interpretation applications. Achieving this goal will require AI systems that can interpret and reason about dynamic visual content with a level of proficiency comparable to human cognition. The integration of advanced techniques from computer vision, speech processing, and natural language understanding will be pivotal in developing truly multimodal systems capable of managing the complexity and variability in real-world video data. Through continued innovation and interdisciplinary collaboration, the field can overcome current limitations and drive the development of next-generation video understanding technologies with broad applicability across domains such as education, entertainment, surveillance, and human-computer interaction.关键词:large language models(LLMs);video question answering(Video QA);multimodal information fusion;temporal information processing;video understanding168|183|0更新时间:2025-12-18 - “点云深度学习网络在三维视觉领域取得显著进展,但面临旋转变换挑战。专家系统整理了旋转不变点云网络的研究内容和方法,为未来发展提供新方向。”

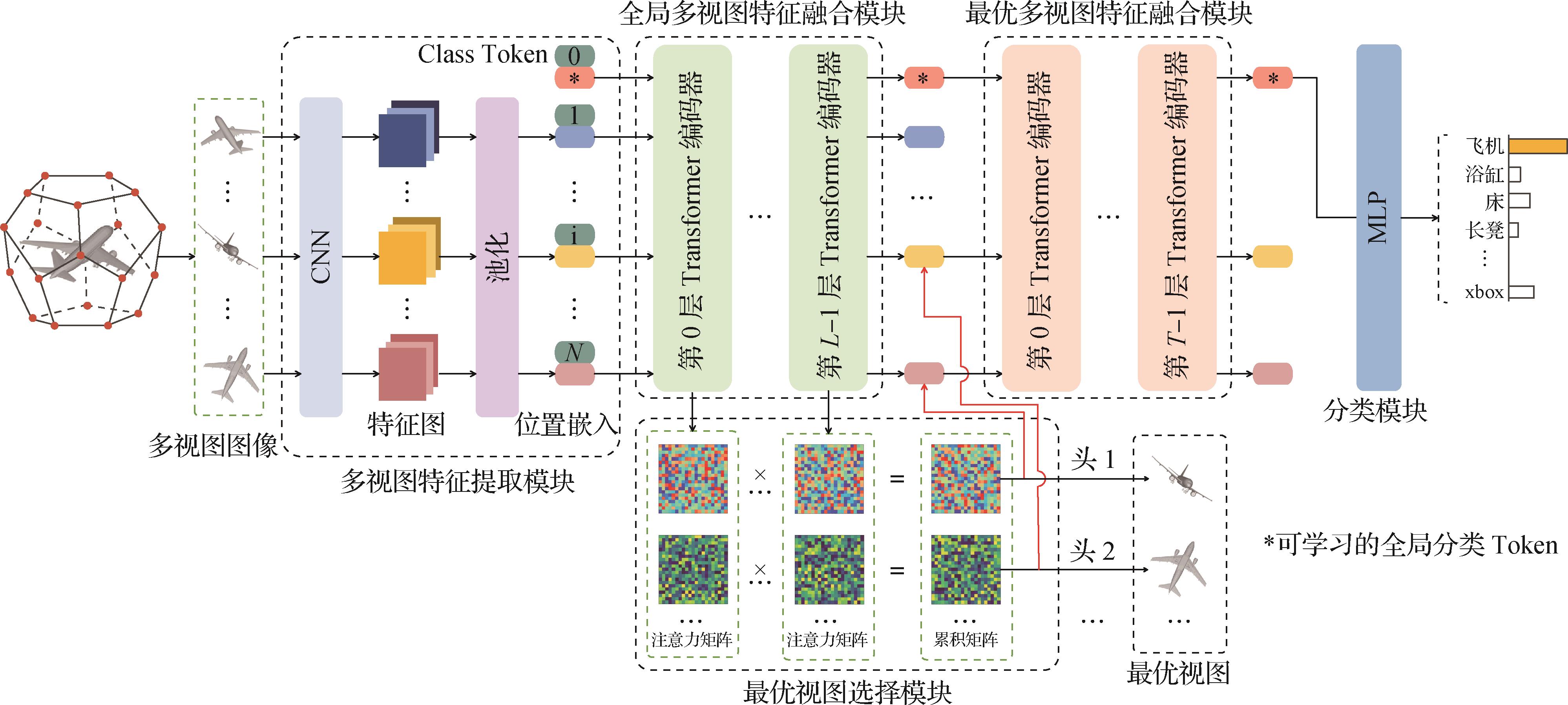

摘要:In recent years, deep learning networks for point clouds have achieved remarkable advancements, with their robust semantic understanding capabilities propelling research across the entire field of three-dimensional (3D) computer vision. These advancements have enabled accurate and efficient processing of 3D data, supporting applications in autonomous driving, robotics, remote sensing and mapping, and augmented reality. However, 3D point clouds often exhibit complex transformation symmetries, with rotation being a particularly challenging yet critical factor. The spatial coordinates of point clouds, which are the fundamental input to point cloud networks, undergo substantial changes, resulting in feature output variations. However, the semantic information embedded within point clouds theoretically remains consistent under various rotational transformations. This spatial variability substantially impacts the stability and reliability of conventional point cloud deep learning networks in semantic perception tasks, such as recognition, classification, and segmentation, reducing their effectiveness in real-world scenarios characterized by arbitrary orientations and poses. Early studies primarily relied on rotational data augmentation to enhance the robustness of point cloud networks against rotational variations. While data augmentation can improve generalization to some extent, it falls short of addressing the fundamental issue posed by the infinite and continuous nature of the rotation group. Acknowledging these limitations, an increasing number of researchers have shifted their focus toward designing rotation-invariant point cloud deep learning networks, which aim to mitigate the impact of rotation on feature extraction at the architectural level. Therefore, researchers seek to achieve consistent semantic perception regardless of point cloud orientation, thereby enhancing the applicability of deep learning models in real-world scenarios where data can be encountered in arbitrary poses. This paper presents a comprehensive survey of the current state of research on rotation-invariant point cloud networks. The research background is first outlined to highlight the importance of rotation invariance in 3D vision tasks and the challenges posed by rotational symmetries in point cloud data. Then, a systematic categorization of the prevailing mainstream methods is investigated. Particularly, the rotation-invariant point cloud networks can be broadly classified into the following three categories: 1) geometric-guided rotation-invariant methods: Using the traditional geometric analysis algorithms, these methods extract rotation-invariant geometric representations such as relative distances, angles, local reference frames, and canonical poses. These representations are then integrated into point cloud networks, facilitating learning of high-level semantic features and maintaining robustness to rotational transformations simultaneously. 2) Feature-guided rotation-invariant methods: These methods employ rotation-equivariant point cloud networks to extract point cloud representations that contain shape and pose information. Leveraging the inherent principles of equivariant networks, they subsequently remove the pose information from the rotation-equivariant representations, obtaining rotation-invariant point cloud features. 3) Training-guided rotation-invariant methods: These methods focus on designing sophisticated and highly generalizable rotational data augmentation training schemes, allowing non-rotation-invariant point cloud networks to gradually acquire robustness of rotations and achieve stable performance simultaneously. An in-depth analysis of the core concepts and algorithmic improvements that support these methods is provided for each category. The current research content on this issue and methodologies within the academic community are outlined, and the advantages and disadvantages of each method are summarized and compared. Subsequently, a comprehensive overview of the prevalent downstream tasks in the research of rotation-invariant point cloud networks is presented. These tasks include point cloud classification, point cloud segmentation, and point cloud retrieval. For each of these tasks, an in-depth discussion of the commonly employed datasets and evaluation metrics, which are essential for assessing network performance, is provided. Additionally, the quantitative performance metrics of mainstream rotation-invariant point cloud networks applied to these tasks are summarized and analyzed, offering a comparative perspective on their efficacy and robustness under rotational variations. Afterward, the downstream application prospects of rotation-invariant point cloud deep learning networks, including point cloud self-supervised representation learning, end-to-end point cloud registration, and point cloud completion, are examined and summarized. Finally, an outlook on future developments and research hotspots is presented. In addition to the ongoing development of new rotation-invariant point cloud networks, three primary issues warrant further research: 1) discrimination of effective geometric attributes. Current approaches are limited by the design of geometric attribute extraction algorithms. An in-depth discussion and determination of the effectiveness of different rotation-invariant geometric attributes within deep learning frameworks could yield novel insights and foster the development of innovative strategies to advance this field. 2) Highly integratable rotation-invariant mechanism. On the one hand, existing non-rotation-invariant point cloud networks continue to demonstrate strong performance on aligned data. The challenge lies in incorporating rotation invariance into these networks in a straightforward manner degrading their original performance. This challenge remains a key research topic because seamless integration requires innovative architectural designs and methodological approaches. On the other hand, rotation-invariant point cloud networks should also exhibit simplicity and reusability, enabling their direct application to downstream tasks with minimal adaptation. 3) High computational efficiency in invariant feature extraction modules. Although many existing methods demonstrate commendable performance, they often incur substantial time and computational costs, making it challenging to efficiently process large-scale point cloud data. Therefore, designing more efficient rotation-invariant point cloud networks that maintain robust feature extraction capabilities while minimizing computational overhead is crucial. Addressing the aforementioned challenges will notably enhance the effectiveness and practicality of rotation-invariant point cloud deep learning networks, facilitating their widespread adoption in complex 3D environments. This survey aims to provide researchers in 3D computer vision with a foundational understanding of current methodologies, highlight key challenges, and suggest potential avenues for future research.关键词:three-dimensional vision;deep learning;Point cloud network;rotation invariance;Rotation equivariance115|138|0更新时间:2025-12-18

摘要:In recent years, deep learning networks for point clouds have achieved remarkable advancements, with their robust semantic understanding capabilities propelling research across the entire field of three-dimensional (3D) computer vision. These advancements have enabled accurate and efficient processing of 3D data, supporting applications in autonomous driving, robotics, remote sensing and mapping, and augmented reality. However, 3D point clouds often exhibit complex transformation symmetries, with rotation being a particularly challenging yet critical factor. The spatial coordinates of point clouds, which are the fundamental input to point cloud networks, undergo substantial changes, resulting in feature output variations. However, the semantic information embedded within point clouds theoretically remains consistent under various rotational transformations. This spatial variability substantially impacts the stability and reliability of conventional point cloud deep learning networks in semantic perception tasks, such as recognition, classification, and segmentation, reducing their effectiveness in real-world scenarios characterized by arbitrary orientations and poses. Early studies primarily relied on rotational data augmentation to enhance the robustness of point cloud networks against rotational variations. While data augmentation can improve generalization to some extent, it falls short of addressing the fundamental issue posed by the infinite and continuous nature of the rotation group. Acknowledging these limitations, an increasing number of researchers have shifted their focus toward designing rotation-invariant point cloud deep learning networks, which aim to mitigate the impact of rotation on feature extraction at the architectural level. Therefore, researchers seek to achieve consistent semantic perception regardless of point cloud orientation, thereby enhancing the applicability of deep learning models in real-world scenarios where data can be encountered in arbitrary poses. This paper presents a comprehensive survey of the current state of research on rotation-invariant point cloud networks. The research background is first outlined to highlight the importance of rotation invariance in 3D vision tasks and the challenges posed by rotational symmetries in point cloud data. Then, a systematic categorization of the prevailing mainstream methods is investigated. Particularly, the rotation-invariant point cloud networks can be broadly classified into the following three categories: 1) geometric-guided rotation-invariant methods: Using the traditional geometric analysis algorithms, these methods extract rotation-invariant geometric representations such as relative distances, angles, local reference frames, and canonical poses. These representations are then integrated into point cloud networks, facilitating learning of high-level semantic features and maintaining robustness to rotational transformations simultaneously. 2) Feature-guided rotation-invariant methods: These methods employ rotation-equivariant point cloud networks to extract point cloud representations that contain shape and pose information. Leveraging the inherent principles of equivariant networks, they subsequently remove the pose information from the rotation-equivariant representations, obtaining rotation-invariant point cloud features. 3) Training-guided rotation-invariant methods: These methods focus on designing sophisticated and highly generalizable rotational data augmentation training schemes, allowing non-rotation-invariant point cloud networks to gradually acquire robustness of rotations and achieve stable performance simultaneously. An in-depth analysis of the core concepts and algorithmic improvements that support these methods is provided for each category. The current research content on this issue and methodologies within the academic community are outlined, and the advantages and disadvantages of each method are summarized and compared. Subsequently, a comprehensive overview of the prevalent downstream tasks in the research of rotation-invariant point cloud networks is presented. These tasks include point cloud classification, point cloud segmentation, and point cloud retrieval. For each of these tasks, an in-depth discussion of the commonly employed datasets and evaluation metrics, which are essential for assessing network performance, is provided. Additionally, the quantitative performance metrics of mainstream rotation-invariant point cloud networks applied to these tasks are summarized and analyzed, offering a comparative perspective on their efficacy and robustness under rotational variations. Afterward, the downstream application prospects of rotation-invariant point cloud deep learning networks, including point cloud self-supervised representation learning, end-to-end point cloud registration, and point cloud completion, are examined and summarized. Finally, an outlook on future developments and research hotspots is presented. In addition to the ongoing development of new rotation-invariant point cloud networks, three primary issues warrant further research: 1) discrimination of effective geometric attributes. Current approaches are limited by the design of geometric attribute extraction algorithms. An in-depth discussion and determination of the effectiveness of different rotation-invariant geometric attributes within deep learning frameworks could yield novel insights and foster the development of innovative strategies to advance this field. 2) Highly integratable rotation-invariant mechanism. On the one hand, existing non-rotation-invariant point cloud networks continue to demonstrate strong performance on aligned data. The challenge lies in incorporating rotation invariance into these networks in a straightforward manner degrading their original performance. This challenge remains a key research topic because seamless integration requires innovative architectural designs and methodological approaches. On the other hand, rotation-invariant point cloud networks should also exhibit simplicity and reusability, enabling their direct application to downstream tasks with minimal adaptation. 3) High computational efficiency in invariant feature extraction modules. Although many existing methods demonstrate commendable performance, they often incur substantial time and computational costs, making it challenging to efficiently process large-scale point cloud data. Therefore, designing more efficient rotation-invariant point cloud networks that maintain robust feature extraction capabilities while minimizing computational overhead is crucial. Addressing the aforementioned challenges will notably enhance the effectiveness and practicality of rotation-invariant point cloud deep learning networks, facilitating their widespread adoption in complex 3D environments. This survey aims to provide researchers in 3D computer vision with a foundational understanding of current methodologies, highlight key challenges, and suggest potential avenues for future research.关键词:three-dimensional vision;deep learning;Point cloud network;rotation invariance;Rotation equivariance115|138|0更新时间:2025-12-18

Review

- “在人脸图像年龄估计领域,研究者提出了一种新的开集半监督多任务学习方法,有效提升了年龄估计精度,并充分利用无标签数据集优化性能。”

摘要:ObjectiveFacial age estimation from images constitutes a prominent area of research within the field of computer vision, offering extensive potential applications in fields such as biometrics, digital marketing, healthcare, and human-computer interaction. Despite substantial efforts by numerous researchers in this field, achieving accurate facial age estimation remains a formidable challenge, primarily due to the lack of high-quality, large-scale labeled datasets for facial age estimation. The manual annotation of facial datasets necessitates considerable time and financial costs. Semi-supervised learning has emerged as a promising strategy for solving this problem because it enables the simultaneous utilization of labeled and unlabeled data. However, achieving satisfactory results in the domain of facial age estimation using semi-supervised learning methods is difficult. This difficulty arises from the limited accuracy of the pseudo-labels produced by these methods, as well as their susceptibility to the influence of outlier data. These factors hinder the effective utilization of unlabeled data, consequently limiting overall performance. Aiming to address these challenges, optimizing the capability of the model to extract features is essential. Such improvements will facilitate the effective acquisition of valuable representations from unlabeled data, thereby yielding highly precise pseudo-labels. Additionally, establishing a semi-supervised learning framework that can adeptly manage the challenges associated with outlier data while optimizing the utilization of the unlabeled dataset is crucial. Consequently, this study presents an open-set semi-supervised multi-task approach for facial age estimation.MethodThis research presents the SwinLEDF model to optimize the capability of the model to extract local and global features from facial images. This model is based on the Swin Transformer architecture and integrates local enhanced feedforward (LEFF) modules along with dynamic filter networks (DFNs). The Swin Transformer demonstrates proficient capabilities in capturing long-range dependencies and global characteristics, particularly in the analysis of age-related trends and the overall morphology of facial structures. The LEFF module incorporates non-linear transformations at the feature level, facilitating the identification of local patterns within images or feature representations. This capability is essential for differentiating age-related attributes, including intricate details such as wrinkles and skin texture. The DFN module implements a dynamic filtering operation within the spatial dimension of the model’s output, thereby enhancing model flexibility and adaptability. Furthermore, this research presents an open-set semi-supervised multitask learning algorithm to optimize the use of labeled and unlabeled data. In this algorithm, the model assesses the probability of unlabeled data being classified as outliers by integrating the outcomes of a closed-set classifier and a multi-class binary classifier. Subsequently, the model generates pseudo-labels for non-outlier data that meet a specified confidence threshold. Additionally, the model simultaneously learns to estimate sex, race, and age using labeled and unlabeled data. Through this process, the model learns not only the unique characteristics associated with each specific task but also the interrelationships among gender, race, and age, thereby enhancing the capability of the model to process diverse data and increases its expressive power and robustness. Furthermore, the process enables the effective utilization of unlabeled datasets, addressing the challenge of limited labeled data in the field of age estimation. This study employs an adaptive threshold mechanism and a negative learning strategy to optimize the use of unlabeled data. The adaptive threshold mechanism dynamically adjusts the confidence threshold for pseudo-labels based on the model’s training performance across different categories, effectively addressing category imbalance and improving the precision of pseudo-label production. The negative learning strategy enhances the handling of unlabeled data by identifying categories to which the input data does not belong, thereby mitigating the adverse effects of false pseudo-labels on model performance.ResultThis study assesses the proposed methodology using the MORPH and UTKface datasets. On the MORPH dataset, the model exhibits a mean absolute error (MAE) of 1.908 when trained solely on labeled data. This error is further reduced to 1.885 with the inclusion of labeled and unlabeled datasets. Similarly, for the UTKface dataset, the initial MAE is recorded at 4.343 using only labeled datasets, which subsequently reduces to 4.246 following the integration of labeled and unlabeled datasets. Compared to current facial age estimation methods, the proposed approach exhibits superior performance and further optimizes its accuracy by leveraging unlabeled facial datasets.ConclusionThis study introduces an open-set semi-supervised multi-task learning method for facial age estimation. The proposed method effectively extracts gender, race, and age attributes from facial images while leveraging unlabeled data and appropriately handling potential outliers. This approach addresses the challenges associated with limited labeled data, thereby enhancing the accuracy of facial age estimation. Furthermore, the methodology presents innovative strategies for achieving precise results and holds strong potential for practical applications.关键词:facial age estimation;open-set semi-supervised learning;multi-task learning;SwinLEDF model;pseudo-label156|197|0更新时间:2025-12-18

摘要:ObjectiveFacial age estimation from images constitutes a prominent area of research within the field of computer vision, offering extensive potential applications in fields such as biometrics, digital marketing, healthcare, and human-computer interaction. Despite substantial efforts by numerous researchers in this field, achieving accurate facial age estimation remains a formidable challenge, primarily due to the lack of high-quality, large-scale labeled datasets for facial age estimation. The manual annotation of facial datasets necessitates considerable time and financial costs. Semi-supervised learning has emerged as a promising strategy for solving this problem because it enables the simultaneous utilization of labeled and unlabeled data. However, achieving satisfactory results in the domain of facial age estimation using semi-supervised learning methods is difficult. This difficulty arises from the limited accuracy of the pseudo-labels produced by these methods, as well as their susceptibility to the influence of outlier data. These factors hinder the effective utilization of unlabeled data, consequently limiting overall performance. Aiming to address these challenges, optimizing the capability of the model to extract features is essential. Such improvements will facilitate the effective acquisition of valuable representations from unlabeled data, thereby yielding highly precise pseudo-labels. Additionally, establishing a semi-supervised learning framework that can adeptly manage the challenges associated with outlier data while optimizing the utilization of the unlabeled dataset is crucial. Consequently, this study presents an open-set semi-supervised multi-task approach for facial age estimation.MethodThis research presents the SwinLEDF model to optimize the capability of the model to extract local and global features from facial images. This model is based on the Swin Transformer architecture and integrates local enhanced feedforward (LEFF) modules along with dynamic filter networks (DFNs). The Swin Transformer demonstrates proficient capabilities in capturing long-range dependencies and global characteristics, particularly in the analysis of age-related trends and the overall morphology of facial structures. The LEFF module incorporates non-linear transformations at the feature level, facilitating the identification of local patterns within images or feature representations. This capability is essential for differentiating age-related attributes, including intricate details such as wrinkles and skin texture. The DFN module implements a dynamic filtering operation within the spatial dimension of the model’s output, thereby enhancing model flexibility and adaptability. Furthermore, this research presents an open-set semi-supervised multitask learning algorithm to optimize the use of labeled and unlabeled data. In this algorithm, the model assesses the probability of unlabeled data being classified as outliers by integrating the outcomes of a closed-set classifier and a multi-class binary classifier. Subsequently, the model generates pseudo-labels for non-outlier data that meet a specified confidence threshold. Additionally, the model simultaneously learns to estimate sex, race, and age using labeled and unlabeled data. Through this process, the model learns not only the unique characteristics associated with each specific task but also the interrelationships among gender, race, and age, thereby enhancing the capability of the model to process diverse data and increases its expressive power and robustness. Furthermore, the process enables the effective utilization of unlabeled datasets, addressing the challenge of limited labeled data in the field of age estimation. This study employs an adaptive threshold mechanism and a negative learning strategy to optimize the use of unlabeled data. The adaptive threshold mechanism dynamically adjusts the confidence threshold for pseudo-labels based on the model’s training performance across different categories, effectively addressing category imbalance and improving the precision of pseudo-label production. The negative learning strategy enhances the handling of unlabeled data by identifying categories to which the input data does not belong, thereby mitigating the adverse effects of false pseudo-labels on model performance.ResultThis study assesses the proposed methodology using the MORPH and UTKface datasets. On the MORPH dataset, the model exhibits a mean absolute error (MAE) of 1.908 when trained solely on labeled data. This error is further reduced to 1.885 with the inclusion of labeled and unlabeled datasets. Similarly, for the UTKface dataset, the initial MAE is recorded at 4.343 using only labeled datasets, which subsequently reduces to 4.246 following the integration of labeled and unlabeled datasets. Compared to current facial age estimation methods, the proposed approach exhibits superior performance and further optimizes its accuracy by leveraging unlabeled facial datasets.ConclusionThis study introduces an open-set semi-supervised multi-task learning method for facial age estimation. The proposed method effectively extracts gender, race, and age attributes from facial images while leveraging unlabeled data and appropriately handling potential outliers. This approach addresses the challenges associated with limited labeled data, thereby enhancing the accuracy of facial age estimation. Furthermore, the methodology presents innovative strategies for achieving precise results and holds strong potential for practical applications.关键词:facial age estimation;open-set semi-supervised learning;multi-task learning;SwinLEDF model;pseudo-label156|197|0更新时间:2025-12-18 - “在轨道缺陷检测领域,研究者提出了LPCANet模型,有效提升了检测速度和精度,具有实际应用价值。”

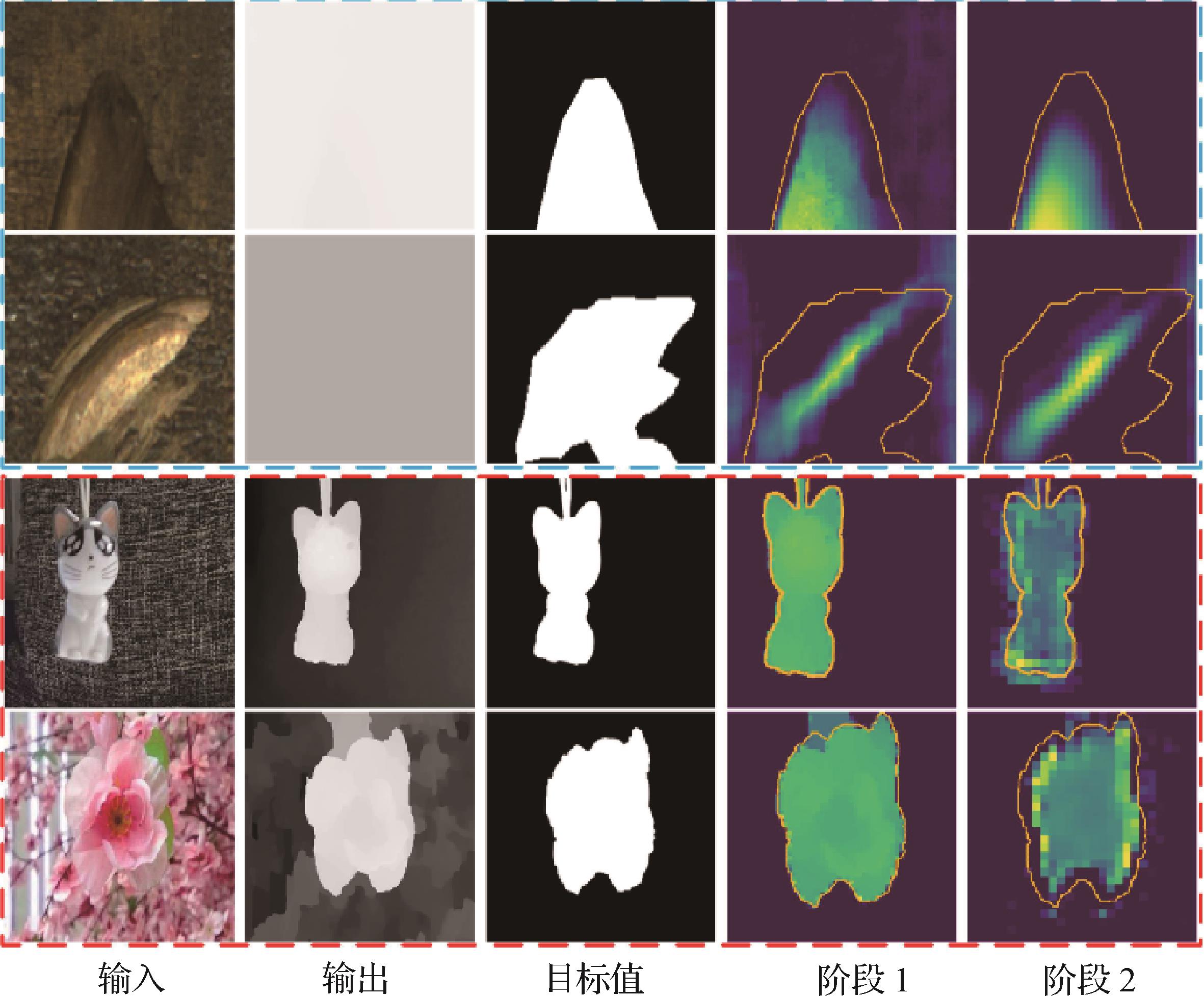

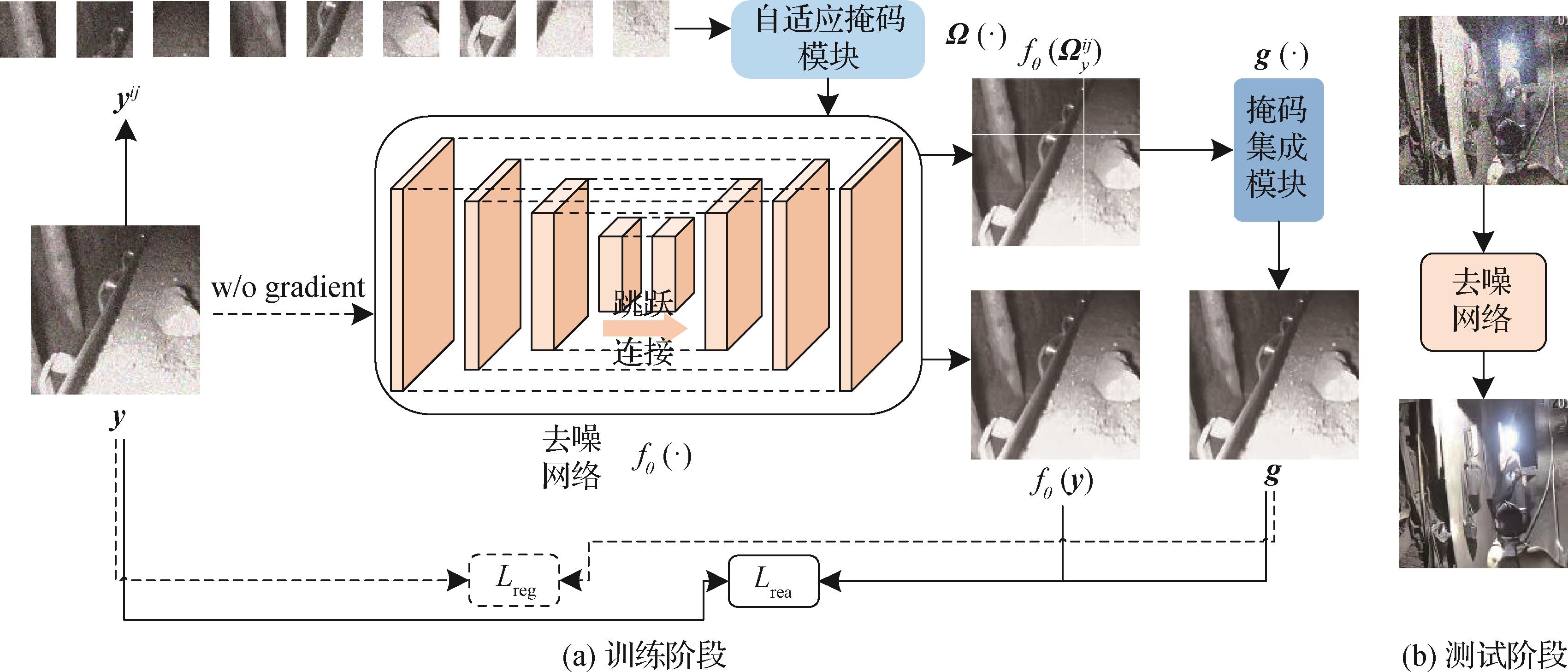

摘要:ObjectiveMost existing vision-based rail defect detection methods face challenges such as high parameter counts, computational complexity, slow detection speeds, and limited accuracy. Aiming to overcome these limitations, this paper introduces a lightweight pyramid cross-attention network (LPCANet) for orbital image defect detection using RGB images and depth images.MethodLPCANet adopts MobileNetv2 as its backbone network to extract multiscale feature maps from RGB images. Simultaneously, a lightweight pyramid module (LPM) is employed to extract similarly-sized feature maps from depth images. Each stage of the LPM comprises a sequence of operations including max pooling, a 3 × 3 convolutional layer, batch normalization, and ReLU activation, enabling efficient extraction of features from depth images. By leveraging deep learning, RGB-D technology, and salient object detection, LPCANet efficiently extracts multiscale feature representations from RGB and depth data. The LPM handles depth image features, while the backbone captures detailed pyramid features from RGB images. Subsequently, a cross-attention mechanism (CAM) is applied to integrate the feature maps from both modalities, enhancing the network’s focus on relevant defect regions. Additionally, a spatial feature extractor (SFE) is introduced to further boost defect detection performance. Finally, a “pixel shuffle” operation is used to restore the output to the original image resolution.ResultThe proposed scheme was computationally evaluated using the PyTorch library in an environment equipped with an NVIDIA 3090 GPU, alongside several benchmark models for comparison. For the evaluation of LPCANet, three publicly available unsupervised RGB-D rail datasets were used: NEU-RSDDS-AUG, RSDD-TYPE1, and RSDD-TYPE2. Experimental results on the NEU-RSDDS-AUG dataset indicate that LPCANet achieves excellent efficiency, with 9.90 million parameters, a computational complexity of 2.50 G, a model size of 37.95 MB, and a running speed of 162.60 frames per second. Compared to 18 existing rail defect detection schemes, LPCANet exhibits superior lightness in performance. In particular, when compared against CSEPNet, the current best-performing model, LPCANet achieves improvements across several evaluation metrics: +1.48% in

摘要:ObjectiveMost existing vision-based rail defect detection methods face challenges such as high parameter counts, computational complexity, slow detection speeds, and limited accuracy. Aiming to overcome these limitations, this paper introduces a lightweight pyramid cross-attention network (LPCANet) for orbital image defect detection using RGB images and depth images.MethodLPCANet adopts MobileNetv2 as its backbone network to extract multiscale feature maps from RGB images. Simultaneously, a lightweight pyramid module (LPM) is employed to extract similarly-sized feature maps from depth images. Each stage of the LPM comprises a sequence of operations including max pooling, a 3 × 3 convolutional layer, batch normalization, and ReLU activation, enabling efficient extraction of features from depth images. By leveraging deep learning, RGB-D technology, and salient object detection, LPCANet efficiently extracts multiscale feature representations from RGB and depth data. The LPM handles depth image features, while the backbone captures detailed pyramid features from RGB images. Subsequently, a cross-attention mechanism (CAM) is applied to integrate the feature maps from both modalities, enhancing the network’s focus on relevant defect regions. Additionally, a spatial feature extractor (SFE) is introduced to further boost defect detection performance. Finally, a “pixel shuffle” operation is used to restore the output to the original image resolution.ResultThe proposed scheme was computationally evaluated using the PyTorch library in an environment equipped with an NVIDIA 3090 GPU, alongside several benchmark models for comparison. For the evaluation of LPCANet, three publicly available unsupervised RGB-D rail datasets were used: NEU-RSDDS-AUG, RSDD-TYPE1, and RSDD-TYPE2. Experimental results on the NEU-RSDDS-AUG dataset indicate that LPCANet achieves excellent efficiency, with 9.90 million parameters, a computational complexity of 2.50 G, a model size of 37.95 MB, and a running speed of 162.60 frames per second. Compared to 18 existing rail defect detection schemes, LPCANet exhibits superior lightness in performance. In particular, when compared against CSEPNet, the current best-performing model, LPCANet achieves improvements across several evaluation metrics: +1.48% in

Image Analysis and Recognition

- “在显著目标检测领域,研究者提出了一种RGB-D显著目标检测方法,通过跨模态特征融合与边缘细节增强,有效提高了检测性能。”