最新刊期

卷 29 , 期 9 , 2024

数字人建模、生成与渲染技术

-

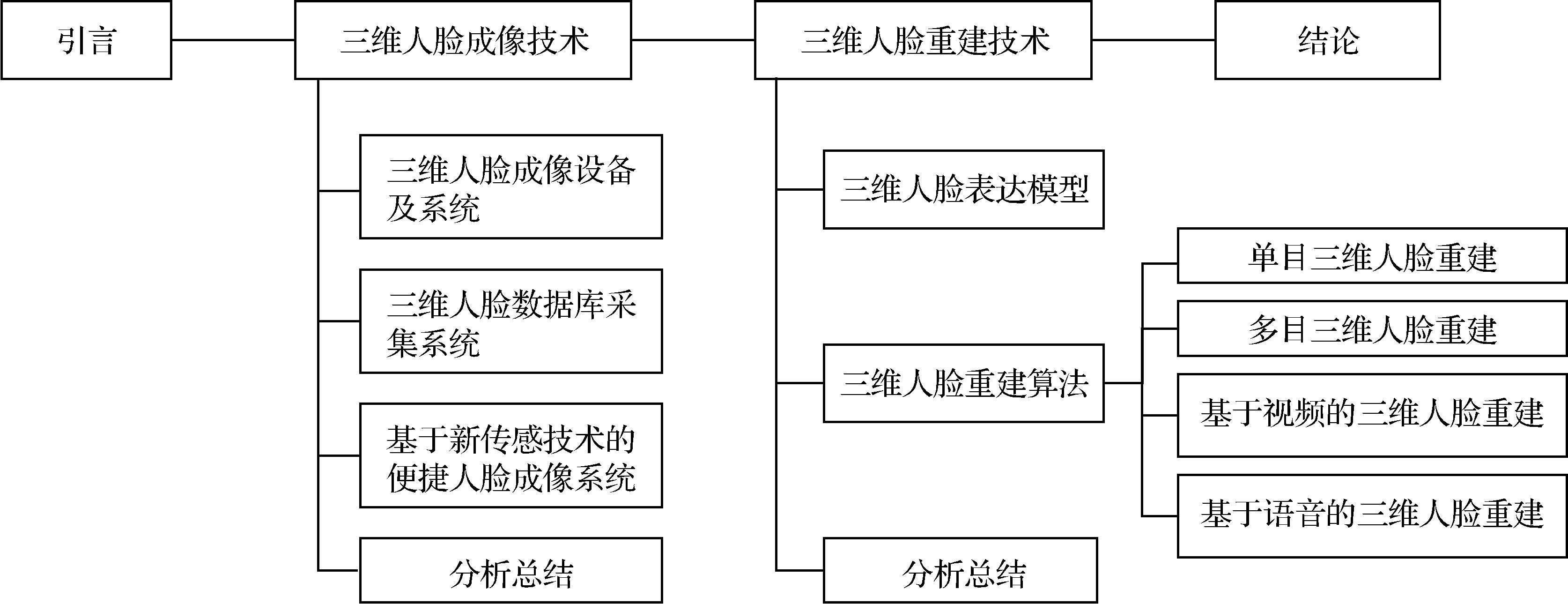

摘要:As the breakthrough technology of artificial intelligence (AI) in the big data era, deep learning (DL) has prompted the renewed upsurge of face technology. Powered by rapid developments of new technologies, such as three-dimensional (3D) vision measurement, image processing chips, and DL models, 3D vision transformed into a key supporting technology in AI, visual reality, etc. The studies and applications of 3D facial imaging and reconstruction technologies have achieved important breakthroughs. 3D face data represent exact multidimensional facial attributes on account of rich visual information, such as texture, shape, space, etc. Moreover, 3D face data shows robust changes in large occlusions, expressions, and poses and increases the difficulty of forgery attack. Therefore, 3D face imaging and reconstruction effectively promote realistic “virtual digital human” reconstruction and rendering. In addition, these processes contribute to the improved security of the face system. In this paper, we comprehensively study the 3D face imaging technology and reconstruction models. The 3D face reconstruction methods based on DL are systematically and deeply analyzed. First, the development and innovation of 3D face imaging devices and capturing systems are discussed through a summary of public 3D face datasets. The devices and systems include consumer imaging devices (such as Kinect) and complex hybrid systems that fuse active and passive 3D imaging technologies to achieve precise geometry and appearance. Moreover, 3D face imaging based on new sensing technologies are introduced. Then, from the perspective of input resources, 3D face reconstruction methods based on DL are categorized into monocular, multiview, video and audio reconstruction methods. 3D face imaging technology introduces public classic 3D face datasets, popular 3D face imaging devices, and capturing systems. Most high-quality 3D face datasets, such as BU-3DFE, FaceScape and FaceVerse, are captured through a large imaging volume with a certain number of high-resolution cameras and controlled lighting conditions. They play key roles in applications of realistic rendering, driven animation, retargeting, etc. On the other hand, novel optical devices and imaging modules with small size and lightweight algorithm must be innovated for tiny AI as intelligent mobile devices. For 3D face reconstruction based on DL, monocular reconstruction has become the most popular technology. The state-of-the-art 3D face reconstruction method is generally self-supervised training on large-scale 2D face databases. The difficulties encountered in 3D face reconstruction include the lack of large-scale 3D face datasets, occlusions and poses of in-the-wild 2D face images, continuous expression deformations, etc. The DL network structure is categorized into general deep convolutional neural network (such as ResNet, U-Net, and Autoencoder), generative adversarial networks (GANs), implicit neural representation (INR) (such as neural radiance field (NeRF) and signed distance functions (SDF)), and Transformer. 3DMM and FLAME are widely used 3D face representation models. The StyleGAN model gives excellent performance in recovering high-quality face texture. INR has achieved remarkable results in 3D scene reconstruction, and the NeRF model plays an important role in the reconstruction of accurate head avatars. The combination of NeRF with GAN shows great potential in the reconstruction of high-fidelity 3D face geometry and realistic rendering appearances. Moreover, the Transformer model, which greatly improves the breakthrough of accuracy and speed, is mainly used in audio-driven 3D face reconstruction. Through in-depth analyses, the research difficulties accompanying 3D face are summarized, and future developments are actively being discussed and explored. Although recent research has made amazing progresses, challenges on how to improve the robustness and generalization to real-world lighting, extreme expressions/poses, and how to effectively disentangle facial attributes (such as identity, expression, albedo, and specular reflectance) and recover accurate detailed geometry of facial motions (such as wrinkles). In this study, we proposed a comprehensive and systematic review and covered classical technologies and studies on 3D face imaging and reconstruction in the last five years to provide a good reference for face studies, developments, and applications.关键词:3D face imaging;3D face reconstruction;deep learning (DL);generative adversarial network (GAN);implicit neural representation (INR)907|1010|0更新时间:2024-09-18

摘要:As the breakthrough technology of artificial intelligence (AI) in the big data era, deep learning (DL) has prompted the renewed upsurge of face technology. Powered by rapid developments of new technologies, such as three-dimensional (3D) vision measurement, image processing chips, and DL models, 3D vision transformed into a key supporting technology in AI, visual reality, etc. The studies and applications of 3D facial imaging and reconstruction technologies have achieved important breakthroughs. 3D face data represent exact multidimensional facial attributes on account of rich visual information, such as texture, shape, space, etc. Moreover, 3D face data shows robust changes in large occlusions, expressions, and poses and increases the difficulty of forgery attack. Therefore, 3D face imaging and reconstruction effectively promote realistic “virtual digital human” reconstruction and rendering. In addition, these processes contribute to the improved security of the face system. In this paper, we comprehensively study the 3D face imaging technology and reconstruction models. The 3D face reconstruction methods based on DL are systematically and deeply analyzed. First, the development and innovation of 3D face imaging devices and capturing systems are discussed through a summary of public 3D face datasets. The devices and systems include consumer imaging devices (such as Kinect) and complex hybrid systems that fuse active and passive 3D imaging technologies to achieve precise geometry and appearance. Moreover, 3D face imaging based on new sensing technologies are introduced. Then, from the perspective of input resources, 3D face reconstruction methods based on DL are categorized into monocular, multiview, video and audio reconstruction methods. 3D face imaging technology introduces public classic 3D face datasets, popular 3D face imaging devices, and capturing systems. Most high-quality 3D face datasets, such as BU-3DFE, FaceScape and FaceVerse, are captured through a large imaging volume with a certain number of high-resolution cameras and controlled lighting conditions. They play key roles in applications of realistic rendering, driven animation, retargeting, etc. On the other hand, novel optical devices and imaging modules with small size and lightweight algorithm must be innovated for tiny AI as intelligent mobile devices. For 3D face reconstruction based on DL, monocular reconstruction has become the most popular technology. The state-of-the-art 3D face reconstruction method is generally self-supervised training on large-scale 2D face databases. The difficulties encountered in 3D face reconstruction include the lack of large-scale 3D face datasets, occlusions and poses of in-the-wild 2D face images, continuous expression deformations, etc. The DL network structure is categorized into general deep convolutional neural network (such as ResNet, U-Net, and Autoencoder), generative adversarial networks (GANs), implicit neural representation (INR) (such as neural radiance field (NeRF) and signed distance functions (SDF)), and Transformer. 3DMM and FLAME are widely used 3D face representation models. The StyleGAN model gives excellent performance in recovering high-quality face texture. INR has achieved remarkable results in 3D scene reconstruction, and the NeRF model plays an important role in the reconstruction of accurate head avatars. The combination of NeRF with GAN shows great potential in the reconstruction of high-fidelity 3D face geometry and realistic rendering appearances. Moreover, the Transformer model, which greatly improves the breakthrough of accuracy and speed, is mainly used in audio-driven 3D face reconstruction. Through in-depth analyses, the research difficulties accompanying 3D face are summarized, and future developments are actively being discussed and explored. Although recent research has made amazing progresses, challenges on how to improve the robustness and generalization to real-world lighting, extreme expressions/poses, and how to effectively disentangle facial attributes (such as identity, expression, albedo, and specular reflectance) and recover accurate detailed geometry of facial motions (such as wrinkles). In this study, we proposed a comprehensive and systematic review and covered classical technologies and studies on 3D face imaging and reconstruction in the last five years to provide a good reference for face studies, developments, and applications.关键词:3D face imaging;3D face reconstruction;deep learning (DL);generative adversarial network (GAN);implicit neural representation (INR)907|1010|0更新时间:2024-09-18 -

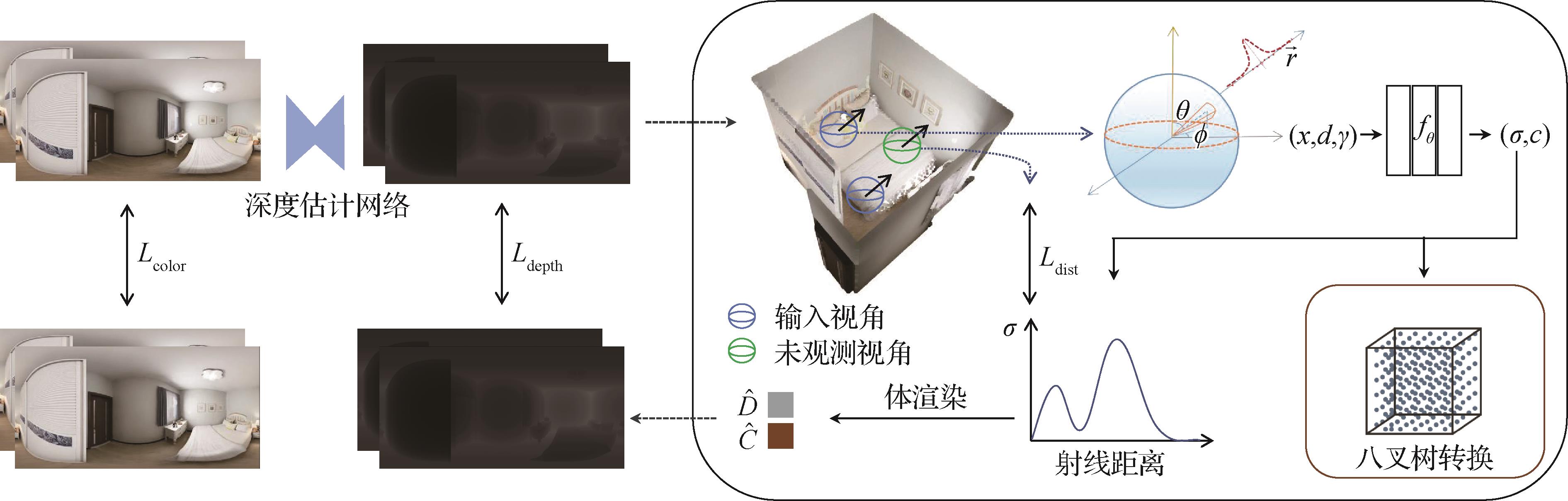

摘要:During continuous development of computer graphics and human-computer interaction, digital three-dimensional (3D) scenes have played a vital role in the academy and industry. 3D scenes show graphical rendering results, supply the environment for applications, and provide a foundation for interaction. Despite being common occurrences, indoor scenes are important. To increase players’ gaming experience, indoor game designers require all kinds of aesthetic digital 3D scenes. In online scene decoration, designers also need to predesign the decoration and furniture layout preview by interacting with 3D scenes. In studies of virtual reality, we can synthesize virtual space from a digital 3D scene, such as the synthesis of training data for wheelchair users. However, a number of difficulties still need to be overcome to obtain ideal digital 3D scenes for the applications mentioned above. First, manually synthesized 3D scenes are usually time consuming and require considerable experience. Designers must add objects to a scene and adjust their location and orientation one by one. These trivialities but heavy works cause difficulty in focusing on core ideas. Second, digital 3D scene is a data structure with extremely complex structure, and no unified consensus has been given to its data structure. Thus, digital 3D scenes are difficult to obtain and apply in large quantities compared with traditional data structures, such as image, audio or text. To solve the problems mentioned above, some existing work attempted to allow computers to automatically synthesize 3D scenes or interactively help synthesize scenes. This survey summarizes these works. This survey also investigates and summarizes 3D digital scene synthesis methodologies from three aspects: automatic scene synthesis, scene synthesis with multichannel and rich input, and interactive scene synthesis. The automatic synthesis allows the computer to directly build an indoor layout based on few inputs, such as the contour of the room or the list of objects. Initially, the scene is synthesized by manually setting rules and applying optimizers in an attempt to satisfy these rules. However, the situation increases in complexity during the synthesis practice, and thus, listing all the rules becomes impossible. As the amount of digital indoor scene increases, more works are introducing machine learning methods to study priors from the digital scenes of the 3D indoor scene dataset. Most of these works organize the furniture with graph to apply algorithms on the graph to process with the information. The results outperform those of former works. Researchers have been applying deep learning (DL) technology, such as convolutional neural network and generative adversarial network, to indoor scene synthesis, which strongly improves the synthetic effect. The synthesis with multichannel and rich input aims to synthesize a digital indoor 3D scene with unformatted information, such as image, text, RGBD scan, point cloud, etc. These algorithms enable the convenient formation of digital copies of scenes in the real world because they are mainly recorded by photos or literal description. Compared with the works on automatic synthesis, the scene syntheses with multichannel and rich input do not require diversity or aesthetics. However, this type of synthesis needs an algorithm for the accurate reconstruction of the indoor scene in the digital world. The interactive synthesis aims to let users control the process of computer-aided scene synthesis. The related works can mainly be divided into two parts: active and passive interactive syntheses. Active interactive synthesis simultaneously provides designers with suggestions while they synthesizing a scene. If the scene syntheses program can analyze the designers’ interaction and recommend the options with higher possibility to be chosen, considerable workload can be saved. During passive interactive synthesis, the system learns the user’s personal preferences from aspects, such as their behavior trajectory, personal abilities, work habits, and height information and automatically synthesize scenes that match the user’s preferences as much as possible. Eventually, this survey will also summarize the application scenario and core technology of the papers and introduce other typical application scenarios and future challenges. We summarized and classified the recent studies on applications of digital 3D scene synthesis to form this survey. Digital 3D indoor scene synthesis has attained great progress and has a wide prospect. The automatic scene synthesis has generally achieved its goal, and more attention should focused on the proposal and resolution of sub-problems and related issues afterward. For scene synthesis with rich input, existing work has explored inputs, such as image, RGBD-scan, text, and sketches. In the future, more potential input forms, such as music and symbols, should be explored. For scene interactive synthesis, current interactions are still limited to mouse and keyboard inputs, and methods based on interactive scenes, such as virtual reality, augmented reality, and hybrid reality, still need to be explored. Scene synthesis algorithm has continuously broadened its application. Industries normally require the automatic synthesis of a large amount of indoor scenes. The synthetic efficiency can be strongly increased if a computer can provide suggestions regarding an object and its layout. In academic studies, 3D scenes are usually applied to form all kinds of dataset. By rendering a scene’s photos from various perspectives and channels, researchers can easily obtain images. However, the study on indoor scene synthesis is still facing a number of limitations. The dissimilarity of data structure causes difficulty in extending the work of others. Copyright issues prevent a scene dataset from being freely used by researchers and coders. In the future, indoor scene datasets with additional furniture model and room contour will serve as the basis of indoor scene synthesis studies. Numerous related fields, such as style consistency and automatic photography, are also showing progress.关键词:indoor scene;3D scene synthesis;3D scene interaction;3D scene intelligent editing;computer graphics602|1160|0更新时间:2024-09-18

摘要:During continuous development of computer graphics and human-computer interaction, digital three-dimensional (3D) scenes have played a vital role in the academy and industry. 3D scenes show graphical rendering results, supply the environment for applications, and provide a foundation for interaction. Despite being common occurrences, indoor scenes are important. To increase players’ gaming experience, indoor game designers require all kinds of aesthetic digital 3D scenes. In online scene decoration, designers also need to predesign the decoration and furniture layout preview by interacting with 3D scenes. In studies of virtual reality, we can synthesize virtual space from a digital 3D scene, such as the synthesis of training data for wheelchair users. However, a number of difficulties still need to be overcome to obtain ideal digital 3D scenes for the applications mentioned above. First, manually synthesized 3D scenes are usually time consuming and require considerable experience. Designers must add objects to a scene and adjust their location and orientation one by one. These trivialities but heavy works cause difficulty in focusing on core ideas. Second, digital 3D scene is a data structure with extremely complex structure, and no unified consensus has been given to its data structure. Thus, digital 3D scenes are difficult to obtain and apply in large quantities compared with traditional data structures, such as image, audio or text. To solve the problems mentioned above, some existing work attempted to allow computers to automatically synthesize 3D scenes or interactively help synthesize scenes. This survey summarizes these works. This survey also investigates and summarizes 3D digital scene synthesis methodologies from three aspects: automatic scene synthesis, scene synthesis with multichannel and rich input, and interactive scene synthesis. The automatic synthesis allows the computer to directly build an indoor layout based on few inputs, such as the contour of the room or the list of objects. Initially, the scene is synthesized by manually setting rules and applying optimizers in an attempt to satisfy these rules. However, the situation increases in complexity during the synthesis practice, and thus, listing all the rules becomes impossible. As the amount of digital indoor scene increases, more works are introducing machine learning methods to study priors from the digital scenes of the 3D indoor scene dataset. Most of these works organize the furniture with graph to apply algorithms on the graph to process with the information. The results outperform those of former works. Researchers have been applying deep learning (DL) technology, such as convolutional neural network and generative adversarial network, to indoor scene synthesis, which strongly improves the synthetic effect. The synthesis with multichannel and rich input aims to synthesize a digital indoor 3D scene with unformatted information, such as image, text, RGBD scan, point cloud, etc. These algorithms enable the convenient formation of digital copies of scenes in the real world because they are mainly recorded by photos or literal description. Compared with the works on automatic synthesis, the scene syntheses with multichannel and rich input do not require diversity or aesthetics. However, this type of synthesis needs an algorithm for the accurate reconstruction of the indoor scene in the digital world. The interactive synthesis aims to let users control the process of computer-aided scene synthesis. The related works can mainly be divided into two parts: active and passive interactive syntheses. Active interactive synthesis simultaneously provides designers with suggestions while they synthesizing a scene. If the scene syntheses program can analyze the designers’ interaction and recommend the options with higher possibility to be chosen, considerable workload can be saved. During passive interactive synthesis, the system learns the user’s personal preferences from aspects, such as their behavior trajectory, personal abilities, work habits, and height information and automatically synthesize scenes that match the user’s preferences as much as possible. Eventually, this survey will also summarize the application scenario and core technology of the papers and introduce other typical application scenarios and future challenges. We summarized and classified the recent studies on applications of digital 3D scene synthesis to form this survey. Digital 3D indoor scene synthesis has attained great progress and has a wide prospect. The automatic scene synthesis has generally achieved its goal, and more attention should focused on the proposal and resolution of sub-problems and related issues afterward. For scene synthesis with rich input, existing work has explored inputs, such as image, RGBD-scan, text, and sketches. In the future, more potential input forms, such as music and symbols, should be explored. For scene interactive synthesis, current interactions are still limited to mouse and keyboard inputs, and methods based on interactive scenes, such as virtual reality, augmented reality, and hybrid reality, still need to be explored. Scene synthesis algorithm has continuously broadened its application. Industries normally require the automatic synthesis of a large amount of indoor scenes. The synthetic efficiency can be strongly increased if a computer can provide suggestions regarding an object and its layout. In academic studies, 3D scenes are usually applied to form all kinds of dataset. By rendering a scene’s photos from various perspectives and channels, researchers can easily obtain images. However, the study on indoor scene synthesis is still facing a number of limitations. The dissimilarity of data structure causes difficulty in extending the work of others. Copyright issues prevent a scene dataset from being freely used by researchers and coders. In the future, indoor scene datasets with additional furniture model and room contour will serve as the basis of indoor scene synthesis studies. Numerous related fields, such as style consistency and automatic photography, are also showing progress.关键词:indoor scene;3D scene synthesis;3D scene interaction;3D scene intelligent editing;computer graphics602|1160|0更新时间:2024-09-18 -

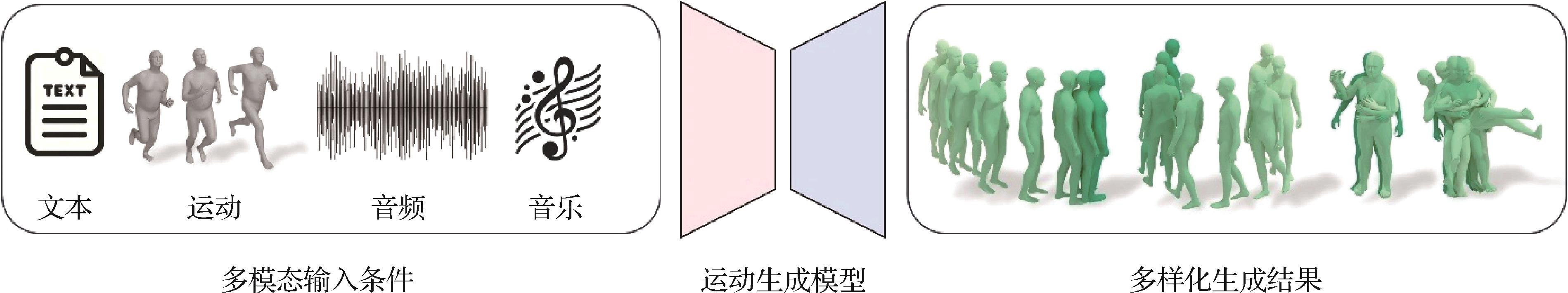

摘要:A multimodal digital human refers to a digital avatar that can perform multimodal cognition and interaction and should be able to think and behave like a human being. Substantial progress has been made in related technologies due to cross-fertilization and vibrant development in various fields, such as computer vision and natural language processing. This article discusses three major themes in the areas of computer graphics and computer vision: multimodal head animation, multimodal body animation, and multimodal portrait creation. The methodologies and representative works in these areas are also introduced. Under the theme of multimodal head animation, this work presents the research on speech- and expression-driven head models. Under the theme of multimodal body animation, the paper explores techniques involving recurrent neural network (RNN)-, Transformer-, and denoising diffusion probabilistic model (DDPM)-based body animation. The discussion of multimodal portrait creation covers portrait creation guided by visual-linguistic similarity, portrait creation guided by multimodal denoising diffusion model, and three-dimensional (3D) multimodal generative models on digital portraits. Further, this article provides an overview and classification of representative works in these research directions, summarizes existing methods, and points out potential future research directions. This article delves into key directions in the field of multimodal digital humans and covers multimodal head animation, multimodal body animation, and the construction of multimodal digital human representations. In the realm of multimodal head animation, we extensively explore two major tasks: expression- and speech-driven animation. For explicit and implicit parameterized models for expression-driven head animation, mesh surfaces and neural radiance fields (NeRF) are used to improve the rendering effects. Explicit models employ 3D morphable and linear models but encounter challenges, such as weak expressive capacity, nondifferentiable rendering, and difficult modeling of personalized features. By contrast, implicit models, especially those based on NeRF, demonstrate superior expressive capacity and realism. In the domain of speech-driven head animation, we review 2D and 3D methods, with a particular focus on the important advantages of NeRF technology in enhancing realism. 2D speech-driven head video generation utilizes techniques, such as generative adversarial networks and image transfer, but depends on 3D prior knowledge and structural characteristics. On the other hand, methods using NeRF, such as audio driven NeRF for talking head synthesis (AD-NeRF) and semantic-aware implicit neural audio-driven video portrait generation (SSP-NeRF), achieve end-to-end training with differentiable NeRF. This condition substantially improves rendering realism while still addressing challenges associated with slow training and inference speeds. Multimodal body animation focuses on speech-driven body animation, music-driven dance, and text-driven body animation. We focus on the importance of learning speech semantics and melody and discuss the applications of RNN, Transformer, and denoising diffusion models in this field. Transformer gradually replaces RNN as the mainstream model, which gains notable advantages in sequence signal learning through attention mechanisms. We also highlight the body animation generation based on denoising diffusion models, such as free-form language-based motion synthesis and editing (FLAME), motion diffusion model (MDM), and text-driven human motion generation with diffusion model (MotionDiffuse), and multimodal denoising networks under music and text conditions. In the realm of the construction of multimodal digital human representations, the article emphasizes virtual-image construction guided by visual-language similarity and denoising of diffusion models. In addition, the demand for large-scale, diverse datasets in digital human representation construction is addressed to foster powerful and universal generative models. The three key aspects of multimodal digital humans are systematically explored: head animation, body animation, and digital human representation construction. In summary, explicit head models, although simple, editable, and computationally efficient, lack expressive capacity, and face challenges in rendering, especially in modeling facial personalization and nonfacial regions. By contrast, implicit models, especially those using NeRF, demonstrate stronger modeling capabilities and realistic rendering effects. In the realm of speech-driven animation, NeRF-based solutions for head animation overcome the limitations of 2D speaker and 3D digital head animation and achieve more natural and realistic speaker videos. Regarding body animation models, Transformer gradually replaces RNN, whereas denoising diffusion models can be used to potentially address mapping challenges in multimodal body animation. Finally, digital human representation construction faces challenges, with visual-language similarity and denoising diffusion model guidance showing promising results. However, the difficulty lies in the direct construction of 3D multimodal virtual humans due to the lack of sufficient 3D virtual human datasets. This study comprehensively analyzes various issues and provides clear directions and challenges for future research. In conclusion, should focus on future developments in multimodal digital humans. Key directions include improvement of 3D modeling and real-time rendering accuracy, integration of speech-driven and facial expression synthesis, construction of large and diverse datasets, exploration of multimodal information fusion and cross-modal learning, and addressing ethical and social impacts. Implicit representation methods, such as neural volume rendering, are crucial for improved 3D modeling. Simultaneously, the construction of larger datasets poses a formidable challenge in the development of robust and universal generative models. Exploration of multimodal information fusion and cross-modal learning allows models to learn from diverse data sources and present a range of behaviors and expressions. Attention to ethical and social impacts, including digital identity and privacy, is crucial. Such research directions should serve as guide the field toward a comprehensive, realistic, and universal future, with profound influence on interactions in virtual spaces.关键词:virtual human modeling;multimodal character animation;multimodal generation and editing;neural rendering;generative models;neural implicit representation1014|2480|0更新时间:2024-09-18

摘要:A multimodal digital human refers to a digital avatar that can perform multimodal cognition and interaction and should be able to think and behave like a human being. Substantial progress has been made in related technologies due to cross-fertilization and vibrant development in various fields, such as computer vision and natural language processing. This article discusses three major themes in the areas of computer graphics and computer vision: multimodal head animation, multimodal body animation, and multimodal portrait creation. The methodologies and representative works in these areas are also introduced. Under the theme of multimodal head animation, this work presents the research on speech- and expression-driven head models. Under the theme of multimodal body animation, the paper explores techniques involving recurrent neural network (RNN)-, Transformer-, and denoising diffusion probabilistic model (DDPM)-based body animation. The discussion of multimodal portrait creation covers portrait creation guided by visual-linguistic similarity, portrait creation guided by multimodal denoising diffusion model, and three-dimensional (3D) multimodal generative models on digital portraits. Further, this article provides an overview and classification of representative works in these research directions, summarizes existing methods, and points out potential future research directions. This article delves into key directions in the field of multimodal digital humans and covers multimodal head animation, multimodal body animation, and the construction of multimodal digital human representations. In the realm of multimodal head animation, we extensively explore two major tasks: expression- and speech-driven animation. For explicit and implicit parameterized models for expression-driven head animation, mesh surfaces and neural radiance fields (NeRF) are used to improve the rendering effects. Explicit models employ 3D morphable and linear models but encounter challenges, such as weak expressive capacity, nondifferentiable rendering, and difficult modeling of personalized features. By contrast, implicit models, especially those based on NeRF, demonstrate superior expressive capacity and realism. In the domain of speech-driven head animation, we review 2D and 3D methods, with a particular focus on the important advantages of NeRF technology in enhancing realism. 2D speech-driven head video generation utilizes techniques, such as generative adversarial networks and image transfer, but depends on 3D prior knowledge and structural characteristics. On the other hand, methods using NeRF, such as audio driven NeRF for talking head synthesis (AD-NeRF) and semantic-aware implicit neural audio-driven video portrait generation (SSP-NeRF), achieve end-to-end training with differentiable NeRF. This condition substantially improves rendering realism while still addressing challenges associated with slow training and inference speeds. Multimodal body animation focuses on speech-driven body animation, music-driven dance, and text-driven body animation. We focus on the importance of learning speech semantics and melody and discuss the applications of RNN, Transformer, and denoising diffusion models in this field. Transformer gradually replaces RNN as the mainstream model, which gains notable advantages in sequence signal learning through attention mechanisms. We also highlight the body animation generation based on denoising diffusion models, such as free-form language-based motion synthesis and editing (FLAME), motion diffusion model (MDM), and text-driven human motion generation with diffusion model (MotionDiffuse), and multimodal denoising networks under music and text conditions. In the realm of the construction of multimodal digital human representations, the article emphasizes virtual-image construction guided by visual-language similarity and denoising of diffusion models. In addition, the demand for large-scale, diverse datasets in digital human representation construction is addressed to foster powerful and universal generative models. The three key aspects of multimodal digital humans are systematically explored: head animation, body animation, and digital human representation construction. In summary, explicit head models, although simple, editable, and computationally efficient, lack expressive capacity, and face challenges in rendering, especially in modeling facial personalization and nonfacial regions. By contrast, implicit models, especially those using NeRF, demonstrate stronger modeling capabilities and realistic rendering effects. In the realm of speech-driven animation, NeRF-based solutions for head animation overcome the limitations of 2D speaker and 3D digital head animation and achieve more natural and realistic speaker videos. Regarding body animation models, Transformer gradually replaces RNN, whereas denoising diffusion models can be used to potentially address mapping challenges in multimodal body animation. Finally, digital human representation construction faces challenges, with visual-language similarity and denoising diffusion model guidance showing promising results. However, the difficulty lies in the direct construction of 3D multimodal virtual humans due to the lack of sufficient 3D virtual human datasets. This study comprehensively analyzes various issues and provides clear directions and challenges for future research. In conclusion, should focus on future developments in multimodal digital humans. Key directions include improvement of 3D modeling and real-time rendering accuracy, integration of speech-driven and facial expression synthesis, construction of large and diverse datasets, exploration of multimodal information fusion and cross-modal learning, and addressing ethical and social impacts. Implicit representation methods, such as neural volume rendering, are crucial for improved 3D modeling. Simultaneously, the construction of larger datasets poses a formidable challenge in the development of robust and universal generative models. Exploration of multimodal information fusion and cross-modal learning allows models to learn from diverse data sources and present a range of behaviors and expressions. Attention to ethical and social impacts, including digital identity and privacy, is crucial. Such research directions should serve as guide the field toward a comprehensive, realistic, and universal future, with profound influence on interactions in virtual spaces.关键词:virtual human modeling;multimodal character animation;multimodal generation and editing;neural rendering;generative models;neural implicit representation1014|2480|0更新时间:2024-09-18 -

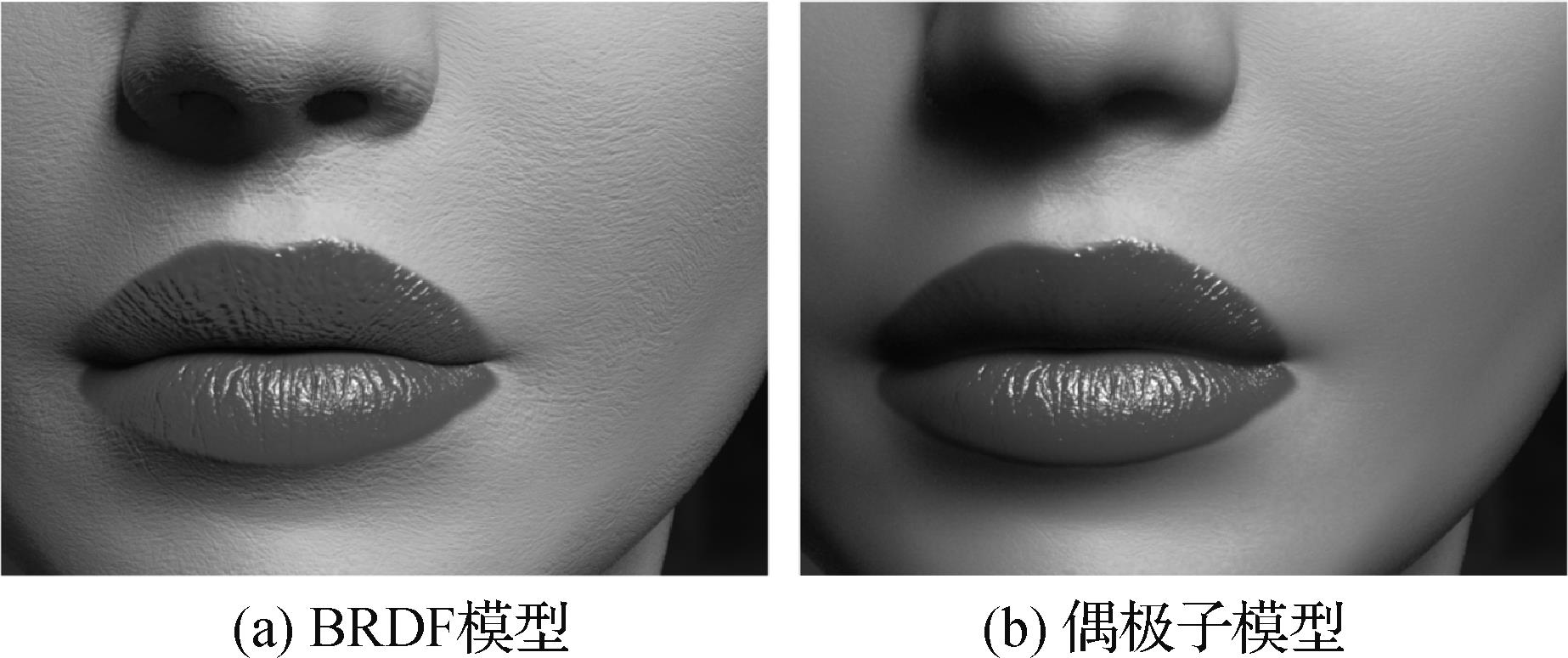

摘要:Digital human technology has attracted widespread attention in digital twins and metaverse fields. As an integral part of digital humans, people have started focusing on facial digitization and presentation. Consequently, the associated techniques find extensive applications in film, gaming, and virtual reality. A growing demand for facial realism rendering and high-quality facial inverse recovery has been observed. However, given the complex and multilayered material structure of the face, facial realism rendering presents a challenge. Furthermore, the composition of internal skin chemicals, such as melanin and hemoglobin, highly influences skin rendering. Factors, such as temperature and blood flow rate, may influence the skin’s appearance. The semitransparency of the skin introduces difficulties in the simulation of subsurface scattering effects, in addition to the wide presence of microscopic geometric features, such as pores and wrinkles on the face. All the issues mentioned above cause problems in the rendering domain and raise the demand for the quality of facial recovery. In addition, as a result of people’s exposure to real human faces in daily life, a heightened sensitivity to the texture and details of digital human faces has been observed, and this condition places greater demands on their realism and accuracy. Meanwhile, recovery of facial geometry and appearance is a crucial method for the construction of facial datasets. However, the high costs of acquisition equipment often constrain high-quality facial recovery, and most studies are limited by the acquisition speed for facial data, which result in the challenging capture of dynamic facial appearance. Lightweight recovery methods also encounter challenges related to the lack of facial material datasets. This paper presents an overview of recent advances in rendering and recovery of digital human faces. First, we introduce methods for realistic facial rendering and categorize them based on diffusion approximation and Monte Carlo approaches. Methods based on diffusion approximation, which focus on the efficient achievement of the semitransparency effect of the skin, are constrained by strict assumptions and suffer from certain limitations in precision. However, their simplified subsurface scattering models can render satisfactory images relatively quickly. Dynamic and interactive applications, such as games, often apply these methods. On the other hand, methods based on the Monte Carlo approach yield high precision and robust results via the meticulous and comprehensive simulation of the complex interactions between light and skin but require long computation times to converge. In applications, such as movies, where highly realistic visual effects are needed, they often become the preferred choice. We emphasized the development and challenges of methods based on diffusion approximation and divided them into improvements in the diffusion profiles, with real-time implementation of subsurface scattering, and hybrid methods combined with Monte Carlo techniques for detailed discussion. A recent Monte Carlo research aimed at improving the convergence rate for applications in facial rendering, including zero-variance random walks, next-event estimation, and path guiding. Second, we divided facial recovery work into two categories: high-precision recovery based on specialized acquisition equipment and low-precision recovery based on deep learning. This paper further categorizes the former based on the use of specialized lighting equipment, which distinguishes between active illumination and passive capture techniques, with provided detailed explanations for each category. Active illumination relies on professional lighting equipment, such as the application of gradient lighting to recover high-precision normal maps, to improve recovery quality. Conversely, passive capture methods are independent of professional lighting equipment, and any artificially provided lighting is limited to uniform illumination to reduce the interference of scene lighting on recovery and similar auxiliary roles. The exploration also focuses on low-precision facial recovery methods incorporating deep learning and classifies them into three categories, namely, geometric detail, texture mapping, and facial material information recoveries, to provide in-depth insights into each approach. We discuss a strategy for overcoming the limitations of geometric recovery based on parametric models, introduced refined parametric expressions of models, and predicted a range of maps, including displacement maps, that represent the model surface’s geometric details. For texture recovery, we explored the application of deep neural networks in generative tasks in the prediction of high-fidelity and personalized facial skin textures. Comprehensive reviews the various attempts to mitigate the ill-posed problem of separating reflectance information. In addition, we introduce the facial recovery work using multiview images and video sequences. These low-precision facial recovery methods can gain a wide application space given their flexibility and achieve improved recovery results with the rapid development of deep learning technology. Finally, the future trends in facial realism rendering and recovery methods based on the current state of research our outlined. In the realm of facial realism, existing works often represent the material properties of faces using texture maps and neglect the unique principles of skin coloration as a biological material. Furthermore, the rapid development of deep learning technology increases the importance of exploring of its integration with currently rendering techniques. In terms of inverse recovery, the lack of high-quality open-source datasets often poses limitations on data-based facial recovery methods. In addition, substantial improvement is needed in modeling and recovering details at the skin pore level. Combination of inverse recovery with text-based generative work also holds enormous potential and application scenarios. Hopefully, this paper can provide novice researchers in facial rendering and appearance recovery with valuable background knowledge and inspiration from harmony and ideas.关键词:facial realism rendering;subsurface scattering;facial inverse recovery;active illumination;passive capture;deep learning501|1074|1更新时间:2024-09-18

摘要:Digital human technology has attracted widespread attention in digital twins and metaverse fields. As an integral part of digital humans, people have started focusing on facial digitization and presentation. Consequently, the associated techniques find extensive applications in film, gaming, and virtual reality. A growing demand for facial realism rendering and high-quality facial inverse recovery has been observed. However, given the complex and multilayered material structure of the face, facial realism rendering presents a challenge. Furthermore, the composition of internal skin chemicals, such as melanin and hemoglobin, highly influences skin rendering. Factors, such as temperature and blood flow rate, may influence the skin’s appearance. The semitransparency of the skin introduces difficulties in the simulation of subsurface scattering effects, in addition to the wide presence of microscopic geometric features, such as pores and wrinkles on the face. All the issues mentioned above cause problems in the rendering domain and raise the demand for the quality of facial recovery. In addition, as a result of people’s exposure to real human faces in daily life, a heightened sensitivity to the texture and details of digital human faces has been observed, and this condition places greater demands on their realism and accuracy. Meanwhile, recovery of facial geometry and appearance is a crucial method for the construction of facial datasets. However, the high costs of acquisition equipment often constrain high-quality facial recovery, and most studies are limited by the acquisition speed for facial data, which result in the challenging capture of dynamic facial appearance. Lightweight recovery methods also encounter challenges related to the lack of facial material datasets. This paper presents an overview of recent advances in rendering and recovery of digital human faces. First, we introduce methods for realistic facial rendering and categorize them based on diffusion approximation and Monte Carlo approaches. Methods based on diffusion approximation, which focus on the efficient achievement of the semitransparency effect of the skin, are constrained by strict assumptions and suffer from certain limitations in precision. However, their simplified subsurface scattering models can render satisfactory images relatively quickly. Dynamic and interactive applications, such as games, often apply these methods. On the other hand, methods based on the Monte Carlo approach yield high precision and robust results via the meticulous and comprehensive simulation of the complex interactions between light and skin but require long computation times to converge. In applications, such as movies, where highly realistic visual effects are needed, they often become the preferred choice. We emphasized the development and challenges of methods based on diffusion approximation and divided them into improvements in the diffusion profiles, with real-time implementation of subsurface scattering, and hybrid methods combined with Monte Carlo techniques for detailed discussion. A recent Monte Carlo research aimed at improving the convergence rate for applications in facial rendering, including zero-variance random walks, next-event estimation, and path guiding. Second, we divided facial recovery work into two categories: high-precision recovery based on specialized acquisition equipment and low-precision recovery based on deep learning. This paper further categorizes the former based on the use of specialized lighting equipment, which distinguishes between active illumination and passive capture techniques, with provided detailed explanations for each category. Active illumination relies on professional lighting equipment, such as the application of gradient lighting to recover high-precision normal maps, to improve recovery quality. Conversely, passive capture methods are independent of professional lighting equipment, and any artificially provided lighting is limited to uniform illumination to reduce the interference of scene lighting on recovery and similar auxiliary roles. The exploration also focuses on low-precision facial recovery methods incorporating deep learning and classifies them into three categories, namely, geometric detail, texture mapping, and facial material information recoveries, to provide in-depth insights into each approach. We discuss a strategy for overcoming the limitations of geometric recovery based on parametric models, introduced refined parametric expressions of models, and predicted a range of maps, including displacement maps, that represent the model surface’s geometric details. For texture recovery, we explored the application of deep neural networks in generative tasks in the prediction of high-fidelity and personalized facial skin textures. Comprehensive reviews the various attempts to mitigate the ill-posed problem of separating reflectance information. In addition, we introduce the facial recovery work using multiview images and video sequences. These low-precision facial recovery methods can gain a wide application space given their flexibility and achieve improved recovery results with the rapid development of deep learning technology. Finally, the future trends in facial realism rendering and recovery methods based on the current state of research our outlined. In the realm of facial realism, existing works often represent the material properties of faces using texture maps and neglect the unique principles of skin coloration as a biological material. Furthermore, the rapid development of deep learning technology increases the importance of exploring of its integration with currently rendering techniques. In terms of inverse recovery, the lack of high-quality open-source datasets often poses limitations on data-based facial recovery methods. In addition, substantial improvement is needed in modeling and recovering details at the skin pore level. Combination of inverse recovery with text-based generative work also holds enormous potential and application scenarios. Hopefully, this paper can provide novice researchers in facial rendering and appearance recovery with valuable background knowledge and inspiration from harmony and ideas.关键词:facial realism rendering;subsurface scattering;facial inverse recovery;active illumination;passive capture;deep learning501|1074|1更新时间:2024-09-18 -

摘要:Three-dimensional (3D) digital human motion generation guided by multimodal information generates human motion under specific input conditions through data, such as text, audio, image, and video. This technology has a wide spectrum of applications and extensive economic and social benefits in the fields of film, animation, game production, metaverse, etc., and is one of the research hotspots in the fields of computer graphics and computer vision. However, such a task faces grand challenges, including the difficult representation and fusion of multimodal information, lack of high-quality datasets, poor quality of generated motion (such as jitter, penetration, and foot sliding), and low generation efficiency. Although various solutions have been proposed to address the aforementioned challenges, a mechanism for achieving efficient and high-quality 3D digital human motion generation based on the characteristics of distinct modal data remains an open problem to be solved. This paper comprehensively reviews 3D digital human motion generation and elaborates on related recent advances from the perspectives of parametrized 3D human models, human motion representation, motion generation techniques, motion analysis and editing, existing human motion datasets and evaluation metrics. Parametrized human models facilitate digital human modeling and motion generation through the provision of parameters associated with body shapes and postures and serve as key pillars of current digital human research and applications. This survey begins with an introduction to widely used parametrized 3D human body models, including shape completion and animation of people (SCAPE), skinned multi-person linear model (SMPL), SMPL-X, and SMPL-H, and their detailed comparison in terms of model representations and the parameters used to control body shapes, poses, and facial expressions. Human motion representation is a core issue in digital human motion generation. This work highlights the musculoskeletal model and classic skinning algorithms, including linear blending skinning and dual quaternion skinning, and their application in physics-based and data-driven methods to control human movements. We have also extensively studied approaches to existing multimodal information-guided human motion generation and categorized them into four major branches, i.e., generative adversarial network-, autoencoder-, variational autoencoder-, and diffusion model-based methods. Other works, such as generative motion matching, have also been mentioned and compared with data-driven methods. The survey summarizes existing schemes of human motion generation from the perspectives of methods and model architectures and presents a unified framework for the generation of digital human motion. A motion encoder extracts motion features from an original motion sequence and fuses them with the conditional characteristics extracted by the conditional encoder into latent variables or maps them to the latent space. This condition enables generative adversarial networks, autoencoders, variational autoencoders, or diffusion models to generate qualified human movements through a motion decoder. In addition, this paper surveys the current work on digital human motion analysis and editing, including motion clustering, motion prediction, motion in-betweening, and motion in-filling. Data-driven human motion generation and evaluation requires the use of a high-quality dataset. We collected publicly available human motion databases and classified them into various types based on two criteria. From the perspective of data type, existing databases can be classified into motion capture and video reconstruction datasets. Motion capture data sets rely on devices, such as motion capture systems, cameras, and inertial measurement units, to obtain real human movement data (i.e., ground truth). Meanwhile, the video reconstruction dataset was used to reconstruct a 3D human body model through estimation of body joints from motion videos and fitting them to a parametric human body model. From the perspective of task type, commonly used databases can be classified into text-, action-, and audio-motion datasets. The new datasets are usually obtained by processing motion capture and video reconstruction datasets based on specific tasks. A comprehensive briefing on the evaluation metrics of 3D human motion generation, including motion quality, motion diversity, and multimodality, consistency between inputs and outputs, and inference efficiency, is also provided. Apart from objective evaluation metrics, user study was employed to generate human motion quality and was discussed in this paper. To compare the performances of various generation methods used in digital human motion on public datasets, we selected a collection of the most representative work and carried out extensive experiments for comprehensive evaluation. Finally, the well-addressed and underexplored issues in this field were summarized, and several potential further research directions regarding datasets, the quality and diversity of generated motions, cross-modal information fusion, and generation efficiency were discussed. Specifically, existing datasets generally fail to meet the expectations concerning motion diversity and descriptions associated with motions, data distribution, and length of motion sequence. Future work should consider the development of a large-scale 3D human motion database to boost the efficacy and robustness of motion generation models. In addition, the quality of generated human motions, especially those with complex movement patterns, remains dissatisfactory. Physical constraints and postprocessing show promise in the integration into human motion generation frameworks to tackle issues. In addition, although human-motion generation methods can generate various motion sequences from multimodal information, such as text, audio, music, actions and keyframes, work on cross-modal human motion generation (e.g., generating a motion from a text description and a piece of background music) is scarcely reported. Investigation of such a task is worthy, especially in unlocking new opportunities in this area. In terms of the diversity of generated content, some researchers have explored harvesting rich, diverse, and stylized motions using variational autoencoders, diffusion models, and contrastive language-image pretraining neural networks. However, current studies mainly focus on the motion generation of a single human represented by an SMPL-like naked parameterized 3D model. Meanwhile, the generation and interaction of multiple dressed humans have huge untapped application potential but have not received sufficient attention. Finally, another nonnegligible issue is a mechanism for boosting motion generation efficiency and achieving a good balance between quality and inference overhead. Possible solutions to such a problem include lightweight parameterized human models, information-intensive training datasets, and improved or more advanced generative frameworks.关键词:3D avatar;motion generation;multimodal information;parametric human model;generative adversarial network (GAN);autoencoder (AE);variational autoencoder (VAE);diffusion model776|1293|1更新时间:2024-09-18

摘要:Three-dimensional (3D) digital human motion generation guided by multimodal information generates human motion under specific input conditions through data, such as text, audio, image, and video. This technology has a wide spectrum of applications and extensive economic and social benefits in the fields of film, animation, game production, metaverse, etc., and is one of the research hotspots in the fields of computer graphics and computer vision. However, such a task faces grand challenges, including the difficult representation and fusion of multimodal information, lack of high-quality datasets, poor quality of generated motion (such as jitter, penetration, and foot sliding), and low generation efficiency. Although various solutions have been proposed to address the aforementioned challenges, a mechanism for achieving efficient and high-quality 3D digital human motion generation based on the characteristics of distinct modal data remains an open problem to be solved. This paper comprehensively reviews 3D digital human motion generation and elaborates on related recent advances from the perspectives of parametrized 3D human models, human motion representation, motion generation techniques, motion analysis and editing, existing human motion datasets and evaluation metrics. Parametrized human models facilitate digital human modeling and motion generation through the provision of parameters associated with body shapes and postures and serve as key pillars of current digital human research and applications. This survey begins with an introduction to widely used parametrized 3D human body models, including shape completion and animation of people (SCAPE), skinned multi-person linear model (SMPL), SMPL-X, and SMPL-H, and their detailed comparison in terms of model representations and the parameters used to control body shapes, poses, and facial expressions. Human motion representation is a core issue in digital human motion generation. This work highlights the musculoskeletal model and classic skinning algorithms, including linear blending skinning and dual quaternion skinning, and their application in physics-based and data-driven methods to control human movements. We have also extensively studied approaches to existing multimodal information-guided human motion generation and categorized them into four major branches, i.e., generative adversarial network-, autoencoder-, variational autoencoder-, and diffusion model-based methods. Other works, such as generative motion matching, have also been mentioned and compared with data-driven methods. The survey summarizes existing schemes of human motion generation from the perspectives of methods and model architectures and presents a unified framework for the generation of digital human motion. A motion encoder extracts motion features from an original motion sequence and fuses them with the conditional characteristics extracted by the conditional encoder into latent variables or maps them to the latent space. This condition enables generative adversarial networks, autoencoders, variational autoencoders, or diffusion models to generate qualified human movements through a motion decoder. In addition, this paper surveys the current work on digital human motion analysis and editing, including motion clustering, motion prediction, motion in-betweening, and motion in-filling. Data-driven human motion generation and evaluation requires the use of a high-quality dataset. We collected publicly available human motion databases and classified them into various types based on two criteria. From the perspective of data type, existing databases can be classified into motion capture and video reconstruction datasets. Motion capture data sets rely on devices, such as motion capture systems, cameras, and inertial measurement units, to obtain real human movement data (i.e., ground truth). Meanwhile, the video reconstruction dataset was used to reconstruct a 3D human body model through estimation of body joints from motion videos and fitting them to a parametric human body model. From the perspective of task type, commonly used databases can be classified into text-, action-, and audio-motion datasets. The new datasets are usually obtained by processing motion capture and video reconstruction datasets based on specific tasks. A comprehensive briefing on the evaluation metrics of 3D human motion generation, including motion quality, motion diversity, and multimodality, consistency between inputs and outputs, and inference efficiency, is also provided. Apart from objective evaluation metrics, user study was employed to generate human motion quality and was discussed in this paper. To compare the performances of various generation methods used in digital human motion on public datasets, we selected a collection of the most representative work and carried out extensive experiments for comprehensive evaluation. Finally, the well-addressed and underexplored issues in this field were summarized, and several potential further research directions regarding datasets, the quality and diversity of generated motions, cross-modal information fusion, and generation efficiency were discussed. Specifically, existing datasets generally fail to meet the expectations concerning motion diversity and descriptions associated with motions, data distribution, and length of motion sequence. Future work should consider the development of a large-scale 3D human motion database to boost the efficacy and robustness of motion generation models. In addition, the quality of generated human motions, especially those with complex movement patterns, remains dissatisfactory. Physical constraints and postprocessing show promise in the integration into human motion generation frameworks to tackle issues. In addition, although human-motion generation methods can generate various motion sequences from multimodal information, such as text, audio, music, actions and keyframes, work on cross-modal human motion generation (e.g., generating a motion from a text description and a piece of background music) is scarcely reported. Investigation of such a task is worthy, especially in unlocking new opportunities in this area. In terms of the diversity of generated content, some researchers have explored harvesting rich, diverse, and stylized motions using variational autoencoders, diffusion models, and contrastive language-image pretraining neural networks. However, current studies mainly focus on the motion generation of a single human represented by an SMPL-like naked parameterized 3D model. Meanwhile, the generation and interaction of multiple dressed humans have huge untapped application potential but have not received sufficient attention. Finally, another nonnegligible issue is a mechanism for boosting motion generation efficiency and achieving a good balance between quality and inference overhead. Possible solutions to such a problem include lightweight parameterized human models, information-intensive training datasets, and improved or more advanced generative frameworks.关键词:3D avatar;motion generation;multimodal information;parametric human model;generative adversarial network (GAN);autoencoder (AE);variational autoencoder (VAE);diffusion model776|1293|1更新时间:2024-09-18 -

摘要:Three-dimensional human body reconstruction is a fundamental task in computer graphics and computer vision, with wide-ranging applications in virtual reality, human-computer interaction, motion analysis, and many other fields. This process is aimed at the accurate recovery of a three-dimensional model of the human body from given input data for further analysis and applications. However, high-fidelity reconstruction of clothed human bodies still presents difficulty given the diversity of human body shapes, variations in clothing, and complex human motion. Considerable progress has been attained in the field of three-dimensional human body reconstruction owing to the rapid development of deep learning methods. In deep learning techniques, multilayer neural network models are leveraged for the effective extraction of features from input data and learning of discriminative representations. In human body reconstruction, deep learning methods achieved remarkable advancements through revolutionized data feature extraction, implicit geometric representation, and neural rendering. This article aims to provide a comprehensive and accessible overview of three-dimensional human body reconstruction and elucidate the underlying methodologies, techniques, and algorithms used in this complex process. The article introduces first the classical framework of human body reconstruction, which comprises several key modules that collectively contribute to the reconstruction pipeline. These modules encompass various types of input data, including images, videos, and three-dimensional scans, that serve as fundamental building blocks in the reconstruction process. The representation of human body geometry is a vital aspect of human body reconstruction. Capturing the nuanced contours and shapes that define the human form presents a challenge. The article also explores various techniques for geometric representation, from mesh-based approaches to implicit representations and voxel grids. These techniques capture intricate details of the human body while ensuring that body shapes and poses remain realistic. The article also delves into the challenges associated with the reconstruction of clothed human bodies and examines the efficacy of parametric models in encapsulating the complexities of clothing deformations. Representation of human body motion is another crucial component of human body reconstruction. Realistic reconstructions require accurate modeling and capture of the dynamic nature of human movements. The article comprehensively explores various approaches to modeling human body motions, including articulated and non-rigid ones. Techniques, such as skeletal animation, motion capture, and spatiotemporal analysis, are discussed for the accurate and lifelike representations of human body motion. Parametric models also contribute to human body reconstruction because they provide a concise and expressive representation of the complete human body. The article further examines optimization-based methods, regression-based approaches, and popular parametric models, such as skinned multi-person linear (SMPL) and SMPL plus offsets, for human body reconstruction. These models allow the capture of realistic body shapes, poses, and clothing deformations. The article also discusses the advantages and limitations of these models and their applications in various domains. Deep learning techniques have had a transformative influence on three-dimensional human body reconstruction. The article explores the application of deep learning methodologies in data feature extraction, implicit geometric representation, and neural rendering and highlights the advancements achieved in leveraging convolutional neural networks, recurrent neural networks, and generative adversarial networks for various aspects of the reconstruction pipeline. These deep learning techniques considerably improve the accuracy and realism of reconstructed human bodies. Furthermore, publicly available datasets have been specifically curated for clothed human body reconstruction. These datasets serve as invaluable resources for benchmarking and evaluation of the performance of various reconstruction algorithms and enable researchers to compare and analyze the effectiveness of different techniques to foster advancements in the field. Then, a comprehensive survey of the rapid advancements in human body reconstruction algorithms over the past decade is presented. The survey highlights breakthroughs in dense view reconstruction, non-rigid structure from motion (NRSFM) methods, pixel-aligned implicit geometry reconstruction, generative models, and parameterized models. The discussion is also focused on the strengths, limitations, and potential applications of each approach to provide readers with holistic insights into the current state-of-the-art techniques. In conclusion, this article offers an in-depth and accessible exploration of three-dimensional human body reconstruction and covers a wide range of topics, such as data acquisition, geometry representation, motion modeling, and rendering of modules. The article not only summarizes existing methods but also provides insights into future research directions, such as the pursuit of high-fidelity reconstructions at reduced costs, accelerated reconstruction speeds, editable reconstruction outcomes, and the capability to reconstruct human bodies in natural environments. These research endeavors increase the accuracy, realism, and practicality of three-dimensional human body reconstruction systems and unlock new possibilities for various applications in the academia and industry.关键词:three-dimensional human body reconstruction;deep learning;parameterized model;implicit geometric representation;non-rigid structure-from-motion method;generative model451|1523|0更新时间:2024-09-18

摘要:Three-dimensional human body reconstruction is a fundamental task in computer graphics and computer vision, with wide-ranging applications in virtual reality, human-computer interaction, motion analysis, and many other fields. This process is aimed at the accurate recovery of a three-dimensional model of the human body from given input data for further analysis and applications. However, high-fidelity reconstruction of clothed human bodies still presents difficulty given the diversity of human body shapes, variations in clothing, and complex human motion. Considerable progress has been attained in the field of three-dimensional human body reconstruction owing to the rapid development of deep learning methods. In deep learning techniques, multilayer neural network models are leveraged for the effective extraction of features from input data and learning of discriminative representations. In human body reconstruction, deep learning methods achieved remarkable advancements through revolutionized data feature extraction, implicit geometric representation, and neural rendering. This article aims to provide a comprehensive and accessible overview of three-dimensional human body reconstruction and elucidate the underlying methodologies, techniques, and algorithms used in this complex process. The article introduces first the classical framework of human body reconstruction, which comprises several key modules that collectively contribute to the reconstruction pipeline. These modules encompass various types of input data, including images, videos, and three-dimensional scans, that serve as fundamental building blocks in the reconstruction process. The representation of human body geometry is a vital aspect of human body reconstruction. Capturing the nuanced contours and shapes that define the human form presents a challenge. The article also explores various techniques for geometric representation, from mesh-based approaches to implicit representations and voxel grids. These techniques capture intricate details of the human body while ensuring that body shapes and poses remain realistic. The article also delves into the challenges associated with the reconstruction of clothed human bodies and examines the efficacy of parametric models in encapsulating the complexities of clothing deformations. Representation of human body motion is another crucial component of human body reconstruction. Realistic reconstructions require accurate modeling and capture of the dynamic nature of human movements. The article comprehensively explores various approaches to modeling human body motions, including articulated and non-rigid ones. Techniques, such as skeletal animation, motion capture, and spatiotemporal analysis, are discussed for the accurate and lifelike representations of human body motion. Parametric models also contribute to human body reconstruction because they provide a concise and expressive representation of the complete human body. The article further examines optimization-based methods, regression-based approaches, and popular parametric models, such as skinned multi-person linear (SMPL) and SMPL plus offsets, for human body reconstruction. These models allow the capture of realistic body shapes, poses, and clothing deformations. The article also discusses the advantages and limitations of these models and their applications in various domains. Deep learning techniques have had a transformative influence on three-dimensional human body reconstruction. The article explores the application of deep learning methodologies in data feature extraction, implicit geometric representation, and neural rendering and highlights the advancements achieved in leveraging convolutional neural networks, recurrent neural networks, and generative adversarial networks for various aspects of the reconstruction pipeline. These deep learning techniques considerably improve the accuracy and realism of reconstructed human bodies. Furthermore, publicly available datasets have been specifically curated for clothed human body reconstruction. These datasets serve as invaluable resources for benchmarking and evaluation of the performance of various reconstruction algorithms and enable researchers to compare and analyze the effectiveness of different techniques to foster advancements in the field. Then, a comprehensive survey of the rapid advancements in human body reconstruction algorithms over the past decade is presented. The survey highlights breakthroughs in dense view reconstruction, non-rigid structure from motion (NRSFM) methods, pixel-aligned implicit geometry reconstruction, generative models, and parameterized models. The discussion is also focused on the strengths, limitations, and potential applications of each approach to provide readers with holistic insights into the current state-of-the-art techniques. In conclusion, this article offers an in-depth and accessible exploration of three-dimensional human body reconstruction and covers a wide range of topics, such as data acquisition, geometry representation, motion modeling, and rendering of modules. The article not only summarizes existing methods but also provides insights into future research directions, such as the pursuit of high-fidelity reconstructions at reduced costs, accelerated reconstruction speeds, editable reconstruction outcomes, and the capability to reconstruct human bodies in natural environments. These research endeavors increase the accuracy, realism, and practicality of three-dimensional human body reconstruction systems and unlock new possibilities for various applications in the academia and industry.关键词:three-dimensional human body reconstruction;deep learning;parameterized model;implicit geometric representation;non-rigid structure-from-motion method;generative model451|1523|0更新时间:2024-09-18 -