最新刊期

卷 29 , 期 10 , 2024

-

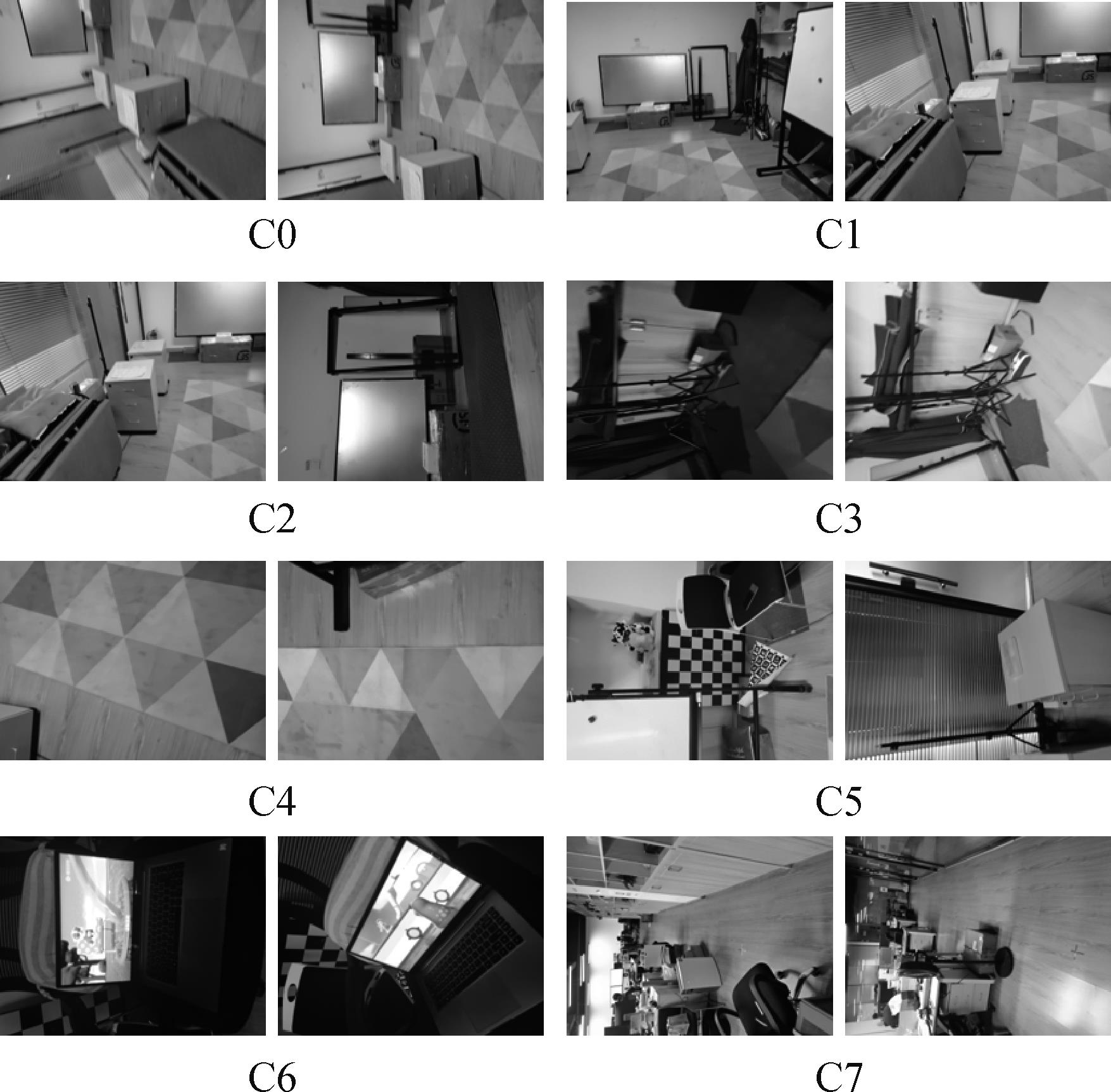

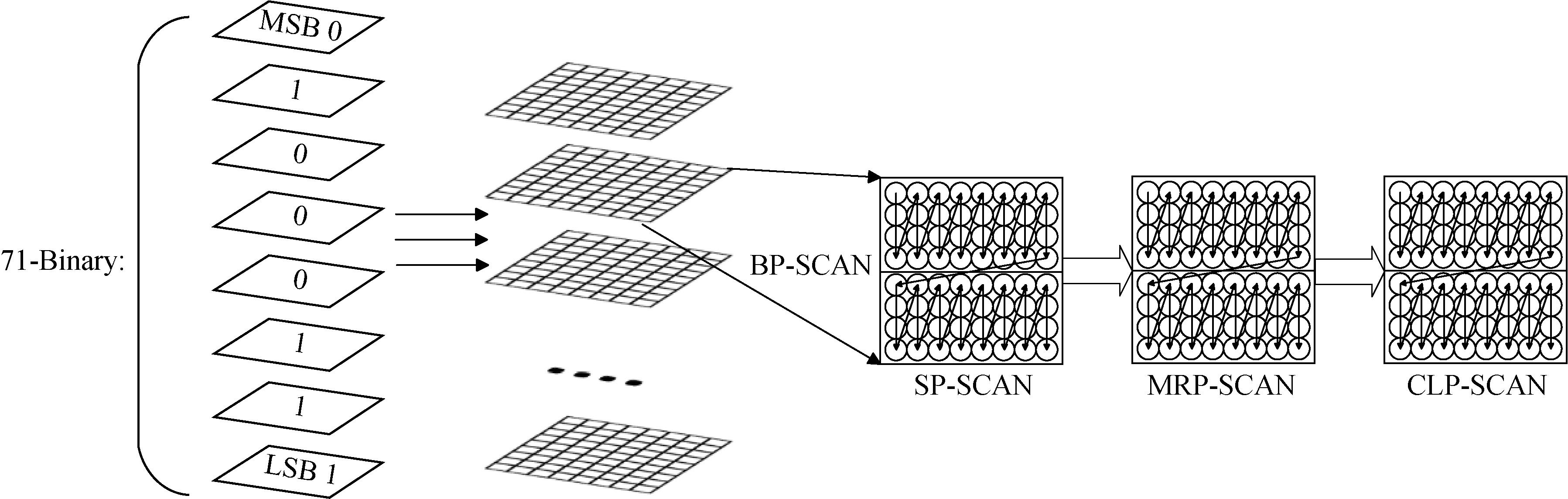

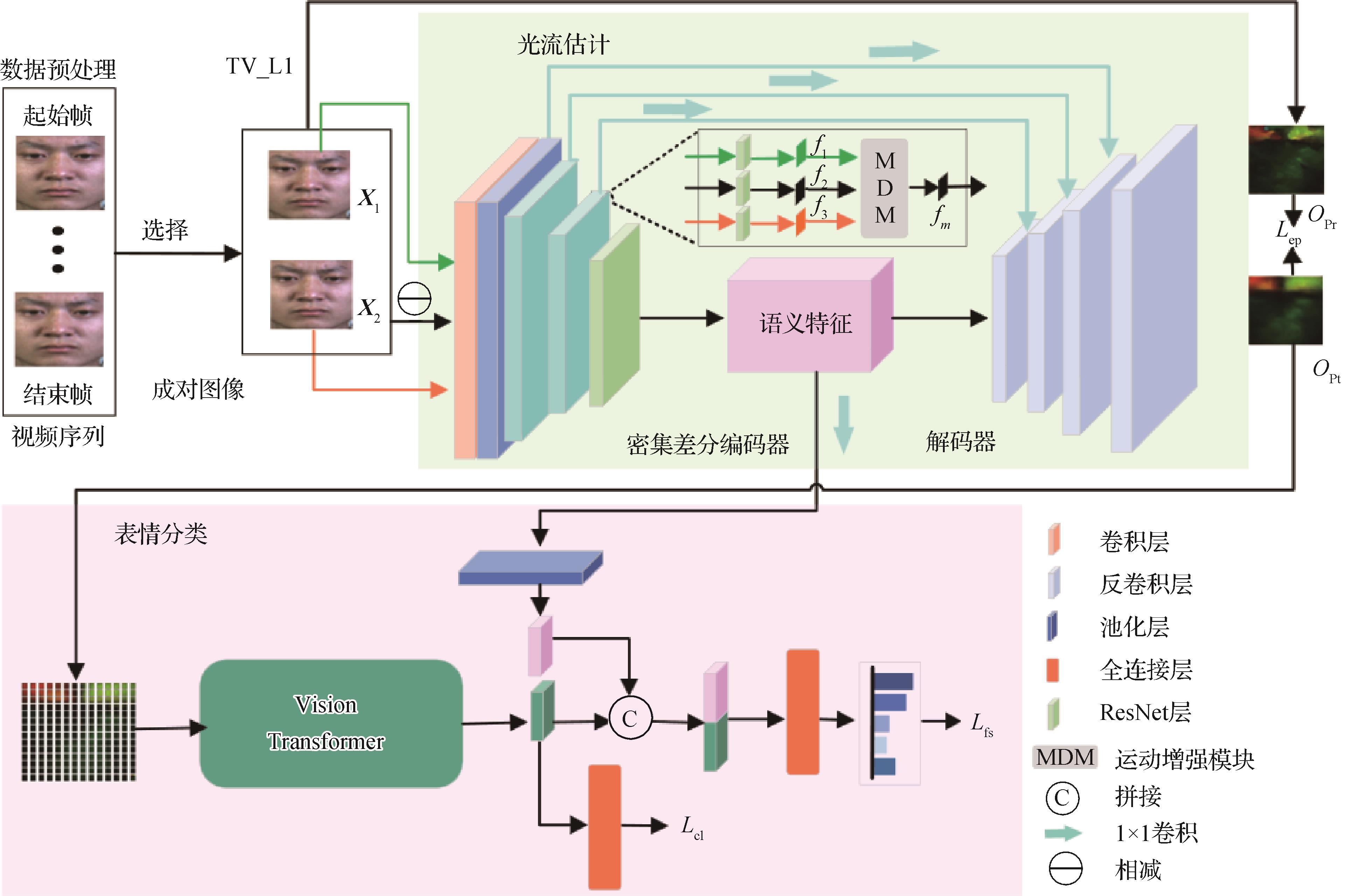

摘要:Monocular visual-inertial simultaneous localization and mapping (VI-SLAM) is an important research topic in computer vision and robotics. It aims to estimate the pose (i.e., the position and orientation) of the device in real-time using a monocular camera with an inertial sensor while constructing the map of the environment. With the rapid development of various fields, such as augmented/virtual reality (AR/VR), robotics, and autonomous driving, monocular VI-SLAM has received widespread attention due to its advantages, including low hardware cost and no requirement for an external environment setup, among others. Over the past decade or so, monocular VI-SLAM has made significant progress and spawned many excellent methods and systems. However, because of the complexity of real-world scenarios, different methods have also shown distinct limitations. Although some works have reviewed and evaluated VI-SLAM methods, most of them only focus on classic methods, which cannot fully reflect the latest development status of VI-SLAM technology. Based on optimization type, VI-SLAM can be divided into filtering- and optimization-based methods. Filtering-based methods use filters to fuse observations from visual and inertial sensors, continuously updating the device’s state information for localization and mapping. Additionally, depending on whether visual data association (or feature matching) is performed separately, existing methods can be divided into indirect methods (or feature-based methods) and direct methods. Furthermore, with the development and widespread application of deep learning technology, researchers have started to incorporate deep learning methods into VI-SLAM to enhance robustness in extreme conditions or perform dense reconstruction. This paper first elaborates on the basic principles of monocular VI-SLAM methods and then classifies them analytically into direct and filtering-, optimization-, feature-, and deep learning-based methods. However, most of the existing datasets and benchmarks are focused on applications like autonomous driving and drones, mainly evaluating pose accuracy. Relatively few datasets have been specifically designed for AR.For a more comprehensive comparison of the advantages and disadvantages of different methods, we select three public datasets to quantitatively evaluate representative monocular VI-SLAM methods from multiple dimensions: the widely used EuRoC dataset, the ZJU-Sensetime dataset suitable for mobile platform AR applications, and the low cost and scalable frarnework to build localization benchmark(LSFB) dataset aimed at large-scale AR scenarios. Then, we supplemented the ZJU-Sensetime dataset with a more challenging set of sequences called sequences C to enhance the variety of data types and evaluation dimensions. This extended dataset is designed to evaluate the robustness of algorithms under extreme conditions such as pure rotation, planar motion, lighting changes, and dynamic scenes. Specifically, sequences C comprise eight sequences, labeled C0–C7. In the C0 sequence, the handheld device moves around a room, performing multiple pure rotational motions. The C1 sequence involves the device mounted on a stabilized gimbal and moves freely. In the C2 sequence, the device moves in a planar motion, maintaining a constant height. The C3 sequence includes turning lights on and off during recording. In the C4 sequence, the device overlooks the floor while moving. The C5 sequence captures the exterior wall with significant parallax and minimal co-visibility, while the C6 sequence involves viewing a monitor during recording, with slight movement and changing screen content. Finally, the C7 sequence involves long-distance recording. On the EuRoC dataset, both filtering- and optimization-based VI-SLAM methods achieved good accuracy. Multi-state constraint Kalman filter(MSCKF), an early filtering-based system, showed lower accuracy and struggled with some sequences. Some methods such OpenVINS and RNIN-VIO enhanced accuracy by adding new features and deep learning-based algorithms, respectively. OKVIS, an early optimization-based system, completed all sequences but with lower accuracy. Other methods such as VINS-Mono, RD-VIO, and ORB-SLAM3 achieved significant optimizations, improving initialization, robustness, and overall accuracy. Direct methods such as DM-VIO and SVO-Pro, which we extended from DSO and SVO, respectively, showed significant improvements in accuracy through techniques like delayed marginalization and efficient use of texture information. Adaptive VIO, which is based on deep learning, achieved high accuracy by continuously updating through online learning, demonstrating adaptability to new scenarios. Furthermore, on the ZJU-Sensetime dataset, the comparison results of different methods are largely similar to those in EuRoC. The main difference is that the accuracy of the direct method DM-VIO significantly decreases when using a rolling shutter camera, whereas the semidirect method SVO-Pro has a slightly better performance. Feature-based methods do not show a significant drop in accuracy, but the smaller field of view (FoV) found in phone cameras reduces the robustness of ORB-SLAM3, Kimera, and MSCKF. Additionally, ORB-SLAM3 has high tracking accuracy but a lower completeness, while Kimera and MSCKF show increased tracking errors. HybVIO, RNIN-VIO, and RD-VIO have the highest accuracy, while HybVIO slightly outperforms the two others. The deep learning-based Adaptive VIO also shows a significant drop in accuracy and struggles to complete sequences B and C, indicating generalization and robustness issues in complex scenarios. On the LSFB dataset, the comparison results are consistent with those in small-scale datasets. The methods with the highest accuracy in small scenes, such as RNIN-VIO, HybVIO, and RD-VIO, continue to show high accuracy in large scenes. In particular, RNIN-VIO demonstrates even more significant accuracy advantages in large scenes. In large-scale scenes, many feature points are distant and lack parallax, leading to rapid accumulation of errors, especially in methods that are heavily rely on visual constraints. The neural inertial network-based RNIN-VIO can better maximize IMU observations, reducing dependence on visual data. The VINS-Mono also shows significant advantages in large scenes, as its sliding window optimization facilitating the early inclusion of small-parallax feature points, effectively controlling error accumulation. In contrast, ORB-SLAM3, which relies on local maps, requires sufficient parallax before adding feature points to the local map, which can lead to insufficient visual constraints in distant environments and ultimately cause error accumulation and even tracking loss. The experimental results also show that optimization-based or combined filtering–optimization methods generally outperform filtering-based methods in terms of tracking accuracy and robustness. At the same time, direct/semidirect methods perform well when shooting with a global shutter camera, but are prone to error accumulation, especially in large scenes when affected by rolling shutter and light changes. Combining deep learning can improve robustness in extreme situations. Finally, the development trend of SLAM is discussed and prospected in this work based on three research hotspots: combining deep learning with V-SLAM/VI-SLAM, multisensor fusion, and end-cloud collaboration.关键词:visual-inertial SLAM(VI-SLAM);augmented reality(AR);visual-inertial dataset;multiple-view geometry;multi-sensor fusion886|1690|0更新时间:2024-10-23

摘要:Monocular visual-inertial simultaneous localization and mapping (VI-SLAM) is an important research topic in computer vision and robotics. It aims to estimate the pose (i.e., the position and orientation) of the device in real-time using a monocular camera with an inertial sensor while constructing the map of the environment. With the rapid development of various fields, such as augmented/virtual reality (AR/VR), robotics, and autonomous driving, monocular VI-SLAM has received widespread attention due to its advantages, including low hardware cost and no requirement for an external environment setup, among others. Over the past decade or so, monocular VI-SLAM has made significant progress and spawned many excellent methods and systems. However, because of the complexity of real-world scenarios, different methods have also shown distinct limitations. Although some works have reviewed and evaluated VI-SLAM methods, most of them only focus on classic methods, which cannot fully reflect the latest development status of VI-SLAM technology. Based on optimization type, VI-SLAM can be divided into filtering- and optimization-based methods. Filtering-based methods use filters to fuse observations from visual and inertial sensors, continuously updating the device’s state information for localization and mapping. Additionally, depending on whether visual data association (or feature matching) is performed separately, existing methods can be divided into indirect methods (or feature-based methods) and direct methods. Furthermore, with the development and widespread application of deep learning technology, researchers have started to incorporate deep learning methods into VI-SLAM to enhance robustness in extreme conditions or perform dense reconstruction. This paper first elaborates on the basic principles of monocular VI-SLAM methods and then classifies them analytically into direct and filtering-, optimization-, feature-, and deep learning-based methods. However, most of the existing datasets and benchmarks are focused on applications like autonomous driving and drones, mainly evaluating pose accuracy. Relatively few datasets have been specifically designed for AR.For a more comprehensive comparison of the advantages and disadvantages of different methods, we select three public datasets to quantitatively evaluate representative monocular VI-SLAM methods from multiple dimensions: the widely used EuRoC dataset, the ZJU-Sensetime dataset suitable for mobile platform AR applications, and the low cost and scalable frarnework to build localization benchmark(LSFB) dataset aimed at large-scale AR scenarios. Then, we supplemented the ZJU-Sensetime dataset with a more challenging set of sequences called sequences C to enhance the variety of data types and evaluation dimensions. This extended dataset is designed to evaluate the robustness of algorithms under extreme conditions such as pure rotation, planar motion, lighting changes, and dynamic scenes. Specifically, sequences C comprise eight sequences, labeled C0–C7. In the C0 sequence, the handheld device moves around a room, performing multiple pure rotational motions. The C1 sequence involves the device mounted on a stabilized gimbal and moves freely. In the C2 sequence, the device moves in a planar motion, maintaining a constant height. The C3 sequence includes turning lights on and off during recording. In the C4 sequence, the device overlooks the floor while moving. The C5 sequence captures the exterior wall with significant parallax and minimal co-visibility, while the C6 sequence involves viewing a monitor during recording, with slight movement and changing screen content. Finally, the C7 sequence involves long-distance recording. On the EuRoC dataset, both filtering- and optimization-based VI-SLAM methods achieved good accuracy. Multi-state constraint Kalman filter(MSCKF), an early filtering-based system, showed lower accuracy and struggled with some sequences. Some methods such OpenVINS and RNIN-VIO enhanced accuracy by adding new features and deep learning-based algorithms, respectively. OKVIS, an early optimization-based system, completed all sequences but with lower accuracy. Other methods such as VINS-Mono, RD-VIO, and ORB-SLAM3 achieved significant optimizations, improving initialization, robustness, and overall accuracy. Direct methods such as DM-VIO and SVO-Pro, which we extended from DSO and SVO, respectively, showed significant improvements in accuracy through techniques like delayed marginalization and efficient use of texture information. Adaptive VIO, which is based on deep learning, achieved high accuracy by continuously updating through online learning, demonstrating adaptability to new scenarios. Furthermore, on the ZJU-Sensetime dataset, the comparison results of different methods are largely similar to those in EuRoC. The main difference is that the accuracy of the direct method DM-VIO significantly decreases when using a rolling shutter camera, whereas the semidirect method SVO-Pro has a slightly better performance. Feature-based methods do not show a significant drop in accuracy, but the smaller field of view (FoV) found in phone cameras reduces the robustness of ORB-SLAM3, Kimera, and MSCKF. Additionally, ORB-SLAM3 has high tracking accuracy but a lower completeness, while Kimera and MSCKF show increased tracking errors. HybVIO, RNIN-VIO, and RD-VIO have the highest accuracy, while HybVIO slightly outperforms the two others. The deep learning-based Adaptive VIO also shows a significant drop in accuracy and struggles to complete sequences B and C, indicating generalization and robustness issues in complex scenarios. On the LSFB dataset, the comparison results are consistent with those in small-scale datasets. The methods with the highest accuracy in small scenes, such as RNIN-VIO, HybVIO, and RD-VIO, continue to show high accuracy in large scenes. In particular, RNIN-VIO demonstrates even more significant accuracy advantages in large scenes. In large-scale scenes, many feature points are distant and lack parallax, leading to rapid accumulation of errors, especially in methods that are heavily rely on visual constraints. The neural inertial network-based RNIN-VIO can better maximize IMU observations, reducing dependence on visual data. The VINS-Mono also shows significant advantages in large scenes, as its sliding window optimization facilitating the early inclusion of small-parallax feature points, effectively controlling error accumulation. In contrast, ORB-SLAM3, which relies on local maps, requires sufficient parallax before adding feature points to the local map, which can lead to insufficient visual constraints in distant environments and ultimately cause error accumulation and even tracking loss. The experimental results also show that optimization-based or combined filtering–optimization methods generally outperform filtering-based methods in terms of tracking accuracy and robustness. At the same time, direct/semidirect methods perform well when shooting with a global shutter camera, but are prone to error accumulation, especially in large scenes when affected by rolling shutter and light changes. Combining deep learning can improve robustness in extreme situations. Finally, the development trend of SLAM is discussed and prospected in this work based on three research hotspots: combining deep learning with V-SLAM/VI-SLAM, multisensor fusion, and end-cloud collaboration.关键词:visual-inertial SLAM(VI-SLAM);augmented reality(AR);visual-inertial dataset;multiple-view geometry;multi-sensor fusion886|1690|0更新时间:2024-10-23 -

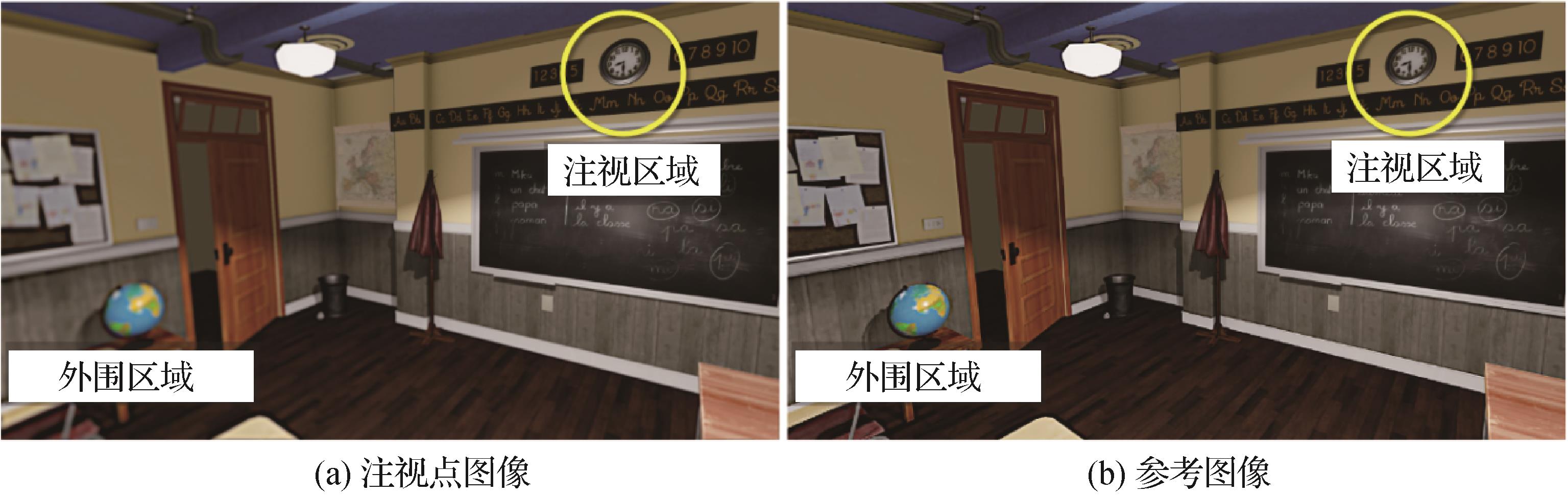

摘要:With the rapid development of software technology and the continuous updating of hardware devices, augmented reality technology has gradually matured and been widely used in various fields, such as military, medical, gaming, industry, and education. Accurate depth perception is crucial in augmented reality, and simply overlaying virtual objects onto video sequences no longer meets user demands. In many augmented reality scenarios, users need to interact with virtual objects constantly, and without accurate depth perception, augmented reality can hardly provide a seamless interactive experience. Virtual-real occlusion handling is one of the key factors to achieve this goal. It presents a realistic virtual-real fusion effect by establishing accurate occlusion relationship, so that the fusion scene can correctly reflect the spatial position relationship between virtual and real objects, thereby enhancing the user’s sense of immersion and realism. This paper first introduces the related background, concepts, and overall processing flow of virtual-real occlusion handling. Existing occlusion handling methods can be divided into three categories: depth based, image analysis based, and model based. By analyzing the distinct characteristics of rigid and nonrigid objects, we summarize the specific principles, representative research works, and the applicability to rigid and nonrigid objects of these three virtual-real occlusion handling methods. The shape and size of rigid objects remain unchanged after motion or force, and they mainly use two types virtual-real occlusion handling methods: depth based and model based. The depth-based methods have evolved from the early use of stereo vision algorithms to the use of depth sensors for indoor depth image acquisition and further to the prediction of moving objects’ depth by using outdoor map data, as well as the densification of sparse simultaneous localization and mapping depth in monocular mobile augmented reality. Further research should focus on the depth image restoration algorithms and the balance between real-time performance and accuracy of scene-dense depth computation algorithms in mobile augmented reality. The model-based methods have developed from constructing partial 3D models by segmenting object contours in video key frames or directly using modeling software to achieving dense reconstruction of indoor static scenes using depth images and constructing approximate 3D models of outdoor scenes by incorporating geographic spatial information. Model-based methods already have a relative well-established processing flow, but further exploration is still needed on how to enhance real-time performance while ensuring tracking and occlusion accuracy. In contrast to rigid objects, nonrigid objects are prone to irregular deformations during movement. Typical nonrigid objects in augmented reality are user’s hands or the bodies of other users. For nonrigid objects, related research has been conducted on all three types virtual-real occlusion handling methods. Depth-based methods focus on the depth image restoration algorithms. These algorithms aim to repair depth image noise while ensuring precise alignment between depth and RGB image, especially in extreme scenarios, such as when foreground and background have similar colors. Image analysis-based methods focus on foreground segmentation algorithms and occlusion relationship judgment means. Foreground segmentation algorithms have evolved from the early color models and background subtraction techniques to the deep learning-based segmentation networks. Moreover, the occlusion relationship judgment means have transitioned from user-specified to incorporating depth information to assist judgment. The key challenge in image analysis-based methods lies in overcoming the irregular deformations of nonrigid objects, obtaining accurate foreground segmentation masks and tracking continuously. Model-based methods initially used LeapMotion combined with customized hand parameters to fit hand model, but now using deep learning networks to reconstruct hand models has become mainstream. Model-based methods should improve the speed and accuracy of hand reconstruction. On the basis of summarizing the virtual-real occlusion handling methods for rigid and nonrigid objects, we also conduct a comparative analysis of existing methods from various perspectives including real-time performance, automation level, whether to support perspective or scene changes, and application scope. In addition, we summarize the specific workflows, difficulties and limitations of the three virtual-real occlusion handling methods. Finally, aiming at the problems existing in related research, we explore the challenges faced by current virtual-real occlusion technology and propose potential future research directions: 1) Occlusion handling for moving nonrigid objects. Obtaining accurate depth or 3D models of nonrigid objects is the key to solving this problem. The accuracy and robustness of hand segmentation must be further improved. Additionally, the use of simpler monocular depth estimation and rapid reconstruction of nonrigid objects other than user’s hands need to be further explored. 2) Occlusion handling for outdoor dynamic scenes. Existing depth cameras have limited working range, which makes them ineffective in outdoor scenes. Sparse 3D models obtained from geographic information systems have low precision and cannot be applied to dynamic objects, such as automobiles. Therefore, further research on dynamic objects’ virtual-real occlusion handling in large outdoor scenes is needed. 3) Registration algorithms for depth and RGB images. The accuracy of edge alignment between depth and color images must be improved without consuming too much computing resources.关键词:augmented reality;virtual-real occlusion handling;rigid and non-rigid bodies;depth image restoration;foreground extraction602|875|0更新时间:2024-10-23

摘要:With the rapid development of software technology and the continuous updating of hardware devices, augmented reality technology has gradually matured and been widely used in various fields, such as military, medical, gaming, industry, and education. Accurate depth perception is crucial in augmented reality, and simply overlaying virtual objects onto video sequences no longer meets user demands. In many augmented reality scenarios, users need to interact with virtual objects constantly, and without accurate depth perception, augmented reality can hardly provide a seamless interactive experience. Virtual-real occlusion handling is one of the key factors to achieve this goal. It presents a realistic virtual-real fusion effect by establishing accurate occlusion relationship, so that the fusion scene can correctly reflect the spatial position relationship between virtual and real objects, thereby enhancing the user’s sense of immersion and realism. This paper first introduces the related background, concepts, and overall processing flow of virtual-real occlusion handling. Existing occlusion handling methods can be divided into three categories: depth based, image analysis based, and model based. By analyzing the distinct characteristics of rigid and nonrigid objects, we summarize the specific principles, representative research works, and the applicability to rigid and nonrigid objects of these three virtual-real occlusion handling methods. The shape and size of rigid objects remain unchanged after motion or force, and they mainly use two types virtual-real occlusion handling methods: depth based and model based. The depth-based methods have evolved from the early use of stereo vision algorithms to the use of depth sensors for indoor depth image acquisition and further to the prediction of moving objects’ depth by using outdoor map data, as well as the densification of sparse simultaneous localization and mapping depth in monocular mobile augmented reality. Further research should focus on the depth image restoration algorithms and the balance between real-time performance and accuracy of scene-dense depth computation algorithms in mobile augmented reality. The model-based methods have developed from constructing partial 3D models by segmenting object contours in video key frames or directly using modeling software to achieving dense reconstruction of indoor static scenes using depth images and constructing approximate 3D models of outdoor scenes by incorporating geographic spatial information. Model-based methods already have a relative well-established processing flow, but further exploration is still needed on how to enhance real-time performance while ensuring tracking and occlusion accuracy. In contrast to rigid objects, nonrigid objects are prone to irregular deformations during movement. Typical nonrigid objects in augmented reality are user’s hands or the bodies of other users. For nonrigid objects, related research has been conducted on all three types virtual-real occlusion handling methods. Depth-based methods focus on the depth image restoration algorithms. These algorithms aim to repair depth image noise while ensuring precise alignment between depth and RGB image, especially in extreme scenarios, such as when foreground and background have similar colors. Image analysis-based methods focus on foreground segmentation algorithms and occlusion relationship judgment means. Foreground segmentation algorithms have evolved from the early color models and background subtraction techniques to the deep learning-based segmentation networks. Moreover, the occlusion relationship judgment means have transitioned from user-specified to incorporating depth information to assist judgment. The key challenge in image analysis-based methods lies in overcoming the irregular deformations of nonrigid objects, obtaining accurate foreground segmentation masks and tracking continuously. Model-based methods initially used LeapMotion combined with customized hand parameters to fit hand model, but now using deep learning networks to reconstruct hand models has become mainstream. Model-based methods should improve the speed and accuracy of hand reconstruction. On the basis of summarizing the virtual-real occlusion handling methods for rigid and nonrigid objects, we also conduct a comparative analysis of existing methods from various perspectives including real-time performance, automation level, whether to support perspective or scene changes, and application scope. In addition, we summarize the specific workflows, difficulties and limitations of the three virtual-real occlusion handling methods. Finally, aiming at the problems existing in related research, we explore the challenges faced by current virtual-real occlusion technology and propose potential future research directions: 1) Occlusion handling for moving nonrigid objects. Obtaining accurate depth or 3D models of nonrigid objects is the key to solving this problem. The accuracy and robustness of hand segmentation must be further improved. Additionally, the use of simpler monocular depth estimation and rapid reconstruction of nonrigid objects other than user’s hands need to be further explored. 2) Occlusion handling for outdoor dynamic scenes. Existing depth cameras have limited working range, which makes them ineffective in outdoor scenes. Sparse 3D models obtained from geographic information systems have low precision and cannot be applied to dynamic objects, such as automobiles. Therefore, further research on dynamic objects’ virtual-real occlusion handling in large outdoor scenes is needed. 3) Registration algorithms for depth and RGB images. The accuracy of edge alignment between depth and color images must be improved without consuming too much computing resources.关键词:augmented reality;virtual-real occlusion handling;rigid and non-rigid bodies;depth image restoration;foreground extraction602|875|0更新时间:2024-10-23 -

摘要:Visual-based localization determines the camera translation and orientation of an image observation with respect to a prebuilt 3D-based representation of the environment. It is an essential technology that empowers the intelligent interactions between computing facilities and the real world. Compared with alternative positioning systems beyond, the capability to estimate the accurate 6DOF camera pose, along with the flexibility and frugality in deployment, positions visual-based localization technology as a cornerstone of many applications, ranging from autonomous vehicles to augmented and mixed reality. As a long-standing problem in computer vision, visual localization has made exceeding progress over the past decades. A primary branch of prior arts relies on a preconstructed 3D map obtained by structure-from-motion techniques. Such 3D maps, a.k.a. SfM point clouds, store 3D points and per-point visual features. To estimate the camera pose, these methods typically establish correspondences between 2D keypoints detected in the query image and 3D points of the SfM point cloud through descriptor matching. The 6DOF camera pose of the query image is then recovered from these 2D-3D matches by leveraging geometric principles introduced by photogrammetry. Despite delivering fairly sound and reliable performance, such a scheme often has to consume several gigabytes of storage for just a single scene, which would result in computationally expensive overhead and prohibitive memory footprint for large-scale applications and resource-intensive platforms. Furthermore, it suffers from other drawbacks, such as costly map maintenance and privacy vulnerability. The aforementioned issues pose a major bottleneck in real-world applications and have thus prompted researchers to shift their focus toward leaner solutions. Lightweight visual-based localization seeks to introduce improvements in scene representations and the associated localization methods, making the resulting framework computationally tractable and memory-efficient without incurring a notable performance expense. For the background, this literature review first introduces several flagship frameworks of the visual-based localization task as preliminaries. These frameworks can be broadly classified into three categories, including image-retrieval-based methods, structure-based methods, and hierarchical methods. 3D scene representations adopted in these conventional frameworks, such as reference image databases and SfM point clouds, generally exhibit a high degree of redundancy, which causes excessive memory usage and inefficiency in distinguishing scene features for descriptor matching. Next, this review provides a guided tour of recent advances that promote the brevity of the 3D scene representations and the efficiency of corresponding visual localization methods. From the perspective of scene representations, existing research efforts in lightweight visual localization can be classified into six categories. Within each category, this literature review analyzes its characteristics, application scenarios, and technical limitations while also surveying some of the representative works. First, several methods have been proposed to enhance memory efficiency by compressing the SfM point clouds. These methods reduce the size of SfM point clouds through the combination of techniques including feature quantization, keypoint subset sampling, and feature-free matching. Extreme compression rates, such as 1% and below, can be achieved with barely noticeable accuracy degradation. Employing line maps as scene representations has become a focus of research in the field of lightweight visual localization. In human-made scenes characterized by salient structural features, the substitution of line maps for point clouds offers two major merits: 1) the abundance and rich geometric properties of line segments make line maps a concise option for depicting the environment; 2) line features exhibit better robustness in weak-textured areas or under temporally varying lighting conditions. However, the lack of a unified line descriptor and the difficulty of establishing 2D-3D correspondences between 3D line segments and image observations remain as main challenges. In the field of autonomous driving, high-definition maps constructed from vectorized semantic features have unlocked a new wave of cost-effective and lightweight solutions to visual localization for self-driving vehicle. Recent trends involve the utilization of data-driven techniques to learn to localize. This end-to-end philosophy has given rise to two regression-based methods. Scene coordinate regression (SCR) methods eschew the explicit processes of feature extraction and matching. Instead, they establish a direct mapping between observations and scene coordinates through regression. While a grounding in geometry remains essential for camera pose estimation in SCR methods, pose regression methods employ deep neural networks to establish the mapping from image observations to camera poses without any explicit geometric reasoning. Absolute pose regression techniques are akin to image retrieval approaches with limited accuracy and generalization capability, while relative pose regression techniques typically serve as a postprocessing step following the coarse localization stage. Neural radiance fields and related volumetric-based approaches have emerged as a novel way for the neural implicit scene representation. While visual localization based solely on a learned volumetric-based implicit map is still in an exploratory phase, the progress made over the past year or two has already yielded an impressive performance in terms of the scene representation capability and precision of localization. Furthermore, this study quantitatively evaluates the performance of several representative lightweight visual localization methods on well-known indoor and outdoor datasets. Evaluation metrics, including offline mapping time usage, storage demand, and localization accuracy, are considered for making comparisons. Results reveal that SCR methods generally stand out among the existing work, boasting remarkably compact scene maps and high success rates of localization. Existing lightweight visual localization methods have dramatically pushed the performance boundary. However, challenges still remain in terms of scalability and robustness when enlarging the scene scale and taking considerable visual disparity between query and mapping images into consideration. Therefore, extensive efforts are still required to promote the compactness of scene representations and improving the robustness of localization methods. Finally, this review provides an outlook on developing trends in the hope of facilitating future research.关键词:visual localization;camera pose estimation;3D scene representation;lightweight map;feature matching;scene coordinate regression;pose regression715|1117|0更新时间:2024-10-23

摘要:Visual-based localization determines the camera translation and orientation of an image observation with respect to a prebuilt 3D-based representation of the environment. It is an essential technology that empowers the intelligent interactions between computing facilities and the real world. Compared with alternative positioning systems beyond, the capability to estimate the accurate 6DOF camera pose, along with the flexibility and frugality in deployment, positions visual-based localization technology as a cornerstone of many applications, ranging from autonomous vehicles to augmented and mixed reality. As a long-standing problem in computer vision, visual localization has made exceeding progress over the past decades. A primary branch of prior arts relies on a preconstructed 3D map obtained by structure-from-motion techniques. Such 3D maps, a.k.a. SfM point clouds, store 3D points and per-point visual features. To estimate the camera pose, these methods typically establish correspondences between 2D keypoints detected in the query image and 3D points of the SfM point cloud through descriptor matching. The 6DOF camera pose of the query image is then recovered from these 2D-3D matches by leveraging geometric principles introduced by photogrammetry. Despite delivering fairly sound and reliable performance, such a scheme often has to consume several gigabytes of storage for just a single scene, which would result in computationally expensive overhead and prohibitive memory footprint for large-scale applications and resource-intensive platforms. Furthermore, it suffers from other drawbacks, such as costly map maintenance and privacy vulnerability. The aforementioned issues pose a major bottleneck in real-world applications and have thus prompted researchers to shift their focus toward leaner solutions. Lightweight visual-based localization seeks to introduce improvements in scene representations and the associated localization methods, making the resulting framework computationally tractable and memory-efficient without incurring a notable performance expense. For the background, this literature review first introduces several flagship frameworks of the visual-based localization task as preliminaries. These frameworks can be broadly classified into three categories, including image-retrieval-based methods, structure-based methods, and hierarchical methods. 3D scene representations adopted in these conventional frameworks, such as reference image databases and SfM point clouds, generally exhibit a high degree of redundancy, which causes excessive memory usage and inefficiency in distinguishing scene features for descriptor matching. Next, this review provides a guided tour of recent advances that promote the brevity of the 3D scene representations and the efficiency of corresponding visual localization methods. From the perspective of scene representations, existing research efforts in lightweight visual localization can be classified into six categories. Within each category, this literature review analyzes its characteristics, application scenarios, and technical limitations while also surveying some of the representative works. First, several methods have been proposed to enhance memory efficiency by compressing the SfM point clouds. These methods reduce the size of SfM point clouds through the combination of techniques including feature quantization, keypoint subset sampling, and feature-free matching. Extreme compression rates, such as 1% and below, can be achieved with barely noticeable accuracy degradation. Employing line maps as scene representations has become a focus of research in the field of lightweight visual localization. In human-made scenes characterized by salient structural features, the substitution of line maps for point clouds offers two major merits: 1) the abundance and rich geometric properties of line segments make line maps a concise option for depicting the environment; 2) line features exhibit better robustness in weak-textured areas or under temporally varying lighting conditions. However, the lack of a unified line descriptor and the difficulty of establishing 2D-3D correspondences between 3D line segments and image observations remain as main challenges. In the field of autonomous driving, high-definition maps constructed from vectorized semantic features have unlocked a new wave of cost-effective and lightweight solutions to visual localization for self-driving vehicle. Recent trends involve the utilization of data-driven techniques to learn to localize. This end-to-end philosophy has given rise to two regression-based methods. Scene coordinate regression (SCR) methods eschew the explicit processes of feature extraction and matching. Instead, they establish a direct mapping between observations and scene coordinates through regression. While a grounding in geometry remains essential for camera pose estimation in SCR methods, pose regression methods employ deep neural networks to establish the mapping from image observations to camera poses without any explicit geometric reasoning. Absolute pose regression techniques are akin to image retrieval approaches with limited accuracy and generalization capability, while relative pose regression techniques typically serve as a postprocessing step following the coarse localization stage. Neural radiance fields and related volumetric-based approaches have emerged as a novel way for the neural implicit scene representation. While visual localization based solely on a learned volumetric-based implicit map is still in an exploratory phase, the progress made over the past year or two has already yielded an impressive performance in terms of the scene representation capability and precision of localization. Furthermore, this study quantitatively evaluates the performance of several representative lightweight visual localization methods on well-known indoor and outdoor datasets. Evaluation metrics, including offline mapping time usage, storage demand, and localization accuracy, are considered for making comparisons. Results reveal that SCR methods generally stand out among the existing work, boasting remarkably compact scene maps and high success rates of localization. Existing lightweight visual localization methods have dramatically pushed the performance boundary. However, challenges still remain in terms of scalability and robustness when enlarging the scene scale and taking considerable visual disparity between query and mapping images into consideration. Therefore, extensive efforts are still required to promote the compactness of scene representations and improving the robustness of localization methods. Finally, this review provides an outlook on developing trends in the hope of facilitating future research.关键词:visual localization;camera pose estimation;3D scene representation;lightweight map;feature matching;scene coordinate regression;pose regression715|1117|0更新时间:2024-10-23 -

摘要:Rendering has been a prominent subject in the field of computer graphics for an extended period. It can be regarded as a function that accepts an abstract scene description as input and typically generates a 2D image as output. The theory and practice of rendering have remarkably advanced through years of research. In recent years, inverse rendering has emerged as a new research focus in the field of computer graphics due to the development of digital technology. The objective of inverse rendering is to reverse the rendering process and deduce scene parameters from the output image, which is equivalent to solving the inverse function of the rendering function. This process plays a crucial role in addressing perception problems in diverse advanced technological domains, including virtual reality, autonomous driving, and robotics. Numerous methods exist for implementing inverse rendering, with the current mainstream framework being optimization through “analysis by synthesis”. First, it estimates a set of initial scene parameters, then performs forward rendering on the scene, compares the rendered result with the target image, and then minimizes the difference (loss function) by optimizing the scene parameters using gradient descent-based method. This pipeline necessitates the ability to compute the derivatives of the output image in forward rendering with respect to the input parameters. Consequently, differentiable rendering has emerged to fulfill this requirement. Specifically, the research topic of differentiable rendering is to convert the forward rendering pipeline in computer graphics into a differentiable form, enabling the differentiation of the output image with respect to input parameters such as geometry, material, light source, and camera. Currently, forward rendering can be broadly categorized into three types: rasterization-based rendering, physically based rendering, and the emerging neural rendering. Rasterization-based rendering is a fundamental technique in computer graphics that converts geometric shapes into pixels for display. It involves projecting 3D objects onto a 2D screen, performing hidden surface removal, shading, and texturing to create realistic images efficiently. While rasterization is fast and suitable for real-time applications, it may lack physical accuracy in simulating light interactions. By contrast, physically based rendering aims to simulate real-world light behavior accurately by considering the physical properties of materials, light sources, and the environment. It calculates how light rays interact with surfaces, accounting for reflections, refractions, and scattering to produce photorealistic visual results. This method prioritizes realism and is widely used in industries, such as animation, gaming, and visual effects. Neural rendering is an emerging rendering technique in recent years, mainly used for image-based rendering tasks. In contrast to traditional graphics rendering, image-based rendering does not require any explicit 3D scene information (geometry, materials, lighting, etc.), but instead implicitly encodes scenes through a sequence of 2D images sampled from different viewpoints, enabling the generation of images of the scene from any viewpoint. Accordingly, differentiable rendering can also be categorized into three types: differentiable rasterization, physically based differentiable rendering, and differentiable neural rendering. In differentiable rasterization, many works employ approximate methods to compute approximate derivatives of the rasterization process for backpropagation of gradients or modify steps in the traditional rendering pipeline (usually rasterization and testing/blending steps) to make pixels differentiable with respect to vertices. Neural rendering is naturally differentiable because its rendering process is conducted through neural networks. For physically based differentiable rendering, accurately calculating the gradient of the image concerning scene parameters is challenging because of the intricate nature of geometry, material, and light transmission processes. Therefore, this study concentrates on recent research in the field of physically based differentiable rendering. The article is organized into the following sections: Section 1 introduces the computational methods of forward rendering and differentiable rendering from an abstract standpoint and two types of method for correctly computing boundary integral: edge sampling and reparameterization. Section 2 explores the attainment of differentiable rendering for distinct representations of geometry, such as volumetric representation, signed distance field, height field, and vectorized geometry; materials, such as volumetric material; parameterized bidirectional reflectance distribution function, bidirectional surface scattering reflectance distribution function, and continuously varying refractive index fields; and camera-related parameters, such as pixel reconstruction filter and time-of-flight camera. Section 3 focuses on enhancing the efficiency and robustness of differentiable rendering, including efficient sampling, high-efficiency system and framework, language for differentiable rendering and several techniques, to enhance the robustness of differentiable rendering. Section 4 showcases the application of differentiable rendering in practical tasks, which can be generally divided in three types: single-object reconstruction, object and environment light reconstruction, and scene reconstruction. Section 5 discusses the future development trends of differentiable rendering, including improving efficiency, robustness of differentiable rendering, and combining differentiable rendering with other methods.关键词:rendering;differentiable rendering;inverse rendering;ray tracing;3d reconstruction882|1288|0更新时间:2024-10-23

摘要:Rendering has been a prominent subject in the field of computer graphics for an extended period. It can be regarded as a function that accepts an abstract scene description as input and typically generates a 2D image as output. The theory and practice of rendering have remarkably advanced through years of research. In recent years, inverse rendering has emerged as a new research focus in the field of computer graphics due to the development of digital technology. The objective of inverse rendering is to reverse the rendering process and deduce scene parameters from the output image, which is equivalent to solving the inverse function of the rendering function. This process plays a crucial role in addressing perception problems in diverse advanced technological domains, including virtual reality, autonomous driving, and robotics. Numerous methods exist for implementing inverse rendering, with the current mainstream framework being optimization through “analysis by synthesis”. First, it estimates a set of initial scene parameters, then performs forward rendering on the scene, compares the rendered result with the target image, and then minimizes the difference (loss function) by optimizing the scene parameters using gradient descent-based method. This pipeline necessitates the ability to compute the derivatives of the output image in forward rendering with respect to the input parameters. Consequently, differentiable rendering has emerged to fulfill this requirement. Specifically, the research topic of differentiable rendering is to convert the forward rendering pipeline in computer graphics into a differentiable form, enabling the differentiation of the output image with respect to input parameters such as geometry, material, light source, and camera. Currently, forward rendering can be broadly categorized into three types: rasterization-based rendering, physically based rendering, and the emerging neural rendering. Rasterization-based rendering is a fundamental technique in computer graphics that converts geometric shapes into pixels for display. It involves projecting 3D objects onto a 2D screen, performing hidden surface removal, shading, and texturing to create realistic images efficiently. While rasterization is fast and suitable for real-time applications, it may lack physical accuracy in simulating light interactions. By contrast, physically based rendering aims to simulate real-world light behavior accurately by considering the physical properties of materials, light sources, and the environment. It calculates how light rays interact with surfaces, accounting for reflections, refractions, and scattering to produce photorealistic visual results. This method prioritizes realism and is widely used in industries, such as animation, gaming, and visual effects. Neural rendering is an emerging rendering technique in recent years, mainly used for image-based rendering tasks. In contrast to traditional graphics rendering, image-based rendering does not require any explicit 3D scene information (geometry, materials, lighting, etc.), but instead implicitly encodes scenes through a sequence of 2D images sampled from different viewpoints, enabling the generation of images of the scene from any viewpoint. Accordingly, differentiable rendering can also be categorized into three types: differentiable rasterization, physically based differentiable rendering, and differentiable neural rendering. In differentiable rasterization, many works employ approximate methods to compute approximate derivatives of the rasterization process for backpropagation of gradients or modify steps in the traditional rendering pipeline (usually rasterization and testing/blending steps) to make pixels differentiable with respect to vertices. Neural rendering is naturally differentiable because its rendering process is conducted through neural networks. For physically based differentiable rendering, accurately calculating the gradient of the image concerning scene parameters is challenging because of the intricate nature of geometry, material, and light transmission processes. Therefore, this study concentrates on recent research in the field of physically based differentiable rendering. The article is organized into the following sections: Section 1 introduces the computational methods of forward rendering and differentiable rendering from an abstract standpoint and two types of method for correctly computing boundary integral: edge sampling and reparameterization. Section 2 explores the attainment of differentiable rendering for distinct representations of geometry, such as volumetric representation, signed distance field, height field, and vectorized geometry; materials, such as volumetric material; parameterized bidirectional reflectance distribution function, bidirectional surface scattering reflectance distribution function, and continuously varying refractive index fields; and camera-related parameters, such as pixel reconstruction filter and time-of-flight camera. Section 3 focuses on enhancing the efficiency and robustness of differentiable rendering, including efficient sampling, high-efficiency system and framework, language for differentiable rendering and several techniques, to enhance the robustness of differentiable rendering. Section 4 showcases the application of differentiable rendering in practical tasks, which can be generally divided in three types: single-object reconstruction, object and environment light reconstruction, and scene reconstruction. Section 5 discusses the future development trends of differentiable rendering, including improving efficiency, robustness of differentiable rendering, and combining differentiable rendering with other methods.关键词:rendering;differentiable rendering;inverse rendering;ray tracing;3d reconstruction882|1288|0更新时间:2024-10-23 -

摘要:With the development of natural human-computer interaction technologies, such as virtual reality(VR), metaverse, and artificial intelligence-generated content, human-computer interfaces based on interactive virtual contents are providing users with a new kind of task challenge——emotional challenge. Emotional challenge mainly examines the user’s ability to understand, explore, and deal with emotional issues in a virtual world. It is considered a core aspect of virtual interactive scenarios in the metaverse and an essential element in the future digital world. Moreover, users’ perceived emotional challenge has been proven to be a serious, profound, and reflective new type of user experience. Specifically, by resolving the tension within the narrative, identifying with virtual characters, and exploring emotional ambiguities, players encountering emotional challenge would be put in a more reflective state of mind and experience more diverse, impactful, and complex emotional experiences. Since the introduction of emotional challenge in 2015, it has attracted considerable attention in the field of human-computer interaction. With this background, researchers have conducted a series of research work on emotional challenge with respect to its definition, characteristics, interactive design, evaluation scales, and computational modeling. This study aims to systematically organize and introduce the related works of emotional challenge first and then elaborate its prospective applications in the metaverse. This paper starts by introducing the concept of challenge in digital games. Game challenge deals with the obstacles that players must overcome and the tasks that they must perform to make game progress. Game challenges generally have three different kinds. Physical challenge depends on players’ skills, such as speed, accuracy, endurance, dexterity, and strength, and cognitive challenge requires players to use their cognitive abilities, such as memory, observation, reasoning, planning, and problem solving. By contrast, emotional challenge deals with tension within the narrative or difficult material presented in the game and can only be overcome with a cognitive and affective effort from the player. Afterward, this paper introduces related works on emotional challenge, including how it differs from more traditional types of challenge, the diverse and relative negative emotional responses it evokes, the required game design characteristics, and the psychological theories relate to emotional challenge. This paper then introduces how emotional challenge affects player experience when separately from or jointly with traditional challenge in VR and PC conditions. Results showed that relatively exclusive emotional challenge induced a wider range of different emotions under VR and PC conditions, while the adding of emotional challenge broadened emotional responses only in VR condition. VR could also enhance players’ perceived experiences of emotional response, appreciation, immersion, and presence. This paper also reviewed the latest work on the potential of detecting perceived emotional challenge from physiological signals. Researchers collected physiological responses from a group of players who engaged in three typical game scenarios coving an entire spectrum of different game challenges. By collecting perceived challenge ratings from players and extracting basic physiological features, researchers applied multiple machine learning methods and metrics to detect challenge experiences. Results showed that most methods achieved a challenge detection accuracy of around 80%. Although emotional challenge has attracted considerable attention in the field of human-computer interaction, it has not been clearly defined at present. Additionally, current research on emotional challenge is primarily limited to the area of digital games. We believe that the concept of emotional challenge has the potential for application in various domains of human-computer interaction. Therefore, building upon previous works on emotional challenge, this paper provides a definition and description of emotional challenge from the perspective of human-computer interaction. Emotional challenge primarily examines users’ understanding, exploration, and processing abilities of emotions in virtual scenarios and the digital world. It often requires individuals to engage in meaningful choices and interactions within virtual interactive environments as they navigate emotional dilemmas and respond to the uncertainties arising from their interactions. For instance, in a virtual scenario, a sniper aims at an enemy soldier holding a rocket launcher, intending to destroy their own camp. However, the sniper suddenly realizes that the enemy soldier is a close friend with whom they have shared hardships. When faced with the decision of whether to pull the trigger, the sniper may experience an emotional challenge of “agonizing between friendship and protecting their own base”. With the proposed definition of emotional challenge, we also elaborate on how emotional challenge is understood from the perspectives of users and designers and highlight the main mediums through which emotional challenge exists. Finally, this paper summarizes the research importance of emotional challenge in the metaverse and provides an outlook on the application prospects of emotional challenge in the metaverse. The metaverse aims to create a comprehensive virtual digital living space that maps or transcends the real world. It includes a plethora of virtual content and virtual characters, with users being a part of it and interacting with these virtual characters or content. Through autonomous interactions with the virtual characters or content, users are likely to explore complex emotions and confront with emotional challenge. Hence, emotional challenge becomes the core content embedded in virtual interactive scenarios within the metaverse, making it an essential element in the future digital world. In the design of metaverse systems for application, the involvement of emotional challenge can equip the metaverse with the ability to evoke and generate complex but meaningful emotional experiences. It can assist in training users’ skills in understanding, exploring, and dealing with difficult emotional issues, promoting self-realization, self-expression, and self-management abilities and thus building correct life outlook and values.关键词:emotional challenge;narrative games;virtual reality(VR);metaverse;human-computer interaction356|620|0更新时间:2024-10-23

摘要:With the development of natural human-computer interaction technologies, such as virtual reality(VR), metaverse, and artificial intelligence-generated content, human-computer interfaces based on interactive virtual contents are providing users with a new kind of task challenge——emotional challenge. Emotional challenge mainly examines the user’s ability to understand, explore, and deal with emotional issues in a virtual world. It is considered a core aspect of virtual interactive scenarios in the metaverse and an essential element in the future digital world. Moreover, users’ perceived emotional challenge has been proven to be a serious, profound, and reflective new type of user experience. Specifically, by resolving the tension within the narrative, identifying with virtual characters, and exploring emotional ambiguities, players encountering emotional challenge would be put in a more reflective state of mind and experience more diverse, impactful, and complex emotional experiences. Since the introduction of emotional challenge in 2015, it has attracted considerable attention in the field of human-computer interaction. With this background, researchers have conducted a series of research work on emotional challenge with respect to its definition, characteristics, interactive design, evaluation scales, and computational modeling. This study aims to systematically organize and introduce the related works of emotional challenge first and then elaborate its prospective applications in the metaverse. This paper starts by introducing the concept of challenge in digital games. Game challenge deals with the obstacles that players must overcome and the tasks that they must perform to make game progress. Game challenges generally have three different kinds. Physical challenge depends on players’ skills, such as speed, accuracy, endurance, dexterity, and strength, and cognitive challenge requires players to use their cognitive abilities, such as memory, observation, reasoning, planning, and problem solving. By contrast, emotional challenge deals with tension within the narrative or difficult material presented in the game and can only be overcome with a cognitive and affective effort from the player. Afterward, this paper introduces related works on emotional challenge, including how it differs from more traditional types of challenge, the diverse and relative negative emotional responses it evokes, the required game design characteristics, and the psychological theories relate to emotional challenge. This paper then introduces how emotional challenge affects player experience when separately from or jointly with traditional challenge in VR and PC conditions. Results showed that relatively exclusive emotional challenge induced a wider range of different emotions under VR and PC conditions, while the adding of emotional challenge broadened emotional responses only in VR condition. VR could also enhance players’ perceived experiences of emotional response, appreciation, immersion, and presence. This paper also reviewed the latest work on the potential of detecting perceived emotional challenge from physiological signals. Researchers collected physiological responses from a group of players who engaged in three typical game scenarios coving an entire spectrum of different game challenges. By collecting perceived challenge ratings from players and extracting basic physiological features, researchers applied multiple machine learning methods and metrics to detect challenge experiences. Results showed that most methods achieved a challenge detection accuracy of around 80%. Although emotional challenge has attracted considerable attention in the field of human-computer interaction, it has not been clearly defined at present. Additionally, current research on emotional challenge is primarily limited to the area of digital games. We believe that the concept of emotional challenge has the potential for application in various domains of human-computer interaction. Therefore, building upon previous works on emotional challenge, this paper provides a definition and description of emotional challenge from the perspective of human-computer interaction. Emotional challenge primarily examines users’ understanding, exploration, and processing abilities of emotions in virtual scenarios and the digital world. It often requires individuals to engage in meaningful choices and interactions within virtual interactive environments as they navigate emotional dilemmas and respond to the uncertainties arising from their interactions. For instance, in a virtual scenario, a sniper aims at an enemy soldier holding a rocket launcher, intending to destroy their own camp. However, the sniper suddenly realizes that the enemy soldier is a close friend with whom they have shared hardships. When faced with the decision of whether to pull the trigger, the sniper may experience an emotional challenge of “agonizing between friendship and protecting their own base”. With the proposed definition of emotional challenge, we also elaborate on how emotional challenge is understood from the perspectives of users and designers and highlight the main mediums through which emotional challenge exists. Finally, this paper summarizes the research importance of emotional challenge in the metaverse and provides an outlook on the application prospects of emotional challenge in the metaverse. The metaverse aims to create a comprehensive virtual digital living space that maps or transcends the real world. It includes a plethora of virtual content and virtual characters, with users being a part of it and interacting with these virtual characters or content. Through autonomous interactions with the virtual characters or content, users are likely to explore complex emotions and confront with emotional challenge. Hence, emotional challenge becomes the core content embedded in virtual interactive scenarios within the metaverse, making it an essential element in the future digital world. In the design of metaverse systems for application, the involvement of emotional challenge can equip the metaverse with the ability to evoke and generate complex but meaningful emotional experiences. It can assist in training users’ skills in understanding, exploring, and dealing with difficult emotional issues, promoting self-realization, self-expression, and self-management abilities and thus building correct life outlook and values.关键词:emotional challenge;narrative games;virtual reality(VR);metaverse;human-computer interaction356|620|0更新时间:2024-10-23 -

摘要:With the development of information technology, mixed reality (MR) technology has been applied in various fields, such as healthcare, education, and assisted guidance. MR scenes contain rich semantic information, and MR technology based on scene context information can improve users’ perception of the scene, optimize user interaction, and enhance the accuracy of interaction models. Therefore, they have quickly gained widespread attention. However, literature reviews specifically investigating context information in this field are limited, and organization and classification are lacking. This paper focuses on MR technology and systems that utilize context information. This study was conducted following the Preferred Reporting Items for Systematic Reviews and Meta-Analyses guidelines. First, keywords for the search were determined on the basis of three factors: research domain, study subjects, and research scenarios. Subsequently, searches were performed in two influential databases in the field of MR: ACM Digital Library and IEEE Xplore. A preliminary screening was then executed, considering the types of journals and conferences to eliminate irrelevant and unpublished literature. Subsequently, the titles and abstracts of the articles were reviewed sequentially, eliminating duplicates and irrelevant results. Finally, a total of 210 articles were individually screened to select 29 papers for the review. Additionally, four more articles were included on the basis of expertise, resulting in a total of 33 articles for the review. Through a comprehensive literature review of MR databases, three research questions were formulated, and a dataset of research articles was established. The three research questions addressed in this paper are as follows: 1) What are the different types of scene context? 2) How is scene context organized in various MR technologies and systems? 3) What are the application areas of empirical research? On the basis of the evolution of scene context and the refinement of MR technologies and systems, we analyze the empirical research papers spanning nearly 20 years. This analysis involves summarizing previous research and providing an overview of the latest developments in systems that leverage scene context. We also propose potential classification criteria, such as types of scene context, construction methods of knowledge bases for contextual information, fundamental technologies, and application domains. Among the various types of scene context, we categorize them into six classes: scene semantics, object semantics, spatial relationships, group relationships, dependence relationships, and motion relationships. Scene semantics is the semantic information encompassed by various elements in the scene environment, including objects, characters, and texture information. In the categorization of object semantics, we consider information about the individual object itself, such as user information, type, attributes, and special content. Spatial relationship refers to numerical information, such as the relative position, angle, or arrangement between various objects in the scene. We analyzed spatial relationships in three ways: base spatial relationships, microscene spatial information, and real-scene spatial information. We consider a certain number of closely neighboring objects of the same category as a group. Group relations focus on information about the overall perspective such as intergroup relations and the number of groups. Dependence relationship is concerned with the dependencies and affiliations that may exist between different objects in the scene at the functional and physical levels. Motion information is a new type of scene context, including basic motion information and special motion information, which describes the dynamic information of scene objects. Through an analysis of the utilization of various types of scene context, we establish the relationship between research objectives and contextual information, providing guidance on the selection of contextual information. The construction of knowledge bases is examined from user-intervention perspectives and types of fundamental technologies. Knowledge bases established with user intervention typically rely on researchers’ abstract analysis of scene objects rather than pre-existing databases. Conversely, knowledge bases built without user intervention rely on existing information, such as low-level raw data in databases or predefined scenarios. The underlying technologies in this context are categorized into virtual reality (VR) and augmented reality (AR). Conducting classification research from the dual perspectives of user intervention and fundamental technology facilitates a deeper understanding of how contextual information is organized in various MR systems. Application areas are investigated on the basis of the types of scenarios and whether they involve generative processes or not. The types of application scenarios are then categorized into six types: auxiliary guidance, AR annotation, scene reconstruction, medical treatment, object manipulation, and general purpose. Generative models can automatically generate target information, such as AR-annotated shadows based on the scene, whereas nongenerative models mainly focus on specific operations. Through analysis from these two perspectives, the advantages and disadvantages of MR systems and technologies in different application scenarios can be explored. Drawing upon the exploration and research in these three dimensions, we investigate the challenges associated with selecting, acquiring, and applying contextual information in MR scenarios. By classifying the research objects from different dimensions, we address the research questions and identify current shortcomings and future research directions. The aim of this review is to support researchers across diverse fields in designing, selecting, and evaluating scene context, ultimately fostering the advancement of future MR application technologies and systems.关键词:virtual reality(VR);augmented reality(AR);perception and interaction;context information;scene semantics385|603|0更新时间:2024-10-23

摘要:With the development of information technology, mixed reality (MR) technology has been applied in various fields, such as healthcare, education, and assisted guidance. MR scenes contain rich semantic information, and MR technology based on scene context information can improve users’ perception of the scene, optimize user interaction, and enhance the accuracy of interaction models. Therefore, they have quickly gained widespread attention. However, literature reviews specifically investigating context information in this field are limited, and organization and classification are lacking. This paper focuses on MR technology and systems that utilize context information. This study was conducted following the Preferred Reporting Items for Systematic Reviews and Meta-Analyses guidelines. First, keywords for the search were determined on the basis of three factors: research domain, study subjects, and research scenarios. Subsequently, searches were performed in two influential databases in the field of MR: ACM Digital Library and IEEE Xplore. A preliminary screening was then executed, considering the types of journals and conferences to eliminate irrelevant and unpublished literature. Subsequently, the titles and abstracts of the articles were reviewed sequentially, eliminating duplicates and irrelevant results. Finally, a total of 210 articles were individually screened to select 29 papers for the review. Additionally, four more articles were included on the basis of expertise, resulting in a total of 33 articles for the review. Through a comprehensive literature review of MR databases, three research questions were formulated, and a dataset of research articles was established. The three research questions addressed in this paper are as follows: 1) What are the different types of scene context? 2) How is scene context organized in various MR technologies and systems? 3) What are the application areas of empirical research? On the basis of the evolution of scene context and the refinement of MR technologies and systems, we analyze the empirical research papers spanning nearly 20 years. This analysis involves summarizing previous research and providing an overview of the latest developments in systems that leverage scene context. We also propose potential classification criteria, such as types of scene context, construction methods of knowledge bases for contextual information, fundamental technologies, and application domains. Among the various types of scene context, we categorize them into six classes: scene semantics, object semantics, spatial relationships, group relationships, dependence relationships, and motion relationships. Scene semantics is the semantic information encompassed by various elements in the scene environment, including objects, characters, and texture information. In the categorization of object semantics, we consider information about the individual object itself, such as user information, type, attributes, and special content. Spatial relationship refers to numerical information, such as the relative position, angle, or arrangement between various objects in the scene. We analyzed spatial relationships in three ways: base spatial relationships, microscene spatial information, and real-scene spatial information. We consider a certain number of closely neighboring objects of the same category as a group. Group relations focus on information about the overall perspective such as intergroup relations and the number of groups. Dependence relationship is concerned with the dependencies and affiliations that may exist between different objects in the scene at the functional and physical levels. Motion information is a new type of scene context, including basic motion information and special motion information, which describes the dynamic information of scene objects. Through an analysis of the utilization of various types of scene context, we establish the relationship between research objectives and contextual information, providing guidance on the selection of contextual information. The construction of knowledge bases is examined from user-intervention perspectives and types of fundamental technologies. Knowledge bases established with user intervention typically rely on researchers’ abstract analysis of scene objects rather than pre-existing databases. Conversely, knowledge bases built without user intervention rely on existing information, such as low-level raw data in databases or predefined scenarios. The underlying technologies in this context are categorized into virtual reality (VR) and augmented reality (AR). Conducting classification research from the dual perspectives of user intervention and fundamental technology facilitates a deeper understanding of how contextual information is organized in various MR systems. Application areas are investigated on the basis of the types of scenarios and whether they involve generative processes or not. The types of application scenarios are then categorized into six types: auxiliary guidance, AR annotation, scene reconstruction, medical treatment, object manipulation, and general purpose. Generative models can automatically generate target information, such as AR-annotated shadows based on the scene, whereas nongenerative models mainly focus on specific operations. Through analysis from these two perspectives, the advantages and disadvantages of MR systems and technologies in different application scenarios can be explored. Drawing upon the exploration and research in these three dimensions, we investigate the challenges associated with selecting, acquiring, and applying contextual information in MR scenarios. By classifying the research objects from different dimensions, we address the research questions and identify current shortcomings and future research directions. The aim of this review is to support researchers across diverse fields in designing, selecting, and evaluating scene context, ultimately fostering the advancement of future MR application technologies and systems.关键词:virtual reality(VR);augmented reality(AR);perception and interaction;context information;scene semantics385|603|0更新时间:2024-10-23 -