最新刊期

卷 28 , 期 8 , 2023

-

摘要:Document analysis and recognition (called document recognition in brief) is aimed to covert non-structured documents (typically, document images and online handwriting) into structured texts for facilitating computer processing and understanding. It is needed in wide applications due to the pervasive communication and usage of documents. The field of document recognition has attracted intensive attention and produced enormous progress in research and applications since 1960s. Particularly, the recent development of deep learning technology has boosted the performance of document recognition remarkably compared to traditional methods, and the technology has been applied successfully to document digitization, form processing, handwriting input, intelligent transportation, document retrieval and information extraction.In this article, we first introduce the background and involved techniques of document recognition, give an overview of the history of research (divided into four periods according to the objects of research, the methods and applications), and then review the main research progress with emphasis on deep learning based methods developed in recent years. After identifying the insufficiency of current technology, we finally suggest some important issues for future research.The review of recent progress is divided into sections corresponding to main processing steps, namely image pre-processing, layout analysis, scene text detection, text recognition, structured symbol and graphics recognition, document retrieval and information extraction.The review of recent progress is divided into sections corresponding to the main processing steps, namely image pre-processing, layout analysis, scene text detection, text recognition, structured symbol and graphics recognition, document retrieval and information extraction. 1) Due to the popularity of camera-captured document images, the current main task in image pre-processing is the rectification of distorted image while the task of binarization is still concerned. Recent methods are mostly end-to-end deep learning based transformation methods. 2) Layout analysis is dichotomized into physical layout analysis (page segmentation) and logical layout analysis (semantic region segmentation and reading order prediction). Recent page segmentation methods based on fully convolutional network (FCN) or graph neural network (GNN) have shown promises. Logical layout analysis has been addressed by deep neural networks fusing multi-modal information. Table structure analysis is a special task of layout analysis and has been studied intensively in recent years. 3) Scene text detection is a hot topic in document analysis and computer vision fields. Deep learning based methods for text methods can be divided into regression-based methods, segmentation-based methods and hybrid methods. FCN is prevalently used for extracting visual features, based on which models are built to predict text regions. 4) Text recognition is the core task in document analysis. We review recent works for handwritten text recognition and scene text recognition, which share some common strategies but also show different preferences. There are two main streams of methods: segmentation-based and sequence-to-sequence learning methods. The convolutional recurrent neural network (CRNN) model has received high attention in recent years and is being extended in respect of encoding, decoding or learning strategies, while segmentation-based methods combining deep learning are still performing competitively. A noteworthy tendency is the extension of text line recognition to page-level recognition. Following text recognition, we also review the works of end-to-end scene text recognition (also called as text spotting), for which text detection and recognition models are learned jointly. 5) Among symbol and graphics in documents, mathematical expressions and flowcharts have received increasing attention. Recent methods for mathematical expression recognition are mostly image-to-markup generation methods using encoder-decoder models, while graph-based methods promise in generating both recognition and segmentation results. Flowchart recognition is addressed using structured prediction models such as GNN. 6) Document retrieval concerned mainly keyword spotting in pre-deep learning era, while recent works focus on information extraction (spotting semantic entities) by fusing layout and language information. Pre-trained layout and multi-modal language models are showing promises, while visual information is not considered adequately.Overall, the recent progress shows that the objects of recognition are expanded in breadth and depth, the methods are getting closer to deep neural networks and deep learning, the recognition performance is improved constantly, and the technology is applied to extensive scenes. The review also reveals the insufficiencies of the current technology in accuracy and reliability on various tasks, the interpretability, the learning ability and adaptability.Future works are suggested in respect of performance promotion, application extension, and improved learning. Issues of performance promotion include the reliability of recognition, interpretability, omni-element recognition, long-tailed recognition, multi-lingual documents, complex layout analysis and understanding, recognition of distorted documents. Issues related to applications include new applications (such as robotic process automation (RPA), text scription in natural scenes, archeology), new technical problems involved in applications (such as semantic information extraction, cross-modal fusion, reasoning and decision related to application scenes). Aiming to improve the automatic system design, learning ability and adaptability, the involved learning problems/methods include small sample learning, transfer learning, multi-task learning, domain adaptation, structured prediction, weakly-supervised learning, self-supervised learning, open set learning, and cross-modal learning.关键词:document analysis and recognition;document intelligence;layout analysis;text detection;text recognition;graphics and symbol recognition;document information extraction353|2383|4更新时间:2024-05-07

摘要:Document analysis and recognition (called document recognition in brief) is aimed to covert non-structured documents (typically, document images and online handwriting) into structured texts for facilitating computer processing and understanding. It is needed in wide applications due to the pervasive communication and usage of documents. The field of document recognition has attracted intensive attention and produced enormous progress in research and applications since 1960s. Particularly, the recent development of deep learning technology has boosted the performance of document recognition remarkably compared to traditional methods, and the technology has been applied successfully to document digitization, form processing, handwriting input, intelligent transportation, document retrieval and information extraction.In this article, we first introduce the background and involved techniques of document recognition, give an overview of the history of research (divided into four periods according to the objects of research, the methods and applications), and then review the main research progress with emphasis on deep learning based methods developed in recent years. After identifying the insufficiency of current technology, we finally suggest some important issues for future research.The review of recent progress is divided into sections corresponding to main processing steps, namely image pre-processing, layout analysis, scene text detection, text recognition, structured symbol and graphics recognition, document retrieval and information extraction.The review of recent progress is divided into sections corresponding to the main processing steps, namely image pre-processing, layout analysis, scene text detection, text recognition, structured symbol and graphics recognition, document retrieval and information extraction. 1) Due to the popularity of camera-captured document images, the current main task in image pre-processing is the rectification of distorted image while the task of binarization is still concerned. Recent methods are mostly end-to-end deep learning based transformation methods. 2) Layout analysis is dichotomized into physical layout analysis (page segmentation) and logical layout analysis (semantic region segmentation and reading order prediction). Recent page segmentation methods based on fully convolutional network (FCN) or graph neural network (GNN) have shown promises. Logical layout analysis has been addressed by deep neural networks fusing multi-modal information. Table structure analysis is a special task of layout analysis and has been studied intensively in recent years. 3) Scene text detection is a hot topic in document analysis and computer vision fields. Deep learning based methods for text methods can be divided into regression-based methods, segmentation-based methods and hybrid methods. FCN is prevalently used for extracting visual features, based on which models are built to predict text regions. 4) Text recognition is the core task in document analysis. We review recent works for handwritten text recognition and scene text recognition, which share some common strategies but also show different preferences. There are two main streams of methods: segmentation-based and sequence-to-sequence learning methods. The convolutional recurrent neural network (CRNN) model has received high attention in recent years and is being extended in respect of encoding, decoding or learning strategies, while segmentation-based methods combining deep learning are still performing competitively. A noteworthy tendency is the extension of text line recognition to page-level recognition. Following text recognition, we also review the works of end-to-end scene text recognition (also called as text spotting), for which text detection and recognition models are learned jointly. 5) Among symbol and graphics in documents, mathematical expressions and flowcharts have received increasing attention. Recent methods for mathematical expression recognition are mostly image-to-markup generation methods using encoder-decoder models, while graph-based methods promise in generating both recognition and segmentation results. Flowchart recognition is addressed using structured prediction models such as GNN. 6) Document retrieval concerned mainly keyword spotting in pre-deep learning era, while recent works focus on information extraction (spotting semantic entities) by fusing layout and language information. Pre-trained layout and multi-modal language models are showing promises, while visual information is not considered adequately.Overall, the recent progress shows that the objects of recognition are expanded in breadth and depth, the methods are getting closer to deep neural networks and deep learning, the recognition performance is improved constantly, and the technology is applied to extensive scenes. The review also reveals the insufficiencies of the current technology in accuracy and reliability on various tasks, the interpretability, the learning ability and adaptability.Future works are suggested in respect of performance promotion, application extension, and improved learning. Issues of performance promotion include the reliability of recognition, interpretability, omni-element recognition, long-tailed recognition, multi-lingual documents, complex layout analysis and understanding, recognition of distorted documents. Issues related to applications include new applications (such as robotic process automation (RPA), text scription in natural scenes, archeology), new technical problems involved in applications (such as semantic information extraction, cross-modal fusion, reasoning and decision related to application scenes). Aiming to improve the automatic system design, learning ability and adaptability, the involved learning problems/methods include small sample learning, transfer learning, multi-task learning, domain adaptation, structured prediction, weakly-supervised learning, self-supervised learning, open set learning, and cross-modal learning.关键词:document analysis and recognition;document intelligence;layout analysis;text detection;text recognition;graphics and symbol recognition;document information extraction353|2383|4更新时间:2024-05-07 -

摘要:Text can be as one of the key carriers for information transmission. Digital media-related text has been widely developing for such image aspects of document and scene contexts. To extract and analyze these text information-involved images automatically, Conventional researches are mainly focused on automatic text extraction techniques like scene text detection and recognition. However, text-centric images-based semantic information recognition or analysis as a downstream task of spotting text, remains a challenge due to the difficulty of fully leveraging multi-modal features from both vision and language. To this end, text-centric image understanding has been an emerging research topic and many related tasks have been proposed. For example, the visual information extraction technique is capable of extracting the specified content from the given image, which can be used to improve productivity in finance, social media, and other fields. In this paper, we introduce five representative text-centric image understanding tasks and conduct a systematic survey on them. According to the understanding level, these tasks can be broadly classified into two categories. The first category requires the basic understanding ability to extract and distinguish information, such as visual information extraction and scene text retrieval. In contrast, besides the fundamental understanding ability, the second category is more concerned with high-level semantic understanding capabilities like information aggregation and logical reasoning. With the research progress in deep learning and multimodal learning, the second category has attracted considerable attention recently. For the second category, this survey mainly introduces document visual question answering, scene text visual question answering, and scene text image captioning tasks. Over the past few decades, the development of text-centric image understanding techniques has gone through several stages. Earlier approaches are based on heuristic rules and may only utilize unimodal features. Currently, deep learning methods have gained wide popularity and dominated this area. Meanwhile, multimodal features are valued and exploited to improve performance. To be more specific, traditional visual information extraction depends on pre-defined templates or specific rules. Traditional text retrieval task tends to represent words with pyramid histograms of character vectors and predict the matched image according to the representation distance. Expanded from the conventional visual question answering framework, earlier document visual question answering, and scene text visual question answering approaches simply add an optical character recognition branch to extract text information. As integrating knowledge from multimodal signals helps to better understand images, graph neural networks and Transformer-based frameworks are used to fuse multi-modal features recently. Furthermore, self-supervised pre-training schemes are applied to learn the alignment between different modalities, thus boosting model capabilities by a large margin. For each text-centric image understanding task, we summarize classical methods and further elaborate the pros and cons of them. In addition, we also discuss the potential problems and further research directions for the community. Firstly, due to the complexity of different modality features, such as mutative layout and diverse fonts, current deep learning architectures still fail to complete the interaction of multi-modal information efficiently. Secondly, existing text-centric image understanding methods are still limited in their reasoning abilities, involving counting, sorting, and arithmetic operations. For instance, in document visual question answering and scene text visual question answering tasks, current models have difficulty predicting accurate answers when they require to jointly reason over image layout, textual content, and visual art, etc. Finally, the current text-centric understanding tasks are often trained independently and the correlation between different tasks has not been effectively leveraged. We hope this survey can help researchers capture the latest progress in text-centric image understanding and inspire the new design of advanced models and algorithms.关键词:text image understanding;visual information extraction;scene text retrieval;document visual question answering;scene text visual question answering;scene text image captioning198|513|1更新时间:2024-05-07

摘要:Text can be as one of the key carriers for information transmission. Digital media-related text has been widely developing for such image aspects of document and scene contexts. To extract and analyze these text information-involved images automatically, Conventional researches are mainly focused on automatic text extraction techniques like scene text detection and recognition. However, text-centric images-based semantic information recognition or analysis as a downstream task of spotting text, remains a challenge due to the difficulty of fully leveraging multi-modal features from both vision and language. To this end, text-centric image understanding has been an emerging research topic and many related tasks have been proposed. For example, the visual information extraction technique is capable of extracting the specified content from the given image, which can be used to improve productivity in finance, social media, and other fields. In this paper, we introduce five representative text-centric image understanding tasks and conduct a systematic survey on them. According to the understanding level, these tasks can be broadly classified into two categories. The first category requires the basic understanding ability to extract and distinguish information, such as visual information extraction and scene text retrieval. In contrast, besides the fundamental understanding ability, the second category is more concerned with high-level semantic understanding capabilities like information aggregation and logical reasoning. With the research progress in deep learning and multimodal learning, the second category has attracted considerable attention recently. For the second category, this survey mainly introduces document visual question answering, scene text visual question answering, and scene text image captioning tasks. Over the past few decades, the development of text-centric image understanding techniques has gone through several stages. Earlier approaches are based on heuristic rules and may only utilize unimodal features. Currently, deep learning methods have gained wide popularity and dominated this area. Meanwhile, multimodal features are valued and exploited to improve performance. To be more specific, traditional visual information extraction depends on pre-defined templates or specific rules. Traditional text retrieval task tends to represent words with pyramid histograms of character vectors and predict the matched image according to the representation distance. Expanded from the conventional visual question answering framework, earlier document visual question answering, and scene text visual question answering approaches simply add an optical character recognition branch to extract text information. As integrating knowledge from multimodal signals helps to better understand images, graph neural networks and Transformer-based frameworks are used to fuse multi-modal features recently. Furthermore, self-supervised pre-training schemes are applied to learn the alignment between different modalities, thus boosting model capabilities by a large margin. For each text-centric image understanding task, we summarize classical methods and further elaborate the pros and cons of them. In addition, we also discuss the potential problems and further research directions for the community. Firstly, due to the complexity of different modality features, such as mutative layout and diverse fonts, current deep learning architectures still fail to complete the interaction of multi-modal information efficiently. Secondly, existing text-centric image understanding methods are still limited in their reasoning abilities, involving counting, sorting, and arithmetic operations. For instance, in document visual question answering and scene text visual question answering tasks, current models have difficulty predicting accurate answers when they require to jointly reason over image layout, textual content, and visual art, etc. Finally, the current text-centric understanding tasks are often trained independently and the correlation between different tasks has not been effectively leveraged. We hope this survey can help researchers capture the latest progress in text-centric image understanding and inspire the new design of advanced models and algorithms.关键词:text image understanding;visual information extraction;scene text retrieval;document visual question answering;scene text visual question answering;scene text image captioning198|513|1更新时间:2024-05-07 -

摘要:A huge amount of big data-driven documents are required to be digitalized, stored and distributed in relation to images contexts. Such of application scenarios are concerned of document images-oriented key information, such as receipt understanding, card recognition, automatic paper scoring and document matching. Such process is called visual information extraction (VIE), which is focused on information mining, analysis, and extraction from visually rich documents. Documents-related text objects are diverse and varied, multi-language documents can be also commonly-used incorporated with single language scenario. Furthermore, text corpus differs from field to field. For example, a difference in the text content is required to be handled between legal files and medical documents. A complex layout may exist when a variety of visual elements are involved in a document, such as pictures, tables, and statistical curves. Unreadable document images are often derived and distorted from such noises like ink, wrinkles, distortion, and illumination. The completed pipeline of visual information extraction can be segmented into four steps: first, a pre-processing algorithm should be applied to remove the problem of interference and noise in a manner of correction and denoising. Second, document image-derived text strings and their locations contexts may be extracted in terms of text detection and recognition methods. Subsequently, multimodal feature extraction is required to perform high-level calculation and fusion of text, layout and visual features contained in visually rich documents. Finally, entity category parsing is applied to determine the category of each entity. Existed methods are mainly focused on the latter of two steps, while some take text detection and recognition into account. Early works are concerned of querying key information manually via rule-based methods. The effectiveness of these algorithms is quite lower, and they have poor generalization performance as well. The emerging deep learning technique-based feature extractors like convolutional neural networks and Transformers are linked with depth features for the optimization of performance and efficiency. In recent years, deep learning based methods have been widely applied in real scenarios. To sum up, we review deep-learning-based VIE methods and public datasets proposed in recent years, and these algorithms can be classified by their main characteristics. Recent deep-learning-based VIE methods proposed can be roughly categorized into six types of methods relevant to such contexts of grid-based, graph-neural-network-based (GNN-based), Transformer-based, end-to-end, few-shot, and the related others. Grid-based methods are focused on taking the document image as a two-dimensional matrix, pixels-inner text bounding box are filled with text embedding, and the grid representation can be formed for deep processing. Grid-based methods are often simple and have less computational cost. However, its representation ability is not strong enough, and features of text regions in small size may not be fully exploited. GNN-based methods take text segments as graph nodes, relations between segment coordinates are encoded for edge representations. Such graph convolution-related operations are applied for feature extraction further. GNN-based schemes achieve a good balance between cost and performance, but some characteristics of GNN itself like over-smoothing and gradient vanishing are often challenged to train the model. Transformer-based methods achieve outstanding performance through pre-training with a vast amount of data. These methods are preferred to have powerful generalizability, and it can be applied for multiple scenarios extended to other related document understanding tasks. However, these computational models are often costly and computing resources are required to be optimized. A more efficient architecture and pre-training strategy is still as a challenging problem to be resolved. The VIE is a mutual-benefited process, and text detection and recognition optical character recognition (OCR) are needed as prerequisites. The OCR-attainable problems like coordinate mismatches and text recognition errors will affect the following steps as well. Such end-to-end paradigms can be traced to optimize the OCR error accumulation to some extent. Few-shot methods-related structures can be used to enhance the generalization ability of models efficiently, and intrinsic features can be exploited to some extend in term of a small number of samples only.First, the growth of this research domain is reviewed and its challenging contexts can be predicted as well. Then, recent deep learning based visual information extraction methods and their contexts are summarized and analyzed. Furthermore, multiple categories-relevant methods are predictable, while the algorithm flow and technical development route of the representative models are further discussed and analyzed. Additionally, features of some public datasets are illustrated in comparison with the performance of representative models on these benchmarks. Finally, research highlights and limitations of each sort of model are laid out, and future research direction is forecasted as well.关键词:visual information extraction (VIE);document image analysis and understanding;computer vision;natural language processing;optical character recognition (OCR);deep learning;survey490|770|1更新时间:2024-05-07

摘要:A huge amount of big data-driven documents are required to be digitalized, stored and distributed in relation to images contexts. Such of application scenarios are concerned of document images-oriented key information, such as receipt understanding, card recognition, automatic paper scoring and document matching. Such process is called visual information extraction (VIE), which is focused on information mining, analysis, and extraction from visually rich documents. Documents-related text objects are diverse and varied, multi-language documents can be also commonly-used incorporated with single language scenario. Furthermore, text corpus differs from field to field. For example, a difference in the text content is required to be handled between legal files and medical documents. A complex layout may exist when a variety of visual elements are involved in a document, such as pictures, tables, and statistical curves. Unreadable document images are often derived and distorted from such noises like ink, wrinkles, distortion, and illumination. The completed pipeline of visual information extraction can be segmented into four steps: first, a pre-processing algorithm should be applied to remove the problem of interference and noise in a manner of correction and denoising. Second, document image-derived text strings and their locations contexts may be extracted in terms of text detection and recognition methods. Subsequently, multimodal feature extraction is required to perform high-level calculation and fusion of text, layout and visual features contained in visually rich documents. Finally, entity category parsing is applied to determine the category of each entity. Existed methods are mainly focused on the latter of two steps, while some take text detection and recognition into account. Early works are concerned of querying key information manually via rule-based methods. The effectiveness of these algorithms is quite lower, and they have poor generalization performance as well. The emerging deep learning technique-based feature extractors like convolutional neural networks and Transformers are linked with depth features for the optimization of performance and efficiency. In recent years, deep learning based methods have been widely applied in real scenarios. To sum up, we review deep-learning-based VIE methods and public datasets proposed in recent years, and these algorithms can be classified by their main characteristics. Recent deep-learning-based VIE methods proposed can be roughly categorized into six types of methods relevant to such contexts of grid-based, graph-neural-network-based (GNN-based), Transformer-based, end-to-end, few-shot, and the related others. Grid-based methods are focused on taking the document image as a two-dimensional matrix, pixels-inner text bounding box are filled with text embedding, and the grid representation can be formed for deep processing. Grid-based methods are often simple and have less computational cost. However, its representation ability is not strong enough, and features of text regions in small size may not be fully exploited. GNN-based methods take text segments as graph nodes, relations between segment coordinates are encoded for edge representations. Such graph convolution-related operations are applied for feature extraction further. GNN-based schemes achieve a good balance between cost and performance, but some characteristics of GNN itself like over-smoothing and gradient vanishing are often challenged to train the model. Transformer-based methods achieve outstanding performance through pre-training with a vast amount of data. These methods are preferred to have powerful generalizability, and it can be applied for multiple scenarios extended to other related document understanding tasks. However, these computational models are often costly and computing resources are required to be optimized. A more efficient architecture and pre-training strategy is still as a challenging problem to be resolved. The VIE is a mutual-benefited process, and text detection and recognition optical character recognition (OCR) are needed as prerequisites. The OCR-attainable problems like coordinate mismatches and text recognition errors will affect the following steps as well. Such end-to-end paradigms can be traced to optimize the OCR error accumulation to some extent. Few-shot methods-related structures can be used to enhance the generalization ability of models efficiently, and intrinsic features can be exploited to some extend in term of a small number of samples only.First, the growth of this research domain is reviewed and its challenging contexts can be predicted as well. Then, recent deep learning based visual information extraction methods and their contexts are summarized and analyzed. Furthermore, multiple categories-relevant methods are predictable, while the algorithm flow and technical development route of the representative models are further discussed and analyzed. Additionally, features of some public datasets are illustrated in comparison with the performance of representative models on these benchmarks. Finally, research highlights and limitations of each sort of model are laid out, and future research direction is forecasted as well.关键词:visual information extraction (VIE);document image analysis and understanding;computer vision;natural language processing;optical character recognition (OCR);deep learning;survey490|770|1更新时间:2024-05-07 -

摘要:ObjectiveVisually-rich document information extraction is committed to such key document images-related text information structure. Invoice-contextual data can be as one of the commonly-used data types of documents. For the enterprises-oriented reimbursement process, much more demands are required of key information extraction of invoices. To resolve this problem, such key techniques like optical character recognition(OCR) and information extraction have been developing intensively. However, the number of related publicly available datasets and the number of images involved are relatively challenged to rich in each dataset.MethodWe develop a real financial scanned Chinese invoice dataset, for which it can be used for collection, annotation, and releasing further. This data set consists of 40 716 images of six types of invoices in the context of aircraft itinerary tickets, taxi invoices, general quota invoices, passenger invoices, train tickets, and toll invoices. It can be divided into training/validation/testing sets further in related to 19 999/10 358/10 359 images. The labeling process of this dataset is concerned of such key steps like pseudo-label generation, manual recheck and cleaning, and manual desensitization, which can offer two sort of labels-related for the OCR task and information extraction deliberately. Such of challenges are still to be resolved in the context of print misalignment, blurring, and overlap. We facilitate a baseline scheme to realize end-to-end inference result. The overall solution can be divided into four steps as mentioned below: 1) a OCR module to predict all text instances’ content and location. 2) A text block ordering module to re-arrange all text instances into a more feasible order and serialize the 2D information into 1D. 3) The LayoutLM v2 model is melted into three modalities information (text, visual, and layout) and generate the prediction of sequence labels, which can utilize knowledge generated from the pre-trained language model. 4) The post-processing module transfer the model’s output to the final structural information. The overall solution can simplify the complexity of the overall ticket system via the integration of multiple invoices.ResultThe baseline experimental results are verified using OCR engine reasoning, OCR model prediction, and OCR ground-truth value. The F1 value of 0.768 7/0.857 0/0.985 7 can be reached as well. Furthermore, the effectiveness of the overall solution and LayoutLM V2 model can be optimized, and the challenging issue of OCR can be reflected in this scenario. Tesla-V100 GPU-based inference speed of the model can be reached to 1.88 frame/s. The accuracy of 90% can be reached using the raw image only as input. We demonstrate that the optimal solutions can be roughly segmented into two categories: one category is focused on melting the structured task into the text detection straightforward (i.e., multi-category detection), and the requirement of recognition model is to identify the text only with the corresponding category of concern. The other one is to implement the general information strategy, and an independent information extraction model can be used to extract key information. These solutions can integrate the potentials of the OCR and information extraction technologies farther.ConclusionThe scanned invoice dataset SCID (scanned Chinese invoice dataset) proposed demonstrates the application scenarios of the OCR technology can provide data support for the research and development of visually-rich document information extraction-related technology and technical implementation. The dataset can be linked and downloaded from https://davar-lab.github.io/dataset/scid.html.关键词:dataset;financial invoices;visually-rich documents;information extraction;optical character recognition (OCR);multi-modal information296|1025|1更新时间:2024-05-07

摘要:ObjectiveVisually-rich document information extraction is committed to such key document images-related text information structure. Invoice-contextual data can be as one of the commonly-used data types of documents. For the enterprises-oriented reimbursement process, much more demands are required of key information extraction of invoices. To resolve this problem, such key techniques like optical character recognition(OCR) and information extraction have been developing intensively. However, the number of related publicly available datasets and the number of images involved are relatively challenged to rich in each dataset.MethodWe develop a real financial scanned Chinese invoice dataset, for which it can be used for collection, annotation, and releasing further. This data set consists of 40 716 images of six types of invoices in the context of aircraft itinerary tickets, taxi invoices, general quota invoices, passenger invoices, train tickets, and toll invoices. It can be divided into training/validation/testing sets further in related to 19 999/10 358/10 359 images. The labeling process of this dataset is concerned of such key steps like pseudo-label generation, manual recheck and cleaning, and manual desensitization, which can offer two sort of labels-related for the OCR task and information extraction deliberately. Such of challenges are still to be resolved in the context of print misalignment, blurring, and overlap. We facilitate a baseline scheme to realize end-to-end inference result. The overall solution can be divided into four steps as mentioned below: 1) a OCR module to predict all text instances’ content and location. 2) A text block ordering module to re-arrange all text instances into a more feasible order and serialize the 2D information into 1D. 3) The LayoutLM v2 model is melted into three modalities information (text, visual, and layout) and generate the prediction of sequence labels, which can utilize knowledge generated from the pre-trained language model. 4) The post-processing module transfer the model’s output to the final structural information. The overall solution can simplify the complexity of the overall ticket system via the integration of multiple invoices.ResultThe baseline experimental results are verified using OCR engine reasoning, OCR model prediction, and OCR ground-truth value. The F1 value of 0.768 7/0.857 0/0.985 7 can be reached as well. Furthermore, the effectiveness of the overall solution and LayoutLM V2 model can be optimized, and the challenging issue of OCR can be reflected in this scenario. Tesla-V100 GPU-based inference speed of the model can be reached to 1.88 frame/s. The accuracy of 90% can be reached using the raw image only as input. We demonstrate that the optimal solutions can be roughly segmented into two categories: one category is focused on melting the structured task into the text detection straightforward (i.e., multi-category detection), and the requirement of recognition model is to identify the text only with the corresponding category of concern. The other one is to implement the general information strategy, and an independent information extraction model can be used to extract key information. These solutions can integrate the potentials of the OCR and information extraction technologies farther.ConclusionThe scanned invoice dataset SCID (scanned Chinese invoice dataset) proposed demonstrates the application scenarios of the OCR technology can provide data support for the research and development of visually-rich document information extraction-related technology and technical implementation. The dataset can be linked and downloaded from https://davar-lab.github.io/dataset/scid.html.关键词:dataset;financial invoices;visually-rich documents;information extraction;optical character recognition (OCR);multi-modal information296|1025|1更新时间:2024-05-07 -

摘要:ObjectiveElectronic entry of paper documents is normally based on optical character recognition (OCR) technology. A commonly-used OCR system consists of four sequential steps: image acquisition, image preprocessing, character recognition, and typesetting output. The acquired digital image will have a certain degree of geometric distortion because paper document may not be parallel to the plane where the image acquisition device is located. The lens of the image acquisition device may have its own problem of distortion, or its paper document may challenge for deformation. Image acquisition problems of interferences and distortions will be more severe when handheld image capture devices are used (e.g., mobile phone cameras). Computer vision-oriented highly robust correction algorithms are focused on removing geometric distortions derived from imaging process of paper documents. Currrent researches are concerned about neural networks-based geometric correction of document images. Compared to traditional geometric correction algorithms, neural network-based document image correction algorithms have its potential ability in terms of both hardware requirements and algorithm implementation. However, it is still challenged for optimizing processing performance, especially for the contexts of offline and light weight.To improve the visual effect and OCR recognition accuracy of the original image, geometric correction of document graphics can be used to handle distortion, aberration, skew, and other related image-capturing geometric perturbations. Conventional image processing methods are required for such auxiliary hardware like laser scanners or multiple views-captured documents, and the algorithms can not be robusted. The emerging deep learning methods can be used to optimize traditional algorithms via modeling, but these models still have certain limitations. So, we develop a lightweight geometric correction network (AsymcNet), for which an integrated document region localisation and correction method can be oriented to implement geometric correction of document images end-to-end.MethodAsymcNet is designed and dealt with possible geometric interference in image acquisition. It consists of document regions-located segmentation network and a grid regression-rectifying regression network, as well as two sub-networks in a cascade form. Segmentation network-based AsymcNet can achieve good correction results for document images in various fields of view. In the regression part of the network, the resolution of the output regression grid is down to shrink the memory consumption and duration of training and inference. The methodologies are illustrated as follows: 1)Segmentation of the network: a simplified Unet-basd skip connection is set up between the encoder and decoder, in which lower layers-derived features can flow into higher layers directly and melt them into small resolution inputs and outputs. Considering the simplicity of the segmentation task, the segmentation network uses a small resolution (128 × 128 pixels) document image as input and outputs a small resolution segmentation result for the sake of lightweight and possible subsequent localization and mobile porting. 2) Regression network: compared to the segmentation task, the regression task of correcting the grid output is more complex. To capture more details from the image to be corrected for the final corrected grid regression, the regression network can be used to adapt a large resolution (512 × 512 pixels) document image as input with the segmentation result of the segmentation network output as a dot product, and outputs a small resolution (128 × 128 pixels) corrected grid.ResultAsymcNet-relevant comparative analysis is carried out in relevance to 4 popular methods. The multi-scale structural similarity (MS-SSIM) of raw images can be improved from 0.318 to 0.467; The local distortion (LD) is improved from 33.608 to 11.615; and the character error rate (CER) is optimized from 0.570 to 0.273. Compared to displacement flow estimation with fully convolutional network (DFE-FC), AsymcNet’s MS-SSIM is improved by 0.036, LD is lower by 2.193, CER is shrinked by 0.033, and AsymcNet’s average processing time for a single image is required for 8.85% of DFE-FC’s only. The experimental results demonstrate that the proposed AsymcNet has certain advantages in comparison with other related correction algorithms. In particular, when the relative area occupied by document regions in the image to be processed is small, the advantage of AsymcNet is more significant due to the integration of sub-networks for document region segmentation in the structure of AsymcNet.ConclusionOur AsymcNet proposed has been validated for its effectiveness and generalization. Compared to existing methods, AsymcNet has its priorities in terms of correction accuracy, computational efficiency, and generalization. Furthermore, the design of AsymcNet is focused on “small resolution grid” as the regression target of the network, which can alleviate the convergence difficulty of the network and the memory consumption during training and inference. The generalizability of the network can be improved further.关键词:image preprocessing;geometric correction;full convolutional network(FCN);grid sampling;end-to-end106|417|1更新时间:2024-05-07

摘要:ObjectiveElectronic entry of paper documents is normally based on optical character recognition (OCR) technology. A commonly-used OCR system consists of four sequential steps: image acquisition, image preprocessing, character recognition, and typesetting output. The acquired digital image will have a certain degree of geometric distortion because paper document may not be parallel to the plane where the image acquisition device is located. The lens of the image acquisition device may have its own problem of distortion, or its paper document may challenge for deformation. Image acquisition problems of interferences and distortions will be more severe when handheld image capture devices are used (e.g., mobile phone cameras). Computer vision-oriented highly robust correction algorithms are focused on removing geometric distortions derived from imaging process of paper documents. Currrent researches are concerned about neural networks-based geometric correction of document images. Compared to traditional geometric correction algorithms, neural network-based document image correction algorithms have its potential ability in terms of both hardware requirements and algorithm implementation. However, it is still challenged for optimizing processing performance, especially for the contexts of offline and light weight.To improve the visual effect and OCR recognition accuracy of the original image, geometric correction of document graphics can be used to handle distortion, aberration, skew, and other related image-capturing geometric perturbations. Conventional image processing methods are required for such auxiliary hardware like laser scanners or multiple views-captured documents, and the algorithms can not be robusted. The emerging deep learning methods can be used to optimize traditional algorithms via modeling, but these models still have certain limitations. So, we develop a lightweight geometric correction network (AsymcNet), for which an integrated document region localisation and correction method can be oriented to implement geometric correction of document images end-to-end.MethodAsymcNet is designed and dealt with possible geometric interference in image acquisition. It consists of document regions-located segmentation network and a grid regression-rectifying regression network, as well as two sub-networks in a cascade form. Segmentation network-based AsymcNet can achieve good correction results for document images in various fields of view. In the regression part of the network, the resolution of the output regression grid is down to shrink the memory consumption and duration of training and inference. The methodologies are illustrated as follows: 1)Segmentation of the network: a simplified Unet-basd skip connection is set up between the encoder and decoder, in which lower layers-derived features can flow into higher layers directly and melt them into small resolution inputs and outputs. Considering the simplicity of the segmentation task, the segmentation network uses a small resolution (128 × 128 pixels) document image as input and outputs a small resolution segmentation result for the sake of lightweight and possible subsequent localization and mobile porting. 2) Regression network: compared to the segmentation task, the regression task of correcting the grid output is more complex. To capture more details from the image to be corrected for the final corrected grid regression, the regression network can be used to adapt a large resolution (512 × 512 pixels) document image as input with the segmentation result of the segmentation network output as a dot product, and outputs a small resolution (128 × 128 pixels) corrected grid.ResultAsymcNet-relevant comparative analysis is carried out in relevance to 4 popular methods. The multi-scale structural similarity (MS-SSIM) of raw images can be improved from 0.318 to 0.467; The local distortion (LD) is improved from 33.608 to 11.615; and the character error rate (CER) is optimized from 0.570 to 0.273. Compared to displacement flow estimation with fully convolutional network (DFE-FC), AsymcNet’s MS-SSIM is improved by 0.036, LD is lower by 2.193, CER is shrinked by 0.033, and AsymcNet’s average processing time for a single image is required for 8.85% of DFE-FC’s only. The experimental results demonstrate that the proposed AsymcNet has certain advantages in comparison with other related correction algorithms. In particular, when the relative area occupied by document regions in the image to be processed is small, the advantage of AsymcNet is more significant due to the integration of sub-networks for document region segmentation in the structure of AsymcNet.ConclusionOur AsymcNet proposed has been validated for its effectiveness and generalization. Compared to existing methods, AsymcNet has its priorities in terms of correction accuracy, computational efficiency, and generalization. Furthermore, the design of AsymcNet is focused on “small resolution grid” as the regression target of the network, which can alleviate the convergence difficulty of the network and the memory consumption during training and inference. The generalizability of the network can be improved further.关键词:image preprocessing;geometric correction;full convolutional network(FCN);grid sampling;end-to-end106|417|1更新时间:2024-05-07 -

摘要:ObjectiveThe Dunhuang manuscripts are evident for cultural heritage researches of China. Most of preserved manuscripts are restricted of its age-derived fragments and remnants and challenged for their collation and contexts. However, artificial reconstruction is time consuming and difficult to be developed. The emerging computer graphics-derived computer-aided virtual recovery technology has been facilitating in the context of high speed, easy to use and accuracy.MethodWe develop a model-hierarchical digital image reconstruction method. First, a dataset of ancient Dunhuang manuscript fragments is constructed. Second, expertise-relevant digital images of the fragments are pre-processed to assist in the rationalization of fragment features and establishment of a plane for the reconstructing process. Moreover, a three layers model is composed of physical, structural and semantic features via fusing multiple collocation cues. For the physical layer, grey-scale feature similarity measures are based on Jaccard correlation coefficients. For the structural layer, geometric contour matching is based on Freeman coding. For the semantic layer, character column spacing consistency features are based on grey-scale fluctuations. The whole reconstruction process is combined with two matching aspects of local and global contexts. The key to the local matching is to determine whether the two pieces match or not, while the vector similarity calculations are performed on the feature descriptors. The local matching results are evaluated and scored by reasonable thresholds between low and the high level. To realize the whole automation process, global matching strategy is implemented in terms of the Hannotta model, and the two aspect of fully automated reconstruction is performed.ResultTo verify the effectiveness of the proposed method, experiments are carried out on a 256-fragments dataset, which consists of 31 splinterable fragments (which can be reconstructed in 11 groups) and 225 orphaned fragments. The results analysis illustrates that 8 groups of fragments are fully matched, 2 groups are partially matched, and 218 orphaned fragments are identified as well. The accuracy of completed matching is 95.76% while incomplete matching is 95.70%. Both of their accuracies can be optimized and reached to 95%. To be more specific, each of partial accuracy are reached to 20.62%, 63.44% and 23.43%, and the improvement in complete accuracy of each are 39.85%, 68.09% and 23.33%.ConclusionThe layered model combined with high-speed computing performance of the computer can incorporate multiple features and complete the reconstruction of ancient manuscript fragments effectively. The potential virtual reconstruction is beneficial for secondary damage to the fragile fragments, as well as some irreversible operations. Furthermore, the reconstructed results can provide an important basis for subsequent physical splicing, which can greatly enhance the efficiency of the artificial reconstruction.关键词:ancient manuscript fragments;Dunhuang manuscripts;automatic reconstruction;curve feature;hierarchical model222|297|2更新时间:2024-05-07

摘要:ObjectiveThe Dunhuang manuscripts are evident for cultural heritage researches of China. Most of preserved manuscripts are restricted of its age-derived fragments and remnants and challenged for their collation and contexts. However, artificial reconstruction is time consuming and difficult to be developed. The emerging computer graphics-derived computer-aided virtual recovery technology has been facilitating in the context of high speed, easy to use and accuracy.MethodWe develop a model-hierarchical digital image reconstruction method. First, a dataset of ancient Dunhuang manuscript fragments is constructed. Second, expertise-relevant digital images of the fragments are pre-processed to assist in the rationalization of fragment features and establishment of a plane for the reconstructing process. Moreover, a three layers model is composed of physical, structural and semantic features via fusing multiple collocation cues. For the physical layer, grey-scale feature similarity measures are based on Jaccard correlation coefficients. For the structural layer, geometric contour matching is based on Freeman coding. For the semantic layer, character column spacing consistency features are based on grey-scale fluctuations. The whole reconstruction process is combined with two matching aspects of local and global contexts. The key to the local matching is to determine whether the two pieces match or not, while the vector similarity calculations are performed on the feature descriptors. The local matching results are evaluated and scored by reasonable thresholds between low and the high level. To realize the whole automation process, global matching strategy is implemented in terms of the Hannotta model, and the two aspect of fully automated reconstruction is performed.ResultTo verify the effectiveness of the proposed method, experiments are carried out on a 256-fragments dataset, which consists of 31 splinterable fragments (which can be reconstructed in 11 groups) and 225 orphaned fragments. The results analysis illustrates that 8 groups of fragments are fully matched, 2 groups are partially matched, and 218 orphaned fragments are identified as well. The accuracy of completed matching is 95.76% while incomplete matching is 95.70%. Both of their accuracies can be optimized and reached to 95%. To be more specific, each of partial accuracy are reached to 20.62%, 63.44% and 23.43%, and the improvement in complete accuracy of each are 39.85%, 68.09% and 23.33%.ConclusionThe layered model combined with high-speed computing performance of the computer can incorporate multiple features and complete the reconstruction of ancient manuscript fragments effectively. The potential virtual reconstruction is beneficial for secondary damage to the fragile fragments, as well as some irreversible operations. Furthermore, the reconstructed results can provide an important basis for subsequent physical splicing, which can greatly enhance the efficiency of the artificial reconstruction.关键词:ancient manuscript fragments;Dunhuang manuscripts;automatic reconstruction;curve feature;hierarchical model222|297|2更新时间:2024-05-07 -

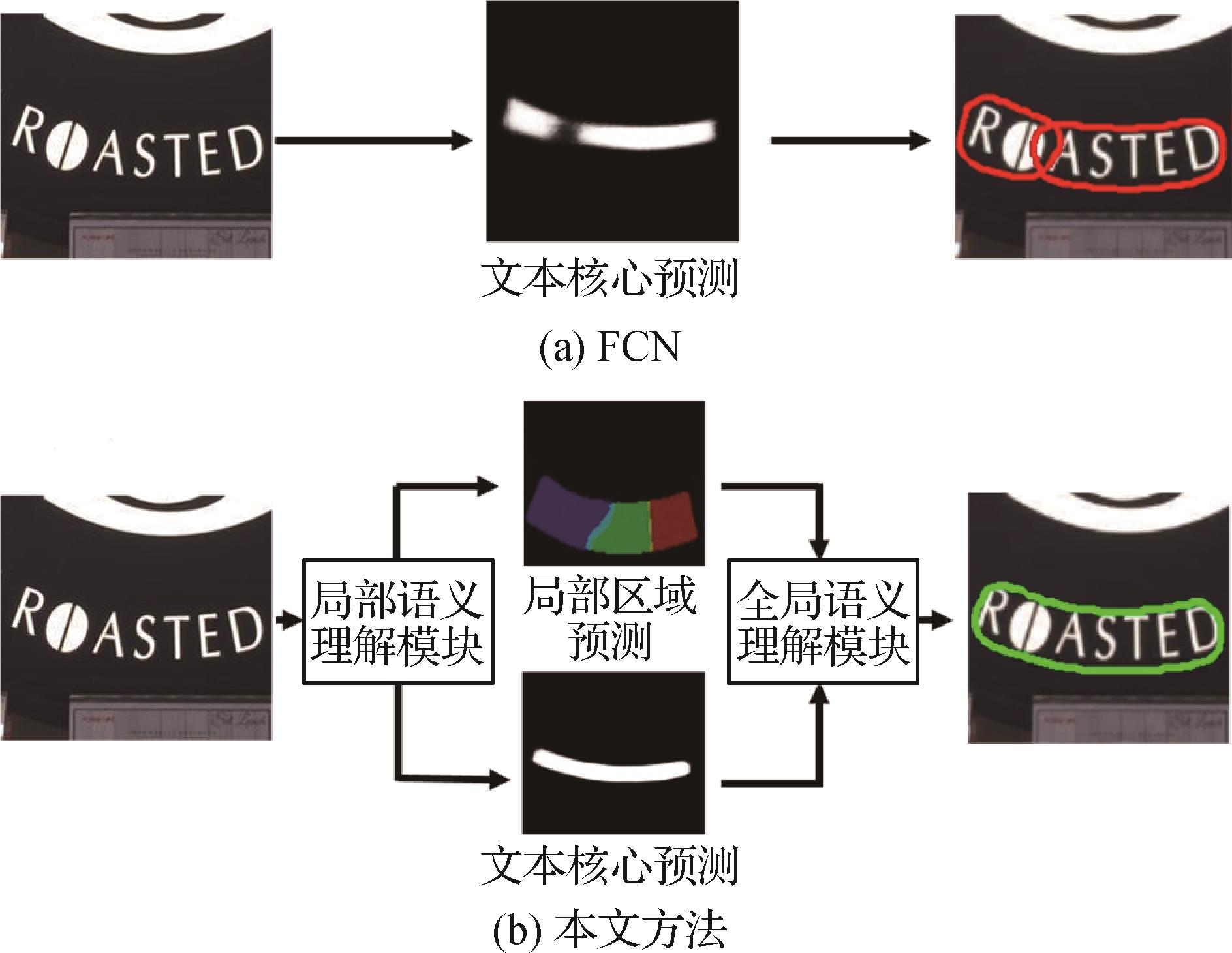

摘要:ObjectiveScene-related text detection is essential for computer vision, which aims to localize text instances for targeted image. It is beneficial for such domain of text recognition applications like scene understanding, translation and text visual question answering. The emerging deep learning based convolution neural network (CNN) has been widely developing in relevance to text detection nowadays. Current researches are focused on texts location in terms of the regression of the quadrangular bounding box. However, since regression based methods unfit texts with arbitrary shapes (e.g., curved texts), many approaches focus on segmentation based methods. Fully convolutional networks (FCN) are commonly used to obtain high-resolution feature maps, and the pixel-level mask is predicted to locate the text instances as well. Due to the extreme aspect ratios and the various sizes of text instances, existing models are challenged for one feature map-related integration of local-level and global-level semantics. More feature maps are introduced from multiple levels of the network, and hierarchical semantics can be generated from the corresponding feature map. But, these modules are required to yield the network to optimize the hierarchical features simultaneously, which may distract the network to a certain extent. Hence, existing networks are required to capture accurate hierarchical semantics further.MethodTo resolve this problem, the segmentation based text detection method is developed and a hierarchical semantic fusion network is demonstrated as well. We decouple the local and global feature extraction process and learn corresponding semantics. Specially, two mutual-benefited components are introduced for enhancing effective local and global feature, sub-region based local semantic understanding module (SLM) and instance based global semantic understanding module (IGM). First, SLM is used to segment the text instance into a kernel and multiple sub-regions in terms of their text-inner position. And, SLM can be used to learn their segmentation, which is an auxiliary task for the model. As a small part of the text, segmenting sub-region requires more local-level information and less long-range context, for which the model can be yielded to learning more accurate local features. Furthermore, ground truth-supervised position information can harness the network to separate the adjacent text instances. Second, IGM is designed for global-contextual feature extraction through capturing text instances-amongst long-range dependency. Thanks to SLM-derived segmentation maps, IGM can be used to filter the noisy background easily, and the instance-level features of each text instance can be obtained as well. Those features are then fed into a Transformer to fuse the semantics from different instances, in which global receptive field-related text features can be generated. And, the similarity is calculated relevant to the original pixel-level feature map. Finally, the global-level feature is aggregated via similarity map-related text features. The integrated SLM and IGM are beneficial for its learning to segment the text from pixel to local region and to text instances further. During this procedure, the hierarchical semantics are collected in the corresponding module, which can shrink the distraction for the other related level manually. In addition, vague semantics-involved ambiguous boundary in segmentation results are be sorted out, which may distort the semantic extraction. To alleviate this problem, we illustrate location aware loss (LAL) to increase the aggression of the misclassification around the border region. The LAL is calculated in terms of a weighted loss, and a higher weight is assigned for the pixels closer to the boundary. This loss function can be used to get a confident and accurate prediction of the boundary-relevant model, which has more accurate and discriminative feature.ResultComparative analysis is carried out on the basis of 12 popular methods. Three sort of challenging datasets are used for a comprehensive evaluation as well, called Total-Text, MSRA-TD500, and ICDAR2015 for each. The quantitative evaluation metrics consists of F-measure, recall, and precision. We achieve over 1% improvement on these two datasets with the F-measure of 87.0% and 88.2%. Especially, the recall and precision on MSRA-TD500 can be reached to 92.1% and 84.5%. For the ICDAR2015 dataset, the precision is improved to 92.3%. And, the F-measure on this dataset is optimized and reached to 87.0%. Additionally, a series of comparative experiments on the Total-Text dataset are conducted to evaluate the effectiveness of each module proposed. Such analyses show that the proposed SLM, IGM, and LAL can be used to improve each F-measure of 1.0%, 0.6%, and 0.5%. The qualitative visualization demonstrates that the baseline model can be optimized to a certain extent.ConclusionHierarchical semantic understanding network is developed and a novel loss function is optimized for hierarchical semantics enhancement as well. Decoupling the local and global feature extraction process can be as an essential tool to get more accurate and reliable hierarchical semantics progressively.关键词:scene text;text detection;fully convolutional network (FCN);convolutional neural network (CNN);feature fusion;attention mechanism129|348|1更新时间:2024-05-07

摘要:ObjectiveScene-related text detection is essential for computer vision, which aims to localize text instances for targeted image. It is beneficial for such domain of text recognition applications like scene understanding, translation and text visual question answering. The emerging deep learning based convolution neural network (CNN) has been widely developing in relevance to text detection nowadays. Current researches are focused on texts location in terms of the regression of the quadrangular bounding box. However, since regression based methods unfit texts with arbitrary shapes (e.g., curved texts), many approaches focus on segmentation based methods. Fully convolutional networks (FCN) are commonly used to obtain high-resolution feature maps, and the pixel-level mask is predicted to locate the text instances as well. Due to the extreme aspect ratios and the various sizes of text instances, existing models are challenged for one feature map-related integration of local-level and global-level semantics. More feature maps are introduced from multiple levels of the network, and hierarchical semantics can be generated from the corresponding feature map. But, these modules are required to yield the network to optimize the hierarchical features simultaneously, which may distract the network to a certain extent. Hence, existing networks are required to capture accurate hierarchical semantics further.MethodTo resolve this problem, the segmentation based text detection method is developed and a hierarchical semantic fusion network is demonstrated as well. We decouple the local and global feature extraction process and learn corresponding semantics. Specially, two mutual-benefited components are introduced for enhancing effective local and global feature, sub-region based local semantic understanding module (SLM) and instance based global semantic understanding module (IGM). First, SLM is used to segment the text instance into a kernel and multiple sub-regions in terms of their text-inner position. And, SLM can be used to learn their segmentation, which is an auxiliary task for the model. As a small part of the text, segmenting sub-region requires more local-level information and less long-range context, for which the model can be yielded to learning more accurate local features. Furthermore, ground truth-supervised position information can harness the network to separate the adjacent text instances. Second, IGM is designed for global-contextual feature extraction through capturing text instances-amongst long-range dependency. Thanks to SLM-derived segmentation maps, IGM can be used to filter the noisy background easily, and the instance-level features of each text instance can be obtained as well. Those features are then fed into a Transformer to fuse the semantics from different instances, in which global receptive field-related text features can be generated. And, the similarity is calculated relevant to the original pixel-level feature map. Finally, the global-level feature is aggregated via similarity map-related text features. The integrated SLM and IGM are beneficial for its learning to segment the text from pixel to local region and to text instances further. During this procedure, the hierarchical semantics are collected in the corresponding module, which can shrink the distraction for the other related level manually. In addition, vague semantics-involved ambiguous boundary in segmentation results are be sorted out, which may distort the semantic extraction. To alleviate this problem, we illustrate location aware loss (LAL) to increase the aggression of the misclassification around the border region. The LAL is calculated in terms of a weighted loss, and a higher weight is assigned for the pixels closer to the boundary. This loss function can be used to get a confident and accurate prediction of the boundary-relevant model, which has more accurate and discriminative feature.ResultComparative analysis is carried out on the basis of 12 popular methods. Three sort of challenging datasets are used for a comprehensive evaluation as well, called Total-Text, MSRA-TD500, and ICDAR2015 for each. The quantitative evaluation metrics consists of F-measure, recall, and precision. We achieve over 1% improvement on these two datasets with the F-measure of 87.0% and 88.2%. Especially, the recall and precision on MSRA-TD500 can be reached to 92.1% and 84.5%. For the ICDAR2015 dataset, the precision is improved to 92.3%. And, the F-measure on this dataset is optimized and reached to 87.0%. Additionally, a series of comparative experiments on the Total-Text dataset are conducted to evaluate the effectiveness of each module proposed. Such analyses show that the proposed SLM, IGM, and LAL can be used to improve each F-measure of 1.0%, 0.6%, and 0.5%. The qualitative visualization demonstrates that the baseline model can be optimized to a certain extent.ConclusionHierarchical semantic understanding network is developed and a novel loss function is optimized for hierarchical semantics enhancement as well. Decoupling the local and global feature extraction process can be as an essential tool to get more accurate and reliable hierarchical semantics progressively.关键词:scene text;text detection;fully convolutional network (FCN);convolutional neural network (CNN);feature fusion;attention mechanism129|348|1更新时间:2024-05-07 -

摘要:ObjectiveThe emerging digitization and intelligence techniques have facilitated the path to accept and recognize text content originated from paper documents, photos, or contexts nowadasys. Recent online mathematical expression recognition is widely used for such domain of portable devices like mobile phones and tablet PCs. The devices are required for converting the online handwritten trajectory into mathematical expression text and indicate symbols-between logical relationship in relevance to such of power, subscript and matrix. Online math calculator can be used to receive handwritten mathematical expressions in terms of online mathematical expression recognition, which makes input easier beyond LaTeX mathematical expressions with symbols of complex mathematical relation. At the same time, instant electric recording in complex scenarios becomes feasible for such scenarios like classes and academic meetings. Current encoder-decoder based mathematical expression recognition methods have been developing intensively. The quality and quantity of training data have a great impact on the performance of deep neural network. The lack of data has threatened the optimization of generalization and robustness of the model in consistency. The input form of the mathematical expression in the online scene is recognized as the track point sequence, which needs to be collected on the annotation-before real time handwriting device further. Therefore, cost of online data collection is higher than offline data. The model still has poor performance due to insufficient data.MethodTo resolve the problems mentioned above, we develop an encoder-decoder based generation model for online handwritten mathematical expressions. The model can generate the corresponding online trajectory point sequence in terms of the given mathematical expression text. We also can synthesize different-writing-style mathematical expressions by different style symbols input. A large amount of near real handwriting data is obtained at a very low cost, which expands the scale of training data flexibly and avoids lacked data fitting or over fitting of the model. For generation tasks, the ability of representation and discrimination of the encoder often affect the performance directly. The encoder aims to model the input text effectively. In detail, sufficient difference is needed between the representations of different inputs, and certain similarity is required between the ones of similar inputs as well. Intuitively, the representation of tree structure can well reflect expressions-between similarities and differences to some extent. Therefore, we design a tree representation-based text feature extraction module for the generation model in the encoder, which makes full use of the two-dimensional structure information. In addition, there is no corresponding relationship between each character of input text and the output track points. Therefore, to align the input text sequence with the output track points, we introduce a location-based attention model into the decoder. Simutaneously, to generate multiple handwriting style samples, we also integrate different handwriting style features into the decoder. The decoder can be used to synthesize the skeleton of the track through the input text, and writing style feature-related can be rendered into different styles.ResultThe method proposed is evaluated from two aspects: visual effect of generated results and the improvement of recognition tasks. First, we illustrate generation results of different difficulty, including simple sequence, complex fraction, multi-line expression and long text. Second, we select and display the generated data with similar and different writing styles. Next, we generate a large number of mathematical expression texts and synthesize online data randomly based on the generation model. Finally, we use these synthetic data as data augmentation to train the Transformer-TAP (track, attend, and parse), TAP and Densetap-TD (DenseNet TAP with tree decoder) as well. The performance of these three models is significantly improved beneficial from synthetic data. The additional data enriches the training set and the model is mutual-benefited for more symbol combinations with different writing styles. The results show that each of the absolute recognition rates is increased by 0.98%, 1.55% and 1.06%, as well as each of the relative recognition rates is increased by 9.9%, 12.37% and 9.81%.ConclusionAn online mathematical expression generation method is introduced based on encoder-decoder model. The method can be used to realize the generation of on-line trajectory point sequence from given expression text. It can expand the original data set more flexibly to a certain extent. Experimental result demonstrates that the synthetic data can improve the accuracy of online handwriting mathematical expression recognition effectively. It improves the generalization and robustness of the recognition model further.关键词:deep learning;handwritten expression recognition;end-to-end network;encoder-decoder; data augmentation131|308|2更新时间:2024-05-07

摘要:ObjectiveThe emerging digitization and intelligence techniques have facilitated the path to accept and recognize text content originated from paper documents, photos, or contexts nowadasys. Recent online mathematical expression recognition is widely used for such domain of portable devices like mobile phones and tablet PCs. The devices are required for converting the online handwritten trajectory into mathematical expression text and indicate symbols-between logical relationship in relevance to such of power, subscript and matrix. Online math calculator can be used to receive handwritten mathematical expressions in terms of online mathematical expression recognition, which makes input easier beyond LaTeX mathematical expressions with symbols of complex mathematical relation. At the same time, instant electric recording in complex scenarios becomes feasible for such scenarios like classes and academic meetings. Current encoder-decoder based mathematical expression recognition methods have been developing intensively. The quality and quantity of training data have a great impact on the performance of deep neural network. The lack of data has threatened the optimization of generalization and robustness of the model in consistency. The input form of the mathematical expression in the online scene is recognized as the track point sequence, which needs to be collected on the annotation-before real time handwriting device further. Therefore, cost of online data collection is higher than offline data. The model still has poor performance due to insufficient data.MethodTo resolve the problems mentioned above, we develop an encoder-decoder based generation model for online handwritten mathematical expressions. The model can generate the corresponding online trajectory point sequence in terms of the given mathematical expression text. We also can synthesize different-writing-style mathematical expressions by different style symbols input. A large amount of near real handwriting data is obtained at a very low cost, which expands the scale of training data flexibly and avoids lacked data fitting or over fitting of the model. For generation tasks, the ability of representation and discrimination of the encoder often affect the performance directly. The encoder aims to model the input text effectively. In detail, sufficient difference is needed between the representations of different inputs, and certain similarity is required between the ones of similar inputs as well. Intuitively, the representation of tree structure can well reflect expressions-between similarities and differences to some extent. Therefore, we design a tree representation-based text feature extraction module for the generation model in the encoder, which makes full use of the two-dimensional structure information. In addition, there is no corresponding relationship between each character of input text and the output track points. Therefore, to align the input text sequence with the output track points, we introduce a location-based attention model into the decoder. Simutaneously, to generate multiple handwriting style samples, we also integrate different handwriting style features into the decoder. The decoder can be used to synthesize the skeleton of the track through the input text, and writing style feature-related can be rendered into different styles.ResultThe method proposed is evaluated from two aspects: visual effect of generated results and the improvement of recognition tasks. First, we illustrate generation results of different difficulty, including simple sequence, complex fraction, multi-line expression and long text. Second, we select and display the generated data with similar and different writing styles. Next, we generate a large number of mathematical expression texts and synthesize online data randomly based on the generation model. Finally, we use these synthetic data as data augmentation to train the Transformer-TAP (track, attend, and parse), TAP and Densetap-TD (DenseNet TAP with tree decoder) as well. The performance of these three models is significantly improved beneficial from synthetic data. The additional data enriches the training set and the model is mutual-benefited for more symbol combinations with different writing styles. The results show that each of the absolute recognition rates is increased by 0.98%, 1.55% and 1.06%, as well as each of the relative recognition rates is increased by 9.9%, 12.37% and 9.81%.ConclusionAn online mathematical expression generation method is introduced based on encoder-decoder model. The method can be used to realize the generation of on-line trajectory point sequence from given expression text. It can expand the original data set more flexibly to a certain extent. Experimental result demonstrates that the synthetic data can improve the accuracy of online handwriting mathematical expression recognition effectively. It improves the generalization and robustness of the recognition model further.关键词:deep learning;handwritten expression recognition;end-to-end network;encoder-decoder; data augmentation131|308|2更新时间:2024-05-07 -