最新刊期

卷 28 , 期 6 , 2023

-

摘要:Virtual-real human-computer interaction (VR-HCI) is an interdisciplinary field that encompasses human and computer interactions to address human-related cognitive and emotional needs. This interdisciplinary knowledge integrates domains such as computer science, cognitive psychology, ergonomics, multimedia technology, and virtual reality. With the advancement of big data and artificial intelligence, VR-HCI benefits industries like education, healthcare, robotics, and entertainment, and is increasingly recognized as a key supporting technology for metaverse-related development. In recent years, machine learning-based human cognitive and emotional analysis has evolved, particularly in applications like robotics and wearable interaction devices. As a result, VR-HCI has focused on the challenging issue of creating “intelligent” and “anthropomorphic” interaction systems. This literature review examines the growth of VR-HCI from four aspects: perceptual computing, human-machine interaction and coordination, human-computer dialogue interaction, and data visualization. Perceptual computing aims to model human daily life behavior, cognitive processes, and emotional contexts for personalized and efficient human-computer interactions. This discussion covers three perceptual aspects related to pathways, objects, and scenes. Human-machine interaction scenarios involve virtual and real-world integration and perceptual pathways, which are divided into primary perception types: visual-based, sensor-based, and wireless non-contact. Object-based perception is subdivided into personal and group contexts, while scene-based perception is subdivided into physical behavior and cognitive contexts. Human-machine interaction primarily encompasses technical disciplines such as mechanical and electrical engineering, computer and control science, artificial intelligence, and other related arts or humanistic disciplines like psychology and design. Human-robot interaction can be categorized by functional mechanisms into 1) collaborative operation robots, 2) service and assistance robots, and 3) social, entertainment, and educational robots. Key modules in human-computer dialogue interaction systems include speech recognition, speaker recognition, dialogue system, and speech synthesis. The level of intelligence in these interaction systems can be further enhanced by considering users' inherent characteristics, such as speech pronunciation, preferences, emotions, and other attributes. For human-machine interaction, it mainly involves technical disciplines in relevant to mechanical and electrical engineering, computer and control science, and artificial intelligence, as well as other related arts or humanistic disciplines like psychology and design. Humans-robots interaction can be segmented into three categories in terms of its functional mechanism: 1) collaborative operation robots, 2) service and assistance robots, and 3) social, entertainment and educational robots. For human-computer dialogue interaction, the system consists of such key modules like speech recognition, speaker recognition, dialogue system, and speech synthesis. The microphone sensor can pick up the speech signal, which is then converted to text information through the speech recognition module. The dialogue system can process the text information, understand the user's intention, and generates a reply. Finally, the speech-synthesized module can convert the reply information into speech information, completing the interaction process. In recent years, the level of intelligence of the interaction system can be further improved by combining users' inherent characteristics such as speech pronunciation, preferences, emotions, and other characteristics, optimizing the various modules of the interaction system. For data transformation and visualization, it is benched for performing data cleaning tasks on tabular data, and various tools in R and Python can perform these tasks as well. Many software systems have developed graphical user interfaces to assist users in completing data transformation tasks, such as Microsoft Excel, Tableau Prep Builder, and OpenRefine. Current recommendation-based algorithms interactive systems are beneficial for users transform data easily. Researchers have also developed tools that can transform network structures. We analyze its four aspects of 1) interactive data transformation, 2) data transformation visualization, 3) data table visual comparison, and 4) code visualization in human-computer interaction systems. We identify several future research directions in VR-HCI, namely 1) designing generalized and personalized perceptual computing, 2) building human-machine cooperation with a deep understanding of user behavior, and 3) expanding user-adaptive dialogue systems. For perceptual computing, it still lacks joint perception of multiple devices and individual differences in human behavior perception. Furthermore, most perceptual research can use generalized models, neglecting individual differences, resulting in lower perceptual accuracy, making it difficult to apply in actual settings. Therefore, future perceptual computing research trends are required for multimodal, transferable, personalized, and scalable research. For human-machine interaction and coordination, a systematic approach is necessary for constructing a design for human-machine interaction and collaboration. This approach requires in-depth research on user understanding, construction of interaction datasets, and long-term user experience. For human-computer dialogue interaction, current research mostly focuses on open-domain systems, which use pre-trained models to improve modeling accuracy for emotions, intentions, and knowledge. Future research should be aimed at developing more intelligent human-machine conversations that cater to individual user needs. For data transformation and visualization in HCI, the future directions can be composed of two parts: 1) the intelligence level of data transformation can be improved through interaction for individual data workers on several aspects, e.g., appropriate algorithms for multiple types of data, recommendations for consistent user behavior and real-time analysis to support massive data. 2) The focus is on the integration of data transformation and visualization among multiple users, including designing collaborative mechanisms, resolving conflicts in data operation, visualizing complex data transformation codes, evaluating the effectiveness of various visualization methods, and recording and displaying multiple human behaviors. In summary, the development of VR-HCI can provide new opportunities and challenges for human-computer interaction towards Metaverse, which has the potential to seamlessly integrate virtual and real worlds.关键词:human-computer interaction(HCI);perceptual computing;human-machine cooperation;dialogue system;data visualization414|386|2更新时间:2024-05-07

摘要:Virtual-real human-computer interaction (VR-HCI) is an interdisciplinary field that encompasses human and computer interactions to address human-related cognitive and emotional needs. This interdisciplinary knowledge integrates domains such as computer science, cognitive psychology, ergonomics, multimedia technology, and virtual reality. With the advancement of big data and artificial intelligence, VR-HCI benefits industries like education, healthcare, robotics, and entertainment, and is increasingly recognized as a key supporting technology for metaverse-related development. In recent years, machine learning-based human cognitive and emotional analysis has evolved, particularly in applications like robotics and wearable interaction devices. As a result, VR-HCI has focused on the challenging issue of creating “intelligent” and “anthropomorphic” interaction systems. This literature review examines the growth of VR-HCI from four aspects: perceptual computing, human-machine interaction and coordination, human-computer dialogue interaction, and data visualization. Perceptual computing aims to model human daily life behavior, cognitive processes, and emotional contexts for personalized and efficient human-computer interactions. This discussion covers three perceptual aspects related to pathways, objects, and scenes. Human-machine interaction scenarios involve virtual and real-world integration and perceptual pathways, which are divided into primary perception types: visual-based, sensor-based, and wireless non-contact. Object-based perception is subdivided into personal and group contexts, while scene-based perception is subdivided into physical behavior and cognitive contexts. Human-machine interaction primarily encompasses technical disciplines such as mechanical and electrical engineering, computer and control science, artificial intelligence, and other related arts or humanistic disciplines like psychology and design. Human-robot interaction can be categorized by functional mechanisms into 1) collaborative operation robots, 2) service and assistance robots, and 3) social, entertainment, and educational robots. Key modules in human-computer dialogue interaction systems include speech recognition, speaker recognition, dialogue system, and speech synthesis. The level of intelligence in these interaction systems can be further enhanced by considering users' inherent characteristics, such as speech pronunciation, preferences, emotions, and other attributes. For human-machine interaction, it mainly involves technical disciplines in relevant to mechanical and electrical engineering, computer and control science, and artificial intelligence, as well as other related arts or humanistic disciplines like psychology and design. Humans-robots interaction can be segmented into three categories in terms of its functional mechanism: 1) collaborative operation robots, 2) service and assistance robots, and 3) social, entertainment and educational robots. For human-computer dialogue interaction, the system consists of such key modules like speech recognition, speaker recognition, dialogue system, and speech synthesis. The microphone sensor can pick up the speech signal, which is then converted to text information through the speech recognition module. The dialogue system can process the text information, understand the user's intention, and generates a reply. Finally, the speech-synthesized module can convert the reply information into speech information, completing the interaction process. In recent years, the level of intelligence of the interaction system can be further improved by combining users' inherent characteristics such as speech pronunciation, preferences, emotions, and other characteristics, optimizing the various modules of the interaction system. For data transformation and visualization, it is benched for performing data cleaning tasks on tabular data, and various tools in R and Python can perform these tasks as well. Many software systems have developed graphical user interfaces to assist users in completing data transformation tasks, such as Microsoft Excel, Tableau Prep Builder, and OpenRefine. Current recommendation-based algorithms interactive systems are beneficial for users transform data easily. Researchers have also developed tools that can transform network structures. We analyze its four aspects of 1) interactive data transformation, 2) data transformation visualization, 3) data table visual comparison, and 4) code visualization in human-computer interaction systems. We identify several future research directions in VR-HCI, namely 1) designing generalized and personalized perceptual computing, 2) building human-machine cooperation with a deep understanding of user behavior, and 3) expanding user-adaptive dialogue systems. For perceptual computing, it still lacks joint perception of multiple devices and individual differences in human behavior perception. Furthermore, most perceptual research can use generalized models, neglecting individual differences, resulting in lower perceptual accuracy, making it difficult to apply in actual settings. Therefore, future perceptual computing research trends are required for multimodal, transferable, personalized, and scalable research. For human-machine interaction and coordination, a systematic approach is necessary for constructing a design for human-machine interaction and collaboration. This approach requires in-depth research on user understanding, construction of interaction datasets, and long-term user experience. For human-computer dialogue interaction, current research mostly focuses on open-domain systems, which use pre-trained models to improve modeling accuracy for emotions, intentions, and knowledge. Future research should be aimed at developing more intelligent human-machine conversations that cater to individual user needs. For data transformation and visualization in HCI, the future directions can be composed of two parts: 1) the intelligence level of data transformation can be improved through interaction for individual data workers on several aspects, e.g., appropriate algorithms for multiple types of data, recommendations for consistent user behavior and real-time analysis to support massive data. 2) The focus is on the integration of data transformation and visualization among multiple users, including designing collaborative mechanisms, resolving conflicts in data operation, visualizing complex data transformation codes, evaluating the effectiveness of various visualization methods, and recording and displaying multiple human behaviors. In summary, the development of VR-HCI can provide new opportunities and challenges for human-computer interaction towards Metaverse, which has the potential to seamlessly integrate virtual and real worlds.关键词:human-computer interaction(HCI);perceptual computing;human-machine cooperation;dialogue system;data visualization414|386|2更新时间:2024-05-07 -

摘要:A brain-computer interface (BCI) establishes a direct communication pathway between the living brain and an external device in terms of brain signals-acquiring and analysis and commands-converted output. It can be used to replace, repair, augment, supplement or improve the normal output of the central nervous system. The BCIs can be divided into invasive or noninvasive BCIs based on the placement of the acquisition electrode. An invasive BCI is linked to its records or brain neurons-relevant stimulation through surgical brain-implanted electrodes. But, it is just used for animal experiments or severe paralysis patients although invasive BCIs can be used to record a high signal-to-noise ratio-related brain signals. Non-invasive BCIs have its potentials of their credibility and portability in comparison with invasive BCIs. The electroencephalography (EEG) is commonly used for brain signal for BCIs now. The EEG-based BCI systems consist of two directions: coding methods used to generate brain signals and the decoding methods used to decode brain signals. In recent years, the growth of coding methods has extended the application scenarios and applicability of the system. Furthermore, to get high-performance BCIs, brain signal decoding methods has greatly developed and low signal-to-noise ratio of EEG signals is optimized as well. The BCI systems can be segmented into such categories of active, reactive or passive. For an active BCI, a user can consciously control mental activities of external stimuli excluded. The motor imagery based (MI-based) BCI system is an active BCI system in terms of EEG signals-within specific frequency changes, which can be balanced using non-motor output mental rehearsal of a motor action. For reactive BCI, brain activity is triggered by an external stimulus, and the user reacts to the stimulus from the external world. Most researches are focused on static-state visual evoked potential based (SSVEP-based) BCI and an event-related potential based (ERP-based) BCI. SSVEPs are brain responses that are elicited over the visual region when a user focuses on a flicker of a visual stimulus. An ERP-based BCI is usually based on a P300 component of ERP, which can be produced after the onset of the stimulus. For a passive BCI, it can provide the hidden state of the brain in the human-computer interaction process rather than temporal and humanized interaction, especially for affective BCIs and mental load BCIs. Our literature analysis is focused on BCI systems from four contexts of its MI-based, SSVEP-based, ERP-based, and affective BCI. Current situation and the application of BCI systems can be analyzed for coding and decoding technology as mentioned below: 1) MI-based BCI studies are mostly focused on the classification of EEG patterns during MI tasks from different limbs, such as the left hand, right hand, and both feet. However, small instruction sets are still challenged for the actual application requirements. Thus, fine MI tasks from the unilateral limb are developed to deal with that. We review fine MI paradigms of multiple unilateral limb contexts in relevance to its joints, directions, and tasks. Decoding methods consist of two procedures in common: feature extraction and feature classification. Feature extraction extracts task-related and recognizable features from brain signals, and feature classification uses features-extracted to clarify the intentions of users. For MI decoding technology, two-stage traditional decoding methods are first briefly introduced, e.g., common spatial pattern (CSP) and linear discriminant analysis (LDA). After that, we summarize the latest deep learning methods, which can improve the decoding accuracy, and some migration learning methods are summarized, which can alleviate its calibration data. Finally, recent applications of the MI-BCI system are introduced in related to control of the mechanical arm and stroke rehabilitation. 2) SSVEP-based BCI systems are concerned about in terms of their high information transmission rate, strong stability, and applicability. Recent researches to highlight of SSVEP-based BCI studies are reviewed literately as well. For example, some new coding strategies are implemented for instruction set or users’ preference and such emerging decoding methods are used to optimize its performance of SSVEP detection. Additionally, we summarize the main application directions of SSVEP-based BCI systems, including communication, control, and state monitoring, and the latest application progress are reviewed as well. 3) For ERP-based BCIs, the signal-to-noise ratio of the ERP response is required to be relatively higher, and a single target-related rapid serial visual presentation paradigm is challenged for the detection of multiple targets. To sum up, BCI-hybrid paradigms are developed in terms of the integration of the ERP and other related paradigms. Function-based ERP decoding methods are divided into four categories of signal de-noising, feature extraction, transfer learning, and zero calibration algorithms. Current status of decoding methods and ERP-based BCI applications are summarized as well. 4) Affective BCI can be adopted to recognize and balance human emotion. The emotion and new decoding methods are evolved in, including multimodal fusion and transfer learning. Additionally, the application of affective BCI in healthcare is reviewed and analyzed as well. Finally, future research direction of non-invasive BCI are predicted further.关键词:brain-computer interface(BCI);non-invasive;encoding method;decoding method;system application370|1916|1更新时间:2024-05-07

摘要:A brain-computer interface (BCI) establishes a direct communication pathway between the living brain and an external device in terms of brain signals-acquiring and analysis and commands-converted output. It can be used to replace, repair, augment, supplement or improve the normal output of the central nervous system. The BCIs can be divided into invasive or noninvasive BCIs based on the placement of the acquisition electrode. An invasive BCI is linked to its records or brain neurons-relevant stimulation through surgical brain-implanted electrodes. But, it is just used for animal experiments or severe paralysis patients although invasive BCIs can be used to record a high signal-to-noise ratio-related brain signals. Non-invasive BCIs have its potentials of their credibility and portability in comparison with invasive BCIs. The electroencephalography (EEG) is commonly used for brain signal for BCIs now. The EEG-based BCI systems consist of two directions: coding methods used to generate brain signals and the decoding methods used to decode brain signals. In recent years, the growth of coding methods has extended the application scenarios and applicability of the system. Furthermore, to get high-performance BCIs, brain signal decoding methods has greatly developed and low signal-to-noise ratio of EEG signals is optimized as well. The BCI systems can be segmented into such categories of active, reactive or passive. For an active BCI, a user can consciously control mental activities of external stimuli excluded. The motor imagery based (MI-based) BCI system is an active BCI system in terms of EEG signals-within specific frequency changes, which can be balanced using non-motor output mental rehearsal of a motor action. For reactive BCI, brain activity is triggered by an external stimulus, and the user reacts to the stimulus from the external world. Most researches are focused on static-state visual evoked potential based (SSVEP-based) BCI and an event-related potential based (ERP-based) BCI. SSVEPs are brain responses that are elicited over the visual region when a user focuses on a flicker of a visual stimulus. An ERP-based BCI is usually based on a P300 component of ERP, which can be produced after the onset of the stimulus. For a passive BCI, it can provide the hidden state of the brain in the human-computer interaction process rather than temporal and humanized interaction, especially for affective BCIs and mental load BCIs. Our literature analysis is focused on BCI systems from four contexts of its MI-based, SSVEP-based, ERP-based, and affective BCI. Current situation and the application of BCI systems can be analyzed for coding and decoding technology as mentioned below: 1) MI-based BCI studies are mostly focused on the classification of EEG patterns during MI tasks from different limbs, such as the left hand, right hand, and both feet. However, small instruction sets are still challenged for the actual application requirements. Thus, fine MI tasks from the unilateral limb are developed to deal with that. We review fine MI paradigms of multiple unilateral limb contexts in relevance to its joints, directions, and tasks. Decoding methods consist of two procedures in common: feature extraction and feature classification. Feature extraction extracts task-related and recognizable features from brain signals, and feature classification uses features-extracted to clarify the intentions of users. For MI decoding technology, two-stage traditional decoding methods are first briefly introduced, e.g., common spatial pattern (CSP) and linear discriminant analysis (LDA). After that, we summarize the latest deep learning methods, which can improve the decoding accuracy, and some migration learning methods are summarized, which can alleviate its calibration data. Finally, recent applications of the MI-BCI system are introduced in related to control of the mechanical arm and stroke rehabilitation. 2) SSVEP-based BCI systems are concerned about in terms of their high information transmission rate, strong stability, and applicability. Recent researches to highlight of SSVEP-based BCI studies are reviewed literately as well. For example, some new coding strategies are implemented for instruction set or users’ preference and such emerging decoding methods are used to optimize its performance of SSVEP detection. Additionally, we summarize the main application directions of SSVEP-based BCI systems, including communication, control, and state monitoring, and the latest application progress are reviewed as well. 3) For ERP-based BCIs, the signal-to-noise ratio of the ERP response is required to be relatively higher, and a single target-related rapid serial visual presentation paradigm is challenged for the detection of multiple targets. To sum up, BCI-hybrid paradigms are developed in terms of the integration of the ERP and other related paradigms. Function-based ERP decoding methods are divided into four categories of signal de-noising, feature extraction, transfer learning, and zero calibration algorithms. Current status of decoding methods and ERP-based BCI applications are summarized as well. 4) Affective BCI can be adopted to recognize and balance human emotion. The emotion and new decoding methods are evolved in, including multimodal fusion and transfer learning. Additionally, the application of affective BCI in healthcare is reviewed and analyzed as well. Finally, future research direction of non-invasive BCI are predicted further.关键词:brain-computer interface(BCI);non-invasive;encoding method;decoding method;system application370|1916|1更新时间:2024-05-07 -

摘要:The preservation and exhibition of cultural relics have been concerned more nowadays, in which number of worldwide museums is even higher at about 55 097 and all the artefacts have been melted into. In China, total number of museums can be reached to 6 183, and it has involved 108 million state-owned movable cultural relics (sets) and 767 000 immovable cultural relics nationwide recently. Traditional museums are mainly focused on display cases, pictures, and other related audio-visual materials. Current digitization of cultural objects is beneficial to optimize traditional methods further. First, standard and consistent preservation can be introduced to alleviate natural-derived irreversible damage challenges to cultural objects. Next, it facilitates diverse ways of displaying cultural relics, which can enhance public awareness for appreciate heritage in a holistic, multi-angle, and three-dimensional manner. In recent years, the development of information technology has harnessed the emergence of many new conservation techniques and ways of displaying heritage, such as deep learning based 3D reconstruction and intelligent museums-oriented metaverse technologies of virtual reality. The digitization of museums has been launched globally in the context of digitization of collection resources since 1990s. But, the following digital museums are still challenged to deal with the problem of museum-across integrated resources. Such worldwide research projects for intelligent museums construction has been called for further. The goal of a smart museum is aimed to create a feasible intelligent ecosystem for museum-relevant domains. We review global-contextual technologies, in which four category of such key technologies in relevance to digital acquisition, heritage realism reconstruction, virtual-intelligent-integrated interaction, and smart platform construction. For digital acquisition of cultural relics, we illustrate and analyze the two most common types of methods in related to 3D scanning and close-up photogrammetry. For the realistic reconstruction technology of cultural relics, geometric processing and deep learning-based methods are focused on in terms of 3D reconstruction model analysis. For virtual-intelligent-integrated interaction, a variety of interaction methods are demonstrated in related to such aspects of multimodality, gesture, and handling. For the construction of smart platforms, we summarize some emerging technologies, and the final artistic results are described and achieved. The case study analysis is based on such famous multination museums of the Louvre Museum in France, the Hermitage Museum in Russia, the Palace Museum in Beijing and other related world-renowned museums. We sort out that the commonly-used construction process of smart museums for cultural relics through collection of digital information, three-dimensional reconstruction, and the integration of multiple virtual interaction. The consensus is focused on improving conservation measures and inherit culture. At the same time, potential metaverse technology can be used to build metaverse museum platforms further. Finally, modernizing museums analysis is focused on and its relevant tools and technologies has been focused on metaverse-related technologies. In the future, such emerging metaverse technologies can be used to predicted and integrated into the construction of smart museums as well like blockchain technology, computing and storage, artificial intelligence, and brain-computer Interface. It is focused on the integrated and intelligent construction of museums, and more ultimate and immersive experience can be melted into visitors experiences. To sum up, to promote the development and preservation of global civilization, metaverse technology-integrated intelligent museums are required for its integrated construction in relevance to human cognitive abilities and behavioral patterns.关键词:metaverse;wisdom museum;digital acquisition;3d reconstruction;virtual interaction306|434|1更新时间:2024-05-07

摘要:The preservation and exhibition of cultural relics have been concerned more nowadays, in which number of worldwide museums is even higher at about 55 097 and all the artefacts have been melted into. In China, total number of museums can be reached to 6 183, and it has involved 108 million state-owned movable cultural relics (sets) and 767 000 immovable cultural relics nationwide recently. Traditional museums are mainly focused on display cases, pictures, and other related audio-visual materials. Current digitization of cultural objects is beneficial to optimize traditional methods further. First, standard and consistent preservation can be introduced to alleviate natural-derived irreversible damage challenges to cultural objects. Next, it facilitates diverse ways of displaying cultural relics, which can enhance public awareness for appreciate heritage in a holistic, multi-angle, and three-dimensional manner. In recent years, the development of information technology has harnessed the emergence of many new conservation techniques and ways of displaying heritage, such as deep learning based 3D reconstruction and intelligent museums-oriented metaverse technologies of virtual reality. The digitization of museums has been launched globally in the context of digitization of collection resources since 1990s. But, the following digital museums are still challenged to deal with the problem of museum-across integrated resources. Such worldwide research projects for intelligent museums construction has been called for further. The goal of a smart museum is aimed to create a feasible intelligent ecosystem for museum-relevant domains. We review global-contextual technologies, in which four category of such key technologies in relevance to digital acquisition, heritage realism reconstruction, virtual-intelligent-integrated interaction, and smart platform construction. For digital acquisition of cultural relics, we illustrate and analyze the two most common types of methods in related to 3D scanning and close-up photogrammetry. For the realistic reconstruction technology of cultural relics, geometric processing and deep learning-based methods are focused on in terms of 3D reconstruction model analysis. For virtual-intelligent-integrated interaction, a variety of interaction methods are demonstrated in related to such aspects of multimodality, gesture, and handling. For the construction of smart platforms, we summarize some emerging technologies, and the final artistic results are described and achieved. The case study analysis is based on such famous multination museums of the Louvre Museum in France, the Hermitage Museum in Russia, the Palace Museum in Beijing and other related world-renowned museums. We sort out that the commonly-used construction process of smart museums for cultural relics through collection of digital information, three-dimensional reconstruction, and the integration of multiple virtual interaction. The consensus is focused on improving conservation measures and inherit culture. At the same time, potential metaverse technology can be used to build metaverse museum platforms further. Finally, modernizing museums analysis is focused on and its relevant tools and technologies has been focused on metaverse-related technologies. In the future, such emerging metaverse technologies can be used to predicted and integrated into the construction of smart museums as well like blockchain technology, computing and storage, artificial intelligence, and brain-computer Interface. It is focused on the integrated and intelligent construction of museums, and more ultimate and immersive experience can be melted into visitors experiences. To sum up, to promote the development and preservation of global civilization, metaverse technology-integrated intelligent museums are required for its integrated construction in relevance to human cognitive abilities and behavioral patterns.关键词:metaverse;wisdom museum;digital acquisition;3d reconstruction;virtual interaction306|434|1更新时间:2024-05-07 -

摘要:Imitation learning(IL) is focused on the integration of reinforcement learning and supervised learning through observing demonstrations and learning expert strategies. The additional information related imitation learning can be used to optimize and implement its strategy, which can provide the possibility to alleviate low efficiency of sample problem. In recent years, imitation learning has become a popular framework for solving reinforcement learning problems, and a variety of algorithms and techniques have emerged to improve the performance of learning procedure. Combined with the latest research in the field of image processing, imitation learning has played an important role in such domains like game artificial intelligence (AI), robot control, autonomous driving. Traditional imitation learning methods are mainly composed of behavioral cloning (BC), inverse reinforcement learning (IRL), and adversarial imitation learning (AIL). Thanks to the computing ability and upstream graphics and image tasks (such as object recognition and scene understanding), imitation learning methods can be used to integrate a variety of technologies-emerged for complex tasks. We summarize and analyze imitation learning further, which is composed of imitation learning from observation (ILfO) and cross-domain imitation learning (CDIL). The ILfO can be used to optimize the requirements for expert demonstration, and information-observable can be learnt only without specific action information from experts. This setting makes imitation learning algorithms more practical, and it can be applied to real-life scenes. To alter the environment transition dynamics modeling, ILfO algorithms can be divided into two categories: model-based and model-free. For model-based methods, due to path-constructed of the model in the process of interaction between the agent and the environment, it can be assorted into forward dynamic model and inverse dynamic model further. The other related model-free methods are mainly composed of adversarial-based and function-rewarded engineering. Cross-domain imitation learning are mainly focused on the status of different domains for agents and experts, such as multiple Markov decision processes. Current CDIL research are mainly focused on the domain differences of three aspects of discrepancy in relevant to: transition dynamics, morphological, and view point. The technical solutions to CDIL problems can be mainly divided into such methods like: direct, mapping, adversarial, and optimal transport. The application of imitation learning is mainly on such aspects like game AI, robot control, and automatic driving. The recognition and perception capabilities of intelligent agents are optimized further in image processing, such as object detection, video understanding, video classification, and video recognition. Our critical analysis can be focused on the annual development of imitation learning from the five aspects: behavioral cloning, inverse reinforcement learning, adversarial imitation learning, imitation learning from observation, and cross-domain imitation learning.关键词:imitation learning (IL);reinforcement learning;imitation learning form observation (ILfO);cross domain imitation learning (CDIL);application of imitation learning518|1432|0更新时间:2024-05-07

摘要:Imitation learning(IL) is focused on the integration of reinforcement learning and supervised learning through observing demonstrations and learning expert strategies. The additional information related imitation learning can be used to optimize and implement its strategy, which can provide the possibility to alleviate low efficiency of sample problem. In recent years, imitation learning has become a popular framework for solving reinforcement learning problems, and a variety of algorithms and techniques have emerged to improve the performance of learning procedure. Combined with the latest research in the field of image processing, imitation learning has played an important role in such domains like game artificial intelligence (AI), robot control, autonomous driving. Traditional imitation learning methods are mainly composed of behavioral cloning (BC), inverse reinforcement learning (IRL), and adversarial imitation learning (AIL). Thanks to the computing ability and upstream graphics and image tasks (such as object recognition and scene understanding), imitation learning methods can be used to integrate a variety of technologies-emerged for complex tasks. We summarize and analyze imitation learning further, which is composed of imitation learning from observation (ILfO) and cross-domain imitation learning (CDIL). The ILfO can be used to optimize the requirements for expert demonstration, and information-observable can be learnt only without specific action information from experts. This setting makes imitation learning algorithms more practical, and it can be applied to real-life scenes. To alter the environment transition dynamics modeling, ILfO algorithms can be divided into two categories: model-based and model-free. For model-based methods, due to path-constructed of the model in the process of interaction between the agent and the environment, it can be assorted into forward dynamic model and inverse dynamic model further. The other related model-free methods are mainly composed of adversarial-based and function-rewarded engineering. Cross-domain imitation learning are mainly focused on the status of different domains for agents and experts, such as multiple Markov decision processes. Current CDIL research are mainly focused on the domain differences of three aspects of discrepancy in relevant to: transition dynamics, morphological, and view point. The technical solutions to CDIL problems can be mainly divided into such methods like: direct, mapping, adversarial, and optimal transport. The application of imitation learning is mainly on such aspects like game AI, robot control, and automatic driving. The recognition and perception capabilities of intelligent agents are optimized further in image processing, such as object detection, video understanding, video classification, and video recognition. Our critical analysis can be focused on the annual development of imitation learning from the five aspects: behavioral cloning, inverse reinforcement learning, adversarial imitation learning, imitation learning from observation, and cross-domain imitation learning.关键词:imitation learning (IL);reinforcement learning;imitation learning form observation (ILfO);cross domain imitation learning (CDIL);application of imitation learning518|1432|0更新时间:2024-05-07 - 摘要:Nowadays, with the booming of multimedia data, the character of multi-source and multi-modality of data has become a challenging problem in multimedia research. Its representation and generation can be as two key factors in cross-modal learning research. Cross-modal representation studies feature learning and information integration methods using multi-modal data. To get more effective feature representation, multimodality-between mutual benefits are required to be strengthened. Cross-modal generation is focused on the knowledge transfer mechanism across modalities. The modals-between semantic consistency can be used to realize data-interchangeable profiles of different modals. It is beneficial to improve modalities-between migrating ability. The literature review in cross-modal representation and generation are critically analyzed on the aspect of 1) traditional cross-modal representation learning, 2) big model for cross-modal representation learning, 3) image-to-text cross-modal conversion, joint representation, and 4) cross-modal image generation. Traditional cross-modal representation has two categories: joint representation and coordinated representation. Joint representation can yield multiple single-modal information to the joint representation space when each of single-modal information is processed through the coordinated representations, and cross-modal representations can be learnt mutually in terms of similarity constraints. Deep neural networks (DNNs) based self-supervised learning ability are activated to deal with large-scale unlabeled data, especially for the Transformer-based methods. To enrich the supervised learning paradigm, the pre-trained large models can yield large-scale unlabeled data to learn training, and a downstream tasks-derived small amount of labeled data is used for model fine-tuning. The pre-trained model has better versatility and transfering ability compared to the trained model for specific tasks, and the fine-tuned model can be used to optimize downstream tasks as well. The developmentof cross-modal synthesis (a.k.a. image caption or video caption) methods have been summarized, including end-to-end, semantic-based, and stylize-based methods. In addition, current situation of cross-modal conversion between image and text has beenanalyzed, including image caption, video caption, and visual question answering. The cross-modal generation methods are summarized as well in relevance to the joint representation of cross-modal information, image generation, text-image cross-modal generation, and cross-modal generation based on pre-trained models. In recent years, generative adversarial networks (GANs) and denoising diffusion probabilistic models (DDPMs) have been faciliating in cross-modal generation tasks. Thanks to the strong adaptability and generation ability of DDPM models, cross-modal generation research can be developed and the constraints of vulnerable textures are optimized to a certain extent. The growth of GAN-based and DDPM-based methods are summarized and analyzed further.关键词:multimedia technology;cross-modal learning;foundation model;cross-modal representation;cross-modal generation;deep learning618|1234|4更新时间:2024-05-07

Intelligent Interaction and Cross modal Learning

-

摘要:Visual sensing technique is essential for human to perceive and understand the world around them. An “electronic eyeball” can be melted into outdoor-related visual information,and visual sensors are equipped with such domains like consumer electronics,machine vision,surveillance,and academic researches. Visual sensor technology-based multiple sensors can be used to richer multi-dimension visual data,which can enhance human-related perceptive and cognitive ability. This literature review is focused on the growth of optical visual sensor technology,including such image sensors in relevance to CCD,CMOS,intelligent-visual,and infrared-context. The CMOS image sensor chip is produced in terms of CMOS technology,in which image acquisition unit and signal processing unit can be integrated into the same chip. It can be mass-produced to a certain extent. Cost-effective applications can be oriented to such aspects in related to small size,light weight,low cost and low power consumption. With the rapid development of autonomous driving, intelligent transportation, machine vision and other fields, multi-functional and intelligent CMOS image sensors with small size will become the focus of research. The emerging CCD sensor technology and its applications have been facilitating as well. The potentials of CCD image sensor can be applied for such domains in related to remote sensing,astronomy,low light detection. In the future, the multi-spectral TDI CCD architecture based on CCD and CMOS fusion technology will be widely used. Infrared image sensor is configured and set that infrared radiation detection can be converted into physical quantity. The high-performance digital signal processing function can be integrated on the infrared focal plane. The newly infrared image sensor can be focused on larger array,higher resolution,wider spectrums,more flexible sensitivity,multi-band,and system-level chip further.关键词:optical visual sensor;CCD image sensor;CMOS image sensor;intelligent visual sensor;infrared image sensor224|216|1更新时间:2024-05-07

摘要:Visual sensing technique is essential for human to perceive and understand the world around them. An “electronic eyeball” can be melted into outdoor-related visual information,and visual sensors are equipped with such domains like consumer electronics,machine vision,surveillance,and academic researches. Visual sensor technology-based multiple sensors can be used to richer multi-dimension visual data,which can enhance human-related perceptive and cognitive ability. This literature review is focused on the growth of optical visual sensor technology,including such image sensors in relevance to CCD,CMOS,intelligent-visual,and infrared-context. The CMOS image sensor chip is produced in terms of CMOS technology,in which image acquisition unit and signal processing unit can be integrated into the same chip. It can be mass-produced to a certain extent. Cost-effective applications can be oriented to such aspects in related to small size,light weight,low cost and low power consumption. With the rapid development of autonomous driving, intelligent transportation, machine vision and other fields, multi-functional and intelligent CMOS image sensors with small size will become the focus of research. The emerging CCD sensor technology and its applications have been facilitating as well. The potentials of CCD image sensor can be applied for such domains in related to remote sensing,astronomy,low light detection. In the future, the multi-spectral TDI CCD architecture based on CCD and CMOS fusion technology will be widely used. Infrared image sensor is configured and set that infrared radiation detection can be converted into physical quantity. The high-performance digital signal processing function can be integrated on the infrared focal plane. The newly infrared image sensor can be focused on larger array,higher resolution,wider spectrums,more flexible sensitivity,multi-band,and system-level chip further.关键词:optical visual sensor;CCD image sensor;CMOS image sensor;intelligent visual sensor;infrared image sensor224|216|1更新时间:2024-05-07 -

摘要:Remote sensing images are often captured from multiview and multiple altitudes, thereby comprising a mass of objects with limited sizes which significantly challenge current detection methods that can achieve outstanding performance on natural images. Moreover, how to precisely detect these small objects plays a crucial role in developing an intelligent interpretation system for remote sensing images. Focusing on the remote sensing images, this paper conducts a comprehensive survey for deep learning-based small object detection (SOD) can be reviewed and analyzed literately, including 1) features-represent bottlenecks, 2) background confusion, and 3) branching-regressed sensitivity. Specially, one of the major bottlenecks is for objective’s representation. It refers that the down-sample operations in the prevailing feature extractors can suppress the signals of small objects unavoidably, and the following detection is impaired further in terms of the weak representations. The detection of size-limited instances is also interference of the confusion between the objects and backgrounds and the sensitivity of regression branch. For the former, the representations of small objects tend to be contaminated in related to feature extraction-contextual factors, which may erase the discriminative information that plays a significant role in head network. And the sensitivity of regression branch in small object detection is derived from the low tolerance for bounding box perturbation, in which a slight deviation of a predicted box will cause a drastic drop on the intersection-over-union (IoU), which is generally adopted to evaluate the accuracy of localization. Furthermore, we review and analyze the literature of small object detection for remote sensing images in the deep-learning era. In detail, by systematically reviewing corresponding methods of three small object detection tasks, i.e., SOD for optical remote sensing images, SOD for synthetic aperture radar (SAR) images and SOD for infrared images, an understandable taxonomy of the reviewed algorithms for each task is given. Specifically, we rigorously split the representative methods into several categories according to the major techniques used. In addition to the algorithm survey, considering the deep learning-based methods are hungry for data and to provide a comprehensive survey about small object detection, we also retrospect several publicly available datasets which are commonly used in these three SOD tasks. For each concrete field, we list the prevailing benchmarks in accordance with the published papers, and a brief introduction and some example images about these datasets are illustrated: small size. What is more, other related features about small object detection are highlighted as well, such as image resolution, data source, the number of images and annotated instances, and some proper statistics of each task, etc. Additionally, to better investigate the performance of generic detection methods on small objects, we analyze an in-depth evaluation and comparison of main-stream detection algorithms and several SOD methods for remote sensing images, namely SODA-A(small object detection datasets), AIR-SARShip and NUAA-SIRST(Nanjing University of Aeronautics and Astronautics, single-frame infrared small target). Afterwards, current situation in applications of small object detection for remote sensing images are analyzed, including SOD-based intelligent transportation system and scene-related understanding. Such harbor-targeted recognition is based on SAR image analysis, the precision-guided weapons based on the detection and recognition techniques of infrared images, and the tracking of moving targets at sea on top of multimodal remote sensing data. In the end, to enlighten the further research of small object detection in remote sensing images, we discuss four promising directions in the future. Concretely, it is required that an efficient backbone network can avoid the information loss of small objects while capturing the discriminative features to optimize the down-stream tasks about small objects. Large-scale benchmarks with well annotated small instances play an irreplaceable role linked to small object detection in remote sensing images further. Moreover, a multimodal remote sensing data-collaborated SOD algorithm is also preferred. A proper evaluation metric can not only guide the training and inference of small object detection methods under some specific scenes, but also rich its domain-related development.关键词:small object detection(SOD);deep learning;optical remote sensing images;SAR images;infrared images;public datasets763|1544|5更新时间:2024-05-07

摘要:Remote sensing images are often captured from multiview and multiple altitudes, thereby comprising a mass of objects with limited sizes which significantly challenge current detection methods that can achieve outstanding performance on natural images. Moreover, how to precisely detect these small objects plays a crucial role in developing an intelligent interpretation system for remote sensing images. Focusing on the remote sensing images, this paper conducts a comprehensive survey for deep learning-based small object detection (SOD) can be reviewed and analyzed literately, including 1) features-represent bottlenecks, 2) background confusion, and 3) branching-regressed sensitivity. Specially, one of the major bottlenecks is for objective’s representation. It refers that the down-sample operations in the prevailing feature extractors can suppress the signals of small objects unavoidably, and the following detection is impaired further in terms of the weak representations. The detection of size-limited instances is also interference of the confusion between the objects and backgrounds and the sensitivity of regression branch. For the former, the representations of small objects tend to be contaminated in related to feature extraction-contextual factors, which may erase the discriminative information that plays a significant role in head network. And the sensitivity of regression branch in small object detection is derived from the low tolerance for bounding box perturbation, in which a slight deviation of a predicted box will cause a drastic drop on the intersection-over-union (IoU), which is generally adopted to evaluate the accuracy of localization. Furthermore, we review and analyze the literature of small object detection for remote sensing images in the deep-learning era. In detail, by systematically reviewing corresponding methods of three small object detection tasks, i.e., SOD for optical remote sensing images, SOD for synthetic aperture radar (SAR) images and SOD for infrared images, an understandable taxonomy of the reviewed algorithms for each task is given. Specifically, we rigorously split the representative methods into several categories according to the major techniques used. In addition to the algorithm survey, considering the deep learning-based methods are hungry for data and to provide a comprehensive survey about small object detection, we also retrospect several publicly available datasets which are commonly used in these three SOD tasks. For each concrete field, we list the prevailing benchmarks in accordance with the published papers, and a brief introduction and some example images about these datasets are illustrated: small size. What is more, other related features about small object detection are highlighted as well, such as image resolution, data source, the number of images and annotated instances, and some proper statistics of each task, etc. Additionally, to better investigate the performance of generic detection methods on small objects, we analyze an in-depth evaluation and comparison of main-stream detection algorithms and several SOD methods for remote sensing images, namely SODA-A(small object detection datasets), AIR-SARShip and NUAA-SIRST(Nanjing University of Aeronautics and Astronautics, single-frame infrared small target). Afterwards, current situation in applications of small object detection for remote sensing images are analyzed, including SOD-based intelligent transportation system and scene-related understanding. Such harbor-targeted recognition is based on SAR image analysis, the precision-guided weapons based on the detection and recognition techniques of infrared images, and the tracking of moving targets at sea on top of multimodal remote sensing data. In the end, to enlighten the further research of small object detection in remote sensing images, we discuss four promising directions in the future. Concretely, it is required that an efficient backbone network can avoid the information loss of small objects while capturing the discriminative features to optimize the down-stream tasks about small objects. Large-scale benchmarks with well annotated small instances play an irreplaceable role linked to small object detection in remote sensing images further. Moreover, a multimodal remote sensing data-collaborated SOD algorithm is also preferred. A proper evaluation metric can not only guide the training and inference of small object detection methods under some specific scenes, but also rich its domain-related development.关键词:small object detection(SOD);deep learning;optical remote sensing images;SAR images;infrared images;public datasets763|1544|5更新时间:2024-05-07 -

摘要:Computer vision-oriented hyperspectral images (HSIs) are featured to enrich spatial and spectral information compared to gray and RGB images. It has been developing in such domains like target detection, scene classification, and tracking. However, the HSI imaging technique is challenged for its distortion problems (e.g., low spatial resolution, noise). HSI-related super-resolution (SR) methods are proposed to reconstruct high-quality HSIs in terms of a high spectral resolution and spatial resolution (HR). Current HSI SR methods can be segmented into two categories: spatial SR and spectral SR. The spatial SR method is oriented to reconstruct the target HR HSI via improving the spatial resolution of low-resolution (LR) HSI. It can be subdivided into single image SR and fusion based SR methods further. Single image based SR method can be used to reconstruct the target HSI via directly improving the spatial resolution of LR HSI. However, due to much more spatial information is sacrificed, single image based SR method is challenged to reconstruct effective HSIs. Therefore, to fuse these high quality spatial information into the LR HSIs, extra high spatial homogeneous information is introduced (e.g., multispectral image (MSI), RGB). In this way, the spatial resolution of LR HSI can be improved greatly (e.g., 8 times, 16 times, and 32 times SR). The other HSI SR method is focused on the spectral super-resolution method, which can improve the spectral resolution of high spatial resolution images (e.g., MSI, RGB) and generate the target hyperspectral image. The literature review is focused on the growth of HSI SR methods in relevance to three aspects single image-based, fusion-based, and spectral super-resolution contexts. Each of these three category for HSI SR methods can be further subdivided into two aspects of traditional optimization framework and deep learning based methods. For single image HSI SR, due to the SR problem being an illness inverse problem, traditional optimization framework based single image HSI SR methods is used to develop effective image priors to restrain the SR process. Image priors like low-rank, sparse representation, and non-local features are commonly used in single based HSI SR method, but it still challenged for such problems of manpower and restrictions. For the traditional fusion based method, the core element is to balance the spatial-spectral correlation between HR MSI and LR HSI. It is feasible to split these two images into key components and then re-combine the effective parts of each image. Therefore, multiple schemes are introduced (e.g., non-negative matrix factorization, coupled tensor factorization) to leverage the key information from HR MSI and LR HSI. In addition, to increase the effectiveness of these decomposition methods, some constraints are required to be introduced (e.g., sparse, low-rank). For the traditional spectral resolution method, it is essential to learn how to reconstruct the spectral characteristics from RGB/MSI images. When paired RGB and HSI exist, a promising way is feasible to construct a dictionary (e.g., sparse dictionary learning) that the mapping relation can be recorded between RGB/MSI images and HSIs. The dictionary learning based spectral super-resolution methods have been developing as well, but it is often challenged to realize more generalization ability for applications. In recent years, deep learning based methods have facilitated much more computer vision tasks, and is beneficial for exploiting the inherent spatial-spectral relations of HSIs. For the single image HSI SR methods, a deep convolution neural network (DCNN) is utilized to learn the mapping process from LR HSI to HR HSI. The DCNN is capable to learn deep image prior from plenty of training samples, which has better representation ability than the heuristic handcrafted image priors (e.g., low-rank, sparse) to some extent. However, the performance of this kind of method is often restricted by the amount and variety of training samples. Therefore, it is necessary to reconstruct HR HSI from LR HSI in an unsupervised manner, but the robustness of the unsupervised method is still a challenging problem to be resolved. For the deep learning based HSI fusion methods, to extract spatial-spectral information from MSIs and HSIs, an effective design of DCNN frameworks (e.g., multi-branch, multi-scale, 3D-CNN) are concerned about. However, such problems (e.g., noise, unknown degeneration, unregistered) are challenged for effective generalization ability of DCNN-based HSI fusion method. To resolve these problems, unsupervised and alternative learning methods are introduced to improve fusion-based generalization ability. The DCNN-unfolding is proposed and the optimal interpretability is demonstrated, and its registration strategy is introduced to improve the robustness further. For the spectral super resolution method, DCNN is demonstrated to model the mapping scheme between RGB/MSI and HR HSI. However, there still some barriers are required to be resolved for DCNN-based spectral super resolution method. For example, most existing spectral super resolution methods can generate HSI only according to its fixed spectral interval or spectrum range. A spectral super resolution framework is required to be linked with generalization ability well in the future. In this study, we will summarize the developing of the HSI SR scope from the perspective of new designs, new methods, and new application scenes.关键词:hyperspectral image(HSI);super-resolution reconstruction;single image super-resolution;fusion based super-resolution;spectral super-resolution752|1482|3更新时间:2024-05-07

摘要:Computer vision-oriented hyperspectral images (HSIs) are featured to enrich spatial and spectral information compared to gray and RGB images. It has been developing in such domains like target detection, scene classification, and tracking. However, the HSI imaging technique is challenged for its distortion problems (e.g., low spatial resolution, noise). HSI-related super-resolution (SR) methods are proposed to reconstruct high-quality HSIs in terms of a high spectral resolution and spatial resolution (HR). Current HSI SR methods can be segmented into two categories: spatial SR and spectral SR. The spatial SR method is oriented to reconstruct the target HR HSI via improving the spatial resolution of low-resolution (LR) HSI. It can be subdivided into single image SR and fusion based SR methods further. Single image based SR method can be used to reconstruct the target HSI via directly improving the spatial resolution of LR HSI. However, due to much more spatial information is sacrificed, single image based SR method is challenged to reconstruct effective HSIs. Therefore, to fuse these high quality spatial information into the LR HSIs, extra high spatial homogeneous information is introduced (e.g., multispectral image (MSI), RGB). In this way, the spatial resolution of LR HSI can be improved greatly (e.g., 8 times, 16 times, and 32 times SR). The other HSI SR method is focused on the spectral super-resolution method, which can improve the spectral resolution of high spatial resolution images (e.g., MSI, RGB) and generate the target hyperspectral image. The literature review is focused on the growth of HSI SR methods in relevance to three aspects single image-based, fusion-based, and spectral super-resolution contexts. Each of these three category for HSI SR methods can be further subdivided into two aspects of traditional optimization framework and deep learning based methods. For single image HSI SR, due to the SR problem being an illness inverse problem, traditional optimization framework based single image HSI SR methods is used to develop effective image priors to restrain the SR process. Image priors like low-rank, sparse representation, and non-local features are commonly used in single based HSI SR method, but it still challenged for such problems of manpower and restrictions. For the traditional fusion based method, the core element is to balance the spatial-spectral correlation between HR MSI and LR HSI. It is feasible to split these two images into key components and then re-combine the effective parts of each image. Therefore, multiple schemes are introduced (e.g., non-negative matrix factorization, coupled tensor factorization) to leverage the key information from HR MSI and LR HSI. In addition, to increase the effectiveness of these decomposition methods, some constraints are required to be introduced (e.g., sparse, low-rank). For the traditional spectral resolution method, it is essential to learn how to reconstruct the spectral characteristics from RGB/MSI images. When paired RGB and HSI exist, a promising way is feasible to construct a dictionary (e.g., sparse dictionary learning) that the mapping relation can be recorded between RGB/MSI images and HSIs. The dictionary learning based spectral super-resolution methods have been developing as well, but it is often challenged to realize more generalization ability for applications. In recent years, deep learning based methods have facilitated much more computer vision tasks, and is beneficial for exploiting the inherent spatial-spectral relations of HSIs. For the single image HSI SR methods, a deep convolution neural network (DCNN) is utilized to learn the mapping process from LR HSI to HR HSI. The DCNN is capable to learn deep image prior from plenty of training samples, which has better representation ability than the heuristic handcrafted image priors (e.g., low-rank, sparse) to some extent. However, the performance of this kind of method is often restricted by the amount and variety of training samples. Therefore, it is necessary to reconstruct HR HSI from LR HSI in an unsupervised manner, but the robustness of the unsupervised method is still a challenging problem to be resolved. For the deep learning based HSI fusion methods, to extract spatial-spectral information from MSIs and HSIs, an effective design of DCNN frameworks (e.g., multi-branch, multi-scale, 3D-CNN) are concerned about. However, such problems (e.g., noise, unknown degeneration, unregistered) are challenged for effective generalization ability of DCNN-based HSI fusion method. To resolve these problems, unsupervised and alternative learning methods are introduced to improve fusion-based generalization ability. The DCNN-unfolding is proposed and the optimal interpretability is demonstrated, and its registration strategy is introduced to improve the robustness further. For the spectral super resolution method, DCNN is demonstrated to model the mapping scheme between RGB/MSI and HR HSI. However, there still some barriers are required to be resolved for DCNN-based spectral super resolution method. For example, most existing spectral super resolution methods can generate HSI only according to its fixed spectral interval or spectrum range. A spectral super resolution framework is required to be linked with generalization ability well in the future. In this study, we will summarize the developing of the HSI SR scope from the perspective of new designs, new methods, and new application scenes.关键词:hyperspectral image(HSI);super-resolution reconstruction;single image super-resolution;fusion based super-resolution;spectral super-resolution752|1482|3更新时间:2024-05-07 -

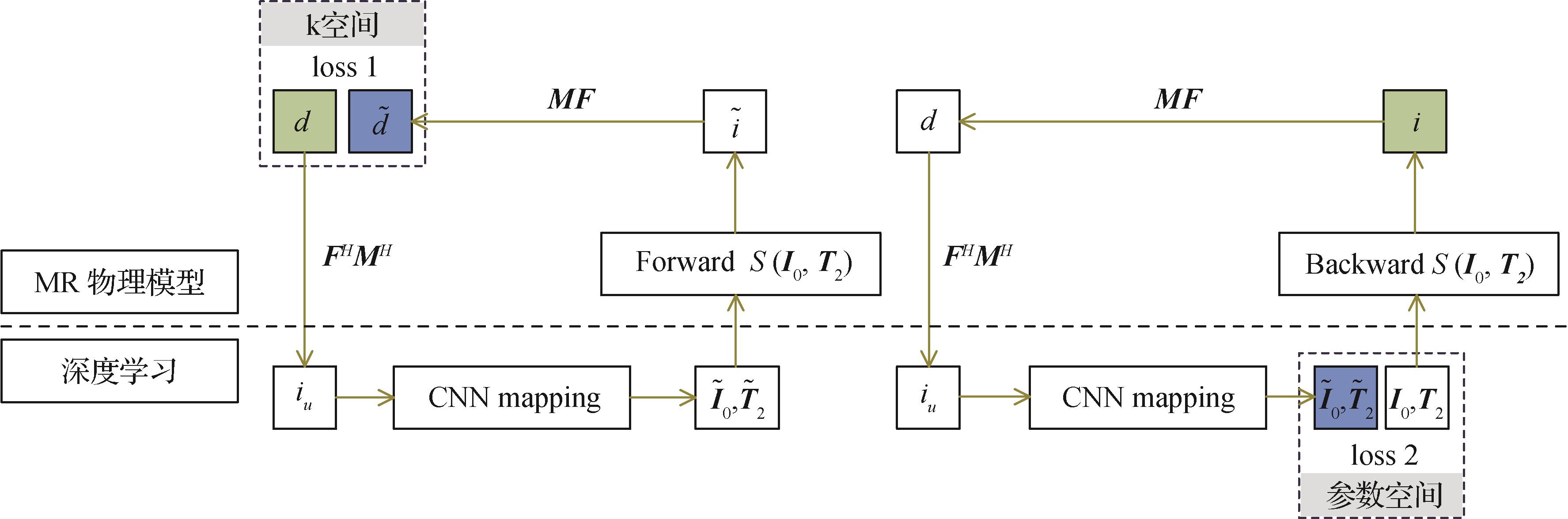

摘要:Magnetic resonance imaging (MRI) is a commonly-used non-invasive medical imaging method. Due to richable soft tissue-related contrast features of human body, medical imaging diagnosis is beneficial from more internal structures-contextual image in clinic, including its organs, bones, muscles, and blood vessels. However, two sorts of bottlenecks of MRI like slow scanning speed and labor-intensive scanning operation are required to be resolved beyond the constraints of hardware and existing techniques. Current MRI has been facilitating in terms of the emerging deep learning based medical imaging technique. To optimize its acquisition time, parallel imaging and compressed sensing combined with the use of multi-array coils, conventional MRI mainly depends on the hardware improvements and new compactable sequence design. First, literature review of deep learning-based MR reconstruction methods are analyzed, including such deep learning issues originated from 1) integration of parallel imaging and compressed sensing of MRI and 2) acceleration of multi-contrast images and dynamic images of single-contrast image. Deep learning-based reconstruction models is oriented to get their generalizability incorporated with multiple datasets, in which MRI data are challenged for multiple factors like its relevance of centers, models, and field strengths. Two decadal development of quantitative MRI technique can provide pixel-level characterization of intrinsic tissue quantitative parameters, such as T1, T2, and ADC. Generally, quantitative MRI is often required to get multiple weighted images under different parameters, and quantitative tissue parameters can be generated via signal model-required pixel-level nonlinear fitting. Compared to the acquisition of contrast images, acquisition and reconstruction duration has become more longer. To accelerate the acquisition and reconstruction or facilitate accurate mapping, extension ability of deep learning has been developing for the reconstruction of MRI fast imaging. Apart from conventional single parameter mapping method, simultaneous multi-parameter mapping techniques have leaked out, such as MR fingerprinting. Compared to single parameter mapping, multi-parameter mapping can be synchronized and accelerated, and more efficient acquisition and inherent coregistration can be used to deal with such complicated reconstruction. Deep learning technique can be as a multifaceted tool to simplify the reconstruction and speed up the acquisition. However, it is still challenged for such constraints like deep learning algorithms and large amounts of training data. Deep learning methods are required to be melted into magnetic resonance physical models and traditional reconstruction algorithms to a certain extent, and to improve the interpretability of the model and alleviate the impact on large datasets, such methods of data enhancement, weakly supervised learning, unsupervised learning, and transfer learning can be involved in as well. A quantitative MRI-relevant unsupervised network can be potentially used for training in terms of different parameter mapping sequences, which can greatly shrink the amount of data samples further.关键词:deep learning;magnetic resonance imaging(MRI);contrast weighted imaging;quantitative imaging276|918|1更新时间:2024-05-07

摘要:Magnetic resonance imaging (MRI) is a commonly-used non-invasive medical imaging method. Due to richable soft tissue-related contrast features of human body, medical imaging diagnosis is beneficial from more internal structures-contextual image in clinic, including its organs, bones, muscles, and blood vessels. However, two sorts of bottlenecks of MRI like slow scanning speed and labor-intensive scanning operation are required to be resolved beyond the constraints of hardware and existing techniques. Current MRI has been facilitating in terms of the emerging deep learning based medical imaging technique. To optimize its acquisition time, parallel imaging and compressed sensing combined with the use of multi-array coils, conventional MRI mainly depends on the hardware improvements and new compactable sequence design. First, literature review of deep learning-based MR reconstruction methods are analyzed, including such deep learning issues originated from 1) integration of parallel imaging and compressed sensing of MRI and 2) acceleration of multi-contrast images and dynamic images of single-contrast image. Deep learning-based reconstruction models is oriented to get their generalizability incorporated with multiple datasets, in which MRI data are challenged for multiple factors like its relevance of centers, models, and field strengths. Two decadal development of quantitative MRI technique can provide pixel-level characterization of intrinsic tissue quantitative parameters, such as T1, T2, and ADC. Generally, quantitative MRI is often required to get multiple weighted images under different parameters, and quantitative tissue parameters can be generated via signal model-required pixel-level nonlinear fitting. Compared to the acquisition of contrast images, acquisition and reconstruction duration has become more longer. To accelerate the acquisition and reconstruction or facilitate accurate mapping, extension ability of deep learning has been developing for the reconstruction of MRI fast imaging. Apart from conventional single parameter mapping method, simultaneous multi-parameter mapping techniques have leaked out, such as MR fingerprinting. Compared to single parameter mapping, multi-parameter mapping can be synchronized and accelerated, and more efficient acquisition and inherent coregistration can be used to deal with such complicated reconstruction. Deep learning technique can be as a multifaceted tool to simplify the reconstruction and speed up the acquisition. However, it is still challenged for such constraints like deep learning algorithms and large amounts of training data. Deep learning methods are required to be melted into magnetic resonance physical models and traditional reconstruction algorithms to a certain extent, and to improve the interpretability of the model and alleviate the impact on large datasets, such methods of data enhancement, weakly supervised learning, unsupervised learning, and transfer learning can be involved in as well. A quantitative MRI-relevant unsupervised network can be potentially used for training in terms of different parameter mapping sequences, which can greatly shrink the amount of data samples further.关键词:deep learning;magnetic resonance imaging(MRI);contrast weighted imaging;quantitative imaging276|918|1更新时间:2024-05-07 -

摘要:Autonomous driving-oriented accurate perception and measurement of the three-dimensional (3D) spatial position and scale can be as the basis for realizing the control ability and decision-making level. Sensing technology-driven autonomous vehicles are equipped with high-resolution camera, light detection and ranging(LiDAR), radar, global positioning system(GPS)/inertial measurement unit(IMU) and other related sensors. Current LiDAR or multi-modal data-based 3D object detection algorithms are challenged for its deployment and application because of the shortcomings of LiDAR sensors like high price, limited sensing range, and sparse point clouds data. In contrast, such high-resolution cameras are commonly-used and featured by its lower price, and it can obtain high-resolution spatial information, richer shape, and appearance details as well. The emerging image-based 3D object detection is focused on further. At present, constraints of detection accuracy of the existing methods are still to be analyzed thoroughly and systematically. We summary the research results and industrial applications in relevance to such 1) perspectives of commonly used datasets and evaluation criteria, 2) data impact, 3) methodological constraints and prediction errors. First, a brief introduction is linked to perspective of academic domain and application of autonomous driving industry. We briefly review latest growths of Baidu Apollo, Google Waymo, Tesla and other related autonomous driving companies, and the thread of 3D object detection methods for autonomous driving. Then, we analyzed and summarized four popular datasets like KITTI, nuScenes, Waymo open dataset, and DAIR-V2X dataset from three aspects of: 1) data acquisition/sensors, data accuracy and data label information; 2) key evaluation standards proposed by these data sets, and 3) pros/cons and applicability of these evaluation standards. Third, main constraints of the image-based 3D object detection algorithm and the errors are derived from two sides of: data and methodology. Such main data constraints are originated from their data accuracy, sample difference, data volume, and data annotation. The data accuracy is mainly limited by equipment performance. The sample difference is mainly restricted by such image processing problems in related to object distance difference, angle difference, occlusion, and truncation. Data volume is affected by variety of 3D data types and high difficulty of labeling. The volume of 3D object detection data set is much smaller in comparison with the 2D object detection data set. Data annotation is mainly focused on 3D bounding box labeling, the labeling details, and quality of the dataset, especially for image annotation used in image-based 3D object detection. For non-rigid objects like pedestrians, the annotation error is larger, and there are some optimal for improving the labeling method. The general framework of image-based 3D object detection can be classified as one-stage methods and two-stage methods, and the limitations consists of 1) the prior geometric relationship, 2) depth prediction accuracy, and 3) data modality. The prior geometric relationship is focused on 2D-3D geometric constraints for 2D images-projected 3D objects and objects-between position relationships. The image-based 3D object detection methods face such problems as: prior 2D-3D geometric constraints and occluded and truncated objects. The prediction of depth information from 2D images is an ill conditioned problem, and dimension collapse will cause depth prediction error-relevant loss of depth information in the image. On the one hand, the depth prediction is often not accurate due to the influence of projection relationship. On the other hand, the performance of continuous depth prediction is often poor at the depth mutation of the image (such as edge of objects). When the prediction depth is discretized, there is a problem that the classification of depth is relatively rough, and the accuracy classification cannot be arbitrarily divided. The limitation of single image-based data modality is mainly reflected via large error of depth prediction. The detection performance of the algorithm can be optimized by 1) simulating the stereo signal and LiDAR point clouds, or 2) using stereo image as the aided input, or 3) leveraging point clouds data with accurate 3D information as supervision signal. In addition, video data can be adopted to improve the detection accuracy to a certain extent. Forth, current research situation is summarized and compared from academic and industrial domain. Finally, some future research directions are predicted in terms of such factors of datasets, evaluation indicators, and depth prediction.关键词:3D object detection;benchmark;constraint;error analysis;autonomous driving;image processing;computer vision502|874|5更新时间:2024-05-07