最新刊期

卷 28 , 期 4 , 2023

-

摘要:This is the 28th annual survey series of bibliographies on image engineering in China. This statistic and analysis study aims to capture the up-to-date development of image engineering in China, provide a targeted means of literature searching facility for readers working in related areas, and supply a useful recommendation for the editors of journals and potential authors of papers. Specifically, considering the wide distribution of related publications in China, all references (908) on image engineering research and technique are selected carefully from the research papers (3 096 in total) published in all issues (154) of a set of 15 Chinese journals. These 15 journals are considered important, in which papers concerning image engineering have higher quality and are relatively concentrated. The selected references are initially classified into five categories (image processing, image analysis, image understanding, technique application, and survey) and then into 23 specialized classes in accordance with their main contents (same as the last 17 years). Analysis and discussions about the statistics of the results of classifications by journal and by category are also presented. Analysis on the statistics in 2022 shows that the direction of image analysis has received the most attention at present, among which object detection and recognition, image segmentation and primitive detection are the focus of research. Besides, the development and application of image technology in remote sensing, radar, sonar and mapping, as well as biology and medicine fields are the most active. In addition, image engineering technology is constantly expanding new application fields, new application categories may be increased in the future. In conclusion, this work shows a general and up-to-date picture of the various continuing progresses, either for depth or for width, of image engineering in China in 2022. The statistics for 28 years also provide readers with more comprehensive and credible information on the development trends of various research directions.关键词:image engineering;image processing;image analysis;image understanding;technique application;literature survey;literature statistics;literature classification;bibliometrics96|226|1更新时间:2024-05-07

摘要:This is the 28th annual survey series of bibliographies on image engineering in China. This statistic and analysis study aims to capture the up-to-date development of image engineering in China, provide a targeted means of literature searching facility for readers working in related areas, and supply a useful recommendation for the editors of journals and potential authors of papers. Specifically, considering the wide distribution of related publications in China, all references (908) on image engineering research and technique are selected carefully from the research papers (3 096 in total) published in all issues (154) of a set of 15 Chinese journals. These 15 journals are considered important, in which papers concerning image engineering have higher quality and are relatively concentrated. The selected references are initially classified into five categories (image processing, image analysis, image understanding, technique application, and survey) and then into 23 specialized classes in accordance with their main contents (same as the last 17 years). Analysis and discussions about the statistics of the results of classifications by journal and by category are also presented. Analysis on the statistics in 2022 shows that the direction of image analysis has received the most attention at present, among which object detection and recognition, image segmentation and primitive detection are the focus of research. Besides, the development and application of image technology in remote sensing, radar, sonar and mapping, as well as biology and medicine fields are the most active. In addition, image engineering technology is constantly expanding new application fields, new application categories may be increased in the future. In conclusion, this work shows a general and up-to-date picture of the various continuing progresses, either for depth or for width, of image engineering in China in 2022. The statistics for 28 years also provide readers with more comprehensive and credible information on the development trends of various research directions.关键词:image engineering;image processing;image analysis;image understanding;technique application;literature survey;literature statistics;literature classification;bibliometrics96|226|1更新时间:2024-05-07 -

摘要:Artificial intelligence (AI) technology has been developing intensively, especially for such scenarios in relevance to its applications of 1) natural language processing, 2) computer vision, 3) recommendation systems, and 4) forecast analysis. AI technology has been challenging for human cognition over the past decade. In recent years, natural language processing techniques can be focused on more. ChatGPT, as a case of emerging generative AI technology, is launched in December of 2022. ChatGPT, as an advanced language model, is commonly used on the basis of its a) larger model sizes, b) advanced pre-training methods, c) faster computing resources, and d) more language processing tasks. This ChatGPT-related literature review is focused on its (1) public awareness and application status, (2) characteristics, (3) mechanisms, (4) scalability, (5) challenges and limitations, (6) future development and application prospects, and (7) improvements of GPT-4 relative to ChatGPT. Cognitive computing and AI-based ChatGPT can be as a sort of language model in terms of the Transformer architecture and Generative Pre-Training (GPT). This GPT-trained model can be related to natural language processing, which can predict the probability distribution of the next token using a multi-layer Transformer to generate natural language text. It can be outreached by training the learned language patterns on a large corpus of text. The OpenAI’s language model has shown a significant improvement in their level of intelligence from GPT-1(117 million parameters) in 2018 to GPT-3(175 billion parameters) in 2020. The language processing and generation capabilities of GPT have been improving dramatically in terms of consistent optimization like its 1) model size, 2) generative models, and 3) self-supervised learning. Thereafter, reinforcement learning-based InstructGPT is originated from Human Feedback and such probability of infeasible, untrue, and biased outputs can be significantly reduced in January 2022. In December 2022, ChatGPT is introduced as the sister model of InstructGPT. ChatGPT is not only add InstructGPT-based chat attributes, and a test version is opened to the public. The core technologies of ChatGPT can be linked to 1) reinforcement learning from human feedback(RLHF), 2) supervised fine-tuning(SFT), 3) instruction fine-tnning(IFT), and 4) chain-of-thought(CoT) as well. ChatGPT has attracked about 100 million active users per month after the launch of two months. In comparison, TikTok took nine months to achieve 100 million monthly active users, and Instagram took two and a half years. According to Similar Web, more than 13 million independent visitors use ChatGPT on average each day in January of 2023, which is more than twice in December of 2022. The leading US new media company Buzzfeed accurately seized the opportunity of ChatGPT and saw its stock price triple in two days. The ChatGPT-derived impact shows its potential preference for consumers. The ChatGPT can play mulitiple roles for such domain like clinics, translation, official administrations, and programming tasks. Such extensive application of ChatGPT is still to be developed. However, while ChatGPT has the potential for widespread application in various industries, it cannot be universally applied to all industries. For example, as certain industrial production processes typically rely on digitalization and do not necessitate the handling of human language, natural language processing techniques may not be required. Furthermore, various other factors, such as legal restrictions and data privacy concerns, may also impinge upon the application of natural language processing technologies within certain industries. For industries that require the processing of sensitive information, such as the healthcare industry, natural language processing technologies may need to comply with strict legal regulations to ensure data privacy and security. In addition to industry-specific reasons, it should be noted that ChatGPT has not yet achieved perfection in natural language processing tasks. In summary, as a phenomenal and technological product, AI-generated ChatGPT’s potentials are beneficial for textual and multi-modal AIGC applications to a certain extent, and it may have an impact on the a) survival of corporations, b) competition among countries, and c) entire social structure. However, the current various positive evaluations of ChatGPT can only be seen as a phenomenon of good rain after a long drought, and it cannot change the fact that ChatGPT is a questions and answers (Q&A) solution based on prior knowledge and models. It is required to be acknowledged that ChatGPT does not have its true recognition, intention, and creativity yet, and its true intelligence need to be tackled further.关键词:artificial intelligence(AI);deep learning;natural language processing;artificial intelligence generated content(AIGC);ChatGPT239|195|3更新时间:2024-05-07

摘要:Artificial intelligence (AI) technology has been developing intensively, especially for such scenarios in relevance to its applications of 1) natural language processing, 2) computer vision, 3) recommendation systems, and 4) forecast analysis. AI technology has been challenging for human cognition over the past decade. In recent years, natural language processing techniques can be focused on more. ChatGPT, as a case of emerging generative AI technology, is launched in December of 2022. ChatGPT, as an advanced language model, is commonly used on the basis of its a) larger model sizes, b) advanced pre-training methods, c) faster computing resources, and d) more language processing tasks. This ChatGPT-related literature review is focused on its (1) public awareness and application status, (2) characteristics, (3) mechanisms, (4) scalability, (5) challenges and limitations, (6) future development and application prospects, and (7) improvements of GPT-4 relative to ChatGPT. Cognitive computing and AI-based ChatGPT can be as a sort of language model in terms of the Transformer architecture and Generative Pre-Training (GPT). This GPT-trained model can be related to natural language processing, which can predict the probability distribution of the next token using a multi-layer Transformer to generate natural language text. It can be outreached by training the learned language patterns on a large corpus of text. The OpenAI’s language model has shown a significant improvement in their level of intelligence from GPT-1(117 million parameters) in 2018 to GPT-3(175 billion parameters) in 2020. The language processing and generation capabilities of GPT have been improving dramatically in terms of consistent optimization like its 1) model size, 2) generative models, and 3) self-supervised learning. Thereafter, reinforcement learning-based InstructGPT is originated from Human Feedback and such probability of infeasible, untrue, and biased outputs can be significantly reduced in January 2022. In December 2022, ChatGPT is introduced as the sister model of InstructGPT. ChatGPT is not only add InstructGPT-based chat attributes, and a test version is opened to the public. The core technologies of ChatGPT can be linked to 1) reinforcement learning from human feedback(RLHF), 2) supervised fine-tuning(SFT), 3) instruction fine-tnning(IFT), and 4) chain-of-thought(CoT) as well. ChatGPT has attracked about 100 million active users per month after the launch of two months. In comparison, TikTok took nine months to achieve 100 million monthly active users, and Instagram took two and a half years. According to Similar Web, more than 13 million independent visitors use ChatGPT on average each day in January of 2023, which is more than twice in December of 2022. The leading US new media company Buzzfeed accurately seized the opportunity of ChatGPT and saw its stock price triple in two days. The ChatGPT-derived impact shows its potential preference for consumers. The ChatGPT can play mulitiple roles for such domain like clinics, translation, official administrations, and programming tasks. Such extensive application of ChatGPT is still to be developed. However, while ChatGPT has the potential for widespread application in various industries, it cannot be universally applied to all industries. For example, as certain industrial production processes typically rely on digitalization and do not necessitate the handling of human language, natural language processing techniques may not be required. Furthermore, various other factors, such as legal restrictions and data privacy concerns, may also impinge upon the application of natural language processing technologies within certain industries. For industries that require the processing of sensitive information, such as the healthcare industry, natural language processing technologies may need to comply with strict legal regulations to ensure data privacy and security. In addition to industry-specific reasons, it should be noted that ChatGPT has not yet achieved perfection in natural language processing tasks. In summary, as a phenomenal and technological product, AI-generated ChatGPT’s potentials are beneficial for textual and multi-modal AIGC applications to a certain extent, and it may have an impact on the a) survival of corporations, b) competition among countries, and c) entire social structure. However, the current various positive evaluations of ChatGPT can only be seen as a phenomenon of good rain after a long drought, and it cannot change the fact that ChatGPT is a questions and answers (Q&A) solution based on prior knowledge and models. It is required to be acknowledged that ChatGPT does not have its true recognition, intention, and creativity yet, and its true intelligence need to be tackled further.关键词:artificial intelligence(AI);deep learning;natural language processing;artificial intelligence generated content(AIGC);ChatGPT239|195|3更新时间:2024-05-07 -

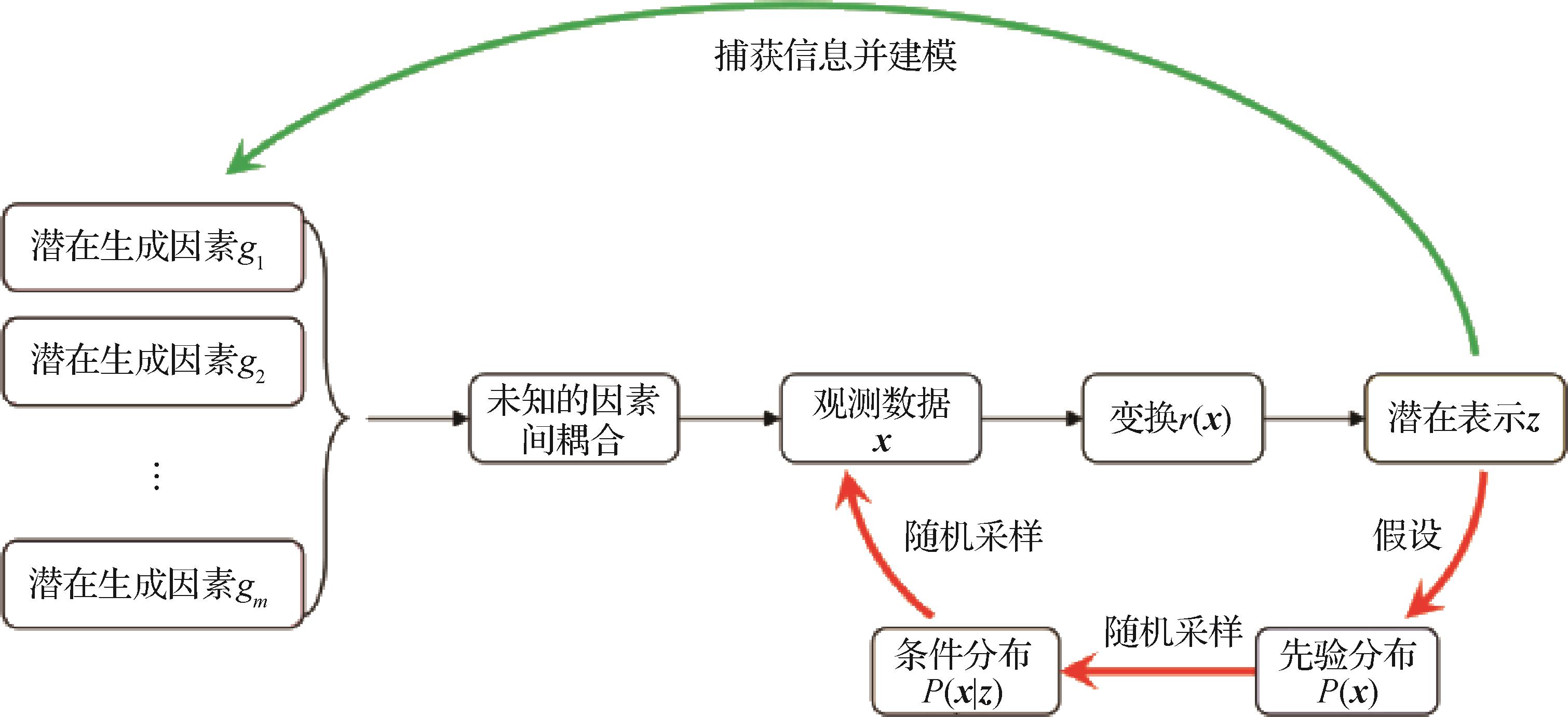

摘要:Representation learning is essential for machine learning technique nowadays. The transition of input representations have been developing intensively in algorithm performance benefited from the growth of hand-crafted features to the representation for multi-media data. However, the representations of visual data are often highly entangled. The interpretation challenges are to be faced because all information components are encoded into the same feature space. Disentangled representation learning (DRL) aims to learn a low-dimensional interpretable abstract representation that can sort the multiple factors of variation out in high-dimensional observations. In the disentangled representation, we can capture and manipulate the information of a single factor of variation through the corresponding latent subspace, which makes it more interpretable. DRL can improve sample efficiency and tolerance to the nuisance variables and offer robust representation of complex variations. Their semantic information is extracted and beneficial for artificial intelligence (AI) downstream tasks like recognition, classification and domain adaptation. Our summary is focused on brief introduction to the definition, research development and applications of DRL. Some of independent component analysis (ICA)-nonlinear DRL researches are covered as well since the DRL is similar to the identifiability issue of nonlinear independent component analysis (nonlinear ICA). The cause and effects mechanism of DRL as high-dimensional ground truth data is generated by a set of unobserved changing factors (generating factors). The DRL can be used to model the factors of variation in terms of latent representation, and the observed data generation process is restored. We summarize the key elements that a well-defined disentangled representation should be qualified into three aspects, which are 1) modularity, 2) compactness, and 3) explicitness. First, explicitness consists of the two sub-requirements of completeness and informativeness. Then, current DRL types are categorized into 1) dimension-wise disentanglement, 2) semantic-based disentanglement, 3) hierarchical disentanglement, and 4) nonlinear ICA four types in terms of its formulation, characteristics, and scope of application. Dimension-wise disentanglement is assumed that the generative factors are solely and each dimension of latent vector can be separated and mapped, which is suitable for learning the disentangled representation of simple synthetic visual data. Semantic-based disentanglement is hypnotized that some semantic information is solely as well. The generative factors are group-disentangled in terms of specific semantics and they are mapped to different latent spaces, which is suitable for complicated ground truth data. Hierarchical disentanglement is based on the assumption that there is a correlation between generative factors at different levels of abstraction. The generative factors are disentangled by group from the bottom up and they can be mapped to latent space of different semantic abstraction levels to form a hierarchical disentangled representation. Nonlinear ICA provides an identifiable method for observed data-mixed disentangling unknown generative factors through a nonlinear reversible generator. For the motivation of loss functions, the loss functions can be commonly used in disentangled representation learning, which are grouped into three categories: 1) modularity constraint: a single latent variable-constrained in the disentangled representation to capture only a single or a single group of factors of variation, and it promotes the separation of factors of variation mutually; 2) explicitness constraint: current latent variable of the latent representation is activated to encode the ground truth of the corresponding generating factor effectively, and the entire latent representation contains complete information about all generative factors; and 3) multi-purpose constraint: loss-related can optimize multiple disentangled representation, including modularity, compactness, and explicitness of the disentangled representation at the same time. The model-relevant can combine multiple loss constraint terms to form the final hybrid objective function. We compare the scope of application and limitations of each type of loss functions and summarize the classical disentangled representation works using the hybrid objective function further.关键词:disentangled representation learning;visual data;latent representation;factors of variation;latent space189|252|1更新时间:2024-05-07

摘要:Representation learning is essential for machine learning technique nowadays. The transition of input representations have been developing intensively in algorithm performance benefited from the growth of hand-crafted features to the representation for multi-media data. However, the representations of visual data are often highly entangled. The interpretation challenges are to be faced because all information components are encoded into the same feature space. Disentangled representation learning (DRL) aims to learn a low-dimensional interpretable abstract representation that can sort the multiple factors of variation out in high-dimensional observations. In the disentangled representation, we can capture and manipulate the information of a single factor of variation through the corresponding latent subspace, which makes it more interpretable. DRL can improve sample efficiency and tolerance to the nuisance variables and offer robust representation of complex variations. Their semantic information is extracted and beneficial for artificial intelligence (AI) downstream tasks like recognition, classification and domain adaptation. Our summary is focused on brief introduction to the definition, research development and applications of DRL. Some of independent component analysis (ICA)-nonlinear DRL researches are covered as well since the DRL is similar to the identifiability issue of nonlinear independent component analysis (nonlinear ICA). The cause and effects mechanism of DRL as high-dimensional ground truth data is generated by a set of unobserved changing factors (generating factors). The DRL can be used to model the factors of variation in terms of latent representation, and the observed data generation process is restored. We summarize the key elements that a well-defined disentangled representation should be qualified into three aspects, which are 1) modularity, 2) compactness, and 3) explicitness. First, explicitness consists of the two sub-requirements of completeness and informativeness. Then, current DRL types are categorized into 1) dimension-wise disentanglement, 2) semantic-based disentanglement, 3) hierarchical disentanglement, and 4) nonlinear ICA four types in terms of its formulation, characteristics, and scope of application. Dimension-wise disentanglement is assumed that the generative factors are solely and each dimension of latent vector can be separated and mapped, which is suitable for learning the disentangled representation of simple synthetic visual data. Semantic-based disentanglement is hypnotized that some semantic information is solely as well. The generative factors are group-disentangled in terms of specific semantics and they are mapped to different latent spaces, which is suitable for complicated ground truth data. Hierarchical disentanglement is based on the assumption that there is a correlation between generative factors at different levels of abstraction. The generative factors are disentangled by group from the bottom up and they can be mapped to latent space of different semantic abstraction levels to form a hierarchical disentangled representation. Nonlinear ICA provides an identifiable method for observed data-mixed disentangling unknown generative factors through a nonlinear reversible generator. For the motivation of loss functions, the loss functions can be commonly used in disentangled representation learning, which are grouped into three categories: 1) modularity constraint: a single latent variable-constrained in the disentangled representation to capture only a single or a single group of factors of variation, and it promotes the separation of factors of variation mutually; 2) explicitness constraint: current latent variable of the latent representation is activated to encode the ground truth of the corresponding generating factor effectively, and the entire latent representation contains complete information about all generative factors; and 3) multi-purpose constraint: loss-related can optimize multiple disentangled representation, including modularity, compactness, and explicitness of the disentangled representation at the same time. The model-relevant can combine multiple loss constraint terms to form the final hybrid objective function. We compare the scope of application and limitations of each type of loss functions and summarize the classical disentangled representation works using the hybrid objective function further.关键词:disentangled representation learning;visual data;latent representation;factors of variation;latent space189|252|1更新时间:2024-05-07 -

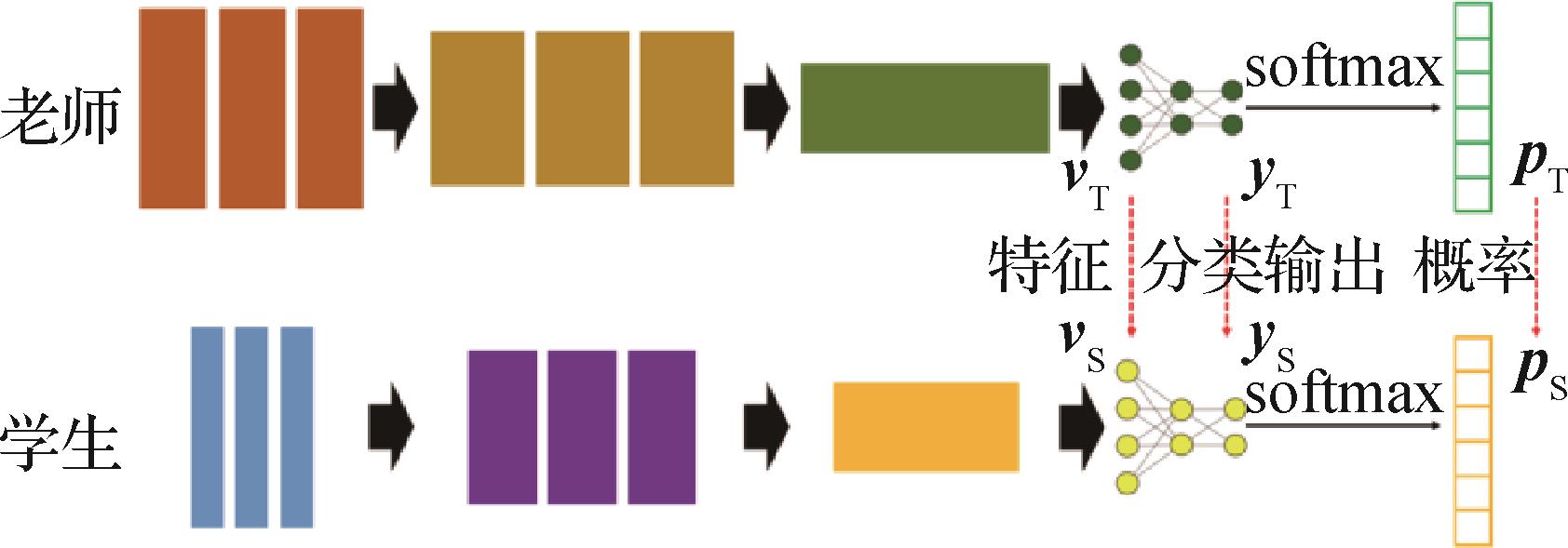

摘要:Computer vision tasks aim to construct computational models in relevant to functions-like of human visual systems. Current deep learning models are progressively improving upper bounds of performances in multiple computer vision tasks, especially for analysis and understanding of high-level semantics, i.e., multimedia-based descriptors for human recognition. Typical tasks to understand high-level semantics include image classification, object detection, instance segmentation, semantic segmentation, and video’s recognition and tracking. With the development of convolutional neural networks (CNNs), deep learning based high-level semantic understanding have all been benefiting from increasingly deeper and cumbersome models, which is also challenged for the problem of storages and computational costs. To obtain lighter structure and computation efficiency, many model compression strategies have been proposed, e.g., pruning, weight quantization, and low-rank factorization. But, such challenging issue is to be resolved for altered network structure or drop-severe of performance when deployed on computer vision tasks. Model distillation can be as one of the typical compression methods in terms of transfer learning to model compression. In general, model distillation utilizes a large and complicated pre-trained model as “teacher” and takes its effective representations, e.g., model outputs, features of hidden layers or feature maps-between similarities. These representations are treated as extra supervision signal together with the original ground truth for a lighter and faster model’s training, in which the lighter model is called “student”. As model distillation provides favorable balance between models’ performances and efficiency, it is being rapidly explored on different computer vision tasks. This paper investigates the progress of model distillation methods since its introduction in 2014 and introduces their different strategies in various applications. We review some popular distillation strategies and current model distillation algorithms deployed on image classification, object detection and semantic segmentation in this paper. First, we introduce distillation methods for image classification tasks, where model distillation has already achieved mature development. Fundamentals of model distillation starts from using teacher classifiers’ output logits as soft labels, bringing student with more inter-categories structural information, which is not available in conventional one-hot ground truths. Furthermore, hint learning can be used to utilize hierarchical structure of neural networks and take feature maps from hidden layers as another “teachers”-involved representations. Most of distillation strategies are designed and derived from similar approaches. In the aspects of frameworks’ design and application scenes, the paper respectively introduced some typical distillation strategies on classification models. Some methods mainly considered novel approaches on supervision signal design, i.e., ensembles that differs from conventional classification soft labels or feature maps. Newly developed features for student models to mimic are usually computed from attention or similarity maps of different layers, data augmentations or sampled images. Other methods consider adding noise or perturbation to teacher classifiers’ output or using probability inference to minimize the gap between teacher and student models. These specially designed features or logits are focused on a more appropriate representation of knowledge in teacher models than plain features from some layers’ outputs. Moreover, in other methods, the procedure of model distillation is altered, and more complicated schemes are introduced to transfer teacher’s knowledge instead of simply training the student with generated labels or features. Also, as generative adversarial networks (GANs) achieve promising performance in image synthesis, some model distillation methods also introduce adversarial mechanisms in classifiers’ distillation, where teacher models’ features are regarded as “real ones” and the students are expected to “generate” similar features. In many practical scenes such as model compression, self-training and parallel computing, classifiers’ distillation is utilized in coordinate to specific process as well, e.g., fine tuning networks with full-precision teachers, distilling student model with its previous versions during training, and using models from different nodes as teachers. We summarize some popular strategies performances and illustrate the data in a table after approaches of model distillation in image classification tasks are introduced. Distillation methods’ performances on improving classifiers’ top-1 accuracies are compared on several typical classification datasets. The second part of the paper focuses on specially developed distillation methods for computer vision tasks more complicated than classification, e.g., object detection, instance segmentation and semantic segmentation. Differentiated from classifiers, models of these tasks contain more redundant structures with heterogeneous outputs. Hence, recent works on detectors’ and segmentation models’ distillation is relatively less than those in classifiers’ distillation. The paper describes current challenges in designing of distillation frameworks on detection and segmentation tasks. Some of typical distillation methods for detectors and segmentation models are then introduced based on different tasks and their multifaceted structures. Since there were few works specified for instance segmentation models’ distillation, the papers simply introduce similar distillation methods for object detectors in the beginning of the second part. For detectors, requirements from localization demand special concentration on local information around foreground objects. Meanwhile, images from object detection datasets consists of more complicated scenes generally in which large amounts of different objects may occur. Hence, the solutions of distillation strategies-borrowing from for classifies may bring undesired performance decrease in object detection. Due to more complex structures in detectors, previous distillation methods may not be applicable. As “backbone with task heads” structure is widely used in modern computer vision models, researchers develop novel distillation methods mainly based on this typical framework. The introduced detectors’ distillation strategies investigate issues above and mainly focus on specific output logits acquirement and specially designed loss functions for different parts in detectors. To highlight foreground regions before distillation, backbones-derived feature maps are often selected through regions of interest (RoIs) using masking operations. Various of output logits are selected in different methods from teacher models’ task heads, affecting training of students’ task heads in terms of specific matching and imitation schemes. Semantic segmentation requires more global information than object detection or instance segmentation tasks, focusing on pixel-wise classification inside the total image. One of the critical factors of pixels’ correct classification is oriented to the analysis of inter-pixel relationships. Hence, model distillation methods for semantic segmentation also take advantages of pixels in both output masks and feature maps from hidden layers. Distillation strategies introduced in the paper are majorly on the application of hierarchical distillation on different part, e.g., the imitation of full output classification mask, imitation of full feature maps, computing of similarity matrices, and using conditional GANs (cGANs) for auxiliary imitation. The former two approaches are fundamental practices in model distillation. In contrast, to realize segmentation model’s pixel-wise knowledge to be more ‘compact’ after compression, some distillation methods utilize compressed features instead of original one to compute similarity with student. When cGANs is used to imitate student segmentation model to the teacher features, researchers introduce Wasserstein distance as a better metric for adversarial training. At the final part of this paper, previous works of model distillation for high-level semantic understanding are summarized. We review some obstacles and unsolved problems in current development of model distillation, and the future research direction is predicted as well.关键词:model distillation;deep learning;image classification;object detection;semantic segmentation;transfer learning188|450|1更新时间:2024-05-07

摘要:Computer vision tasks aim to construct computational models in relevant to functions-like of human visual systems. Current deep learning models are progressively improving upper bounds of performances in multiple computer vision tasks, especially for analysis and understanding of high-level semantics, i.e., multimedia-based descriptors for human recognition. Typical tasks to understand high-level semantics include image classification, object detection, instance segmentation, semantic segmentation, and video’s recognition and tracking. With the development of convolutional neural networks (CNNs), deep learning based high-level semantic understanding have all been benefiting from increasingly deeper and cumbersome models, which is also challenged for the problem of storages and computational costs. To obtain lighter structure and computation efficiency, many model compression strategies have been proposed, e.g., pruning, weight quantization, and low-rank factorization. But, such challenging issue is to be resolved for altered network structure or drop-severe of performance when deployed on computer vision tasks. Model distillation can be as one of the typical compression methods in terms of transfer learning to model compression. In general, model distillation utilizes a large and complicated pre-trained model as “teacher” and takes its effective representations, e.g., model outputs, features of hidden layers or feature maps-between similarities. These representations are treated as extra supervision signal together with the original ground truth for a lighter and faster model’s training, in which the lighter model is called “student”. As model distillation provides favorable balance between models’ performances and efficiency, it is being rapidly explored on different computer vision tasks. This paper investigates the progress of model distillation methods since its introduction in 2014 and introduces their different strategies in various applications. We review some popular distillation strategies and current model distillation algorithms deployed on image classification, object detection and semantic segmentation in this paper. First, we introduce distillation methods for image classification tasks, where model distillation has already achieved mature development. Fundamentals of model distillation starts from using teacher classifiers’ output logits as soft labels, bringing student with more inter-categories structural information, which is not available in conventional one-hot ground truths. Furthermore, hint learning can be used to utilize hierarchical structure of neural networks and take feature maps from hidden layers as another “teachers”-involved representations. Most of distillation strategies are designed and derived from similar approaches. In the aspects of frameworks’ design and application scenes, the paper respectively introduced some typical distillation strategies on classification models. Some methods mainly considered novel approaches on supervision signal design, i.e., ensembles that differs from conventional classification soft labels or feature maps. Newly developed features for student models to mimic are usually computed from attention or similarity maps of different layers, data augmentations or sampled images. Other methods consider adding noise or perturbation to teacher classifiers’ output or using probability inference to minimize the gap between teacher and student models. These specially designed features or logits are focused on a more appropriate representation of knowledge in teacher models than plain features from some layers’ outputs. Moreover, in other methods, the procedure of model distillation is altered, and more complicated schemes are introduced to transfer teacher’s knowledge instead of simply training the student with generated labels or features. Also, as generative adversarial networks (GANs) achieve promising performance in image synthesis, some model distillation methods also introduce adversarial mechanisms in classifiers’ distillation, where teacher models’ features are regarded as “real ones” and the students are expected to “generate” similar features. In many practical scenes such as model compression, self-training and parallel computing, classifiers’ distillation is utilized in coordinate to specific process as well, e.g., fine tuning networks with full-precision teachers, distilling student model with its previous versions during training, and using models from different nodes as teachers. We summarize some popular strategies performances and illustrate the data in a table after approaches of model distillation in image classification tasks are introduced. Distillation methods’ performances on improving classifiers’ top-1 accuracies are compared on several typical classification datasets. The second part of the paper focuses on specially developed distillation methods for computer vision tasks more complicated than classification, e.g., object detection, instance segmentation and semantic segmentation. Differentiated from classifiers, models of these tasks contain more redundant structures with heterogeneous outputs. Hence, recent works on detectors’ and segmentation models’ distillation is relatively less than those in classifiers’ distillation. The paper describes current challenges in designing of distillation frameworks on detection and segmentation tasks. Some of typical distillation methods for detectors and segmentation models are then introduced based on different tasks and their multifaceted structures. Since there were few works specified for instance segmentation models’ distillation, the papers simply introduce similar distillation methods for object detectors in the beginning of the second part. For detectors, requirements from localization demand special concentration on local information around foreground objects. Meanwhile, images from object detection datasets consists of more complicated scenes generally in which large amounts of different objects may occur. Hence, the solutions of distillation strategies-borrowing from for classifies may bring undesired performance decrease in object detection. Due to more complex structures in detectors, previous distillation methods may not be applicable. As “backbone with task heads” structure is widely used in modern computer vision models, researchers develop novel distillation methods mainly based on this typical framework. The introduced detectors’ distillation strategies investigate issues above and mainly focus on specific output logits acquirement and specially designed loss functions for different parts in detectors. To highlight foreground regions before distillation, backbones-derived feature maps are often selected through regions of interest (RoIs) using masking operations. Various of output logits are selected in different methods from teacher models’ task heads, affecting training of students’ task heads in terms of specific matching and imitation schemes. Semantic segmentation requires more global information than object detection or instance segmentation tasks, focusing on pixel-wise classification inside the total image. One of the critical factors of pixels’ correct classification is oriented to the analysis of inter-pixel relationships. Hence, model distillation methods for semantic segmentation also take advantages of pixels in both output masks and feature maps from hidden layers. Distillation strategies introduced in the paper are majorly on the application of hierarchical distillation on different part, e.g., the imitation of full output classification mask, imitation of full feature maps, computing of similarity matrices, and using conditional GANs (cGANs) for auxiliary imitation. The former two approaches are fundamental practices in model distillation. In contrast, to realize segmentation model’s pixel-wise knowledge to be more ‘compact’ after compression, some distillation methods utilize compressed features instead of original one to compute similarity with student. When cGANs is used to imitate student segmentation model to the teacher features, researchers introduce Wasserstein distance as a better metric for adversarial training. At the final part of this paper, previous works of model distillation for high-level semantic understanding are summarized. We review some obstacles and unsolved problems in current development of model distillation, and the future research direction is predicted as well.关键词:model distillation;deep learning;image classification;object detection;semantic segmentation;transfer learning188|450|1更新时间:2024-05-07 -

摘要:Deep learning technique has been developing intensively in big data era. However, its capability is still challenged for the design of network structure and parameter setting. Therefore, it is essential to improve the performance of the model and optimize the complexity of the model. Machine learning can be segmented into five categories in terms of learning methods: 1) supervised learning, 2) unsupervised learning, 3) semi-supervised learning, 4) deep learning, and 5) reinforcement learning. These machine learning techniques are required to be incorporated in. To improve its fitting and generalization ability, we select supervised deep learning as a niche to summarize and analyze the optimization methods. First, the mechanism of optimization is demonstrated and its key elements are illustrated. Then, the optimization problem is decomposed into three directions in relevant to fitting ability: 1) convergence, 2) convergence speed, and 3) global-context quality. At the same time, we also summarize and analyze the specific methods and research results of these three optimization directions. Among them, convergence refers to running the algorithm and converging to a synthesis like a stationary point. The gradient exploding/vanishing problem is shown that small changes in a multi-layer network may amplify and stimuli or decline and disappear for each layer. The speed of convergence refers to the ability to assist the model to converge at a faster speed. After the convergence task of the model, the optimization algorithm to accelerate the model convergence should be considered to improve the performance of the model. The global-context quality problem is to ensure that the model converges to a lower solution (the global minimum). The first two problems are local-oriented and the last one is global-concerned. The boundary of these three problems is fuzzy, for example, some optimization methods to improve convergence can accelerate the convergence speed of the model as well. After the fitting optimization of the model, it is necessary to consider the large number of parameters in the deep learning model as well, which can cause poor generalization effect due to overfitting. Regularization can be regarded as an effective method for generalization. To improve the generalization ability of the model, current situation of regularization methods are categorized from two aspects: 1) data processing and 2) model parameters-constrained. Data processing refers to data processing during model training, such as dataset enhancement, noise injection and adversarial training. These optimization methods can improve the generalization ability of the model effectively. Model parameters constraints are oriented to parameters-constrained in the network, which can also improve the generalization ability of the model. We take generative adversarial network (GAN) as the application background and review the growth of its variant model because it can be as a commonly-used deep learning network. We analyze the application of relevant optimization methods in GAN domain from two aspects of fitting and generalization ability. Taking WGAN with gradient penalty (WGAN-GP) as the basic model, we design an experiment on MNIST-10 dataset to study the applicability of the six algorithms (stochastic gradient method(SGD), momentum SGD, Adagrad, Adadelta, root mean square propagation(RMSProp), and Adam) in the context of deep learning based GAN domain. The optimization effects are compared and analyzed in relevant to the experimental results of multiple optimization methods on variants of GAN model, and some GAN-based optimization strategies are required to be clarified further. At present, various optimization methods have been widely used in deep learning models. Various optimization methods to improve the fitting ability can improve the performance of the model. Furthermore, these regularized optimization methods are beneficial to alleviate the problem of model overfitting and improve the robustness of the model. But, there is still a lack of systematic theories and mechanisms for guidance. In addition, there are still some optimization problems to be further studied. The Lipschitz limitation of global gradients is not guaranteed in deep neural networks due to the gap between theory and practice. In the field of GAN, there is still a lack of theoretical breakthroughs to find the stable global optimal solution, that is, the optimal Nash equilibrium. Moreover, some of the existing optimization methods are empirical and its interpretability is lack of clear theoretical proof. There are many and complex optimization methods in deep learning. The use of various optimization methods should be focused on the integrated effect of multiple optimizations. Our critical analysis is potential to provide a reference for the optimization method selection in the design of deep neural network.关键词:machine learning;deep learning;deep learning optimization;regularization;generative adversarial network (GAN)225|389|1更新时间:2024-05-07

摘要:Deep learning technique has been developing intensively in big data era. However, its capability is still challenged for the design of network structure and parameter setting. Therefore, it is essential to improve the performance of the model and optimize the complexity of the model. Machine learning can be segmented into five categories in terms of learning methods: 1) supervised learning, 2) unsupervised learning, 3) semi-supervised learning, 4) deep learning, and 5) reinforcement learning. These machine learning techniques are required to be incorporated in. To improve its fitting and generalization ability, we select supervised deep learning as a niche to summarize and analyze the optimization methods. First, the mechanism of optimization is demonstrated and its key elements are illustrated. Then, the optimization problem is decomposed into three directions in relevant to fitting ability: 1) convergence, 2) convergence speed, and 3) global-context quality. At the same time, we also summarize and analyze the specific methods and research results of these three optimization directions. Among them, convergence refers to running the algorithm and converging to a synthesis like a stationary point. The gradient exploding/vanishing problem is shown that small changes in a multi-layer network may amplify and stimuli or decline and disappear for each layer. The speed of convergence refers to the ability to assist the model to converge at a faster speed. After the convergence task of the model, the optimization algorithm to accelerate the model convergence should be considered to improve the performance of the model. The global-context quality problem is to ensure that the model converges to a lower solution (the global minimum). The first two problems are local-oriented and the last one is global-concerned. The boundary of these three problems is fuzzy, for example, some optimization methods to improve convergence can accelerate the convergence speed of the model as well. After the fitting optimization of the model, it is necessary to consider the large number of parameters in the deep learning model as well, which can cause poor generalization effect due to overfitting. Regularization can be regarded as an effective method for generalization. To improve the generalization ability of the model, current situation of regularization methods are categorized from two aspects: 1) data processing and 2) model parameters-constrained. Data processing refers to data processing during model training, such as dataset enhancement, noise injection and adversarial training. These optimization methods can improve the generalization ability of the model effectively. Model parameters constraints are oriented to parameters-constrained in the network, which can also improve the generalization ability of the model. We take generative adversarial network (GAN) as the application background and review the growth of its variant model because it can be as a commonly-used deep learning network. We analyze the application of relevant optimization methods in GAN domain from two aspects of fitting and generalization ability. Taking WGAN with gradient penalty (WGAN-GP) as the basic model, we design an experiment on MNIST-10 dataset to study the applicability of the six algorithms (stochastic gradient method(SGD), momentum SGD, Adagrad, Adadelta, root mean square propagation(RMSProp), and Adam) in the context of deep learning based GAN domain. The optimization effects are compared and analyzed in relevant to the experimental results of multiple optimization methods on variants of GAN model, and some GAN-based optimization strategies are required to be clarified further. At present, various optimization methods have been widely used in deep learning models. Various optimization methods to improve the fitting ability can improve the performance of the model. Furthermore, these regularized optimization methods are beneficial to alleviate the problem of model overfitting and improve the robustness of the model. But, there is still a lack of systematic theories and mechanisms for guidance. In addition, there are still some optimization problems to be further studied. The Lipschitz limitation of global gradients is not guaranteed in deep neural networks due to the gap between theory and practice. In the field of GAN, there is still a lack of theoretical breakthroughs to find the stable global optimal solution, that is, the optimal Nash equilibrium. Moreover, some of the existing optimization methods are empirical and its interpretability is lack of clear theoretical proof. There are many and complex optimization methods in deep learning. The use of various optimization methods should be focused on the integrated effect of multiple optimizations. Our critical analysis is potential to provide a reference for the optimization method selection in the design of deep neural network.关键词:machine learning;deep learning;deep learning optimization;regularization;generative adversarial network (GAN)225|389|1更新时间:2024-05-07

Review

-

摘要:ObjectiveShip hull number detection and recognition can be as the key technologies for marine awareness. It is essential for the preservation of maritime rights and interests. However, it is required to data-driven researches in support of ship hull number detection and recognition. Therefore, we develop a sparse ship hull number dataset in real scene (SSHN-RS), which contains 3 004 images with a total of 11 328 hull numbers. The challenging SSHN-RS dataset is featured of ship hull numbers of various countries, hull numbers of various types, horizontal hull numbers, inclined hull numbers, hull numbers with complex background, hull numbers with simple background, poorly illuminated hull numbers and partially occluded hull numbers. We carry out SSHN-RS-related research on ship hull number detection and recognition. The main challenges are required to be resolved on three aspects as following: 1) the hull number samples are sparse, which causes over-fitting of the network, 2) the features of hull number are densely distributed, which is challenged to learn some of the hull number characteristics fully, and 3) some hull number have its nested areas and a high degree of similarity, which is costly for large number of redundant results.MethodTo resolve these problems mentioned above, we demonstrate a ship hull number detection and recognition algorithm in terms of multi-view progressive context decoupling. First, a random perspective transformation technology with fixed center and maximized area is illustrated. To realize data augmentation and improve the generalization ability of the model, the hull number spatial attitude is extended without increasing the number of samples. Second, a progressive context decoupling technology is proposed. A series of new samples are first generated by sequentially erasing each character of the hull number, and the feature extraction network is then used to extract and fuse the multi-scale features of each sample. It can reduce the influence of feature-contextual information on feature learning and rich the data expansion. It can improve the feature expression ability effectively as well. Finally, in the testing stage, to generate new samples, and inputs the new samples into the testing network for prediction, an inter mask disturbance suppression technology is first focused on a method similar to the progressive context decoupling technology. At the same time, a one-dimensional non-maximum suppression technology is introduced that it can mainly process the hull number recognition results of each sample twice. To suppress the redundant mask in the detection and recognition results effectively, it can make each character of the hull number of all samples correspond to a recognition result. For the testing network, the output has only a set of optimal results. However, there is hull number noisy recognition in some samples. Therefore, the hull number recognition results of all samples are added on the original images, and the second one-dimensional non-maximum suppression technology is performed and processed on it. It can suppress the noises in some samples and outputs a set of optimal results. The post-processing module can optimize the detection and recognition performance further.ResultThe comparative experiment is mainly carried out on SSHN-RS. First, we conduct evaluation analysis on the general instance segmentation algorithms. Multi-view progressive context decoupling-based detection precision, recall, f-score and recognition rate of the ship number detection and recognition algorithm can reach 0.985 4, 0.957 6, 0.971 3 and 0.901 8, which are improved by 4.51%, 3.45%, 3.97% and 8.83% respectively compared to the second-ranked method. Second, the ablation experiments on the ship hull number detection and recognition algorithm are carried out in terms of multi-view progressive context decoupling. The experimental results show that the performance of ship hull number detection and recognition is improved whether each technology is used solely or mixed. Finally, to validate its effectiveness, we apply some popular modules of the ship hull number detection and recognition algorithm based on multi-view progressive context decoupling to other general instance segmentation algorithms. Taking the classic algorithm mask region based convolutional neural network(Mask RCNN) as an example, the indexes have been improved by 9.82%, 6.04%, 7.80% and 6.73% of each after our algorithm module is added.ConclusionOur SSHN-RS contains rich and effective ship hull number information, which can provide data support for ship hull number detection and recognition. The experimental results show that the multi-view progressive context decoupling-based ship hull number detection and recognition algorithm can optimize the detection and recognition performance of hull numbers due to sparse samples, dense character distribution, nested areas, and characters-between high similarity. This algorithm can be generalized to other deep learning based segmentation algorithms. The benchmarks-relevant are provided as a basis for future research on ship hull number detection and recognition. The dataset is available at https://github.com/Bingchuan897/SSHN-RS.关键词:sparse samples;public dataset;ship hull number detection and recognition;instance segmentation;data augmentation;progressive context decoupling336|1931|1更新时间:2024-05-07

摘要:ObjectiveShip hull number detection and recognition can be as the key technologies for marine awareness. It is essential for the preservation of maritime rights and interests. However, it is required to data-driven researches in support of ship hull number detection and recognition. Therefore, we develop a sparse ship hull number dataset in real scene (SSHN-RS), which contains 3 004 images with a total of 11 328 hull numbers. The challenging SSHN-RS dataset is featured of ship hull numbers of various countries, hull numbers of various types, horizontal hull numbers, inclined hull numbers, hull numbers with complex background, hull numbers with simple background, poorly illuminated hull numbers and partially occluded hull numbers. We carry out SSHN-RS-related research on ship hull number detection and recognition. The main challenges are required to be resolved on three aspects as following: 1) the hull number samples are sparse, which causes over-fitting of the network, 2) the features of hull number are densely distributed, which is challenged to learn some of the hull number characteristics fully, and 3) some hull number have its nested areas and a high degree of similarity, which is costly for large number of redundant results.MethodTo resolve these problems mentioned above, we demonstrate a ship hull number detection and recognition algorithm in terms of multi-view progressive context decoupling. First, a random perspective transformation technology with fixed center and maximized area is illustrated. To realize data augmentation and improve the generalization ability of the model, the hull number spatial attitude is extended without increasing the number of samples. Second, a progressive context decoupling technology is proposed. A series of new samples are first generated by sequentially erasing each character of the hull number, and the feature extraction network is then used to extract and fuse the multi-scale features of each sample. It can reduce the influence of feature-contextual information on feature learning and rich the data expansion. It can improve the feature expression ability effectively as well. Finally, in the testing stage, to generate new samples, and inputs the new samples into the testing network for prediction, an inter mask disturbance suppression technology is first focused on a method similar to the progressive context decoupling technology. At the same time, a one-dimensional non-maximum suppression technology is introduced that it can mainly process the hull number recognition results of each sample twice. To suppress the redundant mask in the detection and recognition results effectively, it can make each character of the hull number of all samples correspond to a recognition result. For the testing network, the output has only a set of optimal results. However, there is hull number noisy recognition in some samples. Therefore, the hull number recognition results of all samples are added on the original images, and the second one-dimensional non-maximum suppression technology is performed and processed on it. It can suppress the noises in some samples and outputs a set of optimal results. The post-processing module can optimize the detection and recognition performance further.ResultThe comparative experiment is mainly carried out on SSHN-RS. First, we conduct evaluation analysis on the general instance segmentation algorithms. Multi-view progressive context decoupling-based detection precision, recall, f-score and recognition rate of the ship number detection and recognition algorithm can reach 0.985 4, 0.957 6, 0.971 3 and 0.901 8, which are improved by 4.51%, 3.45%, 3.97% and 8.83% respectively compared to the second-ranked method. Second, the ablation experiments on the ship hull number detection and recognition algorithm are carried out in terms of multi-view progressive context decoupling. The experimental results show that the performance of ship hull number detection and recognition is improved whether each technology is used solely or mixed. Finally, to validate its effectiveness, we apply some popular modules of the ship hull number detection and recognition algorithm based on multi-view progressive context decoupling to other general instance segmentation algorithms. Taking the classic algorithm mask region based convolutional neural network(Mask RCNN) as an example, the indexes have been improved by 9.82%, 6.04%, 7.80% and 6.73% of each after our algorithm module is added.ConclusionOur SSHN-RS contains rich and effective ship hull number information, which can provide data support for ship hull number detection and recognition. The experimental results show that the multi-view progressive context decoupling-based ship hull number detection and recognition algorithm can optimize the detection and recognition performance of hull numbers due to sparse samples, dense character distribution, nested areas, and characters-between high similarity. This algorithm can be generalized to other deep learning based segmentation algorithms. The benchmarks-relevant are provided as a basis for future research on ship hull number detection and recognition. The dataset is available at https://github.com/Bingchuan897/SSHN-RS.关键词:sparse samples;public dataset;ship hull number detection and recognition;instance segmentation;data augmentation;progressive context decoupling336|1931|1更新时间:2024-05-07 -

摘要:ObjectiveTo optimize the detection accuracy, lightweight target detection method is focused on the problem of cost efficiency of computation and storage. The Mobilenetv3 can extract feature effectively through the inverted residual structures-related bneck. However, features are connected with bneck-inner only excluded the bneck-between feature connection. The network accuracy is not optimized because more initial features are not involved in. To achieve a better balance between computation and detection accuracy, the neural architecture search-feature pyramid networks lite(NAS-FPNLite) can be as an effective target detection method based on deep learning technique. The NAS-FPNLite detector is focused on depth separation convolution in the feature pyramid part and the channel number of the intermediate feature layer can be compressed to a fixed 64-dimensional. A better balance can be achieved between the floating point operations and detection accuracy. The depth separation convolution can configure the parameters to 0 easily under this circumstance. To resolve these two problems, we develop a NAS-FPNLite lightweight object detection method in terms of the fusion of cross stage connection and inverted residual. First, an improved network model CSCMobileNetv3 is illustrated, which can obtain more multifaceted information and improves the efficiency of network feature extraction. Next, the inverted residual structure is applied to the feature pyramid part of NAS-FPNLite to obtain a higher number of channels during the depthwise separable convolutions, which can improve the detection accuracy on possibility-alleviated of those parameters become 0. Finally, the experiment for CSCMobilenetv3 model is validated on the Canadian Institute for Advanced Research(CIFAR)-100 and ImageNet 1000 datasets, as well as the inverted residual NAS-FPNLite detector in the COCO (common objects in context) dataset.MethodAt the beginning, to obtain different gradient information between network layers, a cross stage connection (CSC) structure is proposed in terms of DenseNet dense connection, which can combine the initial input with final output of the same level network block to obtain the gradient combination with the maximum difference, and get an improved CSCMobileNetv3 network model. The CSCMobileNetv3 network model is composed of 6 block structures, the first two blocks remain unchanged, and the last four block structures are combined with the CSC structure. Within the same block, the initial input is combined with the final output and as the input of the next block. At the same time, to obtain more different gradient information, the number of channels between the various blocks is changed from the original 16, 24, 40, 80, 112, 160 to 16, 24, 40, 80, 160, 320 in correspondent, It can surpress the intensity of the number of parameters and the amount of floating point operations effectively derived from the excessive expansion of channels. Then, in the detector part of NAS-FPNLite, the feature pyramid part is fused with the inverted residual structure, and the feature fusion method of element-by-element growth between different feature layers is replaced by the channel-concatenated. It is possible to maintain a higher number of channels via processing depthwise separable convolutions. To perform sufficient feature fusion, the situation where the parameters become 0 is avoided effectively, and a skip connection is realized between the input feature layer and the final output layer. A NAS-FPNLite object detection method fused with inverted residuals is demonstrated.ResultIn the training stage, our configuration is equipped with listed below: 1) the graphics card used is NVIDIA GeForce GTX 2070 Super, 2) 8 GB video memory, 3) CUDA version is CUDA10.0, and 4) the CPU is 8-core AMD Ryzen7 3700x. On CIFAR-100 dataset training, since the image resolution of the CIFAR-100 dataset is 32 × 32 pixels, and the CSCMobileNetv3 is required of the resolution of the input image to be set to 224 × 224 pixels, the first convolutional layer, and in the first and third bnecks, the convolution stride is set from 2 to 1, 200 epochs are trained, the learning rate is set to multi-stage adjustment, the initial learning rate lr is set to 0.1, and then at 100, 150 and 180 epochs, multiply the learning rate by a factor of 10. On ImageNet 1000 dataset training, the dataset needs to be preprocessed first, the image resolution is adjusted to 224 × 224 pixels as the input of the network, the number of training iterations is 150 epochs, the cosine annealing learning strategy is used, and the initial learning rate lr is 0.02. The experimental results show that the accuracy of the CSCMobileNetv3 network can be increased by 0.71% to 1.04% compared to MobileNetv3 when the scaling factors are 0.5, 0.75, and 1.0 on the CIFAR-100 dataset. Compared to MobileNetv3, CSCMobileNetv3 increases the number of parameters by 6% and the amount of floating point operations by about 11% when the scaling factor is 1.0, but the accuracy rate increases by 1.04%. Especially, when the CSCMobileNetv3 zoom factor is 0.75, the number of parameters is reduced by 30% compared to the MobileNetv3 zoom factor of 1.0 and the amount of floating point operations is reduced by 20%. The accuracy rate is still improved by 0.19%. On the ImageNet 1000 dataset, CSCMobileNetv3 has a 0.7% improvement in accuracy although the amount of parameters and floating point operations is slightly higher than that of MobileNetv3. To sum up, CSCMobileNetv3 has optimized relevant parameters, floating-point operations and accuracy. On the COCO dataset, compared to other lightweight object detection methods, the detection accuracy of CSCMobileNetv3 and NAS-FPNLite lightweight object detection method fused with inverted residuals can be improved by 0.7% to 4% based on equivalent computation.ConclusionThe CSCMobileNetv3 can obtain differential gradient information effectively, and achieve higher accuracy with a small calculation only. To improve detection accuracy, the NAS-FPNLite object detection method fused with inverted residuals can avoid the situation effectively where the parameters become 0. Our method has its balancing potentials between the amount of calculation and detection accuracy.关键词:lightweight object detection;image classification;depthwise separable convolution;multi-scale feature fusion139|267|1更新时间:2024-05-07

摘要:ObjectiveTo optimize the detection accuracy, lightweight target detection method is focused on the problem of cost efficiency of computation and storage. The Mobilenetv3 can extract feature effectively through the inverted residual structures-related bneck. However, features are connected with bneck-inner only excluded the bneck-between feature connection. The network accuracy is not optimized because more initial features are not involved in. To achieve a better balance between computation and detection accuracy, the neural architecture search-feature pyramid networks lite(NAS-FPNLite) can be as an effective target detection method based on deep learning technique. The NAS-FPNLite detector is focused on depth separation convolution in the feature pyramid part and the channel number of the intermediate feature layer can be compressed to a fixed 64-dimensional. A better balance can be achieved between the floating point operations and detection accuracy. The depth separation convolution can configure the parameters to 0 easily under this circumstance. To resolve these two problems, we develop a NAS-FPNLite lightweight object detection method in terms of the fusion of cross stage connection and inverted residual. First, an improved network model CSCMobileNetv3 is illustrated, which can obtain more multifaceted information and improves the efficiency of network feature extraction. Next, the inverted residual structure is applied to the feature pyramid part of NAS-FPNLite to obtain a higher number of channels during the depthwise separable convolutions, which can improve the detection accuracy on possibility-alleviated of those parameters become 0. Finally, the experiment for CSCMobilenetv3 model is validated on the Canadian Institute for Advanced Research(CIFAR)-100 and ImageNet 1000 datasets, as well as the inverted residual NAS-FPNLite detector in the COCO (common objects in context) dataset.MethodAt the beginning, to obtain different gradient information between network layers, a cross stage connection (CSC) structure is proposed in terms of DenseNet dense connection, which can combine the initial input with final output of the same level network block to obtain the gradient combination with the maximum difference, and get an improved CSCMobileNetv3 network model. The CSCMobileNetv3 network model is composed of 6 block structures, the first two blocks remain unchanged, and the last four block structures are combined with the CSC structure. Within the same block, the initial input is combined with the final output and as the input of the next block. At the same time, to obtain more different gradient information, the number of channels between the various blocks is changed from the original 16, 24, 40, 80, 112, 160 to 16, 24, 40, 80, 160, 320 in correspondent, It can surpress the intensity of the number of parameters and the amount of floating point operations effectively derived from the excessive expansion of channels. Then, in the detector part of NAS-FPNLite, the feature pyramid part is fused with the inverted residual structure, and the feature fusion method of element-by-element growth between different feature layers is replaced by the channel-concatenated. It is possible to maintain a higher number of channels via processing depthwise separable convolutions. To perform sufficient feature fusion, the situation where the parameters become 0 is avoided effectively, and a skip connection is realized between the input feature layer and the final output layer. A NAS-FPNLite object detection method fused with inverted residuals is demonstrated.ResultIn the training stage, our configuration is equipped with listed below: 1) the graphics card used is NVIDIA GeForce GTX 2070 Super, 2) 8 GB video memory, 3) CUDA version is CUDA10.0, and 4) the CPU is 8-core AMD Ryzen7 3700x. On CIFAR-100 dataset training, since the image resolution of the CIFAR-100 dataset is 32 × 32 pixels, and the CSCMobileNetv3 is required of the resolution of the input image to be set to 224 × 224 pixels, the first convolutional layer, and in the first and third bnecks, the convolution stride is set from 2 to 1, 200 epochs are trained, the learning rate is set to multi-stage adjustment, the initial learning rate lr is set to 0.1, and then at 100, 150 and 180 epochs, multiply the learning rate by a factor of 10. On ImageNet 1000 dataset training, the dataset needs to be preprocessed first, the image resolution is adjusted to 224 × 224 pixels as the input of the network, the number of training iterations is 150 epochs, the cosine annealing learning strategy is used, and the initial learning rate lr is 0.02. The experimental results show that the accuracy of the CSCMobileNetv3 network can be increased by 0.71% to 1.04% compared to MobileNetv3 when the scaling factors are 0.5, 0.75, and 1.0 on the CIFAR-100 dataset. Compared to MobileNetv3, CSCMobileNetv3 increases the number of parameters by 6% and the amount of floating point operations by about 11% when the scaling factor is 1.0, but the accuracy rate increases by 1.04%. Especially, when the CSCMobileNetv3 zoom factor is 0.75, the number of parameters is reduced by 30% compared to the MobileNetv3 zoom factor of 1.0 and the amount of floating point operations is reduced by 20%. The accuracy rate is still improved by 0.19%. On the ImageNet 1000 dataset, CSCMobileNetv3 has a 0.7% improvement in accuracy although the amount of parameters and floating point operations is slightly higher than that of MobileNetv3. To sum up, CSCMobileNetv3 has optimized relevant parameters, floating-point operations and accuracy. On the COCO dataset, compared to other lightweight object detection methods, the detection accuracy of CSCMobileNetv3 and NAS-FPNLite lightweight object detection method fused with inverted residuals can be improved by 0.7% to 4% based on equivalent computation.ConclusionThe CSCMobileNetv3 can obtain differential gradient information effectively, and achieve higher accuracy with a small calculation only. To improve detection accuracy, the NAS-FPNLite object detection method fused with inverted residuals can avoid the situation effectively where the parameters become 0. Our method has its balancing potentials between the amount of calculation and detection accuracy.关键词:lightweight object detection;image classification;depthwise separable convolution;multi-scale feature fusion139|267|1更新时间:2024-05-07 -

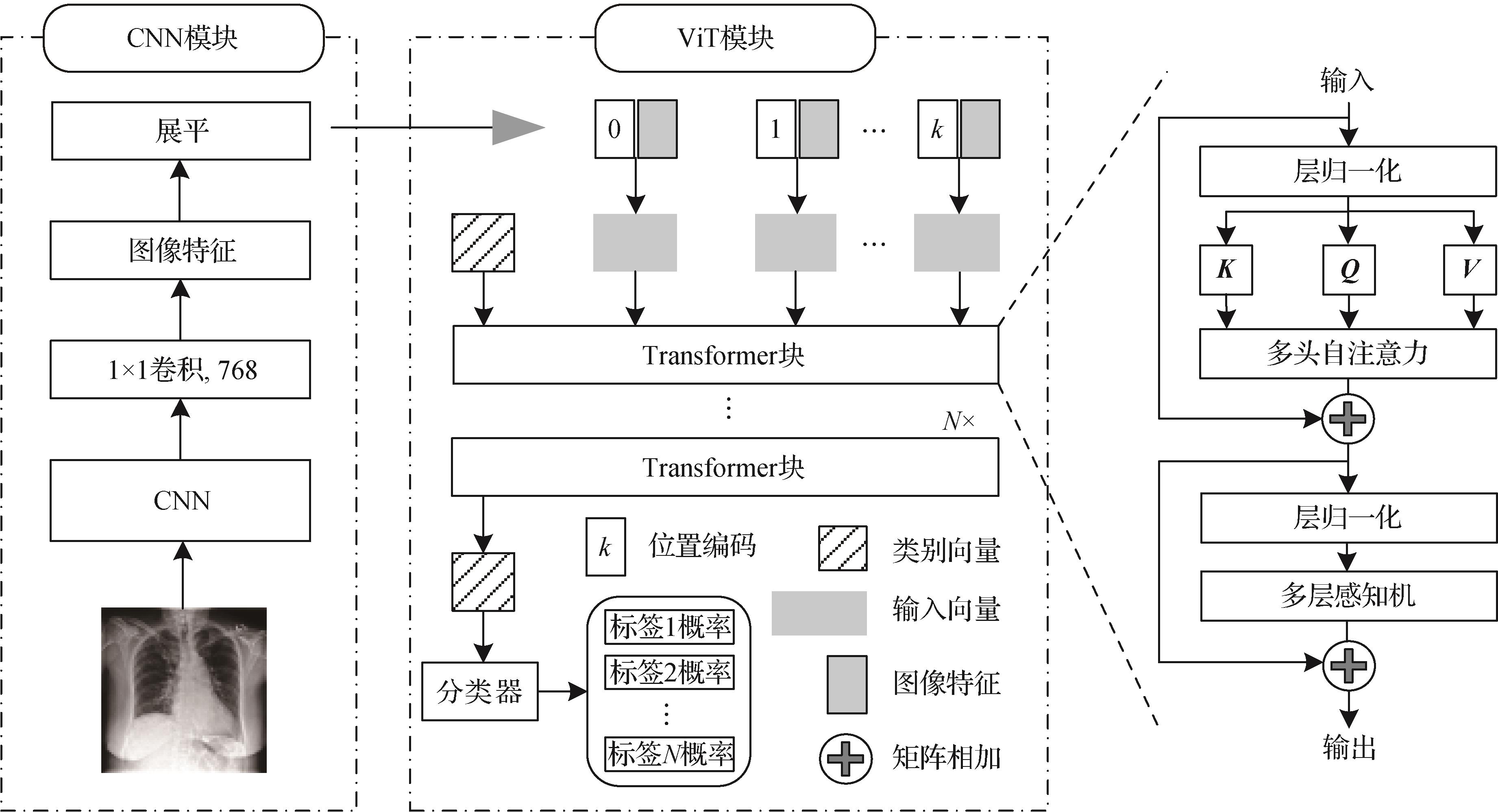

摘要:ObjectiveThe pavement-relevant inspection is focused on the optimization for pavement cracks early-alarming detection and the preservation of pavement structure. However, conventional image processing-based techniques are labor-intensive and time-consuming, such as edge detection, threshold segmentation, template matching, and morphology operations. It is challenged to the geometric and spectral complexities of pavement crack and its contexts (e.g., illumination variation, oil or water stains, and shadows caused by trees and vehicles). The convolution neural network (CNN) based deep learning image processing techniques have been developing intensively. However, the CNN-based methods are less effective in long-range dependency modeling, which may cause insufficient detection results in complicated road surface scenarios. Some works are related to attention mechanisms like spatial or channel attention modules, and self-attention modules. However, these attention mechanism-based operations are still challenged for their sophistication and computational cost.MethodTo detect pavement cracks efficiently and effectively, we develop a novel Transformer-based encoder-decoder neural network, called CTNet, which consists of Transformer blocks, multi-scale local feature enhanced blocks, upsampling blocks, and skip connections. The CTNet can achieve more long-range dependency and global receptive field in terms of multi-head self-attention-based Transformer mechanism. Although Transformer is featured by high running efficiency and low computational overhead demand, it is infeasible to model local contextual information because Token generation can break the connections of neighboring regions. Thus, to capture more multi-scale local information, we design a multi-scale local feature-enhanced block in terms of a multiple dilation ratios-relevant dilation convolution block. Especially, the designed multi-scale local feature enhancement block is melted into each Transformer block for local information complement. Both of local and global low-level contextual features can be captured for feature enhancement. Afterwards, a novel decoder path is implemented to extract high-level features. The decoder consists of the Transformer blocks similar to the up-sampling blocks and the spatial details can be restored for end-to-end segmentation.ResultTo demonstrate the efficiency and effectiveness of our proposed CTNet, a series of comparative analyses and ablation studies are carried out on three datasets. First, the CTNet can optimize running efficiency, as well as comparable computation overhead and complexity compared to the current UNet, SegNet, DeepCrack, and SwinUnet. Second, CTNet is 6.78 times faster than the second-best DeepCrack model in terms of training speed. On CrackLS315 dataset, quantitative analyses are also showed that the optimal CTNet is obtained a precision of 91.38%, a recall of 80.38%, and a F1 measure of 85.53% of each; on CrackWH100 dataset, CTNet can obtain a precision of 92.70%, a recall of 90.52%, and a F1 measure 91.60% of each as well. However, it is still challenged to lack of local information when pure-Transformer-based Swin-UNet performed not well compared to fully convolution networks. Furthermore, the CTNet is insufficient to converge when the local blocks-enhanced are removed. In summary, the Transformer-based CTNet is beneficial to multi-scenario pavement cracks in terms of the global receptive field. The CTNet can get pavement crack detection results consistently.ConclusionThe proposed CTNet has its potentials to deal with noisy pavement images for pavement crack detection.关键词:road engineering;pavement crack detection;deep learning;semantic segmentation;self-attention;Transformer249|540|4更新时间:2024-05-07