Latest Issue

Volume 28, Issue 1, 2023

-

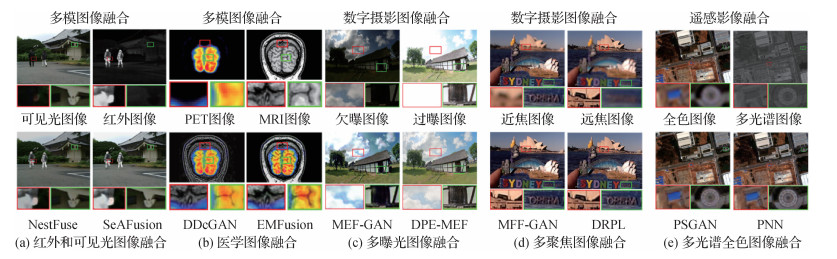

Abstract: Image fusion aims to integrate complementary information from multi-source images into a single fused image that characterizes the imaging scene and facilitates subsequent vision tasks. In recent years, it has attracted growing attention in image processing, especially in artificial intelligence-related industries such as intelligent medical services, autonomous driving, smart photography, security surveillance, and military monitoring. The growth of deep learning has in turn promoted deep learning-based image fusion algorithms. In particular, the emergence of advanced techniques such as the auto-encoder, generative adversarial network, and Transformer has led to a qualitative leap in image fusion performance. However, a comprehensive review and analysis of state-of-the-art deep learning-based image fusion algorithms across different fusion scenarios is still lacking. We therefore present a systematic and critical review of recent developments in image fusion. First, we give a comprehensive introduction to the field from three aspects: 1) the development of image fusion technology, 2) the prevailing datasets, and 3) the common evaluation metrics. Then, we conduct extensive qualitative experiments, quantitative experiments, and running-efficiency evaluations of representative image fusion methods on public datasets to compare their performance. Finally, we summarize the field, highlight its challenges, and suggest some prospects for future work. From the perspective of fusion scenarios, existing image fusion methods can be divided into three categories: multi-modal image fusion, digital photography image fusion, and remote sensing image fusion.
Specifically, multi-modal image fusion comprises infrared and visible image fusion as well as medical image fusion, and digital photography image fusion consists of multi-exposure image fusion and multi-focus image fusion. Multi-spectral and panchromatic image fusion is one of the key aspects of remote sensing image fusion. In terms of network architecture, deep learning-based image fusion algorithms can be classified into the auto-encoder based (AE-based) fusion framework, the convolutional neural network based (CNN-based) fusion framework, and the generative adversarial network based (GAN-based) fusion framework. The AE-based fusion framework performs feature extraction and image reconstruction with a pre-trained auto-encoder and fuses deep features via manually designed fusion strategies. The CNN-based fusion framework realizes feature extraction, fusion, and image reconstruction through carefully designed network structures and loss functions. The GAN-based framework formulates image fusion as an adversarial game between generators and discriminators. From the perspective of the supervision paradigm, deep learning fusion methods can also be categorized into three classes: unsupervised, self-supervised, and supervised. Supervised methods use ground truth to guide training, whereas unsupervised approaches construct the loss function by constraining the similarity between the fusion result and the source images. Self-supervised algorithms are commonly associated with the AE-based framework. Our review focuses on the main concepts and characteristics of each method for the different fusion scenarios, from the perspectives of network architecture and supervision paradigm. In particular, we summarize the limitations of the different fusion algorithms and provide some recommendations for further research.
Second, we briefly introduce the popular public datasets for each image fusion scenario and provide links for downloading them. Then, we present the common evaluation metrics in the image fusion field in two groups: generic evaluation metrics and specific metrics designed for pan-sharpening. The generic metrics, which can be used to evaluate multi-modal and digital photography image fusion algorithms, comprise entropy-based, correlation-based, image feature-based, image structure-based, and human visual perception-based metrics. Some of the generic metrics, such as peak signal-to-noise ratio (PSNR), correlation coefficient (CC), structural similarity index measure (SSIM), and visual information fidelity (VIF), are also used for the quantitative assessment of pan-sharpening. The specific metrics designed for pan-sharpening consist of no-reference metrics and full-reference metrics that use the full-resolution image as the reference image, i.e., the ground truth. Third, we present the qualitative and quantitative results and the average running times of representative methods for the various fusion tasks. Finally, this review gives its conclusions, highlights the challenges in the image fusion community, and outlines future directions such as non-registered image fusion, high-level vision task-driven image fusion, cross-resolution image fusion, real-time image fusion, color image fusion, image fusion based on physical imaging principles, image fusion under extreme conditions, and comprehensive evaluation metrics. The methods, datasets, and evaluation metrics mentioned are linked at: https://github.com/Linfeng-Tang/Image-Fusion.

Keywords: image fusion; deep learning; multi-modal; digital photography; remote sensing imagery

6357|7342|21 Updated: 2024-05-08
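To make two of the generic metrics named in this abstract concrete, the following is a minimal NumPy sketch of PSNR and CC. It is an illustration under stated assumptions, not the evaluation code of the reviewed paper: the function names, the default `peak` of 255 (8-bit images), and the choice to compare one image pair at a time are all assumptions made here.

```python
import numpy as np


def psnr(reference: np.ndarray, fused: np.ndarray, peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between a reference and a fused image.

    `peak` is the maximum possible pixel value (assumed 255 for 8-bit data).
    """
    ref = reference.astype(np.float64)
    out = fused.astype(np.float64)
    mse = np.mean((ref - out) ** 2)
    if mse == 0.0:
        return float("inf")  # identical images: no noise at all
    return float(10.0 * np.log10(peak ** 2 / mse))


def correlation_coefficient(a: np.ndarray, b: np.ndarray) -> float:
    """Pearson correlation coefficient between two images of equal shape."""
    x = a.astype(np.float64).ravel()  # astype copies, so inputs stay untouched
    y = b.astype(np.float64).ravel()
    x -= x.mean()
    y -= y.mean()
    denom = np.sqrt(np.sum(x ** 2) * np.sum(y ** 2))
    # A constant image has zero variance; return 0.0 instead of dividing by zero.
    return float(np.sum(x * y) / denom) if denom > 0 else 0.0
```

In fusion settings without a ground truth, such pairwise scores are often computed between the fused image and each source image and then combined; how they are combined depends on the benchmark and is not specified here.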


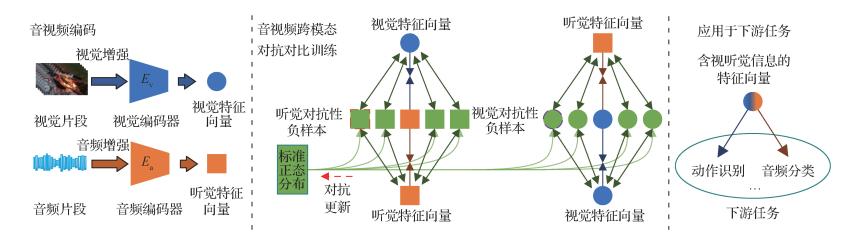

Abstract: Visual tracking has been one of the key tasks in computer vision over the past decades, with applications such as surveillance, robotics, and autonomous driving. Its performance is still limited by the quality of visible light data in adverse scenes, such as low illumination, background clutter, haze, and smog. To overcome the imaging constraints of visible light data, current research commonly introduces data from additional modalities. Integrating visible data with other modalities, such as thermal infrared, depth, event, and language, can effectively improve tracking performance. Benefiting from this integration, multi-modal trackers have developed rapidly in complicated scenarios such as low illumination, occlusion, fast motion, and semantic ambiguity. This survey focuses on RGB and thermal infrared (RGBT) tracking algorithms, taking the popular visible-infrared setting as the entry point to multi-modal visual tracking. Existing surveys of multi-modal visual tracking categorize tracking algorithms by tracking framework or by fusion level, namely pixel, feature, and decision. Because information fusion plays a key role in multi-modal visual tracking, we divide and analyze existing RGBT tracking methods from the perspective of information fusion, into synthesized (combinative) fusion and specific (discriminative) fusion. Specifically, synthesized fusion combines all multi-modal information via different fusion methods, including: 1) sparse representation fusion, 2) collaborative graph representation fusion, 3) modality-synthesized and modality-specific information fusion, and 4) attribute-based feature decoupling fusion.
First, sparse representation fusion suppresses feature noise well, but most of these algorithms are restricted by the time-consuming online optimization of the sparse representation models. In addition, these methods represent the target with pixel values and thus have low robustness in complex scenes. Second, collaborative graph representation fusion suppresses the effect of background clutter through modality weights and local image patch weights. However, these methods require iterative optimization of multiple variables, so their tracking efficiency is quite low. Furthermore, these models rely on color and gradient features, which are better than pixel values but still struggle in challenging scenarios. Third, modality-synthesized and modality-specific information fusion models modality-synthesized and modality-specific representations with different sub-networks and provides an effective fusion strategy for tracking. However, these methods lack information interaction when learning modality-specific representations and thus easily introduce noise and redundancy. Fourth, attribute-based feature decoupling fusion models the target representations under different attributes, which alleviates the dependence on large-scale training data. However, it is difficult to cover all the challenges that arise in practical applications. Although these synthesized fusion methods have achieved good tracking performance, combining the information of all modalities inevitably introduces information redundancy and feature noise. To resolve these problems, some studies have turned to specific fusion methods for RGBT tracking.
This kind of fusion aims to mine the specific features of multiple modalities for effective and efficient information fusion, including: 1) feature selection fusion, 2) attention mechanism-based adaptive fusion, 3) mutual enhancement fusion, and 4) other specific fusion methods. Feature selection fusion selects specific features according to given criteria. It not only avoids the interference of data noise, which benefits tracking performance, but also eliminates data redundancy. However, the selection criteria are hard to design, and unsuitable criteria often remove useful information from low-quality data and thus limit tracking performance. Adaptive fusion estimates the reliability of multi-modal data with attention mechanisms, including modality, spatial, and channel reliabilities, and thus achieves adaptive fusion of multi-modal information. However, there is no explicit supervision for generating these reliability weights, so the estimates can be misleading in complex scenarios. Mutual enhancement fusion suppresses the data noise of a low-quality modality and enhances its features using the specific information of the other modality. These methods can mine the specific information of multiple modalities and improve the target representations of low-quality modalities. However, they are complicated and have low tracking efficiency. Besides RGBT tracking, multi-modal visual tracking has three other sub-tasks: 1) visible-depth tracking, called RGB and depth (RGBD) tracking, 2) visible-event tracking, called RGB and event (RGBE) tracking, and 3) visible-language tracking, called RGB and language (RGBL) tracking. We briefly review these three multi-modal visual tracking tasks as well.
Furthermore, we discuss some academic challenges and future directions for multi-modal visual tracking.

Keywords: information fusion; visual tracking; multiple modalities; combinative fusion; discriminative fusion

439|404|7 Updated: 2024-05-08
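The attention-based adaptive fusion described in this abstract weights each modality by an estimated reliability. The sketch below shows only the weighting step, with modality-level weights. It assumes a hand-crafted reliability score (mean absolute activation) purely for illustration; the surveyed trackers would predict such scores with learned attention modules, and the names `softmax` and `adaptive_fusion` are hypothetical.

```python
import numpy as np


def softmax(scores: np.ndarray, axis: int = 0) -> np.ndarray:
    """Numerically stable softmax along the given axis."""
    shifted = scores - scores.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def adaptive_fusion(rgb_feat: np.ndarray, tir_feat: np.ndarray) -> np.ndarray:
    """Fuse two (C, H, W) feature maps with per-modality reliability weights.

    Assumption: reliability is the mean absolute activation of each modality.
    A real tracker would replace this with a learned attention branch.
    """
    feats = np.stack([rgb_feat, tir_feat])        # shape (2, C, H, W)
    scores = np.abs(feats).mean(axis=(1, 2, 3))   # one score per modality
    weights = softmax(scores)                     # shape (2,), sums to 1
    # Weighted sum over the modality axis gives a (C, H, W) fused feature map.
    return np.tensordot(weights, feats, axes=1)
```

Because nothing supervises the weights directly, a noisy modality with large activations would receive a high weight in this toy version, which mirrors the limitation the abstract points out for attention-based fusion.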


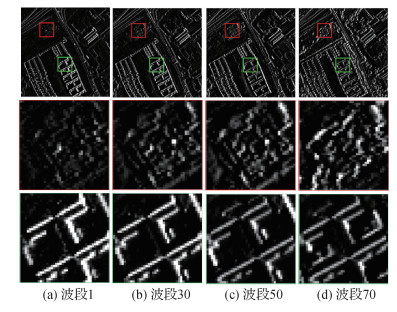

Abstract: Pan-sharpening processes data captured by remote sensing satellites using machine learning and signal processing techniques. Its objective is to fuse a low spatial resolution multispectral (MS) image with a high spatial resolution panchromatic (PAN) image to produce a high-quality multispectral image. Machine learning (ML) techniques are being developed for this task. As an image fusion technique for multispectral images, which are characterized by medium or low spatial resolution, pan-sharpening has attracted attention from the machine learning community. In recent years, remote sensing (RS), big data, and convolutional neural network based (CNN-based) techniques, a sub-category of ML, have advanced image processing, computer vision, and related fields. Given the importance of pan-sharpening in RS image fusion, CNN-based research has developed rapidly, but several gaps remain, such as the lack of a detailed introduction to typical pan-sharpening CNNs, of data simulation procedures, of accessible open training and test datasets, and of a simple, easy-to-understand unified coding framework. We therefore review the development of CNNs for RS pan-sharpening from five aspects: 1) we introduce seven CNN-based pan-sharpening methods in detail and compare them on the same datasets; 2) we describe the simulation of the training and test datasets in detail.
We intend to release the pan-sharpening datasets of the related satellites (such as WorldView-3, QuickBird, GaoFen2, and WorldView-2); 3) for all seven CNN-based methods, we release Python code based on the PyTorch library in a unified framework for completeness and ease of use; 4) we present a unified MATLAB software package for testing both deep learning and traditional approaches, which facilitates fair comparison; and 5) we discuss future research directions. Project details are available at: https://liangjiandeng.github.io/PanCollection.html. First, CNN-based techniques perform well when the test data are similar to the training data. However, their performance drops when a completely different test scenario is used (for instance, when compared against existing contemporary methods), which exposes a limitation of these approaches. Next, fine-tuning proves effective in addressing this problem and yields better performance in such difficult test scenarios. Compared with the fastest conventional methods, the computational load of the studied CNN-based algorithms during the testing phase is acceptable. Finally, we offer some recommendations for designing new CNN-based pan-sharpening methods. Among the analyzed CNN-based pan-sharpening approaches, skip connections help ML-based methods converge faster. In addition, multi-scale architectures (including bidirectional structures) support stronger feature extraction and learning. Furthermore, the generalization ability of the networks can be improved by fine-tuning and by learning in a specific domain rather than the original image domain. However, there are still some problems to be resolved.
One challenge is computational burden: networks with fewer parameters are needed that still guarantee performance and converge quickly. Furthermore, recent CNNs for pan-sharpening have limited generalization ability. Working at reduced resolution is what makes labels available for network training. As a result, unsupervised techniques based on loss functions that measure similarity at full resolution have been developed. Full-resolution training is a promising direction for future research.

Keywords: Pansharpening; convolutional neural network (CNN); comparison of typical CNN methods; datasets releasing; coding-framework releasing; pansharpening survey

532|746|2 Updated: 2024-05-08
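The reduced-resolution idea in this abstract, degrading the inputs so that the original MS image can serve as the training label, can be sketched as follows. This is a simplified illustration and not the simulation pipeline released with the paper: the block-average degradation, the function names, and the default `ratio` of 4 are assumptions, and practical pipelines typically use sensor-matched low-pass filters instead of plain averaging.

```python
from typing import Tuple

import numpy as np


def block_average(img: np.ndarray, ratio: int) -> np.ndarray:
    """Downsample the last two axes by averaging ratio x ratio blocks."""
    *lead, h, w = img.shape
    if h % ratio or w % ratio:
        raise ValueError("height and width must be divisible by ratio")
    blocks = img.reshape(*lead, h // ratio, ratio, w // ratio, ratio)
    return blocks.mean(axis=(-3, -1))


def simulate_training_pair(
    ms: np.ndarray, pan: np.ndarray, ratio: int = 4
) -> Tuple[np.ndarray, np.ndarray, np.ndarray]:
    """Build a reduced-resolution training sample.

    ms:  original multispectral image, shape (bands, h, w)
    pan: original panchromatic image, shape (ratio * h, ratio * w)
    Returns (degraded MS, degraded PAN, label), where the label is the
    original MS image and the degraded PAN has the label's spatial size.
    """
    ms_lr = block_average(ms, ratio)    # network input, (bands, h/ratio, w/ratio)
    pan_lr = block_average(pan, ratio)  # network input, (h, w)
    return ms_lr, pan_lr, ms
```

A network trained this way never sees full-resolution targets, which is one reason the abstract points to unsupervised, full-resolution losses as a direction for future work.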


Abstract: Multi-focus image fusion can effectively extend the depth-of-field (DOF) of optical lenses in software. It fuses a set of partially focused source images of the same scene into an all-in-focus image that is more suitable for human or machine perception. As a result, multi-focus image fusion is of high practical significance in many areas, including digital photography, microscopy imaging, integral imaging, and thermal imaging. Traditional multi-focus image fusion methods, which generally include transform domain methods (e.g., multi-scale transform-based methods and sparse representation-based methods) and spatial domain methods (e.g., block-based methods and pixel-based methods), are often based on manually designed transform models, activity level measures, and fusion rules. To achieve high fusion performance, these key factors tend to become much more complicated, usually at the cost of computational efficiency. In addition, these key factors are often designed independently with relatively weak association, which limits the fusion performance to a great extent. In the past few years, deep learning has been introduced into the study of multi-focus image fusion and has rapidly become the mainstream of the field, with a variety of deep learning-based fusion methods proposed in the literature. Deep learning models such as convolutional neural networks (CNNs) and generative adversarial networks (GANs) have facilitated the study of multi-focus image fusion. It is therefore of high significance to conduct a comprehensive survey that reviews the recent advances in deep learning-based multi-focus image fusion and puts forward some prospects for further improvement. Several survey papers on image fusion, including multi-focus image fusion, were published in international journals around 2020.
However, the survey works on multi-focus image fusion are rarely reported in Chinese journals. Moreover, considering that this field grows very rapidly with dozens of papers being published each year, a more timely survey is also highly expected. Based on the above considerations, we demonstrate a systematic review for the deep learning-based multi-focus image fusion methods. In this paper, the existing deep learning-based methods are classified into two main categories: 1) deep classification model-based methods and 2) deep regression model-based methods. Additionally, these two categories of methods are further divided into sub-categories. Specifically, the classification model-based methods are further divided into image block-based methods and image segmentation-based methods in terms of the pixel processing manner adopted. The regression model-based methods are further divided into supervised learning-based methods and unsupervised learning-based methods, according to the learning manner of network models. For each category, the representative fusion methods are introduced as well. In addition, we conduct a comparative study on the performance of 25 representative multi-focus image fusion methods, including 5 traditional transform domain methods, 5 traditional spatial domain methods and 15 deep learning-based methods. To this end, we use three commonly-used multi-focus image fusion datasets in the experiments including "Lytro", "MFFW" and "Classic". Additionally, eight objective evaluation metrics that are widely used in multi-focus image fusion are adopted for performance assessment, which are composed of include two information theory-based metrics, two image feature-based metrics, two structural similarity-based metrics and two human visual perception-based metrics. The experimental results verify that deep learning-based methods can achieve very promising fusion results. 
However, it is worth noting that the performance of most deep learning-based methods is not significantly better than that of the traditional fusion methods. One main reason for this phenomenon is the lack of large-scale and realistic datasets for training in multi-focus image fusion, and the way to create synthetic datasets for training is inevitability different from the real situation, leading to that the potential of deep learning-based methods cannot be fully tapped. Finally, we summarize some challenging problems in the study of deep learning-based multi-focus image fusion and put forward some future prospects accordingly, which mainly include the four aspects as following: 1) the fusion of focus boundary regions; 2) the fusion of mis-registered source images; 3) the construction of large-scale datasets with real labels for network training; and 4) the improvement of network architecture and model training approach.关键词:multi-focus image fusion(MFIF);image fusion;deep learning;convolutional neural network (CNN);generative adversarial network (GAN)503|872|0更新时间:2024-05-08
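As a rough illustration of the traditional spatial domain pipeline mentioned in the abstract above (an activity level measure followed by a fusion rule), the following Python sketch fuses two grayscale images pixel by pixel. The Laplacian-energy measure, the window radius, and the function names `laplacian_energy` and `fuse_multifocus` are illustrative assumptions and are not taken from the surveyed paper.

```python
import numpy as np


def laplacian_energy(img, radius=2):
    """Activity level (assumed measure): local mean of the squared Laplacian."""
    p = np.pad(img.astype(np.float64), 1, mode="edge")
    # 4-neighbour discrete Laplacian computed with shifted views.
    lap = (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]
           - 4.0 * p[1:-1, 1:-1])
    sq = np.pad(lap ** 2, radius, mode="edge")
    k = 2 * radius + 1
    h, w = img.shape
    out = np.zeros((h, w))
    # Box filter: sum the k*k shifted copies, then normalise.
    for dy in range(k):
        for dx in range(k):
            out += sq[dy:dy + h, dx:dx + w]
    return out / (k * k)


def fuse_multifocus(a, b, radius=2):
    """Fusion rule (assumed): per pixel, keep the source with higher activity."""
    mask = laplacian_energy(a, radius) >= laplacian_energy(b, radius)
    return np.where(mask, a, b), mask
```

The returned `mask` plays the role of a decision map: it marks which source image is treated as in focus at each pixel. Errors in such a map tend to concentrate near focus boundaries, which is one of the open problems the abstract lists.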

-

Abstract: Owing to hardware design constraints, capturing effective visual information of natural scenes often requires multi-sensor imaging systems operating in multiple configurations or modalities. The resulting source images must then be fused into a single high-quality image with rich perceptual information and few artifacts. Image fusion thus produces a single, clear image that facilitates various image processing and computer vision tasks. Traditional image fusion models are often built on hand-crafted features or learned feature representations, and their generalization ability needs further improvement. Deep learning extracts features progressively through multiple layers via end-to-end model training, so most task-relevant representations can be learned automatically for a specific task. Compared with traditional methods, deep learning-based models can substantially improve fusion performance. Current deep learning models for image fusion are mostly based on convolutional neural networks (CNNs) and generative adversarial networks (GANs). In recent years, new network structures and training techniques, such as vision transformers and meta-learning, have also been incorporated into image fusion. Most of the image fusion literature focuses on a specific fusion task, such as multi-exposure, multi-focus, multi-spectral, or multi-modal image fusion. However, more model designs and training techniques need to be shared across these fusion tasks. To draw a clear picture of deep learning-based image fusion techniques, we review the latest image fusion research in terms of 1) dataset generation, 2) neural network construction, 3) loss function design, 4) model optimization, and 5) performance evaluation.
For dataset generation, we emphasize two categories: a) supervised learning and b) unsupervised (or self-supervised) learning. For neural network construction, we distinguish between fusing information at early and at late stages of the network, and we also cover multi-scale and coarse-to-fine designs as well as the incorporation of adversarial (i.e., discriminative) networks. For loss function design, we highlight perceptual loss functions, which are essential for perceptually oriented applications such as multi-exposure and multi-focus image fusion. For model optimization, we cover generic first-order optimization techniques (e.g., stochastic gradient descent (SGD), SGD with momentum, Adam, and AdamW) as well as more advanced alternating and bi-level optimization methods. For performance evaluation, we review commonly used quantitative metrics for measuring fusion performance, and we discuss the relationship between these evaluation metrics and the loss functions (themselves a form of evaluation metric) used to drive the learning of CNN-based image fusion methods. In addition, to illustrate how general image fusion principles transfer to tailored applications, we discuss a selection of image fusion methods (e.g., depth map enhancement guided by a high-quality texture image). We also cover popular computer vision tasks (such as image denoising, blind image deblurring, and image super-resolution) that can be approached innovatively through image fusion.
Finally, we discuss several open challenges: 1) reliable and efficient construction of ground-truth training data (i.e., the input image sequences and the corresponding fused images), 2) lightweight, interpretable, and generalizable CNN-based image fusion methods, 3) loss functions calibrated to human or machine visual perception, 4) faster convergence of image fusion models, particularly under adversarial training, and the bias introduced by test-time training, and 5) ethical issues related to fairness and unbiased performance evaluation.

Keywords: image fusion; deep neural networks; stochastic gradient descent (SGD); image quality assessment; deep learning

Updated: 2024-05-08

-

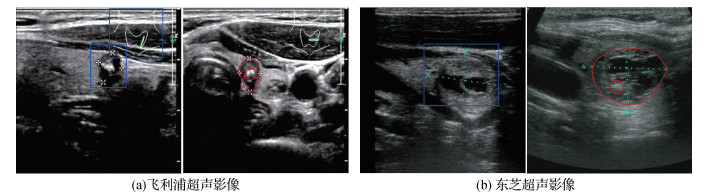

Abstract: Fused multimodal medical images provide more comprehensive and accurate descriptions for clinical applications such as medical diagnosis, treatment planning, and surgical navigation. However, because diseases are varied and complex, single-modal medical images are often insufficient for diagnosing disease types and localizing lesions. As a result, multimodal medical image fusion methods aim to obtain information-rich medical images for clinical applications. Medical imaging techniques are mainly divided into electromagnetic energy-based and acoustic energy-based techniques. The latter exploit the different propagation speeds of ultrasound in different media to achieve real-time imaging and provide dynamic images. Current medical image fusion techniques mainly address static images produced by electromagnetic energy imaging techniques, such as X-ray computed tomography, single photon emission computed tomography, positron emission tomography, and magnetic resonance imaging. We review the recent literature on the current status of medical image fusion methods and divide current techniques into two categories: 1) traditional methods and 2) deep learning methods. Among traditional methods, spatial domain and frequency domain algorithms are the most actively studied. Spatial domain techniques operate directly on pixel values through pixel-level strategies, and the fused images exhibit less spatial distortion and a lower signal-to-noise ratio. Spatial domain methods include 1) simple min/max, 2) independent component analysis, 3) principal component analysis, 4) weighted average, 5) simple average, 6) fuzzy logic, and 7) cloud model.
The fusion process of spatial domain methods is quite simple, and their low algorithmic complexity keeps the computation cost down. They also perform relatively well in alleviating the spectral distortion of fused images. However, their fusion results remain unsatisfactory in terms of clarity and contrast, and their spatial resolution is consistently low. In the frequency domain, the input image is first converted from the spatial domain to the frequency domain via the Fourier transform, the fusion algorithm is then applied to the transformed image, and the final fused image is obtained by the inverse Fourier transform. Commonly used frequency domain fusion algorithms include 1) pyramid transform, 2) wavelet transform, and 3) multi-scale geometric transform fusion algorithms. These multi-level decomposition-based methods can enhance the detail retention of the fused image, and their fusion results contain high spatial resolution and high-quality spectral components. However, this type of algorithm also depends on fine-grained fusion rule design. Deep learning-based methods mainly involve convolutional neural networks (CNNs) and generative adversarial networks (GANs). They avoid fine-grained fusion rule design and reduce manual involvement, and their stronger feature extraction capability enables the fusion results to retain more source image information. A CNN can effectively process the spatial and structural information in the neighborhood of the input image. It consists of a series of convolutional layers, pooling layers, and fully connected layers. The convolutional and pooling layers extract features from the source image, and the fully connected layers map the features to the final output.
In CNN-based methods, image fusion is regarded as a classification problem in which feature extraction, feature selection, and output prediction correspond to the image transformation, activity level measurement, and fusion rule design of the fusion task. Unlike CNNs, GANs can model saliency information in medical images through an adversarial learning mechanism. A GAN is a generative model with two multilayer networks: the first is a generator, which generates pseudo data, and the second is a discriminator, which classifies images into real data and pseudo data. Training based on back-propagation improves the ability of the GAN to distinguish between real data and generated data. Although GANs are not as widely used in multimodal medical image fusion (MMIF) as CNNs, they have the potential for in-depth research. We further provide a complete overview of existing multimodal medical image databases and fusion quality evaluation metrics. Four open-source, freely accessible medical image databases are covered: the open access series of imaging studies (OASIS) dataset, the cancer imaging archive (TCIA) dataset, the whole brain atlas (AANLIB) dataset, and the Alzheimer's disease neuroimaging initiative (ADNI) dataset. A gene database of green fluorescent protein and phase contrast images, the John Innes Centre (JIC) dataset, is included as well. We summarize 25 commonly used evaluation indicators for medical image fusion results, grouped into four types of metrics: 1) information theory-based; 2) image feature-based; 3) image structural similarity-based; and 4) human visual perception-based, as well as 22 fusion algorithms applied to medical image datasets in recent years. The pros and cons of the algorithms are analyzed in terms of the technical basis, fusion modes, and evaluation indexes of each algorithm.
In addition, we carry out extensive experiments to compare the performance of deep learning-based and traditional medical image fusion methods. Source images of three modality pairs are tested qualitatively and quantitatively with 22 multimodal medical image fusion algorithms. For qualitative analysis, the brightness, contrast, and distortion of the fused images are assessed by human visual inspection. For quantitative analysis, 15 objective evaluation indexes are used. Based on the qualitative and quantitative results, we discuss the current situation, challenging issues, and future directions of medical image fusion techniques. Both traditional and deep learning methods have improved fusion performance to a certain extent. With continued algorithm optimization and the enrichment of medical image datasets, more medical image fusion methods with good fusion quality and high model robustness can be expected. The two technical fields will continue to develop along common research trends: expanding multi-facet and multi-case medical image data, proposing effective indicators suited to medical image fusion, and broadening the research scope of image fusion.

Keywords: multimodal medical image; medical image fusion; deep learning; medical image database; quality evaluation metrics

Updated: 2024-05-08
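The two traditional families described in the abstract above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a method from the surveyed paper: the function names are hypothetical, the inputs are assumed to be registered grayscale arrays of equal shape, and the "keep the larger-magnitude coefficient" rule in the frequency domain is one simple choice of fusion rule among many.

```python
import numpy as np


def fuse_weighted_average(a, b, w=0.5):
    """Spatial domain: weighted average (w = 0.5 gives the simple average)."""
    return w * a + (1.0 - w) * b


def fuse_max(a, b):
    """Spatial domain: simple max rule, applied pixel by pixel."""
    return np.maximum(a, b)


def fuse_fourier_max_magnitude(a, b):
    """Frequency domain: transform, fuse coefficients, transform back."""
    fa, fb = np.fft.fft2(a), np.fft.fft2(b)
    # Assumed fusion rule: keep the coefficient with the larger magnitude.
    fused = np.where(np.abs(fa) >= np.abs(fb), fa, fb)
    # For real inputs the imaginary part is only numerical residue.
    return np.real(np.fft.ifft2(fused))
```

Pyramid, wavelet, and multi-scale geometric transforms follow the same transform, fuse, and invert pattern, with the Fourier transform replaced by the corresponding multi-level decomposition.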


Review

-

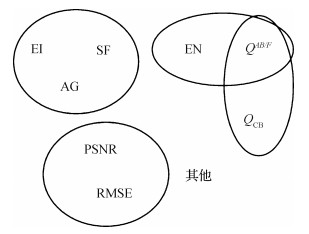

Abstract: Objective: Objective assessment metrics, a research branch of image fusion, overcome the shortcomings of subjective evaluation, which is easily affected by human psychological interference, the surrounding environment, and visual characteristics. They can be used to evaluate algorithms and to tune design parameters, and they allow the advantages of a proposed algorithm to be demonstrated. However, benchmarks and agreed metrics are still lacking in application fields such as visible and infrared image fusion, where a handful of metrics is usually selected on the basis of prior experience. To facilitate the comparative analysis of different fusion algorithms, we propose a general method for selecting objective assessment metrics and a recommended set of metrics for visible and infrared image fusion. Method: A new method for selecting objective assessment metrics is built. It consists of three parts: 1) correlation analysis, 2) consistency analysis, and 3) dispersion analysis. The Kendall correlation coefficient is used to perform correlation analysis on all objective assessment metrics. The metrics are clustered according to the value of the correlation coefficient: if the Kendall value of two metrics is higher than a threshold, the two metrics are put into the same group. The Borda voting method is used in the consistency analysis. Each metric produces a ranking of all algorithms, and the Borda voting method combines these single-metric rankings into an overall ranking. The correlation coefficient is then used to analyze the consistency between each single-metric ranking and the overall ranking: the higher the correlation coefficient, the higher the consistency of that metric. Experiments show that a metric's value fluctuates as the fusion quality changes.
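
The correlation grouping and Borda-based consistency check described above can be sketched in Python as follows. This is only an illustration under stated assumptions: `scores` is a hypothetical array with one row per fusion algorithm and one column per metric, every metric is assumed to be "higher is better", Kendall's tau is computed in its simple tau-a form, and the function names and the threshold value are not taken from the paper.

```python
import numpy as np


def kendall_tau(x, y):
    """Kendall tau-a between two score vectors (tied pairs contribute zero)."""
    x, y = np.asarray(x, float), np.asarray(y, float)
    n = len(x)
    s = 0.0
    for i in range(n - 1):
        s += np.sum(np.sign(x[i] - x[i + 1:]) * np.sign(y[i] - y[i + 1:]))
    return s / (n * (n - 1) / 2)


def group_metrics(scores, threshold):
    """Put two metrics in the same group when their tau exceeds the threshold."""
    m = scores.shape[1]
    label = list(range(m))
    for i in range(m):
        for j in range(i + 1, m):
            if kendall_tau(scores[:, i], scores[:, j]) > threshold:
                old, new = label[j], label[i]
                label = [new if l == old else l for l in label]
    return label


def borda_scores(scores):
    """Borda count: per metric, the worst algorithm gets 0 points, the best n-1."""
    ranks = np.argsort(np.argsort(scores, axis=0), axis=0)
    return ranks.sum(axis=1)


def consistency(scores):
    """Tau between each single-metric ranking and the overall Borda ranking."""
    overall = borda_scores(scores)
    return [kendall_tau(scores[:, j], overall) for j in range(scores.shape[1])]
```

Metrics that share a group label carry largely redundant ranking information, which is why the selection procedure avoids picking more than one metric from the same group.
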
A good metric should reflect the fusion quality of different algorithms clearly, so the metric value will cause a large fluctuation in terms of different fusion quality. The different fusion quality we illustrated is originated from multiple algorithms. The coefficient of variation is used to interpret the fluctuation because different objective assessment metrics match different measurement scales. The coefficient of variation reflects overall fluctuations under the influence of the measurement scale. Therefore, the final selected objective assessment metrics set has the following three characteristics: 1) high consistency, 2) high coefficient of variation and 3)non-same group.ResultThe experiments are conducted on the visible and infrared fusion benchmark (VIFB) dataset. The experiments are segmented into two groups in terms of the visible images in the dataset in related to grayscale images and RGB color images. The recommended objective assessment metric set is under the fusion of visible and infrared image, color visible and infrared image fusion: {standard deviation(SD),

Abstract: Objective: As a research branch of image fusion, objective assessment metrics overcome the shortcomings of subjective evaluation, which is easily affected by human psychology, the surrounding environment, and visual characteristics. They can be used to evaluate algorithms and to tune design parameters, and they allow the advantages of a proposed algorithm to be demonstrated. However, benchmarks and agreed metrics are still lacking in application fields such as visible and infrared image fusion, so a handful of objective assessment metrics is usually selected based on prior experience. To facilitate the comparative analysis of different fusion algorithms, our research focuses on a general method for selecting objective assessment metrics and a recommended metric set for visible and infrared image fusion. Method: A new selection method for objective assessment metrics is built. It consists of three parts: 1) correlation analysis, 2) consistency analysis, and 3) dispersion analysis. The Kendall correlation coefficient is used for the correlation analysis of all objective assessment metrics. The metrics are clustered by correlation: if the Kendall value of two metrics is higher than the threshold, the two metrics are put into the same group. The Borda voting method is used in the consistency analysis. Each metric ranks all algorithms by metric value, and the Borda voting method combines these single-metric rankings into an overall ranking. The correlation coefficient between each single-metric ranking and the overall ranking measures consistency: the higher the coefficient, the more consistent the metric. Experiments show that a metric's value fluctuates as the fusion quality changes.
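
As a rough illustration of the correlation and consistency analyses just described, the following Python sketch groups metrics by their Kendall value and scores each metric against a Borda overall ranking. Several details are assumptions rather than the authors' implementation: the tau-a variant of the Kendall coefficient, transitive grouping (if A correlates with B and B with C, all three share a group), every metric being higher-is-better, and the metric values and the 0.8 threshold in the example, which are hypothetical.

```python
from itertools import combinations


def kendall_tau(x, y):
    """Kendall tau-a: (concordant - discordant) / number of pairs.
    Tied pairs count as neither concordant nor discordant."""
    pairs = list(combinations(range(len(x)), 2))
    score = 0
    for i, j in pairs:
        s = (x[i] - x[j]) * (y[i] - y[j])
        score += (s > 0) - (s < 0)
    return score / len(pairs)


def group_metrics(scores, threshold):
    """Put two metrics in the same group when their Kendall value exceeds
    the threshold. Union-find makes the grouping transitive (assumption)."""
    parent = {name: name for name in scores}

    def find(name):
        while parent[name] != name:
            name = parent[name]
        return name

    for a, b in combinations(scores, 2):
        if kendall_tau(scores[a], scores[b]) > threshold:
            parent[find(a)] = find(b)
    groups = {}
    for name in scores:
        groups.setdefault(find(name), []).append(name)
    return list(groups.values())


def borda_points(values):
    """Borda points for one metric: the algorithm with the best (highest)
    value gets n-1 points, the worst gets 0. Assumes no tied values."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    points = [0] * len(values)
    for rank, idx in enumerate(order):
        points[idx] = rank
    return points


def consistency(scores):
    """Kendall value between each metric's ranking and the Borda overall
    ranking obtained by summing the per-metric Borda points."""
    n_algorithms = len(next(iter(scores.values())))
    total = [0] * n_algorithms
    for values in scores.values():
        for i, p in enumerate(borda_points(values)):
            total[i] += p
    return {name: kendall_tau(values, total) for name, values in scores.items()}


# Hypothetical values of three metrics for four fusion algorithms.
scores = {"SD": [10, 20, 30, 40], "EN": [1, 2, 3, 4], "MI": [4, 3, 1, 2]}
print(group_metrics(scores, threshold=0.8))
print(consistency(scores))
```

In this toy data, SD and EN rank the algorithms identically and therefore share a group, while MI disagrees with the overall ranking and receives a lower consistency score.
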
A good metric should clearly distinguish the fusion quality of different algorithms, so its value should fluctuate widely across different fusion qualities; here, the differing fusion quality comes from multiple algorithms. The coefficient of variation is used to measure this fluctuation because different objective assessment metrics have different measurement scales, and the coefficient of variation reflects the overall fluctuation with the influence of the measurement scale removed. Therefore, the final selected metric set has three characteristics: 1) high consistency, 2) high coefficient of variation, and 3) membership in different groups. Result: The experiments are conducted on the visible and infrared fusion benchmark (VIFB) dataset and are divided into two groups according to whether the visible images in the dataset are grayscale or RGB color images. The recommended objective assessment metric sets for grayscale and color visible and infrared image fusion include standard deviation (SD), $Q^{AB/F}$, and $Q_{\mathrm{CB}}$.

Keywords: image fusion; objective assessment metrics; correlation analysis; consistency analysis; coefficient of variation

Updated: 2024-05-08

-

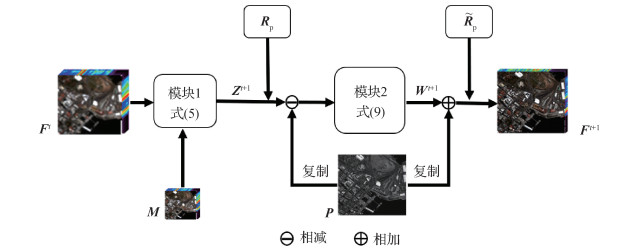

Abstract: Objective: Multi-modal images are produced by multiple imaging techniques. The infrared image captures the radiation information of the target in the infrared band. The visible image is better suited to human visual perception, with higher spatial resolution, richer effective information, and lower noise. Infrared and visible image fusion (IVIF) integrates the complementary information of multiple sensors to alleviate the limitations of hardware equipment and obtain high-quality images at low cost. IVIF can be used in a wide range of applications such as surveillance, remote sensing, and agriculture. However, several challenges remain in multi-modal image fusion, for instance how to extract effective information from different modalities and how to design the fusion rule for their complementary information. Current research can be roughly divided into two categories: 1) traditional methods and 2) deep learning-based methods. Traditional methods decompose the infrared image and the visible image into a transform domain in which the decomposed representation has properties that benefit fusion, perform the fusion in that domain, which reduces information loss and avoids the artifacts caused by direct pixel manipulation, and finally reconstruct the fused image. They rely on assumptions about the source image pair and on manually designed image decomposition to extract features. However, these hand-crafted features are not comprehensive; they can be sensitive to high-frequency or primary components and can produce image distortion and artifacts. In recent years, data-driven deep learning-based image fusion methods have emerged. Most of them fuse the infrared and visible images in a deep feature space.
Deep learning-based fusion methods can be divided into two categories: 1) methods that use a convolutional neural network (CNN) for fusion, and 2) methods that use a generative adversarial network (GAN) to generate fused images. CNN-based methods do not fully exploit the information extracted by the intermediate layers, and GAN-based methods struggle to preserve image details adequately. Method: We develop a novel progressive infrared and visible image fusion framework (ProFuse), which extracts multi-scale features with U-Net as the backbone, merges the multi-scale features, and reconstructs the fused image layer by layer. The network is composed of three parts: 1) encoder, 2) fusion module, and 3) decoder. First, the encoder generates a series of multi-scale feature maps from the infrared image and the visible image. Next, the multi-scale features of the infrared and visible image pair are fused in the fusion layer to obtain fused features. Finally, the fused features pass through the decoder to reconstruct the fused image. The architecture of the encoder and decoder is based on U-Net. The encoder consists of repeated applications of a recurrent residual convolutional unit (RRCU) and a max pooling operation. Each down-sampling step doubles the number of feature channels so that more features can be extracted. The decoder reconstructs the final fused image. Each decoder step consists of an up-sampling of the feature map followed by a 3 × 3 convolution that halves the number of feature channels, a concatenation with the corresponding feature maps from the encoder, and an RRCU. At the fusion layer, our spatial attention-based fusion method is used. This method has two advantages. First, it fuses both high-level features that contain global information (at the bottleneck semantic layer) and low-level features that carry details (at shallow layers).
Second, our method performs fusion not only at the original scale (preserving more details) but also at smaller scales (preserving semantic information). The progressive fusion design is therefore reflected in two aspects: fusion proceeds progressively 1) from high-level to low-level features and 2) from small scale to large scale. Result: To evaluate the fusion performance of our method, we conduct experiments on the publicly available Toegepast Natuurwetenschappelijk Onderzoek (TNO) dataset and compare our method with state-of-the-art (SOTA) fusion methods, including DenseFuse, discrete wavelet transform (DWT), FusionGAN, ratio of low-pass pyramid (RP), generative adversarial network with multiclassification constraints for infrared and visible image fusion (GANMcC), and curvelet transform (CVT). All competitors are implemented from public code, with parameters set according to their original papers. Our method is compared with the others through subjective evaluation, and several quality metrics are used to evaluate fusion performance objectively. Overall, the fusion results of our method have higher contrast, more details, and clearer targets. Compared with other methods, our method preserves as much as possible of the detail information of the visible image and the radiation information of the infrared image, while introducing very little noise and few artifacts. We evaluate the fusion methods quantitatively with six metrics: entropy (EN), structural similarity (SSIM), edge-based similarity measure (Qabf), mutual information (MI), standard deviation (STD), and sum of the correlations of differences (SCD). Our method achieves larger values on EN, Qabf, MI, and STD. The maximum EN value indicates that our method retains richer information than its competitors. Qabf is a novel objective quality evaluation metric for fused images.
The higher the Qabf value, the better the quality of the fused image. STD is an objective evaluation index that measures the richness of image information: the larger the value, the more dispersed the gray-level distribution of the image, the more information the image carries, and the better the quality of the fused image. The larger the MI value, the more information is obtained from the source images and the better the fusion effect. Our method improves the MI index by 115.64% over the generative adversarial network for infrared and visible image fusion (FusionGAN) method, the STD index by 19.93% over GANMcC, the edge preservation (Qabf) index by 1.91% over DWT, and the EN index by 1.30% over GANMcC. This indicates that our method is effective for the IVIF task. Conclusion: Extensive experiments demonstrate the effectiveness and generalization of our method. It achieves better results in both qualitative and quantitative evaluations.

Keywords: image fusion; deep learning; unsupervised learning; infrared image; visible image

Updated: 2024-05-08
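
To make the EN, STD, and MI descriptions in the abstract above concrete, here is a minimal Python sketch using the textbook histogram-based definitions. It is not the authors' evaluation code. It assumes each image is given as a flat list of integer gray levels, that the fusion MI score is the sum of the mutual information between each source image and the fused image (a common convention, assumed here), and that the reported percentages are relative gains over the baseline value; all function names are hypothetical.

```python
import math
from collections import Counter


def entropy(pixels):
    """EN: Shannon entropy (bits) of the gray-level histogram."""
    n = len(pixels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(pixels).values())


def std_dev(pixels):
    """STD: population standard deviation of the gray levels."""
    mean = sum(pixels) / len(pixels)
    return math.sqrt(sum((p - mean) ** 2 for p in pixels) / len(pixels))


def mutual_information(source, fused):
    """MI (bits) between two equally sized images, from the joint histogram.
    p(a, f) / (p(a) * p(f)) simplifies to count * n / (count_a * count_f)."""
    n = len(source)
    joint = Counter(zip(source, fused))
    hist_s, hist_f = Counter(source), Counter(fused)
    return sum(
        (c / n) * math.log2(c * n / (hist_s[a] * hist_f[f]))
        for (a, f), c in joint.items()
    )


def fusion_mi(infrared, visible, fused):
    """Assumed fusion MI score: MI(infrared, fused) + MI(visible, fused)."""
    return mutual_information(infrared, fused) + mutual_information(visible, fused)


def relative_gain(ours, baseline):
    """Relative improvement over a baseline value, in percent."""
    return (ours - baseline) / baseline * 100.0


# Tiny hypothetical 2 x 2 images, flattened to lists of gray levels.
infrared = [0, 0, 255, 255]
visible = [0, 255, 0, 255]
fused = [0, 128, 128, 255]
print(entropy(fused), std_dev(fused), fusion_mi(infrared, visible, fused))
```

Under these definitions, a fused image whose gray levels are more spread out scores higher on EN and STD, and one that shares more of its histogram structure with the source images scores higher on MI.
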