最新刊期

卷 27 , 期 8 , 2022

-

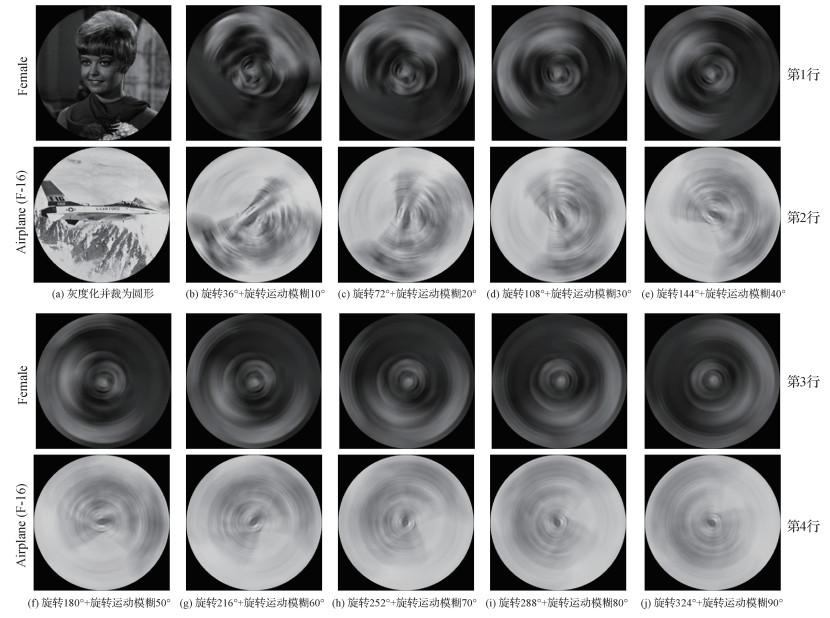

摘要:Virtual reality (VR) technology has gradually penetrated into many fields such as medical education, military and entertainment. Given visual quality is the key to the successful application of VR technology, and the image is visual information benched carrier of VR applications, VR image quality evaluation has become an important frontier research direction for quality evaluation. Just like traditional image quality evaluation, VR image quality evaluation can be divided into subjective quality evaluation and objective quality evaluation. Among them, the subjective quality evaluation methods refer to images scoring through human eyes followed by some data processing steps to obtain the subjective scores, while the objective quality evaluation methods focus on the methods of images scoring based on designing the mathematical model using computers to simulate the subjective scoring results as closely as possible. Compared to the subjective quality evaluation, the objective quality evaluation has its priorities s of lower cost, stronger stability and wider application scope. Many researchers in the scientific research institutions and colleges have dedicated to studying the objective quality evaluation of VR images. Our executive summary is focused on the research of objective quality evaluation of VR images. First, the current situation of VR image quality evaluation is summarized. Then, the existing objective quality evaluation models of VR images are mainly introduced and analyzed. According to whether the models need to use the original undistorted image information as modeling reference, current objective quality evaluation models for VR images are divided into two types, including the full-reference (FR) and no-reference (NR) types. The ground truth based FR models need the completed original image information as reference, while the NR models can achieve quality evaluation of a distorted VR image without any reference information. Specifically, the FR models can be divided into two categories for VR images, including the peak-signal-to-noise ratio/structural similarity (PSNR/SSIM) based methods and the machine learning based methods. The latter first extract features from VR images and then train the quality evaluation model via the support vector regression method or the random forest method. The NR models are further divided into three categories: the equirectangular projection (ERP) expression space based methods, the other projection expression spaces based methods and the actual viewing space based methods. These models are classified according to the space in which features are extracted. For the first kind of models, the raw spherical VR image is first transferred to the ERP space for feature expression, while it is converted to other projection spaces or the actual viewing space for the other two kinds of methods. The reason why the ERP expression space based methods are listed as a separate category is that the ERP space is the default and the mostly used projection space. Followed the space transformation, there are also two options for the sequential quality evaluation consisting of the traditional quality evaluation methods and the deep-learning based methods. For the traditional ones, the manual features are first extracted and fused to generate the final quality score or fed into the model trainer to obtain the quality assessment model. For the deep-learning based methods, both the feature extraction and quality prediction steps are conducted based on deep neural networks. The pros and cons of them are further analyzed. In addition, the performance evaluation indexes of the objective quality evaluation models of VR images are introduced, which are consistent to other image quality evaluations. The detailed existing VR image databases are summarized subsequently. Finally, our review focuses on the applications of VR image objective quality evaluation models and predicts the future potentials further.关键词:image quality evaluation;objective evaluation;virtual reality (VR);spherical image;equirectangular projection (ERP)125|410|1更新时间:2024-08-15

摘要:Virtual reality (VR) technology has gradually penetrated into many fields such as medical education, military and entertainment. Given visual quality is the key to the successful application of VR technology, and the image is visual information benched carrier of VR applications, VR image quality evaluation has become an important frontier research direction for quality evaluation. Just like traditional image quality evaluation, VR image quality evaluation can be divided into subjective quality evaluation and objective quality evaluation. Among them, the subjective quality evaluation methods refer to images scoring through human eyes followed by some data processing steps to obtain the subjective scores, while the objective quality evaluation methods focus on the methods of images scoring based on designing the mathematical model using computers to simulate the subjective scoring results as closely as possible. Compared to the subjective quality evaluation, the objective quality evaluation has its priorities s of lower cost, stronger stability and wider application scope. Many researchers in the scientific research institutions and colleges have dedicated to studying the objective quality evaluation of VR images. Our executive summary is focused on the research of objective quality evaluation of VR images. First, the current situation of VR image quality evaluation is summarized. Then, the existing objective quality evaluation models of VR images are mainly introduced and analyzed. According to whether the models need to use the original undistorted image information as modeling reference, current objective quality evaluation models for VR images are divided into two types, including the full-reference (FR) and no-reference (NR) types. The ground truth based FR models need the completed original image information as reference, while the NR models can achieve quality evaluation of a distorted VR image without any reference information. Specifically, the FR models can be divided into two categories for VR images, including the peak-signal-to-noise ratio/structural similarity (PSNR/SSIM) based methods and the machine learning based methods. The latter first extract features from VR images and then train the quality evaluation model via the support vector regression method or the random forest method. The NR models are further divided into three categories: the equirectangular projection (ERP) expression space based methods, the other projection expression spaces based methods and the actual viewing space based methods. These models are classified according to the space in which features are extracted. For the first kind of models, the raw spherical VR image is first transferred to the ERP space for feature expression, while it is converted to other projection spaces or the actual viewing space for the other two kinds of methods. The reason why the ERP expression space based methods are listed as a separate category is that the ERP space is the default and the mostly used projection space. Followed the space transformation, there are also two options for the sequential quality evaluation consisting of the traditional quality evaluation methods and the deep-learning based methods. For the traditional ones, the manual features are first extracted and fused to generate the final quality score or fed into the model trainer to obtain the quality assessment model. For the deep-learning based methods, both the feature extraction and quality prediction steps are conducted based on deep neural networks. The pros and cons of them are further analyzed. In addition, the performance evaluation indexes of the objective quality evaluation models of VR images are introduced, which are consistent to other image quality evaluations. The detailed existing VR image databases are summarized subsequently. Finally, our review focuses on the applications of VR image objective quality evaluation models and predicts the future potentials further.关键词:image quality evaluation;objective evaluation;virtual reality (VR);spherical image;equirectangular projection (ERP)125|410|1更新时间:2024-08-15

Review

-

摘要:ObjectiveHuman eyes physiological features are challenged to be captured, which can reflect health, fatigue and emotion of human behaviors. Fatigue phenomenon can be judged according to the state of the patients' eyes. The state of the in-class students' eyes can be predicted by instructorsin terms of students' emotion, psychology and cognitive analyses. Targeted consumers can be recognized through their gaze location when shopping. Camera shot cannot be used to capture the changes in pupil size and orientation in the wild. Meanwhile, there is a lack of eye behavior related dataset with fine landmarks detection and segment similar to the real application scenario. Near-infrared and head-mounted cameras could be used to capture eye images. Light is used to distinguish the iris and pupil, which obtain a high-quality image. Head posture, illumination, occlusion and user-camera distance may affect the quality of image. Therefore, the images collection in the laboratory environment are difficult to apply in the real world.MethodAn eye region segmentation and landmark detection dataset can resolve the issue of mismatch results between the indoor and outdoor scenarios. Our research focuses on collection and annotation of a new eye region segment and landmark detection dataset (eye segment and landmark detection dataset, ESLD) in constraint of dataset for fine landmark detection and eye region, which contain multiple types of eye. First, facial images are collected. There are three ways to collect images, including the facial images of user when using the computer, images in the public dataset captured by the ordinary camera and the synthesized eye images, respectively. The number of images is developed to 1 386, 804 and 1 600, respectively. Second, eye region is cut out from the original image. Dlib is used to detect landmarks and eye region is segmented according to the labels of the completed face images involved. For an incomplete face images, eye region should be segment artificially. And then, all eye region images are normalized in 256×128 pixels. The eye region images are restored in a folder according to the type of acquisitions. Finally, annotators are initially to be trained and manually annotated images labels followed. In order to reduce the label error caused by human behavior factors, each annotator selects four images from each type of image for labeling. An experienced annotator will be checked after the landmarks are labeled and completed. The remaining images can be labeled when the annotate standard is reached. Each landmarks location is saved as json file and labelme is used to segment eye region derived the json file. A total of 2 404 images are obtained. Each image contains 16 landmarks around eyes, 12 landmarks around iris and 12 pupil surrounded landmarks. The segment labels are relevant to sclera, iris, and pupil and skip around eyes.ResultOur dataset is classified into training, testing and validation sets by 0.6∶0.2∶0.2. Our demonstration evaluates the proposed dataset using deep learning algorithms and provides baseline for each experiment. First, the model is trained by synthesized eye images. An experiment is conducted to recognize whether the eye is real or not. Our analyzed results show that model cannot recognize real and synthesis accurately, which indicate synthesis eye images can be used as training data. And, deep learning-based algorithms are used to eye region segment. Mask region convolutional neural network(Mask R-CNN) with different backbones are used to train the model. It shows that backbones with deep network structure can obtain high segment accuracy under the same training epoch and the mean average precision (mAP) is 0.965. Finally, Mask R-CNN is modified to landmarks detection task. Euclidean distance is used to test the model and the error is 5.828. Compared to eye region segment task, it is difficult to detect landmarks due to the small region of the eye. Deep structure is efficient to increase the accuracy of landmarks detection with eye region mask.ConclusionESLD is focused on multiple types of eye images in a real environment and bridge the gaps in the fine landmarks detection and segmentation in eye region. To study the relationship between eye state and emotion, a deep learning algorithm can be developed further based on combining ESLD with other datasets.关键词:in the wild;pupil segment;landmark detection;user identification;E-learning;dataset190|205|0更新时间:2024-08-15

摘要:ObjectiveHuman eyes physiological features are challenged to be captured, which can reflect health, fatigue and emotion of human behaviors. Fatigue phenomenon can be judged according to the state of the patients' eyes. The state of the in-class students' eyes can be predicted by instructorsin terms of students' emotion, psychology and cognitive analyses. Targeted consumers can be recognized through their gaze location when shopping. Camera shot cannot be used to capture the changes in pupil size and orientation in the wild. Meanwhile, there is a lack of eye behavior related dataset with fine landmarks detection and segment similar to the real application scenario. Near-infrared and head-mounted cameras could be used to capture eye images. Light is used to distinguish the iris and pupil, which obtain a high-quality image. Head posture, illumination, occlusion and user-camera distance may affect the quality of image. Therefore, the images collection in the laboratory environment are difficult to apply in the real world.MethodAn eye region segmentation and landmark detection dataset can resolve the issue of mismatch results between the indoor and outdoor scenarios. Our research focuses on collection and annotation of a new eye region segment and landmark detection dataset (eye segment and landmark detection dataset, ESLD) in constraint of dataset for fine landmark detection and eye region, which contain multiple types of eye. First, facial images are collected. There are three ways to collect images, including the facial images of user when using the computer, images in the public dataset captured by the ordinary camera and the synthesized eye images, respectively. The number of images is developed to 1 386, 804 and 1 600, respectively. Second, eye region is cut out from the original image. Dlib is used to detect landmarks and eye region is segmented according to the labels of the completed face images involved. For an incomplete face images, eye region should be segment artificially. And then, all eye region images are normalized in 256×128 pixels. The eye region images are restored in a folder according to the type of acquisitions. Finally, annotators are initially to be trained and manually annotated images labels followed. In order to reduce the label error caused by human behavior factors, each annotator selects four images from each type of image for labeling. An experienced annotator will be checked after the landmarks are labeled and completed. The remaining images can be labeled when the annotate standard is reached. Each landmarks location is saved as json file and labelme is used to segment eye region derived the json file. A total of 2 404 images are obtained. Each image contains 16 landmarks around eyes, 12 landmarks around iris and 12 pupil surrounded landmarks. The segment labels are relevant to sclera, iris, and pupil and skip around eyes.ResultOur dataset is classified into training, testing and validation sets by 0.6∶0.2∶0.2. Our demonstration evaluates the proposed dataset using deep learning algorithms and provides baseline for each experiment. First, the model is trained by synthesized eye images. An experiment is conducted to recognize whether the eye is real or not. Our analyzed results show that model cannot recognize real and synthesis accurately, which indicate synthesis eye images can be used as training data. And, deep learning-based algorithms are used to eye region segment. Mask region convolutional neural network(Mask R-CNN) with different backbones are used to train the model. It shows that backbones with deep network structure can obtain high segment accuracy under the same training epoch and the mean average precision (mAP) is 0.965. Finally, Mask R-CNN is modified to landmarks detection task. Euclidean distance is used to test the model and the error is 5.828. Compared to eye region segment task, it is difficult to detect landmarks due to the small region of the eye. Deep structure is efficient to increase the accuracy of landmarks detection with eye region mask.ConclusionESLD is focused on multiple types of eye images in a real environment and bridge the gaps in the fine landmarks detection and segmentation in eye region. To study the relationship between eye state and emotion, a deep learning algorithm can be developed further based on combining ESLD with other datasets.关键词:in the wild;pupil segment;landmark detection;user identification;E-learning;dataset190|205|0更新时间:2024-08-15

Dataset

-

摘要:ObjectiveThe acquired depth information has led to the research development of three-dimensional reconstruction and stereo vision. However, the acquired depth images issues have challenged of image holes and image noise due to the lack of depth information. The quality of the depth image is as a benched data source for each 3D-vision(3DV) system. Our method is focused on the lack of depth map information repair derived from objective factors in the depth acquisition process. It is required of the high precision, the spatial distribution difference between color and depth features, the interference of noise and blur, and the large scale holes information loss.MethodReal-time ability is relatively crucial in terms of the depth image recovery algorithms serving as pre-processing modules in the 3DV systems. The sequential filling method has been optimized in computational speed by processing each invalid point in one loop. The invalid points based pixels are obtained without depth values. By contrast, depth values captured pixels are referred to as valid points. Therefore, we facilitate a dual-scale sequential filling framework for depth image recovery. We carry out filling priority estimation and depth value prediction of the invalid points in this framework. For the evaluation of the priority of invalid points, we use conditional entropy as the benchmark for evaluating the priority of invalid point filling evaluation and verification. It is incredible to estimate the filling priority and filling depth value through the overall features of a single pixel and its 8-neighborhood. However, the use of multi-scale filtering increases the computational costs severely. We introduce the super-pixel over-segmentation algorithm to segment the input image into more small patches, which ensures the pixels inside the super-pixel homogeneous contexts like color, texture, and depth. We believe that the super-pixels can provide more reliable features in larger scale for priority estimation filling and depth value prediction. In addition, we optioned a simple linear iterative clustering (SLIC) algorithm to handle the super-pixel segmentation task and added a depth difference metric for the image characteristics of RGB-D to make it efficient and reliable. For depth estimation, we use maximum likelihood estimation to estimate the depth of invalid points integrated to the depth value exhaustive method. Finally, the restoration results are integrated on the pixel and super-pixel scales to accurately fill the holes in the depth image.ResultOur method is compared to 7 methods related to dataset Middlebury (MB), which shows great advantages on deep repair effection. The averaged peak signal-to-noise ratio (PSNR) is 47.955 dB and the averaged structural similarity index (SSIM) is 0.998 2. Our PSNR reached 34.697 dB and the SSIM reached 0.978 5 in MB based manual populated data set for deep repair. The method herein verifies that this algorithm has relatively strong efficiency in comparison to time efficiency validation. Our filling priority estimation, depth value prediction and double-scale improvement ability are evaluated in the ablation experimental section separately.ConclusionWe illustrate a dual-scale sequential filling framework for depth image recovery. The experimental results demonstrate that our algorithm proposed has its priority to optimize robustness, precision and efficiency.关键词:depth image recovery;sequential filling;fast approximation of conditional entropy;depth value prediction;super-pixel126|190|0更新时间:2024-08-15

摘要:ObjectiveThe acquired depth information has led to the research development of three-dimensional reconstruction and stereo vision. However, the acquired depth images issues have challenged of image holes and image noise due to the lack of depth information. The quality of the depth image is as a benched data source for each 3D-vision(3DV) system. Our method is focused on the lack of depth map information repair derived from objective factors in the depth acquisition process. It is required of the high precision, the spatial distribution difference between color and depth features, the interference of noise and blur, and the large scale holes information loss.MethodReal-time ability is relatively crucial in terms of the depth image recovery algorithms serving as pre-processing modules in the 3DV systems. The sequential filling method has been optimized in computational speed by processing each invalid point in one loop. The invalid points based pixels are obtained without depth values. By contrast, depth values captured pixels are referred to as valid points. Therefore, we facilitate a dual-scale sequential filling framework for depth image recovery. We carry out filling priority estimation and depth value prediction of the invalid points in this framework. For the evaluation of the priority of invalid points, we use conditional entropy as the benchmark for evaluating the priority of invalid point filling evaluation and verification. It is incredible to estimate the filling priority and filling depth value through the overall features of a single pixel and its 8-neighborhood. However, the use of multi-scale filtering increases the computational costs severely. We introduce the super-pixel over-segmentation algorithm to segment the input image into more small patches, which ensures the pixels inside the super-pixel homogeneous contexts like color, texture, and depth. We believe that the super-pixels can provide more reliable features in larger scale for priority estimation filling and depth value prediction. In addition, we optioned a simple linear iterative clustering (SLIC) algorithm to handle the super-pixel segmentation task and added a depth difference metric for the image characteristics of RGB-D to make it efficient and reliable. For depth estimation, we use maximum likelihood estimation to estimate the depth of invalid points integrated to the depth value exhaustive method. Finally, the restoration results are integrated on the pixel and super-pixel scales to accurately fill the holes in the depth image.ResultOur method is compared to 7 methods related to dataset Middlebury (MB), which shows great advantages on deep repair effection. The averaged peak signal-to-noise ratio (PSNR) is 47.955 dB and the averaged structural similarity index (SSIM) is 0.998 2. Our PSNR reached 34.697 dB and the SSIM reached 0.978 5 in MB based manual populated data set for deep repair. The method herein verifies that this algorithm has relatively strong efficiency in comparison to time efficiency validation. Our filling priority estimation, depth value prediction and double-scale improvement ability are evaluated in the ablation experimental section separately.ConclusionWe illustrate a dual-scale sequential filling framework for depth image recovery. The experimental results demonstrate that our algorithm proposed has its priority to optimize robustness, precision and efficiency.关键词:depth image recovery;sequential filling;fast approximation of conditional entropy;depth value prediction;super-pixel126|190|0更新时间:2024-08-15 -

摘要:ObjectiveQuick response code (QR code) is a kind of widely used 2D barcode nowadays. A QR code is a square symbol consisting of dark and light modules. There is a great need to accommodate more data in the target area or encode the same input data in a smaller area. The input data is first encoded into a bit stream. Different encoding algorithms may output different bit streams via assigned input data. The length of bit stream determines the version of a QR code, and the version determines the amount of modules per side. A QR code with a smaller version takes a smaller area without the size of modules changing, or has a larger module size without changing the area. The bit stream consists of one or multiple segments, and the encoding mode of each segment is chosen from three modes separately, i.e., numeric mode, alphanumeric mode and byte mode. The numeric mode can only encode digits, and every 3 digits are encoded into 10 bits. The alphanumeric mode can encode digits, upper case letters, and 9 kinds of punctuations, and every 2 characters are encoded into 11 bits. The byte mode can encode any kind of binary data, but each byte is encoded into 8 bits. Compared to using a single mode to encode the overall input data, different modes interchange may result in a shorter bit stream. But different modes switching leads to redundancy on bit stream length.MethodThe key to minimizing the version of QR code is to minimize the length of bit stream. The minimization should balance the redundancy of data encoding and the mode switching efficiency. The QR code specification gives "optimization of bit stream length" in annex. However, the illustration has proposed that the optimization method may not output the minimum bit stream. The demonstration has mentioned algorithms as below. The first algorithm is called "minimization algorithm", which converts the minimization of bit stream length to a dynamic programming problem. The algorithm can output the bit stream with minimum length by resolving the dynamic programming problem. Time cost is bounded in a linear function to the length of input data. The second algorithm is called "URL minimization algorithm", which is optimized for cases that the input data is a uniform resource locator (URL) further. The customized optimization for URL makes use of two properties: 1) the scheme field and the host field of a URL are case-insensitive, and 2) the scheme-specific part of a URL can be escaped. The properties have been guaranteed via request for comments (RFC) 1 738 and RFC 1 035. A lower case letter can only be encoded in byte mode. By converting a lower case letter in a case-insensitive field to an upper case letter, the letter can be encoded in either byte mode or alphanumeric mode. The property provides more options in dynamic programming. In addition, each character in the scheme-specific part of a URL may be converted to an escape sequence. The escape sequence contains 3 alphanumeric mode characters. Hence, it can be encoded in alphanumeric mode or a combination of alphanumeric mode and numeric mode. This kind of conversion also provides more choices in dynamic programming. The URL minimization algorithm takes advantage of such two kinds of conversions to calculate the bit stream of a URL with minimum length.ResultA QR code data set (also called a test set) is constructed to verify the efficiency of the proposed algorithms. The test set is collected from 6 web image search engines, i.e., Baidu, Sogou, 360, Google, Bing, and Yahoo. QR codes with different bit streams or different error correction levels are regarded as different QR codes. The test set contains 2 282 distinct QR codes, where 603 QR codes encode non-URL data and the other 1 679 QR codes encode URL data. Four algorithms are compared on the test set, i.e., 1) the optimization method given in the QR code specification, 2) encoding input data in a single segment, 3) the minimization algorithm, and 4) the URL minimization algorithm. The bit stream lengths of initial QR codes are offered for comparison as well. The demonstrations show that for the non-URL test set, average bit stream length reduces 0.4% (compared to the optimization in the QR code specification), QR codes with reduced bit stream lengths account for 9.1%, and QR codes with reduced versions account for 1.2%; for the URL test set, average bit stream length reduces 13.9%, QR codes with reduced bit stream lengths account for 98.4%, and QR codes with reduced versions account for 31.7%. An ablation study on the two components of URL minimization has been implemented, i.e., 1) utilizing case-insensitive fields and 2) converting characters to escaping sequences. The calculating results have shown that each component has an effect and combining both components achieves the best performance, i.e., the minimum bit stream length and the minimum version. In the context of URL test set, average bit stream length reduces only 0.5% using the minimization algorithm, while the value reduces 9.1% using the URL minimization algorithm; QR codes with reduced bit stream lengths account for 10.4% using the minimization algorithm, but the value is 98.4% for the URL minimization algorithm; QR codes with reduced versions account for 1.3% using the minimization algorithm, while the value is 31.7% for the URL minimization algorithm. The average time cost to encode a message is 2.45 microsecond.ConclusionThe proposed algorithms minimize the length of bit stream. Data capacity of QR codes has been increased without changing QR code format or revising the error correction capability. The URL minimization algorithm is qualified under the huge amounts of QR codes based on URL encoded data. The proposed algorithms are friendly-used, i.e., there are no hyper-parameters to be tuned, and users input data and error correction level only. The illustrated algorithms have been verified in a realistic running speed, and it is proved that the time costs of both algorithms are bounded in a linear function to the length of input data.关键词:2D code;quick response code (QR code);QR code encoding;dynamic programming;uniform resource locators (URL)98|167|0更新时间:2024-08-15

摘要:ObjectiveQuick response code (QR code) is a kind of widely used 2D barcode nowadays. A QR code is a square symbol consisting of dark and light modules. There is a great need to accommodate more data in the target area or encode the same input data in a smaller area. The input data is first encoded into a bit stream. Different encoding algorithms may output different bit streams via assigned input data. The length of bit stream determines the version of a QR code, and the version determines the amount of modules per side. A QR code with a smaller version takes a smaller area without the size of modules changing, or has a larger module size without changing the area. The bit stream consists of one or multiple segments, and the encoding mode of each segment is chosen from three modes separately, i.e., numeric mode, alphanumeric mode and byte mode. The numeric mode can only encode digits, and every 3 digits are encoded into 10 bits. The alphanumeric mode can encode digits, upper case letters, and 9 kinds of punctuations, and every 2 characters are encoded into 11 bits. The byte mode can encode any kind of binary data, but each byte is encoded into 8 bits. Compared to using a single mode to encode the overall input data, different modes interchange may result in a shorter bit stream. But different modes switching leads to redundancy on bit stream length.MethodThe key to minimizing the version of QR code is to minimize the length of bit stream. The minimization should balance the redundancy of data encoding and the mode switching efficiency. The QR code specification gives "optimization of bit stream length" in annex. However, the illustration has proposed that the optimization method may not output the minimum bit stream. The demonstration has mentioned algorithms as below. The first algorithm is called "minimization algorithm", which converts the minimization of bit stream length to a dynamic programming problem. The algorithm can output the bit stream with minimum length by resolving the dynamic programming problem. Time cost is bounded in a linear function to the length of input data. The second algorithm is called "URL minimization algorithm", which is optimized for cases that the input data is a uniform resource locator (URL) further. The customized optimization for URL makes use of two properties: 1) the scheme field and the host field of a URL are case-insensitive, and 2) the scheme-specific part of a URL can be escaped. The properties have been guaranteed via request for comments (RFC) 1 738 and RFC 1 035. A lower case letter can only be encoded in byte mode. By converting a lower case letter in a case-insensitive field to an upper case letter, the letter can be encoded in either byte mode or alphanumeric mode. The property provides more options in dynamic programming. In addition, each character in the scheme-specific part of a URL may be converted to an escape sequence. The escape sequence contains 3 alphanumeric mode characters. Hence, it can be encoded in alphanumeric mode or a combination of alphanumeric mode and numeric mode. This kind of conversion also provides more choices in dynamic programming. The URL minimization algorithm takes advantage of such two kinds of conversions to calculate the bit stream of a URL with minimum length.ResultA QR code data set (also called a test set) is constructed to verify the efficiency of the proposed algorithms. The test set is collected from 6 web image search engines, i.e., Baidu, Sogou, 360, Google, Bing, and Yahoo. QR codes with different bit streams or different error correction levels are regarded as different QR codes. The test set contains 2 282 distinct QR codes, where 603 QR codes encode non-URL data and the other 1 679 QR codes encode URL data. Four algorithms are compared on the test set, i.e., 1) the optimization method given in the QR code specification, 2) encoding input data in a single segment, 3) the minimization algorithm, and 4) the URL minimization algorithm. The bit stream lengths of initial QR codes are offered for comparison as well. The demonstrations show that for the non-URL test set, average bit stream length reduces 0.4% (compared to the optimization in the QR code specification), QR codes with reduced bit stream lengths account for 9.1%, and QR codes with reduced versions account for 1.2%; for the URL test set, average bit stream length reduces 13.9%, QR codes with reduced bit stream lengths account for 98.4%, and QR codes with reduced versions account for 31.7%. An ablation study on the two components of URL minimization has been implemented, i.e., 1) utilizing case-insensitive fields and 2) converting characters to escaping sequences. The calculating results have shown that each component has an effect and combining both components achieves the best performance, i.e., the minimum bit stream length and the minimum version. In the context of URL test set, average bit stream length reduces only 0.5% using the minimization algorithm, while the value reduces 9.1% using the URL minimization algorithm; QR codes with reduced bit stream lengths account for 10.4% using the minimization algorithm, but the value is 98.4% for the URL minimization algorithm; QR codes with reduced versions account for 1.3% using the minimization algorithm, while the value is 31.7% for the URL minimization algorithm. The average time cost to encode a message is 2.45 microsecond.ConclusionThe proposed algorithms minimize the length of bit stream. Data capacity of QR codes has been increased without changing QR code format or revising the error correction capability. The URL minimization algorithm is qualified under the huge amounts of QR codes based on URL encoded data. The proposed algorithms are friendly-used, i.e., there are no hyper-parameters to be tuned, and users input data and error correction level only. The illustrated algorithms have been verified in a realistic running speed, and it is proved that the time costs of both algorithms are bounded in a linear function to the length of input data.关键词:2D code;quick response code (QR code);QR code encoding;dynamic programming;uniform resource locators (URL)98|167|0更新时间:2024-08-15

Image Processing and Coding

-

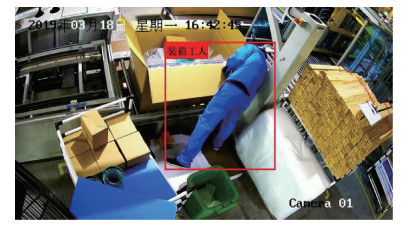

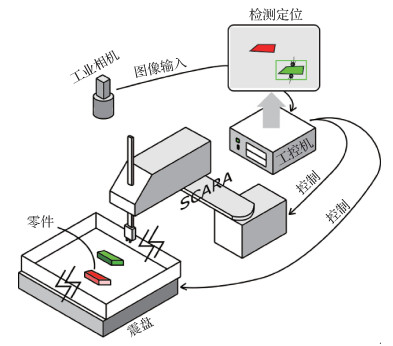

摘要:ObjectiveThe action recognition technology is proactive in computer vision contexts, such as intelligent video surveillance, human-computer interaction, virtual reality, and medical image analysis. It plays an important role in the automated and intelligent modern manufacturing process, but the complexity of the actual manufacturing environment has still been challenging. The research direction is attributed to the deep neural networks largely, especially the 3D convolutional networks, which mainly use 3D convolution to capture temporal information. The 3D convolutional networks can extract the spatio-temporal features of videos better with the added temporal dimension compared to 2D convolutional networks. At present, it shows good performance in action recognition through the optical flow melting in 3D convolutional network, but it still cannot solve the problem of human body being occluded, and the computational cost of optical flow is complicated and cannot be applied in real-time scenes. The product qualification rate is required to be satisfied in the context of action recognition application in production scenes. It is necessary to rank out the unqualified products as much as possible while ensuring high accuracy and high true negative rate (TNR) of detection results. It is challenged to optimize the true negative rate among the existing action recognition methods. Our analysis facilitates a packing action recognition method based on dual-view 3D convolutional network.MethodFirst, we extract motion features better through stacked residual frames as inputs, replacing optical flow that is not available in the real-time scene. The original RGB images and the residual frames are input to two parallel 3D ResNeXt101, and a concatenation layer is used to concatenate the features extracted in the last convolution layer of the two 3D ResNext101. Next, we adopts a dual-view structure to resolve the issue of human body being occluded, optimizes 3D ResNeXt101 into a dual-view model, builds up a learnable dual-view pooling layer for multifaceted feature fusion of views, and then uses this dual-view 3D ResNeXt101 model for action recognition. Finally, a noise-reducing self-encoder and two-class support vector machine (SVM) are added in our model to improve the true negative rate (TNR) of the detection results further. The dual-view pooling derived features are input to the noise-reducing self-encoder in the model, and the features are optimized and downscaled by the trained noise-reducing self-encoder, and then the two-class SVM model is used for secondary recognition.ResultWe conducted experiments in a packing scenario and evaluated using two metrics like accuracy rate and true-negative rate. The accuracy of our packing action recognition model is 94.2%, and the true negative rate is 98.9%, which optimizes current action recognition methods. Our accuracy is increased from 91.1% to 95.8% via the dual-view structure. The accuracy of the model is increased from 88.2% to 95.8% based on the residual frames module. If the residual frames module is altered by optical flow module, the accuracy rate is 96.2%, which is equivalent to the model using the residual frames module. The accuracy is only 91.5% that the unique two-class SVM structure added to the model without the denoising autoencoder. Thanks to the optimization and dimensionality reduction of the feature vectors by the denoising autoencoder, the accuracy reaches 94.2% via the combination of the denoising autoencoder and the two-class SVM both, the highest true negative rate of 98.9% obtained. After adding denoising autoencoder and two-class SVM to the model, the true negative rate of the model increased from 95.7% to 98.9%, while the accuracy rate decreased by 1.6%. Our demonstrated result is evaluated in the public dataset UCF (University of Central Florida) 101.Our single-view model obtained an accuracy of 97.1%, which achieved the second highest accuracy among all compared methods, second only to 3D ResNeXt101's 98.0%.ConclusionWe use a dual-view 3D ResNeXt101 model for effective packing action recognition. To obtain richer features from RGB images and differential images, two parallel 3D ResNeXt101 are used to learn spatio-temporal features and a dual-view feature fusion is accomplished using a learnable view pooling layer. In addition, a stacked denoising autoencoder is trained to optimize and downscale the features extracted in terms of the dual-view 3D ResNeXt101 model. To improve the true negative rate, a two-class SVM model is used for secondary detection. Our method can recognize the boxing action of the packing workers accurately and realize the high true negative rate (TNR) of the recognition results.关键词:action recognition;dual-view;3D convolutional neural network;denoising autoencoder;support vectormachine(SVM)115|207|1更新时间:2024-08-15

摘要:ObjectiveThe action recognition technology is proactive in computer vision contexts, such as intelligent video surveillance, human-computer interaction, virtual reality, and medical image analysis. It plays an important role in the automated and intelligent modern manufacturing process, but the complexity of the actual manufacturing environment has still been challenging. The research direction is attributed to the deep neural networks largely, especially the 3D convolutional networks, which mainly use 3D convolution to capture temporal information. The 3D convolutional networks can extract the spatio-temporal features of videos better with the added temporal dimension compared to 2D convolutional networks. At present, it shows good performance in action recognition through the optical flow melting in 3D convolutional network, but it still cannot solve the problem of human body being occluded, and the computational cost of optical flow is complicated and cannot be applied in real-time scenes. The product qualification rate is required to be satisfied in the context of action recognition application in production scenes. It is necessary to rank out the unqualified products as much as possible while ensuring high accuracy and high true negative rate (TNR) of detection results. It is challenged to optimize the true negative rate among the existing action recognition methods. Our analysis facilitates a packing action recognition method based on dual-view 3D convolutional network.MethodFirst, we extract motion features better through stacked residual frames as inputs, replacing optical flow that is not available in the real-time scene. The original RGB images and the residual frames are input to two parallel 3D ResNeXt101, and a concatenation layer is used to concatenate the features extracted in the last convolution layer of the two 3D ResNext101. Next, we adopts a dual-view structure to resolve the issue of human body being occluded, optimizes 3D ResNeXt101 into a dual-view model, builds up a learnable dual-view pooling layer for multifaceted feature fusion of views, and then uses this dual-view 3D ResNeXt101 model for action recognition. Finally, a noise-reducing self-encoder and two-class support vector machine (SVM) are added in our model to improve the true negative rate (TNR) of the detection results further. The dual-view pooling derived features are input to the noise-reducing self-encoder in the model, and the features are optimized and downscaled by the trained noise-reducing self-encoder, and then the two-class SVM model is used for secondary recognition.ResultWe conducted experiments in a packing scenario and evaluated using two metrics like accuracy rate and true-negative rate. The accuracy of our packing action recognition model is 94.2%, and the true negative rate is 98.9%, which optimizes current action recognition methods. Our accuracy is increased from 91.1% to 95.8% via the dual-view structure. The accuracy of the model is increased from 88.2% to 95.8% based on the residual frames module. If the residual frames module is altered by optical flow module, the accuracy rate is 96.2%, which is equivalent to the model using the residual frames module. The accuracy is only 91.5% that the unique two-class SVM structure added to the model without the denoising autoencoder. Thanks to the optimization and dimensionality reduction of the feature vectors by the denoising autoencoder, the accuracy reaches 94.2% via the combination of the denoising autoencoder and the two-class SVM both, the highest true negative rate of 98.9% obtained. After adding denoising autoencoder and two-class SVM to the model, the true negative rate of the model increased from 95.7% to 98.9%, while the accuracy rate decreased by 1.6%. Our demonstrated result is evaluated in the public dataset UCF (University of Central Florida) 101.Our single-view model obtained an accuracy of 97.1%, which achieved the second highest accuracy among all compared methods, second only to 3D ResNeXt101's 98.0%.ConclusionWe use a dual-view 3D ResNeXt101 model for effective packing action recognition. To obtain richer features from RGB images and differential images, two parallel 3D ResNeXt101 are used to learn spatio-temporal features and a dual-view feature fusion is accomplished using a learnable view pooling layer. In addition, a stacked denoising autoencoder is trained to optimize and downscale the features extracted in terms of the dual-view 3D ResNeXt101 model. To improve the true negative rate, a two-class SVM model is used for secondary detection. Our method can recognize the boxing action of the packing workers accurately and realize the high true negative rate (TNR) of the recognition results.关键词:action recognition;dual-view;3D convolutional neural network;denoising autoencoder;support vectormachine(SVM)115|207|1更新时间:2024-08-15 -

摘要:ObjectiveElectrical power lines construction, plays an important role in the urban development, especially the high-voltage power lines. Engineering vehicles are composed of excavators and wheeled cranes contexts, which are used in construction sites. If the engineering vehicle is working on site surrounding the high-voltage power line, its bucket or boom would probably enter the high-voltage breakdown range when they are lifted, which is very easy to result in accidents such as short circuit breakdowns. So, it is necessary to find out the adequate engineering vehicles working scenario near high-voltage power line. The multiple rotors unmanned aerial vehicle (UAV) is widely used to acquire amounts of aerial images for power lines inspection. The engineering vehicle information should be recognized from these aerial images manually in common. The classical pattern recognition methods and some deep learning models like you only look once version 5 (YOLOv5) has been challenged to some issues of recognizing the engineering vehicle in acquired aerial image, such as inefficiency and inaccuracy. The classical pattern recognition method needs to manually extract the features. Some deep learning models usually have large parameter scale and complex network structure, and are not accurate enough while the training set is small. In order to solve these problems, our research demonstrated an improved capsule network model to recognize engineering vehicles from aerial images. Capsule network improvement is mainly on the two aspects as mentioned below: one is to improve the network structure of the capsule network model, and the other one is to improve the dynamic routing algorithm of the capsule network.MethodFirst, we built up an image dataset, which includes 1 890 aerial images in total. The dataset is then separated into training set and testing set at a ratio of 4 ∶1. Next, we improved the network structure of capsule network through a multi-layer densely connected method to extract more features of the engineering vehicle from the image, named improved model No.1. The multi-layer densely connected capsule network has 3 layers, 5 layers or 7 layers probably. Third, we facilitated the dynamic routing method of the capsule network by replacing the softmax function with the leaky-softmax function to improve the anti-interference performance of the capsule network, named improved model No.2. We named the model with multi-layer densely connected network and the leaky-softmax function as the improved model No.3. Fourth, we embedded several key parameters on those models. The key parameters are related to the number of layers in the capsule network, the routing coefficient and squeeze coefficient in the dynamic routing algorithm.ResultThe aim of first group of experiments is to validate whether the two improved approaches are effective or not. We compared the mean average precision (mAP) of the original capsule network model with improvement model No.1, improvement model No.2 and improvement model No.3. All models use the 3-layer densely connected capsule network. Our experimental results illustrate that the mAP of the improvement model No.1 is 91.70%, and the mAP of the model with improvement No.2 is 90.01%, which are 2.21% and 0.54% each better than the original capsule network. The improvement model No.3 further improves the recognition accuracy, whose mAP reaching 92.10%. The aim of second group of experiments is to classify the issue of the number of network layers influence the mAP of those models. The experimental results demonstrate that the number of network layers influences the mAP greatly. When the number of network layers is small, the mAP increases while the number of network layers increasing. After a peak mAP of recognition shown, the mAP often decreases while the number of network layers increasing. So, their relationship is non-monotonic and nonlinear. In the application case, a 5-layer capsule network has the best recognition mAP. Additionally, the various trends of mAP are not affected by the improvement of dynamic routing algorithm. Furthermore, the efficiency of those improved models all decreased dramatically while the number of capsule network layers increase. And the parameter volume of those improved models is not obviously various, which means that the volume of parameter is irrelevant to the target recognition precision. The aim of third group of experiments is to find out the optimal model with appropriate routing coefficient and squeeze coefficient. This group of experimental results show that the mAP of 5-layer densely connected capsule network reaches up to 94.56% while the routing coefficient is 5 and the squeeze coefficient is l, which is an increase of 5.07% compared to the original capsule network. Meanwhile, the parameter volume of this optimal model is close to original model. Therefore, this optimal model has quite qualified mAP and small parameter volume. The aim of fourth group of experiments is to compare the performance of optimal model with other models. This kind of result shows that our optimal model is better than the classical pattern recognition model and YOLOv5x model in mAP, and the parameter volume of the optimal model is smaller.ConclusionOur research harnessed two approaches to improve the capsule network model for the engineering vehicles recognition derived of UAV aerial images. Our demonstrated experiments illustrate that this improved model has the small parameter volume and quite good recognizing precision, which is very significant for the UAV to recognize the airborne target information.关键词:aerial image of unmanned aerial vehicle(UAV);recognition of engineering vehicle;capsule network;dynamic routing algorithm;densely connected network90|94|1更新时间:2024-08-15

摘要:ObjectiveElectrical power lines construction, plays an important role in the urban development, especially the high-voltage power lines. Engineering vehicles are composed of excavators and wheeled cranes contexts, which are used in construction sites. If the engineering vehicle is working on site surrounding the high-voltage power line, its bucket or boom would probably enter the high-voltage breakdown range when they are lifted, which is very easy to result in accidents such as short circuit breakdowns. So, it is necessary to find out the adequate engineering vehicles working scenario near high-voltage power line. The multiple rotors unmanned aerial vehicle (UAV) is widely used to acquire amounts of aerial images for power lines inspection. The engineering vehicle information should be recognized from these aerial images manually in common. The classical pattern recognition methods and some deep learning models like you only look once version 5 (YOLOv5) has been challenged to some issues of recognizing the engineering vehicle in acquired aerial image, such as inefficiency and inaccuracy. The classical pattern recognition method needs to manually extract the features. Some deep learning models usually have large parameter scale and complex network structure, and are not accurate enough while the training set is small. In order to solve these problems, our research demonstrated an improved capsule network model to recognize engineering vehicles from aerial images. Capsule network improvement is mainly on the two aspects as mentioned below: one is to improve the network structure of the capsule network model, and the other one is to improve the dynamic routing algorithm of the capsule network.MethodFirst, we built up an image dataset, which includes 1 890 aerial images in total. The dataset is then separated into training set and testing set at a ratio of 4 ∶1. Next, we improved the network structure of capsule network through a multi-layer densely connected method to extract more features of the engineering vehicle from the image, named improved model No.1. The multi-layer densely connected capsule network has 3 layers, 5 layers or 7 layers probably. Third, we facilitated the dynamic routing method of the capsule network by replacing the softmax function with the leaky-softmax function to improve the anti-interference performance of the capsule network, named improved model No.2. We named the model with multi-layer densely connected network and the leaky-softmax function as the improved model No.3. Fourth, we embedded several key parameters on those models. The key parameters are related to the number of layers in the capsule network, the routing coefficient and squeeze coefficient in the dynamic routing algorithm.ResultThe aim of first group of experiments is to validate whether the two improved approaches are effective or not. We compared the mean average precision (mAP) of the original capsule network model with improvement model No.1, improvement model No.2 and improvement model No.3. All models use the 3-layer densely connected capsule network. Our experimental results illustrate that the mAP of the improvement model No.1 is 91.70%, and the mAP of the model with improvement No.2 is 90.01%, which are 2.21% and 0.54% each better than the original capsule network. The improvement model No.3 further improves the recognition accuracy, whose mAP reaching 92.10%. The aim of second group of experiments is to classify the issue of the number of network layers influence the mAP of those models. The experimental results demonstrate that the number of network layers influences the mAP greatly. When the number of network layers is small, the mAP increases while the number of network layers increasing. After a peak mAP of recognition shown, the mAP often decreases while the number of network layers increasing. So, their relationship is non-monotonic and nonlinear. In the application case, a 5-layer capsule network has the best recognition mAP. Additionally, the various trends of mAP are not affected by the improvement of dynamic routing algorithm. Furthermore, the efficiency of those improved models all decreased dramatically while the number of capsule network layers increase. And the parameter volume of those improved models is not obviously various, which means that the volume of parameter is irrelevant to the target recognition precision. The aim of third group of experiments is to find out the optimal model with appropriate routing coefficient and squeeze coefficient. This group of experimental results show that the mAP of 5-layer densely connected capsule network reaches up to 94.56% while the routing coefficient is 5 and the squeeze coefficient is l, which is an increase of 5.07% compared to the original capsule network. Meanwhile, the parameter volume of this optimal model is close to original model. Therefore, this optimal model has quite qualified mAP and small parameter volume. The aim of fourth group of experiments is to compare the performance of optimal model with other models. This kind of result shows that our optimal model is better than the classical pattern recognition model and YOLOv5x model in mAP, and the parameter volume of the optimal model is smaller.ConclusionOur research harnessed two approaches to improve the capsule network model for the engineering vehicles recognition derived of UAV aerial images. Our demonstrated experiments illustrate that this improved model has the small parameter volume and quite good recognizing precision, which is very significant for the UAV to recognize the airborne target information.关键词:aerial image of unmanned aerial vehicle(UAV);recognition of engineering vehicle;capsule network;dynamic routing algorithm;densely connected network90|94|1更新时间:2024-08-15 -

摘要:ObjectiveThe contexts of action analysis and recognition is challenged for a number of applications like video surveillance, personal assistance, human-machine interaction, and sports video analysis. Thanks to the video-based action recognition methods, an skeleton data based approach has been focused on recently due to its complex scenarios. To locate the 2D or 3D spatial coordinates of the joints, the skeleton data is mainly obtained via depth sensors or video-based pose estimation algorithms. Graph convolutional networks (GCNs) have been developed to resolve the issue in terms of the traditional methods cannot capture the completed dependence of joints with no graphical structure of skeleton data. The critical viewpoint is challenged to determine an adaptive graph structure for the skeleton data at the convolutional layers. The spatio-temporal graph convolutional network (ST-GCN) has been facilitated to learn spatial and temporal features simultaneously through the temporal edges plus between the corresponding joints of the spatial graph in consistent frames. However, ST-GCN focuses on the physical connection between joints of the human body in the spatial graph, and ignores internal dependencies in motion. Spatio-temporal modeling and channel-wise dependencies are crucial for capturing motion information in videos for the action recognition task. Despite of the credibility in skeleton-based action recognition of GCNs, the relative improvement of classical attention mechanism applications has been constrained. Our research highlights the importance of spatio-temporal interactions and channel-wise dependencies both in accordance with a novel multi-dimensional feature fusion attention mechanism (M2FA).MethodOur proposed model explicitly leverages comprehensive dependency information by feature fusion module embedded in the framework, which is differentiated from other action recognition models with additional information flow or complicated superposition of multiple existing attention modules. Given medium feature maps, M2FA infers the feature descriptors on the spatial, temporal and channel scales sequentially. The fusion of the feature descriptors filters the input feature maps for adaptive feature refinement. As M2FA is being a lightweight and general module, it can be integrated into any skeleton-based architecture seamlessly with end-to-end trainable attributes following the core recognition methods.ResultTo verify its effectiveness, our algorithm is validated and analyzed on two large-scale skeleton-based action recognition datasets: NTU-RGBD and Kinetics-Skeleton. Our experiments are carried out ablation studies to demonstrate the advantages of multi-dimensional feature fusion on the two datasets. Our analyses demonstrate the merit of M2FA for skeleton-based action recognition. On the Kinetics-Skeleton dataset, the action recognition rate of the proposed algorithm is 1.8% higher than that of the baseline algorithm (2s-AGCN). On cross-view benchmark of NTU-RGBD dataset, the human action recognition accuracy of the proposed method is 96.1%, which is higher than baseline method. In addition, the action recognition rate of the proposed method is 90.1% on cross-subject benchmark of NTU-RGBD dataset. We showed that the skeleton-based action recognition model, known as 2s-AGCN, can be significantly improved in terms of accuracy based on adaptive attention mechanism incorporation. Our multi-dimensional feature fusion attention mechanism, called M2FA, captures spatio-temporal interactions and interconnections between potential channels.ConclusionWe developed a novel multi-dimensional feature fusion attention mechanism (M2FA) that captures spatio-temporal interactions and channel-wise dependencies at the same time. Our experimental results show consistent improvements in classification and its priorities of M2FA.关键词:action recognition;skeleton information;graph convolutional network (GCN);attention mechanism;spatio-temporal interaction;channel-wise dependencies;multi-dimensional feature fusion113|164|4更新时间:2024-08-15

摘要:ObjectiveThe contexts of action analysis and recognition is challenged for a number of applications like video surveillance, personal assistance, human-machine interaction, and sports video analysis. Thanks to the video-based action recognition methods, an skeleton data based approach has been focused on recently due to its complex scenarios. To locate the 2D or 3D spatial coordinates of the joints, the skeleton data is mainly obtained via depth sensors or video-based pose estimation algorithms. Graph convolutional networks (GCNs) have been developed to resolve the issue in terms of the traditional methods cannot capture the completed dependence of joints with no graphical structure of skeleton data. The critical viewpoint is challenged to determine an adaptive graph structure for the skeleton data at the convolutional layers. The spatio-temporal graph convolutional network (ST-GCN) has been facilitated to learn spatial and temporal features simultaneously through the temporal edges plus between the corresponding joints of the spatial graph in consistent frames. However, ST-GCN focuses on the physical connection between joints of the human body in the spatial graph, and ignores internal dependencies in motion. Spatio-temporal modeling and channel-wise dependencies are crucial for capturing motion information in videos for the action recognition task. Despite of the credibility in skeleton-based action recognition of GCNs, the relative improvement of classical attention mechanism applications has been constrained. Our research highlights the importance of spatio-temporal interactions and channel-wise dependencies both in accordance with a novel multi-dimensional feature fusion attention mechanism (M2FA).MethodOur proposed model explicitly leverages comprehensive dependency information by feature fusion module embedded in the framework, which is differentiated from other action recognition models with additional information flow or complicated superposition of multiple existing attention modules. Given medium feature maps, M2FA infers the feature descriptors on the spatial, temporal and channel scales sequentially. The fusion of the feature descriptors filters the input feature maps for adaptive feature refinement. As M2FA is being a lightweight and general module, it can be integrated into any skeleton-based architecture seamlessly with end-to-end trainable attributes following the core recognition methods.ResultTo verify its effectiveness, our algorithm is validated and analyzed on two large-scale skeleton-based action recognition datasets: NTU-RGBD and Kinetics-Skeleton. Our experiments are carried out ablation studies to demonstrate the advantages of multi-dimensional feature fusion on the two datasets. Our analyses demonstrate the merit of M2FA for skeleton-based action recognition. On the Kinetics-Skeleton dataset, the action recognition rate of the proposed algorithm is 1.8% higher than that of the baseline algorithm (2s-AGCN). On cross-view benchmark of NTU-RGBD dataset, the human action recognition accuracy of the proposed method is 96.1%, which is higher than baseline method. In addition, the action recognition rate of the proposed method is 90.1% on cross-subject benchmark of NTU-RGBD dataset. We showed that the skeleton-based action recognition model, known as 2s-AGCN, can be significantly improved in terms of accuracy based on adaptive attention mechanism incorporation. Our multi-dimensional feature fusion attention mechanism, called M2FA, captures spatio-temporal interactions and interconnections between potential channels.ConclusionWe developed a novel multi-dimensional feature fusion attention mechanism (M2FA) that captures spatio-temporal interactions and channel-wise dependencies at the same time. Our experimental results show consistent improvements in classification and its priorities of M2FA.关键词:action recognition;skeleton information;graph convolutional network (GCN);attention mechanism;spatio-temporal interaction;channel-wise dependencies;multi-dimensional feature fusion113|164|4更新时间:2024-08-15 -

摘要:ObjectiveThe task of action recognition is focused on multi-frame images analysis like the pose of the human body from a given sensor input or recognize the in situ action of the human body through the obtained images. Action recognition has a wide range of applications in ground truth scenarios, such as human interaction, action analysis and monitoring. Specifically, some illegal human behaviors monitoring in public sites related to bus interchange, railway stations and airports. At present, most of skeleton-based methods are required to use spatio-temporal information in order to obtain good results. Graph convolutional network (GCN) can combine space and time information effectively. However, GCN-based methods have high computational complexity. The integrated strategies of attention modules and multi-stream fusion will cause lower efficiency in the completed training process. The issue of algorithm cost as well as ensuring accuracy is essential to be dealt with in action recognition. Shift graph convolutional network (Shift-GCN) is applied shift to GCN effectively. Shift-GCN is composed of novel shift graph operations and lightweight point-wise convolutions, where the shift graph operations provide flexible receptive fields for spatio-temporal graphs. Our proposed Shift-GCN has its priority with more than 10× less computational complexity based on three datasets for skeleton-based action recognition However, the featured network is redundant and the internal structural design of the network has not yet optimized. Therefore, our research analysis optimizes it on the basis of lightweight Shift-GCN and finally gets our own integer sparse graph convolutional network (IntSparse-GCN).MethodIn order to effectively solve the feature redundancy problem of Shift-GCN, we proposes to move each layer of the network on the basis of the feature shift operation that the odd-numbered columns are moved up and the even-numbered columns are moved down and the removed part is replaced with 0. The input and output of is set to an integer multiple of the joint point. First, we adopt a basic network structure similar to the previous network parameters. In the process of designing the number of input and output channels, try to make the 0 in the characteristics of each joint point balanced and finally get the optimization network structure. This network makes the position of almost half of the feature channel 0, which can express features more accurately, making the feature matrix a sparse feature matrix with strong regularity. The network can improve the robustness of the model and the accuracy of recognition more effectively. Next, we analyzed the mask function in Shift-GCN. The results are illustrated that the learned network mask is distributed in a range centered on 0 and the learned weights will focus on few features. Most of features do not require mask intervention. Finally, our experiments found that more than 80% of the mask function is ineffective. Hence, we conducted a lot of experiments and found that the mask value in different intervals is set to 0. The influence is irregular, so we designed an automated traversal method to obtain the most accurate optimized parameters and then get the optimal network model. Not only improves the accuracy of the network, but also reduces the multiplication operation of the feature matrix and the mask vector.ResultOur ablation experiment shows that each algorithm improvement can harness the ability of the overall algorithm. On the X-sub dataset, the Top-1 of 1 stream(s) IntSparse-GCN reached 87.98%, the Top-1 of 1 s IntSparse-GCN+M-Sparse reached 88.01%; the Top-1 of 2 stream(s) IntSparse-GCN reached 89.80%, and the Top-1 of 2 s IntSparse-GCN+M-Sparse's Top-1 reached 89.82%; 4 stream(s) IntSparse-GCN's Top-1 reached 90.72%, 4 s IntSparse-GCN+M-Sparse's Top-1 reached 90.72%., Our evaluation is carried out on the NTU RGB + D dataset, X-view's 1 s IntSparse-GCN+M-Sparse's Top-1 reached 94.89%, and 2 s IntSparse-GCN+M-Sparse's Top-1 reached 96.21%, and the Top-1 of 4 s IntSparse-GCN+M-Sparse reached 96.57% through the ablation experiment, the Top-1 of 1s IntSparse-GCN+M-Sparse reached 92.89%, the Top-1 of 2 s IntSparse-GCN+M-Sparse reached 95.26%, and the Top of 4 s IntSparse-GCN+M-Sparse-1 reached 96.77%, which is 2.17% higher than the original model through the Northwestern-UCLA dataset evaluation. Compared to other representative algorithms, the multiple data sets accuracy and 4 streams have been improved.ConclusionWe first proposed a novel method called IntSparse-GCN. A spatial shift algorithm is introduced based on integer multiples of the channel. Such feature matrix is a sparse feature matrix with strong regularity. The matrix facilitates the possibility to optimize the model pruning. To obtain the most accurate optimization parameters, our research analyzed the mask function in Shift-GCN and designed an automated traversal method. Sparse feature matrix and the mask parameter have potential to pruning and quantification further.关键词:action recognition;lightweight;sparse feature matrix;integer sparse graph convolutional network (IntSparse-GCN);mask function108|175|2更新时间:2024-08-15

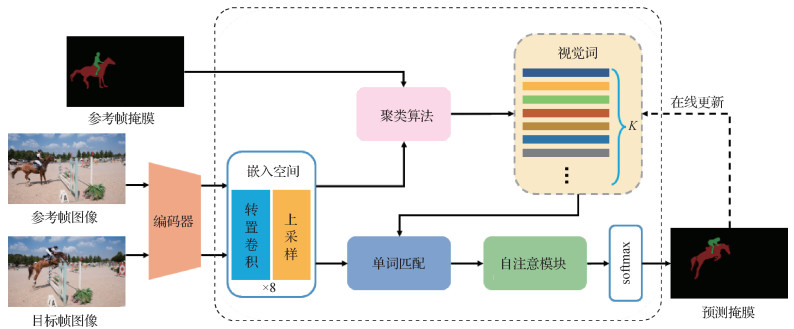

摘要:ObjectiveThe task of action recognition is focused on multi-frame images analysis like the pose of the human body from a given sensor input or recognize the in situ action of the human body through the obtained images. Action recognition has a wide range of applications in ground truth scenarios, such as human interaction, action analysis and monitoring. Specifically, some illegal human behaviors monitoring in public sites related to bus interchange, railway stations and airports. At present, most of skeleton-based methods are required to use spatio-temporal information in order to obtain good results. Graph convolutional network (GCN) can combine space and time information effectively. However, GCN-based methods have high computational complexity. The integrated strategies of attention modules and multi-stream fusion will cause lower efficiency in the completed training process. The issue of algorithm cost as well as ensuring accuracy is essential to be dealt with in action recognition. Shift graph convolutional network (Shift-GCN) is applied shift to GCN effectively. Shift-GCN is composed of novel shift graph operations and lightweight point-wise convolutions, where the shift graph operations provide flexible receptive fields for spatio-temporal graphs. Our proposed Shift-GCN has its priority with more than 10× less computational complexity based on three datasets for skeleton-based action recognition However, the featured network is redundant and the internal structural design of the network has not yet optimized. Therefore, our research analysis optimizes it on the basis of lightweight Shift-GCN and finally gets our own integer sparse graph convolutional network (IntSparse-GCN).MethodIn order to effectively solve the feature redundancy problem of Shift-GCN, we proposes to move each layer of the network on the basis of the feature shift operation that the odd-numbered columns are moved up and the even-numbered columns are moved down and the removed part is replaced with 0. The input and output of is set to an integer multiple of the joint point. First, we adopt a basic network structure similar to the previous network parameters. In the process of designing the number of input and output channels, try to make the 0 in the characteristics of each joint point balanced and finally get the optimization network structure. This network makes the position of almost half of the feature channel 0, which can express features more accurately, making the feature matrix a sparse feature matrix with strong regularity. The network can improve the robustness of the model and the accuracy of recognition more effectively. Next, we analyzed the mask function in Shift-GCN. The results are illustrated that the learned network mask is distributed in a range centered on 0 and the learned weights will focus on few features. Most of features do not require mask intervention. Finally, our experiments found that more than 80% of the mask function is ineffective. Hence, we conducted a lot of experiments and found that the mask value in different intervals is set to 0. The influence is irregular, so we designed an automated traversal method to obtain the most accurate optimized parameters and then get the optimal network model. Not only improves the accuracy of the network, but also reduces the multiplication operation of the feature matrix and the mask vector.ResultOur ablation experiment shows that each algorithm improvement can harness the ability of the overall algorithm. On the X-sub dataset, the Top-1 of 1 stream(s) IntSparse-GCN reached 87.98%, the Top-1 of 1 s IntSparse-GCN+M-Sparse reached 88.01%; the Top-1 of 2 stream(s) IntSparse-GCN reached 89.80%, and the Top-1 of 2 s IntSparse-GCN+M-Sparse's Top-1 reached 89.82%; 4 stream(s) IntSparse-GCN's Top-1 reached 90.72%, 4 s IntSparse-GCN+M-Sparse's Top-1 reached 90.72%., Our evaluation is carried out on the NTU RGB + D dataset, X-view's 1 s IntSparse-GCN+M-Sparse's Top-1 reached 94.89%, and 2 s IntSparse-GCN+M-Sparse's Top-1 reached 96.21%, and the Top-1 of 4 s IntSparse-GCN+M-Sparse reached 96.57% through the ablation experiment, the Top-1 of 1s IntSparse-GCN+M-Sparse reached 92.89%, the Top-1 of 2 s IntSparse-GCN+M-Sparse reached 95.26%, and the Top of 4 s IntSparse-GCN+M-Sparse-1 reached 96.77%, which is 2.17% higher than the original model through the Northwestern-UCLA dataset evaluation. Compared to other representative algorithms, the multiple data sets accuracy and 4 streams have been improved.ConclusionWe first proposed a novel method called IntSparse-GCN. A spatial shift algorithm is introduced based on integer multiples of the channel. Such feature matrix is a sparse feature matrix with strong regularity. The matrix facilitates the possibility to optimize the model pruning. To obtain the most accurate optimization parameters, our research analyzed the mask function in Shift-GCN and designed an automated traversal method. Sparse feature matrix and the mask parameter have potential to pruning and quantification further.关键词:action recognition;lightweight;sparse feature matrix;integer sparse graph convolutional network (IntSparse-GCN);mask function108|175|2更新时间:2024-08-15 -