Latest Issue

Volume 27, Issue 6, 2022


Abstract: Visual object detection aims to locate and recognize objects in images. It is one of the classical tasks in computer vision and the premise and foundation of many other computer vision tasks. It plays an important role in applications such as autonomous driving and video surveillance, and it has attracted extensive research attention over the past few decades. In recent years, with the development of deep learning, visual object detection has made great progress. This paper presents an in-depth survey of deep learning based visual object detection, covering both monocular and stereo object detection. First, we summarize the pipeline of deep object detection during training and inference. The training process commonly consists of data pre-processing, detection network design, and label assignment and loss function. Data pre-processing (e.g., multi-scale training and flipping) enhances the diversity of the training data, which can improve detection performance. A detection network usually consists of three key parts: the backbone (e.g., Visual Geometry Group (VGG) and ResNet), the feature fusion module (e.g., feature pyramid network (FPN)), and the prediction network (e.g., region of interest head network (RoI head)). Label assignment assigns a ground-truth target to each prediction, and the loss function supervises network training. During inference, the trained detector generates detection bounding boxes, and a post-processing step (e.g., non-maximum suppression (NMS)) removes redundant boxes. Second, we present an in-depth review of monocular object detection, covering anchor-based, anchor-free, and end-to-end methods. Anchor-based methods define a set of default anchors and perform classification and regression based on them; they can be further split into two-stage and one-stage methods.
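The NMS post-processing step named above can be illustrated with a minimal greedy variant. This is a sketch, not the implementation used by any surveyed detector: it assumes boxes in (x1, y1, x2, y2) pixel format and an illustrative IoU threshold of 0.5, and the function names are hypothetical. The `box_iou` helper is also the usual building block for IoU-based label assignment during training.

```python
import numpy as np

def box_iou(box, boxes):
    """IoU between one box and an array of boxes, all in (x1, y1, x2, y2) format."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap it
    above the threshold, and repeat on the remaining boxes."""
    order = np.argsort(scores)[::-1]  # indices sorted by descending score
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        ious = box_iou(boxes[best], boxes[rest])
        order = rest[ious <= iou_threshold]  # survivors for the next round
    return keep

# Two near-duplicate detections of one object plus one distant detection.
boxes = np.array([[10, 10, 50, 50], [12, 12, 52, 52], [100, 100, 140, 140]], dtype=float)
scores = np.array([0.9, 0.8, 0.7])
print(nms(boxes, scores))  # [0, 2]
```

The two overlapping boxes have an IoU of about 0.82, so the lower-scoring one is suppressed, while the distant box has zero overlap and survives.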
Two-stage methods first generate candidate proposals from the default anchors and then classify and regress these proposals. One-stage methods instead perform classification and regression directly on the default anchors and usually have faster inference speed. Representative two-stage methods are the region-based convolutional neural network (R-CNN) series, and representative one-stage methods are you only look once (YOLO) and single shot detector (SSD). Anchor-free methods, which are more robust than anchor-based methods, perform classification and regression without any hand-crafted default anchors. We split anchor-free methods into keypoint-based and center-based methods. Keypoint-based methods predict multiple keypoints of an object for localization, while center-based methods predict the distances from a point on the object to its left, right, top, and bottom boundaries. The representative keypoint-based method is CornerNet, and the representative center-based methods are fully convolutional one-stage detector (FCOS) and CenterNet. The anchor-based and anchor-free methods above commonly require post-processing to remove redundant detections of each object. To avoid this, recently introduced end-to-end methods directly predict a single bounding box per object, which eliminates post-processing. The representative end-to-end method is detection transformer (DETR), which performs prediction via a transformer module. In addition, we review several classical modules employed in monocular object detection: the feature pyramid structure, prediction network design, and label assignment and loss function. The feature pyramid structure uses different layers to detect objects of different scales, which addresses scale variation. Prediction network design re-designs the classification and regression branches to better handle these two sub-tasks.
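For center-based anchor-free detectors, the regression target described above can be sketched as follows. This minimal example assumes boxes in (x1, y1, x2, y2) format and a single feature location given in image coordinates. It follows the FCOS-style (left, top, right, bottom) encoding together with its center-ness score, but omits assigning objects to pyramid levels by size and the network itself; all function names are hypothetical.

```python
import math

def ltrb_targets(x, y, box):
    """Distances from location (x, y) to the left, top, right, bottom sides of box (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    return (x - x1, y - y1, x2 - x, y2 - y)

def is_inside(ltrb):
    """A location is a positive candidate only if it lies strictly inside the box."""
    return min(ltrb) > 0

def decode(x, y, ltrb):
    """Invert the encoding: recover (x1, y1, x2, y2) from a location and predicted distances."""
    l, t, r, b = ltrb
    return (x - l, y - t, x + r, y + b)

def centerness(ltrb):
    """FCOS-style center-ness: 1.0 at the box center, decaying toward the edges.
    Only meaningful for inside locations (all distances > 0)."""
    l, t, r, b = ltrb
    return math.sqrt((min(l, r) / max(l, r)) * (min(t, b) / max(t, b)))

box = (20.0, 40.0, 100.0, 120.0)
d = ltrb_targets(60.0, 80.0, box)  # a location at the box center
print(d, is_inside(d), decode(60.0, 80.0, d), centerness(d))
# (40.0, 40.0, 40.0, 40.0) True (20.0, 40.0, 100.0, 120.0) 1.0
```

Because the box is recovered from the location plus four distances, no default anchor shapes are needed; center-ness is used to down-weight predictions from locations far from the object center.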
Label assignment and loss function aim to better guide detector training. Third, we introduce stereo object detection. According to the coordinate space of their features, existing stereo detectors fall into two categories: frustum-based and inverse-projection-based approaches. Frustum-based approaches directly predict 3D objects from features in the image frustum space. Stereo R-CNN and StereoCenterNet construct stereo features in the image frustum space by concatenating a pair of unary features (one from each image of the stereo pair). Plane sweeping is another way to construct frustum features, in the form of cost volumes; it is used in instance-depth-aware 3D detection (IDA-3D) and YOLOStereo3D. In contrast, inverse-projection-based approaches explicitly project pixels or frustum features into 3D Cartesian space. There are three main forms of inverse projection: projecting all pixels into the full 3D space as a pseudo point cloud, projecting cost volume features into 3D volume features, or projecting the pixels in each region proposal into an instance-level point cloud. Pseudo-LiDAR is a pioneering method that transforms stereo images into a point cloud representation, benefiting from advances in both disparity estimation and LiDAR-based 3D detection. Deep stereo geometry network (DSGN) projects frustum-based cost volume features into 3D volume features and further squeezes them into a bird's eye view (BEV) for detection. Disp R-CNN leverages Mask R-CNN, a representative 2D instance segmentation model, and generates a set of instance-level point clouds for each stereo image pair. Based on this summary of monocular and stereo object detection, we further compare research progress in China and abroad, and present some representative universities and companies working on visual object detection.
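The pseudo point cloud idea behind Pseudo-LiDAR rests on standard pinhole stereo geometry: for a rectified pair, depth is z = f * B / d (focal length f in pixels, baseline B, disparity d in pixels), and each pixel (u, v) is lifted to X = (u - cx) * z / fx and Y = (v - cy) * z / fy. The sketch below assumes a disparity map has already been estimated and that the intrinsics and baseline are known; the numbers are made up, the helper names are hypothetical, and a real pipeline would further transform the points into the LiDAR frame before running a LiDAR-based detector.

```python
import numpy as np

def disparity_to_depth(disparity, focal_px, baseline_m, eps=1e-6):
    """Rectified stereo: depth = focal * baseline / disparity (NaN where disparity <= eps)."""
    depth = np.full(disparity.shape, np.nan)
    valid = disparity > eps
    depth[valid] = focal_px * baseline_m / disparity[valid]
    return depth

def backproject(depth, fx, fy, cx, cy):
    """Lift every pixel (u, v) with depth z to a 3D point (X, Y, Z) in the left-camera frame."""
    h, w = depth.shape
    v, u = np.mgrid[0:h, 0:w]  # row (v) and column (u) index grids
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    points = np.stack([x, y, depth], axis=-1).reshape(-1, 3)
    return points[np.isfinite(points).all(axis=1)]  # drop pixels without a valid depth

# A toy 2x2 disparity map; the zero entry has no valid depth.
disp = np.array([[50.0, 25.0], [0.0, 10.0]])
depth = disparity_to_depth(disp, focal_px=700.0, baseline_m=0.5)
cloud = backproject(depth, fx=700.0, fy=700.0, cx=0.0, cy=0.0)
print(cloud.shape)  # (3, 3)
```

Because depth is inversely proportional to disparity, a one-pixel disparity error matters much more for distant objects, which is one reason stereo 3D detection depends heavily on disparity quality.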
Finally, we present some development trends in visual object detection, including efficient end-to-end object detection, self-supervised object detection, long-tailed object detection, few-shot and zero-shot object detection, large-scale stereo object detection datasets, and weakly supervised stereo object detection.
Keywords: visual object detection; deep learning; monocular; stereo; anchor

摘要:Visual object detection aims to locate and recognize objects in images, which is one of the classical tasks in the field of computer vision and also the premise and foundation of many computer vision tasks. Visual object detection plays a very important role in the applications of automatic driving, video surveillance, which has attracted extensive attention of the researches in past few decades. In recent years, with the development of the technique of deep learning, visual object detection has also made great progress. This paper focuses on a deep survey on deep learning based visual object detection, including monocular object detection and stereo object detection. First, we summarize the pipeline of deep object detection during the training and inference. The training process is composed of data pre-processing, detection network design, and label assignment and loss function in common. Data pre-processing (e.g., multi-scale training and flip) aims to enhance the diversity of the given training data, which can improve detection performance of object detector. Detection network usually consists of three key parts like the backbone (e.g., Visual Geometry Group(VGG) and ResNet), feature fusion module (e.g., feature pyramid network(FPN)), and prediction network (e.g., region of interest head network(RoI head)). Label assignment aims to assign the true value for each prediction, and loss function can supervise the network training. During inference, we adopt the trained detector to generate the detection bounding-boxes and employ the post-processing step (e.g., non-maximum suppression(NMS)) to combine the bounding-boxes. Second, we illustrate a deep review on monocular object detection, including anchor-based, anchor-free, and end-to-end methods, respectively. Anchor-based methods design some default anchors and perform classification and regression based on these default anchors, which can be further split into two-stage and one-stage methods. 
Two-stage methods first generate some candidate proposals based on the default anchors, and second classify/regress these proposals. Compared to two-stage methods, one-stage methods directly perform classification and regression on default anchors directly, which usually have a faster inference speed. The representative two-stage methods are regional-based convolutional neural network (R-CNN) series, and the representative one-stage methods are you only look once (YOLO) and single shot detector (SSD). Compared to anchor-based methods, more robust anchor-free methods perform classification and regression without any hand-crafted default anchors. We split anchor-free methods into keypoint-based methods, and center-based methods. Keypoint-based methods predict multiple keypoints of objects for localization, while center-based methods predict the left, right, top, and bottom distances to the object boundary. The representative keypoint-based method is CornerNet, and the representative center-based methods are fully convolutional one-stage detector (FCOS) and CenterNet. The anchor-based methods and anchor-free methods mentioned above require the post-processing to remove the redundant detection results for each object in common. To solve this problem, the recently introduced end-to-end methods directly predict one bounding box for each object straightforward, which can avoid the post-processing. The representative end-to-end method is detection transformer (DETR) that performs prediction via a transformer module. In addition, we review some classical modules employed in monocular object detection, including feature pyramid structure, prediction network design, label assignment and loss function. The feature pyramid structure employs different layers to detect multi-scaled objects, which can deal with scale variance issue. Prediction network design contains the re-designs of classification and regression, which aims to better deal with these two sub-tasks. 
Label assignment and loss function aim to better guide detector training. Third, we introduce stereo object detection. According to the coordinate space of features, existing detectors are divided into two categories: frustum-based and inverse-projection-based approaches. Frustum-based approaches directly predict 3D objects on features in the image frustum space. Stereo R-CNN and StereoCenterNet construct stereo features in the image frustum space via concatenating a pair of unary features concatenation. Plane-sweeping is another method of constructing frustum features as cost volumes, which is used in instance-depth-aware 3D detection (IDA-3D) and YOLOStereo3D. In contrast to the frustum-based approaches, inverse-projection-based approaches explicitly project the pixels or frustum features into 3D Cartesian space. There are mainly three manners of the inverse projection: projecting all pixels to the full 3D space as a pseudo point cloud, projecting the cost volume features to 3D feature volume features, or projecting the pixels in each region proposal to an instance-level point cloud. Pseudo-LiDAR is a pioneer method that transforms stereo images to their point cloud representation, which embraces the advances in both disparity estimation and LiDAR-based 3D detection. Deep stereo geometry network (DSGN) projects the frustum-based cost volume features to 3D volume features and further squeezes them into bird's eye view (BEV) for detection. Disp R-CNN leverages Mask R-CNN, a representative 2D instance segmentation model, and generates a set of instance-level point clouds for each stereo image pair. Based on the summary of monocular and stereo object detection, we further compare the progress of domestic and foreign researches, and present some representative universities or companies on visual object detection. 
Finally, we present some development tendency in visual object detection, including efficient end-to-end object detection, self-supervised object detection, long-tailed object detection, few-shot and zero-shot object detection, large-scale stereo object detection dataset, weakly-supervised stereo object detection.关键词:visual object detection;deep learning;monocular;stereo;anchor723|683|43更新时间:2024-08-15 -

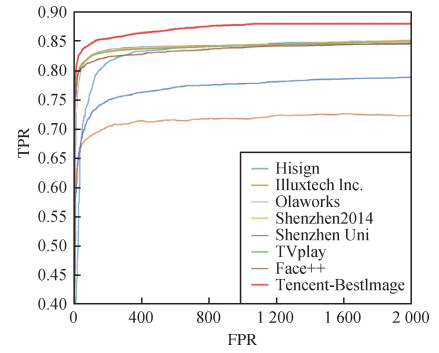

Abstract: Public security and social governance are essential to national development. Preventing large-scale community riots and urban crime, as well as social governance across wide spatial and temporal scales during the coronavirus disease 2019 (COVID-19) pandemic, poses challenges such as highly accurate human identity verification, highly efficient human behavior analysis, and crowd flow tracking and tracing. The core of the challenge is to use computer vision technologies to extract visual information in complex scenes and to fully represent, identify, and understand the relationship between human behavior and scenes, thereby improving social administration and governance. Visual recognition technologies for complex scenes can improve the efficiency of social intelligence and accelerate intelligent social governance. The main challenges of human recognition lie in three aspects: 1) diverse attacks, such as mask occlusion attacks, which affect the security of human identity recognition; 2) large spans of time and space, which affect the accuracy of cross-age face recognition (especially retrieval at the scale of tens of millions); 3) complex and changeable scenes, which require the system to be highly robust and adaptable to diverse environments. It is therefore necessary to advance technologies for remote human identity verification with high security, accurate face recognition, human behavior analysis, and scene semantic recognition. Motion analysis of individual behavior and of group interaction trends are key components of human-centric visual understanding in complex scenes. Specifically, individual behavior analysis mainly includes video-based person re-identification and video-based action recognition, while group interaction recognition is mainly based on video question answering and dialogue.
A video network can record images of individuals and groups from multiple camera sources. Multi-camera human behavior research covers group segmentation, group tracking, group behavior analysis, and abnormal behavior detection. However, individual behavior and group interaction recorded by multiple cameras in real scenes are extremely complex, and improving multi-camera, multi-object behavior recognition through integrated modeling of real scene structure, individual behavior, and group interaction remains a great challenge. Recognition of individual and group behavior in video networks mainly depends on the captured visual information about scenes, individuals, and groups. However, individual behavior analysis and group interaction recognition in complex scenes commonly also require human knowledge and prior knowledge beyond visual information. In particular, the use of crowdsourced data has improved performance in visual computing, visual question answering and dialogue, and vision-language navigation. The knowledge inherent in crowdsourced data can be used to develop data-driven machine learning models that comprehensively exploit knowledge and priors in individual behavior analysis and group interaction recognition, establishing a new approach of data-driven and knowledge-guided visual computing. In addition, facial expressions can be viewed as micro-motions of the human face, playing a role analogous to the voice in spoken language. Speech emotion recognition can capture and understand human emotions, which helps to better support human-machine collaborative learning. It is therefore important to research human visual recognition technology in greater depth. Current research focuses on facial expression recognition, speech emotion recognition, expression synthesis, and speech emotion synthesis.
This survey covers real-time human identification in complex scenes, understanding and analysis of individual behavior and group interaction, visual and speech emotion recognition and synthesis, and machine learning paradigms that comprehensively exploit knowledge and priors. These research directions and application scenarios advance visual capabilities in complex scenes. We summarize the current state of research and predict frontier technologies and development trends. Human visual recognition technology will harness visual capabilities to recognize the relationships among humans, behavior, and scenes. Further improvements are possible in standardized data construction, model computing resources, and model robustness and interpretability.
Keywords: complex scenes; visual understanding; human recognition; deep learning; behavior analysis

Abstract: Intelligent transportation systems (ITS) aim to improve on conventional transportation engineering solutions. ITS integrate advanced information and communication technologies (ICTs) into the overall transportation system to build a safe, efficient, comfortable, and environmentally friendly transport ecosystem. A variety of ITS applications and services have emerged in intelligent traffic management systems, autonomous driving systems, and cooperative vehicle-infrastructure systems (CVIS). Intelligent traffic management systems mainly rely on optimised smart traffic facilities to make traffic management intelligent. Autonomous driving is mainly based on vehicle intelligence, relying on the cooperation of visual perception, radar perception, positioning systems, on-board computing, and artificial intelligence. CVIS depend on the collaboration of intelligent vehicles, roadside equipment, and cloud platforms, supported by the internet of vehicles (IoV), to enable dynamic, real-time information exchange between vehicles and other traffic elements and thus achieve vehicle-road collaborative management and traffic safety. Image processing is one of the core technologies supporting the deployment of many ITS applications. It applies computer algorithms to extract useful information from visual sensor data, and includes image enhancement and restoration, feature extraction and classification, and semantic and instance segmentation. Image enhancement and restoration improve the visual quality of images and make them more suitable for human or machine analysis, addressing image quality degradation in challenging traffic scenes.
Image feature extraction and classification is the core of object detection, which accurately locates and identifies traffic objects in images or videos and is the basis for higher-level tasks such as segmentation, scene understanding, object tracking, image captioning, event detection, and activity recognition. To achieve higher-precision environmental perception in ITS, semantic and instance segmentation perform pixel-level image classification based on scene semantics, with instance segmentation further assigning different labels to different instances of the same category. These image processing technologies provide crucial information for enhancing the perception, recognition, object detection, tracking, and path planning modules of many ITS applications. Integrated across multiple scenarios, they provide important technical support for intelligent traffic management, autonomous driving, and CVIS. In addition, the wide deployment of sensing devices places tremendous demands on data transmission and processing. Centralised cloud computing, the widely adopted data processing technology, struggles to meet the real-time requirements of most massive-data applications in ITS, which introduces uncertainty and bottlenecks in transmission. Unlike centralised cloud computing, multi-access edge computing (MEC) deploys sensing, computation, and storage resources close to the network edge, providing ITS with a low-latency, high-bandwidth platform for real-time applications and services with access to network information. This survey reviews the current development status and typical applications of ITS, focusing on image processing and MEC technologies for ITS.
Future research directions of ITS and of the related image processing and MEC technologies are also discussed.
Keywords: intelligent transportation system (ITS); image processing; edge computing; autonomous driving; cooperative vehicle-infrastructure system (CVIS); deep learning

Abstract: Visual understanding tasks, e.g., object detection, semantic/instance segmentation, and action recognition, play a crucial role in many real-world applications, including human-machine interaction and autonomous driving. Deep networks have recently made great progress on these tasks under full supervision. Based on convolutional neural networks (CNNs), a series of representative deep models have been developed for these tasks, e.g., you only look once (YOLO) and Fast/Faster R-CNN (region-based CNN) for object detection, fully convolutional networks (FCN) and DeepLab for semantic segmentation, and Mask R-CNN and you only look at coefficients (YOLACT) for instance segmentation. More recently, driven by new network backbones such as the Transformer, performance on these tasks has been further boosted under full supervision. However, supervised learning relies on massive, accurate annotations, which are usually laborious and costly to obtain. Taking semantic segmentation as an example, collecting dense annotations, i.e., pixel-wise segmentation masks, is very laborious and costly, whereas weak annotations, e.g., bounding boxes and points, are much easier to collect. Moreover, for video action recognition, scenes in videos are very complicated, and it is likely infeasible to annotate all actions with accurate time intervals. Weakly supervised learning is effective in reducing annotation cost and is thus very important for the development and application of visual understanding. Taking object detection, semantic/instance segmentation, and action recognition as examples, this article surveys recent progress in weakly supervised visual understanding and points out several challenges and opportunities.
We first introduce two representative weakly supervised learning methods: multiple instance learning (MIL) and the expectation-maximization (EM) algorithm. Despite the different network architectures of recent weakly supervised methods, most of them can be categorized into the MIL or EM family. For object localization and detection, we review methods based on MIL and on the class activation map (CAM), respectively, with particular attention to self-training and switching between supervision settings. Because weakly supervised object detection (WSOD) is formulated as MIL-based proposal classification, WSOD methods tend to focus on discriminative object parts; e.g., the head of a human or animal may be detected to represent the entire object, causing significant performance drops compared with fully supervised object detection. To address this issue, self-training and switching between supervision settings have been developed, and transfer learning has been introduced to exploit auxiliary information from other tasks, e.g., semantic segmentation. For weakly supervised object localization, CAM is a popular solution that predicts an object's position as the region with the highest activation for its class. CAM-based localization methods also suffer from the discriminative-part issue, and several solutions have been proposed, e.g., suppressing the most discriminative parts and attention-based self-produced guidance. On the pattern analysis, statistical modeling and computational learning visual object classes (PASCAL VOC) and Microsoft common objects in context (MS COCO) datasets, several representative weakly supervised object localization and detection methods are evaluated, showing performance gaps relative to fully supervised methods.
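The CAM mentioned above can be sketched for a classifier whose last convolutional features are globally average-pooled and fed to a linear layer: the map for class c is the sum over channels k of w[c, k] * f_k(x, y). Turning the map into a box by thresholding is one simple, commonly used heuristic; the 0.5 threshold, the toy feature maps, and the helper names are illustrative assumptions. Note that the box covers only the strongly activated region, which is exactly the discriminative-part issue discussed above.

```python
import numpy as np

def class_activation_map(features, fc_weights, class_idx):
    """CAM for one class: weighted sum of the last convolutional feature maps.

    features:   (K, H, W) feature maps before global average pooling
    fc_weights: (C, K) weights of the linear classifier applied after pooling
    """
    cam = np.tensordot(fc_weights[class_idx], features, axes=1)  # (H, W)
    cam = np.maximum(cam, 0)  # keep positive evidence only
    return cam / cam.max() if cam.max() > 0 else cam  # normalize to [0, 1]

def cam_to_box(cam, threshold=0.5):
    """Tight box (x1, y1, x2, y2) around pixels whose normalized activation >= threshold."""
    ys, xs = np.nonzero(cam >= threshold)
    if len(xs) == 0:
        return None
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())

features = np.zeros((2, 4, 4))
features[0, 1:3, 1:3] = 1.0  # channel 0 fires on a 2x2 blob
features[1, 0, 3] = 1.0      # channel 1 fires on one corner pixel
fc_weights = np.array([[1.0, -1.0],   # class 0 relies on channel 0
                       [0.0, 1.0]])   # class 1 relies on channel 1
cam = class_activation_map(features, fc_weights, class_idx=0)
print(cam_to_box(cam))  # (1, 1, 2, 2)
```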
For semantic segmentation, we consider several representative weak supervision settings, including bounding box annotations, image-level class annotations, and point or scribble annotations. Compared with segmentation mask annotations, these weak annotations cannot provide accurate pixel-wise supervision. Image-level class annotations are the cheapest and easiest to obtain, and the key issue for image-level weakly supervised semantic segmentation is to exploit the correlation between class labels and segmentation masks. CAM yields coarse segmentation results, but the masks are inaccurate and tend to cover only discriminative parts. To refine them, several strategies have been introduced, including iterative erasing, learning pixel-to-pixel similarity, and jointly learning saliency detection and weakly supervised semantic segmentation. Point or scribble annotations and bounding box annotations provide more accurate localization information than image-level class annotations. Among them, bounding box annotations are likely to offer a good balance between annotation cost and the performance of weakly supervised semantic segmentation under the EM framework. Weakly supervised instance segmentation is more challenging than weakly supervised semantic segmentation, since each pixel must be assigned not only to an object class but also to one specific object instance. In this article, we consider bounding box annotations and image-level annotations for weakly supervised instance segmentation. With image-level class annotations, the peak responses of CAM are highly correlated with object instances and can be adopted for weakly supervised instance segmentation. With bounding box annotations, weakly supervised instance segmentation can be formulated as MIL, and the resulting instance masks are usually more accurate than those obtained from image-level class annotations.
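The step from CAM to a coarse segmentation can be sketched as follows. The sketch assumes the original CAM setting, where a linear classifier with weights `fc_weights` follows global average pooling over the last convolutional features. The background threshold of 0.3 and the function names are illustrative assumptions, not values taken from the surveyed methods. The output is the kind of coarse pseudo mask that the refinement strategies described above are meant to improve.

```python
import numpy as np


def class_activation_map(features: np.ndarray, fc_weights: np.ndarray, cls: int) -> np.ndarray:
    """CAM for one class: M_c(x, y) = sum_k w_k^c * f_k(x, y).

    features:   (K, H, W) maps from the last convolutional layer.
    fc_weights: (C, K) weights of the classifier applied after global average pooling.
    Returns an (H, W) map with negatives clipped and values scaled by its maximum into [0, 1].
    """
    cam = np.tensordot(fc_weights[cls], features, axes=([0], [0]))
    cam = np.maximum(cam, 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam


def cam_pseudo_mask(features, fc_weights, image_classes, bg_threshold=0.3):
    """Coarse pseudo mask from the CAMs of the classes present in the image.

    Each pixel takes the class with the strongest CAM (stored as class index + 1);
    pixels where no CAM reaches bg_threshold (a hypothetical value) become background 0.
    """
    cams = np.stack([class_activation_map(features, fc_weights, c) for c in image_classes])
    labels = np.asarray(image_classes)[cams.argmax(axis=0)] + 1
    labels[cams.max(axis=0) < bg_threshold] = 0
    return labels
```

A single global threshold like this tends to keep only the strongest, most discriminative responses. Erasing, pixel-affinity learning, and CRF post-processing target exactly that weakness.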
In addition, weakly supervised segmentation methods usually adopt post-processing techniques, e.g., dense conditional random fields (CRF), to further refine the segmentation masks. Representative weakly supervised semantic and instance segmentation methods with different levels of annotation are evaluated on the PASCAL VOC and MS COCO datasets. For video action recognition, collecting accurate annotations of all actions is much more difficult because of complicated scenes, so weakly supervised action recognition has attracted increasing research attention in recent years. In this article, we introduce models and algorithms for different weak supervision settings, including film scripts, action sequences, video-level class labels, and single-frame labels. Finally, challenges and opportunities are analyzed and discussed. For these visual understanding tasks, weakly supervised methods still leave room for improvement compared with fully supervised methods. For applications in the wild, exploiting large amounts of unlabeled and noisy data is also a valuable and challenging topic. In the future, weakly supervised visual understanding methods will also benefit from multi-task learning and large-scale pre-trained models. For example, vision-language pre-trained models such as contrastive language-image pre-training (CLIP) have the potential to provide knowledge that significantly improves the performance of weakly supervised visual understanding tasks.
Keywords: weakly supervised learning; object localization; object detection; semantic segmentation; instance segmentation; action recognition
Updated: 2024-08-15

Abstract: With the development and application of artificial intelligence (AI), the intelligent analysis and interpretation of remote sensing big data using AI technology has become a future development trend.
Drawing on domestic and international literature and related reports, this paper reviews the following fields: precise processing of remote sensing data, spatio-temporal processing and analysis of remote sensing data, remote sensing object classification and identification, remote sensing data association mining, and remote sensing open-source datasets and sharing platforms. First, precise processing of remote sensing data aims to process and calibrate the spectral reflectance or radar scattering data acquired by sensors and restore them into image products that accurately relate to specific information dimensions of ground objects. This paper reviews progress in refined processing from two aspects: improving the imaging quality of optical, SAR (synthetic aperture radar), and other remote sensing data, and reconstructing low-quality images. Progress in quantitative improvement is analyzed from two aspects: local feature matching and regional feature matching of remote sensing images. Existing work verifies the feasibility of high-precision remote sensing data processing with AI technology. Second, for spatio-temporal processing and analysis, the comprehensive analysis of multi-temporal images can, compared with single-temporal images, further reveal the dynamic changes of the Earth's surface and the evolution of ground objects. In multi-temporal applications such as monitoring forest degradation, crop growth, urban expansion, and wetland loss, data gaps caused by clouds and their shadows lengthen the intervals between usable acquisitions, make the time intervals irregular, and increase the difficulty of subsequent time-series processing and analysis.
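A baseline for the irregular, cloud-induced gaps just described is sketched below. It linearly interpolates masked observations of one pixel over time, then resamples the series to a regular step. Linear interpolation is an assumption chosen for brevity. The time-series restoration and spatio-temporal fusion methods the review covers are considerably more sophisticated. The NDVI-style values, day numbers, and function names are hypothetical.

```python
import numpy as np


def fill_cloud_gaps(days, values, cloudy) -> np.ndarray:
    """Fill cloud/shadow-contaminated observations of one pixel's time series.

    days:   acquisition days, strictly increasing and possibly irregular.
    values: observed index values (e.g., NDVI) on those days.
    cloudy: True where the observation is contaminated.
    """
    days = np.asarray(days, dtype=float)
    values = np.asarray(values, dtype=float)
    cloudy = np.asarray(cloudy, dtype=bool)
    clear = ~cloudy
    if not clear.any():
        raise ValueError("no clear observation to interpolate from")
    filled = values.copy()
    # Interpolate in time between clear observations; np.interp holds the
    # first/last clear value for gaps at either end of the series.
    filled[cloudy] = np.interp(days[cloudy], days[clear], values[clear])
    return filled


def resample_regular(days, values, step):
    """Resample a gap-free series onto a regular grid starting at the first day."""
    days = np.asarray(days, dtype=float)
    n = int((days[-1] - days[0]) // step) + 1  # grid never extends past the last day
    grid = days[0] + step * np.arange(n)
    return grid, np.interp(grid, days, np.asarray(values, dtype=float))


if __name__ == "__main__":
    d = [1, 9, 17, 41]
    filled = fill_cloud_gaps(d, [0.2, 0.9, 0.4, 0.6], [False, True, False, False])
    print(filled)
    print(resample_regular(d, filled, 8))
```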
For spatio-temporal processing and analysis, this paper reviews progress from two aspects: time-series restoration of remote sensing images and multi-source spatio-temporal fusion of remote sensing data. Third, for remote sensing object classification and recognition, most existing processing and analysis methods do not make full use of the autonomous learning ability of computers, rely on limited information acquisition and computation methods, and struggle to meet performance requirements such as accuracy and false alarm rate. This paper reviews progress in the extraction of typical feature elements and the parallel extraction of multiple elements. Existing work explores how to combine AI methods with traditional hand-crafted mathematical analysis to quantitatively describe and analyze target models and mechanisms in remote sensing data and thereby improve interpretation accuracy. Fourth, progress in remote sensing data association mining is reviewed from two aspects: data organization and association, and domain knowledge graph construction. Problems remain in this field, such as fragmented applications and the difficulty of building knowledge graphs and realizing knowledge accumulation, updating, and optimization. Rapidly associating, organizing, and analyzing multi-dimensional spatio-temporal information from massive multi-source heterogeneous remote sensing data is an important future direction. In addition, to meet the development needs of intelligent big data analysis, this paper also reviews progress in remote sensing open-source datasets and sharing platforms.
On this basis, the state of research on intelligent analysis and interpretation of remote sensing data is summarized, and the future development trends and prospects of the field are discussed.
Keywords: remote sensing big data; data processing; spatio-temporal processing and analysis; target element classification and identification; data association mining; open source datasets; sharing platform
Updated: 2024-08-15

Abstract: Video is the conceptual basis of visual information processing technology. The frame rate of traditional video is tens of hertz, which cannot represent ultra-high-speed changes of light and also limits the speed of machine vision. The concept of video originates from film imaging and cannot unleash the full potential of electronic and digital technology. The spiking vision model generates a spike when the photon energy accumulated by a photosensitive device reaches a predefined threshold. The longer the interval between spikes, the weaker the received light signal. The incident light intensity can therefore be inferred from spike timing to achieve continuous imaging. Current research on spiking vision focuses on developing disruptive ultra-high-speed imaging chips and cameras that are 1 000 times faster than conventional video. This is replacing the traditional paradigm in which machine vision speed depends on linear growth of computing power. Based on the biological evidence and physical principles of spiking vision, our review analyzes software simulators of spiking vision, the computational process of photon propagation simulation, spiking vision based image reconstruction, and the detailed mechanisms of photoelectric sensor structure and chip design. Our review also covers ultra-high-speed moving target detection and spiking neural network based tracking and recognition. Finally, the evolution of spiking vision is forecast.
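The spike generation and intensity inference just described can be illustrated with a single-pixel integrate-and-fire sketch. The following are assumptions and do not come from the review:

- time advances in discrete, noise-free steps;
- firing subtracts the threshold from the accumulator;
- intensity is estimated as the threshold divided by the mean inter-spike interval, which only holds for roughly constant light.

The function names are hypothetical.

```python
import numpy as np


def simulate_spikes(intensity: np.ndarray, threshold: float = 1.0) -> np.ndarray:
    """Integrate-and-fire spike train for one pixel.

    intensity: light arriving per time step (arbitrary units), shape (T,).
    Assumes intensity per step is below the threshold, so at most one spike per step.
    """
    acc = 0.0
    spikes = np.zeros(len(intensity), dtype=np.uint8)
    for t, value in enumerate(intensity):
        acc += float(value)       # accumulate incoming light energy
        if acc >= threshold:      # threshold reached: fire a spike
            spikes[t] = 1
            acc -= threshold      # keep the remainder instead of resetting to zero
    return spikes


def intensity_from_intervals(spikes: np.ndarray, threshold: float = 1.0) -> float:
    """Infer (roughly constant) intensity as threshold / mean inter-spike interval.

    Longer intervals mean weaker light; fewer than two spikes gives no estimate (0.0).
    """
    times = np.flatnonzero(spikes)
    if len(times) < 2:
        return 0.0
    return threshold / float(np.mean(np.diff(times)))


if __name__ == "__main__":
    for level in (0.05, 0.25, 0.5):
        train = simulate_spikes(np.full(200, level))
        print(level, int(train.sum()), intensity_from_intervals(train))
```

Brighter pixels fire more often. Because each spike is timestamped at the step rate rather than at a frame rate, changes can be tracked far faster than tens of hertz.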
In the future, spiking vision chips and systems have multifaceted potential in industrial tasks (e.g., monitoring of high-speed trains, power grids, turbines, and intelligent manufacturing), civil applications (e.g., high-speed cameras, intelligent transportation, assisted driving, judicial forensics, and sports arbitration), and national defense (e.g., high-speed confrontation campaigns).
Keywords: spiking vision; spiking neural networks; visual information processing; brain-inspired vision; artificial intelligence
Updated: 2024-08-15

Abstract: Computational imaging breaks through the limitations of traditional digital imaging to acquire information more deeply (e.g., high dynamic range imaging and low light imaging) and more broadly (e.g., spectral, light-field, and 3D imaging). Driven by industry, especially mobile phone manufacturers and the medical and automotive sectors, computational imaging has become ubiquitous in daily life and plays a critical role in accelerating industrial transformation. It is a new imaging technique that combines illumination, optics, image sensors, and post-processing algorithms. Taking the latest methods, algorithms, and applications as its main thread, this review reports state-of-the-art progress by jointly analyzing articles and reports at home and abroad. It covers end-to-end optics and algorithm design, high dynamic range imaging, light-field imaging, spectral imaging, lensless imaging, low light imaging, 3D imaging, and computational photography. For each topic, it focuses on the development status, frontier dynamics, hot issues, and trends. Camera systems have long been designed in separate steps: experience-driven lens design followed by custom-designed post-processing. Such a general-purpose approach has been successful, but it leaves two questions open: how to serve specific tasks, and how to find the best compromise among optics, post-processing, and cost. Recent advances aim to bridge this gap in an end-to-end fashion. To realize the joint design of optics and algorithms, differentiable optics models have been developed step by step. These include differentiable diffractive optics models, differentiable refractive optics models, and differentiable compound lens models based on differentiable ray tracing. Beyond capturing a sharp, clear image on the sensor, this approach offers enormous design flexibility. It can not only find a compromise between optics and post-processing but also open up the design space for optical encoding.
End-to-end camera design offers competitive alternatives to modern optics and camera system design. High dynamic range (HDR) imaging has become a commodity imaging technique, as evidenced by its applications across many domains, including mobile consumer photography, robotics, drones, surveillance, content capture for display, driver assistance systems, and autonomous driving. This review analyzes the advantages, disadvantages, and industrial applications of a series of HDR imaging techniques, including optical modulation, multi-exposure, multi-sensor fusion, and post-processing algorithms. Conventional cameras do not record most of the information about the distribution of light entering from the scene. Light-field imaging records the full 4D light field, measuring the amount of light traveling along each ray that intersects the sensor. This review reports how the light field is applied to super-resolution, depth estimation, 3D measurement, and other tasks, and analyzes state-of-the-art methods and industrial applications. It also reports research progress and industrial applications in particle image velocimetry and 3D flame imaging. Spectral imaging has been used widely and successfully in resource assessment, environmental monitoring, disaster warning, and other remote sensing domains. Spectral image data can be described as a three-dimensional cube. The technique captures a spectrum for each pixel in an image, so the resulting digital images provide detailed characterizations of the scene or object. This review explains multiple methods for acquiring spectral volume data and achieving hyperspectral acquisition. These include:

- multi-channel filters;
- deep-learning-based inverse solution of wavelength response curves;
- diffraction gratings;
- multiplexing;
- metasurfaces;
- other optimizations.
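For the multi-exposure branch of HDR imaging, a classic merge is a weighted average of per-exposure radiance estimates. The sketch assumes linear (raw) pixel values in [0, 1] and known exposure times, so no camera response curve needs to be recovered. The triangle ("hat") weighting is one common choice among several, and the function names are hypothetical.

```python
import numpy as np


def hat_weight(z: np.ndarray) -> np.ndarray:
    """Triangle weight: 1 at mid-gray (0.5), falling to 0 at black (0) and saturation (1)."""
    return 1.0 - np.abs(2.0 * z - 1.0)


def merge_exposures(images, exposure_times, eps: float = 1e-8) -> np.ndarray:
    """Merge bracketed exposures into a relative radiance map.

    images: list of arrays with linear pixel values in [0, 1] (assumed raw, no response curve).
    exposure_times: matching exposure times in seconds.
    """
    num = np.zeros_like(np.asarray(images[0]), dtype=float)
    den = np.zeros_like(num)
    for z, t in zip(images, exposure_times):
        z = np.asarray(z, dtype=float)
        w = hat_weight(z)
        num += w * z / t  # z / t is this exposure's radiance estimate
        den += w          # well-exposed pixels count most
    # Pixels clipped in every exposure have zero total weight and come out as 0.
    return num / np.maximum(den, eps)


if __name__ == "__main__":
    radiance = np.array([0.02, 0.5, 4.0, 30.0])
    times = [1.0, 1 / 8, 1 / 64]
    shots = [np.clip(radiance * t, 0.0, 1.0) for t in times]
    print(merge_exposures(shots, times))
```

The short exposure supplies the bright pixels that saturate in the long one, and vice versa. That is the basic trade-off the multi-exposure approach exploits; motion between shots, which this sketch ignores, is a main practical difficulty.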
Lensless imaging removes the need for geometric isomorphism between a scene and its image, enabling compact and lightweight imaging systems. It has been applied to bright-field imaging, cytometry, holography, phase recovery, fluorescence, and the quantitative sensing of specific sample properties derived from such images. However, the low signal-to-noise ratio of the reconstruction makes it a challenging, still unsolved inverse problem. This review reports recent progress in designing and optimizing planar optical elements and high-quality image reconstruction algorithms for specific applications. Imaging under low light illumination is affected by Poisson (photon shot) noise, which becomes increasingly strong relative to the signal as the light level decreases. Visual degradations such as decreased visibility, strong noise, and color bias also occur. This review analyzes the challenges of low light imaging and summarizes the corresponding solutions for sensors exposed to low light. These include single-frame and multi-frame denoising, flash, and new sensors. Shape acquisition of three-dimensional objects plays a vital role in various real-world applications, including automotive, machine vision, reverse engineering, industrial inspection, and medical imaging. This review reports the latest widely applied active solutions, including structured light, direct time-of-flight, and indirect time-of-flight. It also notes the difficulties these active methods face in depth acquisition. Examples include ambient light (e.g., sunlight) and indirect interference (e.g., interreflection on concave surfaces and scattering in fog). Computational photography refers to digital image capture and processing techniques that use digital computation instead of optical processes.
Computational photography not only improves camera capabilities but also adds new features that were impossible with traditional film-based photography. It is an essential branch of computational imaging that developed from traditional photography; however, computational photography emphasizes taking photographs digitally. Constrained by physical size and image quality, computational photography focuses on allocating computational resources reasonably and presenting high-quality images that please users. Visual information is often cited as making up about 90 percent of the information transmitted to the human brain, so imaging systems play a vital role in most future intelligent systems. Computational imaging greatly expands the human ability to acquire information in both depth and breadth. For emerging technologies such as the metaverse, computational imaging offers a general input tool for collecting multi-dimensional visual information to rebuild the virtual world. This review covers key technological developments, applications, insights, and challenges of recent years and examines current trends to predict future capabilities.
Keywords: end-to-end camera design; high dynamic range imaging; light-field imaging; spectral imaging; lensless imaging; low light imaging; active 3D imaging; computational photography
Updated: 2024-08-15
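The computational imaging abstract above mentions direct and indirect time-of-flight (ToF). Both principles reduce to short formulas. Direct ToF halves the measured round-trip time of a light pulse. Continuous-wave indirect ToF converts a measured phase shift into depth. The sketch below assumes an ideal single-return, noise-free signal and one particular four-sample phase convention; real sensors differ. Multipath effects such as interreflection on concave surfaces violate the single-return assumption. The function names and the 20 MHz modulation frequency are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def direct_tof_depth(round_trip_s: float) -> float:
    """Direct ToF: the pulse travels to the object and back, so depth = c * t / 2."""
    return C * round_trip_s / 2.0


def indirect_tof_phase(s0: float, s1: float, s2: float, s3: float) -> float:
    """Phase from four correlation samples s_k = B + A * cos(phi - k * pi / 2).

    The offset B cancels in the differences. This sample convention is an assumption.
    Returns phi in [0, 2*pi).
    """
    return math.atan2(s1 - s3, s0 - s2) % (2.0 * math.pi)


def indirect_tof_depth(phase_rad: float, mod_freq_hz: float) -> float:
    """Continuous-wave indirect ToF: depth = c * phi / (4 * pi * f_mod)."""
    return C * phase_rad / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz: float) -> float:
    """Phase wraps every 2*pi, so depths repeat beyond c / (2 * f_mod)."""
    return C / (2.0 * mod_freq_hz)


if __name__ == "__main__":
    print("direct, 10 ns round trip:", direct_tof_depth(10e-9), "m")
    f_mod = 20e6  # hypothetical 20 MHz modulation frequency
    samples = [1.0 + 0.5 * math.cos(1.0 - k * math.pi / 2) for k in range(4)]
    phi = indirect_tof_phase(*samples)
    print("indirect:", indirect_tof_depth(phi, f_mod), "m of", unambiguous_range(f_mod), "m max")
```

Ambient light adds to the offset B and cancels in the differences, but it still adds shot noise. That is one reason sunlight remains a difficulty for active depth sensing.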

摘要:Computational imaging breaks the limitation of traditional digital imaging to acquire the information deeper (e.g., high dynamic range imaging and low light imaging) and broader (e.g., spectrum, light field, and 3D imaging). Driven by industry, especially mobile phone manufacturer medical and automotive, computational imaging has become ubiquitous in our daily lives and plays a critical role in accelerating the revolution of industry. It is a new imaging technique that combines illumination, optics, image sensors, and post-processing algorithms. This review takes the latest methods, algorithms, and applications as the mainline and reports the state-of-the-art progress by jointly analyzing the articles and reports at home and aboard. This review covers the topics of end-to-end optics and algorithms design, high dynamic range imaging, light-field imaging, spectrum imaging, lensless imaging, low light imaging, 3D imaging, and computational photography. It focuses on the development status, frontier dynamics, hot issues, and trends in each computational imaging topic. The camera systems have long-term been designed in separated steps: experience-driven lens design followed by costume designed post-processing. Such a general-propose approach achieved success in the past but left the question open for specific tasks and the best compromise among optics, post-processing, and costs. Recent advances aim to build the gap in an end-to-end fashion. To realize the joint optics and algorithms designing, different differentiable optics models have been realized step by step, including the differentiable diffractive optics model, the differentiable refractive optics, and the differentiable complex lens model based on differentiable ray-tracing. Beyond the goal of capturing a sharp and clear image on the sensor, it offers enormous design flexibility that can not only find a compromise between optics and post-processing, but also open up the design space for optical encoding. 
The end-to-end camera design offers competitive alternatives to modern optics and camera system design. High dynamic range (HDR) imaging has become a commodity imaging technique as evidenced by its applications across many domains, including mobile consumer photography, robotics, drones, surveillance, content capture for display, driver assistance systems, and autonomous driving. This review analyzes the advantages, disadvantages, and industrial applications through analyzing a series of HDR imaging techniques, including optical modulation, multi-exposure, multi-sensor fusion, and post-processing algorithms. Conventional cameras do not record most of the information about the light distribution entering from the world. Light-field imaging records the full 4D light field measuring the amount of light traveling along each ray that intersects the sensor. This review reports how the light field is applied to super-resolution, depth estimation, 3D measurement, and so on and analyzes the state-of-the-art method and industrial application. It also reports the research progress and industrial application in particle image velocimetry and 3D flame imaging. Spectral imaging technique has been used widely and successfully in resource assessment, environmental monitoring, disaster warning, and other remote sensing domains. Spectral image data can be described as a three-dimensional cube. This imaging technique involves capturing the spectrum for each pixel in an image; As a result, the digital images produce detailed characterizations of the scene or object. This review explains multiple methods to acquire spectrum volume data, including the current multi-channel filter, solving the wavelength response curve inversely based on deep learning, diffraction grating, multiplexing, metasurface, and other optimizations to achieve hyper-spectrum acquisition. 
Lensless imaging eliminates the need for geometric isomorphism between a scene and an image while constructing compact and lightweight imaging systems. It has been applied to bright-field imaging, cytometry, holography, phase recovery, fluorescence, and the quantitative sensing of specific sample properties derived from such images. However, the low reconstructed signal-to-noise ratio makes it an unsolved challenging inverse problem. This review reports the recent progress in designing and optimizing planar optical elements and high-quality image reconstruction algorithms combined with specific applications. Imaging under a low light illumination will be affected by Poisson noise, which becomes increasingly strong as the power of the light source decreases. In the meantime, a series of visual degradation like decreased visibility, intensive noise, and biased color will occur. This review analyzes the challenges of low light imaging and conclude the corresponding solutions, including the noise removal methods of single/multi-frame, flash, and new sensors to deal with the conditions when the sensor exposure to low light. Shape acquisition of three-dimensional objects plays a vital role for various real-world applications, including automotive, machine vision, reverse engineering, industrial inspections, and medical imaging. This review reports the latest active solutions which have been widely applied, including structured light, direct time-of-flight, and indirect time-of-flight. It also notes the difficulties like ambient light (e.g., sunlight), indirect inference (e.g., the mutual reflection of the concave surface, scattering of foggy) of depth acquisition based on those active methods. The use of computation methods in photography refers to digital image capture and processing techniques that use digital calculation instead of optical processes. 
It not only improves camera capabilities but also adds features that were impossible with traditional film-based photography. Computational photography is an essential branch of computational imaging that developed from traditional photography, with an emphasis on capturing photographs digitally. Constrained by physical size and image quality, computational photography focuses on allocating computational resources sensibly and producing high-quality images that please the viewer. Since 90 percent of the information transmitted to the human brain is visual, imaging systems will play a vital role in most future intelligent systems. Computational imaging greatly extends both the depth and the scope of human information acquisition. For emerging technologies such as the metaverse, computational imaging offers a general input tool for collecting multi-dimensional visual information to rebuild the virtual world. This review covers key technological developments, applications, insights, and challenges of recent years and examines current trends to predict future capabilities.
Keywords: end-to-end camera design; high dynamic range imaging; light-field imaging; spectral imaging; lensless imaging; low light imaging; active 3D imaging; computational photography
Updated: 2024-08-15
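Indirect time-of-flight, listed among the active 3D methods, recovers depth from the phase delay of amplitude-modulated light. Direct time-of-flight instead times a light pulse, giving d = c t / 2. The sketch below makes these assumptions:
- It uses the common four-sample continuous-wave scheme.
- Correlation samples A_k are taken at phase offsets of k × 90°.
- The sign convention is one chosen for this example; real sensors differ.

The function names and the 20 MHz modulation frequency are illustrative only.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def direct_tof_depth(round_trip_s: float) -> float:
    """Direct ToF: the pulse travels to the object and back, so halve the path."""
    return C * round_trip_s / 2.0


def unambiguous_range(f_mod_hz: float) -> float:
    """Largest depth before the measured phase wraps past 2*pi."""
    return C / (2.0 * f_mod_hz)


def itof_depth(a0: float, a1: float, a2: float, a3: float, f_mod_hz: float) -> float:
    """Indirect ToF depth from four correlation samples at 0, 90, 180, 270 degrees.

    Assumed sample model (conventions vary by sensor):
        A_k = B + a * cos(phi - k * pi / 2)
    The offset B (which includes ambient light) cancels in the differences below,
    although its shot noise does not.
    """
    phi = math.atan2(a1 - a3, a0 - a2)  # phase delay in (-pi, pi]
    if phi < 0.0:
        phi += 2.0 * math.pi             # map to [0, 2*pi)
    return C * phi / (4.0 * math.pi * f_mod_hz)


def simulate_samples(depth_m, f_mod_hz, offset=0.3, amplitude=0.5):
    """Noise-free samples for a target at depth_m, following the model above."""
    phi = 4.0 * math.pi * f_mod_hz * depth_m / C
    return [offset + amplitude * math.cos(phi - k * math.pi / 2.0) for k in range(4)]


f = 20e6  # 20 MHz modulation, an illustrative value
print(unambiguous_range(f))                      # about 7.49 m
print(itof_depth(*simulate_samples(1.5, f), f))  # about 1.5 m
```

A target beyond the unambiguous range wraps back into it. This is one reason practical sensors often combine several modulation frequencies.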

Abstract: Mobile Internet real-time rendering technology provides key technical capabilities for three-dimensional (3D) visualization, computer vision, virtual reality, augmented reality, extended reality, and the metaverse. 3D visualization applications based on online real-time rendering are now widespread across multiple mobile Internet platforms. 3D-based Internet applications have evolved into new online visualization applications that take 3D virtual scenes as the main interactive object. These applications offer strong immersion and ease the location and time constraints of mobile Internet services. Our review focuses on four platforms: mobile terminals, web terminals, cloud terminals, and multi-terminal collaboration. First, we investigated the graphics processing unit (GPU) on mobile devices, which is the critical factor in mobile online real-time graphics rendering. We analyzed its recent computing capability, design, and optimization issues. We examined the feasibility of running desktop-level rendering algorithms on mobile devices, taking ray tracing as an example. We also analyzed which rendering algorithms can be accelerated under the power and bandwidth constraints of mobile devices. We reviewed mobile rendering tools such as mobile graphics application programming interfaces (APIs) and game engines, and compared their performance by category.
Second, for web-oriented online real-time graphics rendering, we made three contributions:
- We discussed current Web3D online rendering mechanisms, represented by the combination of WebGL 2.0 and HTML5.
- We compared the technical features and rendering performance of well-known Web3D engines and reviewed the support of mainstream game engines for Web3D applications.
- We examined the key technologies for online real-time rendering of large-scale Web3D scenes, such as Web3D scene lightweighting and peer-to-peer (P2P) progressive transmission.

For online real-time cloud rendering, we analyzed the remote rendering architecture of cloud rendering systems. We identified the core service layer of a cloud rendering system as "platform as a service" (PaaS) and organized its three major functions: application hosting, resource scheduling, and streaming. We analyzed two types of cloud rendering optimization strategies, namely video stream compression and rendering latency mitigation. We also described the core application of online cloud rendering, the "cloud game", and compared the system frameworks, technical features, and user experience of several recent cloud gaming applications.

For multi-terminal collaborative online graphics rendering, we analyzed rendering-task sharing and collaboration in end-cloud systems. We then examined the collaboration patterns and technical features of end-edge-cloud rendering systems, which introduce edge computing. Finally, we analyzed load-balancing optimization in multi-terminal rendering systems, arising from the deployment of computing power and the scheduling of rendering resources.
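The abstract does not say which scheduling policy the surveyed end-edge-cloud systems use. The sketch below is a hypothetical illustration of the load-balancing problem. It assigns rendering tasks, largest first, to whichever device would finish them earliest, given an assumed throughput and network latency per device. The device names, costs, and the greedy longest-task-first heuristic are all assumptions for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Device:
    name: str
    speed: float        # hypothetical throughput, work units per ms
    latency_ms: float   # hypothetical network round trip (0 for the local device)
    load: float = 0.0
    tasks: list[str] = field(default_factory=list)

    def finish_time(self, extra: float = 0.0) -> float:
        """Estimated time (ms) to finish the current load plus `extra` work."""
        return self.latency_ms + (self.load + extra) / self.speed


def balance(tasks: dict[str, float], devices: list[Device]) -> dict[str, list[str]]:
    """Greedy longest-task-first assignment to the earliest-finishing device."""
    for name, cost in sorted(tasks.items(), key=lambda kv: kv[1], reverse=True):
        best = min(devices, key=lambda d: d.finish_time(cost))
        best.load += cost
        best.tasks.append(name)
    return {d.name: d.tasks for d in devices}


devices = [
    Device("phone", speed=1.0, latency_ms=0.0),
    Device("edge", speed=4.0, latency_ms=5.0),
    Device("cloud", speed=10.0, latency_ms=20.0),
]
frame = {"terrain": 40.0, "characters": 24.0, "sky": 8.0, "ui": 2.0}
print(balance(frame, devices))
# {'phone': ['sky', 'ui'], 'edge': ['terrain', 'characters'], 'cloud': []}
```

With these made-up numbers, the phone keeps the small tasks because the remote round-trip latency would outweigh the extra speed. The two heavy tasks go to the edge server, and the distant cloud is not needed for this frame.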
Finally, we listed current industrial online real-time cloud rendering systems. These include NVIDIA CloudXR, Unity Render Streaming, Microsoft Azure Remote Rendering, and Kujiale's cloud-based home decoration and design platform. We compared their frameworks, technical features, and rendering effects.
Keywords: online real-time rendering; cloud rendering; Web3D; end-cloud collaboration; remote rendering
Updated: 2024-08-15

Visual Understanding & Computational Imaging

Abstract: Efficient data access and information extraction from massive data have become essential technologies. Tables are an efficient structure for organizing, displaying, and analyzing data. They are widely used on the Internet and in vertical domains because of their simplicity and intuitiveness. When tables are carried in images or portable document format (PDF) files, their structural information is lost, and the original tables are difficult to recover. Manual re-entry is inefficient and error-prone. Research over the past two decades has therefore focused on automatically recognizing tables in document images and PDF files, a problem that involves multiple tasks. Table recognition aims to automatically detect tables in images, PDFs, and other electronic files and to obtain their structure and content, so that specific information can be extracted. It comprises three tasks: table area detection, table structure recognition, and table content recognition. Existing table recognition methods fall into two common types:
- Methods that use optical character recognition (OCR) to recognize the characters in the table directly and then analyze their positions.
- Methods that use digital image processing to locate the key intersections and the position of each ruling line in the table, and then analyze the relationships between cells.

However, most of these methods apply only to a single domain, generalize poorly, and depend on empirically designed thresholds. Deep learning has since brought semantic segmentation, object detection, text sequence generation, pre-trained models, and related technologies, which have helped solve technical problems in table recognition.
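The second traditional approach locates ruling lines by image processing and derives cells from their intersections. It can be sketched with projection profiles: rows and columns whose ink fraction exceeds a threshold are treated as ruling lines. The `min_fill` value is exactly the kind of empirically designed threshold that the abstract criticizes. This minimal sketch makes these assumptions:
- The input is a binarized, deskewed image.
- The table is fully ruled and has no merged cells.

The function names are hypothetical.

```python
from typing import List, Tuple

Cell = Tuple[int, int, Tuple[int, int, int, int]]  # (row, col, (x0, y0, x1, y1))


def line_positions(profile: List[int], length: int, min_fill: float) -> List[int]:
    """Centre index of each run of rows (or columns) whose ink fraction >= min_fill."""
    lines: List[int] = []
    run: List[int] = []
    for i, count in enumerate(profile):
        if count / length >= min_fill:
            run.append(i)
        elif run:
            lines.append(run[len(run) // 2])  # a thick line collapses to one position
            run = []
    if run:
        lines.append(run[len(run) // 2])
    return lines


def grid_cells(img: List[List[int]], min_fill: float = 0.8) -> List[Cell]:
    """Cells of a fully ruled table from a binary image (1 = ink, 0 = background)."""
    h, w = len(img), len(img[0])
    row_profile = [sum(row) for row in img]                             # ink per row
    col_profile = [sum(img[y][x] for y in range(h)) for x in range(w)]  # ink per column
    ys = line_positions(row_profile, w, min_fill)  # horizontal ruling lines
    xs = line_positions(col_profile, h, min_fill)  # vertical ruling lines
    cells: List[Cell] = []
    for r, (y0, y1) in enumerate(zip(ys, ys[1:])):
        for c, (x0, x1) in enumerate(zip(xs, xs[1:])):
            cells.append((r, c, (x0, y0, x1, y1)))
    return cells


rows = [
    "#########",
    "#...#...#",
    "#.x.#.x.#",
    "#########",
    "#...#...#",
    "#########",
]
img = [[0 if ch == "." else 1 for ch in row] for row in rows]  # 'x' marks text ink
for cell in grid_cells(img):
    print(cell)
```

Borderless tables, or tables with few ruling lines, produce too few lines in the profiles. This is the weakness that learned structure recognizers try to address.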
Most deep learning algorithms are adapted to the characteristics of tables, which improves table recognition. Object detection algorithms are used for table detection. Object detection and text sequence generation algorithms are mainly used for table structure recognition. Pre-trained models have performed well in table content recognition. However, many table structure recognition algorithms still handle borderless tables and tables with few ruling lines poorly. Table images captured in natural scenes are also hard for these algorithms in practice, because of variations in brightness and skew. Many datasets now provide ample data for training table recognition models and have improved model performance. However, these datasets use different annotation formats and evaluation metrics. For table structure recognition, some datasets provide only the hypertext markup language (HTML) code of the structure. Others provide the locations of the cells in the table together with their row and column attributes. Evaluation metrics differ in the same way. Some are based on cell positions or cell contents. For table structure recognition, some use the adjacency relations between cells, while others use the edit distance between HTML codes. Our review critically surveys the state of research on three subtasks (table detection, structure recognition, and content recognition) and discusses future research directions.
Keywords: table area detection; table structure recognition; table content recognition; deep learning; table cell recognition; table information extraction
Updated: 2024-08-15
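An edit-distance-based structure metric can be illustrated with a short sketch. It tokenizes HTML into tags and computes a normalized Levenshtein similarity between the predicted and ground-truth tag sequences. This is a simplified stand-in: widely used metrics such as TEDS compare HTML trees with a tree edit distance instead. The function names here are hypothetical.

```python
import re
from typing import List, Sequence


def html_tags(html: str) -> List[str]:
    """Split table HTML into structure tokens, keeping only the tags."""
    return re.findall(r"<[^>]+>", html)


def edit_distance(a: Sequence[str], b: Sequence[str]) -> int:
    """Levenshtein distance between two token sequences (two-row DP)."""
    prev = list(range(len(b) + 1))
    for i, ta in enumerate(a, start=1):
        cur = [i] + [0] * len(b)
        for j, tb in enumerate(b, start=1):
            cur[j] = min(
                prev[j] + 1,               # delete ta
                cur[j - 1] + 1,            # insert tb
                prev[j - 1] + (ta != tb),  # substitute, free when tokens match
            )
        prev = cur
    return prev[-1]


def structure_similarity(pred_html: str, ref_html: str) -> float:
    """1 - normalized edit distance over tag sequences (1.0 = identical structure)."""
    pred, ref = html_tags(pred_html), html_tags(ref_html)
    if not pred and not ref:
        return 1.0
    return 1.0 - edit_distance(pred, ref) / max(len(pred), len(ref))


truth = "<table><tr><td>a</td><td>b</td></tr></table>"
guess = "<table><tr><td>a b</td></tr></table>"  # two cells merged into one
print(structure_similarity(guess, truth))       # 0.75
```

Only tags are compared, so cell text is ignored, which suits structure-only evaluation. A content-aware variant could keep the text tokens as well.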