最新刊期

卷 27 , 期 5 , 2022

-

摘要:Underwater optical images have played a key role in related to marine resource development, marine environment protection and marine engineering. Due to the harsh and complex underwater environment, the raw underwater optical images are challenged to the quality degradation, which are mainly introduced via an underwater scenario of light selective absorption and scattering. The degraded underwater images have low contrast, blurred details and color distortion, which severely restrict its performance and applications of underwater intelligent processing systems. In particular, the current deep learning based underwater image recovering has been facilitated currently. Our review first analyzes the mechanism of underwater image degradation, as well as describes the existing underwater imaging models and summarizes the challenges of underwater image reconstruction. Then, we trace the evolving of underwater optical image reconstruction methods. In accordance with the deep learning technology and the physical imaging models contextual, the existing algorithms are segmented into four categories for underwater images recovering methods, which are traditional image enhancement, traditional image restoration, deep-learning-based image enhancement and deep-learning-based image restoration. These four types of existing timescale methods are then discussed and analyzed, including their theories, features, pros and cons, respectively. Specifically, the traditional image enhancement methods tend to deliver unnatural results with color deviations and under-enhancement or over-enhancement of local area to improve the visibility of the underwater images effectively. Conversely, the traditional image restoration methods are based on the mechanism of underwater image degradation and a challenging issue is constrained of the limitations of imaging models and priori knowledge in diverse underwater scenarios. The deep learning based restoration methods is facilitated to nonlinear fitting capabilities of neural networks with an error stacking barrier and insufficient restoration results. The deep-learning-based enhancement methods are comparatively robust and suitable to recover diverse underwater images with flexible neural networks. However, they are relatively difficult to converge, and their generalization capability is insufficient. We introduce the current public underwater image datasets, which can be divided into two categories, and summarize their features and applications, respectively. Moreover, we also demonstrate more evaluation metrics for the quality of underwater images. To evaluate their performance quantitatively and qualitatively, we conduct experiments on eight typical underwater image reconstruction methods further. Three benchmarks are optioned for color cast removal, contrast improvement and comprehensive test. Our demonstrated results indicate that none of these methods are robust enough to recover diverse types of underwater images. The reconstruction of better underwater optical images is a big challenge issue of improving the robustness and generalization of reconstruction, developing more lightweight networks or processing algorithms of real-time applications, utilizing underwater image reconstruction to harness vision tasks ability, as well as establishing an underwater images quality assessment system.关键词:underwater degraded image;deep learning;image enhancement;image restoration;underwater benchmark;underwater image quality assessment345|511|3更新时间:2024-05-07

摘要:Underwater optical images have played a key role in related to marine resource development, marine environment protection and marine engineering. Due to the harsh and complex underwater environment, the raw underwater optical images are challenged to the quality degradation, which are mainly introduced via an underwater scenario of light selective absorption and scattering. The degraded underwater images have low contrast, blurred details and color distortion, which severely restrict its performance and applications of underwater intelligent processing systems. In particular, the current deep learning based underwater image recovering has been facilitated currently. Our review first analyzes the mechanism of underwater image degradation, as well as describes the existing underwater imaging models and summarizes the challenges of underwater image reconstruction. Then, we trace the evolving of underwater optical image reconstruction methods. In accordance with the deep learning technology and the physical imaging models contextual, the existing algorithms are segmented into four categories for underwater images recovering methods, which are traditional image enhancement, traditional image restoration, deep-learning-based image enhancement and deep-learning-based image restoration. These four types of existing timescale methods are then discussed and analyzed, including their theories, features, pros and cons, respectively. Specifically, the traditional image enhancement methods tend to deliver unnatural results with color deviations and under-enhancement or over-enhancement of local area to improve the visibility of the underwater images effectively. Conversely, the traditional image restoration methods are based on the mechanism of underwater image degradation and a challenging issue is constrained of the limitations of imaging models and priori knowledge in diverse underwater scenarios. The deep learning based restoration methods is facilitated to nonlinear fitting capabilities of neural networks with an error stacking barrier and insufficient restoration results. The deep-learning-based enhancement methods are comparatively robust and suitable to recover diverse underwater images with flexible neural networks. However, they are relatively difficult to converge, and their generalization capability is insufficient. We introduce the current public underwater image datasets, which can be divided into two categories, and summarize their features and applications, respectively. Moreover, we also demonstrate more evaluation metrics for the quality of underwater images. To evaluate their performance quantitatively and qualitatively, we conduct experiments on eight typical underwater image reconstruction methods further. Three benchmarks are optioned for color cast removal, contrast improvement and comprehensive test. Our demonstrated results indicate that none of these methods are robust enough to recover diverse types of underwater images. The reconstruction of better underwater optical images is a big challenge issue of improving the robustness and generalization of reconstruction, developing more lightweight networks or processing algorithms of real-time applications, utilizing underwater image reconstruction to harness vision tasks ability, as well as establishing an underwater images quality assessment system.关键词:underwater degraded image;deep learning;image enhancement;image restoration;underwater benchmark;underwater image quality assessment345|511|3更新时间:2024-05-07 -

摘要:The visual quality of captured images in rainy weather conditions is constrained to the outdoor vision degradation. It is essential to design relevant rain removal algorithms. Due to the lack of temporal information, single image rain removal is challenging compared to video-based rain removal. The target of single image rain removal analysis is to restore the rain-removed background image from the corresponding rain-affected image. Current deep learning based vision tasks construct diverse data-driven frameworks like single image rain removal task via multiple network modules. Current research tasks are focusing on the quality of datasets, the design of single image deraining algorithms, the subsequent high-level vision tasks, and the design of performance evaluation metrics. Specifically, the quality of rain datasets largely affects the performance of deep learning based single image deraining methods, since the generalization ability of deep single image rain removal is highly related to the domain gap between synthesized training dataset and real testing dataset. Besides, rain removal plays an important preprocessing role in outdoor visual tasks because its result would affect the performance of the subsequent visual task. Additionally, the design of image quality assessment (IQA) metrics is quite important for the fair quantitative analysis of human perception of image quality in general image restoration tasks. We conducted critical literature review for deep learning based single image rain removal from the four aspects as mentioned below: 1) dataset generation in rain weather conditions; 2) representative deep neural network based single image rain removal algorithms; 3) the research of the downstream high-level task in rainy days and 4) performance metrics for evaluating single image rain removal algorithms. Specifically, in terms of the generation manners, the current rain image datasets are roughly divided into four categories as following: 1) synthesizing rain streaks based on photo-realistic rendering technique and then adding them on clear images based on simple physical model; 2) constructing rain images based on complex physical model via manual parameters setting; 3) generating rain images based on generative adversarial network (GAN); 4) collecting paired rain-free/rain-affected images by shooting different scenarios and adjusting camera parameters. We reviewed the download links of the existing representative rain image datasets. For deep learning based single image rain removal methods, we review the supervised and semi-/unsupervised rain removal methods for single task and joint tasks in terms of task scenarios, learning mechanisms and network design. Here, single task is relevant to rain drop removal, rain streak, rain fog, heavy rain; and the integrated analyses of removal of rain drop and rain streak, or multiple noises removal. Furthermore, we overview the construction manners of representative network architectures, including simplified convolutional neural networks based (CNNs-based) multi-branches architecture, GAN-based mechanism, recurrent and multi-stage framework, multi-scale architecture, the integration of encoder-decoder modules, attention mechanism or transformer based module as well as model-driven and data-driven learning manners. Since the implicit or explicit embedding of domain knowledge can promote network construction, we provide a detailed survey in the context of the relationship between rain removal methods and domain knowledge.We illustrated that the domain knowledge and the learning of benched networks has the potential to improve the generalization performance of single image rain removal algorithm further. Based on the real high-level outdoor vision tasks in rain weather, it would be meaningful to use the joint processing strategies of low-level and high-level tasks and the customized construction of rainy datasets. Meanwhile, we reviewed and clarified some related literatures of high-level computer vision tasks and comprehensively analyzed the performance evaluation metrics in the context of full-reference metrics and non-reference metrics. We analyzed the potential challenges of single image rain removal further in the context of feasible benchmark datasets construction, future fair evaluation metrics designing, and the optimized integration of rain removal and high-level vision tasks.关键词:single image rain removal;deep neural network;rain image dataset;rain image synthesis;model-driven and data-driven methodology;follow-up high-level vision task;performance evaluation metrics255|814|2更新时间:2024-05-07

摘要:The visual quality of captured images in rainy weather conditions is constrained to the outdoor vision degradation. It is essential to design relevant rain removal algorithms. Due to the lack of temporal information, single image rain removal is challenging compared to video-based rain removal. The target of single image rain removal analysis is to restore the rain-removed background image from the corresponding rain-affected image. Current deep learning based vision tasks construct diverse data-driven frameworks like single image rain removal task via multiple network modules. Current research tasks are focusing on the quality of datasets, the design of single image deraining algorithms, the subsequent high-level vision tasks, and the design of performance evaluation metrics. Specifically, the quality of rain datasets largely affects the performance of deep learning based single image deraining methods, since the generalization ability of deep single image rain removal is highly related to the domain gap between synthesized training dataset and real testing dataset. Besides, rain removal plays an important preprocessing role in outdoor visual tasks because its result would affect the performance of the subsequent visual task. Additionally, the design of image quality assessment (IQA) metrics is quite important for the fair quantitative analysis of human perception of image quality in general image restoration tasks. We conducted critical literature review for deep learning based single image rain removal from the four aspects as mentioned below: 1) dataset generation in rain weather conditions; 2) representative deep neural network based single image rain removal algorithms; 3) the research of the downstream high-level task in rainy days and 4) performance metrics for evaluating single image rain removal algorithms. Specifically, in terms of the generation manners, the current rain image datasets are roughly divided into four categories as following: 1) synthesizing rain streaks based on photo-realistic rendering technique and then adding them on clear images based on simple physical model; 2) constructing rain images based on complex physical model via manual parameters setting; 3) generating rain images based on generative adversarial network (GAN); 4) collecting paired rain-free/rain-affected images by shooting different scenarios and adjusting camera parameters. We reviewed the download links of the existing representative rain image datasets. For deep learning based single image rain removal methods, we review the supervised and semi-/unsupervised rain removal methods for single task and joint tasks in terms of task scenarios, learning mechanisms and network design. Here, single task is relevant to rain drop removal, rain streak, rain fog, heavy rain; and the integrated analyses of removal of rain drop and rain streak, or multiple noises removal. Furthermore, we overview the construction manners of representative network architectures, including simplified convolutional neural networks based (CNNs-based) multi-branches architecture, GAN-based mechanism, recurrent and multi-stage framework, multi-scale architecture, the integration of encoder-decoder modules, attention mechanism or transformer based module as well as model-driven and data-driven learning manners. Since the implicit or explicit embedding of domain knowledge can promote network construction, we provide a detailed survey in the context of the relationship between rain removal methods and domain knowledge.We illustrated that the domain knowledge and the learning of benched networks has the potential to improve the generalization performance of single image rain removal algorithm further. Based on the real high-level outdoor vision tasks in rain weather, it would be meaningful to use the joint processing strategies of low-level and high-level tasks and the customized construction of rainy datasets. Meanwhile, we reviewed and clarified some related literatures of high-level computer vision tasks and comprehensively analyzed the performance evaluation metrics in the context of full-reference metrics and non-reference metrics. We analyzed the potential challenges of single image rain removal further in the context of feasible benchmark datasets construction, future fair evaluation metrics designing, and the optimized integration of rain removal and high-level vision tasks.关键词:single image rain removal;deep neural network;rain image dataset;rain image synthesis;model-driven and data-driven methodology;follow-up high-level vision task;performance evaluation metrics255|814|2更新时间:2024-05-07 -

摘要:Low-light image enhancement aims to improve the visual perception quality of captured data in the context of low-light scenarios. The purpose of low-light image enhancement is to improve the visual quality via image brightness enhancement. Low-light image enhancement is a key factor to low-light face detection and nighttime semantic segmentation.Our systematic and detailed review is focused on the recent development of low-light image enhancement., We first carry out a comprehensive and systematic analysis for low-light image enhancement on the three aspects as mentioned below: 1) the development of low-light image datasets, 2) the development of low-light image enhancement technology, and 3) the experimental evaluation synthesis. Finally, our demonstrated results are summarized and forecasted in related to low-light image enhancement further.First of all, as far as the existing low-light image enhancement data set is concerned, it reveals a trend in the scale of sizes (small to large), multi-scenarios(solo to diverse), and data involvement degree(simple to complex). Most of the data sets are attributed to unpaired data, and the target pairwise data sets cannot be effectively synthesized due to the difficulty of illumination in low-light image enhancement modeling. The existing pairs of low illumination image data set labels are mainly subjected to manual parameter settings like the exposure time adjustment or expertise modification).The existing reference images in pairs of data sets have challenged to represent the scene information captured in low-light observation accurately. In addition, the construction process of some data sets is relevant to detection or segmentation labels. It is necessary to establish a connection and explore the impact of low-level visual tasks with high-level visual tasks and faciliate high-level visual tasks like detection and semantic segmentation in a low-light scenario.Second, existing low-light image enhancement techniques can be roughly divided into three categories: 1) distribution-based mapping, 2)model-based optimization, and 3) deep learning, respectively. Data-driven deep learning technology has significantly promoted the development of low-light image enhancement. Thanks to the development of the existing low-light image enhancement technology, the traditional model design method has been transformed into data-driven deep learning technology. Among them, It can resolve low-light image enhancement issue based on mapping the value distribution of low-light input to amplify smaller values (displayed as dark), while exposure unevenness is still the a challenged issue to deal with. The model optimization based methods make assumptions about the ground truth via a priori regular condition designation, and do not depend on the amount of training data, but achieve relatively stable performance through the image analysis itself. The deep learning based image enhancement method is to learn via a large amount of training data and realize image enhancement based on designing a deep network-related/independent of the physical model. The trend moves towards semi-supervised/unsupervised/self-supervised learning mechanisms from fully supervised learning mechanisms and focuses more on image enhancement quality when pairwise ground truth data is not available. However, the loss function design in the training process and the training relies on the design of the loss function and the adjustment of network parameters. The existing enhanced network structure is gradually changing from complex to lightweight. Simultaneously, the improvement of visual quality is related to the running speed of the network. There is a lack of effective indicators that can accurately reflect the enhanced image quality due to the specialty of the low-light image enhancement. Currently, a series of downstream high-level vision tasks have been adopting to evaluate the quality of enhanced images and transferring to user-friendly low-light visual image enhancement to high-level vision task performance priority.Meanwhile, a series of experimental evaluations demonstrate that existing optimized model based methods have better generalization ability than those deep learning based methods, while existing unsupervised learning techniques are more robust and efficient than fully supervised learning methods. It can be obtained from the high-level vision tasks in low-light scenes that low-light enhancement has a certain effect on more tasks although good visual effects has not obtained higher high-level vision task accuracy. This indirect confirmation of the disparity of the visual quality expression is orientated from existing works and high-level visual tasks. It is worth noting that the results obtained of trained networks on paired data lack generality and make it difficult to characterize natural image distributions. The unsupervised method can generate enhanced results to satisfy the natural image distribution via ta natural image distribution related loss function, The following four potential research perspectives are proposed, including 1)the inherent laws issue of low-light images in different scenes and reduce the dependence on paired data to endow the algorithm with scene-independent generalization ability; 2) an efficient network framework construction for low-light image enhancement tasks; 3) an effective learning strategy to make the framework learn completely; and 4) a connection between low-light image enhancement and high-level vision tasks (e.g., detection).关键词:low-light image enhancement;Retinex theory;illumination estimation;deep learning;low-light face detection688|997|16更新时间:2024-05-07

摘要:Low-light image enhancement aims to improve the visual perception quality of captured data in the context of low-light scenarios. The purpose of low-light image enhancement is to improve the visual quality via image brightness enhancement. Low-light image enhancement is a key factor to low-light face detection and nighttime semantic segmentation.Our systematic and detailed review is focused on the recent development of low-light image enhancement., We first carry out a comprehensive and systematic analysis for low-light image enhancement on the three aspects as mentioned below: 1) the development of low-light image datasets, 2) the development of low-light image enhancement technology, and 3) the experimental evaluation synthesis. Finally, our demonstrated results are summarized and forecasted in related to low-light image enhancement further.First of all, as far as the existing low-light image enhancement data set is concerned, it reveals a trend in the scale of sizes (small to large), multi-scenarios(solo to diverse), and data involvement degree(simple to complex). Most of the data sets are attributed to unpaired data, and the target pairwise data sets cannot be effectively synthesized due to the difficulty of illumination in low-light image enhancement modeling. The existing pairs of low illumination image data set labels are mainly subjected to manual parameter settings like the exposure time adjustment or expertise modification).The existing reference images in pairs of data sets have challenged to represent the scene information captured in low-light observation accurately. In addition, the construction process of some data sets is relevant to detection or segmentation labels. It is necessary to establish a connection and explore the impact of low-level visual tasks with high-level visual tasks and faciliate high-level visual tasks like detection and semantic segmentation in a low-light scenario.Second, existing low-light image enhancement techniques can be roughly divided into three categories: 1) distribution-based mapping, 2)model-based optimization, and 3) deep learning, respectively. Data-driven deep learning technology has significantly promoted the development of low-light image enhancement. Thanks to the development of the existing low-light image enhancement technology, the traditional model design method has been transformed into data-driven deep learning technology. Among them, It can resolve low-light image enhancement issue based on mapping the value distribution of low-light input to amplify smaller values (displayed as dark), while exposure unevenness is still the a challenged issue to deal with. The model optimization based methods make assumptions about the ground truth via a priori regular condition designation, and do not depend on the amount of training data, but achieve relatively stable performance through the image analysis itself. The deep learning based image enhancement method is to learn via a large amount of training data and realize image enhancement based on designing a deep network-related/independent of the physical model. The trend moves towards semi-supervised/unsupervised/self-supervised learning mechanisms from fully supervised learning mechanisms and focuses more on image enhancement quality when pairwise ground truth data is not available. However, the loss function design in the training process and the training relies on the design of the loss function and the adjustment of network parameters. The existing enhanced network structure is gradually changing from complex to lightweight. Simultaneously, the improvement of visual quality is related to the running speed of the network. There is a lack of effective indicators that can accurately reflect the enhanced image quality due to the specialty of the low-light image enhancement. Currently, a series of downstream high-level vision tasks have been adopting to evaluate the quality of enhanced images and transferring to user-friendly low-light visual image enhancement to high-level vision task performance priority.Meanwhile, a series of experimental evaluations demonstrate that existing optimized model based methods have better generalization ability than those deep learning based methods, while existing unsupervised learning techniques are more robust and efficient than fully supervised learning methods. It can be obtained from the high-level vision tasks in low-light scenes that low-light enhancement has a certain effect on more tasks although good visual effects has not obtained higher high-level vision task accuracy. This indirect confirmation of the disparity of the visual quality expression is orientated from existing works and high-level visual tasks. It is worth noting that the results obtained of trained networks on paired data lack generality and make it difficult to characterize natural image distributions. The unsupervised method can generate enhanced results to satisfy the natural image distribution via ta natural image distribution related loss function, The following four potential research perspectives are proposed, including 1)the inherent laws issue of low-light images in different scenes and reduce the dependence on paired data to endow the algorithm with scene-independent generalization ability; 2) an efficient network framework construction for low-light image enhancement tasks; 3) an effective learning strategy to make the framework learn completely; and 4) a connection between low-light image enhancement and high-level vision tasks (e.g., detection).关键词:low-light image enhancement;Retinex theory;illumination estimation;deep learning;low-light face detection688|997|16更新时间:2024-05-07 -

摘要:Images and videos quality has a great impact on the information acquisition of human behavior visual system. Image/video quality assessment (I/VQA) can be regarded as a key factor for multimedia and network video services nowadays. The quality assessment methods are mainly segmented into qualitative and quantitative models. Qualitative methods conduct quality assessment based on the human's eyes, which cost lots of manpower and time consuming resources. Quantitative quality assessment simulates human observation and it can automatically forecast input quality for those of I/VQA researchers. Our review is critical reviewed quality assessment(QA) methods like full reference (FR), reduced reference (RR) and no reference (NR) methods, which are categorized based on the "clean" data capability (the reference image with no distortion). The full reference methods assess the quality of the distorted images with comparison of the "clean" data. The pros of these methods are better performance, low complexity and good robustness. The cons are that the reference images are challenged to obtain in the in-situ scenarios. Compared with the full reference methods, the reduced reference methods reduce the number of the reference images based on featured reference data to predict the quality. All reference data are not required for the no reference methods. Traditional IQA methods are mainly based on structural similarity, human visual system (HVS) and natural scene statistical theory (NSS). The structure similarity based methods assesses the measurement quality derived of the structural information changes; the HVS based methods are based on some human eyes features; Natural scene statistics based methods fit the transformed coefficients distribution of images or videos and compare the gap of reference coefficients and test coefficients. The emerging deep learning methods based on convolutional neural network (CNN) extract image features through convolutional operations and implement logistic regression to update the models. The learning capability of the IQA-oriented CNN has its priority. VQA models are mainly divided into two categories. One category is that the temporal video quality can be obtained based on the IQA methods for single frame, and then integrate the quality of all frames. The integration methods are mainly divided into general average and weighted average methods in which the weights can be obtained in terms of manual setting or learning. The other one is forthe three dimensions (3D) video as mentioned below: First, extracting the coefficient distribution by 3D transformation or using 3D CNN to extract features, and then fitting the coefficient distribution or mapping the features to obtain the final quality. Compared to traditional methods, learning-based methods have higher complexity, but better performance. Most of the current VQA methods also use CNN as the backbone structure, which assists in the overall model construction. Our critical review analyzes the growth of I/VQA and lists some representative algorithms. Then, we review some I/VQA based literatures from two aspects, including traditional methods and deep learning-based methods, respectively. The capability of representative algorithms is analyzed derived of Spearman rank order correlation coefficient(SROCC) and Pearson linear correlation coefficient(PLCC) evaluation indexes in terms of laboratory for image & video engineering(LIVE), categorical subjective image quality database(CSIQ), TID2013 and other datasets. Finally, the challenging issues of quality assessment are summarized and predicted.关键词:image/video quality assessment(I/VQA);structural information;human vision system(HVS);natural scene statistics(NSS);deep learning290|323|4更新时间:2024-05-07

摘要:Images and videos quality has a great impact on the information acquisition of human behavior visual system. Image/video quality assessment (I/VQA) can be regarded as a key factor for multimedia and network video services nowadays. The quality assessment methods are mainly segmented into qualitative and quantitative models. Qualitative methods conduct quality assessment based on the human's eyes, which cost lots of manpower and time consuming resources. Quantitative quality assessment simulates human observation and it can automatically forecast input quality for those of I/VQA researchers. Our review is critical reviewed quality assessment(QA) methods like full reference (FR), reduced reference (RR) and no reference (NR) methods, which are categorized based on the "clean" data capability (the reference image with no distortion). The full reference methods assess the quality of the distorted images with comparison of the "clean" data. The pros of these methods are better performance, low complexity and good robustness. The cons are that the reference images are challenged to obtain in the in-situ scenarios. Compared with the full reference methods, the reduced reference methods reduce the number of the reference images based on featured reference data to predict the quality. All reference data are not required for the no reference methods. Traditional IQA methods are mainly based on structural similarity, human visual system (HVS) and natural scene statistical theory (NSS). The structure similarity based methods assesses the measurement quality derived of the structural information changes; the HVS based methods are based on some human eyes features; Natural scene statistics based methods fit the transformed coefficients distribution of images or videos and compare the gap of reference coefficients and test coefficients. The emerging deep learning methods based on convolutional neural network (CNN) extract image features through convolutional operations and implement logistic regression to update the models. The learning capability of the IQA-oriented CNN has its priority. VQA models are mainly divided into two categories. One category is that the temporal video quality can be obtained based on the IQA methods for single frame, and then integrate the quality of all frames. The integration methods are mainly divided into general average and weighted average methods in which the weights can be obtained in terms of manual setting or learning. The other one is forthe three dimensions (3D) video as mentioned below: First, extracting the coefficient distribution by 3D transformation or using 3D CNN to extract features, and then fitting the coefficient distribution or mapping the features to obtain the final quality. Compared to traditional methods, learning-based methods have higher complexity, but better performance. Most of the current VQA methods also use CNN as the backbone structure, which assists in the overall model construction. Our critical review analyzes the growth of I/VQA and lists some representative algorithms. Then, we review some I/VQA based literatures from two aspects, including traditional methods and deep learning-based methods, respectively. The capability of representative algorithms is analyzed derived of Spearman rank order correlation coefficient(SROCC) and Pearson linear correlation coefficient(PLCC) evaluation indexes in terms of laboratory for image & video engineering(LIVE), categorical subjective image quality database(CSIQ), TID2013 and other datasets. Finally, the challenging issues of quality assessment are summarized and predicted.关键词:image/video quality assessment(I/VQA);structural information;human vision system(HVS);natural scene statistics(NSS);deep learning290|323|4更新时间:2024-05-07 -

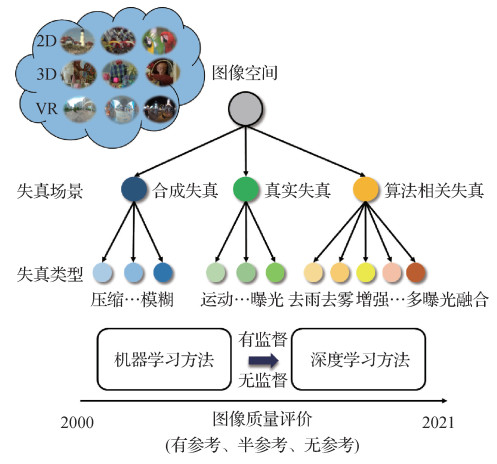

摘要:Image quality assessment (IQA) has been widely applied to multimedia processing, such as low-quality image screening, parameter tuning, and algorithm optimization. IQA models can be classified into three categories like full-reference (FR), reduced-reference (RR) and no-reference/blind (NR/B) models in terms of the accessibility to reference information. The FR and RR IQA models are based on full and partial reference information each while NR/B models can assess image visual quality without reference information. The early IQA models mainly relies on feature engineering, and their performance is limited by the representation ability of hand-crafted features. Benefiting from the excellent representation ability of deep neural networks, the deep learning based IQA models have shown superior performance and surpassed the feature engineering based IQA models, especially in representing large number of images. Due to the strong representation ability, the deep learning based IQA models have attracted most of our attention in the past decade. Additionally, IQA models have been introduced to image restoration, super-resolution and other tasks to improve algorithm performance and facilitate better benchmarks. For instance, some differentiable FR-IQA models like structural similarity(SSIM) and multi-scale SSIM(MSSIM) can be used straightforward via optimization, facilitating the relevant models training. In the development of IQA research, image distortion plays an important role. Both image quality database construction and IQA models are developed by considering distortion. Previous IQA studies are constrained of dozens of pristine images, and then generate their distorted images via disrupting the pristine images artificially. Benefiting from the uniformity of this type of images, the global quality score of each image in the early image quality databases can be regarded as the quality label of the image patches (e.g., 32×32 pixels) extracted from the corresponding image. Consequently, huge amount of data like local patches and the associated global quality scores can be carried out to learn the mapping function from local patch to visual quality. However, the patch-based strategy may be problematic, since such a small patch is unable to capture image context information. Besides, this data augmentation operation could not be simply adapted to quality assessment of authentic distorted images and other types of images such as 3D synthesized images, since authentic distortions are much more complex than simulated distortions and the distortions in 3D synthesized images exhibit non-uniform distribution. Customized solutions regarding to image distortion are required to be implemented in IQA models, e.g., we can use local variation measurement and global change modeling to capture the distortion in 3D synthesized images. Our research mainly introduces the distortion-related IQA models from 2011 to 2021, aiming to facilitate a review on the evolution of image quality databases and IQA models both. In the light of image distortion categories, IQA models are classified into three categories: the models for simulated distortions, the models for authentic distortions, and the models for the distortions related to algorithms. Specifically, simulated distortions are also called artificial distortions, which show uniform distribution, including Gaussian noise and motion blur, etc. Authentic distortions are mainly introduced in the context of image photography scenario, shooting equipment, inadequate operation in shooting process. In comparison of simulated distortions, authentic distortions are more complicated, leading to the challenge of data collection. Authentic distortions can be also classified into several major types, like out-of-focus, blur and over-exposure. A large number of authentical distorted images collection need to be followed some distortion rules, and it is a labor-intensive task. The distortions related to algorithms refer to the image degradations occurred in the resultant images due to the intrinsic defects or the limited performance of image processing or computer vision algorithms, and a distinctive characteristic of this type of distortions is that their distributions are inhomogeneous, which differs from other two types of distortions. Overall, current public image quality databases are initial to be promoted, including the details of image data sources and database construction. The designation of IQA models is then involved in. Finally, we summarize the IQA models introduced in this paper, and point out the potential development directions in the future.关键词:image quality assessment(IQA);image processing;visual perception;computer vision;machine learning;deep learning535|1176|7更新时间:2024-05-07

摘要:Image quality assessment (IQA) has been widely applied to multimedia processing, such as low-quality image screening, parameter tuning, and algorithm optimization. IQA models can be classified into three categories like full-reference (FR), reduced-reference (RR) and no-reference/blind (NR/B) models in terms of the accessibility to reference information. The FR and RR IQA models are based on full and partial reference information each while NR/B models can assess image visual quality without reference information. The early IQA models mainly relies on feature engineering, and their performance is limited by the representation ability of hand-crafted features. Benefiting from the excellent representation ability of deep neural networks, the deep learning based IQA models have shown superior performance and surpassed the feature engineering based IQA models, especially in representing large number of images. Due to the strong representation ability, the deep learning based IQA models have attracted most of our attention in the past decade. Additionally, IQA models have been introduced to image restoration, super-resolution and other tasks to improve algorithm performance and facilitate better benchmarks. For instance, some differentiable FR-IQA models like structural similarity(SSIM) and multi-scale SSIM(MSSIM) can be used straightforward via optimization, facilitating the relevant models training. In the development of IQA research, image distortion plays an important role. Both image quality database construction and IQA models are developed by considering distortion. Previous IQA studies are constrained of dozens of pristine images, and then generate their distorted images via disrupting the pristine images artificially. Benefiting from the uniformity of this type of images, the global quality score of each image in the early image quality databases can be regarded as the quality label of the image patches (e.g., 32×32 pixels) extracted from the corresponding image. Consequently, huge amount of data like local patches and the associated global quality scores can be carried out to learn the mapping function from local patch to visual quality. However, the patch-based strategy may be problematic, since such a small patch is unable to capture image context information. Besides, this data augmentation operation could not be simply adapted to quality assessment of authentic distorted images and other types of images such as 3D synthesized images, since authentic distortions are much more complex than simulated distortions and the distortions in 3D synthesized images exhibit non-uniform distribution. Customized solutions regarding to image distortion are required to be implemented in IQA models, e.g., we can use local variation measurement and global change modeling to capture the distortion in 3D synthesized images. Our research mainly introduces the distortion-related IQA models from 2011 to 2021, aiming to facilitate a review on the evolution of image quality databases and IQA models both. In the light of image distortion categories, IQA models are classified into three categories: the models for simulated distortions, the models for authentic distortions, and the models for the distortions related to algorithms. Specifically, simulated distortions are also called artificial distortions, which show uniform distribution, including Gaussian noise and motion blur, etc. Authentic distortions are mainly introduced in the context of image photography scenario, shooting equipment, inadequate operation in shooting process. In comparison of simulated distortions, authentic distortions are more complicated, leading to the challenge of data collection. Authentic distortions can be also classified into several major types, like out-of-focus, blur and over-exposure. A large number of authentical distorted images collection need to be followed some distortion rules, and it is a labor-intensive task. The distortions related to algorithms refer to the image degradations occurred in the resultant images due to the intrinsic defects or the limited performance of image processing or computer vision algorithms, and a distinctive characteristic of this type of distortions is that their distributions are inhomogeneous, which differs from other two types of distortions. Overall, current public image quality databases are initial to be promoted, including the details of image data sources and database construction. The designation of IQA models is then involved in. Finally, we summarize the IQA models introduced in this paper, and point out the potential development directions in the future.关键词:image quality assessment(IQA);image processing;visual perception;computer vision;machine learning;deep learning535|1176|7更新时间:2024-05-07

Review

-

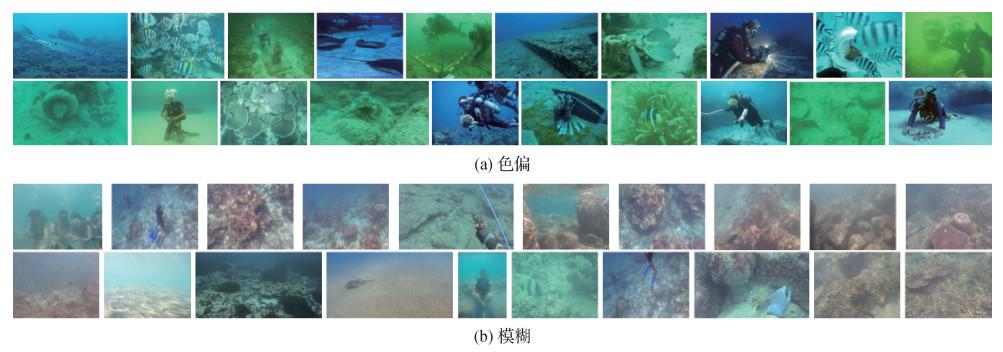

摘要:ObjectiveUnderwater images processing are essential to marine issues in the context of defense, environmental protection and engineering. However, there are always severe quality degradation issues like color cast, blur, and low contrast are greatly restricted the quality of underwater imaging and operation systems due to the inner-water light attenuation/scattering and the microbe derived absorption/reflection of light. Underwater image enhancement (UIE) algorithms have been demonstrated to improve the quality of underwater images nowadays. The two aspects of challenges are critical to be illustrated as below: one of huge gap between the synthesized and in-situ underwater images processing are constrained of complicated degradations of diverse underwater environments. The other is challenged that the existing objective image quality metrics are matched to evaluate the in-situ quality of various UIE algorithms. To resolve the above two issues, our demonstration has illustrated as following: first, we build up a real-world underwater image quality evaluation dataset to compare the performance of different UIE algorithms based on a collection of in-situ underwater images. Next, we evaluate the performance of existing image quality evaluation metrics on our generated dataset.MethodFirst, we collect 100 real-world underwater images, including 60 color cast-dominant and 40 blur-dominant ones, and apply 10 representative UIE algorithms to enhance the 100 raw underwater images. A total number of 1 000 enhanced results (10 results for each raw underwater image) are generated. Next, we conduct complex human subjective studies to evaluate the performance of different UIE algorithms based on the pairwise comparison (PC) strategy. Thirdly, we analyze the results obtained from our subjective studies to demonstrate the reliability of our human subjective studies and get insights on the pros and cons of each UIE algorithm. The Bradley-Terry (B-T) model on the PC results obtained B-T scores as the ground truth quality scores of the enhanced underwater images. Finally, we test the capabilities of existing image quality metrics via the correlations between the B-T scores and the predicted 10 existing representative no-reference image quality metrics for evaluating UIE results.ResultWe illustrates the Kendall coefficient of inner subjects' protocols, a convergence analysis and conducts a significance test to verify the dataset. The Kendall coefficient of inner subjects' protocols on the full-set is around 0.41, which demonstrates a qualified inter-subject consistency level. Such coefficient is slightly different on the two subsets, i.e., 0.39 and 0.44 on the color cast subset and blur subset, respectively. In respect of the convergence analysis, the mean and the variance of each underwater image enhancement algorithms tend to be clarified in the context of the increasing of the number of votes and the number of images.The similar subjective scores are obtained for each underwater enhancement algorithms. The significance for test results demonstrates that GL-Net is the best and underwater image enhancement convolutional neural network(UWCNN) is the worst for the adopted 10 UIE algorithms. In addition, there is slight difference on the performance rankings of different UIE algorithms on the two subsets. Finally, an existing no-reference image quality metrics can be unqualified for UIE algorithms evaluation.ConclusionOur first contribution is based on an in-situ underwater image quality evaluation dataset through conducting human subjective studies to compare the performance of various UIE algorithms with a collection of in-situ underwater images. The other one is that the performance of existing image quality evaluation methods is evaluated based on our dataset and the limitation of the existing image quality metrics is identified for UIE quality evaluation. Overall, this research targets underwater image quality evaluation metrics. All the images and collected data involved are available at: https://github.com/yia-yuese/RealUWIQ-dataset.关键词:image quality evaluation;underwater image enhancement;subject image quality assessment;dataset;pairwise comparison(PC)469|459|1更新时间:2024-05-07

摘要:ObjectiveUnderwater images processing are essential to marine issues in the context of defense, environmental protection and engineering. However, there are always severe quality degradation issues like color cast, blur, and low contrast are greatly restricted the quality of underwater imaging and operation systems due to the inner-water light attenuation/scattering and the microbe derived absorption/reflection of light. Underwater image enhancement (UIE) algorithms have been demonstrated to improve the quality of underwater images nowadays. The two aspects of challenges are critical to be illustrated as below: one of huge gap between the synthesized and in-situ underwater images processing are constrained of complicated degradations of diverse underwater environments. The other is challenged that the existing objective image quality metrics are matched to evaluate the in-situ quality of various UIE algorithms. To resolve the above two issues, our demonstration has illustrated as following: first, we build up a real-world underwater image quality evaluation dataset to compare the performance of different UIE algorithms based on a collection of in-situ underwater images. Next, we evaluate the performance of existing image quality evaluation metrics on our generated dataset.MethodFirst, we collect 100 real-world underwater images, including 60 color cast-dominant and 40 blur-dominant ones, and apply 10 representative UIE algorithms to enhance the 100 raw underwater images. A total number of 1 000 enhanced results (10 results for each raw underwater image) are generated. Next, we conduct complex human subjective studies to evaluate the performance of different UIE algorithms based on the pairwise comparison (PC) strategy. Thirdly, we analyze the results obtained from our subjective studies to demonstrate the reliability of our human subjective studies and get insights on the pros and cons of each UIE algorithm. The Bradley-Terry (B-T) model on the PC results obtained B-T scores as the ground truth quality scores of the enhanced underwater images. Finally, we test the capabilities of existing image quality metrics via the correlations between the B-T scores and the predicted 10 existing representative no-reference image quality metrics for evaluating UIE results.ResultWe illustrates the Kendall coefficient of inner subjects' protocols, a convergence analysis and conducts a significance test to verify the dataset. The Kendall coefficient of inner subjects' protocols on the full-set is around 0.41, which demonstrates a qualified inter-subject consistency level. Such coefficient is slightly different on the two subsets, i.e., 0.39 and 0.44 on the color cast subset and blur subset, respectively. In respect of the convergence analysis, the mean and the variance of each underwater image enhancement algorithms tend to be clarified in the context of the increasing of the number of votes and the number of images.The similar subjective scores are obtained for each underwater enhancement algorithms. The significance for test results demonstrates that GL-Net is the best and underwater image enhancement convolutional neural network(UWCNN) is the worst for the adopted 10 UIE algorithms. In addition, there is slight difference on the performance rankings of different UIE algorithms on the two subsets. Finally, an existing no-reference image quality metrics can be unqualified for UIE algorithms evaluation.ConclusionOur first contribution is based on an in-situ underwater image quality evaluation dataset through conducting human subjective studies to compare the performance of various UIE algorithms with a collection of in-situ underwater images. The other one is that the performance of existing image quality evaluation methods is evaluated based on our dataset and the limitation of the existing image quality metrics is identified for UIE quality evaluation. Overall, this research targets underwater image quality evaluation metrics. All the images and collected data involved are available at: https://github.com/yia-yuese/RealUWIQ-dataset.关键词:image quality evaluation;underwater image enhancement;subject image quality assessment;dataset;pairwise comparison(PC)469|459|1更新时间:2024-05-07

Dataset

-

摘要:ObjectiveThe acquired images relating fog, mist and damp weather conditions are subjected to the atmospheric scattering, affecting the observation and target analysis of intelligent detection systems. The scattering of reflected lights deduct the contrast of images in the context of the increased scene depth, and the uneven sky illumination constrains images visibility. This two constraints yield to deduction and fuzzes on the weak texture in foggy images. The degradation of foggy images affects the pixels based statistical distributions like saturation and weber contrast and changes the statistical distribution between pixels, such as target contour intensity. Thus, visual perception quality related to natural scene statistical (NSS) features of fog image and the target detection accuracy related target category semantic (TCS) features are significantly different with ground truth, Traditional image restoration methods can build the defogging mapping to improve image contrast based on the conventional scattering model. But, it is challenged to remove the severe scattering of image features. Deep learning based image enhancement methods have better scattering image removal results close to the distribution of training data. It is a challenged issue of insufficient generalization ability like dense artifacts for ground truth foggy images derived of complex degradation the degradation in synthetic foggy images excluded. Current methods have focused on semi-supervision technique based generalization ability improvement but the large domain distance constrains between real and synthetic foggy images existing. To optimize the image features, current deep learning based methods are challenged to achieve the niches between visual quality and machine perception quality via image classification or target detection. To interpret pros and cons of prior-based and deep leaning based methods, a semi-supervision prior hyrid network for feature enhancement is illustrated to demonstrate the feature enhancement for detection and object analysis.MethodOur research is designated to a semi-supervision prior hybrid network for NSS and TCS feature enhancement. First, a prior based fog inversion module is used to remove the atmospheric scattering effect and restore the uneven illumination in foggy images. The method is based on an extended atmospheric scattering model and a regional gradient constrained prior for transmission estimation and illumination decomposition. Then, a feature enhancement module is designed based on the condition generative adversarial network (CGAN) as a subsequent module, which regards the defogged image as the input image. The generator uses six Res-blocks with the instance norm layer and a long skip connection to achieve the image domain translation of defogged images. There are three discriminators in the network. The style and feature discriminator with five convolution layers and leakReLU layers are used to identify the image style between "defogged" and "clear", promoting the generator using the adversarial technique with CGAN loss in pixel-level and feature-level. Excluded the CGAN loss for further removing the scattering degradation in defogged images, the generator is also trained based on a content loss, which constrains the details distortion in the image translation process. Moreover, our research analyzes the domain differences between the defogged image and the clear image in the target feature level, and utilizes a target cumulative vector loss based a target sematic discriminator to guide the refinement of target outline in the defogged image. Thus, the feature enhancement module is implemented to contrast and brightness related NSS features and improve the performance of TCS features about target clearance. The reversed features are constrained to match the CGAN loss and content loss in terms of the information differences between image features and image pixel performance. Our network resolves the interconnections between the traditional method and the convolutional neural network (CNN) module and obtains the enhanced result via the scattering removal and feature enhancement. It is beneficial to solve the dependence of feature learning on synthetic paired training data and the instability of semi-supervision learning in realizing image translation. Abstract features representations is also improved through definite direction and fine granularity related feature learning method. The traditional image enhancement module is optimized to make the best defogged result via parameters adjusting. The feature enhancement module is trained with adaptive moment estimation(ADAM) optimizer for 250 epochs with the momentum takes the values of 0.5 and 0.999. The learning rate is set to 2E-4. And unpaired 270×170 patches randomly cropped from 2 000 defogged real-world images and 2 000 clearance images are taken as the inputs of the generator and discriminator. The train and test processes are carried out by PyTorch in a X86 computer with a core i7 3.0 GHz processor, 64 GB RAM and a NVIDIA 2080ti graphic.ResultOur experimental results are compared with 5 state-of-the-art enhancement methods, including the 2 traditional approaches and 3 deep learning methods on 2 public fog ground truth image datasets called RTTS(real-world task-driven testing set) and foggy driving dense dataset. RTTS dataset contains 4 322 foggy or dim images and foggy driving dense dataset has 21 dense fog online images collection. The quantitative evaluation metrics contain the image quality index and the detection quality index. The quality indexes are composed of the enhanced gradient ratio R and the blind image quality analyzer integrated local natural image quality evaluator(IL-NIQE). The detection indexes are based on mean average precision(MAP) and recall. We also demonstrated more enhanced results of each method for qualitative comparison in the experimental section. In RTTS and foggy driving dense dataset, compared with the method ranking second in each index, the mean R value is improved 50.83%, the mean IL-NIQE value is improved 6.33%, the MAP value is improved 6.40% and the mean Recall value is improved 7.79%. The enhanced results from the proposed method are much similar to clear color, brightness and contrast images qualitatively. The obtained experimental results illustrates that our network can improves the visual quality and machine perception for the foggy image captured in bad weather conditions with more than 50 (frame/s)/Mp as well.ConclusionOur semi-supervision prior hybrid network integrates traditional restoration methods and deep learning based enhancement models for multi-level feature enhancement, achieving the enhancement of NSS features and TCS features. Our illustrations demonstrates our method has its priority for real foggy images in terms of image quality and object detectable ability for intelligent detection system.关键词:foggy image dehazing;feature enhancement;prior hyrid network;semi-supervison learning;image domain translation174|462|2更新时间:2024-05-07

摘要:ObjectiveThe acquired images relating fog, mist and damp weather conditions are subjected to the atmospheric scattering, affecting the observation and target analysis of intelligent detection systems. The scattering of reflected lights deduct the contrast of images in the context of the increased scene depth, and the uneven sky illumination constrains images visibility. This two constraints yield to deduction and fuzzes on the weak texture in foggy images. The degradation of foggy images affects the pixels based statistical distributions like saturation and weber contrast and changes the statistical distribution between pixels, such as target contour intensity. Thus, visual perception quality related to natural scene statistical (NSS) features of fog image and the target detection accuracy related target category semantic (TCS) features are significantly different with ground truth, Traditional image restoration methods can build the defogging mapping to improve image contrast based on the conventional scattering model. But, it is challenged to remove the severe scattering of image features. Deep learning based image enhancement methods have better scattering image removal results close to the distribution of training data. It is a challenged issue of insufficient generalization ability like dense artifacts for ground truth foggy images derived of complex degradation the degradation in synthetic foggy images excluded. Current methods have focused on semi-supervision technique based generalization ability improvement but the large domain distance constrains between real and synthetic foggy images existing. To optimize the image features, current deep learning based methods are challenged to achieve the niches between visual quality and machine perception quality via image classification or target detection. To interpret pros and cons of prior-based and deep leaning based methods, a semi-supervision prior hyrid network for feature enhancement is illustrated to demonstrate the feature enhancement for detection and object analysis.MethodOur research is designated to a semi-supervision prior hybrid network for NSS and TCS feature enhancement. First, a prior based fog inversion module is used to remove the atmospheric scattering effect and restore the uneven illumination in foggy images. The method is based on an extended atmospheric scattering model and a regional gradient constrained prior for transmission estimation and illumination decomposition. Then, a feature enhancement module is designed based on the condition generative adversarial network (CGAN) as a subsequent module, which regards the defogged image as the input image. The generator uses six Res-blocks with the instance norm layer and a long skip connection to achieve the image domain translation of defogged images. There are three discriminators in the network. The style and feature discriminator with five convolution layers and leakReLU layers are used to identify the image style between "defogged" and "clear", promoting the generator using the adversarial technique with CGAN loss in pixel-level and feature-level. Excluded the CGAN loss for further removing the scattering degradation in defogged images, the generator is also trained based on a content loss, which constrains the details distortion in the image translation process. Moreover, our research analyzes the domain differences between the defogged image and the clear image in the target feature level, and utilizes a target cumulative vector loss based a target sematic discriminator to guide the refinement of target outline in the defogged image. Thus, the feature enhancement module is implemented to contrast and brightness related NSS features and improve the performance of TCS features about target clearance. The reversed features are constrained to match the CGAN loss and content loss in terms of the information differences between image features and image pixel performance. Our network resolves the interconnections between the traditional method and the convolutional neural network (CNN) module and obtains the enhanced result via the scattering removal and feature enhancement. It is beneficial to solve the dependence of feature learning on synthetic paired training data and the instability of semi-supervision learning in realizing image translation. Abstract features representations is also improved through definite direction and fine granularity related feature learning method. The traditional image enhancement module is optimized to make the best defogged result via parameters adjusting. The feature enhancement module is trained with adaptive moment estimation(ADAM) optimizer for 250 epochs with the momentum takes the values of 0.5 and 0.999. The learning rate is set to 2E-4. And unpaired 270×170 patches randomly cropped from 2 000 defogged real-world images and 2 000 clearance images are taken as the inputs of the generator and discriminator. The train and test processes are carried out by PyTorch in a X86 computer with a core i7 3.0 GHz processor, 64 GB RAM and a NVIDIA 2080ti graphic.ResultOur experimental results are compared with 5 state-of-the-art enhancement methods, including the 2 traditional approaches and 3 deep learning methods on 2 public fog ground truth image datasets called RTTS(real-world task-driven testing set) and foggy driving dense dataset. RTTS dataset contains 4 322 foggy or dim images and foggy driving dense dataset has 21 dense fog online images collection. The quantitative evaluation metrics contain the image quality index and the detection quality index. The quality indexes are composed of the enhanced gradient ratio R and the blind image quality analyzer integrated local natural image quality evaluator(IL-NIQE). The detection indexes are based on mean average precision(MAP) and recall. We also demonstrated more enhanced results of each method for qualitative comparison in the experimental section. In RTTS and foggy driving dense dataset, compared with the method ranking second in each index, the mean R value is improved 50.83%, the mean IL-NIQE value is improved 6.33%, the MAP value is improved 6.40% and the mean Recall value is improved 7.79%. The enhanced results from the proposed method are much similar to clear color, brightness and contrast images qualitatively. The obtained experimental results illustrates that our network can improves the visual quality and machine perception for the foggy image captured in bad weather conditions with more than 50 (frame/s)/Mp as well.ConclusionOur semi-supervision prior hybrid network integrates traditional restoration methods and deep learning based enhancement models for multi-level feature enhancement, achieving the enhancement of NSS features and TCS features. Our illustrations demonstrates our method has its priority for real foggy images in terms of image quality and object detectable ability for intelligent detection system.关键词:foggy image dehazing;feature enhancement;prior hyrid network;semi-supervison learning;image domain translation174|462|2更新时间:2024-05-07 -

摘要:ObjectiveThe quality of captured images tends to yellowish color distortion, reduced contrast, and detailed information loss in the sand dust atmosphere due to the suspended particles derived incident light absorption and scattering. The issues of outdoor computer vision systems like video surveillance, video navigation and intelligent transportation are severely constrained. Traditional sand dust image enhancement methods are originated from visual perception based sand dust image enhancement and physical model based sand dust image restoration. The visual perception based method is not restricted of the physical imaging model. The visual quality is based on color correction and contrast enhancement. The recovered image still has insufficient color distortion, image brightness and image contrast. Physical models based sand dust image restoration is related to additional assumptions and less prior robustness, complex parameters calculation. Nowadays, existing deep learning-based sand dust image enhancement methods are migrated from the deep learning based haze images methods. Although these methods has achieved good results for haze image processing, the color of the output image still has different degrees of distortion, and the sharpness of the image is also relatively poor in terms of transferred and enhanced sand dust images. An enhanced convolutional neural network (CNN) method of restored color sand dust images can be used to resolve and improve the issues mentioned above.MethodOur proposed network structure consists of a sand dust color correction subnet and a dust removal enhancement subnet. We illustrated a novel sand dust color correction network structure to improve gray world algorithm. First, the proposed sand dust color correction subnet (SDCC) is used to correct the color cast of the sand dust image. The sand dust image is de-composed into 3 channels of R, G, and B. For each channel, a convolutional layer with a convolution kernel size of 3 is used to conducted, and each feature map is processed to obtain color correction image via gray world algorithm. To enhance the sand dust images quality, a benched color-corrected image is transmitted into the dust removal enhancement subnet in the context of adaptive instance normalized-residual blocks (AIN-ResBlock). The dust removal enhancement subnet takes the sand image and the color correction image as input, and uses the adaptive instance normalization module to adaptively restore the color distortion issues in the feature mapping in the dust removal enhancement subnet, and realizes the image sand removal through the residual block. Our AIN-ResBlock is capable to resolve the blurred details and missing image content for the natural color factors of the restored image. Additionally, in view of the difficulty in obtaining pairs of sand dust images and their corresponding clear images as training samples for deep learning, a sand dust image synthesis method is illustrated based on a physical imaging model. Absorb and scatter light to attenuate, and the attenuation degree of light of different colors is different. We optioned 15 color marks close to the color of the sand dust image, and simulate the sand dust image under 15 different conditions, and a large-scale dataset of clear image and sand dust images is finally constructed. Our loss function used is composed of

摘要:ObjectiveThe quality of captured images tends to yellowish color distortion, reduced contrast, and detailed information loss in the sand dust atmosphere due to the suspended particles derived incident light absorption and scattering. The issues of outdoor computer vision systems like video surveillance, video navigation and intelligent transportation are severely constrained. Traditional sand dust image enhancement methods are originated from visual perception based sand dust image enhancement and physical model based sand dust image restoration. The visual perception based method is not restricted of the physical imaging model. The visual quality is based on color correction and contrast enhancement. The recovered image still has insufficient color distortion, image brightness and image contrast. Physical models based sand dust image restoration is related to additional assumptions and less prior robustness, complex parameters calculation. Nowadays, existing deep learning-based sand dust image enhancement methods are migrated from the deep learning based haze images methods. Although these methods has achieved good results for haze image processing, the color of the output image still has different degrees of distortion, and the sharpness of the image is also relatively poor in terms of transferred and enhanced sand dust images. An enhanced convolutional neural network (CNN) method of restored color sand dust images can be used to resolve and improve the issues mentioned above.MethodOur proposed network structure consists of a sand dust color correction subnet and a dust removal enhancement subnet. We illustrated a novel sand dust color correction network structure to improve gray world algorithm. First, the proposed sand dust color correction subnet (SDCC) is used to correct the color cast of the sand dust image. The sand dust image is de-composed into 3 channels of R, G, and B. For each channel, a convolutional layer with a convolution kernel size of 3 is used to conducted, and each feature map is processed to obtain color correction image via gray world algorithm. To enhance the sand dust images quality, a benched color-corrected image is transmitted into the dust removal enhancement subnet in the context of adaptive instance normalized-residual blocks (AIN-ResBlock). The dust removal enhancement subnet takes the sand image and the color correction image as input, and uses the adaptive instance normalization module to adaptively restore the color distortion issues in the feature mapping in the dust removal enhancement subnet, and realizes the image sand removal through the residual block. Our AIN-ResBlock is capable to resolve the blurred details and missing image content for the natural color factors of the restored image. Additionally, in view of the difficulty in obtaining pairs of sand dust images and their corresponding clear images as training samples for deep learning, a sand dust image synthesis method is illustrated based on a physical imaging model. Absorb and scatter light to attenuate, and the attenuation degree of light of different colors is different. We optioned 15 color marks close to the color of the sand dust image, and simulate the sand dust image under 15 different conditions, and a large-scale dataset of clear image and sand dust images is finally constructed. Our loss function used is composed of$ L_1$ $ L_1$ $ e$ $ \bar{γ}$ $ σ$ 关键词:sand dust image;sand dust image enhancement;color correction;adaptive instance normalization residual block;synthetic sand dust image dataset245|446|1更新时间:2024-05-07 -

摘要:ObjectiveVision-based computer systems can be used to process and analyze acquired images and videos in fuzzy weather like rainy, snowy, sleet or foggy. These image quality degradation issues derived from severe weather conditions will significantly distort the image visual quality and reduce the performance of the computer vision system. Hence, it is important to develop computer image deraining automatic processing algorithms. Our research focuses on the issue of single image based removing rain streaks. The traditional image rain removal model is mainly based on the prior information to remove the rain from the image. It regards the rain image as a combination of the rain layer and the background layer, and defines the separation of the rain layer and the background layer by the image deraining task. Due to the existing differences in related to direction, density, and size of rain streaks in rain images, a single image derived de-raining issue is a challenging computer vision task currently. Deep learning has benefited to de-raining images but existing models has challenges like excessive rain removal or insufficient rain removal on complicated images scenario. The high-frequency edge information of some complex images is erased during the rain removal process, or rain components remaining in the rain removal image. We propose this paper proposes the three-dimension attention and Transformer de-raining network (TDATDN) single image rain removal network, which improves the image rain removal network based on the encoder-decoder architecture and integrates 3D attention, Transformer and encoder-decoder take advantages of the structure to enhance the image to the rain effect. Our training dataset consists of 12 000 pairs of training images (including three types of rain images with different rain densities), and 1 200 test set images are used to test the rain removal effect. The input image size is scaled to 256×256 for training and testing. Adam optimizer is used for training and learning. The initial learning rate is set to 1×10-4, and its network epoch number is 100. The learning rate is multiplied by 0.5 when reach 15 times.MethodOur method melts the three-dimension attention mechanism into the residual dense block structure to resolve the challenge of high-dimensional feature fusion via the residual dense block channel. Then, our proposed three-dimension attention residual dense block as the backbone network to build an encoder-decoder-based architecture image de-raining network, and uses Transformer mechanism to calculate the global contextual relevance of the deep semantic information of the network. The Transformer obtained self-attention feature encoding by is up-sampling operation based on the decoder structure image restoration path. To obtain a rain removal result with richer high-frequency details the up-sampling operation obtains the feature map of the image is spliced in the channel direction with the corresponding encoder-based feature map. For the image high-frequency information loss and the structure information is erased in the rain removal process, our problem solving combines the multi-scale structure similarity loss with the commonly used image de-raining loss function to improve the training of the de-raining network.ResultOur TDATDN network is demonstrated on the Rain12000 rain streaks dataset. Among them, the peak signal to noise ratio (PSNR) reached 33.01 dB, and the structural similarity (SSIM) reached 0.927 8. A comparative experiment was carried out to verify the fusion algorithm results. The result of the comparative experiment illustrated that our algorithm has its priority to improve the effect of a single image oriented rain removing.ConclusionOur image de-raining network takes the advantages of 3D attention mechanism, Transformer and encoder-decoder architecture into account.关键词:single image deraining;convolutional neural network(CNN);Transformer;3D attention;U-Net175|280|4更新时间:2024-05-07