最新刊期

卷 27 , 期 2 , 2022

-

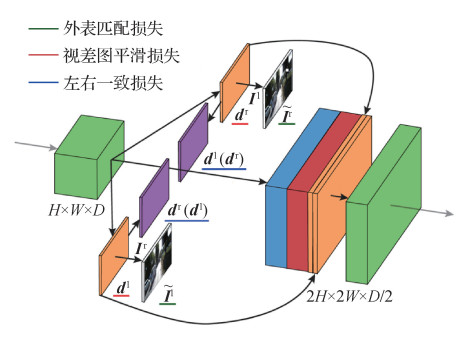

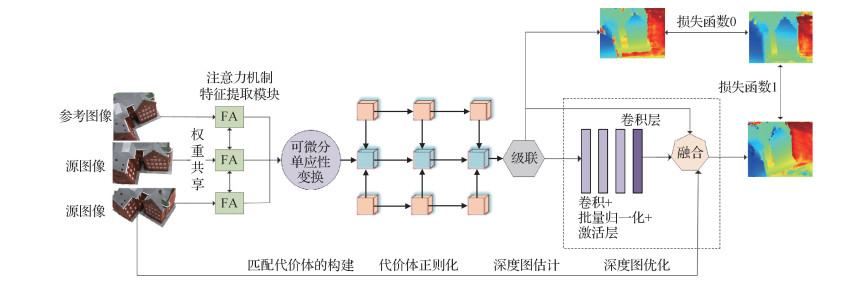

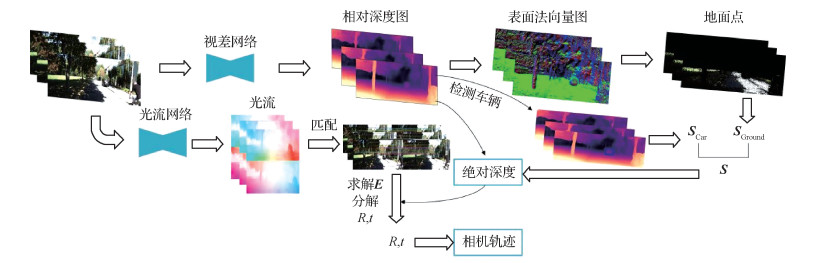

摘要:Scene depth estimation is one of the key issues in the field of computer vision and an important aspect in the applications such as 3D reconstruction and image synthesis. Monocular depth estimation techniques based on deep learning have developed fast recently. Differentiated network structures have been proposed gradually. The current development of monocular depth estimation techniques based on deep learning and a categorical review of supervised and unsupervised learning-based methods have been illustrated in terms of the characteristics of the network structures. The supervised learning methods have been segmented as following: 1) Multi-scale feature fusion strategies: Different scales images contain different kinds of information via fusing multi-scale features extracted from the images. The demonstrated results of depth estimation can be effectively improved. 2)Conditional random fields (CRFs): CRFs, as one of probabilistic graphical models, have good performance in the field of semantic segmentation. Since depth information has similar data distribution attributes as semantic information, the use of consistent CRFs can be effective for predicting continuous depth values. CRFs can be operated as the loss function in the final part of the network as well as a feature fusion module in the medium layer of the network due to its effectiveness for fuse features. 3)Ordinal relations: One category is the relative depth estimation method which uses ordinal relation straight forward to estimate the relative position of two pixels in the image. The other category defines the depth estimation as an ordinal regression issue, which needs to discretize the continuous depth values into discrete depth labels and perform multi-class classification for the global depth. 4) Multiple image information: It is beneficial to combining various image information in depth estimation to improve the accuracy of depth estimation results whereas the image information of different dimensions (time, space, semantics, etc.) can be implicitly related to the depth of the image scene. Four types of information are often adopted: semantic information, neighborhood information, temporal information and object boundary information. 5)Miscellaneous strategies: Some other supervised learning methods still cannot be easily classified into the above-mentioned methods. 6) Various optimization strategies: Acquiring efficiency optimization, using synthetic data obtained via image style transfer for domain adaptation, and the hardware-oriented optimization for underwater scene depth estimation. The unsupervised learning methods of scene depth estimation are classified as below: 1) Stereo vision: Stereo vision aims to deduce the depth information of each pixel in the image from two or more images. Conventional binocular stereo vision algorithm is based on the stereo disparity, and can reconstruct the three-dimensional geometric information of surrounding scenery from the images captured by two camera sensors in terms of the principle of trigonometry. Researchers transform the depth estimation into an image reconstruction, and unsupervised depth estimation method is realized based on binocular (or multi-ocular) images and predicted disparity maps. 2) Structure from motion (SfM): SfM is a technique that automatically recovers camera parameters and the 3D structure of a scene from multiple images or video sequences. The unsupervised method based on SfM has its similarity to the unsupervised method based on stereo vision. It also transforms the depth estimation into the image reconstruction, but there are many differences in details. First, the SfM-based image reconstruction unsupervised processing method is generally using successive frames, that is, the image of the current frame is used to reconstruct the image of the previous or the next frame. Therefore, this kind of method uses image sequence generally-video as the training data. Second, the unsupervised method based on SfM needs to introduce a module for camera pose estimation in the training process. 3) Adversarial strategies: Generative adversarial networks (GANs) facilitate many imaging tasks with their powerful performance, where a discriminator can judge the results generated by the generator to force the generator to produce the same results as the labels. Adding discriminators to unsupervised learning networks can be effective in improving depth estimation results by optimizing image reconstruction results. 4) Ordinal relationship: Similar to the ordinal regression approach that utilizes ordinal relationships in the supervised learning methods, discrete disparity estimation is also desirable in unsupervised networks. In view of the fact that discrete depth values achieve more robust and sharper depth estimates than conventional regression predictions, discrete operations are equally effective in unsupervised networks. 5) Uncertainty: Since unsupervised learning does not use ground truth depth values, the depth results predicted is in doubt. From this viewpoint, it has been proposed to use the uncertainty of the prediction results of unsupervised methods as a benchmark for judging whether the prediction results are credible, and the results can be optimized in monocular depth estimation tasks. Meanwhile, this review refers to the NYU dataset, Karlsruhe Institute Technology and Toyota Technological Institute at Chicago (KITTI) dataset, Make3D dataset and Cityscapes dataset, which are mainly used in monocular deep estimation tasks, as well as six commonly-used evaluation metrics. Based on these datasets and evaluation metrics, a comparison among the reviewed methods is illustrated. Finally, the review discusses the current status of deep learning-based monocular depth estimation techniques in terms of accuracy, generalizability, application scenarios and uncertainty studies in unsupervised networks.关键词:deep learning;monocular depth estimation;supervised learning;unsupervised learning;multi-scale feature fusion;ordinal relationship;stereo vision775|1132|4更新时间:2024-05-07

摘要:Scene depth estimation is one of the key issues in the field of computer vision and an important aspect in the applications such as 3D reconstruction and image synthesis. Monocular depth estimation techniques based on deep learning have developed fast recently. Differentiated network structures have been proposed gradually. The current development of monocular depth estimation techniques based on deep learning and a categorical review of supervised and unsupervised learning-based methods have been illustrated in terms of the characteristics of the network structures. The supervised learning methods have been segmented as following: 1) Multi-scale feature fusion strategies: Different scales images contain different kinds of information via fusing multi-scale features extracted from the images. The demonstrated results of depth estimation can be effectively improved. 2)Conditional random fields (CRFs): CRFs, as one of probabilistic graphical models, have good performance in the field of semantic segmentation. Since depth information has similar data distribution attributes as semantic information, the use of consistent CRFs can be effective for predicting continuous depth values. CRFs can be operated as the loss function in the final part of the network as well as a feature fusion module in the medium layer of the network due to its effectiveness for fuse features. 3)Ordinal relations: One category is the relative depth estimation method which uses ordinal relation straight forward to estimate the relative position of two pixels in the image. The other category defines the depth estimation as an ordinal regression issue, which needs to discretize the continuous depth values into discrete depth labels and perform multi-class classification for the global depth. 4) Multiple image information: It is beneficial to combining various image information in depth estimation to improve the accuracy of depth estimation results whereas the image information of different dimensions (time, space, semantics, etc.) can be implicitly related to the depth of the image scene. Four types of information are often adopted: semantic information, neighborhood information, temporal information and object boundary information. 5)Miscellaneous strategies: Some other supervised learning methods still cannot be easily classified into the above-mentioned methods. 6) Various optimization strategies: Acquiring efficiency optimization, using synthetic data obtained via image style transfer for domain adaptation, and the hardware-oriented optimization for underwater scene depth estimation. The unsupervised learning methods of scene depth estimation are classified as below: 1) Stereo vision: Stereo vision aims to deduce the depth information of each pixel in the image from two or more images. Conventional binocular stereo vision algorithm is based on the stereo disparity, and can reconstruct the three-dimensional geometric information of surrounding scenery from the images captured by two camera sensors in terms of the principle of trigonometry. Researchers transform the depth estimation into an image reconstruction, and unsupervised depth estimation method is realized based on binocular (or multi-ocular) images and predicted disparity maps. 2) Structure from motion (SfM): SfM is a technique that automatically recovers camera parameters and the 3D structure of a scene from multiple images or video sequences. The unsupervised method based on SfM has its similarity to the unsupervised method based on stereo vision. It also transforms the depth estimation into the image reconstruction, but there are many differences in details. First, the SfM-based image reconstruction unsupervised processing method is generally using successive frames, that is, the image of the current frame is used to reconstruct the image of the previous or the next frame. Therefore, this kind of method uses image sequence generally-video as the training data. Second, the unsupervised method based on SfM needs to introduce a module for camera pose estimation in the training process. 3) Adversarial strategies: Generative adversarial networks (GANs) facilitate many imaging tasks with their powerful performance, where a discriminator can judge the results generated by the generator to force the generator to produce the same results as the labels. Adding discriminators to unsupervised learning networks can be effective in improving depth estimation results by optimizing image reconstruction results. 4) Ordinal relationship: Similar to the ordinal regression approach that utilizes ordinal relationships in the supervised learning methods, discrete disparity estimation is also desirable in unsupervised networks. In view of the fact that discrete depth values achieve more robust and sharper depth estimates than conventional regression predictions, discrete operations are equally effective in unsupervised networks. 5) Uncertainty: Since unsupervised learning does not use ground truth depth values, the depth results predicted is in doubt. From this viewpoint, it has been proposed to use the uncertainty of the prediction results of unsupervised methods as a benchmark for judging whether the prediction results are credible, and the results can be optimized in monocular depth estimation tasks. Meanwhile, this review refers to the NYU dataset, Karlsruhe Institute Technology and Toyota Technological Institute at Chicago (KITTI) dataset, Make3D dataset and Cityscapes dataset, which are mainly used in monocular deep estimation tasks, as well as six commonly-used evaluation metrics. Based on these datasets and evaluation metrics, a comparison among the reviewed methods is illustrated. Finally, the review discusses the current status of deep learning-based monocular depth estimation techniques in terms of accuracy, generalizability, application scenarios and uncertainty studies in unsupervised networks.关键词:deep learning;monocular depth estimation;supervised learning;unsupervised learning;multi-scale feature fusion;ordinal relationship;stereo vision775|1132|4更新时间:2024-05-07 -

摘要:A sharp increase in point cloud data past decade, which has facilitated to point cloud data processing algorithms. Point cloud registration is the process of converting point cloud data in two or more camera coordinate systems to the world coordinate system to complete the stitching process. In respect of 3D reconstruction, scanning equipment is used to obtain partial information of the scene in common, and the whole scene is reconstructed based on point cloud registration. In respect of high-precision map and positioning, the local point clouds fragments obtained in driving vehicle are registered to the scene map in advance to complete the high-precision positioning of the vehicle. In addition, point cloud registration is also widely used in pose estimation, robotics, medical and other fields. In the real-world point cloud data collection process, there are a lot of noise, abnormal points and low overlap, which brings great challenges to traditional methods. Currently, deep learning has been widely used in the field of point cloud registration and has achieved remarkable results. In order to solve the limitations of traditional methods, some researchers have developed some point cloud registration methods integrated with deep learning technology, which is called deep point cloud registration. First of all, this analysis distinguishes the current deep learning point cloud registration methods according to the presence or absence of correspondence, which is divided into correspondence-free registration and point cloud registration based on correspondence. The main functions of various methods are classified as follows: 1) geometric feature extraction; 2) key point detection; 3) outlier removal; 4)pose estimation; and 5) end-to-end registration. The geometric feature extraction module aims to learn the coding method of the local geometric structure of the point cloud to generate discriminative features based on the network. Key point detection is used to detect points that are essential to the registration task in a large number of input points, and eliminate potential outliers while reducing computational complexity. Point-to-outliers are the final checking step before estimating the motion parameters to ensure the accuracy and efficiency of the solution. In the correspondence-free point cloud registration, a network structure similar to PointNet is used to obtain the global features of the perceived point cloud pose, and the rigid transformation parameters are estimated from the global features. In the performance of evaluation, the feature matching and registration error performance evaluation indicators are illustrated in detail. Feature matching performance metrics mainly include inlier ratio(IR) and feature matching recall(FMR). Registration error performance metrics include root mean square error(RMSE), mean square error(MSE), and mean Absolute error(MAE), relative translation error(RTE), relative rotation error(RRE), chamfer distance(CD) and registration recall(RR). RMSE, MSE and MAE are the most widely used metrics, but they have the disadvantage of Anisotropic. Isotropic RRE and RTE are indicators that actually measure the differences amongst the angle, the translation distance. The above five metrics all have inequal penalties for the registration of axisymmetric point clouds, and CD is the most fair metric. Meanwhile, real data sets registration tends to focus on the success rate of registration. With respect of real data sets, this research provides comparative data for feature matching and outlier removal. In the synthetic data set, this demonstration presents the comparative data of related methods in partial overlap, realtime, and global registration scenarios. At the end, the future research is from the current challenges in this field. 1) The application scenarios faced by point cloud registration are diverse, and it is difficult to develop general algorithms. Therefore, lightweight and efficient dedicated modules are more popular. 2) By dividing the overlap area, partial overlap can be converted into no overlap problem. This method is expected to lift the restrictions on the overlap rate requirements and fundamentally solve the problem of overlapping point cloud registration, so it has greater application value and prospects. 3) Most mainstream methods use multilayer perceptrons(MLPs) to learn saliency from data. 4) Some researchers introduced the random sample consensus(RANSAC) algorithm idea into the neural network, and achieved advanced results, but also led to higher complexity. Therefore, the balance performance and complexity is an issue to be considered in this sub-field. 5) The correspondence-free registration method is based on learning global features related to poses. The global features extracted by existing methods are more sensitive to noise and partial overlap, which is mainly caused by the fusion of some messy information in the global features. Meanwhile, the correspondence-free method has not been widely used in real data, and its robustness is still questioned by some researchers. Robust extraction of the global features for pose perception is also one of the main research issues further.关键词:point cloud registration;deep learning;registration without corresponding;end-to-end registration;correspondence;geometric feature extraction;outliers removal;review250|401|10更新时间:2024-05-07

摘要:A sharp increase in point cloud data past decade, which has facilitated to point cloud data processing algorithms. Point cloud registration is the process of converting point cloud data in two or more camera coordinate systems to the world coordinate system to complete the stitching process. In respect of 3D reconstruction, scanning equipment is used to obtain partial information of the scene in common, and the whole scene is reconstructed based on point cloud registration. In respect of high-precision map and positioning, the local point clouds fragments obtained in driving vehicle are registered to the scene map in advance to complete the high-precision positioning of the vehicle. In addition, point cloud registration is also widely used in pose estimation, robotics, medical and other fields. In the real-world point cloud data collection process, there are a lot of noise, abnormal points and low overlap, which brings great challenges to traditional methods. Currently, deep learning has been widely used in the field of point cloud registration and has achieved remarkable results. In order to solve the limitations of traditional methods, some researchers have developed some point cloud registration methods integrated with deep learning technology, which is called deep point cloud registration. First of all, this analysis distinguishes the current deep learning point cloud registration methods according to the presence or absence of correspondence, which is divided into correspondence-free registration and point cloud registration based on correspondence. The main functions of various methods are classified as follows: 1) geometric feature extraction; 2) key point detection; 3) outlier removal; 4)pose estimation; and 5) end-to-end registration. The geometric feature extraction module aims to learn the coding method of the local geometric structure of the point cloud to generate discriminative features based on the network. Key point detection is used to detect points that are essential to the registration task in a large number of input points, and eliminate potential outliers while reducing computational complexity. Point-to-outliers are the final checking step before estimating the motion parameters to ensure the accuracy and efficiency of the solution. In the correspondence-free point cloud registration, a network structure similar to PointNet is used to obtain the global features of the perceived point cloud pose, and the rigid transformation parameters are estimated from the global features. In the performance of evaluation, the feature matching and registration error performance evaluation indicators are illustrated in detail. Feature matching performance metrics mainly include inlier ratio(IR) and feature matching recall(FMR). Registration error performance metrics include root mean square error(RMSE), mean square error(MSE), and mean Absolute error(MAE), relative translation error(RTE), relative rotation error(RRE), chamfer distance(CD) and registration recall(RR). RMSE, MSE and MAE are the most widely used metrics, but they have the disadvantage of Anisotropic. Isotropic RRE and RTE are indicators that actually measure the differences amongst the angle, the translation distance. The above five metrics all have inequal penalties for the registration of axisymmetric point clouds, and CD is the most fair metric. Meanwhile, real data sets registration tends to focus on the success rate of registration. With respect of real data sets, this research provides comparative data for feature matching and outlier removal. In the synthetic data set, this demonstration presents the comparative data of related methods in partial overlap, realtime, and global registration scenarios. At the end, the future research is from the current challenges in this field. 1) The application scenarios faced by point cloud registration are diverse, and it is difficult to develop general algorithms. Therefore, lightweight and efficient dedicated modules are more popular. 2) By dividing the overlap area, partial overlap can be converted into no overlap problem. This method is expected to lift the restrictions on the overlap rate requirements and fundamentally solve the problem of overlapping point cloud registration, so it has greater application value and prospects. 3) Most mainstream methods use multilayer perceptrons(MLPs) to learn saliency from data. 4) Some researchers introduced the random sample consensus(RANSAC) algorithm idea into the neural network, and achieved advanced results, but also led to higher complexity. Therefore, the balance performance and complexity is an issue to be considered in this sub-field. 5) The correspondence-free registration method is based on learning global features related to poses. The global features extracted by existing methods are more sensitive to noise and partial overlap, which is mainly caused by the fusion of some messy information in the global features. Meanwhile, the correspondence-free method has not been widely used in real data, and its robustness is still questioned by some researchers. Robust extraction of the global features for pose perception is also one of the main research issues further.关键词:point cloud registration;deep learning;registration without corresponding;end-to-end registration;correspondence;geometric feature extraction;outliers removal;review250|401|10更新时间:2024-05-07 -

摘要:As a 3D representation, point cloud is widely used and brings many challenges to point cloud processing. One of the tasks worth studying is point cloud registration that aims to register multiple point clouds correctly to the same coordinate and form a more complete point cloud. Point cloud registration should deal with the unstructured, uneven, noise, and other interference of the point cloud. It needs a shorter time consumption and achieves a higher accuracy. However, time consumption and precision are often contradictory, but it is optimized to a certain extent is possible. Point cloud registration is widely used in fields such as 3D reconstruction, parameter evaluation, positioning, and posture estimation. Autonomous driving, robotics, augmented reality, and others applications also involve the cloud registration technology. For this reason, various ingenious point cloud registration methods have been developed by researchers. In this paper, several representative point cloud registration methods are sorted out and summarized as a review. Compared with related work, this paper tries to cover various forms of point cloud registration and analyzes the details of several methods. It summarizes the existing methods into nonlearning methods and learning-based methods. Nonlearning methods are divided into classical methods and feature-based methods. Among them, the classic methods include iterative closest point and its variants, normal distributions transform and its variants, and 4-points congruent sets and its variants. Iterative closest point and normal distributions transform and their variants are classical fine registration methods and can achieve a high accuracy, but need a good initial pose. The 4-points congruent sets and its variants are classical coarse registration methods, do not need an initial pose, and can be used as the initial pose for fine registration after this coarse registration. For feature based algorithms, the methods of feature detection, feature description, and feature matching are introduced. They are the main process of a typical point cloud registration method in addition to other steps such as preprocessing of point cloud and calculation and verification of transformation matrix. The features are divided into point-based features, line-based features, surface-based features, and texture-based features. For different features, feature detection, description, and matching are also different, but none of them need an initial position. In addition to registration, these features can also be used for point cloud segmentation, recognition, and other tasks. Similarly, learning-based methods are subdivided into two types: partial learning methods that combine nonlearning components and purely end-to-end learning methods. The partial learning method replaces several components in the nonlearning method with learning-based components and exerts the high speed and high reliability of the learning method, which can bring great improvement to the nonlearning method. This method can also use several learning components designed for other tasks and provide learning components designed for registration tasks for other tasks; thus, it has a high flexibility. Many of these methods are similar to feature-based nonlearning methods and are feature based. However, several methods learn to segment point clouds and then use iterative closest point or normal distributions transform for registration. These partial learning methods have great flexibility, but the data required by partial learning methods are not easy to obtain, and verifying the learning results of partial learning is not easy. The end-to-end learning methods are more convenient to learn, its training data are easier to obtain, and the learning results are easier to verify. The end-to-end method also has a great advantage in speed, which can make full use of the computing power of the graphics processing unit(GPU). Nonlearning methods have lower hardware requirements, are easier to implement, and do not require training. They may not have an advantage in computing speed under the same registration performance, whereas learning-based methods can learn more advanced features in the point cloud, which is very helpful for improving the registration performance but depends on the diversity of the data set and the more advanced deep learning structure. The details of several typical algorithms for each method are introduced, and then the characteristics of these algorithms are compared and summarized. The performance of point cloud registration algorithms is constantly improving, but more point cloud application scenarios also entail higher requirements for point cloud registration, such as the requirements for real-time performance and the effectiveness of noise and lack or repetitive features, and robustness when dealing with unstable point clouds of multiple moving objects. Point cloud registration technology is still a worthy research direction. Point cloud registration technology will inevitably continue to make breakthroughs in speed, precision, and accuracy, and serve more applications.关键词:point cloud;registration;feature;deep learning;review454|177|29更新时间:2024-05-07

摘要:As a 3D representation, point cloud is widely used and brings many challenges to point cloud processing. One of the tasks worth studying is point cloud registration that aims to register multiple point clouds correctly to the same coordinate and form a more complete point cloud. Point cloud registration should deal with the unstructured, uneven, noise, and other interference of the point cloud. It needs a shorter time consumption and achieves a higher accuracy. However, time consumption and precision are often contradictory, but it is optimized to a certain extent is possible. Point cloud registration is widely used in fields such as 3D reconstruction, parameter evaluation, positioning, and posture estimation. Autonomous driving, robotics, augmented reality, and others applications also involve the cloud registration technology. For this reason, various ingenious point cloud registration methods have been developed by researchers. In this paper, several representative point cloud registration methods are sorted out and summarized as a review. Compared with related work, this paper tries to cover various forms of point cloud registration and analyzes the details of several methods. It summarizes the existing methods into nonlearning methods and learning-based methods. Nonlearning methods are divided into classical methods and feature-based methods. Among them, the classic methods include iterative closest point and its variants, normal distributions transform and its variants, and 4-points congruent sets and its variants. Iterative closest point and normal distributions transform and their variants are classical fine registration methods and can achieve a high accuracy, but need a good initial pose. The 4-points congruent sets and its variants are classical coarse registration methods, do not need an initial pose, and can be used as the initial pose for fine registration after this coarse registration. For feature based algorithms, the methods of feature detection, feature description, and feature matching are introduced. They are the main process of a typical point cloud registration method in addition to other steps such as preprocessing of point cloud and calculation and verification of transformation matrix. The features are divided into point-based features, line-based features, surface-based features, and texture-based features. For different features, feature detection, description, and matching are also different, but none of them need an initial position. In addition to registration, these features can also be used for point cloud segmentation, recognition, and other tasks. Similarly, learning-based methods are subdivided into two types: partial learning methods that combine nonlearning components and purely end-to-end learning methods. The partial learning method replaces several components in the nonlearning method with learning-based components and exerts the high speed and high reliability of the learning method, which can bring great improvement to the nonlearning method. This method can also use several learning components designed for other tasks and provide learning components designed for registration tasks for other tasks; thus, it has a high flexibility. Many of these methods are similar to feature-based nonlearning methods and are feature based. However, several methods learn to segment point clouds and then use iterative closest point or normal distributions transform for registration. These partial learning methods have great flexibility, but the data required by partial learning methods are not easy to obtain, and verifying the learning results of partial learning is not easy. The end-to-end learning methods are more convenient to learn, its training data are easier to obtain, and the learning results are easier to verify. The end-to-end method also has a great advantage in speed, which can make full use of the computing power of the graphics processing unit(GPU). Nonlearning methods have lower hardware requirements, are easier to implement, and do not require training. They may not have an advantage in computing speed under the same registration performance, whereas learning-based methods can learn more advanced features in the point cloud, which is very helpful for improving the registration performance but depends on the diversity of the data set and the more advanced deep learning structure. The details of several typical algorithms for each method are introduced, and then the characteristics of these algorithms are compared and summarized. The performance of point cloud registration algorithms is constantly improving, but more point cloud application scenarios also entail higher requirements for point cloud registration, such as the requirements for real-time performance and the effectiveness of noise and lack or repetitive features, and robustness when dealing with unstable point clouds of multiple moving objects. Point cloud registration technology is still a worthy research direction. Point cloud registration technology will inevitably continue to make breakthroughs in speed, precision, and accuracy, and serve more applications.关键词:point cloud;registration;feature;deep learning;review454|177|29更新时间:2024-05-07 -

摘要:Simultaneous localization and mapping (SLAM) technology is widely used in mobile robot applications, and it focuses on the robot's motion state estimation issue and reconstructing the environment model (map) at the same time. The SLAM science community has promoted the technique to be deployed in various applications in real life nowadays, such as virtual reality, augmented reality, autonomous driving, service robots, etc. In complicated scenarios, SLAM systems empowered with single sensor such as a camera or light detection and ranging(LiDAR) often fail to customize the targeted applications due to the deficiency of accuracy and robustness. Current research analyses have gradually improved SLAM solutions based on multi-sensors, multiple feature primitives, and the integration of multi-dimensional information. This research reviews current methods in the multi-source fusion SLAM realm at three scales: multi-sensor fusion (hybrid system with two or more kinds of sensors such as camera, LiDAR and inertial measurement unit (IMU), and combination methods can be divided into two categories(the loosely-coupled and the tightly-coupled), multi-feature-primitive fusion (point, line, plane, other high-dimensional geometric features, and the featureless direct-based method) and multi-dimensional information fusion (geometric information, semantic information, physical information, and inferred information from deep neural networks). The challenges and future research of multi-source fusion SLAM has been predicted as well. Multi-source fusion systems can implement accurate and robust state estimation and mapping, which can meet the requirements in a wider variety of applications. For instance, the fusion of vision and inertial sensors can illustrate the drift and scale missing issue of visual odometry, while the fusion of LiDAR and inertial measurement unit can improve the system's robustness, especially in unstructured or degraded scenes. The fusion of other sensors, such as sonar, radar and GPS(global positioning system) can extend the applicability further. In addition, the fusion of diverse geometric feature primitives such as feature points, lines, curves, planes, curved surfaces, cubes, and featureless direct-based methods can greatly deduct the degree of valid constraints, which is of great importance for state estimation systems. The reconstructed environmental map with multiple feature primitives is informative in autonomous navigation tasks. Furthermore, data-driven deep-learning-based synthesized analysis in the context of probabilistic model-based methods paves a new path to overcome the challenges of the initial SLAM systems. The learning-based methods (supervised learning, unsupervised learning, and hybrid supervised learning) are gradually applied to various modules of the SLAM system, including relative pose regression, map representation, loop closure detection, and unrolled back-end optimization, etc. Learning-based methods will benefit the performance of SLAM more with more cutting-edge research to fill the gap amongst networks and various original methods. This demonstration is shown as following: 1) The analysis of funder mental mechanisms of multi-sensor fusion and current multi-sensor fusion methods are illustrated; 2) Multi-feature primitive fusion and multi-dimensional information fusion are demonstrated; 3) The current difficulties and challenges of multi-source fusion towards SLAM have been issued; 4) The executive summary has been implemented at the end.关键词:simultaneous localization and mapping(SLAM);multi-source fusion;multi-sensor fusion;multi-feature fusion;multi-dimension information fusion517|689|13更新时间:2024-05-07

摘要:Simultaneous localization and mapping (SLAM) technology is widely used in mobile robot applications, and it focuses on the robot's motion state estimation issue and reconstructing the environment model (map) at the same time. The SLAM science community has promoted the technique to be deployed in various applications in real life nowadays, such as virtual reality, augmented reality, autonomous driving, service robots, etc. In complicated scenarios, SLAM systems empowered with single sensor such as a camera or light detection and ranging(LiDAR) often fail to customize the targeted applications due to the deficiency of accuracy and robustness. Current research analyses have gradually improved SLAM solutions based on multi-sensors, multiple feature primitives, and the integration of multi-dimensional information. This research reviews current methods in the multi-source fusion SLAM realm at three scales: multi-sensor fusion (hybrid system with two or more kinds of sensors such as camera, LiDAR and inertial measurement unit (IMU), and combination methods can be divided into two categories(the loosely-coupled and the tightly-coupled), multi-feature-primitive fusion (point, line, plane, other high-dimensional geometric features, and the featureless direct-based method) and multi-dimensional information fusion (geometric information, semantic information, physical information, and inferred information from deep neural networks). The challenges and future research of multi-source fusion SLAM has been predicted as well. Multi-source fusion systems can implement accurate and robust state estimation and mapping, which can meet the requirements in a wider variety of applications. For instance, the fusion of vision and inertial sensors can illustrate the drift and scale missing issue of visual odometry, while the fusion of LiDAR and inertial measurement unit can improve the system's robustness, especially in unstructured or degraded scenes. The fusion of other sensors, such as sonar, radar and GPS(global positioning system) can extend the applicability further. In addition, the fusion of diverse geometric feature primitives such as feature points, lines, curves, planes, curved surfaces, cubes, and featureless direct-based methods can greatly deduct the degree of valid constraints, which is of great importance for state estimation systems. The reconstructed environmental map with multiple feature primitives is informative in autonomous navigation tasks. Furthermore, data-driven deep-learning-based synthesized analysis in the context of probabilistic model-based methods paves a new path to overcome the challenges of the initial SLAM systems. The learning-based methods (supervised learning, unsupervised learning, and hybrid supervised learning) are gradually applied to various modules of the SLAM system, including relative pose regression, map representation, loop closure detection, and unrolled back-end optimization, etc. Learning-based methods will benefit the performance of SLAM more with more cutting-edge research to fill the gap amongst networks and various original methods. This demonstration is shown as following: 1) The analysis of funder mental mechanisms of multi-sensor fusion and current multi-sensor fusion methods are illustrated; 2) Multi-feature primitive fusion and multi-dimensional information fusion are demonstrated; 3) The current difficulties and challenges of multi-source fusion towards SLAM have been issued; 4) The executive summary has been implemented at the end.关键词:simultaneous localization and mapping(SLAM);multi-source fusion;multi-sensor fusion;multi-feature fusion;multi-dimension information fusion517|689|13更新时间:2024-05-07 -

摘要:The development of computer technology promotes the development of computer vision. Nowadays, more researchers focus on the field of 3D vision while monocular depth estimation is one of the basic tasks of 3D vision. Depth estimation from a single image is a critical technology for obtaining scene depth information. This technology has important research value because it has potential applications in intelligent vehicles, robot positioning, and other fields. Compared with traditional depth acquisition methods, monocular depth estimation based on deep learning has the advantages of low cost and simple operation. With the development of deep learning technology, many studies on monocular depth estimation based on deep learning have emerged in recent years, and the performance of monocular depth estimation has made great progress. The monocular depth estimation model needs a large a large amount of data to train the model. The commonly used training data types include RGB and depth (RGB-D) image pairs, stereo image pairs, and image sequences. The depth estimation model training by RGB-D images first extracts the image features through convolutional neural network and then predicts the depth map by using the method of continuous depth value regression. After predicting the depth map, several models use conditional random fields or other methods to optimize the depth map. Unsupervised learning is often used to train the monocular depth estimation model when the training data types are stereo image pairs and image sequences. The monocular estimation model training by stereo image pairs first predicts the disparity map and then estimates depth by using the disparity map. When an image sequence is used to train the model, the model first predicts the depth map of an image in the image sequence, and then the depth estimation model is optimized by images reconstructed by the depth map and other images in the sequence. To improve the accuracy of depth estimation, several researchers use semantic tags, depth range, and other auxiliary information to optimize depth maps. Several data sets can be used for multiple computer vision tasks such as depth estimation and semantic segmentation. Several researchers improve the accuracy of depth estimation by learning depth estimation and semantic segmentation model jointly because depth estimation has a strong correlation with semantic segmentation. When establishing the depth estimation data set, depth camera or light laser detection and ranging (LiDAR) is used to obtain the scene depth. Depth camera and LiDAR are based on the principle that light and other propagation media will reflect when they encounter objects. The depth range obtained by depth cameras and LiDAR is fixed because the propagation medium is dissipated in the transmission, and depth cameras and LiDAR cannot measure depth while the propagation medium energy is very small. Several models first divide the depth range into several depth intervals, take the median value of the depth interval as the depth value of the interval, and then use the method of multiple classifications to predict the depth map. Different training data types not only result in different network model structures but also affect the accuracy of depth estimation. In this review, the current monocular depth estimation methods based on deep learning are surveyed from the perspective of the training data type used by the monocular depth estimation model. Moreover, the single-image training model, the multi-image training model, and the monocular depth estimation model of auxiliary information optimization training are separately discussed. Furthermore, the latest research status of monocular depth estimation is systematically analyzed, and the advantages and disadvantages of various methods are discussed. Finally, the future research trends of monocular depth estimation are summarized.关键词:monocular vision;scene perception;deep learning;3D reconstruction;depth estimation247|154|4更新时间:2024-05-07

摘要:The development of computer technology promotes the development of computer vision. Nowadays, more researchers focus on the field of 3D vision while monocular depth estimation is one of the basic tasks of 3D vision. Depth estimation from a single image is a critical technology for obtaining scene depth information. This technology has important research value because it has potential applications in intelligent vehicles, robot positioning, and other fields. Compared with traditional depth acquisition methods, monocular depth estimation based on deep learning has the advantages of low cost and simple operation. With the development of deep learning technology, many studies on monocular depth estimation based on deep learning have emerged in recent years, and the performance of monocular depth estimation has made great progress. The monocular depth estimation model needs a large a large amount of data to train the model. The commonly used training data types include RGB and depth (RGB-D) image pairs, stereo image pairs, and image sequences. The depth estimation model training by RGB-D images first extracts the image features through convolutional neural network and then predicts the depth map by using the method of continuous depth value regression. After predicting the depth map, several models use conditional random fields or other methods to optimize the depth map. Unsupervised learning is often used to train the monocular depth estimation model when the training data types are stereo image pairs and image sequences. The monocular estimation model training by stereo image pairs first predicts the disparity map and then estimates depth by using the disparity map. When an image sequence is used to train the model, the model first predicts the depth map of an image in the image sequence, and then the depth estimation model is optimized by images reconstructed by the depth map and other images in the sequence. To improve the accuracy of depth estimation, several researchers use semantic tags, depth range, and other auxiliary information to optimize depth maps. Several data sets can be used for multiple computer vision tasks such as depth estimation and semantic segmentation. Several researchers improve the accuracy of depth estimation by learning depth estimation and semantic segmentation model jointly because depth estimation has a strong correlation with semantic segmentation. When establishing the depth estimation data set, depth camera or light laser detection and ranging (LiDAR) is used to obtain the scene depth. Depth camera and LiDAR are based on the principle that light and other propagation media will reflect when they encounter objects. The depth range obtained by depth cameras and LiDAR is fixed because the propagation medium is dissipated in the transmission, and depth cameras and LiDAR cannot measure depth while the propagation medium energy is very small. Several models first divide the depth range into several depth intervals, take the median value of the depth interval as the depth value of the interval, and then use the method of multiple classifications to predict the depth map. Different training data types not only result in different network model structures but also affect the accuracy of depth estimation. In this review, the current monocular depth estimation methods based on deep learning are surveyed from the perspective of the training data type used by the monocular depth estimation model. Moreover, the single-image training model, the multi-image training model, and the monocular depth estimation model of auxiliary information optimization training are separately discussed. Furthermore, the latest research status of monocular depth estimation is systematically analyzed, and the advantages and disadvantages of various methods are discussed. Finally, the future research trends of monocular depth estimation are summarized.关键词:monocular vision;scene perception;deep learning;3D reconstruction;depth estimation247|154|4更新时间:2024-05-07

Review

-

摘要:ObjectiveIntrinsic decomposition is a key problem in computer vision and graphics applications. It aims at separating lighting effects and material-oriented characteristics of object surfaces of the depicted scene within the image. Intrinsic decomposition from a single input image is highly ill-posed since the amount of unknowns is twice of the known values. Most classical approaches model intrinsic decomposition task with handcrafted priors to generate reasonable decomposition results. But they perform poorly in complicated scenarios as the prior knowledge is too limited to model complicated light-material interactions in real-world scenes. Deep neural network based methods can automatically learn the knowledge from data to avoid using handcrafted priors to model the task. However, due to the dependency on training datasets, the performance of current deep learning based methods is still limited because of various constraints in the current intrinsic datasets. Moreover, the learned networks tend to suffer from poor generalization once there is a large difference between the training and target domain. Another issue of deep neural network based methods is that the limited receptive field probably constrains the ability of the models to exploit the non-local information in the intrinsic component prediction process.MethodA graph convolution based module is designed to fully utilize the non-local cues within the input feature space. The module takes a feature map as input and outputs a feature map with same resolution as the input feature map. For producing the output feature vector for each position, the module uses information that includes the feature of itself, the information extracted from the local neighborhood and the information aggregated from the non-local neighbors that are likely to be very distant. The full intrinsic decomposition framework is constructed by integrating the devised non-local feature learning module into a U-Net network. In addition, to improve the piece-wise smoothness of the produced albedo results, we incorporate a neural network based image refinement module into the full pipeline, which is able to adaptively remove unnecessary artifacts while preserving structural information within the scenes depicted in input images. Simultaneously, there are noticeable limitations in existing intrinsic image datasets including limited sample amount, unrealistic scene and achromatic lighting in shading and sparse annotations, which will cause generalization issues for deep learning models and limit the decomposition performance as well. A new photorealistic rendered dataset for intrinsic image decomposition is proposed, which is rendered by leveraging large-scale 3D indoor scene models, along with high-quality textures and lighting to simulate the real-world environment. The chromatic shading components are first implemented.ResultTo validate the effectiveness of the proposed dataset, several state-of-the-art methods are trained on both the proposed dataset and CGIntrinsics dataset, a previously proposed dataset, and tested on intrinsic image evaluation benchmarks, i.e., intrinsie images in the wild (IIW)/shading annotations in the wild (SAW) test sets. Compared to the variants trained on CGIntrinsics dataset, the variants trained on the proposed dataset demonstrate a 7.29% improvement in averaging weighted human disagreement rate (WHDR) on IIW test set and a 2.74% gain for average precision (AP) on SAW test set. Simultaneously, the proposed graph convolution based network achieves comparable quantitative results on both IIW and SAW test sets and gets significantly better qualitative results. To further investigate the intrinsic decomposition quality for different methods, a number of application tasks including re-lighting and texture/lighting editing are conducted utilizing the generated intrinsic components. The proposed method demonstrates more promising application effects comparing with two state-of-the-art methods, further highlighting its superiority and application potential.ConclusionBased on the non-local priors in classical methods for intrinsic image decomposition, a graph convolutional network for intrinsic decomposition is proposed, in which non-local cues are utilized. To mitigate the issues existed in current intrinsic image datasets, a new high quality photorealistic dataset is rendered, which provides dense labels for albedo and shading. The depicted scenes in the images of the proposed dataset have complicated textures and illuminations that closely approximate general indoor scenes in reality, which helps to mitigate the domain gap issues. The shading labels in this dataset first consider chromatic lighting, which allows the neural networks to better separate material properties and lighting effects, especially for the effects introduced by inter-reflections between diffuse surfaces. The decomposition results of both the proposed method and two current state-of-the-art methods are applied to a range of application scenarios, visually demonstrating the superior decomposition quality and application potentials of the proposed method.关键词:image processing;image understanding;intrinsic image decomposition;graph convolutional neural network(GCN);synthetic dataset313|1076|1更新时间:2024-05-07

摘要:ObjectiveIntrinsic decomposition is a key problem in computer vision and graphics applications. It aims at separating lighting effects and material-oriented characteristics of object surfaces of the depicted scene within the image. Intrinsic decomposition from a single input image is highly ill-posed since the amount of unknowns is twice of the known values. Most classical approaches model intrinsic decomposition task with handcrafted priors to generate reasonable decomposition results. But they perform poorly in complicated scenarios as the prior knowledge is too limited to model complicated light-material interactions in real-world scenes. Deep neural network based methods can automatically learn the knowledge from data to avoid using handcrafted priors to model the task. However, due to the dependency on training datasets, the performance of current deep learning based methods is still limited because of various constraints in the current intrinsic datasets. Moreover, the learned networks tend to suffer from poor generalization once there is a large difference between the training and target domain. Another issue of deep neural network based methods is that the limited receptive field probably constrains the ability of the models to exploit the non-local information in the intrinsic component prediction process.MethodA graph convolution based module is designed to fully utilize the non-local cues within the input feature space. The module takes a feature map as input and outputs a feature map with same resolution as the input feature map. For producing the output feature vector for each position, the module uses information that includes the feature of itself, the information extracted from the local neighborhood and the information aggregated from the non-local neighbors that are likely to be very distant. The full intrinsic decomposition framework is constructed by integrating the devised non-local feature learning module into a U-Net network. In addition, to improve the piece-wise smoothness of the produced albedo results, we incorporate a neural network based image refinement module into the full pipeline, which is able to adaptively remove unnecessary artifacts while preserving structural information within the scenes depicted in input images. Simultaneously, there are noticeable limitations in existing intrinsic image datasets including limited sample amount, unrealistic scene and achromatic lighting in shading and sparse annotations, which will cause generalization issues for deep learning models and limit the decomposition performance as well. A new photorealistic rendered dataset for intrinsic image decomposition is proposed, which is rendered by leveraging large-scale 3D indoor scene models, along with high-quality textures and lighting to simulate the real-world environment. The chromatic shading components are first implemented.ResultTo validate the effectiveness of the proposed dataset, several state-of-the-art methods are trained on both the proposed dataset and CGIntrinsics dataset, a previously proposed dataset, and tested on intrinsic image evaluation benchmarks, i.e., intrinsie images in the wild (IIW)/shading annotations in the wild (SAW) test sets. Compared to the variants trained on CGIntrinsics dataset, the variants trained on the proposed dataset demonstrate a 7.29% improvement in averaging weighted human disagreement rate (WHDR) on IIW test set and a 2.74% gain for average precision (AP) on SAW test set. Simultaneously, the proposed graph convolution based network achieves comparable quantitative results on both IIW and SAW test sets and gets significantly better qualitative results. To further investigate the intrinsic decomposition quality for different methods, a number of application tasks including re-lighting and texture/lighting editing are conducted utilizing the generated intrinsic components. The proposed method demonstrates more promising application effects comparing with two state-of-the-art methods, further highlighting its superiority and application potential.ConclusionBased on the non-local priors in classical methods for intrinsic image decomposition, a graph convolutional network for intrinsic decomposition is proposed, in which non-local cues are utilized. To mitigate the issues existed in current intrinsic image datasets, a new high quality photorealistic dataset is rendered, which provides dense labels for albedo and shading. The depicted scenes in the images of the proposed dataset have complicated textures and illuminations that closely approximate general indoor scenes in reality, which helps to mitigate the domain gap issues. The shading labels in this dataset first consider chromatic lighting, which allows the neural networks to better separate material properties and lighting effects, especially for the effects introduced by inter-reflections between diffuse surfaces. The decomposition results of both the proposed method and two current state-of-the-art methods are applied to a range of application scenarios, visually demonstrating the superior decomposition quality and application potentials of the proposed method.关键词:image processing;image understanding;intrinsic image decomposition;graph convolutional neural network(GCN);synthetic dataset313|1076|1更新时间:2024-05-07

Dataset

-

摘要:ObjectiveThe conception of digital twin has attracted tremendous attention and developed rapidly in the fields of smart cities, smart transportation, urban planning, and virtual/augmented reality during past years. The basic objective is to visualize, analyze, simulate, and optimize real world scenes by projecting physical objects onto digital 3D models. To apply digital twin technology successfully to downstream applications such as real-time rendering, human-scene interaction, and numerical simulation, the reconstructed 3D models should preferably be geometrically accurate, vectorized, highly simplified, free of self-intersection, and watertight. To satisfy these requirements, a potential solution called structured reconstruction method extracts geometric planes from discrete point clouds or original triangular mesh and splices them into a compact parametric 3D model. Previous methods address this problem by detecting geometric shapes and then assembling them into a polygonal mesh, but these methods usually suffer from two obstacles. First, traditional shape detection methods such as region growing algorithm rely on iteratively propagating geometric constraints around selected seeds. This greedy strategy only considers local properties and cannot guarantee the quality of global configuration. Second, current shape assembly methods typically recover the surface model by slicing the 3D space into polyhedral cells and assigning inside-outside labels to each cell. Most of these slicing-based methods preserve a high computational complexity and can hardly process more than 100 shapes. In this paper, a novel automatic approach that generates concise polygonal meshes from point clouds or raw triangular meshes in an efficient, robust manner relying upon three ingredients is proposed.MethodOur method consists of three steps: shape detection, space partition, and surface extraction. Our algorithm requires as input a point cloud with oriented normal or triangle mesh. The resulting model is guaranteed to be intersection-free and watertight. First, a multi-source region growing algorithm that detects planar shapes from input 3D data through a global way is proposed. This strategy ensures that points or triangular facets located near the boundary of two shapes can be correctly clustered into their corresponding group. Next, the detected planar shapes are used to partition the bounding box of the object into a polyhedral. To avoid the computational burden involved in the shape assembling step, the partition is performed in a hierarchical manner, that is, the 3D space is recursively divided to build a binary space partitioning (BSP) tree. Starting from the initial bounding box, the largest planar shapes are used to divide a polyhedron cell into two. The planar shapes in the polyhedron are also assigned to the new polyhedron cell. If a shape spans the two polyhedrons, it is divided into two to ensure that every shape in the new polyhedron does not exceed its scope. This operation continues until no divisible polyhedron cell remains. It is equivalent to building a BSP tree. Each leaf node of the BSP tree corresponds to a convex polyhedron cell, and all leaf nodes are combined into the initial bounding box. In such a way, a detected shape only partitions the space locally, without causing redundant partitioning. Hierarchical space partition is the key to reducing the search space and improving the overall pipeline efficiency. Finally, the surface is extracted from the hierarchical partition by labeling each polyhedron as inside or outside the reconstructed model. A ray-shooting-based Markov energy function is defined, and a min-cut is operated to find inside-outside labeling that minimizes the energy function. The output surface is defined as the interface facets between the inside and the outside polyhedral.ResultThe robustness and the performance of our method are demonstrated on a variety of man-made objects and even large-scale scenes from three aspects of fidelity, complexity, and running time. A large number of experimental results prove that our algorithm can process objects composed of tens of thousands of planar shapes on a standard computer without a parallelization scheme. Compared with traditional slicing methods, the number of polyhedral cells obtained through this simple, robust mechanism and the running time are reduced by at least two orders of magnitude. Approximately 70% of the calculation time is used for space partition, but the total time can be controlled within 5 s/10 000 points. In addition, the root-mean-square(RMS) error of the simplified model is mostly controlled within 1%, and the simplification ratio of the facets is controlled within 1.5%. The proposed method greatly improves the calculation efficiency and accuracy of the results, and provides a good trade-off between complexity and fidelity.ConclusionOur structural mesh reconstruction pipeline consists of three steps: shape detection, space partition, and surface extraction. Our method is especially suitable for models with rich structural features. The resulting model is guaranteed to be watertight and free of self-intersection while preserving the features of the structure. The limitation of this algorithm is that reconstruction quality mainly depends on the result of shape detection, which only considers the individual model and does not take advantage of the statistical information of the whole dataset. In addition, this algorithm is only for reconstructing watertight models. When the input data have a large area of missing parts (such as the bottom of the building data), the algorithm relies on the bounding box to close the surface. In the future, data-driven methods will be explored to improve shape detection and take advantage of the hierarchical partition for levels of detail (LOD) reconstruction.关键词:geometric modeling;surface reconstruction;shape detection;binary space partitioning(BSP);Markov random field(MRF)191|301|2更新时间:2024-05-07

摘要:ObjectiveThe conception of digital twin has attracted tremendous attention and developed rapidly in the fields of smart cities, smart transportation, urban planning, and virtual/augmented reality during past years. The basic objective is to visualize, analyze, simulate, and optimize real world scenes by projecting physical objects onto digital 3D models. To apply digital twin technology successfully to downstream applications such as real-time rendering, human-scene interaction, and numerical simulation, the reconstructed 3D models should preferably be geometrically accurate, vectorized, highly simplified, free of self-intersection, and watertight. To satisfy these requirements, a potential solution called structured reconstruction method extracts geometric planes from discrete point clouds or original triangular mesh and splices them into a compact parametric 3D model. Previous methods address this problem by detecting geometric shapes and then assembling them into a polygonal mesh, but these methods usually suffer from two obstacles. First, traditional shape detection methods such as region growing algorithm rely on iteratively propagating geometric constraints around selected seeds. This greedy strategy only considers local properties and cannot guarantee the quality of global configuration. Second, current shape assembly methods typically recover the surface model by slicing the 3D space into polyhedral cells and assigning inside-outside labels to each cell. Most of these slicing-based methods preserve a high computational complexity and can hardly process more than 100 shapes. In this paper, a novel automatic approach that generates concise polygonal meshes from point clouds or raw triangular meshes in an efficient, robust manner relying upon three ingredients is proposed.MethodOur method consists of three steps: shape detection, space partition, and surface extraction. Our algorithm requires as input a point cloud with oriented normal or triangle mesh. The resulting model is guaranteed to be intersection-free and watertight. First, a multi-source region growing algorithm that detects planar shapes from input 3D data through a global way is proposed. This strategy ensures that points or triangular facets located near the boundary of two shapes can be correctly clustered into their corresponding group. Next, the detected planar shapes are used to partition the bounding box of the object into a polyhedral. To avoid the computational burden involved in the shape assembling step, the partition is performed in a hierarchical manner, that is, the 3D space is recursively divided to build a binary space partitioning (BSP) tree. Starting from the initial bounding box, the largest planar shapes are used to divide a polyhedron cell into two. The planar shapes in the polyhedron are also assigned to the new polyhedron cell. If a shape spans the two polyhedrons, it is divided into two to ensure that every shape in the new polyhedron does not exceed its scope. This operation continues until no divisible polyhedron cell remains. It is equivalent to building a BSP tree. Each leaf node of the BSP tree corresponds to a convex polyhedron cell, and all leaf nodes are combined into the initial bounding box. In such a way, a detected shape only partitions the space locally, without causing redundant partitioning. Hierarchical space partition is the key to reducing the search space and improving the overall pipeline efficiency. Finally, the surface is extracted from the hierarchical partition by labeling each polyhedron as inside or outside the reconstructed model. A ray-shooting-based Markov energy function is defined, and a min-cut is operated to find inside-outside labeling that minimizes the energy function. The output surface is defined as the interface facets between the inside and the outside polyhedral.ResultThe robustness and the performance of our method are demonstrated on a variety of man-made objects and even large-scale scenes from three aspects of fidelity, complexity, and running time. A large number of experimental results prove that our algorithm can process objects composed of tens of thousands of planar shapes on a standard computer without a parallelization scheme. Compared with traditional slicing methods, the number of polyhedral cells obtained through this simple, robust mechanism and the running time are reduced by at least two orders of magnitude. Approximately 70% of the calculation time is used for space partition, but the total time can be controlled within 5 s/10 000 points. In addition, the root-mean-square(RMS) error of the simplified model is mostly controlled within 1%, and the simplification ratio of the facets is controlled within 1.5%. The proposed method greatly improves the calculation efficiency and accuracy of the results, and provides a good trade-off between complexity and fidelity.ConclusionOur structural mesh reconstruction pipeline consists of three steps: shape detection, space partition, and surface extraction. Our method is especially suitable for models with rich structural features. The resulting model is guaranteed to be watertight and free of self-intersection while preserving the features of the structure. The limitation of this algorithm is that reconstruction quality mainly depends on the result of shape detection, which only considers the individual model and does not take advantage of the statistical information of the whole dataset. In addition, this algorithm is only for reconstructing watertight models. When the input data have a large area of missing parts (such as the bottom of the building data), the algorithm relies on the bounding box to close the surface. In the future, data-driven methods will be explored to improve shape detection and take advantage of the hierarchical partition for levels of detail (LOD) reconstruction.关键词:geometric modeling;surface reconstruction;shape detection;binary space partitioning(BSP);Markov random field(MRF)191|301|2更新时间:2024-05-07 -

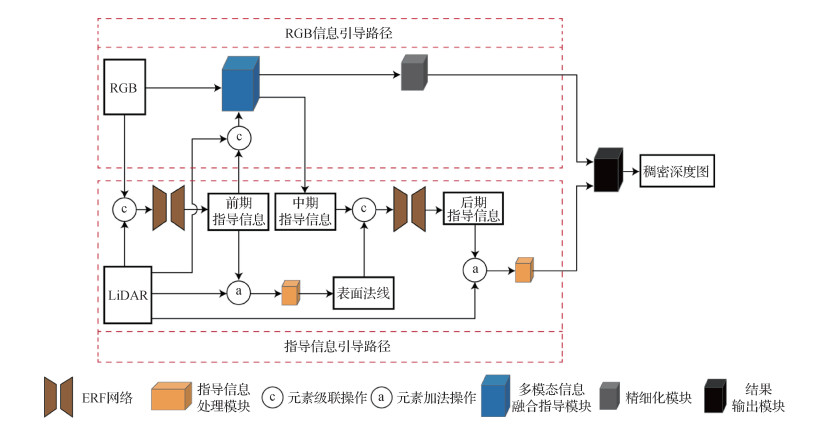

摘要:ObjectiveRecently, depth information plays an important role in the field of autonomous driving and robot navigation, but the sparse depth collected by light detection and ranging (LiDAR) has sparse and noisy deficiencies. To solve such problems, several recently proposed methods that use a single image to guide sparse depth to construct the dense depth map have shown good performance. However, many methods cannot perfectly learn the depth information about edges and details of the object. This paper proposes a multistage guidance network model to cope with this challenge. The deformable convolution and efficient residual factorized(ERF) network are introduced into the network model, and the quality of the dense depth map is improved from the angle of the geometric constraint by surface normal information. The depth and guidance information extracted in the network is dominated, and the information extracted in the RGB picture is used as the guidance information to guide the sparse depth densification and correct the error in depth information.MethodThe multistage guidance network is composed of guidance information guidance path and RGB information guidance path. On the path of guidance information guidance, first, the sparse depth information and RGB images are merged through the ERF network to obtain the initial guidance information, and the sparse depth information and the initial guidance information are input into the guidance information processing module to construct the surface normal. Second, the surface normal and the midterm guidance information obtained by the multimodal information fusion guidance module are input into the ERF network, and the later guidance information containing rich depth information is extracted under the action of the surface normal. The later guidance information is used to guide the sparse depth densification. At the same time, the sparse depth is introduced again to make up for the depth information ignored in the early stage, and then the dense depth map constructed on this path is obtained. On the RGB information guidance path, the initial guidance information can be used to guide the fusion of the sparse depth and the information extracted from the RGB picture, and reduce the influence of sparse depth noise and sparsity. The midterm guidance information and initial dense depth map with rich depth information can be extracted from the multimodal information fusion guidance module. However, the initial dense depth map still contains error information. Through the refined module to correct the dense depth map, the accurate dense depth map can be obtained. The network adds sparse depth and guidance information by adding an operation, which can effectively guide sparse depth densification. Using cascading operation can effectively retain their respective features in different information, which causes the network or module to extract more features. Overall, the initial guidance information is extracted by entering information, which promotes the construction of surface normal and guides the fusion of sparse depth and RGB information. The midterm guidance information is obtained by the multimodal information fusion guidance module, which is the key information to connect two paths. The later guidance information is obtained by fusing the midterm guidance information and the surface normal, which is used to guide the sparse depth densification. From the two paths, on the guidance information guidance path, a dense depth map is constructed by the initial, midterm, and later guidance information to guide the sparse depth; on the RGB information guidance path, the multimodal information fusion guidance module guides the sparse depth through the RGB information.ResultThe proposed network is implemented using PyTorch and Adam optimizer. The parameters of the Adam optimizer are set to β1=0.9 and β2=0.999. The image input to the network is cropped to 256×512 pixels, the graphics card is NVIDIA 3090, the batch size is set to 6, and 30 rounds of training are performed. The initial learning rate is 0.000 125, and the learning rate is reduced by half every 5 rounds. The Karlsruhe Institute of Technology and Toyota Technological Institute at Chicago (KITTI) depth estimation data contains more than 93 000 pairs of ground truth data, aligned LiDAR sparse depth data, and RGB pictures. A total of 85 898 pairs of data can be used to train, and the officially distributed 1 000 pairs of validation set data with ground truth data and 1 000 pairs of test set data without ground truth data can be used to test. The experimental results can be evaluated directly due to the validation set with ground truth data. The test set without ground truth data and the experimental results are required to be submitted to the KITTI official evaluation server to obtain public evaluation results, and the result is an important basis for the performance of a fair assessment model. The validation set and test set do not participate in the training of the network model. The mean square error of the root and the mean square error of inversion root in the evaluation indicators are lower than those of the other methods, and the accuracy of the depth information at the edges and details of the object is more evident.ConclusionA multistage guidance network model for dense depth map construction from LiDAR and RGB information is presented in this paper. The guidance information processing module is used to promote the fusion of guidance information and sparse depth. The multimodal information fusion guidance module can learn a large amount of depth information from sparse depth and RGB pictures. The refined module is used to modify the output results of the multimodal information fusion guidance module. In summary, the dense depth map constructed by the multistage guidance network is composed of the guidance information guidance path and the RGB information guidance path. Two strategies build the dense depth map to form a complementary advantage effectively, using more information to obtain more accurate dense depth maps. Experiments on the KITTI depth estimation data set show that using a multistage guidance network can effectively deal with the depth of the edges and details of the object, and improve the construction quality of dense depth maps.关键词:depth estimation;deep learning;LiDAR;multi-modal data fusion;image processing169|221|3更新时间:2024-05-07