最新刊期

卷 27 , 期 12 , 2022

-

摘要:Dual-pixel (DP) sensor is a kind of Canon-originated hardware technique for autofocusing in 2013. Conventional autofocus methods are divided into two major categories: phase-based and contrast-based methods. However, phase-based autofocusing has higher electronic complexity, and contrast-based autofocusing runs slower in practice. Therefore, current hybrid detection autofocus technique is more concerned, which yields some pixels to imaging and focusing. However, the resolution loss issue cannot be avoided. Hybrid DP-based autofocusing methods enable each pixel to be integrated for both imaging and focusing, which improves the cost-efficiency focusing accuracy. Therefore, it has been widely used in mobile phone cameras and digital single lens reflex (DSLR) cameras. In recent years, DP sensors have been offered by sensor manufacturers and occupied the vast majority of camera sensor market. To guarantee the performance of focusing and imaging, each pixel in a DP-based sensor is equipped with two photodiodes. A DP-based sensor can assign each pixel to two halves and two images can be obtained simultaneously. These two images (DP image pair) could be viewed as a perfectly rectified stereo image pair in relation to a tiny baseline and the same exposure time, or as a two viewing angled light field camera. Unlike stereo image pairs, DP image pairs only have disparity at the out-of-focus regions, while the in-focus regions have no disparity. Defocus disparity, which only exists in the out-of-focus regions, is directly connected with the depth of the scene being captured and is generated by the point spread function. The point spread functions of the left and right views of DP are approximately symmetric, and the point spread functions based focus are relatively symmetric before and after as well. This relationship and the special point spread function can provide extra information for various computer vision tasks. Therefore, the obtained DP image pair can also be used for depth estimation, defocus deblurring and reflection removal beyond automatic focusing applications of the DP sensors. In particular, the relationship between depth and blur size in DP sensor is effective to deal with the depth from defocus task and the defocus deblur task. We critically review the autofocus, imaging principle and current situation of the DP-based sensor. 1) To provide a basic understanding of the dual-pixel sensors, we introduce the dual-pixel imaging model and imaging principle. 2) To specify the breakthrough of them, we carry out comparative analysis related to dual-pixel research in recent years. 3) To develop a reference for researchers further, we trace the current open-source dual-pixel datasets and simulators to facilitate data acquisition. Specifically, we firstly describe dual-pixel from the point of view of enabling automatic focus, where three conventional autofocus methods: 1) phase detection autofocus (PDAF), 2) contrast detection autofocus (CDAF), and 3) hybrid autofocus. The principle and priority of dual-pixel autofocus are critically reviewed in Section I. In Section II, we review the relevant optical concepts and camera imaging model. The imaging principle and geometric features of dual-pixel are introduced on four aspects: 1) dual-pixel geometry, 2) dual-pixel affine ambiguity, 3) dual-pixel point spread function, and 4) the difference between dual-pixel image pair and stereo image pair. It shows that DP image pairs can aid downstream tasks and how to mine effective hidden information from the DP image pairs. DP-based defocus disparity is linked to the contexted depth in terms of the affine ambiguity of dual-pixel, which can be used as a cue of depth estimation, defocus deblur and other related tasks. In Section Ⅲ, we summarize the applications of the DP image pairs in the context of three computer vision tasks: 1) depth estimation, 2) reflection removal, and 3) defocus deblur. As appropriate datasets are fundamental to designing deep learning based architecture of neural networks better in contrast to conventional methods, we briefly introduce the community-derived DP datasets and summarize the algorithm principles of the current DP simulators. Finally, the future challenges and opportunities of the DP sensor have been discussed further in Section V.关键词:dual-pixel(DP);autofocus;deep learning;camera imaging;reflection removal;depth estimation289|2308|0更新时间:2024-05-07

摘要:Dual-pixel (DP) sensor is a kind of Canon-originated hardware technique for autofocusing in 2013. Conventional autofocus methods are divided into two major categories: phase-based and contrast-based methods. However, phase-based autofocusing has higher electronic complexity, and contrast-based autofocusing runs slower in practice. Therefore, current hybrid detection autofocus technique is more concerned, which yields some pixels to imaging and focusing. However, the resolution loss issue cannot be avoided. Hybrid DP-based autofocusing methods enable each pixel to be integrated for both imaging and focusing, which improves the cost-efficiency focusing accuracy. Therefore, it has been widely used in mobile phone cameras and digital single lens reflex (DSLR) cameras. In recent years, DP sensors have been offered by sensor manufacturers and occupied the vast majority of camera sensor market. To guarantee the performance of focusing and imaging, each pixel in a DP-based sensor is equipped with two photodiodes. A DP-based sensor can assign each pixel to two halves and two images can be obtained simultaneously. These two images (DP image pair) could be viewed as a perfectly rectified stereo image pair in relation to a tiny baseline and the same exposure time, or as a two viewing angled light field camera. Unlike stereo image pairs, DP image pairs only have disparity at the out-of-focus regions, while the in-focus regions have no disparity. Defocus disparity, which only exists in the out-of-focus regions, is directly connected with the depth of the scene being captured and is generated by the point spread function. The point spread functions of the left and right views of DP are approximately symmetric, and the point spread functions based focus are relatively symmetric before and after as well. This relationship and the special point spread function can provide extra information for various computer vision tasks. Therefore, the obtained DP image pair can also be used for depth estimation, defocus deblurring and reflection removal beyond automatic focusing applications of the DP sensors. In particular, the relationship between depth and blur size in DP sensor is effective to deal with the depth from defocus task and the defocus deblur task. We critically review the autofocus, imaging principle and current situation of the DP-based sensor. 1) To provide a basic understanding of the dual-pixel sensors, we introduce the dual-pixel imaging model and imaging principle. 2) To specify the breakthrough of them, we carry out comparative analysis related to dual-pixel research in recent years. 3) To develop a reference for researchers further, we trace the current open-source dual-pixel datasets and simulators to facilitate data acquisition. Specifically, we firstly describe dual-pixel from the point of view of enabling automatic focus, where three conventional autofocus methods: 1) phase detection autofocus (PDAF), 2) contrast detection autofocus (CDAF), and 3) hybrid autofocus. The principle and priority of dual-pixel autofocus are critically reviewed in Section I. In Section II, we review the relevant optical concepts and camera imaging model. The imaging principle and geometric features of dual-pixel are introduced on four aspects: 1) dual-pixel geometry, 2) dual-pixel affine ambiguity, 3) dual-pixel point spread function, and 4) the difference between dual-pixel image pair and stereo image pair. It shows that DP image pairs can aid downstream tasks and how to mine effective hidden information from the DP image pairs. DP-based defocus disparity is linked to the contexted depth in terms of the affine ambiguity of dual-pixel, which can be used as a cue of depth estimation, defocus deblur and other related tasks. In Section Ⅲ, we summarize the applications of the DP image pairs in the context of three computer vision tasks: 1) depth estimation, 2) reflection removal, and 3) defocus deblur. As appropriate datasets are fundamental to designing deep learning based architecture of neural networks better in contrast to conventional methods, we briefly introduce the community-derived DP datasets and summarize the algorithm principles of the current DP simulators. Finally, the future challenges and opportunities of the DP sensor have been discussed further in Section V.关键词:dual-pixel(DP);autofocus;deep learning;camera imaging;reflection removal;depth estimation289|2308|0更新时间:2024-05-07 -

摘要:Deep learning technology is capable of image-style transfer tasks recently. The Chinese characters font transfer is focused on content preservation while the font attribute is converted. Thanks to the emerging deep learning, the workload of font design for Chinese characters can be alleviated effectively and the restrictions of human intervention are avoided as well. However, the quality of generated images is still a challenging issue to be resolved. Our review is aimed at the analysis of the most representative image generation and font transfer methods for Chinese characters. The literature review of contemporary font transfer methods for Chinese characters is systematically summarized and divided into three categories: 1) convolutional neural network based (CNN-based), 2) auto-encoder based (AE-based), and 3) generative adversarial networks based (GAN-based). To avoid information missing in the process of data reconstruction, a convolutional neural network extracted features of images without changing the dimensions of data. Auto-encoder processed the data through a deep neural network to learn the distribution of real samples and generate realistic fake samples. Generative adversarial networks became popular in Chinese characters font transfer after being proposed by Goodfellow. Its structure consists of a generator and a discriminator generally. The core idea of generative adversarial networks came from the Nash equilibrium of game theory, which is reflected in the process of continuous optimization between the generator and discriminator. Its generator learned the distribution of real data, generated fake images, and induced discriminators to make wrong decisions. The discriminator tried to determine whether the input data is real or fake. Through this game between generator and discriminator, the latter could not distinguish the real image from the fake in the end. According to the way of learning font style features of Chinese characters, we divided these methods based on GAN into three categories: 1) self-learning font style features, 2) external font style features, and 3) extractive font style features. We introduced twenty-two font transfer methods for Chinese characters and summarized the performance of these methods in terms of dataset requirements, font category supports, and evaluations for generated images. The key factors of these font transfer methods are introduced, compared, and analyzed, including refining Chinese characters features, relying on a pre-trained model for effective feature extraction, and supporting de-stylization. According to the uniformed table of radicals for Chinese characters, we built a data set consisting of 6 683 simplified and traditional characters in five fonts. To accomplish the transformation from source font (simfs.ttf) to target font (printed font and hand-written font), comparative experiments are carried out on the same data set. The comparative analysis of four archetypal font transfer methods for Chinese characters (Rewrite2, zi2zi, TET-GAN, and Unet-GAN) are implemented. Our quantitative evaluation metrics are composed of root mean square error (RMSE) and Pixel-level accuracy (pix_acc), and several generated results of each method for comparison were shown. The strokes of characters generated by Unet-GAN are the most complete and clear according to the subjective and objective evaluation metrics of generated images, which is competent for the transfer and generation of printing and handwriting font. At the same time, the methods named Rewrite2, zi2zi, and TET-GAN are more suitable for the font transfer task of printing characters, and their ability to generate strokes of Chinese characters needs to be improved. We summarized some challenging issues like blurred strokes of Chinese characters, immature methods of multi-domain transformation, and large-scale training data set applications. The future research direction can be further extended on the aspects of 1) integrating the stylization and de-stylization of Chinese characters, 2) reducing the size of the data set, and 3) extracting features of Chinese characters more effectively. Furthermore, its potential can be associated with information hiding technology for document watermarking and embedding secret messages.关键词:Chinese characters font transfer;image generation;convolutional neural network (CNN);auto-encoder(AE);generate adversarial network (GAN)296|853|2更新时间:2024-05-07

摘要:Deep learning technology is capable of image-style transfer tasks recently. The Chinese characters font transfer is focused on content preservation while the font attribute is converted. Thanks to the emerging deep learning, the workload of font design for Chinese characters can be alleviated effectively and the restrictions of human intervention are avoided as well. However, the quality of generated images is still a challenging issue to be resolved. Our review is aimed at the analysis of the most representative image generation and font transfer methods for Chinese characters. The literature review of contemporary font transfer methods for Chinese characters is systematically summarized and divided into three categories: 1) convolutional neural network based (CNN-based), 2) auto-encoder based (AE-based), and 3) generative adversarial networks based (GAN-based). To avoid information missing in the process of data reconstruction, a convolutional neural network extracted features of images without changing the dimensions of data. Auto-encoder processed the data through a deep neural network to learn the distribution of real samples and generate realistic fake samples. Generative adversarial networks became popular in Chinese characters font transfer after being proposed by Goodfellow. Its structure consists of a generator and a discriminator generally. The core idea of generative adversarial networks came from the Nash equilibrium of game theory, which is reflected in the process of continuous optimization between the generator and discriminator. Its generator learned the distribution of real data, generated fake images, and induced discriminators to make wrong decisions. The discriminator tried to determine whether the input data is real or fake. Through this game between generator and discriminator, the latter could not distinguish the real image from the fake in the end. According to the way of learning font style features of Chinese characters, we divided these methods based on GAN into three categories: 1) self-learning font style features, 2) external font style features, and 3) extractive font style features. We introduced twenty-two font transfer methods for Chinese characters and summarized the performance of these methods in terms of dataset requirements, font category supports, and evaluations for generated images. The key factors of these font transfer methods are introduced, compared, and analyzed, including refining Chinese characters features, relying on a pre-trained model for effective feature extraction, and supporting de-stylization. According to the uniformed table of radicals for Chinese characters, we built a data set consisting of 6 683 simplified and traditional characters in five fonts. To accomplish the transformation from source font (simfs.ttf) to target font (printed font and hand-written font), comparative experiments are carried out on the same data set. The comparative analysis of four archetypal font transfer methods for Chinese characters (Rewrite2, zi2zi, TET-GAN, and Unet-GAN) are implemented. Our quantitative evaluation metrics are composed of root mean square error (RMSE) and Pixel-level accuracy (pix_acc), and several generated results of each method for comparison were shown. The strokes of characters generated by Unet-GAN are the most complete and clear according to the subjective and objective evaluation metrics of generated images, which is competent for the transfer and generation of printing and handwriting font. At the same time, the methods named Rewrite2, zi2zi, and TET-GAN are more suitable for the font transfer task of printing characters, and their ability to generate strokes of Chinese characters needs to be improved. We summarized some challenging issues like blurred strokes of Chinese characters, immature methods of multi-domain transformation, and large-scale training data set applications. The future research direction can be further extended on the aspects of 1) integrating the stylization and de-stylization of Chinese characters, 2) reducing the size of the data set, and 3) extracting features of Chinese characters more effectively. Furthermore, its potential can be associated with information hiding technology for document watermarking and embedding secret messages.关键词:Chinese characters font transfer;image generation;convolutional neural network (CNN);auto-encoder(AE);generate adversarial network (GAN)296|853|2更新时间:2024-05-07 -

摘要:Atrial fibrillation (AF) is one of the most arrhythmia symptoms nowadays. The incidence rate of AF increases with elder growth and it can reach 10% population over 75 years old. The AF duration can be divided into paroxysmal, persistent and permanent, and it is induced to the morbidity and mortality of cardiovascular diseases severely. It affects more than 30 million people worldwide like reducing the quality of life and linking high risk of cerebral infarction and death. Although the risk can be reduced with appropriate treatment, AF is often latent and difficult to diagnose and intervene quickly. Recent AF-diagnostic methods have composed of cardiac palpation, optical plethysmography, blood pressure monitoring and vibration, electrocardiogram (ECG) and image-based methods. Most of atrial fibrillation has paroxysmal atrial fibrillation. The four diagnostic methods mentioned above may not capture the onset of atrial fibrillation. It is challenged for long-term diagnosis cycles, high costs, low accuracy and vulnerability. Medical imaging promotes contemporary modern medicine, computed tomography (CT) and magnetic resonance imaging (MRI) via transparent image of the cardiac anatomy. The MRI can be as one of the key medical imaging techniques, which of being unaffected by ionizing radiation, having high soft tissue contrast and high spatial resolution. Current images have limited of low signal-to-noise ratio (SNR) and low resolution to a certain extent. AF is regarded as a heart disease of atrial origin. In order to quantify the morphological and pathological changes of the left atrium (LA), it is necessary to segment the LA derived from the medical image. The medical imaging analysis of AF requires accurate LA-related segmentation and quantitative evaluation of the function. The segmentation and functional evaluation of the LA is crucial to improving our understanding and diagnosis of AF. However, segmentation of the LA on medical images is still being challenged. 1) The LA can occupy a small proportion of the image only compared with the background of the image, making it difficult to locate and identify boundary details. 2) The strength of the LA is quite similar to its surrounding chambers, the myocardial wall is thinner, the quality of medical images is not high, the resolution is limited, and the boundaries often appear blurred or missing in the LA surrounding the pulmonary vein (PV). 3) The shapes and sizes of the LA vary significantly thematically as the number and topology of the PV. Our critical review is focused on the integration of current segmentation algorithms and traditional segmentation methods, deep learning based segmentation, and traditional & deep learning-integrated segmentation. Traditional segmentation methods are mainly composed of the active contour model (ACM), atlas segmentation and threshold issue. ACM requires an accurate initial contour. Atlas segmentation requires complete multiple atlas sets and atlas registration, but the manual annotation of atlas sets is a challenging task due to a large number of atlas sets, which makes manual annotation difficult to be completed. In addition, the result of the annotation is vulnerable to be influenced by different taggers and atlas registration is very time-consuming. The threshold method requires the pre-determination of an appropriate threshold, which may be subjective and could ultimately limit the applicability and reproducibility. Although the traditional segmentation methods have achieved certain results, the accuracy of the segmentation is still insufficient. In recent years, deep learning technique has shown its potentials in medical image analysis, and they have qualified in different imaging modes and different clinical applications. It has improved imaging efficiency and quality, image analysis and interpretation and clinical evaluation. With the development of convolutional neural network (CNN), many variant CNN models have emerged, which have made great impacts on the improvement of segmentation algorithms. The full convolutional network (FCN) is a variant of the CNN. Based on the CNN, the FCN uses the 1×1 convolutional layer to update the full connection layer, and changes the height and width of the feature maps of the intermediate layers back to the size of the input image in terms of transposing the convolutional layer, the prediction results and the input image have one-to-one correspondence in the spatial dimension, the FCN can accept input images of any size, and generate segmentation images of the same size. The FCN mainly uses three techniques: 1) convolution, 2) upsampling and 3) skip connection. The FCN uses the skip connection structure to upsample feature maps of the last layer of the network model, and fused with feature maps of the shallow layer, combining the high-level semantic information with the low-level image information. The U-Net is a variant model of the FCN. The U-Net adopts the encoder-decoder architecture to form a U-shaped structure with four downsampling operations followed by four up sampling steps. The U-Net captures global features on the contraction path and achieves precise positioning on the extension path, thus the segmentation problem-solving of complex neuron structures has achieved excellent performance adequately. On this basis, variant models of the 3D U-Net and the Ⅴ-Net are introduced. The training of neural network models requires a large amount of labeled data as there are millions of parameters in the network that need to be optimized. Accurate segmentation of the LA is of great clinical significance for the diagnosis and analysis of AF. However, manual segmentation of the LA is time-consuming and prone to human-related errors. Therefore, the research of automatic segmentation algorithms is essential in assisting diagnosis and clinical decision-making. We summarize the pros and cons of varied segmentation strategies, existing public data sets and clinical applications of atrial fibrillation analysis and its future trends.关键词:atrial fibrillation(AF);medical image;deep learning(DL);left atrium segmentation;left atrium function146|407|1更新时间:2024-05-07

摘要:Atrial fibrillation (AF) is one of the most arrhythmia symptoms nowadays. The incidence rate of AF increases with elder growth and it can reach 10% population over 75 years old. The AF duration can be divided into paroxysmal, persistent and permanent, and it is induced to the morbidity and mortality of cardiovascular diseases severely. It affects more than 30 million people worldwide like reducing the quality of life and linking high risk of cerebral infarction and death. Although the risk can be reduced with appropriate treatment, AF is often latent and difficult to diagnose and intervene quickly. Recent AF-diagnostic methods have composed of cardiac palpation, optical plethysmography, blood pressure monitoring and vibration, electrocardiogram (ECG) and image-based methods. Most of atrial fibrillation has paroxysmal atrial fibrillation. The four diagnostic methods mentioned above may not capture the onset of atrial fibrillation. It is challenged for long-term diagnosis cycles, high costs, low accuracy and vulnerability. Medical imaging promotes contemporary modern medicine, computed tomography (CT) and magnetic resonance imaging (MRI) via transparent image of the cardiac anatomy. The MRI can be as one of the key medical imaging techniques, which of being unaffected by ionizing radiation, having high soft tissue contrast and high spatial resolution. Current images have limited of low signal-to-noise ratio (SNR) and low resolution to a certain extent. AF is regarded as a heart disease of atrial origin. In order to quantify the morphological and pathological changes of the left atrium (LA), it is necessary to segment the LA derived from the medical image. The medical imaging analysis of AF requires accurate LA-related segmentation and quantitative evaluation of the function. The segmentation and functional evaluation of the LA is crucial to improving our understanding and diagnosis of AF. However, segmentation of the LA on medical images is still being challenged. 1) The LA can occupy a small proportion of the image only compared with the background of the image, making it difficult to locate and identify boundary details. 2) The strength of the LA is quite similar to its surrounding chambers, the myocardial wall is thinner, the quality of medical images is not high, the resolution is limited, and the boundaries often appear blurred or missing in the LA surrounding the pulmonary vein (PV). 3) The shapes and sizes of the LA vary significantly thematically as the number and topology of the PV. Our critical review is focused on the integration of current segmentation algorithms and traditional segmentation methods, deep learning based segmentation, and traditional & deep learning-integrated segmentation. Traditional segmentation methods are mainly composed of the active contour model (ACM), atlas segmentation and threshold issue. ACM requires an accurate initial contour. Atlas segmentation requires complete multiple atlas sets and atlas registration, but the manual annotation of atlas sets is a challenging task due to a large number of atlas sets, which makes manual annotation difficult to be completed. In addition, the result of the annotation is vulnerable to be influenced by different taggers and atlas registration is very time-consuming. The threshold method requires the pre-determination of an appropriate threshold, which may be subjective and could ultimately limit the applicability and reproducibility. Although the traditional segmentation methods have achieved certain results, the accuracy of the segmentation is still insufficient. In recent years, deep learning technique has shown its potentials in medical image analysis, and they have qualified in different imaging modes and different clinical applications. It has improved imaging efficiency and quality, image analysis and interpretation and clinical evaluation. With the development of convolutional neural network (CNN), many variant CNN models have emerged, which have made great impacts on the improvement of segmentation algorithms. The full convolutional network (FCN) is a variant of the CNN. Based on the CNN, the FCN uses the 1×1 convolutional layer to update the full connection layer, and changes the height and width of the feature maps of the intermediate layers back to the size of the input image in terms of transposing the convolutional layer, the prediction results and the input image have one-to-one correspondence in the spatial dimension, the FCN can accept input images of any size, and generate segmentation images of the same size. The FCN mainly uses three techniques: 1) convolution, 2) upsampling and 3) skip connection. The FCN uses the skip connection structure to upsample feature maps of the last layer of the network model, and fused with feature maps of the shallow layer, combining the high-level semantic information with the low-level image information. The U-Net is a variant model of the FCN. The U-Net adopts the encoder-decoder architecture to form a U-shaped structure with four downsampling operations followed by four up sampling steps. The U-Net captures global features on the contraction path and achieves precise positioning on the extension path, thus the segmentation problem-solving of complex neuron structures has achieved excellent performance adequately. On this basis, variant models of the 3D U-Net and the Ⅴ-Net are introduced. The training of neural network models requires a large amount of labeled data as there are millions of parameters in the network that need to be optimized. Accurate segmentation of the LA is of great clinical significance for the diagnosis and analysis of AF. However, manual segmentation of the LA is time-consuming and prone to human-related errors. Therefore, the research of automatic segmentation algorithms is essential in assisting diagnosis and clinical decision-making. We summarize the pros and cons of varied segmentation strategies, existing public data sets and clinical applications of atrial fibrillation analysis and its future trends.关键词:atrial fibrillation(AF);medical image;deep learning(DL);left atrium segmentation;left atrium function146|407|1更新时间:2024-05-07

Review

-

摘要:ObjectiveImages are often distorted by noise during image acquisition, transmission and storage process. The generated noise can degrade image quality and affect image processing, such as edge detection, image segmentation, image recognition and image classification. Image denoising technique plays a key role in image pre-processing for image details preservation. Current Gaussian noise removal denoising techniques is often based on variational model like the total variation (TV) method. It can realize image smoothing through minimizing the corresponding energy function. However, TV-based denoising methods have their staircase effects and detail loss due to local gradient information only. Many researchers integrate the non-local concept into the total variation model after the non-local means was proposed. The existing non-local TV-based methods take advantages of the non-local similarity to denoise the image while keeping the image structure information. Unfortunately, many existing TV-based color image denoising methods fail to fully capture both local and non-local correlations among different image patches, and ignore the fact that the realistic noise varies in different image patches and different color channels. These always lead to over-smoothing and under-smoothing in the denoising result. Our newly TV-based color image denoising method, named adaptive non-local 3D total variation (ANL3DTV), is developed to deal with that.Method1) Decompose the noisy color image into K overlapping color image patches, search for the m most similar neighboring image patches to each center image patch and then group the m image patches together. 2) Vectorize every color image patch in each image patch group and stack them into a 2D noisy matrix. 3) Obtain the corresponding 2D denoised matrices via ANL3DTV. To get the inter-patch and intra-patch correlations, our ANL3DTV takes advantages of a non-local 3D total variation regularization. On the basis of embedding an adaptive weight matrix into the fidelity term of the optimization model, it can automatically control the denoising strength on different color image patches and different color channels in each iteration. The weight matrix is correlated with the estimated noise level of each image patch. 4) Aggregate all the denoised 2D matrices to reconstruct the denoised color image.ResultAccording to different ways to add Gaussian noise, there are two cases in the denoising experiment. In Case 1, the noisy images are corrupted with Gaussian noise with the same noise variance in all color channels. The selected noise levels are σ= 10, 30 and 50. In Case 2, we add Gaussian noise with different noise variances to each color channel. The noise levels are [σR, σG, σB] = [5, 15, 10], [40, 50, 30], [5, 40, 15] and [40, 5, 25]. ANL3DTV is compared to 6 existing TV-based denoising methods. The peak signal-to-noise ratio (PSNR) and structure similarity (SSIM) are adopted to denoising evaluation. The averaged PSNR/SSIM results of ANL3DTV in Case 1 are 32.33 dB/92.99%, 26.92 dB/81.68 and 24.57 dB/73.57%, respectively, and the quantitative results of ANL3DTV in Case 2 are 31.62 dB/92.88%, 24.49 dB/73.02%, 27.47 dB/85.94% and 26.81 dB/81.00%, respectively. Compared with other competing methods, ANL3DTV improves PSNR and SSIM by about 0.16~1.76 dB and 0.12%~6.13%. As can be seen from the denoised images, some competing methods oversmooth the images and lose many structure information. Some mistake noise pattern for the useful edge information and yield obvious ringring artifacts. Our ANL3DTV can remove more noise, preserve more details and suppress more artifacts than the competing methods.ConclusionWe demonstrate an adaptive non-local 3D total variation model for Gaussian noise removal (ANL3DTV). To capture the inter-patch and intra-patch gradient information, ANL3DTV is focused on the non-local 3D total variation regularization. To adaptively adjust the denoising strength on each image patch and each color channel, an adaptive weight matrix into the fidelity term is introduced. To guarantee the feasibility of ANL3DTV mathematically, we develop the iterative solution of ANL3DTV and validate its convergence. The visual results demonstrate our ANL3DTV potentials in noise removal and detail preserving. Furthermore, ANL3DTV achieves more robustness and stablizes noise removal more under different noise levels.关键词:color image denoising;Gaussian noise;non-local similarity;3D total variation;adaptive weight109|464|2更新时间:2024-05-07

摘要:ObjectiveImages are often distorted by noise during image acquisition, transmission and storage process. The generated noise can degrade image quality and affect image processing, such as edge detection, image segmentation, image recognition and image classification. Image denoising technique plays a key role in image pre-processing for image details preservation. Current Gaussian noise removal denoising techniques is often based on variational model like the total variation (TV) method. It can realize image smoothing through minimizing the corresponding energy function. However, TV-based denoising methods have their staircase effects and detail loss due to local gradient information only. Many researchers integrate the non-local concept into the total variation model after the non-local means was proposed. The existing non-local TV-based methods take advantages of the non-local similarity to denoise the image while keeping the image structure information. Unfortunately, many existing TV-based color image denoising methods fail to fully capture both local and non-local correlations among different image patches, and ignore the fact that the realistic noise varies in different image patches and different color channels. These always lead to over-smoothing and under-smoothing in the denoising result. Our newly TV-based color image denoising method, named adaptive non-local 3D total variation (ANL3DTV), is developed to deal with that.Method1) Decompose the noisy color image into K overlapping color image patches, search for the m most similar neighboring image patches to each center image patch and then group the m image patches together. 2) Vectorize every color image patch in each image patch group and stack them into a 2D noisy matrix. 3) Obtain the corresponding 2D denoised matrices via ANL3DTV. To get the inter-patch and intra-patch correlations, our ANL3DTV takes advantages of a non-local 3D total variation regularization. On the basis of embedding an adaptive weight matrix into the fidelity term of the optimization model, it can automatically control the denoising strength on different color image patches and different color channels in each iteration. The weight matrix is correlated with the estimated noise level of each image patch. 4) Aggregate all the denoised 2D matrices to reconstruct the denoised color image.ResultAccording to different ways to add Gaussian noise, there are two cases in the denoising experiment. In Case 1, the noisy images are corrupted with Gaussian noise with the same noise variance in all color channels. The selected noise levels are σ= 10, 30 and 50. In Case 2, we add Gaussian noise with different noise variances to each color channel. The noise levels are [σR, σG, σB] = [5, 15, 10], [40, 50, 30], [5, 40, 15] and [40, 5, 25]. ANL3DTV is compared to 6 existing TV-based denoising methods. The peak signal-to-noise ratio (PSNR) and structure similarity (SSIM) are adopted to denoising evaluation. The averaged PSNR/SSIM results of ANL3DTV in Case 1 are 32.33 dB/92.99%, 26.92 dB/81.68 and 24.57 dB/73.57%, respectively, and the quantitative results of ANL3DTV in Case 2 are 31.62 dB/92.88%, 24.49 dB/73.02%, 27.47 dB/85.94% and 26.81 dB/81.00%, respectively. Compared with other competing methods, ANL3DTV improves PSNR and SSIM by about 0.16~1.76 dB and 0.12%~6.13%. As can be seen from the denoised images, some competing methods oversmooth the images and lose many structure information. Some mistake noise pattern for the useful edge information and yield obvious ringring artifacts. Our ANL3DTV can remove more noise, preserve more details and suppress more artifacts than the competing methods.ConclusionWe demonstrate an adaptive non-local 3D total variation model for Gaussian noise removal (ANL3DTV). To capture the inter-patch and intra-patch gradient information, ANL3DTV is focused on the non-local 3D total variation regularization. To adaptively adjust the denoising strength on each image patch and each color channel, an adaptive weight matrix into the fidelity term is introduced. To guarantee the feasibility of ANL3DTV mathematically, we develop the iterative solution of ANL3DTV and validate its convergence. The visual results demonstrate our ANL3DTV potentials in noise removal and detail preserving. Furthermore, ANL3DTV achieves more robustness and stablizes noise removal more under different noise levels.关键词:color image denoising;Gaussian noise;non-local similarity;3D total variation;adaptive weight109|464|2更新时间:2024-05-07 -

摘要:ObjectiveSteganography is a novel of technology that involves the embedding of hidden information into digital carriers, such as text, image, voice, or video data. To embed hidden information into the audio carrier with no audio quality loss, audio-based steganography utilizes the redundancy of human auditory and the statistical-based audio carrier among them. The voice-enhanced and packet-loss compensation, and internet low bit rate codec based (iLBC-based) techniques can maintain network-context high voice quality with high packet loss rate, which develops the steganography for the iLBC speech in the field of information hiding in recent years. However, it is challenged to hide information in iLBC due to the high compression issue. Moreover, human auditory system, unlike the human visual system, is highly vulnerable for identifying minor distortions. Most of the existing methods are focused on the processes of linear spectrum frequency coefficient vector quantization, the dynamic codebook searching or the acquired quantization in iLBC. Although these methods have good imperceptibility, they are usually at the expense of steganography capacity, and it is difficult to resist the detection of the deep learning-based steganalysis technology. Therefore, the mutual benefit issue is challenged for the iLBC speech steganography between steganography capacities, imperceptibility, and anti-detection, in which the steganography capacity is as high as possible, the imperceptibility is as good as possible, and the resistance to steganalysis is as strong as possible. We develop a hierarchical-based method of high-capacity steganography in iLBC speech.Method1) The structure of iLBC bitstream is analyzed. 2) The influence of steganography processes in the linear spectrum frequency coefficient vector quantization, the dynamic codebook search, and the gain quantization on the voice quality is clarified based on the perceptual evaluation of speech quality-mean opinion score (PESQ-MOS) and Mel cepstral distortion (MCD). A hierarchical-based steganography position method is demonstrated to choose invulnerable layers and reduce distortions via gain quantization and the dynamic codebook searching in terms of the steganography capacity and the hierarchy priority. For the unfilled layer, an embedded position-selected method based on the Logistic chaotic map is also developed to improve the randomness and security of steganography. 3) The quantization index module is to embed the hidden information for steganography security better.ResultOur hierarchical steganography method realizes the one time extended steganography capacity. Additionally, we adopt the Chinese and English speech data set steganalysis-speech-dataset (SSD) to make comparative experiments, which includes 30 ms and 20 ms frames and 2 s, 5 s, and 10 s speech samples. The experimental results on 5 280 speech samples show that our method can strengthen imperceptibility and alleviate distortions in terms of embedding more hidden information. To validate our anti-detection performance against the deep learning-based steganalyzer, we generate 4 000 original speech samples and 4 000 steganographic speech samples, of which 75% is used as the training set and 25% as the test set. The detection results show that the steganography capacity is less than or equal to 18 bit on 30 ms frame, and 12 bit on 20 ms frame. It can resist the detection of the deep learning-based audio steganalyzer well.ConclusionA hierarchical steganography method with high capacity is developed in the iLBC speech. It has the steganography potential of the iLBC speech for imperceptibility and anti-detection optimization on the premise of the steganography capacity extension.关键词:internet low bit rate codec(iLBC);quantization index modulation;hierarchical steganography;embeddable positions;high capacity66|315|0更新时间:2024-05-07

摘要:ObjectiveSteganography is a novel of technology that involves the embedding of hidden information into digital carriers, such as text, image, voice, or video data. To embed hidden information into the audio carrier with no audio quality loss, audio-based steganography utilizes the redundancy of human auditory and the statistical-based audio carrier among them. The voice-enhanced and packet-loss compensation, and internet low bit rate codec based (iLBC-based) techniques can maintain network-context high voice quality with high packet loss rate, which develops the steganography for the iLBC speech in the field of information hiding in recent years. However, it is challenged to hide information in iLBC due to the high compression issue. Moreover, human auditory system, unlike the human visual system, is highly vulnerable for identifying minor distortions. Most of the existing methods are focused on the processes of linear spectrum frequency coefficient vector quantization, the dynamic codebook searching or the acquired quantization in iLBC. Although these methods have good imperceptibility, they are usually at the expense of steganography capacity, and it is difficult to resist the detection of the deep learning-based steganalysis technology. Therefore, the mutual benefit issue is challenged for the iLBC speech steganography between steganography capacities, imperceptibility, and anti-detection, in which the steganography capacity is as high as possible, the imperceptibility is as good as possible, and the resistance to steganalysis is as strong as possible. We develop a hierarchical-based method of high-capacity steganography in iLBC speech.Method1) The structure of iLBC bitstream is analyzed. 2) The influence of steganography processes in the linear spectrum frequency coefficient vector quantization, the dynamic codebook search, and the gain quantization on the voice quality is clarified based on the perceptual evaluation of speech quality-mean opinion score (PESQ-MOS) and Mel cepstral distortion (MCD). A hierarchical-based steganography position method is demonstrated to choose invulnerable layers and reduce distortions via gain quantization and the dynamic codebook searching in terms of the steganography capacity and the hierarchy priority. For the unfilled layer, an embedded position-selected method based on the Logistic chaotic map is also developed to improve the randomness and security of steganography. 3) The quantization index module is to embed the hidden information for steganography security better.ResultOur hierarchical steganography method realizes the one time extended steganography capacity. Additionally, we adopt the Chinese and English speech data set steganalysis-speech-dataset (SSD) to make comparative experiments, which includes 30 ms and 20 ms frames and 2 s, 5 s, and 10 s speech samples. The experimental results on 5 280 speech samples show that our method can strengthen imperceptibility and alleviate distortions in terms of embedding more hidden information. To validate our anti-detection performance against the deep learning-based steganalyzer, we generate 4 000 original speech samples and 4 000 steganographic speech samples, of which 75% is used as the training set and 25% as the test set. The detection results show that the steganography capacity is less than or equal to 18 bit on 30 ms frame, and 12 bit on 20 ms frame. It can resist the detection of the deep learning-based audio steganalyzer well.ConclusionA hierarchical steganography method with high capacity is developed in the iLBC speech. It has the steganography potential of the iLBC speech for imperceptibility and anti-detection optimization on the premise of the steganography capacity extension.关键词:internet low bit rate codec(iLBC);quantization index modulation;hierarchical steganography;embeddable positions;high capacity66|315|0更新时间:2024-05-07

Image Processing and Coding

-

摘要:ObjectiveHuman face recognition has been developing for biometrics applications like online payment and security. Face-related recognition systems are usually deployed in an open environment in reality, which is challenged for the robustness problem. The changing external environment (e.g., improper exposure, poor lighting, extreme weather conditions, background interference), can intervene diversified distortions to the face images like low contrast, blurring and occlusion, which significantly degrades the performance of the face-related recognition system. Therefore, an accurate face image quality assessment method is highly required to improve the performance of the face recognition system from two perspectives as mentioned below: 1) face-related image quality model can be used to filter out low-quality face images since the performance of face recognition systems is often affected by low-quality images, thus avoiding invalid recognition and improving the recognition efficiency. 2) Traditional face recognition features can be enhanced in terms of the integrated facial quality features. In contrast to the traditional image quality assessment approaches, face-related image quality assessment can be achieved with specific face recognition algorithms only. The existing face-related image quality model scan be divided into handcrafted feature-based and deep learning-based.MethodWe develop a new mask-based method for face-related image quality assessment. From the perspective of human recognition, the quality of a face image is mainly determined by the key regions of the face image (eyes, nose, and mouth). Changes in these regions will have different impacts on the recognition performance for face-related images with multi-level quality. A mask added on these regions will also have different impacts for different face images. For example, high-quality images masked tends to have greater impact on the recognition performance compared with low-quality face images. Such a mask can be designed to cover the key regions, and the quality of a face image can be achieved by measuring the influence of the masking operation. Our human face-related image quality model can be segmented into two categories: 1) the masking operation on face images; 2) the quality score regression. Specifically, the mask is added to the key regions at first for an input face image to be evaluated. Next the face image pair is obtained containing the input image and the masked image. Finally, image pair is input into the deep feature extraction module, producing the qualified features. The objective quality score of the input face image is obtained in terms of the feature pair regression. Our method is called mask-based face image quality (MFIQ). For model training, we build a new DDFace(Diversified Distortion Face) database, which contains a total of 350 000 distorted face images of 1 000 people. We use 280 000 face images as the training set and the rest of it as the testing set. We train the model for 40 epochs with the learning rate 0.001 and batch size 32.ResultIn the experiments, five face image datasets are used, including our DDFace-built and four existing face recognition datasets like LFW(Labeled Faces in the Wild), VGGFace2(Visual Geometry Group Face2), CASIA-WebFace(Institute of Automation Chinese Academy of Science-Website Face) and CelebA(CelebFaces Attribute). Our proposed MFIQ model is compared with the popular deep face image quality models, including face quality net-v0 (FaceQnet-v0), face quality net-v1(FaceQnet-v1) and stochastic embedding robustness-face image quality (SER-FIQ). Under the metric area over curve(AOC), our model performance is improved by 14.8%, 0.1%, 2.9%, 3.7% and 4.9% in comparison with LFW, CelebA, DDFace, VGGFace2 and CASIA-WebFace databases, respectively. Furthermore, our MFIQ model is used to predict the face-related image quality in different datasets and the quality distributions of images are calculated. The experimental results show that our distributions predicted is close to the real distributions. Our MFIQ model performance is also compared with the other three models according to face-related images evaluation from singles and multiples. The results show that the proposed MFIQ performs better than SER-FIQ, FaceQnet-v0 and FaceQnet-v1.ConclusionOur research potentials are focused on more robustness and distinguishing ability for the key elements of multiple-level distorted images.关键词:face recognition;image quality assessment;face image utility quality;no reference;mask;pseudo reference105|199|1更新时间:2024-05-07

摘要:ObjectiveHuman face recognition has been developing for biometrics applications like online payment and security. Face-related recognition systems are usually deployed in an open environment in reality, which is challenged for the robustness problem. The changing external environment (e.g., improper exposure, poor lighting, extreme weather conditions, background interference), can intervene diversified distortions to the face images like low contrast, blurring and occlusion, which significantly degrades the performance of the face-related recognition system. Therefore, an accurate face image quality assessment method is highly required to improve the performance of the face recognition system from two perspectives as mentioned below: 1) face-related image quality model can be used to filter out low-quality face images since the performance of face recognition systems is often affected by low-quality images, thus avoiding invalid recognition and improving the recognition efficiency. 2) Traditional face recognition features can be enhanced in terms of the integrated facial quality features. In contrast to the traditional image quality assessment approaches, face-related image quality assessment can be achieved with specific face recognition algorithms only. The existing face-related image quality model scan be divided into handcrafted feature-based and deep learning-based.MethodWe develop a new mask-based method for face-related image quality assessment. From the perspective of human recognition, the quality of a face image is mainly determined by the key regions of the face image (eyes, nose, and mouth). Changes in these regions will have different impacts on the recognition performance for face-related images with multi-level quality. A mask added on these regions will also have different impacts for different face images. For example, high-quality images masked tends to have greater impact on the recognition performance compared with low-quality face images. Such a mask can be designed to cover the key regions, and the quality of a face image can be achieved by measuring the influence of the masking operation. Our human face-related image quality model can be segmented into two categories: 1) the masking operation on face images; 2) the quality score regression. Specifically, the mask is added to the key regions at first for an input face image to be evaluated. Next the face image pair is obtained containing the input image and the masked image. Finally, image pair is input into the deep feature extraction module, producing the qualified features. The objective quality score of the input face image is obtained in terms of the feature pair regression. Our method is called mask-based face image quality (MFIQ). For model training, we build a new DDFace(Diversified Distortion Face) database, which contains a total of 350 000 distorted face images of 1 000 people. We use 280 000 face images as the training set and the rest of it as the testing set. We train the model for 40 epochs with the learning rate 0.001 and batch size 32.ResultIn the experiments, five face image datasets are used, including our DDFace-built and four existing face recognition datasets like LFW(Labeled Faces in the Wild), VGGFace2(Visual Geometry Group Face2), CASIA-WebFace(Institute of Automation Chinese Academy of Science-Website Face) and CelebA(CelebFaces Attribute). Our proposed MFIQ model is compared with the popular deep face image quality models, including face quality net-v0 (FaceQnet-v0), face quality net-v1(FaceQnet-v1) and stochastic embedding robustness-face image quality (SER-FIQ). Under the metric area over curve(AOC), our model performance is improved by 14.8%, 0.1%, 2.9%, 3.7% and 4.9% in comparison with LFW, CelebA, DDFace, VGGFace2 and CASIA-WebFace databases, respectively. Furthermore, our MFIQ model is used to predict the face-related image quality in different datasets and the quality distributions of images are calculated. The experimental results show that our distributions predicted is close to the real distributions. Our MFIQ model performance is also compared with the other three models according to face-related images evaluation from singles and multiples. The results show that the proposed MFIQ performs better than SER-FIQ, FaceQnet-v0 and FaceQnet-v1.ConclusionOur research potentials are focused on more robustness and distinguishing ability for the key elements of multiple-level distorted images.关键词:face recognition;image quality assessment;face image utility quality;no reference;mask;pseudo reference105|199|1更新时间:2024-05-07 -

摘要:ObjectiveHuman facial expression can be as a human emotion style and information transmission carrier in the process of human-robot interaction. Thanks to the artificial intelligence (AI) development, facial expression recognition (FER) has been developing in the context of emotion understanding, human-robot interaction, safe driving, medical treatment, and communications. However, current facial expression recognition studies have been challenging of some problems like large background interference, complex network model parameters, and poor generalization. We develop a lightweight facial expression recognition method based on improved convolutional neural network (CNN-improved) and channel-weighted in order to improve its recognition and classification and the key feature information mining of facial expressions.MethodHuman facial expression recognition network is focused on facial-related image gathering, image preprocessing, feature extraction, and expression-related classification and recognition, amongst feature extraction is as the key step of the network structure. Our demonstration is illustrated as following: 1) different collections of expression-related datasets are obtained for indoor and outdoor scenarios. 2) Data-enhanced method is used to pre-process the expression-related image through avoiding the distorted background information and resolving the problems of over-fitting and poor robustness related to deep learning algorithms. 3) The lightweight expression network is designed and trained in terms of the enhanced depth-segmented convolutional channel feature. To reduce the network parameters effectively, deep-segmented convolution and global average pooling layer are deployed. The squeeze-and-excitation(SE) module is also embedded to optimize the model. Multi-channels-related compression rates are set to extract facial expression features more efficiently and thus the recognition ability of the network is improved. Our main contributions are clarified as mentioned below: 1) data preprocessing module: it is mainly based on data enhancement operations, such as image size normalization, random rotation and cropping, and random noise-added. The interference information is removed and the generalization of the model is improved. 2) Network model: a convolutional neural network (CNN) is adopted and an enhanced depth-segmented convolution channel feature module (also called basic block) for channel weighting is designed. The space and channel information in the local receptive field are extended by setting different compression rates originated from different convolution layers. 3) Verification: facial expression recognition method is performed on a number of popular public datasets and achieved high recognition accuracy.ResultThe best compression ratio combinations of SE modules are sorted out through experiments and embedded into the constructed lightweight network, and experimental evaluation is carried out on five commonly-used expression datasets. It shows that our recognition accuracy of the three indoor-related expression datasets of FER2013(Facial Expression Recognition 2013), CK+(the extended Cohn-Kanade) and JAFFE(Japanses Female Facial Expression) are 79.73%, 99.32%, and 98.48%, which are improved 5.72%, 0.51% and 0.28%. The two outdoor expression datasets of RAF-DB(Real-world Affective Faces Database) and AffectNet are obtained recognition accuracy of 86.14% and 61.78%, which are improved 2.01% and 0.67%. In contrast to the Xception neural network, a lightweight network is facilitated while the parameters are reduced by 63%. The average recognition speed can reach 128frame/s, which meets the real-time requirements.ConclusionOur lightweight expression recognition method has different weighting capabilities in different channels. The key expression information can be obtained. The generalization of this model is enhanced. To improve the recognition ability of the network effectively, our method can recognize facial expressions accurately based on network simplification and calculation cost optimization.关键词:expression recognition;image processing;convolutional neural network(CNN);depth separable convolution;global average pooling;squeeze-and-excitation(SE) module84|198|1更新时间:2024-05-07

摘要:ObjectiveHuman facial expression can be as a human emotion style and information transmission carrier in the process of human-robot interaction. Thanks to the artificial intelligence (AI) development, facial expression recognition (FER) has been developing in the context of emotion understanding, human-robot interaction, safe driving, medical treatment, and communications. However, current facial expression recognition studies have been challenging of some problems like large background interference, complex network model parameters, and poor generalization. We develop a lightweight facial expression recognition method based on improved convolutional neural network (CNN-improved) and channel-weighted in order to improve its recognition and classification and the key feature information mining of facial expressions.MethodHuman facial expression recognition network is focused on facial-related image gathering, image preprocessing, feature extraction, and expression-related classification and recognition, amongst feature extraction is as the key step of the network structure. Our demonstration is illustrated as following: 1) different collections of expression-related datasets are obtained for indoor and outdoor scenarios. 2) Data-enhanced method is used to pre-process the expression-related image through avoiding the distorted background information and resolving the problems of over-fitting and poor robustness related to deep learning algorithms. 3) The lightweight expression network is designed and trained in terms of the enhanced depth-segmented convolutional channel feature. To reduce the network parameters effectively, deep-segmented convolution and global average pooling layer are deployed. The squeeze-and-excitation(SE) module is also embedded to optimize the model. Multi-channels-related compression rates are set to extract facial expression features more efficiently and thus the recognition ability of the network is improved. Our main contributions are clarified as mentioned below: 1) data preprocessing module: it is mainly based on data enhancement operations, such as image size normalization, random rotation and cropping, and random noise-added. The interference information is removed and the generalization of the model is improved. 2) Network model: a convolutional neural network (CNN) is adopted and an enhanced depth-segmented convolution channel feature module (also called basic block) for channel weighting is designed. The space and channel information in the local receptive field are extended by setting different compression rates originated from different convolution layers. 3) Verification: facial expression recognition method is performed on a number of popular public datasets and achieved high recognition accuracy.ResultThe best compression ratio combinations of SE modules are sorted out through experiments and embedded into the constructed lightweight network, and experimental evaluation is carried out on five commonly-used expression datasets. It shows that our recognition accuracy of the three indoor-related expression datasets of FER2013(Facial Expression Recognition 2013), CK+(the extended Cohn-Kanade) and JAFFE(Japanses Female Facial Expression) are 79.73%, 99.32%, and 98.48%, which are improved 5.72%, 0.51% and 0.28%. The two outdoor expression datasets of RAF-DB(Real-world Affective Faces Database) and AffectNet are obtained recognition accuracy of 86.14% and 61.78%, which are improved 2.01% and 0.67%. In contrast to the Xception neural network, a lightweight network is facilitated while the parameters are reduced by 63%. The average recognition speed can reach 128frame/s, which meets the real-time requirements.ConclusionOur lightweight expression recognition method has different weighting capabilities in different channels. The key expression information can be obtained. The generalization of this model is enhanced. To improve the recognition ability of the network effectively, our method can recognize facial expressions accurately based on network simplification and calculation cost optimization.关键词:expression recognition;image processing;convolutional neural network(CNN);depth separable convolution;global average pooling;squeeze-and-excitation(SE) module84|198|1更新时间:2024-05-07 -

摘要:ObjectiveHuman eye fixation recognition has been developing in images-related computer vision in recent years. The distinctive salient regions of an image are selected for capturing visual structure better. Recent saliency models are developed through salient object detection, object segmentation and image cropping. Traditional applications are focused on hand-crafted features based on low-level cues (e.g., contrast, texture, color) for saliency prediction. However, these features are easily failed to simulate the complex activation of the human visual system, especially in complex scenarios. Existing eye fixation prediction models often use jump connections to fuse high-level and low-level features, which easily leads to the difficulty of weighing the importance of features between different levels, and the gazing problem are biased toward the center. Commonly, humans are inclined to look at the center of the image when there are no obvious salient regions. We develop layer attention mechanism that different weights are assigned to different layer features for selective layer features extraction, and the channel attention mechanism and spatial attention mechanism are integrated to selectively extract different channel and spatial features in convolutional features. In addition, we facilitate a method of Gaussian learning to solve the problem of the center priors and improve the prediction accuracy.MethodOur eye fixation prediction model is based on multiple attention mechanism network (MAM-Net), which uses three different attention mechanisms to weight the feature information of different layers, different channels, and different image pixels extracted by the ResNet-50 model with dilated convolution. Our network is mainly composed of the feature extraction module, the novel multiple attention mechanism (MAM) module, and the Gaussian learning optimization module. 1) A dilated convolution network is used to capture long-range information via extracting local and global feature maps, which can contain a lot of different receptive fields. 2) A MAM attention module is incorporated features from different contexts of layer, channels, and image pixels of feature maps and output an intermediate saliency map. 3) A Gaussian learning layer is used to select best kernel automatically to blur the intermediate saliency map and generate the final saliency map. Our MAM module aims to optimize the obtained low-level features automatically in the context of rich details and high-level global semantic information features, fully extract channel and spatial information, and prevent over-reliance on high-level features. The Gaussian learning module is used for the final optimization processing since human eyes tend to focus to the image center, which is inconsistent with the prediction results of common methods. The deficiency of setting Gaussian fuzzy parameters is avoided by human prior in our method.ResultExperiments on the public dataset saliency in context(SALICON) show that our results has improved Kullback-Leibler divergence (KLD), shuffled area under region of interest(ROC) curve (sAUC), and information gain (IG) evaluation criteria by 33%, 0.3%, and 6%; 53%, 0.6%, and 192%, respectively.ConclusionWe propose a novel attentive model for predicting human eye fixations on natural images. Our MAM-Net can be used to predict saliency map of an image, which extract high-level and low-level features. The channel and spatial attention mechanism can optimize the feature maps of different layers, and the layer attention mechanism can predict the saliency map of the image composed of high-level and low-level features as well. We illustrate a Gaussian learning blur layer in terms of the integrated saliency maps optimization with different kernel.关键词:eye fixation prediction;multiple attention;layer attention;channel attention;spatial attention;Gaussian learning121|212|0更新时间:2024-05-07

摘要:ObjectiveHuman eye fixation recognition has been developing in images-related computer vision in recent years. The distinctive salient regions of an image are selected for capturing visual structure better. Recent saliency models are developed through salient object detection, object segmentation and image cropping. Traditional applications are focused on hand-crafted features based on low-level cues (e.g., contrast, texture, color) for saliency prediction. However, these features are easily failed to simulate the complex activation of the human visual system, especially in complex scenarios. Existing eye fixation prediction models often use jump connections to fuse high-level and low-level features, which easily leads to the difficulty of weighing the importance of features between different levels, and the gazing problem are biased toward the center. Commonly, humans are inclined to look at the center of the image when there are no obvious salient regions. We develop layer attention mechanism that different weights are assigned to different layer features for selective layer features extraction, and the channel attention mechanism and spatial attention mechanism are integrated to selectively extract different channel and spatial features in convolutional features. In addition, we facilitate a method of Gaussian learning to solve the problem of the center priors and improve the prediction accuracy.MethodOur eye fixation prediction model is based on multiple attention mechanism network (MAM-Net), which uses three different attention mechanisms to weight the feature information of different layers, different channels, and different image pixels extracted by the ResNet-50 model with dilated convolution. Our network is mainly composed of the feature extraction module, the novel multiple attention mechanism (MAM) module, and the Gaussian learning optimization module. 1) A dilated convolution network is used to capture long-range information via extracting local and global feature maps, which can contain a lot of different receptive fields. 2) A MAM attention module is incorporated features from different contexts of layer, channels, and image pixels of feature maps and output an intermediate saliency map. 3) A Gaussian learning layer is used to select best kernel automatically to blur the intermediate saliency map and generate the final saliency map. Our MAM module aims to optimize the obtained low-level features automatically in the context of rich details and high-level global semantic information features, fully extract channel and spatial information, and prevent over-reliance on high-level features. The Gaussian learning module is used for the final optimization processing since human eyes tend to focus to the image center, which is inconsistent with the prediction results of common methods. The deficiency of setting Gaussian fuzzy parameters is avoided by human prior in our method.ResultExperiments on the public dataset saliency in context(SALICON) show that our results has improved Kullback-Leibler divergence (KLD), shuffled area under region of interest(ROC) curve (sAUC), and information gain (IG) evaluation criteria by 33%, 0.3%, and 6%; 53%, 0.6%, and 192%, respectively.ConclusionWe propose a novel attentive model for predicting human eye fixations on natural images. Our MAM-Net can be used to predict saliency map of an image, which extract high-level and low-level features. The channel and spatial attention mechanism can optimize the feature maps of different layers, and the layer attention mechanism can predict the saliency map of the image composed of high-level and low-level features as well. We illustrate a Gaussian learning blur layer in terms of the integrated saliency maps optimization with different kernel.关键词:eye fixation prediction;multiple attention;layer attention;channel attention;spatial attention;Gaussian learning121|212|0更新时间:2024-05-07 -

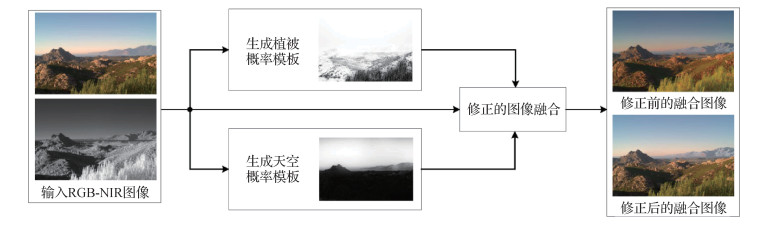

摘要:ObjectiveThe imaging mechanism of near-infrared (NIR) images is different from that of visible images. It can receive the infrared radiation emitted by the object and convert it into grayscale values. Therefore, stronger infrared radiation in the scene yields higher grayscale value in the NIR image and its adaptability to harsh environments (e.g., fog, haze) is better than the visible light imaging. To take advantage of NIR images, RGB-NIR image fusion is a common and effective processing method, which has been widely used in various image vision applications, including recognition, detection and surveillance. Multiple objects will have different imaging results in the same image in terms of their reflection and infrared radiation features, and the same object will have different appearances in visible and NIR images as well. For example, the vegetation part appears as low gray-scale values in the RGB image, but high gray-scale values in the NIR image. In addition, current image fusion algorithms have been challenging in specific regions like vegetation and sky. Therefore, an accurate and robust region detection method is necessary for regional-based processing. However, most algorithms are concerned of single image only and cannot meet the requirements for RGB-NIR image-fused region detection.MethodWe develop a probability-mask generation method from vegetation and sky regions based on RGB-NIR image pairs. The vegetation region: 1) to preserve high contrast and smooth transition, we obtain the ratio of multiple channels of RGB images with the extended normalized difference vegetation index (NDVI). 2) To avoid the extreme case that the red channel is of value minimum or maximum, we use the relationship between NIR and luminance instead of red channel. 3) To get the detection result, we integrate the ratio-guided and the NDVI-extended into the probability mask of vegetation. The sky-region: 1) the local-entropy feature of the RGB image is calculated and a transmission map is for guidance. 2) The guided feature and the extended NDVI is combined, and the results with the height of pixels is enhanced, according to the prior that the sky basically has a great probability of appearing in the upper part of the natural-scene image. 3) The result is a probability mask and considered as the sky detection result. The vegetation and sky detection based algorithms produce corresponding probability masks. We can incorporate them into RGB-NIR image fusion algorithms to improve image quality. The original algorithm uses the Laplacian-Gaussian pyramid and the weight map for multi-scale fusion. We modify the weight map of the NIR image by multiplying it with the vegetation and sky probability masks, and then replace the original NIR weight map with the modified one. The rest of the fusion algorithm remains unchanged.ResultOur algorithm is evaluated on a public dataset containing outdoors images, including country, field, forest, indoor, mountain, old-building, street, urban, and water. To express the health of vegetation in the field of remote sensing, we compare the proposed vegetation detection algorithm with the traditional NDVI. The experimental results of image fusion indicate that our image fusion algorithm can perform better by incorporating the region masks both quantitatively and qualitatively, and produce more realistic and natural images perceptually. Moreover, we analyze the difference between the probability mask and the binary mask when applied to image fusion in the same way. The results show that selected probability mask makes the fused images more colorful and rich in details.ConclusionOur probability mask generation algorithm of vegetation and sky is potential to high accuracy and robustness. Specifically, the detected areas in result images are accurate with clear details and smooth transition, and small objects can be segmented properly. Moreover, our algorithm is beneficial to improving the performance of RGB-NIR image fusion, especially on weight map, making the results have enhanced details and natural colors. It is easy-used without complicated calculations. It is worthy note that our algorithm is more suitable for natural scenes generally.关键词:vegetation detection;sky detection;probability mask;image fusion;visual enhancement53|70|0更新时间:2024-05-07