最新刊期

卷 27 , 期 11 , 2022

-

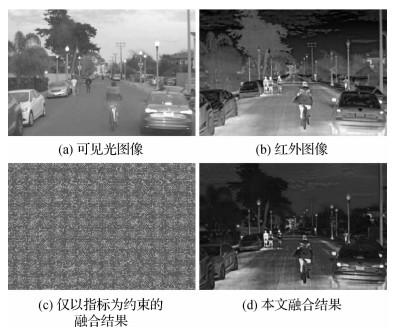

摘要:Body action oriented recognition issue is an essential domain for video interpretation of computer vision analysis. Its potentials can be focused on accurate video-based features extraction for body actions and the related recognition for multiple applications. The data modes of body action recognition modals can be segmented into RGB, depth, skeleton and fusion, respectively. Our multi-modals based critical analysis reviews the research and development of body action recognition algorithm. Our literature review is systematically focused on current algorithms or models. First, we introduce the key aspects of body action recognition method, which can be divided into video input, feature extraction, classification and output results. Next, we introduce the popular datasets of different data modal in the context of body action recognition, including human motion database(HMDB-51), UCF101 dataset, Something-Something datasets of RGB mode, depth modal and skeleton-mode MSR-Action3D dataset, MSR daily activity dataset, UTD-multimodal human action recognition dataset(MHAD) and RGB mode/depth mode/skeleton modal based NTU RGB + D 60/120 dataset, the characteristics of each dataset are explained in detail. Compared to more action recognition reviews, our contributions can be proposed as following: 1) data modal/method/datasets classifications are more instructive; 2) data modal/fusion for body action recognition is discussed more comprehensively; 3) recent challenges of body action recognition is just developed in deep learning and lacks of early manual features methods. We analyze the pros of manual features and deep learning; and 4) their advantages and disadvantages of different data modal, the challenges of action recognition and the future research direction are discussed. According to the data modal classification, the traditional manual feature and deep learning action recognition methods are reviewed via modals analysis of RGB/depth modal/skeleton, as well as multi-modal fused classification and related fusion methods of RGB modal and depth modal. For RGB modal, the traditional manual feature method is related to spatiotemporal volume, spatiotemporal based interest points and the skeleton trajectory based method. The deep learning method involves 3D convolutional neural network and double flow network. 3D convolution can construct the relationship between spatial and temporal dimensions, and the spatiotemporal features extraction is taken into account. The manual feature depth modal methods involve motion change/appearance features. The depth learning method includes representative point cloud network. Point cloud network is leveraged from image processing network. It can extract action features better via point sets processing. The skeleton modal oriented manual method is mainly based on skeleton features, and the deep learning technique mainly uses graph convolution network. Graph convolution network is suitable for the graph shape characteristics of skeleton data, which is beneficial to the transmission of information between skeletons. Next, we summarize the recognition accuracy of representative algorithms and models on RGB modal HMDB-51 dataset, UCF101 dataset and Something-Something V2 dataset, select the data representing manual feature method and depth learning method, and accomplish a histogram for more specific comparative results. For depth modal, we collect the recognition rates of some algorithms and models on MSR-Action3D dataset and NTU RGB + D 60 depth dataset. For skeleton data, we select NTU RGB + D 60 skeleton dataset and NTU RGB + D 120 skeleton dataset, the recognition accuracy of the model is compared. At the same time, we draw the clue of the parameters that need to be trained in the model in recent years. For the multi-modal fusion method, we adopt NTU RGB + D 60 dataset including RGB modal, depth modal and skeleton modal. The comparative recognition rate of single modal is derived of the same algorithm or model and the improved accuracy after multi-modal fusion. We sorted out that RGB modal, depth modal and skeleton modal have their potentials/drawbacks to match applicable scenarios. The fusion of multiple modals can complement mutual information to a certain extent and improve the recognition effect; manual feature method is suitable for some small datasets, and the algorithm complexity is lower; deep learning is suitable for large datasets and can automatically extract features from a large number of data; more researches have changed from deepening the network to lightweight and high recognition rate network. Finally, the current problems and challenges of body action recognition technology are summarized on the aspects of multiple body action recognition, fast and similar body action recognition, and depth and skeleton data collection. At the same time, the data modal issues are predicted further in terms of effective modal fusion, novel network design, and added attention module.关键词:computer vision;action recognition;deep learning;neural network;multimodal;modal fusion357|235|2更新时间:2024-05-07

摘要:Body action oriented recognition issue is an essential domain for video interpretation of computer vision analysis. Its potentials can be focused on accurate video-based features extraction for body actions and the related recognition for multiple applications. The data modes of body action recognition modals can be segmented into RGB, depth, skeleton and fusion, respectively. Our multi-modals based critical analysis reviews the research and development of body action recognition algorithm. Our literature review is systematically focused on current algorithms or models. First, we introduce the key aspects of body action recognition method, which can be divided into video input, feature extraction, classification and output results. Next, we introduce the popular datasets of different data modal in the context of body action recognition, including human motion database(HMDB-51), UCF101 dataset, Something-Something datasets of RGB mode, depth modal and skeleton-mode MSR-Action3D dataset, MSR daily activity dataset, UTD-multimodal human action recognition dataset(MHAD) and RGB mode/depth mode/skeleton modal based NTU RGB + D 60/120 dataset, the characteristics of each dataset are explained in detail. Compared to more action recognition reviews, our contributions can be proposed as following: 1) data modal/method/datasets classifications are more instructive; 2) data modal/fusion for body action recognition is discussed more comprehensively; 3) recent challenges of body action recognition is just developed in deep learning and lacks of early manual features methods. We analyze the pros of manual features and deep learning; and 4) their advantages and disadvantages of different data modal, the challenges of action recognition and the future research direction are discussed. According to the data modal classification, the traditional manual feature and deep learning action recognition methods are reviewed via modals analysis of RGB/depth modal/skeleton, as well as multi-modal fused classification and related fusion methods of RGB modal and depth modal. For RGB modal, the traditional manual feature method is related to spatiotemporal volume, spatiotemporal based interest points and the skeleton trajectory based method. The deep learning method involves 3D convolutional neural network and double flow network. 3D convolution can construct the relationship between spatial and temporal dimensions, and the spatiotemporal features extraction is taken into account. The manual feature depth modal methods involve motion change/appearance features. The depth learning method includes representative point cloud network. Point cloud network is leveraged from image processing network. It can extract action features better via point sets processing. The skeleton modal oriented manual method is mainly based on skeleton features, and the deep learning technique mainly uses graph convolution network. Graph convolution network is suitable for the graph shape characteristics of skeleton data, which is beneficial to the transmission of information between skeletons. Next, we summarize the recognition accuracy of representative algorithms and models on RGB modal HMDB-51 dataset, UCF101 dataset and Something-Something V2 dataset, select the data representing manual feature method and depth learning method, and accomplish a histogram for more specific comparative results. For depth modal, we collect the recognition rates of some algorithms and models on MSR-Action3D dataset and NTU RGB + D 60 depth dataset. For skeleton data, we select NTU RGB + D 60 skeleton dataset and NTU RGB + D 120 skeleton dataset, the recognition accuracy of the model is compared. At the same time, we draw the clue of the parameters that need to be trained in the model in recent years. For the multi-modal fusion method, we adopt NTU RGB + D 60 dataset including RGB modal, depth modal and skeleton modal. The comparative recognition rate of single modal is derived of the same algorithm or model and the improved accuracy after multi-modal fusion. We sorted out that RGB modal, depth modal and skeleton modal have their potentials/drawbacks to match applicable scenarios. The fusion of multiple modals can complement mutual information to a certain extent and improve the recognition effect; manual feature method is suitable for some small datasets, and the algorithm complexity is lower; deep learning is suitable for large datasets and can automatically extract features from a large number of data; more researches have changed from deepening the network to lightweight and high recognition rate network. Finally, the current problems and challenges of body action recognition technology are summarized on the aspects of multiple body action recognition, fast and similar body action recognition, and depth and skeleton data collection. At the same time, the data modal issues are predicted further in terms of effective modal fusion, novel network design, and added attention module.关键词:computer vision;action recognition;deep learning;neural network;multimodal;modal fusion357|235|2更新时间:2024-05-07 -

摘要:The lung adenocarcinoma oriented median survival intervals can be significantly extended through specific targeted therapy based on the identified gene-driven following. Current biopsy is regarded as the "gold standard" for gene-driven tumor detection in clinical practice. Such invasive examination has a certain probability of misdiagnosis and missed diagnosis due to the tumor heterogeneity. Moreover, some of the molecular biology detection technologies are time-consuming and costly, such as the next generation of sequencing and fluorescence in situ hybridization. Therefore, radiogenomics has emerged and provided a new non-invasive method for the prediction of tumor molecular typing. As the most commonly-used way to monitor the lung cancer-related curative effect, computed tomography (CT) has its potential of short-term scanning, high resolution and relatively low-cost, which can carry out the tumor evaluation overall. It makes up the deficiency of biopsy to a certain extent. Thanks to the development of molecular targeted drugs in the context of lung adenocarcinoma treatment, most of researchers have been committed to using medical images to predict the molecular typing of lung adenocarcinoma. We carry out the critical review to harness CT images based molecular typing of lung adenocarcinoma. 1) Current situation of lung adenocarcinoma-oriented molecular typing and the key gene mutation types are introduced. 2) Existing CT images-related methods are divided into two categories: the correlation analysis of CT semantic features and the molecular subtype of lung adenocarcinoma, and the prediction model of molecular typing based on machine learning (ML). Among them, the ML-based prediction model is mainly introduced, which includes radiomics model and deep learning neural network model. 3) Some challenging problems are summarized in this field, and the future research direction is predicted. The correlation between semantic features and molecular typing of lung adenocarcinoma is derived of naked eyes visible tumor features. But, the predicted accuracy is still relatively low. Furthermore, the prediction model is demonstrated based on extracted features of radiomics from the segmented tumor images, and the selected radiomics features are input into the machine learning classifier to obtain the final prediction results. This method is still subject to human subjective influence to some extent, such as the stage of tumor segmentation and pre-setting features. To extract higher-level features for higher prediction accuracy, the convolutional neural network based (CNN-based) deep learning technology can beneficial for low-level features learning in tumor images. The deep learning model has less human-derived intervention, but it needs to be trained and verified through a large amount of data, and the expected effect cannot be achieved temporarily via a small sample. A challenging issue is to be tackled for the complex status of genetic mutations in lung adenocarcinoma and a complete and standardized database for generalization ability. With the database development of gradual standardization and expansion, future research direction can be focused on the construction of large-sample deep learning prediction model based on the integration of multiple medical images. To achieve noninvasive and accurate prediction of molecular typing of lung adenocarcinoma, the model optimization should combine clinical information, CT semantic features and radiomics features further.关键词:non small cell lung cancer;adenocarcinoma;molecular typing;radiogenomics;computed tomography (CT)78|71|0更新时间:2024-05-07

摘要:The lung adenocarcinoma oriented median survival intervals can be significantly extended through specific targeted therapy based on the identified gene-driven following. Current biopsy is regarded as the "gold standard" for gene-driven tumor detection in clinical practice. Such invasive examination has a certain probability of misdiagnosis and missed diagnosis due to the tumor heterogeneity. Moreover, some of the molecular biology detection technologies are time-consuming and costly, such as the next generation of sequencing and fluorescence in situ hybridization. Therefore, radiogenomics has emerged and provided a new non-invasive method for the prediction of tumor molecular typing. As the most commonly-used way to monitor the lung cancer-related curative effect, computed tomography (CT) has its potential of short-term scanning, high resolution and relatively low-cost, which can carry out the tumor evaluation overall. It makes up the deficiency of biopsy to a certain extent. Thanks to the development of molecular targeted drugs in the context of lung adenocarcinoma treatment, most of researchers have been committed to using medical images to predict the molecular typing of lung adenocarcinoma. We carry out the critical review to harness CT images based molecular typing of lung adenocarcinoma. 1) Current situation of lung adenocarcinoma-oriented molecular typing and the key gene mutation types are introduced. 2) Existing CT images-related methods are divided into two categories: the correlation analysis of CT semantic features and the molecular subtype of lung adenocarcinoma, and the prediction model of molecular typing based on machine learning (ML). Among them, the ML-based prediction model is mainly introduced, which includes radiomics model and deep learning neural network model. 3) Some challenging problems are summarized in this field, and the future research direction is predicted. The correlation between semantic features and molecular typing of lung adenocarcinoma is derived of naked eyes visible tumor features. But, the predicted accuracy is still relatively low. Furthermore, the prediction model is demonstrated based on extracted features of radiomics from the segmented tumor images, and the selected radiomics features are input into the machine learning classifier to obtain the final prediction results. This method is still subject to human subjective influence to some extent, such as the stage of tumor segmentation and pre-setting features. To extract higher-level features for higher prediction accuracy, the convolutional neural network based (CNN-based) deep learning technology can beneficial for low-level features learning in tumor images. The deep learning model has less human-derived intervention, but it needs to be trained and verified through a large amount of data, and the expected effect cannot be achieved temporarily via a small sample. A challenging issue is to be tackled for the complex status of genetic mutations in lung adenocarcinoma and a complete and standardized database for generalization ability. With the database development of gradual standardization and expansion, future research direction can be focused on the construction of large-sample deep learning prediction model based on the integration of multiple medical images. To achieve noninvasive and accurate prediction of molecular typing of lung adenocarcinoma, the model optimization should combine clinical information, CT semantic features and radiomics features further.关键词:non small cell lung cancer;adenocarcinoma;molecular typing;radiogenomics;computed tomography (CT)78|71|0更新时间:2024-05-07

Review

-

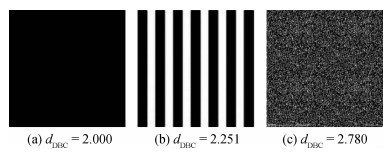

摘要:ObjectiveDigital image has the ability of intuitive and clear information expression, and always carries a lot of valuable information. Therefore, the security of digital image is very important. With the rapid development and popularization of internet of things (IOT) which contains a large number of low-performance electronic devices, and the demand of security and efficient image encryption method in low computing precision environment is becoming more and more urgent. Recently, the chaos-based encryption system is the kind of most representative image encryption method. This type of encryption method depends on float operations and high computing precision. Therefore, the randomness of these chaotic systems in low computing precision will be severely damaged, and results in a sharp decrease about the security of the corresponding encryption method. Moreover, the high time complexity always causes the chaos-based image encryption methods to failure to meet the actual demand of low performance device. To solve the above problems, this paper proposes a batch encryption method based on prime modulo multiplication linear congruence generator to improve the security and efficiency of image encryption in low computing precision environments.MethodThe main idea of the method is to construct a prime modulo multiplication linear congruence generator, which can work well and generate a uniformly distributed pseudo-random sequence at low computing precision. The main steps are as follows: firstly, the set of images are equally divided into three groups and each group generates one combined image based on XOR operation; secondly, the hash value of the set of images is introduced to update the third combined image; thirdly, we introduce a single-byte based prime modulo multiplication linear congruence generator, the updated combined image and the other two combined images are used as the input of the proposed generator to generate an encryption sequence matrix; then the encryption sequence matrix is used as parameter to scramble images; after that, the encryption sequence matrix is exploited to diffuse the scrambled images, and use XOR operation to generate cipher images; finally, the encryption sequence matrix would be encrypted by the improved 2D-SCL which is the existing state-of-art encryption method, thus the cipher images and encrypted sequence matrix can be safely transmitted.ResultThe proposed encryption method has been evaluated by simulation tests in low (2-8) computing precision surroundings. The simulation results show that the statistical information of cipher image are very good in low precision environment, such as low correlation (close to 0), high information entropy (close to 8) and high pass rate of number of pixel changing rate(NPCR) and unified average changed intensity(UACI) which are greater than 90%. In addition, the simulation results also show that the proposed encryption method works well on many attacks in low precision environment, such as high pixel sensitivity, large key space (more than2128), and high resistance of known-plaintext and chosen-plaintext attack, occlusion attack and noise attack. Moreover, the encryption speed of this method is improved compared with the comparative encryption method which has low time complexity.ConclusionThe proposed method can be effectively operated in low computing precision environment by using the single-byte prime modulo multiplication linear congruence generator instead of chaos system. As simulations results shown that the proposed method not only achieves high security for image encryption in low computing precision environment, but also effectively reduces the time cost of image encryption. In addition, the proposed method also provides a new direction for subsequent research of efficient and security image encryption method.关键词:batch image encryption;low precision;security;encryption speed;prime modulo multiplication linear congruence generator(PMMLCG)101|137|0更新时间:2024-05-07

摘要:ObjectiveDigital image has the ability of intuitive and clear information expression, and always carries a lot of valuable information. Therefore, the security of digital image is very important. With the rapid development and popularization of internet of things (IOT) which contains a large number of low-performance electronic devices, and the demand of security and efficient image encryption method in low computing precision environment is becoming more and more urgent. Recently, the chaos-based encryption system is the kind of most representative image encryption method. This type of encryption method depends on float operations and high computing precision. Therefore, the randomness of these chaotic systems in low computing precision will be severely damaged, and results in a sharp decrease about the security of the corresponding encryption method. Moreover, the high time complexity always causes the chaos-based image encryption methods to failure to meet the actual demand of low performance device. To solve the above problems, this paper proposes a batch encryption method based on prime modulo multiplication linear congruence generator to improve the security and efficiency of image encryption in low computing precision environments.MethodThe main idea of the method is to construct a prime modulo multiplication linear congruence generator, which can work well and generate a uniformly distributed pseudo-random sequence at low computing precision. The main steps are as follows: firstly, the set of images are equally divided into three groups and each group generates one combined image based on XOR operation; secondly, the hash value of the set of images is introduced to update the third combined image; thirdly, we introduce a single-byte based prime modulo multiplication linear congruence generator, the updated combined image and the other two combined images are used as the input of the proposed generator to generate an encryption sequence matrix; then the encryption sequence matrix is used as parameter to scramble images; after that, the encryption sequence matrix is exploited to diffuse the scrambled images, and use XOR operation to generate cipher images; finally, the encryption sequence matrix would be encrypted by the improved 2D-SCL which is the existing state-of-art encryption method, thus the cipher images and encrypted sequence matrix can be safely transmitted.ResultThe proposed encryption method has been evaluated by simulation tests in low (2-8) computing precision surroundings. The simulation results show that the statistical information of cipher image are very good in low precision environment, such as low correlation (close to 0), high information entropy (close to 8) and high pass rate of number of pixel changing rate(NPCR) and unified average changed intensity(UACI) which are greater than 90%. In addition, the simulation results also show that the proposed encryption method works well on many attacks in low precision environment, such as high pixel sensitivity, large key space (more than2128), and high resistance of known-plaintext and chosen-plaintext attack, occlusion attack and noise attack. Moreover, the encryption speed of this method is improved compared with the comparative encryption method which has low time complexity.ConclusionThe proposed method can be effectively operated in low computing precision environment by using the single-byte prime modulo multiplication linear congruence generator instead of chaos system. As simulations results shown that the proposed method not only achieves high security for image encryption in low computing precision environment, but also effectively reduces the time cost of image encryption. In addition, the proposed method also provides a new direction for subsequent research of efficient and security image encryption method.关键词:batch image encryption;low precision;security;encryption speed;prime modulo multiplication linear congruence generator(PMMLCG)101|137|0更新时间:2024-05-07 -

摘要:ObjectiveUnderwater-relevant object detection aims to localize and recognize the objects of underwater scenarios. Our research is essential for its widespread applications in oceanography, underwater navigation and fish farming. Current deep convolutional neural network based (DCNN-based) object detection is via large-scale trained datasets like pattern analysis, statistical modeling and computational learning visual object classes 2007 (PASCAL VOC 2007) and Microsoft common objects in context (MS COCO) with degradation-ignored. Nevertheless, the issue of degradation-related has to be resolved as mentioned below: 1) the scarce underwater-relevant detection datasets affects its detection accuracy, which inevitably leads to overfitting of deep neural network models. 2) Underwater-relevant images have the features of low contrast, texture distortion and blur under the complicated underwater environment and illumination circumstances, which limits the detection accuracy of the detection algorithms. In practice, image augmentation method is to alleviate the insufficient problem of datasets. However, image augmentation has limited performance improvement of deep neural network models on small datasets. Another feasible detection solution is to restore (enhance) the underwater-relevant image for a clear image (mainly based on deep learning methods), improve its visibility and contrast, and reduce color cast. Actually, some detection results are relied on synthetic datasets training due to the lack of ground truth images. Its enhancement effect of ground truth images largely derived of the quality of synthetic images. Our pre-trained model is effective for underwater scenes because it is difficult to train a high-accuracy detector. Clear images-based deep neural network detection models' training are difficult to generalize underwater scenes directly because of the domain shift issue caused by imaging differences. We develop a plug-and-play feature enhancement module, which can effectively address the domain shift issue between clear images and underwater images via restoring the features of underwater images extracted from the low-level network. The clear image-based detection network training can be directly applied to underwater image object detection.MethodFirst, to synthesize the underwater version based on an improved light scattering model for underwater imaging, we propose an underwater image synthesis method, which first estimates color cast and luminance from real underwater images and integrate them with the estimated scene depth of a clear image. Next, we design a lightweight feature enhancement module named feature de-drifting module Unet (FDM-Unet) originated from the Unet structure. Third, to extract the shallow features of clear images and their corresponding synthetic underwater images, we use common detectors (e.g., you only look once v3 (YOLO v3) and single shot multibox detector (SSD)) pre-trained on clear images. The shallow feature of the underwater image is input into FDM-Unet for feature de-drifting. To supervise the training of FDM-Unet, our calculated mean square error loss is in terms of the interconnections of the enhanced feature and the original shallow feature. Finally, the embedded training results do not include re-training or fine-tuning further after getting the shallow layer of the pre-trained detectors.ResultThe experimental results show that our FDM-Unet can improve the detection accuracy by 8.58% mean average precision (mAP) and 7.71% mAP on the PASCAL VOC 2007 synthetic underwater image test set for pre-trained detectors YOLO v3 and SSD, respectively. In addition, on the real underwater dataset underwater robot professional contest 19 (URPC19), using different proportions of data for fine-tuning, FDM-Unet can improve the detection accuracy by 4.4%~10.6% mAP and 3.9%~10.7% mAP in contrast to the vanilla detectors YOLO v3 and SSD, respectively.ConclusionOur FDM-Unet can be as a plug-and-play module at the cost of increasing the very small number of parameters and calculation. The detection accuracy of the pre-trained model is improved greatly with no need of retraining or fine-tuning the detection model on the synthetic underwater image. Real underwater fine-tuning experiments show that our FDM-Unet can improve the detection performance compared to the baseline. In addition, the fine-tuning performance can improve the pre-trained detection model for real underwater image beyond synthesis image.关键词:convolutional neural network(CNN);object detection;feature enhancement;imaging model;image synthesis261|192|2更新时间:2024-05-07

摘要:ObjectiveUnderwater-relevant object detection aims to localize and recognize the objects of underwater scenarios. Our research is essential for its widespread applications in oceanography, underwater navigation and fish farming. Current deep convolutional neural network based (DCNN-based) object detection is via large-scale trained datasets like pattern analysis, statistical modeling and computational learning visual object classes 2007 (PASCAL VOC 2007) and Microsoft common objects in context (MS COCO) with degradation-ignored. Nevertheless, the issue of degradation-related has to be resolved as mentioned below: 1) the scarce underwater-relevant detection datasets affects its detection accuracy, which inevitably leads to overfitting of deep neural network models. 2) Underwater-relevant images have the features of low contrast, texture distortion and blur under the complicated underwater environment and illumination circumstances, which limits the detection accuracy of the detection algorithms. In practice, image augmentation method is to alleviate the insufficient problem of datasets. However, image augmentation has limited performance improvement of deep neural network models on small datasets. Another feasible detection solution is to restore (enhance) the underwater-relevant image for a clear image (mainly based on deep learning methods), improve its visibility and contrast, and reduce color cast. Actually, some detection results are relied on synthetic datasets training due to the lack of ground truth images. Its enhancement effect of ground truth images largely derived of the quality of synthetic images. Our pre-trained model is effective for underwater scenes because it is difficult to train a high-accuracy detector. Clear images-based deep neural network detection models' training are difficult to generalize underwater scenes directly because of the domain shift issue caused by imaging differences. We develop a plug-and-play feature enhancement module, which can effectively address the domain shift issue between clear images and underwater images via restoring the features of underwater images extracted from the low-level network. The clear image-based detection network training can be directly applied to underwater image object detection.MethodFirst, to synthesize the underwater version based on an improved light scattering model for underwater imaging, we propose an underwater image synthesis method, which first estimates color cast and luminance from real underwater images and integrate them with the estimated scene depth of a clear image. Next, we design a lightweight feature enhancement module named feature de-drifting module Unet (FDM-Unet) originated from the Unet structure. Third, to extract the shallow features of clear images and their corresponding synthetic underwater images, we use common detectors (e.g., you only look once v3 (YOLO v3) and single shot multibox detector (SSD)) pre-trained on clear images. The shallow feature of the underwater image is input into FDM-Unet for feature de-drifting. To supervise the training of FDM-Unet, our calculated mean square error loss is in terms of the interconnections of the enhanced feature and the original shallow feature. Finally, the embedded training results do not include re-training or fine-tuning further after getting the shallow layer of the pre-trained detectors.ResultThe experimental results show that our FDM-Unet can improve the detection accuracy by 8.58% mean average precision (mAP) and 7.71% mAP on the PASCAL VOC 2007 synthetic underwater image test set for pre-trained detectors YOLO v3 and SSD, respectively. In addition, on the real underwater dataset underwater robot professional contest 19 (URPC19), using different proportions of data for fine-tuning, FDM-Unet can improve the detection accuracy by 4.4%~10.6% mAP and 3.9%~10.7% mAP in contrast to the vanilla detectors YOLO v3 and SSD, respectively.ConclusionOur FDM-Unet can be as a plug-and-play module at the cost of increasing the very small number of parameters and calculation. The detection accuracy of the pre-trained model is improved greatly with no need of retraining or fine-tuning the detection model on the synthetic underwater image. Real underwater fine-tuning experiments show that our FDM-Unet can improve the detection performance compared to the baseline. In addition, the fine-tuning performance can improve the pre-trained detection model for real underwater image beyond synthesis image.关键词:convolutional neural network(CNN);object detection;feature enhancement;imaging model;image synthesis261|192|2更新时间:2024-05-07

Image Processing and Coding

-

摘要:ObjectiveImage aesthetic assessment is oriented to simulate human perception of beauty and identify image-related aesthetic quality assessment. It is essential for computer vision applications in the context of image forecasting, photos portfolio management, image enhancement and retrieval. Current image aesthetic quality evaluation method has been mainly focused on three major tasks as mentioned below: 1) aesthetic binary classification: divide images quality into high aesthetic and low aesthetic context; 2) aesthetic score regression: calculate the overall aesthetic average score of an image; 3) aesthetics distribution prediction: predict the probability of different aesthetic ratings of an image. Beyond binary classification to aesthetic score regression, more aesthetic information can be provided via the prediction of aesthetic distribution. However, these methods are still restricted of the factors of aesthetic prior knowledge and challenged for the source of aesthetic feeling. Image attributes has rich aesthetic contexts like content, brightness, depth of field and color richness. As a "hub" between image low-level features and aesthetic quality, these attributes can enhance the interpretability of aesthetic evaluation and play an important role in image aesthetic quality assessment. The aesthetic quality of an image is judged with a specific scene in common. Specifically, people make aesthetic judgments according to multiple aesthetic attributes. There is a strong correlation between aesthetic attributes and aesthetic quality, and the aesthetic attributes can provide interpretable details for aesthetic quality assessment. For instance, to assess a portrait image, we focus on the details of the foreground rather than those of the background. In contrast, we tend to treat the details less important than in the assessment of a portrait image for assessing a landscape image. Hence, we facilitate an image aesthetic attribute prediction model based on multi-tasks deep learning technique, which uses scene information to assist image aesthetic attributes prediction. More accurate image aesthetic score prediction is achieved.MethodThe model consists of a two-stream deep residual network. To obtain the scene information of the image, the first stream of the network is trained based on the scene prediction task. To predict the aesthetic attributes and overall aesthetic scores of the image, the second stream is used to extract the aesthetic features of the image, and then combine the two features for training through multi-tasks learning. In order to use the scene information of the image to assist the prediction of aesthetic attributes, we train the first stream of the network to predict the image scene category. After training the scene prediction stream, we train the attribute prediction stream via attributes-labeled aesthetic images. We use concatenation to fuse the features of the dual-stream network, and the full connection layers are trained to obtain the joint distribution of the aesthetic attributes and the overall score. For each image aesthetic attribute, we want to get its individual regression score. Our mean square error (MSE) loss function is used to measure the degree of difference between the predicted value and the ground truth. Our experiment is based on the aesthetic and attributes database (AADB). AADB consists of a total of 10 000 images, and the standard partition is followed on the basis of 8 500 images for training, 500 images for validation and the remaining 1 000 images for testing. We scale the images to 256×256×3 before inputting to the network. The i7-10700 CPU and NVIDIA GTX 1660 super GPU are equipped. The batch size is set to 12, epoch is set to 15, and adam optimization algorithm is used. The learning rate of the backbone network is set to 1E-5, and the learning rate of fully connected network is set to 1E-6. In Combination with the image scene information, the proposed model improves the prediction accuracy in terms of the image aesthetic attributes and aesthetic scores.ResultOur method has improved the prediction accuracy of the majority of aesthetic attributes, and the correlation coefficient of the overall aesthetic score prediction has also improved about 6%, which is feasible to melt scene information into the prediction of aesthetic attributes.ConclusionThe integrated scene information for aesthetic attributes prediction clarify the intimate relation between image scene category and aesthetic attributes, and the experimental results demonstrate that our scene information has its potentials for image aesthetic quality assessment. The future research direction can be focused on deep relationship between scene semantics and image aesthetics. This deep relationship could build a more robust image aesthetic assessment framework, which can consistently improve the performance of image aesthetic quality assessment, as well as enhance the interpretability of aesthetic assessment.关键词:image aesthetic quality assessment;aesthetic attributes;deep convolution network;multi-task learning;scene classification167|441|0更新时间:2024-05-07

摘要:ObjectiveImage aesthetic assessment is oriented to simulate human perception of beauty and identify image-related aesthetic quality assessment. It is essential for computer vision applications in the context of image forecasting, photos portfolio management, image enhancement and retrieval. Current image aesthetic quality evaluation method has been mainly focused on three major tasks as mentioned below: 1) aesthetic binary classification: divide images quality into high aesthetic and low aesthetic context; 2) aesthetic score regression: calculate the overall aesthetic average score of an image; 3) aesthetics distribution prediction: predict the probability of different aesthetic ratings of an image. Beyond binary classification to aesthetic score regression, more aesthetic information can be provided via the prediction of aesthetic distribution. However, these methods are still restricted of the factors of aesthetic prior knowledge and challenged for the source of aesthetic feeling. Image attributes has rich aesthetic contexts like content, brightness, depth of field and color richness. As a "hub" between image low-level features and aesthetic quality, these attributes can enhance the interpretability of aesthetic evaluation and play an important role in image aesthetic quality assessment. The aesthetic quality of an image is judged with a specific scene in common. Specifically, people make aesthetic judgments according to multiple aesthetic attributes. There is a strong correlation between aesthetic attributes and aesthetic quality, and the aesthetic attributes can provide interpretable details for aesthetic quality assessment. For instance, to assess a portrait image, we focus on the details of the foreground rather than those of the background. In contrast, we tend to treat the details less important than in the assessment of a portrait image for assessing a landscape image. Hence, we facilitate an image aesthetic attribute prediction model based on multi-tasks deep learning technique, which uses scene information to assist image aesthetic attributes prediction. More accurate image aesthetic score prediction is achieved.MethodThe model consists of a two-stream deep residual network. To obtain the scene information of the image, the first stream of the network is trained based on the scene prediction task. To predict the aesthetic attributes and overall aesthetic scores of the image, the second stream is used to extract the aesthetic features of the image, and then combine the two features for training through multi-tasks learning. In order to use the scene information of the image to assist the prediction of aesthetic attributes, we train the first stream of the network to predict the image scene category. After training the scene prediction stream, we train the attribute prediction stream via attributes-labeled aesthetic images. We use concatenation to fuse the features of the dual-stream network, and the full connection layers are trained to obtain the joint distribution of the aesthetic attributes and the overall score. For each image aesthetic attribute, we want to get its individual regression score. Our mean square error (MSE) loss function is used to measure the degree of difference between the predicted value and the ground truth. Our experiment is based on the aesthetic and attributes database (AADB). AADB consists of a total of 10 000 images, and the standard partition is followed on the basis of 8 500 images for training, 500 images for validation and the remaining 1 000 images for testing. We scale the images to 256×256×3 before inputting to the network. The i7-10700 CPU and NVIDIA GTX 1660 super GPU are equipped. The batch size is set to 12, epoch is set to 15, and adam optimization algorithm is used. The learning rate of the backbone network is set to 1E-5, and the learning rate of fully connected network is set to 1E-6. In Combination with the image scene information, the proposed model improves the prediction accuracy in terms of the image aesthetic attributes and aesthetic scores.ResultOur method has improved the prediction accuracy of the majority of aesthetic attributes, and the correlation coefficient of the overall aesthetic score prediction has also improved about 6%, which is feasible to melt scene information into the prediction of aesthetic attributes.ConclusionThe integrated scene information for aesthetic attributes prediction clarify the intimate relation between image scene category and aesthetic attributes, and the experimental results demonstrate that our scene information has its potentials for image aesthetic quality assessment. The future research direction can be focused on deep relationship between scene semantics and image aesthetics. This deep relationship could build a more robust image aesthetic assessment framework, which can consistently improve the performance of image aesthetic quality assessment, as well as enhance the interpretability of aesthetic assessment.关键词:image aesthetic quality assessment;aesthetic attributes;deep convolution network;multi-task learning;scene classification167|441|0更新时间:2024-05-07 -

摘要:ObjectiveVideo-based abnormal behavior detection has been developing based on the intelligent surveillance technology, and it has potentials in public security. However, the issue of video-based spatio-temporal information modeling is challenged for improving the accuracy of anomaly detection. Traditional video-based abnormal behavior detection methods are focused on manual-based features extraction, such as the clear contour, motion information and trajectory of the target. Such methods are constrained of weak representation in massive video data processing. Current deep learning model method can automatically learn and extract advanced features based on massive video stream datasets, which has been widely used in video anomaly detection methods instead of manual-based features. The structural priorities of generative adversarial network (GAN) have been widely used in video anomaly detection tasks. Aiming at the problems of low utilization rate of spatio-temporal features and poor detection effect of traditional GAN, we demonstrate a video anomaly detection algorithm based on the integration of GAN and gating self-attention mechanism.MethodFirst, the gating self-attention mechanism is introduced into the U-net part of the generative network in the GAN, and the self-attention-mechanism-derived distributed weight of the feature maps is assigned layer by layer in the sampling process. The standard U-net network is linked to the features of the targets through the jump connection structure without effective features orientation. Our research is focused on combining the structural optimization of U-net network and gated self-attention mechanism, the feature representation of background regions irrelevant to anomaly detection tasks is suppressed in input video frames, the related feature expression of different targets is highlighted, and the spatio-temporal information is modeled more effectively. Next, to guarantee the consistency between video sequences, we adopt a smoother and faster LiteFlownet network to extract the motion information between video streams. Finally, to generate higher quality frames, the loss-related multi-functions of intensity, gradient and motion are added to enhance the stability of model detection. The adversarial network is trained by PatchGAN. GAN can achieve a good and stable performance after learning adversarial optimization.ResultOur experiments are carried out on the datasets of recognized video abnormal event, such as Chinese University of Hong Kong(CUHK) Avenue, University of California, San Diego(UCSD) Ped1 and UCSD Ped2, and the featured area value under receiver operating curve (ROC), anomaly rule fraction S and peak signal-to-noise ratio (PSNR) are taken as performance evaluation indexes. For the CUHK Avenue dataset, our area under curve(AUC)reaches 87.2%, which is 2.3% higher than those similar methods. For both UCSD Ped1 and UCSD Ped2 datasets, the AUC-values are higher more. At the same time, four ablation experiments are implemented as mentioned below: 1) the model 1 is applied to video anomaly detection tasks using standard U-net as the generative network; 2) the difference of model 2 is clarified that the gating self-attention mechanism is added to the generation network U-net to verify whether the mechanism is effective or not; 3) model 3 adds a gating self-attention mechanism to the generative network U-net, and the LiteFlownet is added to verify the effectiveness of the optical flow network; and 4) our model 4 is illustrated as well. For the generated network U-net and the gating self-attention mechanism, LiteFlownet is added and the gating self-attention mechanism is merged layer by layer at the coding end to perform feature weighting processing and the merged features are identified at the decoding end. Our method can obtain higher AUC values than the other three ablation model methods. We test the trained model and visualize the PSNR value of video sequence frames. The change of PSNR value shows the accuracy of the model for abnormal behavior detection.ConclusionThe experimental results show that our method achieves better recognition results on CUHK Avenue, UCSD Ped1 and UCSD Ped2 datasets, which is more suitable for video anomaly detection tasks, and effectively improves the stability and accuracy of abnormal behavior detection task model. Moreover, the performance of abnormal behavior detection can be significantly improved via using video sequence interframe motion information.关键词:video anomaly detection;generative adversarial network(GAN);U-Net;gating self-attention mechanism;optical flow network178|1337|2更新时间:2024-05-07

摘要:ObjectiveVideo-based abnormal behavior detection has been developing based on the intelligent surveillance technology, and it has potentials in public security. However, the issue of video-based spatio-temporal information modeling is challenged for improving the accuracy of anomaly detection. Traditional video-based abnormal behavior detection methods are focused on manual-based features extraction, such as the clear contour, motion information and trajectory of the target. Such methods are constrained of weak representation in massive video data processing. Current deep learning model method can automatically learn and extract advanced features based on massive video stream datasets, which has been widely used in video anomaly detection methods instead of manual-based features. The structural priorities of generative adversarial network (GAN) have been widely used in video anomaly detection tasks. Aiming at the problems of low utilization rate of spatio-temporal features and poor detection effect of traditional GAN, we demonstrate a video anomaly detection algorithm based on the integration of GAN and gating self-attention mechanism.MethodFirst, the gating self-attention mechanism is introduced into the U-net part of the generative network in the GAN, and the self-attention-mechanism-derived distributed weight of the feature maps is assigned layer by layer in the sampling process. The standard U-net network is linked to the features of the targets through the jump connection structure without effective features orientation. Our research is focused on combining the structural optimization of U-net network and gated self-attention mechanism, the feature representation of background regions irrelevant to anomaly detection tasks is suppressed in input video frames, the related feature expression of different targets is highlighted, and the spatio-temporal information is modeled more effectively. Next, to guarantee the consistency between video sequences, we adopt a smoother and faster LiteFlownet network to extract the motion information between video streams. Finally, to generate higher quality frames, the loss-related multi-functions of intensity, gradient and motion are added to enhance the stability of model detection. The adversarial network is trained by PatchGAN. GAN can achieve a good and stable performance after learning adversarial optimization.ResultOur experiments are carried out on the datasets of recognized video abnormal event, such as Chinese University of Hong Kong(CUHK) Avenue, University of California, San Diego(UCSD) Ped1 and UCSD Ped2, and the featured area value under receiver operating curve (ROC), anomaly rule fraction S and peak signal-to-noise ratio (PSNR) are taken as performance evaluation indexes. For the CUHK Avenue dataset, our area under curve(AUC)reaches 87.2%, which is 2.3% higher than those similar methods. For both UCSD Ped1 and UCSD Ped2 datasets, the AUC-values are higher more. At the same time, four ablation experiments are implemented as mentioned below: 1) the model 1 is applied to video anomaly detection tasks using standard U-net as the generative network; 2) the difference of model 2 is clarified that the gating self-attention mechanism is added to the generation network U-net to verify whether the mechanism is effective or not; 3) model 3 adds a gating self-attention mechanism to the generative network U-net, and the LiteFlownet is added to verify the effectiveness of the optical flow network; and 4) our model 4 is illustrated as well. For the generated network U-net and the gating self-attention mechanism, LiteFlownet is added and the gating self-attention mechanism is merged layer by layer at the coding end to perform feature weighting processing and the merged features are identified at the decoding end. Our method can obtain higher AUC values than the other three ablation model methods. We test the trained model and visualize the PSNR value of video sequence frames. The change of PSNR value shows the accuracy of the model for abnormal behavior detection.ConclusionThe experimental results show that our method achieves better recognition results on CUHK Avenue, UCSD Ped1 and UCSD Ped2 datasets, which is more suitable for video anomaly detection tasks, and effectively improves the stability and accuracy of abnormal behavior detection task model. Moreover, the performance of abnormal behavior detection can be significantly improved via using video sequence interframe motion information.关键词:video anomaly detection;generative adversarial network(GAN);U-Net;gating self-attention mechanism;optical flow network178|1337|2更新时间:2024-05-07 -

摘要:ObjectiveBolts are widely distributed connecting components in transmission lines for maintaining the safe and stable operation. Bolt-relevant pins loss may threaten to the key components disintegration for transmission lines and even cause large-scale power outages. To eliminate potential safety hazards and ensure the safe and stable operation of the line, it is inevitable to resolve missing-pins bolt issues timely and accurately. Traditional manual-based transmission line inspection has low efficiency, high risk, and is easily restricted by external environmental factors. Unmanned air vehicle (UAV) inspections have emerged to resolve the security problems to a certain extent. The drones-based high-definition inspection pictures are sent back to the ground for manual processing, but this method is still inefficient, and the missed detection rate and false detection rate are relatively high. Current deep learning technique has yielded more target detection algorithms for transmission line inspections. The challenging issues for inspection picture are derived of the size of the bolt structure and its small proportion and its complicated background. Existing target detection algorithms are oriented to obtain feature maps by continuously up-sampling the pictures input to the network. However, the scale of the feature maps tends to be quite smaller in the continuous up-sampling process. The loss of visual detail information in the feature map can get positioning and classification effects better, which is incapable to the recognition and detection of bolt-relevant pins loss, and the detection effect is poor. In order to improve the detection effect of missing pins of bolt in transmission lines, we develop a method based on inter-level cross feature fusion.MethodTo detect multi-scale targets, the single shot multibox detector (SSD) based network is used to output six different scale feature maps. 1) The low-level large-scale feature maps are used to detect small targets, and the high-level small-scale feature maps are used to detect large targets.2) The anchor box mechanism is also introduced into the SSD to guarantee the overall detection in the feature map. Therefore, SSD algorithm is more suitable for detecting bolt-related missing pins in the inspection picture. First, the small target paste data augmentation is carried out on the bolt missing pins fault detection data set. After cutting out the parts corresponding to the missing-pins bolt category and randomly paste into larger-scale inspection pictures, the number of label boxes in the large-scale inspection pictures and the number of images in the data set are both increased to realize data augmentation. Next, the inter-level cross self-adaptive feature fusion module is introduced into SSD network. It can add the feature pyramid structure, improve its structure and increase the level of cross-connection between feature maps. The feature map of the Conv4_3 layer in SSD network is beneficial to the detection of missing-pins bolts. Feature maps of the Conv3_3 layer and the Conv5_3 layer are introduced in terms of the six-layer output feature maps-derived feature pyramid. The fusion of the Conv4_3 layer and the Conv8_2 layer is used to enhance the visual information and semantic information of the feature maps. At the same time, the adaptively spatial feature fusion (ASFF) mechanism is melted into the network to adaptively learn the spatial weights of feature map fusion at various scales, and the obtained weight fusion inspection feature map is used for the final detection. Finally, the K-means clustering method is employed to statistically analyze the size and aspect ratio of the labeled frame for the bolt structure, and the anchor box is adjusted in the original SSD network adequately.ResultThe verification experiments are performed for the effectiveness of the network on the PASCAL VOC(pattern analysis, statistical modeling and computational learning visual object classes) dataset. The improved network has reached a 2.3% growth in detection accuracy compared to the original SSD. In the bolt missing pins detection experiments, the training set and the test set are randomly divided according to the ratio of 7:3. Experimental results show that our detection accuracy is 87.93% for normal bolts, 89.15% for missing-pins bolts. The detection accuracy is increased by 2.71% and 3.99%, respectively.ConclusionOur method has greatly improved the accuracy of bolt-relevant pins loss detection. The detection accuracy of the original SSD network has been significantly improved. Our optimized detection is beneficial to further develop the recognition and detection of other parts in the transmission line.关键词:bolt;missing pins;single shot multibox detector (SSD);inter-level cross feature pyramid;self-adaptive feature fusion;anchor box optimization95|168|1更新时间:2024-05-07

摘要:ObjectiveBolts are widely distributed connecting components in transmission lines for maintaining the safe and stable operation. Bolt-relevant pins loss may threaten to the key components disintegration for transmission lines and even cause large-scale power outages. To eliminate potential safety hazards and ensure the safe and stable operation of the line, it is inevitable to resolve missing-pins bolt issues timely and accurately. Traditional manual-based transmission line inspection has low efficiency, high risk, and is easily restricted by external environmental factors. Unmanned air vehicle (UAV) inspections have emerged to resolve the security problems to a certain extent. The drones-based high-definition inspection pictures are sent back to the ground for manual processing, but this method is still inefficient, and the missed detection rate and false detection rate are relatively high. Current deep learning technique has yielded more target detection algorithms for transmission line inspections. The challenging issues for inspection picture are derived of the size of the bolt structure and its small proportion and its complicated background. Existing target detection algorithms are oriented to obtain feature maps by continuously up-sampling the pictures input to the network. However, the scale of the feature maps tends to be quite smaller in the continuous up-sampling process. The loss of visual detail information in the feature map can get positioning and classification effects better, which is incapable to the recognition and detection of bolt-relevant pins loss, and the detection effect is poor. In order to improve the detection effect of missing pins of bolt in transmission lines, we develop a method based on inter-level cross feature fusion.MethodTo detect multi-scale targets, the single shot multibox detector (SSD) based network is used to output six different scale feature maps. 1) The low-level large-scale feature maps are used to detect small targets, and the high-level small-scale feature maps are used to detect large targets.2) The anchor box mechanism is also introduced into the SSD to guarantee the overall detection in the feature map. Therefore, SSD algorithm is more suitable for detecting bolt-related missing pins in the inspection picture. First, the small target paste data augmentation is carried out on the bolt missing pins fault detection data set. After cutting out the parts corresponding to the missing-pins bolt category and randomly paste into larger-scale inspection pictures, the number of label boxes in the large-scale inspection pictures and the number of images in the data set are both increased to realize data augmentation. Next, the inter-level cross self-adaptive feature fusion module is introduced into SSD network. It can add the feature pyramid structure, improve its structure and increase the level of cross-connection between feature maps. The feature map of the Conv4_3 layer in SSD network is beneficial to the detection of missing-pins bolts. Feature maps of the Conv3_3 layer and the Conv5_3 layer are introduced in terms of the six-layer output feature maps-derived feature pyramid. The fusion of the Conv4_3 layer and the Conv8_2 layer is used to enhance the visual information and semantic information of the feature maps. At the same time, the adaptively spatial feature fusion (ASFF) mechanism is melted into the network to adaptively learn the spatial weights of feature map fusion at various scales, and the obtained weight fusion inspection feature map is used for the final detection. Finally, the K-means clustering method is employed to statistically analyze the size and aspect ratio of the labeled frame for the bolt structure, and the anchor box is adjusted in the original SSD network adequately.ResultThe verification experiments are performed for the effectiveness of the network on the PASCAL VOC(pattern analysis, statistical modeling and computational learning visual object classes) dataset. The improved network has reached a 2.3% growth in detection accuracy compared to the original SSD. In the bolt missing pins detection experiments, the training set and the test set are randomly divided according to the ratio of 7:3. Experimental results show that our detection accuracy is 87.93% for normal bolts, 89.15% for missing-pins bolts. The detection accuracy is increased by 2.71% and 3.99%, respectively.ConclusionOur method has greatly improved the accuracy of bolt-relevant pins loss detection. The detection accuracy of the original SSD network has been significantly improved. Our optimized detection is beneficial to further develop the recognition and detection of other parts in the transmission line.关键词:bolt;missing pins;single shot multibox detector (SSD);inter-level cross feature pyramid;self-adaptive feature fusion;anchor box optimization95|168|1更新时间:2024-05-07 -

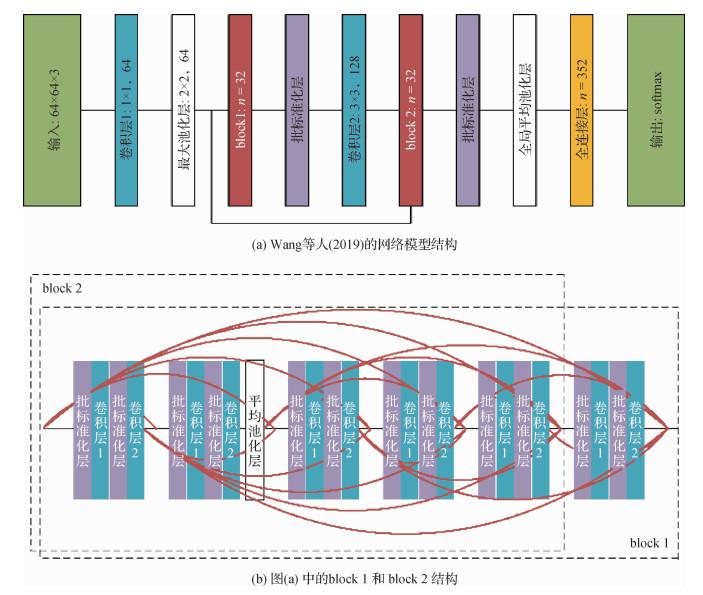

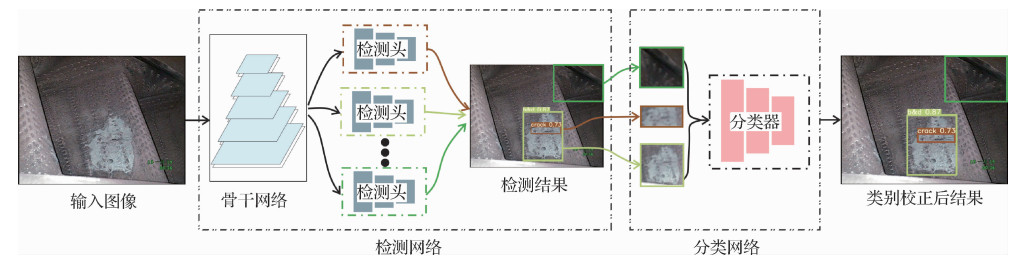

摘要:ObjectiveThe healthy issue of aero-engine is threatened due to high temperature and extreme pressure environment in common. The commonly-used manual inspection method for damage detection is very labor-intensive. Therefore, a high detection accuracy-based computer vision algorithm is cost-effective to detect aero-engine status automatically. The existing bore-scope image damage detection methods are divided into two categories: traditional manual-based and deep learning-based. Traditional detection methods are focused on edge detection or filter detection-like image processing for crack or burn detection. Although traditional methods perform well on simple scenes, they cannot generalize well on the complex images like the erosion and oil contamination-derived regions, and it cannot be extended to the other damages like nick and dent. Most aero-engine damage detection methods are based on deep learning technique to resilient designing features and tuning parameters of traditional methods. Multi-objects detection methods like single shot multibox detector(SSD), you only look once(YOLO) series models are used to detect different damages. However, most of the deep learning-based aero-engine detection methods do not concern about the detection of multiple types of damage in the same region and the low differentiation of damage in the evolution process, which is a limiting factor for the existing state-of-the-art object detectors. To improve the accuracy of detection, we develop an aero-engine damage-oriented detection method via the cascade detector and classifier (Cascade-YOLO). Cascade-YOLO is used to train independent damage detector for each type of damage, which can fully develop the detector of other types of similar damage excluded. Furthermore, a classifier is adopted to modify damage labels after detecting the damages, which is trained with all types of damages.MethodOur YOLO v5-originated cascade-YOLO is developed on object detection benchmarks. There are mainly two differences between Cascade-YOLO and YOLO v5. The first one is that Cascade-YOLO has multiple detection heads for various types of damage detection. The second one is that a multi-class classifier is used to correct damage label in Cascade-YOLO. Specifically, a YOLO v5-based damage detection network is trained by seeing all types' damages as target objects. As the parameters of our backbone network, the parameters of this pre-trained network are used for feature extraction. Thus, the extracted features are suitable for representing all kinds of damages. All parameters of the backbone network are fixed in latter training. Our multiple damage detection heads is employed to extract features based on the backbone network. Each damage detection head is responsible for detecting a single type of damage, which can fully explore the discrimination ability of different types of damages and increase the recall of single type of damage. However, multiple detection heads may fail to classify the damage into correct damage category. To modify the detected label, we adopt a cascade manner via a multi-class classifier after the multi-head detector. We can segment the damage using a modified semantic segmentation method.ResultWe build a bore-scope image dataset with 1 305 images in the context of nine different types of damages, and evaluate six state-of-the-art one-stage object detectors (e.g., single shot multibox detector(SSD), object detector based on multi-level feature pyramid network(M2Det) and YOLO v5) and two-stage object detectors (e.g., region convolutional neural network(Mask R-CNN) and BMask R-CNN) on this dataset. The mean average precision(MAP) of Cascade-YOLO is higher than that of YOLO v5 by 2.49%, and is higher than that of Mask R-CNN by 3.3%. The accuracy and recall of Cascade-YOLO are higher than those of YOLO v5 by 12.59% and 12.46%, respectively.ConclusionOur cascade detector and classifier-based aero-engine damage detection is developed in terms of the tailored bore-scope image. The recall of the damage detection can be increased using a single type damage-oriented independent detection head. The integrated multiple detection heads can detect multiple types of damage. Multiple detection heads share a feature extraction backbone network, which improves the detection efficiency. We adopt a multi-class classifier to correct the labels of the detected damages that having low confidence. Furthermore, we also employ a clear modified semantic segmentation method to segment the damage region. The experiment results show that Cascade-YOLO performances can be qualified for various types of damage detection problem. The Cascade-YOLO is optimized in detection precision, recall and accuracy compared to YOLO v5 and Mask R-CNN. The potential independent detection head method is feasible for the extended damages detection.关键词:damage detection;borescope image;cascade detection;airplane engine;you only look once(YOLO)89|119|2更新时间:2024-05-07

摘要:ObjectiveThe healthy issue of aero-engine is threatened due to high temperature and extreme pressure environment in common. The commonly-used manual inspection method for damage detection is very labor-intensive. Therefore, a high detection accuracy-based computer vision algorithm is cost-effective to detect aero-engine status automatically. The existing bore-scope image damage detection methods are divided into two categories: traditional manual-based and deep learning-based. Traditional detection methods are focused on edge detection or filter detection-like image processing for crack or burn detection. Although traditional methods perform well on simple scenes, they cannot generalize well on the complex images like the erosion and oil contamination-derived regions, and it cannot be extended to the other damages like nick and dent. Most aero-engine damage detection methods are based on deep learning technique to resilient designing features and tuning parameters of traditional methods. Multi-objects detection methods like single shot multibox detector(SSD), you only look once(YOLO) series models are used to detect different damages. However, most of the deep learning-based aero-engine detection methods do not concern about the detection of multiple types of damage in the same region and the low differentiation of damage in the evolution process, which is a limiting factor for the existing state-of-the-art object detectors. To improve the accuracy of detection, we develop an aero-engine damage-oriented detection method via the cascade detector and classifier (Cascade-YOLO). Cascade-YOLO is used to train independent damage detector for each type of damage, which can fully develop the detector of other types of similar damage excluded. Furthermore, a classifier is adopted to modify damage labels after detecting the damages, which is trained with all types of damages.MethodOur YOLO v5-originated cascade-YOLO is developed on object detection benchmarks. There are mainly two differences between Cascade-YOLO and YOLO v5. The first one is that Cascade-YOLO has multiple detection heads for various types of damage detection. The second one is that a multi-class classifier is used to correct damage label in Cascade-YOLO. Specifically, a YOLO v5-based damage detection network is trained by seeing all types' damages as target objects. As the parameters of our backbone network, the parameters of this pre-trained network are used for feature extraction. Thus, the extracted features are suitable for representing all kinds of damages. All parameters of the backbone network are fixed in latter training. Our multiple damage detection heads is employed to extract features based on the backbone network. Each damage detection head is responsible for detecting a single type of damage, which can fully explore the discrimination ability of different types of damages and increase the recall of single type of damage. However, multiple detection heads may fail to classify the damage into correct damage category. To modify the detected label, we adopt a cascade manner via a multi-class classifier after the multi-head detector. We can segment the damage using a modified semantic segmentation method.ResultWe build a bore-scope image dataset with 1 305 images in the context of nine different types of damages, and evaluate six state-of-the-art one-stage object detectors (e.g., single shot multibox detector(SSD), object detector based on multi-level feature pyramid network(M2Det) and YOLO v5) and two-stage object detectors (e.g., region convolutional neural network(Mask R-CNN) and BMask R-CNN) on this dataset. The mean average precision(MAP) of Cascade-YOLO is higher than that of YOLO v5 by 2.49%, and is higher than that of Mask R-CNN by 3.3%. The accuracy and recall of Cascade-YOLO are higher than those of YOLO v5 by 12.59% and 12.46%, respectively.ConclusionOur cascade detector and classifier-based aero-engine damage detection is developed in terms of the tailored bore-scope image. The recall of the damage detection can be increased using a single type damage-oriented independent detection head. The integrated multiple detection heads can detect multiple types of damage. Multiple detection heads share a feature extraction backbone network, which improves the detection efficiency. We adopt a multi-class classifier to correct the labels of the detected damages that having low confidence. Furthermore, we also employ a clear modified semantic segmentation method to segment the damage region. The experiment results show that Cascade-YOLO performances can be qualified for various types of damage detection problem. The Cascade-YOLO is optimized in detection precision, recall and accuracy compared to YOLO v5 and Mask R-CNN. The potential independent detection head method is feasible for the extended damages detection.关键词:damage detection;borescope image;cascade detection;airplane engine;you only look once(YOLO)89|119|2更新时间:2024-05-07 -

摘要:ObjectiveHuman visual system is beneficial to extracting features of the region of interest in images or videos processing. Computer-vision-derived salient object detection aims to improving the ability of visual interpretation for image preprocessing. The quality of the generated saliency map affects the performance of subsequent vision tasks directly. Current deep-learning-based salient object detection can locate salient objects well in terms of the effective semantic features extraction. The issue of clear-edged objects extraction is essential for improving the following visual tasks. In recent years, the complex scenes-oriented edge accuracy of the objects enhancement has been concerned further. Such models are required to obtain fine edges based on the indirect multiple edge losses for the edges of salient objects supervision. To improve the edge details of the object, some models simply fuse the complementary object features and edge features. These models do not make full use of the edge features, resulting in unidentified edge enhancement. Furthermore, it is necessary to use multi-scale information to extract object features because salient objects have variability of positions and scales in visual scenes. In order to regularize clear edges saliency map, we demonstrate a salient object detection model based on semantic assistance and edge feature.MethodWe use a semantic assistant feature fusion module to optimize the lateral output features of the backbone network. The selective layer features of each fuse the adjacent low-level features with semantic assistance to obtain enough structural information and enhance the feature strength of the salient region, which is helpful to generate a regular saliency map to detect the entire salient objects. We design an edge-branched network to obtain accurate edge features. To enhance the distinguishability of the edge regions for salient objects, the object features are integrated. In addition, a bidirectional multi-scale module extracts the multi-scale information. Thanks to the mechanism of dense connection and feature fusion, the bidirectional multi-scale module gradually fuses the multi-scale features of each adjacent layer, which is beneficial to detect multi-scale objects in the scene. Our experiments are equipped with a single NVIDIA GTX 1080ti graphics-processing unit (GPU) for training and test. We use the DUTS-train datasets to train the model, which contains 10 553 images. The model is trained for convergence with no validation set. The Visual Geometry Group(VGG16) is as the backbone network through the PyTorch deep learning framework. The pre-trained model on ImageNet initializes some parameters of the backbone network, and all newly convolutional layers-added are randomly initialized with "0.01"of variance and "0" of deviation. The hyper-parameters and experimental settings are clarified that the learning rate, weight decay, and momentum are set to 5E-5, 0.000 5, and 0.9, respectively. We use adam optimizer for optimization learning. We carried out back-propagation method based on every ten images. The scale of input image is 256×256 pixels, and random flip is for data enhancement only. The model is trained in 100 iterations totally, and the attenuation is 10 times after 60 iterations.ResultOur model is compared to twelve existing popular saliency models based on four commonly-used datasets, i.e., extended complex scene saliency dataset (ECSSD), Dalian University of Technology and OMRON Corporation (DUT-O), HKU-IS, and DUTS. The analyzed results show that the maximum F-measure values of our model on each of four datasets are 0.940, 0.795, 0.929, and 0.870, the mean absolution error(MAE) values are 0.041, 0.057, 0.034, and 0.043, respectively. Our saliency maps obtained are closer to the ground truth.ConclusionWe develop a model to detect salient objects. The semantic assisted feature and edge feature fusion in the model is beneficial to generate regularized saliency maps in the context of clear object edges. The multi-scale feature extraction improves the performance of salient object detection further.关键词:salient object detection;full convolution neural network;semantic assistance;edge feature fusion;multi-scale extraction71|106|0更新时间:2024-05-07