最新刊期

卷 27 , 期 10 , 2022

-

摘要:The growth of multimedia technology has leveraged more available multifaceted media data. Human-perceptive multiple media data fusion has promoted the research and development (R&D) of artificial intelligence (AI) for computer vision. It has a wide range of applications like remote sensing image interpretation, biomedicine, and depth estimation. Multimodality can be as a form of representation of things (RoT). It refers to the description of things from multiple perspectives. Early AI-oriented technology is focused on a single modality of data. Current human-perceptive researches have clarified that each modality has a relatively independent description of things (IDoT), and the use of complementary representations of multimodal data tend to three-dimensional further. Recent processing and applications of multimodal data has been intensively developed like sentiment analysis, machine translation, natural language processing, and biomedicine. Our critical review is focused on the development of multimodality. Computer-vision-oriented multimodal learning is mainly used to analyze the related multimodal data on the aspects of images and videos, modalities-ranged learning and complemented information, and image detection and recognition, semantic segmentation, and video action prediction, etc. Multimodal data has its priority for objects description. First, it is challenged to collect large-scale, high-quality multimodal datasets due to the equipment-limited like multiple imaging devices and sensors. Next, Image and video data processing and labeling are time-consuming and labor-intensive. Based on the limited-data-derived multimodal learning methods, the multimodal data limited methods in the context of computer vision can be segmented into five aspects, including few-shot learning, lack of strong supervised information, active learning, data denoising and data augmentation. The multi-features of samples and the models evolution are critically reviewed as mentioned below: 1) in the case of insufficient multi-modal data, the few-shot learning method has the cognitive ability to make correct judgments via learning a small number of samples only, and it can effectively learn the target features in the case of lack of data. 2) Due to the high cost of the data labeling process, it is challenged to obtain all the ground truth labels of all modalities for strongly supervised learning of the model. The incomplete supervised methods are composed of weakly supervised, unsupervised, semi-supervised, and self-supervised learning methods in common. These methods can optimize modal labeling information and cost-effective manual labeling. 3) The active learning method is based on the integration of prior knowledge and learning regulatory via designing a model using autonomous learning ability, and it is committed to the maximum optimization of few samples. Labeling costs can be effectively reduced in consistency based on the optimized options of samples. 4) Multimodal data denoising refers to reducing data noise, restoring the original data, and then extracting the information of interest. 5) In order to make full use of limited multi-modal data, few-samples-conditioned data enhancement method extends realistic data by performing a series of transformation operations on the original data set. In addition, the data sets are used for the multimodal learning method limited data. Its potential applications are introduced like human pose estimation and person re-identification, and the performance of the existing algorithms is compared and analyzed. The pros and cons, as well as the future development direction, are projected as following: 1) a lightweight multimodal data processing method: we argue that limited-data-conditioned multimodal learning still has the challenge of mobile-devices-oriented models applications. When the existing methods fuse the information of multiple modalities, it is generally necessary to use two or above networks for feature extraction, and then fuse the features. Therefore, the large number of parameters and the complex structure of the model limit its application to mobile devices. Future lightweight model has its potentials. 2) A commonly-used multimodal intelligent processing model: most of existing multimodal data processing methods are derived from the developed multi-algorithms for multitasks, which need to be trained on specific tasks. This tailored training method greatly increases the cost of developing models, making it difficult to meet the needs of more application scenarios. Therefore, for the data of different modalities, it is necessary to promote a consensus perception model to learn the general representation of multimodal data and the parameters and features of the general model can be shared for multiple scenarios. 3) A multi-sources knowledge and data driven model: it is possible to introduce featured data and knowledge of multi-modal data beyond, establish an integrated knowledge-data-driven model, and enhance the model's performance and interpretability.关键词:multimodal data;limited data;deep learning;fusion algorithms;computer vision156|328|5更新时间:2024-05-07

摘要:The growth of multimedia technology has leveraged more available multifaceted media data. Human-perceptive multiple media data fusion has promoted the research and development (R&D) of artificial intelligence (AI) for computer vision. It has a wide range of applications like remote sensing image interpretation, biomedicine, and depth estimation. Multimodality can be as a form of representation of things (RoT). It refers to the description of things from multiple perspectives. Early AI-oriented technology is focused on a single modality of data. Current human-perceptive researches have clarified that each modality has a relatively independent description of things (IDoT), and the use of complementary representations of multimodal data tend to three-dimensional further. Recent processing and applications of multimodal data has been intensively developed like sentiment analysis, machine translation, natural language processing, and biomedicine. Our critical review is focused on the development of multimodality. Computer-vision-oriented multimodal learning is mainly used to analyze the related multimodal data on the aspects of images and videos, modalities-ranged learning and complemented information, and image detection and recognition, semantic segmentation, and video action prediction, etc. Multimodal data has its priority for objects description. First, it is challenged to collect large-scale, high-quality multimodal datasets due to the equipment-limited like multiple imaging devices and sensors. Next, Image and video data processing and labeling are time-consuming and labor-intensive. Based on the limited-data-derived multimodal learning methods, the multimodal data limited methods in the context of computer vision can be segmented into five aspects, including few-shot learning, lack of strong supervised information, active learning, data denoising and data augmentation. The multi-features of samples and the models evolution are critically reviewed as mentioned below: 1) in the case of insufficient multi-modal data, the few-shot learning method has the cognitive ability to make correct judgments via learning a small number of samples only, and it can effectively learn the target features in the case of lack of data. 2) Due to the high cost of the data labeling process, it is challenged to obtain all the ground truth labels of all modalities for strongly supervised learning of the model. The incomplete supervised methods are composed of weakly supervised, unsupervised, semi-supervised, and self-supervised learning methods in common. These methods can optimize modal labeling information and cost-effective manual labeling. 3) The active learning method is based on the integration of prior knowledge and learning regulatory via designing a model using autonomous learning ability, and it is committed to the maximum optimization of few samples. Labeling costs can be effectively reduced in consistency based on the optimized options of samples. 4) Multimodal data denoising refers to reducing data noise, restoring the original data, and then extracting the information of interest. 5) In order to make full use of limited multi-modal data, few-samples-conditioned data enhancement method extends realistic data by performing a series of transformation operations on the original data set. In addition, the data sets are used for the multimodal learning method limited data. Its potential applications are introduced like human pose estimation and person re-identification, and the performance of the existing algorithms is compared and analyzed. The pros and cons, as well as the future development direction, are projected as following: 1) a lightweight multimodal data processing method: we argue that limited-data-conditioned multimodal learning still has the challenge of mobile-devices-oriented models applications. When the existing methods fuse the information of multiple modalities, it is generally necessary to use two or above networks for feature extraction, and then fuse the features. Therefore, the large number of parameters and the complex structure of the model limit its application to mobile devices. Future lightweight model has its potentials. 2) A commonly-used multimodal intelligent processing model: most of existing multimodal data processing methods are derived from the developed multi-algorithms for multitasks, which need to be trained on specific tasks. This tailored training method greatly increases the cost of developing models, making it difficult to meet the needs of more application scenarios. Therefore, for the data of different modalities, it is necessary to promote a consensus perception model to learn the general representation of multimodal data and the parameters and features of the general model can be shared for multiple scenarios. 3) A multi-sources knowledge and data driven model: it is possible to introduce featured data and knowledge of multi-modal data beyond, establish an integrated knowledge-data-driven model, and enhance the model's performance and interpretability.关键词:multimodal data;limited data;deep learning;fusion algorithms;computer vision156|328|5更新时间:2024-05-07 -

摘要:Image big data and easy-use models such as deep networks(DNs) have expedited artificial intelligence (AI) currently. But, the issue for limited images which are often captured from complex, hostile and other scenarios has been challenging still yet: objects are too small to be recognized; the boundaries are fuzzy-overlapped; or even all the object information indicated in the images is uncertain due to camouflages and occlusions. The limited image data are featured with small-sample, small-object, incompleteness, uncertainty. Obviously, the tackle of limited image data is different from image big data: 1) image big data fits Gaussian distributions (mean u and σ) in terms of statistical central limit theorem, especially when data scales are much larger than data dimensions. This feature is beneficial to make statistical inference by the 3σ rule, which indicates that 99.73% of samples are within the range of [u-3σ, u+3σ]. Possibly due to the concentration saliency fundamental, the statistical AI models such as DNs become very popular and seemly successful. However, for small dataset, the statistical consistency is usually poor, and the robust features cannot be identified based on concentration saliency. Thus, the statistical inference AI models are not feasible for small training data. 2) Image objects are often mutually occluded in costly and rare scenarios, and the delicate camouflages and masks, poor and complex luminous environment, as well as hostile disturbances, often make the image information itself or the information dimensions incomplete and unconfident. These issues make the computation load very heavy because the missed information or dimension has a large number of possibilities. 3) A large number of big data techniques have been proposed and seem very competitive in image processing field. For example, the DNs have achieved the best rank in many image big datasets publicly available. These features have extremely highlighted the contribution of DNs. However, many face recognition systems do not meet the requirement of accuracy and precision even when the face data is big enough. The statistical inference models such DNs cannot do precise inference, and some errors do exist due to the statistical inference itself. For limited image data, the consequence has been declined further especially when the number of samples is less than the dimensions. The partition boundaries in the sample space cannot be uniquely fixed by training samples. That is to say, to determine the inference model needs to solve irreversible inverse problems, meaning that the definite model cannot be uniquely fixed, and only a reduced model can be fixed. For any query sample, the simplified model cannot give an explicit solution, and only a subspace can just be given which is constituted of possible solutions. How to choose an appropriate solution from the subspace is still challenging, and seemingly there are no effective and general ways. Many techniques seem a little effective on studying limited image dataset, such as level-set methods, fussy logic methods, all these methods are based on the probability metrics measuring the divergence between the existent limited image data and the apriori knowledge or the specific background. That is to say, the cost functions indicating membership degrees, levels etc. are very critical for these methods.关键词:limited image data;central limit theorem;irreversible inverse problem;fussy logic;research paradigm95|127|0更新时间:2024-05-07

摘要:Image big data and easy-use models such as deep networks(DNs) have expedited artificial intelligence (AI) currently. But, the issue for limited images which are often captured from complex, hostile and other scenarios has been challenging still yet: objects are too small to be recognized; the boundaries are fuzzy-overlapped; or even all the object information indicated in the images is uncertain due to camouflages and occlusions. The limited image data are featured with small-sample, small-object, incompleteness, uncertainty. Obviously, the tackle of limited image data is different from image big data: 1) image big data fits Gaussian distributions (mean u and σ) in terms of statistical central limit theorem, especially when data scales are much larger than data dimensions. This feature is beneficial to make statistical inference by the 3σ rule, which indicates that 99.73% of samples are within the range of [u-3σ, u+3σ]. Possibly due to the concentration saliency fundamental, the statistical AI models such as DNs become very popular and seemly successful. However, for small dataset, the statistical consistency is usually poor, and the robust features cannot be identified based on concentration saliency. Thus, the statistical inference AI models are not feasible for small training data. 2) Image objects are often mutually occluded in costly and rare scenarios, and the delicate camouflages and masks, poor and complex luminous environment, as well as hostile disturbances, often make the image information itself or the information dimensions incomplete and unconfident. These issues make the computation load very heavy because the missed information or dimension has a large number of possibilities. 3) A large number of big data techniques have been proposed and seem very competitive in image processing field. For example, the DNs have achieved the best rank in many image big datasets publicly available. These features have extremely highlighted the contribution of DNs. However, many face recognition systems do not meet the requirement of accuracy and precision even when the face data is big enough. The statistical inference models such DNs cannot do precise inference, and some errors do exist due to the statistical inference itself. For limited image data, the consequence has been declined further especially when the number of samples is less than the dimensions. The partition boundaries in the sample space cannot be uniquely fixed by training samples. That is to say, to determine the inference model needs to solve irreversible inverse problems, meaning that the definite model cannot be uniquely fixed, and only a reduced model can be fixed. For any query sample, the simplified model cannot give an explicit solution, and only a subspace can just be given which is constituted of possible solutions. How to choose an appropriate solution from the subspace is still challenging, and seemingly there are no effective and general ways. Many techniques seem a little effective on studying limited image dataset, such as level-set methods, fussy logic methods, all these methods are based on the probability metrics measuring the divergence between the existent limited image data and the apriori knowledge or the specific background. That is to say, the cost functions indicating membership degrees, levels etc. are very critical for these methods.关键词:limited image data;central limit theorem;irreversible inverse problem;fussy logic;research paradigm95|127|0更新时间:2024-05-07 -

摘要:ObjectiveUrban video surveillance systems have been developing dramatically nowadays. The surveillance videos analysis is essential for security but a huge amount of labor-intensive data processing is highly time-consuming and costly. Intelligent video analysis can be as an effective way to deal with that. To analyze the concrete pedestrians'event, person re-identification is a basic issue of matching pedestrians across non-overlapping cameras views for obtaining the trajectories of persons in a camera network. The cross-camera scene variations are the key challenges for person re-identification, such as illumination, resolution, occlusions and background clutters. Thanks to the development of deep learning, single-modality visible image matching has achieved remarkable performance on benchmark datasets. However, visible image matching is not applicable in low-light scenarios like night-time outdoor scenes or dark indoor scenes. To resilient the related low-light issues, most of surveillance cameras can automatically switch to acquire near infrared images, which are visually different from visible images. When person re-identification is required for the penetration between normal-light and low-light, current person re-identification performance for cross-modality matching between visible images and infrared images cannot be satisfied. Thus, it is necessary to analyze the visible-infrared cross-modality person re-identification further.For visible-infrared cross-modality person re-identification, there are two key challenges as mentioned below: first, the spectrums and visual appearances of visible images and infrared images are significantly different. Visible images contain three channels of red (R), green (G) and blue (B) responses, while infrared images contain only one channel of near infrared responses. This leads to big modality gap. Next, lack of labeled data is still challenged based on manpower-based identification of the same pedestrian across visible image and infrared image. Current multi-modality benchmark dataset contains 500 personal identities only, which is not sufficient for training deep models. Existing visible-infrared cross-modality person re-identification methods mainly focus on bridging the modality gap. The small labeled data problem is still largely ignored by these methods.MethodTo provide prior knowledge for learning cross-modality matching model, we study self-supervised information mining on single-modality data based on auxiliary labeled visible images. First, we propose a data augmentation method called random single-channel mask. For three-channel visible images as input, random masks are applied to preserve the information of only one channel, to realize the robustness of features against spectrum change. The random single-channel mask can force the first layer of convolutional neural network to learn kernels that are specific to R, G or B channels for extracting shared appearance shape features. Furthermore, for pre-training and fine-tuning, we propose mutual learning between single-channel model and three-channel model. To mine and transfer cross-spectrum robust self-supervision information, mutual learning leverages the interrelations between single-channel data and three-channel data. We sort out that the three-channel model focuses on extracting color-sensitive features, and the single-channel model focuses on extracting color-invariant features. Transferring complementary knowledge by mutual learning improves the matching performance of the cross-modality matching model.ResultExtensive comparative experiments were conducted on SYSU-MM01, RGBNT201 and RegDB datasets. Compared with the state-of-the-art methods, our method improve mean average precision (mAP) on RGBNT201 by 5% at most.ConclusionWe propose a single-modality cross-spectrum self-supervised information mining method, which utilizes auxiliary single-modality visible images to mine cross-spectrum robust self-supervision information. The prior knowledge of the self-supervision information can guide single-modality pretraining and multi-modality finetuning for achieving better matching ability of the cross-modality person re-identification model.关键词:person re-identification;cross-modality retrieval;infrared image;self-supervised learning;mutual learning123|199|5更新时间:2024-05-07

摘要:ObjectiveUrban video surveillance systems have been developing dramatically nowadays. The surveillance videos analysis is essential for security but a huge amount of labor-intensive data processing is highly time-consuming and costly. Intelligent video analysis can be as an effective way to deal with that. To analyze the concrete pedestrians'event, person re-identification is a basic issue of matching pedestrians across non-overlapping cameras views for obtaining the trajectories of persons in a camera network. The cross-camera scene variations are the key challenges for person re-identification, such as illumination, resolution, occlusions and background clutters. Thanks to the development of deep learning, single-modality visible image matching has achieved remarkable performance on benchmark datasets. However, visible image matching is not applicable in low-light scenarios like night-time outdoor scenes or dark indoor scenes. To resilient the related low-light issues, most of surveillance cameras can automatically switch to acquire near infrared images, which are visually different from visible images. When person re-identification is required for the penetration between normal-light and low-light, current person re-identification performance for cross-modality matching between visible images and infrared images cannot be satisfied. Thus, it is necessary to analyze the visible-infrared cross-modality person re-identification further.For visible-infrared cross-modality person re-identification, there are two key challenges as mentioned below: first, the spectrums and visual appearances of visible images and infrared images are significantly different. Visible images contain three channels of red (R), green (G) and blue (B) responses, while infrared images contain only one channel of near infrared responses. This leads to big modality gap. Next, lack of labeled data is still challenged based on manpower-based identification of the same pedestrian across visible image and infrared image. Current multi-modality benchmark dataset contains 500 personal identities only, which is not sufficient for training deep models. Existing visible-infrared cross-modality person re-identification methods mainly focus on bridging the modality gap. The small labeled data problem is still largely ignored by these methods.MethodTo provide prior knowledge for learning cross-modality matching model, we study self-supervised information mining on single-modality data based on auxiliary labeled visible images. First, we propose a data augmentation method called random single-channel mask. For three-channel visible images as input, random masks are applied to preserve the information of only one channel, to realize the robustness of features against spectrum change. The random single-channel mask can force the first layer of convolutional neural network to learn kernels that are specific to R, G or B channels for extracting shared appearance shape features. Furthermore, for pre-training and fine-tuning, we propose mutual learning between single-channel model and three-channel model. To mine and transfer cross-spectrum robust self-supervision information, mutual learning leverages the interrelations between single-channel data and three-channel data. We sort out that the three-channel model focuses on extracting color-sensitive features, and the single-channel model focuses on extracting color-invariant features. Transferring complementary knowledge by mutual learning improves the matching performance of the cross-modality matching model.ResultExtensive comparative experiments were conducted on SYSU-MM01, RGBNT201 and RegDB datasets. Compared with the state-of-the-art methods, our method improve mean average precision (mAP) on RGBNT201 by 5% at most.ConclusionWe propose a single-modality cross-spectrum self-supervised information mining method, which utilizes auxiliary single-modality visible images to mine cross-spectrum robust self-supervision information. The prior knowledge of the self-supervision information can guide single-modality pretraining and multi-modality finetuning for achieving better matching ability of the cross-modality person re-identification model.关键词:person re-identification;cross-modality retrieval;infrared image;self-supervised learning;mutual learning123|199|5更新时间:2024-05-07 -

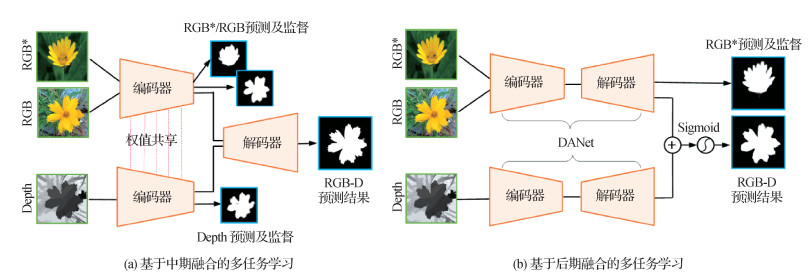

摘要:ObjectiveSalient object detection is mainly used in computer vision pre-processing like video/image segmentation, visual tracking and video/image compression. Current RGB-depth(RGB-D) salient object detection(SOD) can be categorized into fully supervision and self-supervision. Fully supervised RGB-D SOD can effectively fuse the complementary information of two different modes for RGB images input and the corresponding depth maps by means of the three types of fusion (early/middle/late). To capture contextual information, self-supervised salient object detection uses a small number of unlabeled samples for pre-training. However, existing RGB-D salient object detection methods are mostly trained on a small RGB-D training set in a fully supervised manner, so their generalization ability is greatly restricted. Thanks to the emerging few-shot learning methods, our RGB-D salient object detection uses model hypothesis space optimization and training sample augmentation to explore and solve RGB-D salient object detection with few-shot learning.MethodFor model hypothesis space optimization, it can transfer the learned knowledge from extra RGB salient object detection task to RGB-D salient object detection task based on multi-task learning of RGB and RGB-D salient object detection tasks, and the hypothesis space of the model is constrained by sharing model parameters. Model-oriented, takeing into account middle and late fusions can add additional supervision to the network, therefore, the JL-DCF model is selected for middle fusion and the DANet† model is optioned for late fusion. To improve the effectiveness and generalization of RGB-D salient object detection tasks, RGB-D and RGB are simultaneously input into the network for online training and optimization in terms of JL-DCF, and the coarse prediction of RGB is supervised to optimize the network. In view of the commonality between the semantic segmentation and the saliency detection, the dual attention network for scene segmentation(DANet) model is transferred to the RGB-D salient object detection network, named DANet†. Similar to JL-DCF joint training, additional RGB supervision is added to the RGB branch of the two-stream DANet†. Furthermore, the training sample augmentation generates the related depth map based on the additional RGB data in terms of the depth estimation algorithm, and uses the RGB and the synthesized depth map for the training of the RGB-D salient object detection task. We adopt ResNet-101 as our network backbone. The scale of input image is 320×320×3 in JL-DCF network, and the scale of DANet† network input image is fixed to 480×480×3. The depth map is transformed into three-channel map by gray scale mapping. Our training set is composed of data from NJU2K, NLPR and DUTS, and the test set is NJU2K, NLPR, STERE, RGBD135, LFSD, SIP, DUT-RGBD, ReDWeb-S, DUTS (it is worth noting that, DUT-RGBD and ReDWeb-S are tested in the completed dataset based on 1 200 samples and 3 179 samples, respectively). The evaluation metrics are demonstrated as following: S measure (

摘要:ObjectiveSalient object detection is mainly used in computer vision pre-processing like video/image segmentation, visual tracking and video/image compression. Current RGB-depth(RGB-D) salient object detection(SOD) can be categorized into fully supervision and self-supervision. Fully supervised RGB-D SOD can effectively fuse the complementary information of two different modes for RGB images input and the corresponding depth maps by means of the three types of fusion (early/middle/late). To capture contextual information, self-supervised salient object detection uses a small number of unlabeled samples for pre-training. However, existing RGB-D salient object detection methods are mostly trained on a small RGB-D training set in a fully supervised manner, so their generalization ability is greatly restricted. Thanks to the emerging few-shot learning methods, our RGB-D salient object detection uses model hypothesis space optimization and training sample augmentation to explore and solve RGB-D salient object detection with few-shot learning.MethodFor model hypothesis space optimization, it can transfer the learned knowledge from extra RGB salient object detection task to RGB-D salient object detection task based on multi-task learning of RGB and RGB-D salient object detection tasks, and the hypothesis space of the model is constrained by sharing model parameters. Model-oriented, takeing into account middle and late fusions can add additional supervision to the network, therefore, the JL-DCF model is selected for middle fusion and the DANet† model is optioned for late fusion. To improve the effectiveness and generalization of RGB-D salient object detection tasks, RGB-D and RGB are simultaneously input into the network for online training and optimization in terms of JL-DCF, and the coarse prediction of RGB is supervised to optimize the network. In view of the commonality between the semantic segmentation and the saliency detection, the dual attention network for scene segmentation(DANet) model is transferred to the RGB-D salient object detection network, named DANet†. Similar to JL-DCF joint training, additional RGB supervision is added to the RGB branch of the two-stream DANet†. Furthermore, the training sample augmentation generates the related depth map based on the additional RGB data in terms of the depth estimation algorithm, and uses the RGB and the synthesized depth map for the training of the RGB-D salient object detection task. We adopt ResNet-101 as our network backbone. The scale of input image is 320×320×3 in JL-DCF network, and the scale of DANet† network input image is fixed to 480×480×3. The depth map is transformed into three-channel map by gray scale mapping. Our training set is composed of data from NJU2K, NLPR and DUTS, and the test set is NJU2K, NLPR, STERE, RGBD135, LFSD, SIP, DUT-RGBD, ReDWeb-S, DUTS (it is worth noting that, DUT-RGBD and ReDWeb-S are tested in the completed dataset based on 1 200 samples and 3 179 samples, respectively). The evaluation metrics are demonstrated as following: S measure (${S_\alpha }$ $F_\beta ^{\max }$ $E_\varphi ^{\max }$ $M$ $\alpha $ 关键词:multi-modal detection;RGB-D saliency detection;few-shot learning;multi-task learning;depth estimation236|283|1更新时间:2024-05-07

Limited Image Data

-

摘要:Object detection is essential for various of applications like semantic segmentation and human facial recognition, and it has been widely employed in public security related scenarios, including automatic driving, industrial control, and aerospace applications. Traditional object detection technology requires manual-based feature extraction and machine learning methods for classification, which is costly and inaccuracy for detection. Recent deep learning based object detection technology has gradually replaced the traditional object detection technology due to its high detection efficiency and accuracy. However, it has been proved that convolutional neural network (CNN) can be easily fooled by some imperceptible perturbations. These images with the added imperceptible perturbations are called adversarial examples. Adversarial examples were first discovered in the field of image classification, and were gradually developed into other fields. To clarify the vulnerabilities of adversarial attack and deep object detection system it is of great significance to improve the robustness and security of the deep learning based object detection model by using a holistic approach. We aims to enhancing the robustness of object detection models and putting forward defense strategies better in terms of analyzing and summarizing the adversarial attack and defense methods for object detection recently. First, our review is focused on the discussion of the development of object detection, and then introduces the origin, growth, motives of emergence and related terminologies of adversarial examples. The commonly evaluation metrics used and data sets in the generation of adversarial examples in object detection are also introduced. Then, 15 adversarial example generation algorithms for object detection, according to the generation of perturbation level classification, are classified as global perturbation attack and local perturbation attack. A secondary classification under the global perturbation attack is made in terms of the types of of attacks detector like attack on two-stage network, attack on one-stage network, and attack on both kinds of networks. Furthermore, these attack methods are classified and summarized from the following perspectives as mentioned below: 1) the attack methods can be divided into black box attack and white box attack based on the issue of whether the attacker knows the information of the model's internal structure and parameters or not. 2) The attack methods can be divided into target attack and non-target attack derived from the identification results of the generated adversarial examples. 3) The attack methods can be divided into three categories: L0, L2 and L∞ via the perturbation norm used by the attack algorithm. 4) The attack methods can be divided into single loss function attack and combined loss function attack based on the loss function design of attack algorithm. These methods are summarized and analyzed on six aspects of the object detector type and the loss function design, and the following rules of the current adversarial example generation technology for object detection are obtained: 1) diversities of attack forms: a variety of adversarial loss functions are combined with the design of adversarial attack methods, such as background loss and context loss. In addition, the diversity of attack forms is also reflected in the context of diversity of attack methods. Global perturbations and local perturbations are represented by patch attacks both. 2) Diversities of attack objects: with the development of object detection technology, the types of detectors become more diverse, which makes the adversarial examples generation technology against detectors become more changeable, including one-stage attack, two-stage attack, as well as the attack against anchor-free detector. It is credible that future adversarial examples attacks against new techniques of object detection have its potentials. 3) Most of the existing adversarial attack methods are white box attack methods for specific detector, while few are black box attack methods. The reason is that object detection model is more complex and the training cycle is longer compared to image classification, so attacking object detection requires more model information to generate reliable adversarial examples. The issue of designing more and more effective black box attacks can be as a future research direction as well. Additionally, we select four classical methods of those are dense adversary generation (DAG), robust adversarial perturbation (RAP), unified and efficient adversary (UEA), and targeted adversarial objectness gradient attacks (TOG), and carry out comparative analysis through experiments. Then, the commonly attack and defense strategies are introduced from the perspectives of preprocessing and improving the robustness of the model, and these methods are summarized. The current methods of defense against examples are few, and the effect is not sufficient due to the specialty of object detection. Furthermore, the transferability of these models is compared to you only look once (YOLO)-Darknet and single shot multibox detector (SSD300) models, and the experimental results show that the UEA method has the best transferability among these methods. Finally, we summarize the challenges in the generation and defense of adversarial examples for object detection from the following three perspectives: 1) to enhance the transferability of adversarial examples for object detection. Transfer ability is one of the most important metrics to measure adversarial examples, especially in object detection technology. It is potential to enhance the transferability of adversarial examples to attack most object detection systems. 2) To facilitate adversarial defense for object detection. Current adversarial examples attack paths are lack of effective defenses. To enhance the robustness of object detection, it is developed for defense research against adversarial examples further. 3) Decrease the disturbance size and increase the generation speed of adversarial examples. Future development of it is possible to develop adversarial examples for object detection in related to shorter generation time and smaller generation disturbance in the future.关键词:object detection;adversarial examples;deep learning;adversarial defense;global perturbation;local perturbation561|542|8更新时间:2024-05-07

摘要:Object detection is essential for various of applications like semantic segmentation and human facial recognition, and it has been widely employed in public security related scenarios, including automatic driving, industrial control, and aerospace applications. Traditional object detection technology requires manual-based feature extraction and machine learning methods for classification, which is costly and inaccuracy for detection. Recent deep learning based object detection technology has gradually replaced the traditional object detection technology due to its high detection efficiency and accuracy. However, it has been proved that convolutional neural network (CNN) can be easily fooled by some imperceptible perturbations. These images with the added imperceptible perturbations are called adversarial examples. Adversarial examples were first discovered in the field of image classification, and were gradually developed into other fields. To clarify the vulnerabilities of adversarial attack and deep object detection system it is of great significance to improve the robustness and security of the deep learning based object detection model by using a holistic approach. We aims to enhancing the robustness of object detection models and putting forward defense strategies better in terms of analyzing and summarizing the adversarial attack and defense methods for object detection recently. First, our review is focused on the discussion of the development of object detection, and then introduces the origin, growth, motives of emergence and related terminologies of adversarial examples. The commonly evaluation metrics used and data sets in the generation of adversarial examples in object detection are also introduced. Then, 15 adversarial example generation algorithms for object detection, according to the generation of perturbation level classification, are classified as global perturbation attack and local perturbation attack. A secondary classification under the global perturbation attack is made in terms of the types of of attacks detector like attack on two-stage network, attack on one-stage network, and attack on both kinds of networks. Furthermore, these attack methods are classified and summarized from the following perspectives as mentioned below: 1) the attack methods can be divided into black box attack and white box attack based on the issue of whether the attacker knows the information of the model's internal structure and parameters or not. 2) The attack methods can be divided into target attack and non-target attack derived from the identification results of the generated adversarial examples. 3) The attack methods can be divided into three categories: L0, L2 and L∞ via the perturbation norm used by the attack algorithm. 4) The attack methods can be divided into single loss function attack and combined loss function attack based on the loss function design of attack algorithm. These methods are summarized and analyzed on six aspects of the object detector type and the loss function design, and the following rules of the current adversarial example generation technology for object detection are obtained: 1) diversities of attack forms: a variety of adversarial loss functions are combined with the design of adversarial attack methods, such as background loss and context loss. In addition, the diversity of attack forms is also reflected in the context of diversity of attack methods. Global perturbations and local perturbations are represented by patch attacks both. 2) Diversities of attack objects: with the development of object detection technology, the types of detectors become more diverse, which makes the adversarial examples generation technology against detectors become more changeable, including one-stage attack, two-stage attack, as well as the attack against anchor-free detector. It is credible that future adversarial examples attacks against new techniques of object detection have its potentials. 3) Most of the existing adversarial attack methods are white box attack methods for specific detector, while few are black box attack methods. The reason is that object detection model is more complex and the training cycle is longer compared to image classification, so attacking object detection requires more model information to generate reliable adversarial examples. The issue of designing more and more effective black box attacks can be as a future research direction as well. Additionally, we select four classical methods of those are dense adversary generation (DAG), robust adversarial perturbation (RAP), unified and efficient adversary (UEA), and targeted adversarial objectness gradient attacks (TOG), and carry out comparative analysis through experiments. Then, the commonly attack and defense strategies are introduced from the perspectives of preprocessing and improving the robustness of the model, and these methods are summarized. The current methods of defense against examples are few, and the effect is not sufficient due to the specialty of object detection. Furthermore, the transferability of these models is compared to you only look once (YOLO)-Darknet and single shot multibox detector (SSD300) models, and the experimental results show that the UEA method has the best transferability among these methods. Finally, we summarize the challenges in the generation and defense of adversarial examples for object detection from the following three perspectives: 1) to enhance the transferability of adversarial examples for object detection. Transfer ability is one of the most important metrics to measure adversarial examples, especially in object detection technology. It is potential to enhance the transferability of adversarial examples to attack most object detection systems. 2) To facilitate adversarial defense for object detection. Current adversarial examples attack paths are lack of effective defenses. To enhance the robustness of object detection, it is developed for defense research against adversarial examples further. 3) Decrease the disturbance size and increase the generation speed of adversarial examples. Future development of it is possible to develop adversarial examples for object detection in related to shorter generation time and smaller generation disturbance in the future.关键词:object detection;adversarial examples;deep learning;adversarial defense;global perturbation;local perturbation561|542|8更新时间:2024-05-07 -

摘要:To obtain smooth edge information that can accurately describe the local features and conform to the functional sub structure, the superpixel/voxel segmentation algorithm divides the points with the similar structure information into the same sub region. It is widely used in the field of magnetic resonance imaging (MRI) segmentation. We carry out the comparative performance analysis of different algorithms in brain tumor medical image segmentation. Our algorithms are used to set the number of superpixel seed points directly in the contexts of graph-based, normalized cut, entropy rate, topology preserving regularization, lazy random walk, Turbopixels, density-based spatial clustering of applications with noise(DBSCAN), linear spectral clustering(LSC), and simple linear iterative clustering algorithm (SLIC), respectively. Due to the watershed and the superpixel lattice algorithms cannot achieve accurate manipulations of the number of superpixels, it is required to achieve the superpixel segmentation of the brain tumor images in BraTS 2018 dataset. The graph-based algorithm can segment the core tumor region accurately and identify the brain tumor region with vascular filling effectively. However, it is insufficient for the segmentation accuracy of the completed and enhanced tumor regions of slightly. The performance of the normalized cut algorithm can obtain the brain tumor boundary derived of strong dependence information and retain the characteristic information of the tumor boundary. However, the algorithm divides the lesion region, the gray matter, and the white matter into the same superpixel. The whole tumor region can be divided into the multiple regions, which cannot represent the functional substructure of human brains effectively. The superpixel lattice algorithm can obtain the core tumor location better, but the segmented superpixel boundary does not have the strong attachment. The boundary information of the enhanced tumor can be obtained based on the entropy rate algorithm accurately, which has the obvious density difference between the tumor region and the surrounding tumor. Yet, the generated shape of superpixel boundary is irregular, which cannot express the clear neighborhood information. The topology preserving regularization algorithm can describe the focus accurately, but it cannot clarify the large mass span issue. The lazy random walk algorithm can generate more regular core tumor superpixel boundary, but it can not obtain enhanced tumor boundary information and cannot retain the characteristics of tumor boundary information. The watershed algorithm can obtain the weak boundary information of peripheral edema and intratumoral hemorrhage caused by brain tumor with obvious space occupying effect or lateral ventricular extrusion. However, the obtained superpixel does not conform to the structure of brain functional, which tends to different superpixel from the division of the same functional blocks. The Turbopixels algorithm overcomes the problem that the number of superpixels is different in the initial setting, which leads to the difference of the accuracy of the segmentation results and enhances the robustness of the algorithm. However, the algorithm has little contrast to the whole gray level and the accuracy of segmentation is greatly reduced with the presence of adhesion between the brain tumor location and the surrounding tissues. The DBSCAN algorithm can obtain the core tumor information and identify the necrotic region and the liquefied region in accordance with the image density, which can provide tumor information for complications. However, the algorithm is more sensitive to the noise points and is not robust to the boundary information. The LSC algorithm can release boundary blur and fuzziness of medical imaging equipment. But, the superpixel boundary divides the brain regions with the same features and functional substructures into the multiple blocks, which cannot reflect the shape, size, appearance, other forms of brain tumors, and the pull with the surrounding meninges or blood vessels. The SLIC algorithm has a strong compact and complete retention of feature continuity, which can extract brain tumor features. However, there is a lot of redundancy in the algorithm calculation process, which is challenged to large-scale object segmentation operation, the SLICO algorithm is improved through the SLIC algorithm, which has the high efficient segmentation with low computational complexity. In conclusion, such algorithms can preserve tumor boundary information and have local focal information better in related to graph-based, the normalized cut, the lazy random walk, the DBSCAN, and the LSC. The four algorithms keep the shape structure of the superpixel more complete and compact in regular like topology preserving regularization, Turbopixels, SLIC, and SLICO. Furthermore, the feature description of the lesion boundary is smooth and soft to make up the boundary deficiency. We summarize the current results and applications of various superpixel/voxel algorithms. The performance of the algorithm is analyzed by four indexes like boundary recall, under-segmentation error, compactness measure, and achievable segmentation accuracy. The superpixel/voxel algorithm can improve the efficiency of medical image processing with large object efficiency, which is beneficial to the expression of local information of the brain structure. Some future challenging issues are predicted as mentioned below: 1) to divide the brain function and regions without brain structure into the same sub region; 2) to resolve over-fitting or insufficient segmentation caused by abnormal points and noise points near the boundary; 3) to integrate multi-modal lesion information via machine learning.关键词:image processing;magnetic resonance imaging(MRI);superpixel/voxel;brain tumor segmentation;evaluation indicators142|345|0更新时间:2024-05-07

摘要:To obtain smooth edge information that can accurately describe the local features and conform to the functional sub structure, the superpixel/voxel segmentation algorithm divides the points with the similar structure information into the same sub region. It is widely used in the field of magnetic resonance imaging (MRI) segmentation. We carry out the comparative performance analysis of different algorithms in brain tumor medical image segmentation. Our algorithms are used to set the number of superpixel seed points directly in the contexts of graph-based, normalized cut, entropy rate, topology preserving regularization, lazy random walk, Turbopixels, density-based spatial clustering of applications with noise(DBSCAN), linear spectral clustering(LSC), and simple linear iterative clustering algorithm (SLIC), respectively. Due to the watershed and the superpixel lattice algorithms cannot achieve accurate manipulations of the number of superpixels, it is required to achieve the superpixel segmentation of the brain tumor images in BraTS 2018 dataset. The graph-based algorithm can segment the core tumor region accurately and identify the brain tumor region with vascular filling effectively. However, it is insufficient for the segmentation accuracy of the completed and enhanced tumor regions of slightly. The performance of the normalized cut algorithm can obtain the brain tumor boundary derived of strong dependence information and retain the characteristic information of the tumor boundary. However, the algorithm divides the lesion region, the gray matter, and the white matter into the same superpixel. The whole tumor region can be divided into the multiple regions, which cannot represent the functional substructure of human brains effectively. The superpixel lattice algorithm can obtain the core tumor location better, but the segmented superpixel boundary does not have the strong attachment. The boundary information of the enhanced tumor can be obtained based on the entropy rate algorithm accurately, which has the obvious density difference between the tumor region and the surrounding tumor. Yet, the generated shape of superpixel boundary is irregular, which cannot express the clear neighborhood information. The topology preserving regularization algorithm can describe the focus accurately, but it cannot clarify the large mass span issue. The lazy random walk algorithm can generate more regular core tumor superpixel boundary, but it can not obtain enhanced tumor boundary information and cannot retain the characteristics of tumor boundary information. The watershed algorithm can obtain the weak boundary information of peripheral edema and intratumoral hemorrhage caused by brain tumor with obvious space occupying effect or lateral ventricular extrusion. However, the obtained superpixel does not conform to the structure of brain functional, which tends to different superpixel from the division of the same functional blocks. The Turbopixels algorithm overcomes the problem that the number of superpixels is different in the initial setting, which leads to the difference of the accuracy of the segmentation results and enhances the robustness of the algorithm. However, the algorithm has little contrast to the whole gray level and the accuracy of segmentation is greatly reduced with the presence of adhesion between the brain tumor location and the surrounding tissues. The DBSCAN algorithm can obtain the core tumor information and identify the necrotic region and the liquefied region in accordance with the image density, which can provide tumor information for complications. However, the algorithm is more sensitive to the noise points and is not robust to the boundary information. The LSC algorithm can release boundary blur and fuzziness of medical imaging equipment. But, the superpixel boundary divides the brain regions with the same features and functional substructures into the multiple blocks, which cannot reflect the shape, size, appearance, other forms of brain tumors, and the pull with the surrounding meninges or blood vessels. The SLIC algorithm has a strong compact and complete retention of feature continuity, which can extract brain tumor features. However, there is a lot of redundancy in the algorithm calculation process, which is challenged to large-scale object segmentation operation, the SLICO algorithm is improved through the SLIC algorithm, which has the high efficient segmentation with low computational complexity. In conclusion, such algorithms can preserve tumor boundary information and have local focal information better in related to graph-based, the normalized cut, the lazy random walk, the DBSCAN, and the LSC. The four algorithms keep the shape structure of the superpixel more complete and compact in regular like topology preserving regularization, Turbopixels, SLIC, and SLICO. Furthermore, the feature description of the lesion boundary is smooth and soft to make up the boundary deficiency. We summarize the current results and applications of various superpixel/voxel algorithms. The performance of the algorithm is analyzed by four indexes like boundary recall, under-segmentation error, compactness measure, and achievable segmentation accuracy. The superpixel/voxel algorithm can improve the efficiency of medical image processing with large object efficiency, which is beneficial to the expression of local information of the brain structure. Some future challenging issues are predicted as mentioned below: 1) to divide the brain function and regions without brain structure into the same sub region; 2) to resolve over-fitting or insufficient segmentation caused by abnormal points and noise points near the boundary; 3) to integrate multi-modal lesion information via machine learning.关键词:image processing;magnetic resonance imaging(MRI);superpixel/voxel;brain tumor segmentation;evaluation indicators142|345|0更新时间:2024-05-07 -

摘要:Deep learning based data format is mainly related to voice, image and video. A regular data structure is usually represented in Euclidean space. The graph structure representation of non-Euclidean data can be used more widely in practice. A typical data structure graphs can describe the features of objects and the relationship between objects simultaneously. Therefore, the application research of graphs in deep learning has been focused on as a future research direction. However, learning data are generated from non-Euclidean spaces in common, and these data features and their relational structure can be defined by graphs. The graph neural network is facilitated to extend the neural network via process data processing in the graph domain. Each node is clarified by updating the expression of the node related to the neighbor node and the edge structure, which designates a research framework for subsequent research. With the help of the graph neural network framework, the graph convolutional neural network defines the updating functions on the convolutional aspect, information dissemination and aggregation between nodes is completed in terms of the convolution theorem to the graph signal. The graph convolutional neural network becomes an effective way of graph data related modeling. Most existing models have only two or three layers of shallow model architecture due to the challenge of over-smoothing phenomenon of deep layer graph structure. The over-smoothing phenomenon is that the graph convolutional neural network fuses node features smoothly via replicable Laplacian applications from different neighborhoods. The smoothing operation makes the vertex features of the same cluster be similar, which simplifies the classification task. But, the expression of each node tends to converge to a certain value in the graph when the number of layers is deepened, and the vertices become indistinguishable in different clusters. Existing researches have shown that graph convolution is similar to a local filter, which is a linear combination of feature vectors of neighboring neighbors. A shallow graph convolutional neural network cannot transmit the full label information to the entire graph with only a few labels, which cannot explore the global graph structure in the graph convolutional neural network. At the same time, deep graph convolutional neural networks require a larger receiving domain. One of the key advantages provided by deep architecture in computer vision is that they can compose complex functions from simple structures. Inspired by the convolutional neural network, the deep structure of the graph convolutional neural network can obtain more node representation information theoretically, so many researchers conduct in-depth research on its hierarchical information. According to the aggregation and transmission features of the graph convolutional neural network algorithm, the core of transferring hierarchical structure algorithms to graph data analysis lies in the construction of layer-level convolution operators and the fusion of information between layer levels. We review the graph network level information mining algorithms. First, we discuss the current situation and existing issues of graph convolutional neural networks, and then introduce the development of graph convolutional neural network hierarchy algorithms, and propose a new category method in term of the different layer information processing of graph convolution. Existing algorithms are divided into regularization methods and architecture adjustment methods. Regular methods focus on the construction of layer-level convolution operators. To deepen the graph neural network and slow down the occurrence of over-smoothing graph structure relationships are used to constrain the information transmission in the convolution process. Next, architecture adjustment methods are based on information fusion between levels to enrich the representation of nodes, including various residual connections like knowledge jumps or affine skip connections. At the same time, we demonstrate that the hierarchical feature nodes are obtained in the graph structure through the hierarchical features experiment. The hierarchical feature nodes can only be classified by the graph convolutional neural network at the corresponding depth. The graph network hierarchical information mining algorithm uses different data mining methods to classify different characteristic nodes via a unified model and an end-to-end paradigm. If there is a model that can classify hierarchical feature nodes at each level successfully, the task of graph network node classification will make good result. Finally, the main application fields of the graph convolutional neural network hierarchical information mining model are summarized, and the future research direction are predicted from four aspects of computing efficiency, large-scale data, dynamic graphs and application scenarios. Graph network hierarchical information mining is a deeper exploration of graph neural networks. The hierarchical information interaction, transfer and dynamic evolution can obtain the richer node information from the shallow to deep information of the graph neural network. We clarify the adopted issue of deep structured graph neural networks further. The effectiveness of information mining issue between levels has to be developed further although some of hierarchical graph convolutional network algorithms can slow down the occurrence of over-smoothing.关键词:hierarchical structure;graph convolutional networks(GCN);attention mechanism;artificial intelligence;deep learning143|974|1更新时间:2024-05-07

摘要:Deep learning based data format is mainly related to voice, image and video. A regular data structure is usually represented in Euclidean space. The graph structure representation of non-Euclidean data can be used more widely in practice. A typical data structure graphs can describe the features of objects and the relationship between objects simultaneously. Therefore, the application research of graphs in deep learning has been focused on as a future research direction. However, learning data are generated from non-Euclidean spaces in common, and these data features and their relational structure can be defined by graphs. The graph neural network is facilitated to extend the neural network via process data processing in the graph domain. Each node is clarified by updating the expression of the node related to the neighbor node and the edge structure, which designates a research framework for subsequent research. With the help of the graph neural network framework, the graph convolutional neural network defines the updating functions on the convolutional aspect, information dissemination and aggregation between nodes is completed in terms of the convolution theorem to the graph signal. The graph convolutional neural network becomes an effective way of graph data related modeling. Most existing models have only two or three layers of shallow model architecture due to the challenge of over-smoothing phenomenon of deep layer graph structure. The over-smoothing phenomenon is that the graph convolutional neural network fuses node features smoothly via replicable Laplacian applications from different neighborhoods. The smoothing operation makes the vertex features of the same cluster be similar, which simplifies the classification task. But, the expression of each node tends to converge to a certain value in the graph when the number of layers is deepened, and the vertices become indistinguishable in different clusters. Existing researches have shown that graph convolution is similar to a local filter, which is a linear combination of feature vectors of neighboring neighbors. A shallow graph convolutional neural network cannot transmit the full label information to the entire graph with only a few labels, which cannot explore the global graph structure in the graph convolutional neural network. At the same time, deep graph convolutional neural networks require a larger receiving domain. One of the key advantages provided by deep architecture in computer vision is that they can compose complex functions from simple structures. Inspired by the convolutional neural network, the deep structure of the graph convolutional neural network can obtain more node representation information theoretically, so many researchers conduct in-depth research on its hierarchical information. According to the aggregation and transmission features of the graph convolutional neural network algorithm, the core of transferring hierarchical structure algorithms to graph data analysis lies in the construction of layer-level convolution operators and the fusion of information between layer levels. We review the graph network level information mining algorithms. First, we discuss the current situation and existing issues of graph convolutional neural networks, and then introduce the development of graph convolutional neural network hierarchy algorithms, and propose a new category method in term of the different layer information processing of graph convolution. Existing algorithms are divided into regularization methods and architecture adjustment methods. Regular methods focus on the construction of layer-level convolution operators. To deepen the graph neural network and slow down the occurrence of over-smoothing graph structure relationships are used to constrain the information transmission in the convolution process. Next, architecture adjustment methods are based on information fusion between levels to enrich the representation of nodes, including various residual connections like knowledge jumps or affine skip connections. At the same time, we demonstrate that the hierarchical feature nodes are obtained in the graph structure through the hierarchical features experiment. The hierarchical feature nodes can only be classified by the graph convolutional neural network at the corresponding depth. The graph network hierarchical information mining algorithm uses different data mining methods to classify different characteristic nodes via a unified model and an end-to-end paradigm. If there is a model that can classify hierarchical feature nodes at each level successfully, the task of graph network node classification will make good result. Finally, the main application fields of the graph convolutional neural network hierarchical information mining model are summarized, and the future research direction are predicted from four aspects of computing efficiency, large-scale data, dynamic graphs and application scenarios. Graph network hierarchical information mining is a deeper exploration of graph neural networks. The hierarchical information interaction, transfer and dynamic evolution can obtain the richer node information from the shallow to deep information of the graph neural network. We clarify the adopted issue of deep structured graph neural networks further. The effectiveness of information mining issue between levels has to be developed further although some of hierarchical graph convolutional network algorithms can slow down the occurrence of over-smoothing.关键词:hierarchical structure;graph convolutional networks(GCN);attention mechanism;artificial intelligence;deep learning143|974|1更新时间:2024-05-07 -

摘要:The multimedia imaging technology can meet people's visual demands to a certain extent. People can easily obtain high-quality images through mobile devices, so people begin to pay more attention to their aesthetic experience of images, which makes the image aesthetics assessment (IAA) method become a hotspot issue and frontier technology in the current image processing and computer vision fields. Intelligent IAA can be developed to imitate people's aesthetic perception of images and predict the results of aesthetic evaluation automatically. Aesthetic-preference images can be screened out. Consequently, IAA is critical to be applied in photography, beauty, photo album management, interface design, and marketing. Generally, IAA can be classified into two categories, including generic image aesthetics assessment (GIAA) and personalized image aesthetics assessment (PIAA). Early researches believe that people have a consensus on the aesthetic experience of images, and leverage the general photography rules to describe most people's visual aesthetics on images, which are usually affected by many factors, such as light intensity, color richness, and composition. Most of the current GIAA model can predict most people's aesthetic evaluation results of images. GIAA models can be divided into three aesthetic-related tasks like classification, score regression and distribution prediction. The aesthetic classification task is focused on dividing the image into two classes of "high" and "low" according to the aesthetic experience of most people. The research goal of the aesthetic score regression task can predict the aesthetic score of an image. This task leverages the average aesthetic ratings of most people as the image aesthetic score for regression modeling. However, the two tasks shown above need to convert different people's aesthetic ratings of images into a unified result ("high" or "low" and score). Label uncertainty is derived from people's aesthetic experience of images, which makes it difficult for the consensus result. Therefore, the predictable aesthetic distribution is more concerned to reflect people's subjectivity. The goal of the aesthetic distribution prediction task can predict the aesthetic distribution results of multiple people's ratings of an image. This task predicts the aesthetic distribution straightforward and converts the aesthetic distribution result into aesthetic scores and aesthetic classes. Consequently, recent GIAA models researches are mainly focused on the task of aesthetic distribution prediction. Although the aesthetic distribution prediction task of the GIAA model can reflect people's subjectivity of image aesthetics to a certain extent, the task can realize people's visual aesthetic preferences from the image level only. Besides, it is more realistic to develop the PIAA model for specification beneficial from the growth of customized services. Therefore, the PIAA model has received great attention recently, which can predict the accurate aesthetic results for customized users. We introduce the existing PIAA models published from 2014 to 2020 due to the lack of reviews on PIAA models. Generally, the PIAA model faces two challenges for specific users as mentioned below: First, PIAA is a typical small sample learning task. This is because the PIAA model is a real-time system for specific users, which cannot force users to annotate a large number of images aesthetically. In addition, a small number of image samples can just be obtained for model training. Second, the user's subjective characteristics become important factors to affect their aesthetic perception of images since the user's aesthetic experience of images is highly subjective. Meanwhile, users' aesthetic experience is influenced by many subjective factors like emotion and personality traits. Therefore, the existing framework of the PIAA model is mainly divided into two stages based on machine learning or deep learning. In the first stage, the GIAA dataset rated by a large number of users is used to obtain the prior knowledge of the PIAA model through supervision training for the smalls sized sample learning issue of the PIAA task. In the second stage, a user's PIAA dataset is used for fine-tuning on the prior knowledge model for the high subjectivity of users' image aesthetic experience, and the subjective knowledge of users is integrated to obtain the PIAA model that conforms to the user's aesthetic perception. The existing PIAA models can be divided into three categories like collaborative filtering based PIAA models, user interaction based PIAA models, and aesthetic difference based PIAA models. To demonstrate the differences between these three PIAA models, we first introduce each of the three PIAA models separately. Then, we summarize the design clues, pros and cons of existing PIAA models. Meanwhile, we introduce the commonly used datasets and evaluation criteria of PIAA models, as well as the application prospect of PIAA models in precision marketing, personalized recommendation systems, personalized visual enhancement, and personalized art design. Finally, the future direction of the PIAA model is predicted in subjective characteristic analysis and knowledge-driven modeling.关键词:personalized image aesthetics;evaluation metrics;aesthetic experience;subjective characteristics;know-ledge-driven212|928|0更新时间:2024-05-07

摘要:The multimedia imaging technology can meet people's visual demands to a certain extent. People can easily obtain high-quality images through mobile devices, so people begin to pay more attention to their aesthetic experience of images, which makes the image aesthetics assessment (IAA) method become a hotspot issue and frontier technology in the current image processing and computer vision fields. Intelligent IAA can be developed to imitate people's aesthetic perception of images and predict the results of aesthetic evaluation automatically. Aesthetic-preference images can be screened out. Consequently, IAA is critical to be applied in photography, beauty, photo album management, interface design, and marketing. Generally, IAA can be classified into two categories, including generic image aesthetics assessment (GIAA) and personalized image aesthetics assessment (PIAA). Early researches believe that people have a consensus on the aesthetic experience of images, and leverage the general photography rules to describe most people's visual aesthetics on images, which are usually affected by many factors, such as light intensity, color richness, and composition. Most of the current GIAA model can predict most people's aesthetic evaluation results of images. GIAA models can be divided into three aesthetic-related tasks like classification, score regression and distribution prediction. The aesthetic classification task is focused on dividing the image into two classes of "high" and "low" according to the aesthetic experience of most people. The research goal of the aesthetic score regression task can predict the aesthetic score of an image. This task leverages the average aesthetic ratings of most people as the image aesthetic score for regression modeling. However, the two tasks shown above need to convert different people's aesthetic ratings of images into a unified result ("high" or "low" and score). Label uncertainty is derived from people's aesthetic experience of images, which makes it difficult for the consensus result. Therefore, the predictable aesthetic distribution is more concerned to reflect people's subjectivity. The goal of the aesthetic distribution prediction task can predict the aesthetic distribution results of multiple people's ratings of an image. This task predicts the aesthetic distribution straightforward and converts the aesthetic distribution result into aesthetic scores and aesthetic classes. Consequently, recent GIAA models researches are mainly focused on the task of aesthetic distribution prediction. Although the aesthetic distribution prediction task of the GIAA model can reflect people's subjectivity of image aesthetics to a certain extent, the task can realize people's visual aesthetic preferences from the image level only. Besides, it is more realistic to develop the PIAA model for specification beneficial from the growth of customized services. Therefore, the PIAA model has received great attention recently, which can predict the accurate aesthetic results for customized users. We introduce the existing PIAA models published from 2014 to 2020 due to the lack of reviews on PIAA models. Generally, the PIAA model faces two challenges for specific users as mentioned below: First, PIAA is a typical small sample learning task. This is because the PIAA model is a real-time system for specific users, which cannot force users to annotate a large number of images aesthetically. In addition, a small number of image samples can just be obtained for model training. Second, the user's subjective characteristics become important factors to affect their aesthetic perception of images since the user's aesthetic experience of images is highly subjective. Meanwhile, users' aesthetic experience is influenced by many subjective factors like emotion and personality traits. Therefore, the existing framework of the PIAA model is mainly divided into two stages based on machine learning or deep learning. In the first stage, the GIAA dataset rated by a large number of users is used to obtain the prior knowledge of the PIAA model through supervision training for the smalls sized sample learning issue of the PIAA task. In the second stage, a user's PIAA dataset is used for fine-tuning on the prior knowledge model for the high subjectivity of users' image aesthetic experience, and the subjective knowledge of users is integrated to obtain the PIAA model that conforms to the user's aesthetic perception. The existing PIAA models can be divided into three categories like collaborative filtering based PIAA models, user interaction based PIAA models, and aesthetic difference based PIAA models. To demonstrate the differences between these three PIAA models, we first introduce each of the three PIAA models separately. Then, we summarize the design clues, pros and cons of existing PIAA models. Meanwhile, we introduce the commonly used datasets and evaluation criteria of PIAA models, as well as the application prospect of PIAA models in precision marketing, personalized recommendation systems, personalized visual enhancement, and personalized art design. Finally, the future direction of the PIAA model is predicted in subjective characteristic analysis and knowledge-driven modeling.关键词:personalized image aesthetics;evaluation metrics;aesthetic experience;subjective characteristics;know-ledge-driven212|928|0更新时间:2024-05-07 -