最新刊期

卷 26 , 期 9 , 2021

-

摘要:Medical image registration (MIR) has aimed to implement the optimal transformation via aligning anatomical structures of a pair of medical images spatially. The crucial clinical applications like disease diagnosis, surgical guidance and radiation therapy have been envolved. Scholors have categorized MIR into inter-/intra-patient registration, uni-/multi-modal registration and rigid/non-rigid registration. Image classification has been developing deep learning-based (DL-based) MIR methods. The DL-based MIR has demonstrated substantial improvement in computational efficiency and task-specific registration accuracy over traditional iterative registration approaches. A sophisticated literature review of DL-based MIR have benefited to the disciplines. The current MIR has been analysed based on iterative optimization to one-step prediction and supervised learning to unsupervised learning. The DL-based MIR has been classified into fully supervised, dual supervised, weakly supervised and unsupervised approaches to train the DL network via the amount of supervision. Each category has been systematically reviewed. At the beginning, fully supervised methods have been reviewed in terms of the initial exploration to remove the time-consuming with low inference speed issues of deep iterative registration algorithms (deep similarity-based registration, reinforcement learning-based registration). One-step fully supervised registration has predicted the final transformation. The lack of training datasets with ground-truth transformations have barriered to train a fully supervised registration network. Most scholors have generated synthesized transformations with the following three approaches as below: 1) random augmentation-based generation; 2) traditional registration-based generation; 3) model-based generation. Next, the integration of dual-supervised and weak supervised registration have alleviated the reliance on ground truth compared with fully supervised approaches via the transition technologies between fully supervised and unsupervised methods. Dual-supervised registration frameworks have integrated image similarity metric to supervise the training. Weak supervised registration in the context of anatomical labels of interest (solid organs, vessels, ducts, structure boundaries and other subject-specific ad hoc landmarks) has replaced ground truth. The label similarity using label-driven supervised registration has facilitated the network to directly estimate the transformation for paired fixed image and moving image. The end-to-end unsupervision has been used to indicate the DL-based medical image registration evolved into the unsupervised field gradually. The unsupervision has avoided the acquisition of ground-truth transformations and segmentation labels for the supervised methods. Unsupervised registration frameworks have performed spatial data based on spatial transformer network (STN) to flat image similarity loss calculation during the training process with unknown transformations further. The latest developments and applications of DL-based unsupervised registration methods have been summarized from the aspects of loss functions and network architectures. DL-based unsupervised registration algorithms on liver CT(computed tomography) scan datasets have also been re-implemented. The demonstrated analyses have the priority to baseline model. At the end, the potentials and possibilities have been illustrated as following: 1) constructing more robust similarity metrics and more effective regularization terms to deal with multi-modality MIR; 2) quantifying registration result confidence of various DL-based models or integrating domain knowledge into current data-driven networks; 3) designing more qualified networks with fewer parameters (e.g., 3D convolution factorization, capsule network architecture).关键词:medical image registration;deep learning(DL);full supervised learning;dual supervised learning;weakly supervised learning;unsupervised learning295|430|6更新时间:2024-05-07

摘要:Medical image registration (MIR) has aimed to implement the optimal transformation via aligning anatomical structures of a pair of medical images spatially. The crucial clinical applications like disease diagnosis, surgical guidance and radiation therapy have been envolved. Scholors have categorized MIR into inter-/intra-patient registration, uni-/multi-modal registration and rigid/non-rigid registration. Image classification has been developing deep learning-based (DL-based) MIR methods. The DL-based MIR has demonstrated substantial improvement in computational efficiency and task-specific registration accuracy over traditional iterative registration approaches. A sophisticated literature review of DL-based MIR have benefited to the disciplines. The current MIR has been analysed based on iterative optimization to one-step prediction and supervised learning to unsupervised learning. The DL-based MIR has been classified into fully supervised, dual supervised, weakly supervised and unsupervised approaches to train the DL network via the amount of supervision. Each category has been systematically reviewed. At the beginning, fully supervised methods have been reviewed in terms of the initial exploration to remove the time-consuming with low inference speed issues of deep iterative registration algorithms (deep similarity-based registration, reinforcement learning-based registration). One-step fully supervised registration has predicted the final transformation. The lack of training datasets with ground-truth transformations have barriered to train a fully supervised registration network. Most scholors have generated synthesized transformations with the following three approaches as below: 1) random augmentation-based generation; 2) traditional registration-based generation; 3) model-based generation. Next, the integration of dual-supervised and weak supervised registration have alleviated the reliance on ground truth compared with fully supervised approaches via the transition technologies between fully supervised and unsupervised methods. Dual-supervised registration frameworks have integrated image similarity metric to supervise the training. Weak supervised registration in the context of anatomical labels of interest (solid organs, vessels, ducts, structure boundaries and other subject-specific ad hoc landmarks) has replaced ground truth. The label similarity using label-driven supervised registration has facilitated the network to directly estimate the transformation for paired fixed image and moving image. The end-to-end unsupervision has been used to indicate the DL-based medical image registration evolved into the unsupervised field gradually. The unsupervision has avoided the acquisition of ground-truth transformations and segmentation labels for the supervised methods. Unsupervised registration frameworks have performed spatial data based on spatial transformer network (STN) to flat image similarity loss calculation during the training process with unknown transformations further. The latest developments and applications of DL-based unsupervised registration methods have been summarized from the aspects of loss functions and network architectures. DL-based unsupervised registration algorithms on liver CT(computed tomography) scan datasets have also been re-implemented. The demonstrated analyses have the priority to baseline model. At the end, the potentials and possibilities have been illustrated as following: 1) constructing more robust similarity metrics and more effective regularization terms to deal with multi-modality MIR; 2) quantifying registration result confidence of various DL-based models or integrating domain knowledge into current data-driven networks; 3) designing more qualified networks with fewer parameters (e.g., 3D convolution factorization, capsule network architecture).关键词:medical image registration;deep learning(DL);full supervised learning;dual supervised learning;weakly supervised learning;unsupervised learning295|430|6更新时间:2024-05-07 -

摘要:Medical imaging has been a proactive tool for doctors to diagnose and treat diseases via the qualitative and quantitative analyses based on non-invasive lesions. Medical images have been interpreted via computer tomography (CT), X-ray, magnetic resonance imaging (MRI) and positron emission tomography (PET). The barriers of medical image segmentation need to be resolved due to low contrast amongst the lesion, the surrounding tissue and blurred edges of the lesion. Labeling manually for hundreds of slices of organs or lesions has been quite time-consuming due to anatomy of the human body and shape of lesions. Manual labeling has intended to high subjective and low reproducibility. Doctors have been beneficial from a automatically locating, segmenting and quantifying lesions. Deep learning has been used widely in medical image processing. Deep learning-based U-Net has played a key role in the lesions segmentation. The encoding and decoding ways has made U-Net structures simply and symmetrically. Features extraction of medical images has been realized via convolution and down-sampling operations. The image segmentation mask via the transposed convolution and concatenation has been interpreted. A small-sized dataset has achieved qualified medical image segmentation. U-Net has been summarized and analyzed on the four aspects: the definition of U-Net, the upgrading of U-Net model, the setup of U-Net structure and the mechanism of U-Net. Four research areas have been proposed as below: 1) the basic structure and working principle of U-Net via convolution operation, down sampling, up sampling and concatenation. 2) U-Net network model have been demonstrated in three aspects in the context of the number of encoders, multiple U-Net cascades and other models combined with U-Net. U-Net based network have been divided into two, three and four encoders further in terms of the amount of encoders: Y-Net, Ψ-Net and multi-path dense U-Net. Multiple U-Nets cascade has been categorized into multiple U-Nets in series and multiple U-Nets in parallel based on the cascades mode of multiple U-Nets. In addition U-Net has improved the segmentation performance on the aspects of dual tree complex wavelet transform, local difference method, level set, random walk, graph cutting, CNNs(convolutional neural networks) and deep reinforcement learning. The upgrading of U-Net network structure have been divided into six subcategories including image augmentation, convolution operation, down-sampling operation, up-sampling operation, model optimization strategies and concatenation. Image enhancement has be divided into elastic deformation, geometric transformation, generative adversarial networks (GAN), Wasserstein generative adversarial networks (WGAN) and real-time image enhancement further. The convolution operation has been improved via padding mode and convolution redesign. The padding mode mentioned has adapted constant padding, zero padding, replication padding and reflection padding and improvements to dilated convolution, inception module and asymmetric convolution. The down-sampling has been improved via max-pooling, average-pooling, stride convolution, dilated convolution, inception module and spatial pyramid pooling. Several up-sampling improvements have illustrated simultaneously via sub-pixel convolution, transposed convolution, nearest neighbor interpolation, bilinear interpolation and trilinear interpolation. Model optimization strategies have been divided into two aspects in detail of activation function and normalization, the improvements of activation function includes rectified linear unit(ReLU), parametric ReLU(PReLU), random ReLU(RReLU), leaky ReLU(LReLU), hard exponential linear sigmoid squahing(HardELiSH) and exponential linear sigmoid squashing(ELiSH), and normalization method. The improvements have been to shown based on batch normalization, group normalization, instance normalization and layer normalization. The concatenation based improvement has been one of the future research area. The current concatenation improvements have been mainly realized via attention mechanism, new concatenation, feature reuse and de-convolution with activation function, annotation information fusion from Siamese network. The improved mechanisms in the U-Net network have been emphasized based on residual mechanism, dense mechanism, attention mechanism and the multi-mechanisms integration. The segmentation performance of the network can be enhanced. The further four research areas in U-Net have been illustrated as below: 1) the generalization of deep learning methods cannot be customized to fit the segmentation network for specific scenarios in the future. 2) Supervised deep learning models have required a lot of annotated images labeled for treatment. Unsupervised and semi-supervised deep learning models have been a vital research work further. 3) The low interpretability of U-Net network has lead the low acceptance in the mechanism of its operation.4) More accurate segmentation mask with fewer parameters has been obtained via good quality network structure. The precise manual segmentation has been so time-consuming and labor intensive. The simplified and quick semi-automatic segmentation has relied on the parameters and user-specified image preprocessing. The deep learning-based U-Net network has been segmented the lesions quickly, accurately and consistently. The structure, improvements and further research areas of U-Net network have been analyzed to the development of U-Net network.关键词:U-Net;medical image;semantic segmentation;network structure;network model209|158|27更新时间:2024-05-07

摘要:Medical imaging has been a proactive tool for doctors to diagnose and treat diseases via the qualitative and quantitative analyses based on non-invasive lesions. Medical images have been interpreted via computer tomography (CT), X-ray, magnetic resonance imaging (MRI) and positron emission tomography (PET). The barriers of medical image segmentation need to be resolved due to low contrast amongst the lesion, the surrounding tissue and blurred edges of the lesion. Labeling manually for hundreds of slices of organs or lesions has been quite time-consuming due to anatomy of the human body and shape of lesions. Manual labeling has intended to high subjective and low reproducibility. Doctors have been beneficial from a automatically locating, segmenting and quantifying lesions. Deep learning has been used widely in medical image processing. Deep learning-based U-Net has played a key role in the lesions segmentation. The encoding and decoding ways has made U-Net structures simply and symmetrically. Features extraction of medical images has been realized via convolution and down-sampling operations. The image segmentation mask via the transposed convolution and concatenation has been interpreted. A small-sized dataset has achieved qualified medical image segmentation. U-Net has been summarized and analyzed on the four aspects: the definition of U-Net, the upgrading of U-Net model, the setup of U-Net structure and the mechanism of U-Net. Four research areas have been proposed as below: 1) the basic structure and working principle of U-Net via convolution operation, down sampling, up sampling and concatenation. 2) U-Net network model have been demonstrated in three aspects in the context of the number of encoders, multiple U-Net cascades and other models combined with U-Net. U-Net based network have been divided into two, three and four encoders further in terms of the amount of encoders: Y-Net, Ψ-Net and multi-path dense U-Net. Multiple U-Nets cascade has been categorized into multiple U-Nets in series and multiple U-Nets in parallel based on the cascades mode of multiple U-Nets. In addition U-Net has improved the segmentation performance on the aspects of dual tree complex wavelet transform, local difference method, level set, random walk, graph cutting, CNNs(convolutional neural networks) and deep reinforcement learning. The upgrading of U-Net network structure have been divided into six subcategories including image augmentation, convolution operation, down-sampling operation, up-sampling operation, model optimization strategies and concatenation. Image enhancement has be divided into elastic deformation, geometric transformation, generative adversarial networks (GAN), Wasserstein generative adversarial networks (WGAN) and real-time image enhancement further. The convolution operation has been improved via padding mode and convolution redesign. The padding mode mentioned has adapted constant padding, zero padding, replication padding and reflection padding and improvements to dilated convolution, inception module and asymmetric convolution. The down-sampling has been improved via max-pooling, average-pooling, stride convolution, dilated convolution, inception module and spatial pyramid pooling. Several up-sampling improvements have illustrated simultaneously via sub-pixel convolution, transposed convolution, nearest neighbor interpolation, bilinear interpolation and trilinear interpolation. Model optimization strategies have been divided into two aspects in detail of activation function and normalization, the improvements of activation function includes rectified linear unit(ReLU), parametric ReLU(PReLU), random ReLU(RReLU), leaky ReLU(LReLU), hard exponential linear sigmoid squahing(HardELiSH) and exponential linear sigmoid squashing(ELiSH), and normalization method. The improvements have been to shown based on batch normalization, group normalization, instance normalization and layer normalization. The concatenation based improvement has been one of the future research area. The current concatenation improvements have been mainly realized via attention mechanism, new concatenation, feature reuse and de-convolution with activation function, annotation information fusion from Siamese network. The improved mechanisms in the U-Net network have been emphasized based on residual mechanism, dense mechanism, attention mechanism and the multi-mechanisms integration. The segmentation performance of the network can be enhanced. The further four research areas in U-Net have been illustrated as below: 1) the generalization of deep learning methods cannot be customized to fit the segmentation network for specific scenarios in the future. 2) Supervised deep learning models have required a lot of annotated images labeled for treatment. Unsupervised and semi-supervised deep learning models have been a vital research work further. 3) The low interpretability of U-Net network has lead the low acceptance in the mechanism of its operation.4) More accurate segmentation mask with fewer parameters has been obtained via good quality network structure. The precise manual segmentation has been so time-consuming and labor intensive. The simplified and quick semi-automatic segmentation has relied on the parameters and user-specified image preprocessing. The deep learning-based U-Net network has been segmented the lesions quickly, accurately and consistently. The structure, improvements and further research areas of U-Net network have been analyzed to the development of U-Net network.关键词:U-Net;medical image;semantic segmentation;network structure;network model209|158|27更新时间:2024-05-07 -

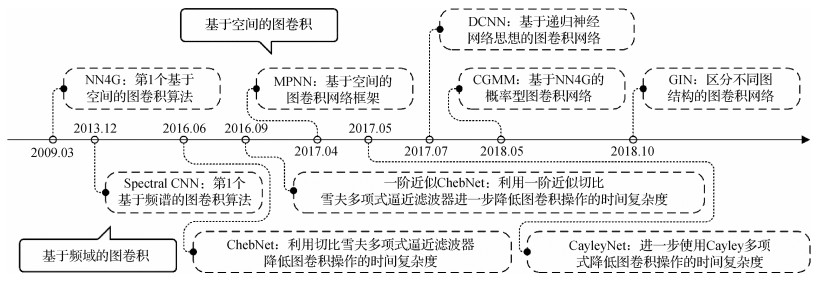

摘要:The convolutional neural networks (CNN) have been facilitated to develop deep learning-based medical image sustainable research. The translation invariance capability has constrained the expression of CNN in the context of non-Euclidean spatial data. In order to realize deep learning-based spatial feature extraction, graph convolution has resolved the topology modeling issue based on non-Euclidean spatial data. The latest theories and applications of graph convolutional networks (GCN) for medical image analysis have been reviewed. This research has been divided into four aspects as follows: 1) Data structure transformation of medical images based on graph-structure; 2) Theoretical development and network architecture of GCN; 3) The optimized and derivative of graph convolution mechanism; 4) GCN implementation in medical image segmentation, disease detection, and image reconstruction. First, graph-structure-based medical images transformation has been reviewed in the context of graph data acquisition, transformation, and reconstruction. The graph-structure-based medical data have been acquired via the professional medical equipment, the sparse pruning algorithm, or the rebuilt graph-structure using the K-nearest neighbor (KNN) algorithm. The graph-structure reconstruction algorithm based on the medical image features has performed better than the graph-structure conversion algorithm based on the medical image data. Next, the critical architecture of the GCN, including the graph convolutional layer, the graph regularization layer, the graph pooling layer, and the graph readout layer, has been summarized. The graph-structural nodes or edges have been updated via the graph convolution layer. The generalization of GCN has been upgraded via the graph regularization layer. The number of calculation parameters has been reduced via the graph pooling layer. The representation of the graph has been generated via the graph readout layer. Graph convolution has been categorized into two methods as mentioned below: a) The spectrum-based graph convolution operation has been implemented via the theory of graph spectrum; b) The spatial domain-based graph convolution operation has been defined via the connectivity of each node. The spectrum-based graph convolution has relied on the eigen-decomposition of the Laplace matrix with the defects of high time complexity, poor portability, and narrow application. The convolution can be optimized by Chebyshev Inequality analysis. The graph pooling layer has effectively reduced parameter size. The graph regularization layer can facilitate the generalization of the model and alleviate the over-fitting and over-smoothing issues. The different structural features, node features, and edge features have been extracted based on the graph convolutional layer. All features need to be aggregated to complete the classification (note: this operation is called the readout operation, and its function is similar to the fully connected layer of CNN). Third, the development and derivation mechanism of GCN optimizations have been summarized. For instance, the jump connection mechanism of deepGCN has alleviated the over-smooth issue. The outputs of multiple GCN based on inception architecture can be integrated to improve the representation ability of the model. The graph attention mechanism has aggregated the differentiated information of the GCN nodes. The adjacency matrix reconstruction has been critically optimized to achieve qualified GCN model performance via learning the hidden structure of the unidentified graph adjacency matrix. Fourth, the main application of GCN for medical image analysis has been interpreted. The general graph-structure construct algorithm for GCN application to medical image segmentation has taken the region of interest (ROI) as the node and the existence of connection in the ROI as the edge. For some unique imaging data (such as brain voxel data and cardiac coronary artery surface grid data), the KNN algorithm has been used to convert them into a graph-structure. The improvement of model architecture has changed from the simple stack of CNN and GCN to the complex combination of various models. The previous medical images application of GCN in disease detection has mainly focused on brain images. Disease detection has been accomplished by using GCN based on the various relationships between objects. Current research on disease detection has mainly divided into three aspects: 1) various CNN models have been used to extract the features based on the original medical images; 2) the KNN algorithm or graph attention algorithm has been used for feature reconstruction; 3) the potential relationship between features is mined by graph convolution for feature classification. In addition, GCN have been used for brain magnetic resonance imaging (MRI) reconstruction, liver image reconstruction, heart image reconstruction, and other diagnoses. In a word, GCN have effectively mined the generalized topological structure in image data on the aspects of medical image segmentation, disease detection, and image reconstruction. The integrated deep learning architecture, which uses pre-trained CNN as feature extractor and GCN as the classifier, has solved the missing issues of medical training samples in a graph structure and significantly improved the performance of deep learning technology in medical image analysis.关键词:medical image;deep learning;graph representation learning;graph neural network(GNN);graph convolution network(GCN)230|150|5更新时间:2024-05-07

摘要:The convolutional neural networks (CNN) have been facilitated to develop deep learning-based medical image sustainable research. The translation invariance capability has constrained the expression of CNN in the context of non-Euclidean spatial data. In order to realize deep learning-based spatial feature extraction, graph convolution has resolved the topology modeling issue based on non-Euclidean spatial data. The latest theories and applications of graph convolutional networks (GCN) for medical image analysis have been reviewed. This research has been divided into four aspects as follows: 1) Data structure transformation of medical images based on graph-structure; 2) Theoretical development and network architecture of GCN; 3) The optimized and derivative of graph convolution mechanism; 4) GCN implementation in medical image segmentation, disease detection, and image reconstruction. First, graph-structure-based medical images transformation has been reviewed in the context of graph data acquisition, transformation, and reconstruction. The graph-structure-based medical data have been acquired via the professional medical equipment, the sparse pruning algorithm, or the rebuilt graph-structure using the K-nearest neighbor (KNN) algorithm. The graph-structure reconstruction algorithm based on the medical image features has performed better than the graph-structure conversion algorithm based on the medical image data. Next, the critical architecture of the GCN, including the graph convolutional layer, the graph regularization layer, the graph pooling layer, and the graph readout layer, has been summarized. The graph-structural nodes or edges have been updated via the graph convolution layer. The generalization of GCN has been upgraded via the graph regularization layer. The number of calculation parameters has been reduced via the graph pooling layer. The representation of the graph has been generated via the graph readout layer. Graph convolution has been categorized into two methods as mentioned below: a) The spectrum-based graph convolution operation has been implemented via the theory of graph spectrum; b) The spatial domain-based graph convolution operation has been defined via the connectivity of each node. The spectrum-based graph convolution has relied on the eigen-decomposition of the Laplace matrix with the defects of high time complexity, poor portability, and narrow application. The convolution can be optimized by Chebyshev Inequality analysis. The graph pooling layer has effectively reduced parameter size. The graph regularization layer can facilitate the generalization of the model and alleviate the over-fitting and over-smoothing issues. The different structural features, node features, and edge features have been extracted based on the graph convolutional layer. All features need to be aggregated to complete the classification (note: this operation is called the readout operation, and its function is similar to the fully connected layer of CNN). Third, the development and derivation mechanism of GCN optimizations have been summarized. For instance, the jump connection mechanism of deepGCN has alleviated the over-smooth issue. The outputs of multiple GCN based on inception architecture can be integrated to improve the representation ability of the model. The graph attention mechanism has aggregated the differentiated information of the GCN nodes. The adjacency matrix reconstruction has been critically optimized to achieve qualified GCN model performance via learning the hidden structure of the unidentified graph adjacency matrix. Fourth, the main application of GCN for medical image analysis has been interpreted. The general graph-structure construct algorithm for GCN application to medical image segmentation has taken the region of interest (ROI) as the node and the existence of connection in the ROI as the edge. For some unique imaging data (such as brain voxel data and cardiac coronary artery surface grid data), the KNN algorithm has been used to convert them into a graph-structure. The improvement of model architecture has changed from the simple stack of CNN and GCN to the complex combination of various models. The previous medical images application of GCN in disease detection has mainly focused on brain images. Disease detection has been accomplished by using GCN based on the various relationships between objects. Current research on disease detection has mainly divided into three aspects: 1) various CNN models have been used to extract the features based on the original medical images; 2) the KNN algorithm or graph attention algorithm has been used for feature reconstruction; 3) the potential relationship between features is mined by graph convolution for feature classification. In addition, GCN have been used for brain magnetic resonance imaging (MRI) reconstruction, liver image reconstruction, heart image reconstruction, and other diagnoses. In a word, GCN have effectively mined the generalized topological structure in image data on the aspects of medical image segmentation, disease detection, and image reconstruction. The integrated deep learning architecture, which uses pre-trained CNN as feature extractor and GCN as the classifier, has solved the missing issues of medical training samples in a graph structure and significantly improved the performance of deep learning technology in medical image analysis.关键词:medical image;deep learning;graph representation learning;graph neural network(GNN);graph convolution network(GCN)230|150|5更新时间:2024-05-07 -

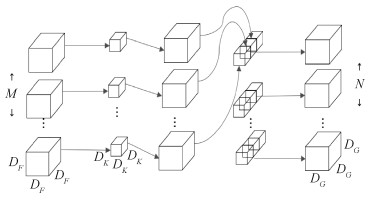

摘要:Medical images can be classified as anatomical images and functional images. The high resolution in anatomical images can provide information about the anatomical structure and morphology of human body organs but cannot reflect the functional information of organs. There is some information about the metabolic functions of organs in functional images; however, it has low resolution and cannot display the anatomical details of organs or lesions. With the continuous development of medical imaging technology, how to use medical images comprehensively to avoid the shortcomings of different medical imaging modalities has become a complex problem. Image fusion has aimed to fuse value-added information from two images to one image in terms of the constraints of single sensor. Effective diagnose and treat diseases have been beneficial to acquire qualified fusion image of organs and tissues and lesions simultaneously via fusing positron emission tomography(PET) images and computed tomography images(CT). Research on pixel-level image fusion based on multi-scale transformation has been focused nowadays. A fusion image with rich edge information has been easy use under the circumstances of image registration. The detailed information for image fusion has been obtained via moderate image decomposition option and fusion strategical implementation. Pixel-level image fusion based on multi-scale transformation has been prior to medical image processing, video surveillance image processing, remote sensing image processing and other tasks. Pixel-level image fusion based on multi-scale transformation has been analyzed and summarized on the following five aspects: 1) mechanism of multi-scale image fusion transformation; 2) multiscale decomposition analysis; 3) a consensus pixel-level fusion framework; 4) subband fusion rules under high and low frequency; and 5) pixel-level image fusion based on multi-scale transformation application in medical image processing. First, the mechanism and framework of image fusion based on multi-scale transformation have been proposed. Next, the multi-temporal decomposition have been summarized via the pyramid decomposition evolvement, wavelet transform and multi-scale geometric analysis. Such as, the advantages of pyramid decomposition are simple implementation and fast calculation speed. The disadvantage of pyramid decomposition is that it has no directionality, sensitive to noise, has no stability during reconstruction, and there is redundancy between the layers of the pyramid. Wavelet transform does not have direction selectivity and shift invariance. The advantage of multi-scale geometric analysis is that it has multiple directions. The original image is decomposed into high-frequency subbands in different directions by nonsubsampled contourlet transform(NSCT), which can enhance the edge details of the image from many directions. Then, two consensus pixel-level fusion frameworks has been illustrated. The Zhang framework has demonstrated four analyses including activity level measurement, coefficient grouping method, coefficient combination method and consistency verification. The Piella framework has consisted of four ways on the aspects of matching measurement, activity measurement, decision module and synthesis module. Low frequency fused sub bands and high frequency fused sub-bands have been categorized. The low frequency fused sub band has been subdivided into five categories: pixel based, region based, fuzzy theory based, sparse representation based and focus metric based. Pixel-based fusion rules include average fusion rules, coefficient maximum fusion rules, etc., region-based fusion rules include regional variance maximum fusion rules, and local energy maximum fusion rules, etc., fuzzy theory fusion rules include fuzzy inference system fusion rules, etc. Fusion rules based on sparse representation include new sum of modified Laplacian, and extended sum of modified Laplacian and other fusion rules. Fusion rules based on focal measures include spatial frequency fusion rules, and local spatial frequencies fusion rules, etc. The high frequency fused sub band rules have been subdivided into five categories: pixel based, edge based, region based, sparse representation based and neural network based. Edge-based fusion rules include edge strength maximum fusion rules and guided filter-based fusion rules, etc., region-based fusion rules include average gradient fusion rules and improved average gradient fusion rules, etc., fusion rules based on sparse representation include multi-scale convolution sparse representation fusion rules and separable dictionary learning method fusion rules, etc. Fusion rules based on neural networks include parameter adaptive dual-channel pulse-coupled neural network, and adaptive dual-channel pulse-coupled neural network fusion rules, simplified pulse-coupled neural network and other fusion rules. At last, 12 pixel-level image fusion based on multi-scale transformation have been summarized for multi-modal medical image fusions. Two-mode medical image fusion and three-mode medical image fusion have been identified. The two-mode medical image fusion has been subdivided into 11 categories. The CT/magnetic resonance image(MRI)/PET has been analyzed in three-mode medical image fusion. At last, the further 4 research areas of pixel-level image fusion based on multi-scale transformation have been summarized: 1) accurate registration of image preprocessing issue; 2) fusion of ultrasonic images with other multimodal medical images issue; 3) integration of multi-algorithms for cascading decomposition; 4) multi-scale decomposition methods and fusion rules for pixel-level image fusion and the application of multi-scale transformation in medical image fusion have been summarized. This article systematically summarizes the multi-scale decomposition methods and fusion rules in the pixel-level image fusion process based on multi-scale transformation, as well as the application of multi-scale transformation in medical image fusion, and the research on the pixel-level medical image fusion method based on multi-scale transformation has a positive guiding significance.关键词:multi-scale transformation;pixel-level fusion;medical images;Zhang framework;Piella framework217|94|6更新时间:2024-05-07

摘要:Medical images can be classified as anatomical images and functional images. The high resolution in anatomical images can provide information about the anatomical structure and morphology of human body organs but cannot reflect the functional information of organs. There is some information about the metabolic functions of organs in functional images; however, it has low resolution and cannot display the anatomical details of organs or lesions. With the continuous development of medical imaging technology, how to use medical images comprehensively to avoid the shortcomings of different medical imaging modalities has become a complex problem. Image fusion has aimed to fuse value-added information from two images to one image in terms of the constraints of single sensor. Effective diagnose and treat diseases have been beneficial to acquire qualified fusion image of organs and tissues and lesions simultaneously via fusing positron emission tomography(PET) images and computed tomography images(CT). Research on pixel-level image fusion based on multi-scale transformation has been focused nowadays. A fusion image with rich edge information has been easy use under the circumstances of image registration. The detailed information for image fusion has been obtained via moderate image decomposition option and fusion strategical implementation. Pixel-level image fusion based on multi-scale transformation has been prior to medical image processing, video surveillance image processing, remote sensing image processing and other tasks. Pixel-level image fusion based on multi-scale transformation has been analyzed and summarized on the following five aspects: 1) mechanism of multi-scale image fusion transformation; 2) multiscale decomposition analysis; 3) a consensus pixel-level fusion framework; 4) subband fusion rules under high and low frequency; and 5) pixel-level image fusion based on multi-scale transformation application in medical image processing. First, the mechanism and framework of image fusion based on multi-scale transformation have been proposed. Next, the multi-temporal decomposition have been summarized via the pyramid decomposition evolvement, wavelet transform and multi-scale geometric analysis. Such as, the advantages of pyramid decomposition are simple implementation and fast calculation speed. The disadvantage of pyramid decomposition is that it has no directionality, sensitive to noise, has no stability during reconstruction, and there is redundancy between the layers of the pyramid. Wavelet transform does not have direction selectivity and shift invariance. The advantage of multi-scale geometric analysis is that it has multiple directions. The original image is decomposed into high-frequency subbands in different directions by nonsubsampled contourlet transform(NSCT), which can enhance the edge details of the image from many directions. Then, two consensus pixel-level fusion frameworks has been illustrated. The Zhang framework has demonstrated four analyses including activity level measurement, coefficient grouping method, coefficient combination method and consistency verification. The Piella framework has consisted of four ways on the aspects of matching measurement, activity measurement, decision module and synthesis module. Low frequency fused sub bands and high frequency fused sub-bands have been categorized. The low frequency fused sub band has been subdivided into five categories: pixel based, region based, fuzzy theory based, sparse representation based and focus metric based. Pixel-based fusion rules include average fusion rules, coefficient maximum fusion rules, etc., region-based fusion rules include regional variance maximum fusion rules, and local energy maximum fusion rules, etc., fuzzy theory fusion rules include fuzzy inference system fusion rules, etc. Fusion rules based on sparse representation include new sum of modified Laplacian, and extended sum of modified Laplacian and other fusion rules. Fusion rules based on focal measures include spatial frequency fusion rules, and local spatial frequencies fusion rules, etc. The high frequency fused sub band rules have been subdivided into five categories: pixel based, edge based, region based, sparse representation based and neural network based. Edge-based fusion rules include edge strength maximum fusion rules and guided filter-based fusion rules, etc., region-based fusion rules include average gradient fusion rules and improved average gradient fusion rules, etc., fusion rules based on sparse representation include multi-scale convolution sparse representation fusion rules and separable dictionary learning method fusion rules, etc. Fusion rules based on neural networks include parameter adaptive dual-channel pulse-coupled neural network, and adaptive dual-channel pulse-coupled neural network fusion rules, simplified pulse-coupled neural network and other fusion rules. At last, 12 pixel-level image fusion based on multi-scale transformation have been summarized for multi-modal medical image fusions. Two-mode medical image fusion and three-mode medical image fusion have been identified. The two-mode medical image fusion has been subdivided into 11 categories. The CT/magnetic resonance image(MRI)/PET has been analyzed in three-mode medical image fusion. At last, the further 4 research areas of pixel-level image fusion based on multi-scale transformation have been summarized: 1) accurate registration of image preprocessing issue; 2) fusion of ultrasonic images with other multimodal medical images issue; 3) integration of multi-algorithms for cascading decomposition; 4) multi-scale decomposition methods and fusion rules for pixel-level image fusion and the application of multi-scale transformation in medical image fusion have been summarized. This article systematically summarizes the multi-scale decomposition methods and fusion rules in the pixel-level image fusion process based on multi-scale transformation, as well as the application of multi-scale transformation in medical image fusion, and the research on the pixel-level medical image fusion method based on multi-scale transformation has a positive guiding significance.关键词:multi-scale transformation;pixel-level fusion;medical images;Zhang framework;Piella framework217|94|6更新时间:2024-05-07

Review

-

摘要:ObjectiveImages-based segmentation of pulmonary anatomy has been set up the anatomical structures to formulate rapid and targeted diagnostic information. The purpose of pulmonary anatomy segmentation has been associated to a pixel in an image with an anatomical structure without the need for manual initialization. A lots of supervised deep learning image segmentation have been illustrated for segmenting regions of interest in pulmonary CT(computer tomography) images. The medical image segmentation has greatly relied on high-quality labeled medical image data, CT images-based lung anatomy labeled data has been insufficient adopted due to the lack of expert annotation of regions of interest and the lack of infrastructure and standards for sharing labeled data. Most of pulmonary CT annotation datasets have focused on thoracic cancer, pulmonary nodules, tuberculosis, pneumonia and lung segmentation. A dataset of pulmonary CT/CTA(computer tomography/computer tomography angiography) scan images with labels has facilitated the evolvement of pulmonary anatomical structure segmentation algorithms. The dataset has been evaluated the performance of state-of-the-art pulmonary anatomy structure segmentation methods for chest CT scans. It has been difficult to compare various algorithms for pulmonary anatomy structure segmentation. Different methods have been evaluated on different datasets using different evaluation measures in common. The related dataset has implemented a dataset of chest CT scans to identify varying abnormalities based on the reference standards in the context of airway, lung parenchyma, lobe and pulmonary artery. The vein segmentations have been established. The dataset has a unique calculation to compare pulmonary anatomy structure algorithms via the comparison all methods against the reference standard baseline.MethodA sum of 67 sets of CT/CTA images of the pulmonary have labeled in this dataset including 24 sets of CT images and 43 sets of CTA images via a total of 26 157 slices images. Each set of CT/CTA images have labeled for airway, lung parenchyma, lobe, pulmonary artery and vein. Multi-channel images have represented a variety of regular-based clinical scanners based on a reconstructed mediastinal window algorithm. The medical image-based dataset has been annotated and verified via. Manual corrections have annotated using internal software funded by the Key Laboratory of Medical Imaging Intelligent Computing, Ministry of Education.ResultPart of dataset representative segmentation tasks have been used via pulmonary CT anatomical structure segmentation (conference details: the medical image challenge competition held during the 4th International Symposium on Image Computing and Digital Medicine (ISICDM) in Shenyang, China). The representative dataset has included 10 groups of CT and CTA in the training dataset and 5 groups of test dataset. The challenge competition has also offered a platform for evaluating model performance of pulmonary blood vessels, airways and lung parenchyma. The result of segmentation and the effect of 3D reconstruction have evaluated by Dice coefficients, over-segmentation rate, under-segmentation rate and medical and algorithmic industry experts.ConclusionFour parts of labeled image datasets have been used as a pulmonary CT dataset. This dataset has labeled using different colored pixels and saved respectively for different pulmonary anatomies structure. The annotated data have been re-formatted to ensure easy access. The location of the markers in color pixels has been displayed via 25 000 labeled sliced images dataset using the image format of the raw data. All annotated images from the digital imaging and communications in medicine (DICOM) format to portable network graphics (PNG) images has been converted based on standard DICOM data. The chest CT image dataset has provided valid annotated data via DICOM-based sensitive information re-movement. First each set of CT/CTA has labeled with 4 different target region categories in the context of airway, lung parenchyma, lobe and pulmonary artery and vein to complement the anatomical structure of CT/CTA image dataset of the pulmonary. Next, a partially representative dataset has been and the have verified by the challenge competition. Lastly, clear and intuitive 3-dimensional visualized structural images have been reconstructed for the acquisition of each anatomical structure of the pulmonary segmented via CT/CTA images to assist in the diagnosis of pulmonary diseases. First, this dataset has not annotated lung segments. It has been difficult to obtain that the invisibility of lung segment boundaries based on targeted and accurate reference segmentation criteria. Second, the annotation data have been basically carried out on healthy images and rarely on lesion images. The most important feature of medical datasets has upgraded the diversity of data. The robustness of image segmentation have been implementing further. Last, manual annotation of medical anatomical structure images have inevitably resulted some errors. Supplementing lung segments markers and improving the diversity of data based on pulmonary CT anatomical structure segmentation algorithms have been implementing further via labeling more lesion images.关键词:pulmonary anatomical structure;pulmonary computed tomography image;dataset;segmentation of images;medical imaging383|647|0更新时间:2024-05-07

摘要:ObjectiveImages-based segmentation of pulmonary anatomy has been set up the anatomical structures to formulate rapid and targeted diagnostic information. The purpose of pulmonary anatomy segmentation has been associated to a pixel in an image with an anatomical structure without the need for manual initialization. A lots of supervised deep learning image segmentation have been illustrated for segmenting regions of interest in pulmonary CT(computer tomography) images. The medical image segmentation has greatly relied on high-quality labeled medical image data, CT images-based lung anatomy labeled data has been insufficient adopted due to the lack of expert annotation of regions of interest and the lack of infrastructure and standards for sharing labeled data. Most of pulmonary CT annotation datasets have focused on thoracic cancer, pulmonary nodules, tuberculosis, pneumonia and lung segmentation. A dataset of pulmonary CT/CTA(computer tomography/computer tomography angiography) scan images with labels has facilitated the evolvement of pulmonary anatomical structure segmentation algorithms. The dataset has been evaluated the performance of state-of-the-art pulmonary anatomy structure segmentation methods for chest CT scans. It has been difficult to compare various algorithms for pulmonary anatomy structure segmentation. Different methods have been evaluated on different datasets using different evaluation measures in common. The related dataset has implemented a dataset of chest CT scans to identify varying abnormalities based on the reference standards in the context of airway, lung parenchyma, lobe and pulmonary artery. The vein segmentations have been established. The dataset has a unique calculation to compare pulmonary anatomy structure algorithms via the comparison all methods against the reference standard baseline.MethodA sum of 67 sets of CT/CTA images of the pulmonary have labeled in this dataset including 24 sets of CT images and 43 sets of CTA images via a total of 26 157 slices images. Each set of CT/CTA images have labeled for airway, lung parenchyma, lobe, pulmonary artery and vein. Multi-channel images have represented a variety of regular-based clinical scanners based on a reconstructed mediastinal window algorithm. The medical image-based dataset has been annotated and verified via. Manual corrections have annotated using internal software funded by the Key Laboratory of Medical Imaging Intelligent Computing, Ministry of Education.ResultPart of dataset representative segmentation tasks have been used via pulmonary CT anatomical structure segmentation (conference details: the medical image challenge competition held during the 4th International Symposium on Image Computing and Digital Medicine (ISICDM) in Shenyang, China). The representative dataset has included 10 groups of CT and CTA in the training dataset and 5 groups of test dataset. The challenge competition has also offered a platform for evaluating model performance of pulmonary blood vessels, airways and lung parenchyma. The result of segmentation and the effect of 3D reconstruction have evaluated by Dice coefficients, over-segmentation rate, under-segmentation rate and medical and algorithmic industry experts.ConclusionFour parts of labeled image datasets have been used as a pulmonary CT dataset. This dataset has labeled using different colored pixels and saved respectively for different pulmonary anatomies structure. The annotated data have been re-formatted to ensure easy access. The location of the markers in color pixels has been displayed via 25 000 labeled sliced images dataset using the image format of the raw data. All annotated images from the digital imaging and communications in medicine (DICOM) format to portable network graphics (PNG) images has been converted based on standard DICOM data. The chest CT image dataset has provided valid annotated data via DICOM-based sensitive information re-movement. First each set of CT/CTA has labeled with 4 different target region categories in the context of airway, lung parenchyma, lobe and pulmonary artery and vein to complement the anatomical structure of CT/CTA image dataset of the pulmonary. Next, a partially representative dataset has been and the have verified by the challenge competition. Lastly, clear and intuitive 3-dimensional visualized structural images have been reconstructed for the acquisition of each anatomical structure of the pulmonary segmented via CT/CTA images to assist in the diagnosis of pulmonary diseases. First, this dataset has not annotated lung segments. It has been difficult to obtain that the invisibility of lung segment boundaries based on targeted and accurate reference segmentation criteria. Second, the annotation data have been basically carried out on healthy images and rarely on lesion images. The most important feature of medical datasets has upgraded the diversity of data. The robustness of image segmentation have been implementing further. Last, manual annotation of medical anatomical structure images have inevitably resulted some errors. Supplementing lung segments markers and improving the diversity of data based on pulmonary CT anatomical structure segmentation algorithms have been implementing further via labeling more lesion images.关键词:pulmonary anatomical structure;pulmonary computed tomography image;dataset;segmentation of images;medical imaging383|647|0更新时间:2024-05-07

Dataset

-

摘要:ObjectiveLiver fibrosis is a common manifestation of many chronic liver diseases. It can develop into cirrhosis and even lead to liver cancer if not treated in time. The early diagnosis of liver fibrosis helps prevent the occurrence of severe liver disease. Studies have shown that timely and correct treatment can reverse liver fibrosis and even cirrhosis. Therefore, the accurate assessment of liver fibrosis is essential to the clinical treatment and prognosis assessment of liver fibrosis. At present, the diagnosis of liver fibrosis in the medical field is evaluated through liver biopsy, which is generally a safe procedure but invasive. The complications of liver biopsy are rare but potentially lethal, so noninvasive diagnosis methods based on imaging have attracted considerable interest.MethodThis paper proposes a network for the segmentation of liver fibrosis regions, called LFSCA-UNet(liver fibrosis region segmentation network based on spatial and channel attention mechanisms-UNet). It has improved the U-Net with two different attention mechanisms. U-Net is a convolutional neural network used for image semantic segmentation. Attention U-Net is an improved version of U-Net, it adds a group of attention gate modules into each skip connection of the original U-Net. The attention gate modules in attention U-Net is a spatial attention mechanism. LFSCA-UNet adds a channel attention mechanism to each skip connection structure. In this study, the efficient channel attention(ECA), which is a channel attention mechanism based on the squeeze and excitation network, was used in implementing the added mechanism. The core idea of the squeeze and excitation network is to allow networks to automatically learn dependencies between channels. This network changes a conventional convolution layer to a convolution layer with a squeeze and excitation block, which can be divided into two parts: squeeze and excitation. The squeezing part uses global pooling to obtain a feature vector of a current convolutional layer feature map, whereas the excitation part uses two fully connected layers with different numbers. The first drop of the dimension and the second upgraded, and finally, the weight of each channel is obtained after sigmoid activation, which is multiplied by the original feature map as the input of the subsequent layer of the network. The efficient channel attention block is an improvement of the squeeze and excitation block, which removes the part of reducing dimension and uses 1 d convolution instead of the fully connected layer. It has better performance and fewer parameters. The CT(computed tomography) images used in this study was obtained from 88 patients with liver fibrosis and provided by the Department of Liver Surgery, Renji Hospital, Shanghai Jiao Tong University School of Medicine. One Nvidia Tesla P100 graphics cards with 16 GB memory were used in training networks, and Python 3.8.5 and PyTorch 1.7.1 were used.ResultThis paper horizontally compared five different experimental networks according to five different indicators, namely, Dice coefficient, Jaccard index, precision, recall (sensitivity), and specificity. LFSCA-UNet gets the highest result of mean Dice coefficient (0.933 3), better than the original U-Net (0.539 6%).ConclusionThis paper verifies that the combination of spatial attention and channel attention mechanisms can effectively improve the segmentation result of liver fibrosis. For the spatial attention module, using the channel attention module in optimizing inputs can increase network stability and optimizing outputs can improve the overall effect of the network.关键词:liver fibrosis;image segmentation;spatial attention mechanism;channel attention mechanism;U-Net160|192|3更新时间:2024-05-07

摘要:ObjectiveLiver fibrosis is a common manifestation of many chronic liver diseases. It can develop into cirrhosis and even lead to liver cancer if not treated in time. The early diagnosis of liver fibrosis helps prevent the occurrence of severe liver disease. Studies have shown that timely and correct treatment can reverse liver fibrosis and even cirrhosis. Therefore, the accurate assessment of liver fibrosis is essential to the clinical treatment and prognosis assessment of liver fibrosis. At present, the diagnosis of liver fibrosis in the medical field is evaluated through liver biopsy, which is generally a safe procedure but invasive. The complications of liver biopsy are rare but potentially lethal, so noninvasive diagnosis methods based on imaging have attracted considerable interest.MethodThis paper proposes a network for the segmentation of liver fibrosis regions, called LFSCA-UNet(liver fibrosis region segmentation network based on spatial and channel attention mechanisms-UNet). It has improved the U-Net with two different attention mechanisms. U-Net is a convolutional neural network used for image semantic segmentation. Attention U-Net is an improved version of U-Net, it adds a group of attention gate modules into each skip connection of the original U-Net. The attention gate modules in attention U-Net is a spatial attention mechanism. LFSCA-UNet adds a channel attention mechanism to each skip connection structure. In this study, the efficient channel attention(ECA), which is a channel attention mechanism based on the squeeze and excitation network, was used in implementing the added mechanism. The core idea of the squeeze and excitation network is to allow networks to automatically learn dependencies between channels. This network changes a conventional convolution layer to a convolution layer with a squeeze and excitation block, which can be divided into two parts: squeeze and excitation. The squeezing part uses global pooling to obtain a feature vector of a current convolutional layer feature map, whereas the excitation part uses two fully connected layers with different numbers. The first drop of the dimension and the second upgraded, and finally, the weight of each channel is obtained after sigmoid activation, which is multiplied by the original feature map as the input of the subsequent layer of the network. The efficient channel attention block is an improvement of the squeeze and excitation block, which removes the part of reducing dimension and uses 1 d convolution instead of the fully connected layer. It has better performance and fewer parameters. The CT(computed tomography) images used in this study was obtained from 88 patients with liver fibrosis and provided by the Department of Liver Surgery, Renji Hospital, Shanghai Jiao Tong University School of Medicine. One Nvidia Tesla P100 graphics cards with 16 GB memory were used in training networks, and Python 3.8.5 and PyTorch 1.7.1 were used.ResultThis paper horizontally compared five different experimental networks according to five different indicators, namely, Dice coefficient, Jaccard index, precision, recall (sensitivity), and specificity. LFSCA-UNet gets the highest result of mean Dice coefficient (0.933 3), better than the original U-Net (0.539 6%).ConclusionThis paper verifies that the combination of spatial attention and channel attention mechanisms can effectively improve the segmentation result of liver fibrosis. For the spatial attention module, using the channel attention module in optimizing inputs can increase network stability and optimizing outputs can improve the overall effect of the network.关键词:liver fibrosis;image segmentation;spatial attention mechanism;channel attention mechanism;U-Net160|192|3更新时间:2024-05-07 -

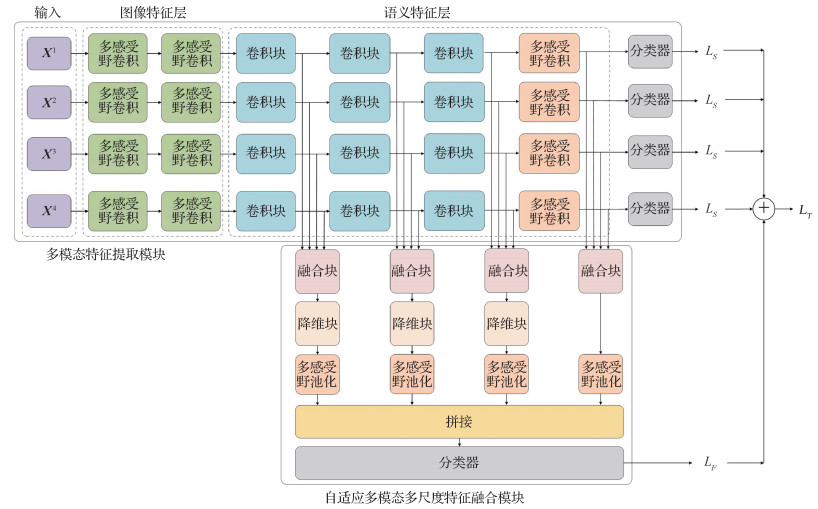

摘要:ObjectiveAutomatic segmentation of organs at risk (OAR) in computed tomography (CT) has been an essential part of implementing effective treatment strategies to resist lung and esophageal cancers. Accurate segmentation of organs' tumors can aid to interpretate inherent position and morphological changes for patients via facilitating adaptive and computer assisted radiotherapy. Manual delineation of OAR cannot be customized in the future. Scholors have conducted segmentation manually for heart-based backward esophagus spinal and cord-based upper trachea based on intensity levels and anatomical knowledge Complicated variations in the shape and position of organs and low soft tissue contrast between neighboring organs in CT images have caused emerging errors. The CT-based images for lifting manual segmentation skill for thoracic organs have been caused time-consuming. Nonlinear-based modeling of deep convolutional neural networks (DCNNs) has been presented tremendous capability in medical image segmentation. Multi organ segmentation deep learning skill has been applied in abdominal CT images. The small size and irregular shape for automatic segmentation of the esophagus have not been outreached in comparison with even larger size organs. Two skills have related to 3D medical image segmentation have been implemented via the independent separation of each slice and instant 3D convolution to aggregate information between slices and segment all slices of the CT image in. The single slice segmentation skill cannot be used in the multi-layer dependencies overall. Higher computational cost for slices 3D segmentation has been operated via all layers aggregation. A 2.5D deep learning framework has been illustrated to identify the organs location robustly and refine the boundaries of each organ accurately.MethodThis network segmentation of 2.5D slice sequences under the coronal plane composed of three adjacent slices as input can learn the most distinctive of a single slice deeply. The features have been presented in the connection between slices. The image intensity values of all scans were truncated to the range of[-384, 384] HU to omit the irrelevant information in one step. An emerging attention module called efficient global context has been demonstrated based on the completed U-Net neural network structure. The integration for effective channel attention and global context module have been achieved. The global context information has been demonstrated via calculating the response at a location as the weighted sum of the features of all locations in the input feature map. A model has been built up to identify the correlation for channels. The effective feature map can obtain useful information. The useless information can be deducted. The single view long distance dependency between slice sequences can be captured. Attention has been divided into three modules on the aspect of context modeling module, feature conversion module and fusion module. Unlike the traditional global context module, feature conversion module has not required dimensionality to realize the information interaction between channels. The channel attention can be obtained via one dimensional convolution effectively. The capability of pyramid convolution has been used in the encoding layer part. Extracted multi-scale information and expanded receptive field for the convolution layer can be used via dense connection. The pyramid convolution has adapted convolution kernels on different scales and depths. The increased convolution kernels can be used in parallel to process the input and capture different levels of information. Feature transformation has been processed uniformly and individually in multiple parallel branches. The output of each branch has been integrated into the final output. Multi-scale feature extraction based on adjusting the size of the convolution kernel has been achieved than the receptive field resolution down sampling upgration. Multi-layer dense connection has realized feature multiplexing and ensures maximum information transmission. The integration of pyramid convolution and dense connection has obtained a wider range of information and good quality integrated multi-scale images. The backward gradient flow can be smoother than before. An accurate multi-organs segmentation have required local and global information fusion, decoder with each layer of encoders connecting network and the low level details of different levels of feature maps with high level semantics in order to make full use of multi-scale features and enhance the recognition of feature maps. The irregular and closely connected shape of multi-organs in CT images can be calculated at the end. Deep supervision has been added to learn the feature representations of different layers based on the sophisticated feature map aggregation. The boundaries of organs and excessive segmentation deduction in non-organ images and network training can be enhanced effectively. More accurate segmentation results can be produced finally.ResultIn the public dataset of the segmentation of thoracic organs at risk in CT images(SegTHOR) 2019 challenge, the research has been performed CT scans operation on four thoracic organs (i.e., esophagus, heart, trachea and aorta), take Dice similarity coefficient (DSC) and Hausdorff distance (HD) as main criteria, the Dice coefficients of the esophagus, heart, trachea and aorta in the test samples reached 0.855 1, 0.945 7, 0.923 0 and 0.938 3 separately. The HD distances have achieved 0.302 3, 0.180 5, 0.212 2 and 0.191 8 respectively.ConclusionLow level detailed feature maps can capture rich spatial information to highlight the boundaries of organs. High level semantic features have reflected position information and located organs. Multi scale features and global context integration have been the key step to accurate segmentation. The highest average DSC value and HD obtained for heart and Aorta have achieved its high contrast, regular shape, and larger size compared to the other organs. The esophagus had the lowest average DSC and HD values due to its irregularity and low contrast to identify within CT volumes more difficult. The research has achieved a DSC score of 85.5% for the esophagus on test dataset. Experimental results have shown that the proposed method has beneficial for segmenting high risk organs to strengthen radiation therapy planning.关键词:multi-organ segmentation;pseudo three dimension;efficient global context;pyramid convolution;multi-scale features102|165|6更新时间:2024-05-07

摘要:ObjectiveAutomatic segmentation of organs at risk (OAR) in computed tomography (CT) has been an essential part of implementing effective treatment strategies to resist lung and esophageal cancers. Accurate segmentation of organs' tumors can aid to interpretate inherent position and morphological changes for patients via facilitating adaptive and computer assisted radiotherapy. Manual delineation of OAR cannot be customized in the future. Scholors have conducted segmentation manually for heart-based backward esophagus spinal and cord-based upper trachea based on intensity levels and anatomical knowledge Complicated variations in the shape and position of organs and low soft tissue contrast between neighboring organs in CT images have caused emerging errors. The CT-based images for lifting manual segmentation skill for thoracic organs have been caused time-consuming. Nonlinear-based modeling of deep convolutional neural networks (DCNNs) has been presented tremendous capability in medical image segmentation. Multi organ segmentation deep learning skill has been applied in abdominal CT images. The small size and irregular shape for automatic segmentation of the esophagus have not been outreached in comparison with even larger size organs. Two skills have related to 3D medical image segmentation have been implemented via the independent separation of each slice and instant 3D convolution to aggregate information between slices and segment all slices of the CT image in. The single slice segmentation skill cannot be used in the multi-layer dependencies overall. Higher computational cost for slices 3D segmentation has been operated via all layers aggregation. A 2.5D deep learning framework has been illustrated to identify the organs location robustly and refine the boundaries of each organ accurately.MethodThis network segmentation of 2.5D slice sequences under the coronal plane composed of three adjacent slices as input can learn the most distinctive of a single slice deeply. The features have been presented in the connection between slices. The image intensity values of all scans were truncated to the range of[-384, 384] HU to omit the irrelevant information in one step. An emerging attention module called efficient global context has been demonstrated based on the completed U-Net neural network structure. The integration for effective channel attention and global context module have been achieved. The global context information has been demonstrated via calculating the response at a location as the weighted sum of the features of all locations in the input feature map. A model has been built up to identify the correlation for channels. The effective feature map can obtain useful information. The useless information can be deducted. The single view long distance dependency between slice sequences can be captured. Attention has been divided into three modules on the aspect of context modeling module, feature conversion module and fusion module. Unlike the traditional global context module, feature conversion module has not required dimensionality to realize the information interaction between channels. The channel attention can be obtained via one dimensional convolution effectively. The capability of pyramid convolution has been used in the encoding layer part. Extracted multi-scale information and expanded receptive field for the convolution layer can be used via dense connection. The pyramid convolution has adapted convolution kernels on different scales and depths. The increased convolution kernels can be used in parallel to process the input and capture different levels of information. Feature transformation has been processed uniformly and individually in multiple parallel branches. The output of each branch has been integrated into the final output. Multi-scale feature extraction based on adjusting the size of the convolution kernel has been achieved than the receptive field resolution down sampling upgration. Multi-layer dense connection has realized feature multiplexing and ensures maximum information transmission. The integration of pyramid convolution and dense connection has obtained a wider range of information and good quality integrated multi-scale images. The backward gradient flow can be smoother than before. An accurate multi-organs segmentation have required local and global information fusion, decoder with each layer of encoders connecting network and the low level details of different levels of feature maps with high level semantics in order to make full use of multi-scale features and enhance the recognition of feature maps. The irregular and closely connected shape of multi-organs in CT images can be calculated at the end. Deep supervision has been added to learn the feature representations of different layers based on the sophisticated feature map aggregation. The boundaries of organs and excessive segmentation deduction in non-organ images and network training can be enhanced effectively. More accurate segmentation results can be produced finally.ResultIn the public dataset of the segmentation of thoracic organs at risk in CT images(SegTHOR) 2019 challenge, the research has been performed CT scans operation on four thoracic organs (i.e., esophagus, heart, trachea and aorta), take Dice similarity coefficient (DSC) and Hausdorff distance (HD) as main criteria, the Dice coefficients of the esophagus, heart, trachea and aorta in the test samples reached 0.855 1, 0.945 7, 0.923 0 and 0.938 3 separately. The HD distances have achieved 0.302 3, 0.180 5, 0.212 2 and 0.191 8 respectively.ConclusionLow level detailed feature maps can capture rich spatial information to highlight the boundaries of organs. High level semantic features have reflected position information and located organs. Multi scale features and global context integration have been the key step to accurate segmentation. The highest average DSC value and HD obtained for heart and Aorta have achieved its high contrast, regular shape, and larger size compared to the other organs. The esophagus had the lowest average DSC and HD values due to its irregularity and low contrast to identify within CT volumes more difficult. The research has achieved a DSC score of 85.5% for the esophagus on test dataset. Experimental results have shown that the proposed method has beneficial for segmenting high risk organs to strengthen radiation therapy planning.关键词:multi-organ segmentation;pseudo three dimension;efficient global context;pyramid convolution;multi-scale features102|165|6更新时间:2024-05-07 -

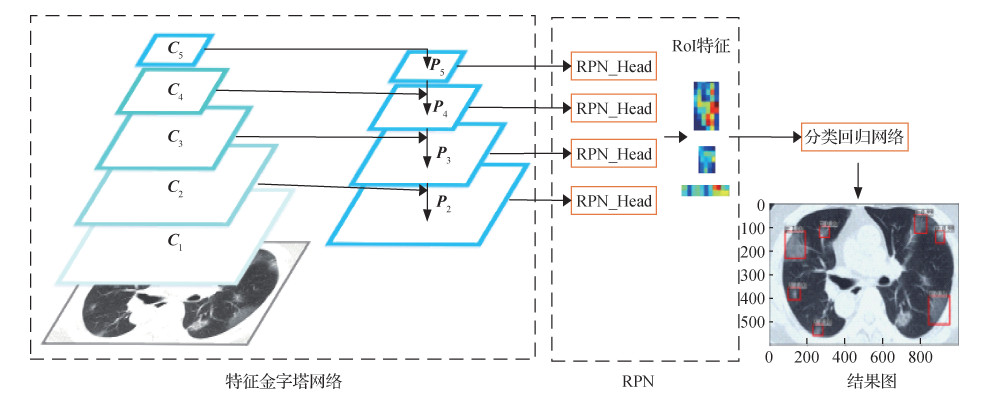

摘要:ObjectiveAs an important criterion for the diagnosis of early-stage lung cancer, chest computed tomography (CT) images-based pulmonary nodules detection have been implemented via location observation, scope and shape of the lesions. The CT image has been analyzed lung organizational structures like the lung parenchyma and the contextual part, such as hydrops, trachea, bronchus, and ribs. CT images-based lung parenchyma has been hard to interpret automatically and precisely. The precise extraction of lung parenchyma has played a vital role in lung-based diseases analyses. Most of lung segmentation have been conducted based on regular image processing algorithms like threshold or morphological operation. The convolutional neural networks (CNNs) have been used in computerized pulmonary disease analysis. CNN-driven lung segmentation algorithms have been adopted in computer-aided diagnosis (CAD). The U-shape structure has been designed for medical image segmentation based on end-to-end fully convolutional network (FCN) structure. The credibility for biomedical image segmentations have been realized based on the encoding and decoding symmetric network structure. A novel convolutional neural network based on U-Net architecture has been illustrated via integrating attention mechanism and dense atrous convolution (DAC).MethodThe network has contained an encoder and a decoder. The encoder has consisted of convolution and down sampling. The deductible spatial dimension of feature maps have been used to learn more semantic information. And the attention mechanism decoder has been implemented for de-convolution and up-sampling to re-configure the spatial dimension of the feature maps. The decoding mode using attention mechanism has been manipulated to make the target area output more effectively. Meanwhile, the algorithm of lung image segmentation has been used to identify the target-oriented neural network's attention using transmitted skip-connection to improve the weight of the salient feature. The feature resolution capability has been enhanced to the requirements for intensive spatial prediction via pooling consecutive operations and convolution striding. The DAC block has been deployed between the encoder and the decoder to extract multi-scale information of the context sufficiently. The advantages of Inception, ResNet and atrous convolution for the block have been inherited to capture multi-sized features consequently. The max-pooling and up-sampling operators have been utilized to reduce and increase the resolution of feature maps intensively based on the classic U-Net framework, which could lead to feature loss and accuracy reduced problems during training. The original max-pooling and up-sampling operators have been replaced via down-sample and up-sample block with inception structure to widen the multi-filters network and avoid feature loss. The Dice coefficient loss function has been used instead of the cross entropy loss to identify the gap between prediction and ground-truth. The deep learning framework Pytorch have been used on a server with two NVIDIA GeForce RTX 2080Ti graphics cards and each GPU has 11 Gigabyte memory. At the experimental stage, the original images have been resized to 256×256 pixels and 80% of these for training besides the test remaining. The proposed model has been trained for 120 epochs. Based on an initial learning rate of 0.000 1, the Adam has been opted as the optimization algorithm.ResultIn order to verify the efficiency of the proposed method, we conduct multi-compatible verifications called FCN-8 s, U-Net, UNet++, ResU-Net and CE-Net (context encoder network) have been conducted. Four segmentation metrics have been adopted to assess the segmentation. These metrics has evolved the Dice similarity coefficient (DSC), the intersection over union (IoU), sensitivity (SE) and accuracy (ACC). The experimental results on the LUNA16 dataset have demonstrated the priorities in terms of all metrics results. The average Dice similarity coefficient has reached 0.985 9, which has 0.443% higher than the segmentation results of the second-performing CE-Net. The model consequence has achieved 0.972 2, 0.993 8, and 0.982 2 each in terms of IoU, ACC and SE. This second qualified segmentation performance has reached: 0.272%, 0.512% and 0.374% each (more better). Compared with other algorithms, the predictable results of modeling has closer to the label made. The adhesive difficulties on the left and right lung cohesion issue have been resolved well.ConclusionAn encoded/decoded structure in novel convolutional neural network has been integrated via attention mechanism and dense atrous convolution for lungs segmentation. The experiment results have illustrated that the qualified and effective framework for segmenting the lung parenchyma area have its own priority.关键词:lung segmentation;convolutional neural networks(CNN);computer-aided diagnosis(CAD);attention mechanism;dense atrous convolution(DAC)88|102|3更新时间:2024-05-07