最新刊期

卷 26 , 期 8 , 2021

-

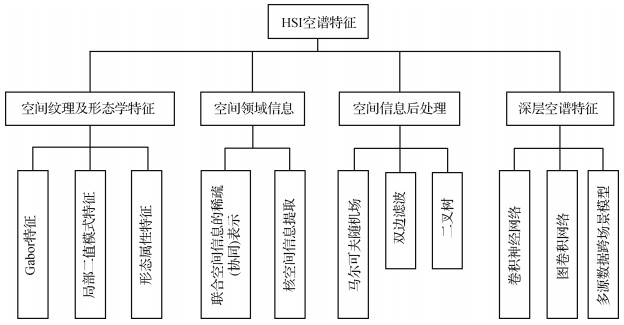

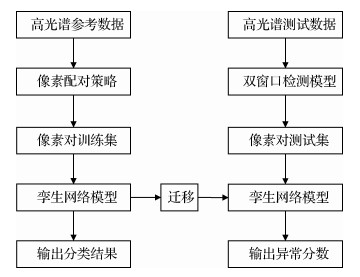

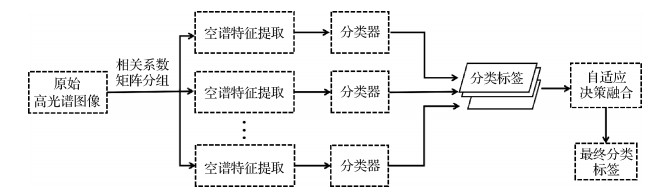

摘要:Hyperspectral imaging spectrometers collect radiation data from the ground in many adjacent and overlapping narrow spectral bands at the same time. The hyperspectral image (HSI) usually has hundreds of bands. Each of these bands contains the reflected light value within the specified range of the electromagnetic spectrum. Thus, the HSI contains a wealth of spectral and radiation information. The development of remote sensing imaging technology has increased the spatial resolution of HSI data obtained by hyperspectral imaging spectrometer. Therefore, HSI can be applied to accurately classify ground objects in various fields, such as geological exploration, precision agriculture, ecological environment, scientific remote sensing, and military target detection. However, many challenges and difficulties are encountered in classification applications because HSI has a large dataset, multiple bands, and strong band correlation. Specifically, the number of dimensionalities of HSI is often more than the number of available training samples. The lack of training samples and high computational cost are the inevitable obstacles in practical classification applications. Dimensionality reduction methods are often used to project HSI data into low-dimensional feature spaces for avoiding "Hughes" phenomenon. Spatial information can help create a more accurate classification map given the high probability of adjacent pixels belonging to the same category. In recent years, an increasing number of studies have applied spatial and spectral information to further improve the accuracy of classification. According to the characteristics and combination of spatial information, spectral information, and classifiers, the methods of spatial-spectral feature extraction for HSI can be defined into three types: spatial texture and morphological feature extraction, spatial neighborhood information acquisition, and spatial information post-processing. For the first type, spatial texture or morphological features (e.g., Gabor, local binary pattern, and morphological attribute features) are extracted in advance to preprocess the spatial information of pixels. In other words, spatial features are extracted through certain structures and rules, and then, the obtained features are sent to the classifier. The second method directly combines the relationship between the pixel and its spatial neighborhood pixels into the classifier. Spatial-spectral information is directly constructed into the classification models (e.g., sparse/collaborative representation of joint spatial information and kernel-based spatial information extraction and classification) through mathematical expressions. As a result, feature extraction and classification can be completed simultaneously. In the third category, spectral features are first classified. Then, the obtained classification results are corrected through spatial information post-processing methods (e.g., random fields, bilateral filtering, and graph segmentation) to further improve the classification accuracy. The traditional spatial-spectral feature extraction method for HSI has small computation, good mathematical theory foundation and explanation, and strong robustness against noise. However, the traditional spatial-spectral feature extraction methods mostly design shallow feature extraction schemes manually, which involves a lot of expert experience and parameter setting and thus affects the ability of feature expression and learning. For HSI data, scattering from other objects can distort the spectral properties of the interest object. In addition, different atmospheric scattering conditions and intra-class variability cause difficulty in extracting spatial-spectral features by traditional methods. Deep neural network has many advantages, such as learning representative and discriminant features, improving information representation through deep structure, and realizing automatic extraction and representation of features. Thus, higher accuracy of HSI classification will be achieved by designing the structure of deep network reasonably. In this study, the application of spatial-spectral feature extraction from deep learning is expounded and analyzed from the perspectives of convolutional neural network (CNN), graph neural network (GNN), and multi-source data cross-scene model. CNN shares weights and uses local connections to extract spatial information effectively. CNN model cannot generally adapt to local regions with various object distributions and geometric appearance because it convolves regular square regions with fixed size and weights. GNN model can represent many potential relationships between data with graphs. As a result, GNN can be applied for spatial-spectral feature extraction and classification for HSI. In some scenes (e.g., complex city scenes), different ground objects composed of the same material or substance need to be distinguished through shape, elevation, texture, and other information. Light detection and ranging data can be used to describe the elevation of the scene and the height of objects and obtain the spatial context and structural information without the effect of time and weather. Therefore, multi-sensor data can be considered to build joint-feature space for more accurate classification in some special scenes. In recent years, spatial-spectral feature extraction techniques for HSI have greatly progressed and achieved satisfactory results. However, the following problems need to be solved. 1) The methods of traditional and deep spatial-spectral feature extraction can be combined to fully utilize their respective advantages. 2) The small-sample-size and over-fitting problems from deep neural network need to be overcome through designing semi-supervised learning, active learning, or self-supervised learning models. 3) Using GNN to train HSI will lead to high computational cost and large memory usage. Thus, the model oriented to reduce the computational complexity should be studied. 4) Combining multi-source data from different sensors should consider reasonably unifying and complementary expressing multi-source data features. 5) Using multi-temporal, hyperspectral, and multi-perspective information to simultaneously mine spatial-spectral-temporal joint-feature information of complex dynamic targets has become a new frontier. 6) Using multi-temporal, hyperspectral and multi-perspective information to simultaneously extract spatial-spectral-temporal features of complex dynamic targets has become a new frontier. 7) With the progress of space remote sensing technologies in China, domestic hyperspectral data will receive more and more attention for research. 8) According to the development trend of big data and machine intelligence, the research on spatial-spectral feature extraction and classification of hyperspectral image based on the combination of applied domain knowledge and hyperspectral data will be a hot topic. From two sides of the traditional and deep spatial-spectral feature extraction in this study, the research status is systematically sorted out and comprehensively summarized. The existing problems are analyzed and evaluated, and the future development trend is evaluated and prospected.关键词:hyperspectral image(HSI);spatial-spectral feature extraction;convolutional neural network(CNN);graph convolutional network(GCN);multi-data fusion;deep neutral network321|920|22更新时间:2024-05-07

摘要:Hyperspectral imaging spectrometers collect radiation data from the ground in many adjacent and overlapping narrow spectral bands at the same time. The hyperspectral image (HSI) usually has hundreds of bands. Each of these bands contains the reflected light value within the specified range of the electromagnetic spectrum. Thus, the HSI contains a wealth of spectral and radiation information. The development of remote sensing imaging technology has increased the spatial resolution of HSI data obtained by hyperspectral imaging spectrometer. Therefore, HSI can be applied to accurately classify ground objects in various fields, such as geological exploration, precision agriculture, ecological environment, scientific remote sensing, and military target detection. However, many challenges and difficulties are encountered in classification applications because HSI has a large dataset, multiple bands, and strong band correlation. Specifically, the number of dimensionalities of HSI is often more than the number of available training samples. The lack of training samples and high computational cost are the inevitable obstacles in practical classification applications. Dimensionality reduction methods are often used to project HSI data into low-dimensional feature spaces for avoiding "Hughes" phenomenon. Spatial information can help create a more accurate classification map given the high probability of adjacent pixels belonging to the same category. In recent years, an increasing number of studies have applied spatial and spectral information to further improve the accuracy of classification. According to the characteristics and combination of spatial information, spectral information, and classifiers, the methods of spatial-spectral feature extraction for HSI can be defined into three types: spatial texture and morphological feature extraction, spatial neighborhood information acquisition, and spatial information post-processing. For the first type, spatial texture or morphological features (e.g., Gabor, local binary pattern, and morphological attribute features) are extracted in advance to preprocess the spatial information of pixels. In other words, spatial features are extracted through certain structures and rules, and then, the obtained features are sent to the classifier. The second method directly combines the relationship between the pixel and its spatial neighborhood pixels into the classifier. Spatial-spectral information is directly constructed into the classification models (e.g., sparse/collaborative representation of joint spatial information and kernel-based spatial information extraction and classification) through mathematical expressions. As a result, feature extraction and classification can be completed simultaneously. In the third category, spectral features are first classified. Then, the obtained classification results are corrected through spatial information post-processing methods (e.g., random fields, bilateral filtering, and graph segmentation) to further improve the classification accuracy. The traditional spatial-spectral feature extraction method for HSI has small computation, good mathematical theory foundation and explanation, and strong robustness against noise. However, the traditional spatial-spectral feature extraction methods mostly design shallow feature extraction schemes manually, which involves a lot of expert experience and parameter setting and thus affects the ability of feature expression and learning. For HSI data, scattering from other objects can distort the spectral properties of the interest object. In addition, different atmospheric scattering conditions and intra-class variability cause difficulty in extracting spatial-spectral features by traditional methods. Deep neural network has many advantages, such as learning representative and discriminant features, improving information representation through deep structure, and realizing automatic extraction and representation of features. Thus, higher accuracy of HSI classification will be achieved by designing the structure of deep network reasonably. In this study, the application of spatial-spectral feature extraction from deep learning is expounded and analyzed from the perspectives of convolutional neural network (CNN), graph neural network (GNN), and multi-source data cross-scene model. CNN shares weights and uses local connections to extract spatial information effectively. CNN model cannot generally adapt to local regions with various object distributions and geometric appearance because it convolves regular square regions with fixed size and weights. GNN model can represent many potential relationships between data with graphs. As a result, GNN can be applied for spatial-spectral feature extraction and classification for HSI. In some scenes (e.g., complex city scenes), different ground objects composed of the same material or substance need to be distinguished through shape, elevation, texture, and other information. Light detection and ranging data can be used to describe the elevation of the scene and the height of objects and obtain the spatial context and structural information without the effect of time and weather. Therefore, multi-sensor data can be considered to build joint-feature space for more accurate classification in some special scenes. In recent years, spatial-spectral feature extraction techniques for HSI have greatly progressed and achieved satisfactory results. However, the following problems need to be solved. 1) The methods of traditional and deep spatial-spectral feature extraction can be combined to fully utilize their respective advantages. 2) The small-sample-size and over-fitting problems from deep neural network need to be overcome through designing semi-supervised learning, active learning, or self-supervised learning models. 3) Using GNN to train HSI will lead to high computational cost and large memory usage. Thus, the model oriented to reduce the computational complexity should be studied. 4) Combining multi-source data from different sensors should consider reasonably unifying and complementary expressing multi-source data features. 5) Using multi-temporal, hyperspectral, and multi-perspective information to simultaneously mine spatial-spectral-temporal joint-feature information of complex dynamic targets has become a new frontier. 6) Using multi-temporal, hyperspectral and multi-perspective information to simultaneously extract spatial-spectral-temporal features of complex dynamic targets has become a new frontier. 7) With the progress of space remote sensing technologies in China, domestic hyperspectral data will receive more and more attention for research. 8) According to the development trend of big data and machine intelligence, the research on spatial-spectral feature extraction and classification of hyperspectral image based on the combination of applied domain knowledge and hyperspectral data will be a hot topic. From two sides of the traditional and deep spatial-spectral feature extraction in this study, the research status is systematically sorted out and comprehensively summarized. The existing problems are analyzed and evaluated, and the future development trend is evaluated and prospected.关键词:hyperspectral image(HSI);spatial-spectral feature extraction;convolutional neural network(CNN);graph convolutional network(GCN);multi-data fusion;deep neutral network321|920|22更新时间:2024-05-07 -

摘要:Hyperspectral imaging (HSI), also known as imaging spectrometer, originated from remote sensing and has been explored for various applications. This tool has been applied in many fields, such as archaeology and art protection, vegetation and water resources control, food quality and safety control, forensics, crime scene detection, and biomedicine, owing to its advantages in acquiring 2D images in a wide range of electromagnetic spectrum. These applications mainly cover the ultraviolet (UV), visible (VIS), and near-infrared (near-IR or NIR) regions. HSI acquires a 3D dataset called hypercube, with two spatial dimensions and one spectral dimension. Spatially resolved spectral imaging obtained by HSI provides diagnostic information about the tissue physiology, morphology, and composition. Furthermore, HSI can be easily adapted to other conventional techniques, such as microscopy and fundus camera. As an emerging imaging technology, HSI has been explored in a variety of laboratory experiments and clinical trials, which strongly indicates that HSI has a great potential for improving accuracy and reliability in disease detection, diagnosis, monitoring, and image-guided surgeries. In the past two decades, the HSI technology has been rapidly developed in hardware and systems. Most medical HSIs only detect the UV, VIS, and near-IR regions of light. Therefore, the exploration of HSI in the mid-IR spectrum may bring new insights for disease detection, diagnosis, and monitoring. The HSI technology is also combined with other imaging methods, such as preoperative positron emission tomography and intraoperative ultrasound, to overcome the limitation on the penetration of biological tissues and broaden HSI application areas. With the increasing integration of technologies, such as microscopes, colposcopy, laparoscopy, and fundus cameras, HSI is becoming an important part of medical imaging technology, which provides important information for potential clinical applications at the molecular, cell, tissue, and organ level. The clinical application of HSI is clearly in adolescence, and more verification is needed before it can be safely and effectively used in clinical practice. With the development of hardware technology, image analysis methods, and computing capabilities, HSI is used for the diagnosis and monitoring of non-invasive diseases, the identification and quantitative analysis of cancer biomarkers, image-guided minimally invasive surgery, and targeted drug delivery. However, HSI, as an emerging technology, also has certain limitations. At present, the application of hyperspectral detection technology in the medical field is still in the experimental stage. Useful information must be extracted from the large amount of data contained in each medical HSI. Data calibration and correction, data compression, dimensionality reduction, and analysis of data to determine the final results require a certain amount of time, which are also major challenges in the biomedical field. Higher spectral resolution, spatial resolution, and larger spectral database provide substantial spatial and spectral information. Accordingly, the main research topics in the future are the manner by which to quickly collect images of target objects in real time in a short period, effectively integrate spectroscopic instruments and algorithms, accurately diagnose the results, and combine with other imaging methods for fusion data analysis. The HSI is widely used and plays a greater role in the field of biomedicine owing to its continuous development and improvement. This work provides a comprehensive overview of HSI technologies and its medical applications, such as applications in cancer, heart disease, retinopathy, diabetic foot, shock, histopathology, and image-guided surgery. Moreover, this work presents an overview of the literature on the medical HSI technology and its applications. This work reviews the basic principles, structure, and characteristics of HSI system and elaborates the application progress of HSI in disease diagnosis and surgical guidance in recent years and analyzes the limitations of HSI and its future development direction.关键词:medical hyperspectral image;precision medicine;medicine hyperspectral image analysis;disease diagnosis;image-guided surgery332|1568|8更新时间:2024-05-07

摘要:Hyperspectral imaging (HSI), also known as imaging spectrometer, originated from remote sensing and has been explored for various applications. This tool has been applied in many fields, such as archaeology and art protection, vegetation and water resources control, food quality and safety control, forensics, crime scene detection, and biomedicine, owing to its advantages in acquiring 2D images in a wide range of electromagnetic spectrum. These applications mainly cover the ultraviolet (UV), visible (VIS), and near-infrared (near-IR or NIR) regions. HSI acquires a 3D dataset called hypercube, with two spatial dimensions and one spectral dimension. Spatially resolved spectral imaging obtained by HSI provides diagnostic information about the tissue physiology, morphology, and composition. Furthermore, HSI can be easily adapted to other conventional techniques, such as microscopy and fundus camera. As an emerging imaging technology, HSI has been explored in a variety of laboratory experiments and clinical trials, which strongly indicates that HSI has a great potential for improving accuracy and reliability in disease detection, diagnosis, monitoring, and image-guided surgeries. In the past two decades, the HSI technology has been rapidly developed in hardware and systems. Most medical HSIs only detect the UV, VIS, and near-IR regions of light. Therefore, the exploration of HSI in the mid-IR spectrum may bring new insights for disease detection, diagnosis, and monitoring. The HSI technology is also combined with other imaging methods, such as preoperative positron emission tomography and intraoperative ultrasound, to overcome the limitation on the penetration of biological tissues and broaden HSI application areas. With the increasing integration of technologies, such as microscopes, colposcopy, laparoscopy, and fundus cameras, HSI is becoming an important part of medical imaging technology, which provides important information for potential clinical applications at the molecular, cell, tissue, and organ level. The clinical application of HSI is clearly in adolescence, and more verification is needed before it can be safely and effectively used in clinical practice. With the development of hardware technology, image analysis methods, and computing capabilities, HSI is used for the diagnosis and monitoring of non-invasive diseases, the identification and quantitative analysis of cancer biomarkers, image-guided minimally invasive surgery, and targeted drug delivery. However, HSI, as an emerging technology, also has certain limitations. At present, the application of hyperspectral detection technology in the medical field is still in the experimental stage. Useful information must be extracted from the large amount of data contained in each medical HSI. Data calibration and correction, data compression, dimensionality reduction, and analysis of data to determine the final results require a certain amount of time, which are also major challenges in the biomedical field. Higher spectral resolution, spatial resolution, and larger spectral database provide substantial spatial and spectral information. Accordingly, the main research topics in the future are the manner by which to quickly collect images of target objects in real time in a short period, effectively integrate spectroscopic instruments and algorithms, accurately diagnose the results, and combine with other imaging methods for fusion data analysis. The HSI is widely used and plays a greater role in the field of biomedicine owing to its continuous development and improvement. This work provides a comprehensive overview of HSI technologies and its medical applications, such as applications in cancer, heart disease, retinopathy, diabetic foot, shock, histopathology, and image-guided surgery. Moreover, this work presents an overview of the literature on the medical HSI technology and its applications. This work reviews the basic principles, structure, and characteristics of HSI system and elaborates the application progress of HSI in disease diagnosis and surgical guidance in recent years and analyzes the limitations of HSI and its future development direction.关键词:medical hyperspectral image;precision medicine;medicine hyperspectral image analysis;disease diagnosis;image-guided surgery332|1568|8更新时间:2024-05-07

Review

-

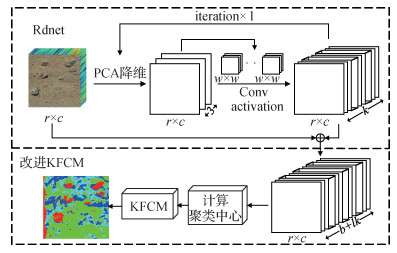

摘要:ObjectiveHyperspectral remote sensing method is a major development in remote sensing field. It uses a lot of narrow band electromagnetic bands to obtain spectral data. It covers visible, near infrared, middle infrared, and far infrared bands, and its spectral resolution can reach the nanometer level. Therefore, hyperspectral remote sensing can find more surface features and has been widely used in covering global environment, land use, resource survey, natural disasters, and even interstellar exploration. Compared with RGB and multispectral images, hyperspectral images not only can improve the information richness but also can provide more reasonable and effective analysis and processing for the related tasks. As a result, they have important application value in many fields. However, the cost of spectral detection systems is relatively high, especially the optical detector that is used to acquire high spectral data. At present, most of the spectrometers can support the spectral imaging from 400 nm to 1 000 nm, while few of them support that from 1 000 nm to 2 500 nm. The reason is that the spectrometer is harder to produce and more expensive with the increase in spectra. The bands of hyperspectral images have internal relations. The performance of low-spectrum spectrometer can be improved by fully utilizing the low spectra to predict high spectra. In other words, the low spectrum spectrometer can be used to obtain the high spectra that are near the spectra which are usually obtained by high-spectrum spectrometer. The cost of getting hyperspectral images will be greatly reduced. Therefore, high spectra prediction has promising applications and prospects in improving spectrometer performance. Nowadays, a single sensor can generally take a limited number of spectra. Thus, the commonly used spectrometers contain multiple sensors. If one of these sensors suffers from a sudden situation and cannot work normally in the process of flight aerial photography, then the data we can obtain will be unusable and we will have to have a flight again, which will cause cost increase and resource waste. Similarly, if a spectrometer mounted on a satellite fails to work normally in case of emergency, then it will suffer much greater loss. However, if we can fully utilize the low spectra to predict high spectra, which means using the low-spectrum spectrometer to obtain the hyperspectral image that is near the spectra from real high-spectrum spectrometer, the loss caused by these situations can be compensated in a great extent.MethodIn recent years, convolutional neural networks (CNNs) have been widely used in various image processing tasks. We propose a hyperspectral image prediction framework based on a CNN as inspired by the great achievements of deep learning in the field of image spatial super resolution. The designed network is based on the residual network, which can fully use multiscale feature maps to obtain better performance and ensure fast convergence. In the CNN, 2D convolution layers use convolution kernels to obtain feature maps, and convolution kernels use relation between space and spectra, which is also helpful to obtain better results. In our network, each of the convolution layers has an activation layer, in which the rectified linear unit function is used. Batch normal layers are used to normalize the feature map, which can improve the feature extracting ability of CNN. Given an input, the proposed network extracts the low-band data features of the hyperspectral image. Then, it uses the extracted features together with the original low-spectra data to predict the high-spectra data for predicting the high spectra with the low spectra. We also design an evaluation system to prove the feasibility and effectiveness of the infrared spectrum prediction. The feasibility is evaluated by three classical image quality evaluation indices (peak signal-to-noise ratio (PSNR), structural similarity (SSIM), and spectral angle (SA)). The feasibility is also evaluated by two classical classification evaluation indices (accuracy and average accuracy) by applying our predicted infrared spectrum to classification tasks.ResultExperiments on Cuprite and Salinas datasets are conducted to validate the effectiveness of the proposed method. On Cuprite dataset, we directly measure the quality of the predicted image through PSNR, SSIM, and SA. On Salinas dataset, we mainly use the predicted image data for classification tasks with support vector machine (SVM) and LeNet. All the experiments are implemented using Torch 1.3 platform with Python 3.7. In our experiments on Cuprite dataset, we use the spectra of the first two sensors to predict the spectra of the third sensor. Five hyperspectral images are present in the original data of Cuprite. The first three spectra of Cuprite are spliced into a large image as the training dataset, and the last two spectra are spliced as the test dataset. In this experiment, 30 training epochs are conducted. The PSNR, SSIM, and SA of the predicted images by the trained network on the test set are 40.145 dB, 0.996, and 0.777 rad, respectively, which indicates that the proposed method can predict high spectra from low spectra, which is near the ground truth. The PSNR, SSIM, and SA on the Salinas dataset are 39.55 dB, 0.997, and 1.78 rad, respectively. The accuracy and average accuracy of SVM and LeNet by using the predicted high-spectra data for classification are both improved by approximately 1% compared with the results which use only low-spectra data.ConclusionAlthough many CNN methods have been proposed to realize spatial super resolution, few of them realize spectral super resolution, which is also important. Therefore, we propose the new application in remote sensing field called spectrum prediction, which uses a CNN to predict high spectra from low spectra. The proposed method can expand the use efficiency of sensor chips and also help deal with spectrometer failure and improve the quality of spectral data.关键词:deep learning;convolutional neural network(CNN);hyperspectral image;spectrum prediction;hyperspectral classification87|161|1更新时间:2024-05-07

摘要:ObjectiveHyperspectral remote sensing method is a major development in remote sensing field. It uses a lot of narrow band electromagnetic bands to obtain spectral data. It covers visible, near infrared, middle infrared, and far infrared bands, and its spectral resolution can reach the nanometer level. Therefore, hyperspectral remote sensing can find more surface features and has been widely used in covering global environment, land use, resource survey, natural disasters, and even interstellar exploration. Compared with RGB and multispectral images, hyperspectral images not only can improve the information richness but also can provide more reasonable and effective analysis and processing for the related tasks. As a result, they have important application value in many fields. However, the cost of spectral detection systems is relatively high, especially the optical detector that is used to acquire high spectral data. At present, most of the spectrometers can support the spectral imaging from 400 nm to 1 000 nm, while few of them support that from 1 000 nm to 2 500 nm. The reason is that the spectrometer is harder to produce and more expensive with the increase in spectra. The bands of hyperspectral images have internal relations. The performance of low-spectrum spectrometer can be improved by fully utilizing the low spectra to predict high spectra. In other words, the low spectrum spectrometer can be used to obtain the high spectra that are near the spectra which are usually obtained by high-spectrum spectrometer. The cost of getting hyperspectral images will be greatly reduced. Therefore, high spectra prediction has promising applications and prospects in improving spectrometer performance. Nowadays, a single sensor can generally take a limited number of spectra. Thus, the commonly used spectrometers contain multiple sensors. If one of these sensors suffers from a sudden situation and cannot work normally in the process of flight aerial photography, then the data we can obtain will be unusable and we will have to have a flight again, which will cause cost increase and resource waste. Similarly, if a spectrometer mounted on a satellite fails to work normally in case of emergency, then it will suffer much greater loss. However, if we can fully utilize the low spectra to predict high spectra, which means using the low-spectrum spectrometer to obtain the hyperspectral image that is near the spectra from real high-spectrum spectrometer, the loss caused by these situations can be compensated in a great extent.MethodIn recent years, convolutional neural networks (CNNs) have been widely used in various image processing tasks. We propose a hyperspectral image prediction framework based on a CNN as inspired by the great achievements of deep learning in the field of image spatial super resolution. The designed network is based on the residual network, which can fully use multiscale feature maps to obtain better performance and ensure fast convergence. In the CNN, 2D convolution layers use convolution kernels to obtain feature maps, and convolution kernels use relation between space and spectra, which is also helpful to obtain better results. In our network, each of the convolution layers has an activation layer, in which the rectified linear unit function is used. Batch normal layers are used to normalize the feature map, which can improve the feature extracting ability of CNN. Given an input, the proposed network extracts the low-band data features of the hyperspectral image. Then, it uses the extracted features together with the original low-spectra data to predict the high-spectra data for predicting the high spectra with the low spectra. We also design an evaluation system to prove the feasibility and effectiveness of the infrared spectrum prediction. The feasibility is evaluated by three classical image quality evaluation indices (peak signal-to-noise ratio (PSNR), structural similarity (SSIM), and spectral angle (SA)). The feasibility is also evaluated by two classical classification evaluation indices (accuracy and average accuracy) by applying our predicted infrared spectrum to classification tasks.ResultExperiments on Cuprite and Salinas datasets are conducted to validate the effectiveness of the proposed method. On Cuprite dataset, we directly measure the quality of the predicted image through PSNR, SSIM, and SA. On Salinas dataset, we mainly use the predicted image data for classification tasks with support vector machine (SVM) and LeNet. All the experiments are implemented using Torch 1.3 platform with Python 3.7. In our experiments on Cuprite dataset, we use the spectra of the first two sensors to predict the spectra of the third sensor. Five hyperspectral images are present in the original data of Cuprite. The first three spectra of Cuprite are spliced into a large image as the training dataset, and the last two spectra are spliced as the test dataset. In this experiment, 30 training epochs are conducted. The PSNR, SSIM, and SA of the predicted images by the trained network on the test set are 40.145 dB, 0.996, and 0.777 rad, respectively, which indicates that the proposed method can predict high spectra from low spectra, which is near the ground truth. The PSNR, SSIM, and SA on the Salinas dataset are 39.55 dB, 0.997, and 1.78 rad, respectively. The accuracy and average accuracy of SVM and LeNet by using the predicted high-spectra data for classification are both improved by approximately 1% compared with the results which use only low-spectra data.ConclusionAlthough many CNN methods have been proposed to realize spatial super resolution, few of them realize spectral super resolution, which is also important. Therefore, we propose the new application in remote sensing field called spectrum prediction, which uses a CNN to predict high spectra from low spectra. The proposed method can expand the use efficiency of sensor chips and also help deal with spectrometer failure and improve the quality of spectral data.关键词:deep learning;convolutional neural network(CNN);hyperspectral image;spectrum prediction;hyperspectral classification87|161|1更新时间:2024-05-07 -

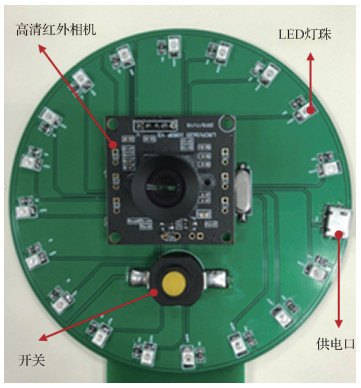

摘要:ObjectiveHyperspectral imaging systems are widely used in various fields owing to their image-spectral characteristic and unique "spectral fingerprint" information. Multispectral imaging systems are generally less costly and more suitable for the large-scale applications compared with hyperspectral imaging systems. Nonetheless, the existing multispectral imaging systems suffer from certain problems, such as high cost, complex structure, difficult operation, and slow response time. Accordingly, a low-cost portable multispectral imaging system based on pulse modulation is proposed in this study, and its imaging parameters are optimized using the objective image quality assessment methods.MethodThis system strives for a high degree of integration in a hardware design. The light source and control central processing unit(CPU) are integrated on a small printed circuit board(PCB). The 18 light emitting diode(LED) pairs of nine different wavelengths are integrated on a circular PCB board to form a circle by using a disc design. The light source can be converged, and the brightness can be improved to a certain extent. The proposed multispectral imaging system is mainly composed of a light source, a control, an image acquisition section, and an image analysis part. The light source part mainly includes nine LED arrays with the center wavelengths of 365 nm, 390 nm, 460 nm, 515 nm, 585 nm, 620 nm, 650 nm, 730 nm, and 840 nm. The control part mainly includes the self-designed LED driver circuit and universal serial bus(USB) power supply, which can light up LED arrays by sending the pulse waves at certain time intervals in time and allows the LED arrays to reach the maximum luminous intensity through the certain impedance matching. The image acquisition part primarily consists of a high-definition infrared industrial camera without the near infrared(IR) cut-off filter, which has an optimal spectral sensing range encompassing the central wavelengths of the selected nine LED arrays. The computer is used to call OpenCV through the Python language to control the camera to take images at a certain frequency. The image analysis part mainly performs the objective image quality assessment algorithms. When the system is executed, the STC89C51 microcontroller emits a pulse wave with a period of T (T is adjustable) to drive nine different wavelengths of LED arrays to light up in time. Subsequently, the computer platform calls the camera modules to capture multispectral images at matching intervals. In the system optimization experiments, we use the sharpness and the blur metrics to objectively evaluate the quality of the obtained multispectral images from three perspectives of camera shooting interval, imaging distance, and light intensity, thus obtaining better system imaging parameters.ResultThe quality of multispectral images acquired from various shooting parameters and shooting conditions under three different scenarios are evaluated. Results show that the image quality of the developed multispectral imaging system is relatively good under the camera shooting interval synchronized with the bead strobe cycle of the LED arrays. The imaging distance is 25 mm, and the light intensity is 45 Lux.ConclusionThe developed multispectral imaging system based on pulse modulation is low-cost, less difficult to operate, has a simple structure, has better imaging quality, has faster imaging speed, and can meet the requirements of large-scale promotion of multispectral imaging systems for various application domains. In addition, the system design methods, design ideas, and experimental protocols covered in the current work may provide references for the subsequent studies.关键词:pulse modulation;multispectral imaging;image quality assessment;experimental design optimization;embedded systems;image processing90|338|3更新时间:2024-05-07

摘要:ObjectiveHyperspectral imaging systems are widely used in various fields owing to their image-spectral characteristic and unique "spectral fingerprint" information. Multispectral imaging systems are generally less costly and more suitable for the large-scale applications compared with hyperspectral imaging systems. Nonetheless, the existing multispectral imaging systems suffer from certain problems, such as high cost, complex structure, difficult operation, and slow response time. Accordingly, a low-cost portable multispectral imaging system based on pulse modulation is proposed in this study, and its imaging parameters are optimized using the objective image quality assessment methods.MethodThis system strives for a high degree of integration in a hardware design. The light source and control central processing unit(CPU) are integrated on a small printed circuit board(PCB). The 18 light emitting diode(LED) pairs of nine different wavelengths are integrated on a circular PCB board to form a circle by using a disc design. The light source can be converged, and the brightness can be improved to a certain extent. The proposed multispectral imaging system is mainly composed of a light source, a control, an image acquisition section, and an image analysis part. The light source part mainly includes nine LED arrays with the center wavelengths of 365 nm, 390 nm, 460 nm, 515 nm, 585 nm, 620 nm, 650 nm, 730 nm, and 840 nm. The control part mainly includes the self-designed LED driver circuit and universal serial bus(USB) power supply, which can light up LED arrays by sending the pulse waves at certain time intervals in time and allows the LED arrays to reach the maximum luminous intensity through the certain impedance matching. The image acquisition part primarily consists of a high-definition infrared industrial camera without the near infrared(IR) cut-off filter, which has an optimal spectral sensing range encompassing the central wavelengths of the selected nine LED arrays. The computer is used to call OpenCV through the Python language to control the camera to take images at a certain frequency. The image analysis part mainly performs the objective image quality assessment algorithms. When the system is executed, the STC89C51 microcontroller emits a pulse wave with a period of T (T is adjustable) to drive nine different wavelengths of LED arrays to light up in time. Subsequently, the computer platform calls the camera modules to capture multispectral images at matching intervals. In the system optimization experiments, we use the sharpness and the blur metrics to objectively evaluate the quality of the obtained multispectral images from three perspectives of camera shooting interval, imaging distance, and light intensity, thus obtaining better system imaging parameters.ResultThe quality of multispectral images acquired from various shooting parameters and shooting conditions under three different scenarios are evaluated. Results show that the image quality of the developed multispectral imaging system is relatively good under the camera shooting interval synchronized with the bead strobe cycle of the LED arrays. The imaging distance is 25 mm, and the light intensity is 45 Lux.ConclusionThe developed multispectral imaging system based on pulse modulation is low-cost, less difficult to operate, has a simple structure, has better imaging quality, has faster imaging speed, and can meet the requirements of large-scale promotion of multispectral imaging systems for various application domains. In addition, the system design methods, design ideas, and experimental protocols covered in the current work may provide references for the subsequent studies.关键词:pulse modulation;multispectral imaging;image quality assessment;experimental design optimization;embedded systems;image processing90|338|3更新时间:2024-05-07

Advances in Hyperspectral Imaging

-

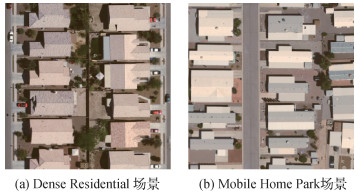

摘要:ObjectiveRemote sensing scene classification is an important research topic in remote sensing community, and it has provided important data or decision support for land resource planning, coverage mapping, ecological environment monitoring, and other real-world applications. In scene classification, extracting scene-level discriminative features is a key factor to bridge the "semantic gap" between low-level visual attributes and high-level understanding of images. Deep learning models are currently showing excellent performance in remote sensing image analysis, and many convolutional neural network (CNN)-based methods have been widely proposed in feature extraction and classification of remote sensing scene images. Although the aforementioned methods have achieved good results, they are all designed for scene images of high spatial resolution, such as University of California(UC) Merced Land-Use, WHU-RS19, scene image dataset designed by RS_IDEA Group in Wuhan University(SIRI-WHU), RSSCN7, aerial image dataset(AID), a publicly available benchmark for remote sensing image scene classification created by Northwestern Polytechnical University(NWPU-RESISC45), and optical imagery analysis and learning(OPTIMAL-31) datasets. Remote sensing data of high spatial resolution can present spatial details of ground objects. However, they contain less spectral information. As a result, their discriminative ability is relatively limited in scene classification. Hyperspectral images have abundant spectral information, and they have strong discriminative ability for ground objects. However, the existing datasets of hyperspectral images (e.g., Indian Pines, Pavia University, Washington DC Mall, Salinas, and Xiongan New Area) are mostly oriented toward pixel-level classification and are difficult to directly apply on research of scene-level image classification. Tiangong-1 hyperspectral remote sensing scene classification dataset (TG1HRSSC) is produced for scene-level image interpretation. However, the TG1HRSSC dataset is small (204 scene images) and has inconsistent image bands. A hyperspectral remote sensing dataset is constructed for scene classification (HSRS-SC) in this study to overcome the aforementioned disadvantages. The dataset can provide a good benchmark platform for evaluating intelligent algorithms of hyperspectral scene classification.MethodThe HSRS-SC is derived from the aerial data of the Heihe Watershed Allied Telemetry Experimental Research (HiWATER), and raw data can be downloaded from the National Tibetan Plateau/Third Pole Environment Data Center. A large-scale dataset is finally formed after calibration coefficient correction, atmospheric correction, image cropping, and manual visual annotation. To the best of our knowledge, the HSRS-SC is currently the largest hyperspectral scene dataset, and it contains 1 385 hyperspectral scene images which have been resized to 256×256 pixels. The dataset is divided into 5 categories, and the number of samples in each category ranges from 154 to 485. In the HSRS-SC dataset, each hyperspectral scene image has a high spatial resolution (1 m) and a wide wavelength range (from visible light to near-infrared, 380~1 050 nm, 48 bands), which can reflect the detailed spatial and spectral information of ground objects, including cars, roadway, buildings, and vegetation. Specifically, the blue band (450~520 nm) has a certain penetration ability to water bodies; the green band (520~600 nm) is more sensitive to the reflection of vegetation; the red band (630~690 nm) is the main absorption band of chlorophyll; the near-infrared band (760900 nm) reflects the strong reflection of vegetation, and it is also the absorption band of water bodies. The dataset will be publicly available in the near future, and it can be used for non-commercial academic research.ResultThis study uses three classic deep models (i.e., AlexNet, VGGNet-16, and GoogLeNet) to organize experiments under three different schemes for providing benchmark results of HSRS-SC dataset. In the first scheme, false color images are synthesized from the 19th, 13th, and 7th bands of visible light range, and then, they are fed into deep models to extract global scene features. In the second and third schemes, information of the visible light (19th, 13th, and 7th) and near-infrared (46th, 47th, and 48th) bands are comprehensively utilized by fusion approaches of addition and concatenation, respectively. In the experiments, 10 samples per class are randomly selected to finetune pre-trained CNN models, and the rest are used for test set. The experimental results on the HSRS-SC dataset show that the effective utilization of information from different bands of hyperspectral images improves the classification performance, and concatenation fusion achieves better results than addition fusion. Comparing the three CNN models shows that the VGGNet-16 model is more suitable for the HSRS-SC dataset, and the highest overall classification accuracy reaches 93.20%. Furthermore, this study shows confusion matrices of different methods. Effective use of spectral information can reduce the confusion of semantic categories given that vegetation, buildings, roads, water bodies, and rocks have great differences in absorption and reflection at different bands. This study also organizes hyperspectral scene classification experiments to further explore the advantages of hyperspectral scenes. Hyperspectral scenes have a higher accuracy advantage than RGB images under the two training samples.ConclusionThe abovementioned experimental results show that the HSRS-SC dataset can reflect detailed information of ground objects, and it can provide effective data support for semantic understanding of remote sensing scenes. Although experiments in this study adopt three different schemes to utilize the information of the visible light (19th, 13th, and 7th bands) and near-infrared (46th, 47th, and 48th bands) of the hyperspectral scenes, the rich spectral information has not been fully explored. For the future work, suitable models will be designed for feature extraction and classification of hyperspectral remote sensing scenes. We will also further expand the HSRS-SC dataset to ensure its practicality by supplementing more semantic categories and the total number of samples and increasing the diversity of data.关键词:remote sensing;scene classification;hyperspectral image;benchmark dataset;deep learning470|199|6更新时间:2024-05-07

摘要:ObjectiveRemote sensing scene classification is an important research topic in remote sensing community, and it has provided important data or decision support for land resource planning, coverage mapping, ecological environment monitoring, and other real-world applications. In scene classification, extracting scene-level discriminative features is a key factor to bridge the "semantic gap" between low-level visual attributes and high-level understanding of images. Deep learning models are currently showing excellent performance in remote sensing image analysis, and many convolutional neural network (CNN)-based methods have been widely proposed in feature extraction and classification of remote sensing scene images. Although the aforementioned methods have achieved good results, they are all designed for scene images of high spatial resolution, such as University of California(UC) Merced Land-Use, WHU-RS19, scene image dataset designed by RS_IDEA Group in Wuhan University(SIRI-WHU), RSSCN7, aerial image dataset(AID), a publicly available benchmark for remote sensing image scene classification created by Northwestern Polytechnical University(NWPU-RESISC45), and optical imagery analysis and learning(OPTIMAL-31) datasets. Remote sensing data of high spatial resolution can present spatial details of ground objects. However, they contain less spectral information. As a result, their discriminative ability is relatively limited in scene classification. Hyperspectral images have abundant spectral information, and they have strong discriminative ability for ground objects. However, the existing datasets of hyperspectral images (e.g., Indian Pines, Pavia University, Washington DC Mall, Salinas, and Xiongan New Area) are mostly oriented toward pixel-level classification and are difficult to directly apply on research of scene-level image classification. Tiangong-1 hyperspectral remote sensing scene classification dataset (TG1HRSSC) is produced for scene-level image interpretation. However, the TG1HRSSC dataset is small (204 scene images) and has inconsistent image bands. A hyperspectral remote sensing dataset is constructed for scene classification (HSRS-SC) in this study to overcome the aforementioned disadvantages. The dataset can provide a good benchmark platform for evaluating intelligent algorithms of hyperspectral scene classification.MethodThe HSRS-SC is derived from the aerial data of the Heihe Watershed Allied Telemetry Experimental Research (HiWATER), and raw data can be downloaded from the National Tibetan Plateau/Third Pole Environment Data Center. A large-scale dataset is finally formed after calibration coefficient correction, atmospheric correction, image cropping, and manual visual annotation. To the best of our knowledge, the HSRS-SC is currently the largest hyperspectral scene dataset, and it contains 1 385 hyperspectral scene images which have been resized to 256×256 pixels. The dataset is divided into 5 categories, and the number of samples in each category ranges from 154 to 485. In the HSRS-SC dataset, each hyperspectral scene image has a high spatial resolution (1 m) and a wide wavelength range (from visible light to near-infrared, 380~1 050 nm, 48 bands), which can reflect the detailed spatial and spectral information of ground objects, including cars, roadway, buildings, and vegetation. Specifically, the blue band (450~520 nm) has a certain penetration ability to water bodies; the green band (520~600 nm) is more sensitive to the reflection of vegetation; the red band (630~690 nm) is the main absorption band of chlorophyll; the near-infrared band (760900 nm) reflects the strong reflection of vegetation, and it is also the absorption band of water bodies. The dataset will be publicly available in the near future, and it can be used for non-commercial academic research.ResultThis study uses three classic deep models (i.e., AlexNet, VGGNet-16, and GoogLeNet) to organize experiments under three different schemes for providing benchmark results of HSRS-SC dataset. In the first scheme, false color images are synthesized from the 19th, 13th, and 7th bands of visible light range, and then, they are fed into deep models to extract global scene features. In the second and third schemes, information of the visible light (19th, 13th, and 7th) and near-infrared (46th, 47th, and 48th) bands are comprehensively utilized by fusion approaches of addition and concatenation, respectively. In the experiments, 10 samples per class are randomly selected to finetune pre-trained CNN models, and the rest are used for test set. The experimental results on the HSRS-SC dataset show that the effective utilization of information from different bands of hyperspectral images improves the classification performance, and concatenation fusion achieves better results than addition fusion. Comparing the three CNN models shows that the VGGNet-16 model is more suitable for the HSRS-SC dataset, and the highest overall classification accuracy reaches 93.20%. Furthermore, this study shows confusion matrices of different methods. Effective use of spectral information can reduce the confusion of semantic categories given that vegetation, buildings, roads, water bodies, and rocks have great differences in absorption and reflection at different bands. This study also organizes hyperspectral scene classification experiments to further explore the advantages of hyperspectral scenes. Hyperspectral scenes have a higher accuracy advantage than RGB images under the two training samples.ConclusionThe abovementioned experimental results show that the HSRS-SC dataset can reflect detailed information of ground objects, and it can provide effective data support for semantic understanding of remote sensing scenes. Although experiments in this study adopt three different schemes to utilize the information of the visible light (19th, 13th, and 7th bands) and near-infrared (46th, 47th, and 48th bands) of the hyperspectral scenes, the rich spectral information has not been fully explored. For the future work, suitable models will be designed for feature extraction and classification of hyperspectral remote sensing scenes. We will also further expand the HSRS-SC dataset to ensure its practicality by supplementing more semantic categories and the total number of samples and increasing the diversity of data.关键词:remote sensing;scene classification;hyperspectral image;benchmark dataset;deep learning470|199|6更新时间:2024-05-07

Dataset

-

摘要:ObjectiveHyperspectral imaging systems have become promising auxiliary diagnostic tools for intelligent medicine in recent years, especially in disease diagnosis and image-guided surgery. Hyperspectral image (HSI) has hundreds of contiguous narrow spectral bands from visible to infrared electromagnetic spectrum. These bands provide a wealth of information to distinguish different chemical composition of biological tissue. The reflected, fluorescent, and transmitted light from tissue captured by HSI carry quantitative diagnostic information about tissue pathology. Wealthy spectral bands also contain redundancy, which not only degrades classification performance but also increases computational complexity. Thus, dimensionality reduction (DR) needs to be conducted to reveal the essence of data by discarding redundant information. However, most of the current DR methods are based on spectral vector input (first-order representation) that ignores important correlations in the spatial domain. Although some spectral-spatial joint technologies have been investigated to overcome this disadvantage, they still consider the spectral-spatial feature into first-order data for analysis and ignore the cubic nature of hyperspectral data. Thus, a novel tensor-based Laplacian regularized sparse and low-rank graph (T-LapSLRG) for discriminant analysis is proposed to preserve the original intrinsic structure information of medical hyperspectral data and enhance the discriminant ability of features.MethodSparse and low-rank constraints are suggested in the proposed T-LapSLRG to exploit local and global data structures while tensor analysis is developed to preserve the spatial neighborhood information. Multi-manifold is utilized to enhance the discriminant ability and describe the intrinsic geometric information. Consequently, the proposed method not only can preserve local and global structure information but also can utilize the intrinsic geometric information. Thus, it offers more discriminative power than existing tensor-based DR methods. Vector-based methods treat each pixel as an independent and identically distributed item. By contrast, the samples in T-LapSLRG are represented in the form of a third-order tensor that can preserve the original spatial neighborhood information. In addition, only a small set of the labeled training samples is needed by adopting tensor training samples. With the assumption that the samples belonging to the same class lie on a unique sub-manifold, T-LapSLRG constructs tensor-based within-class graph to characterize the within-class compactness for making the resulting graph more discriminative. In summary, T-LapSLRG jointly utilizes spatial neighborhoods and discriminative and intrinsic structure information that capture the local and global structures and the discriminative information simultaneously and make the resulting graph more robust and discriminative.ResultTo evaluate the effectiveness of the proposed T-LapSLRG, the medical hyperspectral data of membranous nephropathy (MN) is used. The traditional diagnosis methods of MN mainly rely on serological characteristics and renal pathological characteristics, which is tough to reach the intelligent and automated requirements of clinical diagnosis. Two types of MN are used as the experimental verification data, including primary membranous nephropathy (PMN) and hepatitis B virus-related membranous nephropathy (HBV-MN). The microscopic hyperspectral images of PMN and HBV-MN are captured by the line scan hyperspectral imaging system SOC-710 together with the biological microscope CX31RTSF. The SOC-710 system captures 128 spectral bands with 696×520 pixels and a spectral wavelength range from 400 to 1 000 nm. The obtained medical HSI dataset consists of 30 HBV-MN images and 24 PMN images, involving 10 HBV-MN patients and 9 PMN patients. Classification is performed on the obtained low-dimensional features by the classical support vector machine classifier to evaluate the performance of the proposed T-LapSLRG. Four objective quality indices (i.e., individual class accuracy, overall accuracy (OA), average accuracy (AA), and kappa coefficient (Kappa)) are used. The proposed T-LapSLRG outperforms other methods by 1.40% to 34.75% in OA, 1.46% to 36.89% in AA, and 0.031 to 0.73 in Kappa. In addition, the classification accuracy obtained by T-LapSLRG for all patients has reached more than 90%. In clinical diagnosis, the type of disease can be determined when the pixel level accuracy reaches 85% or more.ConclusionIn this study, we proposed a novel tensor-based Laplacian regularized sparse and low-rank graph for discriminant analysis. Experiments on the MN dataset demonstrate that the proposed T-LapSLRG is effective in discriminant analysis with sparse and low-rank constraints and multi-manifold, and significantly improves the classification performance. Experimental results verify the nonnegligible potential of T-LapSLRG for further application in MN identification.关键词:medical hyperspectral image;membranous nephropathy;tensor;dimensionality reduction(DR);graph embedding141|121|1更新时间:2024-05-07

摘要:ObjectiveHyperspectral imaging systems have become promising auxiliary diagnostic tools for intelligent medicine in recent years, especially in disease diagnosis and image-guided surgery. Hyperspectral image (HSI) has hundreds of contiguous narrow spectral bands from visible to infrared electromagnetic spectrum. These bands provide a wealth of information to distinguish different chemical composition of biological tissue. The reflected, fluorescent, and transmitted light from tissue captured by HSI carry quantitative diagnostic information about tissue pathology. Wealthy spectral bands also contain redundancy, which not only degrades classification performance but also increases computational complexity. Thus, dimensionality reduction (DR) needs to be conducted to reveal the essence of data by discarding redundant information. However, most of the current DR methods are based on spectral vector input (first-order representation) that ignores important correlations in the spatial domain. Although some spectral-spatial joint technologies have been investigated to overcome this disadvantage, they still consider the spectral-spatial feature into first-order data for analysis and ignore the cubic nature of hyperspectral data. Thus, a novel tensor-based Laplacian regularized sparse and low-rank graph (T-LapSLRG) for discriminant analysis is proposed to preserve the original intrinsic structure information of medical hyperspectral data and enhance the discriminant ability of features.MethodSparse and low-rank constraints are suggested in the proposed T-LapSLRG to exploit local and global data structures while tensor analysis is developed to preserve the spatial neighborhood information. Multi-manifold is utilized to enhance the discriminant ability and describe the intrinsic geometric information. Consequently, the proposed method not only can preserve local and global structure information but also can utilize the intrinsic geometric information. Thus, it offers more discriminative power than existing tensor-based DR methods. Vector-based methods treat each pixel as an independent and identically distributed item. By contrast, the samples in T-LapSLRG are represented in the form of a third-order tensor that can preserve the original spatial neighborhood information. In addition, only a small set of the labeled training samples is needed by adopting tensor training samples. With the assumption that the samples belonging to the same class lie on a unique sub-manifold, T-LapSLRG constructs tensor-based within-class graph to characterize the within-class compactness for making the resulting graph more discriminative. In summary, T-LapSLRG jointly utilizes spatial neighborhoods and discriminative and intrinsic structure information that capture the local and global structures and the discriminative information simultaneously and make the resulting graph more robust and discriminative.ResultTo evaluate the effectiveness of the proposed T-LapSLRG, the medical hyperspectral data of membranous nephropathy (MN) is used. The traditional diagnosis methods of MN mainly rely on serological characteristics and renal pathological characteristics, which is tough to reach the intelligent and automated requirements of clinical diagnosis. Two types of MN are used as the experimental verification data, including primary membranous nephropathy (PMN) and hepatitis B virus-related membranous nephropathy (HBV-MN). The microscopic hyperspectral images of PMN and HBV-MN are captured by the line scan hyperspectral imaging system SOC-710 together with the biological microscope CX31RTSF. The SOC-710 system captures 128 spectral bands with 696×520 pixels and a spectral wavelength range from 400 to 1 000 nm. The obtained medical HSI dataset consists of 30 HBV-MN images and 24 PMN images, involving 10 HBV-MN patients and 9 PMN patients. Classification is performed on the obtained low-dimensional features by the classical support vector machine classifier to evaluate the performance of the proposed T-LapSLRG. Four objective quality indices (i.e., individual class accuracy, overall accuracy (OA), average accuracy (AA), and kappa coefficient (Kappa)) are used. The proposed T-LapSLRG outperforms other methods by 1.40% to 34.75% in OA, 1.46% to 36.89% in AA, and 0.031 to 0.73 in Kappa. In addition, the classification accuracy obtained by T-LapSLRG for all patients has reached more than 90%. In clinical diagnosis, the type of disease can be determined when the pixel level accuracy reaches 85% or more.ConclusionIn this study, we proposed a novel tensor-based Laplacian regularized sparse and low-rank graph for discriminant analysis. Experiments on the MN dataset demonstrate that the proposed T-LapSLRG is effective in discriminant analysis with sparse and low-rank constraints and multi-manifold, and significantly improves the classification performance. Experimental results verify the nonnegligible potential of T-LapSLRG for further application in MN identification.关键词:medical hyperspectral image;membranous nephropathy;tensor;dimensionality reduction(DR);graph embedding141|121|1更新时间:2024-05-07 -

摘要:ObjectiveCholangiocarcinoma is a rare but highly malignant tumor. Hyperspectral imaging (HSI), which originated from remote sensing, is an emerging image modality for diagnosis and image-guided surgery. HSI takes the advantage of acquiring 2D images across a wide range of electromagnetic spectrum. HSI can obtain spectral and optical properties of tissue and provide more information than RGB images. Redundant information will persist even though HSI contains tens the amount of data compared with RGB images with the same spatial dimension. Traditional dimensionality reduction methods, such as principal component analysis and kernel method, reduce the data by converting the original spectral space to a low-dimensional one, which is not suitable in end-to-end models. Recently, convolutional neural networks have demonstrated excellent performance on computer vision tasks, including classification, segmentation, and detection. Attention mechanism is used in convolutional neural network(CNN) to improve the representation of feature maps. Typical channel attention modules, such as squeeze-and-excitation net (SENet), squeezes the input features by global average pooling to produce a channel descriptor. However, different channels could have the same mean value. We proposed frequency selecting channel attention (FSCA) mechanism to address this issue. An Inception-FSCA network is also proposed for the segmentation of a hyperspectral image of cholangiocarcinoma tissues.MethodFSCA can exploit the information from different frequency components. This method consists of three steps. First, the input feature map is transformed in the frequency domain by Fourier transform. Second, a representative frequency amplitude is selected to efficiently use the obtained frequencies. These selected frequencies are arranged in a column of vectors. Third, these vectors are sent to two consecutive fully connected layers to obtain a channel weight vector. Then, a sigmoid function is used to scale each channel weight between zero and one. Every element in the channel weight vector is multiplied with the corresponding channel feature. FSCA can adjust the channel information, strengthen the important channels, and suppress the unimportant. This work uses a microscopic hyperspectral imaging system to obtain hyperspectral images of cholangiocarcinoma tissues. These images have a spectral bandwidth from 550 nm to 1 000 nm in 7.5 nm increments, producing a hypercube with 60 spectral bands. Spatial resolution of each image is 1 280×1 024 pixels. The ground truth label is manually annotated by experts. The method is implemented using Python3.6 and TensorFlow1.14.0 on NVDIA TITAN X GPU, Intel i7-9700KF CPU. The learning rate is 0.000 5, the batch size is 256, and the optimization strategy is Adam. Cancerous areas have different sizes, resulting in unbalanced positive and negative samples. Focal loss is chosen as a loss function.ResultWe conducted comparative and ablation experiments on our dataset. We use several evaluation metrics to evaluate the performance of the inception-FSCA. The accuracy, precision, sensitivity, specificity, and Kappa are 0.978 0, 0.965 4, 0.958 6, 0.985 2, and 0.945 6, respectively.ConclusionIn this study, we proposed a Fourier transform frequency selecting channel attention mechanism. The proposed channel attention module can be conveniently inserted in CNN. An Inception-FSCA network is built for the segmentation of hyperspectral images of cholangiocarcinoma tissues. Quantitative results show that our method has excellent performance. Inception-FSCA can be applied in the outer image segmentation and classification tasks.关键词:hyperspectral image of cholangiocarcinoma;convolutional neural network (CNN);image segmentation;channel attention mechanism;Fourier transform107|79|5更新时间:2024-05-07

摘要:ObjectiveCholangiocarcinoma is a rare but highly malignant tumor. Hyperspectral imaging (HSI), which originated from remote sensing, is an emerging image modality for diagnosis and image-guided surgery. HSI takes the advantage of acquiring 2D images across a wide range of electromagnetic spectrum. HSI can obtain spectral and optical properties of tissue and provide more information than RGB images. Redundant information will persist even though HSI contains tens the amount of data compared with RGB images with the same spatial dimension. Traditional dimensionality reduction methods, such as principal component analysis and kernel method, reduce the data by converting the original spectral space to a low-dimensional one, which is not suitable in end-to-end models. Recently, convolutional neural networks have demonstrated excellent performance on computer vision tasks, including classification, segmentation, and detection. Attention mechanism is used in convolutional neural network(CNN) to improve the representation of feature maps. Typical channel attention modules, such as squeeze-and-excitation net (SENet), squeezes the input features by global average pooling to produce a channel descriptor. However, different channels could have the same mean value. We proposed frequency selecting channel attention (FSCA) mechanism to address this issue. An Inception-FSCA network is also proposed for the segmentation of a hyperspectral image of cholangiocarcinoma tissues.MethodFSCA can exploit the information from different frequency components. This method consists of three steps. First, the input feature map is transformed in the frequency domain by Fourier transform. Second, a representative frequency amplitude is selected to efficiently use the obtained frequencies. These selected frequencies are arranged in a column of vectors. Third, these vectors are sent to two consecutive fully connected layers to obtain a channel weight vector. Then, a sigmoid function is used to scale each channel weight between zero and one. Every element in the channel weight vector is multiplied with the corresponding channel feature. FSCA can adjust the channel information, strengthen the important channels, and suppress the unimportant. This work uses a microscopic hyperspectral imaging system to obtain hyperspectral images of cholangiocarcinoma tissues. These images have a spectral bandwidth from 550 nm to 1 000 nm in 7.5 nm increments, producing a hypercube with 60 spectral bands. Spatial resolution of each image is 1 280×1 024 pixels. The ground truth label is manually annotated by experts. The method is implemented using Python3.6 and TensorFlow1.14.0 on NVDIA TITAN X GPU, Intel i7-9700KF CPU. The learning rate is 0.000 5, the batch size is 256, and the optimization strategy is Adam. Cancerous areas have different sizes, resulting in unbalanced positive and negative samples. Focal loss is chosen as a loss function.ResultWe conducted comparative and ablation experiments on our dataset. We use several evaluation metrics to evaluate the performance of the inception-FSCA. The accuracy, precision, sensitivity, specificity, and Kappa are 0.978 0, 0.965 4, 0.958 6, 0.985 2, and 0.945 6, respectively.ConclusionIn this study, we proposed a Fourier transform frequency selecting channel attention mechanism. The proposed channel attention module can be conveniently inserted in CNN. An Inception-FSCA network is built for the segmentation of hyperspectral images of cholangiocarcinoma tissues. Quantitative results show that our method has excellent performance. Inception-FSCA can be applied in the outer image segmentation and classification tasks.关键词:hyperspectral image of cholangiocarcinoma;convolutional neural network (CNN);image segmentation;channel attention mechanism;Fourier transform107|79|5更新时间:2024-05-07

Medical Hyperspectral Imagery

-