最新刊期

卷 26 , 期 7 , 2021

-

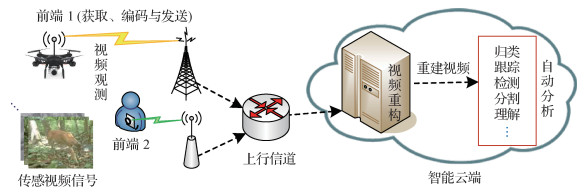

摘要:Uplink streaming media has an emerging strategic value in the civil-military integration field. For uplink streaming media applications, any compressive sensing video stream has technological advantages in terms of low-complexity terminal, good error resilience, and widely available signals. This technology is becoming one of the main issues in visual communication research. The compressive sensing video stream is a new type of visual communication whose functional modules mainly consist of front-end video observation and cloud-end video reconstruction. The core technology of compressive sensing video stream has not developed to a degree that can be standardized. When the uplink streaming media provides a large number of video sensing signals not for human viewing but for universal machine vision, any compressive-sensing video stream utilizes a new signal-processing mechanism that can avoid the shortage of existing uplink streaming technologies to first obtain additional information and then discard it. Based on the application characteristics of uplink streaming media, this study analyzes the basic theories and key technologies of compressive-sensing video stream, i.e., performance metrics, parallel block computational imaging, low-complexity video encoding, video reconstruction, and semantic quality evaluation. The latest research progress is also investigated and compared in this survey. The video sensing signal is usually divided into group-of-frames (GOF), and each GOF is further divided into a key frame and several non-key frames. As block compressive sensing (BCS) requires less sensing or storage resources at the front end, it not only realizes the lightweight observation matrix but also transmits block-by-block or in parallel. In a compressive sensing video stream, the GOF-BCS block array denotes the set of all BCS blocks in a GOF. The existing compressive sensing video stream adopts such a technical framework as single-frame observation, open-loop encoding, and fidelity-guided reconstruction. The study results show that for uplink streaming media, the existing compressive-sensing video stream faces bottleneck problems such as uncontrollable observation efficiency, lack of bitstream adaptation, and low reconstruction quality. Therefore, the technology development trend of compressive-sensing video streams have to be examined. The research directions of future compressive sensing video streams aim to focus on the following aspects. 1) Efficiency-optimized GOF-BCS block-array layout. The existing compressive-sensing video stream only uses a simple combination of GOF frame number, BCS block size, and sampling rate, which is a special layout of the GOF-BCS block-array. This special layout lacks a rationality proof. Therefore, we need to compare and analyze various block-array layouts and spatial-temporal partitions, and then design a universally optimized GOF-BCS block-array to quickly generate the observation vectors with more spatiotemporal semantics. At the same time, this approach is conducive to the hierarchical sparse modeling of video reconstruction. 2) Observation control and bitstream adaptation of video sensing signal. During video encoding, a trade-off occurs between the sampling rate and quantization depth. In subsequent study, an important task is to know how to construct the distribution model of observation vectors and adaptively adjust the sampling rate and quantization depth. Based on an efficiency-optimized GOF-BCS block-array, the novel compressive sensing video stream may improve the observation efficiency at the front end, and adapt both low-complexity encoding and wireless transmission. Through the dynamic interaction between source and channel at the front end, the feedback coordination is formed between video observation and wireless transmission, and the front-end complexity may be quantitatively controlled. 3) During video reconstruction, an important methodology is to obtain the sparse solution of the underdetermined system by prior modeling. When the hierarchical sparse model cannot stably represent the observation vectors, the data-driven reconstruction mechanism can make up for the deficiency of prior modeling. Future research will construct the generation and recovery mechanism of partial reversible signals, and explore the hybrid reconstruction mechanism of hierarchical sparse model and deep neural network (DNN). 4) Semantic quality assessment model for any reconstructed block-array. At present, the quality evaluation of reconstructed videos is limited to pixel-level fidelity. For universal machine vision, the video reconstruction relies more on semantic quality evaluation. On the basis of sparse residual prediction reconstruction, the cloud end gradually adds the data-driven reconstruction by DNN. By integrating the semantic quality assessment model, the video reconstruction mechanism with memory learning may be provided at cloud end. 5) A new technical framework will combine the high-efficiency observation and semantic-guided hybrid reconstruction. One of the important research directions is to construct the effective division and cooperation between the front and cloud ends. Besides the complexity-controllable front end, the new technical framework should demonstrate the higher semantic quality in video reconstruction and enhance the interpretability of compressive-sensing deep learning. For the video-sensing signal with dynamic scene changes, the new technical framework can balance the observation distortion, bitrate, and power consumption at any resource-constrained front end. The research directions are expected to break through the limitations of the existing compressive-sensing video stream. Such key technologies have to be developed as high-efficiency observation and semantic-guided hybrid reconstruction, which can further highlight the unique advantage and quantitative evolution of compressive-sensing video stream technology for uplink streaming media applications.关键词:video stream;observation efficiency;bitstream adaptation;semantic quality;video reconstruction;review81|282|1更新时间:2024-05-07

摘要:Uplink streaming media has an emerging strategic value in the civil-military integration field. For uplink streaming media applications, any compressive sensing video stream has technological advantages in terms of low-complexity terminal, good error resilience, and widely available signals. This technology is becoming one of the main issues in visual communication research. The compressive sensing video stream is a new type of visual communication whose functional modules mainly consist of front-end video observation and cloud-end video reconstruction. The core technology of compressive sensing video stream has not developed to a degree that can be standardized. When the uplink streaming media provides a large number of video sensing signals not for human viewing but for universal machine vision, any compressive-sensing video stream utilizes a new signal-processing mechanism that can avoid the shortage of existing uplink streaming technologies to first obtain additional information and then discard it. Based on the application characteristics of uplink streaming media, this study analyzes the basic theories and key technologies of compressive-sensing video stream, i.e., performance metrics, parallel block computational imaging, low-complexity video encoding, video reconstruction, and semantic quality evaluation. The latest research progress is also investigated and compared in this survey. The video sensing signal is usually divided into group-of-frames (GOF), and each GOF is further divided into a key frame and several non-key frames. As block compressive sensing (BCS) requires less sensing or storage resources at the front end, it not only realizes the lightweight observation matrix but also transmits block-by-block or in parallel. In a compressive sensing video stream, the GOF-BCS block array denotes the set of all BCS blocks in a GOF. The existing compressive sensing video stream adopts such a technical framework as single-frame observation, open-loop encoding, and fidelity-guided reconstruction. The study results show that for uplink streaming media, the existing compressive-sensing video stream faces bottleneck problems such as uncontrollable observation efficiency, lack of bitstream adaptation, and low reconstruction quality. Therefore, the technology development trend of compressive-sensing video streams have to be examined. The research directions of future compressive sensing video streams aim to focus on the following aspects. 1) Efficiency-optimized GOF-BCS block-array layout. The existing compressive-sensing video stream only uses a simple combination of GOF frame number, BCS block size, and sampling rate, which is a special layout of the GOF-BCS block-array. This special layout lacks a rationality proof. Therefore, we need to compare and analyze various block-array layouts and spatial-temporal partitions, and then design a universally optimized GOF-BCS block-array to quickly generate the observation vectors with more spatiotemporal semantics. At the same time, this approach is conducive to the hierarchical sparse modeling of video reconstruction. 2) Observation control and bitstream adaptation of video sensing signal. During video encoding, a trade-off occurs between the sampling rate and quantization depth. In subsequent study, an important task is to know how to construct the distribution model of observation vectors and adaptively adjust the sampling rate and quantization depth. Based on an efficiency-optimized GOF-BCS block-array, the novel compressive sensing video stream may improve the observation efficiency at the front end, and adapt both low-complexity encoding and wireless transmission. Through the dynamic interaction between source and channel at the front end, the feedback coordination is formed between video observation and wireless transmission, and the front-end complexity may be quantitatively controlled. 3) During video reconstruction, an important methodology is to obtain the sparse solution of the underdetermined system by prior modeling. When the hierarchical sparse model cannot stably represent the observation vectors, the data-driven reconstruction mechanism can make up for the deficiency of prior modeling. Future research will construct the generation and recovery mechanism of partial reversible signals, and explore the hybrid reconstruction mechanism of hierarchical sparse model and deep neural network (DNN). 4) Semantic quality assessment model for any reconstructed block-array. At present, the quality evaluation of reconstructed videos is limited to pixel-level fidelity. For universal machine vision, the video reconstruction relies more on semantic quality evaluation. On the basis of sparse residual prediction reconstruction, the cloud end gradually adds the data-driven reconstruction by DNN. By integrating the semantic quality assessment model, the video reconstruction mechanism with memory learning may be provided at cloud end. 5) A new technical framework will combine the high-efficiency observation and semantic-guided hybrid reconstruction. One of the important research directions is to construct the effective division and cooperation between the front and cloud ends. Besides the complexity-controllable front end, the new technical framework should demonstrate the higher semantic quality in video reconstruction and enhance the interpretability of compressive-sensing deep learning. For the video-sensing signal with dynamic scene changes, the new technical framework can balance the observation distortion, bitrate, and power consumption at any resource-constrained front end. The research directions are expected to break through the limitations of the existing compressive-sensing video stream. Such key technologies have to be developed as high-efficiency observation and semantic-guided hybrid reconstruction, which can further highlight the unique advantage and quantitative evolution of compressive-sensing video stream technology for uplink streaming media applications.关键词:video stream;observation efficiency;bitstream adaptation;semantic quality;video reconstruction;review81|282|1更新时间:2024-05-07

Review

-

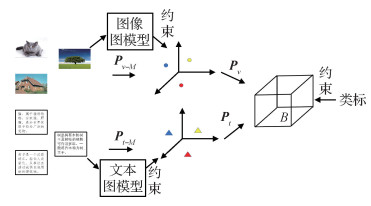

摘要:ObjectiveWith the rapid development of multimedia technology, the scale of multimedia data has been growing rapidly. For example, people are used to describing the things they want to show with multimedia data such as texts, images, and videos. Obtaining the relevant results of one modality using another modality is a good objective. In this sense, how to effectively perform semantic correlation analysis and measure the similarity between the data has gradually become a hot research topic. As the representation of different modal data is heterogeneous, it poses a great challenge to the cross-modal retrieval task. Hashing-based methods have received great attention in cross-modal retrieval because of its fast retrieval speed and low storage consumption. To solve the problem of heterogeneity between different modalities of the data, most of the current supervised hashing algorithms directly map different modal data into the Hamming space. However, these methods have the following limitations: 1) The data from each modality have different feature representations, and the dimensions of their feature spaces vary greatly. Therefore, it is difficult for these methods to obtain a consistent hash code by directly mapping the data from different modalities into the same Hamming space. 2) Although label information has been considered for these hashing methods, the structural information of the original data is ignored, which could result in a less-representative hash code to encode the original structural information in each modality. To solve these issues, a novel hashing algorithm called structure-preserving hashing with coupled projections (SPHCP) is proposed in this paper for cross-modal retrieval.MethodConsidering the heterogeneity between the cross-modal data, this algorithm first projects the data from different modalities into their respective subspaces to reduce the modal difference. A local graph model is also designed in the subspace learning to maintain the structural consistency between the samples. Then, to build a semantic relationship between different modalities, the algorithm maps the subspace features to the Hamming space to obtain a consistent hash code. At the same time, the label constraint is exploited to improve the discriminant power of the obtained hash codes. Finally, the algorithm measures the similarity of different modal data in terms of the Hamming distance.ResultWe compared our model with several state-of-the-art methods on three public datasets, namely, Wikipedia, MIRFlickr, and Pascal Sentence. The mean average precision (mAP) is used as the quantitative evaluation metric. We first test our method on two benchmark datasets, Wikipedia and MIRFlickr. To evaluate the impact of hash-code length on the performance of the algorithm, this experiment set the hash code length to 16, 32, 64, and 128 bits. The experimental results show that for both the text-retrieving image task and image-retrieving text task, our proposed method outperforms the existing methods in each length setting. To further measure the performance of our proposed method on the dataset with deep features, we test the algorithm on the Pascal Sentence dataset. The experimental results show that our SPHCP algorithm can also achieve higher mAP on such dataset with deep features. In general, cross-modal retrieval methods based on deep networks can handle nonlinear features well, so their retrieval accuracy is supposed to be higher than that of traditional methods, but they need much more computational power. As a "shallow" method, the proposed SPHCP algorithm is competitive with deep methods in terms of mAP. Therefore, as an interesting direction, our framework can be used in conjunction with the deep learning method in the future, i.e., using deep learning to extract the features of images and text offline, and using the SPHCP algorithm for fast retrieval. Furthermore, we analyze the parameter sensitivity of the proposed algorithm. As this algorithm has 7 parameters, a controlled variable method is used for evaluation. The experimental results show that the proposed algorithm is not sensitive to parameters, which means that the training process does not require much optimization time, making it suitable for practical application.ConclusionIn this study, a novel method called SPHCP is proposed to solve the problems mentioned. First, aiming at the "modal gap" between cross-modal data, the scheme of coupled projections is applied to gradually reduce the modal difference of multimedia data. In this way, a more consistent hash code can be obtained. Second, considering the structural information and semantic discrimination of the original data, the algorithm introduces the graph model in subspace learning, which can maintain the intra-class and inter-class relationship of the samples. Finally, a label constraint is introduced to improve the discriminability of the hash code. The experiments on the benchmark datasets verify the effectiveness of the proposed algorithm. Specifically, compared with the second-best method, SPHCP achieves an improvement by 6% and 3% on Wikipedia for two retrieval tasks. On MIRFlickr, SPHCP achieves an improvement by 2% and 5%. On Pascal Sentence, the improvement is approximately 10% and 7%. However, the proposed method requires a large amount of computing power when dealing with large-scale data, because SPHCP introduces a graph model to maintain the structural information between the data. The calculation of the structural information between each sample leads to a larger computing complexity.In future research, we will introduce nonlinear feature mapping into our SPHCP framework to improve its scalability when dealing with nonlinear feature data. Furthermore, we can extend the SPHCP from a cross-modal retrieval algorithm to a multi-modal version.关键词:cross-modal retrieval;Hashing;structure-preserving;graph model;coupled projections;subspace learning95|165|0更新时间:2024-05-07

摘要:ObjectiveWith the rapid development of multimedia technology, the scale of multimedia data has been growing rapidly. For example, people are used to describing the things they want to show with multimedia data such as texts, images, and videos. Obtaining the relevant results of one modality using another modality is a good objective. In this sense, how to effectively perform semantic correlation analysis and measure the similarity between the data has gradually become a hot research topic. As the representation of different modal data is heterogeneous, it poses a great challenge to the cross-modal retrieval task. Hashing-based methods have received great attention in cross-modal retrieval because of its fast retrieval speed and low storage consumption. To solve the problem of heterogeneity between different modalities of the data, most of the current supervised hashing algorithms directly map different modal data into the Hamming space. However, these methods have the following limitations: 1) The data from each modality have different feature representations, and the dimensions of their feature spaces vary greatly. Therefore, it is difficult for these methods to obtain a consistent hash code by directly mapping the data from different modalities into the same Hamming space. 2) Although label information has been considered for these hashing methods, the structural information of the original data is ignored, which could result in a less-representative hash code to encode the original structural information in each modality. To solve these issues, a novel hashing algorithm called structure-preserving hashing with coupled projections (SPHCP) is proposed in this paper for cross-modal retrieval.MethodConsidering the heterogeneity between the cross-modal data, this algorithm first projects the data from different modalities into their respective subspaces to reduce the modal difference. A local graph model is also designed in the subspace learning to maintain the structural consistency between the samples. Then, to build a semantic relationship between different modalities, the algorithm maps the subspace features to the Hamming space to obtain a consistent hash code. At the same time, the label constraint is exploited to improve the discriminant power of the obtained hash codes. Finally, the algorithm measures the similarity of different modal data in terms of the Hamming distance.ResultWe compared our model with several state-of-the-art methods on three public datasets, namely, Wikipedia, MIRFlickr, and Pascal Sentence. The mean average precision (mAP) is used as the quantitative evaluation metric. We first test our method on two benchmark datasets, Wikipedia and MIRFlickr. To evaluate the impact of hash-code length on the performance of the algorithm, this experiment set the hash code length to 16, 32, 64, and 128 bits. The experimental results show that for both the text-retrieving image task and image-retrieving text task, our proposed method outperforms the existing methods in each length setting. To further measure the performance of our proposed method on the dataset with deep features, we test the algorithm on the Pascal Sentence dataset. The experimental results show that our SPHCP algorithm can also achieve higher mAP on such dataset with deep features. In general, cross-modal retrieval methods based on deep networks can handle nonlinear features well, so their retrieval accuracy is supposed to be higher than that of traditional methods, but they need much more computational power. As a "shallow" method, the proposed SPHCP algorithm is competitive with deep methods in terms of mAP. Therefore, as an interesting direction, our framework can be used in conjunction with the deep learning method in the future, i.e., using deep learning to extract the features of images and text offline, and using the SPHCP algorithm for fast retrieval. Furthermore, we analyze the parameter sensitivity of the proposed algorithm. As this algorithm has 7 parameters, a controlled variable method is used for evaluation. The experimental results show that the proposed algorithm is not sensitive to parameters, which means that the training process does not require much optimization time, making it suitable for practical application.ConclusionIn this study, a novel method called SPHCP is proposed to solve the problems mentioned. First, aiming at the "modal gap" between cross-modal data, the scheme of coupled projections is applied to gradually reduce the modal difference of multimedia data. In this way, a more consistent hash code can be obtained. Second, considering the structural information and semantic discrimination of the original data, the algorithm introduces the graph model in subspace learning, which can maintain the intra-class and inter-class relationship of the samples. Finally, a label constraint is introduced to improve the discriminability of the hash code. The experiments on the benchmark datasets verify the effectiveness of the proposed algorithm. Specifically, compared with the second-best method, SPHCP achieves an improvement by 6% and 3% on Wikipedia for two retrieval tasks. On MIRFlickr, SPHCP achieves an improvement by 2% and 5%. On Pascal Sentence, the improvement is approximately 10% and 7%. However, the proposed method requires a large amount of computing power when dealing with large-scale data, because SPHCP introduces a graph model to maintain the structural information between the data. The calculation of the structural information between each sample leads to a larger computing complexity.In future research, we will introduce nonlinear feature mapping into our SPHCP framework to improve its scalability when dealing with nonlinear feature data. Furthermore, we can extend the SPHCP from a cross-modal retrieval algorithm to a multi-modal version.关键词:cross-modal retrieval;Hashing;structure-preserving;graph model;coupled projections;subspace learning95|165|0更新时间:2024-05-07 -

摘要:ObjectiveVisual retrieval methods need to accurately and efficiently retrieve the most relevant visual content from large-scale images or video datasets. However, due to a large amount of image data and high feature dimensionality in the dataset, existing methods face difficulty in ensuring fast retrieval speed and good retrieval results. Hashing is a widely studied solution for approximate nearest neighbor search, which aims to convert high-dimensional data items into a low-dimensional representation or a hash code consisting of a set of bit sequences. Locality-sensitive hashing (LSH) is a data-independent, unsupervised hashing algorithm that provides asymptotic theoretical properties, thereby ensuring performance. LSH is considered as one of the most common methods for fast nearest-neighbor search in high-dimensional space. Nevertheless, if the number of hash functions k is set too small, it leads to too many data items falling into each hash bucket, thus increasing the query response time. By contrast, if k is set too large, the number of data items in each hash bucket is reduced. Moreover, to achieve the desired search accuracy, LSH usually needs to use long hash codes, thereby reducing the recall rate. Although the use of multiple hash tables can alleviate this problem, it significantly increases memory cost and query time. Besides, due to the semantic gap between the visual semantic space and metric space, LSH may not obtain good search performance.MethodFor visual retrieval of high-dimensional data, we first propose a hash algorithm called weighted semantic locality-sensitive hashing (WSLSH), which is based on feature space partitioning, to address the aforementioned drawbacks of LSH. While building the indices, WSLSH considers the distance relationship between reference and query features, divides the reference feature space into two subspaces by a two-layer visual dictionary, and employs weighted-semantic locality sensitive hashing in each subspace to index, thereby forming a hierarchical index structure. The proposed algorithm can rapidly converge the target to a small range in the process of large-scale retrieval and make accurate queries, which greatly improves the retrieval speed. Then, dynamic variable-length hashing codes are applied in a hashing table to retrieve multiple hashing buckets, which can reduce the number of hashing tables and improve the retrieval speed based on guaranteeing the retrieval performance. Through these two improvements, the retrieval speed can be greatly improved. In addition, to solve the random instability of LSH, statistical information reflecting the semantics of reference feature space is introduced into the LSH function, and a simple projection semantic-hashing function is designed to ensure the stability of the retrieval performance.ResultExperiment results on Holidays, Oxford5k, and DataSetB datasets show that the retrieval accuracy and retrieval speed are effectively improved in comparison with the representative unsupervised hash methods. WSLSH achieves the shortest average retrieval time (0.034 25 s) on DataSetB. When the encoding length is 64 bit, the mean average precision (mAP) of the WSLSH algorithm is improved by 1.2%32.6%, 1.7%19.1%, and 2.6%28.6%. WSLSH is not highly sensitive to the size change of the reference feature subset involved, so the retrieval time does not change significantly, which reflects the retrieval advantage of WSLSH for large-scale datasets. With the increase of encoding length, the performance of the WSLSH algorithm is improved gradually. When the encoding length is 64 bit, the WSLSH algorithm obtains the highest precision and recall on the three datasets, which is superior to other compared methods.ConclusionThe LSH algorithm is improved by performing feature space division twice, weighting the number of hash indexes of reference features, dynamically using variable-length hash codes, and introducing a simple-projection semantic-hash function. Thus, the proposed WSLSH algorithm has faster retrieval speed. In the case of long encoding length, WSLSH achieves better performance than the compared works and shows high application value for large-scale image datasets.关键词:feature space partitioning;locality-sensitive hashing(LSH);dynamic variable-length hashing code;visual retrieval;nearest neighbor search77|163|1更新时间:2024-05-07

摘要:ObjectiveVisual retrieval methods need to accurately and efficiently retrieve the most relevant visual content from large-scale images or video datasets. However, due to a large amount of image data and high feature dimensionality in the dataset, existing methods face difficulty in ensuring fast retrieval speed and good retrieval results. Hashing is a widely studied solution for approximate nearest neighbor search, which aims to convert high-dimensional data items into a low-dimensional representation or a hash code consisting of a set of bit sequences. Locality-sensitive hashing (LSH) is a data-independent, unsupervised hashing algorithm that provides asymptotic theoretical properties, thereby ensuring performance. LSH is considered as one of the most common methods for fast nearest-neighbor search in high-dimensional space. Nevertheless, if the number of hash functions k is set too small, it leads to too many data items falling into each hash bucket, thus increasing the query response time. By contrast, if k is set too large, the number of data items in each hash bucket is reduced. Moreover, to achieve the desired search accuracy, LSH usually needs to use long hash codes, thereby reducing the recall rate. Although the use of multiple hash tables can alleviate this problem, it significantly increases memory cost and query time. Besides, due to the semantic gap between the visual semantic space and metric space, LSH may not obtain good search performance.MethodFor visual retrieval of high-dimensional data, we first propose a hash algorithm called weighted semantic locality-sensitive hashing (WSLSH), which is based on feature space partitioning, to address the aforementioned drawbacks of LSH. While building the indices, WSLSH considers the distance relationship between reference and query features, divides the reference feature space into two subspaces by a two-layer visual dictionary, and employs weighted-semantic locality sensitive hashing in each subspace to index, thereby forming a hierarchical index structure. The proposed algorithm can rapidly converge the target to a small range in the process of large-scale retrieval and make accurate queries, which greatly improves the retrieval speed. Then, dynamic variable-length hashing codes are applied in a hashing table to retrieve multiple hashing buckets, which can reduce the number of hashing tables and improve the retrieval speed based on guaranteeing the retrieval performance. Through these two improvements, the retrieval speed can be greatly improved. In addition, to solve the random instability of LSH, statistical information reflecting the semantics of reference feature space is introduced into the LSH function, and a simple projection semantic-hashing function is designed to ensure the stability of the retrieval performance.ResultExperiment results on Holidays, Oxford5k, and DataSetB datasets show that the retrieval accuracy and retrieval speed are effectively improved in comparison with the representative unsupervised hash methods. WSLSH achieves the shortest average retrieval time (0.034 25 s) on DataSetB. When the encoding length is 64 bit, the mean average precision (mAP) of the WSLSH algorithm is improved by 1.2%32.6%, 1.7%19.1%, and 2.6%28.6%. WSLSH is not highly sensitive to the size change of the reference feature subset involved, so the retrieval time does not change significantly, which reflects the retrieval advantage of WSLSH for large-scale datasets. With the increase of encoding length, the performance of the WSLSH algorithm is improved gradually. When the encoding length is 64 bit, the WSLSH algorithm obtains the highest precision and recall on the three datasets, which is superior to other compared methods.ConclusionThe LSH algorithm is improved by performing feature space division twice, weighting the number of hash indexes of reference features, dynamically using variable-length hash codes, and introducing a simple-projection semantic-hash function. Thus, the proposed WSLSH algorithm has faster retrieval speed. In the case of long encoding length, WSLSH achieves better performance than the compared works and shows high application value for large-scale image datasets.关键词:feature space partitioning;locality-sensitive hashing(LSH);dynamic variable-length hashing code;visual retrieval;nearest neighbor search77|163|1更新时间:2024-05-07

Image Processing and Coding

-

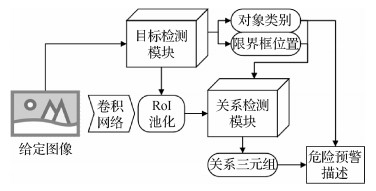

摘要:ObjectiveThe past decade has seen a steady increase in deep learning areas, where extensive research has been published to improve the learning capabilities of deep neural networks. Thus, a growing number of regulators in the electric power industry utilize such deep learning techniques with powerful recognition and detection capabilities to build their surveillance systems, which greatly reduce the risk of major accidents in daily work. However, most of the current early-warning systems are based on object detection technologies, which can only provide annotations of dangerous targets within the image, ignoring the significant information about unary relationships of electrical equipment and binary relationships between paired objects. This condition limits the capabilities of emergency recognition and forewarning. With the presence of powerful object detectors such as Faster region convolutional neural network (R-CNN) and huge visual datasets such as visual genome, visual relationship detection has attracted much attention in recent years. By utilizing the basic building blocks for single-object detection and understanding, visual relationship detection aims to not only accurately localize a pair of objects but also precisely determine the predicate between them. As a mid-level learning task, visual relationship detection can capture the detailed semantics of visual scenes by explicitly modeling objects along with their relationships with other objects. This approach bridges the gap between low-level visual tasks and high-level vision-language tasks, as well as helps machines to solve more challenging visual tasks such as image captioning, visual question answering, and image generation. However, the difficulty is in developing robust algorithms to recognize relationships between paired objects with challenging factors, such as highly diverse visual features in the same predicate category, incomplete annotation and long-tailed distribution in the dataset, and optimum predicate matching problem. Although numerous methods have been proposed to build efficient relationship detectors, few of them concentrate on applying detection technologies to actual use.MethodDifferent from existing methods, our method introduces the visual relationship detection technology into current early-warning systems. Specifically, our method not only identifies dangerous objects but also recognizes the potential unary or binary relationships that may cause an accident. To sum up, we propose a two-stage emergency recognition and forewarning system for the electric power industry. The system consists of a pre-trained object-detection module and a relationship detection module. The pipeline of our system mainly includes three stages. First, we train an object-detection module based on Faster R-CNN in advance. When given an image, the pre-trained object detector localizes all the object bounding boxes and annotates their categories. Then, the relationship-detection module integrates multiple cues (visual appearance, spatial location, and semantic embedding) to compute the predicate confidence of all the object pairs, and output the top instances as the relationship predictions. Finally, based on the targets and relationship information provided by the detectors, our system performs emergency prediction and generates a warning description that may help regulators in the electric power industry to make suitable decisions.ResultWe conduct several experiments to prove the efficiency and superiority of our method. First, we collect and build a dataset consisting of large amounts of images from multiple scenarios in the electric power industry. Using instructions from experts, we define and label the relationship categories that may pose risks to the images in the dataset. Then, according to the number of objects forming a relationship, we divide the dataset into two parts. Thus, our experiments involve two relevant tasks to evaluate the proposed method: unary relationship detection and binary relationship detection. For the unary relationship detection, we use precision and recall as thee valuation metrics. For the binary relationship detection, the evaluation metrics are Recall@5 and Recall@10. As our proposed relationship-detection module contains multiple cues to learn the holistic representation of a relationship instance, we conduct ablation experiments to explore their influence on the final performance. Experiment results show that the detector that uses visual, spatial, and semantic features as input achieve the best performance of 86.80% in Recall@5 and 93.93% in Recall@10.ConclusionExtensive experiments show that our proposed method is efficient and effective in detecting defective electrical equipment and dangerous relationships between paired objects. Moreover, we formulate a pre-defined rule to generate the early-warning description according to the results of the object and relationship detectors. All of the proposed methods can help regulators take proper and timely actions to avoid harmful accidents in the electric power industry.关键词:emergency early-warning;object detection;visual relationship detection;multimodal feature fusion;multi-label margin loss66|206|2更新时间:2024-05-07

摘要:ObjectiveThe past decade has seen a steady increase in deep learning areas, where extensive research has been published to improve the learning capabilities of deep neural networks. Thus, a growing number of regulators in the electric power industry utilize such deep learning techniques with powerful recognition and detection capabilities to build their surveillance systems, which greatly reduce the risk of major accidents in daily work. However, most of the current early-warning systems are based on object detection technologies, which can only provide annotations of dangerous targets within the image, ignoring the significant information about unary relationships of electrical equipment and binary relationships between paired objects. This condition limits the capabilities of emergency recognition and forewarning. With the presence of powerful object detectors such as Faster region convolutional neural network (R-CNN) and huge visual datasets such as visual genome, visual relationship detection has attracted much attention in recent years. By utilizing the basic building blocks for single-object detection and understanding, visual relationship detection aims to not only accurately localize a pair of objects but also precisely determine the predicate between them. As a mid-level learning task, visual relationship detection can capture the detailed semantics of visual scenes by explicitly modeling objects along with their relationships with other objects. This approach bridges the gap between low-level visual tasks and high-level vision-language tasks, as well as helps machines to solve more challenging visual tasks such as image captioning, visual question answering, and image generation. However, the difficulty is in developing robust algorithms to recognize relationships between paired objects with challenging factors, such as highly diverse visual features in the same predicate category, incomplete annotation and long-tailed distribution in the dataset, and optimum predicate matching problem. Although numerous methods have been proposed to build efficient relationship detectors, few of them concentrate on applying detection technologies to actual use.MethodDifferent from existing methods, our method introduces the visual relationship detection technology into current early-warning systems. Specifically, our method not only identifies dangerous objects but also recognizes the potential unary or binary relationships that may cause an accident. To sum up, we propose a two-stage emergency recognition and forewarning system for the electric power industry. The system consists of a pre-trained object-detection module and a relationship detection module. The pipeline of our system mainly includes three stages. First, we train an object-detection module based on Faster R-CNN in advance. When given an image, the pre-trained object detector localizes all the object bounding boxes and annotates their categories. Then, the relationship-detection module integrates multiple cues (visual appearance, spatial location, and semantic embedding) to compute the predicate confidence of all the object pairs, and output the top instances as the relationship predictions. Finally, based on the targets and relationship information provided by the detectors, our system performs emergency prediction and generates a warning description that may help regulators in the electric power industry to make suitable decisions.ResultWe conduct several experiments to prove the efficiency and superiority of our method. First, we collect and build a dataset consisting of large amounts of images from multiple scenarios in the electric power industry. Using instructions from experts, we define and label the relationship categories that may pose risks to the images in the dataset. Then, according to the number of objects forming a relationship, we divide the dataset into two parts. Thus, our experiments involve two relevant tasks to evaluate the proposed method: unary relationship detection and binary relationship detection. For the unary relationship detection, we use precision and recall as thee valuation metrics. For the binary relationship detection, the evaluation metrics are Recall@5 and Recall@10. As our proposed relationship-detection module contains multiple cues to learn the holistic representation of a relationship instance, we conduct ablation experiments to explore their influence on the final performance. Experiment results show that the detector that uses visual, spatial, and semantic features as input achieve the best performance of 86.80% in Recall@5 and 93.93% in Recall@10.ConclusionExtensive experiments show that our proposed method is efficient and effective in detecting defective electrical equipment and dangerous relationships between paired objects. Moreover, we formulate a pre-defined rule to generate the early-warning description according to the results of the object and relationship detectors. All of the proposed methods can help regulators take proper and timely actions to avoid harmful accidents in the electric power industry.关键词:emergency early-warning;object detection;visual relationship detection;multimodal feature fusion;multi-label margin loss66|206|2更新时间:2024-05-07 -

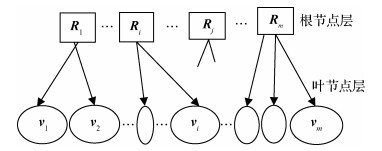

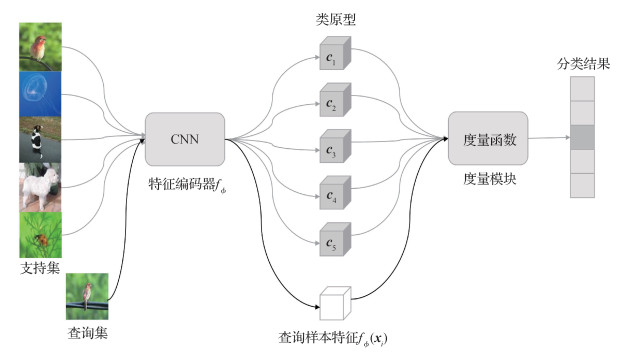

摘要:ObjectiveDeep learning has made remarkable achievements in many fields such as image recognition, object detection, and speech recognition. However, most of the extraordinary achievements of these models depend on extraordinary data size. Existing deep-learning models often need large-scale training data. Building large-scale training data sets not only necessitates a large amount of manpower and material resources but are also not feasible in scenarios such as obtaining a large number of rare image class data samples. Inspired by the fact that human children can learn how to distinguish an object through a small number of samples, few-shot image classification aims to identify target categories with only a few labeled samples. Image recognition based on few-shot learning solves the problem in which a deep learning model needs large-scale training data. At present, the mainstream methods of few-shot image recognition are based on meta learning, which mainly includes three methods: meta learning based on metric, meta learning based on optimization, and meta learning based on model. The method of meta learning is divided into two stages: training and testing. However, most of the metric-based meta-learning methods do not use few shots of the target class in the training stage, which leads to a lack of good generalization ability of these models. These metric-based meta-learning models often show high accuracy in the training stage, but the recognition effect for few-shot image categories in the test stage is poor. The deep feature representation learned by the models cannot be effectively generalized to the target class. To improve the generalization ability of the few-shot learning image recognition method, this study proposes a few-shot learning method based on class semantic similarity supervision.MethodThe method proposed in this paper mainly includes two parts: the first step is to obtain the class similarity matrix between the image dataset classes, and the second step is to use the class similarity matrix as additional supervision information to train the few-shot image recognition model. The details are as follows: a common crawl database containing one billion level webpage data is used to train an unsupervised word-vector learning algorithm GloVe model (global vectors for word representation), which generates 300 dimensional vectors for every word. For classes whose names contain more than one word, we match all the words in the training GloVe model and find their word-embedding vectors. By averaging these word-embedding vectors, we obtain the word embedding vector of the class name. Then, the cosine distance between the word-embedding vectors of classes is used to represent the semantic similarity between classes. In addition to the negative logarithm loss caused by the category labels of the original prototypical networks, this study introduces the semantic similarity measure between categories as the extra supervision information in the training stage of the model to establish the implicit relationship between the source class and few-shot target class. This condition enables the model to have better generalization ability. Furthermore, the loss of class semantic similarity can constrain the features of samples within and between classes learned by the model so that the sample features within each class are more similar, and the distribution of sample features between different classes is more consistent with the semantic similarity between categories. By introducing the loss of class semantic similarity to supervise the training process of the model, our proposed model can implicitly learn the relationship between different classes and obtain a feature representation with more constraint and generalization abilities of class sample features.ResultThis study compared the proposed model with several state-of-the-art few-shot image classification models, including prototypical, matching, and relation networks and other classic methods. In this study, a large number of experiments are conducted on miniImageNet and tieredImageNet. The results show that the proposed method is effective and competitive with the current advanced methods. To ensure fair comparison with the advanced methods, the classical paradigm of meta learning is used to train and test the model, and many experiments are conducted on the widely used 5-way 1-shot and 5-way 5-shot settings. The experimental results show that on the 5-way 1-shot and 5-way 5-shot settings of the miniImageNet dataset, the classification accuracy of the proposed method is improved by 1.9% and 0.32%, respectively, compared with the classical few-shot image recognition meta-learning method prototypical networks. In the tieredImageNet dataset on the 5-way 1-shot setting, the classification accuracy rate is improved by 0.33% compared with that in the prototypical networks. On the 5-way 5-shot setting of the tieredImageNet dataset, the proposed model achieves a competitive result compared with the prototypical networks. At the same time, several ablation experiments are conducted to verify the effectiveness of the key modules of the proposed method, and the influence of prior information of class semantic similarity on the experimental results is analyzed from multiple perspectives.ConclusionThis study proposed a few-shot image recognition model based on class semantic similarity supervision, which improves the generalization ability and class-feature constraint ability of the few-shot image recognition model. Experimental results show that the proposed method improves the accuracy of few-shot image recognition.关键词:few shot learning;image recognition;feature representation;class semantic similarity supervision;generalization ability103|111|5更新时间:2024-05-07

摘要:ObjectiveDeep learning has made remarkable achievements in many fields such as image recognition, object detection, and speech recognition. However, most of the extraordinary achievements of these models depend on extraordinary data size. Existing deep-learning models often need large-scale training data. Building large-scale training data sets not only necessitates a large amount of manpower and material resources but are also not feasible in scenarios such as obtaining a large number of rare image class data samples. Inspired by the fact that human children can learn how to distinguish an object through a small number of samples, few-shot image classification aims to identify target categories with only a few labeled samples. Image recognition based on few-shot learning solves the problem in which a deep learning model needs large-scale training data. At present, the mainstream methods of few-shot image recognition are based on meta learning, which mainly includes three methods: meta learning based on metric, meta learning based on optimization, and meta learning based on model. The method of meta learning is divided into two stages: training and testing. However, most of the metric-based meta-learning methods do not use few shots of the target class in the training stage, which leads to a lack of good generalization ability of these models. These metric-based meta-learning models often show high accuracy in the training stage, but the recognition effect for few-shot image categories in the test stage is poor. The deep feature representation learned by the models cannot be effectively generalized to the target class. To improve the generalization ability of the few-shot learning image recognition method, this study proposes a few-shot learning method based on class semantic similarity supervision.MethodThe method proposed in this paper mainly includes two parts: the first step is to obtain the class similarity matrix between the image dataset classes, and the second step is to use the class similarity matrix as additional supervision information to train the few-shot image recognition model. The details are as follows: a common crawl database containing one billion level webpage data is used to train an unsupervised word-vector learning algorithm GloVe model (global vectors for word representation), which generates 300 dimensional vectors for every word. For classes whose names contain more than one word, we match all the words in the training GloVe model and find their word-embedding vectors. By averaging these word-embedding vectors, we obtain the word embedding vector of the class name. Then, the cosine distance between the word-embedding vectors of classes is used to represent the semantic similarity between classes. In addition to the negative logarithm loss caused by the category labels of the original prototypical networks, this study introduces the semantic similarity measure between categories as the extra supervision information in the training stage of the model to establish the implicit relationship between the source class and few-shot target class. This condition enables the model to have better generalization ability. Furthermore, the loss of class semantic similarity can constrain the features of samples within and between classes learned by the model so that the sample features within each class are more similar, and the distribution of sample features between different classes is more consistent with the semantic similarity between categories. By introducing the loss of class semantic similarity to supervise the training process of the model, our proposed model can implicitly learn the relationship between different classes and obtain a feature representation with more constraint and generalization abilities of class sample features.ResultThis study compared the proposed model with several state-of-the-art few-shot image classification models, including prototypical, matching, and relation networks and other classic methods. In this study, a large number of experiments are conducted on miniImageNet and tieredImageNet. The results show that the proposed method is effective and competitive with the current advanced methods. To ensure fair comparison with the advanced methods, the classical paradigm of meta learning is used to train and test the model, and many experiments are conducted on the widely used 5-way 1-shot and 5-way 5-shot settings. The experimental results show that on the 5-way 1-shot and 5-way 5-shot settings of the miniImageNet dataset, the classification accuracy of the proposed method is improved by 1.9% and 0.32%, respectively, compared with the classical few-shot image recognition meta-learning method prototypical networks. In the tieredImageNet dataset on the 5-way 1-shot setting, the classification accuracy rate is improved by 0.33% compared with that in the prototypical networks. On the 5-way 5-shot setting of the tieredImageNet dataset, the proposed model achieves a competitive result compared with the prototypical networks. At the same time, several ablation experiments are conducted to verify the effectiveness of the key modules of the proposed method, and the influence of prior information of class semantic similarity on the experimental results is analyzed from multiple perspectives.ConclusionThis study proposed a few-shot image recognition model based on class semantic similarity supervision, which improves the generalization ability and class-feature constraint ability of the few-shot image recognition model. Experimental results show that the proposed method improves the accuracy of few-shot image recognition.关键词:few shot learning;image recognition;feature representation;class semantic similarity supervision;generalization ability103|111|5更新时间:2024-05-07 -

摘要:ObjectiveObject distance estimation is a fundamental problem in 3D vision. However, most successful object distance estimators need extra 3D information from active depth cameras or laser scanner, which increases the cost. Stereo vision is a convenient and cheap solution for this problem. Modern object distance estimation solutions are mainly based on deep neural network, which provides better accuracy than traditional methods. Deep learning-based solutions are of two main types. The first solution is combining a 2D object detector and a stereo image disparity estimator. The disparity estimator outputs depth information of the image, and the object detector detects object boxes or masks from the image. Then, the detected object boxes or masks are applied to the depth image to extract the pixel depth in the detected box, are then sorted, and the closest is selected to represent the distance of the object. However, such systems are not accurate enough to solve this problem according to the experiments. The second solution is to use a monocular 3D object detector. Such detectors can output 3D bounding boxes of objects, which indicate their distance. 3D object detectors are more accurate, but need annotations of 3D bounding box coordinates for training, which require special devices to collect data and entail high labelling costs. Therefore, we need a solution that has good accuracy while keeping the simplicity of model training.MethodWe propose a region convolutional neural network(R-CNN)-based network to perform object detection and distance estimation from stereo images simultaneously. This network can be trained only using object distance labels, which is easy to apply to many fields such as surveillance scenes and robot motion. We utilize stereo region proposal network to extract proposals of the corresponding target bounding box from the left view and right view images in one step. Then, a stereo bounding-box regression module is used to regress corresponding bounding-box coordinates simultaneously. The disparity could be calculated from the corresponding bounding box coordinate at x axis, but the obtained distance from disparity may be inaccurate due to the reciprocal relation between depth and disparity. Therefore, we propose a disparity estimation branch to estimate object disparity accurately. This branch estimates object-wise disparity from local object features from corresponding areas in the left view and right view images. This process can be treated as regression, so we can use a similar network structure as the stereo bounding-box regression module. However, the disparity estimated by this branch is still inaccurate. Inspired by other disparity image estimation methods, we propose to use a similar structure as disparity image estimation networks in this module. We use groupwise correlation and 3D convolutional stacked-hourglass network structure to construct this disparity estimation branch.ResultWe validated and trained our method on Karlsruhe Institute of Technology and Toyota Technological Institute(KITTI) dataset to show that our network is accurate for this task. We compare our method with other types of methods, including disparity image estimation-based methods and 3D object detection-based methods. We also provide qualitative experiment results by visualizing distance-estimation errors on the left view image. Our method outperforms disparity image estimation-based methods by a large scale, and is comparable with or superior to 3D object detection-based methods, which require 3D box annotation. In addition, we also compare experiments between different disparity estimation solutions proposed in this paper, showing that our proposed disparity estimation branch helps our network to obtain much more robust object distance, and the network structure based on 3D convolutional stacked-hourglass further improves the object-distance estimation accuracy. To prove that our method can be applied to surveillance stereo-object distance estimation, we collect and labeled a new dataset containing surveillance pedestrian scenes. The dataset contains 3 265 images shot by a stereo camera, and we label all the pedestrians in the left-view images with their bounding box as well as the pixel position of their head and foot, which helps to recover the pedestrian distance from the disparity image. We perform similar experiments on this dataset, which proved that our method can be applied to surveillance scenes effectively and accurately. As this dataset does not contain 3D bounding box annotation, 3D object detection-based methods cannot be applied in this scenario.ConclusionIn this study, we propose an R-CNN-based network to perform object detection and distance estimation simultaneously from stereo images. The experiment results show that our model is accurate enough and easy to train and apply to other fields.关键词:stereo vision;object distance estimation;disparity estimation;deep neural network;3D convolution;surveillance scene115|177|3更新时间:2024-05-07

摘要:ObjectiveObject distance estimation is a fundamental problem in 3D vision. However, most successful object distance estimators need extra 3D information from active depth cameras or laser scanner, which increases the cost. Stereo vision is a convenient and cheap solution for this problem. Modern object distance estimation solutions are mainly based on deep neural network, which provides better accuracy than traditional methods. Deep learning-based solutions are of two main types. The first solution is combining a 2D object detector and a stereo image disparity estimator. The disparity estimator outputs depth information of the image, and the object detector detects object boxes or masks from the image. Then, the detected object boxes or masks are applied to the depth image to extract the pixel depth in the detected box, are then sorted, and the closest is selected to represent the distance of the object. However, such systems are not accurate enough to solve this problem according to the experiments. The second solution is to use a monocular 3D object detector. Such detectors can output 3D bounding boxes of objects, which indicate their distance. 3D object detectors are more accurate, but need annotations of 3D bounding box coordinates for training, which require special devices to collect data and entail high labelling costs. Therefore, we need a solution that has good accuracy while keeping the simplicity of model training.MethodWe propose a region convolutional neural network(R-CNN)-based network to perform object detection and distance estimation from stereo images simultaneously. This network can be trained only using object distance labels, which is easy to apply to many fields such as surveillance scenes and robot motion. We utilize stereo region proposal network to extract proposals of the corresponding target bounding box from the left view and right view images in one step. Then, a stereo bounding-box regression module is used to regress corresponding bounding-box coordinates simultaneously. The disparity could be calculated from the corresponding bounding box coordinate at x axis, but the obtained distance from disparity may be inaccurate due to the reciprocal relation between depth and disparity. Therefore, we propose a disparity estimation branch to estimate object disparity accurately. This branch estimates object-wise disparity from local object features from corresponding areas in the left view and right view images. This process can be treated as regression, so we can use a similar network structure as the stereo bounding-box regression module. However, the disparity estimated by this branch is still inaccurate. Inspired by other disparity image estimation methods, we propose to use a similar structure as disparity image estimation networks in this module. We use groupwise correlation and 3D convolutional stacked-hourglass network structure to construct this disparity estimation branch.ResultWe validated and trained our method on Karlsruhe Institute of Technology and Toyota Technological Institute(KITTI) dataset to show that our network is accurate for this task. We compare our method with other types of methods, including disparity image estimation-based methods and 3D object detection-based methods. We also provide qualitative experiment results by visualizing distance-estimation errors on the left view image. Our method outperforms disparity image estimation-based methods by a large scale, and is comparable with or superior to 3D object detection-based methods, which require 3D box annotation. In addition, we also compare experiments between different disparity estimation solutions proposed in this paper, showing that our proposed disparity estimation branch helps our network to obtain much more robust object distance, and the network structure based on 3D convolutional stacked-hourglass further improves the object-distance estimation accuracy. To prove that our method can be applied to surveillance stereo-object distance estimation, we collect and labeled a new dataset containing surveillance pedestrian scenes. The dataset contains 3 265 images shot by a stereo camera, and we label all the pedestrians in the left-view images with their bounding box as well as the pixel position of their head and foot, which helps to recover the pedestrian distance from the disparity image. We perform similar experiments on this dataset, which proved that our method can be applied to surveillance scenes effectively and accurately. As this dataset does not contain 3D bounding box annotation, 3D object detection-based methods cannot be applied in this scenario.ConclusionIn this study, we propose an R-CNN-based network to perform object detection and distance estimation simultaneously from stereo images. The experiment results show that our model is accurate enough and easy to train and apply to other fields.关键词:stereo vision;object distance estimation;disparity estimation;deep neural network;3D convolution;surveillance scene115|177|3更新时间:2024-05-07 -

摘要:ObjectiveText can be seen everywhere, such as on street signs, billboards, newspapers, and other items. The text on these items expresses the information they intend to convey. The ability of text detection determines the level of text recognition and understanding of the scene. With the rapid development of modern technologies such as computer vision and internet of things, many emerging application scenarios need to extract text information from images. In recent years, some new methods for detecting scene text have been proposed. However, many of these methods are slow in detection because of the complexity of the large post-processing methods of the model, which limits their actual deployment. On the other hand, the previous high-efficiency text detectors mainly used quadrilateral bounding boxes for prediction, and accurately predicting arbitrary-shaped scenes is difficult.MethodIn this paper, an efficient arbitrary shape text detector called non-local pixel aggregation network (non-local PAN) is proposed. Non-local PAN follows a segmentation-based method to detect scene text instances. To increase the detection speed, the backbone network must be a lightweight network. However, the presentation capabilities of lightweight backbone networks are usually weak. Therefore, a non-local module is added to the backbone network to enhance its ability to extract features. Resnet-18 is used as the backbone network of non-local PAN, and non-local modules are embedded before the last residual block of the third layer. In addition, a feature-vector fusion module is designed to fuse feature vectors of different levels to enhance the feature expression of scene texts of different scales. The feature-vector fusion module is formed by concatenating multiple feature-vector fusion blocks. Causal convolution is the core component of the feature-vector fusion block. After training, the method can predict the fused feature vector based on the previously input feature vector. This study also uses a lightweight segmentation head that can effectively process features with a small computational cost. The segmentation head contains two key modules, namely, feature pyramid enhancement module (FPEM) and feature fusion module (FFM). FPEM is cascadable and has a low computational cost. It can be attached behind the backbone network to deepen the characteristics of different scales and make the network more expressive. Then, FFM merges the features generated by FPEM at different depths into the final features for segmentation. Non-local PAN uses the predicted text area to describe the complete shape of the text instance and predicts the core of the text to distinguish various text instances. The network also predicts the similarity vector of each text pixel to guide each pixel to the correct core.ResultThis method is compared with other methods on three scene-text datasets, and it has outstanding performance in speed and accuracy. On the International Conference on Document Analysis and Recognition(ICDAR) 2015 dataset, the F value of this method is 0.9% higher than that of the best method, and the detection speed reaches 23.1 frame/s. On the Curve Text in the Wild(CTW) 1500 dataset, the F value of this method is 1.2% higher than that of the best method, and the detection speed reaches 71.8 frame/s. On the total-text dataset, the F value of this method is 1.3% higher than that of the best method, and the detection speed reaches 34.3 frame/s, which is far beyond the result of other methods. In addition, we design parameter setting experiments to explore the best location for non-local module embedding. Experiments have proved that the effect of embedding the non-local module is better than non-embedding, indicating that non-local modules play an active role in the detection process. According to the detection accuracy, the effect of embedding non-local blocks into the second, third, and fourth layers of ResNet-18 is significant, while the effect of embedding the fifth layer is not obvious. Among the methods, embedding non-local blocks in the third layer has the best effect. We designed ablation experiments on the ICDAR 2015 dataset for the non-local and feature-vector fusion modules. The experimental results prove that the superiority of the non-local module does not come from deepening the network but from its own structural characteristics. The feature vector fusion module also plays an active role in the scene text-detection process, which combines feature maps of different scales to enhance the feature expression of scene texts with variable scales.ConclusionIn this paper, an efficient text detection method for arbitrary shape scene is proposed, which considers accuracy and realtime. The experimental results show that the performance of our model is better than that of previous methods, and our model is superior in accuracy and speed.关键词:object detection;scene text detection;neural network;non-local module;pixel aggregation;real-time detection;arbitrary shape62|47|4更新时间:2024-05-07

摘要:ObjectiveText can be seen everywhere, such as on street signs, billboards, newspapers, and other items. The text on these items expresses the information they intend to convey. The ability of text detection determines the level of text recognition and understanding of the scene. With the rapid development of modern technologies such as computer vision and internet of things, many emerging application scenarios need to extract text information from images. In recent years, some new methods for detecting scene text have been proposed. However, many of these methods are slow in detection because of the complexity of the large post-processing methods of the model, which limits their actual deployment. On the other hand, the previous high-efficiency text detectors mainly used quadrilateral bounding boxes for prediction, and accurately predicting arbitrary-shaped scenes is difficult.MethodIn this paper, an efficient arbitrary shape text detector called non-local pixel aggregation network (non-local PAN) is proposed. Non-local PAN follows a segmentation-based method to detect scene text instances. To increase the detection speed, the backbone network must be a lightweight network. However, the presentation capabilities of lightweight backbone networks are usually weak. Therefore, a non-local module is added to the backbone network to enhance its ability to extract features. Resnet-18 is used as the backbone network of non-local PAN, and non-local modules are embedded before the last residual block of the third layer. In addition, a feature-vector fusion module is designed to fuse feature vectors of different levels to enhance the feature expression of scene texts of different scales. The feature-vector fusion module is formed by concatenating multiple feature-vector fusion blocks. Causal convolution is the core component of the feature-vector fusion block. After training, the method can predict the fused feature vector based on the previously input feature vector. This study also uses a lightweight segmentation head that can effectively process features with a small computational cost. The segmentation head contains two key modules, namely, feature pyramid enhancement module (FPEM) and feature fusion module (FFM). FPEM is cascadable and has a low computational cost. It can be attached behind the backbone network to deepen the characteristics of different scales and make the network more expressive. Then, FFM merges the features generated by FPEM at different depths into the final features for segmentation. Non-local PAN uses the predicted text area to describe the complete shape of the text instance and predicts the core of the text to distinguish various text instances. The network also predicts the similarity vector of each text pixel to guide each pixel to the correct core.ResultThis method is compared with other methods on three scene-text datasets, and it has outstanding performance in speed and accuracy. On the International Conference on Document Analysis and Recognition(ICDAR) 2015 dataset, the F value of this method is 0.9% higher than that of the best method, and the detection speed reaches 23.1 frame/s. On the Curve Text in the Wild(CTW) 1500 dataset, the F value of this method is 1.2% higher than that of the best method, and the detection speed reaches 71.8 frame/s. On the total-text dataset, the F value of this method is 1.3% higher than that of the best method, and the detection speed reaches 34.3 frame/s, which is far beyond the result of other methods. In addition, we design parameter setting experiments to explore the best location for non-local module embedding. Experiments have proved that the effect of embedding the non-local module is better than non-embedding, indicating that non-local modules play an active role in the detection process. According to the detection accuracy, the effect of embedding non-local blocks into the second, third, and fourth layers of ResNet-18 is significant, while the effect of embedding the fifth layer is not obvious. Among the methods, embedding non-local blocks in the third layer has the best effect. We designed ablation experiments on the ICDAR 2015 dataset for the non-local and feature-vector fusion modules. The experimental results prove that the superiority of the non-local module does not come from deepening the network but from its own structural characteristics. The feature vector fusion module also plays an active role in the scene text-detection process, which combines feature maps of different scales to enhance the feature expression of scene texts with variable scales.ConclusionIn this paper, an efficient text detection method for arbitrary shape scene is proposed, which considers accuracy and realtime. The experimental results show that the performance of our model is better than that of previous methods, and our model is superior in accuracy and speed.关键词:object detection;scene text detection;neural network;non-local module;pixel aggregation;real-time detection;arbitrary shape62|47|4更新时间:2024-05-07 -