最新刊期

卷 26 , 期 5 , 2021

-

摘要:Participating media are frequent in real-world scenes, such as milk, fruit juices, oil, or muddy water in river or ocean scenes. Realistic rendering of participating media is one of the important aspects in the rendering domain. Incoming light interacts with these participating media in a more complex manner than surface rendering: it is refracted at the boundary, absorbed, and scattered as it travels inside the medium. These physical phenomena have different contributions to the overall aspect of the material: refraction focuses light in several parts of the medium, creating high-frequency events, or volume caustics. Scattering blurs incoming light, spreading its contribution. Absorption reduces light intensity as it travels inside the medium. In recent years, several algorithms, such as virtual ray lights (VRL), several extensions to photon mapping culminating with unified points, beams, and paths (UPBP), and manifold exploration Metropolis light transport(MEMLT), have been introduced for rendering participating media. All these methods have greatly improved the simulation of participating media but still encounter problems for simulation of materials with a high albedo, where multiple scattering dominates or high-frequency volumetric caustics effects. Another group of work is called diffusion-based methods. These methods are fast and designed to work with materials with a high albedo. However, these methods produce less accurate results, especially for highly anisotropic media, whereas diffusion-theory-based methods use similarity theory for anisotropic media, which leads to increased inaccuracy. In this paper, several efficient rendering methods are introduced for homogeneous participating media rendering. The first is point-based rendering method for participating media. The point-based method is different from the previous two frameworks (Monte Carlo rendering and density estimation). It first distributes several points in the participating media to generate a point cloud, organizes the point cloud into spatial hierarchy, and then uses it to accelerate the single, second, and multiple scattering computation. For multiple scattering simulation, a precomputed multiple scattering distribution representation in infinite media is presented. With further GPU(graphics processing unit) implementation, this method can achieve interactive efficiency and support the editing of materials and light sources for arbitrary homogeneous participating media, from high-scattered media to high-absorbed media and from isotropic media to highly anisotropic media. The first work is an extension of the point-based method from the surface rendering to volume rendering. Different from the two other frameworks, it is deterministic and noise-free. However, this method is biased. Its target application is material editing or light editing or high-quality rendering with a limited time budget. The second is a precomputed model based on multiple reflections. It precomputes multiple scattering distributions in infinite participating media, proposes a more compact representation than the prior works by analyzing the symmetric of the light distribution, and decreases the number of dimensions from four to three. The precomputed model is then applied to various Monte Carlo rendering methods, such as VRL, UPBP, and MEMET. The original algorithms are in charge of low-order scattering, combined with multiple scattering computed using the precomputed model. Results show substantial improvements in convergence speed and memory costs, and a negligible effect on accuracy. This method is especially interesting for materials with a large albedo and a small mean-free-path, where higher-order scattering effects dominate. It has a limited influence but also a limited cost for more transparent materials with a larger mean-free-path. This method can be used for unidirectional rendering algorithms (e.g., path tracing) and bidirectional algorithms (e.g., VRL), but this method has less impressive speedup in unidirectional algorithms because unidirectional rendering algorithms have difficulties in finding a path for participating media with boundaries. The last introduced method is a path guiding method in participating media rendering, which is under the framework of path tracing. In simple terms, the paths become lost in the medium. Path guiding has been proposed for surface rendering to make the convergence faster by guiding the sampling. In this work, a path guiding solution to translucent materials is introduced. It includes two steps, namely, learning and rendering. In the learning step, the radiance distribution in the volume is learned with path tracing, and this 4D distribution is represent with an SD-tree. In the rendering step, this representation is used for sampling the outgoing direction, combining with the phase function sampling by resampled importance sampling. The key insight of resampled importance sampling is about sampling two multiplied high-frequency functions. The proposed method remarkably improves the performance of light transport simulation in participating media, especially for small lights and media with refractive boundaries. This method can handle any homogeneous participating media, from high scattering to low scattering, from high absorption to low absorption, and from isotropic media to highly anisotropic media. Unlike the previous two works, this method is unbiased. However, it can only handle direction sampling with path guiding and leaves the distance sampling with the original method, which can be further improved. The three methods all target the efficient rendering of homogenous participating media but in different methods.关键词:participating media;rendering;Monte Carlo based methods;path guiding;point-based rendering method;multiple scattering;survey;progress128|155|3更新时间:2024-05-07

摘要:Participating media are frequent in real-world scenes, such as milk, fruit juices, oil, or muddy water in river or ocean scenes. Realistic rendering of participating media is one of the important aspects in the rendering domain. Incoming light interacts with these participating media in a more complex manner than surface rendering: it is refracted at the boundary, absorbed, and scattered as it travels inside the medium. These physical phenomena have different contributions to the overall aspect of the material: refraction focuses light in several parts of the medium, creating high-frequency events, or volume caustics. Scattering blurs incoming light, spreading its contribution. Absorption reduces light intensity as it travels inside the medium. In recent years, several algorithms, such as virtual ray lights (VRL), several extensions to photon mapping culminating with unified points, beams, and paths (UPBP), and manifold exploration Metropolis light transport(MEMLT), have been introduced for rendering participating media. All these methods have greatly improved the simulation of participating media but still encounter problems for simulation of materials with a high albedo, where multiple scattering dominates or high-frequency volumetric caustics effects. Another group of work is called diffusion-based methods. These methods are fast and designed to work with materials with a high albedo. However, these methods produce less accurate results, especially for highly anisotropic media, whereas diffusion-theory-based methods use similarity theory for anisotropic media, which leads to increased inaccuracy. In this paper, several efficient rendering methods are introduced for homogeneous participating media rendering. The first is point-based rendering method for participating media. The point-based method is different from the previous two frameworks (Monte Carlo rendering and density estimation). It first distributes several points in the participating media to generate a point cloud, organizes the point cloud into spatial hierarchy, and then uses it to accelerate the single, second, and multiple scattering computation. For multiple scattering simulation, a precomputed multiple scattering distribution representation in infinite media is presented. With further GPU(graphics processing unit) implementation, this method can achieve interactive efficiency and support the editing of materials and light sources for arbitrary homogeneous participating media, from high-scattered media to high-absorbed media and from isotropic media to highly anisotropic media. The first work is an extension of the point-based method from the surface rendering to volume rendering. Different from the two other frameworks, it is deterministic and noise-free. However, this method is biased. Its target application is material editing or light editing or high-quality rendering with a limited time budget. The second is a precomputed model based on multiple reflections. It precomputes multiple scattering distributions in infinite participating media, proposes a more compact representation than the prior works by analyzing the symmetric of the light distribution, and decreases the number of dimensions from four to three. The precomputed model is then applied to various Monte Carlo rendering methods, such as VRL, UPBP, and MEMET. The original algorithms are in charge of low-order scattering, combined with multiple scattering computed using the precomputed model. Results show substantial improvements in convergence speed and memory costs, and a negligible effect on accuracy. This method is especially interesting for materials with a large albedo and a small mean-free-path, where higher-order scattering effects dominate. It has a limited influence but also a limited cost for more transparent materials with a larger mean-free-path. This method can be used for unidirectional rendering algorithms (e.g., path tracing) and bidirectional algorithms (e.g., VRL), but this method has less impressive speedup in unidirectional algorithms because unidirectional rendering algorithms have difficulties in finding a path for participating media with boundaries. The last introduced method is a path guiding method in participating media rendering, which is under the framework of path tracing. In simple terms, the paths become lost in the medium. Path guiding has been proposed for surface rendering to make the convergence faster by guiding the sampling. In this work, a path guiding solution to translucent materials is introduced. It includes two steps, namely, learning and rendering. In the learning step, the radiance distribution in the volume is learned with path tracing, and this 4D distribution is represent with an SD-tree. In the rendering step, this representation is used for sampling the outgoing direction, combining with the phase function sampling by resampled importance sampling. The key insight of resampled importance sampling is about sampling two multiplied high-frequency functions. The proposed method remarkably improves the performance of light transport simulation in participating media, especially for small lights and media with refractive boundaries. This method can handle any homogeneous participating media, from high scattering to low scattering, from high absorption to low absorption, and from isotropic media to highly anisotropic media. Unlike the previous two works, this method is unbiased. However, it can only handle direction sampling with path guiding and leaves the distance sampling with the original method, which can be further improved. The three methods all target the efficient rendering of homogenous participating media but in different methods.关键词:participating media;rendering;Monte Carlo based methods;path guiding;point-based rendering method;multiple scattering;survey;progress128|155|3更新时间:2024-05-07 -

摘要:The study of cloth modeling methods has a long history. Cloth simulation has always been a popular and difficult research topic in computer animation. Improving the quality of computer animation and user experience is of great significance. Cloth is a classic flexible material object, which can be seen almost everywhere in people's daily life. The clothing animation of realistic virtual characters can bring a strong sense of visual reality to the virtual characters and can enhance the user experience. It has very broad application prospects in animation production, game entertainment, film and television, and other fields. In addition, this technology can also be applied to the clothing industry, where virtual clothing can be used to design and display clothing more intuitively. In recent years, with the continuous emergence of applications involving virtual reality and human-computer interaction, especially the emergence of network virtual environments with high interactive characteristics, people's demand for high-quality real-time virtual character clothing animation has increased. Efficiently and realistically simulating the movement of cloth (e.g., flags, clothing, tablecloths) on a computer has become a very challenging subject. Cloth animation is an important branch in the field of computer animation, belonging to the category of soft body fabric material deformation animation. In cloth simulation modeling, the accuracy of cloth simulation and the speed of cloth simulation often restrict each other. At present, some traditional cloth simulation methods can only take into account one of the two, and it is difficult to achieve a balance. Therefore, researchers have found a method that can balance the simulation accuracy and simulation speed to a certain degree. It is the focus of research in cloth simulation technology. When performing simulation modeling for flexible fabrics, constructing an appropriate and accurate modeling method has become the key to cloth simulation technology. After years of development of cloth simulation research, there are currently three mainstream cloth modeling methods: geometric modeling-based methods, physics-based modeling methods, and hybrid-based modeling methods. Hybrid-based modeling methods are a combination between geometry-based methods and physics-based methods. They require more calculation time compared with geometric modeling methods and have a lower accuracy compared with physics-based methods. In cloth simulation research, the main problem at present is how to meet the increasing real-time requirements on the basis of ensuring the cloth simulation effect. In response to this problem, researchers have made contributions in different ways, including the continuous development of numerical integration, from explicit Euler integration, implicit Euler integration to fourth-order Runge-Kutta and Verlet integration. The development of numerical integration has reduced the numerical calculation time in cloth simulation to a certain extent. In recent years, algorithms combined with machine learning have emerged in various fields. In computer animation, especially in the field of cloth simulation, researchers have begun to use the idea of machine learning to optimize cloth modeling. The commonly used methods of machine learning are convolutional neural network, recurrent neural network, back propagation(BP) neural network, and random forest. This study reviews the related work of cloth simulation modeling methods and summarizes the research and development of methods in China and abroad. According to the improvement of the cloth integration method, the improvement of the multi-resolution grid, and the use of machine learning methods, the development of the cloth simulation method is briefly described. According to the characteristics of the integral method and the multi-resolution grid method and the characteristics of the application of machine learning methods in cloth simulation, several major types of improved methods are summarized and prospected accordingly. Researchers have some considerations whether to improve the simulation speed or to ensure the speed to improve the simulation accuracy. Because of their different research entry points for the improvement of cloth modeling methods, their research purposes are also different. This article selects several algorithms to make a corresponding comparison and provides some suggestions for learners to learn from.关键词:virtual simulation;cloth simulation;integration method;multi-resolution grid;machine learning;progress232|288|1更新时间:2024-05-07

摘要:The study of cloth modeling methods has a long history. Cloth simulation has always been a popular and difficult research topic in computer animation. Improving the quality of computer animation and user experience is of great significance. Cloth is a classic flexible material object, which can be seen almost everywhere in people's daily life. The clothing animation of realistic virtual characters can bring a strong sense of visual reality to the virtual characters and can enhance the user experience. It has very broad application prospects in animation production, game entertainment, film and television, and other fields. In addition, this technology can also be applied to the clothing industry, where virtual clothing can be used to design and display clothing more intuitively. In recent years, with the continuous emergence of applications involving virtual reality and human-computer interaction, especially the emergence of network virtual environments with high interactive characteristics, people's demand for high-quality real-time virtual character clothing animation has increased. Efficiently and realistically simulating the movement of cloth (e.g., flags, clothing, tablecloths) on a computer has become a very challenging subject. Cloth animation is an important branch in the field of computer animation, belonging to the category of soft body fabric material deformation animation. In cloth simulation modeling, the accuracy of cloth simulation and the speed of cloth simulation often restrict each other. At present, some traditional cloth simulation methods can only take into account one of the two, and it is difficult to achieve a balance. Therefore, researchers have found a method that can balance the simulation accuracy and simulation speed to a certain degree. It is the focus of research in cloth simulation technology. When performing simulation modeling for flexible fabrics, constructing an appropriate and accurate modeling method has become the key to cloth simulation technology. After years of development of cloth simulation research, there are currently three mainstream cloth modeling methods: geometric modeling-based methods, physics-based modeling methods, and hybrid-based modeling methods. Hybrid-based modeling methods are a combination between geometry-based methods and physics-based methods. They require more calculation time compared with geometric modeling methods and have a lower accuracy compared with physics-based methods. In cloth simulation research, the main problem at present is how to meet the increasing real-time requirements on the basis of ensuring the cloth simulation effect. In response to this problem, researchers have made contributions in different ways, including the continuous development of numerical integration, from explicit Euler integration, implicit Euler integration to fourth-order Runge-Kutta and Verlet integration. The development of numerical integration has reduced the numerical calculation time in cloth simulation to a certain extent. In recent years, algorithms combined with machine learning have emerged in various fields. In computer animation, especially in the field of cloth simulation, researchers have begun to use the idea of machine learning to optimize cloth modeling. The commonly used methods of machine learning are convolutional neural network, recurrent neural network, back propagation(BP) neural network, and random forest. This study reviews the related work of cloth simulation modeling methods and summarizes the research and development of methods in China and abroad. According to the improvement of the cloth integration method, the improvement of the multi-resolution grid, and the use of machine learning methods, the development of the cloth simulation method is briefly described. According to the characteristics of the integral method and the multi-resolution grid method and the characteristics of the application of machine learning methods in cloth simulation, several major types of improved methods are summarized and prospected accordingly. Researchers have some considerations whether to improve the simulation speed or to ensure the speed to improve the simulation accuracy. Because of their different research entry points for the improvement of cloth modeling methods, their research purposes are also different. This article selects several algorithms to make a corresponding comparison and provides some suggestions for learners to learn from.关键词:virtual simulation;cloth simulation;integration method;multi-resolution grid;machine learning;progress232|288|1更新时间:2024-05-07

Scholar View

-

摘要:This is the 26th annual survey series of bibliographies on image engineering in China. This statistic and analysis study aims to capture the up-to-date development of image engineering in China, provide a targeted means of literature searching facility for readers working in related areas, and supply a useful recommendation for the editors of journals and potential authors of papers. Specifically, considering the wide distribution of related publications in China, 813 references on image engineering research and technique are selected carefully from 2 785 research papers published in 154 issues of a set of 15 Chinese journals. These 15 journals are considered important, in which papers concerning image engineering have higher quality and are relatively concentrated. The selected references are initially classified into five categories (image processing, image analysis, image understanding, technique application, and survey) and then into 23 specialized classes in accordance with their main contents (same as the last 15 years). Analysis and discussions about the statistics of the results of classifications by journal as well as by category are also presented. Analysis on the statistics in 2020 shows that image analysis is receiving the most attention, in which the focuses are mainly on object detection and recognition, image segmentation and edge detection, as well as human biometrics detection and identification. In addition, the studies and applications of image technology in various areas, such as remote sensing, radar, sonar and mapping, as well as biology and medicine are continuously active. In conclusion, this work shows a general and up-to-date picture of the various continuing progresses, either for depth or for width, of image engineering in China in 2020. The statistics for 26 years also provide readers with more comprehensive and credible information on the development trends of various research directions.关键词:image engineering;image processing;image analysis;image understanding;technique application;literature survey;literature statistics;literature classification;bibliometrics48|97|5更新时间:2024-05-07

摘要:This is the 26th annual survey series of bibliographies on image engineering in China. This statistic and analysis study aims to capture the up-to-date development of image engineering in China, provide a targeted means of literature searching facility for readers working in related areas, and supply a useful recommendation for the editors of journals and potential authors of papers. Specifically, considering the wide distribution of related publications in China, 813 references on image engineering research and technique are selected carefully from 2 785 research papers published in 154 issues of a set of 15 Chinese journals. These 15 journals are considered important, in which papers concerning image engineering have higher quality and are relatively concentrated. The selected references are initially classified into five categories (image processing, image analysis, image understanding, technique application, and survey) and then into 23 specialized classes in accordance with their main contents (same as the last 15 years). Analysis and discussions about the statistics of the results of classifications by journal as well as by category are also presented. Analysis on the statistics in 2020 shows that image analysis is receiving the most attention, in which the focuses are mainly on object detection and recognition, image segmentation and edge detection, as well as human biometrics detection and identification. In addition, the studies and applications of image technology in various areas, such as remote sensing, radar, sonar and mapping, as well as biology and medicine are continuously active. In conclusion, this work shows a general and up-to-date picture of the various continuing progresses, either for depth or for width, of image engineering in China in 2020. The statistics for 26 years also provide readers with more comprehensive and credible information on the development trends of various research directions.关键词:image engineering;image processing;image analysis;image understanding;technique application;literature survey;literature statistics;literature classification;bibliometrics48|97|5更新时间:2024-05-07

Review

-

摘要:ObjectiveGiven a low-resolution image, the task of single image super-resolution (SR) is to reconstruct the corresponding high-resolution image. Due to the ill-posed characteristic of this problem, it is challenging to recover the lost details and well preserve the structures in images. To deal with this problem, different kinds of methods have been proposed in the past two decades, including interpolation-based methods, learning-based methods, and reconstruction-based methods. Recently, convolutional neural network (CNN)-based SR methods have achieved great success and received much attention. Several CNNs have been proposed for the SR task, including residual dense network (RDN), enhanced deep residual network for super-resolution (EDSR), and residual channel attention network. Although superior performance has been achieved, many methods utilize very large-scale networks, which definitely lead to a large number of parameters and heavy computational complexity. For example, RDN costs 22.3 million (M) parameters, and the number of parameters of EDSR even reaches 43 M. As a result, those methods might not be suitable for applications with limited memory and computing resources. To solve the above problem, this study proposes a lightweight CNN model using the two-stage information distillation strategy.MethodThe proposed lightweight CNN model is called two-stage feature-compensated information distillation network (TFIDN). There are three main characteristics in TFIDN. First of all, a highly efficient module, called two-stage feature-compensated information distillation block (TFIDB), is proposed as the basic building block of TFIDN. In each TFIDB, the features can be accurately divided into different parts and then progressively refined by the two stages of information distillation. To this end, 1×1 convolution layers are applied in TFIDB to implicitly learn the packing strategy, which is responsible for selecting the suitable components from the target features for further refinement. Compared with the existing information distillation network (IDN) where only one stage of information distillation is carried out, the proposed two-stage information distillation strategy can extract the features much more precisely. Besides information distillation, TFIDB additionally introduces a feature compensation mechanism, which guarantees the completeness of the features and also enforces the consistence among local memory. More specifically, the operation of feature compensation is performed by concatenating and fusing the cross-layer transferred features and the refined features. Unlike IDN, there is no need to manually adjust the output feature dimensions of different convolution layers in TFIDB; thus, the structure of TFIDB is more flexible. Second, to further increase the ability of feature extraction and discrimination learning, the wide activated super-resolution (WDSR) unit and the channel attention (CA) mechanism are both introduced in TFIDB. To improve the performance of the normal residual learning block, the WDSR unit expands the features before performing activation. To maintain the same number of parameters as that of a normal residual learning block, the input feature dimension of the WDSR unit is set as 32 in this study. Although the CA unit can effectively improve the discrimination learning ability of the network, applying too many CA units could significantly increase the depth of the network. Therefore, only one CA unit is attached at the end of each TFIDB, so as to maintain the efficiency of the network. Because the CA operation is carried out on the precisely refined features, the effectiveness of the network can be ensured. Finally, to build the full TFIDN, a number of TFIDBs are cascaded. To keep a balance between model complexity and performance, the number of TFIDBs is set as 3. To fully take advantage of different levels of information, an information fusion unit (IFU) is designed to fuse the outputs of different TFIDBs. In the existing cascading residual network (CARN), dense connections are utilized among the building blocks, leading to a relatively large number of parameters. Different from CARN, to keep a small number of parameters, IFU only introduces one 1×1 convolution layer, which only results in 3 kilo (K) parameters.ResultThe proposed TFIDN is trained using DIV2K dataset. Five widely used datasets, including Set5, Set14, BSD100, Urban100, and Manga109, are used for testing. The ablation study shows that the proposed building block TFIDB and the IFU both contribute to the superior performance of the network. Compared with six famous lightweight models, including fast super-resolution convolutional neural networks, very deep network for super-resolution, Laplacian pyramid super-resolution network, persistent memory network, IDN, and CARN, the proposed TFIDN is able to achieve the highest peak signal-to-noise ratio (PSNR) and structural similarity index (SSIM) values. Specifically, with a scale factor of 2 on five testing datasets, the PSNR improvements of TFIDN over the second best method CARN are 0.29 dB, 0.08 dB, 0.08 dB, 0.27 dB, and 0.42 dB, respectively, whereas the SSIM improvements are 0.001 6, 0.000 9, 0.001 7, 0.003 0, and 0.000 9, respectively. The significant PSNR and SSIM improvements indicate that TFIDN is more effective than CARN. On the other hand, the number of parameters and the number of mult-adds required by TFIDN are 933 K and 53.5 giga (G), respectively, both of which are smaller than those of CARN. This phenomenon suggests that TFIDN is more efficient than CARN. Although the proposed TFIDN consumes more parameters and mult-adds than IDN, TFIDN achieves significantly higher performance in terms of PSNR and SSIM.ConclusionThe proposed two-stage feature-compensated information distillation mechanism is efficient and effective. By cascading a number of TFIDBs and introducing the IFU, the proposed lightweight network TFIDN can achieve a better trade-off in terms of model size, computational complexity, and performance.关键词:super-resolution (SR);convolutional neural network (CNN);information distillation;wide activation;channel attention (CA)119|267|1更新时间:2024-05-07

摘要:ObjectiveGiven a low-resolution image, the task of single image super-resolution (SR) is to reconstruct the corresponding high-resolution image. Due to the ill-posed characteristic of this problem, it is challenging to recover the lost details and well preserve the structures in images. To deal with this problem, different kinds of methods have been proposed in the past two decades, including interpolation-based methods, learning-based methods, and reconstruction-based methods. Recently, convolutional neural network (CNN)-based SR methods have achieved great success and received much attention. Several CNNs have been proposed for the SR task, including residual dense network (RDN), enhanced deep residual network for super-resolution (EDSR), and residual channel attention network. Although superior performance has been achieved, many methods utilize very large-scale networks, which definitely lead to a large number of parameters and heavy computational complexity. For example, RDN costs 22.3 million (M) parameters, and the number of parameters of EDSR even reaches 43 M. As a result, those methods might not be suitable for applications with limited memory and computing resources. To solve the above problem, this study proposes a lightweight CNN model using the two-stage information distillation strategy.MethodThe proposed lightweight CNN model is called two-stage feature-compensated information distillation network (TFIDN). There are three main characteristics in TFIDN. First of all, a highly efficient module, called two-stage feature-compensated information distillation block (TFIDB), is proposed as the basic building block of TFIDN. In each TFIDB, the features can be accurately divided into different parts and then progressively refined by the two stages of information distillation. To this end, 1×1 convolution layers are applied in TFIDB to implicitly learn the packing strategy, which is responsible for selecting the suitable components from the target features for further refinement. Compared with the existing information distillation network (IDN) where only one stage of information distillation is carried out, the proposed two-stage information distillation strategy can extract the features much more precisely. Besides information distillation, TFIDB additionally introduces a feature compensation mechanism, which guarantees the completeness of the features and also enforces the consistence among local memory. More specifically, the operation of feature compensation is performed by concatenating and fusing the cross-layer transferred features and the refined features. Unlike IDN, there is no need to manually adjust the output feature dimensions of different convolution layers in TFIDB; thus, the structure of TFIDB is more flexible. Second, to further increase the ability of feature extraction and discrimination learning, the wide activated super-resolution (WDSR) unit and the channel attention (CA) mechanism are both introduced in TFIDB. To improve the performance of the normal residual learning block, the WDSR unit expands the features before performing activation. To maintain the same number of parameters as that of a normal residual learning block, the input feature dimension of the WDSR unit is set as 32 in this study. Although the CA unit can effectively improve the discrimination learning ability of the network, applying too many CA units could significantly increase the depth of the network. Therefore, only one CA unit is attached at the end of each TFIDB, so as to maintain the efficiency of the network. Because the CA operation is carried out on the precisely refined features, the effectiveness of the network can be ensured. Finally, to build the full TFIDN, a number of TFIDBs are cascaded. To keep a balance between model complexity and performance, the number of TFIDBs is set as 3. To fully take advantage of different levels of information, an information fusion unit (IFU) is designed to fuse the outputs of different TFIDBs. In the existing cascading residual network (CARN), dense connections are utilized among the building blocks, leading to a relatively large number of parameters. Different from CARN, to keep a small number of parameters, IFU only introduces one 1×1 convolution layer, which only results in 3 kilo (K) parameters.ResultThe proposed TFIDN is trained using DIV2K dataset. Five widely used datasets, including Set5, Set14, BSD100, Urban100, and Manga109, are used for testing. The ablation study shows that the proposed building block TFIDB and the IFU both contribute to the superior performance of the network. Compared with six famous lightweight models, including fast super-resolution convolutional neural networks, very deep network for super-resolution, Laplacian pyramid super-resolution network, persistent memory network, IDN, and CARN, the proposed TFIDN is able to achieve the highest peak signal-to-noise ratio (PSNR) and structural similarity index (SSIM) values. Specifically, with a scale factor of 2 on five testing datasets, the PSNR improvements of TFIDN over the second best method CARN are 0.29 dB, 0.08 dB, 0.08 dB, 0.27 dB, and 0.42 dB, respectively, whereas the SSIM improvements are 0.001 6, 0.000 9, 0.001 7, 0.003 0, and 0.000 9, respectively. The significant PSNR and SSIM improvements indicate that TFIDN is more effective than CARN. On the other hand, the number of parameters and the number of mult-adds required by TFIDN are 933 K and 53.5 giga (G), respectively, both of which are smaller than those of CARN. This phenomenon suggests that TFIDN is more efficient than CARN. Although the proposed TFIDN consumes more parameters and mult-adds than IDN, TFIDN achieves significantly higher performance in terms of PSNR and SSIM.ConclusionThe proposed two-stage feature-compensated information distillation mechanism is efficient and effective. By cascading a number of TFIDBs and introducing the IFU, the proposed lightweight network TFIDN can achieve a better trade-off in terms of model size, computational complexity, and performance.关键词:super-resolution (SR);convolutional neural network (CNN);information distillation;wide activation;channel attention (CA)119|267|1更新时间:2024-05-07 -

摘要:ObjectiveSuperpixel segmentation is widely used as a preprocessing step in many computer vision applications. It groups the pixels of an image into homogeneous regions while trying to respect the object boundary. Generally, a good superpixel segmentation method would meet the following three conditions. First, the boundaries of the superpixel should adhere well to the image boundaries. Second, the boundaries of the superpixel should not wing across different objects in the image. Third, superpixels should have similar sizes and regular shapes. In recent years, various superpixel segmentation methods have been proposed; however, most of these state-of-the-art methods only use the pixel information as a clustering feature. Therefore, they can be severely impacted by high-frequency contrast variations and fail to produce equally sized regions having the same texture. To make superpixels robust to contrast variations such as strong gradient texture, we propose a texture-aware superpixel segmentation algorithm that uses patch-level features for clustering purposes.MethodThe main idea of our algorithm is to calculate the color distance by using a specially designed quarter-circular mean filtering operator. Because the mean filtering has the characteristics of noise suppression and texture smoothing and the rotated quarter-circular window ensures that the mean filtering sampled pixels are located inside the superpixels as much as possible, the quarter-circular mean filtering operator has the capacity to identify the texture pattern. The Sobel gradient has the advantages of fast speed and thin edge, but it is easy to be disturbed by strong gradient texture. The interval gradient is characterized by texture suppression and structure preservation, but its edge is too thick. To overcome their shortcomings while retaining their strengths, we devise a hybrid gradient based on the multiplication of the Sobel gradient and interval gradient, which has the advantages of texture suppression, structure preservation, and edge thinning; therefore, its magnitude can represent the possibility that a pixel belongs to the structure. Finally, an integrated structure-avoiding clustering distance is proposed by looking for the maximum hybrid gradient magnitude along the linear path, which can further enhance the boundary adherence of the superpixels.ResultTo verify the universality of our algorithm, we test the Berkeley segmentation dataset (BSDS500), which contains 500 images of indoor, outdoor, human, animal, and other scenes with five manually ground truths. To verify the particularity of our algorithm, we test two mosaic images with strong gradient texture. Unfortunately, these two mosaic images do not have ground truth, and they can only be evaluated subjectively by the human eye. All experiments of our algorithm are run on the Windows platform, which requires the mixed programming of MATLAB and Visual studio. Two main parameters need to be set in our algorithm, namely, the mean filtering window radius and the interval gradient operator radius. As both of them aim to capture the regularity of the texture, we set them the same value. Furthermore, the size of the window radius depends on the texture size; the larger the texture size, the larger the window radius required. Normally, we suggest taking the window radius between 3 and 5. We compare our algorithm with other popular superpixel segmentation methods, and all methods use the code with the optimal parameters provided by the authors to obtain their superpixel segmentation results. The superpixel segmentation performance is tested and judged in terms of the boundary recall, undersegmentation error (UE), achievable segmentation accuracy (ASA), and compactness measure (CM). By testing on the BSDS500 image dataset, our algorithm obtains a 1.5% lower UE value, 0.2% higher ASA value, and 4.3% higher CM value. By testing on many mosaic images with strong gradient textures, our algorithm generates superpixels with not only regular shapes but also better boundary adherence. The experimental results show that our algorithm surpasses the state-of-the-art methods in superpixel segmentation performance on BSDS500 and mosaic images.ConclusionTo make superpixel segmentation robust to high-frequency contrast variations such as strong gradient texture and noise, we propose a texture-aware superpixel segmentation algorithm, which mainly contributes in the following three aspects. First, we design a quarter-circular mean filtering operator, which is sensitive to the texture pattern. Second, we bring forward a hybrid gradient based on the multiplication of the Sobel gradient and interval gradient, which can distinguish between texture and structure pixels. Third, an integrated structure-avoiding clustering distance is devised based on the hybrid gradient magnitude. It aims to prevent the superpixels from crossing the structure boundary and keep the superpixels with regular size. The experimental results show that our algorithm performs equally well or better than state-of-the-art superpixel segmentation methods in terms of the commonly used evaluation metrics. In the face of strong gradient textures, our method can generate superpixels with regular shape and better boundary adherence. Thus, our superpixel segmentation algorithm has great potential in target recognition, target tracking, and significance detection.关键词:image segmentation;superpixel;clustering;strong gradient texture;patch;linear path72|202|4更新时间:2024-05-07

摘要:ObjectiveSuperpixel segmentation is widely used as a preprocessing step in many computer vision applications. It groups the pixels of an image into homogeneous regions while trying to respect the object boundary. Generally, a good superpixel segmentation method would meet the following three conditions. First, the boundaries of the superpixel should adhere well to the image boundaries. Second, the boundaries of the superpixel should not wing across different objects in the image. Third, superpixels should have similar sizes and regular shapes. In recent years, various superpixel segmentation methods have been proposed; however, most of these state-of-the-art methods only use the pixel information as a clustering feature. Therefore, they can be severely impacted by high-frequency contrast variations and fail to produce equally sized regions having the same texture. To make superpixels robust to contrast variations such as strong gradient texture, we propose a texture-aware superpixel segmentation algorithm that uses patch-level features for clustering purposes.MethodThe main idea of our algorithm is to calculate the color distance by using a specially designed quarter-circular mean filtering operator. Because the mean filtering has the characteristics of noise suppression and texture smoothing and the rotated quarter-circular window ensures that the mean filtering sampled pixels are located inside the superpixels as much as possible, the quarter-circular mean filtering operator has the capacity to identify the texture pattern. The Sobel gradient has the advantages of fast speed and thin edge, but it is easy to be disturbed by strong gradient texture. The interval gradient is characterized by texture suppression and structure preservation, but its edge is too thick. To overcome their shortcomings while retaining their strengths, we devise a hybrid gradient based on the multiplication of the Sobel gradient and interval gradient, which has the advantages of texture suppression, structure preservation, and edge thinning; therefore, its magnitude can represent the possibility that a pixel belongs to the structure. Finally, an integrated structure-avoiding clustering distance is proposed by looking for the maximum hybrid gradient magnitude along the linear path, which can further enhance the boundary adherence of the superpixels.ResultTo verify the universality of our algorithm, we test the Berkeley segmentation dataset (BSDS500), which contains 500 images of indoor, outdoor, human, animal, and other scenes with five manually ground truths. To verify the particularity of our algorithm, we test two mosaic images with strong gradient texture. Unfortunately, these two mosaic images do not have ground truth, and they can only be evaluated subjectively by the human eye. All experiments of our algorithm are run on the Windows platform, which requires the mixed programming of MATLAB and Visual studio. Two main parameters need to be set in our algorithm, namely, the mean filtering window radius and the interval gradient operator radius. As both of them aim to capture the regularity of the texture, we set them the same value. Furthermore, the size of the window radius depends on the texture size; the larger the texture size, the larger the window radius required. Normally, we suggest taking the window radius between 3 and 5. We compare our algorithm with other popular superpixel segmentation methods, and all methods use the code with the optimal parameters provided by the authors to obtain their superpixel segmentation results. The superpixel segmentation performance is tested and judged in terms of the boundary recall, undersegmentation error (UE), achievable segmentation accuracy (ASA), and compactness measure (CM). By testing on the BSDS500 image dataset, our algorithm obtains a 1.5% lower UE value, 0.2% higher ASA value, and 4.3% higher CM value. By testing on many mosaic images with strong gradient textures, our algorithm generates superpixels with not only regular shapes but also better boundary adherence. The experimental results show that our algorithm surpasses the state-of-the-art methods in superpixel segmentation performance on BSDS500 and mosaic images.ConclusionTo make superpixel segmentation robust to high-frequency contrast variations such as strong gradient texture and noise, we propose a texture-aware superpixel segmentation algorithm, which mainly contributes in the following three aspects. First, we design a quarter-circular mean filtering operator, which is sensitive to the texture pattern. Second, we bring forward a hybrid gradient based on the multiplication of the Sobel gradient and interval gradient, which can distinguish between texture and structure pixels. Third, an integrated structure-avoiding clustering distance is devised based on the hybrid gradient magnitude. It aims to prevent the superpixels from crossing the structure boundary and keep the superpixels with regular size. The experimental results show that our algorithm performs equally well or better than state-of-the-art superpixel segmentation methods in terms of the commonly used evaluation metrics. In the face of strong gradient textures, our method can generate superpixels with regular shape and better boundary adherence. Thus, our superpixel segmentation algorithm has great potential in target recognition, target tracking, and significance detection.关键词:image segmentation;superpixel;clustering;strong gradient texture;patch;linear path72|202|4更新时间:2024-05-07

Image Processing and Coding

-

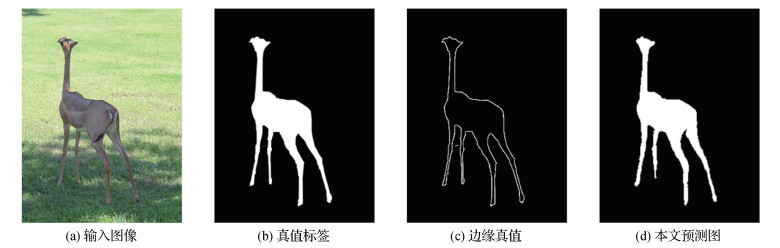

摘要:ObjectiveSalient object detection is a basic task in the field of computer vision, which simulates the human visual attention mechanism and quickly detects attractive objects in the scene that are most likely to represent user query variables and contain the most information. As a preprocessing step of other vision tasks, such as image resizing, visual tracking, person re-identification, and image segmentation, salient object detection plays a very important role. The traditional salient object detection method mainly uses the method of manually extracting features of the image to detect. However, this process is time-consuming and labor-intensive, and the results cannot meet the requirements. With the rise of deep learning, a large number of feature extraction algorithms based on convolutional neural networks have emerged. Compared with traditional feature extraction methods, using deep neural networks to extract features has better quality and more accurate prediction. In order to obtain accurate salient object segmentation results, deep learning-based methods mostly introduce attention mechanisms for feature weighting to suppress noise and redundant information. However, the modeling process of the existing attention mechanism is quite rough, which treats each position in the feature tensor equally and directly solves the attention score. This strategy cannot explicitly learn the global importance of different channels and different spatial regions, which may lead to missed detection or misdetection. To this end, in this study, we propose a deep clustering attention (DCA) mechanism to better model the feature-level pixel-by-pixel relationship.MethodIn this study, the proposed DCA explicitly divides the feature tensors into several categories channel-wise and spatial-wise; that is, it clusters the features into foreground and background sensitive regions. Then, general per-pixel attention weighting is performed within each class, and semantical attention weighting is further performed inter-classes. The idea of DCA is easy to understand, whose parameter quantity is also small and can be deployed in any salient detection network. This method can efficiently separate the foreground and background regions. In addition, through supervised learning on the edges of salient objects, the prediction can get clearer edges, and the results are more accurate.ResultComparison of 19 state-of-the-art methods on six large public datasets demonstrates the effectiveness of DCA in modeling pixel-wise attention, which is very helpful for obtaining finely salient object segmentation mask. On various evaluation indicators, the effects of the model after the deployment of DCA have improved. On the extended cornplex scene saliency(ECSSD) dataset, the performance of DCANet increased by 0.9% over the second place (F-measure value). On the Dalian University of Technology and OMRON Corporation(DUT-OMRON) dataset, the performance of DCANet increased by 0.5% over the second place (F-measure value), and the MAE decreased by 3.2%. On the HKU-IS dataset, the performance of DCANet is 0.3% higher than the second place (F-measure value), and the MAE is reduced by 2.8%. On the pattern analysis, statistical modeling and computational learning(PASCAL)-subset(S) dataset, the performance of DCANet is 0.8% higher than the second place (F-measure value), and the MAE is reduced by 4.2%.ConclusionThe DCA proposed in this study effectively enhances the globally salient scores of foreground sensitive classes through more fine-grained channel partitioning and spatial region partitioning. This paper analyzes the deficiencies of the existing salient object detection algorithm based on attention mechanism and proposes a method for explicitly dividing feature channels and spatial regions. The attention modeling mechanism helps the model training process perceive and adapt tasks quickly. Compared with the existing attention mechanism, the idea of DCA is clear, the effect is significant, and it is simple to deploy. Meanwhile, DCA provides a viable new research direction for the study of more general attention mechanisms.关键词:saliency detection;attention mechanism;deep clustering;spatial-channel decoupling;full convolutional network (FCN)41|33|2更新时间:2024-05-07

摘要:ObjectiveSalient object detection is a basic task in the field of computer vision, which simulates the human visual attention mechanism and quickly detects attractive objects in the scene that are most likely to represent user query variables and contain the most information. As a preprocessing step of other vision tasks, such as image resizing, visual tracking, person re-identification, and image segmentation, salient object detection plays a very important role. The traditional salient object detection method mainly uses the method of manually extracting features of the image to detect. However, this process is time-consuming and labor-intensive, and the results cannot meet the requirements. With the rise of deep learning, a large number of feature extraction algorithms based on convolutional neural networks have emerged. Compared with traditional feature extraction methods, using deep neural networks to extract features has better quality and more accurate prediction. In order to obtain accurate salient object segmentation results, deep learning-based methods mostly introduce attention mechanisms for feature weighting to suppress noise and redundant information. However, the modeling process of the existing attention mechanism is quite rough, which treats each position in the feature tensor equally and directly solves the attention score. This strategy cannot explicitly learn the global importance of different channels and different spatial regions, which may lead to missed detection or misdetection. To this end, in this study, we propose a deep clustering attention (DCA) mechanism to better model the feature-level pixel-by-pixel relationship.MethodIn this study, the proposed DCA explicitly divides the feature tensors into several categories channel-wise and spatial-wise; that is, it clusters the features into foreground and background sensitive regions. Then, general per-pixel attention weighting is performed within each class, and semantical attention weighting is further performed inter-classes. The idea of DCA is easy to understand, whose parameter quantity is also small and can be deployed in any salient detection network. This method can efficiently separate the foreground and background regions. In addition, through supervised learning on the edges of salient objects, the prediction can get clearer edges, and the results are more accurate.ResultComparison of 19 state-of-the-art methods on six large public datasets demonstrates the effectiveness of DCA in modeling pixel-wise attention, which is very helpful for obtaining finely salient object segmentation mask. On various evaluation indicators, the effects of the model after the deployment of DCA have improved. On the extended cornplex scene saliency(ECSSD) dataset, the performance of DCANet increased by 0.9% over the second place (F-measure value). On the Dalian University of Technology and OMRON Corporation(DUT-OMRON) dataset, the performance of DCANet increased by 0.5% over the second place (F-measure value), and the MAE decreased by 3.2%. On the HKU-IS dataset, the performance of DCANet is 0.3% higher than the second place (F-measure value), and the MAE is reduced by 2.8%. On the pattern analysis, statistical modeling and computational learning(PASCAL)-subset(S) dataset, the performance of DCANet is 0.8% higher than the second place (F-measure value), and the MAE is reduced by 4.2%.ConclusionThe DCA proposed in this study effectively enhances the globally salient scores of foreground sensitive classes through more fine-grained channel partitioning and spatial region partitioning. This paper analyzes the deficiencies of the existing salient object detection algorithm based on attention mechanism and proposes a method for explicitly dividing feature channels and spatial regions. The attention modeling mechanism helps the model training process perceive and adapt tasks quickly. Compared with the existing attention mechanism, the idea of DCA is clear, the effect is significant, and it is simple to deploy. Meanwhile, DCA provides a viable new research direction for the study of more general attention mechanisms.关键词:saliency detection;attention mechanism;deep clustering;spatial-channel decoupling;full convolutional network (FCN)41|33|2更新时间:2024-05-07 -

摘要:ObjectiveWith the rapid development of computer vision technology, the demand for intelligent and humanized transportation systems is gradually increasing. Vehicle logo recognition (VLR) is an important part of intelligent transportation systems, and the requirements for its recognition effect are gradually increasing. Considering the difficulty in achieving the samples of some vehicle logos from real surveillance systems in certain areas and the cost of collecting samples and training, the recognition of vehicle logos under small training samples still has very important application value. Vehicle logos captured from real surveillance systems on the road suffer from the following characteristics: 1) low resolution, 2) easy to blur due to the movement of vehicles, and 3) easily influenced by light from the environment. Thus, the recognition of vehicle logos is still a challenging problem. Given the fact that some vehicle logos have similar structures and part of the vehicle logos has salient features, we consider how to enhance the overall characteristics of vehicle logos from the aspects of symmetrical structural and local saliency, which can benefit VLR, and propose a VLR method based on feature enhancement, called feature enhancement-based vehicle logo recognition (FE-VLR).MethodFE-VLR comprehensively considers the structural similarity features and local salient features of the vehicle logo and then combines them together with the overall features of the vehicle logo to identify the vehicle logo. Based on the analysis of the structural symmetry of the left and right parts of the vehicle logo, this study calculates the similarity value of the image block to express the similarity feature. In addition, a method for calculating salient regions based on the correlation of neighborhood blocks is proposed to locate and extract the salient features of the vehicle logo. First, it extracts similar self-symmetrical features of vehicle logo images and then builds an image pyramid. Under each layer of the pyramid, the overall features and local salient features of the vehicle logos are extracted. Local salient locations are obtained by salient region detection based on the correlation of neighborhood blocks. Finally, a collaborative representation-based classification (CRC) classifier is used to classify the vehicle logos. CRC is a fast and effective classifier suitable for small training samples. The classifier uses the collaborative coding of all samples in the sample dictionary to represent the prediction samples, so as to improve the recognition rate by using the difference of the same attribute between different types of vehicle logos.ResultThe effectiveness of our algorithm is evaluated on the public vehicle logo datasets Vehicle Logo Dataset from Hefei University of Technology (HFUT-VL) and Xiamen University Vehicle Logo Dataset (XMU). The experimental results show that under small training samples, FE-VLR is superior to some other traditional VLR methods and also has higher recognition rates than some convolutional neural network-based methods. On the HFUT-VL dataset, when the number of training samples is 5, the recognition rate of FE-VLR reaches 97.78%, and when the number of training samples is 20, the recognition rate reaches 99.1%. On the more complex XMU dataset, FE-VLR is more efficient and robust. When the number of training samples is equal or less than 15, FE-VLR can improve the recognition rate by at least 7.2% compared with overlapping enhanced patterns of oriented edge magnitudes(OE-POEM). The experimental results show that FE-VLR always has better performance under small samples.ConclusionThe FE-VLR method increases the recognition ability of low-quality and low-resolution vehicle logo images obtained from real surveillance systems on the road, which can better meet the needs of VLR in practical applications. The experimental results on the public HFUT-VL and XMU datasets show that in case of small samples, the recognition rate of FE-VLR is higher than that of other VLR methods and better than some recognition methods based on deep learning models.关键词:vehicle logo recognition(VLR);feature enhancement(FE);self-symmetrical similar features;local salient features;the correlation degree of neighborhood blocks106|184|2更新时间:2024-05-07

摘要:ObjectiveWith the rapid development of computer vision technology, the demand for intelligent and humanized transportation systems is gradually increasing. Vehicle logo recognition (VLR) is an important part of intelligent transportation systems, and the requirements for its recognition effect are gradually increasing. Considering the difficulty in achieving the samples of some vehicle logos from real surveillance systems in certain areas and the cost of collecting samples and training, the recognition of vehicle logos under small training samples still has very important application value. Vehicle logos captured from real surveillance systems on the road suffer from the following characteristics: 1) low resolution, 2) easy to blur due to the movement of vehicles, and 3) easily influenced by light from the environment. Thus, the recognition of vehicle logos is still a challenging problem. Given the fact that some vehicle logos have similar structures and part of the vehicle logos has salient features, we consider how to enhance the overall characteristics of vehicle logos from the aspects of symmetrical structural and local saliency, which can benefit VLR, and propose a VLR method based on feature enhancement, called feature enhancement-based vehicle logo recognition (FE-VLR).MethodFE-VLR comprehensively considers the structural similarity features and local salient features of the vehicle logo and then combines them together with the overall features of the vehicle logo to identify the vehicle logo. Based on the analysis of the structural symmetry of the left and right parts of the vehicle logo, this study calculates the similarity value of the image block to express the similarity feature. In addition, a method for calculating salient regions based on the correlation of neighborhood blocks is proposed to locate and extract the salient features of the vehicle logo. First, it extracts similar self-symmetrical features of vehicle logo images and then builds an image pyramid. Under each layer of the pyramid, the overall features and local salient features of the vehicle logos are extracted. Local salient locations are obtained by salient region detection based on the correlation of neighborhood blocks. Finally, a collaborative representation-based classification (CRC) classifier is used to classify the vehicle logos. CRC is a fast and effective classifier suitable for small training samples. The classifier uses the collaborative coding of all samples in the sample dictionary to represent the prediction samples, so as to improve the recognition rate by using the difference of the same attribute between different types of vehicle logos.ResultThe effectiveness of our algorithm is evaluated on the public vehicle logo datasets Vehicle Logo Dataset from Hefei University of Technology (HFUT-VL) and Xiamen University Vehicle Logo Dataset (XMU). The experimental results show that under small training samples, FE-VLR is superior to some other traditional VLR methods and also has higher recognition rates than some convolutional neural network-based methods. On the HFUT-VL dataset, when the number of training samples is 5, the recognition rate of FE-VLR reaches 97.78%, and when the number of training samples is 20, the recognition rate reaches 99.1%. On the more complex XMU dataset, FE-VLR is more efficient and robust. When the number of training samples is equal or less than 15, FE-VLR can improve the recognition rate by at least 7.2% compared with overlapping enhanced patterns of oriented edge magnitudes(OE-POEM). The experimental results show that FE-VLR always has better performance under small samples.ConclusionThe FE-VLR method increases the recognition ability of low-quality and low-resolution vehicle logo images obtained from real surveillance systems on the road, which can better meet the needs of VLR in practical applications. The experimental results on the public HFUT-VL and XMU datasets show that in case of small samples, the recognition rate of FE-VLR is higher than that of other VLR methods and better than some recognition methods based on deep learning models.关键词:vehicle logo recognition(VLR);feature enhancement(FE);self-symmetrical similar features;local salient features;the correlation degree of neighborhood blocks106|184|2更新时间:2024-05-07

Image Analysis and Recognition

-

摘要:ObjectiveGlaucoma can cause irreversible damage to vision. Glaucoma is often diagnosed on the basis of the cup-to-disc ratio (CDR). A CDR greater than 0.65 is considered to be glaucoma. Therefore, segmenting the optic disc (OD) and optic cup (OC) accurately from fundus images is an important task. The traditional methods used to segment the OD and OC are mainly based on deformable model, graph-cut, edge detection, and super pixel classification. Traditional methods need to manually extract image features, which are easily affected by light and contrast, and the segmentation accuracy is often low. In addition, these methods require careful adjustment of model parameters to achieve performance improvements and are not suitable for large-scale promotion. In recent years, with the development of deep learning, the segmentation methods of OD and OC based on the convolutional neural network (CNN), which can automatically extract image features, have become the main research direction and have achieved better segmentation performance than traditional methods. OC segmentation is more difficult than OD segmentation because the OC boundary is not obvious. The existing methods based on CNN can be divided into two categories. One is joint segmentation; that is, the OD and OC can be segmented simultaneously using the same segmentation network. The other is two-stage segmentation; that is, the OD is segmented first, and then the OC is segmented. Previous studies have shown that the accuracy of joint segmentation is often inferior to that of two-stage segmentation, and joint segmentation can lead to biased optimization result to OD or OC. However, the connection between OD and OC is often ignored in two-stage segmentation. In this study, U-Net is improved, and a two-stage segmentation network called context attention U-Net (CA-Net) is proposed to segment the OD and OC sequentially. The prior knowledge is introduced into the OC segmentation network to further improve the OC segmentation accuracy.MethodFirst, we locate the OD center and crop the region of interest (ROI) from the whole fundus image according to the OD center, which can reduce irrelevant regions. The size of the ROI image is 512×512. Then, the cropped ROI image is transferred by polar transformation from the Cartesian coordinate into the polar coordinate, which can balance disc and cup proportion. Because the OC region always accounts for a low proportion, it easily leads to overfitting and bias in training the deep model. Finally, the transferred images are fed into CA-Net to predict the final OD or OC segmentation maps. The OC is inside the OD, which means that the area that belongs to the OC also belongs to the OD. Specifically, we train two segmentation networks with the same structure to segment the OD and OC, respectively. To segment the OC more accurately, we utilize the connection between the OD and the OC as prior information. The modified pre-trained ResNet34 is used as the feature extraction network to enhance the feature extraction capability. Concretely, the first max pooling layer, the last average pooling layer, and the full connectivity layer are removed from the original ResNet34. Compared with training the deep learning model from scratch, loading pre-trained parameters on ImageNet (ImageNet Large-Scale Visual Recognition Challenge) helps prevent overfitting. Moreover, a context aggregation module (CAM) is proposed to aggregate the context information of images from multiple scales, which exploits the different sizes of atrous convolution to encode the rich semantic information. Because there will be a lot of irrelevant information during the fusion of shallow and deep feature maps, an attention guidance module (AGM) is proposed to recalibrate the feature maps after fusion of shallow and deep feature maps to enhance the useful feature information. In addition, the idea of deep supervision is also used to train the weights of shallow network. Finally, CA-Net outputs the probability map, and the largest connected region is selected as the final segmentation result to remove noise. We do not use any post-processing techniques such as ellipse fitting. DiceLoss is used as loss function to train CA-Net. We use a NVIDIA GeForce GTX 1080 Ti device to train and test the proposed CA-Net.ResultWe conducted experiments on three commonly used public datasets (Drishti-GS1, RIM-ONE-v3, and Refuge) to verify the effectiveness and generalization of CA-Net. We trained the model on the training set and reported the model performance on the test set. The Drishti-GS1 dataset was split into 50 training images and 51 test images. The RIM-ONE-v3 dataset was randomly split into 99 training images and 60 test images. The Refuge dataset was randomly split into 320 training images and 80 test images. Two measures were used to evaluate the results, namely, Dice coefficient (Dice) and intersection-over-union (IOU). For OD segmentation, the Dice and IOU obtained by CA-Net are 0.981 4 and 0.963 5 on the Drishti-GS1 dataset and 0.976 8 and 0.954 6 on the retinal image database for optic nerve evaluation(RIM-ONE)-v3 dataset, respectively. For OC segmentation, the Dice and IOU obtained by CA-Net are 0.926 6 and 0.863 3 on the Drishti-GS1 dataset and 0.864 2 and 0.760 9 on the RIM-ONE-v3 dataset, respectively. Moreover, CA-Net achieved a Dice of 0.975 8 and IOU of 0.952 7 in the case of OD segmentation and a Dice of 0.887 1 and IOU of 0.797 2 in the case of OC segmentation on the Refuge dataset, which further demonstrated the effectiveness and generalization of CA-Net. We also used the Refuge training dataset to train CA-Net and directly evaluated it on the Drishti-GS1 and RIM-ONE-v3 testing datasets. In addition, ablation experiments on the three datasets also showed the effectiveness of each module in the network, such as AGM, CAM, polar transformation, and deep supervision. The experiments also showed that CA-Net could achieve higher segmentation accuracy in the case of Dice and IOU when compared with U-Net, M-Net, and DeepLab v3+. The visual segmentation results also proved that CA-Net could achieve segmentation results more similar to ground truth.ConclusionThis study presents a new two-stage segmentation method based on U-Net for OD and OC segmentation, which is proved to be effective. The experiments showed that CA-Net can obtain better results than other methods on the Drishti-GS1, RIM-ONE-v3, and Refuge datasets. In the future, we will focus on the problem of domain adaptation and solve the problem of OD and OC segmentation when the training samples are insufficient.关键词:Glaucoma;optic disc(OD);optic cup(OC);context aggregation module(CAM);attention guidance module(AGM);deep supervision;prior knowledge129|328|3更新时间:2024-05-07

摘要:ObjectiveGlaucoma can cause irreversible damage to vision. Glaucoma is often diagnosed on the basis of the cup-to-disc ratio (CDR). A CDR greater than 0.65 is considered to be glaucoma. Therefore, segmenting the optic disc (OD) and optic cup (OC) accurately from fundus images is an important task. The traditional methods used to segment the OD and OC are mainly based on deformable model, graph-cut, edge detection, and super pixel classification. Traditional methods need to manually extract image features, which are easily affected by light and contrast, and the segmentation accuracy is often low. In addition, these methods require careful adjustment of model parameters to achieve performance improvements and are not suitable for large-scale promotion. In recent years, with the development of deep learning, the segmentation methods of OD and OC based on the convolutional neural network (CNN), which can automatically extract image features, have become the main research direction and have achieved better segmentation performance than traditional methods. OC segmentation is more difficult than OD segmentation because the OC boundary is not obvious. The existing methods based on CNN can be divided into two categories. One is joint segmentation; that is, the OD and OC can be segmented simultaneously using the same segmentation network. The other is two-stage segmentation; that is, the OD is segmented first, and then the OC is segmented. Previous studies have shown that the accuracy of joint segmentation is often inferior to that of two-stage segmentation, and joint segmentation can lead to biased optimization result to OD or OC. However, the connection between OD and OC is often ignored in two-stage segmentation. In this study, U-Net is improved, and a two-stage segmentation network called context attention U-Net (CA-Net) is proposed to segment the OD and OC sequentially. The prior knowledge is introduced into the OC segmentation network to further improve the OC segmentation accuracy.MethodFirst, we locate the OD center and crop the region of interest (ROI) from the whole fundus image according to the OD center, which can reduce irrelevant regions. The size of the ROI image is 512×512. Then, the cropped ROI image is transferred by polar transformation from the Cartesian coordinate into the polar coordinate, which can balance disc and cup proportion. Because the OC region always accounts for a low proportion, it easily leads to overfitting and bias in training the deep model. Finally, the transferred images are fed into CA-Net to predict the final OD or OC segmentation maps. The OC is inside the OD, which means that the area that belongs to the OC also belongs to the OD. Specifically, we train two segmentation networks with the same structure to segment the OD and OC, respectively. To segment the OC more accurately, we utilize the connection between the OD and the OC as prior information. The modified pre-trained ResNet34 is used as the feature extraction network to enhance the feature extraction capability. Concretely, the first max pooling layer, the last average pooling layer, and the full connectivity layer are removed from the original ResNet34. Compared with training the deep learning model from scratch, loading pre-trained parameters on ImageNet (ImageNet Large-Scale Visual Recognition Challenge) helps prevent overfitting. Moreover, a context aggregation module (CAM) is proposed to aggregate the context information of images from multiple scales, which exploits the different sizes of atrous convolution to encode the rich semantic information. Because there will be a lot of irrelevant information during the fusion of shallow and deep feature maps, an attention guidance module (AGM) is proposed to recalibrate the feature maps after fusion of shallow and deep feature maps to enhance the useful feature information. In addition, the idea of deep supervision is also used to train the weights of shallow network. Finally, CA-Net outputs the probability map, and the largest connected region is selected as the final segmentation result to remove noise. We do not use any post-processing techniques such as ellipse fitting. DiceLoss is used as loss function to train CA-Net. We use a NVIDIA GeForce GTX 1080 Ti device to train and test the proposed CA-Net.ResultWe conducted experiments on three commonly used public datasets (Drishti-GS1, RIM-ONE-v3, and Refuge) to verify the effectiveness and generalization of CA-Net. We trained the model on the training set and reported the model performance on the test set. The Drishti-GS1 dataset was split into 50 training images and 51 test images. The RIM-ONE-v3 dataset was randomly split into 99 training images and 60 test images. The Refuge dataset was randomly split into 320 training images and 80 test images. Two measures were used to evaluate the results, namely, Dice coefficient (Dice) and intersection-over-union (IOU). For OD segmentation, the Dice and IOU obtained by CA-Net are 0.981 4 and 0.963 5 on the Drishti-GS1 dataset and 0.976 8 and 0.954 6 on the retinal image database for optic nerve evaluation(RIM-ONE)-v3 dataset, respectively. For OC segmentation, the Dice and IOU obtained by CA-Net are 0.926 6 and 0.863 3 on the Drishti-GS1 dataset and 0.864 2 and 0.760 9 on the RIM-ONE-v3 dataset, respectively. Moreover, CA-Net achieved a Dice of 0.975 8 and IOU of 0.952 7 in the case of OD segmentation and a Dice of 0.887 1 and IOU of 0.797 2 in the case of OC segmentation on the Refuge dataset, which further demonstrated the effectiveness and generalization of CA-Net. We also used the Refuge training dataset to train CA-Net and directly evaluated it on the Drishti-GS1 and RIM-ONE-v3 testing datasets. In addition, ablation experiments on the three datasets also showed the effectiveness of each module in the network, such as AGM, CAM, polar transformation, and deep supervision. The experiments also showed that CA-Net could achieve higher segmentation accuracy in the case of Dice and IOU when compared with U-Net, M-Net, and DeepLab v3+. The visual segmentation results also proved that CA-Net could achieve segmentation results more similar to ground truth.ConclusionThis study presents a new two-stage segmentation method based on U-Net for OD and OC segmentation, which is proved to be effective. The experiments showed that CA-Net can obtain better results than other methods on the Drishti-GS1, RIM-ONE-v3, and Refuge datasets. In the future, we will focus on the problem of domain adaptation and solve the problem of OD and OC segmentation when the training samples are insufficient.关键词:Glaucoma;optic disc(OD);optic cup(OC);context aggregation module(CAM);attention guidance module(AGM);deep supervision;prior knowledge129|328|3更新时间:2024-05-07

Medical Image Processing

-