Latest Issue

Volume 26, Issue 3, 2021

Abstract: Medical big data mainly include electronic health record data, such as medical imaging data and genetic information data, among which medical imaging data currently account for the largest share. A problem of great concern to computer science researchers is how to apply medical big data in clinical practice. Artificial intelligence (AI) provides a good way to address this problem. AI algorithms, particularly deep learning, have demonstrated remarkable progress in image-recognition tasks. Historically, in radiology practice, trained physicians visually assess medical images for the detection, characterization, and monitoring of diseases. AI methods excel at automatically recognizing complex patterns in imaging data and providing quantitative, rather than qualitative, assessments of radiographic characteristics. Methods ranging from convolutional neural networks to variational autoencoders have found myriad applications in medical image analysis, propelling the field forward at a rapid pace. In this review, drawing on recent work and the latest research progress in big data analysis of medical images up to 2020, we summarize the theory, main process, and evaluation results of multiple deep learning algorithms in several areas of medical image analysis, including magnetic resonance imaging (MRI), pathology imaging, ultrasound imaging, electrical signals, digital radiography, mammography (molybdenum target), and diabetic eye imaging. MRI is one of the main research areas of medical image analysis. The existing research literature covers Alzheimer's disease MRI, Parkinson's disease MRI, brain tumor MRI, prostate cancer MRI, and cardiac MRI.
MRI analysis is also divided into two-dimensional and three-dimensional image analysis; for three-dimensional data in particular, insufficient data volume leads to problems such as overfitting, heavy computation, and slow training. Medical ultrasound (also known as diagnostic sonography or ultrasonography) is a diagnostic imaging technique or therapeutic application of ultrasound. It is used to create an image of internal body structures such as tendons, muscles, joints, blood vessels, and internal organs. It aims to find the source of a disease or to exclude pathology. The practice of examining pregnant women using ultrasound is called obstetric ultrasonography and was an early development and application of clinical ultrasonography. Ultrasonography uses sound waves with higher frequencies than those audible to humans (>20,000 Hz). Ultrasonic images, also known as sonograms, are made by sending ultrasound pulses into the tissue using a probe. The ultrasound pulses echo off tissues with different reflection properties and are recorded and displayed as an image. Many different types of images can be formed. The most common is a B-mode (brightness) image, which displays the acoustic impedance of a two-dimensional cross-section of a tissue. Other types can display blood flow, tissue motion over time, the location of blood, the presence of specific molecules, the stiffness of a tissue, or the anatomy of a three-dimensional region. Pathology, increasingly in the form of digital pathology images, is the gold standard for diagnosing some diseases. We specifically discuss AI combined with digital pathology images for diagnosis. Electroencephalography (EEG) is an electrophysiological monitoring method that records the electrical activity of the brain. It is typically noninvasive, with the electrodes placed along the scalp. However, invasive electrodes are sometimes used, for example in electrocorticography, sometimes called intracranial EEG.
EEG is most often used to diagnose epilepsy, which causes abnormalities in EEG readings. It is also used to diagnose sleep disorders, coma, encephalopathies, and brain death, and to monitor depth of anesthesia. EEG used to be a first-line method of diagnosis for tumors, stroke, and other focal brain disorders, but its use has decreased with the advent of high-resolution anatomical imaging techniques such as MRI and computed tomography (CT). Despite limited spatial resolution, EEG continues to be a valuable tool for research and diagnosis. It is one of the few mobile techniques available and offers millisecond-range temporal resolution, which is not possible with CT, positron emission tomography (PET), or MRI. Electrocardiography (ECG or EKG) is the process of producing an electrocardiogram, a graph of voltage versus time of the electrical activity of the heart, recorded using electrodes placed on the skin. These electrodes detect small electrical changes that are a consequence of cardiac muscle depolarization followed by repolarization during each cardiac cycle (heartbeat). Changes in the normal ECG pattern occur in numerous cardiac abnormalities, including cardiac rhythm disturbances (e.g., atrial fibrillation and ventricular tachycardia), inadequate coronary artery blood flow (e.g., myocardial ischemia and myocardial infarction), and electrolyte disturbances (e.g., hypokalemia and hyperkalemia). We analyze the advantages and disadvantages of existing algorithms and the important and difficult points in the field of medical imaging, and introduce the application of intelligent imaging and deep learning to big data analysis and early disease diagnosis. Current algorithms in the field of medical imaging have made considerable progress, but substantial room for development remains.
We also focus on the optimization and improvement of different algorithms in different sub-fields under a variety of segmentation and classification indicators (e.g., Dice, IoU, accuracy, and recall), and we discuss likely future research hotspots in this field. Deep learning has developed rapidly in the field of medical imaging and has broad prospects for development. It plays an important role in the early diagnosis of diseases, and it can effectively improve the work efficiency of doctors and reduce their burden. Moreover, it has important theoretical research and practical application value.

Keywords: deep learning; target segmentation; magnetic resonance imaging (MRI); pathology; ultrasound; review

Updated: 2024-05-07
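
The indicators named in the abstract above (Dice, IoU, accuracy, and recall) can all be derived from the same four confusion counts. The following is a minimal sketch, assuming binary masks flattened to lists of 0/1 values; the function names are hypothetical and are not taken from the reviewed paper.

```python
def confusion_counts(pred, truth):
    """Return (tp, fp, fn, tn) for two equal-length sequences of 0/1 labels."""
    if len(pred) != len(truth):
        raise ValueError("pred and truth must have the same length")
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)
    tn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 0)
    return tp, fp, fn, tn


def segmentation_metrics(pred, truth):
    """Compute Dice, IoU, accuracy, and recall for binary labels.

    Empty denominators are mapped to 1.0 (an assumed convention: nothing to
    find and nothing predicted counts as a perfect score).
    """
    tp, fp, fn, tn = confusion_counts(pred, truth)
    total = tp + fp + fn + tn
    dice_den = 2 * tp + fp + fn
    iou_den = tp + fp + fn
    recall_den = tp + fn
    return {
        "dice": 2 * tp / dice_den if dice_den else 1.0,
        "iou": tp / iou_den if iou_den else 1.0,
        "accuracy": (tp + tn) / total if total else 1.0,
        "recall": tp / recall_den if recall_den else 1.0,
    }


# Toy example: a 6-pixel predicted mask against its ground truth.
metrics = segmentation_metrics([1, 1, 0, 0, 1, 0], [1, 0, 0, 0, 1, 1])
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Dice and IoU ignore true negatives, which is why they are usually preferred over accuracy when the structure of interest occupies only a small part of the image.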

Scholar View

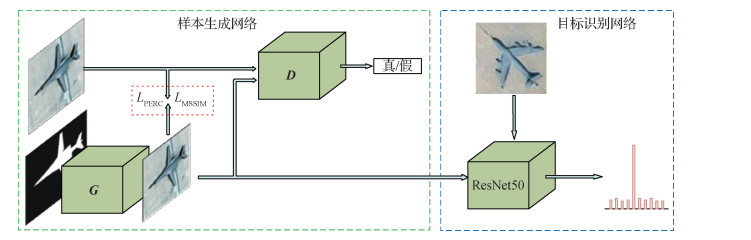

Abstract: Deep learning has had a tremendous influence on numerous research fields owing to its outstanding performance in representing high-level features of high-dimensional data. In computer vision especially, deep learning has shown powerful abilities in tasks such as image classification, object detection, and image segmentation. Normally, when applying a deep learning-based method, a suitable neural network architecture is designed for the data and task, a reasonable task-oriented objective function is set, and a large amount of labeled training data is used to calculate the target loss, optimize the model parameters by gradient descent, and finally train an "end-to-end" deep neural network model to perform the task. Data, as the driving force of deep learning, is essential for training the model. With sufficient data, overfitting during training can be alleviated, and the parametric search space can be expanded so that the model can be further optimized toward the global optimum. However, in several areas or tasks, obtaining sufficient labeled samples for training a model is difficult and expensive. As a result, overfitting often occurs during training and prevents deep learning models from achieving higher performance. Many methods have been proposed to address this issue, and data augmentation has become one of the most important solutions, increasing the amount and variety of a limited data set. Innumerable works have proven the effectiveness of data augmentation in improving the performance of deep learning models, which can be traced back to LeNet, the seminal work on convolutional neural networks. In this review, we examine the most representative image data augmentation methods for deep learning.
This review can help researchers adopt appropriate methods for their tasks and promote research progress in data augmentation. Current data augmentation methods that can relieve overfitting in deep learning models are compared and analyzed. Based on differences in internal mechanism, a taxonomy of data augmentation methods is proposed with four classes: single data warping, multiple data mixing, learning the data distribution, and learning the augmentation strategy. First, for image data, single data warping generates new data by transforming an image in the spatial or spectral domain. These methods can be divided into five categories: geometric transformations, color space transformations, sharpness transformations, noise injection, and local erasing. They have long been widely used in image data augmentation because of their simplicity. Second, multiple data mixing can be divided into mixing in image space and mixing in feature space. The mixing modes include linear mixing and nonlinear mixing of more than one image. Although mixing images seems a counter-intuitive method for data augmentation, experiments in many works have proven its effectiveness in improving the performance of deep learning models. Third, methods that learn the data distribution try to capture the underlying probability distribution of the training data and generate new samples by sampling from that distribution. This goal can be achieved by adversarial networks. Therefore, this kind of data augmentation is mainly based on generative adversarial networks and the application of image-to-image translation. Fourth, methods that learn the augmentation strategy try to train a model to select the optimal data augmentation strategy adaptively according to the characteristics of the data or task. This goal can be achieved by meta-learning, replacing data augmentation with a trainable neural network.
The strategy search problem can also be solved by reinforcement learning. When performing data augmentation in practical applications, researchers can select and combine the most suitable of the above methods according to the characteristics of their data and tasks to form an effective data augmentation scheme, which in turn gives stronger support to the application of deep learning methods by providing more effective training data. Although a better data augmentation strategy can be obtained more intelligently by learning the data distribution or searching over augmentation strategies, how to customize an optimal data augmentation scheme automatically for a given task remains to be studied. In the future, theoretical analysis and experimental verification of the suitability of various data augmentation methods for different data and tasks will be of great research significance and application value, and will enable researchers to customize an optimal data augmentation scheme for their task. A large gap remains in applying the idea of meta-learning to data augmentation, that is, constructing a "data augmentation network" that learns an optimal way of data warping or data mixing. Moreover, improving the ability of generative adversarial networks (GAN) to fit the data distribution more closely is important, because oversampling in the real data space would be the ideal way of obtaining an unlimited supply of unobserved new data. The real world has abundant cross-domain and cross-modality data. The style transfer ability of encoder-decoder networks and GAN can formulate mapping functions between different data distributions and allow data in different domains to complement one another. Thus, exploring the application of "image-to-image translation" in different fields has bright prospects.

Keywords: deep learning; overfitting; data augmentation; image transformation; generative adversarial networks (GAN); meta-learning; reinforcement learning

Updated: 2024-05-07
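
The first two classes of the taxonomy above lend themselves to a short illustration. The sketch below assumes grayscale images stored as nested lists of floats and one-hot label vectors. It shows one geometric transformation (horizontal flip), one local erasing operation, and one linear mix in image space in the style of mixup. All function names and parameters are hypothetical, and the abstract does not state which specific methods the review covers.

```python
import random


def horizontal_flip(image):
    """Geometric transformation: mirror every row of the image."""
    return [row[::-1] for row in image]


def random_erase(image, rng, max_frac=0.5, fill=0.0):
    """Local erasing: overwrite one random rectangle with a constant value.

    The rectangle covers at most max_frac of the height and of the width.
    The input image is left unmodified.
    """
    h, w = len(image), len(image[0])
    eh = rng.randint(1, max(1, int(h * max_frac)))
    ew = rng.randint(1, max(1, int(w * max_frac)))
    top = rng.randint(0, h - eh)
    left = rng.randint(0, w - ew)
    out = [row[:] for row in image]
    for r in range(top, top + eh):
        for c in range(left, left + ew):
            out[r][c] = fill
    return out


def linear_mix(image_a, label_a, image_b, label_b, lam):
    """Linear mixing in image space: blend two samples and their labels."""
    mixed_image = [
        [lam * a + (1.0 - lam) * b for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(image_a, image_b)
    ]
    mixed_label = [lam * a + (1.0 - lam) * b for a, b in zip(label_a, label_b)]
    return mixed_image, mixed_label


rng = random.Random(0)
cat = [[0.1, 0.2, 0.3, 0.4] for _ in range(4)]
dog = [[0.9, 0.8, 0.7, 0.6] for _ in range(4)]

flipped = horizontal_flip(cat)
erased = random_erase(cat, rng)
# Drawing the mixing weight from a Beta distribution is an assumed choice.
lam = rng.betavariate(0.4, 0.4)
mixed, soft_label = linear_mix(cat, [1.0, 0.0], dog, [0.0, 1.0], lam)
print(flipped[0], soft_label)
```

Mixing the labels with the same weight as the pixels is what turns the blended image into a usable training sample: the network is asked to predict a soft target instead of a single class.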

Review

Abstract: Objective: In robot path planning and obstacle avoidance, measuring the relative distance between the target and the sensor is very important. Distance measurement methods based on optical sensors have the advantages of portability, intuitiveness, and low cost, and are therefore widely used in real applications. Visual distance measurement methods commonly include monocular and binocular visual measurement. There are two main methods for binocular image measurement: estimating the distance between the target and the camera by comparing two blurred images with different optical parameters, or measuring the distance by comparing the parallax maps of these two images. However, both methods require a sufficient baseline distance between the left and right cameras, so they are not suitable for smaller installation sites (e.g., unmanned boats). Furthermore, they are computationally expensive and difficult to use in real-time applications. Monocular vision measurement was first proposed by Pentland. The basic principle is to calculate the distance between an edge in the target image and the camera from the brightness changes on both sides of that edge. Compared with binocular vision measurement, monocular vision measurement requires only one camera, and its calculation principle is simple. However, the following problems still exist in practical applications. 1) These methods rely on knowing the exact position of the target step edge in a single image, a condition that is difficult to satisfy in practice. Therefore, a preprocessing module for detecting edges must be added, which increases the complexity and reduces the adaptability of these methods. 2) When a Gaussian filter is used to approximate the blur point spread function, the loss of slowly changing edges and the low accuracy of edge positioning in Gaussian filtering are ignored.
The positioning accuracy directly affects the accuracy of monocular measurement. 3) The blurring degree of an image is directly related to the accuracy of measurement. Nevertheless, existing methods measure distance by re-blurring the target image multiple times, and the quantitative relationship between the number of re-blur passes and the accuracy of the distance measurement is unknown. Therefore, the distance measurement accuracy is difficult to control and improve from the perspective of active blurring. Method: This study proposes an adaptive method of measuring distance on the basis of a single blurred image and the B-spline wavelet function. First, the Laplacian operator is introduced to quantitatively evaluate the blurring degree of the original image and locate the target edge automatically. According to the principle of blurring, the blurring degree of the original image increases as the number of re-blurs increases. Once the blurring degree reaches a certain level, it changes more and more slowly until the change caused by two adjacent re-blurs can no longer be distinguished. On the other hand, as the re-blur number increases, the distance measurement accuracy first increases and then decreases rapidly once the re-blur number reaches a certain value. Therefore, the relationship among the calculated blurring degree, the re-blur number, and the distance measurement error is established. Then, the B-spline wavelet function is used in place of the traditional Gaussian filter to re-blur the target image actively, and the optimal re-blur number is adaptively calculated for different scene images on the basis of the relationship among the blurring degree of the image, the number of re-blurs, and the measurement error.
Finally, according to the change ratio of the blurring degree on both sides of the step edge in the original blurred image, the distance between the edge and the camera is calculated. Result: A comparison experiment on different practical images is conducted. The experimental results show that the proposed method has higher accuracy than the method of measuring distance on the basis of re-blurring with the Gaussian function; the average relative error decreases by 5%. Furthermore, when the distance measurement is performed on the same image with different re-blur numbers, the measurement accuracy is highest with the optimal re-blur number obtained from the relationship among the blurring degree of the image, the number of re-blurs, and the measurement error. Conclusion: Our proposed distance measurement method combines the advantages of the traditional Gaussian-based distance measurement method and the cubic B-spline function. Owing to the advantages of the B-spline in progressive optimization and to the method for obtaining the optimal re-blur number, our method has higher measurement accuracy, adaptivity, and robustness.

Keywords: monocular vision; measure distance; B-spline wavelet; Laplace operator; evaluation of the blurring degree

Updated: 2024-05-07
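
The interplay between a Laplacian-based blur measure and repeated re-blurring can be sketched as follows. This is an illustration under stated assumptions, not the paper's algorithm: the blurring degree is taken to be the variance of a 4-neighbour Laplacian response (a common sharpness measure, where lower means blurrier), the re-blur kernel is the separable cubic B-spline filter [1, 4, 6, 4, 1]/16, and the stopping rule only detects when two adjacent re-blurs become hard to tell apart. The paper's own rule also involves the measurement error, which is not reproduced here. All names and thresholds are hypothetical.

```python
B3_KERNEL = (1 / 16, 4 / 16, 6 / 16, 4 / 16, 1 / 16)  # cubic B-spline weights


def laplacian_variance(image):
    """Variance of the 4-neighbour Laplacian over interior pixels."""
    h, w = len(image), len(image[0])
    responses = [
        image[r - 1][c] + image[r + 1][c] + image[r][c - 1] + image[r][c + 1]
        - 4 * image[r][c]
        for r in range(1, h - 1)
        for c in range(1, w - 1)
    ]
    mean = sum(responses) / len(responses)
    return sum((v - mean) ** 2 for v in responses) / len(responses)


def _blur_rows(image):
    """Convolve every row with B3_KERNEL, replicating the border pixels."""
    w = len(image[0])
    out = []
    for row in image:
        new_row = []
        for c in range(w):
            acc = 0.0
            for k, weight in enumerate(B3_KERNEL):
                idx = min(max(c + k - 2, 0), w - 1)
                acc += weight * row[idx]
            new_row.append(acc)
        out.append(new_row)
    return out


def _transpose(image):
    return [list(col) for col in zip(*image)]


def reblur(image):
    """One separable re-blur pass: filter the rows, then the columns."""
    return _transpose(_blur_rows(_transpose(_blur_rows(image))))


def count_reblurs_until_saturation(image, rel_tol=0.05, max_passes=20):
    """Re-blur until the sharpness drop between adjacent passes is small.

    Returns (number of passes applied, sharpness after the last pass).
    """
    sharpness = laplacian_variance(image)
    for n in range(1, max_passes + 1):
        image = reblur(image)
        new_sharpness = laplacian_variance(image)
        if sharpness == 0 or (sharpness - new_sharpness) / sharpness < rel_tol:
            return n, new_sharpness
        sharpness = new_sharpness
    return max_passes, sharpness


# Synthetic vertical step edge: dark left half, bright right half.
step = [[0.0] * 4 + [1.0] * 4 for _ in range(8)]
print(laplacian_variance(step), laplacian_variance(reblur(step)))
print(count_reblurs_until_saturation(step))
```

On the step edge the sharpness score falls steeply after the first pass and more slowly afterwards, which mirrors the saturation behaviour the abstract describes.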

Abstract:
Objective: In robot path planning and obstacle avoidance, measuring the relative distance between the target and the sensor is essential. Distance measurement methods based on optical sensors are portable, intuitive, and inexpensive, so they are widely used in real applications. Visual distance measurement is commonly divided into monocular and binocular methods. Binocular measurement takes two main forms: estimating the distance between the target and the camera by comparing two blurred images with different optical parameters, or measuring the distance by comparing the parallax maps of the two images. Both forms require a sufficient baseline between the left and right cameras, so they are unsuitable for small installation sites such as unmanned boats. They are also computationally expensive and difficult to use in real-time applications. Monocular vision measurement was first proposed by Pentland. Its basic principle is to calculate the distance between an edge in the target image and the camera from the brightness changes on both sides of that edge. Compared with binocular measurement, monocular measurement requires only one camera, and its calculation principle is simple. However, the following problems remain in practice. 1) These methods rely on knowing the exact position of the target step edge in a single image. This condition is difficult to satisfy in practice, so a preprocessing module for edge detection must be added, which increases complexity and reduces adaptability. 2) When a Gaussian filter is used to approximate the blur point spread function, two weaknesses of Gaussian filtering are ignored: slowly varying edges are lost, and edge localization accuracy is low. The localization accuracy directly affects the accuracy of monocular measurement. 3) The blurring degree of an image is directly related to measurement accuracy. Nevertheless, the distance is measured by re-blurring the target image multiple times, and the quantitative relationship between the number of re-blurs and the measurement accuracy is unknown. The measurement accuracy is therefore difficult to control and improve from the perspective of active blurring.
Method: This study proposes an adaptive distance measurement method based on a single blurred image and the B-spline wavelet function. First, the Laplacian operator is introduced to quantitatively evaluate the blurring degree of the original image and to locate the target edge automatically. According to the principle of blurring, the blurring degree of the original image increases with the number of re-blurs. Once the blurring degree reaches a certain level, it changes more and more slowly, until the change caused by two adjacent re-blurs can no longer be distinguished. Meanwhile, as the number of re-blurs increases, the distance measurement accuracy first increases and then decreases rapidly once the number of re-blurs reaches a certain value. On this basis, the relationship among the calculated blurring degree, the number of re-blurs, and the distance measurement error is established. Then, the B-spline wavelet function replaces the traditional Gaussian filter to actively re-blur the target image, and the optimal number of re-blurs is calculated adaptively for each scene image from this relationship. Finally, the distance between the edge and the camera is calculated from the change ratio of the blurring degree on both sides of the step edge in the original blurred image.
Result: Comparison experiments are conducted on different practical images. The results show that the proposed method is more accurate than distance measurement based on re-blurring with the Gaussian function; the average relative error decreases by 5%. Furthermore, when distance is measured on the same image with different numbers of re-blurs, the accuracy is higher with the optimal number of re-blurs obtained from the relationship among the blurring degree, the number of re-blurs, and the measurement error.
Conclusion: The proposed method combines the advantages of the traditional Gaussian-based distance measurement method and the cubic B-spline function. Owing to the advantages of the B-spline in progressive optimization and to the method for obtaining the optimal number of re-blurs, the proposed method achieves higher measurement accuracy, adaptivity, and robustness.
Keywords: monocular vision; measure distance; B-spline wavelet; Laplace operator; evaluation of the blurring degree
Updated: 2024-05-07
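The abstract above evaluates the blurring degree with the Laplacian operator and observes that each further re-blur changes it less. The following is a minimal sketch of that behavior, not the authors' implementation. It assumes the variance of a 4-neighbour Laplacian response as the score (a lower score means a blurrier image) and a separable [1, 4, 1]/6 kernel, the cubic B-spline sampled at integer points, as the re-blur step. The names `laplacian_variance` and `reblur` are hypothetical.

```python
def laplacian_variance(img):
    """Score sharpness as the variance of the 4-neighbour Laplacian (interior pixels only)."""
    h, w = len(img), len(img[0])
    resp = [
        img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1] - 4 * img[y][x]
        for y in range(1, h - 1)
        for x in range(1, w - 1)
    ]
    mean = sum(resp) / len(resp)
    return sum((r - mean) ** 2 for r in resp) / len(resp)


def reblur(img, kernel=(1 / 6, 4 / 6, 1 / 6)):
    """Apply one separable re-blur pass; border pixels are replicated."""

    def blur_rows(m):
        out = []
        for row in m:
            n = len(row)
            out.append([
                kernel[0] * row[max(x - 1, 0)]
                + kernel[1] * row[x]
                + kernel[2] * row[min(x + 1, n - 1)]
                for x in range(n)
            ])
        return out

    def transpose(m):
        return [list(col) for col in zip(*m)]

    # Blur along rows, then along columns via a transpose round trip.
    return transpose(blur_rows(transpose(blur_rows(img))))


# Synthetic vertical step edge (assumed test image): dark left half, bright right half.
image = [[0.0] * 8 + [255.0] * 8 for _ in range(16)]

scores = []
current = image
for _ in range(4):
    scores.append(laplacian_variance(current))
    current = reblur(current)
```

In this sketch `scores` falls with every pass, so the difference between consecutive entries could serve as a stopping signal for choosing the number of re-blurs; the actual selection rule in the paper also uses the measurement error and is not reproduced here.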

Image Processing and Coding

-

Abstract:
Objective: The appearance model plays a key role in the performance of visual object tracking. In recent years, tracking algorithms based on network modulation have learned an appearance model by building an effective subnetwork, which lets them match the target in the search frames more robustly. These algorithms exhibit excellent performance on many object tracking benchmarks. However, they disregard high-order feature information, which causes drift when large-scale changes in target appearance occur. This study uses a global contextual attention-enhanced second-order network to model target appearance. This network helps enhance the nonlinear modeling capability in visual tracking.
Method: The tracker has two components, target estimation and classification, and can be regarded as a two-stage tracker. Compared with methods based on Siamese networks, it is relatively slow. The target estimation component is trained offline to predict the overlap between the target and the estimated bounding boxes. The tracker presents an effective network architecture for visual tracking that includes two novel modules. The first is pixel-wise global contextual attention (pGCA), which uses bidirectional long short-term memory (Bi-LSTM) to sweep row-wise and column-wise across feature maps and fully capture the global context information of each pixel. The second is second-order pooling modulation (SPM), which uses the feature covariance matrix of the template frame to learn a second-order modulation vector. The modulation vector then multiplies the intermediate feature maps of the query image channel-wise to transfer target-specific information from the template frame to the query frame. The widely adopted ResNet-50, pretrained on the ImageNet classification task, serves as the backbone network. Given the input template image X0 with bounding box b0 and the query image X, the feature maps of the third and fourth layers are selected for subsequent processing. The feature maps are fed into the pGCA module and the precise region of interest pooling (PrPool) module, which extract the features of the annotated area. The resulting maps are concatenated to yield multi-scale features enhanced by global context information. Moreover, to handle the misaligned features caused by large-scale deformation between the query and template images, the tracker injects two deformable convolution blocks into the bottom branch for feature alignment. The fused feature is then passed through two SPM branches, generating two modulation vectors that multiply the corresponding feature layers on the bottom branch of the search frame channel-wise. The fused feature benefits tracking performance more through network modulation than through the correlation used in Siamese networks. Thereafter, the modulated features are fed into two PrPool layers and concatenated. The output features are finally fed into the intersection over union predictor module, which is composed of three fully connected layers. Given the annotated ground truth, the tracker minimizes the estimation error to train all network parameters end to end. The classification component is a two-layer fully convolutional neural network. In contrast with the estimation component, it is trained online to predict a target confidence score, so it can provide a rough 2D location of the object. During online learning, the objective function is optimized with the conjugate gradient method instead of stochastic gradient descent for real-time tracking. For robustness, an averaging strategy, which has been widely used in discriminative correlation filters, updates the object appearance in this component. This strategy assumes that the appearance of the object changes smoothly and consistently across successive frames, and it can fully use the information of the previous frame. In the overall tracking process, the classification component first produces a rough location of the target in the form of a response map with dimensions of 14×14×1. The tracker distinguishes the specific foreground from the background according to this response map. Gaussian sampling is then used to obtain several predicted target bounding boxes. The estimation component, which is trained offline beforehand, scores the predicted boxes, and the box with the highest score is taken as the tracking result.
Result: The effectiveness and robustness of the proposed method are validated on the OTB100 (object tracking benchmark) and the challenging VOT2018 (visual object tracking) datasets. The proposed method achieves the best performance on the success and precision plots, with an area under the curve (AUC) score of 67.9% and a precision score of 87.9%, outperforming the state-of-the-art ATOM (accurate tracking by overlap maximization) by 1.5% in AUC score. Its expected average overlap (EAO) score of 0.4411 also ranks first, exceeding the second-best method, ATOM (EAO score of 0.4011), by 4%.
Conclusion: This study proposes a visual tracker based on network modulation that includes pGCA and SPM modules. The pGCA module uses Bi-LSTM to capture the global context information of each pixel. The SPM module uses the feature covariance matrix of the template frame to learn a second-order modulation vector that models target appearance; this reduces the information loss of the first frame and enhances the correlation between features. The tracker uses an averaging strategy to update object appearance in the classification component for robustness. As a result, the proposed tracker significantly outperforms state-of-the-art methods in accuracy and efficiency.
Keywords: visual object tracking (VOT); convolutional neural network (CNN); network modulation; global context; attention mechanism
Updated: 2024-05-07
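The SPM idea described above (a modulation vector derived from the template's feature covariance, multiplied channel-wise into the query features) can be illustrated with a small sketch. It is a toy under stated assumptions, not the paper's network: features are plain lists of C channels by N spatial samples, and the learned mapping from covariance to vector is replaced by a fixed stand-in (sigmoid of each covariance row mean). The names `covariance`, `modulation_vector`, and `modulate` are hypothetical.

```python
import math


def covariance(feat):
    """Return the C x C covariance of a feature given as C channels of N samples."""
    c, n = len(feat), len(feat[0])
    means = [sum(ch) / n for ch in feat]
    centered = [[v - m for v in ch] for ch, m in zip(feat, means)]
    return [
        [sum(a * b for a, b in zip(centered[i], centered[j])) / n for j in range(c)]
        for i in range(c)
    ]


def modulation_vector(cov):
    """Stand-in for the learned layers (assumption): sigmoid of each row mean."""
    return [1.0 / (1.0 + math.exp(-sum(row) / len(row))) for row in cov]


def modulate(query, vec):
    """Multiply every query channel by its modulation weight."""
    return [[v * w for v in ch] for ch, w in zip(query, vec)]


# Toy template feature: 3 channels, 4 spatial samples each.
template = [[1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0], [1.0, 1.0, 1.0, 1.0]]
# Toy query feature: 3 channels, 2 spatial samples each.
query = [[1.0, 1.0], [2.0, 2.0], [4.0, 4.0]]

cov = covariance(template)
vec = modulation_vector(cov)
modulated = modulate(query, vec)
```

Channels that co-vary strongly with others in the template receive weights closer to 1 here, while the constant third channel gets the neutral weight 0.5; in the actual tracker this mapping is learned rather than fixed.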

Abstract:
Objective: Although the background-aware correlation filters (BACF) algorithm increases the number of samples and guarantees sample quality, it trains on the background information with equal weights, which causes target drift when the target resembles the background in complex scenes. Equal-weight training ignores both the priority of sample collection along the target's movement direction and the importance of weight distribution. If sampling can be designed effectively along the movement direction and the sample weights allocated reasonably, the tracking effect will improve and target drift will be resolved effectively. Therefore, this paper adds Kalman filtering to the BACF framework.
Method: For the single-target tracking problem, the proposed algorithm takes only the motion vector from the predicted value and does not locate the target according to constant speed or acceleration. The target position is still determined by the response peak, where the maximum response value is obtained by linear interpolation. When the speed is zero, the response peak that located the target in the previous frame is still used to determine the target position in the current frame. Kalman filtering predicts the target's motion state and direction, and the background information in the motion direction and in the non-motion directions is used for filter training such that the training weight assigned to the background in the motion direction is higher than that in the non-motion directions. To solve the resulting optimization problem, an auxiliary factor g is constructed, the augmented Lagrangian multiplier method is used to place the constraints in the optimization function, and the alternating direction method of multipliers (ADMM) is used to optimize the filter and the auxiliary factor, which reduces computational complexity.
Result: The standard datasets OTB50 (object tracking benchmark) and OTB100 are selected to facilitate experimental comparison with current mainstream algorithms. OTB50 is a commonly used tracking dataset that contains 50 groups of video sequences with 11 different attributes, such as lighting changes and occlusions. OTB100 adds 50 test sequences to OTB50. Each sequence may have different video attributes, which makes tracking challenging. One-pass evaluation (OPE) is used to analyze performance, with tracking accuracy and success rate as the evaluation criteria. In video sequence Board_1, the proposed algorithm, ECO (efficient convolution operators), SRDCF (spatially regularized correlation filters), and DeepSTRCF (deep spatial-temporal regularized correlation filters) all track accurately, but the proposed algorithm is substantially faster than the other three. In video sequence Panda_1, the tracking effect of the proposed algorithm is stable at low resolution. In video sequence Box_1, only the proposed algorithm tracks the target accurately from the first frame to the last, because the Kalman filter predicts the direction of the target and effectively distinguishes the target from the background, which prevents the tracker from following similar background regions. Experimental results show that the average accuracy rate and average success rate of the algorithm on OTB50 and OTB100 are 0.804 and 0.748, respectively, which are 7% and 16% higher than those of the BACF algorithm. On the experimental sequences, the tracking success rate and accuracy of the proposed algorithm are high and meet real-time requirements.
Conclusion: This paper uses Kalman filtering to predict the direction and state of the target, assigns different weights to the background information in different directions for filter training, and obtains the maximum response value by linear interpolation to determine the target position. The ADMM method transforms the problem of solving the target model into two subproblems with optimal solutions. An online adaptive method addresses target deformation during model update. Numerous comparative experiments are performed on the OTB50 and OTB100 datasets. On OTB50, the success rate and accuracy rate of the proposed algorithm are 0.720 and 0.777, respectively; on OTB100, they are 0.773 and 0.828, respectively. Both results are better than those of current mainstream algorithms, which shows that the proposed algorithm has better accuracy and robustness. In background-aware tracking, the sampling method and weight allocation directly affect tracking performance. The next step is in-depth research on the construction of a speed-adaptive sample collection model.
Keywords: computer vision; target tracking; correlation filter; background-aware; Kalman filters; alternating direction method of multipliers (ADMM)
Updated: 2024-05-07
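The abstract above takes only a motion vector from the Kalman prediction and gives background samples in the motion direction a higher training weight. A minimal sketch of that pairing follows, under assumptions that are not from the paper: a constant-velocity filter per image axis, four background patches (left, right, up, down), and a hypothetical weighting rule `base + gain * max(0, cos θ)`. All names and parameter values are illustrative.

```python
import math


class AxisKalman:
    """Constant-velocity Kalman filter for one image axis (state: position, velocity)."""

    def __init__(self, position, q=0.01, r=1.0):
        self.p, self.v = float(position), 0.0
        self.P = [[10.0, 0.0], [0.0, 10.0]]  # initial state covariance (assumed)
        self.q, self.r = q, r                # process / measurement noise (assumed)

    def predict(self, dt=1.0):
        (a, b), (c, d) = self.P
        self.p += self.v * dt
        # P <- F P F^T + Q with F = [[1, dt], [0, 1]] and Q = q * I
        self.P = [[a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
                  [c + dt * d, d + self.q]]

    def update(self, z):
        (a, b), (c, d) = self.P
        s = a + self.r                # innovation variance (only position is measured)
        k0, k1 = a / s, c / s         # Kalman gain
        y = z - self.p                # innovation
        self.p += k0 * y
        self.v += k1 * y
        self.P = [[(1 - k0) * a, (1 - k0) * b],
                  [c - k1 * a, d - k1 * b]]


# Unit offsets of the four background patches around the target (image coordinates).
OFFSETS = {"left": (-1, 0), "right": (1, 0), "up": (0, -1), "down": (0, 1)}


def direction_weights(vx, vy, base=1.0, gain=1.0):
    """Give patches aligned with the motion vector a larger training weight."""
    speed = math.hypot(vx, vy)
    if speed == 0.0:
        return {name: base for name in OFFSETS}  # no motion: equal weights
    return {
        name: base + gain * max(0.0, (dx * vx + dy * vy) / speed)
        for name, (dx, dy) in OFFSETS.items()
    }


# Target centre moving right by 2 px per frame at constant height.
kx, ky = AxisKalman(0.0), AxisKalman(5.0)
for x, y in [(2.0, 5.0), (4.0, 5.0), (6.0, 5.0), (8.0, 5.0)]:
    for axis_filter, z in ((kx, x), (ky, y)):
        axis_filter.predict()
        axis_filter.update(z)

weights = direction_weights(kx.v, ky.v)
```

With the rightward track above, the estimated velocity is positive along x and zero along y, so the "right" patch receives the largest weight while the other three stay at the base value. As in the abstract, the target position itself would still come from the correlation response peak, not from the filter.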

Abstract:
Objective: Object detection is a fundamental task in computer vision that supports subsequent object tracking, semantic segmentation, and behavior recognition. Recent years have witnessed substantial progress in still-image object detection based on deep convolutional neural networks (DCNNs). The task of still-image object detection is to determine the category and position of each object in an image. Video object detection aims to locate moving objects in sequential images and assign a specific category label to each object. Its accuracy suffers from degraded object appearances in videos, such as motion blur, multi-object occlusion, and rare poses. Still-image detectors achieve excellent results, but applying them directly to video object detection is challenging. Most existing video object detection methods build on still-image detection and exploit the temporal and spatial information in videos, improving the accuracy of moving object detection by considering spatiotemporal consistency.
Method: This paper proposes a video object detection method that fuses the single shot multibox detector (SSD) with spatiotemporal features. Under the SSD framework, the temporal and spatial information of the video is applied to detection through an optical flow network and a feature pyramid network. On the one hand, a network combining the residual network (ResNet) 101 with four extra convolutional layers extracts the feature map of each video frame. An optical flow network estimates the optical flow fields between the current frame and multiple adjacent frames to enhance the features of the current frame. The feature maps of adjacent frames are compensated to the current frame according to the optical flow fields. The compensated feature maps and the feature map of the current frame are then aggregated according to adaptive weights, which indicate the importance of each compensated feature map to the current frame. Here, the cosine similarity metric measures the similarity between a compensated feature map and the feature map extracted from the current frame. If the compensated feature map is close to that of the current frame, it is assigned a larger weight; otherwise, it is assigned a smaller weight. Moreover, an embedding network consisting of three convolutional layers is applied to the compensated feature maps and the current feature map to produce embedding feature maps, from which the adaptive weights are computed. On the other hand, the feature pyramid network extracts multiscale feature maps for detecting objects of different sizes. The low- and high-level feature maps are used to detect smaller and larger objects, respectively. To address the small-object detection problem of the original SSD network, the low-level feature map is combined with the high-level feature map through an upsampling operation and a 1×1 convolutional layer, which enhances the semantic information of the low-level feature map. The upsampling operation extends the high-level feature map to the same resolution as the low-level feature map, and the 1×1 convolutional layer reduces the channel dimensions of the low-level feature map to match those of the high-level feature map. Finally, the multiscale feature maps are input into the detection network to predict bounding boxes, and non-maximum suppression filters the redundant bounding boxes to obtain the final ones.
Result: Experimental results show that the mean average precision (mAP) score of the proposed method on the ImageNet VID (ImageNet for video object detection) dataset reaches 72.0%, which is 24.5%, 3.6%, and 2.5% higher than those of the temporal convolutional network, the method combining a tubelet proposal network with a long short-term memory network, and the method combining SSD with a Siamese network, respectively. In addition, an ablation experiment is conducted with five network structures, namely, the 16-layer visual geometry group (VGG16) network, the ResNet101 network, the network combining ResNet101 with the feature pyramid network, and the network combining ResNet101 with spatiotemporal fusion. The network structure combining ResNet101 with spatiotemporal fusion improves the mAP score by 11.8%, 7.0%, and 1.2% compared with the first four network structures. For further analysis, the mAP scores for slow, medium, and fast objects are reported in addition to the standard mAP score. Combined with optical flow, our method improves the mAP scores for slow, medium, and fast objects by 0.6%, 1.9%, and 2.3%, respectively, compared with the network combining ResNet101 with the feature pyramid network. These results show that the proposed method improves the accuracy of video object detection, especially for fast objects.
Conclusion: The proposed method uses the temporal and spatial correlation of the video through spatiotemporal fusion to improve the accuracy of video object detection. The optical flow network allows the feature map of the current frame to be compensated with the feature maps of multiple adjacent frames. Temporal feature fusion reduces false negatives and false positives in video object detection. In addition, the multiscale feature maps produced by the feature pyramid network can detect objects of different sizes, and multiscale feature map fusion enhances the semantic information of the low-level feature map, which improves its ability to detect small objects.
Keywords: object detection; single shot multibox detector (SSD); feature fusion; optical flow; feature pyramid network
Updated: 2024-05-07
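The adaptive weighting described above (a larger weight for compensated features that are closer in cosine similarity to the current frame) can be sketched at a single spatial location as follows. This is an illustration under assumptions, not the paper's code: each feature is a short vector standing in for the embedding at one location, and the weights are normalized with a softmax over the similarities, which the abstract does not specify. The function names are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def adaptive_weights(current, candidates):
    """Softmax over cosine similarities to the current frame (normalization is assumed)."""
    sims = [cosine(current, f) for f in candidates]
    top = max(sims)
    exps = [math.exp(s - top) for s in sims]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def aggregate(candidates, weights):
    """Weighted sum of the candidate features."""
    dim = len(candidates[0])
    return [sum(w * f[i] for w, f in zip(weights, candidates)) for i in range(dim)]


# Current-frame feature plus two flow-compensated neighbours (toy values).
current = [1.0, 0.0]
candidates = [current, [0.8, 0.6], [0.0, 1.0]]

weights = adaptive_weights(current, candidates)
fused = aggregate(candidates, weights)
```

The current frame's own feature is included among the candidates, matching the abstract's statement that it is aggregated together with the compensated maps; the neighbour that has drifted furthest from it contributes least to `fused`.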

摘要:ObjectiveObject detection is a fundamental task in computer vision applications, which provides support for subsequent object tracking, semantic segmentation, and behavior recognition. Recent years have witnessed substantial progress in still image object detection based on deep convolutional neural network (DCNN). The task of still image object detection is to determine the category and position of each object in an image. Video object detection aims to locate a moving object in sequential images and assign a specific category label to each object. The accuracy of video object detection suffers from degenerated object appearances in videos, such as motion blur, multiobject occlusion, and rare poses. The methods of still image object detection achieve excellent results, but directly applying them to video object detection is challenging. According to the temporal and spatial information in videos, most existing video object detection methods improve the accuracy of moving object detection by considering spatiotemporal consistency based on still image object detection.MethodIn this paper, we propose a video object detection method using fusion of single shot multibox detector (SSD) and spatiotemporal features. Under the framework of SSD, temporal and spatial information of the video are applied to video object detection through the optical flow network and the feature pyramid network. On the one hand, the network combining residual network (ResNet) 101 with four extra convolutional layers is used for feature extraction to produce the feature map in each frame of the video. An optical flow network estimates the optical flow fields between the current frame and multiple adjacent frames to enhance the feature of the current frame. The feature maps from adjacent frames are compensated to the current frame according to the optical flow fields. 
The compensated feature maps and the feature map of the current frame are aggregated according to adaptive weights, which indicate the importance of each compensated feature map to the current frame. The cosine similarity metric measures the similarity between each compensated feature map and the feature map extracted from the current frame. If a compensated feature map is close to the feature map of the current frame, it is assigned a larger weight; otherwise, it is assigned a smaller weight. Moreover, an embedding network consisting of three convolutional layers is applied to the compensated feature maps and the current feature map to produce embedding feature maps, which are used to compute the adaptive weights. On the other hand, the feature pyramid network extracts multiscale feature maps that are used to detect objects of different sizes. The low- and high-level feature maps are used to detect smaller and larger objects, respectively. To address the problem of small object detection in the original SSD network, the low-level feature map is combined with the high-level feature map via an upsampling operation and a 1×1 convolutional layer to enrich the semantic information of the low-level feature map. The upsampling operation extends the high-level feature map to the same resolution as the low-level feature map, and the 1×1 convolutional layer reduces the channel dimensions of the low-level feature map to be consistent with those of the high-level feature map.
Then, the multiscale feature maps are input into the detection network to predict bounding boxes, and nonmaximum suppression filters the redundant bounding boxes to obtain the final bounding boxes.
Result: Experimental results show that the mean average precision (mAP) score of the proposed method on the ImageNet VID (ImageNet for video object detection) dataset reaches 72.0%, which is 24.5%, 3.6%, and 2.5% higher than those of the temporal convolutional network, the method combining a tubelet proposal network with a long short-term memory network, and the method combining SSD with a siamese network, respectively. In addition, an ablation experiment is conducted with four network structures: the 16-layer visual geometry group (VGG16) network, the ResNet101 network, the network combining ResNet101 with the feature pyramid network, and the network combining ResNet101 with spatiotemporal fusion. The network structure combining ResNet101 with spatiotemporal fusion improves the mAP score by 11.8%, 7.0%, and 1.2% compared with the other three network structures, respectively. For further analysis, the mAP scores of slow, medium, and fast objects are reported in addition to the standard mAP score. Our method combined with optical flow improves the mAP scores of slow, medium, and fast objects by 0.6%, 1.9%, and 2.3%, respectively, compared with the network structure combining ResNet101 with the feature pyramid network. These results show that the proposed method improves the accuracy of video object detection, especially for fast objects.
Conclusion: The proposed method uses the temporal and spatial correlation of the video, through spatiotemporal fusion, to improve the accuracy of video object detection. Using the optical flow network in video object detection makes it possible to compensate the feature map of the current frame according to the feature maps of multiple adjacent frames.
False negatives and false positives can be reduced through temporal feature fusion in video object detection. In addition, the multiscale feature maps produced by the feature pyramid network can detect objects of different sizes, and multiscale feature map fusion enriches the semantic information of the low-level feature map, which improves its ability to detect small objects.
Keywords: object detection; single shot multibox detector (SSD); feature fusion; optical flow; feature pyramid network
Updated: 2024-05-07
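The nonmaximum suppression step mentioned in the abstract above can be sketched as a greedy procedure. This is a generic illustration, not the authors' code: the `(x1, y1, x2, y2)` box layout, the IoU threshold of 0.5, and the function names are assumptions.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2, y2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the best-scoring box, drop boxes that overlap it too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep box i only if it does not overlap any already kept box too much.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


# Two heavily overlapping boxes and one separate box.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
```

Here the second box overlaps the first with an IoU of about 0.68, so it is suppressed and only the first and third boxes remain.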

Abstract:
Objective: As an important part of intelligent transportation systems, automatic license plate detection and recognition (ALPR) has long been a research hotspot in computer vision. The development of deep learning, new requirements from autonomous driving and safe-city applications, and the complex license plate images taken by mobile phones and other mobile terminal devices now pose new challenges for license plate recognition: the background color, size, and type of license plates vary across countries; license plate images are susceptible to complex environmental factors such as poor lighting, rain, snow, and cluttered backgrounds; and the diversity of acquisition equipment (such as mobile phones and law enforcement recorders) in real ALPR applications leads to various irregular distortions of license plate images. A license plate is usually rectangular, with a fixed aspect ratio and a definite color; hence, edge information and color features are frequently used to detect license plates in traditional ALPR techniques. These methods are highly efficient in controlled scenarios such as the entrance of a parking lot, but in natural scenarios they are very sensitive to illumination variation, multiple viewpoints, stains, occlusion, image blur, and other factors, and their detection results fall far short of application requirements. Methods based on deep learning have made remarkable achievements in license plate detection and character recognition, and their recognition accuracy is higher than that of traditional ALPR techniques. However, they simply treat the license plate as a regular rectangular area and do not consider that the license plate is distorted into an irregular quadrilateral in natural scenarios.
These methods all use anchor-based object detectors to detect the license plate, but the anchor size is usually fixed, which results in low detection accuracy for heavily distorted objects. License plates captured in natural scenarios are often distorted, especially in surveillance and cellphone videos; thus, the recognition accuracy of deep learning methods can still be improved. To handle irregular, distorted license plates in natural scenarios and make full use of license plate shape characteristics, this paper designs a distorted license plate detection model named the distorted license plate detection network (DLPD-Net).
Method: For the first time, DLPD-Net applies the anchor-free object detection method to license plate detection. Instead of using anchors to obtain candidate license plate regions, it predicts the license plate center from the heat map and offset map of the license plate. First, DLPD-Net uses ResNet-50 to extract the feature map of the input image and then obtains a nine-channel feature map through a detection block (comprising the heat map, offset map, and affine transformation parameter map). Local peaks in the heat map are taken as license plate centers, and a square of fixed size is assumed at each such location. The affine transformation parameters obtained by regression are used to construct the affine matrix, which transforms the imaginary square into a quadrilateral corresponding to the shape of the license plate. Finally, the license plate region is obtained by translating the quadrilateral by the offset value, and the distorted license plate is extracted and rectified into a planar rectangle similar to the front view. To train DLPD-Net effectively, a complete loss function is designed that consists of three parts: heat map loss, offset loss, and affine loss.
The focal loss function is used to train the heat map and to address the imbalance between positive and negative samples in license plate center prediction. L1 loss is used to train the offset map and obtain the local offset of each object center, because the output stride introduces a discretization error in the real object coordinates. The affine loss is obtained by calculating the differences between the transformed corners of the unit square and the normalized corners of the license plate and then summing them.
Result: On the one hand, the performance of DLPD-Net is evaluated on the CD-HARD dataset, and the results show that DLPD-Net finds the corners of distorted license plates well. On the other hand, based on DLPD-Net, this paper designs a distorted license plate recognition system for natural scenarios, which is composed of three modules: a vehicle detection module, a license plate detection and correction module, and a license plate character recognition module. Experimental results show that DLPD-Net outperforms commercial systems and license plate detection methods from the literature in distorted license plate detection and improves the recognition accuracy of the license plate recognition system. On the CD-HARD dataset, the system's recognition accuracy is 79.4%, which is 4.4% to 12.1% higher than that of other methods, and the average processing time is 237 ms. On the AOLP dataset, the system's recognition accuracy reaches 96.6%, or 94.9% without augmented samples, which is 1.6% to 25.2% higher than that of other methods, and the average processing time is 185 ms.
Conclusion: A distorted license plate detection model for natural scenarios, named DLPD-Net, is proposed. The model can extract the distorted license plate from the image and rectify it into a planar rectangle similar to the front view, which is very useful for license plate character recognition. Based on DLPD-Net, an ALPR system is proposed.
Experimental results show that DLPD-Net can detect license plates under various distortion conditions in challenging datasets. It is robust and detects plates well in complex natural scenarios involving occlusion, dirt, and image blur. The distorted license plate recognition system based on DLPD-Net is more practical in unconstrained natural scenarios.
Keywords: automatic license plate detection and recognition (ALPR); deep learning; license plate detection; license plate correction; character recognition
Updated: 2024-05-07
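The decoding and affine loss steps described in the abstract above can be illustrated with a small sketch. It rests on several assumptions that the abstract does not confirm: the six affine parameters are laid out as `(a, b, tx, c, d, ty)`, the fixed-size square is a unit square centered at the origin, the output stride is 4, and the affine loss is a sum of absolute (L1) differences. All names are hypothetical, and the code is not the DLPD-Net implementation.

```python
# Corners of the imaginary unit square, centered at the origin (assumed layout).
UNIT_SQUARE = [(-0.5, -0.5), (0.5, -0.5), (0.5, 0.5), (-0.5, 0.5)]


def transform_unit_square(affine):
    """Apply the affine map [[a, b, tx], [c, d, ty]] to the unit square corners."""
    a, b, tx, c, d, ty = affine
    return [(a * x + b * y + tx, c * x + d * y + ty) for x, y in UNIT_SQUARE]


def decode_plate(peak, offset, affine, stride=4):
    """Turn a heat map peak into four plate corners in input-image pixels.

    peak   -- (col, row) of a local maximum in the heat map
    offset -- sub-cell correction from the offset map
    stride -- output stride of the network (4 is an assumed value)
    """
    cx = (peak[0] + offset[0]) * stride
    cy = (peak[1] + offset[1]) * stride
    return [(cx + u, cy + v) for u, v in transform_unit_square(affine)]


def affine_loss(affine, target_corners):
    """Sum of absolute differences between transformed and target corners."""
    predicted = transform_unit_square(affine)
    return sum(
        abs(px - gx) + abs(py - gy)
        for (px, py), (gx, gy) in zip(predicted, target_corners)
    )


# Toy example: a 40 x 20 axis-aligned plate.
affine = (40.0, 0.0, 0.0, 0.0, 20.0, 0.0)
quad = decode_plate(peak=(10, 5), offset=(0.5, 0.25), affine=affine)
perfect = affine_loss(affine, transform_unit_square(affine))
shifted_targets = [(x + 1.0, y) for x, y in transform_unit_square(affine)]
shifted = affine_loss(affine, shifted_targets)
```

Because an affine map sends a square to a parallelogram, this sketch can represent sheared and rotated plates; a nonzero `b` or `c` in the example would tilt the decoded corners accordingly.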