最新刊期

卷 26 , 期 2 , 2021

- 摘要:The Conference on Neural Information Processing Systems (NeurIPS), as a top-tier conference in the field of machine learning and also a China Computer Federation(CCF)-A conference, has been receiving lots of attention. NeurIPS 2020 received a record-breaking 9 467 submissions, and finally accepted 1 898 papers, which covered various topics of artificial intelligence(AI), such as deep learning and its applications, reinforcement learning and planning, theory, probabilistic methods, optimization, and the social aspect of machine learning. In this paper, we first reviewed the highlights and statistical information of NeurIPS 2020, for example, using GatherTown (each attendee is represented by a cartoon character) to improve the experience of immersive interactions with each other. Following that, we summarized the invited talks which covered multiple disciplines such as cryptography, feedback control theory, causal inference, and biology. Moreover, we provide a quick review of best papers, orals and some interesting posters, hoping to help readers have a quick glance over NeurIPS 2020.关键词:artificial intelligence(AI);machine learning;deep learning;reinforcement learning;theory;optimization;academic conference;NeurIPS 202068|57|0更新时间:2024-05-07

Frontier

-

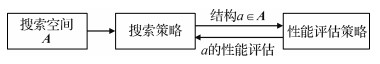

摘要:Deep neural networks(DNNs) have achieved remarkable progress over the past years on a variety of tasks, such as image recognition, speech recognition, and machine translation. One of the most crucial aspects for this progress is novel neural architectures, in which hierarchical feature extractors are learned from data in an end-to-end manner rather than manually designed. Neural network training can be considered an automatic feature engineering process, and its success has been accompanied by an increasing demand for architecture engineering. At present, most neural networks are developed by human experts; however, the process involved is time-consuming and error-prone. Consequently, interest in automated neural architecture search methods has increased recently. Neural architecture search can be regarded as a subfield of automated machine learning, and it significantly overlaps with hyperparameter optimization and meta learning. Neural architecture search can be categorized into three dimensions: search space, search strategy, and performance estimation strategy. The search space defines which architectures can be represented in principle. The choice of search space largely determines the difficulty of optimization and search time. To reduce search time, neural architecture search is typically not applied to the entire network, but instead, the neural network is divided into several blocks and the search space is designed inside the blocks. All the blocks are combined into a whole neural network by using a predefined paradigm. In this manner, the search space can be significantly reduced, saving search time. In accordance with different situations, the architecture of the searched block can be shared or not. If the architecture is not shared, then every block has a unique architecture; otherwise, all the blocks in the neural network exhibit the same architecture. In this manner, search time can be further reduced. The search strategy details how the search space can be explored. Many search strategies can be used to explore the space of neural architectures, including random search, reinforcement learning, evolution algorithm, Bayesian optimization, and gradient-based optimization. A search strategy encompasses the classical exploration-exploitation trade-off. The objective of neural architecture search is typically to find architectures that achieve high predictive performance on unseen data. Performance estimation refers to the process of estimating this performance. The most direct approach is performing complete training and validation of the architecture on target data. This technique is extremely time-consuming, in the order of thousands of graphics processing unit (GPU) days. Thus, we generally do not train each candidate to converge. Instead, methods, such as like weight sharing, early stopping, or searching smaller proxy datasets, are used in the performance estimation strategy, considerably reducing training time for each candidate architecture performance estimation. Weight sharing can be achieved by inheriting weights from pretrained models or searching a one-shot model, whose weights are then shared across different architectures that are merely subgraphs of the one-shot model. The early stopping method estimates performance in accordance with the early stage validation result via learning curve extrapolation. Training on a smaller proxy dataset finds a neural architecture on a small dataset, such as CIFAR-10. Then, the architecture is trained on the target large dataset, such as ImageNet. Compared with neural networks developed by human experts, models found via neural architecture search exhibit better performance on various tasks, such as image classification, image detection, and semantic segmentation. For the ImageNet classification task, for example, MobileNetV3, which was found via neural architecture search, reduced approximately 30% FLOPs compared with the MobileNetV2, which was designed by human experts, with more 3.2% top-1 accuracy. For the Cityscapes segmentation task, Auto-DeepLab-L found via neural architecture search has exhibited better performance than DeepLabv3+, with only half multi-adds. In this survey, we propose several neural architecture methods and applications, demonstrating that neural networks found via neural architecture search outperform manually designed architectures on certain tasks, such as image classification, object detection, and semantic segmentation. However, insights into why specific architectures work efficiently remain minimal. Identifying common motifs, providing an understanding why these motifs are important for high performance, and investigating whether these motifs can be generalized over different problems will be desirable.关键词:artificial intelligence;computer vision;deep neural networks(DNNs);reinforcement learning;evolution algorithm;neural architecture search (NAS)39|73|3更新时间:2024-05-07

摘要:Deep neural networks(DNNs) have achieved remarkable progress over the past years on a variety of tasks, such as image recognition, speech recognition, and machine translation. One of the most crucial aspects for this progress is novel neural architectures, in which hierarchical feature extractors are learned from data in an end-to-end manner rather than manually designed. Neural network training can be considered an automatic feature engineering process, and its success has been accompanied by an increasing demand for architecture engineering. At present, most neural networks are developed by human experts; however, the process involved is time-consuming and error-prone. Consequently, interest in automated neural architecture search methods has increased recently. Neural architecture search can be regarded as a subfield of automated machine learning, and it significantly overlaps with hyperparameter optimization and meta learning. Neural architecture search can be categorized into three dimensions: search space, search strategy, and performance estimation strategy. The search space defines which architectures can be represented in principle. The choice of search space largely determines the difficulty of optimization and search time. To reduce search time, neural architecture search is typically not applied to the entire network, but instead, the neural network is divided into several blocks and the search space is designed inside the blocks. All the blocks are combined into a whole neural network by using a predefined paradigm. In this manner, the search space can be significantly reduced, saving search time. In accordance with different situations, the architecture of the searched block can be shared or not. If the architecture is not shared, then every block has a unique architecture; otherwise, all the blocks in the neural network exhibit the same architecture. In this manner, search time can be further reduced. The search strategy details how the search space can be explored. Many search strategies can be used to explore the space of neural architectures, including random search, reinforcement learning, evolution algorithm, Bayesian optimization, and gradient-based optimization. A search strategy encompasses the classical exploration-exploitation trade-off. The objective of neural architecture search is typically to find architectures that achieve high predictive performance on unseen data. Performance estimation refers to the process of estimating this performance. The most direct approach is performing complete training and validation of the architecture on target data. This technique is extremely time-consuming, in the order of thousands of graphics processing unit (GPU) days. Thus, we generally do not train each candidate to converge. Instead, methods, such as like weight sharing, early stopping, or searching smaller proxy datasets, are used in the performance estimation strategy, considerably reducing training time for each candidate architecture performance estimation. Weight sharing can be achieved by inheriting weights from pretrained models or searching a one-shot model, whose weights are then shared across different architectures that are merely subgraphs of the one-shot model. The early stopping method estimates performance in accordance with the early stage validation result via learning curve extrapolation. Training on a smaller proxy dataset finds a neural architecture on a small dataset, such as CIFAR-10. Then, the architecture is trained on the target large dataset, such as ImageNet. Compared with neural networks developed by human experts, models found via neural architecture search exhibit better performance on various tasks, such as image classification, image detection, and semantic segmentation. For the ImageNet classification task, for example, MobileNetV3, which was found via neural architecture search, reduced approximately 30% FLOPs compared with the MobileNetV2, which was designed by human experts, with more 3.2% top-1 accuracy. For the Cityscapes segmentation task, Auto-DeepLab-L found via neural architecture search has exhibited better performance than DeepLabv3+, with only half multi-adds. In this survey, we propose several neural architecture methods and applications, demonstrating that neural networks found via neural architecture search outperform manually designed architectures on certain tasks, such as image classification, object detection, and semantic segmentation. However, insights into why specific architectures work efficiently remain minimal. Identifying common motifs, providing an understanding why these motifs are important for high performance, and investigating whether these motifs can be generalized over different problems will be desirable.关键词:artificial intelligence;computer vision;deep neural networks(DNNs);reinforcement learning;evolution algorithm;neural architecture search (NAS)39|73|3更新时间:2024-05-07 -

摘要:Image quality assessment (IQA) has been a fundamental issue in the fields of image processing and computer vision. It has also been extensively applied to other relevant research areas, such as image/video coding, super-resolution and visual enhancement. In general, IQA consists of subjective and objective evaluations. Subjective evaluation always refers to estimating the visual quality of images by subject, with the goal of building test benchmarks. Objective evaluation typically resorts to computational algorithms (i.e., IQA models) to make visual quality predictions, and its ultimate objective is to provide consistent judgment with subjects. The effectiveness of objective IQA models must be verified on test benchmarks built via subjective evaluation. Undoubtedly, subjective evaluation cannot be fully embedded into multimedia processing applications because such process is time-consuming and labor-intensive. By contrast, an objective IQA model can work efficiently as an important module in multimedia processing applications, playing roles in visual image quality monitoring, image filtering, and visual quality enhancement. Given their availability, research on objective IQA models has elicited considerable attention from industries and academia. Objective IQA models can be classified into three categories: full-reference (FR), reduced-reference (RR), and no-reference/blind (NR) models. FR and RR models denote that reference information for estimating the visual quality of images is completely and partially available, respectively. Meanwhile, an NR model indicates that reference information is unavailable for visual quality prediction. Although reference-based IQA models (i.e., FR and RR models) are relatively reliable, their applications are limited to specific scenarios due to their dependence on reference information. By contrast, NR-IQA models are more flexible than reference-based models because they are free from the constraint of reference information. Consequently, NR-IQA models have consistently been a popular research topic over the past decades. In this study, we introduce NR-IQA models published from 2012 to 2020 to provide a comprehensive survey on feature engineering and end-to-end learning techniques in NR-IQA. In accordance with whether subjective quality scores are involved in training procedures, NR-IQA models are classified into two categories: opinion-aware/supervised and opinion-unaware/unsupervised NR-IQA models. To present a clear and integrated description, each category is further divided into two subclasses: traditional machine learning-based models (MLMs) and deep learning-based models (DLMs). For the former subclass, we mostly investigate their individual feature extraction schemes and the principle behind these schemes. In particular, a widely adopted feature extraction approach in MLMs, namely, natural scene statistics (NSS), is introduced in this study. The principle of NSS is as follows: some visual features of quality perfect images follow certain associated distributions; meanwhile, different types of distortions will break this rule in corresponding methods. On the basis of this observation/fact, researchers have proposed many NSS-based NR-IQA methods, in which the estimated parameters of the established distributions are used as quality-aware features. Thereafter, a machine learning algorithm is selected to train the IQA models. Another well-known feature extraction approach described in this study relies on dictionary learning, which is frequently accompanied by sparse coding. The core component of this type of feature extraction approach is to learn a dictionary by searching for a group of over-complete bases. Then, these over-complete bases are used to build a reference system for image representation. A test image can be concretely represented directly or indirectly by the constructed dictionary by using sparse indexes or cluster centroids. Image representations are further used as quality-aware features to capture variations in image quality. For the latter subclass (i.e., DLMs), the design principles described in detail in this paper mostly correspond to different architectures of deep neural networks. In particular, we introduce three different schemes for designing opinion-aware DLMs and commonly used strategies in opinion-unaware DLMs. To guarantee length balance among various contents and clearly exhibit the differences between NR-IQA models designed for natural images and other types of images, we introduce them separately in subsections. In addition, we provide a brief introduction into IQA research on new media, including virtual reality, light field, and underwater sonar images, along with the applications of IQA models. Finally, an in-depth conclusion about NR-IQA models is drawn in the last section. We summarize the current achievements and limitations of MLMs and DLMs. Furthermore, we highlight the potential development trends and directions of NR-IQA models for further improvements from the perspectives of image contents and NR-IQA models.关键词:image quality assessment (IQA);human visual system (HVS);visual perception;natural scene statistics (NSS);machine learning;deep learning257|1276|19更新时间:2024-05-07

摘要:Image quality assessment (IQA) has been a fundamental issue in the fields of image processing and computer vision. It has also been extensively applied to other relevant research areas, such as image/video coding, super-resolution and visual enhancement. In general, IQA consists of subjective and objective evaluations. Subjective evaluation always refers to estimating the visual quality of images by subject, with the goal of building test benchmarks. Objective evaluation typically resorts to computational algorithms (i.e., IQA models) to make visual quality predictions, and its ultimate objective is to provide consistent judgment with subjects. The effectiveness of objective IQA models must be verified on test benchmarks built via subjective evaluation. Undoubtedly, subjective evaluation cannot be fully embedded into multimedia processing applications because such process is time-consuming and labor-intensive. By contrast, an objective IQA model can work efficiently as an important module in multimedia processing applications, playing roles in visual image quality monitoring, image filtering, and visual quality enhancement. Given their availability, research on objective IQA models has elicited considerable attention from industries and academia. Objective IQA models can be classified into three categories: full-reference (FR), reduced-reference (RR), and no-reference/blind (NR) models. FR and RR models denote that reference information for estimating the visual quality of images is completely and partially available, respectively. Meanwhile, an NR model indicates that reference information is unavailable for visual quality prediction. Although reference-based IQA models (i.e., FR and RR models) are relatively reliable, their applications are limited to specific scenarios due to their dependence on reference information. By contrast, NR-IQA models are more flexible than reference-based models because they are free from the constraint of reference information. Consequently, NR-IQA models have consistently been a popular research topic over the past decades. In this study, we introduce NR-IQA models published from 2012 to 2020 to provide a comprehensive survey on feature engineering and end-to-end learning techniques in NR-IQA. In accordance with whether subjective quality scores are involved in training procedures, NR-IQA models are classified into two categories: opinion-aware/supervised and opinion-unaware/unsupervised NR-IQA models. To present a clear and integrated description, each category is further divided into two subclasses: traditional machine learning-based models (MLMs) and deep learning-based models (DLMs). For the former subclass, we mostly investigate their individual feature extraction schemes and the principle behind these schemes. In particular, a widely adopted feature extraction approach in MLMs, namely, natural scene statistics (NSS), is introduced in this study. The principle of NSS is as follows: some visual features of quality perfect images follow certain associated distributions; meanwhile, different types of distortions will break this rule in corresponding methods. On the basis of this observation/fact, researchers have proposed many NSS-based NR-IQA methods, in which the estimated parameters of the established distributions are used as quality-aware features. Thereafter, a machine learning algorithm is selected to train the IQA models. Another well-known feature extraction approach described in this study relies on dictionary learning, which is frequently accompanied by sparse coding. The core component of this type of feature extraction approach is to learn a dictionary by searching for a group of over-complete bases. Then, these over-complete bases are used to build a reference system for image representation. A test image can be concretely represented directly or indirectly by the constructed dictionary by using sparse indexes or cluster centroids. Image representations are further used as quality-aware features to capture variations in image quality. For the latter subclass (i.e., DLMs), the design principles described in detail in this paper mostly correspond to different architectures of deep neural networks. In particular, we introduce three different schemes for designing opinion-aware DLMs and commonly used strategies in opinion-unaware DLMs. To guarantee length balance among various contents and clearly exhibit the differences between NR-IQA models designed for natural images and other types of images, we introduce them separately in subsections. In addition, we provide a brief introduction into IQA research on new media, including virtual reality, light field, and underwater sonar images, along with the applications of IQA models. Finally, an in-depth conclusion about NR-IQA models is drawn in the last section. We summarize the current achievements and limitations of MLMs and DLMs. Furthermore, we highlight the potential development trends and directions of NR-IQA models for further improvements from the perspectives of image contents and NR-IQA models.关键词:image quality assessment (IQA);human visual system (HVS);visual perception;natural scene statistics (NSS);machine learning;deep learning257|1276|19更新时间:2024-05-07 -

摘要:As a national critical infrastructure, economic artery, and popular transportation, a railway plays an irreplaceable role in supporting the economic and social development of a nation. The rail is the key component of a railway, and correspondingly, rail defect detection is a core activity in railway engineering. Traditional manual inspection is time-consuming and laborious, and its results are easily influenced by various subjective factors. Therefore, automatic defect inspection for maintaining railway safety is highly significant. Considering the advantages of visual inspection in terms of speed, cost, and visualization, this study focuses on machine vision-based techniques. The track structure is first introduced by using the widely used ballastless track as an example. Sample presentation, causal analysis, and impact assessment of typical surface defects are provided. Then, the basic principles and application scenarios of common automatic rail defect detection technologies are briefly reviewed. In particular, ultrasonic techniques can be used to detect rail internal flaws, but it can hardly inspect fatigue damage on rail surface because of factors, such as ultrasonic reflection. Furthermore, detection speed is typically unsatisfactory. Eddy current inspection can obtain information about rail surface defects with the use of a detection coil by measuring the variance of eddy currents generated by an excitation coil. In contrast with ultrasonic technology, eddy current testing is fast and exhibits a distinct advantage in terms of detecting defects, such as shelling and scratch. However, it fails at finding defects that are located at the rail waist and base. Consequently, eddy current detection is frequently used in conjunction with ultrasonic equipment. Notably, eddy current inspection has high requirements for the installation position of the detection coil and the actual operation. Debugging the equipment is a complicated task, and the stability of the detection result is insufficient. Thereafter, current major challenges in the visual inspection of rail defects, namely, inhomogeneity of image qualities, limitation of available features, and difficulty in model updating, are summarized. Then, research actuality in the visual inspection of rail defects is systematically reviewed by categorizing the techniques into foreground, background, blind source separation, and deep learning-based models. One or two representative studies are elaborated in each category, followed by the analysis of technical features and practical limitations. In particular, foreground models typically suppress disturbing noise through operations, such as local image filtering, which can enhance contrast between the defect and the background, and thus, help recognize rail surface defects. This type of models generally exhibits low computational complexity, and thus, can meet the requirements of real-time inspection. However, they easily generate false positives and can hardly segment the defect target. Instead of directly placing emphasis on the defect, background methods model the image background by utilizing the spatial consistency and continuity of the rail image. Similar to foreground models, such methods also exhibit good real-time performance, but effectively decreasing false detection still requires further research. Blind source separation models detect rail defects on the basis of the low rank of the image background and the sparseness of the defect. Compared with the aforementioned two types of models, these approaches do not simply rely on the low-level visual characteristics of the defect target. However, these models tend to require high computational complexity. Deep learning-based models generally exhibit promising performance in the visual inspection of rail defects. However, training a deep learning model frequently requires a large amount of samples, and collecting and labeling numerous defect images can be costly. Moreover, these approaches typically depend on a dataset with specific supervision information, and thus, they may not perform well in other similar scenarios. Finally, future research trends in the visual inspection of rail defects are prospected by targeting the development requirements of smart railways. That is, technologies, such as few-shot or zero-shot learning, multitask learning, and multisource heterogeneous data fusion, should be explored to solve the problems of weak robustness and high false alarm rate existing in current visual inspection systems.关键词:rail defects;visual inspection;foreground;background;blind source separation;deep learning443|787|2更新时间:2024-05-07

摘要:As a national critical infrastructure, economic artery, and popular transportation, a railway plays an irreplaceable role in supporting the economic and social development of a nation. The rail is the key component of a railway, and correspondingly, rail defect detection is a core activity in railway engineering. Traditional manual inspection is time-consuming and laborious, and its results are easily influenced by various subjective factors. Therefore, automatic defect inspection for maintaining railway safety is highly significant. Considering the advantages of visual inspection in terms of speed, cost, and visualization, this study focuses on machine vision-based techniques. The track structure is first introduced by using the widely used ballastless track as an example. Sample presentation, causal analysis, and impact assessment of typical surface defects are provided. Then, the basic principles and application scenarios of common automatic rail defect detection technologies are briefly reviewed. In particular, ultrasonic techniques can be used to detect rail internal flaws, but it can hardly inspect fatigue damage on rail surface because of factors, such as ultrasonic reflection. Furthermore, detection speed is typically unsatisfactory. Eddy current inspection can obtain information about rail surface defects with the use of a detection coil by measuring the variance of eddy currents generated by an excitation coil. In contrast with ultrasonic technology, eddy current testing is fast and exhibits a distinct advantage in terms of detecting defects, such as shelling and scratch. However, it fails at finding defects that are located at the rail waist and base. Consequently, eddy current detection is frequently used in conjunction with ultrasonic equipment. Notably, eddy current inspection has high requirements for the installation position of the detection coil and the actual operation. Debugging the equipment is a complicated task, and the stability of the detection result is insufficient. Thereafter, current major challenges in the visual inspection of rail defects, namely, inhomogeneity of image qualities, limitation of available features, and difficulty in model updating, are summarized. Then, research actuality in the visual inspection of rail defects is systematically reviewed by categorizing the techniques into foreground, background, blind source separation, and deep learning-based models. One or two representative studies are elaborated in each category, followed by the analysis of technical features and practical limitations. In particular, foreground models typically suppress disturbing noise through operations, such as local image filtering, which can enhance contrast between the defect and the background, and thus, help recognize rail surface defects. This type of models generally exhibits low computational complexity, and thus, can meet the requirements of real-time inspection. However, they easily generate false positives and can hardly segment the defect target. Instead of directly placing emphasis on the defect, background methods model the image background by utilizing the spatial consistency and continuity of the rail image. Similar to foreground models, such methods also exhibit good real-time performance, but effectively decreasing false detection still requires further research. Blind source separation models detect rail defects on the basis of the low rank of the image background and the sparseness of the defect. Compared with the aforementioned two types of models, these approaches do not simply rely on the low-level visual characteristics of the defect target. However, these models tend to require high computational complexity. Deep learning-based models generally exhibit promising performance in the visual inspection of rail defects. However, training a deep learning model frequently requires a large amount of samples, and collecting and labeling numerous defect images can be costly. Moreover, these approaches typically depend on a dataset with specific supervision information, and thus, they may not perform well in other similar scenarios. Finally, future research trends in the visual inspection of rail defects are prospected by targeting the development requirements of smart railways. That is, technologies, such as few-shot or zero-shot learning, multitask learning, and multisource heterogeneous data fusion, should be explored to solve the problems of weak robustness and high false alarm rate existing in current visual inspection systems.关键词:rail defects;visual inspection;foreground;background;blind source separation;deep learning443|787|2更新时间:2024-05-07 - 摘要:Traditional convolutional neural networks (CNNs) use convolutional layers and activation functions to achieve nonlinear transformation from input images to output labels. The end-to-end training method is convenient, but it seriously hinders the introduction of prior knowledge regarding remote sensing images, leading to high dependency on the quality and quantity of training samples. The trained parameters of CNNs are used to extract features from input images. However, these features cannot be interpreted. That is, the learning process and the learned features are uninterpretable, further increasing dependency on training samples. Restricted by an end-to-end training method, traditional CNNs can only learn general features from the training set, while the learned general features are difficult to transfer to another training set. At present, CNNs can be used on multiple tasks if the model is trained using a target training set. However, improving training accuracy on a finite training set is an extremely difficult task. Traditional CNNs cannot correlate the features contained in the input data and the requirements of certain applications. In addition, loss functions that can be used in certain applications are limited. Among which, some loss functions can only describe the difference between the predicted results and the corresponding labels. In such case, the network will sacrifice the disadvantaged classes to ensure global optimum, resulting in the loss of detailed information.CNNs construct a complex nonlinear function to transfer input images to output labels. The features learned by CNNs cannot be understood and are also difficult to be merged with other features in an explainable manner. By contrast, artificial features can reflect some aspects of information of an image, and the information contained in artificial features is meaningful, i.e., it can be used in most images. Artificial features can be considered prior knowledge that describes the empirical understanding of images. They cannot fully express the information contained in an image. Consequently, combining the advantages of CNNs and prior knowledge is efficient for learning essential features from images. Riemannian manifold feature space (RMFS) exhibits a powerful feature expression capability, through which the spectral and spatial features of an image can be unified. To benefit from CNNs and RMFS, this study analyzes the contribution of RMFS to the interpretability of CNNs and the corresponding evolution of image features from the perspective of CNN modeling and remote sensing image feature representation. Then, an RMFS-CNN classification framework is proposed to bridge the gap between CNNs and prior knowledge of remote sensing images. First, this study proposes using CNNs instead of traditional mathematical transformations to map the original remote sensing image onto points in RMFS. Mapping via CNNs can overcome the effects of neighboring sizes and modeling methods, improving the feature expression capability of RMFS. Second, the features learned via RMFS-CNN can be customized in RMFS to highlight specific information that can benefit certain applications. Furthermore, the customized features can also be used to design a rule-driven data perceptron on the basis of their interpretability and evolutions. Finally, new RMFS-CNN models based on the rule-driven data perceptron can be proposed. Considering the feature expression capability of RMFS, the proposed RMFS-CNN models will outperform traditional models in terms of learning capability and the stability of learned features. New loss functions, which can control the training process of RMFS-CNN models, can be developed by combining the customized features in RMFS. In general, the proposed RMFS-CNN framework can bridge the gap between remote sensing prior knowledge and CNN models. Its advantages are as follows. 1) Points in RMFS are interpretable due to the excellent feature expression capability of RMFS and the one-to-one correspondence between points in RMFS and pixels in the image domain. Therefore, RMFS can connect remote sensing prior knowledge and the learning capability of CNNs. The use of CNNs to learn specific information from remote sensing prior knowledge is efficient on the one hand, and it can ensure the stability of learned features on the other hand. Consequently, the dependency of CNNs on the quality and quantity of training samples can be reduced. 2) Points in RMFS contain the spectral features of corresponding pixels and spatial connections in the neighborhood system. Pixels representing the same object in the image domain are subject to a linear distribution when mapped onto RMFS. On the basis of these characteristics, RMFS can provide a platform for the interpretable features of remote sensing images. Under the premise of knowing the physical meaning and corresponding distribution of remote sensing images in RMFS, data-driven convolution can be converted into rule-driven data perceptron to improve the learning capability of RMFS-CNN models. The learning process and corresponding learned features can be interpreted using the rule-driven data perceptron. 3) RMFS exhibits another interesting distribution characteristic. Data points that represent the main body of an object construct a linear distribution, whereas data points that represent the edge of the object are randomly distributed in areas far from the linear distribution. This distribution characteristic enables RMFS to express different features of an object separately. Accordingly, features conducive to certain applications can be customized in RMFS and then abstracted by following the rule-driven data perceptron. With their feature customization capability, RMFS-CNN models can be refined in accordance to their input data and applications. 4) The RMFS-CNN framework can express the interpretable features of remote sensing images. These features can then be customized to adapt to the input data and the corresponding applications. The customized features contain useful information for a certain application, which can be used to define a constraint on the loss function to control the training process of RMFS-CNN models. Given that the constraint can force the network to learn features beneficial for the target application, two advantages are implemented: learning favorable features for a certain application can improve the training accuracy of a network on the one hand, and the interpretability of the learned features can be maintained on the other hand. Consequently, the trained network is easier to transfer compared with that of traditional CNNs.关键词:remote sensing image classification;deep learning;convolutional neural network(CNN);Riemannian manifold feature space (RMFS);feature representation;feature customization;model training219|150|3更新时间:2024-05-07

Scholar View

- 摘要:The amount of medical imaging data is increasing rapidly every year. Although large-scale medical imaging data pose considerable challenges to the work of clinicians, they also offer opportunities for improving disease diagnosis and treatment models. Algorithms based on deep learning exhibit advantages over humans in processing big data, analyzing complex and nondeterministic data, and delving into potential information that can be obtained from data. In recent years, an increasing number of scholars have use deep learning to process and analyze medical image data, promoting the rapid development of precision medicine and personalized medicine. The application of deep learning to medical image processing and analysis, which are characterized by multiple diseases, modals, functions, and omics, is relatively extensive. To facilitate the further exploration and effective application of deep learning methods by researchers in the field of medical image processing, this study systematically reviewed relevant research progress, expecting that such review will be beneficial for researchers in this field. First, general thoughts and the current situation of the application of deep learning to medical imaging were clarified from the perspective of deep learning applications to imaging genomics. Second, state-of-the-art ideas and methods and recent improvements in original deep learning methods were comprehensively described. Lastly, existing problems in this field were highlighted and development trends were explored. In accordance with application status, the application of deep learning to medical imaging was divided into three modules: intelligent diagnosis, response evaluation, and prediction prognosis. The modules were subdivided into different diseases for summary, and the advantages and disadvantages of each deep learning method and existing problems and challenges were highlighted. In terms of intelligent diagnosis, the disadvantages of manual doctor diagnosis, such as heavy workload, subjective cognitive susceptibility, low efficiency, and high misdiagnosis rate, are becoming increasingly evident due to the increasing complexity of medical imaging information. The use of deep learning to interpret medical images and then comparing the results with other case records will help doctors locate lesions and assist in diagnosis. Moreover, the burden of doctors and medical misjudgments can be effectively reduced, improving the accuracy of diagnosis and treatment. Further research on the applications of deep learning and computer vision technologies to radiography is a pressing task in the 21st century, particularly for diseases with high incidence, such as brain and fundus disorders. In the follow-up study, we should focus on optimizing the generation of labels, specifying precise pathological regions in medical images, and establishing a strong supervision model instead of a weak one. In addition, deploying a cropping algorithm on a picture archiving and communication system platform will pave the way to algorithm improvement and entry to the clinical environment. In terms of response evaluation, the pathological evaluation of surgical specimens is the only reliable indicator of long-term tumor prognosis. However, these pathological data can only be obtained after completing all preoperative and surgical treatments, and they cannot be used as a guide for adjusting treatment. The development of noninvasive biomarkers with early prediction potential is important. At present, most relevant studies have conducted analysis by using traditional machine learning algorithms or statistical methods. Biological and clinical data extracted using medical imaging artificial intelligence programs designed by precision medicine researchers can determine the level of lymphocyte infiltration into tumors, predict imaging omics indicators of the therapeutic effect of immunotherapy to patients, and guide chemoradiotherapy treatment. The realization and development of this technique are of considerable clinical significance and deserve additional effort from researchers. With regard to prediction prognosis, imaging markers can predict the mutation status of genes, the molecular categories that regulate the activity of treatment-related proteins, and disease status and prognosis by using deep learning. Intelligent processing and analysis of medical images using deep learning is noninvasive, repeatable, and inexpensive. In the succeeding research, the data fusion of different omics should be completed to realize a link model of the reasoning mechanism based on content and semantics. Moreover, a fast retrieval method for structured data should be established by using the correlation relationship among data to develop an intelligent prediction model with high accuracy and strong robustness. Valuable research results and meaningful progress of the intelligent processing and analysis of medical images based on deep learning have been obtained; however, they have not been widely used in the clinical setting. In-depth research on deep learning theories and methods should be conducted further. In particular, the acquisition of a large number of high-quality labeled imaging cases, multicenter research and verification, the visualization of the decision-making process and diagnosis basis, and the establishment of a tripartite evaluation system are critical. Moreover, the development of intelligent medical imaging requires the fusion of big data and medical imaging technologies, clinical experience and multiomics big data, and artificial intelligence and medical imaging capabilities. Medical problems and clinical results should be used as guides to realize micro/macro system precision micro-closed-loop research for solving practical clinical problems, such as accurate tumor segmentation before, during, and after surgery; intelligent disease diagnosis; and noninvasive tracking of treatment effect, treatment response, and disease status.关键词:medical imaging processing;artificial intelligence;deep learning;imaging genomics;precision medicine383|175|7更新时间:2024-05-07

-

摘要:Smoke-emitting vehicles have gradually become one of the major pollution sources in cities. Algorithms that detect smoke-emitting vehicles from videos have good effects, low cost, and extensive applications. They also do not obstruct traffic. However, they still suffer from high false detection rates and poor interpretability. To fully reflect the research progress of these algorithms, this paper provides a comprehensive summary of articles published from 2016 to 2019. A video black smoke detection framework can be divided into surveillance video preprocessing, suspected smoke area extraction, smoke feature selection, classification, and analysis of algorithm performance. This order can be fine-tuned in accordance with the actual situation. This paper introduces and summarizes video smoke detection frameworks and analyzes the extraction of suspected smoky areas and the selection of smoke features from a hierarchical perspective. The method for extracting suspected smoky areas can be divided into four levels (from low to high): image-level extraction, object-level extraction, pixel-level extraction, and pure smoke reconstruction. The accuracy and stability of an extraction method gradually increases, and high-level methods can generally be applied to the results of low-level methods. Smoke features can be divided into three levels: bottom-, middle-, and high-level features. They are divided in accordance with the number of times of learning-based nonlinear projection, and demarcation points are once and three times. The expression of features becomes stronger and the false detection rate of black smoke decreases as level increases; however, the first two are not strictly linear. Then, the high-level features are generalized from the perspective of interpretability. In addition, this paper summarizes feature extraction methods from the perspective of the presence or absence of deep learning, and then classifies methods into traditional and deep learning. Lastly, the evaluation indexes of algorithms are introduced. At present, video smoke detection algorithms face three challenges: extracting features with increased expressiveness, improving generalization and interpretability, and estimating black smoke concentration. Considering these challenges, this paper provides suggestions on the future development direction of video smoke detection algorithms. First, the level of features should be rationally increased while ensuring expression and computational efficiency to improve feature fusion methods. Second, a deep neural network structure that considers generalization and interpretability should be designed in accordance with the space and motion characteristics of smoke. Further research should be conducted on how to fully alleviate the problems of insufficient number of smoky image training samples and uneven distribution. Third, an adaptive calibration algorithm should be designed to compare the extracted smoky gray level with the Lingerman standard black level.关键词:smoky vehicle detection;feature extraction;smoke classification;interpretability of deep learning;review84|233|0更新时间:2024-05-07

摘要:Smoke-emitting vehicles have gradually become one of the major pollution sources in cities. Algorithms that detect smoke-emitting vehicles from videos have good effects, low cost, and extensive applications. They also do not obstruct traffic. However, they still suffer from high false detection rates and poor interpretability. To fully reflect the research progress of these algorithms, this paper provides a comprehensive summary of articles published from 2016 to 2019. A video black smoke detection framework can be divided into surveillance video preprocessing, suspected smoke area extraction, smoke feature selection, classification, and analysis of algorithm performance. This order can be fine-tuned in accordance with the actual situation. This paper introduces and summarizes video smoke detection frameworks and analyzes the extraction of suspected smoky areas and the selection of smoke features from a hierarchical perspective. The method for extracting suspected smoky areas can be divided into four levels (from low to high): image-level extraction, object-level extraction, pixel-level extraction, and pure smoke reconstruction. The accuracy and stability of an extraction method gradually increases, and high-level methods can generally be applied to the results of low-level methods. Smoke features can be divided into three levels: bottom-, middle-, and high-level features. They are divided in accordance with the number of times of learning-based nonlinear projection, and demarcation points are once and three times. The expression of features becomes stronger and the false detection rate of black smoke decreases as level increases; however, the first two are not strictly linear. Then, the high-level features are generalized from the perspective of interpretability. In addition, this paper summarizes feature extraction methods from the perspective of the presence or absence of deep learning, and then classifies methods into traditional and deep learning. Lastly, the evaluation indexes of algorithms are introduced. At present, video smoke detection algorithms face three challenges: extracting features with increased expressiveness, improving generalization and interpretability, and estimating black smoke concentration. Considering these challenges, this paper provides suggestions on the future development direction of video smoke detection algorithms. First, the level of features should be rationally increased while ensuring expression and computational efficiency to improve feature fusion methods. Second, a deep neural network structure that considers generalization and interpretability should be designed in accordance with the space and motion characteristics of smoke. Further research should be conducted on how to fully alleviate the problems of insufficient number of smoky image training samples and uneven distribution. Third, an adaptive calibration algorithm should be designed to compare the extracted smoky gray level with the Lingerman standard black level.关键词:smoky vehicle detection;feature extraction;smoke classification;interpretability of deep learning;review84|233|0更新时间:2024-05-07 -

摘要:Rigid object pose estimation, which is one of the most fundamental and challenging problems in computer vision, has elicited considerable attention in recent years. Researchers are searching for methods to obtain multiple degrees of freedom (DOFs) for rigid objects in a 3D scene, such as position translation and azimuth rotation, and to detect object instances from a large number of predefined categories in natural images. Simultaneously, the development of technologies in computer vision have achieved considerable progress in the rigid object pose estimation task, which is an important task in an increasing number of applications, e.g., robotic manipulations, orbit services in space, autonomous driving, and augmented reality. This work extensively reviews most papers related to the development history of rigid object pose estimation, spanning over a quarter century (from the 1990s to 2019). However, a review of the use of a single image in rigid object pose estimation does not exist at present. Most relevant studies focus only on the optimization and improvement of pose estimation in a single-class method and then briefly summarize related work in this field. To provide local and overseas researchers with a more comprehensive understanding of the rigid body target pose process, We reviewed the classification and existing problems based on computer vision systematically. In this study, we summarize each multi-DOF pose estimation method by using a single rigid body target image from major research institutions in the world. We classify various pose estimation methods by comparing their key intermediate representation. Deep learning techniques have emerged as a powerful strategy for learning feature representations directly from data and have led to considerable breakthroughs in the field of generic object pose estimation. This paper provides an extensive review of techniques for 20 years of object pose estimation history at two levels: traditional pose estimation period (e.g., feature-based, template matching-based, and 3D coordinate-based methods) and deep learning-based pose estimation period (e.g., improved traditional methods and direct and indirect estimation methods). Finally, we discuss them in accordance with each relevant technical process, focusing on crucial aspects, such as the general process of pose estimation, methodology evolution and classification, commonly used datasets and evaluation criteria, and overseas and domestic research status and prospects. For each type of pose estimation method, we first find the representation space of the image feature in the articles and use it to determine the specific classification of the method. Then, we conclude the estimation process to determine the image feature extraction method, such as the handcrafted design method and convolutional neural network extraction. In the third step, we determine how to match the feature representation space in the articles, summarize the matching process, and finally, identify the pose optimization method used in the article. To date, all pose estimation methods can be finely classified. At present, the multi-DOF rigid object pose estimation method is mostly effective in a single specific application scenario. No universal method is available for composite scenes. When existing methods meet multiple lighting conditions, highly cluttered scenes, and objects with rotational symmetry, the estimation accuracy and efficiency of the similarity target among classes are significantly reduced. Although a certain type of method and its improved version can achieve considerable accuracy improvement, the results will decline significantly when it is applied to other scenarios or new datasets. When applied to highly occluded complex scenes, the accuracy of this method is frequently halved. Moreover, various types of pose estimation methods rely excessively on specialized datasets, particularly various methods based on deep learning. After training, a neural network exhibits strong learning and reasoning capabilities for similar datasets. When introducing new datasets, the network parameters will require a new training set for learning and fine-tuning. Consequently, the method will rely on a neural network framework to achieve pose estimation of a rigid body. This situation requires a large training dataset for multiple scenarios to learn, making the method more practical; however, accuracy is generally not optimal. By contrast, the accuracy of most advanced single-class estimation can be achieved by researchers' manually designed methods under certain single-scenario conditions, but migration application capability is insufficient. When encountering such problems, researchers typically choose two solutions. The first solution is to apply a deep learning technology, using its powerful feature abstraction and data representation capabilities to improve the overall usability of the estimation method, optimize accuracy, and enhance the effect. The other solution is to improve the handcrafted pose estimation method. A researcher can design an intermediate medium with increased representation capability to improve the applicability of a method while ensuring accuracy. History helps readers build complete knowledge hierarchy and find future directions in this rapidly developing field. By combining existing problems with the boosting effects of current deep learning technologies, we introduce six aspects to be considered, namely, scene-level multi-objective inference, self-supervised learning methods, front-end detection networks, lightweight and efficient network designs, multi-information fusion attitude estimation frameworks, and image data representation space. We prospect all the above aspects from the the perspective of development trends in multi-DOF rigid object pose estimation. The multi-DOF pose estimation method for the single image of a rigid object based on computer vision technology has high research value in many fields. However, further research is necessary for some limitations of current technical methods and application scenarios.关键词:computer vision;single image;rigid object;pose estimation;deep learning127|787|4更新时间:2024-05-07

摘要:Rigid object pose estimation, which is one of the most fundamental and challenging problems in computer vision, has elicited considerable attention in recent years. Researchers are searching for methods to obtain multiple degrees of freedom (DOFs) for rigid objects in a 3D scene, such as position translation and azimuth rotation, and to detect object instances from a large number of predefined categories in natural images. Simultaneously, the development of technologies in computer vision have achieved considerable progress in the rigid object pose estimation task, which is an important task in an increasing number of applications, e.g., robotic manipulations, orbit services in space, autonomous driving, and augmented reality. This work extensively reviews most papers related to the development history of rigid object pose estimation, spanning over a quarter century (from the 1990s to 2019). However, a review of the use of a single image in rigid object pose estimation does not exist at present. Most relevant studies focus only on the optimization and improvement of pose estimation in a single-class method and then briefly summarize related work in this field. To provide local and overseas researchers with a more comprehensive understanding of the rigid body target pose process, We reviewed the classification and existing problems based on computer vision systematically. In this study, we summarize each multi-DOF pose estimation method by using a single rigid body target image from major research institutions in the world. We classify various pose estimation methods by comparing their key intermediate representation. Deep learning techniques have emerged as a powerful strategy for learning feature representations directly from data and have led to considerable breakthroughs in the field of generic object pose estimation. This paper provides an extensive review of techniques for 20 years of object pose estimation history at two levels: traditional pose estimation period (e.g., feature-based, template matching-based, and 3D coordinate-based methods) and deep learning-based pose estimation period (e.g., improved traditional methods and direct and indirect estimation methods). Finally, we discuss them in accordance with each relevant technical process, focusing on crucial aspects, such as the general process of pose estimation, methodology evolution and classification, commonly used datasets and evaluation criteria, and overseas and domestic research status and prospects. For each type of pose estimation method, we first find the representation space of the image feature in the articles and use it to determine the specific classification of the method. Then, we conclude the estimation process to determine the image feature extraction method, such as the handcrafted design method and convolutional neural network extraction. In the third step, we determine how to match the feature representation space in the articles, summarize the matching process, and finally, identify the pose optimization method used in the article. To date, all pose estimation methods can be finely classified. At present, the multi-DOF rigid object pose estimation method is mostly effective in a single specific application scenario. No universal method is available for composite scenes. When existing methods meet multiple lighting conditions, highly cluttered scenes, and objects with rotational symmetry, the estimation accuracy and efficiency of the similarity target among classes are significantly reduced. Although a certain type of method and its improved version can achieve considerable accuracy improvement, the results will decline significantly when it is applied to other scenarios or new datasets. When applied to highly occluded complex scenes, the accuracy of this method is frequently halved. Moreover, various types of pose estimation methods rely excessively on specialized datasets, particularly various methods based on deep learning. After training, a neural network exhibits strong learning and reasoning capabilities for similar datasets. When introducing new datasets, the network parameters will require a new training set for learning and fine-tuning. Consequently, the method will rely on a neural network framework to achieve pose estimation of a rigid body. This situation requires a large training dataset for multiple scenarios to learn, making the method more practical; however, accuracy is generally not optimal. By contrast, the accuracy of most advanced single-class estimation can be achieved by researchers' manually designed methods under certain single-scenario conditions, but migration application capability is insufficient. When encountering such problems, researchers typically choose two solutions. The first solution is to apply a deep learning technology, using its powerful feature abstraction and data representation capabilities to improve the overall usability of the estimation method, optimize accuracy, and enhance the effect. The other solution is to improve the handcrafted pose estimation method. A researcher can design an intermediate medium with increased representation capability to improve the applicability of a method while ensuring accuracy. History helps readers build complete knowledge hierarchy and find future directions in this rapidly developing field. By combining existing problems with the boosting effects of current deep learning technologies, we introduce six aspects to be considered, namely, scene-level multi-objective inference, self-supervised learning methods, front-end detection networks, lightweight and efficient network designs, multi-information fusion attitude estimation frameworks, and image data representation space. We prospect all the above aspects from the the perspective of development trends in multi-DOF rigid object pose estimation. The multi-DOF pose estimation method for the single image of a rigid object based on computer vision technology has high research value in many fields. However, further research is necessary for some limitations of current technical methods and application scenarios.关键词:computer vision;single image;rigid object;pose estimation;deep learning127|787|4更新时间:2024-05-07 -

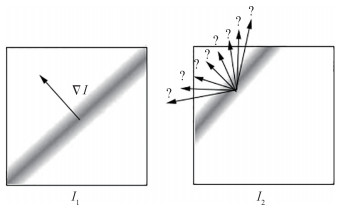

摘要:The motion analysis of fluid image sequences has been an important research topic in the fields of fluid mechanics, medicine, and computer vision. The dense and accurate velocity vector field extracted from image pairs can provide valuable information for these fields. For example, in the field of fluid mechanics, the velocity vector field can be used to calculate the divergence and curl fields of fluid; in the field of meteorology, the analysis of the velocity vector field can be used to provide weather forecast; in the field of medicine, the velocity vector field is applied to match medical images. In recent years, fluid motion estimation technology based on an optical flow method has become a promising direction in this subject due to its unique advantages. Compared with particle image velocimetry based on a correlation method, an optical flow method can obtain a denser velocity field and can estimate the motion of a scalar image and not just a particle image. In addition, an optical flow method can easily introduce various physical constraints in accordance with the motion characteristics of the fluid and obtain more accurate motion estimation results. In accordance with the basic principles of an optical flow method, this paper reviews a fluid motion estimation algorithm based on an optical flow method. Referring to a large number of domestic and foreign studies, existing algorithms are classified in accordance with outstanding problems to be solved: combining the energy minimization function with the knowledge of fluid mechanics, improving robustness to illumination changes, estimating large displacements, and eliminating outliers. Combining the minimization function with the knowledge of fluid mechanics introduces various physical constraints for improving the energy minimization function, providing physically meaningful data items and regularization terms, and improving the accuracy of fluid motion estimation results. Algorithms for improving robustness to illumination changes can be classified into four types: using a high-order constancy assumption to expand data items that depend on the constant brightness assumption, extracting illumination-invariant features in the image for data items, using structure-texture decomposition methods, and establishing a mathematical model for light changes. Various methods are applicable to different light change conditions. For the large displacement estimation problem, the pyramid-based multi-resolution optical flow method is first used; however, this method cannot estimate the large displacement of fine structures. To solve this problem, a hybrid motion estimation method that combines the cross-correlation method with a wavelet-based optical flow method is proposed in recent research. This hybrid method uses the cross-correlation method to calculate the large displacement of a fine structure and then uses an optical flow method to refine and redetermine the flow field, combining the advantages of the two methods. The optical flow estimation method based on wavelet transform provides a good mathematical framework for the multi-resolution estimation algorithm and avoids the linear problem that exists in the "coarse-to-fine" multi-resolution framework when estimating large displacements. Methods for eliminating outliers can be divided into three basic categories: methods that use a robust penalty function, median filtering, and forward-backward optical flow consistency check. In this paper, each kind of method is introduced from the perspective of the problem solving process, and the characteristics and limitations of existing algorithms are analyzed in various outstanding problems. Finally, the major research problems are summarized and discussed, and several possible research directions for the future are proposed. First, an optical flow method introduces various physical constraints into the objective function to conform to fluid motion characteristics. Hence, although accurate estimation results can be obtained, the resulting optical flow equation is too complex to solve, and no good numerical solution is obtained. Second, several methods based on an optical flow method exhibit different advantages under varying light change conditions; they also have corresponding shortcomings. Therefore, further research on how to combine the advantages of various methods to cope with different light changing conditions is particularly important. Third, although the hybrid method that combines the cross-correlation and optical flow methods can utilize the advantages of the two methods to obtain high-resolution motion results for the large displacement problem, this method can only be successfully applied to the motion estimation of particle images at present. Thus, exploring this method for other types of fluid motion images is worthwhile. Finally, an optical flow method requires complex variational optimization and its computational efficiency is low. Although some graphics processing unit(GPU) parallel algorithms proposed in recent years have effectively improved computational efficiency, they still cannot achieve real-time estimation. Therefore, improving the computational efficiency of fluid motion estimation algorithms and realizing real-time estimation are among the directions that are worth studying in the future.关键词:fluid motion estimation;optical flow method;fluid mechanics;illumination change;large displacement estimation;outlier detection101|163|8更新时间:2024-05-07

摘要:The motion analysis of fluid image sequences has been an important research topic in the fields of fluid mechanics, medicine, and computer vision. The dense and accurate velocity vector field extracted from image pairs can provide valuable information for these fields. For example, in the field of fluid mechanics, the velocity vector field can be used to calculate the divergence and curl fields of fluid; in the field of meteorology, the analysis of the velocity vector field can be used to provide weather forecast; in the field of medicine, the velocity vector field is applied to match medical images. In recent years, fluid motion estimation technology based on an optical flow method has become a promising direction in this subject due to its unique advantages. Compared with particle image velocimetry based on a correlation method, an optical flow method can obtain a denser velocity field and can estimate the motion of a scalar image and not just a particle image. In addition, an optical flow method can easily introduce various physical constraints in accordance with the motion characteristics of the fluid and obtain more accurate motion estimation results. In accordance with the basic principles of an optical flow method, this paper reviews a fluid motion estimation algorithm based on an optical flow method. Referring to a large number of domestic and foreign studies, existing algorithms are classified in accordance with outstanding problems to be solved: combining the energy minimization function with the knowledge of fluid mechanics, improving robustness to illumination changes, estimating large displacements, and eliminating outliers. Combining the minimization function with the knowledge of fluid mechanics introduces various physical constraints for improving the energy minimization function, providing physically meaningful data items and regularization terms, and improving the accuracy of fluid motion estimation results. Algorithms for improving robustness to illumination changes can be classified into four types: using a high-order constancy assumption to expand data items that depend on the constant brightness assumption, extracting illumination-invariant features in the image for data items, using structure-texture decomposition methods, and establishing a mathematical model for light changes. Various methods are applicable to different light change conditions. For the large displacement estimation problem, the pyramid-based multi-resolution optical flow method is first used; however, this method cannot estimate the large displacement of fine structures. To solve this problem, a hybrid motion estimation method that combines the cross-correlation method with a wavelet-based optical flow method is proposed in recent research. This hybrid method uses the cross-correlation method to calculate the large displacement of a fine structure and then uses an optical flow method to refine and redetermine the flow field, combining the advantages of the two methods. The optical flow estimation method based on wavelet transform provides a good mathematical framework for the multi-resolution estimation algorithm and avoids the linear problem that exists in the "coarse-to-fine" multi-resolution framework when estimating large displacements. Methods for eliminating outliers can be divided into three basic categories: methods that use a robust penalty function, median filtering, and forward-backward optical flow consistency check. In this paper, each kind of method is introduced from the perspective of the problem solving process, and the characteristics and limitations of existing algorithms are analyzed in various outstanding problems. Finally, the major research problems are summarized and discussed, and several possible research directions for the future are proposed. First, an optical flow method introduces various physical constraints into the objective function to conform to fluid motion characteristics. Hence, although accurate estimation results can be obtained, the resulting optical flow equation is too complex to solve, and no good numerical solution is obtained. Second, several methods based on an optical flow method exhibit different advantages under varying light change conditions; they also have corresponding shortcomings. Therefore, further research on how to combine the advantages of various methods to cope with different light changing conditions is particularly important. Third, although the hybrid method that combines the cross-correlation and optical flow methods can utilize the advantages of the two methods to obtain high-resolution motion results for the large displacement problem, this method can only be successfully applied to the motion estimation of particle images at present. Thus, exploring this method for other types of fluid motion images is worthwhile. Finally, an optical flow method requires complex variational optimization and its computational efficiency is low. Although some graphics processing unit(GPU) parallel algorithms proposed in recent years have effectively improved computational efficiency, they still cannot achieve real-time estimation. Therefore, improving the computational efficiency of fluid motion estimation algorithms and realizing real-time estimation are among the directions that are worth studying in the future.关键词:fluid motion estimation;optical flow method;fluid mechanics;illumination change;large displacement estimation;outlier detection101|163|8更新时间:2024-05-07

Review

-

摘要:ObjectiveWith the recent rapid development of technologies in cloud computing and mobile internet, screen content coding (SCC) has become a key technology in many popular applications, such as videoconferencing with document or slide sharing, remote desktop, screen sharing, mobile or external display interfacing, and cloud gaming. Typical computer screen content contains a mixture of camera-captured and screen contents. Screen content exhibits highly different characteristics and varied levels of human's visual sensitivity to distortion compared with traditional camera-captured content. Screen content videos are typically not noisy, with sharp edges and multiple repeated patterns. New coding tools for better utilizing the correlation characteristics of screen content are necessary. Accordingly, SCC has become a popular topic in multimedia applications in recent years and has elicited increasing research attention from academia and industry. Several international video coding standards include efficient SCC capability, such as high efficiency video coding (HEVC), versatile video coding (VVC), and second-generation and third-generation audio video coding standard (AVS2 and AVS3, respectively). Repeated identical patterns (i.e., matching patterns) are frequently observed on the same picture of screen content. Two major SCC tools in HEVC SCC were developed in recent years to utilize these repeated identical patterns with a variety of sizes and shapes. These tools are intra block copy (IBC) and palette coding. IBC, also called intra picture block compensation or current picture referencing, is a highly efficient technique for improving coding performance. It is effective for coding repeated identical patterns with a few fixed sizes and shapes. IBC is a direct extension of the traditional inter-prediction technique for the current picture, wherein a current prediction block is predicted from a reference block located in the already reconstrcted regions of the same picture. IBC has been adopted in the HEVC SCC extensions, VVC, and AVS3. In IBC, a displacement vector (DV) is used to signal the relative displacement from the position of the current block to that of the reference block. The coding efficiency of IBC primarily depends on the coding efficiency of DV. The existing DV coding algorithm used in HEVC SCC is the same as the motion vector coding algorithm used in the inter-prediction scheme. However, the existing DV coding algorithm only utilizes the correlations among the DVs of neighboring blocks. Moreover, intra block and inter block matching characteristics exhibit numerous differences. Thus, in accordance with the inherent intra block matching characteristics and different correlations of DV parameters in the IBC algorithm, we propose an improved DV algorithm for further increasing the coding efficiency of IBC. MethodThe DV to be coded has been proven to exhibit numerous correlations not only with neighboring blocks but also with recently coded blocks. To utilize the correlations of DVs with neighboring and recently coded blocks, we first apply an improved DV coding algorithm that adaptively uses DV predictions from either neighboring or recently coded blocks. Second, a direct DV coding scheme that uses a DV region division algorithm and a DV adjusting algorithm is proposed to eliminate redundancies further in the existing DV coding algorithm. Lastly, we evaluate coding performance and complexity on 17 test datasets from the SCC standard test dataset with three encoding configurations. In particular, 13 sequences are selected to represent the most common screen content videos, referred to as the "text and graphics with motion" (TGM) category, and 4 sequences are selected to represent a mixture of natural video and text/graphics, referred to as the "mixed content" category. ResultExperimental results show that the proposed algorithm achieves average Y BD-rate reductions of up to 1.04%, 0.87%, and 0.93% for the TGM category of the SCC common test sequences with lossy all intra (AI), random access, and low-delay B configurations, respectively, compared with the latest HEVC SCC at the same encoding and decoding runtimes. The maximum Y Bjφntegaard Delta(BD)-rate reduction reaches 2.99% in the AI configuration.ConclusionThe experiment results demonstrate that our algorithm outperforms the DV coding algorithm for IBC in HEVC SCC.关键词:high efficiency video coding (HEVC);audio video coding standard (AVS);screen content coding (SCC);displacement vector (DV);prediction coding;direct coding105|183|0更新时间:2024-05-07