Latest Issue

Vol. 26, No. 12, 2021

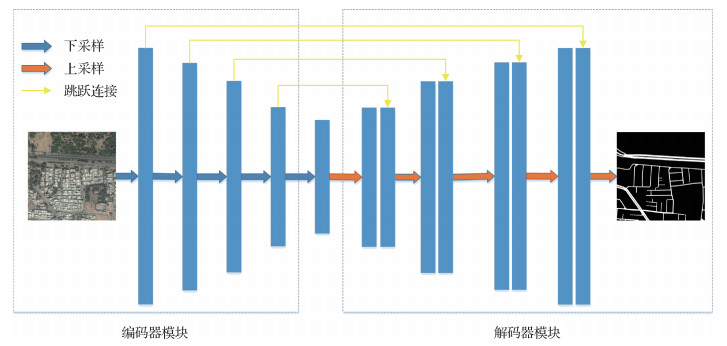

Abstract: Deep visual generation aims to create synthetic, photo-realistic visual content (such as images and videos) that can fool or please human perception according to specific requirements. Many human activities belong to the field of visual generation, e.g., advertisement making, house design, and filmmaking. However, these tasks can normally be done only by experts with professional skills gained through long-term training, with the help of professional software such as Adobe Photoshop. Producing photo-realistic content can also take a very long time, because the process is tedious and cumbersome. Automating these processes is therefore an important yet non-trivial problem. Deep visual generation has become a significant research direction in computer vision and machine learning and has been applied to tasks such as automatic content generation, beautification, rendering, and data augmentation. Current deep generative methods fall into two groups: methods based on variational auto-encoders (VAEs) and methods based on generative adversarial networks (GANs). Built on an encoder-decoder architecture, a VAE first maps input data into a latent distribution and then minimizes the distance between this latent distribution and a prior distribution, e.g., a Gaussian distribution. A well-trained VAE can be used for dimensionality reduction and image generation. However, the inevitable gap between the latent distribution and the prior distribution tends to make the generated images and videos blurry. Unlike a VAE, a GAN learns a mapping between the input and output distributions and can synthesize sharper images and videos. A GAN contains two major modules: a generator, which generates fake data, and a discriminator, which determines whether a sample is real or fake. To produce plausible fake data, the generator learns to match the distribution of real data, synthesizing samples that are both realistic and diverse. Learning the generator and discriminator is formulated as a two-player minimax game. During training, the two modules are optimized alternately with stochastic gradient methods, and at the end of training they are expected to reach a Nash equilibrium of the minimax game. With the development of GANs, many new deep visual generation applications and tasks based on GANs have emerged.

Six typical deep visual generation tasks are presented: 1) Image generation from noise: the earliest deep visual generation task, in which a GAN generates an image (e.g., a face image) from random noise. 2) Image generation from images: transforming a given image into a new one (e.g., converting a black-and-white image into a color image), with applications such as style transfer and image reconstruction. 3) Image generation from text: a natural task, analogous to a person describing the content of a painting and a painter drawing the corresponding image from that description. 4) Video generation from images: turning a static image into a dynamic video, which can be used in time-lapse photography, animating still pictures, etc. 5) Video generation from videos: mainly used for video style transfer, video super-resolution, and so on. 6) Video generation from text: more difficult than image generation from text, because the generated video must be both semantically aligned with the text and consistent across frames.

The challenges in deep visual generation are then analyzed and discussed. First, beyond 2D data, future work should try to generate high-quality 3D data, which contains more information and detail. Second, more attention could be paid to video generation rather than only to image generation. Third, research could be conducted on controllable deep visual generation methods, which are more practical in real-world applications. Finally, style transfer methods could be extended from two domains to multiple domains. In summary, this review systematically surveys recent work on deep adversarial visual generation. It covers the background of deep visual generation, typical generative models, and an overview of mainstream deep visual generation tasks and related algorithms, and it outlines directions for further research on deep adversarial visual generation.
Keywords: deep learning; visual generation; generative adversarial networks (GANs); image generation; video generation; 3D-depth image generation; style transfer; controllable generation
Updated: 2024-05-07
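
To make the minimax game concrete, the sketch below evaluates the standard GAN value function V(D, G) = E_{x~p_data}[log D(x)] + E_{x~p_g}[log(1 - D(x))] on small discrete distributions. It relies on the optimal discriminator D*(x) = p_data(x) / (p_data(x) + p_g(x)) from the original GAN paper (Goodfellow et al., 2014), which the abstract does not state; the distributions are made-up examples, not data from the review. At this optimum the value equals -log 4 + 2·JSD(p_data || p_g), so it reaches its minimum, -log 4, exactly when the generator matches the data distribution, which is the equilibrium the alternating training aims for.

```python
import math
from typing import Dict

Dist = Dict[str, float]  # discrete distribution: outcome -> probability


def optimal_discriminator(p_data: Dist, p_g: Dist) -> Dist:
    """D*(x) = p_data(x) / (p_data(x) + p_g(x)) for a fixed generator distribution p_g."""
    support = set(p_data) | set(p_g)
    return {
        x: p_data.get(x, 0.0) / (p_data.get(x, 0.0) + p_g.get(x, 0.0))
        for x in support
        if p_data.get(x, 0.0) + p_g.get(x, 0.0) > 0
    }


def value(d: Dist, p_data: Dist, p_g: Dist) -> float:
    """V(D, G) = E_{p_data}[log D(x)] + E_{p_g}[log(1 - D(x))]."""
    real = sum(p * math.log(d[x]) for x, p in p_data.items() if p > 0)
    fake = sum(p * math.log(1.0 - d[x]) for x, p in p_g.items() if p > 0)
    return real + fake


def jsd(p: Dist, q: Dist) -> float:
    """Jensen-Shannon divergence with natural logarithms."""
    support = set(p) | set(q)
    m = {x: 0.5 * (p.get(x, 0.0) + q.get(x, 0.0)) for x in support}

    def kl_to_m(a: Dist) -> float:
        return sum(a[x] * math.log(a[x] / m[x]) for x in a if a[x] > 0)

    return 0.5 * kl_to_m(p) + 0.5 * kl_to_m(q)


if __name__ == "__main__":
    p_data = {"a": 0.5, "b": 0.3, "c": 0.2}
    p_g = {"a": 0.2, "b": 0.3, "c": 0.5}   # a generator that has not converged yet
    d_star = optimal_discriminator(p_data, p_g)
    print("V(D*, G)          =", value(d_star, p_data, p_g))
    print("-log 4 + 2 * JSD  =", -math.log(4) + 2 * jsd(p_data, p_g))
```

Replacing `p_g` with `p_data` makes D* equal to 1/2 everywhere, i.e. the discriminator can no longer tell real from fake samples.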

Abstract: Human-computer interaction technology has been advancing toward intelligent human-computer interaction, and emotional interaction improves the user's emotional experience in human-computer interaction systems. According to Gartner's analysis, emotional interaction is expected to be widely used in the next decade. An agent, which can be real or virtual, detects the user's emotion and adjusts it. Such agents can greatly enhance the user experience in psychological rehabilitation, e-education, digital entertainment, smart homes, virtual tourism, e-commerce, etc. Research on agent affective computing involves computer graphics, virtual reality, human-computer interaction, machine learning, psychology, and social science. From the Scopus database, 2,080 journal papers were selected by combining each of the keywords "virtual human", "agent", and "multimodal" with "emotional interaction". These papers are analyzed and summarized from two perspectives: the agent's perception of users' emotions and the agent's influence on users' emotions. From the perspective of perception, the importance of multiple channels in emotion perception and the typical machine learning algorithms for emotion recognition are summarized. From the perspective of influence, the external and internal factors affecting users' emotions are analyzed. Emotional architectures and emotion generation and expression algorithms are described, and customized evaluation methods used to assess and improve the accuracy of affective computing algorithms are reviewed. The importance of emotional agents in human-computer interaction is analyzed.

Based on current studies, agent affective computing is summarized in four key steps: 1) The agent expresses its emotion to the user. 2) The user gives feedback to the agent (the user may or may not express satisfaction or dissatisfaction through channels such as facial expressions). 3) The agent perceives the user's emotional state and intention in real time and adjusts its emotional performance in response to the user's feedback. 4) If a stopping criterion is met (e.g., the emotion regulation task is completed or the plot ends), the agent stops interacting with the user; otherwise, it returns to step 1).

Regarding user emotion recognition, current studies show that users express emotions through facial expressions, voice, posture, physiological signals, and text, and that multi-channel methods are more reliable than single-channel methods. Machine learning can be used to extract emotional features; typical machine learning algorithms, nowadays mostly based on convolutional neural networks (CNNs), are sorted out together with their applicable scenarios, and solutions to insufficient data and overfitting are presented. Regarding the agent's influence, spatial distance, the number of agents, the agent's appearance, and brightness and shadow are identified as external factors, while the agent's autonomous emotion expression is identified as the internal factor. An agent should have an emotional architecture and use facial expressions, eye gaze, posture, head movements, gestures, and other channels to express its emotion. Affective computing models are evaluated in terms of both the accuracy of the emotion classification model and the users' feelings, and the statistical sampling analysis is listed in a table. Existing emotional agents are constrained by low intelligence, the lack of common-sense databases, and a lack of interactivity. Finally, directions for further research on agent affective computing in human-computer interaction are outlined: affective computing can serve human-computer interaction, and an agent can act as a channel for emotional interaction. Knowledge bases and appropriate machine learning algorithms can be adopted to build agents capable of emotion perception and emotion regulation, and high-quality physiological detection equipment and continuously enriched methods for assessing emotional information will further advance affective computing.
Keywords: human-computer interaction; agent; affective computing; emotion; perception; performance
Updated: 2024-05-07
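
The four-step interaction cycle and the multi-channel finding above can be sketched together as a simple control loop. Everything in this sketch is hypothetical: the callback interface, channel names, reliability weights, target emotion and the toy adjustment policy are illustrative assumptions rather than designs from the surveyed papers. Perception in step 3) uses weighted late fusion of per-channel emotion probabilities, one common way to combine channels, so that a single unreliable channel cannot dominate the decision.

```python
from typing import Callable, Dict, List

Probs = Dict[str, float]  # emotion label -> probability reported by one channel


def fuse_channels(channel_probs: Dict[str, Probs], reliability: Dict[str, float]) -> Probs:
    """Weighted late fusion: average per-channel distributions, weighted by channel reliability."""
    fused: Probs = {}
    total_weight = 0.0
    for channel, probs in channel_probs.items():
        w = reliability.get(channel, 0.0)
        total_weight += w
        for label, p in probs.items():
            fused[label] = fused.get(label, 0.0) + w * p
    if total_weight == 0:
        raise ValueError("no reliable channel available")
    return {label: p / total_weight for label, p in fused.items()}


def interaction_loop(
    express: Callable[[str], None],               # step 1: agent shows an emotion
    observe_user: Callable[[], Dict[str, Probs]],  # step 2: user feedback, per channel
    reliability: Dict[str, float],
    target: str = "calm",                          # hypothetical regulation goal
    max_rounds: int = 10,
) -> List[str]:
    """Run steps 1)-4) until the user's dominant emotion reaches the target or rounds run out."""
    agent_emotion = "neutral"
    history: List[str] = []
    for _ in range(max_rounds):
        express(agent_emotion)                              # 1) express
        fused = fuse_channels(observe_user(), reliability)  # 2) feedback, 3) perceive
        user_emotion = max(fused, key=fused.get)
        history.append(user_emotion)
        if user_emotion == target:                          # 4) stopping criterion met
            break
        # 3) adjust: a toy policy that mirrors happiness and soothes everything else
        agent_emotion = "happy" if user_emotion == "happy" else "soothing"
    return history


if __name__ == "__main__":
    feedback = iter([
        {"face": {"sad": 0.7, "calm": 0.3}, "voice": {"sad": 0.6, "calm": 0.4}},
        {"face": {"sad": 0.3, "calm": 0.7}, "voice": {"sad": 0.55, "calm": 0.45}},
    ])
    print(interaction_loop(lambda e: print("agent shows", e), lambda: next(feedback),
                           reliability={"face": 0.6, "voice": 0.4}))
```

In the second round the voice channel alone would still report "sad", but the fused estimate is "calm", which ends the loop.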

Abstract: The four core technologies of autonomous driving are environment perception, precise positioning, path planning, and drive-by-wire (line control) execution. Good planning requires environment perception to build a deep understanding of the surroundings, especially of the dynamic environment. Visual environment perception plays a key role in the development of autonomous vehicles and has been widely used in intelligent rearview mirrors, reversing radar, 360° panoramic view, driving recorders, collision warning, traffic light recognition, lane departure warning, lane-change assistance, automatic parking, etc. Environmental information has traditionally been obtained with narrow-angle pinhole cameras, which have a limited field of view and blind areas. Multiple cameras are therefore often mounted around the car body, which increases both the cost and the information processing time. Fisheye lenses offer an effective way to obtain environmental information: their large field of view (FOV) can cover an entire 180° hemisphere. In theory, only two fisheye cameras are needed to cover 360°, which avoids visual blind spots, reduces the occlusion of visual objects, provides more information for visual perception, and greatly reduces processing time.

With deep learning, surround-view fisheye images are mainly processed in two ways. The first corrects the distortion of the fisheye image to transform it into an ordinary (perspective) image and then processes the corrected image with classical image processing algorithms. Its disadvantage is that the correction degrades image quality, especially at the image edges, causing important visual information to be lost; the closer to the image edge, the greater the loss. The second models and processes the distorted fisheye image directly. Because ordinary and fisheye images have different imaging characteristics and the geometry of fisheye imaging is complex, algorithms designed for ordinary images do not transfer well to surround-view fisheye images, and no model of surround-view fisheye images has yet achieved satisfactory results. In addition, there is no representative public dataset for the unified evaluation of vision algorithms, and large amounts of training data are lacking.

This review summarizes the research directions for fisheye images. Fisheye image correction is subdivided into calibration-based methods and methods based on projection transformation models. For object detection in fisheye images, pedestrian detection is mainly introduced. For semantic segmentation of fisheye images, urban road scene segmentation and methods for generating pseudo-fisheye image datasets are mainly introduced. Other fisheye image modeling methods are also covered; the approximate proportion of each research direction is listed, and the application background and real-time requirements of autonomous driving are analyzed. General fisheye image datasets are summarized, including their size, publication time, and annotation categories. The experimental results of object detection and semantic segmentation methods on fisheye images are compared and analyzed. Finally, open issues concerning evaluation datasets for fisheye images, the construction of fisheye image algorithm models, and model efficiency are discussed. Fisheye image processing is expected to benefit from advances in weakly supervised and unsupervised learning.
Keywords: autonomous driving; surround fisheye images; image correction; object detection; semantic segmentation; fisheye image dataset; research overview
Updated: 2024-05-07
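
Correction based on a projection model can be illustrated by mapping each pixel of the desired perspective (pinhole) image back into the fisheye image. The sketch below assumes the equidistant fisheye model r = f·θ (one common model; equisolid and stereographic models also exist) and the pinhole model r = f·tan θ, with a centred principal point and no extra distortion coefficients; calibration-based methods would instead estimate such parameters from calibration images. The function names are illustrative. The demo also shows why edge information suffers: as θ approaches 90°, the pinhole radius f·tan θ grows without bound, so the periphery of a 180° fisheye image cannot fit into one perspective image.

```python
import math
from typing import List, Optional, Tuple


def pinhole_to_fisheye(u: float, v: float, cx: float, cy: float,
                       f_pin: float, f_fish: float) -> Tuple[float, float]:
    """Map a pixel of the corrected (pinhole) image to fisheye image coordinates.

    Assumes r_fish = f_fish * theta (equidistant) and r_pin = f_pin * tan(theta),
    both centred at the principal point (cx, cy).
    """
    dx, dy = u - cx, v - cy
    r_pin = math.hypot(dx, dy)
    if r_pin == 0:
        return cx, cy
    theta = math.atan(r_pin / f_pin)   # angle between the ray and the optical axis
    scale = f_fish * theta / r_pin     # ratio r_fish / r_pin
    return cx + dx * scale, cy + dy * scale


def undistort(fisheye: List[List[float]], f_pin: float,
              f_fish: float) -> List[List[Optional[float]]]:
    """Nearest-neighbour remap of a grayscale fisheye image into a same-size pinhole image."""
    h, w = len(fisheye), len(fisheye[0])
    cx, cy = (w - 1) / 2, (h - 1) / 2
    out: List[List[Optional[float]]] = [[None] * w for _ in range(h)]
    for v in range(h):
        for u in range(w):
            xs, ys = pinhole_to_fisheye(u, v, cx, cy, f_pin, f_fish)
            xi, yi = round(xs), round(ys)
            if 0 <= xi < w and 0 <= yi < h:   # None marks pixels with no fisheye source
                out[v][u] = fisheye[yi][xi]
    return out


if __name__ == "__main__":
    f = 300.0  # illustrative focal length in pixels
    for deg in (30, 60, 80, 89):
        t = math.radians(deg)
        print(f"theta={deg:2d} deg  fisheye r={f * t:7.1f}px  pinhole r={f * math.tan(t):9.1f}px")
```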

Review

Abstract:
Objective: High-quality images with rich information and good visual effects are a central concern in digital imaging. Because of the limited dynamic range, existing imaging equipment cannot record all the details of a scene in a single exposure, which seriously affects the visual effect and the retention of key information in the source images. The dynamic range of real scenes, of existing imaging and display equipment, and of the human eye's response do not match. The dynamic range can be regarded as the ratio of brightness between the brightest and darkest points of a natural scene image. The dynamic range of the human visual system is 10⁵:1, whereas that of images captured or displayed by digital imaging and display equipment is only 10²:1, significantly lower than that of the human visual system. Multi-exposure image fusion provides a simple and effective way to resolve this mismatch. It performs a weighted fusion of multiple images of the same scene taken at different exposure levels, retaining as much of the source image information as possible in the fused image so that the fused image has a high-dynamic-range visual effect that matches human perception.

Method: Multi-exposure image fusion methods are usually categorized into spatial domain methods and transform domain methods. Spatial domain methods either first divide the source image sequence into blocks according to certain rules and then fuse the blocks, or fuse the images directly pixel by pixel. Their fused images often suffer from problems such as unnatural transitions between regions and uneven brightness distribution, which lead to low structural similarity between the fused images and the source images.
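
For reference, the simplest pixel-based spatial domain fusion computes, for every pixel, a weighted average of the exposures with weights normalized to sum to one. The sketch below assumes grayscale images with values in [0, 1] and a Gaussian "well-exposedness" weight centred at mid-grey (σ = 0.2), a choice common in exposure fusion but not the weighting proposed in this paper. Because every pixel is weighted independently, neighbouring pixels may draw on different exposures, which is one source of the unnatural transitions and uneven brightness described above.

```python
import math
from typing import List

Image = List[List[float]]  # grayscale image, values in [0, 1]


def well_exposedness(value: float, sigma: float = 0.2) -> float:
    """Gaussian weight favouring pixels close to mid-grey (0.5)."""
    return math.exp(-((value - 0.5) ** 2) / (2 * sigma ** 2))


def pixel_fusion(exposures: List[Image], eps: float = 1e-12) -> Image:
    """Per-pixel weighted average of an exposure stack, with weights normalised per pixel."""
    h, w = len(exposures[0]), len(exposures[0][0])
    fused = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # eps keeps the denominator positive even if every weight underflows
            weights = [well_exposedness(img[y][x]) + eps for img in exposures]
            total = sum(weights)
            fused[y][x] = sum(wt * img[y][x] for wt, img in zip(weights, exposures)) / total
    return fused


if __name__ == "__main__":
    under = [[0.05, 0.10], [0.40, 0.02]]  # short exposure: mostly dark
    over = [[0.60, 0.95], [0.99, 0.50]]   # long exposure: mostly bright
    for row in pixel_fusion([under, over]):
        print([round(p, 3) for p in row])
```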
Transform domain methods first decompose the source images into a transform domain and then fuse them according to certain fusion rules. Image decomposition is mainly divided into multi-scale decomposition and two-scale decomposition. Multi-scale decomposition requires up-sampling and down-sampling operations, which cause some loss of image information. Two-scale decomposition involves no up-sampling or down-sampling, which avoids this information loss and, to a certain extent, the shortcomings of spatial domain methods. Two-scale decomposition splits an image directly into base and detail layers with a filter, but the choice of filter strongly affects the quality of the fused image. A new exposure fusion algorithm based on two-scale decomposition and a color prior is proposed to obtain images whose visual effect resembles that of high-quality HDR (high dynamic range) images. The fused image preserves details in both overexposed and dark areas and has good color saturation. The main contributions are as follows: 1) The difference between image brightness and image saturation is used to determine the degree of exposure, and this difference is combined with image contrast as a quality measure. This measure distinguishes overexposed and underexposed areas quickly and efficiently, takes the texture details of both kinds of area into account, and improves the color saturation and contrast of the fused image. 2) A fusion method based on two-scale decomposition is presented, in which a fast guided filter decomposes the image; this reduces halo artifacts in the fused image to a certain extent and improves its visual effect. The workflow is as follows. First, the image is decomposed by fast guided filtering, and the resulting detail layer is enhanced, which retains more detail and reduces halo artifacts in the fused image. Next, based on the color prior, the difference between brightness and saturation is used to determine the degree of exposure, and this difference is combined with image contrast to compute the fusion weights of the multi-exposure images, preserving both the brightness and the contrast of the fused image. Finally, guided filtering is used to refine the weight maps, suppressing noise, increasing the correlation between neighbouring pixels, and improving the visual effect of the fused image.

Result: Experiments are conducted on 24 multi-exposure image sequences. In subjective evaluation, the overall contrast and color saturation of the fused images, as well as the details in both overexposed and underexposed areas, are improved. In objective evaluation, two different quality assessment algorithms are used to evaluate the fusion results; the average scores of the two indicators reach 0.982 and 0.970, respectively, and both structural similarity indexes are improved, by 1.2% and 1.1% on average.

Conclusion: Subjective and objective evaluations show that the algorithm achieves good fusion performance, with significant improvements in image contrast, color saturation, and detail retention. The algorithm has three main advantages, namely low complexity, simple implementation, and relatively fast running speed, which make it suitable for mobile devices. It can be applied to low-dynamic-range imaging equipment to obtain ideal images.
Keywords: multi-exposure fusion (MEF); high dynamic range imaging; guided filtering; fast guided filtering; color prior
Updated: 2024-05-07
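
The two-scale pipeline can be illustrated with a plain guided filter. This is a minimal sketch under several assumptions: grayscale images in [0, 1]; the standard guided filter of He et al. computed with naive box means (the fast guided filter additionally subsamples the inputs before computing the local linear coefficients, which is omitted here); each exposure used as the guide when refining its own weight map; and per-exposure weight maps supplied by the caller. The paper derives those weights from the brightness-saturation difference and image contrast, but the abstract does not give the formula, so it is not reproduced. The radius, ε and detail gain values are illustrative.

```python
from typing import Callable, List, Tuple

Image = List[List[float]]  # grayscale image, values in [0, 1]


def box_mean(img: Image, r: int) -> Image:
    """Mean over a (2r+1)x(2r+1) window, clipped at the borders (naive, O(r^2) per pixel)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ys = range(max(0, y - r), min(h, y + r + 1))
            xs = range(max(0, x - r), min(w, x + r + 1))
            out[y][x] = sum(img[j][i] for j in ys for i in xs) / (len(ys) * len(xs))
    return out


def elementwise(f: Callable[..., float], *imgs: Image) -> Image:
    """Apply f pixel by pixel to one or more images of equal size."""
    return [[f(*px) for px in zip(*rows)] for rows in zip(*imgs)]


def guided_filter(guide: Image, src: Image, r: int, eps: float) -> Image:
    """Locally fit src ~ a * guide + b in each window, then average the coefficients."""
    mean_i, mean_p = box_mean(guide, r), box_mean(src, r)
    corr_ii = box_mean(elementwise(lambda i: i * i, guide), r)
    corr_ip = box_mean(elementwise(lambda i, p: i * p, guide, src), r)
    var_i = elementwise(lambda c, m: c - m * m, corr_ii, mean_i)
    cov_ip = elementwise(lambda c, mi, mp: c - mi * mp, corr_ip, mean_i, mean_p)
    a = elementwise(lambda cv, vr: cv / (vr + eps), cov_ip, var_i)
    b = elementwise(lambda mp, ak, mi: mp - ak * mi, mean_p, a, mean_i)
    mean_a, mean_b = box_mean(a, r), box_mean(b, r)
    return elementwise(lambda ma, i, mb: ma * i + mb, mean_a, guide, mean_b)


def two_scale(img: Image, r: int, eps: float) -> Tuple[Image, Image]:
    """Base layer = self-guided smoothing; detail layer = residual, so img == base + detail."""
    base = guided_filter(img, img, r, eps)
    detail = elementwise(lambda p, q: p - q, img, base)
    return base, detail


def fuse(exposures: List[Image], weights: List[Image],
         r: int = 2, eps: float = 1e-2, detail_gain: float = 1.5) -> Image:
    """Refine weights with a guided filter, renormalise, then fuse base and boosted detail layers."""
    refined = [elementwise(lambda v: max(v, 0.0), guided_filter(img, wm, r, eps))
               for img, wm in zip(exposures, weights)]
    total = elementwise(lambda *vs: sum(vs) + 1e-12, *refined)  # avoid division by zero
    h, w = len(exposures[0]), len(exposures[0][0])
    fused = [[0.0] * w for _ in range(h)]
    for img, wm in zip(exposures, refined):
        base, detail = two_scale(img, r, eps)
        for y in range(h):
            for x in range(w):
                wt = wm[y][x] / total[y][x]
                fused[y][x] += wt * (base[y][x] + detail_gain * detail[y][x])
    return [[min(max(p, 0.0), 1.0) for p in row] for row in fused]  # clip to [0, 1]
```

A `detail_gain` above 1 plays the role of the detail-layer enhancement described above; with a gain of 1 and equal weights, fusing identical exposures returns the input image unchanged.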

Abstract: Objective Image fusion is of great significance for image recognition and understanding. Infrared and visible image fusion has been widely applied in computer vision, target detection, video surveillance, military applications and many other areas. However, existing methods often produce weakened targets, unclear background details and blurred edges, and their high algorithmic complexity leads to low fusion efficiency. Most multi-scale methods require more than two decomposition levels. Dual-scale methods, by contrast, reduce algorithmic complexity and exploit the large difference between the information at the two scales, obtaining satisfactory results with a single level of decomposition. However, insufficient extraction of salient features and neglect of noise may still lead to unsatisfactory fusion results. To achieve high-quality fusion of infrared and visible images, dual-scale decomposition is combined with saliency analysis and spatial consistency. Method Visual saliency is used to integrate the important information of the source images into the fused image, and spatial consistency is taken into account to prevent noise from affecting the fusion result. First, each source image is filtered with a mean filter to separate its low-frequency and high-frequency information. The filter output is the base image, which contains the low-frequency information. Subtracting the base image from the source image yields the detail image, which contains the high-frequency information. Next, because the human visual system is sensitive to the information in base and detail images to different degrees, the base images are fused with a simple weighted-average rule, namely the arithmetic average.
This preserves the common features of the source images and reduces redundant information in the fused base image. For the detail images, fusion weights based on visual saliency guide the weighting. The saliency information of an image is extracted from the difference between the outputs of a mean filter and a median filter. Gaussian filtering of this difference gives the saliency map of each source image, from which the initial weight maps are constructed. Furthermore, following the principle of spatial consistency, the initial weight maps are refined with guided filtering to reduce noise and keep them aligned with object boundaries. The detail images are then fused under the guidance of the final weight maps, which enhances the target, background details and edge information while suppressing noise. Finally, the fused base image and the fused detail image are combined in a dual-scale reconstruction to obtain the final fused image. Result Considering the different characteristics of traditional and deep learning methods, two groups of grayscale images from TNO and other public datasets were selected for comparison experiments. Subjective and objective comparisons with other methods were carried out in MATLAB R2018a to verify the effectiveness and superiority of the proposed method. Key regions are marked with white boxes in the results to support the subjective analysis and to illustrate the differences between the fused images in detail. The subjective analysis of the source and fused images shows that the information is extracted comprehensively and accurately and that a clear visual effect is obtained. First, the objective evaluation on the first group of experimental images verifies the effectiveness of the proposed method in improving fusion quality.
Next, the average values of average gradient, edge intensity, spatial frequency, feature mutual information and cross-entropy are reported: 3.990 7, 41.793 7, 10.536 6, 0.446 0 and 1.489 7, respectively. Finally, on the second group of experimental images, the proposed method shows clear advantages over a deep learning method. It obtains the highest entropy in both groups, and the five metrics above improve by 91.28%, 91.45%, 85.10%, 0.18% and 45.45% on average, respectively. Conclusion The extensive experiments show that some existing fusion methods are inevitably limited by the complexity of salient feature extraction and the uncertainty of noise in the fusion process, and cannot meet the requirements of high-quality image fusion. By contrast, the proposed method, which combines dual-scale decomposition with saliency-based fusion weights, achieves good results. The enhancement of the target, background details and edge information is particularly significant, and the method is robust to noise. It fuses multiple groups of images quickly and effectively, which opens the possibility of real-time fusion of infrared and visible images. In practice, it also performs better than a fusion method based on a deep learning framework. The method is general and, in further research, can be extended to other multi-source and multi-modal images.
Keywords: infrared image; visible image; saliency analysis; spatial consistency; dual-scale decomposition; image fusion
Updated: 2024-05-07
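The dual-scale pipeline in the infrared/visible abstract above can be sketched with NumPy alone. The abstract gives no filter sizes or weight formulas, so the following are all assumptions:

- The window radii (`r_base`, `r_sal`, `r_gf`), `sigma` and `eps_gf` are illustrative values.
- The initial weights are the normalized saliencies.
- Each refined map is guided by its own source image and then renormalized.

The guided filter is the standard box-filter formulation of He et al.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def box_mean(img, r):
    """Mean filter over a (2r+1) x (2r+1) window with edge-replicated borders."""
    p = np.pad(img, r, mode="edge")
    return sliding_window_view(p, (2 * r + 1, 2 * r + 1)).mean(axis=(-2, -1))

def median_filter(img, r):
    """Median filter over a (2r+1) x (2r+1) window with edge-replicated borders."""
    p = np.pad(img, r, mode="edge")
    return np.median(sliding_window_view(p, (2 * r + 1, 2 * r + 1)), axis=(-2, -1))

def gaussian_blur(img, sigma):
    """Separable Gaussian filter with a 3-sigma radius."""
    r = max(1, int(round(3 * sigma)))
    x = np.arange(-r, r + 1)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    k /= k.sum()
    p = np.pad(img, r, mode="edge")
    rows = sliding_window_view(p, 2 * r + 1, axis=1) @ k     # blur along width
    return sliding_window_view(rows, 2 * r + 1, axis=0) @ k  # blur along height

def guided_filter(guide, src, r, eps):
    """Guided filter (He et al.) built from box means."""
    mean_i, mean_p = box_mean(guide, r), box_mean(src, r)
    cov_ip = box_mean(guide * src, r) - mean_i * mean_p
    var_i = box_mean(guide * guide, r) - mean_i ** 2
    a = cov_ip / (var_i + eps)
    b = mean_p - a * mean_i
    return box_mean(a, r) * guide + box_mean(b, r)

def fuse_ir_visible(ir, vis, r_base=15, r_sal=1, sigma=2.0, r_gf=3, eps_gf=1e-3):
    """Two-scale, saliency-weighted fusion of two grayscale images in [0, 1]."""
    srcs = [ir.astype(np.float64), vis.astype(np.float64)]
    bases = [box_mean(s, r_base) for s in srcs]     # low-frequency base images
    details = [s - b for s, b in zip(srcs, bases)]  # high-frequency detail images
    fused_base = 0.5 * (bases[0] + bases[1])        # arithmetic-average rule

    # Saliency: Gaussian-smoothed |mean filter - median filter| response.
    sal = [gaussian_blur(np.abs(box_mean(s, r_sal) - median_filter(s, r_sal)), sigma)
           for s in srcs]
    total = sal[0] + sal[1]
    w_ir = np.where(total > 1e-12, sal[0] / np.maximum(total, 1e-12), 0.5)

    # Spatial consistency: refine each initial weight map, guided by its own source.
    ref_ir = np.clip(guided_filter(srcs[0], w_ir, r_gf, eps_gf), 0.0, None)
    ref_vis = np.clip(guided_filter(srcs[1], 1.0 - w_ir, r_gf, eps_gf), 0.0, None)
    w_sum = ref_ir + ref_vis
    w = np.where(w_sum > 1e-12, ref_ir / np.maximum(w_sum, 1e-12), 0.5)

    fused_detail = w * details[0] + (1.0 - w) * details[1]
    return fused_base + fused_detail                # dual-scale reconstruction
```

Renormalizing the two refined maps keeps the detail fusion a convex combination. As a result, fusing an image with itself returns the original image.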

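Several of the no-reference metrics used in the infrared/visible evaluation have simple closed forms. The sketch below gives common textbook definitions of average gradient, spatial frequency and entropy. Normalization conventions differ between papers, so these functions are not guaranteed to reproduce the exact values reported above. Edge intensity, feature mutual information and cross-entropy are not covered.

```python
import numpy as np

def average_gradient(img):
    """Mean of sqrt((dx^2 + dy^2) / 2) over the (M-1) x (N-1) interior grid."""
    f = img.astype(np.float64)
    dx = f[1:, :-1] - f[:-1, :-1]  # vertical forward difference
    dy = f[:-1, 1:] - f[:-1, :-1]  # horizontal forward difference
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))

def spatial_frequency(img):
    """SF = sqrt(RF^2 + CF^2) from row and column first differences."""
    f = img.astype(np.float64)
    rf = np.sqrt(np.mean((f[:, 1:] - f[:, :-1]) ** 2))  # row frequency
    cf = np.sqrt(np.mean((f[1:, :] - f[:-1, :]) ** 2))  # column frequency
    return float(np.sqrt(rf ** 2 + cf ** 2))

def entropy(img_u8):
    """Shannon entropy (bits) of the 256-bin gray-level histogram of a uint8 image."""
    hist = np.bincount(img_u8.ravel(), minlength=256).astype(np.float64)
    p = hist / hist.sum()
    p = p[p > 0]
    return float(-(p * np.log2(p)).sum())
```

For example, a horizontal ramp that rises by one gray level per column has an average gradient of sqrt(1/2) and a spatial frequency of 1.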
Abstract: Objective Deep convolutional neural networks have shown strong reconstruction ability in image super-resolution (SR). With the popularity of intelligent edge devices such as mobile phones, efficient super-resolution has broad practical application. This paper proposes a very lightweight and efficient super-resolution network. Based on a recursive feature selection module and a parameter-sharing mechanism, it greatly reduces the number of parameters and floating-point operations (FLOPs) while achieving excellent reconstruction performance. Method The proposed lightweight attention feature selection recursive network (AFSNet) consists of three main components: low-level feature extraction, high-level feature extraction and upsampling reconstruction. In the low-level feature extraction part, the input low-resolution image passes through a 3×3 convolutional layer to extract low-level features. In the high-level feature extraction part, a recursive feature selection module (FSM) is designed to capture high-level features. At the end of the network, a shared upsampling block super-resolves the low-level and high-level features to obtain the final high-resolution image. Specifically, the FSM contains a feature enhancement block and an efficient channel attention block. The feature enhancement block has four convolutional layers. Unlike plainly cascaded convolutional layers, this block retains part of the features of each convolutional layer and fuses them at the end of the module. Features extracted by different convolutional layers carry different levels of hierarchical information, so the network preserves part of them step by step and aggregates them at the end of the module. An efficient channel attention (ECA) block follows the feature enhancement block.
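The channel-split bookkeeping of the AFSNet feature enhancement block can be sketched as below. The numbers C = 64, keep 16 and pass 48 come from the abstract. Everything else is a hypothetical, IMDN-style layout:

- Four layers map 64→64, 48→64, 48→64 and 48→16.
- The four preserved 16-channel slices are concatenated and fused by a 1×1 layer.
- 1×1 convolutions (`conv1x1`) stand in for the paper's 3×3 layers.
- The helper `recursive_features` reuses one block six times to mimic parameter sharing and stacks the outputs as multi-stage feature fusion (MSFF) would.

```python
import numpy as np

def conv1x1(x, w):
    """Stand-in for a conv layer: x has shape (C_in, H, W), w has shape (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)

def relu(x):
    return np.maximum(x, 0.0)

class FeatureEnhancementBlock:
    """Hypothetical progressive channel-split block: C = 64, keep 16, pass 48."""

    def __init__(self, c=64, keep=16, seed=0):
        rng = np.random.default_rng(seed)
        rest = c - keep
        self.keep = keep
        # Four layers: 64->64, 48->64, 48->64, 48->16 (the last output is kept whole).
        shapes = [(c, c), (c, rest), (c, rest), (keep, rest)]
        self.ws = [0.1 * rng.standard_normal(s) for s in shapes]
        # 1x1 fusion of the 4 * 16 = 64 preserved channels back to C channels.
        self.fuse = 0.1 * rng.standard_normal((c, 4 * keep))

    def __call__(self, x):
        kept, cur = [], x
        last = len(self.ws) - 1
        for i, w in enumerate(self.ws):
            out = relu(conv1x1(cur, w))
            if i < last:
                kept.append(out[: self.keep])  # preserve 16 channels at this depth
                cur = out[self.keep:]          # remaining 48 go to the next layer
            else:
                kept.append(out)               # final layer already has 16 channels
        return conv1x1(np.concatenate(kept, axis=0), self.fuse)

def recursive_features(block, x, steps=6):
    """Apply one block `steps` times with shared weights; stack outputs for MSFF."""
    outs = []
    for _ in range(steps):
        x = block(x)
        outs.append(x)
    return np.concatenate(outs, axis=0)  # (steps * C, H, W), before a fusion conv
```

Because the same `FeatureEnhancementBlock` instance is reused, the six recursive steps add no parameters beyond those of a single block.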
The channel attention (CA) in residual channel attention networks (RCAN) uses two 1×1 convolutional layers with dimensionality reduction to realize non-linear mapping and cross-channel interaction. The ECA block avoids this dimensionality reduction. Instead, it implements local cross-channel interaction without dimensionality reduction by means of a one-dimensional (1D) convolution. It also adaptively selects the kernel size of this 1D convolution, which determines the coverage of the local cross-channel interaction. The ECA block therefore improves reconstruction performance essentially without increasing the number of parameters. The network also uses a recursive mechanism to share parameters across the feature enhancement blocks, which greatly reduces the number of parameters. At the end of the high-level feature extraction part, the network concatenates and fuses the outputs of all FSMs. This multi-stage feature fusion (MSFF) mechanism captures valuable contextual information. In the upsampling reconstruction part, a shared upsampling block, consisting of a convolutional layer and a sub-pixel layer, reconstructs the low-level and high-level features into a high-resolution image. Low- and high-frequency information is thereby fused without increasing the number of parameters. Result The DF2K dataset, which includes 800 images from DIV2K and 2 650 images from Flickr2K, is used for training. The data are further augmented by random horizontal flipping and 90° rotation. The corresponding low-resolution images are obtained by bicubic downsampling of the high-resolution images with scale factors of ×2, ×3 and ×4. Five benchmark datasets are used for evaluation: Set5, Set14, B100, Urban100 and Manga109.
Peak signal-to-noise ratio (PSNR) and structural similarity (SSIM) are used as evaluation metrics. Following the evaluation protocol of the residual dense network (RDN), image borders are cropped and the metrics are computed on the luminance (Y) channel of the YCbCr color space. During training, 16 low-resolution patches of size 48×48 and their corresponding high-resolution patches are randomly cropped. Six recursive feature selection modules are used in the high-level feature extraction stage. Each convolutional layer in the FSM has C = 64 channels. In each channel split operation, 16 channels are preserved and the remaining 48 channels are passed to the next convolution. The network is trained with the L1 loss and optimized with the Adam optimizer. The initial learning rate is set to 2E-4 and halved every 200 epochs. The network is implemented in PyTorch and trained on an NVIDIA 2080 Ti GPU. AFSNet is compared with several state-of-the-art lightweight single-image super-resolution (SISR) methods based on convolutional neural networks (CNNs). It achieves the best PSNR and SSIM among all compared methods on almost all benchmark datasets, the exception being ×2 on Set5, while requiring far fewer parameters and FLOPs. For ×4 SR on Set14, its PSNR is 0.4 dB, 0.6 dB and 0.43 dB higher than that of SRFBN-S, IDN and CARN-M, respectively, with 47%, 53% and 38% fewer parameters. Meanwhile, AFSNet requires 24.5 G FLOPs, compared with the roughly 30 G FLOPs of typical methods. In addition, ablation studies were conducted to verify the effectiveness of the ECA module and the MSFF mechanism.
The ablation studies use ×4 SR on Set5 as the test set. Removing the ECA module or the MSFF mechanism from AFSNet lowers PSNR by 0.09 dB and 0.11 dB, respectively, which demonstrates the effectiveness of both. Conclusion This paper presents a lightweight attention feature selection recursive network for super-resolution that improves reconstruction performance without a large number of parameters or FLOPs. The network uses a 3×3 convolutional layer in the low-level feature extraction part to extract low-level features from the low-resolution (LR) input. Six recursive feature selection modules then learn the non-linear mapping and exploit high-level features. The FSM preserves hierarchical features step by step and aggregates them according to the importance of the candidate features, as evaluated by the proposed efficient channel attention module. Meanwhile, multi-stage feature fusion, which concatenates the outputs of all FSMs, effectively captures contextual information from different stages. Finally, the extracted low-level and high-level features are upsampled by a parameter-shared upsampling block.
Keywords: image super-resolution; lightweight networks; recursive mechanism; parameter share; feature enhancement; efficient channel attention
Updated: 2024-05-07
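The efficient channel attention block in the AFSNet abstract can be sketched as a forward pass in NumPy. Global average pooling produces one descriptor per channel. A 1D convolution without dimensionality reduction then mixes each channel with its neighbours, and a sigmoid turns the result into per-channel scales.

The adaptive kernel-size rule below, k = |(log2 C + b)/γ| rounded to odd with γ = 2 and b = 1, is the one published with ECA-Net. Whether AFSNet uses the same constants is an assumption, as is the zero padding. In PyTorch the same block is commonly written as `nn.AdaptiveAvgPool2d(1)` followed by `nn.Conv1d(1, 1, k, padding=(k - 1) // 2, bias=False)` and a sigmoid.

```python
import math
import numpy as np

def eca_kernel_size(channels, gamma=2, b=1):
    """ECA-Net rule: k = |(log2(C) + b) / gamma|, bumped up to the next odd number."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def eca(x, kernel):
    """Efficient channel attention on a (C, H, W) map with a 1D kernel of odd length k."""
    k = kernel.size
    y = x.mean(axis=(1, 2))                        # (C,) channel descriptors
    y_pad = np.pad(y, k // 2)                      # zero padding keeps length C
    z = np.correlate(y_pad, kernel, mode="valid")  # local cross-channel interaction
    return x * sigmoid(z)[:, None, None]           # rescale each channel
```

For C = 64 the rule gives k = 3, so the block adds only three learnable weights. This is consistent with the abstract's claim that ECA barely changes the parameter count.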

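The AFSNet evaluation protocol computes PSNR on the Y channel with the borders cropped. A minimal sketch follows. It uses the BT.601 luma coefficients of MATLAB's `rgb2ycbcr` for 8-bit RGB input. The border width of `scale` pixels is an assumption based on the common convention in SR benchmark code; SSIM is not covered.

```python
import numpy as np

def rgb_to_y(img):
    """BT.601 luma (as in MATLAB rgb2ycbcr) for float RGB values in [0, 255]."""
    return 16.0 + (65.481 * img[..., 0] + 128.553 * img[..., 1]
                   + 24.966 * img[..., 2]) / 255.0

def psnr_y(sr, hr, scale):
    """PSNR (dB) on the Y channel after cropping `scale` pixels from each border."""
    y_sr = rgb_to_y(sr.astype(np.float64))
    y_hr = rgb_to_y(hr.astype(np.float64))
    if scale > 0:
        y_sr = y_sr[scale:-scale, scale:-scale]
        y_hr = y_hr[scale:-scale, scale:-scale]
    mse = np.mean((y_sr - y_hr) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(255.0 ** 2 / mse))
```

Because Y spans only [16, 235], a black image compared with a white one differs by 219 luma levels rather than 255.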
Abstract: Objective As an important branch of image processing, image super-resolution has attracted extensive attention from researchers. In deep learning, the attention mechanism was originally applied to machine translation. Channel attention, an extension of it, has been widely used in image super-resolution. This paper proposes a single-image super-resolution method based on region-level channel attention. The network uses a region-level channel attention mechanism that assigns different attention to different channels in different regions. Meanwhile, the commonly used L1 and L2 losses tend to produce overly smooth results. To address this, a high-frequency aware loss is proposed, which increases the weight of the losses at high-frequency positions to promote the generation of high-frequency details. Method The network consists of three parts: low-level feature extraction, high-level feature extraction and image reconstruction. The low-level feature extraction part uses one 3×3 convolutional layer. The high-level feature extraction part contains a non-local module and several residual dense block attention modules. The non-local module extracts non-local similarity information via the non-local operation. A sub-pixel convolutional layer is applied before this computation so that it is carried out at low resolution. Dense connections in the residual dense block attention modules let the network adaptively accumulate features from different layers, and residual learning further eases gradient propagation. The region-level channel attention mechanism is introduced to attend adaptively to information in different regions. The initial non-local similarity information is added to the last layer through a skip connection.
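The abstract above does not specify how regions are formed or how attention is computed inside each region. The following is therefore a hypothetical sketch of region-level channel attention. An SE-style (RCAN-like) channel attention, with pooling, FC, ReLU, FC and sigmoid, is computed separately on each cell of a fixed `grid × grid` partition. The FC weights `w1` and `w2` are shared across cells, so the parameter count matches ordinary channel attention. With `grid=1` the function reduces to ordinary image-level channel attention.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def region_channel_attention(x, w1, w2, grid=2):
    """Hypothetical region-level channel attention on a (C, H, W) map.

    w1 has shape (C_r, C) and w2 has shape (C, C_r); both are shared by all
    regions. Requires grid <= H and grid <= W so that no region is empty.
    """
    c, h, w = x.shape
    hb = np.linspace(0, h, grid + 1).astype(int)  # region row boundaries
    wb = np.linspace(0, w, grid + 1).astype(int)  # region column boundaries
    out = np.empty_like(x)
    for i in range(grid):
        for j in range(grid):
            sl = (slice(None), slice(hb[i], hb[i + 1]), slice(wb[j], wb[j + 1]))
            region = x[sl]
            desc = region.mean(axis=(1, 2))                 # (C,) regional descriptor
            scale = sigmoid(w2 @ np.maximum(w1 @ desc, 0))  # (C,) per-region weights
            out[sl] = region * scale[:, None, None]
    return out
```

Each region can therefore emphasize different channels, for example texture channels in detailed areas and smooth channels in flat areas.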
In the image reconstruction part, sub-pixel convolution upsamples the features and a 3×3 convolutional layer produces the final reconstruction. The high-frequency aware loss is used to enhance the network's ability to reconstruct high-frequency details. Before training, the locations of high-frequency details in each image are extracted. During training, the losses at these locations are given larger weights so that the network better learns to reconstruct them. Training is divided into two stages. In the first stage, the network is trained with the L1 loss. In the second stage, the model from the first stage is fine-tuned with the high-frequency aware loss together with the L1 loss. Result Ablation studies verify both the region-level channel attention and the high-frequency aware loss. The model with region-level channel attention achieves a significantly higher peak signal-to-noise ratio (PSNR). Fine-tuning with the high-frequency aware loss plus the L1 loss also gives a higher PSNR than fine-tuning with the L1 loss alone, and the benefit of using both components together is verified as well. Set5, Set14, the Berkeley segmentation dataset (BSD100) and Urban100 are used for comparison with the following algorithms:

- Bicubic interpolation
- image super-resolution using deep convolutional networks (SRCNN)
- accurate image super-resolution using very deep convolutional networks (VDSR)
- image super-resolution using very deep residual channel attention networks (RCAN)
- the feedback network for image super-resolution (SRFBN)
- single image super-resolution via a holistic attention network (HAN)

For subjective comparison, three results at a scale factor of 4 are shown.
The results generated by the proposed algorithm are richer in texture, without blurring or distortion. For the objective comparison, PSNR and structural similarity (SSIM) are used to compare the methods comprehensively at scale factors of 2, 3 and 4. At a scale factor of 4, the model achieves a PSNR of 32.51 dB, 28.82 dB, 27.72 dB and 26.66 dB on the four standard test sets, respectively. Conclusion The proposed super-resolution algorithm applies the commonly used channel attention at the region level. With the high-frequency aware loss, the network reconstructs high-frequency details better by paying more attention to high-frequency locations. The experimental results show that, by using the region-level channel attention mechanism and the high-frequency aware loss, the proposed algorithm performs better in both objective indicators and subjective quality. Keywords: deep learning; convolutional neural network (CNN); super-resolution; attention mechanism; non-local neural network. Updated: 2024-05-07
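PSNR, the main objective indicator above, is $\mathrm{PSNR} = 10\log_{10}(\mathrm{MAX}^2/\mathrm{MSE})$ in dB. The sketch below assumes images scaled to [0, 1] (use `max_val=255` for 8-bit data). Super-resolution papers commonly evaluate on the Y channel and crop `scale` border pixels first; the abstract does not state which protocol was used, so the `shave` helper is an assumption.

```python
import numpy as np


def psnr(sr, hr, max_val=1.0):
    """PSNR in dB: 10 * log10(MAX^2 / MSE)."""
    diff = sr.astype(np.float64) - hr.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_val ** 2 / mse))


def shave(img, scale):
    """Crop `scale` pixels from each border (a common SR evaluation convention)."""
    return img[scale:-scale, scale:-scale]


hr = np.zeros((32, 32))
sr = hr + 0.1                      # MSE = 0.01 -> PSNR = 20 dB
print(round(psnr(shave(sr, 4), shave(hr, 4)), 6))  # 20.0
```

Since PSNR is logarithmic in MSE, a 0.1 dB gain corresponds to roughly a 2.3% reduction in mean squared error.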

Image Processing and Coding

Abstract: Objective Point cloud semantic segmentation is an essential visual task for scene understanding as vision moves from two dimensions to three. Deep learning methods for processing point clouds fall into three categories: point-based, projection-based and voxel-based. Projection-based methods obtain a two-dimensional image from the point cloud through spherical projection, perform semantic segmentation with a two-dimensional convolutional neural network, and restore the labels to the original point cloud through post-processing. However, these methods are usually applicable only to LiDAR point clouds. Voxel-based methods often consume a lot of memory because of the voxel representation. Both kinds of methods convert the unstructured point cloud into a structured form and process it with a two- or three-dimensional convolutional neural network, which loses geometric details. Point-based methods often consume more memory because they must store additional neighborhood information. Some existing methods therefore divide the entire point cloud into blocks for processing, but this destroys the geometric structure of the scene and leads to incomplete capture of scene information. In addition, because of excessive memory consumption, some point-based methods for large-scale scenes rely on shallow networks and therefore have insufficient receptive fields. This paper presents a computationally efficient and memory-efficient network for end-to-end semantic segmentation of large-scale scenes. Method The spatial depthwise residual (SDR) block is designed by combining spatial depthwise convolution with a residual structure to learn geometric features from the point cloud effectively. 
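The spherical projection used by projection-based methods maps each LiDAR point to a pixel through its azimuth and elevation. The sketch below follows a formulation similar to the one used by RangeNet++; the 64×1024 image size and the +3°/−25° vertical field of view are assumptions typical of a 64-beam sensor, not values from this paper. The returned per-point `(row, col)` indices are what the post-processing step would use to copy 2D predictions back to the original points.

```python
import numpy as np


def spherical_projection(points, h=64, w=1024, fov_up_deg=3.0, fov_down_deg=-25.0):
    """Project an (N, 3) LiDAR scan onto an (h, w) range image.

    Returns the range image (-1 where no point falls) and each point's
    (row, col), which post-processing uses to copy 2D labels back to 3D.
    """
    x, y, z = points[:, 0], points[:, 1], points[:, 2]
    r = np.linalg.norm(points, axis=1)
    fov_up, fov_down = np.radians(fov_up_deg), np.radians(fov_down_deg)
    yaw = np.arctan2(y, x)                                          # azimuth
    pitch = np.arcsin(np.clip(z / np.maximum(r, 1e-8), -1.0, 1.0))  # elevation
    u = 0.5 * (1.0 - yaw / np.pi) * w                               # column coordinate
    v = (1.0 - (pitch - fov_down) / (fov_up - fov_down)) * h        # row coordinate
    cols = np.clip(np.floor(u), 0, w - 1).astype(np.int64)
    rows = np.clip(np.floor(v), 0, h - 1).astype(np.int64)
    image = np.full((h, w), np.inf)
    # Several points can hit one pixel; keep the closest one.
    np.minimum.at(image, (rows, cols), r)
    image[np.isinf(image)] = -1.0
    return image, rows, cols


pts = np.array([[10.0, 0.0, 0.0], [5.0, 0.0, 0.0], [0.0, 8.0, 0.0]])
img, rows, cols = spherical_projection(pts)
print(rows, cols)
```

Points that share a pixel (the first two above) collapse to one range value, which is one reason projection-based methods need post-processing to restore per-point labels.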
The receptive field is one of the key factors in semantic segmentation. To enlarge it, a dilated feature aggregation (DFA) module is proposed, which has a larger receptive field than the SDR block but requires less computation. The core idea of this module is to reduce computation and memory consumption through downsampling. Combining SDR blocks and DFA modules, a deeper encoder-decoder network, SDRNet, is constructed for semantic segmentation of large-scale scenes. The distribution of the input data affects the training of the network: analysis of the convolution kernel's input data shows that its distribution is not conducive to learning, and hierarchical normalization (HN) is used to reduce the learning difficulty of the convolution kernel. For sparse LiDAR point clouds, a special SDR block provides a form of rotation invariance. Before convolution, each point and its neighborhood are first rotated to a fixed angle, which eliminates the influence of rotating the LiDAR data around the Z-axis, so the prediction does not change when the point cloud is rotated around the Z-axis. This special SDR block significantly improves the performance of the network on LiDAR point clouds. Result Experiments use the Stanford large-scale 3D indoor spaces (S3DIS) dataset and the Karlsruhe Institute of Technology and Toyota Technological Institute (SemanticKITTI) dataset. Different parameters are set for different tasks to suit their application scenarios. Because the S3DIS task focuses on accuracy, a larger model is built for higher accuracy; because SemanticKITTI scenes demand more speed, lighter hyperparameters are chosen. The model is compared with several state-of-the-art models on S3DIS using 6-fold cross validation. 
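The abstract says only that each point and its neighborhood are rotated "to a fixed angle" before convolution. One plausible reading, sketched below as an assumption, is to rotate the neighborhood about Z so that the center point's azimuth (seen from the sensor at the origin) becomes 0. The function names are hypothetical. The final check shows why this removes the effect of a global Z rotation: rotating the whole scan by `phi` shifts the azimuth by `phi`, and the canonicalizing rotation cancels it.

```python
import numpy as np


def rotate_z(points, angle):
    """Rotate (N, 3) points by `angle` radians about the Z-axis."""
    c, s = np.cos(angle), np.sin(angle)
    rot = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    return points @ rot.T


def canonical_neighborhood(center, neighbors):
    """Hypothetical canonicalization: express the neighbors relative to the center
    point, then rotate so the center's azimuth (seen from the sensor at the
    origin) becomes 0. The result no longer depends on a global Z rotation."""
    theta = np.arctan2(center[1], center[0])
    return rotate_z(neighbors - center, -theta)


rng = np.random.default_rng(1)
center = np.array([12.0, -3.0, 0.5])
neighbors = center + 0.3 * rng.standard_normal((16, 3))
phi = 0.7                                            # rotate the whole scan
a = canonical_neighborhood(center, neighbors)
b = canonical_neighborhood(rotate_z(center[None], phi)[0], rotate_z(neighbors, phi))
print(np.allclose(a, b))  # True
```

Since the rotation leaves Z untouched, height information, which matters for classes such as road versus vegetation, is preserved while the azimuth dependence is removed.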
Mean intersection over union (mIoU), mean accuracy (mAcc) and overall accuracy (OA) are evaluated on S3DIS, where the method achieves 88.9% OA, 82.4% mAcc and 71.7% mIoU and performs well across these metrics. Online single-scan evaluation on SemanticKITTI gives 59.1% mIoU; compared with point-based and projection-based methods, the method achieves better mIoU and better accuracy on several classes. In autonomous driving scenarios such as SemanticKITTI, inference speed is a crucial factor, so the inference speed of SDRNet is also tested with different numbers of points. With 50 K points, the network processes point clouds at 11 frames per second (fps) on a machine with an NVIDIA RTX GPU and an i7-8700K CPU. Ablation studies further explain the contribution of each part of the model. Conclusion Experiments on the S3DIS and SemanticKITTI datasets show that the method can directly perform semantic segmentation of large-scale point cloud scenes, extracting scene information effectively and achieving high accuracy. The ablation study on S3DIS Area 5 demonstrates that both DFA and HN improve performance, and the ablation study on the SemanticKITTI validation set shows that eliminating the influence of rotation with the special SDR block effectively improves the performance of the network. Analysis of the relationship between the number of points and the frame rate shows that the method achieves high accuracy at a relatively fast speed. Keywords: deep learning; semantic segmentation; normalization; point cloud; residual neural network; receptive field. Updated: 2024-05-07
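OA, mAcc and mIoU can all be read off a per-point confusion matrix. The sketch below uses the standard definitions; it averages only over classes that occur in the ground truth, which is an assumption, and official benchmark scripts may differ in details such as ignored classes.

```python
import numpy as np


def confusion_matrix(gt, pred, num_classes):
    """Rows index ground-truth classes, columns index predicted classes."""
    gt = np.asarray(gt, dtype=np.int64)
    pred = np.asarray(pred, dtype=np.int64)
    idx = gt * num_classes + pred
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)


def seg_metrics(cm):
    """Return (OA, mAcc, mIoU) from a confusion matrix."""
    tp = np.diag(cm).astype(np.float64)
    gt_count = cm.sum(axis=1)          # points per ground-truth class
    pred_count = cm.sum(axis=0)        # points per predicted class
    valid = gt_count > 0               # assumption: skip classes absent from GT
    oa = tp.sum() / cm.sum()
    acc = tp[valid] / gt_count[valid]
    iou = tp[valid] / (gt_count + pred_count - tp)[valid]
    return float(oa), float(acc.mean()), float(iou.mean())


gt = [0, 0, 1, 1]
pred = [0, 1, 1, 1]
cm = confusion_matrix(gt, pred, num_classes=2)
oa, macc, miou = seg_metrics(cm)
print(oa, macc, miou)  # OA = 0.75, mAcc = 0.75, mIoU = 7/12 ≈ 0.583
```

mIoU penalizes both missed points and false positives for each class, which is why it is typically lower than OA on class-imbalanced scenes such as S3DIS.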