最新刊期

卷 26 , 期 11 , 2021

-

摘要:With the continuous improvement of China's economic strength and people's living standards, the requirements of the state and the people for electric power are gradually improving. To meet the increasing demand for electricity, the grid system is constantly developing, leading to increased time and capital costs required for the safe operation and maintenance of power grids. The rise of unmanned aerial vehicle (UAV) technology has introduced new detection ideas, which make the intelligent and efficient detection of defects of transmission line components a reality. Compared with manual inspection, UAV has advantages of low cost, high efficiency, strong mobility, and high safety. Thus, it has gradually replaced manual inspection. At the same time, artificial intelligence (AI) technology based on deep learning is also developing rapidly, and the related technology of applying AI to the maintenance of power equipment has developed rapidly in recent years. However, how to accurately and efficiently detect the visual defects of transmission line components is a key problem to be solved. Early component visual defect detection methods based on image processing and feature engineering have high requirements on image quality, and designing features for various transmission line components consumes much time and money. The current UAV aerial photography technology cannot meet the requirements of image quality, and its detection accuracy cannot meet the actual requirements of defect inspection of basic transmission line components. Thus, applying the component visual defect detection method based on image processing and feature engineering to complex real-life scenes is impossible. With deep learning, transmission line component defect detection models based on deep learning can effectively extract transmission line component objects and defects from aerial images with complex backgrounds. Deep learning-based detection models have many other advantages. 1) Deep learning can automatically extract multi-level, multi-angle features from original data instead of artificial design. 2) Deep learning has strong generalization and expression capabilities, that is, it possesses translation invariance. 3) Deep learning is more adaptable to complex real-world environments than traditional techniques. Therefore, the object detection model based on deep learning is an inevitable choice for processing transmission line inspection images. Before applying a deep learning model to the defect detection of key components of transmission lines, a complete defect data set of components should be created for the training of the deep learning model. However, in transmission line component defect detection, no data set is available to the public. This work aims to review the visual defect detection methods of transmission line components. On the basis of extensive research on the visual defect detection of transmission line components, existing detection methods are summarized and analyzed. First, the visual defect detection technology of key parts of transmission lines is described based on traditional algorithms. The development process of deep learning is reviewed, and the advantages and disadvantages of deep learning in defect detection are analyzed. Second, the status of research on the positioning and defect detection of three important components on transmission lines(i.e., insulator, metal, and bolt)is introduced. Third, several key problems, such as sample imbalance, small object detection, and fine-grained detection, in transmission line component defect detection are analyzed. Lastly, the future development trend of transmission line component defect detection technology that meets the requirements of complex-scene grid inspection and fault diagnosis criteria is analyzed. The conclusion is that the development of visual defect detection of transmission line components cannot be separated from the development of deep learning in the field of image processing and image data augmentation. In short, the establishment of a high-precision, high-efficiency, strongly intelligent, multi-level, full-coverage defect detection model of key components of transmission lines on the basis of deep learning remains unrealized.关键词:power equipment operation and maintenance;transmission line components;visual defect detection;deep learning;object detection;knowledge guidance351|408|29更新时间:2024-05-07

摘要:With the continuous improvement of China's economic strength and people's living standards, the requirements of the state and the people for electric power are gradually improving. To meet the increasing demand for electricity, the grid system is constantly developing, leading to increased time and capital costs required for the safe operation and maintenance of power grids. The rise of unmanned aerial vehicle (UAV) technology has introduced new detection ideas, which make the intelligent and efficient detection of defects of transmission line components a reality. Compared with manual inspection, UAV has advantages of low cost, high efficiency, strong mobility, and high safety. Thus, it has gradually replaced manual inspection. At the same time, artificial intelligence (AI) technology based on deep learning is also developing rapidly, and the related technology of applying AI to the maintenance of power equipment has developed rapidly in recent years. However, how to accurately and efficiently detect the visual defects of transmission line components is a key problem to be solved. Early component visual defect detection methods based on image processing and feature engineering have high requirements on image quality, and designing features for various transmission line components consumes much time and money. The current UAV aerial photography technology cannot meet the requirements of image quality, and its detection accuracy cannot meet the actual requirements of defect inspection of basic transmission line components. Thus, applying the component visual defect detection method based on image processing and feature engineering to complex real-life scenes is impossible. With deep learning, transmission line component defect detection models based on deep learning can effectively extract transmission line component objects and defects from aerial images with complex backgrounds. Deep learning-based detection models have many other advantages. 1) Deep learning can automatically extract multi-level, multi-angle features from original data instead of artificial design. 2) Deep learning has strong generalization and expression capabilities, that is, it possesses translation invariance. 3) Deep learning is more adaptable to complex real-world environments than traditional techniques. Therefore, the object detection model based on deep learning is an inevitable choice for processing transmission line inspection images. Before applying a deep learning model to the defect detection of key components of transmission lines, a complete defect data set of components should be created for the training of the deep learning model. However, in transmission line component defect detection, no data set is available to the public. This work aims to review the visual defect detection methods of transmission line components. On the basis of extensive research on the visual defect detection of transmission line components, existing detection methods are summarized and analyzed. First, the visual defect detection technology of key parts of transmission lines is described based on traditional algorithms. The development process of deep learning is reviewed, and the advantages and disadvantages of deep learning in defect detection are analyzed. Second, the status of research on the positioning and defect detection of three important components on transmission lines(i.e., insulator, metal, and bolt)is introduced. Third, several key problems, such as sample imbalance, small object detection, and fine-grained detection, in transmission line component defect detection are analyzed. Lastly, the future development trend of transmission line component defect detection technology that meets the requirements of complex-scene grid inspection and fault diagnosis criteria is analyzed. The conclusion is that the development of visual defect detection of transmission line components cannot be separated from the development of deep learning in the field of image processing and image data augmentation. In short, the establishment of a high-precision, high-efficiency, strongly intelligent, multi-level, full-coverage defect detection model of key components of transmission lines on the basis of deep learning remains unrealized.关键词:power equipment operation and maintenance;transmission line components;visual defect detection;deep learning;object detection;knowledge guidance351|408|29更新时间:2024-05-07 -

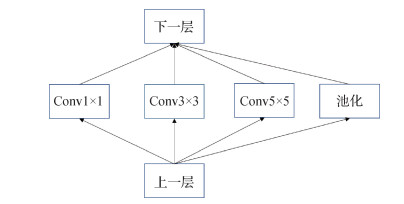

摘要:ObjectiveThe insulator is the key component in transmission lines. Insulators are numerous and widely distributed in transmission lines. They operate in the field for a long time. Affected by high voltage and complex climate, faults, such as defects and cracks, occur easily. Faults have serious consequences and entail economic losses. Therefore, the insulator components in aerial images need to be identified efficiently and accurately to provide a basis for fault diagnosis and other related work. Traditional insulator recognition methods include threshold segmentation based on target features and recognition algorithms based on image enhancement. These methods need features to be designed manually, but manual selection of labeled features is prone to errors or false checks, and the recognition efficiency and accuracy are low. Hence, this approach cannot fully meet actual needs. Compared with traditional methods, such as image segmentation and image enhancement, deep learning extracts insulator features automatically by using a machine, and it is more accurate and faster than manual extraction.Researchers have used the popular algorithm faster region convolutional neural network(Faster RCNN) to identify insulators and generated proposal regions in the last feature layer by convolution to identify insulator targets. This algorithm results in an insufficient number of feature maps to be identified, and the small scale leads to weak semantic information of insulators, which easily causes misdetection and even non-detection. When the single-shot multi-box detector and "you only look once" use a fixed-size convolution kernel to identify insulators with a large scale difference, the semantic information of insulator features with a relatively small scale is reduced, which easily causes small-scale insulation misdetection.MethodAn insulator recognition model based on the improved scale-transferrable network is proposed to address the problems that traditional methods cannot automatically extract insulator features and that the deep learning network is insufficient to extract insulator semantic information. This model meets the requirements of automatic recognition and semantic information enhancement. The length and width of the insulator images are limited to 300×300 pixels. The preprocessed insulator images are outputted to the backbone network Densenet-169. Densenet-169 completes the feature extraction of the insulator images. The improvement work in this study is mainly divided into three parts. First, the feature integration method is used to enhance the semantic information of the feature map generated by Densenet-169. Second, after feature extraction, the semantic information loss of the small-scale insulator becomes serious; therefore, the small-scale feature map in the network is expanded to further enrich the semantic information. Lastly, the parameters of the anchor box are improved to effectively identify the insulator with a large-scale difference. After the improvement work is completed, the accurate position information of the insulator is obtained through bounding box regression, and the insulator is identified.ResultThe experimental data set is composed of composite, glass, and ceramic insulators. The images have a total of 4 350, which include 2 000 composite, 1 350 glass, and 1 000 ceramic insulator images. The scale of each image is preprocessed as 300×300 pixels, and the training and test sets are divided randomly(3 250 and 1 100, respectively). Experimental results show that the model structure is improved, and the recognition accuracy is 96.28%. The improvement in recognition accuracy ranges from 1.98% to 11.99% relative to the traditional Faster RCNN, improved Faster RCNN, and improved region-based fully convolutional neural network(R-FCN).ConclusionThe improved model increases the accuracy of insulator identification significantly and lays a solid foundation for subsequent transmission line detection work. Considering that the total number of anchor boxes is increased due to the improved scaling module, the compression parameters will be considered in future work to reduce the calculation.关键词:scale-transferrable network;insulator recognition;semantic information;feature integration;convolution;pooling;anchor box122|328|3更新时间:2024-05-07

摘要:ObjectiveThe insulator is the key component in transmission lines. Insulators are numerous and widely distributed in transmission lines. They operate in the field for a long time. Affected by high voltage and complex climate, faults, such as defects and cracks, occur easily. Faults have serious consequences and entail economic losses. Therefore, the insulator components in aerial images need to be identified efficiently and accurately to provide a basis for fault diagnosis and other related work. Traditional insulator recognition methods include threshold segmentation based on target features and recognition algorithms based on image enhancement. These methods need features to be designed manually, but manual selection of labeled features is prone to errors or false checks, and the recognition efficiency and accuracy are low. Hence, this approach cannot fully meet actual needs. Compared with traditional methods, such as image segmentation and image enhancement, deep learning extracts insulator features automatically by using a machine, and it is more accurate and faster than manual extraction.Researchers have used the popular algorithm faster region convolutional neural network(Faster RCNN) to identify insulators and generated proposal regions in the last feature layer by convolution to identify insulator targets. This algorithm results in an insufficient number of feature maps to be identified, and the small scale leads to weak semantic information of insulators, which easily causes misdetection and even non-detection. When the single-shot multi-box detector and "you only look once" use a fixed-size convolution kernel to identify insulators with a large scale difference, the semantic information of insulator features with a relatively small scale is reduced, which easily causes small-scale insulation misdetection.MethodAn insulator recognition model based on the improved scale-transferrable network is proposed to address the problems that traditional methods cannot automatically extract insulator features and that the deep learning network is insufficient to extract insulator semantic information. This model meets the requirements of automatic recognition and semantic information enhancement. The length and width of the insulator images are limited to 300×300 pixels. The preprocessed insulator images are outputted to the backbone network Densenet-169. Densenet-169 completes the feature extraction of the insulator images. The improvement work in this study is mainly divided into three parts. First, the feature integration method is used to enhance the semantic information of the feature map generated by Densenet-169. Second, after feature extraction, the semantic information loss of the small-scale insulator becomes serious; therefore, the small-scale feature map in the network is expanded to further enrich the semantic information. Lastly, the parameters of the anchor box are improved to effectively identify the insulator with a large-scale difference. After the improvement work is completed, the accurate position information of the insulator is obtained through bounding box regression, and the insulator is identified.ResultThe experimental data set is composed of composite, glass, and ceramic insulators. The images have a total of 4 350, which include 2 000 composite, 1 350 glass, and 1 000 ceramic insulator images. The scale of each image is preprocessed as 300×300 pixels, and the training and test sets are divided randomly(3 250 and 1 100, respectively). Experimental results show that the model structure is improved, and the recognition accuracy is 96.28%. The improvement in recognition accuracy ranges from 1.98% to 11.99% relative to the traditional Faster RCNN, improved Faster RCNN, and improved region-based fully convolutional neural network(R-FCN).ConclusionThe improved model increases the accuracy of insulator identification significantly and lays a solid foundation for subsequent transmission line detection work. Considering that the total number of anchor boxes is increased due to the improved scaling module, the compression parameters will be considered in future work to reduce the calculation.关键词:scale-transferrable network;insulator recognition;semantic information;feature integration;convolution;pooling;anchor box122|328|3更新时间:2024-05-07 -

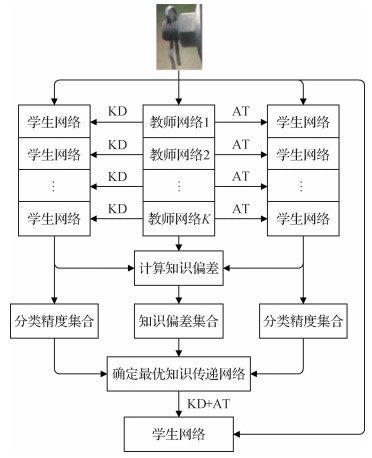

摘要:ObjectiveBolts play a key role in fixing and connecting various metal parts in transmission lines. Defects seriously affect the power transmission of transmission lines. The imaging background of an inspection image is complicated, the imaging distance and angle are variable, and the bolts occupy a small proportion in the inspection image. Thus, bolt defect images of transmission lines have low resolution and scarce visual information, and they usually require a large model with high complexity and excellent performance to classify bolt defects and ensure accuracy. A large model has a complex structure and numerous parameters, and deploying it on a large scale is difficult due to a large amount of computing resources needed in data analysis. A small model has a simple structure and few parameters, but it cannot completely guarantee the accuracy of bolt defect classification. This study proposes an image classification method of transmission line bolt defects based on the optimal knowledge transfer network to compensate for the limitations of bolt defect classification using large and small models.MethodThe width of the large model is changed, that is, the dimension of network feature expression is broadened, to fully mine the target information in the bolt image, thereby increasing the bolt defect knowledge of the transferability of the large model to the small model. To reduce the parameters of the small model considerably and improve its operation and maintenance capabilities, the structure of the small model is simplified to a 10-layer residual network with three residual blocks. The number of convolution kernels of each residual block is 16, 32, and 64. Therefore, the small model still has obvious features that focus on the low gradient of the bolt image in the low layer, the high difference area in the middle layer, and the overall characteristics of the bolt image in the high layer. Then, the large models of different widths use the attention transfer algorithm and the knowledge distillation algorithm to guide the training of the small models, and the accuracy of the small models under different widths after the training is calculated. Afterward, the concept of knowledge deviation is proposed to measure the degree of bolt defect knowledge transfer of large models and select the large model with the best performance in transferring bolt defect knowledge. The performance of the large and small models is mapped on a number line in the form of accuracy. The calculation process of knowledge deviation proceeds as follows. First, the difference in bolt defect classification accuracy between the large model with a known width and the small model being instructed is calculated. Second, the difference in bolt defect classification accuracy between the large model and the small model that is not being instructed is computed. Lastly, the ratio of the two differences before and after the calculation is adopted as the knowledge deviation. The smaller the knowledge deviation is, the greater the degree of bolt defect knowledge transfer is from the large model to the small model. The optimal knowledge transfer model is determined according to the knowledge deviation of different widths and the bolt defect classification accuracy of the small models under different guidance methods. The optimal knowledge transfer model combines the attention algorithm and the knowledge distillation algorithm to guide in small model training and maximize the bolt defect classification performance of the small model.ResultThe self-built bolt defect image classification data set verifies the effectiveness of this method in improving the accuracy of bolt classification of the simplified small model. The data set is constructed by clipping and optimizing the transmission line inspection image. The bolt defect image classification data set contains a total of 6 420 images, including 3 136 normal bolts, 2 820 bolts with missing pins, and 464 bolts with missing nuts, which belong to three categories. Experimental results show that the large model with a width of 5 has the best performance in transferring bolt defect knowledge to the small model, and it increases the bolt defect classification accuracy of the small model by 5.56%. The difference in the accuracy of bolt defect classification between the small model and the optimal knowledge transfer model is only 2.17%. The knowledge deviation is 0.28, and the parameter of the small model is only 0.56% of the parameter of the large model.ConclusionThe proposed bolt defect classification method based on the optimal knowledge transfer network greatly alleviates the problems of large model parameters and low classification accuracy of small models caused by bolt image characteristics. Balance is achieved between the classification accuracy of the bolt defect image and resource consumption. The method meets the requirements of actual field operation and maintenance, such as the work requirements of embedded equipment (e.g., online monitoring in transmission lines) and reduces the resource consumption of transmission line patrol data analysis.关键词:bolt defect classification;optimal knowledge transfer;knowledge deviation;knowledge distillation;attention transfer85|160|1更新时间:2024-05-07

摘要:ObjectiveBolts play a key role in fixing and connecting various metal parts in transmission lines. Defects seriously affect the power transmission of transmission lines. The imaging background of an inspection image is complicated, the imaging distance and angle are variable, and the bolts occupy a small proportion in the inspection image. Thus, bolt defect images of transmission lines have low resolution and scarce visual information, and they usually require a large model with high complexity and excellent performance to classify bolt defects and ensure accuracy. A large model has a complex structure and numerous parameters, and deploying it on a large scale is difficult due to a large amount of computing resources needed in data analysis. A small model has a simple structure and few parameters, but it cannot completely guarantee the accuracy of bolt defect classification. This study proposes an image classification method of transmission line bolt defects based on the optimal knowledge transfer network to compensate for the limitations of bolt defect classification using large and small models.MethodThe width of the large model is changed, that is, the dimension of network feature expression is broadened, to fully mine the target information in the bolt image, thereby increasing the bolt defect knowledge of the transferability of the large model to the small model. To reduce the parameters of the small model considerably and improve its operation and maintenance capabilities, the structure of the small model is simplified to a 10-layer residual network with three residual blocks. The number of convolution kernels of each residual block is 16, 32, and 64. Therefore, the small model still has obvious features that focus on the low gradient of the bolt image in the low layer, the high difference area in the middle layer, and the overall characteristics of the bolt image in the high layer. Then, the large models of different widths use the attention transfer algorithm and the knowledge distillation algorithm to guide the training of the small models, and the accuracy of the small models under different widths after the training is calculated. Afterward, the concept of knowledge deviation is proposed to measure the degree of bolt defect knowledge transfer of large models and select the large model with the best performance in transferring bolt defect knowledge. The performance of the large and small models is mapped on a number line in the form of accuracy. The calculation process of knowledge deviation proceeds as follows. First, the difference in bolt defect classification accuracy between the large model with a known width and the small model being instructed is calculated. Second, the difference in bolt defect classification accuracy between the large model and the small model that is not being instructed is computed. Lastly, the ratio of the two differences before and after the calculation is adopted as the knowledge deviation. The smaller the knowledge deviation is, the greater the degree of bolt defect knowledge transfer is from the large model to the small model. The optimal knowledge transfer model is determined according to the knowledge deviation of different widths and the bolt defect classification accuracy of the small models under different guidance methods. The optimal knowledge transfer model combines the attention algorithm and the knowledge distillation algorithm to guide in small model training and maximize the bolt defect classification performance of the small model.ResultThe self-built bolt defect image classification data set verifies the effectiveness of this method in improving the accuracy of bolt classification of the simplified small model. The data set is constructed by clipping and optimizing the transmission line inspection image. The bolt defect image classification data set contains a total of 6 420 images, including 3 136 normal bolts, 2 820 bolts with missing pins, and 464 bolts with missing nuts, which belong to three categories. Experimental results show that the large model with a width of 5 has the best performance in transferring bolt defect knowledge to the small model, and it increases the bolt defect classification accuracy of the small model by 5.56%. The difference in the accuracy of bolt defect classification between the small model and the optimal knowledge transfer model is only 2.17%. The knowledge deviation is 0.28, and the parameter of the small model is only 0.56% of the parameter of the large model.ConclusionThe proposed bolt defect classification method based on the optimal knowledge transfer network greatly alleviates the problems of large model parameters and low classification accuracy of small models caused by bolt image characteristics. Balance is achieved between the classification accuracy of the bolt defect image and resource consumption. The method meets the requirements of actual field operation and maintenance, such as the work requirements of embedded equipment (e.g., online monitoring in transmission lines) and reduces the resource consumption of transmission line patrol data analysis.关键词:bolt defect classification;optimal knowledge transfer;knowledge deviation;knowledge distillation;attention transfer85|160|1更新时间:2024-05-07 -

摘要:ObjectiveUnmanned aerial vehicle(UAV)-based transmission line inspection technology has achieved long-term progress and development. The use of computer vision technology to automatically and accurately locate line equipment, such as wires, insulators, and bolts, from aerial inspection images under complex natural backgrounds and accurately detect their defects has become animportant technical issue. The defects inspected by transmission lines mainly include tower, wire, insulator, and metal fitting defects. Given the large size of metal fittings and insulators, their defects are obvious and easy to identify. By contrast, numerous bolts are present in poles, insulators, and metal fittings. Bolts change easily from the normal state to the defect state due to the large number of bolts and complex stress conditions. The use of deep learning has achieved good results in visual detection, identification, and classification of tower, wire, insulator, and metal fitting defects, but only a few studies have been conducted on bolt defects. In addition, bolt defects are not completely visually separable problems; they are visually inseparable, and they can not be solved by object detection algorithms alone. Thus, we believe that the bolt defect detection problem is not only an object detection problem, but also an image classification problem. Multi-label classification of bolts must be implemented efficiently and quickly to provide a basis for defect detection. The convolutional neural network (CNN) is inherently limited by model geometry transformation due to its fixed geometric structure. An offset variable must be added to the position of each sampling point in the convolution kernel to weaken this limitation and improve the feature extraction capability of bolts. By adding these variables, the convolution kernel is given random sampling near the current position and is no longer limited to the previous regular grid points. The convolution operation after expansion is called deformable convolution. Deformable convolution changes the sampling position of the standard convolution kernel by adding an additional offset to the sampling point. The compensation obtained can be learned through training without additional supervision.MethodThe object to be inspected in the transmission line bolt multi-label classification task has similar overall characteristics as those of the object in the general image multi-label classification task. The classification model needs to capture the key local features that can distinguish the attributes of different categories. The idea of using local regions to assist in classification belongs to fine-grained classification. Several studies on fine-grained classification algorithms used detailed local area labels to train the model so that the model can accurately locate the regions containing detailed semantic information. However, this approach requires a huge amount of work in the production of labels. In other studies, unsupervised learning was used to locate key areas. Although this strategy eliminates tedious label-making work, the accuracy of the model in locating key details can not be ensured. The multi-label classification method proposed in this study is mainly divided into three steps.First, navigator-teacher-scrutinizer network(NTS-Net) is used as the basic network, and the feature extraction network is improved into a deformable ResNet-50 network in accordance with the various properties of the bolt target shape. Second, the navigator network in NTS-Net continuously learns and provides k regions with the most information under the guidance of the teacher network to obtain the discriminative region of the bolt target. Lastly, to make the model use discriminant features effectively, the input features of the k regions receiving the most information from the navigator network are extracted, and corresponding feature vectors are generated and connected to the feature vectors of the entire input image. Afterward, the features need to be passed through the channel attention module, which can enhance the feature with a large weight and suppress the feature with a small weight.ResultThis study uses the bolt multi-attribute classification dataset to evaluate the model. The bolt defect images are from samples obtained by UAV line inspection. The data sample has a total of 2 000 pictures, of which 1 500 are used as training samples and 500 are used as test samples. The bolt defect attributes are divided into six categories based the idea of visual separability. Each bolt defect image contains one or more defect attributes, which can be divided into the following six categories: a pin hole is present, shim is present, a nut is present, rust is present, the nut is loose, and the pin is loose; they are labeled 0-5 respectively. In the multi-label classification task in this study, a 1×6 matrix is constructed for each picture as the label of the picture. If the corresponding attribute category exists, the value is set to 1 and vice versa. Experimental results show that the mean average precision of the proposed method in the bolt multi-attribute classification dataset is 84.5%, which is 10%~20% higher than the accuracy of multi-label classification using traditional networks.ConclusionThe feature extraction capability of the network is improved through deformable convolution, and the channel attention mechanism is introduced to realize the efficient utilization of the local features provided by NTS-Net. Experimental results show that the proposed method performs better than the traditional method in the bolt multi-attribute classification dataset. The proposed method provides a new idea for applying multi-attribute information to bolt defect reasoning and realizing bolt defect detection.关键词:bolt defect;deformable convolution;NTS-Net network;multi-label classification;channel attention81|30|5更新时间:2024-05-07

摘要:ObjectiveUnmanned aerial vehicle(UAV)-based transmission line inspection technology has achieved long-term progress and development. The use of computer vision technology to automatically and accurately locate line equipment, such as wires, insulators, and bolts, from aerial inspection images under complex natural backgrounds and accurately detect their defects has become animportant technical issue. The defects inspected by transmission lines mainly include tower, wire, insulator, and metal fitting defects. Given the large size of metal fittings and insulators, their defects are obvious and easy to identify. By contrast, numerous bolts are present in poles, insulators, and metal fittings. Bolts change easily from the normal state to the defect state due to the large number of bolts and complex stress conditions. The use of deep learning has achieved good results in visual detection, identification, and classification of tower, wire, insulator, and metal fitting defects, but only a few studies have been conducted on bolt defects. In addition, bolt defects are not completely visually separable problems; they are visually inseparable, and they can not be solved by object detection algorithms alone. Thus, we believe that the bolt defect detection problem is not only an object detection problem, but also an image classification problem. Multi-label classification of bolts must be implemented efficiently and quickly to provide a basis for defect detection. The convolutional neural network (CNN) is inherently limited by model geometry transformation due to its fixed geometric structure. An offset variable must be added to the position of each sampling point in the convolution kernel to weaken this limitation and improve the feature extraction capability of bolts. By adding these variables, the convolution kernel is given random sampling near the current position and is no longer limited to the previous regular grid points. The convolution operation after expansion is called deformable convolution. Deformable convolution changes the sampling position of the standard convolution kernel by adding an additional offset to the sampling point. The compensation obtained can be learned through training without additional supervision.MethodThe object to be inspected in the transmission line bolt multi-label classification task has similar overall characteristics as those of the object in the general image multi-label classification task. The classification model needs to capture the key local features that can distinguish the attributes of different categories. The idea of using local regions to assist in classification belongs to fine-grained classification. Several studies on fine-grained classification algorithms used detailed local area labels to train the model so that the model can accurately locate the regions containing detailed semantic information. However, this approach requires a huge amount of work in the production of labels. In other studies, unsupervised learning was used to locate key areas. Although this strategy eliminates tedious label-making work, the accuracy of the model in locating key details can not be ensured. The multi-label classification method proposed in this study is mainly divided into three steps.First, navigator-teacher-scrutinizer network(NTS-Net) is used as the basic network, and the feature extraction network is improved into a deformable ResNet-50 network in accordance with the various properties of the bolt target shape. Second, the navigator network in NTS-Net continuously learns and provides k regions with the most information under the guidance of the teacher network to obtain the discriminative region of the bolt target. Lastly, to make the model use discriminant features effectively, the input features of the k regions receiving the most information from the navigator network are extracted, and corresponding feature vectors are generated and connected to the feature vectors of the entire input image. Afterward, the features need to be passed through the channel attention module, which can enhance the feature with a large weight and suppress the feature with a small weight.ResultThis study uses the bolt multi-attribute classification dataset to evaluate the model. The bolt defect images are from samples obtained by UAV line inspection. The data sample has a total of 2 000 pictures, of which 1 500 are used as training samples and 500 are used as test samples. The bolt defect attributes are divided into six categories based the idea of visual separability. Each bolt defect image contains one or more defect attributes, which can be divided into the following six categories: a pin hole is present, shim is present, a nut is present, rust is present, the nut is loose, and the pin is loose; they are labeled 0-5 respectively. In the multi-label classification task in this study, a 1×6 matrix is constructed for each picture as the label of the picture. If the corresponding attribute category exists, the value is set to 1 and vice versa. Experimental results show that the mean average precision of the proposed method in the bolt multi-attribute classification dataset is 84.5%, which is 10%~20% higher than the accuracy of multi-label classification using traditional networks.ConclusionThe feature extraction capability of the network is improved through deformable convolution, and the channel attention mechanism is introduced to realize the efficient utilization of the local features provided by NTS-Net. Experimental results show that the proposed method performs better than the traditional method in the bolt multi-attribute classification dataset. The proposed method provides a new idea for applying multi-attribute information to bolt defect reasoning and realizing bolt defect detection.关键词:bolt defect;deformable convolution;NTS-Net network;multi-label classification;channel attention81|30|5更新时间:2024-05-07 -

摘要:ObjectiveIn transmission lines, bolts are widely used as a kind of fasteners to connect various parts of transmission lines and make the overall structure stable and safe. However, bolts are easily damaged because of their complex working environment. The damage or loss of a bolt may cause a large area of transmission line failure, which seriously threatens the safety and stability of the power grid. Bolts are the most common components of transmission lines. Thus, bolt defect detection is an important task in transmission line inspection. Good features are difficult extract because of the complex background, small target, small difference between categories, and loss of gradient information. This study proposes a dual-attention scheme to enhance the visual features of different scales and positions.MethodFirst, for different scales, the network extracts the feature map of each layer, uses the multi-scale attention model to obtain the corresponding attention map, calculates the difference of the attention map for adjacent layers, and adds it to the loss function as a regularization term to enhance the fine features of the bolt area. The trained network continuously reduces the difference in the attention maps of different layers. The learned attention maps of different scales are introduced into the network as a kind of context information. This procedure can avoid the loss of important information in the process of feature extraction. No additional regulatory information is required because the attention map is from the network itself. Second, for different positions, bolts appear in specific positions of the accessories, but due to light blocking and other reasons, the characteristics of these positions are not obvious. In this study, we use the feature map to derive a spatial attention map of the image. Each element in the attention map indicates the degree of similarity between two spatial locations. Then, the attention map is used to combine the features of each position with the global feature. This process enhances the features in similar regions and improves the difference degree between dissimilar areas. Hence, the difference between the bolt and the background is increased, and the detection accuracy of the bolt area is improved.ResultThe method is tested on a typical bolt data set for aerial transmission lines. The typical bolt data set contains 1 483 images of three types of bolts. Each image has a size of approximately 3 000×4 000 pixels. A total of 2 692 targets are labeled, and they include 1 443 normal bolt samples, 670 missing bolt samples, and 579 missing nut bolt samples. The ratio of the training set to the test set is 8:2. The baseline model used in this study is the faster region convolutional neural network(Faster R-CNN) model. Experimental results show that compared with the baseline, the proposed model's mean average precision (mAP) is increased by 0.29% when the multi-scale attention module is added. Normal, missing and missing nut bolts increase by 0.62%, 2.54%, and 0.69%, respectively. After the addition of the spatial attention module, the mAP of the model increases by 0.61%; specifically, the AP of normal bolts increases by 0.3%, that of missing bolts increases by 2.05%, and that of missing nut bolts increases by 0.52%. This result is obtained because several shaded nuts of missing bolts are confused with the nuts of normal bolts, leading to misjudgment. After introducing multi-scale attention and spatial attention at the same time, the model's mAP is increased by 2.21%; the AP of the normal, missing, and missing nut bolts is increased by 0.29%, 5.23%, and 1.10%, respectively. These experimental results prove the effectiveness of the bolt defect detection method for aerial transmission lines based on the dual attention mechanism. This study also conducts visualization experiments, including the establishment of feature maps, model training loss function curve, precision-recall(PR) curve, and bolt defect detection result map, to prove that the proposed method can be applied to feature extraction.ConclusionExperimental results prove that the proposed detection method for aerial transmission line bolt defects based on the dual attention mechanism is effective. The process of supervising feature extraction can ensure that abundant useful information is retained when extracting features. For the bolt defect detection task, increasing the difference between the target and the background can improve the detection accuracy of the target area. The visualization experiments verify that the proposed method can retain abundant useful information in the process of feature extraction. The visualized test examples also prove that the proposed method can effectively avoid the problem of misjudgment in bolt defect detection.关键词:dual attention mechanism;multi-scale;spatial position;bolt defect detection;deep learning132|63|16更新时间:2024-05-07

摘要:ObjectiveIn transmission lines, bolts are widely used as a kind of fasteners to connect various parts of transmission lines and make the overall structure stable and safe. However, bolts are easily damaged because of their complex working environment. The damage or loss of a bolt may cause a large area of transmission line failure, which seriously threatens the safety and stability of the power grid. Bolts are the most common components of transmission lines. Thus, bolt defect detection is an important task in transmission line inspection. Good features are difficult extract because of the complex background, small target, small difference between categories, and loss of gradient information. This study proposes a dual-attention scheme to enhance the visual features of different scales and positions.MethodFirst, for different scales, the network extracts the feature map of each layer, uses the multi-scale attention model to obtain the corresponding attention map, calculates the difference of the attention map for adjacent layers, and adds it to the loss function as a regularization term to enhance the fine features of the bolt area. The trained network continuously reduces the difference in the attention maps of different layers. The learned attention maps of different scales are introduced into the network as a kind of context information. This procedure can avoid the loss of important information in the process of feature extraction. No additional regulatory information is required because the attention map is from the network itself. Second, for different positions, bolts appear in specific positions of the accessories, but due to light blocking and other reasons, the characteristics of these positions are not obvious. In this study, we use the feature map to derive a spatial attention map of the image. Each element in the attention map indicates the degree of similarity between two spatial locations. Then, the attention map is used to combine the features of each position with the global feature. This process enhances the features in similar regions and improves the difference degree between dissimilar areas. Hence, the difference between the bolt and the background is increased, and the detection accuracy of the bolt area is improved.ResultThe method is tested on a typical bolt data set for aerial transmission lines. The typical bolt data set contains 1 483 images of three types of bolts. Each image has a size of approximately 3 000×4 000 pixels. A total of 2 692 targets are labeled, and they include 1 443 normal bolt samples, 670 missing bolt samples, and 579 missing nut bolt samples. The ratio of the training set to the test set is 8:2. The baseline model used in this study is the faster region convolutional neural network(Faster R-CNN) model. Experimental results show that compared with the baseline, the proposed model's mean average precision (mAP) is increased by 0.29% when the multi-scale attention module is added. Normal, missing and missing nut bolts increase by 0.62%, 2.54%, and 0.69%, respectively. After the addition of the spatial attention module, the mAP of the model increases by 0.61%; specifically, the AP of normal bolts increases by 0.3%, that of missing bolts increases by 2.05%, and that of missing nut bolts increases by 0.52%. This result is obtained because several shaded nuts of missing bolts are confused with the nuts of normal bolts, leading to misjudgment. After introducing multi-scale attention and spatial attention at the same time, the model's mAP is increased by 2.21%; the AP of the normal, missing, and missing nut bolts is increased by 0.29%, 5.23%, and 1.10%, respectively. These experimental results prove the effectiveness of the bolt defect detection method for aerial transmission lines based on the dual attention mechanism. This study also conducts visualization experiments, including the establishment of feature maps, model training loss function curve, precision-recall(PR) curve, and bolt defect detection result map, to prove that the proposed method can be applied to feature extraction.ConclusionExperimental results prove that the proposed detection method for aerial transmission line bolt defects based on the dual attention mechanism is effective. The process of supervising feature extraction can ensure that abundant useful information is retained when extracting features. For the bolt defect detection task, increasing the difference between the target and the background can improve the detection accuracy of the target area. The visualization experiments verify that the proposed method can retain abundant useful information in the process of feature extraction. The visualized test examples also prove that the proposed method can effectively avoid the problem of misjudgment in bolt defect detection.关键词:dual attention mechanism;multi-scale;spatial position;bolt defect detection;deep learning132|63|16更新时间:2024-05-07 -

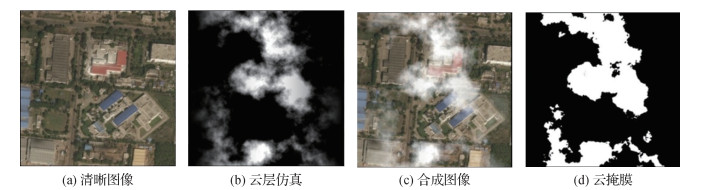

摘要:ObjectivePowerline semantic segmentation of aerial images, as an important content of powerline intelligent inspection research, has received widespread attention. Recently, several deep learning-based methods have been proposed in this field and achieved high accuracy. However, two major problems still need to be solved before deep learning models can be applied in practice. First, the sample size of publicly available datasets is small. Unlike target objects in other semantic segmentation tasks (e.g., cars and buildings), powerlines have few textures and structural features, which make powerlines easy to be misidentified, especially in scenes that are not covered by the training set. Therefore, constructing a training set that contains many different background samples is crucial to improve the generalization capability of the model. The second problem is the conflict between the amount of model computation and the limited terminal computing resources. Previous work has demonstrated that an improved U-Net model can segment powerlines from aerial images with satisfactory accuracy. However, the model is computationally expensive for many resource-constrained inference terminals (e.g., unmanned aerial vehicles(UAVs)).MethodIn this study, the background images in the training set were learned using a generative adversarial network (GAN) to generate a series of pseudo-backgrounds, and curved powerlines were drawn on the generated images by utilizing conic curves. In detail, a multi-scale-based automatic growth model called progressive growing of GANs (PGGAN) was adopted to learn the mapping of a random noise vector to the background images in the training set. Then, its generator was used to generate serials of the background images. These background images and the curved powerlines generated by the conic curves were fused in the alpha channel. We created three training sets. The first one consisted of only 2 000 real background pictures, and the second was a mixture of 10 000 real and generated background images. The third training dataset was composed of 200 generated backgrounds and used to evaluate the similarity between the generated and original images. At the input of the segmentation network, random hue perturbation was applied to the images to enhance the generalization of the model across seasons. Then, the convergence accuracy of U-Net networks with three different loss functions was compared in RGB and grayscale color spaces to determine the best combination. Specifically, we trained U-Net with focal, soft-IoU, and Dice loss functions in RGB and gray spaces and compared the convergence accuracy, convergence speed, and overfitting of the six obtained models. Afterward, sparse regularization was applied to the pre-trained full model, and structured network pruning was performed to reduce the computation load in network inference. A saliency metric that combines first-order Taylor expansion and 2-norm metric was proposed to guide the regularization and pruning process. It provided a higher compression rate compared with the 2-norm that was used in the previous pruning algorithm. Conventional saliency metrics based on first-order expansion can change by orders of magnitude during the regularization process, thus making threshold selection during the iterative process difficult. Compared with these conventional metrics, the proposed metric has a more stable range of values, which enables the use of iteration-based regularization methods. We adopted a 0-norm-based regularization method to widen the saliency gap between important and unimportant neurons. To select the decision threshold, we used an adaptive approach, which was more robust to changes in luminance compared with the fixed-threshold method used in previous work.ResultExperimental results showed that the convergence accuracy of the curved powerline dataset was higher than that of the straight powerline dataset. In RGB space, the hybrid dataset using GAN had higher convergence accuracy than the dataset using only real images, but no significant improvement in gray space was observed due to the possibility of model collapse. We confirmed that hue disturbance can effectively improve the performance of the model across seasons. The experimental results of the different loss functions revealed that the convergence intersection-over-union(IoU) of RGB and gray spaces under their respective optimal loss functions was 0.578 and 0.586, respectively. Dice and soft-IoU had a negligible difference in convergence speed and achieved the best accuracy in gray and RGB spaces, respectively. The convergence of focal loss was the slowest in both spaces, and neither achieved the optimal accuracy. At the pruning stage, by using the conventional 2-norm saliency metric, the proposed gray space lightweight model (IoU of 0.459) reduced the number of floating-point operations per second (FLOPs) and parameters to 3.05% and 0.03% of the full model in RGB space, respectively (IoU of 0.573). When the proposed joint saliency metric was used, the numbers of FLOPs and parameters further decreased to 0.947% and 0.015% of the complete model, respectively, while maintaining an IoU of 0.42. The experiment also showed that the Otsu threshold method worked stably within the appropriate range of illumination changes, and a negligible difference from the optimal threshold was observed.ConclusionImprovements in the dataset and loss function independently enhanced the performance of the baseline model. Sparse regularization and network pruning reduced the network parameters and calculation load, which facilitated the deployment of the model on resource-constrained inferring terminals, such as UAVs. The proposed saliency measure exhibited better compression capabilities than the conventional 2-norm metric, and the adaptive threshold method helped improve the robustness of the model when the luminance changed.关键词:smart inspection;image semantic segmentation;sparse regularization;network pruning;generated adversarial network (GAN)86|76|4更新时间:2024-05-07

摘要:ObjectivePowerline semantic segmentation of aerial images, as an important content of powerline intelligent inspection research, has received widespread attention. Recently, several deep learning-based methods have been proposed in this field and achieved high accuracy. However, two major problems still need to be solved before deep learning models can be applied in practice. First, the sample size of publicly available datasets is small. Unlike target objects in other semantic segmentation tasks (e.g., cars and buildings), powerlines have few textures and structural features, which make powerlines easy to be misidentified, especially in scenes that are not covered by the training set. Therefore, constructing a training set that contains many different background samples is crucial to improve the generalization capability of the model. The second problem is the conflict between the amount of model computation and the limited terminal computing resources. Previous work has demonstrated that an improved U-Net model can segment powerlines from aerial images with satisfactory accuracy. However, the model is computationally expensive for many resource-constrained inference terminals (e.g., unmanned aerial vehicles(UAVs)).MethodIn this study, the background images in the training set were learned using a generative adversarial network (GAN) to generate a series of pseudo-backgrounds, and curved powerlines were drawn on the generated images by utilizing conic curves. In detail, a multi-scale-based automatic growth model called progressive growing of GANs (PGGAN) was adopted to learn the mapping of a random noise vector to the background images in the training set. Then, its generator was used to generate serials of the background images. These background images and the curved powerlines generated by the conic curves were fused in the alpha channel. We created three training sets. The first one consisted of only 2 000 real background pictures, and the second was a mixture of 10 000 real and generated background images. The third training dataset was composed of 200 generated backgrounds and used to evaluate the similarity between the generated and original images. At the input of the segmentation network, random hue perturbation was applied to the images to enhance the generalization of the model across seasons. Then, the convergence accuracy of U-Net networks with three different loss functions was compared in RGB and grayscale color spaces to determine the best combination. Specifically, we trained U-Net with focal, soft-IoU, and Dice loss functions in RGB and gray spaces and compared the convergence accuracy, convergence speed, and overfitting of the six obtained models. Afterward, sparse regularization was applied to the pre-trained full model, and structured network pruning was performed to reduce the computation load in network inference. A saliency metric that combines first-order Taylor expansion and 2-norm metric was proposed to guide the regularization and pruning process. It provided a higher compression rate compared with the 2-norm that was used in the previous pruning algorithm. Conventional saliency metrics based on first-order expansion can change by orders of magnitude during the regularization process, thus making threshold selection during the iterative process difficult. Compared with these conventional metrics, the proposed metric has a more stable range of values, which enables the use of iteration-based regularization methods. We adopted a 0-norm-based regularization method to widen the saliency gap between important and unimportant neurons. To select the decision threshold, we used an adaptive approach, which was more robust to changes in luminance compared with the fixed-threshold method used in previous work.ResultExperimental results showed that the convergence accuracy of the curved powerline dataset was higher than that of the straight powerline dataset. In RGB space, the hybrid dataset using GAN had higher convergence accuracy than the dataset using only real images, but no significant improvement in gray space was observed due to the possibility of model collapse. We confirmed that hue disturbance can effectively improve the performance of the model across seasons. The experimental results of the different loss functions revealed that the convergence intersection-over-union(IoU) of RGB and gray spaces under their respective optimal loss functions was 0.578 and 0.586, respectively. Dice and soft-IoU had a negligible difference in convergence speed and achieved the best accuracy in gray and RGB spaces, respectively. The convergence of focal loss was the slowest in both spaces, and neither achieved the optimal accuracy. At the pruning stage, by using the conventional 2-norm saliency metric, the proposed gray space lightweight model (IoU of 0.459) reduced the number of floating-point operations per second (FLOPs) and parameters to 3.05% and 0.03% of the full model in RGB space, respectively (IoU of 0.573). When the proposed joint saliency metric was used, the numbers of FLOPs and parameters further decreased to 0.947% and 0.015% of the complete model, respectively, while maintaining an IoU of 0.42. The experiment also showed that the Otsu threshold method worked stably within the appropriate range of illumination changes, and a negligible difference from the optimal threshold was observed.ConclusionImprovements in the dataset and loss function independently enhanced the performance of the baseline model. Sparse regularization and network pruning reduced the network parameters and calculation load, which facilitated the deployment of the model on resource-constrained inferring terminals, such as UAVs. The proposed saliency measure exhibited better compression capabilities than the conventional 2-norm metric, and the adaptive threshold method helped improve the robustness of the model when the luminance changed.关键词:smart inspection;image semantic segmentation;sparse regularization;network pruning;generated adversarial network (GAN)86|76|4更新时间:2024-05-07

Frontier Technology of Power Computer Vision

-

摘要:Although the current intelligent vision system has certain advantages in feature detection, the extraction and matching of large-scale visual information and the cognition of deep-seated visual information remain uncertain and fragile. How to mine and understand the connotation of visual information efficiently, and make cognitive decisions is an engaging research field in computer vision. Especially for the visual cognitive task based on visual perception, the related mathematical logic and image processing methods have not achieved a qualitative breakthrough at present due to limitations by the western philosophy system. It makes the development of computer vision processing intelligent algorithm enter a bottleneck period and completely replacing human to perform more complex operations such as understanding, reasoning, decision making, and learning difficult. The basic framework of hybrid enhanced visual cognition and the application fields and key technologies that can be included in the framework to promote the development of intelligent visual perception and cognitive technology based on the application status of hybrid enhanced intelligence in the field of visual cognition are summarized in this paper. First, on the basis of analyzing the connotation and basic category of intelligent visual perception, human visual perception and psychological cognition are integrated; the definition, category, and deepening of hybrid enhanced visual cognition are discussed; different visual information processing stages are compared and analyzed; and then the basic framework of hybrid enhanced visual cognition on analyzing the development status of relevant cognitive models is constructed. The framework can rely on intelligent algorithms for rapid detection, recognition, understanding, and other processing to maximize the computational potential of "machine"; can effectively enhance the accuracy and reliability of system cognition with timely, appropriate artificial reasoning, prediction, and decision making; and give full play to human cognitive advantages. Second, the representative applications and existing problems of the framework are discussed from four fields, namely, hybrid enhanced visual monitoring, hybrid enhanced visual driving, hybrid enhanced visual decision making, and hybrid enhanced visual sharing, and the hybrid enhanced visual cognitive framework is identified as an expedient measure to enhance computer efficiency and reduce the pressure on people to process information under existing technical conditions. Then, based on high, medium, and low computer vision processing technology systems, the macro and micro relationships of several medium- and high-level visual processing technologies in a hybrid enhanced visual cognition framework are analyzed, focusing on key technologies such as visual analysis, visual enhancement, visual attention, visual understanding, visual reasoning, interactive learning, and cognitive evaluation. This framework will help break through the bottleneck of "weak artificial intelligence" in current visual information cognition and effectively promote the further development of intelligent vision system toward the direction of human-computer deep integration. Next, more indepth research must be carried out on pure basic innovation, efficient human-computer interaction, and flexible connection path.关键词:visual cognition;visual perception;intelligent visual perception;hybrid enhanced visual cognition;man-machine fusion111|231|1更新时间:2024-05-07

摘要:Although the current intelligent vision system has certain advantages in feature detection, the extraction and matching of large-scale visual information and the cognition of deep-seated visual information remain uncertain and fragile. How to mine and understand the connotation of visual information efficiently, and make cognitive decisions is an engaging research field in computer vision. Especially for the visual cognitive task based on visual perception, the related mathematical logic and image processing methods have not achieved a qualitative breakthrough at present due to limitations by the western philosophy system. It makes the development of computer vision processing intelligent algorithm enter a bottleneck period and completely replacing human to perform more complex operations such as understanding, reasoning, decision making, and learning difficult. The basic framework of hybrid enhanced visual cognition and the application fields and key technologies that can be included in the framework to promote the development of intelligent visual perception and cognitive technology based on the application status of hybrid enhanced intelligence in the field of visual cognition are summarized in this paper. First, on the basis of analyzing the connotation and basic category of intelligent visual perception, human visual perception and psychological cognition are integrated; the definition, category, and deepening of hybrid enhanced visual cognition are discussed; different visual information processing stages are compared and analyzed; and then the basic framework of hybrid enhanced visual cognition on analyzing the development status of relevant cognitive models is constructed. The framework can rely on intelligent algorithms for rapid detection, recognition, understanding, and other processing to maximize the computational potential of "machine"; can effectively enhance the accuracy and reliability of system cognition with timely, appropriate artificial reasoning, prediction, and decision making; and give full play to human cognitive advantages. Second, the representative applications and existing problems of the framework are discussed from four fields, namely, hybrid enhanced visual monitoring, hybrid enhanced visual driving, hybrid enhanced visual decision making, and hybrid enhanced visual sharing, and the hybrid enhanced visual cognitive framework is identified as an expedient measure to enhance computer efficiency and reduce the pressure on people to process information under existing technical conditions. Then, based on high, medium, and low computer vision processing technology systems, the macro and micro relationships of several medium- and high-level visual processing technologies in a hybrid enhanced visual cognition framework are analyzed, focusing on key technologies such as visual analysis, visual enhancement, visual attention, visual understanding, visual reasoning, interactive learning, and cognitive evaluation. This framework will help break through the bottleneck of "weak artificial intelligence" in current visual information cognition and effectively promote the further development of intelligent vision system toward the direction of human-computer deep integration. Next, more indepth research must be carried out on pure basic innovation, efficient human-computer interaction, and flexible connection path.关键词:visual cognition;visual perception;intelligent visual perception;hybrid enhanced visual cognition;man-machine fusion111|231|1更新时间:2024-05-07 -

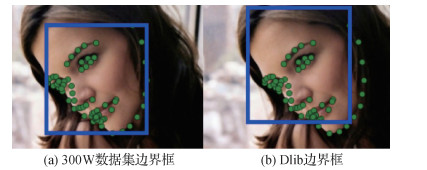

摘要:Face feature point location is to locate the predefined key facial feature points automatically according to the physiological characteristics of the human face, such as eyes, nose tip, mouth corner, and face contour. It is one of the important problems in face registration, face recognition, 3D face reconstruction, craniofacial analysis, craniofacial registration, and many other related fields. In recent years, various algorithms for facial feature point localization have emerged constantly, but several problems remain in the calibration of feature points, especially in the calibration of 3D facial feature points, such as manual intervention, low or inaccurate number of feature points, and long calibration time. In recent years, convolutional neural networks have been widely used in face feature point detection. This study focuses on the analysis of automatic feature point location methods based on deep learning for 2D and 3D facial data. Training data with real feature point labels in 2D texture image data are abundant. The research of automatic location method of 2D facial feature points based on deep learning is relatively extensive and indepth. The classical methods for 2D data include cascade convolution neural network methods, end-to-end regression methods, auto encoder network methods, different pose estimation methods, and other improved convolutional neural network (CNN) methods. In cascaded regression methods, rough detection is performed first, and then the feature points are finetuned. The end-to-end method propagates the error between the real results and the predicted results until the model converges. Autoencoder methods can select features automatically through encoding and decoding. Head pose estimation has great importance for face feature point detection because image-based methods are always affected by illumination and pose.Head pose estimation and feature points detection is improved by modifying network structure and loss function. The disadvantage of cascade regression method is that it can update the regressor by independent learning, and the descent direction may cancel each other. The flexibility of the end-to-end model is low. CNN is applied to 2D training data with real feature point tags. However, in the case of a 3D, training data with rich real feature point labels are lacking. Therefore, compared with 2D facial feature points, 3D facial feature point location remains a challenge. Several automatic feature point location for 3D data are introduced. The methods for 3D data are mainly based on depth information and 3D morphable model (3DMM). In recent years, with the development of RGB+depth map (RGBD) technology, depth data have attracted more attention. Feature point detection based on depth information has become an important preprocessing step for automatic feature point detection in 3D data. Initialization is crucial for deep data, but information is easily lost. The method based on 3DMM represents 3D face data for locating feature points through deep learning. On the one hand, the shape and expression parameters of 3DMM are highly nonlinear with the image texture information, which makes image mapping difficult to estimate. Compared with 2D face data, 3D face data lack training data with remarkable changes in face shape, race, and expression. Face feature point detection still faces great challenges.In summary, this study explains the meaning of automatic location of facial feature points, summarizes the currently open and commonly used face datasets, introduces various methods of automatic location of feature points for 2D and 3D data, summarizes the research status and application of each domestic and international method, analyzes the problems and development trend of automatic location technology of face feature points in deep learning application on 2D and 3D datasets, and compares the experimental results of the latest methods. In conclusion, the research on automatic location method of 2D face feature points based on deep learning is relatively indepth. Challenges in processing 3D data remain. The current solution for locating feature points is to project 3D face data onto 2D images through cylindrical coordinates, depth maps, 3DMM, and other methods. Information loss is the main problem of these methods. The method of feature point location directly on 3D model needs further exploration and research. The accuracy and speed of feature point location also need to be improved. In the future, 3D facial feature point localization methods based on deep learning will gradually become a trend.关键词:deep learning;2D facial feature point location;3D facial feature point location;convolutional neural network (CNN);registration72|44|0更新时间:2024-05-07

摘要:Face feature point location is to locate the predefined key facial feature points automatically according to the physiological characteristics of the human face, such as eyes, nose tip, mouth corner, and face contour. It is one of the important problems in face registration, face recognition, 3D face reconstruction, craniofacial analysis, craniofacial registration, and many other related fields. In recent years, various algorithms for facial feature point localization have emerged constantly, but several problems remain in the calibration of feature points, especially in the calibration of 3D facial feature points, such as manual intervention, low or inaccurate number of feature points, and long calibration time. In recent years, convolutional neural networks have been widely used in face feature point detection. This study focuses on the analysis of automatic feature point location methods based on deep learning for 2D and 3D facial data. Training data with real feature point labels in 2D texture image data are abundant. The research of automatic location method of 2D facial feature points based on deep learning is relatively extensive and indepth. The classical methods for 2D data include cascade convolution neural network methods, end-to-end regression methods, auto encoder network methods, different pose estimation methods, and other improved convolutional neural network (CNN) methods. In cascaded regression methods, rough detection is performed first, and then the feature points are finetuned. The end-to-end method propagates the error between the real results and the predicted results until the model converges. Autoencoder methods can select features automatically through encoding and decoding. Head pose estimation has great importance for face feature point detection because image-based methods are always affected by illumination and pose.Head pose estimation and feature points detection is improved by modifying network structure and loss function. The disadvantage of cascade regression method is that it can update the regressor by independent learning, and the descent direction may cancel each other. The flexibility of the end-to-end model is low. CNN is applied to 2D training data with real feature point tags. However, in the case of a 3D, training data with rich real feature point labels are lacking. Therefore, compared with 2D facial feature points, 3D facial feature point location remains a challenge. Several automatic feature point location for 3D data are introduced. The methods for 3D data are mainly based on depth information and 3D morphable model (3DMM). In recent years, with the development of RGB+depth map (RGBD) technology, depth data have attracted more attention. Feature point detection based on depth information has become an important preprocessing step for automatic feature point detection in 3D data. Initialization is crucial for deep data, but information is easily lost. The method based on 3DMM represents 3D face data for locating feature points through deep learning. On the one hand, the shape and expression parameters of 3DMM are highly nonlinear with the image texture information, which makes image mapping difficult to estimate. Compared with 2D face data, 3D face data lack training data with remarkable changes in face shape, race, and expression. Face feature point detection still faces great challenges.In summary, this study explains the meaning of automatic location of facial feature points, summarizes the currently open and commonly used face datasets, introduces various methods of automatic location of feature points for 2D and 3D data, summarizes the research status and application of each domestic and international method, analyzes the problems and development trend of automatic location technology of face feature points in deep learning application on 2D and 3D datasets, and compares the experimental results of the latest methods. In conclusion, the research on automatic location method of 2D face feature points based on deep learning is relatively indepth. Challenges in processing 3D data remain. The current solution for locating feature points is to project 3D face data onto 2D images through cylindrical coordinates, depth maps, 3DMM, and other methods. Information loss is the main problem of these methods. The method of feature point location directly on 3D model needs further exploration and research. The accuracy and speed of feature point location also need to be improved. In the future, 3D facial feature point localization methods based on deep learning will gradually become a trend.关键词:deep learning;2D facial feature point location;3D facial feature point location;convolutional neural network (CNN);registration72|44|0更新时间:2024-05-07

Review

-