Latest Issue

Volume 26, Issue 10, 2021

-

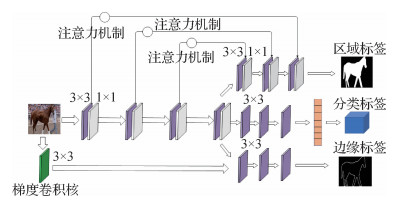

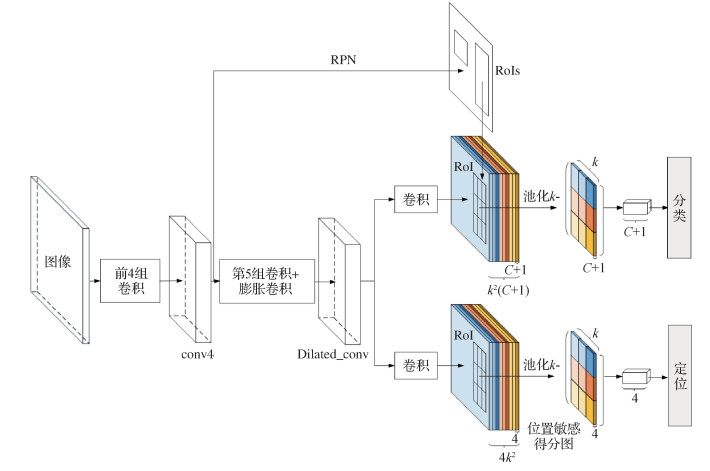

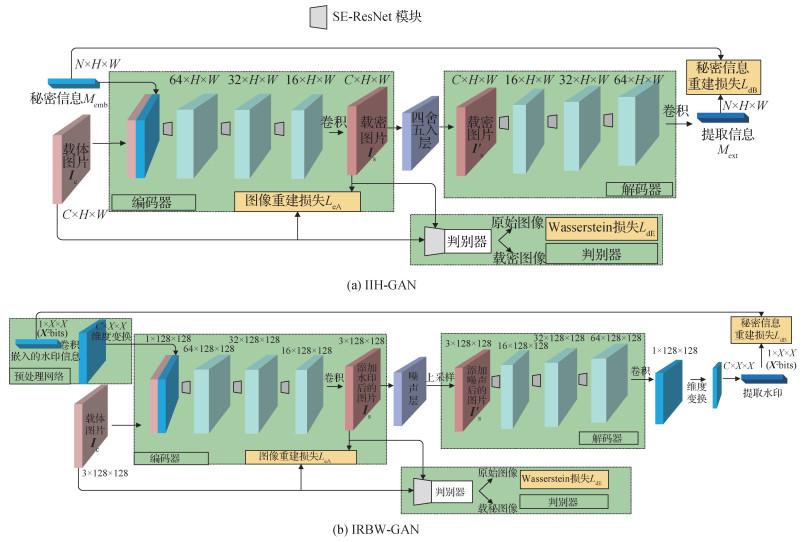

Abstract: Image classification (IC) is one of the fundamental tasks in computer vision. Traditional image classification methods have notable limitations. With the recent development of artificial intelligence (AI), deep learning based on deep convolutional neural networks (DCNNs) has matured, and the performance of image classification has improved accordingly. This paper gives a comprehensive overview of DCNN-based image classification, organized around the structure of the DCNN models. First, the modeling methods are analyzed and summarized. The DCNN models are grouped into four categories: 1) classic deep convolutional neural networks; 2) deep convolutional neural networks based on the attention mechanism; 3) lightweight networks; 4) neural architecture search methods. DCNNs use convolution to extract effective image features and automatically learn feature representations from large numbers of samples. As DCNNs have been made deeper, the more effective features they extract have led to better classification performance. However, DCNNs encounter difficulties such as overfitting, vanishing gradients, and very large numbers of model parameters, so they have become increasingly difficult to optimize. Researchers have proposed different DCNN models for different problems. Starting with AlexNet, researchers made networks deeper than before. Subsequently, models such as network in network (NIN), Overfeat, ZFNet, VGGNet (Visual Geometry Group), and GoogLeNet were proposed. As networks deepened, the vanishing gradient problem intensified and optimization became more complicated. Researchers proposed the residual network (ResNet) to ease vanishing gradients, which greatly improved image classification performance. To further improve ResNet, researchers proposed a series of variants, which can be divided into three categories according to their approach: variants based on optimizing very deep ResNets, variants based on increasing width, and variants that introduce new dimensions. The success of ResNet has been attributed to its use of shortcut connections. The densely connected convolutional network (DenseNet) was then proposed to maximize the information flow between layers. To promote information flow between layers still further, DenseNet variants such as the dual path network (DPN) and CliqueNet were proposed. DCNNs based on the attention mechanism build on the classic DCNN models to focus on regions of interest, and can be categorized into channel attention, spatial attention, and layer attention mechanisms. DCNNs need high accuracy together with few parameters and fast computation, so researchers have proposed lightweight networks such as the ShuffleNet series and the MobileNet series. Neural architecture search (NAS) methods, which use neural networks to design neural networks automatically, have also attracted attention. NAS methods can be divided into three categories: search space design, model optimization, and others. Second, the commonly used image classification datasets are introduced, including the MNIST (modified NIST), ImageNet, CIFAR, and SVHN (street view house number) datasets, and the experimental results of the various models are compared and analyzed. Accuracy, number of parameters, and FLOPs (floating point operations) are used to measure classification results. Model optimization has improved steadily, as shown by higher classification accuracy, fewer model parameters, and faster training and inference. Finally, DCNN models are still constrained by several factors. DCNN models for image classification mainly rely on supervised deep learning and are therefore limited by the quality and scale of the datasets. The speed and resource consumption of DCNN models on mobile devices still need improvement. How to measure and analyze the advantages and disadvantages of DCNN models needs further study. Neural architecture search will be a development direction for future DCNN model design. In summary, DCNN models for image classification are reviewed and their experimental results are presented.
Keywords: deep learning; image classification (IC); deep convolutional neural networks (DCNN); model structure; model optimization
429|291|35 Updated: 2024-05-07
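The abstract above names parameter count and FLOPs as measures alongside accuracy, and lists lightweight networks such as MobileNet. As a rough illustration only, the sketch below counts parameters and multiply-accumulate operations for one standard convolution layer and compares it with a depthwise separable convolution of the kind MobileNet uses. The function names and layer sizes are hypothetical, and counting one multiply-accumulate as one operation is an assumption; papers differ on this convention.

```python
# Minimal sketch: parameter and operation counts for a single convolution layer.
# All function names and the example layer sizes are illustrative assumptions.

def conv2d_params(c_in, c_out, k, bias=True):
    """Learnable parameters of a standard k x k convolution."""
    return c_out * (c_in * k * k + (1 if bias else 0))


def conv2d_macs(c_in, c_out, k, h_out, w_out):
    """Multiply-accumulate operations for one forward pass (bias ignored)."""
    return c_in * k * k * c_out * h_out * w_out


def depthwise_separable_params(c_in, c_out, k, bias=True):
    """Parameters of a k x k depthwise convolution followed by a 1 x 1 pointwise one."""
    b = 1 if bias else 0
    depthwise = c_in * (k * k + b)   # one k x k filter per input channel
    pointwise = c_out * (c_in + b)   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    # Hypothetical layer: 64 -> 128 channels, 3 x 3 kernel, 56 x 56 output map.
    print("standard conv params:", conv2d_params(64, 128, 3))
    print("separable conv params:", depthwise_separable_params(64, 128, 3))
    print("standard conv MACs:", conv2d_macs(64, 128, 3, 56, 56))
```

For this hypothetical layer the separable form needs roughly one eighth of the parameters, which is the kind of saving the lightweight networks aim for.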

-

Abstract: This is an overview of the annual survey series of bibliographies on image engineering in China over the past 25 years. Images are an important medium through which human beings observe information about the real world around them. In its general sense, the word "image" can include all entities that can be visualized by human eyes, such as a still image or picture, a video clip, graphics, animations, cartoons, charts, drawings, paintings, and even text. Nowadays, with the progress of information science and society, "image" rather than "picture" is used because computers store numerical images of a picture or scene. Image techniques are those techniques that have been invented, designed, implemented, developed, and utilized to treat various types of images for different and specified purposes. They are expanding into ever wider application areas and have attracted more and more attention in recent years, with the fast advances in mathematical theories and physical principles as well as the progress of computers and electronic devices. Image engineering (IE) is an integrated discipline comprising the study of all the different branches of image techniques; it was formally proposed and defined 25 years ago to cover the whole domain. From a perspective oriented toward techniques for treating images, image engineering can be regarded as the collection of three related and partially overlapping groups of image techniques: image processing (IP) techniques (in the narrow sense), image analysis (IA) techniques, and image understanding (IU) techniques. In a structural sense, IP, IA, and IU form three interconnected layers of IE. The three layers follow a progression of increasing abstractness and of decreasing compactness from IP to IA to IU. Each of them operates on different elements (IP's operand is the pixel, IA's operand is the object, and IU's operand is the symbol) and works at a different semantic level (low level for IP, middle level for IA, and high level for IU). Image engineering is evolving very quickly, and its advances are closely related to the development of biomedical engineering, office automation, industrial inspection, intelligent transportation, remote sensing, surveying and mapping, and telecommunications. To follow the development and record the progress of image engineering, a bibliography series was started when image engineering was proposed 25 years ago, and this work has continued every year since. From a set of carefully selected journals, and after thorough reading of the papers published, several hundred papers related to image engineering are chosen each year for further classification and statistical analysis. The motivation and purpose of this work are threefold: 1) to enable the large number of scientific and technical personnel engaged in image engineering research and image technology applications to grasp the current status of image engineering research and development; 2) to help them search for relevant literature in a targeted manner; 3) to provide useful information for journal editors and authors. Based on these three points, statistics and analysis of the previous year's literature related to image engineering are carried out every year. In particular, three tasks are conducted. 1) Forming a classification scheme for the literature. The coverage of research and technology in image engineering is quite large. For the analysis to be general for the whole domain and specific for particular directions, a two-level classification scheme is formed. The top level covers the general research domains (main classes), and the bottom level covers the specific research directions (sub-classes). With the development of technology, the sub-classes have been adjusted and increased, from the initial 18 to the current 23. 2) Analyzing the statistics for the main classes. This analysis provides a general picture of the different research domains over the years. 3) Analyzing the statistics for the sub-classes. This analysis provides a specific picture of the different research directions in the current year. In the past 25 years, a total of 2 964 issues of 15 major Chinese journals on image engineering have been selected for this annual survey series. From the 65 014 academic research and technical application papers published in these issues, 15 850 papers in the field of image engineering have been chosen and classified according to their contents into five broad classes: (A) image processing, (B) image analysis, (C) image understanding, (D) technology application, and (E) review. They are further divided into 23 professional sub-classes, in brief: (A1) image capturing; (A2) image reconstruction from projections; (A3) image filtering, transformation, enhancement, restoration; (A4) image and/or video coding; (A5) image safety and security; (A6) image multiple-resolutions; (B1) image segmentation; (B2) representation, description, and measurement of objects; (B3) analysis of color, shape, texture, structure, motion, spatial relation; (B4) object extraction, tracking, and recognition; (B5) human biometrics (face, organ, etc.) identification; (C1) image registration, matching and fusion; (C2) 3-D modeling and real world/scene recovery; (C3) image perception, interpretation and reasoning; (C4) content-based image and video retrieval; (C5) spatial-temporal technology; (D1) system and hardware; (D2) telecommunication application; (D3) document application; (D4) bio-medical imaging and applications; (D5) remote sensing, radar, surveying and mapping; (D6) other application domains; and (E1) cross-category summary and survey. In this paper, the classified data for all 25 years are integrated and analyzed. The main intention is to show the progression of image engineering and to provide a vivid picture of its current state. An overview of the literature survey series on image engineering made over the last 25 years is given. The idea behind the survey, as well as a thorough summary of the statistics obtained, is illustrated and discussed. Much useful information regarding the rapid progress of image engineering can be obtained. According to the statistics collected and the analyses performed, the field of image engineering has changed enormously in recent years. Techniques for image engineering are being developed, implemented, and utilized on a scale that no one would have predicted a few years ago. One interesting point is that the fast growth and relative increase of image analysis publications over image processing publications are clearly observed. This indicates a general tendency of image engineering toward higher layers. After image analysis, image understanding will catch up from behind. This trend has already appeared: for example, at the International Conference on Image Processing (ICIP 2017), there were many more new research papers on the topic of "Image & Video Interpretation & Understanding" than on the topic of "Image & Video Analysis" (http://2017.ieeeicip.org/). In addition to the statistical information on publications in these 25 years, some main research directions are analyzed and discussed, especially those in image processing, image analysis, and image understanding, to present more comprehensive and credible information on the development trends of each technique class. Some insights are also pointed out and discussed. The statistical analysis of papers published in important image engineering journals not only helps people understand the general situation of research and applications but also provides a scientific basis for the development of relevant disciplines and research strategies.
Keywords: image engineering (IE); image processing (IP); image analysis (IA); image understanding (IU); technique application (TA); literature survey; literature classification; bibliometrics
54|88|3 Updated: 2024-05-07


Review

-

Abstract: Objective: Maritime activities are important to human society because they influence economic and social development. Maintaining marine safety is therefore of great significance for social stability. As the main vehicle of marine activities, numerous ships of varying types cruise the sea daily. The fine-grained classification of ships has become one of the basic technologies for marine surveillance. With the development of remote sensing technology, satellite optical remote sensing is becoming one of the main means of marine surveillance due to its wide coverage, low acquisition cost, reliability, and real-time monitoring. As a result, ship classification in optical remote sensing images has attracted the attention of researchers. For image target recognition in computer vision, deep learning-based methods outperform traditional methods based on handcrafted features because of the powerful representation ability of convolutional neural networks. It is therefore natural to apply deep learning to ship classification in optical remote sensing images. However, most deep learning-based algorithms are data-driven and rely on well-annotated large-scale datasets. To date, public optical remote sensing ship classification datasets contain little data and few target categories, so they cannot meet the requirements of research on deep learning-based ship classification, especially fine-grained ship classification. Method: On the basis of the above analysis and requirements, a fine-grained ship collection named FGSC-23, built from high-resolution optical remote sensing images, is established in this study. FGSC-23 contains a total of 4 052 instance chips in 23 target categories, comprising 22 ship categories and 1 category of negative samples. All the images are obtained from public Google Earth images and the GF-2 satellite, and the ship chips are cut from these images. All the ships are labeled by human interpretation. In addition to the category labels, the ship aspect ratio and the angle between the ship's central axis and the image's horizontal axis are annotated. To our knowledge, FGSC-23 contains more fine-grained ship categories than the existing public datasets, so it can be used for fine-grained ship classification research. Overall, FGSC-23 is characterized by category diversity, imaging scene diversity, instance label diversity, and category imbalance. Result: Experiments are conducted on FGSC-23 to test the classification accuracy of classical convolutional neural networks. FGSC-23 is divided into a testing set and a training set with a ratio of about 1:4. Models including VGG16 (Visual Geometry Group 16-layer net), ResNet50, Inception-v3, DenseNet121, MobileNet, and Xception are trained on the training set and tested on the testing set. The accuracy of each category and the overall accuracy are recorded, and the confusion matrices of the classification results are visualized. The overall accuracies of these models are 79.88%, 81.33%, 83.88%, 84.00%, 84.24%, and 87.76%, respectively. Besides these basic classification models, an optimized model that uses the ship's attribute features and enhanced multi-level local features is also tested on FGSC-23 and achieves a state-of-the-art accuracy of 93.58%. Conclusion: The experimental results show that FGSC-23 can be used to verify the effectiveness of deep learning-based ship classification methods for optical remote sensing images. It can also help promote the development of related research.
Keywords: optical remote sensing image; ship; fine-grained recognition; dataset; deep learning
546|312|3 Updated: 2024-05-07
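The evaluation described above reports per-category accuracy, overall accuracy, and confusion matrices. The following is a minimal sketch of how these three quantities relate, using a hypothetical three-class toy example. The labels, predictions, and function names are illustrative assumptions and are not taken from FGSC-23 or from the authors' code.

```python
# Minimal sketch: confusion matrix, per-class accuracy, and overall accuracy.
# The toy labels below are hypothetical and unrelated to the FGSC-23 data.

def confusion_matrix(y_true, y_pred, num_classes):
    """Rows index the true class, columns index the predicted class."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


def per_class_accuracy(matrix):
    """Fraction of each true class that was predicted correctly."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(matrix)]


def overall_accuracy(matrix):
    """Correct predictions (the diagonal) divided by all predictions."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total


if __name__ == "__main__":
    y_true = [0, 0, 0, 1, 1, 2, 2, 2, 2, 2]
    y_pred = [0, 0, 1, 1, 1, 2, 2, 2, 0, 2]
    cm = confusion_matrix(y_true, y_pred, num_classes=3)
    print(cm)
    print(per_class_accuracy(cm))
    print(overall_accuracy(cm))
```

With imbalanced categories, which the abstract lists as a property of the dataset, overall accuracy is dominated by the larger classes, so per-category accuracy is worth reading alongside it.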


Dataset

-

Abstract: Objective: Image-to-image translation is the automated conversion of an input image into a corresponding output image that differs in characteristics such as color and style. Examples include converting a photograph to a sketch or a visible image to a semantic label map. Translation has various applications in computer vision, such as facial recognition, person identification, and image dehazing. In 2014, Goodfellow et al. proposed an image generation model based on generative adversarial networks (GANs). This algorithm uses a loss function to classify output images as authentic or fabricated while simultaneously training a generative model to minimize that loss. GANs have achieved impressive image generation results using this adversarial loss. For example, the image-to-image translation framework Pix2Pix was developed using a GAN architecture. Pix2Pix learns a conditional generative model from input-output image pairs, which makes it more suitable for translation tasks. In addition, U-Nets have often been used as generator networks in place of conventional decoders. While Pix2Pix provides a robust framework for image translation, acquiring sufficient quantities of paired input-output training data can be challenging. To solve this problem, cycle-consistent adversarial networks (CycleGANs) were developed by adding an inverse mapping and a cycle consistency loss to enforce the relationship between generated and input images. In addition, ResNets have been used as generators to enhance translated image quality. Pix2PixHD offers high-resolution (2048×1024 pixels) output using a modified multiscale generator network that includes an instance map in the training step. Although these algorithms have been used effectively for image-to-image translation and a variety of related applications, they typically adopt U-Net or ResNet generators. These single-structure networks struggle to maintain high performance across multiple evaluation indicators.
As such, this study presents a novel parallel stream-based generator network to increase robustness across multiple evaluation indicators. Unlike previous models, this model consists of two entirely different convolutional neural network (CNN) structures. The translated visible images output by the two streams are fused with a linear interpolation-based fusion method, which allows the parameters of both streams to be optimized simultaneously. Method: The proposed parallel generator network consists of one ResNet processing stream and one DenseNet processing stream, which are fused in parallel. The ResNet stream includes down-sampling and nine Res-Unit feature extraction networks. Each Res-Unit is a feedforward block with an elementwise-addition shortcut that skips two convolution layers. Similarly, the DenseNet stream includes down-sampling and nine Den-Unit feature extraction networks. Every Den-Unit is composed of three convolutional layers and two concatenation layers, so each Den-Unit outputs a concatenation of the deep feature maps produced by all three convolutional layers. To exploit the advantages of both the ResNet and DenseNet streams, the two generated images are segmented into low- and high-intensity parts with an optimal intensity threshold. Then, a linear interpolation method is proposed to fuse the segmented output images of the two generator streams in the R, G, and B channels, respectively. We also design an intensity threshold objective function to obtain optimal parameters during generator training.
In addition, to avoid overfitting when training on a small dataset, we modify the discriminator structure to include four convolution-dropout pairs and a convolution layer. Result: We compared our model with six state-of-the-art image translation models, namely CRN (cascaded refinement networks), SIMS (semi-parametric image synthesis), Pix2Pix (pixel to pixel), CycleGAN (cycle generative adversarial networks), MUNIT (multimodal unsupervised image-to-image translation), and GauGAN (group adaptive normalization generative adversarial networks), on a public dataset named "AAU (Aalborg University) RainSnow Traffic Surveillance Dataset". The experimental dataset, which was composed of 22 five-minute video sequences acquired from traffic intersections in the Danish cities of Aalborg and Viborg, was used for testing purposes. This dataset was collected at seven different locations with a conventional RGB camera and a thermal camera, each with a resolution of 640×480 pixels, at 20 frames per second. The total experimental dataset consisted of 2100 RGB-IR image pairs, and each scene was randomly divided into training and test sets at an 80%-20% ratio. Multi-perspective evaluation results were acquired using the mean square error (MSE), structural similarity index (SSIM), gray intensity histogram correlation, and Bhattacharyya distance. The advantages of a parallel stream-based generator network were assessed by comparing the proposed parallel generator with a ResNet, a DenseNet, and a hybrid residual dense network (RDN). We evaluated the average MSE and SSIM values on the test data produced by the four generators (ParaNet, ResNet, DenseNet, and RDN). The proposed method achieved an average MSE of 34.8358, which was lower than that of the ResNet, DenseNet, and hybrid RDN networks. Likewise, the average SSIM produced by the proposed method was 0.7477, which was higher than that of DenseNet, ResNet, and RDN.
This result shows that the proposed parallel structure-based network produced more effective fusion results than the residual dense block (RDB)-based hybrid network structure. Moreover, comparative experiments demonstrated that the parallel generator structure improves robustness across multi-perspective evaluations for infrared-to-visible image translation. Compared with the six conventional methods, the MSE (lower is better) decreased by at least 22.30%, and the SSIM (higher is better) increased by at least 8.55%. The experimental results show that the proposed parallel generator network-based infrared-to-visible image translation model achieves high performance in terms of MSE and SSIM compared with conventional deep learning models such as CRN, SIMS, Pix2Pix, CycleGAN, MUNIT, and GauGAN. Conclusion: A novel parallel stream architecture-based generator network was proposed for infrared-to-visible image translation. Unlike conventional models, the proposed parallel generator structure consists of two different network architectures: a ResNet and a DenseNet. Parallel linear combination-based fusion allowed the model to incorporate the benefits of both networks simultaneously. The structure of the discriminator networks used in the conditional GAN framework was also improved for training and for identifying optimal ParaNet parameters. The experimental results showed that combining different networks led to improvements in common assessment metrics: the proposed parallel generator network outperformed existing models in MSE, SSIM, and intensity histogram similarity. In the future, this algorithm will be applied to image dehazing.
Keywords: modal translation; ResNet; DenseNet; linear interpolation fusion; parallel generator network
Updated: 2024-05-07
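The fusion step described in this abstract (split by an intensity threshold, then interpolate linearly per R, G, B channel) and the MSE metric can be sketched in plain Python as below. This is only one possible reading: the abstract does not give the fusion formula, so the thresholding rule (mean of the two stream values), the weights `w_low` and `w_high`, and the function names `fuse_pixel`, `fuse_images`, and `mse` are all assumptions made for illustration, not the authors' implementation.

```python
def fuse_pixel(res_px, den_px, threshold, w_low, w_high):
    """Blend one RGB pixel from each stream, channel by channel.

    Assumption: a channel counts as "low intensity" when the mean of the
    two stream values is below `threshold`; the source does not state the rule.
    """
    fused = []
    for r, d in zip(res_px, den_px):
        w = w_low if (r + d) / 2 < threshold else w_high
        fused.append(w * r + (1 - w) * d)  # linear interpolation
    return tuple(fused)


def fuse_images(res_img, den_img, threshold=128, w_low=0.7, w_high=0.3):
    """Fuse two images given as equal-size lists of rows of RGB tuples."""
    return [
        [fuse_pixel(rp, dp, threshold, w_low, w_high) for rp, dp in zip(rr, dr)]
        for rr, dr in zip(res_img, den_img)
    ]


def mse(img_a, img_b):
    """Mean square error over all pixels and channels."""
    diffs = [
        (a - b) ** 2
        for ra, rb in zip(img_a, img_b)
        for pa, pb in zip(ra, rb)
        for a, b in zip(pa, pb)
    ]
    return sum(diffs) / len(diffs)


# Toy 1x2 "images" standing in for the ResNet and DenseNet stream outputs.
res_out = [[(100, 200, 50), (10, 20, 30)]]
den_out = [[(120, 180, 70), (30, 40, 50)]]
fused = fuse_images(res_out, den_out, threshold=128, w_low=0.5, w_high=0.5)
```

In the paper the threshold and interpolation parameters are obtained through a dedicated objective function during training; here they are fixed constants so that the arithmetic stays easy to follow.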

Image Processing and Coding

-

Abstract: Objective: Shoeprints are impressions created when footwear is pressed or stamped against a surface as a person walks, transferring the characteristics of the shoe and foot to the surface. Shoeprints can be divided into two categories: 2D and 3D shoeprints. In this paper, we focus on 2D shoeprints. A shoeprint sequence is defined as a series of shoeprints in time order; it conveys many important human characteristics, such as foot morphology, walking habits, and identity, and plays a vital role in crime investigations. Existing research has mainly focused on using a single footprint or a footprint sequence to recognize a person and has achieved promising performance. However, unlike shoeprints, footprint sequences seldom appear in most scenarios. Although a single shoeprint is not sufficient to represent a person because of the influence of shoe patterns, a shoeprint sequence may be, because it additionally provides walking characteristics. Therefore, our goal is to identify a person using his or her shoeprint sequence. Method: A shoeprint sequence is a time series in which 2D shoeprints from one person repeat at a stable frequency. We propose a spatial representation named the shoeprint energy map set (SEMS) to represent a shoeprint sequence and use it, together with the proposed matching score method, to identify a person. An SEMS consists of left/right tread energy maps, left/right step energy maps, and left/right step width energy maps. The tread energy map (TEM) is defined as the average image of all the aligned left or right shoeprint images from a shoeprint sequence and comes in two forms, LTEM and RTEM. The LTEM is constructed from left shoeprints only, and the RTEM is computed from right shoeprints only. The TEM carries information about personal features such as foot morphology, walking habits, and step angles.
The step energy map (SEM) is computed by averaging all aligned step images of multiple walking cycles from a shoeprint sequence. A step image is the region cropped from a shoeprint sequence by the bounding box that encloses exactly two successive shoeprints. Depending on which foot is ahead in a walking cycle, step images are divided into right step images and left step images, which yield the LSEM and RSEM. Compared with the TEM, the SEM carries additional step information such as step length and step width. The step width energy map (SWEM) is constructed by averaging all step width images of multiple walking cycles from a shoeprint sequence and comes in two forms, LSWEM and RSWEM. A step width image is the step image with the blank region representing the step length removed. Unlike the SEM, the SWEM does not carry step length information. The matching score between two shoeprint sequences is defined as the weighted average of the element-wise similarity scores of the two SEMSs. Each element-wise similarity score is computed by max pooling over the normalized 2D cross-correlation response map of two corresponding elements, such as the two LTEMs. The weights are learned from the training sets by optimizing the proposed hinge loss function. Result: Three datasets, namely MUSSRO-SR, MUSSRO-SS, and MUSSRS-SS, are constructed according to the imaging method, the condition of the shoes, and the type of sole pattern. The volunteers are young college students whose heights range from 155 cm to 185 cm and whose weights range from 43 kg to 85 kg. MUSSRO-SR consists of 875 shoeprint sequences from 125 volunteers, captured as each person walks normally across the footprint sequence scanner wearing his or her daily shoes. MUSSRO-SS is composed of 595 shoeprint sequences from 85 persons, captured as each volunteer walks normally across the footprint sequence scanner wearing new shoes with the same sole pattern.
MUSSRS-SS is constructed by scanning sheets of paper on which each of 100 persons walks normally in new shoes after stepping in a tray full of black ink. The proposed method is evaluated in identification mode and verification mode. To the best of our knowledge, no shoeprint sequence-based person recognition method has been reported in the literature. Therefore, our method is compared with the gait measurement (GM)-based method used in forensic practice. The correct recognition rates (higher is better) in identification mode on the three datasets are 100%, 97.65%, and 83%. Compared with GM, the correct recognition rates of the proposed method are increased by 57.6%, 61.18%, and 48.35%. Performance in verification mode is measured by the equal error rate (EER); the lower the EER, the better the performance. The EER on MUSSRO-SR is 0.36%, which is 14.1 percentage points lower than that of GM. The EER on MUSSRO-SS is 1.17%, a decrease of 10.43 percentage points. The EER on MUSSRS-SS is 6.99%, a decrease of 10.8 percentage points. Performance on MUSSRO-SR is higher than on the other datasets for the following reasons: 1) shoeprints left by daily shoes carry unique personal wear characteristics; 2) the shoe patterns are not all the same. Performance on MUSSRS-SS is lower than on the others for the following reasons: 1) shoeprints scanned from inked paper carry less personal information than those captured by dedicated acquisition devices; 2) the amount of ink attached to the sole decreases as a person walks, which degrades the image quality of the shoeprints. In addition, a series of ablation studies is conducted to show the effectiveness of the three proposed kinds of shoeprint energy maps and of the matching score computation. Conclusion: In this study, a shoeprint sequence is used to recognize a person, and an SEMS is proposed to represent the shoeprint sequence, which carries the physiological and behavioral characteristics of humans.
Experimental results show the promising, competitive performance of the proposed method. The effect of large differences in sole pattern or substrate material on performance has not been studied; cross-pattern and cross-substrate shoeprint sequence recognition is our next work. Moreover, a larger dataset is under preparation.
Keywords: person recognition; shoeprint sequence recognition; shoeprint energy map set (SEMS); tread energy map (TEM); step energy map (SEM); step width energy map (SWEM)
Updated: 2024-05-07
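The two core computations in this abstract, an energy map as the pixel-wise average of aligned images and a similarity score obtained by max pooling over a normalized cross-correlation response map, can be sketched in plain Python as follows. This is a simplified illustration under stated assumptions: images are small equal-size grayscale grids, the correlation is normalized without mean subtraction, only shifts up to `max_shift` are tested, and the weights are placeholders rather than learned values. The function names are hypothetical and do not come from the paper.

```python
import math


def energy_map(aligned_images):
    """Pixel-wise average of equal-size, already aligned grayscale images."""
    n = len(aligned_images)
    h, w = len(aligned_images[0]), len(aligned_images[0][0])
    return [[sum(img[y][x] for img in aligned_images) / n for x in range(w)]
            for y in range(h)]


def ncc_at_shift(a, b, dy, dx):
    """Normalized correlation of a and b on their overlap, b shifted by (dy, dx)."""
    h, w = len(a), len(a[0])
    pairs = [(a[y][x], b[y - dy][x - dx])
             for y in range(max(0, dy), min(h, h + dy))
             for x in range(max(0, dx), min(w, w + dx))]
    num = sum(p * q for p, q in pairs)
    den = math.sqrt(sum(p * p for p, _ in pairs) * sum(q * q for _, q in pairs))
    return num / den if den else 0.0


def similarity(a, b, max_shift=1):
    """Max pooling over the response map: best score among all tested shifts."""
    return max(ncc_at_shift(a, b, dy, dx)
               for dy in range(-max_shift, max_shift + 1)
               for dx in range(-max_shift, max_shift + 1))


def matching_score(sems_a, sems_b, weights, max_shift=1):
    """Weighted average of element-wise similarities between two SEMSs."""
    total_w = sum(weights.values())
    return sum(w * similarity(sems_a[k], sems_b[k], max_shift)
               for k, w in weights.items()) / total_w


# Toy example: two "tread" images averaged into a tread energy map.
tem = energy_map([[[0, 2], [4, 6]], [[2, 4], [6, 8]]])

# A 3x3 pattern and the same pattern shifted one pixel to the left.
pattern = [[0, 1, 0], [1, 2, 1], [0, 1, 0]]
shifted = [[1, 0, 0], [2, 1, 0], [1, 0, 0]]
score = matching_score({"LTEM": pattern, "RTEM": pattern},
                       {"LTEM": pattern, "RTEM": shifted},
                       {"LTEM": 0.5, "RTEM": 0.5})
```

Taking the maximum over shifts makes the score tolerant of small residual misalignment between two energy maps. The shift range should stay small relative to the image size, since a very small overlap region can produce a misleadingly high correlation.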

-

Abstract: Objective: The task of video object segmentation (VOS) is to track and segment one or more objects in a video sequence, and it is an important problem in computer vision. Given specific object masks provided manually or automatically on the first frame or a reference frame, the goal is to segment these objects throughout the entire video sequence. VOS plays an important role in video understanding. According to the type of object labels, VOS methods can be divided into four categories: unsupervised, interactive, semi-supervised, and weakly supervised. In this study, we address semi-supervised VOS; that is, the ground-truth object mask is given only in the first frame, the segmented object is arbitrary, and no further assumptions are made about the object category. Currently, semi-supervised VOS methods are mostly based on deep learning and can be divided into two types: detection-based methods and matching-based or motion propagation methods. Detection-based methods use no temporal information; they learn an appearance model to perform pixel-level detection and object segmentation on each frame of the video. Matching-based or motion propagation methods exploit the temporal correlation of object motion to propagate the object mask from the first frame or a given mask frame to subsequent frames. Matching-based methods first compute pixel-level matching between the features of the template frame and those of the current frame and then segment each pixel of the current frame directly from the matching result. Motion propagation methods are of two types: one introduces optical flow to train the VOS model, and the other learns deep object features from the object mask of the previous frame and refines the object mask of the current frame.
Most existing methods rely mainly on the reference mask of the first frame (assisted by optical flow or the previous mask) to estimate the object segmentation mask. However, because these models are limited in how they model the spatial and temporal domains, they easily fail under rapid appearance changes or occlusion. Therefore, a spatial-temporal part-based graph model is proposed to generate robust spatial-temporal object features. Method. In this study, we propose an encoder-decoder-based VOS framework built on a spatial-temporal part-based graph. First, we use a Siamese architecture for the encoder. The input has two branches: the historical image frame stream and the current image frame stream. To simplify the model, we introduce a Markov hypothesis, that is, given the current frame and $K$ […] (the rest of this abstract survives only as the symbols $K$, $t$, $\boldsymbol{G}_{\mathrm{S}}$, and $\boldsymbol{G}_{\mathrm{ST}}$)
Keywords: video object segmentation (VOS); graph convolutional network; spatial-temporal features; attention mechanism; deep neural network
Updated: 2024-05-07

Abstract: Objective. Saliency detection is a fundamental technology in computer vision and image processing that aims to identify the most visually distinctive objects or regions in an image. As a preprocessing step, salient object detection plays a critical role in many computer vision applications, including visual tracking, scene classification, image retrieval, and content-based image compression. Although numerous salient object detection methods have been presented, most are designed for RGB images only or for RGB-depth (RGB-D) images. These methods still struggle in some complex scenarios. RGB methods may fail to distinguish salient objects from the background when the foreground and background look similar or contrast is low. RGB-D methods also degrade under low-light conditions and variations in illumination. Considering that thermal infrared images are invariant to illumination conditions, we propose a multi-path collaborative salient object detection method that improves saliency detection by using the multi-modal feature information of RGB and thermal images. Method. In this study, we design a novel end-to-end deep neural network for RGB-thermal (RGB-T) salient object detection. It consists of an encoder network and a decoder network and includes the feature enhance module, the pyramid pooling module, the channel attention module, and the l1-norm fusion strategy. First, the main body of the model contains two backbone networks that extract the feature representations of the RGB and thermal images, respectively. Then, three decoding branches predict saliency maps in a coordinated and complementary manner from the extracted RGB features, the thermal features, and the fusion of both, respectively. The two backbone streams have the same structure, which is based on the Visual Geometry Group 19-layer (VGG-19) network.
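The abstract names an l1-norm fusion strategy without defining it. One common reading, assumed here and not confirmed by the source, weights each modality at every spatial location by the l1-norm of its channel vector and takes the weighted sum of the two feature maps. The sketch below follows that reading; the function name `l1_norm_fusion`, the nested-list layout `[channel][row][column]`, and the equal split when both activities are zero are illustrative assumptions.

```python
def l1_norm_fusion(feat_a, feat_b):
    """Fuse two feature maps of shape [C][H][W] using per-location l1-norm weights.

    Assumed scheme: the activity of each modality at (y, x) is the l1-norm of its
    channel vector there; the weights are the normalized activities.
    """
    channels, height, width = len(feat_a), len(feat_a[0]), len(feat_a[0][0])
    fused = [[[0.0] * width for _ in range(height)] for _ in range(channels)]
    for y in range(height):
        for x in range(width):
            act_a = sum(abs(feat_a[c][y][x]) for c in range(channels))
            act_b = sum(abs(feat_b[c][y][x]) for c in range(channels))
            total = act_a + act_b
            # If both modalities are silent at this location, split the weight evenly.
            w_a = act_a / total if total > 0 else 0.5
            w_b = 1.0 - w_a
            for c in range(channels):
                fused[c][y][x] = w_a * feat_a[c][y][x] + w_b * feat_b[c][y][x]
    return fused


# Toy feature maps (assumed): 2 channels, 1 row, 2 columns.
rgb_feat = [[[1.0, 0.0]], [[1.0, 0.0]]]      # active only at column 0
thermal_feat = [[[0.0, 2.0]], [[0.0, 4.0]]]  # active only at column 1

fused = l1_norm_fusion(rgb_feat, thermal_feat)
print(fused)
```

In this toy case each location is dominated by whichever modality responds there, which is the behaviour such a fusion rule is meant to provide when one modality is uninformative, for example RGB under poor illumination.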
To better fit the saliency detection task, we keep only the five convolutional blocks of VGG-19 and discard the last pooling layer and the fully connected layers to preserve more spatial information from the input image. Second, the feature enhance module is used to fully extract and fuse multi-modal complementary cues from the RGB and thermal streams. The modified pyramid pooling module captures global semantic information from deep-level features, which is used to locate salient objects. Finally, in the decoding process, the channel attention mechanism distinguishes the semantic differences between channels, thereby improving the decoder's ability to separate salient objects from the background. The entire model is trained end to end. Our training set consists of 900 aligned RGB-T image pairs randomly selected from each subset of the VT1000 dataset. To prevent overfitting, we augment the training set by flipping and rotation. Our method is implemented with the PyTorch toolbox and trained on a PC with a GTX 1080Ti GPU and 11 GB of memory. The input images are uniformly resized to 256×256 pixels. The momentum, weight decay, and learning rate are set to 0.9, 0.0005, and 1E-9, respectively. During training, the softmax entropy loss is used to train the entire network. Result. We compare our model with four state-of-the-art saliency models, including two RGB-based methods and two RGB-D-based methods, on two public datasets, namely VT821 and VT1000. The quantitative evaluation metrics are F-measure, mean absolute error (MAE), and precision-recall (PR) curves, and we also provide several saliency maps from each method for visual comparison. The experimental results show that our model outperforms the other methods and that its saliency maps have more refined shapes under challenging conditions such as poor illumination and low contrast.
Compared with the other four methods on VT821, our method obtains the best maximum F-measure and MAE. The maximum F-measure (higher is better) is 0.26% higher, and the MAE (lower is better) is 0.17% lower, than those of the second-ranked method. Compared with the other four methods on VT1000, our model also achieves the best maximum F-measure, 88.05%, which is 0.46% higher than that of the second-ranked method. However, its MAE is 3.22%, which is 0.09% higher than, and thus slightly worse than, that of the first-ranked method. Conclusion. We propose a CNN-based method for RGB-T salient object detection. To the best of our knowledge, existing saliency detection methods are mostly based on RGB or RGB-D images, so exploring CNNs for RGB-T salient object detection is meaningful. The experimental results on two public RGB-T datasets show that the proposed method performs better than the state-of-the-art methods, especially in challenging scenes with poor illumination, complex backgrounds, or low contrast, which indicates that fusing multi-modal information from RGB and thermal images is effective for improving performance. However, public datasets for RGB-T salient object detection, which are very important for the performance of deep learning networks, are lacking. In addition, detection speed is a key measure when saliency detection serves as a preprocessing step for other computer vision tasks. Thus, in future work, we will collect more high-quality datasets for RGB-T salient object detection and design more lightweight models to increase detection speed.
Keywords: RGB-T salient object detection; multi-modal image fusion; multi-path collaborative prediction; channel attention mechanism; pyramid pooling module (PPM)
Updated: 2024-05-07
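The two scalar metrics reported in this abstract, maximum F-measure and MAE, can be sketched as follows. This is a minimal illustration under stated assumptions rather than the authors' evaluation code: the weight `beta_sq = 0.3` is the value commonly used in the saliency literature but is not stated in the abstract, the sweep over 256 thresholds is likewise assumed, and the function names and the flattened-list image representation are hypothetical.

```python
def mean_absolute_error(saliency, ground_truth):
    """Mean absolute difference between a saliency map in [0, 1] and a binary mask."""
    return sum(abs(s - g) for s, g in zip(saliency, ground_truth)) / len(saliency)


def f_measure(saliency, ground_truth, threshold, beta_sq=0.3):
    """Weighted F-measure of the saliency map binarized at the given threshold."""
    pred = [1 if s >= threshold else 0 for s in saliency]
    true_pos = sum(p * g for p, g in zip(pred, ground_truth))
    if true_pos == 0:
        return 0.0  # precision and recall are both zero (or undefined)
    precision = true_pos / sum(pred)
    recall = true_pos / sum(ground_truth)
    return (1 + beta_sq) * precision * recall / (beta_sq * precision + recall)


def max_f_measure(saliency, ground_truth, steps=255):
    """Largest F-measure over evenly spaced thresholds 0, 1/steps, ..., 1."""
    return max(f_measure(saliency, ground_truth, t / steps) for t in range(steps + 1))


# Toy flattened saliency map and binary ground truth (assumed data).
saliency_map = [0.9, 0.8, 0.3, 0.1]
mask = [1, 1, 0, 0]

mae = mean_absolute_error(saliency_map, mask)
best_f = max_f_measure(saliency_map, mask)
print(mae, best_f)
```

On a full benchmark these per-image values would be aggregated over the dataset; the aggregation rule is not given in the abstract and is left out here.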
