Latest Issue

Volume 25, Issue 9, 2020

-

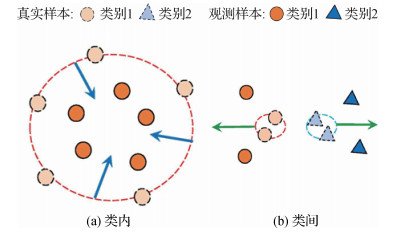

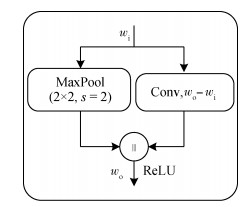

Abstract: Remote sensing edge intelligence has become an important research topic, driven by rapid progress in aerospace, remote sensing, and communication technologies, including 5G and other efficient communication techniques. It aims to move intelligent applications to the front end, performing efficient information analysis and decision making close to the data source. The technology can be applied to satellite on-orbit processing and interpretation, dynamic real-time tracking by unmanned aerial vehicles (UAVs), large-scale urban environment reconstruction, recognition and planning for autonomous driving, and other scenarios, saving considerable transmission bandwidth, processing time, and resources while enabling fast, accurate, and compact intelligent algorithms. This study summarizes the research status of edge intelligence in remote sensing. First, we discuss the problems that the remote sensing field currently faces when deploying applications on edge devices. 1) Limited number of samples: compared with visual scene images, remote sensing data remain a small-sample problem. Remote sensing scenes contain many complex backgrounds and target categories, but the number of effective samples is relatively small, and newly emerged and modified targets typically suffer from a severely uneven distribution of categories. 2) Limited computing resources: a remote sensing image can cover several to hundreds of kilometers, and a single image can reach several hundred GB, which demands a large amount of storage on edge devices. In addition, the growing complexity of deep learning models raises the demand for computing power.
Therefore, remote sensing edge intelligence must resolve the conflict between model complexity and power consumption on edge devices. 3) Catastrophic forgetting: new tasks and categories continue to emerge in remote sensing data analysis, and existing algorithms generalize poorly to continuously arriving data. Continuous learning is therefore needed to keep algorithms accurate and efficient. We then introduce solutions and the main technical approaches to these problems: generalized learning from small samples, design and training strategies for lightweight models, and continuous learning for multiple tasks. 1) Generalized learning from small samples: we group existing solutions into two categories. The first exploits the characteristics of remote sensing images to expand the sample set intelligently and meet the data requirements of model training. The second introduces prior knowledge from the perspective of knowledge reuse through learning strategies such as transfer learning, meta-learning, and metric learning, helping the model learn new categories and reducing its need for remote sensing data. 2) Design and training strategies for lightweight models: for design, we cover convolution unit design, manual network design, automatic network design, and model pruning and quantization; for training, we compare frameworks based on knowledge distillation with traditional training methods. 3) Continuous learning for multiple tasks: the first category is based on replaying sample data. By storing samples of previous tasks, or by using a generative model to produce pseudo samples, the model replays old samples while learning new tasks, which balances the training data across tasks and mitigates catastrophic forgetting. The second category is based on model structure expansion.
The model is divided into subsets dedicated to each task by constraining parameter update strategies or isolating model parameters. This approach improves the task adaptability of the model and avoids catastrophic forgetting without relying on historical data. Furthermore, we analyze the advantages and disadvantages of representative algorithms in the context of typical applications of remote sensing edge intelligence. Finally, we discuss the challenges facing remote sensing edge intelligence and directions for future research. Further research is required to improve the depth and breadth of its application.

Keywords: remote sensing data; edge intelligence; few-shot learning; lightweight model; continuous learning

Updated: 2024-05-07
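
As an illustration of the sample-replay idea described in the abstract, the following is a minimal sketch and not the method of any surveyed paper. It assumes a fixed-size memory filled by reservoir sampling; the names `ReplayBuffer` and `mixed_batch` are hypothetical and chosen only for this example.

```python
import random


class ReplayBuffer:
    """Fixed-size memory of past samples, filled by reservoir sampling."""

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []   # stored (task_id, sample) pairs
        self.seen = 0     # total number of samples offered so far
        self.rng = random.Random(seed)

    def add(self, task_id, sample):
        # Reservoir sampling: each sample offered so far remains in memory
        # with equal probability capacity / seen.
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append((task_id, sample))
        else:
            j = self.rng.randrange(self.seen)
            if j < self.capacity:
                self.items[j] = (task_id, sample)

    def sample(self, k):
        # Draw up to k stored samples for playback.
        k = min(k, len(self.items))
        return self.rng.sample(self.items, k)


def mixed_batch(new_batch, task_id, buffer, replay_size):
    """Combine current-task samples with replayed samples from earlier tasks."""
    replayed = buffer.sample(replay_size)      # drawn before storing new data
    batch = [(task_id, x) for x in new_batch] + replayed
    for x in new_batch:
        buffer.add(task_id, x)                 # remember the new samples too
    return batch
```

In a training loop, each batch of a new task would pass through `mixed_batch` so that the loss is computed on both new and replayed samples. The buffer capacity bounds the memory cost, which matters on an edge device.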


Scholar View


Abstract: Infrared acquisition is not easily disturbed by environmental factors and has strong penetrability, and its imaging result is determined mainly by the temperature of the object itself. It has therefore been widely used in the military field, for example in infrared guidance, infrared antimissile, and early warning systems. With the rapid development of computer vision and digital image processing, infrared small-target detection has become both a focus and a challenge of research, and the number and variety of detection methods keep growing. However, because targets have a small imaging area, lie at long distances, lack detailed features, have weak shape features, and exhibit a low signal-to-noise ratio, infrared dim and small target detection has always been a key technical problem in infrared guidance systems. This study reviews the two kinds of methods in wide use at present: those based on single-frame images and those based on infrared sequences. It is intended as a basis for follow-up research on the theory and development of small-target detection. Starting from an analysis of the characteristics of targets and backgrounds in infrared small-target images and of the difficulties of infrared small-target detection, we classify algorithms by whether they use interframe correlation, that is, into single-frame and sequence-based methods, and select representative algorithms of each kind for comparison.
Single-frame algorithms can be divided into three categories: filtering methods, methods based on the human visual system, and methods based on low-rank sparse recovery. Filtering methods estimate the background of an infrared image and use the frequency differences among target, background, and clutter to filter out the background and clutter, thereby suppressing the background. Methods based on the human visual system exploit a property of human visual perception: the appearance of a small target causes considerable changes in local texture rather than in global texture. Methods based on low-rank sparse recovery have been widely used in recent years and give improved results in single-frame detection. They make full use of the sparsity of small targets, the low rank of backgrounds, and the high frequency of clutter, and they solve the objective function with optimization algorithms, gradually improving detection accuracy over the iterations. However, single-frame methods require a high signal-to-noise ratio and do not exploit the correlation between adjacent frames; they are therefore prone to false detections and have relatively poor real-time performance. For this reason, sequence-based detection methods that use spatial-temporal correlation are introduced. For detecting small moving infrared targets, prior information such as the shape of the target, the continuity of its gray-level change over time, and the continuity of its trajectory is key to separating small targets from noise effectively. According to the order in which this prior information is used, current mainstream methods for detecting moving infrared small targets are divided into two categories: detect before motion (DBM) and motion before detect (MBD).
These two kinds of algorithms suit different applications according to their characteristics. DBM methods are relatively simple, easy to implement, and widely used in tasks with strict real-time requirements. By contrast, MBD methods have a high detection rate and a low false alarm rate and can achieve good results against backgrounds with a low signal-to-clutter ratio. In this review, the principles, processes, and characteristics of typical algorithms are introduced in detail, and the performance of each kind of detection algorithm is compared. At present, infrared small-target detection can perform reliably in short-term detection and tracking tasks; in practical applications, however, detection remains difficult because of complex scenarios, demanding requirements for long-term detection, and the particular nature of targets and backgrounds. Therefore, on the basis of the characteristics of infrared small targets, this work analyzes the difficulties of infrared small-target detection, presents the solutions offered by various algorithms together with their shortcomings, and discusses the direction of future development. Infrared small-target detection has made remarkable progress and is widely used in infrared guidance and antimissile tasks, but problems remain. The theory of small-target detection needs further testing and refinement, and detection methods and schemes must be studied and improved continually to obtain better results on infrared images. The application of infrared dim and small target detection is challenging and complex: the robustness and accuracy of the algorithms must keep improving, and the detection speed must meet real-time requirements.
Considering the application characteristics and scope of different military equipment, a universal small-target detection algorithm should be studied. Such an algorithm should be accurate and robust and must meet real-time requirements in order to enhance the all-weather reconnaissance capability and the battlefield target information collection capability of the equipment. We summarize the major future development directions of infrared small-target detection as follows. First, imaging quality can be improved by fusing images from different imaging systems. Second, existing algorithms can be improved by combining the spatial-temporal information of images with iterative optimization. Third, datasets should be collected and deep learning methods explored to improve detection accuracy. Lastly, improved hardware can be used to accelerate the algorithms and improve real-time detection. In the future, we will conduct research along these directions.

Keywords: infrared image; infrared sequences; infrared small target; low-rank and sparse representation (LRSR); small-target detection

Updated: 2024-05-07
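
The human-visual-system idea summarized in the abstract, that a small target changes local rather than global texture, can be sketched as a center-minus-surround contrast map followed by an adaptive threshold. This is a simplified illustration under assumed parameters, not a specific algorithm from the review; the function names and the parameters `inner`, `outer`, and `k` are hypothetical.

```python
import statistics


def local_contrast_map(img, inner=0, outer=2):
    """Center-minus-surround contrast for a 2-D list of gray levels.

    For each pixel, the mean of the surrounding ring (outer window minus
    inner window) is subtracted from the mean of the inner window.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            inner_vals, ring_vals = [], []
            for dr in range(-outer, outer + 1):
                for dc in range(-outer, outer + 1):
                    rr, cc = r + dr, c + dc
                    if not (0 <= rr < h and 0 <= cc < w):
                        continue  # skip positions outside the image
                    if abs(dr) <= inner and abs(dc) <= inner:
                        inner_vals.append(img[rr][cc])
                    else:
                        ring_vals.append(img[rr][cc])
            if ring_vals:  # contrast stays 0.0 when there is no surround
                out[r][c] = (sum(inner_vals) / len(inner_vals)
                             - sum(ring_vals) / len(ring_vals))
    return out


def detect_targets(img, inner=0, outer=2, k=3.0):
    """Return (row, col) positions whose contrast exceeds mean + k * std."""
    cmap = local_contrast_map(img, inner, outer)
    flat = [v for row in cmap for v in row]
    threshold = statistics.mean(flat) + k * statistics.pstdev(flat)
    return [(r, c)
            for r, row in enumerate(cmap)
            for c, v in enumerate(row)
            if v > threshold]
```

On a 9 x 9 image of constant gray level 10 with a single pixel of value 100, `detect_targets` returns only the position of that pixel. Real infrared images would need larger windows and explicit clutter handling, and a single-frame detector like this one ignores the interframe correlation that sequence-based methods rely on.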


Abstract: General object detection is one of the most important research topics in computer vision. The task is to locate and label the object instances that appear in a natural image using a given set of labels. The technique has been widely used in practical scenarios such as autonomous driving and security monitoring. With the development and spread of deep learning, extracting the semantic information of images has become easier, and general object detection frameworks based on convolutional neural networks (CNNs) have obtained better results than other detection methods. Because the large-scale datasets for this task are relatively better than those designed for other vision tasks and the metrics are well defined, the task has evolved rapidly among CNN-based computer vision tasks. However, general object detection still faces many problems, such as scale changes, illumination changes, and occlusion, owing to the limitations of the CNN structure. Because the features extracted by CNNs are sensitive to scale, multiscale detection is valuable but challenging in CNN-based object detection. Research on scale transformation also has reference value for other small-target or pixel-level tasks, such as semantic segmentation and pose detection. This study aims to provide a comprehensive overview of strategies for handling scale in CNN-based object detection, that is, how to locate and classify targets of different sizes robustly. First, we introduce the development of the general object detection problem and the main datasets used. Then, we introduce the two categories of general object detection frameworks. The first category, two-stage strategies, obtains region proposals and then selects among them by classification confidence; it mostly takes region-based convolutional neural networks (RCNN) as the baseline.
As the RCNN structure developed, all of its stages were turned into dedicated convolution layers, forming an end-to-end structure. In addition, several techniques were designed for the baseline to solve specific problems, improving its robustness across all kinds of object regions. The second category, one-stage strategies, obtains the region location and category with a single regression; it starts with a structure named "you only look once" (YOLO), which regresses the object information for every block into which the image is divided. The baseline then became convolutional and end to end and adopted deeper, more effective features. It has become more popular since focal loss was proposed, because focal loss addresses the imbalance between positive and negative samples that regression may cause. Other methods, which detect objects by locating points and borrow from pose estimation, also obtain satisfactory results in general object detection. We then give a simple classification of the optimization ideas for scale problems: multi-feature fusion strategies, convolution deformations for receptive fields, and training strategy designs. Multi-feature fusion strategies target object classes that do not always appear at a small scale. They obtain semantic information from different image scales and fuse it to reach the most suitable scale, and they can effectively identify objects of one class at different sizes. Widely used structures fall into two groups: those based on single-shot detection and those based on feature pyramid networks. Some structures use a skip-layer fusion design. Regarding receptive fields, every feature corresponds to a region of the image or of a lower-level feature map, and a specifically designed receptive field can handle targets that always appear small in the image.
The receptive field of an ordinary convolution equals its kernel size, so special convolution kernels have been designed. Dilated kernels are typical deformed kernels; they are used together with a specially designed pooling layer to obtain dense high-level features. Some scholars have designed an offset layer that automatically learns the most useful deformation for the convolution kernel. Training strategies can also be designed for small targets: a dataset containing only small objects can be built, and images of different sizes can be fed to the network in a planned order during training. Resampling images is another common strategy. We report the detection accuracy of different frameworks for targets of different sizes on a common dataset, the Microsoft common objects in context (MS COCO) dataset. We use average precision (AP) as the measure, and the results cover small, medium, and large targets as well as different intersection-over-union thresholds, which shows the influence of scale changes. Finally, this study outlines possible future development directions for scale transformation, including strategies for obtaining robust features and detection modules and for designing training datasets.

Keywords: image semantic understanding; general object detection; convolutional neural network (CNN); scale changing; small target detection

Updated: 2024-05-07
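
The relationship between kernel size, dilation, and receptive field mentioned in the abstract can be made concrete with the standard recurrence for stacked convolutions. The sketch below is an illustration only; the function names and the `(kernel, stride, dilation)` tuple layout are assumptions made for this example, and padding is ignored because it does not change the receptive field size.

```python
def effective_kernel(kernel, dilation=1):
    """Extent covered by a dilated kernel: k + (k - 1) * (d - 1)."""
    return kernel + (kernel - 1) * (dilation - 1)


def receptive_field(layers):
    """Receptive field (in input pixels) of a stack of convolution layers.

    `layers` is a list of (kernel, stride, dilation) tuples ordered from
    input to output.
    """
    rf = 1    # a single input pixel sees itself
    jump = 1  # distance in input pixels between adjacent outputs
    for kernel, stride, dilation in layers:
        rf += (effective_kernel(kernel, dilation) - 1) * jump
        jump *= stride
    return rf
```

Under these formulas, three stacked 3 x 3 convolutions with dilation rates 1, 2, and 4 cover a 15 x 15 input region, compared with 7 x 7 for three undilated layers with the same number of weights. This is why dilation is attractive when features must stay dense while still seeing enough context.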

摘要:General object detection has been one of most important research topics in the field of computer vision. This task attempts to locate and mark an object instance that appears in a natural image using a series of given labels. The technique has been widely used in actual application scenarios, such as automatic driving and security monitoring. With the development and popularization of deep learning technology, the acquisition of the semantic information of images has become easier; thus, the general object detection framework based on convolutional neural networks (CNNs) has obtained better results compared with other target detection methods. Given that the large-scale dataset of the task is relatively better than datasets designed for other vision tasks and the metrics are well defined, this task rapidly evolves in CNN-based computer vision tasks. However, general object detection tasks still face many problems, such as scale and illumination changes and occlusions, due to the limitations of the CNN structure. Given that the features extracted by CNNs are sensitive to the scale, multiscale detection is often valuable but challenging in the field of CNN-based target detection. Research on scale transformation also has reference value for other scales in small target- or pixel-level tasks, such as the semantic segmentation and pose detection of images. This study mainly aims to provide a comprehensive overview of object detection strategies for scales in CNN architectures, that is, how to locate and classify different sizes of targets robustly. First, we introduce the development of general target detection problems and the main datasets used. Then, we introduce two categories of the general object detection framework. One of the categories, i.e., two-stage strategies, first obtains the region proposals and then selects the proposals by points of classification confidence; it mostly takes region-based convolutional neural networks (RCNN) as the baseline. 
With the development of the RCNN structure, all the links are transformed into specific convolution layers, thus forming an end-to-end structure. In addition, several tricks are designed for the baseline to solve specific problems, thus improving the robustness of the baseline for all kinds of object regions. The other category, i.e., one-stage strategies, obtains the region location and category by regressing once; it starts with a structure named "you only look once" which regresses the information of the object for every block divided. Then, the baseline becomes convolutional and end to end and uses deep and effective features. This baseline has also become popular since focal loss has been proposed because it solves the problem in which regression may cause an unbalance of positive and negative samples. Besides, some other methods, which detect objects via point location and learn from pose estimation tasks, also obtain satisfactory results in general target detection. We then introduce a simple classification of the optimization ideas for scale problems; these ideas include multi-feature fusion strategies, convolution deformations for receptive fields, and training strategy designs. Multi-feature fusion strategies are used to detect the classes of objects that are not always performed in a small scale. Multi-feature fusion can obtain semantic information from different image scales and fuse them to attain the most suitable scale. It can also effectively identify the different sizes of one-class objects. Widely used structures can be divided as follows: those that use single-shot detection and those with feature pyramid networks. Some structures have a jump layer fusion design. In a receptive field, every feature corresponds with an image or lower-level feature. The specific design can solve a target that always appears small in the image. 
The receptive field of a standard convolution is the same as the size of its kernel, so special convolution kernels have been designed. Dilated kernels are a typical example of deformed kernels; they are used with a specially designed pooling layer to obtain dense high-level features. Some scholars have designed an offset layer to learn the most useful deformation of the convolution kernel automatically. A training strategy can also be designed for small targets. A dataset that only includes small objects can be constructed, and images of different sizes can be fed to the network in an ordered manner during training. Resampling images is also a common strategy. We provide the detection accuracy of different detection frameworks for targets of different sizes on common datasets. Results are obtained on the Microsoft common objects in context (MS COCO) dataset. We use average precision (AP) to measure detection results, and the reported results cover small, medium, and large targets as well as different intersection-over-union thresholds. These results show the influence of scale changes. This study provides a set of possible future development directions for scale transformation. It also includes strategies on how to obtain robust features and detection modules and how to design a training dataset.

Keywords: image semantic understanding; general object detection; convolutional neural network (CNN); scale changing; small target detection

Updated: 2024-05-07
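To make the evaluation terms in the abstract above concrete, the following minimal sketch computes the intersection over union (IoU) of two axis-aligned boxes and assigns a box to a size bucket. The `(x1, y1, x2, y2)` box format, the function names, and the 0.5 default threshold are assumptions for illustration; the area limits of 32² and 96² pixels follow the commonly cited MS COCO convention and are not stated in the abstract itself.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap region (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def matches(pred_box, gt_box, threshold=0.5):
    """A prediction counts as a match when its IoU reaches the threshold (assumed 0.5)."""
    return iou(pred_box, gt_box) >= threshold


def size_bucket(box):
    """Size bucket by box area, using the COCO-style limits 32^2 and 96^2 pixels."""
    x1, y1, x2, y2 = box
    area = (x2 - x1) * (y2 - y1)
    if area < 32 ** 2:
        return "small"
    if area < 96 ** 2:
        return "medium"
    return "large"
```

Reporting AP separately per bucket and per IoU threshold is what lets a survey of this kind compare how different frameworks respond to scale changes.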

Review

-

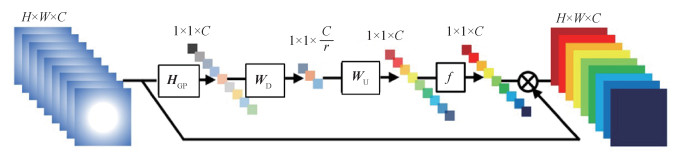

Abstract: Objective: Single-image super-resolution (SISR) techniques aim to reconstruct a high-resolution image from a single low-resolution image. Given that high-resolution images contain substantial useful information, SISR technology has been widely used in medical imaging, face authentication, public relations, security monitoring, and other tasks. With the rapid development of deep learning, convolutional neural network (CNN)-based methods have achieved remarkable success in the field of SISR. From super-resolution CNN (SRCNN) to residual channel attention network (RCAN), the depth and the performance of the networks have considerably improved. However, some problems remain to be solved. 1) Increasing the depth of a network can improve reconstruction performance effectively; however, it also increases the computational complexity of the network and leads to poor real-time performance. 2) An image contains a large amount of high- and low-frequency information. Areas with high-frequency information should be more important than areas with low-frequency information. However, most recent CNN-based methods treat these two kinds of areas equally and thus lack flexibility. 3) Feature maps at different depths carry receptive field information at different scales. Integrating these feature maps can enhance the information flow between convolution layers. Most current CNN-based methods only consider feature maps at a single scale. To solve these problems, we propose a lightweight hierarchical feature fusion spatial attention network to learn additional useful high-frequency information.

Method: The proposed network is mainly composed of four parts, namely, the shallow feature extraction, hierarchical feature fusion, up-sampling, and reconstruction parts. In the shallow feature extraction part, a convolution layer is used to extract the shallow features and expand the number of channels.
The hierarchical feature fusion part comprises nine residual attention blocks, which are evenly divided into three residual attention groups of three blocks each. The feature maps at different depths are fused using local and global feature fusion strategies. The local feature fusion strategy fuses the feature maps obtained by the three residual attention blocks in each residual attention group, whereas the global feature fusion strategy fuses the feature maps obtained by the three residual attention groups. Together, the two strategies integrate feature maps at different scales to enhance the information flow across different depths of the network. This study focuses on the residual attention block, which is composed of a residual block module and a spatial attention module. In each residual attention block, two 3×3 convolution layers are first used to extract several feature maps, and then a spatial attention module is used to assign different weights to different spatial positions in the feature maps. The core problem is how to obtain an appropriate set of weights. According to our analysis, pooling along the channel axis can effectively highlight the importance of areas with high-frequency information. Hence, we first apply average and maximum pooling along the channel axis to generate two representative feature descriptors. Afterward, a 5×5 and a 1×1 convolution layer are used to fuse the information at each position with that of its neighboring positions. The spatial attention value of each position is finally obtained using a sigmoid function. The third part is the up-sampling part, which uses subpixel convolution to upsample the low-resolution (LR) feature maps and obtain a large-scale feature map.
Lastly, in the reconstruction part, the number of channels is compressed to the target number using a 3×3 convolution layer, thus obtaining the reconstructed high-resolution image. During the training stage, the DIVerse 2K (DIV2K) dataset is used to train the proposed network, and 32 000 image patches with a size of 48×48 pixels are obtained as LR images by random cropping. L1 loss is used as the loss function of our network and is optimized using the Adam algorithm.

Result: We compare our network with several existing methods, namely, bicubic interpolation, SRCNN, very deep super-resolution convolutional networks (VDSR), deep recursive residual networks (DRRN), residual dense networks (RDN), and RCAN. Five datasets, namely, Set5, Set14, Berkeley segmentation dataset (BSD) 100, Urban100, and Manga109, are used as test sets to show the performance of the proposed method. Two indices, namely, peak signal-to-noise ratio (PSNR) and structural similarity (SSIM), are used to evaluate the reconstruction results of the proposed method and of the methods used for comparison. The average PSNR and SSIM values are obtained from the results of the different methods on the five test datasets with different scale factors. Four test images with different scales are used to show the reconstruction results of the different methods. In addition, the convergence curve of the proposed method is compared with that of enhanced deep residual networks (EDSR). Experiments show that the proposed method can recover more detailed information and clearer edges than most of the compared methods.

Conclusion: We propose a hierarchical feature fusion attention network in this study.
The network can quickly recover high-frequency details with the help of the spatial attention module and the hierarchical feature fusion structure, thus obtaining reconstructed results with more detailed texture.

Keywords: super-resolution reconstruction; convolution neural network (CNN); hierarchical feature fusion; residual learning; attention mechanism

Updated: 2024-05-07
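The spatial attention step described in the Method part above (channel-wise average and maximum pooling, a 5×5 and a 1×1 convolution, then a sigmoid) can be sketched in plain Python as follows. This is only an illustrative sketch under stated assumptions: the kernel weights are passed in by hand rather than learned, the 5×5 convolution is assumed to map the two descriptors to a single map, the 1×1 convolution therefore reduces to a scalar weight and bias, and the attention map is assumed to be applied by element-wise multiplication. None of these details or function names come from the abstract.

```python
import math


def channel_pool(features):
    """features: list of C maps, each an H x W list of lists.
    Returns the average and maximum over the channel axis as two H x W maps."""
    c = len(features)
    h, w = len(features[0]), len(features[0][0])
    avg = [[sum(features[k][i][j] for k in range(c)) / c for j in range(w)] for i in range(h)]
    mx = [[max(features[k][i][j] for k in range(c)) for j in range(w)] for i in range(h)]
    return avg, mx


def conv2d_same(maps, kernels, bias=0.0):
    """Zero-padded 'same' convolution of several input maps into one output map.
    kernels holds one K x K kernel (K odd) per input map."""
    h, w = len(maps[0]), len(maps[0][0])
    k = len(kernels[0])
    r = k // 2
    out = [[bias] * w for _ in range(h)]
    for m, ker in zip(maps, kernels):
        for i in range(h):
            for j in range(w):
                acc = 0.0
                for di in range(k):
                    for dj in range(k):
                        y, x = i + di - r, j + dj - r
                        if 0 <= y < h and 0 <= x < w:
                            acc += ker[di][dj] * m[y][x]
                out[i][j] += acc
    return out


def spatial_attention(features, k5, w1=1.0, b1=0.0):
    """k5: two hypothetical 5 x 5 kernels (for the avg and max descriptors).
    w1, b1: hypothetical scalar weight and bias standing in for the 1 x 1 convolution."""
    avg, mx = channel_pool(features)
    fused = conv2d_same([avg, mx], k5)                     # fuse each position with its neighbors
    fused = [[w1 * v + b1 for v in row] for row in fused]  # 1 x 1 convolution on a single map
    att = [[1.0 / (1.0 + math.exp(-v)) for v in row] for row in fused]  # sigmoid
    h, w = len(att), len(att[0])
    # Assumed application: scale every channel by the shared spatial attention map.
    weighted = [[[f[i][j] * att[i][j] for j in range(w)] for i in range(h)] for f in features]
    return att, weighted
```

Because the attention map is shared across channels, it adds very few parameters, which is consistent with the lightweight design goal stated in the abstract.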


Abstract: Objective: The application of underwater video technology has a history of more than 60 years. This technology plays an important role in promoting research on marine bioecology, fish species, and underwater object detection and tracking. Video quality assessment is one of the key areas of video technology research. Such assessment is especially vital for underwater videos because underwater environments are more complex than atmospheric ones. On the one hand, natural sunlight is strongly absorbed in deep water, and the artificial light used in video shooting suffers from absorption, dispersion, and scattering due to water turbidity and submarine topography. As a result, underwater videos have blurred pictures, low contrast, color cast, and uneven lighting. On the other hand, underwater video quality is affected by the limitations of photography equipment and the influence of water flow. When a moving object is being shot, the camera is difficult to stabilize and its motion is not smooth. Compared with videos shot in natural scenes, underwater videos are characterized by large camera movement, shaking, and severe defocus. These characteristics make it difficult for conventional video quality assessment (VQA) methods to evaluate underwater video accurately and effectively. Thus, the "quality" of underwater videos must be redefined, and an effective quality assessment method must be established.
In this study, we establish an underwater video dataset by considering underwater video imaging characteristics, annotate its video quality via subjective quality assessment, and propose an objective underwater video quality assessment model on the basis of spatial naturalness and video compression indexes.

Method: First, a new underwater video dataset is established in five steps: 1) several underwater videos captured in real deep-sea environments are collected as source data; 2) these videos are preliminarily filtered to include different underwater scenes; 3) the preliminarily screened videos are cut at intervals of 10 seconds; 4) the short video sequences are filtered again to cover different shooting characteristics and color diversity, thus generating 25 video sequences with rich color information, different video contents, and different underwater video features; and 5) the dataset is expanded using different frame rates and bit rates as compression parameters. A total of 250 (25+25×3×3) video sequences are obtained. Then, subjective quality assessment is conducted. Using absolute category rating, 20 participants annotate all 250 videos with scores ranging from 1 to 5. Next, we consider influences on underwater video quality in terms of spatial, temporal, and compression features. The spatial features are expressed by natural scene statistics distortion characteristics in the spatial domain and are calculated using the blind/referenceless image spatial quality evaluator (BRISQUE) algorithm. The temporal features are expressed by optical flow motion features. We first compute the dense optical flow matrix between adjacent frames and then extract the mean and variation of the overall optical flow and the mean and variation of the optical flow of the main objects in the video. The compression features are resolution, frame rate, and bit rate, which are easy-to-access video coding parameters.
Considering the redundancy and relevancy of these potential features, we analyze the correlations among the features and between the features and the subjective quality scores. Then, we select 21 features as influence factors, comprising only 18 spatial naturalness features and three compression indexes. Lastly, we establish a linear model with the selected features to evaluate underwater video quality objectively through linear regression with cross validation.

Result: Experimental results show that the proposed underwater video quality assessment model based on spatial naturalness and compression indexes obtains the highest correlation with subjective scores in comparison with several mainstream quality assessment models, including two underwater image quality indices (underwater image quality measure (UIQM) and underwater color image quality evaluation (UCIQE)), a natural image quality distortion index (BRISQUE), and a video quality assessment model (video intrinsic integrity and distortion evaluation oracle (VIIDEO)). Performance evaluation is based on Pearson's correlation coefficient (PCC), Spearman's rank order correlation coefficient (SROCC), and the mean squared error (MSE) between the video quality scores predicted by each model and the subjective scores. On the test video dataset, our method achieves the highest correlation (PCC=0.8408, SROCC=0.8322) and the minimum MSE of 0.1131. This result indicates that our proposed method is more stable and can predict video quality more accurately than the other methods. By contrast, the video quality assessment model VIIDEO can hardly provide correct results, whereas UIQM and UCIQE demonstrate poor performance, with PCC and SROCC values of 0.3 to 0.4. In addition, BRISQUE performs relatively better than the other compared methods, although still worse than our method.

Conclusion: Underwater videos are characterized by blurred pictures, low contrast, color distortion, uneven lighting, large camera movement, and defocus.
To achieve an accurate assessment of underwater video quality, we fully consider the characteristics and shooting conditions of underwater videos and establish a labeled underwater video dataset with subjective video quality assessment. By fitting a linear regression model of the subjective quality scores on the natural statistical characteristics of video frames and the video compression parameters, we propose an objective underwater video quality assessment model. The proposed no-reference underwater video quality assessment method is suitable for establishing a prediction model that is highly correlated with human visual perception from an underwater video dataset with a small sample size.

Keywords: video quality assessment; objective quality assessment model; underwater video; natural scene statistics; compression parameters

Updated: 2024-05-07
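The three evaluation measures named in the Result part above (PCC, SROCC, and MSE) can be sketched as follows. This is a minimal illustration in plain Python, not the authors' evaluation code; the function names are hypothetical, and tied values are assumed to receive average ranks, which is the usual convention for Spearman's coefficient.

```python
import math


def pearson(x, y):
    """Pearson's correlation coefficient (PCC) between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    std_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (std_x * std_y)


def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def spearman(x, y):
    """Spearman's rank order correlation coefficient (SROCC): PCC computed on ranks."""
    return pearson(ranks(x), ranks(y))


def mse(predicted, subjective):
    """Mean squared error between predicted and subjective scores."""
    return sum((p - s) ** 2 for p, s in zip(predicted, subjective)) / len(predicted)
```

PCC measures how linearly the predicted scores follow the subjective scores, SROCC measures only whether the ordering agrees, and MSE measures the absolute prediction error on the 1 to 5 rating scale.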


Image Processing and Coding

-

Abstract: Objective: With the development of the textile industry, the manual identification of cloth can no longer meet the growing demands of production. Image recognition technologies are increasingly applied to cloth recognition. Image recognition is a technology that combines feature extraction and feature learning; it plays an important role in improving the competitiveness of the clothing industry. Compared with general-purpose images, cloth images usually show only subtle differences in texture and shape. Current clothing recognition algorithms are based on machine learning; that is, they learn the features of clothing images and compare them with the features of known fabrics to determine the clothing category. However, these algorithms usually have low recognition rates because they only consider visual attributes, which cannot fully describe the fabric, and ignore the properties of the fabric itself. Touch and vision are two important sensing modalities for humans, and they offer complementary information for sensing cloth. Machine learning can also benefit from such multimodal sensing ability. To solve the problem of low recognition accuracy for common fabrics, a fabric image recognition method based on fabric properties and tactile sensing is proposed.

Method: The proposed method involves four steps, namely, image measurement, tactile sensing, fabric learning, and fabric recognition. The main idea of the method is to use AlexNet to extract tactile image features adaptively and match them with the fabric properties extracted by MATLAB morphology. First, a geometric measurement method is established to measure the input fabric image samples, and a parametric model is obtained after quantitatively analyzing three key factors by testing the recovery, stretching, and bending behavior of different real cloth samples. The geometric measures of fabric properties can be obtained through parametric modeling.
Second, fabric tactile sensing is measured through tactile sensor settings, and the low-level features of tactile images are extracted using a convolutional neural network (CNN). Third, the fabric identification model is trained by matching the fabric geometric measures with the extracted features of the tactile images and by parameter learning through the CNN to learn the different parameters of fabric properties. Finally, the fabric is recognized, and results are obtained. In this study, cloth recognition is addressed on the basis of both tactile images and vision; in this manner, missing sensory information can be avoided. Furthermore, a new fusion method named deep maximum covariance analysis (DMCA) is utilized to learn a joint latent space for sharing features between vision and tactile sensing, which can match weakly paired vision and tactile data. Considering that current fabric datasets contain only a few fabric types, which do not cover everyday fabrics, two fabric sample datasets are constructed. The first is a fabric image dataset for fabric property measurement, including the recovery, stretching, and bending images of 12 fabric types, such as coarse cotton, fine cotton, and canvas. Each fabric type has 10 images for each of the three behaviors, giving a total of 360 images. The second is a fabric tactile image dataset, which includes 12 fabric types, each comprising 500 images, for a total of 6 000 images. The size of all images is set to 227×227 pixels for the convenience of the experiment.

Result: To verify the effectiveness of the proposed method, experiments are performed on 12 common fabric samples. Experimental results show that the average recognition accuracy can reach 89.5%. Compared with methods that use only a single kind or three kinds of fabric attributes, the proposed method obtains a higher recognition rate. The proposed method also achieves better recognition results than mainstream methods.
For example, compared with the recognition accuracy of sparse coding (SC) combined with a support vector machine (SVM), that of the proposed method increases to 89.5%.

Conclusion: A fabric image recognition method that combines vision and tactile sensing is proposed. The method can accurately identify fabric for clothing and improve the accuracy of fabric recognition. For the feature extraction task, the AlexNet network yields simplified high-dimensional features and can adaptively extract effective features, which avoids manual screening. Moreover, the DMCA model performs well in cross-modal matching. Compared with other clothing recognition methods, our method shows several advantages in terms of accuracy without requiring expensive equipment. However, our method does not yet address the loss of recognition accuracy caused by a small number of samples, a low dimension of the image measurement data, and a lack of tactile information. Future work will focus on improving the recognition accuracy for various fabric types.

Keywords: cloth recognition; fabric properties; tactile sensing; convolutional neural network (CNN); parameter learning

Updated: 2024-05-07

Abstract: Objective: Recognizing blurred car license plates is a bottleneck in the field of license plate recognition. The development of deep learning has opened a new research direction for this task. Benefiting from the strong feature extraction power of convolutional neural networks (CNN) and the good context learning capacity of convolutional recurrent neural networks (CRNN), license plate recognition has shifted from segmentation-based methods to end-to-end methods. Current deep learning-based license plate recognition methods suffer from two major problems. First, the models are much larger than traditional algorithms. In many applications, recognition algorithms must be deployed on embedded or mobile devices, and the large model size limits where they can be used. Thus, balancing recognition efficiency and model size is challenging for deep learning-based methods. Second, the recognition performance of deep learning-based methods relies on large datasets, yet a training dataset cannot be reused widely because license plate formats vary across countries. Manually collecting large numbers of license plate images is difficult in each country, let alone collecting blurred ones. Thus, the lack of training images is another challenge. In this study, a lightweight car license plate recognition method is proposed. License plate images generated by an optimized deep convolutional generative adversarial network (DCGAN) are used to compensate for the lack of training data. The method simultaneously increases recognition accuracy and improves the generality of the model.

Method: The method includes two parts: blurred license plate image generation based on an optimized DCGAN, and lightweight license plate recognition based on depthwise separable convolution networks with bidirectional long short-term memory (LSTM). In the first part, the Wasserstein distance is used to optimize the DCGAN and improve the diversity and stability of the generated blurred images. Generative adversarial networks (GAN) are usually designed to generate high-quality images and are seldom used to generate low-quality ones. License plate images are difficult to collect because of format diversity and privacy concerns. In this study, a GAN is used to generate low-quality images, that is, blurred license plate images, to augment the training dataset for deep license plate recognition models. In the second part, a lightweight recognition model is designed on the basis of depthwise separable convolution. Built on CRNN, the depthwise separable convolution operation not only reduces the computation of the recognition algorithm but also learns the features of the training samples effectively. Lastly, the feature maps are converted into feature sequences and fed into a bidirectional LSTM for sequence learning and labeling.

Result: Experimental results show that the proposed method is effective. First, adding the blurred license plate images generated by the DCGAN to the training dataset improves recognition accuracy, not only for the proposed method but also for the traditional method and other deep learning-based methods. Using generated images is therefore a feasible way for all kinds of methods to improve their recognition accuracy. Second, combining depthwise separable convolution layers with CRNN reduces the size of the recognition model and increases computation speed while maintaining recognition accuracy. After training with generated images, the proposed lightweight model achieves recognition accuracy similar to that of CRNN-based methods, while its size and recognition speed are better than those of the CRNN model. The proposed model is 45 MB and runs at 12.5 frames/s, whereas the CRNN-based model is 82 MB and runs at 7 frames/s. The high recognition accuracy and small size make the model better suited to deployment on mobile or embedded devices.

Conclusion: The lack of blurred license plate images can be addressed by using GAN-generated images. Moreover, the lightweight license plate recognition model, improved with depthwise separable convolution, has high recognition accuracy and generalization performance.

Keywords: blur car license plate recognition; deep learning; generative adversarial network (GAN); deep separable convolution; convolutional recurrent neural network (CRNN)

Updated: 2024-05-07
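To give a rough sense of why the separable convolution in the abstract shrinks the model, the sketch below counts the weights of a single layer. It reads the abstract's term as the standard depthwise separable convolution: one k×k filter per input channel, followed by a 1×1 pointwise convolution. The layer shape (3×3 kernel, 64 input channels, 128 output channels) and the function names are assumptions for illustration and are not taken from the paper. Biases are ignored.

```python
def standard_conv_params(kernel_size, in_channels, out_channels):
    """Weights in a standard convolution: one k x k x C_in filter per output channel."""
    return kernel_size * kernel_size * in_channels * out_channels


def depthwise_separable_params(kernel_size, in_channels, out_channels):
    """Weights in a depthwise separable convolution (bias terms ignored)."""
    depthwise = kernel_size * kernel_size * in_channels  # one k x k filter per input channel
    pointwise = in_channels * out_channels               # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Hypothetical layer shape, chosen only to make the arithmetic concrete.
k, c_in, c_out = 3, 64, 128
standard = standard_conv_params(k, c_in, c_out)           # 73728 weights
separable = depthwise_separable_params(k, c_in, c_out)    # 8768 weights
ratio = separable / standard                               # equals 1/c_out + 1/k**2, about 0.119
```

For this layer the separable form needs about 12% of the weights of the standard form. A whole-model saving such as the reported 82 MB to 45 MB is smaller than that per-layer figure, which is what one would expect when other parts of a model (for example, recurrent layers) are not made of separable convolutions.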
