最新刊期

卷 25 , 期 8 , 2020

-

摘要:Diffusion magnetic resonance imaging is currently the only non-invasive white matter fiber imaging method that provides a new tool for understanding the fiber structure of thee living brain and shows great significance in the fields of clinical medicine,disease analysis,and neuroscience. The diffusion of water molecules in the brain due to the influence of nerve fibers exhibits anisotropy. Diffusion magnetic resonance imaging indirectly characterizes the local structural information of a fiber by measuring the water molecule diffusion attenuation signal of each voxel. Fiber tracking is an important step in diffusion magnetic resonance imaging where the spatial orientation information of voxels is integrated to depict anatomically significant fiber space structures. Many studies on the white fiber tracking algorithm have been published over the past two decades since its introduction in 1998. However,a large number of studies and clinical applications have shown that this tracking algorithm reconstructs a large number of false fibers. To provide researchers with a systematic understanding of the field and to provide clinicians with a basis for selecting fiber tracking algorithms,this paper quantitatively evaluates and qualitatively compares nine of the most commonly used algorithms. The typical algorithms are introduced in detail from the perspectives of deterministic,probabilistic,and global optimization. The deterministic algorithm focuses on the streamlines tracking and fiber assignment by continuous tracking (FACT) algorithms. The probabilistic tracking algorithm focuses on the Bayesian probability tracking framework,the Bayesian-framework-based particle filtering tractography (PFT),and unscented Kalman filter (UKF). Meanwhile,the global tracking algorithm focuses on the graph-based fiber tracking and Gibbs tracking algorithms and introduces the anatomically constrained tractography (ACT) algorithm-which is commonly used in fiber tracking-and the fiber tracking algorithm combined with machine learning. The simulated Fibercup and International Society for Magnetic Resonance in Medicine(ISMRM) 2015 challenge data are then used to test and compare the results of the nine algorithms (TensorDet,SD_Stream,FACT,iFOD2,ACT_iFOD2,PFT,UKF,Gibbs,and MLBT(machine learning based tractograph)) and to calculate the Tractometer quantitative indicators of their results. The advantages and disadvantages of these algorithms are then determined,and clinical data are used for experimental verification. The intrinsic connection and differences among these algorithms are then analyzed by combining the experimental results with algorithm theory. The deterministic tracking algorithm selects the only largest possible direction for fiber tracking at each step. This algorithm is simple and easy to implement and can quickly obtain the fiber tracking result. However,the local direction of the fiber caused by the noise of the image voxel is inaccurate and further leads to deterministic tracking. Meanwhile,the probabilistic tracking algorithm selects the tracking direction of the fiber from its probability distribution in the local direction and produces a highly comprehensive fiber tracking result that can describe the complex fiber structure region. However,sampling from the probability distribution of the local direction of the fiber produces a large number of pseudofibers and subsequently produces confusing imaging results. The probabilistic fiber tracking based on the Bayesian framework calculates the posterior probability of the fiber distribution and samples the fiber tracking direction from the posterior probability,thereby effectively reducing the number of pseudofibers. The global fiber tracking algorithm is optimized from a global perspective to obtain the fiber trajectory that is most suitable for the global diffusion magnetic resonance imaging(dMRI) signal in order to avoid the cumulative error of the deterministic and probabilistic tracking algorithms. However,while the main structure of the fiber tracking results is obvious,their detailed structure is imperceptible. The calculation results also cannot guarantee convergence and require a large amount of calculations,which is not conducive to practical clinical application. The ACT algorithm is mainly applied as a screening mechanism for the fiber results and needs to be combined with other fiber tracking algorithms to reduce its error fiber ratio. The results have varying degrees of impact on subsequent fiber tracking algorithms based on the accuracy of the ACT step results. The machine learning algorithm guides the tracking of fiber trajectories through a random forest classifier generated via specimen training. However,the current machine learning algorithm only post-processes the fiber tracking results and needs to be trained with the tracking results of other algorithms. In this case,the fiber tracking results are greatly influenced by the training specimen. Fiber tracking has high research and application value for analyzing human brain nerve fiber connections. Different algorithms for fiber tracking have their own advantages and disadvantages. At present,a tracking algorithm that can address the disadvantages and combine the advantages of other algorithms is yet to be devised. The results of the proposed fiber tracking algorithm also show a certain gap from the actual situation,and drawing a highly accurate fiber trajectory remains a challenge.关键词:diffusion magnetic resonance imaging(dMRI);anisotropy;white matter tractography(WMT);Bayesian;global optimization;International Society for Magnetic Resonance in Medicine(ISMRM) 2015 challenge data20|38|3更新时间:2024-05-07

摘要:Diffusion magnetic resonance imaging is currently the only non-invasive white matter fiber imaging method that provides a new tool for understanding the fiber structure of thee living brain and shows great significance in the fields of clinical medicine,disease analysis,and neuroscience. The diffusion of water molecules in the brain due to the influence of nerve fibers exhibits anisotropy. Diffusion magnetic resonance imaging indirectly characterizes the local structural information of a fiber by measuring the water molecule diffusion attenuation signal of each voxel. Fiber tracking is an important step in diffusion magnetic resonance imaging where the spatial orientation information of voxels is integrated to depict anatomically significant fiber space structures. Many studies on the white fiber tracking algorithm have been published over the past two decades since its introduction in 1998. However,a large number of studies and clinical applications have shown that this tracking algorithm reconstructs a large number of false fibers. To provide researchers with a systematic understanding of the field and to provide clinicians with a basis for selecting fiber tracking algorithms,this paper quantitatively evaluates and qualitatively compares nine of the most commonly used algorithms. The typical algorithms are introduced in detail from the perspectives of deterministic,probabilistic,and global optimization. The deterministic algorithm focuses on the streamlines tracking and fiber assignment by continuous tracking (FACT) algorithms. The probabilistic tracking algorithm focuses on the Bayesian probability tracking framework,the Bayesian-framework-based particle filtering tractography (PFT),and unscented Kalman filter (UKF). Meanwhile,the global tracking algorithm focuses on the graph-based fiber tracking and Gibbs tracking algorithms and introduces the anatomically constrained tractography (ACT) algorithm-which is commonly used in fiber tracking-and the fiber tracking algorithm combined with machine learning. The simulated Fibercup and International Society for Magnetic Resonance in Medicine(ISMRM) 2015 challenge data are then used to test and compare the results of the nine algorithms (TensorDet,SD_Stream,FACT,iFOD2,ACT_iFOD2,PFT,UKF,Gibbs,and MLBT(machine learning based tractograph)) and to calculate the Tractometer quantitative indicators of their results. The advantages and disadvantages of these algorithms are then determined,and clinical data are used for experimental verification. The intrinsic connection and differences among these algorithms are then analyzed by combining the experimental results with algorithm theory. The deterministic tracking algorithm selects the only largest possible direction for fiber tracking at each step. This algorithm is simple and easy to implement and can quickly obtain the fiber tracking result. However,the local direction of the fiber caused by the noise of the image voxel is inaccurate and further leads to deterministic tracking. Meanwhile,the probabilistic tracking algorithm selects the tracking direction of the fiber from its probability distribution in the local direction and produces a highly comprehensive fiber tracking result that can describe the complex fiber structure region. However,sampling from the probability distribution of the local direction of the fiber produces a large number of pseudofibers and subsequently produces confusing imaging results. The probabilistic fiber tracking based on the Bayesian framework calculates the posterior probability of the fiber distribution and samples the fiber tracking direction from the posterior probability,thereby effectively reducing the number of pseudofibers. The global fiber tracking algorithm is optimized from a global perspective to obtain the fiber trajectory that is most suitable for the global diffusion magnetic resonance imaging(dMRI) signal in order to avoid the cumulative error of the deterministic and probabilistic tracking algorithms. However,while the main structure of the fiber tracking results is obvious,their detailed structure is imperceptible. The calculation results also cannot guarantee convergence and require a large amount of calculations,which is not conducive to practical clinical application. The ACT algorithm is mainly applied as a screening mechanism for the fiber results and needs to be combined with other fiber tracking algorithms to reduce its error fiber ratio. The results have varying degrees of impact on subsequent fiber tracking algorithms based on the accuracy of the ACT step results. The machine learning algorithm guides the tracking of fiber trajectories through a random forest classifier generated via specimen training. However,the current machine learning algorithm only post-processes the fiber tracking results and needs to be trained with the tracking results of other algorithms. In this case,the fiber tracking results are greatly influenced by the training specimen. Fiber tracking has high research and application value for analyzing human brain nerve fiber connections. Different algorithms for fiber tracking have their own advantages and disadvantages. At present,a tracking algorithm that can address the disadvantages and combine the advantages of other algorithms is yet to be devised. The results of the proposed fiber tracking algorithm also show a certain gap from the actual situation,and drawing a highly accurate fiber trajectory remains a challenge.关键词:diffusion magnetic resonance imaging(dMRI);anisotropy;white matter tractography(WMT);Bayesian;global optimization;International Society for Magnetic Resonance in Medicine(ISMRM) 2015 challenge data20|38|3更新时间:2024-05-07 -

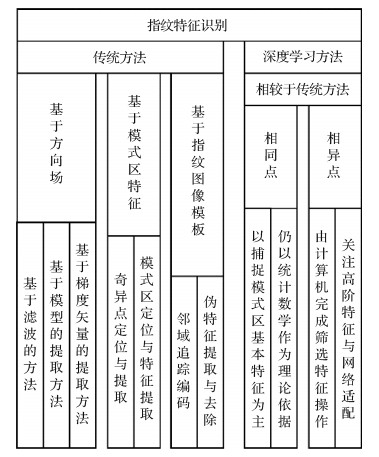

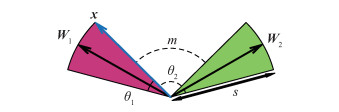

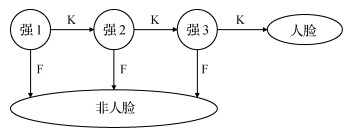

摘要:Biometrics,which is an important means of identity authentication,has been integrated into all aspects of daily life. The convenience and efficiency of single-modal biometrics and the reliability of multimodal biometrics have enabled the feature extraction technology to play a key role in directly affecting recognition results. As feature extraction techniques mature,researchers are turning their attention to the relevance of biometrics. In this research,the feature extraction methods in single-modal and multimodal biometrics are the object. We first review the feature extraction methods of face and fingerprint through the literature. The fingerprint feature extraction methods can roughly be divided into two categories. The first category calculates the fingerprint direction,which completes the estimation and judgment of the fingerprint local or the hole direction field. This method can be subdivided into three categories,which utilize gradient vector,filter,or mathematical model to build the fingerprint direction field. The second category targets the fingerprint pattern area,and the widely utilized methods are presented in this paper. Face feature extraction is based on the face representation process. Face representation can be divided into 2D-and 3D-based face representation methods according to different data represented by face. Pixels,including different color or points,are converted into feature vectors for different facial features that are invisible to the naked eye. In the traditional identification method,this recognition process relies on the accumulation of biometrics and recognition experience known to humans. A computer can learn and generalize when machine learning and deep learning are introduced. It can gradually overcome the cognitive deficit of humanity in face recognition and other fields. We analyze feature classification based on empirical knowledge and computer logic sampling extraction based on deep learning and operate these methods on single mode and multimode. The modeling of the correlation among biometrics that may progress in the future is explored on the basis of a comparison of multimodal biometrics. The knowledge gained in the field of computer science comes entirely from the natural evolution of our own or the Earth. Current results of single-modal and multimodal biometric technologies have saturated with the current requirements for identity verification applications. The high-efficiency and high-precision biofeature extraction method and the feature extraction requirements under the biorecognition framework are matched effectively. However,the study of the correlation among different biometrics remains blank. Such study is significant not only for image processing but also for many subdisciplines in the biological field. In this paper,we explain the feasibility of modeling the correlation among biometrics from the perspective of image processing. The assumption that biometrics can be converted into one another in the form of computer images is based on the following points:1) the origin of biometrics comes from DNA strands,which makes the characteristics of each individual possess mutualism and universality; 2) features obtained from images do not have the irreversibility of complex transformation processes,such as DNA to protein construction; 3) the aggregation,analysis,and coding of features can be realized on computers,and the vision of a computer gives it a stability that is far superior to that of human vision in the classification process. Biometric feature extraction methods based on single-modality and multimodality have been applied extensively. The current results of single-modal and multimodal biometric extraction techniques are reviewed,and the correlation between biometrics and their application prospects is determined.关键词:biometric feature extraction;fingerprint;face;multimodal;relevance53|161|2更新时间:2024-05-07

摘要:Biometrics,which is an important means of identity authentication,has been integrated into all aspects of daily life. The convenience and efficiency of single-modal biometrics and the reliability of multimodal biometrics have enabled the feature extraction technology to play a key role in directly affecting recognition results. As feature extraction techniques mature,researchers are turning their attention to the relevance of biometrics. In this research,the feature extraction methods in single-modal and multimodal biometrics are the object. We first review the feature extraction methods of face and fingerprint through the literature. The fingerprint feature extraction methods can roughly be divided into two categories. The first category calculates the fingerprint direction,which completes the estimation and judgment of the fingerprint local or the hole direction field. This method can be subdivided into three categories,which utilize gradient vector,filter,or mathematical model to build the fingerprint direction field. The second category targets the fingerprint pattern area,and the widely utilized methods are presented in this paper. Face feature extraction is based on the face representation process. Face representation can be divided into 2D-and 3D-based face representation methods according to different data represented by face. Pixels,including different color or points,are converted into feature vectors for different facial features that are invisible to the naked eye. In the traditional identification method,this recognition process relies on the accumulation of biometrics and recognition experience known to humans. A computer can learn and generalize when machine learning and deep learning are introduced. It can gradually overcome the cognitive deficit of humanity in face recognition and other fields. We analyze feature classification based on empirical knowledge and computer logic sampling extraction based on deep learning and operate these methods on single mode and multimode. The modeling of the correlation among biometrics that may progress in the future is explored on the basis of a comparison of multimodal biometrics. The knowledge gained in the field of computer science comes entirely from the natural evolution of our own or the Earth. Current results of single-modal and multimodal biometric technologies have saturated with the current requirements for identity verification applications. The high-efficiency and high-precision biofeature extraction method and the feature extraction requirements under the biorecognition framework are matched effectively. However,the study of the correlation among different biometrics remains blank. Such study is significant not only for image processing but also for many subdisciplines in the biological field. In this paper,we explain the feasibility of modeling the correlation among biometrics from the perspective of image processing. The assumption that biometrics can be converted into one another in the form of computer images is based on the following points:1) the origin of biometrics comes from DNA strands,which makes the characteristics of each individual possess mutualism and universality; 2) features obtained from images do not have the irreversibility of complex transformation processes,such as DNA to protein construction; 3) the aggregation,analysis,and coding of features can be realized on computers,and the vision of a computer gives it a stability that is far superior to that of human vision in the classification process. Biometric feature extraction methods based on single-modality and multimodality have been applied extensively. The current results of single-modal and multimodal biometric extraction techniques are reviewed,and the correlation between biometrics and their application prospects is determined.关键词:biometric feature extraction;fingerprint;face;multimodal;relevance53|161|2更新时间:2024-05-07

Review

-

摘要:ObjectiveGait has become a popular research topic that is currently investigated by using visual and machine learning methods. However,most of these studies are concentrated in the field of human identification and use 2D RGB images. In contrast to these studies,this paper investigates abnormal gait recognition by using 3D data. A method based on 3D point cloud data and the semantic body model is then proposed for view-invariant abnormal gait recognition. Compared with traditional 2D abnormal gait recognition approaches,the proposed 3D-based method can easily deal with many obstacles in abnormal gait modelling and recognition processes,including view-invariant problems and interference from external items.MethodThe point cloud data of human gait are obtained by using an infrared structured light sensor,which is a 3D depth camera that uses a structure projector and reflecting light receiver to gain the depth information of an object and calculate its point cloud data. Although the point cloud data of the human body are also in 3D,they are generally unstructured,thereby influencing the 3D representation of the human body and posture. To deal with this problem,a 3D parametric human body learned from the 3D body dataset by using a statistic method is introduced in this paper. The parameterized human body model refers to the description and construction of the corresponding visual human body mesh through abstract high-order semantic features,such as height,weight,age,gender,and skeletal joints. The parameters are determined by using statistical learning methods. The human body is embedded into the model,and the 3D parametric model can be deformed both in shapes and poses. Unlike traditional methods that directly model the 3D body from point cloud data via the point cloud reduction algorithm and triangle mesh grid method,the related 3D parameterized body model is deformed to fit the point cloud data in both shape and posture. The standard 3D human model proposed in this paper is constructed based on the body shape PCA (principal component analysis) analysis and skin method. An observation function that measures the similarity of the deformed 3D model with the raw point cloud data of the human body is also introduced. An accurate deformation of the 3D body is ensured by iteratively minimizing the observation function. After the 3D model estimation process,the features of the raw point cloud data of the human body are converted into a high-level structured representation of the human body. This process not only abstracts the unstructured data to a high-order semantic description but also effectively reduces the dimensionality of the original data. After 3D modelling and structured feature representation,a convolution gated recurrent unit (ConvGRU) recurrent neural network is applied to extract the temporal-spatial features of the projected depth gait images. ConvGRU has the advantages of both convolutional and recurrent neural networks,the latter of which is based on the gate structure. The tow gates (i.e.,reset and update gates) help the model memorize useful information and forget useless data. In the final classification process,the samples are divided into positive,negative,and anchor samples. The anchor sample is the sample itself,the positive samples are same-category samples that belong to different objects,and the negative samples are those that belong to opposite categories. Training the classifier by using the triples elements strategy can improve its ability to discriminate small feature differences of different categories. At the same time,a virtual 3D sample synthesizing method based on body,pose,and view deformation is proposed to deal with the data shortage problem of abnormal gait. Compared with normal gait datasets,abnormal gait data,especially 3D abnormal datasets,are rare and difficult to obtain. Moreover,given the limited amount of ground truth data,most of the abnormal data are imitated by the experimental participates. As a result,the virtual synthesizing method can help extend the training data and improve the generalization ability of the abnormal gait classification model.ResultExperiments were performed by using the CSU(Central South University) abnormal 3D gait database and the depth-included human action video (DHA) dataset,and different abnormal gait or action recognition methods were compared with the proposed approach. In the CSU abnormal gait database,the rank-1 mean detection and recognition rate of abnormal gait is 96.6% at the 0°,45°,and 90° views. In the 90°-0° cross view recognition experiment,the proposed method outperforms the other approaches that use DMHI(difference motion history image) or DMM-CNN(depth motion map-convolutional neural network) as feature representation by at least 25%. Meanwhile,in the DHA dataset,the proposed method result has a rank-1 mean detection and recognition rate of near 98%,which is 2% to 3% higher than that of novel approaches,including DMM based methods.ConclusionBased on the feature extraction method of the 3D parameterized human body model,abnormal gait image data can be abstracted to high-order descriptions and effectively complete the feature extraction and dimensionality reduction of the original data. ConvGRU can extract the spatial and temporal features of the abnormal gait data well. The virtual sample synthesis and triple classification methods can be combined to classify and recognize abnormal gait data from different views. The proposed method not only improves the recognition accuracy of abnormal gait under various view angles but also provides a new approach for the detection and recognition of abnormal gait.关键词:machine vision;human recognition;3D abnormal gait modeling;virtual sample generation;convolutional recurrent neural network28|18|0更新时间:2024-05-07

摘要:ObjectiveGait has become a popular research topic that is currently investigated by using visual and machine learning methods. However,most of these studies are concentrated in the field of human identification and use 2D RGB images. In contrast to these studies,this paper investigates abnormal gait recognition by using 3D data. A method based on 3D point cloud data and the semantic body model is then proposed for view-invariant abnormal gait recognition. Compared with traditional 2D abnormal gait recognition approaches,the proposed 3D-based method can easily deal with many obstacles in abnormal gait modelling and recognition processes,including view-invariant problems and interference from external items.MethodThe point cloud data of human gait are obtained by using an infrared structured light sensor,which is a 3D depth camera that uses a structure projector and reflecting light receiver to gain the depth information of an object and calculate its point cloud data. Although the point cloud data of the human body are also in 3D,they are generally unstructured,thereby influencing the 3D representation of the human body and posture. To deal with this problem,a 3D parametric human body learned from the 3D body dataset by using a statistic method is introduced in this paper. The parameterized human body model refers to the description and construction of the corresponding visual human body mesh through abstract high-order semantic features,such as height,weight,age,gender,and skeletal joints. The parameters are determined by using statistical learning methods. The human body is embedded into the model,and the 3D parametric model can be deformed both in shapes and poses. Unlike traditional methods that directly model the 3D body from point cloud data via the point cloud reduction algorithm and triangle mesh grid method,the related 3D parameterized body model is deformed to fit the point cloud data in both shape and posture. The standard 3D human model proposed in this paper is constructed based on the body shape PCA (principal component analysis) analysis and skin method. An observation function that measures the similarity of the deformed 3D model with the raw point cloud data of the human body is also introduced. An accurate deformation of the 3D body is ensured by iteratively minimizing the observation function. After the 3D model estimation process,the features of the raw point cloud data of the human body are converted into a high-level structured representation of the human body. This process not only abstracts the unstructured data to a high-order semantic description but also effectively reduces the dimensionality of the original data. After 3D modelling and structured feature representation,a convolution gated recurrent unit (ConvGRU) recurrent neural network is applied to extract the temporal-spatial features of the projected depth gait images. ConvGRU has the advantages of both convolutional and recurrent neural networks,the latter of which is based on the gate structure. The tow gates (i.e.,reset and update gates) help the model memorize useful information and forget useless data. In the final classification process,the samples are divided into positive,negative,and anchor samples. The anchor sample is the sample itself,the positive samples are same-category samples that belong to different objects,and the negative samples are those that belong to opposite categories. Training the classifier by using the triples elements strategy can improve its ability to discriminate small feature differences of different categories. At the same time,a virtual 3D sample synthesizing method based on body,pose,and view deformation is proposed to deal with the data shortage problem of abnormal gait. Compared with normal gait datasets,abnormal gait data,especially 3D abnormal datasets,are rare and difficult to obtain. Moreover,given the limited amount of ground truth data,most of the abnormal data are imitated by the experimental participates. As a result,the virtual synthesizing method can help extend the training data and improve the generalization ability of the abnormal gait classification model.ResultExperiments were performed by using the CSU(Central South University) abnormal 3D gait database and the depth-included human action video (DHA) dataset,and different abnormal gait or action recognition methods were compared with the proposed approach. In the CSU abnormal gait database,the rank-1 mean detection and recognition rate of abnormal gait is 96.6% at the 0°,45°,and 90° views. In the 90°-0° cross view recognition experiment,the proposed method outperforms the other approaches that use DMHI(difference motion history image) or DMM-CNN(depth motion map-convolutional neural network) as feature representation by at least 25%. Meanwhile,in the DHA dataset,the proposed method result has a rank-1 mean detection and recognition rate of near 98%,which is 2% to 3% higher than that of novel approaches,including DMM based methods.ConclusionBased on the feature extraction method of the 3D parameterized human body model,abnormal gait image data can be abstracted to high-order descriptions and effectively complete the feature extraction and dimensionality reduction of the original data. ConvGRU can extract the spatial and temporal features of the abnormal gait data well. The virtual sample synthesis and triple classification methods can be combined to classify and recognize abnormal gait data from different views. The proposed method not only improves the recognition accuracy of abnormal gait under various view angles but also provides a new approach for the detection and recognition of abnormal gait.关键词:machine vision;human recognition;3D abnormal gait modeling;virtual sample generation;convolutional recurrent neural network28|18|0更新时间:2024-05-07 -

摘要:ObjectiveA pointwise convolution network (PCN) has great potential for the classification and segmentation of point cloud data. The pointwise convolution operator in each layer directly functions on the point cloud data to generate the local feature vectors point-by-point. The PCN can solve the problems caused by dimension increase and information loss because of the avoidance of point data structuration. However,the structure of PCN is responsible for the consistent maintenance of the point cloud during the implementation of pointwise convolution. Consequently,the PCN has no constituent that can describe the global features of point cloud. The PCN essentially needs a characteristic expansion considering the global features in terms of classification and segmentation accuracies for the point cloud data.MethodA so-called central point radiation model is proposed in this paper to pointwisely describe the global geometric properties. With the introduction of the radiation model into feature concatenation,the result ant extended PCN (EPCN) realizes a complete representation of local and global features for point cloud classification and segmentation. First,the point cloud in the central point radiation model is regarded as a set of projection point on the object surface by a radiation line from the central point. The amplitude of radiation vector determines the owner surface and tightness of each point around the central point,and the direction specifies the pointwise encircling direction and contributing radiation line. The central point is prescribed by the calculation of coordinate information in the point cloud,and the pointwise radiation vector is generated. Thus,the central point radiation model is constructed to describe global features. Second,the local feature description of the point cloud data at each depth is obtained through a multilayer pointwise convolution. The coordinates of the point cloud data provide an index for retrieving point attributes and determining the neighborhood points involved in the convolution of a concerned point. The pointwise convolution traverses all points in the point cloud and completely yields the pointwise local features. Finally,an EPCN is derived through the concatenation of the global features from the central point radiation model and the local features from the pointwise convolution. The complete feature description vector is adopted as the input of the fully connected layer of PCN for the category label prediction,and the input of the pointwise convolution layer is used for the label prediction point-by-point.ResultThe classification and segmentation performance of the proposed EPCN model are validated on the point cloud data sets of ModelNet40 and S3DIS(Stanford large-scale 3D indoor dataset),respectively. The EPCN classification on ModelNet40 leads to 1.8% and 3.5% increases in mean and m-class accuracies compared with the PCN. The EPCN segmentation on S3DIS provides 0.7% and 2.2% increases in s-mean and ms-class accuracies compared with the PCN.ConclusionExperimental results verify the effectiveness of the proposed central point radiation model for the global feature extraction of the point cloud data. The EPCN model provides an improved performance in terms of classification and segmentation.关键词:point cloud;pointwise convolution;central point radiation model;classification;segmentation;feature extension67|31|3更新时间:2024-05-07

摘要:ObjectiveA pointwise convolution network (PCN) has great potential for the classification and segmentation of point cloud data. The pointwise convolution operator in each layer directly functions on the point cloud data to generate the local feature vectors point-by-point. The PCN can solve the problems caused by dimension increase and information loss because of the avoidance of point data structuration. However,the structure of PCN is responsible for the consistent maintenance of the point cloud during the implementation of pointwise convolution. Consequently,the PCN has no constituent that can describe the global features of point cloud. The PCN essentially needs a characteristic expansion considering the global features in terms of classification and segmentation accuracies for the point cloud data.MethodA so-called central point radiation model is proposed in this paper to pointwisely describe the global geometric properties. With the introduction of the radiation model into feature concatenation,the result ant extended PCN (EPCN) realizes a complete representation of local and global features for point cloud classification and segmentation. First,the point cloud in the central point radiation model is regarded as a set of projection point on the object surface by a radiation line from the central point. The amplitude of radiation vector determines the owner surface and tightness of each point around the central point,and the direction specifies the pointwise encircling direction and contributing radiation line. The central point is prescribed by the calculation of coordinate information in the point cloud,and the pointwise radiation vector is generated. Thus,the central point radiation model is constructed to describe global features. Second,the local feature description of the point cloud data at each depth is obtained through a multilayer pointwise convolution. The coordinates of the point cloud data provide an index for retrieving point attributes and determining the neighborhood points involved in the convolution of a concerned point. The pointwise convolution traverses all points in the point cloud and completely yields the pointwise local features. Finally,an EPCN is derived through the concatenation of the global features from the central point radiation model and the local features from the pointwise convolution. The complete feature description vector is adopted as the input of the fully connected layer of PCN for the category label prediction,and the input of the pointwise convolution layer is used for the label prediction point-by-point.ResultThe classification and segmentation performance of the proposed EPCN model are validated on the point cloud data sets of ModelNet40 and S3DIS(Stanford large-scale 3D indoor dataset),respectively. The EPCN classification on ModelNet40 leads to 1.8% and 3.5% increases in mean and m-class accuracies compared with the PCN. The EPCN segmentation on S3DIS provides 0.7% and 2.2% increases in s-mean and ms-class accuracies compared with the PCN.ConclusionExperimental results verify the effectiveness of the proposed central point radiation model for the global feature extraction of the point cloud data. The EPCN model provides an improved performance in terms of classification and segmentation.关键词:point cloud;pointwise convolution;central point radiation model;classification;segmentation;feature extension67|31|3更新时间:2024-05-07 -

摘要:ObjectiveVideo object segmentation is an important topic in the field of computer vision. However,the existing segmentation methods are unable to address some issues,such as irregular objects,noise optical flows,and fast movements. To this end,this paper proposes an effective and efficient algorithm that solves these issues based on feature consistency.MethodThe proposed segmentation algorithm framework is based on the graph theory method of Markov random field (MRF). First,the Gaussian mixture model (GMM) is applied to model the color features of pre-specified marked areas,and the segmented data items are obtained. Second,a spatiotemporal smoothing term is established by combining various characteristics,such as color and optical flow direction. The algorithm then adds energy constraints based on feature consistency to enhance the appearance consistency of the segmentation results. The added energy belongs to a higher-order energy constraint,thereby significantly increasing the computational complexity of energy optimization. The energy optimization problem is solved by adding auxiliary nodes to improve the speed of the algorithm. The higher-order constraint term comes from the idea of text classification,which is used in this paper to model the higher-order term of the segmentation equation. Each super-pixel point corresponds to a text,and the scale-invariant feature transform (SIFT) feature point in the super-pixel point is used as a word in the text. The higher-order term is modeled afterward via extraction and clustering. Given the running speed of the algorithm,auxiliary nodes are added to optimize the high-order term. The high-order term is approximated to the data and smoothing items,and then the graph cutting algorithm is used to complete the segmentation.ResultThe test data were taken from the DAVIS_2016(densely annotated video segmentation) dataset,which contains 50 sets of data,of which 30 and 20 are sets of training and verification data,respectively. This dataset has a resolution of 854×480 pixels. Given that many methods are based on MRF expansion,α=0.3 and β=0.2 are empirically set in the proposed algorithm to maintain a capability balance among the data,smoothing,and feature consistency items. Similar to extant methods,the number of submodels used to establish the Gaussian mixture model for the front/background is set to 5,σh=σh=0.1. This paper focuses on the verification and evaluation of the proposed feature consistency constraint terms and sets β=0 and β=0.2 to divide the videos under the constraint condition. The experimental results show that the IoU score with higher-order constraints is 10.2% higher than that without higher-order constraints. To demonstrate its effectiveness,the proposed method is compared with some other classical video segmentation algorithms based on graph theory. The experimental results highlight the competitive segmentation effect of the proposed algorithm. Meanwhile,the average IoU score reported in this paper is slightly lower than that of the video segmenfation via object flow(OFL) algorithm because the latter continuously iteratively optimizes the optical flow calculation results to achieve a relatively high segmentation accuracy. The proposed algorithm takes nearly 10 seconds on average to segment each frame,which is shorter than the running time of other algorithms. For instance,although the OFL algorithm reports a slightly higher accuracy,its average processing time for each frame is approximately 1 minute,which is 6 times longer than that of the proposed algorithm. In sum,the proposed algorithm can achieve the same segmentation effect with a much lower computational complexity than the OFL algorithm. However,the accuracy of its segmentation results is 1.6% lower than that of the results obtained by the OFL algorithm. Nevertheless,in terms of running speed,the proposed algorithm is ahead of other methods and is approximately 6 times faster than the OFL algorithm.ConclusionExperimental results show that when the current/background color is not clear enough,the foreground object and the background are often confused,thereby resulting in incorrect segmentation. However,when the global feature consistency constraint is added,the proposed algorithm can optimize the segmentation result of each frame by the feature statistics of the entire video. By using global information to optimize local information,the proposed segmentation method shows strong robustness to random noise,irregular motions,blurry backgrounds,and other problems in the video. According to the experimental results,the proposed algorithm spends most of its time in calculating the optical flow and can be replaced by a more efficient motion estimation algorithm in the future. However,compared with other segmentation algorithms,the proposed method shows great advantages in its performance. Based on the MRF framework,the proposed segmentation algorithm integrates the constraints of feature consistency and improves both segmentation accuracy and operation speed without increasing computational complexity. However,this method has several shortcomings. First,given that the proposed algorithm segments a video based on super pixels,the segmentation results depend on the segmentation accuracy of these super pixels. Second,the proposed high-order feature energy constraint has no obvious effect on feature-free regions because the SIFT feature points detected in similar regions will be greatly reduced,thereby creating super-pixel blocks that are unable to detect a sufficient number of feature points,which subsequently influences the global statistics of front/background features and prevents the proposed method from optimizing the segmentation results of feature-free regions. Similar to traditional methods,the optical flow creates a bottleneck in the performance of the proposed method. Therefore,additional efforts should be devoted in finding a highly efficient replacement strategy. As mentioned before,the method based on graph theory (including the proposed method) still lags behind the current end-to-end video segmentation methods based on convolutional neural network (CNN) in terms of segmentation accuracy. Future works should then attempt to combine these two approaches to benefit from their respective advantages.关键词:video object segmentation;feature consistency;Markov random field (MRF);auxiliary node;energy optimization30|30|3更新时间:2024-05-07

摘要:ObjectiveVideo object segmentation is an important topic in the field of computer vision. However,the existing segmentation methods are unable to address some issues,such as irregular objects,noise optical flows,and fast movements. To this end,this paper proposes an effective and efficient algorithm that solves these issues based on feature consistency.MethodThe proposed segmentation algorithm framework is based on the graph theory method of Markov random field (MRF). First,the Gaussian mixture model (GMM) is applied to model the color features of pre-specified marked areas,and the segmented data items are obtained. Second,a spatiotemporal smoothing term is established by combining various characteristics,such as color and optical flow direction. The algorithm then adds energy constraints based on feature consistency to enhance the appearance consistency of the segmentation results. The added energy belongs to a higher-order energy constraint,thereby significantly increasing the computational complexity of energy optimization. The energy optimization problem is solved by adding auxiliary nodes to improve the speed of the algorithm. The higher-order constraint term comes from the idea of text classification,which is used in this paper to model the higher-order term of the segmentation equation. Each super-pixel point corresponds to a text,and the scale-invariant feature transform (SIFT) feature point in the super-pixel point is used as a word in the text. The higher-order term is modeled afterward via extraction and clustering. Given the running speed of the algorithm,auxiliary nodes are added to optimize the high-order term. The high-order term is approximated to the data and smoothing items,and then the graph cutting algorithm is used to complete the segmentation.ResultThe test data were taken from the DAVIS_2016(densely annotated video segmentation) dataset,which contains 50 sets of data,of which 30 and 20 are sets of training and verification data,respectively. This dataset has a resolution of 854×480 pixels. Given that many methods are based on MRF expansion,α=0.3 and β=0.2 are empirically set in the proposed algorithm to maintain a capability balance among the data,smoothing,and feature consistency items. Similar to extant methods,the number of submodels used to establish the Gaussian mixture model for the front/background is set to 5,σh=σh=0.1. This paper focuses on the verification and evaluation of the proposed feature consistency constraint terms and sets β=0 and β=0.2 to divide the videos under the constraint condition. The experimental results show that the IoU score with higher-order constraints is 10.2% higher than that without higher-order constraints. To demonstrate its effectiveness,the proposed method is compared with some other classical video segmentation algorithms based on graph theory. The experimental results highlight the competitive segmentation effect of the proposed algorithm. Meanwhile,the average IoU score reported in this paper is slightly lower than that of the video segmenfation via object flow(OFL) algorithm because the latter continuously iteratively optimizes the optical flow calculation results to achieve a relatively high segmentation accuracy. The proposed algorithm takes nearly 10 seconds on average to segment each frame,which is shorter than the running time of other algorithms. For instance,although the OFL algorithm reports a slightly higher accuracy,its average processing time for each frame is approximately 1 minute,which is 6 times longer than that of the proposed algorithm. In sum,the proposed algorithm can achieve the same segmentation effect with a much lower computational complexity than the OFL algorithm. However,the accuracy of its segmentation results is 1.6% lower than that of the results obtained by the OFL algorithm. Nevertheless,in terms of running speed,the proposed algorithm is ahead of other methods and is approximately 6 times faster than the OFL algorithm.ConclusionExperimental results show that when the current/background color is not clear enough,the foreground object and the background are often confused,thereby resulting in incorrect segmentation. However,when the global feature consistency constraint is added,the proposed algorithm can optimize the segmentation result of each frame by the feature statistics of the entire video. By using global information to optimize local information,the proposed segmentation method shows strong robustness to random noise,irregular motions,blurry backgrounds,and other problems in the video. According to the experimental results,the proposed algorithm spends most of its time in calculating the optical flow and can be replaced by a more efficient motion estimation algorithm in the future. However,compared with other segmentation algorithms,the proposed method shows great advantages in its performance. Based on the MRF framework,the proposed segmentation algorithm integrates the constraints of feature consistency and improves both segmentation accuracy and operation speed without increasing computational complexity. However,this method has several shortcomings. First,given that the proposed algorithm segments a video based on super pixels,the segmentation results depend on the segmentation accuracy of these super pixels. Second,the proposed high-order feature energy constraint has no obvious effect on feature-free regions because the SIFT feature points detected in similar regions will be greatly reduced,thereby creating super-pixel blocks that are unable to detect a sufficient number of feature points,which subsequently influences the global statistics of front/background features and prevents the proposed method from optimizing the segmentation results of feature-free regions. Similar to traditional methods,the optical flow creates a bottleneck in the performance of the proposed method. Therefore,additional efforts should be devoted in finding a highly efficient replacement strategy. As mentioned before,the method based on graph theory (including the proposed method) still lags behind the current end-to-end video segmentation methods based on convolutional neural network (CNN) in terms of segmentation accuracy. Future works should then attempt to combine these two approaches to benefit from their respective advantages.关键词:video object segmentation;feature consistency;Markov random field (MRF);auxiliary node;energy optimization30|30|3更新时间:2024-05-07 -

摘要:ObjectiveLandmark recognition,which is a new application in computer vision,has been increasing investigated in the past several years and has been widely used to implement landmark image recognition function in image retrieval. However,this application has many problems unsolved,such as the global features are sensitive to view change,and the local features are sensitive to light change. Most existing methods based on convolutional neural network (CNN) are used to extract image features for replacing traditional feature extraction methods,such as scale-invariant feature transform(SIFT) or speeded up robust feature (SURF). At present,the best model is deep local feature(DeLF),but its retrieval needs the combination of product quantization(PQ) and K-dimensional(KD) trees. The process is complex and consumes approximately 6 GB of display memory,which is unsuitable for rapid deployment and use,and the most time-consuming process is random sample consensus.MethodA multiple feature fusion method is needed when focusing on the problems of a single feature,and multiple features can be horizontally connected to create a single vector for improving the performance of CNN global features. For large-scale landmark data,manual labeling of images is time consuming and laborious,and artificial cognitive bias exists in labeling. To minimize human work in labeling images,weakly supervised loss,such as the additive angular margin loss function(ArcFace loss function),which is improved from standard cross-entry loss and changes the Euclidean distances to angular domain,is used to train the model in image-level annotations. The ArcFace loss function performs well in facial recognition and image classification and is easy to use in other deep learning applications. This paper provides the values of the parameters in ArcFace loss function and the proof process. Thus,a weakly supervised recognition model based on ArcFace loss and multiple feature fusion is proposed for landmark recognition. The proposed model uses ResNet50 as its trunk and has two steps in model training,including the trunk's finetuning and attention layer's training. Finetuning uses the Google landmark image dataset,and the trunk is finetuned on the weights pretrained on the ImageNet dataset. The average pooling layer is replaced by a generalized mean(GeM) pooling layer because it is proven useful in image retrieval. The attention mechanism is built using two convolutional layers that use 1×1 kernel to train the features focusing on the local features needed. Image preprocessing is required before training. The preprocessing consists of three stages,including center crop/resize and random crop. People usually prefer to place buildings and themselves in the center of images. Thus,a center crop method is suitable to ignore the problems occurring in padding or resizing. The proposed model uses classification training to complete the image retrieval task. The final input image size is set to 4482. This value is a compromise value because the input image size in image retrieval is usually 800×8001 500×1 500 pixels and the classification size is 224×224~300×300 pixels. The image is center cropped first,and then its size is resized to 500×500 pixels because it is a useful method to enhance the data through random cropping. For inference,the image is center cropped and directly resized to 448×448 pixels because it only needs to be processed twice. The inference of this model is divided into three parts,namely,extracting global features,obtaining local features,and feature fusion. For the inputted query image,the global feature is first extracted from the embedding layer of CNN fine-tuned by ArcFace loss function; Second,the attention mechanism is used to obtain local features in the middle layer of the network,and the useful local features must be larger than the threshold; finally,two features are fused,and the results that are the most similar with the current query image in the database are obtained through image retrieval.ResultWe compared the proposed model with several state-of-the-art models,including the traditional approaches and deep learning methods on two public reviewed datasets,namely,Oxford and Paris building datasets. The two datasets are reconstructed in 2018 and are classified into three levels,namely,easy,medium,and hard. Three groups of comparisons are used in the experiment,and they are all compared on the reviewed Oxford and Paris datasets. The first group is to compare the proposed model's performance with other models,such as HesAff-rSIFT-VLAD and VggNet-NetVLAD. The second group is designed to compare the performance of single global feature with the performance of fused features. The last group compares the results obtained from the whiting of the extracted features at different layers of the proposed model. Results show that the feature fusion method can make the shallow network achieve the effect of deep pretrained network,and the mean average precision(mAP) increases by approximately 1% compared with the global features on the two previously mentioned datasets. The proposed model achieves satisfactory results in urban street view images.ConclusionIn this study,we proposed a composite model that contains a CNN,an attention model,and a fusion algorithm to fuse two types of features. Experimental results show that the proposed model performs well,the fusion algorithm improves its performance,and the performance in urban street datasets ensures the practical application value of the proposed model.关键词:landmark recognition;additive angular margin loss function(ArcFace loss function);attention mechanism;multiple features fusion;convolutional neural network(CNN)31|17|2更新时间:2024-05-07

摘要:ObjectiveLandmark recognition,which is a new application in computer vision,has been increasing investigated in the past several years and has been widely used to implement landmark image recognition function in image retrieval. However,this application has many problems unsolved,such as the global features are sensitive to view change,and the local features are sensitive to light change. Most existing methods based on convolutional neural network (CNN) are used to extract image features for replacing traditional feature extraction methods,such as scale-invariant feature transform(SIFT) or speeded up robust feature (SURF). At present,the best model is deep local feature(DeLF),but its retrieval needs the combination of product quantization(PQ) and K-dimensional(KD) trees. The process is complex and consumes approximately 6 GB of display memory,which is unsuitable for rapid deployment and use,and the most time-consuming process is random sample consensus.MethodA multiple feature fusion method is needed when focusing on the problems of a single feature,and multiple features can be horizontally connected to create a single vector for improving the performance of CNN global features. For large-scale landmark data,manual labeling of images is time consuming and laborious,and artificial cognitive bias exists in labeling. To minimize human work in labeling images,weakly supervised loss,such as the additive angular margin loss function(ArcFace loss function),which is improved from standard cross-entry loss and changes the Euclidean distances to angular domain,is used to train the model in image-level annotations. The ArcFace loss function performs well in facial recognition and image classification and is easy to use in other deep learning applications. This paper provides the values of the parameters in ArcFace loss function and the proof process. Thus,a weakly supervised recognition model based on ArcFace loss and multiple feature fusion is proposed for landmark recognition. The proposed model uses ResNet50 as its trunk and has two steps in model training,including the trunk's finetuning and attention layer's training. Finetuning uses the Google landmark image dataset,and the trunk is finetuned on the weights pretrained on the ImageNet dataset. The average pooling layer is replaced by a generalized mean(GeM) pooling layer because it is proven useful in image retrieval. The attention mechanism is built using two convolutional layers that use 1×1 kernel to train the features focusing on the local features needed. Image preprocessing is required before training. The preprocessing consists of three stages,including center crop/resize and random crop. People usually prefer to place buildings and themselves in the center of images. Thus,a center crop method is suitable to ignore the problems occurring in padding or resizing. The proposed model uses classification training to complete the image retrieval task. The final input image size is set to 4482. This value is a compromise value because the input image size in image retrieval is usually 800×8001 500×1 500 pixels and the classification size is 224×224~300×300 pixels. The image is center cropped first,and then its size is resized to 500×500 pixels because it is a useful method to enhance the data through random cropping. For inference,the image is center cropped and directly resized to 448×448 pixels because it only needs to be processed twice. The inference of this model is divided into three parts,namely,extracting global features,obtaining local features,and feature fusion. For the inputted query image,the global feature is first extracted from the embedding layer of CNN fine-tuned by ArcFace loss function; Second,the attention mechanism is used to obtain local features in the middle layer of the network,and the useful local features must be larger than the threshold; finally,two features are fused,and the results that are the most similar with the current query image in the database are obtained through image retrieval.ResultWe compared the proposed model with several state-of-the-art models,including the traditional approaches and deep learning methods on two public reviewed datasets,namely,Oxford and Paris building datasets. The two datasets are reconstructed in 2018 and are classified into three levels,namely,easy,medium,and hard. Three groups of comparisons are used in the experiment,and they are all compared on the reviewed Oxford and Paris datasets. The first group is to compare the proposed model's performance with other models,such as HesAff-rSIFT-VLAD and VggNet-NetVLAD. The second group is designed to compare the performance of single global feature with the performance of fused features. The last group compares the results obtained from the whiting of the extracted features at different layers of the proposed model. Results show that the feature fusion method can make the shallow network achieve the effect of deep pretrained network,and the mean average precision(mAP) increases by approximately 1% compared with the global features on the two previously mentioned datasets. The proposed model achieves satisfactory results in urban street view images.ConclusionIn this study,we proposed a composite model that contains a CNN,an attention model,and a fusion algorithm to fuse two types of features. Experimental results show that the proposed model performs well,the fusion algorithm improves its performance,and the performance in urban street datasets ensures the practical application value of the proposed model.关键词:landmark recognition;additive angular margin loss function(ArcFace loss function);attention mechanism;multiple features fusion;convolutional neural network(CNN)31|17|2更新时间:2024-05-07 -

摘要:ObjectiveFine-grained image retrieval is a major issue in current fine-grained image analysis and computer vision. Traditional methods typically retrieve similar replicated images, which are primarily based on large-scale coarse-grained retrieval but with low precision. Fine-grained image retrieval belongs to fine-grained image identification and retrieval subclasses. The traditional image retrieval task extracts only the coarse-grained features of images and cannot be effectively used for fine-grained retrieval. It also lacks key semantic attributes, and this deficiency brings difficulty in distinguishing the nuances among parts. The difficulty in fine-grained image retrieval is that the traditional coarse-grained feature extraction cannot represent images effectively. Fine-grained images of the same subclasses also cause a significant difference due to such factors as shape, posture, and color; consequently, search results cannot be effectively applied to actual needs. Compared with conventional image analysis problems, fine-grained image retrieval is more challenging due to the inter-level subcategories of its smaller class differences and the class differences within the larger ones. A fine-grained image retrieval method by part detection and semantic network for various shoe images is therefore proposed to solve the above-mentioned problems.MethodFirst, part-based detection is conducted to detect undetected shoe images through an annotated training dataset of shoe images. Second, the semantic network is trained based on the semantic attributes of the detected shoe and training images, and feature vectors are extracted. Third, principal component analysis is used for dimensionality reduction. Finally, the results are implemented and output by metric learning to calculate the similarity among images, and fine-grained image retrieval is implemented. On the UT-Zap50K dataset, fine-grained shoe attributes are defined for the shoe images in combination with the component area of shoes. The toe area defines a shape attribute that contains five attribute values. Two attributes of shape and height are defined for the heel area, which contains 13 attribute values. A height attribute is defined for the upper area, which contains four attribute values. A closed-mode attribute is defined for the upper area, which contains nine attribute values. Footwear global properties are defined to include colors and styles, which contain 20 attribute values.ResultThe experiment is compared with four methods with good retrieval performance on the UT-Zap50K dataset. The retrieval accuracy is improved by nearly 6%. Compared with the semantic hierarchy of attribute convolutional neural network(SHOE-CNN) retrieval method of the same task, the proposed method has higher retrieval accuracy. The proposed semantic network is compared with traditional GIST(generalized search trees) features, the linear support vector machine(LSVM) method, and the deep learning method to illustrate the effectiveness of the proposed retrieval method. The performance is evaluated in terms of the accuracy of top-K retrieval. Results show that the method based on deep learning is much better than the traditional GIST features and LSVM method. The retrieval accuracy of this method is better than that of the metric network and SHOE-CNN by combining a metric learning algorithm.ConclusionA fine-grained shoe image retrieval method is proposed to address the low accuracy of shoe image retrieval caused by the lack of fine visual description of traditional image features. The method can accurately detect different parts of a shoe image and define the detailed semantic attributes of the shoe image. The visual attribute features of the shoe image are obtained by training the semantic network. The problem of unsatisfied accuracy of shoe image retrieval caused by using only coarse-grained features to represent images is solved. The experimental results show that the proposed method can retrieve the same image as the image to be detected on the UT-Zap50K dataset. The accuracy can reach 80% and 86% while ensuring the running efficiency. However, this method exhibits shortcomings. On the one hand, the accuracy of partial image detection is low because of the many styles and complexity of shoes. On the other hand, the prediction of some semantic attributes is inaccurate, and the fine-grained semantic attributes of shoe images are imperfect. The follow-up work will focus on these issues to improve the search accuracy, and the application issues will be extended to different scenarios.关键词:fine-grained image retrieval;shoe image;part detection;semantic network;feature vector;metric learning56|79|3更新时间:2024-05-07

摘要:ObjectiveFine-grained image retrieval is a major issue in current fine-grained image analysis and computer vision. Traditional methods typically retrieve similar replicated images, which are primarily based on large-scale coarse-grained retrieval but with low precision. Fine-grained image retrieval belongs to fine-grained image identification and retrieval subclasses. The traditional image retrieval task extracts only the coarse-grained features of images and cannot be effectively used for fine-grained retrieval. It also lacks key semantic attributes, and this deficiency brings difficulty in distinguishing the nuances among parts. The difficulty in fine-grained image retrieval is that the traditional coarse-grained feature extraction cannot represent images effectively. Fine-grained images of the same subclasses also cause a significant difference due to such factors as shape, posture, and color; consequently, search results cannot be effectively applied to actual needs. Compared with conventional image analysis problems, fine-grained image retrieval is more challenging due to the inter-level subcategories of its smaller class differences and the class differences within the larger ones. A fine-grained image retrieval method by part detection and semantic network for various shoe images is therefore proposed to solve the above-mentioned problems.MethodFirst, part-based detection is conducted to detect undetected shoe images through an annotated training dataset of shoe images. Second, the semantic network is trained based on the semantic attributes of the detected shoe and training images, and feature vectors are extracted. Third, principal component analysis is used for dimensionality reduction. Finally, the results are implemented and output by metric learning to calculate the similarity among images, and fine-grained image retrieval is implemented. On the UT-Zap50K dataset, fine-grained shoe attributes are defined for the shoe images in combination with the component area of shoes. The toe area defines a shape attribute that contains five attribute values. Two attributes of shape and height are defined for the heel area, which contains 13 attribute values. A height attribute is defined for the upper area, which contains four attribute values. A closed-mode attribute is defined for the upper area, which contains nine attribute values. Footwear global properties are defined to include colors and styles, which contain 20 attribute values.ResultThe experiment is compared with four methods with good retrieval performance on the UT-Zap50K dataset. The retrieval accuracy is improved by nearly 6%. Compared with the semantic hierarchy of attribute convolutional neural network(SHOE-CNN) retrieval method of the same task, the proposed method has higher retrieval accuracy. The proposed semantic network is compared with traditional GIST(generalized search trees) features, the linear support vector machine(LSVM) method, and the deep learning method to illustrate the effectiveness of the proposed retrieval method. The performance is evaluated in terms of the accuracy of top-K retrieval. Results show that the method based on deep learning is much better than the traditional GIST features and LSVM method. The retrieval accuracy of this method is better than that of the metric network and SHOE-CNN by combining a metric learning algorithm.ConclusionA fine-grained shoe image retrieval method is proposed to address the low accuracy of shoe image retrieval caused by the lack of fine visual description of traditional image features. The method can accurately detect different parts of a shoe image and define the detailed semantic attributes of the shoe image. The visual attribute features of the shoe image are obtained by training the semantic network. The problem of unsatisfied accuracy of shoe image retrieval caused by using only coarse-grained features to represent images is solved. The experimental results show that the proposed method can retrieve the same image as the image to be detected on the UT-Zap50K dataset. The accuracy can reach 80% and 86% while ensuring the running efficiency. However, this method exhibits shortcomings. On the one hand, the accuracy of partial image detection is low because of the many styles and complexity of shoes. On the other hand, the prediction of some semantic attributes is inaccurate, and the fine-grained semantic attributes of shoe images are imperfect. The follow-up work will focus on these issues to improve the search accuracy, and the application issues will be extended to different scenarios.关键词:fine-grained image retrieval;shoe image;part detection;semantic network;feature vector;metric learning56|79|3更新时间:2024-05-07

Image Analysis and Recognition

-

摘要:ObjectiveWith the development of deep learning, the problem of image generation has achieved great progress. Text-to-image generation is an important research field based on deep learning image generation. A large number of related papers conducted by researchers have proposed to implement text-to-image. However, a significant limitation exists, that is, the model will behave poorly in terms of relationships when generating images involving multiple objects. The existing solution is to replace the description text with a scene graph structure that closely represents the scene relationship in the image and then use the scene graphs to generate an image. Scene graphs are the preferred structured representation between natural language and images, which is conducive to the transfer of information between objects in the graphs. Although the scene graphs to image generation model solve the problem of image generation, including multiple objects and relationships, the existing scene graphs to image generation model ultimately produce images with lower quality, and the object details are unremarkable compared with real samples. A model with improved performance should be developed to generate high-quality images and to solve large errors.MethodWe propose a model called image generation from scene graphs with a graph attention network (GA-SG2IM), which is an improved model implementing image generation from scene graphs, to generate high-quality images containing multiple objects and relationships. The proposed model mainly realizes image generation in three parts:First, a feature extraction network is used to realize the feature extraction of the scene graphs. The attention network of the graphs introduces the attention mechanism in the convolution network of original graphs, enabling the output object vector to have strong expression ability. The object vector is then passed to the improved object layout network for obtaining a respectful and factual scene layout. Finally, the scene layout is passed to the cascaded refinement network for obtaining the final output image. A network of discriminators consisting of an object discriminator and an image discriminator is connected to the end to ensure that the generated image is sufficiently realistic. At the same time, we use feature matching as our image loss function to ensure that the final generated and real images are similar in semantics and to obtain high-quality images.ResultWe use the COCO-Stuff image dataset to train and validate the proposed model. The dataset includes more than 40 000 images of different scenes, where each of them provides annotation information of the borders and segmentation masks of the objects in the image, and the annotation information can be used to synthesize and input the scene graph of the proposed model. We train the proposed model to generate 64×64 images and compare them with other image generation models to prove its feasibility. At the same time, the quantitative results of the Inception Score and the bounding box intersection over union(IoU) of the generated image are compared to determine the improvement effects of the proposed model and SG2IM(image generation from scene graph) and StackGAN models. The final experimental results show that the proposed model achieves an Inception Score of 7.8, which increases by 0.5 compared with the SG2IM model.ConclusionQualitative experimental results show that the proposed model can realize the generation of complex scene images containing multiple objects and relationships and improves the quality of the generated images to a certain extent, making the final generated images clear and the object details evident. A machine can autonomously model its input data and takes a step toward "wisdom" when it can generate high-quality images containing multiple objects and relationships. Our next goal is to enable the proposed model for generating real-time high-resolution images, such as photographic images, which requires many theoretical supports and practical operations.关键词:image generation from scene graphs;graph attention network;scene layout;feature matching;cascaded refinement network43|27|3更新时间:2024-05-07