最新刊期

卷 25 , 期 6 , 2020

-

摘要:Semantic segmentation is a fundamental task in computer vision applications, such as scene analysis and behavior recognition. The recent years have witnessed significant progress in semantic image segmentation based on deep convolutional neural network(DCNN). Semantic segmentation is a type of pixel-level image understanding with the objective of assigning a semantic label for each pixel of a given image. Object detection only locates the bounding box of the object, while the task of semantic segmentation is to segment an image into several meaningful objects and then assign a specific semantic label to each object. The difficulty of image semantic segmentation mostly originates from three aspects: object, category, and background. From the perspective of objects, when an object is in different lighting, angle of view, and distance, or when it is still or moving, the image taken will significantly differ. Occlusion may also occur between adjacent objects. In terms of categories, objects from the same category have dissimilarities and objects from different categories have similarities. From the background perspective, a simple background helps output accurate semantic segmentation results, but the background of real scenes is complex. In this study, we provide a systematic review of recent advances in DCNN methods for semantic segmentation. In this paper, we first discuss the difficulties and challenges in semantic segmentation and provide datasets and quantitative metrics for evaluating the performance of these methods. Then, we detail how recent CNN-based semantic segmentation methods work and analyze their strengths and limitations. According to whether to use pixel-level labeled images to train the network, these methods are grouped into two categories: supervised and weakly supervised learning-based semantic segmentation. Supervised semantic segmentation requires pixel-level annotations. By contrast, weakly supervised semantic segmentation aims to segment images by class labels, bounding boxes, and scribbles. In this study, we divide supervised semantic segmentation models into four groups: encoder-decoder methods, feature map-based methods, probability map-based methods, and various strategies. In an encoder-decoder network, an encoder module gradually reduces feature maps and captures high semantic information, while a decoder module gradually recovers spatial information. At present, most state-of-the-art deep CNN for semantic segmentation originate from a common forerunner, i.e., the fully convolutional network (FCN), which is an encoder-decoder network. FCN transforms existing and well-known classification models, such as AlexNet, visual geometry group 16-layer net (VGG16), GoogLeNet, and ResNet, into fully convolutional models by replacing fully connected layers with convolutional ones to output spatial maps instead of classification scores. Such maps are upsampled using deconvolutions to produce dense per-pixel labeled outputs. A feature map-based method aims to take complete advantage of the context information of a feature map, including its spatial context (position) and scale context (size), facilitating the segmentation and parsing of an image. These methods obtain the spatial and scale contexts by increasing the receptive field and fusing multiscale information, effectively improving the performance of the network. Some models, such as the pyramid scene parsing network or Deeplab v3, perform spatial pyramid pooling at several different scales (including image-level pooling) or apply several parallel atrous convolutions with different rates. These models have presented promising results by involving the spatial and scale contexts. A probability map-based method combines the semantic context (probability) and the spatial context (location) with postprocess probability score maps and semantic label predictions primarily through the use of a probabilistic graph model. A probabilistic graph is a probabilistic model that uses a graph to present conditional dependence between random variables. It is the combination of probability and graph theories. Probabilistic graph models have several types, such as conditional random fields (CRFs), Markov random fields, and Bayesian networks. Object boundary is refined and network performance is improved by establishing semantic relationships between pixels. This family of approaches typically includes CRF-recurrent neural networks, deep parsing networks, and EncNet. Some methods combine two or more of the aforementioned strategies to significantly improve the segmentation performance of a network, such as a global convolutional network, DeepLab v1, DeepLab v2, DeepLab v3+, and a discriminative feature network. In accordance with the type of weak supervision used by a training network, weakly supervised semantic segmentation methods are divided into four groups: class label-based, bounding box-based, scribble-based, and various forms of annotations. Class-label annotations only indicate the presence of an object. Thus, the substantial problem in class label-based methods is accurately assigning image-level labels to their corresponding pixels. In general, this problem can be solved by using the multiple instance learning-based strategy to train models for semantic segmentation or adopting an alternative training procedure based on the expectation-maximization algorithm to dynamically predict semantic foreground and background pixels. A recent work attempted to increase the quality of an object localization map by integrating a seed region growing technique into the segmentation network, significantly increasing pixel accuracy. Bounding box-based methods use bounding boxes and class labels as supervision information. By using region proposal methods and the traditional image segmentation theory to generate candidate segmentation masks, a convolutional network is trained under the supervision of these approximate segmentation masks. BoxSup proposes a recursive training procedure wherein a convolutional network is trained under the supervision of segment object proposals. In turn, the updated network improves the segmentation mask used for training. Scribble-supervised training methods apply a graphical model to propagate information from scribbles to unmarked pixels on the basis of spatial constraints, appearance, and semantic content, accounting for two tasks. The first task is to propagate the class labels from scribbles to other pixels and fully annotate an image. The second task is to learn a convolutional network for semantic segmentation. We compare some semantic segmentation methods of supervised learning and weakly supervised learning on the PASCAL VOC (pattern analysis, statistical modelling and computational learning visual object classes) 2012 dataset. We also give the optimal methods of supervised learning methools and wedakly supervised learning methods, and the corresponding MIoU(mean intersection-over-union). Lastly, we present related research areas, including video semantic segmentation, 3D dataset semantic segmentation, real-time semantic segmentation, and instance segmentation. Image semantic segmentation is a popular topic in the fields of computer vision and artificial intelligence. Many applications require accurate and efficient segmentation models, e.g., autonomous driving, indoor navigation, and smart medicine. Thus, further work should be conducted on semantic segmentation to improve the accuracy of object boundaries and the performance of semantic segmentation.关键词:semantic segmentation;convolutional neural network (CNN);supervised learning;weakly supervised learning68|109|22更新时间:2024-05-07

摘要:Semantic segmentation is a fundamental task in computer vision applications, such as scene analysis and behavior recognition. The recent years have witnessed significant progress in semantic image segmentation based on deep convolutional neural network(DCNN). Semantic segmentation is a type of pixel-level image understanding with the objective of assigning a semantic label for each pixel of a given image. Object detection only locates the bounding box of the object, while the task of semantic segmentation is to segment an image into several meaningful objects and then assign a specific semantic label to each object. The difficulty of image semantic segmentation mostly originates from three aspects: object, category, and background. From the perspective of objects, when an object is in different lighting, angle of view, and distance, or when it is still or moving, the image taken will significantly differ. Occlusion may also occur between adjacent objects. In terms of categories, objects from the same category have dissimilarities and objects from different categories have similarities. From the background perspective, a simple background helps output accurate semantic segmentation results, but the background of real scenes is complex. In this study, we provide a systematic review of recent advances in DCNN methods for semantic segmentation. In this paper, we first discuss the difficulties and challenges in semantic segmentation and provide datasets and quantitative metrics for evaluating the performance of these methods. Then, we detail how recent CNN-based semantic segmentation methods work and analyze their strengths and limitations. According to whether to use pixel-level labeled images to train the network, these methods are grouped into two categories: supervised and weakly supervised learning-based semantic segmentation. Supervised semantic segmentation requires pixel-level annotations. By contrast, weakly supervised semantic segmentation aims to segment images by class labels, bounding boxes, and scribbles. In this study, we divide supervised semantic segmentation models into four groups: encoder-decoder methods, feature map-based methods, probability map-based methods, and various strategies. In an encoder-decoder network, an encoder module gradually reduces feature maps and captures high semantic information, while a decoder module gradually recovers spatial information. At present, most state-of-the-art deep CNN for semantic segmentation originate from a common forerunner, i.e., the fully convolutional network (FCN), which is an encoder-decoder network. FCN transforms existing and well-known classification models, such as AlexNet, visual geometry group 16-layer net (VGG16), GoogLeNet, and ResNet, into fully convolutional models by replacing fully connected layers with convolutional ones to output spatial maps instead of classification scores. Such maps are upsampled using deconvolutions to produce dense per-pixel labeled outputs. A feature map-based method aims to take complete advantage of the context information of a feature map, including its spatial context (position) and scale context (size), facilitating the segmentation and parsing of an image. These methods obtain the spatial and scale contexts by increasing the receptive field and fusing multiscale information, effectively improving the performance of the network. Some models, such as the pyramid scene parsing network or Deeplab v3, perform spatial pyramid pooling at several different scales (including image-level pooling) or apply several parallel atrous convolutions with different rates. These models have presented promising results by involving the spatial and scale contexts. A probability map-based method combines the semantic context (probability) and the spatial context (location) with postprocess probability score maps and semantic label predictions primarily through the use of a probabilistic graph model. A probabilistic graph is a probabilistic model that uses a graph to present conditional dependence between random variables. It is the combination of probability and graph theories. Probabilistic graph models have several types, such as conditional random fields (CRFs), Markov random fields, and Bayesian networks. Object boundary is refined and network performance is improved by establishing semantic relationships between pixels. This family of approaches typically includes CRF-recurrent neural networks, deep parsing networks, and EncNet. Some methods combine two or more of the aforementioned strategies to significantly improve the segmentation performance of a network, such as a global convolutional network, DeepLab v1, DeepLab v2, DeepLab v3+, and a discriminative feature network. In accordance with the type of weak supervision used by a training network, weakly supervised semantic segmentation methods are divided into four groups: class label-based, bounding box-based, scribble-based, and various forms of annotations. Class-label annotations only indicate the presence of an object. Thus, the substantial problem in class label-based methods is accurately assigning image-level labels to their corresponding pixels. In general, this problem can be solved by using the multiple instance learning-based strategy to train models for semantic segmentation or adopting an alternative training procedure based on the expectation-maximization algorithm to dynamically predict semantic foreground and background pixels. A recent work attempted to increase the quality of an object localization map by integrating a seed region growing technique into the segmentation network, significantly increasing pixel accuracy. Bounding box-based methods use bounding boxes and class labels as supervision information. By using region proposal methods and the traditional image segmentation theory to generate candidate segmentation masks, a convolutional network is trained under the supervision of these approximate segmentation masks. BoxSup proposes a recursive training procedure wherein a convolutional network is trained under the supervision of segment object proposals. In turn, the updated network improves the segmentation mask used for training. Scribble-supervised training methods apply a graphical model to propagate information from scribbles to unmarked pixels on the basis of spatial constraints, appearance, and semantic content, accounting for two tasks. The first task is to propagate the class labels from scribbles to other pixels and fully annotate an image. The second task is to learn a convolutional network for semantic segmentation. We compare some semantic segmentation methods of supervised learning and weakly supervised learning on the PASCAL VOC (pattern analysis, statistical modelling and computational learning visual object classes) 2012 dataset. We also give the optimal methods of supervised learning methools and wedakly supervised learning methods, and the corresponding MIoU(mean intersection-over-union). Lastly, we present related research areas, including video semantic segmentation, 3D dataset semantic segmentation, real-time semantic segmentation, and instance segmentation. Image semantic segmentation is a popular topic in the fields of computer vision and artificial intelligence. Many applications require accurate and efficient segmentation models, e.g., autonomous driving, indoor navigation, and smart medicine. Thus, further work should be conducted on semantic segmentation to improve the accuracy of object boundaries and the performance of semantic segmentation.关键词:semantic segmentation;convolutional neural network (CNN);supervised learning;weakly supervised learning68|109|22更新时间:2024-05-07

Review

-

摘要:ObjectiveUnder the background of the continuously increasing quantity of digital documents transmitted over the Internet, efficient and practical data hiding techniques should be designed to protect intellectual property rights. Digital watermarking techniques have been historically used to ensure security in terms of ownership protection and tamper-proofing for various data formats, including images, audio, video, natural language processing software, and relational databases. This study focuses on audio watermarking. In general, digital audio watermarking refers to the technology of embedding useful data (watermark data) within a host audio without substantially degrading the perceptual quality of the host audio. For different purposes, audio watermarking can be divided into two classifications: robust and fragile audio watermarking. The former is used to protect ownership of digital audio. By contrast, the latter is used to authenticate digital audio, i.e., to ensure the integrity of digital audio. A digital watermarking scheme generally has three major properties: imperceptibility, robustness, and payload. Imperceptibility indicates that the watermarked audio is perceptually indistinguishable from the original one. This property is required to maintain the commercial value of audio data or the secrecy of embedded data. Robustness refers to the ability of a watermark to survive various attacks, such as JPEG/MP3 compression, additive noise, filtering, and amplification. Payload refers to the total amount of information that can be hidden within digital audio. Imperceptibility, robustness, and payload are three major requirements of any digital audio watermarking system to guarantee desired functionalities. However, a trade-off exists among them from the information-theoretic perspective. Simultaneously improving robustness, imperceptibility, and payload has been a challenge for digital audio watermarking algorithms. A digital audio watermarking scheme must be robust against various possible attacks. Attacks that attempt to destroy or invalidate watermarks can be classified into two types: noise-like common signal processing operations and desynchronization attacks. Desynchronization attacks are more difficult to address than other types of attacks. Designing a robust digital audio watermarking algorithm against desynchronization attacks is a challenging task.MethodIn this study, we propose a new second-generation digital audio watermarking in the undecimated discrete wavelet transform (UDWT) domain based on robust local audio features. First, robust audio feature points are detected by utilizing a smooth gradient. These feature points are always invariant to common signal processing operations and desynchronization attacks. Then, local digital audio segments, centering at the detected audio feature points, are extracted for watermarking use. Lastly, a watermark is embedded into local digital audio segments in the UDWT domain by modulating low-frequency coefficients. We use robust significant UDWT coefficients that can effectively capture important audio texture features to accurately locate watermark embedding/extraction position, even when under desynchronization attacks.ResultTo evaluate the performance of our scheme, watermark imperceptibility and robustness tests are conducted for the proposed watermarking algorithm. The watermark detection results of the proposed algorithm are compared with those of several state-of-the-art audio watermarking schemes against various attacks under equal conditions. All the audio signals in the test are music with 16 bit/sample, 44.1 kHz sample rates, and 15 s duration. All our experiments are executed on a personal computer with Intel Core i7-4790 CPU 3.60 GHz, 16 GB memory, and Microsoft Windows 7 Ultimate operating system. Moreover, MATLAB R2016a is used to perform the simulation experiments. To quantitatively evaluate the imperceptibility performance of the proposed watermarking algorithm, we also calculate signal-to-noise ratio (SNR), which is an objective criterion and always used to evaluate audio quality. The SNR of the proposed scheme is improved by 5.7 dB on average, demonstrating its effectiveness in terms of the invisibility of the watermark. Watermark robustness is measured as the correctly extracted percentage of extracted segments. The average detection rate remains at 0.925 and 0.913, which are higher than those of most traditional algorithms. Therefore, the experimental results show that the proposed approach exhibits good transparency and strong robustness against common audio processing activities, such as MP3 compression, resampling, and requantization. The scheme also demonstrates good robustness against desynchronization attacks, such as random cropping, pitch-scale modification, and jittering.ConclusionAn audio watermarking algorithm based on robust feature points of the wavelet domain is proposed on the basis of audio content features and the stability of the low-frequency coefficient of UDWT. First, the original audio is dealt with using UDWT, and then by calculating the first-order gradient responses of the low-frequency coefficient, ranking these responses in descending order, and selecting the highest response as criterion to set the threshold. From these processes, stable and evenly distributed feature points are obtained. Then, the robust feature point is set for identification, and audio watermarking is embedded. Finally, the low-frequency coefficient is inserted into watermarking via quantization index modulation. The proposed scheme effectively solves the disadvantages of poor stability and uneven distribution of audio feature points, improving the resistance of digital audio watermarks to pitch-scale modification, random cropping, and jittering attacks.关键词:digital audio watermarking;desynchronization attacks;feature points;smooth gradient;undecimated discrete wavelet transform (UDWT)34|24|1更新时间:2024-05-07

摘要:ObjectiveUnder the background of the continuously increasing quantity of digital documents transmitted over the Internet, efficient and practical data hiding techniques should be designed to protect intellectual property rights. Digital watermarking techniques have been historically used to ensure security in terms of ownership protection and tamper-proofing for various data formats, including images, audio, video, natural language processing software, and relational databases. This study focuses on audio watermarking. In general, digital audio watermarking refers to the technology of embedding useful data (watermark data) within a host audio without substantially degrading the perceptual quality of the host audio. For different purposes, audio watermarking can be divided into two classifications: robust and fragile audio watermarking. The former is used to protect ownership of digital audio. By contrast, the latter is used to authenticate digital audio, i.e., to ensure the integrity of digital audio. A digital watermarking scheme generally has three major properties: imperceptibility, robustness, and payload. Imperceptibility indicates that the watermarked audio is perceptually indistinguishable from the original one. This property is required to maintain the commercial value of audio data or the secrecy of embedded data. Robustness refers to the ability of a watermark to survive various attacks, such as JPEG/MP3 compression, additive noise, filtering, and amplification. Payload refers to the total amount of information that can be hidden within digital audio. Imperceptibility, robustness, and payload are three major requirements of any digital audio watermarking system to guarantee desired functionalities. However, a trade-off exists among them from the information-theoretic perspective. Simultaneously improving robustness, imperceptibility, and payload has been a challenge for digital audio watermarking algorithms. A digital audio watermarking scheme must be robust against various possible attacks. Attacks that attempt to destroy or invalidate watermarks can be classified into two types: noise-like common signal processing operations and desynchronization attacks. Desynchronization attacks are more difficult to address than other types of attacks. Designing a robust digital audio watermarking algorithm against desynchronization attacks is a challenging task.MethodIn this study, we propose a new second-generation digital audio watermarking in the undecimated discrete wavelet transform (UDWT) domain based on robust local audio features. First, robust audio feature points are detected by utilizing a smooth gradient. These feature points are always invariant to common signal processing operations and desynchronization attacks. Then, local digital audio segments, centering at the detected audio feature points, are extracted for watermarking use. Lastly, a watermark is embedded into local digital audio segments in the UDWT domain by modulating low-frequency coefficients. We use robust significant UDWT coefficients that can effectively capture important audio texture features to accurately locate watermark embedding/extraction position, even when under desynchronization attacks.ResultTo evaluate the performance of our scheme, watermark imperceptibility and robustness tests are conducted for the proposed watermarking algorithm. The watermark detection results of the proposed algorithm are compared with those of several state-of-the-art audio watermarking schemes against various attacks under equal conditions. All the audio signals in the test are music with 16 bit/sample, 44.1 kHz sample rates, and 15 s duration. All our experiments are executed on a personal computer with Intel Core i7-4790 CPU 3.60 GHz, 16 GB memory, and Microsoft Windows 7 Ultimate operating system. Moreover, MATLAB R2016a is used to perform the simulation experiments. To quantitatively evaluate the imperceptibility performance of the proposed watermarking algorithm, we also calculate signal-to-noise ratio (SNR), which is an objective criterion and always used to evaluate audio quality. The SNR of the proposed scheme is improved by 5.7 dB on average, demonstrating its effectiveness in terms of the invisibility of the watermark. Watermark robustness is measured as the correctly extracted percentage of extracted segments. The average detection rate remains at 0.925 and 0.913, which are higher than those of most traditional algorithms. Therefore, the experimental results show that the proposed approach exhibits good transparency and strong robustness against common audio processing activities, such as MP3 compression, resampling, and requantization. The scheme also demonstrates good robustness against desynchronization attacks, such as random cropping, pitch-scale modification, and jittering.ConclusionAn audio watermarking algorithm based on robust feature points of the wavelet domain is proposed on the basis of audio content features and the stability of the low-frequency coefficient of UDWT. First, the original audio is dealt with using UDWT, and then by calculating the first-order gradient responses of the low-frequency coefficient, ranking these responses in descending order, and selecting the highest response as criterion to set the threshold. From these processes, stable and evenly distributed feature points are obtained. Then, the robust feature point is set for identification, and audio watermarking is embedded. Finally, the low-frequency coefficient is inserted into watermarking via quantization index modulation. The proposed scheme effectively solves the disadvantages of poor stability and uneven distribution of audio feature points, improving the resistance of digital audio watermarks to pitch-scale modification, random cropping, and jittering attacks.关键词:digital audio watermarking;desynchronization attacks;feature points;smooth gradient;undecimated discrete wavelet transform (UDWT)34|24|1更新时间:2024-05-07

Image Processing and Coding

-

摘要:ObjectiveMany saliency detection algorithms use background priors to improve algorithm performance. In the past, however, most traditional models simply used the edge region around an image as the background region, resulting in false detection in cases wherein a salient object touches the edge of the image. To accurately apply background priors, we propose a saliency detection method that integrates the background block reselection process.MethodFirst, the original image is segmented using a superpixel segmentation algorithm, namely, simple linear iterative clustering (SLIC), to generate a superpixel image. Then, a background prior, a central prior, and a color distribution feature are used to select a partial superpixel block from the superpixel image to form a seed vector, which constructs a diffusion matrix. Second, the seed vector is diffused by the diffusion matrix to obtain a preliminary saliency map. Then, the preliminary saliency map is used as an input and then diffused by the diffusion matrix to obtain a second saliency map to obtain high-level features. Third, we develop a background block reselection process in accordance with the idea of Fisher's criterion. The two-layer saliency map is first fed into the background block reselection algorithm to extract background blocks. Then, we use the selected background blocks to form the background vector, which can be utilized to construct a new diffusion matrix. Lastly, the seed vector is diffused by the new diffusion matrix to obtain a background saliency map. Fourth, the background and two-layer saliency maps are nonlinearly fused to obtain the final saliency map.ResultThe experiments are performed on five general datasets: Microsoft Research Asia 10K (MSRA10K), extended complex scene saliency dataset (ECSSD), Dalian University of Technology and OMRON Corporation (DUT-OMRON), salient object dataset (SOD), and segmentation evaluation database 2 (SED2). Our method is compared with six recent algorithms, namely, generic promotion of diffusion-based salient object detection (GP), inner and inter label propagation: salient object detection in the wild (LPS), saliency detection via cellular automata (BSCA), salient object detection via structured matrix decomposition (SMD), salient region detection using a diffusion process on a two-layer sparse graph (TSG), and salient object detection via a multifeature diffusion-based method LMH (salient object detection via multi-feature diffusion-based method), by using three evaluation indicators: PR(precision-recall) curve, F index, and mean absolute error (MAE). On the MSRA10K dataset, MAE achieved the minimum value in all the comparison algorithms. Compared with the preimproved algorithm LMH, the F value increased by 0.84% and MAE decreased by 1.9%. On the ECSSD dataset, MAE was the second and the F value reached the maximum value in all the methods. Compared with the algorithm LMH, the F value increased by 1.33%. On the SED2 dataset, MAE and F values were both second in all the methods. Compared with the algorithm LMH, the F value increased by 0.7% and MAE decreased by 0.93%. Simultaneously, we separately extract the generated background saliency map and the final saliency map from our method and compare them with the corresponding high-level saliency map and final saliency map generated using the algorithm LMH. The experiment shows that our method also performs better at the subjective level. The salient objects in the saliency map are more complete and exhibit higher confidence, which is consistent with the phenomenon that recall rate in the objective comparison is better than that of the algorithm LMH. In addition, we experimentally verify the process of dynamically selecting thresholds in the proposed background block reselection process. The F-indexes obtained on three datasets (MSRA10K, SOD, and SED2) are better than those in the corresponding static processes. On ECSSD, the performance on the dataset is basically the same as that in the static process. However, the performance on the DUT-OMRON dataset is not as good as that in the static process. Consequently, we conduct theoretical analysis and verify the experiment by increasing the selection interval of the background block.ConclusionThe proposed saliency detection method can better apply the background prior, such that the final detection effect is better at the subjective and objective indicator levels. Simultaneously, the proposed method performs better when dealing with the type of image in which the salient region touches the edge of the image. In addition, the comparative experiment on the dynamic selection process of thresholds shows that the process of dynamically selecting thresholds is effective and reliable.关键词:saliency detection;background priori;re-selection of background block;Fisher criterion;diffusion method19|25|1更新时间:2024-05-07

摘要:ObjectiveMany saliency detection algorithms use background priors to improve algorithm performance. In the past, however, most traditional models simply used the edge region around an image as the background region, resulting in false detection in cases wherein a salient object touches the edge of the image. To accurately apply background priors, we propose a saliency detection method that integrates the background block reselection process.MethodFirst, the original image is segmented using a superpixel segmentation algorithm, namely, simple linear iterative clustering (SLIC), to generate a superpixel image. Then, a background prior, a central prior, and a color distribution feature are used to select a partial superpixel block from the superpixel image to form a seed vector, which constructs a diffusion matrix. Second, the seed vector is diffused by the diffusion matrix to obtain a preliminary saliency map. Then, the preliminary saliency map is used as an input and then diffused by the diffusion matrix to obtain a second saliency map to obtain high-level features. Third, we develop a background block reselection process in accordance with the idea of Fisher's criterion. The two-layer saliency map is first fed into the background block reselection algorithm to extract background blocks. Then, we use the selected background blocks to form the background vector, which can be utilized to construct a new diffusion matrix. Lastly, the seed vector is diffused by the new diffusion matrix to obtain a background saliency map. Fourth, the background and two-layer saliency maps are nonlinearly fused to obtain the final saliency map.ResultThe experiments are performed on five general datasets: Microsoft Research Asia 10K (MSRA10K), extended complex scene saliency dataset (ECSSD), Dalian University of Technology and OMRON Corporation (DUT-OMRON), salient object dataset (SOD), and segmentation evaluation database 2 (SED2). Our method is compared with six recent algorithms, namely, generic promotion of diffusion-based salient object detection (GP), inner and inter label propagation: salient object detection in the wild (LPS), saliency detection via cellular automata (BSCA), salient object detection via structured matrix decomposition (SMD), salient region detection using a diffusion process on a two-layer sparse graph (TSG), and salient object detection via a multifeature diffusion-based method LMH (salient object detection via multi-feature diffusion-based method), by using three evaluation indicators: PR(precision-recall) curve, F index, and mean absolute error (MAE). On the MSRA10K dataset, MAE achieved the minimum value in all the comparison algorithms. Compared with the preimproved algorithm LMH, the F value increased by 0.84% and MAE decreased by 1.9%. On the ECSSD dataset, MAE was the second and the F value reached the maximum value in all the methods. Compared with the algorithm LMH, the F value increased by 1.33%. On the SED2 dataset, MAE and F values were both second in all the methods. Compared with the algorithm LMH, the F value increased by 0.7% and MAE decreased by 0.93%. Simultaneously, we separately extract the generated background saliency map and the final saliency map from our method and compare them with the corresponding high-level saliency map and final saliency map generated using the algorithm LMH. The experiment shows that our method also performs better at the subjective level. The salient objects in the saliency map are more complete and exhibit higher confidence, which is consistent with the phenomenon that recall rate in the objective comparison is better than that of the algorithm LMH. In addition, we experimentally verify the process of dynamically selecting thresholds in the proposed background block reselection process. The F-indexes obtained on three datasets (MSRA10K, SOD, and SED2) are better than those in the corresponding static processes. On ECSSD, the performance on the dataset is basically the same as that in the static process. However, the performance on the DUT-OMRON dataset is not as good as that in the static process. Consequently, we conduct theoretical analysis and verify the experiment by increasing the selection interval of the background block.ConclusionThe proposed saliency detection method can better apply the background prior, such that the final detection effect is better at the subjective and objective indicator levels. Simultaneously, the proposed method performs better when dealing with the type of image in which the salient region touches the edge of the image. In addition, the comparative experiment on the dynamic selection process of thresholds shows that the process of dynamically selecting thresholds is effective and reliable.关键词:saliency detection;background priori;re-selection of background block;Fisher criterion;diffusion method19|25|1更新时间:2024-05-07 -

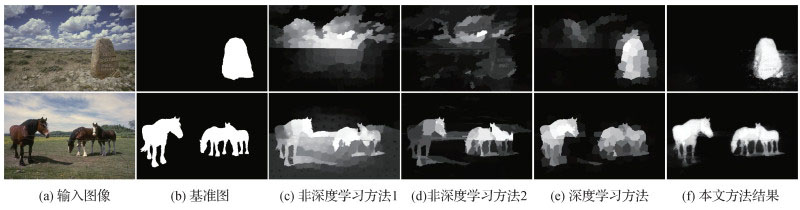

摘要:ObjectiveSalient object detection aims to localize and segment the most conspicuous and eye-attracting objects or regions in an image. Its results are usually expressed by saliency maps, in which the intensity of each pixel presents the strength of the probability that the pixel belongs to a salient region. Visual saliency detection has been used as a pre-processing step to facilitate a wide range of vision applications, including image and video compression, image retargeting, visual tracking, and robot navigation. Traditional saliency detection models focus on handcrafted features and prior information for detection, such as background prior, center prior, and contrast prior. However, these models are less applicable to a wide range of problems in practice. For example, salient objects are difficult to recognize when the background and salient objects share similar visual attributes. Moreover, failure may occur when multiple salient objects overlap partly or entirely with one another. With the rise of deep convolutional neural networks (CNNs), visual saliency detection has achieved rapid progress in the recent years. It has been successful in overcoming the disadvantages of handcrafted-feature-based approaches and greatly enhancing the performance of saliency detection. These CNNs-based models have shown their superiority on feature extraction. They also efficiently capture high-level information on the objects and their cluttered surroundings, thus achieving better performance compared with the traditional methods, especially the emergence of fully convolutional networks (FCN). Most mainstream saliency detection algorithms are now based on FCN. The FCN model unifies the two stages of feature extraction and saliency calculation and optimizes it through supervised learning. As a result, the features extracted by FCN network have stronger advantages in expression and robustness than do handcrafted features. However, existing saliency approaches share common drawbacks, such as difficulties in uniformly highlighting the entire salient objects with explicit boundaries and heterogeneous regions in complex images. This drawback is largely due to the lack of sufficient and rich features for detecting salient objects.MethodIn this study, we propose a simple but efficient CNN for pixel-wise saliency prediction to capture various features simultaneously. It also utilizes ulti-scale information from different convolutional layers of a CNN. To design a FCN-like network that is capable of carrying out the task of pixel-level saliency inference, we develop a multi-scale deep CNN for discovering more information in saliency computation. The multi-scale feature extraction network generates feature maps with different resolution from different side outputs of convolutional layer groups of a base network. The shallow convolutional layers contain rich detailed structure information at the expense of global representation. By contrast, the deep convolutional layers contain rich semantic information but lack spatial context. It is also capable of incorporating high-level semantic cues and low-level detailed information in a data-driven framework. Finally, to efficiently preserve object boundaries and uniform interior region, we adopt a fully connected conditional random field (CRF) model to refine the estimated saliency map.ResultExtensive experiments are conducted on the six most widely used and challenging benchmark datasets, namely, DUT-OMRON(Dalian University of Technology and OMRON Corporation), ECSSD(extended complex scene saliency dataset), SED2(segmentation evalution database 2), HKU, PASCAL-S, and SOD (salient objects dataset). The F-measure scores of our proposed scheme on these six benchmark datasets are 0.696, 0.876, 0.797, 0.868, 0.772, and 0.785, respectively. The max F-measure scores are 0.747, 0.899, 0.859, 0.889, 0.814, and 0.833, respectively. The weighted F-measure scores are 0.656, 0.854, 0.772, 0.844, 0.732, and 0.762, respectively. The mean absolute error (MAE) scores are 0.074, 0.061, 0.093, 0.049, 0.099, and 0.124, respectively. We compare our proposed method with 14 state-of-the-art methods as well. Results demonstrate the efficiency and robustness of the proposed approach against the 14 state-of-the-art methods in terms of popular evaluation metrics.ConclusionWe propose an efficient FCN-like salient object detection model that can generate rich and efficient features. The algorithm used in this study is robust to image saliency detection in various scenarios. Simultaneously, the boundary and inner area of the salient object are uniform, and the detection result is accurate.关键词:salient object detection(SOD);saliency;convolutional neural network(CNN);multi-scale features;data-driven24|27|4更新时间:2024-05-07

摘要:ObjectiveSalient object detection aims to localize and segment the most conspicuous and eye-attracting objects or regions in an image. Its results are usually expressed by saliency maps, in which the intensity of each pixel presents the strength of the probability that the pixel belongs to a salient region. Visual saliency detection has been used as a pre-processing step to facilitate a wide range of vision applications, including image and video compression, image retargeting, visual tracking, and robot navigation. Traditional saliency detection models focus on handcrafted features and prior information for detection, such as background prior, center prior, and contrast prior. However, these models are less applicable to a wide range of problems in practice. For example, salient objects are difficult to recognize when the background and salient objects share similar visual attributes. Moreover, failure may occur when multiple salient objects overlap partly or entirely with one another. With the rise of deep convolutional neural networks (CNNs), visual saliency detection has achieved rapid progress in the recent years. It has been successful in overcoming the disadvantages of handcrafted-feature-based approaches and greatly enhancing the performance of saliency detection. These CNNs-based models have shown their superiority on feature extraction. They also efficiently capture high-level information on the objects and their cluttered surroundings, thus achieving better performance compared with the traditional methods, especially the emergence of fully convolutional networks (FCN). Most mainstream saliency detection algorithms are now based on FCN. The FCN model unifies the two stages of feature extraction and saliency calculation and optimizes it through supervised learning. As a result, the features extracted by FCN network have stronger advantages in expression and robustness than do handcrafted features. However, existing saliency approaches share common drawbacks, such as difficulties in uniformly highlighting the entire salient objects with explicit boundaries and heterogeneous regions in complex images. This drawback is largely due to the lack of sufficient and rich features for detecting salient objects.MethodIn this study, we propose a simple but efficient CNN for pixel-wise saliency prediction to capture various features simultaneously. It also utilizes ulti-scale information from different convolutional layers of a CNN. To design a FCN-like network that is capable of carrying out the task of pixel-level saliency inference, we develop a multi-scale deep CNN for discovering more information in saliency computation. The multi-scale feature extraction network generates feature maps with different resolution from different side outputs of convolutional layer groups of a base network. The shallow convolutional layers contain rich detailed structure information at the expense of global representation. By contrast, the deep convolutional layers contain rich semantic information but lack spatial context. It is also capable of incorporating high-level semantic cues and low-level detailed information in a data-driven framework. Finally, to efficiently preserve object boundaries and uniform interior region, we adopt a fully connected conditional random field (CRF) model to refine the estimated saliency map.ResultExtensive experiments are conducted on the six most widely used and challenging benchmark datasets, namely, DUT-OMRON(Dalian University of Technology and OMRON Corporation), ECSSD(extended complex scene saliency dataset), SED2(segmentation evalution database 2), HKU, PASCAL-S, and SOD (salient objects dataset). The F-measure scores of our proposed scheme on these six benchmark datasets are 0.696, 0.876, 0.797, 0.868, 0.772, and 0.785, respectively. The max F-measure scores are 0.747, 0.899, 0.859, 0.889, 0.814, and 0.833, respectively. The weighted F-measure scores are 0.656, 0.854, 0.772, 0.844, 0.732, and 0.762, respectively. The mean absolute error (MAE) scores are 0.074, 0.061, 0.093, 0.049, 0.099, and 0.124, respectively. We compare our proposed method with 14 state-of-the-art methods as well. Results demonstrate the efficiency and robustness of the proposed approach against the 14 state-of-the-art methods in terms of popular evaluation metrics.ConclusionWe propose an efficient FCN-like salient object detection model that can generate rich and efficient features. The algorithm used in this study is robust to image saliency detection in various scenarios. Simultaneously, the boundary and inner area of the salient object are uniform, and the detection result is accurate.关键词:salient object detection(SOD);saliency;convolutional neural network(CNN);multi-scale features;data-driven24|27|4更新时间:2024-05-07 -

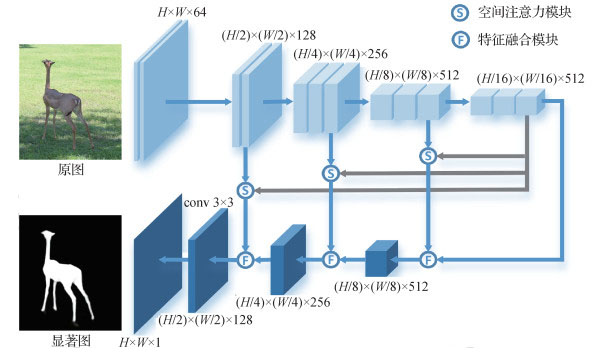

摘要:ObjectiveIn contrast with semantic segmentation and edge detection, saliency detection focuses on finding the most attractive target in an image. Saliency maps can be widely used as a preprocessing step in various computer vision tasks, such as image retrieval, image segmentation, object recognition, object detection, and visual tracking. In computer graphics, a map scan is used in non-photorealistic rendering, automatic image cropping, video summarization, and image retargeting. Early saliency detection methods mostly measure the salient score through basic characteristics, such as color, texture, and contrast. Although considerable progress has been achieved, handcrafted features typically lack global information and tend to highlight the edges of salient targets rather than the overall area to describe complex scenes and structures. Given the development of deep learning, the introduction of convolutional neural networks frees saliency detection from the restraint of traditional handcrafted features and achieves the best results at present. Fully convolutional networks (FCNs) stack convolution and pooling layers to obtain global semantic information. Spatial structure information may be lost and the edge information of saliency targets may be destroyed when we increase the receptive field to obtain global semantic features. Thus, the FCN cannot satisfy the requirement of a complex saliency detection task. To obtain accurate saliency maps, some studies have attempted to introduce handcrafted features to retain the edge of a saliency target and obtain the final saliency maps by combining the extracted edge's handcrafted features with the higher-level features of the FCN. However, the extraction of handcrafted features takes considerable time. Details may be gradually lost in the process of transforming features from low level to high level. Some studies have achieved good results; they combine high- and low-level features and use low-level features to enrich the details of high-level features. Many models based on multilevel feature fusion have been proposed in recent years, including multi flow, side fusion, bottom-up, and top-down structures. These models focus on network structures and disregard the importance of transmission and the difference between high- and low-level features. This condition may cause the loss of the global semantic information of high-level features and increase the interference of low-level features. Multilevel features play an important role in saliency detection. The method of multilevel feature extraction and fusion is one of the important research directions in saliency detection. To solve the problems of feature fusion and sensitivity to background interference, this study proposes a new saliency detection method based on feature pyramid networks and spatial attention. This method achieves the fusion and transmission of multilevel features with simple network architecture.MethodWe propose a multilevel feature fusion network architecture based on a feature pyramid network and spatial attention to integrate different levels of features. The proposed architecture adopts the feature pyramid network, which is the classic bottom-up and top-down structure, as the backbone network and focuses on the optimization of multilevel feature fusion and the transmission process. The network proposed in this work consists of two parts. The first part is the bottom-up convolution part, which is used to extract features. The second part is the top-down upsampling part. Each upsampling of high-level features will be fused with the low-level features of the corresponding scale and transmitted forward. The feature pyramid network removes the high-resolution feature before the first pooling to reduce computation. Multilevel features are extracted using visual geometry group (VGG)-16, which is one of the most excellent feature extraction networks. To improve the quality of feature fusion, a multilevel feature fusion module that optimizes the fusion and transmission processes of high-level features and various low-level features through the pooling and convolution of different scales is designed. To reduce the background interference of low-level features, a spatial attention module that supplies global semantic information for low-level features through attention maps obtained from high-level features via the pooling and convolution of different scales is designed. These attention maps can assist low-level features to highlight the foreground and suppress the background.ResultThe experimental results show that the saliency maps obtained using the proposed method are highly similar to the ground truth maps in four standard datasets, namely, DUTS, DUT-OMRON(Dalian University of Technology and OMRON Corporation), HKU-IS, and extended complex scene saliency dataset(ECSSD), the max F-measure MaxF increased by 1.04%, and mean absolute error (MAE) decreased by 4.35% compared with the second in the DUTS-test dataset. The method proposed in this study performs the best in simple or complex scenes. The network exhibits good feature fusion and edge learning abilities, which can effectively suppress the background of salient areas and fuse the details of low-level features. The saliency maps from our method have more complete salient areas and clearer edges. The results in terms of four common evaluation indexes are better than those obtained by nine state-of-the-art methods.ConclusionIn this study, the fusion of multilevel features is realized well by using a simple network structure. The multilevel feature fusion module can retain the location information of saliency targets and improve the quality of feature fusion and transmission. The spatial attention module reduces the background details and makes the saliency areas more complete. This module realizes feature selection and avoids the interference of background noise. Many experiments have proven the performance of the model and the effectiveness of each module proposed in this work.关键词:saliency detection;deep learning;feature pyramid;feature fusion;spatial attention34|24|4更新时间:2024-05-07

摘要:ObjectiveIn contrast with semantic segmentation and edge detection, saliency detection focuses on finding the most attractive target in an image. Saliency maps can be widely used as a preprocessing step in various computer vision tasks, such as image retrieval, image segmentation, object recognition, object detection, and visual tracking. In computer graphics, a map scan is used in non-photorealistic rendering, automatic image cropping, video summarization, and image retargeting. Early saliency detection methods mostly measure the salient score through basic characteristics, such as color, texture, and contrast. Although considerable progress has been achieved, handcrafted features typically lack global information and tend to highlight the edges of salient targets rather than the overall area to describe complex scenes and structures. Given the development of deep learning, the introduction of convolutional neural networks frees saliency detection from the restraint of traditional handcrafted features and achieves the best results at present. Fully convolutional networks (FCNs) stack convolution and pooling layers to obtain global semantic information. Spatial structure information may be lost and the edge information of saliency targets may be destroyed when we increase the receptive field to obtain global semantic features. Thus, the FCN cannot satisfy the requirement of a complex saliency detection task. To obtain accurate saliency maps, some studies have attempted to introduce handcrafted features to retain the edge of a saliency target and obtain the final saliency maps by combining the extracted edge's handcrafted features with the higher-level features of the FCN. However, the extraction of handcrafted features takes considerable time. Details may be gradually lost in the process of transforming features from low level to high level. Some studies have achieved good results; they combine high- and low-level features and use low-level features to enrich the details of high-level features. Many models based on multilevel feature fusion have been proposed in recent years, including multi flow, side fusion, bottom-up, and top-down structures. These models focus on network structures and disregard the importance of transmission and the difference between high- and low-level features. This condition may cause the loss of the global semantic information of high-level features and increase the interference of low-level features. Multilevel features play an important role in saliency detection. The method of multilevel feature extraction and fusion is one of the important research directions in saliency detection. To solve the problems of feature fusion and sensitivity to background interference, this study proposes a new saliency detection method based on feature pyramid networks and spatial attention. This method achieves the fusion and transmission of multilevel features with simple network architecture.MethodWe propose a multilevel feature fusion network architecture based on a feature pyramid network and spatial attention to integrate different levels of features. The proposed architecture adopts the feature pyramid network, which is the classic bottom-up and top-down structure, as the backbone network and focuses on the optimization of multilevel feature fusion and the transmission process. The network proposed in this work consists of two parts. The first part is the bottom-up convolution part, which is used to extract features. The second part is the top-down upsampling part. Each upsampling of high-level features will be fused with the low-level features of the corresponding scale and transmitted forward. The feature pyramid network removes the high-resolution feature before the first pooling to reduce computation. Multilevel features are extracted using visual geometry group (VGG)-16, which is one of the most excellent feature extraction networks. To improve the quality of feature fusion, a multilevel feature fusion module that optimizes the fusion and transmission processes of high-level features and various low-level features through the pooling and convolution of different scales is designed. To reduce the background interference of low-level features, a spatial attention module that supplies global semantic information for low-level features through attention maps obtained from high-level features via the pooling and convolution of different scales is designed. These attention maps can assist low-level features to highlight the foreground and suppress the background.ResultThe experimental results show that the saliency maps obtained using the proposed method are highly similar to the ground truth maps in four standard datasets, namely, DUTS, DUT-OMRON(Dalian University of Technology and OMRON Corporation), HKU-IS, and extended complex scene saliency dataset(ECSSD), the max F-measure MaxF increased by 1.04%, and mean absolute error (MAE) decreased by 4.35% compared with the second in the DUTS-test dataset. The method proposed in this study performs the best in simple or complex scenes. The network exhibits good feature fusion and edge learning abilities, which can effectively suppress the background of salient areas and fuse the details of low-level features. The saliency maps from our method have more complete salient areas and clearer edges. The results in terms of four common evaluation indexes are better than those obtained by nine state-of-the-art methods.ConclusionIn this study, the fusion of multilevel features is realized well by using a simple network structure. The multilevel feature fusion module can retain the location information of saliency targets and improve the quality of feature fusion and transmission. The spatial attention module reduces the background details and makes the saliency areas more complete. This module realizes feature selection and avoids the interference of background noise. Many experiments have proven the performance of the model and the effectiveness of each module proposed in this work.关键词:saliency detection;deep learning;feature pyramid;feature fusion;spatial attention34|24|4更新时间:2024-05-07 -

摘要:ObjectiveVisual object tracking is an important research subject in computer vision. It has extensive applications that include surveillance, human-computer interaction, and medical imaging. The goal of tracking is to estimate the states of a moving target in a video sequence. Considerable effort has been devoted to this field, but many challenges remain due to appearance variations caused by heavy occlusion, illumination variation, and fast motion. Low-rank approximation can acquire the underlying structure of a target because some candidate particles have extremely similar appearance. This approximation can prune irrelevant particles and is robust to global appearance changes, such as pose change and illumination variation. Sparse representation formulates candidate particles by using a linear combination of a few dictionary templates. This representation is robust against local appearance changes, e.g., partial occlusions. Therefore, combining low-rank approximation with sparsity representation can improve the efficiency and effectiveness of object tracking. However, object tracking via low-rank sparse learning easily results in tracking drift when facing objects with fast motion and severe occlusions. Therefore, reverse low-rank sparse learning with a variation regularization-based tracking algorithm is proposed.MethodFirst, a low-rank constraint is used to restrain the temporal correlation of the objective appearance, and thus, remove the uncorrelated particles and adapt the object appearance change. The rank minimization problem is known to be computationally intractable. Hence, we resort to minimizing its convex envelope via a nuclear norm. Second, the traditional sparse representation method requires solving numerous L1 optimization problems. Computational cost increases linearly with the number of candidate particles. We build an inverse sparse representation formulation for object appearance using candidate particles to represent the target template inversely, reducing the number of L1 optimization problems for online tracking from candidate particles to one. Third, variation regularization is introduced to model the sparse coefficient difference. The variation method can model the variable selection problem in bounded variation space, which can restrict object appearance with only a slight difference between consecutive frames but allow the existing difference between individual frames to jump discontinuously, and thus, adapt to fast object motion. Lastly, an online updating scheme based on alternating iteration is proposed for tracking computation. Each iteration updates one variable at a time. Meanwhile, the other variables are fixed to their latest values. To accommodate target appearance change, we also use a local updating scheme to update the local parts individually. This scheme captures changes in target appearance even when heavy occlusion occurs. In such case, the unoccluded local parts are still updated in the target template and the occluded ones are discarded. Consequently, we can obtain a representation coefficient for the observation model and realize online tracking.ResultTo evaluate our proposed tracker, qualitative and quantitative analyses are performed using MATLAB on benchmark tracking sequences (occlusion 1, David, boy, deer) obtained from OTB (object tracking benchmark) datasets. The selected videos include many challenging factors in visual tracking, such as occlusion, fast motion, illumination, and scale variation. The experimental results show that when faced with these challenging situations in the benchmark tracking dataset, the proposed algorithm can perform tracking effectively in complicated scenes. Comparative studies with five state-of-the-art visual trackers, namely, DDL (discriminative dictionary learning), SCM (sparse collaborative model), LLR (locally low-rank representation), IST (inverse sparse tracker), and CNT (convolutional networks training) are conducted. To achieve fair comparison, we use publicly available source codes or results provided by the authors. Among these trackers, DDL, SCM, LLR, and IST are the most relevant. Compared with the CNT tracker, we mostly consider that deep networks have attracted considerable attention in complicated visual tracking tasks. For qualitative comparison, the representative tracking results are discussed on the basis of the major challenging factors in each video. For quantitative comparison, the central pixel error (CPE), which records the Euclidean distance between the central location of the tracked target and the manually labeled ground truth, is used. When the metric value is smaller, the tracking results are more accurate. From the evolution process of CPE versus frame number, our tracker achieves the best results in these challenging sequences. In particular, our tracker outperforms the SCM, IST, and CNT trackers in terms of occlusion, illumination, and scale variation sequences. It outperforms the LLR, IST, and DDL trackers in terms of fast motion sequences. These results demonstrate the effectiveness and robustness of our tracker to occlusion, illumination, scale variation, and fast motion.ConclusionQualitative and quantitative evaluations demonstrate that the proposed algorithm achieves higher precision compared with many state-of-the-art algorithms. In particular, it exhibits better adaptability for objects with fast motion. In the future, we will extend our tracker by applying deep learning to enhance its discriminatory ability.关键词:object tracking;variation method;sparse representation;low-rank constraint;particle filter50|46|1更新时间:2024-05-07

摘要:ObjectiveVisual object tracking is an important research subject in computer vision. It has extensive applications that include surveillance, human-computer interaction, and medical imaging. The goal of tracking is to estimate the states of a moving target in a video sequence. Considerable effort has been devoted to this field, but many challenges remain due to appearance variations caused by heavy occlusion, illumination variation, and fast motion. Low-rank approximation can acquire the underlying structure of a target because some candidate particles have extremely similar appearance. This approximation can prune irrelevant particles and is robust to global appearance changes, such as pose change and illumination variation. Sparse representation formulates candidate particles by using a linear combination of a few dictionary templates. This representation is robust against local appearance changes, e.g., partial occlusions. Therefore, combining low-rank approximation with sparsity representation can improve the efficiency and effectiveness of object tracking. However, object tracking via low-rank sparse learning easily results in tracking drift when facing objects with fast motion and severe occlusions. Therefore, reverse low-rank sparse learning with a variation regularization-based tracking algorithm is proposed.MethodFirst, a low-rank constraint is used to restrain the temporal correlation of the objective appearance, and thus, remove the uncorrelated particles and adapt the object appearance change. The rank minimization problem is known to be computationally intractable. Hence, we resort to minimizing its convex envelope via a nuclear norm. Second, the traditional sparse representation method requires solving numerous L1 optimization problems. Computational cost increases linearly with the number of candidate particles. We build an inverse sparse representation formulation for object appearance using candidate particles to represent the target template inversely, reducing the number of L1 optimization problems for online tracking from candidate particles to one. Third, variation regularization is introduced to model the sparse coefficient difference. The variation method can model the variable selection problem in bounded variation space, which can restrict object appearance with only a slight difference between consecutive frames but allow the existing difference between individual frames to jump discontinuously, and thus, adapt to fast object motion. Lastly, an online updating scheme based on alternating iteration is proposed for tracking computation. Each iteration updates one variable at a time. Meanwhile, the other variables are fixed to their latest values. To accommodate target appearance change, we also use a local updating scheme to update the local parts individually. This scheme captures changes in target appearance even when heavy occlusion occurs. In such case, the unoccluded local parts are still updated in the target template and the occluded ones are discarded. Consequently, we can obtain a representation coefficient for the observation model and realize online tracking.ResultTo evaluate our proposed tracker, qualitative and quantitative analyses are performed using MATLAB on benchmark tracking sequences (occlusion 1, David, boy, deer) obtained from OTB (object tracking benchmark) datasets. The selected videos include many challenging factors in visual tracking, such as occlusion, fast motion, illumination, and scale variation. The experimental results show that when faced with these challenging situations in the benchmark tracking dataset, the proposed algorithm can perform tracking effectively in complicated scenes. Comparative studies with five state-of-the-art visual trackers, namely, DDL (discriminative dictionary learning), SCM (sparse collaborative model), LLR (locally low-rank representation), IST (inverse sparse tracker), and CNT (convolutional networks training) are conducted. To achieve fair comparison, we use publicly available source codes or results provided by the authors. Among these trackers, DDL, SCM, LLR, and IST are the most relevant. Compared with the CNT tracker, we mostly consider that deep networks have attracted considerable attention in complicated visual tracking tasks. For qualitative comparison, the representative tracking results are discussed on the basis of the major challenging factors in each video. For quantitative comparison, the central pixel error (CPE), which records the Euclidean distance between the central location of the tracked target and the manually labeled ground truth, is used. When the metric value is smaller, the tracking results are more accurate. From the evolution process of CPE versus frame number, our tracker achieves the best results in these challenging sequences. In particular, our tracker outperforms the SCM, IST, and CNT trackers in terms of occlusion, illumination, and scale variation sequences. It outperforms the LLR, IST, and DDL trackers in terms of fast motion sequences. These results demonstrate the effectiveness and robustness of our tracker to occlusion, illumination, scale variation, and fast motion.ConclusionQualitative and quantitative evaluations demonstrate that the proposed algorithm achieves higher precision compared with many state-of-the-art algorithms. In particular, it exhibits better adaptability for objects with fast motion. In the future, we will extend our tracker by applying deep learning to enhance its discriminatory ability.关键词:object tracking;variation method;sparse representation;low-rank constraint;particle filter50|46|1更新时间:2024-05-07 -

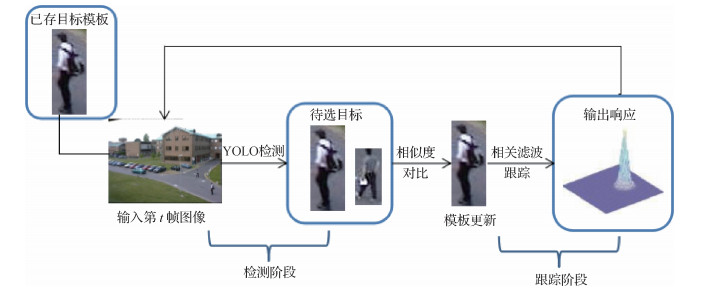

摘要:ObjectiveVideo-based object detection and tracking have always been a research topic of high concern in the academic field of computer vision. Video object tracking has important research significance and broad application prospects in intelligent monitoring,human-computer interaction,robot vision navigation,and other aspects. Although the theoretical research of video object tracking technology has made considerable progress and several achievements have entered the practical stage,research on this technology still faces tremendous challenges,such as scale change,illumination change,motion blur,object deformation,and object occlusion,which result in many difficulties in visual tracking,particularly object scale mutation within a short time. It will lead to the loss of tracking elements,and the accumulation of tracking errors will lead to tracking drift. If the object scale is consistent,then considerable scale information will be lost. Thus,scale mutation is a challenging task in object tracking. To solve this problem,this study proposes an adaptive scale mutation tracking algorithm (kernelized correlation filter_you only look once,KCF_YOLO).MethodThe algorithm uses a correlation filter tracker to realize fast tracking in the training phase of tracking and uses you only look once (YOLO) V3 neural network in the detection phase. An adaptive template updating strategy is also designed. This strategy uses the method of comparing the color features of the detected object with the object template and the similarity of the image fingerprint features after fusion to determine whether occlusion occurs and whether the object template should be updated in the current frame. In the first frame of the video,the object is selected,assuming that the category of the object to be tracked is human. The object area is stored as the object template T. The object is selected,and it enters the training stage. The KCF algorithm is used for tracking. KCF extracts the multichannel history of gradients features of the object template. In the tracking process,1.5 times of the object template is selected as the object search range of the next frame,considerably reducing the search range. Tracking speed is remarkably improved. When the frame number is a multiple of 20,it enters the detection stage and uses YOLO V3 for object detection. YOLO V3 identifies all the people (P1,P2, P3,…,Pn) in the current frame image. All the people to be identified are compared with the object template stored before 20 frames,and their color and image fingerprint features are extracted and compared with similarity (the similarity selection image fingerprint algorithm and color features are combined). If the similarity is greater than the average similarity of the first 20 frames,then the object template will be updated to the person with the greatest similarity. Simultaneously,the scale of the tracking box will be updated in accordance with the YOLO detection to achieve scale adaptation; otherwise,the object is judged as occluded and the template will not be updated. In the tracking phase,the updated or not updated object template is used as the latest status of the object in the tracking process for subsequent tracking. The preceding steps are repeated every 20 frames until the video and tracking end. The color and phase features are complementary. The image fingerprint feature selects the perceptual Hash(PHash) algorithm. After the discrete cosine transformation,the internal information of the image is mostly concentrated in the low-frequency area,reducing the calculation scope to the low-frequency area and losing color information. The color feature counts the distribution of colors in the entire image. The combination ensures the accuracy of similarity. A total of 11 video sequences representative of scale mutation in the object tracking benchmark(OTB)-2015 dataset are tested to prove the effectiveness of the proposed method. The results show that the average tracking accuracy of this algorithm is 0.955,and the average tracking speed is 36 frames/s. The self-made data of object reproduction are completely occluded for 130 frames. The result shows that tracking accuracy is 0.9,proving the validity of the algorithm that combines kernel correlation filtering and the YOLO V3 network. Compared with the classical scale adaptive tracking algorithm,accuracy is improved by 31.74% on average.ConclusionIn this study,we adopt the ideas of correlation filtering and neural network to detect and track targets,improving the adaptability of the algorithm to scale mutation in the object tracking process. The experimental results show that the detection strategy can correct the tracking drift caused by the subsequent scale mutation and ensure the effectiveness of the adaptive template updating strategy. To address the problems of the traditional nuclear correlation filter being unable to deal with a sudden change in object scale within a short time and the slow tracking speed of a neural network,this work establishes a bridge between a correlation filter and a neural network. The tracker that combines a correlation filter and a neural network opens a new way.关键词:object tracking;correlation filtering;neural network detection;scale mutation;scale adaptation33|33|2更新时间:2024-05-07