Latest Issue

Volume 25, Issue 5, 2020

- Abstract: With the rapid development of earth observation technology, remote sensing now supports multiplatform, multisensor, and multiangle observation, and the ability to jointly acquire multimodal datasets has improved considerably. Multisource data fusion has received extensive attention because it can improve the performance of processing approaches in available applications. In this study, we review state-of-the-art multisource remote sensing image fusion. Three typical problems, namely, pansharpening, hypersharpening, and the fusion of hyperspectral and multispectral images, are comprehensively investigated. A mathematical modeling view of many important contributions specifically dedicated to the three topics is provided. First, the major challenge and complex imaging relationship between available multisource spatial-spectral remote sensing images are discussed. As an inverse problem of recovering the latent high-resolution image from two branches of incomplete observed multichannel data, the challenge lies in the ill-posed condition caused by insufficient supplementary information, optical blur, and noise. Therefore, existing data fusion methods still have considerable room to improve resolution enhancement while preserving complementary information. Second, a comprehensive survey is conducted of the representative mathematical modeling paradigms, including the component substitution scheme, multiresolution analysis framework, Bayesian model, variational model, and data- and model-driven optimization methods, and their existing problems. From the point of view of Bayesian fusion modeling, this study analyzes the key role and modeling mechanism of complementary features, preserving data fidelity and image prior terms in the optimization model. 
Then, this work summarizes the new trends in image prior modeling, including fractional-order regularization, nonlocal regularization, structured sparse representation, matrix low rank, tensor representation, and compound regularization with analytical and deep priors. Lastly, the major challenges and possible research directions in each area are outlined and discussed. Hybrid analytical-model and data-driven frameworks will be important research directions. It is necessary to break through the technical bottlenecks of existing model-optimization-based fusion methods in imaging degradation modeling, compact data representation, and efficient computation, and to develop fusion methods with improved spectral preservation and reduced computational complexity. To cope with the big data problem, high-performance computing on big data platforms, such as Hadoop and Spark, holds promise for accelerating multisource data fusion.
Keywords: image fusion; pansharpening; inverse problem; regularization; model optimization; data driven; deep learning
Updated: 2024-05-08
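As a rough illustration of the inverse-problem view described in this abstract, spatial-spectral fusion is commonly written as a regularized least-squares problem with two data fidelity terms and one prior term. The notation below ($X$, $Y_h$, $Y_m$, $B$, $S$, $R$, $\lambda$, $\mu$, $\varphi$) is assumed here for illustration and does not come from the abstract itself.

```latex
% Assumed notation (illustrative only):
%   X    latent high-resolution image (bands x pixels)
%   Y_h  low-spatial-resolution observation; B = spatial blur, S = downsampling
%   Y_m  high-spatial-resolution observation; R = spectral response
%   N_h, N_m  noise; \varphi = image prior (regularizer)
\begin{aligned}
Y_h &= X B S + N_h, \qquad Y_m = R X + N_m, \\
\hat{X} &= \arg\min_{X}\;
  \tfrac{1}{2}\lVert Y_h - X B S \rVert_F^2
  + \tfrac{\lambda}{2}\lVert Y_m - R X \rVert_F^2
  + \mu\,\varphi(X).
\end{aligned}
```

In this sketch the first two terms play the role of data fidelity for the two observation branches, and $\varphi$ stands for whichever image prior is chosen, such as the sparse, low-rank, tensor, or deep priors listed in the abstract.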

Scholar View

-

Abstract: This is the 25th in the annual survey series of bibliographies on image engineering in China. This statistical and analytical study aims to capture the up-to-date development of image engineering in China, provide a targeted literature search facility for readers working in related areas, and supply useful recommendations for journal editors and potential authors. Considering the wide distribution of related publications in China, 761 references on image engineering research and techniques are carefully selected from 2 854 research papers published in 148 issues of a set of 15 Chinese journals. These 15 journals are considered important, and their papers concerning image engineering are of higher quality and relatively concentrated. The selected references are initially classified into five categories (image processing, image analysis, image understanding, technique application, and survey) and then into 23 specialized classes in accordance with their main contents (same as the last 14 years). Analysis and discussion of the statistics of the classification results by journal and by category are also presented. In addition, as a roundup of the quarter century of this review series, the 15 856 articles in the field of image engineering selected from 65 040 academic research and technical application papers published in a total of 2 964 issues over the past 25 years are divided into five periods of five years each, and comprehensive statistics and analysis are made on the selection of image engineering literature and the number of publications in each category and in each class. Analysis of the 2019 statistics shows that image analysis is receiving the most attention, with a focus on object detection and recognition, image segmentation and edge detection, and object feature extraction and analysis. 
The studies and applications of image technology in various areas, such as remote sensing, radar, and mapping, remain continuously active. The comparison of 25 years of statistical data shows that the number of publications in some classes of the four categories of image processing, image analysis, image understanding, and technique application has stayed ahead, whereas the numbers in some other classes are gradually decreasing, reflecting changes in research directions over the years. This work shows a general and up-to-date picture of the progress, in both depth and breadth, of image engineering in China in 2019. The statistics for 25 years also provide readers with more comprehensive and credible information on the development trends of various research directions.
Keywords: image engineering; image processing; image analysis; image understanding; technique application; literature survey; literature statistics; literature classification; bibliometrics
Updated: 2024-05-08


Review

-

Abstract: Objective: Multimedia forensics and copyright protection have become hot issues in society. The widespread use of portable cameras, mobile phones, and surveillance cameras has led to explosive growth in the amount of digital video data. Although people enjoy the convenience brought by the popularity of digital multimedia, they also face considerable security problems. Double compression of a digital video file is a necessary step in malicious video content modification, so detecting double compression is an important auxiliary measure for video content forensics. Content-tampered videos inevitably undergo two or more re-compression operations. If a tested video is judged to have undergone multiple re-compressions, it is more likely to have undergone content tampering. At present, double compression detection for high efficiency video coding (HEVC) video with different coding parameters achieves high accuracy. However, when the same coding parameters are used, the trace of HEVC double compression is very small and detection is considerably more difficult. Most attackers are concerned mainly with modifying the video content. Because the video stream contains the video parameter set and the picture parameter set, video editing software generally uses the same parameters for re-compression by default. This study proposes a detection algorithm for video double compression with the same coding parameters. The proposed algorithm is based on the video quality degradation mechanism. Method: After multiple compressions with the same coding parameters, the video quality tends to stop changing. Single-compressed and double-compressed videos can therefore be distinguished by the degree of video quality degradation. Video coding relies on rate-distortion optimization, which balances bitrate and distortion to choose the optimal parameters for the encoder. 
When the video is compressed with the same coding parameters, the trace of re-compression is extremely slight because the division of coding units into prediction units (PUs) changes only slightly and the distribution of PU size types is barely affected. Thus, double compression with the same parameters is more difficult to detect. Given that the transform and quantization coding process of each coding unit is independent, the quantization error and its distribution characteristics are independent, too. The discontinuous boundaries of adjacent blocks affect the mode selection of intra-prediction. In motion compensation prediction, the predicted values of adjacent blocks come from different positions of different images, which results in numerical discontinuity of the prediction residual at the block boundary. This discontinuity affects the selection of motion vectors and reference pictures in inter-frame prediction. This study proposes a detection algorithm based on two kinds of video features: the I frame PU mode (intra-coded picture prediction unit mode, IPUM) and the P frame PU mode (predicted picture prediction unit mode, PPUM). These features are extracted from the luminance component (Y) of I frames and P frames, respectively. First, the IPUM and PPUM features are extracted from the tested HEVC videos. Then, the video is compressed three times with the same coding parameters, and the features are extracted again after each compression. A larger number of PUs should be selected as the statistical feature because the numbers of PUs of different sizes in I frames and P frames are quite different. 
Finally, the average numbers of PU modes that differ between the nth and the (n+1)th compression at the same position in each I frame and P frame are counted to form a 6-dimensional feature set, which is sent to a support vector machine (SVM) for classification. Result: The experiment uses three video sets of different resolutions: common intermediate format (CIF) (352×288 pixels), 720p (1 280×720 pixels), and 1 080p (1 920×1 080 pixels). To increase the number of video samples, each test sequence is clipped into smaller video clips. Each video clip contains 100 frames. If the video exceeds 1 000 frames, only the first 1 000 frames are considered to generate the samples in our experiments. Accordingly, a total of 132 CIF-video sequence segments, 87 720p-video sequence segments, and 98 1 080p-video sequence segments are obtained. For each set, 4/5 of the positive samples and their corresponding negative samples are randomly selected as the training set, while the rest are used as the test set. The binary classification is performed using an SVM classifier with a radial basis function kernel. The optimized parameters, gamma and cost, are determined by using grid search with fivefold cross-validation. The final detection accuracy is obtained by averaging the accuracy over 30 repetitions of the experiment, with the training and testing data randomly selected each time. Considering computational complexity, the number of re-compressions in each test is especially important. As the number of re-compressions increases, the computational complexity of video encoding and decoding increases linearly, but the classification accuracy does not improve significantly. Thus, the number of re-compressions is finally set to three. The average detection accuracies for the CIF, 720p, and 1 080p test datasets are 95.45%, 94.8%, and 95.53%, respectively. 
In addition, video compression is usually affected by coding parameters. The group of pictures (GOP) is the basic coding unit of video compression. The GOP interval has a significant impact on video quality owing to error propagation in the inter-coding process. In the CIF dataset, the detection accuracy of this method under different GOP settings exceeds 90%. As the GOP size increases, the detection accuracy declines slightly. The final experiment concerns frame deletion, which is a common video tampering operation. In the CIF dataset, the detection accuracy for re-compressed video with 10 consecutive deleted frames remains above 88%, which indicates that the proposed method is robust to frame deletion. Conclusion: In this study, the law of video quality degradation is revealed by the number of PU modes that change at the same position in I frames and P frames. In sum, the proposed method performs well in different test situations. It achieves high detection accuracy across different GOP settings, video resolutions, and frame deletion rates.
Keywords: video forensics; double video compression; high efficiency video coding (HEVC); the same coding parameters; quality degradation; prediction unit (PU)
Updated: 2024-05-08
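The feature construction described in this abstract can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: it assumes the PU mode at each position has already been decoded into an integer label, that generation 0 is the tested video and generations 1 to 3 are the three re-compressions, and that the 6 dimensions are the 3 successive comparisons for I frames plus the 3 for P frames. The function names and data layout are hypothetical.

```python
from statistics import mean


def changed_fraction(modes_a, modes_b):
    """Fraction of co-located positions whose PU mode label differs."""
    if len(modes_a) != len(modes_b) or not modes_a:
        raise ValueError("mode lists must be non-empty and of equal length")
    return sum(a != b for a, b in zip(modes_a, modes_b)) / len(modes_a)


def degradation_features(i_modes, p_modes):
    """Build the feature vector from successive compression generations.

    i_modes[n][f] / p_modes[n][f]: list of PU mode labels for frame f after
    the n-th compression (hypothetical layout; n = 0 is the tested video).
    """
    features = []
    for per_type in (i_modes, p_modes):
        # Compare generation n with generation n + 1, averaged over frames.
        for n in range(len(per_type) - 1):
            features.append(
                mean(
                    changed_fraction(a, b)
                    for a, b in zip(per_type[n], per_type[n + 1])
                )
            )
    return features


# Toy data: 4 generations, 1 I frame and 2 P frames, 4 positions per frame.
i_modes = [[[0, 1, 2, 3]], [[0, 1, 2, 0]], [[0, 1, 2, 0]], [[0, 1, 2, 0]]]
p_modes = [
    [[1, 1, 1, 1], [2, 2, 2, 2]],
    [[1, 1, 0, 0], [2, 2, 2, 2]],
    [[1, 1, 0, 0], [2, 2, 2, 3]],
    [[1, 1, 0, 0], [2, 2, 2, 3]],
]
features = degradation_features(i_modes, p_modes)
```

With four generations per frame type, the resulting vector has six entries, which could then be passed to any binary classifier such as an SVM with a radial basis function kernel.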

-

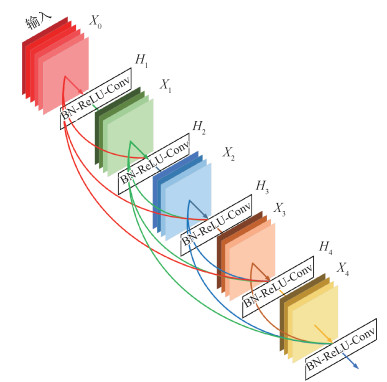

Abstract: Objective: Non-uniform blind deblurring is a challenging problem in the image processing and computer vision communities. Motion blur can be caused by a variety of factors, such as the motion of multiple objects, camera shake, and scene depth variation. Traditional methods apply various constraints to model the characteristics of blur and use different natural image priors to regularize the solution space. Most of these methods involve heuristic parameter tuning and expensive computation. Real blur kernels are more complicated than these methods assume. Thus, these methods are not useful for real-world images. With the development of neural networks, impressive results have been obtained in image processing, and scholars now use neural networks for image generation. In this study, motion deblurring is regarded as a special image generation problem. We propose a fast neural-network-based deblurring method that, unlike other works, does not use a multi-scale architecture. Method: First, this study adopts the densely connected convolutional network (DenseNets), which has recently performed well in image classification. The model is modified to make it suitable for image generation. Our network is a fully convolutional network designed to accept input images of various sizes. The input images are passed through two convolutional layers to obtain a total of 256 feature maps with a dimension of 64×64 pixels. Then, these feature maps are fed into the DenseNets, which contain bottleneck layers and transition layers. The output of the bottleneck layers in each dense block is 1 024 feature maps, while the output of the last convolutional layer of each dense block is 256 feature maps. Finally, the output of the DenseNets is restored to the size of the original image by three convolutional layers. A residual connection is added between the input and output to preserve the color information of the original image as much as possible. We also shorten the training time. 
To ensure the efficiency of deblurring, the network uses only nine dense layers and does not use the multi-scale model adopted by other scholars. Nevertheless, the method still guarantees the quality of the restored image. In this study, the layers in the DenseNets are densely connected, so the intermediate information in the network can be fully utilized. In our experiments, the denser the connections among the layers, the better the results. Generative adversarial networks (GANs) have helped the image generation domain achieve a qualitative leap. Images generated this way are closer to real-world images in terms of overall details. Therefore, we also use this idea to improve deblurring performance. For the loss function, we use perceptual loss to measure the difference between restored and sharp images. We choose the VGG (visual geometry group)-19 conv3.3 feature maps to define the loss function because a shallower network can better represent the texture information of an image. In image deblurring, we focus on restoring the texture and edges of objects rather than spending too much computation on determining their exact positions. Thus, between mean square error (MSE) loss and perceptual loss, we choose the latter. Result: The algorithm is compared with typical traditional deblurring methods and a recent neural-network-based method in terms of restoring time, peak signal-to-noise ratio (PSNR), and structural similarity (SSIM) between the restored and sharp images. Experimental results show that the images restored by our method are clearer than those of the other methods. Moreover, the average PSNR on the test set increases by 0.91, which is clearly superior to that of the traditional deblurring algorithm. According to the qualitative comparison, our method handles the details of blurry images better than other approaches. It can also restore small objects, such as text in the image. 
Results on other related datasets are also better than those of other methods. Abandoning the multi-scale approach brings multiple advantages, such as effectively reduced parameters, shorter training time, and reduced restoring time up to 0.32 s. Conclusion: Our algorithm has a simple network structure and a good restoring effect. The speed of image generation is also significantly faster than that of other methods. At the same time, the proposed method is robust and suitable for dealing with various image degradation problems caused by motion blur.
Keywords: motion blur; blind deblurring; generative adversarial network (GAN); densely connected convolutional network (DenseNets); perceptual loss; fully convolutional network (FCN)
Updated: 2024-05-08
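For readers unfamiliar with the PSNR metric used in this evaluation, the standard definition is 10·log10(MAX² / MSE). The snippet below is a minimal pure-Python sketch of that formula on flattened pixel lists; the function name, the flat-list input format, and the default peak value of 255 are assumptions made for illustration and are not details from the abstract.

```python
import math


def psnr(restored, sharp, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    if len(restored) != len(sharp) or not restored:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixels.
    mse = sum((r - s) ** 2 for r, s in zip(restored, sharp)) / len(restored)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)
```

A higher value means the restored image is closer to the sharp reference; identical inputs give infinity under this definition.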

-

Abstract:
Objective: To extract image features that fully express image semantic information, reduce projection errors in Hash retrieval, and generate more compact binary Hash codes, a method based on a dense network and improved supervised Hashing with kernels is proposed.
Method: The pre-processed image dataset is used to train the dense network. To reduce over-fitting, an L2 regularization term is added to the cross entropy to form a new loss function. During training, the batch normalization (BN) algorithm and the root mean square prop (RMSProp) optimization algorithm are used to improve the accuracy and robustness of the model. High-level semantic features of the images are then extracted with the trained and optimized dense network to enhance the ability of the features to express image information, and an image feature library of the dataset is built. Kernel principal component analysis (KPCA) projection is performed on the extracted features so that the nonlinear information implicit in them is fully exploited to reduce the projection error. The supervised kernel Hash method is also used to supervise the image features, enhance the discriminability of linearly inseparable feature data, and map the features to the Hamming space. According to the correspondence between the inner product of Hash codes and the Hamming distance, together with the semantic similarity supervision matrix built from image label information, the Hamming distance is optimized to generate more compact binary Hash codes. Next, the Hash code library of the image dataset is constructed. Finally, the same operations are applied to the input query image to obtain its Hash code, and the Hamming distance between the query Hash code and each Hash code in the library is computed to measure similarity. The retrieved similar images are returned in ascending order of Hamming distance.
Result: To verify its effectiveness, extensibility, and efficiency, the proposed method is evaluated on the Paris6K and lung nodule analysis 16 (LUNA16) datasets and compared with six other commonly used Hashing methods. The average retrieval accuracy is compared at code lengths of 12, 24, 32, 48, 64, and 128 bits. Experimental results show that the average retrieval accuracy increases with the Hash code length up to a certain value and then decreases. The average retrieval accuracy of the proposed method is always higher than that of the other six Hash methods. The semantic Hashing method reaches its maximum average retrieval accuracy at a code length of 48 bits, whereas the other Hash methods, including the proposed method, reach theirs at 64 bits. At 64 bits, the average retrieval accuracy of the proposed method is as high as 89.2% and 92.9% on the Paris6K and LUNA16 datasets, respectively. The time complexity of the proposed method and the convolutional neural network (CNN) Hashing method is compared on both datasets at the same six code lengths. Results show that the proposed method has lower time complexity at every Hash code length and is efficient to a certain degree.
Conclusion: A method based on a dense network and improved supervised Hashing with kernels is proposed. It improves the expressive ability of image features and the projection accuracy, and it is superior to other similar methods in average retrieval accuracy, recall rate, and precision rate, improving retrieval performance to some extent. Its time complexity is lower than that of the CNN Hashing method. In addition, the proposed method has better extensibility: it can be used not only for color image retrieval but also for medical grayscale image retrieval.
Keywords: dense convolutional network (DenseNet); supervised Hashing with kernels; image features; projection error; kernel principal component analysis (KPCA)
Updated: 2024-05-08
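The retrieval step described in this abstract relies on two facts: for Hash codes with entries in {-1, +1} and length r, the inner product equals r minus twice the Hamming distance, and results are returned in ascending order of Hamming distance. The sketch below illustrates both. It is only an illustration under assumptions: the ±1 code representation, the function names, and the tiny three-entry library are hypothetical and not taken from the paper.

```python
def hamming_distance(a, b):
    """Number of positions at which two equal-length codes differ."""
    if len(a) != len(b):
        raise ValueError("codes must have the same length")
    return sum(x != y for x, y in zip(a, b))


def inner_product(a, b):
    """Inner product of two codes whose entries are assumed to be -1 or +1."""
    return sum(x * y for x, y in zip(a, b))


def rank_by_hamming(query, library):
    """Indices of library codes sorted by ascending Hamming distance to the query."""
    return sorted(range(len(library)),
                  key=lambda i: hamming_distance(query, library[i]))


# Hypothetical 4-bit codes, for illustration only.
query = [1, -1, 1, 1]
library = [[1, -1, 1, 1], [-1, 1, -1, -1], [1, -1, -1, 1]]
ranking = rank_by_hamming(query, library)
```

Because the inner product and the Hamming distance are related linearly, optimizing one against a label-derived similarity matrix is equivalent to optimizing the other, which is the correspondence the abstract refers to.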

Image Processing and Coding

Abstract:
Objective: Semantic segmentation is a core computer vision task that densely assigns a label, such as person, car, road, pole, traffic light, or tree, to each pixel of the input image. Convolutional neural network-based approaches achieve state-of-the-art performance on various semantic segmentation tasks, with applications in autonomous driving, image editing, and video monitoring. Despite such progress, these models often rely on massive amounts of pixel-level labels. For a real urban scene task, however, large amounts of labeled data are unavailable because annotating segmentation ground truth is highly labor-intensive. When a labeled dataset is difficult to obtain, adversarial-training-based methods are preferred. These methods seek to adapt by confusing a domain discriminator, with domain alignment kept separate from task-specific learning under a separate loss. Another challenge is the large difference between source and target data in real scenarios. For instance, the appearance distribution of objects and scenes may vary between places, and even weather and lighting conditions can change significantly at the same place. Such differences are often called "domain gaps" and can significantly degrade performance. Unsupervised domain adaptation seeks to overcome these problems by learning domain-invariant feature representations that bridge the source and target domains without using target labels. Deep learning networks such as AdaptSegNet follow this approach and have obtained good results in the semantic segmentation of urban scenes. However, AdaptSegNet is trained directly on the synthetic dataset GTA5 and the real urban scene dataset Cityscapes, which exhibit a domain gap in gray, structure, and edge information, and it employs a fixed learning rate during the adversarial learning of different feature layers. As a result, its segmentation accuracy needs to be improved.
Method: To handle these problems, a new domain adaptation method based on adversarial learning is proposed for urban scene semantic segmentation, with the goal of reducing the domain gap between the source and target datasets. First, the semantic-aware grad-generative adversarial network (SG-GAN) is introduced to pre-process the synthetic dataset GTA5. The resulting new dataset, SG-GTA5, is considerably closer to the urban scene dataset Cityscapes in gray, structure, and edge information and is suitable as a substitute for the original GTA5 in AdaptSegNet. Second, SG-GTA5 is used as the input of our network. To further enhance the adapted model and address the fixed learning rate of AdaptSegNet, a multi-level adversarial network is constructed to effectively perform output space domain adaptation at different feature levels. Third, an adaptive learning rate is introduced at the different feature levels of the network. Fourth, the loss value of each level is adjusted by the proposed adaptive learning rate, so that the network's parameters can be updated dynamically. Fifth, a new convolution layer is added to the discriminator of the GAN, which enhances the discriminative ability of the network. For the discriminator, we use an architecture in which fully convolutional layers replace all fully connected layers to retain spatial information. It is composed of six convolution layers with 4×4 kernels, a stride of 2 for the first four layers, a stride of 1 for the fifth layer, and channel numbers of 64, 128, 256, 512, 1024, and 1, respectively. Except for the last layer, each convolution layer is followed by a leaky ReLU parameterized by 0.2. An up-sampling layer is added after the last convolution layer to rescale the output to the input size. No batch-normalization layers are used because we jointly train the discriminator with the segmentation network using a small batch size. For the segmentation network, it is essential to build on a good baseline model to achieve high-quality segmentation results. We adopt the DeepLab-v2 framework with a ResNet-101 model pre-trained on ImageNet as our segmentation baseline network. Similar to recent work on semantic segmentation, we remove the last classification layer and change the stride of the last two convolution layers from 2 to 1, making the resolution of the output feature maps effectively 1/8 of the input image size. To enlarge the receptive field, we apply dilated convolution layers in the conv4 and conv5 layers with a stride of 2 and 4, respectively. After the last layer, we use atrous spatial pyramid pooling (ASPP) as the final classifier. Finally, the batch normalization (BN) layers are removed because the discriminator network is trained jointly with the generator network on small batches. We implement our network using the PyTorch toolbox on a single GTX1080Ti GPU with 11 GB memory.
Result: The new model is verified on the Cityscapes dataset. Experimental results demonstrate that the presented model segments more complex targets in urban traffic scenes precisely and performs well against existing state-of-the-art segmentation models in terms of accuracy and visual quality. The segmentation accuracy for sidewalk, wall, pole, car, and sky improves by 9.6%, 5.9%, 4.9%, 5.5%, and 4.8%, respectively.
Conclusion: The effectiveness of the proposed model is validated on the real urban scene dataset Cityscapes. Segmentation precision is improved by pre-processing the synthetic dataset GTA5 with the SG-GAN model, which brings the new dataset SG-GTA5 much closer to Cityscapes in gray, structure, and edge information. This pre-processing also effectively reduces the adversarial loss value and avoids gradient explosion during back propagation. The network's learning capability is further strengthened, and segmentation precision is improved, by the presented adaptive learning rate, which adjusts the loss value of each adversarial layer. The adaptive learning rate also updates network parameters dynamically and optimizes the performance of the generator and discriminator networks. Finally, the discrimination capability of the proposed model is further improved by adding a new convolution layer to the discriminator, which enables the model to learn high-level semantic information. The domain shift is also alleviated to some extent.
Keywords: urban scene; semantic segmentation; generative adversarial network (GAN); domain adaptation; adaptive learning rate
Updated: 2024-05-08
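To make the discriminator description in this abstract more concrete, the sketch below traces how the spatial size of a feature map shrinks through six 4×4 convolution layers, using the standard convolution output-size formula floor((n + 2p - k) / s) + 1. The kernel size, the channel numbers, and the strides of the first five layers come from the abstract; the padding of 1, the stride of 1 for the sixth layer, and the 256×512 input size are assumptions chosen for illustration, since the abstract does not state them.

```python
def conv_output_size(size, kernel=4, stride=2, padding=1):
    """Spatial output size of a convolution: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


# Channels and the first five strides follow the abstract;
# the sixth stride (1) is an assumption, as it is not stated.
CHANNELS = [64, 128, 256, 512, 1024, 1]
STRIDES = [2, 2, 2, 2, 1, 1]


def discriminator_shapes(height, width, padding=1):
    """(channels, height, width) after each of the six convolution layers."""
    shapes = []
    for channels, stride in zip(CHANNELS, STRIDES):
        height = conv_output_size(height, 4, stride, padding)
        width = conv_output_size(width, 4, stride, padding)
        shapes.append((channels, height, width))
    return shapes


# Hypothetical input size, for illustration only.
shapes = discriminator_shapes(256, 512)
```

Under these assumptions the final single-channel map is much smaller than the input, which is why the abstract adds an up-sampling layer after the last convolution to rescale the output to the input size.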

Abstract:
Objective: Multiphase image segmentation, an extension of two-phase image segmentation, partitions images automatically into different regions according to different image features. It is a basic problem in image processing, image analysis, and computer vision. The variational Vese-Chan model is a basic multiphase segmentation model that constructs characteristic functions for different phases or regions using fewer label functions, thus producing small-scale solutions. The graph cut (GC) algorithm transforms the optimization of the energy function into a min-cut/max-flow problem, which greatly improves computational efficiency. In the spatially discrete setting, however, the results of the min-cut method are influenced by the discrete grid, resulting in measurement errors. The continuous max-flow (CMF) method was proposed in recent years. As a continuous formulation of the classical GC algorithm, CMF keeps the high efficiency of GC and overcomes the measurement errors caused by its discretization. Within the framework of variational theory, CMF methods have been proposed and studied for the multiphase Potts model and the two-phase Chan-Vese model, but not for the Vese-Chan model. Therefore, we propose a CMF method for the multiphase Vese-Chan model based on convex relaxation and study its computational effectiveness and efficiency.
Method: Binary label functions are used to construct the characteristic functions of the different phases according to the relationship between a natural number and the binary representation of the partitioned regions. The characteristic functions are divided into two parts according to the value of the binary digit, i.e., 0 or 1, and the data term of the segmentation model is divided into two parts accordingly. Multiphase image segmentation can therefore be transformed into two-phase image segmentation. The model can be expressed in a form that is symmetric in the label functions, which makes it easier to interpret and implement. We introduce three dual variables: the source flow, the sink flow, and the spatial flow field. We then rewrite the energy optimization problem of the Vese-Chan model in terms of these three dual variables. Flow conservation conditions are obtained by solving the minimization problems of the energy function, and the Vese-Chan model is transformed into a continuous max-flow problem corresponding to the min-cut problem. To improve computational efficiency in the experiments, we design an alternating direction method of multipliers (ADMM) for the proposed model by introducing Lagrange multipliers and penalty parameters. The main idea of alternating optimization is to solve for one variable while fixing the others. The energy optimization problem is thus split into three simple sub-problems that are easier to solve. For example, to solve the sub-problem on the source flow, the sink flow and the spatial flow field are fixed. At each step, the three dual variables are computed and the Lagrange multipliers are updated. All of these variables are projected so that they satisfy their admissible ranges. The iteration stops when the energy error criterion is satisfied. To represent region boundaries after segmentation, the convex-relaxed label functions are thresholded into binary label functions, from which the segmentation results are obtained.
Result: Numerical experiments are performed on gray and color images. According to the number of regions in the images, the experiments are divided into three parts: experiments using two, three, and four binary label functions. Segmentation results are represented by curves of different colors. For complicated images, approximate segmentation results are also given to show the segmentation effect more clearly. Regarding segmentation effectiveness, the experiments show that the ADMM method and our proposed method perform equally on simple synthetic images. Compared with ADMM, however, our proposed method is more accurate for complex images, such as medical and remote sensing images, and it achieves better separation of segmented objects from the background. Regarding computational efficiency, with two binary label functions we compare four gray images, including synthetic images, a natural image, and a medical image; the acceleration ratios of computational time for our proposed method are 6.35%, 10.75%, 12.39%, and 7.83%, respectively. With three binary label functions, three gray images are compared, including synthetic images and a remote sensing image; the computational time improves by 12.32%, 15.45%, and 14.04%, respectively. With four binary label functions, two color images are compared, a natural image and a synthetic image; the computational time improves by 16.69% and 20.07%. In the experiments, our proposed method reduces the number of iterations and improves the convergence speed. The acceleration ratios show that the efficiency advantage of our method becomes more evident as the number of phases or the complexity of the image increases.
Conclusion: The continuous max-flow method is used to solve the multiphase Vese-Chan segmentation model. Numerical experiments demonstrate the superiority of our method in computational effectiveness and efficiency for medical images, remote sensing images, and color images. In the future, our method can be applied to multiphase segmentation for three-dimensional reconstruction of medical images.
Keywords: multiphase image segmentation; Vese-Chan model; convex relaxation; continuous max-flow method (CMF); alternating direction method of multipliers (ADMM)
Updated: 2024-05-08
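The Method part of this abstract builds the characteristic function of each phase from binary label functions via the binary representation of the phase index, and finally thresholds the convex-relaxed labels back to binary values. The sketch below illustrates that encoding at a single pixel: with n label functions there are 2^n phases, and the characteristic function of a phase is a product of factors φ or (1 - φ), one per binary digit. The bit ordering (least significant digit first), the threshold level of 0.5, and all function names are assumptions for illustration and not details given in the abstract.

```python
def characteristic(labels, phase):
    """Characteristic function of `phase` at one pixel.

    labels: values of the n label functions at the pixel (binary, or relaxed to [0, 1]).
    phase:  integer in [0, 2**n); its j-th binary digit selects the factor
            labels[j] (digit 1) or 1 - labels[j] (digit 0).
    """
    value = 1.0
    for j, phi in enumerate(labels):
        bit = (phase >> j) & 1
        value *= phi if bit == 1 else (1.0 - phi)
    return value


def threshold(relaxed, level=0.5):
    """Turn convex-relaxed label values into binary labels (level is an assumption)."""
    return [1 if v >= level else 0 for v in relaxed]


def phase_of(binary_labels):
    """Phase index encoded by binary labels, least significant digit first."""
    return sum(bit << j for j, bit in enumerate(binary_labels))


# Hypothetical relaxed label values at one pixel, for illustration only.
relaxed = [0.3, 0.8]
binary = threshold(relaxed)
pixel_phase = phase_of(binary)
```

For any label values in [0, 1], the characteristic functions of all 2^n phases sum to 1 at each pixel, and for binary labels exactly one of them equals 1, so every pixel is assigned to exactly one phase.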

Abstract:
Objective: Multiphase image segmentation, an extension of two-phase image segmentation, automatically partitions an image into regions according to different image features. It is a basic problem in image processing, image analysis, and computer vision. The variational Vese-Chan model is a basic multiphase segmentation model that constructs the characteristic functions of the phases (regions) from a small number of label functions, which keeps the problem size small. The graph cut (GC) algorithm transforms the minimization of the energy function into a min-cut/max-flow problem, which greatly improves computational efficiency. In the spatially discrete setting, however, the results of the min-cut method depend on the discrete grid, which introduces metrication errors (grid-induced measurement errors). In recent years, the continuous max-flow (CMF) method was proposed. As a continuous formulation of the classical GC algorithm, CMF retains the efficiency of GC while avoiding the errors caused by discretization. Within the variational framework, CMF methods have been proposed and studied for the multiphase Potts model and the two-phase Chan-Vese model. However, a CMF method for the Vese-Chan model has not been studied. Therefore, we propose a CMF method for the multiphase Vese-Chan model based on convex relaxation and study its effectiveness and efficiency.
Method: In this study, binary label functions are used to construct the characteristic function of each phase from the binary representation of the region index (a natural number). The characteristic functions are divided into two groups according to whether the corresponding binary digit is 0 or 1, and the data term of the segmentation model is divided into two parts accordingly. Multiphase image segmentation can therefore be transformed into two-phase image segmentation. The model can be expressed in a form that is symmetric in the label functions, which makes it easier to interpret and implement. We introduce three dual variables: the source flow, the sink flow, and the spatial flow field. We then rewrite the energy minimization problem of the Vese-Chan model in terms of these three dual variables. Flow conservation conditions are obtained by solving the minimization of the energy function. The Vese-Chan model is thereby transformed into a continuous max-flow problem that corresponds to the min-cut problem. To improve computational efficiency in the experiments, we also design an alternating direction method of multipliers (ADMM) scheme for the proposed model by introducing Lagrange multipliers and penalty parameters. The main idea of alternating optimization is to solve for one variable while the others are held fixed. The energy optimization problem is thus split into three simple subproblems that are easier to solve. For example, the subproblem for the source flow is solved with the sink flow and the spatial flow field fixed. At each step, the three dual variables are computed and the Lagrange multipliers are updated. All of these variables are projected onto their admissible ranges. The iteration stops when the energy error criterion is satisfied. To represent the boundaries of the segmented image, the convex-relaxed label functions are thresholded into binary label functions, from which the segmentation results are finally obtained.
Result: Numerical experiments are performed on gray and color images. According to the number of regions in the images, the experiments are divided into three parts that use two, three, and four binary label functions, respectively. Segmentation results are represented by curves of different colors. In particular, to represent the segmentation of complicated images accurately, we also obtain approximate segmentation results. Regarding segmentation effectiveness, the experiments show that the ADMM method and our proposed method perform equally well on simple synthetic images. Compared with ADMM, however, our method is more accurate on complex images, such as medical and remote sensing images, and separates the segmented objects from the background better. Regarding computational efficiency, with two binary label functions we compare four gray images, including synthetic images, a natural image, and a medical image; the acceleration ratios of computational time for our method are 6.35%, 10.75%, 12.39%, and 7.83%, respectively. With three binary label functions, three gray images are compared, including synthetic images and a remote sensing image; the computational time of our method improves by 12.32%, 15.45%, and 14.04%, respectively. With four binary label functions, we compare two color images, a natural image and a synthetic image; the computational time of our method improves by 16.69% and 20.07%, respectively. In the experiments, our method reduces the number of iterations and improves the convergence speed. The acceleration ratios show that the efficiency advantage of our method becomes more evident as the number of phases or the complexity of the image increases.
Conclusion: The continuous max-flow method is used to solve the multiphase Vese-Chan image segmentation model. Numerical experiments demonstrate the superiority of our method in effectiveness and efficiency on medical images, remote sensing images, and color images. In the future, our method can be applied to multiphase segmentation for three-dimensional reconstruction of medical images.
Keywords: multiphase image segmentation; Vese-Chan model; convex relaxation; continuous max-flow method (CMF); alternating direction method of multipliers (ADMM)
17|15|0 Updated: 2024-05-08
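
The construction of characteristic functions from binary label functions, and the final thresholding step, can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names (`phase_weights`, `threshold`, `segment`), the bit ordering (bit i of the phase index is read from label function i), and the threshold level of 0.5 are assumptions made for illustration, and the max-flow/ADMM solver that would produce the relaxed labels is not shown.

```python
def phase_weights(labels):
    """Return the 2**n characteristic-function values at one pixel.

    labels: the n label-function values at that pixel, each in [0, 1].
    Phase k uses labels[i] when bit i of k is 1 and (1 - labels[i])
    when bit i of k is 0 (assumed bit ordering).
    """
    n = len(labels)
    weights = []
    for k in range(2 ** n):
        w = 1.0
        for i, phi in enumerate(labels):
            w *= phi if (k >> i) & 1 else 1.0 - phi
        weights.append(w)
    return weights


def threshold(labels, level=0.5):
    """Turn convex-relaxed label values into binary ones (assumed level)."""
    return [1.0 if phi >= level else 0.0 for phi in labels]


def segment(label_images, level=0.5):
    """Map n relaxed label images (lists of rows) to a phase-index image."""
    rows, cols = len(label_images[0]), len(label_images[0][0])
    result = []
    for r in range(rows):
        row = []
        for c in range(cols):
            binary = threshold([img[r][c] for img in label_images], level)
            # With binary labels exactly one characteristic function is 1.
            row.append(phase_weights(binary).index(1.0))
        result.append(row)
    return result


# Two label functions describe up to four phases.
relaxed = [
    [[0.1, 0.9], [0.2, 0.8]],  # label function 0
    [[0.3, 0.4], [0.7, 0.6]],  # label function 1
]
phases = segment(relaxed)
```

For relaxed labels the values returned by `phase_weights` sum to 1 at every pixel, so n label functions partition the image into at most 2**n phases; this is why two, three, and four label functions suffice for the experiments described above.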

Abstract:
Objective: Person re-identification (ReID) refers to retrieving a target pedestrian across multiple non-overlapping cameras. This technology can be widely used in various fields. In security, police can use person ReID to quickly track and locate suspects and to find missing individuals. In album management, users organize electronic albums by person ReID. In e-commerce, managers of unmanned supermarkets use person ReID to track user behavior. However, the task faces a variety of challenges, such as low resolution, illumination changes, background clutter, variable pedestrian actions, and occlusion of pedestrian body parts. Numerous methods have been proposed to solve these problems. Traditional methods fall into two categories: feature design and distance metric learning. Feature design aims to build a discriminative and robust feature representation so that an expressive feature can be extracted from a pedestrian image. Distance metric methods reduce the distance between similar pedestrian images while increasing the distance between different pedestrian images. In recent years, deep learning has been widely used in computer vision, and person ReID based on deep learning has achieved higher recognition rates than traditional methods. Deep learning methods mainly use convolutional neural networks (CNNs) to classify pedestrian images, extract the representations of pedestrian images from the network, and decide whether a pair of pedestrian images matches by computing their similarity. The method proposed in this work is based on a CNN. We observe that misjudgments caused by the occlusion of pedestrian body parts and by misalignment between pedestrian image pairs are frequent. We therefore focus on pedestrian parts with distinctive features and ignore parts that carry interfering information when deciding whether pedestrian pairs captured by different cameras match. We propose a person ReID method that uses attention and attributes to overcome the occlusion and misalignment problems.
Method: Our method introduces pedestrian attribute information, which is divided into local and global attributes. The local attributes comprise head, upper-body, hand, and lower-body attributes. The global attributes include gender and age. The method has two stages: training and testing. In the training stage, an improved ResNet50 network serves as the basic framework for feature extraction, and the network has two branches: a global branch and a local branch. In the global branch, the features are used as the global feature to classify identity and global attributes. The local branch involves several steps. First, the features are expanded into multiple channels. Second, the point with the highest response value in each channel is obtained. Third, these points are clustered, and the clusters are assigned to four pedestrian parts. Fourth, the weight of the distinctive feature on each pedestrian part is computed. Fifth, the weights of the four parts are multiplied by the initial features to obtain the total features of each part. Finally, the total features of the four parts are classified according to identity and the corresponding attributes. In the test stage, the part and global features are extracted by the trained network and concatenated into joint features, and the similarity between pedestrian pairs is computed to decide whether they match. In this method, the network locates pedestrian parts by using attribute information, while its attention mechanisms extract discriminative features of those parts.
Result: The proposed method uses the attribute information in the Market-1501_attribute and DukeMTMC-attribute datasets and is evaluated on the Market-1501 and DukeMTMC-reid datasets. In person ReID, two measures are used to evaluate model performance: the cumulative matching characteristics (CMC) curve and mean average precision (mAP). The CMC curve is drawn as follows. First, the Euclidean distance between each probe image and the gallery images is computed. Second, for each probe image, the gallery images are sorted from the shortest distance to the longest. Finally, the percentage of probes with a true match among the top m sorted images is computed and called rank-m. Rank-1 in particular is an important indicator for person ReID. The mAP is computed as follows. First, the average precision, that is, the area under the precision-recall curve, is computed for each probe image. Then, the mean of the average precision over all probe images is calculated. On the Market-1501 and DukeMTMC-reid datasets, the rank-1 accuracies are 90.67% and 80.2%, respectively, and the mAP values are 76.65% and 62.14%, respectively. Re-ranking has recently been widely used in person ReID. With re-ranking, the rank-1 accuracies are 92.4% and 84.15%, and the mAP values are 87.5% and 78.41%, respectively. Compared with other state-of-the-art methods, the accuracy of our method is greatly improved.
Conclusion: In this study, we propose a ReID method based on attention and attributes. The method directs attention toward pedestrian parts by learning pedestrian attributes, and the attention mechanism then learns the distinctive features of pedestrians. Even when pedestrians are occluded or misaligned, the proposed method still achieves high accuracy.
Keywords: person re-identification; global feature; local feature; pedestrian parts; attention mechanism; attribute information
32|37|9 Updated: 2024-05-08
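
The CMC and mAP steps described in the Result section can be illustrated with a short Python sketch. It makes several assumptions: features are plain lists of floats, the function names (`euclidean`, `rank_gallery`, `evaluate`) and the toy data are hypothetical, and average precision is computed with the common discrete approximation (the mean of the precision at each true match) rather than an exact area under the precision-recall curve. Benchmark protocols typically also filter the gallery, for example by removing images from the probe's own camera; that step is omitted here.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def rank_gallery(probe_feat, gallery_feats):
    """Gallery indices sorted from the shortest to the longest distance."""
    dists = [euclidean(probe_feat, g) for g in gallery_feats]
    return sorted(range(len(gallery_feats)), key=lambda j: dists[j])


def evaluate(probe_feats, probe_ids, gallery_feats, gallery_ids, max_rank=5):
    """Return (CMC values for rank-1..rank-max_rank, mAP)."""
    hits = [0] * max_rank
    ap_sum = 0.0
    for feat, pid in zip(probe_feats, probe_ids):
        order = rank_gallery(feat, gallery_feats)
        matches = [gallery_ids[j] == pid for j in order]
        # CMC: the probe counts as a hit at rank m if a true match
        # appears among its top m gallery images.
        if True in matches:
            first = matches.index(True)
            for m in range(first, max_rank):
                hits[m] += 1
        # AP: mean of the precision measured at each true match.
        found, precisions = 0, []
        for pos, is_match in enumerate(matches, start=1):
            if is_match:
                found += 1
                precisions.append(found / pos)
        ap_sum += sum(precisions) / len(precisions) if precisions else 0.0
    n = len(probe_feats)
    return [h / n for h in hits], ap_sum / n


# Toy data: two identities, four gallery images, two probes.
gallery_feats = [[0.0, 0.0], [1.0, 0.0], [5.0, 5.0], [6.0, 5.0]]
gallery_ids = ["a", "b", "a", "b"]
probe_feats = [[0.2, 0.0], [0.0, 0.1]]
probe_ids = ["b", "a"]
cmc, mean_ap = evaluate(probe_feats, probe_ids, gallery_feats, gallery_ids, max_rank=3)
```

In this toy example the first probe finds its true match only at the second position, so rank-1 is 0.5 while rank-2 is 1.0, which shows why rank-1 is the strictest of the CMC values.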
