最新刊期

卷 25 , 期 4 , 2020

-

摘要:The task of object detection is to accurately and efficiently identify and locate a large number of predefined objects from images. It aims to locate interested objects from images, accurately determine the categories of each object, and provide the boundaries of each object. Since the proposal of Hinton on the use of deep neural network for automatic learning of high-level features in multimedia data, object detection based on deep learning has become an important research hotspot in computer vision. With the wide application of deep learning, the accuracy and efficiency of object detection are greatly improved. However, object detection based on deep learning still have four key technology challenges, namely, improving and optimizing the mainstream object detection algorithms, balancing the detection speed and accuracy, improving the small object detection accuracy, achieving multiclass object detection, and lightweighting the detection model. In view of the above challenges, this study analyzes and summarizes the existing research methods from different aspects. On the basis of extensive literature research, this work analyzed the methods of improving and optimizing the mainstream object detection algorithm from three aspects:the improvement of two-stage object detection algorithm, the improvement of single-stage object detection algorithm, and the combination of two-stage object detection algorithm and single-stage object detection algorithm. In the improvement of the two-stage object detection algorithm, some classical two-stage object detection algorithms, such as R-CNN (region based convolutional neural network), SPPNet(spatial pyramid pooling net), Fast R-CNN, and Faster R-CNN, and some state-of-the-art two-stage object detection algorithms, including Mask R-CNN, Soft-NMS(non maximum suppression), and Softer-NMS, are mainly described. In the improvement of single-stage object detection algorithm, some classical single-stage object detection algorithms, such as YOLO(you only look once)v1, SSD(single shot multiBox detector), and YOLOv2, and the state-of-the-art single-stage object detection algorithms, including YOLOv3, are mainly described. In the combination of two-stage and one-stage object detection algorithms, RON(reverse connection with objectness prior networks) and RefineDet algorithms are mainly described. This study analyzes and summarizes the methods to improve the accuracy of small object detection from five perspectives:using new backbone network, increasing visual field, feature fusion, cascade convolution neural network, and modifying the training method of the model. The new backbone network mainly introduces DetNet, DenseNet, and DarkNet. The backbone network DarkNet is introduced in detail in the improvement of single segment object detection algorithm. It mainly includes two backbone network architectures:DarkNet-19 application in YOLOv2 and DarkNet-53 application in YOLOv3. The related algorithms of increasing receptive field mainly include RFB(receptive field block) Net and TridentNet. The methods of feature fusion mainly involve feature pyramid networks, DES(detection with enriched semantics), and NAS-FPN(neural architecture search-feature pyramid networks). The related algorithms of cascade convolutional neural network mainly include Cascade R-CNN and HRNet. The related algorithms of model training mode optimization mainly consist of YOLOv2, SNIP(scale normalization for image pyramids), and Perceptual GAN(generative adversarial networks). In this study, the method of multiclass object detection is analyzed from the point of view of training method and network structure. The related algorithms of training method optimization mainly include large scale detection through Adaptation, YOLO9000, and Soft Sampling. The related algorithms of network structure improvement mainly include R-FCN-3000. This study analyzes the methods used in lightweight detection model from the perspective of network structure, such as ShuffleNetv1, ShuffleNetv2, MobileNetv1, MobileNetv2, and Mobile Netv3. MobileNetv1 uses depthwise separable convolution to reduce the parameters and computational complexity of the model, and employs pointwise convolution to solve the problem of information flow between the feature maps. MobileNetv2 uses linear bottlenecks to remove the nonlinear activation layer behind the small dimension output layer, thus ensuring the expressive ability of the model. MobileNetv2 also utilizes inverted residual block to improve the model. MobileNetv3 employs complementary search technology combination and network structure improvement to improve the detection accuracy and speed of the model. In this study, the common datasets, such as Caltech, Tiny Images, Cifar, Sun, Places, and Open Images, and the commonly used datasets, including PASCAL VOC 2007, PASCAL VOC 2012, MS COCO(common objects in context), and ImageNet, are introduced in detail. The information of each dataset is summarized, and a set of datasets is established. A table of general datasets is presented, and the dataset name, total images, number of categories, image size, started year, and characteristics of each dataset are introduced in detail. At the same time, the main performance indexes of object detection algorithms, such as accuracy, precision, recall, average precision, and mean average precision, are introduced in detail. Finally, according to the object detection, this work introduces the main performance indicators in detail. Four key technical challenges in the process of measurement, research, and development are compared and analyzed. In addition, a table is set up to describe the performance of some representative algorithms in object detection from the aspects of algorithm name, backbone network, input image size, test dataset, detection accuracy, detection speed, and single-stage or two-stage partition. The traditional object detection algorithm, the improvement and optimization algorithm of the mainstream object detection algorithm, the related information of the small object detection accuracy algorithm, and the multicategory object detection algorithm are improved, to predict and prospect the problems to be solved in object detection and the future research direction. The related research of object detection is still a hot spot in computer vision and pattern recognition. Several high-precision and efficient algorithms are proposed constantly, and increasing research directions will be developed in the future. The key technologies of object detection based on in-depth learning need to be solved in the next step. The future research directions mainly include how to make the model suitable for the detection needs of specific scenarios, how to achieve accurate object detection problems under the condition of lack of prior knowledge, how to obtain high-performance backbone network and information, how to add rich image semantic information, how to improve the interpretability of deep learning model, and how to automate the realization of the optimal network architecture.关键词:object detection;deep learning;small object;multi-class;lightweighting404|245|85更新时间:2024-05-07

摘要:The task of object detection is to accurately and efficiently identify and locate a large number of predefined objects from images. It aims to locate interested objects from images, accurately determine the categories of each object, and provide the boundaries of each object. Since the proposal of Hinton on the use of deep neural network for automatic learning of high-level features in multimedia data, object detection based on deep learning has become an important research hotspot in computer vision. With the wide application of deep learning, the accuracy and efficiency of object detection are greatly improved. However, object detection based on deep learning still have four key technology challenges, namely, improving and optimizing the mainstream object detection algorithms, balancing the detection speed and accuracy, improving the small object detection accuracy, achieving multiclass object detection, and lightweighting the detection model. In view of the above challenges, this study analyzes and summarizes the existing research methods from different aspects. On the basis of extensive literature research, this work analyzed the methods of improving and optimizing the mainstream object detection algorithm from three aspects:the improvement of two-stage object detection algorithm, the improvement of single-stage object detection algorithm, and the combination of two-stage object detection algorithm and single-stage object detection algorithm. In the improvement of the two-stage object detection algorithm, some classical two-stage object detection algorithms, such as R-CNN (region based convolutional neural network), SPPNet(spatial pyramid pooling net), Fast R-CNN, and Faster R-CNN, and some state-of-the-art two-stage object detection algorithms, including Mask R-CNN, Soft-NMS(non maximum suppression), and Softer-NMS, are mainly described. In the improvement of single-stage object detection algorithm, some classical single-stage object detection algorithms, such as YOLO(you only look once)v1, SSD(single shot multiBox detector), and YOLOv2, and the state-of-the-art single-stage object detection algorithms, including YOLOv3, are mainly described. In the combination of two-stage and one-stage object detection algorithms, RON(reverse connection with objectness prior networks) and RefineDet algorithms are mainly described. This study analyzes and summarizes the methods to improve the accuracy of small object detection from five perspectives:using new backbone network, increasing visual field, feature fusion, cascade convolution neural network, and modifying the training method of the model. The new backbone network mainly introduces DetNet, DenseNet, and DarkNet. The backbone network DarkNet is introduced in detail in the improvement of single segment object detection algorithm. It mainly includes two backbone network architectures:DarkNet-19 application in YOLOv2 and DarkNet-53 application in YOLOv3. The related algorithms of increasing receptive field mainly include RFB(receptive field block) Net and TridentNet. The methods of feature fusion mainly involve feature pyramid networks, DES(detection with enriched semantics), and NAS-FPN(neural architecture search-feature pyramid networks). The related algorithms of cascade convolutional neural network mainly include Cascade R-CNN and HRNet. The related algorithms of model training mode optimization mainly consist of YOLOv2, SNIP(scale normalization for image pyramids), and Perceptual GAN(generative adversarial networks). In this study, the method of multiclass object detection is analyzed from the point of view of training method and network structure. The related algorithms of training method optimization mainly include large scale detection through Adaptation, YOLO9000, and Soft Sampling. The related algorithms of network structure improvement mainly include R-FCN-3000. This study analyzes the methods used in lightweight detection model from the perspective of network structure, such as ShuffleNetv1, ShuffleNetv2, MobileNetv1, MobileNetv2, and Mobile Netv3. MobileNetv1 uses depthwise separable convolution to reduce the parameters and computational complexity of the model, and employs pointwise convolution to solve the problem of information flow between the feature maps. MobileNetv2 uses linear bottlenecks to remove the nonlinear activation layer behind the small dimension output layer, thus ensuring the expressive ability of the model. MobileNetv2 also utilizes inverted residual block to improve the model. MobileNetv3 employs complementary search technology combination and network structure improvement to improve the detection accuracy and speed of the model. In this study, the common datasets, such as Caltech, Tiny Images, Cifar, Sun, Places, and Open Images, and the commonly used datasets, including PASCAL VOC 2007, PASCAL VOC 2012, MS COCO(common objects in context), and ImageNet, are introduced in detail. The information of each dataset is summarized, and a set of datasets is established. A table of general datasets is presented, and the dataset name, total images, number of categories, image size, started year, and characteristics of each dataset are introduced in detail. At the same time, the main performance indexes of object detection algorithms, such as accuracy, precision, recall, average precision, and mean average precision, are introduced in detail. Finally, according to the object detection, this work introduces the main performance indicators in detail. Four key technical challenges in the process of measurement, research, and development are compared and analyzed. In addition, a table is set up to describe the performance of some representative algorithms in object detection from the aspects of algorithm name, backbone network, input image size, test dataset, detection accuracy, detection speed, and single-stage or two-stage partition. The traditional object detection algorithm, the improvement and optimization algorithm of the mainstream object detection algorithm, the related information of the small object detection accuracy algorithm, and the multicategory object detection algorithm are improved, to predict and prospect the problems to be solved in object detection and the future research direction. The related research of object detection is still a hot spot in computer vision and pattern recognition. Several high-precision and efficient algorithms are proposed constantly, and increasing research directions will be developed in the future. The key technologies of object detection based on in-depth learning need to be solved in the next step. The future research directions mainly include how to make the model suitable for the detection needs of specific scenarios, how to achieve accurate object detection problems under the condition of lack of prior knowledge, how to obtain high-performance backbone network and information, how to add rich image semantic information, how to improve the interpretability of deep learning model, and how to automate the realization of the optimal network architecture.关键词:object detection;deep learning;small object;multi-class;lightweighting404|245|85更新时间:2024-05-07

Review

-

摘要:ObjectiveFacial images are widely used in many applications such as social media, medical systems, and smart transportation systems. Such data, however, are inherently sensitive and private. Individuals' private information may be leaked if their facial images are released directly in the application systems. In social network platforms, attackers can use the facial images of individuals to attack their sensitive information. Many classical privacy-preserving methods, such as k-anonymous and data encryption, have been proposed to handle the privacy problem in facial images. However, the classical methods always rely on strong background assumptions, which cannot be supported in real-world applications. Differential privacy is the state-of-the-art method used to address the privacy concerns in data publication, which provides rigorous guarantees for the privacy of each user by adding randomized noise in Google Chrome, Apple iOS, and macOS. Therefore, to protect the private information in facial images, this paper proposes three efficient algorithms, namely, low rank-based private facial image release algorithm (LRA), singular value decomposition (SVD)-based private facial image release algorithm (SRA), and enhanced SVD-based private facial image release algorithm (ESRA), which are based on matrix decomposition combined with differential privacy.MethodThe three algorithms employed the real-valued matrix to model facial images in which each cell corresponds to each pixel point of facial images. Based on the real-valued matrix, the neighborhood of some facial images can be defined easily, which are crucial bases to use Laplace mechanism to generate Laplace noise. Then, LRA, SRA, and ESRA rely on low-rank decomposition and SVD to compress facial images. This step aims to reduce the Laplace noise and boost the accuracy of the publication of facial images. The three algorithms use the Laplace mechanism to inject noise into each value of the compressed facial image to ensure differential privacy. Finally, the three algorithms use matrix algebraic operations to reconstruct the noisy facial image. However, in the SRA and ESRA algorithms, two sources of errors are encountered:1) Laplace error (LE) due to Laplace noise injected and 2) reconstruction error (RE) caused by lossy compression. The two errors are controlled by r parameter, which is the compression factor in the SRA and ESRA algorithms. Setting the compact parameter r constrains the LE and RE. The SRA algorithm sets the parameter in a heuristic manner in which one may fix the value in terms of experiences. However, the choice of r in the SRA algorithm is a problem because a large r leads to excessive LE, while a small r makes the RE extremely large. Furthermore, r cannot be directly set based on the real-valued matrix; otherwise, the choice of r itself violates differential privacy. Based on the preceding observation, the ESRA algorithm is proposed to handle the problem caused by the selection of the parameter r. The main idea of the ESRA algorithm involves two steps:employing exponential mechanism to sample r elements in the decomposition matrix and injecting the Laplace noise into the elements. According to the sequential composition of differential privacy, the two steps in the ESRA algorithm meet ε-differential privacy.ResultOn the basis of the SVM classification and information entropy technique, two group experiments were conducted over six real facial image datasets (Yale, ORL, CMU, Yale B, Faces95, and Faces94) to evaluate the quality of the facial images generated from the LRA, SRA, ESRA, LAP(Laplace-based facial image protection), LRM(low-rank mechanism), and MM(matrix mechanism) algorithms using a variety of metrics, including precision, recall, F1 score, and entropy. Our experiments show that the proposed LRA, SRA, and ESRA algorithms outperform LAP, LRM, and MM in terms of the abovementioned six metrics. For example, based on the Faces95 dataset, ε=0.1 and matrix=200×180 were set to compare the precision of ESRA, LRM, LRA, and LAP. Result show that the precision of ESRA is 40 and 20 times that of LAP, LRA, and LRM. Based on the six datasets, ESRA achieves better accuracy than LRA and SRA. For example, on the Faces94 dataset, the matrix=200×180 was set and the privacy budget ε (i.e., 0.1, 0.5, 0.9, and 1.3) was varied to study the utility of each algorithm. Results show that the utility measures of all algorithms increase when ε increases. When ε varies from 0.1 to 1.3, ESRA still achieves better precision, recall, F1-score, and entropy than the other algorithms.ConclusionThe oretical analysis and extensive experiments were conducted to compare our algorithms with the LAP, LRM, and MM algorithms. Results show that the proposed algorithms could achieve better utility and outperform the existing solutions. In addition, the proposed algorithms provided new ideas and technical support for further research on facial image release with differential privacy in several application systems.关键词:facial image;privacy protection;differential privacy;matrix decomposition;low-rank decomposition;singular value decomposition(SVD)77|109|3更新时间:2024-05-07

摘要:ObjectiveFacial images are widely used in many applications such as social media, medical systems, and smart transportation systems. Such data, however, are inherently sensitive and private. Individuals' private information may be leaked if their facial images are released directly in the application systems. In social network platforms, attackers can use the facial images of individuals to attack their sensitive information. Many classical privacy-preserving methods, such as k-anonymous and data encryption, have been proposed to handle the privacy problem in facial images. However, the classical methods always rely on strong background assumptions, which cannot be supported in real-world applications. Differential privacy is the state-of-the-art method used to address the privacy concerns in data publication, which provides rigorous guarantees for the privacy of each user by adding randomized noise in Google Chrome, Apple iOS, and macOS. Therefore, to protect the private information in facial images, this paper proposes three efficient algorithms, namely, low rank-based private facial image release algorithm (LRA), singular value decomposition (SVD)-based private facial image release algorithm (SRA), and enhanced SVD-based private facial image release algorithm (ESRA), which are based on matrix decomposition combined with differential privacy.MethodThe three algorithms employed the real-valued matrix to model facial images in which each cell corresponds to each pixel point of facial images. Based on the real-valued matrix, the neighborhood of some facial images can be defined easily, which are crucial bases to use Laplace mechanism to generate Laplace noise. Then, LRA, SRA, and ESRA rely on low-rank decomposition and SVD to compress facial images. This step aims to reduce the Laplace noise and boost the accuracy of the publication of facial images. The three algorithms use the Laplace mechanism to inject noise into each value of the compressed facial image to ensure differential privacy. Finally, the three algorithms use matrix algebraic operations to reconstruct the noisy facial image. However, in the SRA and ESRA algorithms, two sources of errors are encountered:1) Laplace error (LE) due to Laplace noise injected and 2) reconstruction error (RE) caused by lossy compression. The two errors are controlled by r parameter, which is the compression factor in the SRA and ESRA algorithms. Setting the compact parameter r constrains the LE and RE. The SRA algorithm sets the parameter in a heuristic manner in which one may fix the value in terms of experiences. However, the choice of r in the SRA algorithm is a problem because a large r leads to excessive LE, while a small r makes the RE extremely large. Furthermore, r cannot be directly set based on the real-valued matrix; otherwise, the choice of r itself violates differential privacy. Based on the preceding observation, the ESRA algorithm is proposed to handle the problem caused by the selection of the parameter r. The main idea of the ESRA algorithm involves two steps:employing exponential mechanism to sample r elements in the decomposition matrix and injecting the Laplace noise into the elements. According to the sequential composition of differential privacy, the two steps in the ESRA algorithm meet ε-differential privacy.ResultOn the basis of the SVM classification and information entropy technique, two group experiments were conducted over six real facial image datasets (Yale, ORL, CMU, Yale B, Faces95, and Faces94) to evaluate the quality of the facial images generated from the LRA, SRA, ESRA, LAP(Laplace-based facial image protection), LRM(low-rank mechanism), and MM(matrix mechanism) algorithms using a variety of metrics, including precision, recall, F1 score, and entropy. Our experiments show that the proposed LRA, SRA, and ESRA algorithms outperform LAP, LRM, and MM in terms of the abovementioned six metrics. For example, based on the Faces95 dataset, ε=0.1 and matrix=200×180 were set to compare the precision of ESRA, LRM, LRA, and LAP. Result show that the precision of ESRA is 40 and 20 times that of LAP, LRA, and LRM. Based on the six datasets, ESRA achieves better accuracy than LRA and SRA. For example, on the Faces94 dataset, the matrix=200×180 was set and the privacy budget ε (i.e., 0.1, 0.5, 0.9, and 1.3) was varied to study the utility of each algorithm. Results show that the utility measures of all algorithms increase when ε increases. When ε varies from 0.1 to 1.3, ESRA still achieves better precision, recall, F1-score, and entropy than the other algorithms.ConclusionThe oretical analysis and extensive experiments were conducted to compare our algorithms with the LAP, LRM, and MM algorithms. Results show that the proposed algorithms could achieve better utility and outperform the existing solutions. In addition, the proposed algorithms provided new ideas and technical support for further research on facial image release with differential privacy in several application systems.关键词:facial image;privacy protection;differential privacy;matrix decomposition;low-rank decomposition;singular value decomposition(SVD)77|109|3更新时间:2024-05-07

Image Processing and Coding

-

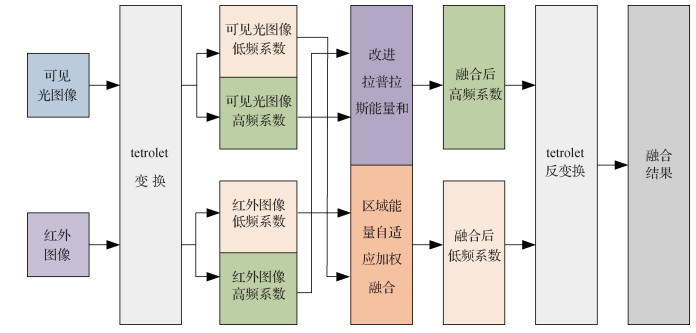

摘要:ObjectiveChange detection aims at detecting the difference of the images captured from same scene in different time observations. This condition is an important research problem in computer vision. However, the traditional change detection methods, which use the handcrafted features and heuristic models, suffer from lighting variations and camera pose differences, resulting in bad change detection results. Recent deep learning-based convolutional neural networks (CNN) achieve huge success on several computer vision problems, such as image classification, semantic segmentation, and saliency detection. The main reason of the success of the deep learning-based methods is the abstract ability of CNN. To conquer the bad effects of lighting variations and camera pose differences, we can employ deep learning-based CNN in change detection problems. Unlike semantic segmentation, change detection inputs image pairs of two time observations. Thus, a key research problem is how to design an effective architecture of CNN, which can fully explore the intrinsic changes of the image pairs. To generate robust change detection results, we propose in this study a multiscale deep feature fusion-based change detection (MDFCD).MethodThe proposed MDFCD network has two streams of feature extracting sub-networks, which share weight parameters. Each sub-network is responsible for learning to extract semantic features from the corresponding RGB image. We use VGG(visual geometry group)16 as the basic backbone of the proposed MDFCD. The last fully connected layers of VGG16 are removed to save the spatial resolution of the features of the last convolutional layer. We adopt the features of convolutional blocks Conv3, Conv4, and Conv5 of VGG16 as our multiscale deep features because they can capture high-level, middle-level, and low-level features. Then, the Enc (encoding) module is proposed to fuse the deep features from the two time observations of the same convolutional block. We use "concat" operation to concatenate the features. The resulted features are input into Enc to generate change detection adaptive features at the corresponding feature level. The encoded features from the lower layer are upsampled twice in height and width. Then, we concatenate the deep features of the previous layers' convolutional blocks. Subsequently, Enc is used again to learn adaptive features. By progressively incorporating the features from Conv5 to Conv3, we obtain deep fusion of CNN features at multiple scales. To generate robust change detection, we add a convolutional layer with 2×3×3 convolutional filters to generate change prediction at each scale encoding module. Then, the change predictions of all scales are concatenated together to produce final change detection results. Note that we use bicubic upsampling operation to upsample the change detection map at each scale to the size of the input image.ResultWe compared three benchmark datasets, namely, VL_CMU_CD(visual localization of Carnegie Mellon University for change detection), PCD(panoramic change detection), CDnet(change detection net), by the state-of-the-art change detection methods, that is, FGCD(fine-grained change detection), SC_SOBS(SC-self-organizing background subtraction), SuBSENSE(self-balanced sensitirity segmenter), and FCN(fully convolutional network). We employed F1-measure, recall, precision, specific, FPR(false positive rate), FNR(false negative rate), PWC(percentage of wrong classification) to evaluate the difference of the compared change detection methods. The experiments show that MDFCD are better than the other compared methods. Among the compared methods, deep learning-based change detection method FCD performs the best. On VL_CMU_CD, the F1-measure and Precision of MDFCD achieve 12.2% and 24.4% relative improvements over the second-placed change detection method FCN, respectively. On PCD, the F1-measure and precision of MDFCD obtain 2.1% and 17.7% relative improvements over FCN, respectively. On CDnet, compared with FCN, our F1-measure and precision achieve 8.5% and 5.8% relative improvements, respectively. From the experiments, we can find that MDFCD can detect the fine grained changes, such as telegraph poles. The proposed MDFCD are better in distinguishing the real changes with false changes caused by lighting variations and camera pose difference compared with FCN.ConclusionWe studied how to effectively explore the deep convolutional neural networks for change detection problem. MDFCD network is proposed to alleviate the bad effects introduced by lighting variations and camera pose differences. The proposed method adopts a siamese network with VGG16 as the backbone. Each path is responsible for extracting deep features from reference and query images. We also proposed encoding module that fuses multiscale deep convolutional features and learn change detection adaptive features. The deep features are integrated together from high layers' semantic features with low layers' texture features. With this fusion strategy, the proposed method can generate more robust change detection results than other compared methods. The high layers' semantic features can effectively avoid the negative changes caused by lighting and season change. Meanwhile, the low layers' texture features help the proposed method obtain accurate changes at the object boundaries. Compared with deep learning method, FCN, where in the input is concatenate reference and query images, our method of extracting features with respect to each image can extract representatively features for change detection. However, as a general problem of deep learning-based methods, one should use large volume of training images to train CNNs. Another problem is that the present change detection methods pay considerable attention on region changes but not on object-level changes. In our future work, we plan to use weak supervised and unsupervised method to study the change detection to avoid using pixel-level labeled training images. We also plan to study incorporating object detection in change detection to generate object-level changes.关键词:change detection;feature fusion;multiscale;siamese network;deep learning51|30|6更新时间:2024-05-07

摘要:ObjectiveChange detection aims at detecting the difference of the images captured from same scene in different time observations. This condition is an important research problem in computer vision. However, the traditional change detection methods, which use the handcrafted features and heuristic models, suffer from lighting variations and camera pose differences, resulting in bad change detection results. Recent deep learning-based convolutional neural networks (CNN) achieve huge success on several computer vision problems, such as image classification, semantic segmentation, and saliency detection. The main reason of the success of the deep learning-based methods is the abstract ability of CNN. To conquer the bad effects of lighting variations and camera pose differences, we can employ deep learning-based CNN in change detection problems. Unlike semantic segmentation, change detection inputs image pairs of two time observations. Thus, a key research problem is how to design an effective architecture of CNN, which can fully explore the intrinsic changes of the image pairs. To generate robust change detection results, we propose in this study a multiscale deep feature fusion-based change detection (MDFCD).MethodThe proposed MDFCD network has two streams of feature extracting sub-networks, which share weight parameters. Each sub-network is responsible for learning to extract semantic features from the corresponding RGB image. We use VGG(visual geometry group)16 as the basic backbone of the proposed MDFCD. The last fully connected layers of VGG16 are removed to save the spatial resolution of the features of the last convolutional layer. We adopt the features of convolutional blocks Conv3, Conv4, and Conv5 of VGG16 as our multiscale deep features because they can capture high-level, middle-level, and low-level features. Then, the Enc (encoding) module is proposed to fuse the deep features from the two time observations of the same convolutional block. We use "concat" operation to concatenate the features. The resulted features are input into Enc to generate change detection adaptive features at the corresponding feature level. The encoded features from the lower layer are upsampled twice in height and width. Then, we concatenate the deep features of the previous layers' convolutional blocks. Subsequently, Enc is used again to learn adaptive features. By progressively incorporating the features from Conv5 to Conv3, we obtain deep fusion of CNN features at multiple scales. To generate robust change detection, we add a convolutional layer with 2×3×3 convolutional filters to generate change prediction at each scale encoding module. Then, the change predictions of all scales are concatenated together to produce final change detection results. Note that we use bicubic upsampling operation to upsample the change detection map at each scale to the size of the input image.ResultWe compared three benchmark datasets, namely, VL_CMU_CD(visual localization of Carnegie Mellon University for change detection), PCD(panoramic change detection), CDnet(change detection net), by the state-of-the-art change detection methods, that is, FGCD(fine-grained change detection), SC_SOBS(SC-self-organizing background subtraction), SuBSENSE(self-balanced sensitirity segmenter), and FCN(fully convolutional network). We employed F1-measure, recall, precision, specific, FPR(false positive rate), FNR(false negative rate), PWC(percentage of wrong classification) to evaluate the difference of the compared change detection methods. The experiments show that MDFCD are better than the other compared methods. Among the compared methods, deep learning-based change detection method FCD performs the best. On VL_CMU_CD, the F1-measure and Precision of MDFCD achieve 12.2% and 24.4% relative improvements over the second-placed change detection method FCN, respectively. On PCD, the F1-measure and precision of MDFCD obtain 2.1% and 17.7% relative improvements over FCN, respectively. On CDnet, compared with FCN, our F1-measure and precision achieve 8.5% and 5.8% relative improvements, respectively. From the experiments, we can find that MDFCD can detect the fine grained changes, such as telegraph poles. The proposed MDFCD are better in distinguishing the real changes with false changes caused by lighting variations and camera pose difference compared with FCN.ConclusionWe studied how to effectively explore the deep convolutional neural networks for change detection problem. MDFCD network is proposed to alleviate the bad effects introduced by lighting variations and camera pose differences. The proposed method adopts a siamese network with VGG16 as the backbone. Each path is responsible for extracting deep features from reference and query images. We also proposed encoding module that fuses multiscale deep convolutional features and learn change detection adaptive features. The deep features are integrated together from high layers' semantic features with low layers' texture features. With this fusion strategy, the proposed method can generate more robust change detection results than other compared methods. The high layers' semantic features can effectively avoid the negative changes caused by lighting and season change. Meanwhile, the low layers' texture features help the proposed method obtain accurate changes at the object boundaries. Compared with deep learning method, FCN, where in the input is concatenate reference and query images, our method of extracting features with respect to each image can extract representatively features for change detection. However, as a general problem of deep learning-based methods, one should use large volume of training images to train CNNs. Another problem is that the present change detection methods pay considerable attention on region changes but not on object-level changes. In our future work, we plan to use weak supervised and unsupervised method to study the change detection to avoid using pixel-level labeled training images. We also plan to study incorporating object detection in change detection to generate object-level changes.关键词:change detection;feature fusion;multiscale;siamese network;deep learning51|30|6更新时间:2024-05-07 -

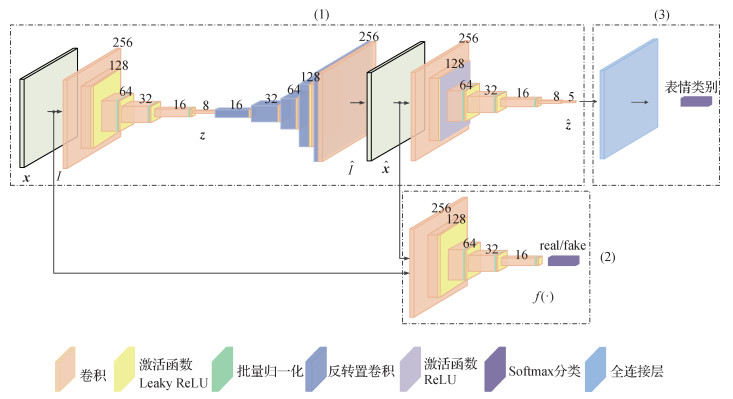

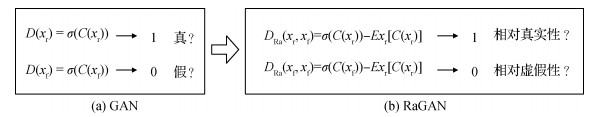

摘要:ObjectiveChina has a large population of 1.3 billion in 2005, accounting for 19% of the world's population. This number is equivalent to the population of Europe or Africa added to the populations of Australia, North America, and Central America. It is one of the few populous countries in the world, and its huge population size has brought many problems. With the rapid development of the economy, the number of people working outside of their homes is increasing, the population is moving frequently, and the safety of the floating population is even difficult to control. The huge mobile population provides the city's infrastructure and public services tremendous pressure. Thus, to conduct a comprehensive check on the area of adult traffic, which is time-consuming and labor-intensive, is difficult for security and related staff. Particularly, complex environmental safety problems, such as subways, railway stations, and airports, are becoming increasingly serious. Unstable events occur frequently, security situation is receiving much attention, and urban management and service systems are seriously lagging behind. These conditions need to be improved, especially after the September 11 incident in the United States. The situation has aroused widespread concern in the international community. Meanwhile, expression is the most intuitive way for humans to express emotions. In addition to language communication, expressions are extremely effective means of communication. People usually express their inner feelings through specific expressions. Expression can be used to judge other person's thoughts. For expressions used to express information, psychologist Mehrabian summed up a formula:emotional expression=7% of words + 38% of sound + 55% of facial expressions. Expression is one of the most important features of human emotion recognition. Expression is the emotional state expressed by facial muscle changes. Through the facial expression of the person's face, to evaluate abnormal psychological state, speculate on extreme emotions, and observe the facial expressions of pedestrians in the subway, railway station, and airport to further judge the psychology of the person is possible. We provide technical support to determine who is suspicious and prevent certain criminal activities in a timely manner. Strengthening urban surveillance and identifying the facial expressions of criminals are especially important. Expression plays an important role in human emotion cognition. However, factors affecting facial expression recognition in safety screening are extremely large, and the large intra-class gap seriously inflluences the accuracy of facial expression recognition. The problem of large gaps in facial expression recognition in a real environment is solved by identifying suspected molecules to be monitored should be identified, and the security personnel should prepare in advance to accurately identify them. Facial expressions are also particularly important for preventing security problems. The era of large data has arrived. Meanwhile, with the advancement of computer hardware, deep learning continues to develop. The traditional facial expression recognition method cannot meet the needs of the development of the times, and a new algorithm based on deep learning facial expression recognition is coming soon. Learning methods are widely used in facial expression recognition. Although facial recognition intelligent recognition technology has a long history of research, a large number of research methods have been proposed. However, due to the large facial expression gap, the expression is complex, and the influencing factors are many. The current intelligent recognition effect of facial expression results is not ideal. Considering the deep learning because of its powerful expressive ability, this study introduces the model structure of traditional neural network and carries out corresponding experiments and analysis in the context of real-life facial expression recognition and proposes real-world facial expression recognition research based on deep learning. In the next period, real-world facial expression recognition will make considerable progress. This work further studies the realistic facial expression recognition based on deep learning.MethodThis study constructs a new IC-GAN(intra-class gap GAN(generative adversarial network)) recognition network model, providing good adaptability to the facial expression recognition task with large gap within the class. The network consists of a convolutional layer, a fully connected layer, an active layer, a BathNorm layer, and a Softmax layer, in which a convolutional assembly encoder and a decoder are used to perform deep feature extraction on facial expression images, and download and parse from the network. The video self-made mixed facial expression data set is based on the real environment, the image is expanded, and the facial expression data are normalized. The complexity of the facial expression features with large differences within the class also increased the network training and network recognition. The momentum-based Adam is used to update the network weight, adjust the network parameters, and optimize the network structure based on this factor. In this study, the facial expression category data are trained based on the Pytorch platform in deep learning and tested on the verification set of the self-made mixed facial expression data set.ResultWhen the input image is 256×256 pixels, the IC-GAN network model can reduce the false positive rate of the expression in large difference in the class, image blur, and facial expression incompleteness, and improve the system robustness. Compared with deep belief network(DBN) deep trust network and GoogLeNet network, the recognition result of IC-GAN network is 11% higher than that of DBN network and 8.3% higher than that of GoogLeNet network.ConclusionThe IC-GAN accuracy in facial expression recognition with large gaps in the class is verified by experiments. This condition reduces the misunderstanding rate of facial expressions in large intra-class differences, improves the system robustness, and lays down the solid foundation for facial expression generation.关键词:deep learning;generative adversarial network(GAN);intra-class gap GAN(IC-GAN);facial expression recognition50|80|1更新时间:2024-05-07

摘要:ObjectiveChina has a large population of 1.3 billion in 2005, accounting for 19% of the world's population. This number is equivalent to the population of Europe or Africa added to the populations of Australia, North America, and Central America. It is one of the few populous countries in the world, and its huge population size has brought many problems. With the rapid development of the economy, the number of people working outside of their homes is increasing, the population is moving frequently, and the safety of the floating population is even difficult to control. The huge mobile population provides the city's infrastructure and public services tremendous pressure. Thus, to conduct a comprehensive check on the area of adult traffic, which is time-consuming and labor-intensive, is difficult for security and related staff. Particularly, complex environmental safety problems, such as subways, railway stations, and airports, are becoming increasingly serious. Unstable events occur frequently, security situation is receiving much attention, and urban management and service systems are seriously lagging behind. These conditions need to be improved, especially after the September 11 incident in the United States. The situation has aroused widespread concern in the international community. Meanwhile, expression is the most intuitive way for humans to express emotions. In addition to language communication, expressions are extremely effective means of communication. People usually express their inner feelings through specific expressions. Expression can be used to judge other person's thoughts. For expressions used to express information, psychologist Mehrabian summed up a formula:emotional expression=7% of words + 38% of sound + 55% of facial expressions. Expression is one of the most important features of human emotion recognition. Expression is the emotional state expressed by facial muscle changes. Through the facial expression of the person's face, to evaluate abnormal psychological state, speculate on extreme emotions, and observe the facial expressions of pedestrians in the subway, railway station, and airport to further judge the psychology of the person is possible. We provide technical support to determine who is suspicious and prevent certain criminal activities in a timely manner. Strengthening urban surveillance and identifying the facial expressions of criminals are especially important. Expression plays an important role in human emotion cognition. However, factors affecting facial expression recognition in safety screening are extremely large, and the large intra-class gap seriously inflluences the accuracy of facial expression recognition. The problem of large gaps in facial expression recognition in a real environment is solved by identifying suspected molecules to be monitored should be identified, and the security personnel should prepare in advance to accurately identify them. Facial expressions are also particularly important for preventing security problems. The era of large data has arrived. Meanwhile, with the advancement of computer hardware, deep learning continues to develop. The traditional facial expression recognition method cannot meet the needs of the development of the times, and a new algorithm based on deep learning facial expression recognition is coming soon. Learning methods are widely used in facial expression recognition. Although facial recognition intelligent recognition technology has a long history of research, a large number of research methods have been proposed. However, due to the large facial expression gap, the expression is complex, and the influencing factors are many. The current intelligent recognition effect of facial expression results is not ideal. Considering the deep learning because of its powerful expressive ability, this study introduces the model structure of traditional neural network and carries out corresponding experiments and analysis in the context of real-life facial expression recognition and proposes real-world facial expression recognition research based on deep learning. In the next period, real-world facial expression recognition will make considerable progress. This work further studies the realistic facial expression recognition based on deep learning.MethodThis study constructs a new IC-GAN(intra-class gap GAN(generative adversarial network)) recognition network model, providing good adaptability to the facial expression recognition task with large gap within the class. The network consists of a convolutional layer, a fully connected layer, an active layer, a BathNorm layer, and a Softmax layer, in which a convolutional assembly encoder and a decoder are used to perform deep feature extraction on facial expression images, and download and parse from the network. The video self-made mixed facial expression data set is based on the real environment, the image is expanded, and the facial expression data are normalized. The complexity of the facial expression features with large differences within the class also increased the network training and network recognition. The momentum-based Adam is used to update the network weight, adjust the network parameters, and optimize the network structure based on this factor. In this study, the facial expression category data are trained based on the Pytorch platform in deep learning and tested on the verification set of the self-made mixed facial expression data set.ResultWhen the input image is 256×256 pixels, the IC-GAN network model can reduce the false positive rate of the expression in large difference in the class, image blur, and facial expression incompleteness, and improve the system robustness. Compared with deep belief network(DBN) deep trust network and GoogLeNet network, the recognition result of IC-GAN network is 11% higher than that of DBN network and 8.3% higher than that of GoogLeNet network.ConclusionThe IC-GAN accuracy in facial expression recognition with large gaps in the class is verified by experiments. This condition reduces the misunderstanding rate of facial expressions in large intra-class differences, improves the system robustness, and lays down the solid foundation for facial expression generation.关键词:deep learning;generative adversarial network(GAN);intra-class gap GAN(IC-GAN);facial expression recognition50|80|1更新时间:2024-05-07 -

摘要:ObjectiveUyghur belongs to adhesive language, and the formation and meaning of words in Uyghur language depend on affix connection, which add affixes to the stems to achieve different semantic meaning. For example, in Uyghur "مەكتەپنى" refers to school, and it could be added by a first person singular in Uyghur "ىر" as a suffix to form a new word "بەرسىڭىز", which means my school. During adding suffixes, certain morphological changes will occur at the tail of the stems. In addition, phonetic change also happens at that process, such as weakling (some morphological changes occur at the tail of a stem), epenthesis (a few characters were added at the tail of the stem), and deletion(a few characters were deleted at the tail of the stem). Multiple phonetic changes also appear simultaneously, causing further morphological change. All the semorphological changes form the word different from the stem. Therefore, using the current word spotting technique, only a specific Uyghur vocabulary can be retrieved, and a certain stem cannot search its corresponding suffixed words. In addition, traditional word spotting approaches only aim at the number of the matching sets and noton the spatial relationship of the matching sets. Therefore, some drawbacks for word spotting technique occur. This study proposes Uyghur-printed suffix word retrieval based on mapping relationship that take advantage of the spatial relationship of the matching sets to retrieve the corresponding suffix words of the stems.MethodThe process of the proposed approach is described as follows.First, the segmentation algorithm segments printed the Uyghur document images to word image corps. Then, the local features of the Uyghur word image are extracted. To compare the efficiency of the different local features, scale-invariant feature transform(SIFT) and speeded up robust features(SURF) have been adopted. The experiment result shows that SIFT feature has better performance than SURF because SIFT can obtain more feature points than SURF, and the distribution of SIFT feature points is more diverse than SURF, which is very helpful for further retrieval steps. However, SURF is more efficient than SIFT considering the time efficiency. Then, Brute-Force matching and fast library for approximate nearest neighbor(FLANN) bilateral feature matching have been adopted as matching algorithm. The experiment result shows that FLANN bilateral feature matching has better performance than Brute-Force matching because FLANN bilateral feature matching can filter more mismatch pairs than Brute-Force matching. In addition, the correctness of the feature matching set is very important to the following suffix word retrieval, and the accuracy of FLANN bilateral feature matching is very outstanding. Finally, the feature matching sets are subjected to homography transformation and perspective transformation to the Uyghur word image for the final retrieval steps. After homography transformation and perspective transformation, a quadrilateral is built. If this quadrilateral belongs to rectangle and the right part of the acquired rectangle simply match with the outline of the query word image, then the retrieved word belongs to corresponding suffixed words of the query stem.Meanwhile, if this quadrilateral does not belong to rectangle and does not match with the outline of the query word image, then the retrieved word does not belong to the corresponding suffixed words of the query stem but to the mismatch. This result indicates that the proposed method not only can retrieve corresponding suffixed words of the query word but also can filter the mismatch word. In other words, the feature matching sets are transformed into a spatial relationship and are further determined whether the retrieved word belongs to suffix word or mismatched word according to the spatial relationship. The spatial relationship of the feature matching set is searched for the suffix word, thereby implementing the printed Uyghur Text image suffix word retrieval.ResultThe experimental data selected 17 648 segmented word images in 190 Uyghur print text images and 30 word images, which have 167 corresponding suffix words considered as the search terms. In the experiment, we used different local feature algorithms to suffix retrieval. The comparison results show that the SIFT algorithm's suffix retrieval effect is better than SURF algorithm, and its accuracy and recall rate reach 94.23% and 88.02%, respectively. In addition, we carried out comparative experiments in different situations, such as weakling, epenthesis, deletion, and replacement. Moreover, multiple phonetic changes appear simultaneously and change in the tail of the word stem. In those five different situations, the retrieval result of changes in the tail of the word stem was the best, and its accuracy and recall rate reach 98.9% and 96.07%, respectively. The main reason for this result is that the changes in the tail of the word stem do not make very obvious formation changes of the stem word, which can be very helpful for the suffix word retrieval. However, in different cases, multiple phonetic changes appear simultaneously that the accuracy and recall rate of the retrieval reach 66.6% and 22.2%. The reason for such low performance is that multiple phonetic changes simultaneously change the formation of the stem part in the suffix word.In addition, several characters in the stem part have been replaced by other characters, which are very difficult to retrieve its corresponding suffix word by the original stem word. However, this kind of situation only takes few percentages of the whole morphological changes.ConclusionTherefore, the proposed algorithm meets the different retrieval needs of users. The method of suffix word retrieval based on mapping relationship is also the first implementation of Uyghur suffix word retrieval method. The Uyghur suffix image is efficiently retrieved by the spatial relationship between the matching sets.关键词:Uyghur language;suffix word retrieval;local feature;homograph;perspective transform20|24|0更新时间:2024-05-07

摘要:ObjectiveUyghur belongs to adhesive language, and the formation and meaning of words in Uyghur language depend on affix connection, which add affixes to the stems to achieve different semantic meaning. For example, in Uyghur "مەكتەپنى" refers to school, and it could be added by a first person singular in Uyghur "ىر" as a suffix to form a new word "بەرسىڭىز", which means my school. During adding suffixes, certain morphological changes will occur at the tail of the stems. In addition, phonetic change also happens at that process, such as weakling (some morphological changes occur at the tail of a stem), epenthesis (a few characters were added at the tail of the stem), and deletion(a few characters were deleted at the tail of the stem). Multiple phonetic changes also appear simultaneously, causing further morphological change. All the semorphological changes form the word different from the stem. Therefore, using the current word spotting technique, only a specific Uyghur vocabulary can be retrieved, and a certain stem cannot search its corresponding suffixed words. In addition, traditional word spotting approaches only aim at the number of the matching sets and noton the spatial relationship of the matching sets. Therefore, some drawbacks for word spotting technique occur. This study proposes Uyghur-printed suffix word retrieval based on mapping relationship that take advantage of the spatial relationship of the matching sets to retrieve the corresponding suffix words of the stems.MethodThe process of the proposed approach is described as follows.First, the segmentation algorithm segments printed the Uyghur document images to word image corps. Then, the local features of the Uyghur word image are extracted. To compare the efficiency of the different local features, scale-invariant feature transform(SIFT) and speeded up robust features(SURF) have been adopted. The experiment result shows that SIFT feature has better performance than SURF because SIFT can obtain more feature points than SURF, and the distribution of SIFT feature points is more diverse than SURF, which is very helpful for further retrieval steps. However, SURF is more efficient than SIFT considering the time efficiency. Then, Brute-Force matching and fast library for approximate nearest neighbor(FLANN) bilateral feature matching have been adopted as matching algorithm. The experiment result shows that FLANN bilateral feature matching has better performance than Brute-Force matching because FLANN bilateral feature matching can filter more mismatch pairs than Brute-Force matching. In addition, the correctness of the feature matching set is very important to the following suffix word retrieval, and the accuracy of FLANN bilateral feature matching is very outstanding. Finally, the feature matching sets are subjected to homography transformation and perspective transformation to the Uyghur word image for the final retrieval steps. After homography transformation and perspective transformation, a quadrilateral is built. If this quadrilateral belongs to rectangle and the right part of the acquired rectangle simply match with the outline of the query word image, then the retrieved word belongs to corresponding suffixed words of the query stem.Meanwhile, if this quadrilateral does not belong to rectangle and does not match with the outline of the query word image, then the retrieved word does not belong to the corresponding suffixed words of the query stem but to the mismatch. This result indicates that the proposed method not only can retrieve corresponding suffixed words of the query word but also can filter the mismatch word. In other words, the feature matching sets are transformed into a spatial relationship and are further determined whether the retrieved word belongs to suffix word or mismatched word according to the spatial relationship. The spatial relationship of the feature matching set is searched for the suffix word, thereby implementing the printed Uyghur Text image suffix word retrieval.ResultThe experimental data selected 17 648 segmented word images in 190 Uyghur print text images and 30 word images, which have 167 corresponding suffix words considered as the search terms. In the experiment, we used different local feature algorithms to suffix retrieval. The comparison results show that the SIFT algorithm's suffix retrieval effect is better than SURF algorithm, and its accuracy and recall rate reach 94.23% and 88.02%, respectively. In addition, we carried out comparative experiments in different situations, such as weakling, epenthesis, deletion, and replacement. Moreover, multiple phonetic changes appear simultaneously and change in the tail of the word stem. In those five different situations, the retrieval result of changes in the tail of the word stem was the best, and its accuracy and recall rate reach 98.9% and 96.07%, respectively. The main reason for this result is that the changes in the tail of the word stem do not make very obvious formation changes of the stem word, which can be very helpful for the suffix word retrieval. However, in different cases, multiple phonetic changes appear simultaneously that the accuracy and recall rate of the retrieval reach 66.6% and 22.2%. The reason for such low performance is that multiple phonetic changes simultaneously change the formation of the stem part in the suffix word.In addition, several characters in the stem part have been replaced by other characters, which are very difficult to retrieve its corresponding suffix word by the original stem word. However, this kind of situation only takes few percentages of the whole morphological changes.ConclusionTherefore, the proposed algorithm meets the different retrieval needs of users. The method of suffix word retrieval based on mapping relationship is also the first implementation of Uyghur suffix word retrieval method. The Uyghur suffix image is efficiently retrieved by the spatial relationship between the matching sets.关键词:Uyghur language;suffix word retrieval;local feature;homograph;perspective transform20|24|0更新时间:2024-05-07 -

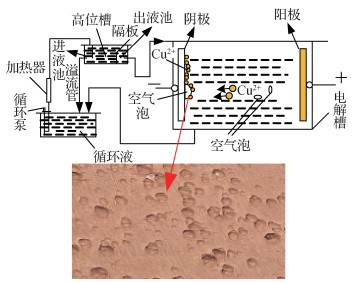

摘要:ObjectiveFrom the global copper demand area, China still occupies the world's largest consumer of copper, accounting for nearly 40% of global total demand. Chinese economic data and news have been the focus of the copper market. In recent years, China's copper processing industry has shown a steady growth, and industry output gradually expanded. Copper processing materials are widely used in almost all national economy industries. Electrolytic copper plays an important role in copper smelting industry. During copper electrolysis, a large amount of air mixed in electrolyte is the main reason for the pores on the surface of copper cathode. The electrolyte dissolved gas is oversaturated to produce gas phase core, which gradually grows to form bubbles, and attaches to the surface of the cathode copper plate to form insulation point. This condition hinders the precipitation of copper ions, resulting in the appearance of bumps on the surface quality of copper plate. The surface quality of the copper plate is usually identified by the operator's eyes to determine the classification to solve the problem of low accuracy and efficiency of manually discriminating the surface quality of electrolytic cathode copper plate. This study proposes an intelligent recognition method of copper plate surface-raised image based on chaotic bird swarm algorithm.MethodTo enhance the global searching ability of the algorithm, the chaos theory is improved to make it enter into a completely chaotic state. The improved chaos theory was introduced based on the local optimal judgment of birds' own fitness value. In the iteration of bird flock, low-quality individuals were selected alternately to carry out chaos and dynamic step position update to increase the population diversity to avoid local optimization of the algorithm. Based on the analysis of surface defects of the copper plate, the base point growth method is proposed to design and detect structural elements. In addition, the texture of copper plate image is eliminated by combining with the morphological operation to improve the algorithm accuracy in calculating the raised area. The birds used Kapur-Sahoo-Wong(KSW) entropy as the fitness function to segment the copper plate images at the best threshold. Background pixels are mixed with raised category pixels in the initial segmentation result due to uneven illumination. Therefore, this study classifies the pixel of the corrected copper plate image through eight neighborhood search and takes it as the final segmentation result. According to the statistics of the number of raised pixels in the segmented image, the actual raised area proportion is obtained to determine whether the copper plate is qualified or defective. In this work, three copper plates with the smallest raised area are selected from 30 copper plates with fixed defective products through rough recognition by the human eyes. The exact bulge ratio is calculated using the algorithm in this study. To strengthen the fault tolerance rate of the algorithm, appropriate adjustment was made on the basis of the minimum value as the final threshold of copper plate classification. In addition, two arbitrary copper plates were selected to verify the method.ResultThe algorithm in this study is compared with genetic algorithm(GA), chicken swarm optimization(CSO), glowworm swarm optimization(GSO), and bird swarm algorithm(BSA) in three indexes of time, fitness value, and structural similarity index measurement(SSIM) value, respectively. Experimental results show that the fitness value of the algorithm in this study was increased by 0.0030.701, and the SSIM value was increased by 0.0750.169.The finding reveals the fault tolerance and superiority of this algorithm in the calculation of the proportion of copper plate surface bulge.ConclusionThe proposed method can effectively detect the proportion of copper surface bulge area and classify qualified and defective products, thus providing a reference to determine the surface quality of electrolytic copper in metallurgical industry.关键词:copper plate defects;threshold segmentation;bird swarm algorithm(BSA);chaos theory;base growth method;Kapur-Sahoo-Wong(KSW) entropy method56|70|1更新时间:2024-05-07

摘要:ObjectiveFrom the global copper demand area, China still occupies the world's largest consumer of copper, accounting for nearly 40% of global total demand. Chinese economic data and news have been the focus of the copper market. In recent years, China's copper processing industry has shown a steady growth, and industry output gradually expanded. Copper processing materials are widely used in almost all national economy industries. Electrolytic copper plays an important role in copper smelting industry. During copper electrolysis, a large amount of air mixed in electrolyte is the main reason for the pores on the surface of copper cathode. The electrolyte dissolved gas is oversaturated to produce gas phase core, which gradually grows to form bubbles, and attaches to the surface of the cathode copper plate to form insulation point. This condition hinders the precipitation of copper ions, resulting in the appearance of bumps on the surface quality of copper plate. The surface quality of the copper plate is usually identified by the operator's eyes to determine the classification to solve the problem of low accuracy and efficiency of manually discriminating the surface quality of electrolytic cathode copper plate. This study proposes an intelligent recognition method of copper plate surface-raised image based on chaotic bird swarm algorithm.MethodTo enhance the global searching ability of the algorithm, the chaos theory is improved to make it enter into a completely chaotic state. The improved chaos theory was introduced based on the local optimal judgment of birds' own fitness value. In the iteration of bird flock, low-quality individuals were selected alternately to carry out chaos and dynamic step position update to increase the population diversity to avoid local optimization of the algorithm. Based on the analysis of surface defects of the copper plate, the base point growth method is proposed to design and detect structural elements. In addition, the texture of copper plate image is eliminated by combining with the morphological operation to improve the algorithm accuracy in calculating the raised area. The birds used Kapur-Sahoo-Wong(KSW) entropy as the fitness function to segment the copper plate images at the best threshold. Background pixels are mixed with raised category pixels in the initial segmentation result due to uneven illumination. Therefore, this study classifies the pixel of the corrected copper plate image through eight neighborhood search and takes it as the final segmentation result. According to the statistics of the number of raised pixels in the segmented image, the actual raised area proportion is obtained to determine whether the copper plate is qualified or defective. In this work, three copper plates with the smallest raised area are selected from 30 copper plates with fixed defective products through rough recognition by the human eyes. The exact bulge ratio is calculated using the algorithm in this study. To strengthen the fault tolerance rate of the algorithm, appropriate adjustment was made on the basis of the minimum value as the final threshold of copper plate classification. In addition, two arbitrary copper plates were selected to verify the method.ResultThe algorithm in this study is compared with genetic algorithm(GA), chicken swarm optimization(CSO), glowworm swarm optimization(GSO), and bird swarm algorithm(BSA) in three indexes of time, fitness value, and structural similarity index measurement(SSIM) value, respectively. Experimental results show that the fitness value of the algorithm in this study was increased by 0.0030.701, and the SSIM value was increased by 0.0750.169.The finding reveals the fault tolerance and superiority of this algorithm in the calculation of the proportion of copper plate surface bulge.ConclusionThe proposed method can effectively detect the proportion of copper surface bulge area and classify qualified and defective products, thus providing a reference to determine the surface quality of electrolytic copper in metallurgical industry.关键词:copper plate defects;threshold segmentation;bird swarm algorithm(BSA);chaos theory;base growth method;Kapur-Sahoo-Wong(KSW) entropy method56|70|1更新时间:2024-05-07 -

摘要:ObjectiveMulti-object tracking is an important research topic in computer vision. Although several previous studies have dealt with varieties of particular problems in multi-object tracking, many challenges are still observed, such as object detection errors, missed detection, frequent and long-term occlusion of objects in complex scenes, and identity switches of tracking objects with similar appearance. All of which are easy to lead to trajectory drift or tracking interruption. With the improvement of object detection, the object tracking method based on detection shows good performance. The key of tracking-by-detection algorithm is the data association between detection points, which mainly consists of two types, namely, frame-by-frame association and multi-frame association. Frame-by-frame data association refers to the association between detection points in the two consecutive frames, which is carried out according to the properties of detection points, such as appearance, location, and size. Tracking drift or failure is likely to occur when object is blocked, misdetected or similar appearance exist due to that the frame-by-frame data association only contains the information of the previous two frames. Multi-frame data association establishes a relational model by using object detection information of multiple frames rather than only previous two frames. This condition can effectively reduce the object error association and deal with occlusion. However, if the occlusion time is longer than the time segment needed for multi-frame data association, the detection points before and after still cannot be successfully associated, and the tracking will also be interrupted. Moreover, this method needs all detection information before tracking, which cannot meet the real-time requirement. Aiming at the problems of ID switches and trajectory fragmentation caused by long-term occlusion, an online multi-object tracking algorithm based on adaptive online discriminative appearance learning and hierarchical association is proposed for multi-object tracking in complex scenes. This process combines the low-level appearance, position-size characteristics used in local association, and high-level motion model established in global association and can meet the real-time tracking requirement.MethodIn this study, multi-object tracking is divided into two stages according to track confidence:local association and global association. The establishment of the object robust appearance model is the key to local association and global association. An online incremental linear discriminant analysis(ILDA) method was introduced to discriminate the appearances of objects and adaptively update the object appearance models based on the difference value between the new sample and the mean of object samples to address the problem of identity switches. The reliable tracklet with high confidence in the local association stage is associated with the current frame detections by low-level properties of detection points:appearance and position-size similarity, which allows reliable trajectories to grow constantly. The unreliable tracklet with low confidence in the global association stage resulted from long-term occlusion is further associated. In this stage, the candidate object consists of two kinds. One is the detection points that are not associated in local association, and the other one is continuous trajectory with high confidence meeting the time condition. The end time of trajectory is before the current time. When we associate detection points that reappear after long-term occlusion, only appearance similarity is utilized within a validation range without the position-size property due to the unreliable motion dynamics of unreliable objects. At the same time, introducing a valid association range is related to the trajectory confidence. Once the track confidence is reduced, the valid association range is increased because the distance between a drifting track and the corresponding object can grow large if the track drift persists. This condition allows us to reassign drifting tracks to detections of reappearing objects, which is even distant from the corresponding tracks. When two track fragments are associated, a motion model is introduced to determine whether the two trajectories belong to the same object. In this condition, the average velocity vector angle of the two track fragments is larger than a threshold, indicating that it may include unreliable tracks. Thus, we only consider appearance similarity between the pair. Otherwise, we combine the appearance, position size, and motion similarity to make an association between the pair. If two track fragments are associated successfully, the linear interpolation is used to fill the lost interval of this object. Thus, the two trajectory fragments can be connected effectively.ResultWe compared our method with 10 state-of-the-art multi-object tracking algorithms, including five offline tracking approaches and five online tracking methods on three public datasets, namely, PETS09-S2L1, TUD-Stadmitte, and Town-Center. The quantitative evaluation metrics contained multi-object tracking accuracy (MOTA), multi-object tracking precision (MOTP), the number of identity switches (IDS), the ratio of mostly tracked trajectories (MT), the ratio of mostly lost trajectories (ML), and the number of track fragmentation (Frag). The experiment results illustrate that our tracking method outperforms in MOTA and MOTP compared with selected online multi-object tracking methods, which include two tracking approaches based on hierarchical association. In addition, the proposed approach performs almost the same or even better when compared with offline tracking methods. In the PETS09-S2L1 data set, the proposed approaches are superior to other comparators in MOTP, IDS, and Frag. MOTP increased by 6.1%, IDS reduced by 5, and Frag reduced by 21. In TUD-Stadmitte dataset, IDS reduced by 4. Compared with online tracking approaches, the MOTP and MOTA increased by 36.3% and 11.1%, respectively. In Town-Center dataset, MOTA and MT increased by 5.2% and 16.9%, respectively. IDS and Frag reduced by 60 and 84, respectively, and ML decreased by 1.5%.ConclusionIn this study, we take the idea of hierarchical data association, proposing a multi-object tracking based on adaptive online discriminative appearance learning and hierarchical association. The experiment results indicate that our method has a good solution to the problems of ID switches and trajectory fragmentation caused by long-term occlusion in complex scenes.关键词:multi-object tracking;local association;global association;track confidence;incremental linear discriminant analysis(ILDA)45|218|6更新时间:2024-05-07