最新刊期

卷 25 , 期 3 , 2020

-

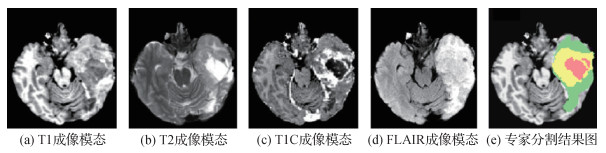

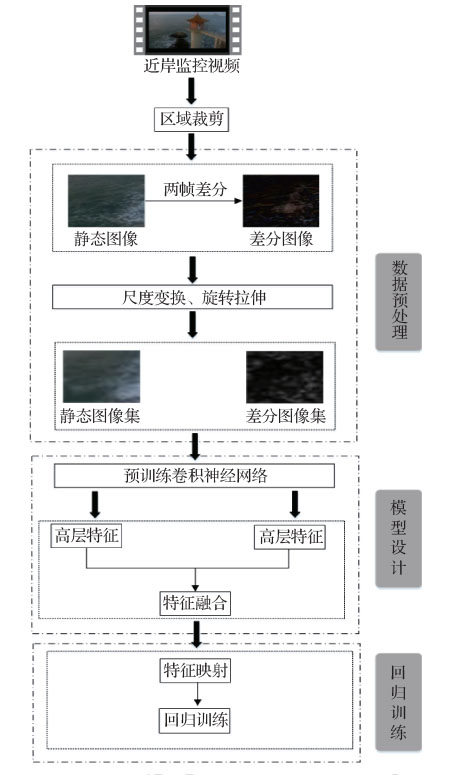

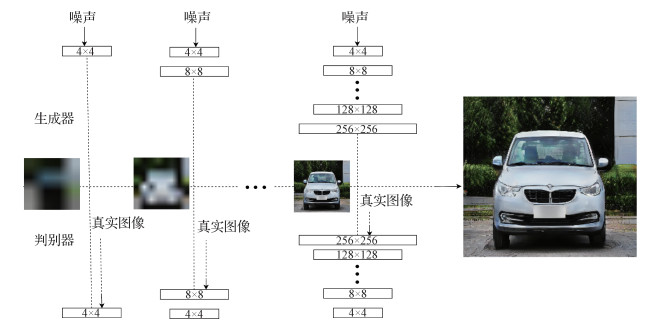

摘要:Brain tumor segmentation is an important part of medical image processing. It assists doctors in making accurate diagnoses and treatment plans. It clearly carries important practical value in clinical brain medicine. With the development of medical imaging, imaging technology plays an important role in the evaluation of the treatment of brain tumor patients and can provide doctors with a clear internal structure of the human body. Magnetic resonance imaging (MRI) is the main imaging tool for clinicians to study the structure of brain tissue. Brain tumor MRI modalities include T1-weighted, contrast-enhanced T1-weighted, T2-weighted, and liquid attenuation inversion recovery pulses. Different imaging modalities can provide complementary information to analyze brain tumors. These four types of modalities are usually combined to diagnose the location and size of brain tumors. At present, due to the extensive application of MRI equipment in brain examination, a large number of brain MRI images are generated in the clinical setting. This rise in the quantity of brain MRI images hinders doctors in manually annotating and segmenting all images promptly. Moreover, the manual segmentation of brain tumor tissues highly depends on doctors' professional experience. Therefore, research has focused on the ways to segment brain tumors efficiently, accurately, and automatically. In recent years, significant advancement has been made in the study of brain tumor segmentation methods. To enable other researchers to explore the theory and development of segmentation methods for brain tumor MRI images, this work reviews the current research status in this field. In this study, the current semiautomatic and fully automatic segmentation methods for brain tumor MRI images are divided into two categories:unsupervised segmentation and supervised segmentation. The difference between the two methods lies in the use of hand-labeled image data. Unsupervised segmentation is a nonpriori image segmentation method based on computer clustering statistical analysis. The unsupervised methods are divided into threshold-based, region-based, pixel-based, and model-based segmentation technologies according to the different segmentation principles. This work briefly describes the unsupervised methods according to the above classification and summarizes their advantages and disadvantages. The main feature of supervised segmentation is its use of labeled image data. The segmentation process involves model training and testing. In the former, labeled data are used to learn the mapping from image features to labels. In the latter, the model assigns labels to unlabeled data. Supervised segmentationis mainly based on the segmentation technology of pixel classification. It generally includes traditional machine learning methods and methods based on neural networks. The common traditional machine learning methods and the methods based on neural networks used in brain tumor segmentation are briefly described herein, and their advantages and disadvantages are summarized. The segmentation methods for brain tumor images based on deep learning are mainly described. With the advancement of artificial intelligence, deep learning, especially the new technology represented by convolutional neural networks (CNNs), has been well received because of its superior brain tumor segmentation results. Compared with traditional segmentation methods, CNNs can automatically learn representative complex features directly from the data. Hence, the research of brain tumor segmentation based on CNNs mainly focuses on network structure design rather than image processing before feature extraction. This study focuses on the structure of neural networks used in the field of brain tumor image segmentation and summarizes the optimization strategies of deep learning. Lastly, the challenge of brain tumor segmentation (BraTS) is introduced, and the future development trend of brain tumor segmentation is established in combination with the methods used in the challenge. The BraTS challenge is a competition to evaluate the segmentation methods for brain tumor MRI images.The BraTS challenge uses preoperative MRI image data from multiple institutional brains to focus on the segmentation of gliomas. In addition, the BraTS challenge involves predicting overall patient survival by combining radiological features with machine learning algorithms to determine the clinical relevance of this segmentation task. The segmentation methods for brain tumor MRI images have inherent advantages, disadvantages, and application scope. Researchers have been working on how to improve the accuracy of segmentation results, the robustness of models, and the overall operational efficiency. Hence, this study analyzes the advantages and disadvantages of various methods, the optimization strategies of deep learning, and future development trends. The optimization strategy of deep learning is as follows.In the aspect of imaging data, data enhancement techniques, such as flipping, scaling, and cropping, are used to increase the amount of training data and improve the generalization ability of models. Acascade framework is introduced to realize the segmentation of whole tumors, core tumors, and enhanced tumors by combining the acascade framework with the inclusion relationship of tumors in the brain anatomical structure. An improved loss function is used to deal with image category imbalances. In terms of network structure, multiscale and multichannel strategies are adopted to make full use of image feature information.In the process of downsampling, aconvolution operation is used instead of pooling so that image information can be further learned while reducing image information loss.Between the convolutional layers, the jump connection method is applied to effectively solve the degradation problem of the deep network.In different cases, the appropriate standardization method, activation function, and loss rate are selected to achieve satisfactory segmentation effects. This work summarizes the development trend of brain tumor segmentation methods by learning and arranging the methods used in the BraTS challenge. As a result of the diversification of MRI imaging modalities, making full use of the each modal image information can effectively improve the accuracy of brain tumor segmentation. Therefore, the reasonable utilization of multimodality images can be expected to become a research hotspot.Methods based on deep learning are outstanding in the field of brain tumor segmentation and have become a hot research direction.The defects of machine learning algorithms lead to an inaccurate segmentation of brain tumors. A popular trend is to improve the original method or combine various methods effectively. Remarkable progress has been made in the segmentation of brain tumor MRI images. The development of deep learning, in particular, provides new ideas for the research in this field. However, brain tumor image segmentation is still a challenging subject because brain tumors vary in size, shape, and position. Moreover, brain tumor image data are limited, and the categories are not balanced. As a result of the lack of interpretability and transparency in the segmentation process, the application of a fully automated segmentation method to clinical trials still requires further research.关键词:brain tumor image segmentation;magnetic resonance imaging (MRI);unsupervised segmentation;supervised segmentation;deep learning304|285|11更新时间:2024-05-07

摘要:Brain tumor segmentation is an important part of medical image processing. It assists doctors in making accurate diagnoses and treatment plans. It clearly carries important practical value in clinical brain medicine. With the development of medical imaging, imaging technology plays an important role in the evaluation of the treatment of brain tumor patients and can provide doctors with a clear internal structure of the human body. Magnetic resonance imaging (MRI) is the main imaging tool for clinicians to study the structure of brain tissue. Brain tumor MRI modalities include T1-weighted, contrast-enhanced T1-weighted, T2-weighted, and liquid attenuation inversion recovery pulses. Different imaging modalities can provide complementary information to analyze brain tumors. These four types of modalities are usually combined to diagnose the location and size of brain tumors. At present, due to the extensive application of MRI equipment in brain examination, a large number of brain MRI images are generated in the clinical setting. This rise in the quantity of brain MRI images hinders doctors in manually annotating and segmenting all images promptly. Moreover, the manual segmentation of brain tumor tissues highly depends on doctors' professional experience. Therefore, research has focused on the ways to segment brain tumors efficiently, accurately, and automatically. In recent years, significant advancement has been made in the study of brain tumor segmentation methods. To enable other researchers to explore the theory and development of segmentation methods for brain tumor MRI images, this work reviews the current research status in this field. In this study, the current semiautomatic and fully automatic segmentation methods for brain tumor MRI images are divided into two categories:unsupervised segmentation and supervised segmentation. The difference between the two methods lies in the use of hand-labeled image data. Unsupervised segmentation is a nonpriori image segmentation method based on computer clustering statistical analysis. The unsupervised methods are divided into threshold-based, region-based, pixel-based, and model-based segmentation technologies according to the different segmentation principles. This work briefly describes the unsupervised methods according to the above classification and summarizes their advantages and disadvantages. The main feature of supervised segmentation is its use of labeled image data. The segmentation process involves model training and testing. In the former, labeled data are used to learn the mapping from image features to labels. In the latter, the model assigns labels to unlabeled data. Supervised segmentationis mainly based on the segmentation technology of pixel classification. It generally includes traditional machine learning methods and methods based on neural networks. The common traditional machine learning methods and the methods based on neural networks used in brain tumor segmentation are briefly described herein, and their advantages and disadvantages are summarized. The segmentation methods for brain tumor images based on deep learning are mainly described. With the advancement of artificial intelligence, deep learning, especially the new technology represented by convolutional neural networks (CNNs), has been well received because of its superior brain tumor segmentation results. Compared with traditional segmentation methods, CNNs can automatically learn representative complex features directly from the data. Hence, the research of brain tumor segmentation based on CNNs mainly focuses on network structure design rather than image processing before feature extraction. This study focuses on the structure of neural networks used in the field of brain tumor image segmentation and summarizes the optimization strategies of deep learning. Lastly, the challenge of brain tumor segmentation (BraTS) is introduced, and the future development trend of brain tumor segmentation is established in combination with the methods used in the challenge. The BraTS challenge is a competition to evaluate the segmentation methods for brain tumor MRI images.The BraTS challenge uses preoperative MRI image data from multiple institutional brains to focus on the segmentation of gliomas. In addition, the BraTS challenge involves predicting overall patient survival by combining radiological features with machine learning algorithms to determine the clinical relevance of this segmentation task. The segmentation methods for brain tumor MRI images have inherent advantages, disadvantages, and application scope. Researchers have been working on how to improve the accuracy of segmentation results, the robustness of models, and the overall operational efficiency. Hence, this study analyzes the advantages and disadvantages of various methods, the optimization strategies of deep learning, and future development trends. The optimization strategy of deep learning is as follows.In the aspect of imaging data, data enhancement techniques, such as flipping, scaling, and cropping, are used to increase the amount of training data and improve the generalization ability of models. Acascade framework is introduced to realize the segmentation of whole tumors, core tumors, and enhanced tumors by combining the acascade framework with the inclusion relationship of tumors in the brain anatomical structure. An improved loss function is used to deal with image category imbalances. In terms of network structure, multiscale and multichannel strategies are adopted to make full use of image feature information.In the process of downsampling, aconvolution operation is used instead of pooling so that image information can be further learned while reducing image information loss.Between the convolutional layers, the jump connection method is applied to effectively solve the degradation problem of the deep network.In different cases, the appropriate standardization method, activation function, and loss rate are selected to achieve satisfactory segmentation effects. This work summarizes the development trend of brain tumor segmentation methods by learning and arranging the methods used in the BraTS challenge. As a result of the diversification of MRI imaging modalities, making full use of the each modal image information can effectively improve the accuracy of brain tumor segmentation. Therefore, the reasonable utilization of multimodality images can be expected to become a research hotspot.Methods based on deep learning are outstanding in the field of brain tumor segmentation and have become a hot research direction.The defects of machine learning algorithms lead to an inaccurate segmentation of brain tumors. A popular trend is to improve the original method or combine various methods effectively. Remarkable progress has been made in the segmentation of brain tumor MRI images. The development of deep learning, in particular, provides new ideas for the research in this field. However, brain tumor image segmentation is still a challenging subject because brain tumors vary in size, shape, and position. Moreover, brain tumor image data are limited, and the categories are not balanced. As a result of the lack of interpretability and transparency in the segmentation process, the application of a fully automated segmentation method to clinical trials still requires further research.关键词:brain tumor image segmentation;magnetic resonance imaging (MRI);unsupervised segmentation;supervised segmentation;deep learning304|285|11更新时间:2024-05-07

Scholar View

-

摘要:ObjectiveMany image processing models and algorithms in the field of image processing exist at present. Retinex algorithms and multifocus image fusion algorithms in the field have become the most widely used image processing algorithms because of their simplicity, efficiency, and easy implementation. However, Retinex algorithms and multifocus image fusion algorithms have limitations on image processing. This study aims to combine the algorithm with the ideas of Retinex and multifocus image fusion algorithms to achieve image enhancement. In view of the traditional Retinex algorithms, graying, halo, and boundary phenomena are considered, as well as the problem in which the details of high-exposure area is not enhanced. The traditional multifocus image fusion algorithms must capture multiple images of different focus points in the same scene, extract the focus area from multiple images of different focus points, and operate the focus area for multifocus fusion. Extracting the repeated operations of multiple image focus areas for the multifocus fusion algorithm is necessary. This study proposes a multifocus image fusion algorithm based on Retinex the improved bilateral filtering of Retinex.MethodThe Retinex algorithm is used to improve the process of estimating illumination images. The effects of image space proximity similarity and pixel similarity on the enhancement effect are fully considered. The improved kernel function of the traditional Retinex algorithm is enhanced to obtain an improved bilateral filter function. First, the improved bilateral filtering function is used to estimate the illumination image, and the influence of the illumination image on the visual effect is reduced or suppressed, and then the reflected image of the essence of the response image is obtained. Furthermore, the optimal parameters of the optimal bright and dark regions are calculated at the pixel level of the reflected image, and the optimal parameters are brought into the algorithm to find the optimal solution. The optimal solution can be used to decompose the reflected image into the optimal bright region and the optimal dark area. In combination with the idea of multifocus image fusion algorithms, the original image is introduced at the same time, which can ensure the enhancement effect of the image and preserve the detail and quality in the original image, so that the enhancement effect is more obvious and clear. The original image, optimal bright region image, and optimal dark region image are multifocus fusion, and the linear integral transform and adjacent pixel optimal recommendation algorithm are used to obtain a smooth and accurate steering map. The steering filter is used to further smooth the steering map. Using the processed guide map as a basis, the original image and optimal bright region are fused according to different focal lengths to obtain an image

摘要:ObjectiveMany image processing models and algorithms in the field of image processing exist at present. Retinex algorithms and multifocus image fusion algorithms in the field have become the most widely used image processing algorithms because of their simplicity, efficiency, and easy implementation. However, Retinex algorithms and multifocus image fusion algorithms have limitations on image processing. This study aims to combine the algorithm with the ideas of Retinex and multifocus image fusion algorithms to achieve image enhancement. In view of the traditional Retinex algorithms, graying, halo, and boundary phenomena are considered, as well as the problem in which the details of high-exposure area is not enhanced. The traditional multifocus image fusion algorithms must capture multiple images of different focus points in the same scene, extract the focus area from multiple images of different focus points, and operate the focus area for multifocus fusion. Extracting the repeated operations of multiple image focus areas for the multifocus fusion algorithm is necessary. This study proposes a multifocus image fusion algorithm based on Retinex the improved bilateral filtering of Retinex.MethodThe Retinex algorithm is used to improve the process of estimating illumination images. The effects of image space proximity similarity and pixel similarity on the enhancement effect are fully considered. The improved kernel function of the traditional Retinex algorithm is enhanced to obtain an improved bilateral filter function. First, the improved bilateral filtering function is used to estimate the illumination image, and the influence of the illumination image on the visual effect is reduced or suppressed, and then the reflected image of the essence of the response image is obtained. Furthermore, the optimal parameters of the optimal bright and dark regions are calculated at the pixel level of the reflected image, and the optimal parameters are brought into the algorithm to find the optimal solution. The optimal solution can be used to decompose the reflected image into the optimal bright region and the optimal dark area. In combination with the idea of multifocus image fusion algorithms, the original image is introduced at the same time, which can ensure the enhancement effect of the image and preserve the detail and quality in the original image, so that the enhancement effect is more obvious and clear. The original image, optimal bright region image, and optimal dark region image are multifocus fusion, and the linear integral transform and adjacent pixel optimal recommendation algorithm are used to obtain a smooth and accurate steering map. The steering filter is used to further smooth the steering map. Using the processed guide map as a basis, the original image and optimal bright region are fused according to different focal lengths to obtain an imageT . The imageT is repeated with the optimal dark region image to obtain the process imageS by the aforementioned experimental steps and finally utilized. The pilot filter performs boundary repair on the process imageS to obtain a final result image.ResultPictures of a girl and a boat, which were selected for comparison experiments, with single-scale Retinex algorithm, bilateral filter-based Retinex algorithm, BIFT algorithm (Retinex image enhancement algorithm based on image fusion technology), and RVRG algorithm (Retinex variational model based on relative gradient regularization and its application). The proposed algorithm shows obvious advantages in variance and information entropy, specifically with the average of 16.37 higher than that of BIFT, and the average is increased by 20.90 compared with RVRG. The average increase is 1.25 higher compared with BIFT in terms of variance, which is an average increase of 4.42 compared with that of RVRG. In terms of information entropy, the average increase is 0.1 compared with that of BIFT, which is an average increase of 0.17 compared with that of RVRG. In terms of average gradient, the average increase was 1.21 compared with that of BIFT, which was an average increase of 0.42 compared with that of RVRG. Experimental data comparing BIFT and RVRG demonstrate the effectiveness of the proposed algorithm. The details in the original image and the dark portion of the right side of the car body in the image of the girl are significantly enhanced, and the details of the bright areas in the original image are well-preserved while being enhanced. The overall pixel value of the image is low and the visual effect is darker in the image of the boat. After the enhancement, the outline of the character is clear, the water surface is distinct, and the reef texture enhancement effect is remarkable.ConclusionThe extensive experiments show that due to the uncertainty of the process of acquiring images and the complexity of image information, the traditional algorithms are inevitably limited and the enhancement effect cannot meet the high-quality requirements of image preprocessing. Compared with the traditional algorithms, the combination of Retinex and multifocus fusion algorithms proposed in this paper has a better image enhancement effect. Compared with the single-scale Retinex, bilateral filter-based Retinex, BIFT, and RVRG, the reconstructed image is superior to the contrast algorithm at the subjective and objective levels. The objective evaluation parameters of the resulting image are greatly improved, especially in terms of information entropy. The algorithm in this paper can effectively suppress image graying, halo, and boundary prominent phenomena. The contrast is significantly enhanced and has a good enhancement effect on uneven illumination images and low contrast images, making it superior to other contrast algorithms in image enhancement and robustness. The image detail enhancement effect is particularly remarkable because it serves as the foundation for the subsequent image processing.关键词:Retinex algorithm;guided filtering;optimal bright and dark regions;generalized random walk;improved bilateral filtering56|471|3更新时间:2024-05-07 -

摘要:ObjectiveThe texture is often characterized by various unregular types, varied shapes, and complex structures, which directly weaken the accuracy of texture image segmentation. The semantic segmentation methods based on deep learning need benchmark training sets. Constructing the training sets composed by the complex and diverse texture images is difficult. Therefore, utilizing the unsupervised image segmentation methods to solve the problem of texture segmentation is necessary. However, the traditional unsupervised texture image segmentation algorithms have limitations and cannot be used to effect tivelyextract the stable texture features.MethodBased on the idea that the Gabor operator can extract the texture diversity features and the local ternary pattern (LTP) operators imply the threshold differences, combining Gabor filters with extended LTP operators is proposed to describe texture diversity features in this paper. Gabor filter is used to extract the texture features of the same or similar texture patterns. Then, the texture difference features are extracted. Compared with the traditional LTP, the main advantages of the extended LTP operator are embodied in two aspects.On the one hand, the extended size make the LTP operator effective in image segmentation based on the size features of the segmented image.On the other hand, the weights are given to each position of the extended LTP operator. The exponential weight differences are given according to the distances between each position and the central point. Finally, these extracted features are integrated into the level set frame to segment the texture image. The advantages of the proposed method are described as follows:First, the extended LTP operator can effectively extract the texture difference features of local regions. Second, the Gabor filter and extended LTP operator are complementary. The main contributions of the proposed method in this paper are elaborated in the following:1) By improving the traditional LTP operator, we propose an extended LTP operator to extract the texture difference features of pixels in complex images. 2) The Gabor filter and extended LTP operator are complementary. The extended LTP operator and Gabor filter are combined and incorporated into the level set method. The extended LTP operator extracts the texture difference information of complex images, while the Gabor method detects similar information, such as similar frequency, size, and direction of images. The two operators have obvious complementary characteristics. Therefore, the extended LTP operator and Gabor filter are combined to extract the texture features of complex images in a complementary manner. The two operators are integrated into the level set method to effectively solve the segmentation problem of the complex images.ResultIn the experimental results section, the proposed method is compared with the classical unsupervised texture segmentation methods, including the methods based on Gabor filter, structure tensor, extended structure tensor, and local similarity factor. By segmenting all kinds of texture images, such as the images with varied texture directions and sizes, the images with the complex background, and the images with the weak texture features, the proposed method achieved better segmentation results than that of the traditional Gabor filter, where the structure tensor was expanded with the local similarity factor. By comparing the proposed method with the LTP-based method in this study, we found that the segmentation results of the proposed method are still better than those of the LTP-based method. In the experimental results section, the segmentation results of several commonly used level set methods (including Gabor filter, structure tensor, extended structure tensor, and robust local similarity factor (RLSF)) are presented and compared with the segmentation results of the proposed method. Fig. 8-10 show the advantages of the proposed method for segmentation of three types of texture images. More intuitively, specific quantitative results of segmentation accuracy were given for comparison between the proposed method and the various unsupervised texture segmentation methods in this study. On some typical texture images, the accuracy of the proposed method is more than 97%, which is higher than that of the other methods.ConclusionAn unsupervised multi-feature-based texture image segmentation algorithm is proposed in this paper. This method can be utilized to extract the features of the similar texture patterns and the texture differences by combining the Gabor filter and extended LTP operator. These texture features were integrated into the level set framework to segment the texture images accurately. Several experiments show that the proposed method can achieve desirable segmentation results for complex texture images in the real world. The advantages and disadvantages of the proposed unsupervised segmentation method were analyzed and the methods based on deep learning in the conclusion section. Compared with the segmentation methods based on deep learning, the proposed method is unsupervised, and therefore does not need prior or training information. However, the segmentation methods based on deep learning relies on training information heavily. Obtaining the training information of the complex texture images is difficult. At the same time, future research ideas are elaborated. Considering some structural relevance of texture images, our future work aims to focus on the extraction and analysis of texture structures to obtain improved segmentation effects.关键词:texture image segmentation;Gabor filter;extended local ternary pattern (LTP) operator;unsupervised43|131|1更新时间:2024-05-07

摘要:ObjectiveThe texture is often characterized by various unregular types, varied shapes, and complex structures, which directly weaken the accuracy of texture image segmentation. The semantic segmentation methods based on deep learning need benchmark training sets. Constructing the training sets composed by the complex and diverse texture images is difficult. Therefore, utilizing the unsupervised image segmentation methods to solve the problem of texture segmentation is necessary. However, the traditional unsupervised texture image segmentation algorithms have limitations and cannot be used to effect tivelyextract the stable texture features.MethodBased on the idea that the Gabor operator can extract the texture diversity features and the local ternary pattern (LTP) operators imply the threshold differences, combining Gabor filters with extended LTP operators is proposed to describe texture diversity features in this paper. Gabor filter is used to extract the texture features of the same or similar texture patterns. Then, the texture difference features are extracted. Compared with the traditional LTP, the main advantages of the extended LTP operator are embodied in two aspects.On the one hand, the extended size make the LTP operator effective in image segmentation based on the size features of the segmented image.On the other hand, the weights are given to each position of the extended LTP operator. The exponential weight differences are given according to the distances between each position and the central point. Finally, these extracted features are integrated into the level set frame to segment the texture image. The advantages of the proposed method are described as follows:First, the extended LTP operator can effectively extract the texture difference features of local regions. Second, the Gabor filter and extended LTP operator are complementary. The main contributions of the proposed method in this paper are elaborated in the following:1) By improving the traditional LTP operator, we propose an extended LTP operator to extract the texture difference features of pixels in complex images. 2) The Gabor filter and extended LTP operator are complementary. The extended LTP operator and Gabor filter are combined and incorporated into the level set method. The extended LTP operator extracts the texture difference information of complex images, while the Gabor method detects similar information, such as similar frequency, size, and direction of images. The two operators have obvious complementary characteristics. Therefore, the extended LTP operator and Gabor filter are combined to extract the texture features of complex images in a complementary manner. The two operators are integrated into the level set method to effectively solve the segmentation problem of the complex images.ResultIn the experimental results section, the proposed method is compared with the classical unsupervised texture segmentation methods, including the methods based on Gabor filter, structure tensor, extended structure tensor, and local similarity factor. By segmenting all kinds of texture images, such as the images with varied texture directions and sizes, the images with the complex background, and the images with the weak texture features, the proposed method achieved better segmentation results than that of the traditional Gabor filter, where the structure tensor was expanded with the local similarity factor. By comparing the proposed method with the LTP-based method in this study, we found that the segmentation results of the proposed method are still better than those of the LTP-based method. In the experimental results section, the segmentation results of several commonly used level set methods (including Gabor filter, structure tensor, extended structure tensor, and robust local similarity factor (RLSF)) are presented and compared with the segmentation results of the proposed method. Fig. 8-10 show the advantages of the proposed method for segmentation of three types of texture images. More intuitively, specific quantitative results of segmentation accuracy were given for comparison between the proposed method and the various unsupervised texture segmentation methods in this study. On some typical texture images, the accuracy of the proposed method is more than 97%, which is higher than that of the other methods.ConclusionAn unsupervised multi-feature-based texture image segmentation algorithm is proposed in this paper. This method can be utilized to extract the features of the similar texture patterns and the texture differences by combining the Gabor filter and extended LTP operator. These texture features were integrated into the level set framework to segment the texture images accurately. Several experiments show that the proposed method can achieve desirable segmentation results for complex texture images in the real world. The advantages and disadvantages of the proposed unsupervised segmentation method were analyzed and the methods based on deep learning in the conclusion section. Compared with the segmentation methods based on deep learning, the proposed method is unsupervised, and therefore does not need prior or training information. However, the segmentation methods based on deep learning relies on training information heavily. Obtaining the training information of the complex texture images is difficult. At the same time, future research ideas are elaborated. Considering some structural relevance of texture images, our future work aims to focus on the extraction and analysis of texture structures to obtain improved segmentation effects.关键词:texture image segmentation;Gabor filter;extended local ternary pattern (LTP) operator;unsupervised43|131|1更新时间:2024-05-07

Image Processing and Coding

-

摘要:Objective3D human pose estimation from monocular videos has become an open research problem in the computer vision and graphics community for a long time. An understanding of human posture and limb articulation is important for high-level computer vision tasks, such as human-computer interaction, augmented and virtual reality, and human action or activity recognition. The recent success of deep networks has led many state-of-the-art methods for 3D pose estimation to train deep networks end to end for direct image prediction. The top-performing approaches have shown the effectiveness of dividing the task of 3D pose estimation into two steps, as follows:using a state-of-the-art 2D pose estimator to estimate the 2D poses from images and then mapping them into 3D space. Results indicate that a large portion of the error of modern deep 3D pose estimation systems stems from 2D pose estimation error. Therefore, mapping a 2D pose containing error or noise into its optimum and most reasonable 3D pose is crucial. We propose a 3D pose estimation method by jointly using a sparse representation and a depth model. Through this method, we combine the spatial geometric priori of 3D poses with temporal information to improve the 3D pose estimation accuracy.MethodFirst, we use a 3D variable shape model that integrates sparse representation (SR) to represent rich 3D human posture changes. A convex relaxation method based on L1/2 regularization is used to transform the nonconvex optimization problem of a single-frame image in a shape-space model into a convex programming problem and provide reasonable initial values for a single frame of image. In this manner, the possibility of ambiguous reconstructions is considerably reduced. Second, the initial 3D poses obtained from the SR module, regarded as the 3D data with noise, are fed into a multi-channel long short term memory (MLSTM) denoising en-decoder in the form of pose sequences in temporal dimension. The 3D data with noise are converted into three components of X, Y, and Z to ensure the spatial structure of the 3D pose. For each component, multilayer LSTM cells are used to capture the different frames of time variation. The output of the LSTM unit is not the optimization result on the corresponding component; it is the time dependence between the two adjacent frames of the character posture of the input sequence implicitly encoded by the hidden layer of the LSTM unit. The time information learned is added with the initial value by using residual connection to maintain the time consistency of the 3D pose and effectively alleviate the problem of sequence jitter. Moreover, the shaded joints can be corrected by smoothing the constraint between the two frames. Lastly, we obtain the optimized 3D pose estimation results by decoding the last linear layer.ResultA comparative experiment is conducted to verify the validity of the proposed method. The method is conducted using the Human3.6M dataset, and the results are compared with the state-of-the-art methods. The quantitative evaluation metrics contain a common approach used to align the predicted 3D pose with the ground truth 3D pose using a similarity transformation. We use the average error per joint in millimeters between the estimated and the ground truth 3D pose. 2D joint ground truth and 2D pose estimations using a convolutional network are separately used as inputs. The quantitative experimental results suggest that the proposed method can remarkably improve the 3D estimation accuracy. When the input data are the 2D joint ground truth given by the Human 3.6 M dataset, the average reconstruction error is decreased by 12.6% after the optimization of our model as compared with individual frame estimation. Compared with the existing sparse model method based on video, the average reconstruction error is decreased by 6.4% after using our method. When the input data are 2D pose estimations using a convolutional network, the average reconstruction error is decreased by 13% after the optimization of our model as compared with single frame estimation. Compared with the existing depth model method, the average reconstruction error is decreased by 12.8% after using our method. Compared with the existing sparse model method based on video, the average reconstruction error is decreased by 9.1% after using our method.ConclusionCombining our MLSTM en-decoder based on temporal information with the sparse model, we adequately exploit the 3D pose prior knowledge, temporal, and spatial dependence of continuous human pose changes and achieve a remarkable improvement in monocular video 3D pose estimation accuracy.关键词:pose estimation;3D human pose;sparse representation;long short term memory (LSTM);residual connection69|59|1更新时间:2024-05-07

摘要:Objective3D human pose estimation from monocular videos has become an open research problem in the computer vision and graphics community for a long time. An understanding of human posture and limb articulation is important for high-level computer vision tasks, such as human-computer interaction, augmented and virtual reality, and human action or activity recognition. The recent success of deep networks has led many state-of-the-art methods for 3D pose estimation to train deep networks end to end for direct image prediction. The top-performing approaches have shown the effectiveness of dividing the task of 3D pose estimation into two steps, as follows:using a state-of-the-art 2D pose estimator to estimate the 2D poses from images and then mapping them into 3D space. Results indicate that a large portion of the error of modern deep 3D pose estimation systems stems from 2D pose estimation error. Therefore, mapping a 2D pose containing error or noise into its optimum and most reasonable 3D pose is crucial. We propose a 3D pose estimation method by jointly using a sparse representation and a depth model. Through this method, we combine the spatial geometric priori of 3D poses with temporal information to improve the 3D pose estimation accuracy.MethodFirst, we use a 3D variable shape model that integrates sparse representation (SR) to represent rich 3D human posture changes. A convex relaxation method based on L1/2 regularization is used to transform the nonconvex optimization problem of a single-frame image in a shape-space model into a convex programming problem and provide reasonable initial values for a single frame of image. In this manner, the possibility of ambiguous reconstructions is considerably reduced. Second, the initial 3D poses obtained from the SR module, regarded as the 3D data with noise, are fed into a multi-channel long short term memory (MLSTM) denoising en-decoder in the form of pose sequences in temporal dimension. The 3D data with noise are converted into three components of X, Y, and Z to ensure the spatial structure of the 3D pose. For each component, multilayer LSTM cells are used to capture the different frames of time variation. The output of the LSTM unit is not the optimization result on the corresponding component; it is the time dependence between the two adjacent frames of the character posture of the input sequence implicitly encoded by the hidden layer of the LSTM unit. The time information learned is added with the initial value by using residual connection to maintain the time consistency of the 3D pose and effectively alleviate the problem of sequence jitter. Moreover, the shaded joints can be corrected by smoothing the constraint between the two frames. Lastly, we obtain the optimized 3D pose estimation results by decoding the last linear layer.ResultA comparative experiment is conducted to verify the validity of the proposed method. The method is conducted using the Human3.6M dataset, and the results are compared with the state-of-the-art methods. The quantitative evaluation metrics contain a common approach used to align the predicted 3D pose with the ground truth 3D pose using a similarity transformation. We use the average error per joint in millimeters between the estimated and the ground truth 3D pose. 2D joint ground truth and 2D pose estimations using a convolutional network are separately used as inputs. The quantitative experimental results suggest that the proposed method can remarkably improve the 3D estimation accuracy. When the input data are the 2D joint ground truth given by the Human 3.6 M dataset, the average reconstruction error is decreased by 12.6% after the optimization of our model as compared with individual frame estimation. Compared with the existing sparse model method based on video, the average reconstruction error is decreased by 6.4% after using our method. When the input data are 2D pose estimations using a convolutional network, the average reconstruction error is decreased by 13% after the optimization of our model as compared with single frame estimation. Compared with the existing depth model method, the average reconstruction error is decreased by 12.8% after using our method. Compared with the existing sparse model method based on video, the average reconstruction error is decreased by 9.1% after using our method.ConclusionCombining our MLSTM en-decoder based on temporal information with the sparse model, we adequately exploit the 3D pose prior knowledge, temporal, and spatial dependence of continuous human pose changes and achieve a remarkable improvement in monocular video 3D pose estimation accuracy.关键词:pose estimation;3D human pose;sparse representation;long short term memory (LSTM);residual connection69|59|1更新时间:2024-05-07 -

摘要:ObjectiveThe process of shooting a normal camera involves forming a 2D image after projecting a 3D scene onto the imaging plane. This process will lose the information of the scene, so the related research based on depth information cannot be developed. For example, target detection and tracking, 3D model reconstruction, and intelligent robots in industrial automation need to obtain depth information for the scene. The depth information of the scene is the basic problem in the field of machine vision. With the development of machine vision, the use of visualization methods to solve the deep extraction problem became an important topic in computer vision research. Among the methods, image depth measurement based on monocular vision has the advantages of simple equipment, low price, and fast calculation speed and could become a research hot spot today. The traditional measurement method based on monocular visual depth requires complex calibration of the camera, so the operability is not strong and it is difficult to apply in practice. Most of the traditional methods are only suitable for specific scene conditions, such as occlusion relations or defocusing in the scene, which limits the application of traditional methods. Aiming at the limitations of traditional methods, this paper proposes an object depth measurement method based on motion parallax cues, extracts feature points from images, analyzes the relationship between feature points and image depth, and obtains image depth results based on the relationship between the two.MethodThe method uses the parallax cues generated by the camera motion to obtain the image depth, so the method requires two images. The camera is mounted on a movable rail, and after the first image is taken, the camera is moved along the optical axis. The second image is taken at a distance when no adjustment to any parameter of the camera is required. First, the two images acquired by camera movement are segmented, and the region of interest (ROI) is segmented. Second, the improved scale-invariant feature transform algorithm proposed in this paper is used to perform two images. The results of image segmentation and image matching are combined to obtain the matching result of the object to be measured. Then, Graham scanning method is used to obtain the convex hull of the feature points after the two images are matched, thereby obtaining the length of the longest line segment on the convex hull. Finally, the basic principles of camera imaging and triangulation knowledge are used to calculate image depth.ResultThis proposed method is compared with another method, and results are provided in a table. The experiment is divided into two groups, mainly comparing the two methods from two aspects:measurement time and precision. The first set of experimental results show that the proposed method achieves good measurement results in a simple background environment. The error between the actual distance and the measured distance is 2.60%, and the time consumption of the measured distance is 1.577 s. The second set of experiments shows that when partial occlusion occurs in the scene, the error between the actual distance and the measured distance is 3.19%, and the time required to measure the distance is 1.689 s. By comparing the two sets of experimental data, we found that the method has improved the measurement accuracy and measurement time compared with the previous method, especially in reducing the image depth measurement time.ConclusionThrough research, the method estimates the image depth by moving the camera to obtain the corresponding line segment length on the two images, which avoids the complicated camera calibration process. This method improves the image matching algorithm and reduces the computational complexity, which is fast and accurate. The method of obtaining image depth information has certain research value and only needs to process the two pictures, where the hardware requirement is simple. The measurement process does not require a large amount of scene information, so the scope of application is wide. The method utilizes feature points on the image to perform image depth measurement, so the method is not constrained by partial occlusion of the measured object, and still has good robustness. However, as the method uses an image segmentation algorithm, the result of image segmentation greatly influences the accuracy of the measurement. If the captured image contains a complex background environment, obtaining accurate image segmentation results and ideal depth measurement results is difficult. Therefore, the directions of optimization and improvement of this method are adaptable to complex background environments.关键词:image depth;monocular vision;motion parallax;improved scale invariant feature transform (SIFT) algorithm;Graham scanning method20|44|1更新时间:2024-05-07

摘要:ObjectiveThe process of shooting a normal camera involves forming a 2D image after projecting a 3D scene onto the imaging plane. This process will lose the information of the scene, so the related research based on depth information cannot be developed. For example, target detection and tracking, 3D model reconstruction, and intelligent robots in industrial automation need to obtain depth information for the scene. The depth information of the scene is the basic problem in the field of machine vision. With the development of machine vision, the use of visualization methods to solve the deep extraction problem became an important topic in computer vision research. Among the methods, image depth measurement based on monocular vision has the advantages of simple equipment, low price, and fast calculation speed and could become a research hot spot today. The traditional measurement method based on monocular visual depth requires complex calibration of the camera, so the operability is not strong and it is difficult to apply in practice. Most of the traditional methods are only suitable for specific scene conditions, such as occlusion relations or defocusing in the scene, which limits the application of traditional methods. Aiming at the limitations of traditional methods, this paper proposes an object depth measurement method based on motion parallax cues, extracts feature points from images, analyzes the relationship between feature points and image depth, and obtains image depth results based on the relationship between the two.MethodThe method uses the parallax cues generated by the camera motion to obtain the image depth, so the method requires two images. The camera is mounted on a movable rail, and after the first image is taken, the camera is moved along the optical axis. The second image is taken at a distance when no adjustment to any parameter of the camera is required. First, the two images acquired by camera movement are segmented, and the region of interest (ROI) is segmented. Second, the improved scale-invariant feature transform algorithm proposed in this paper is used to perform two images. The results of image segmentation and image matching are combined to obtain the matching result of the object to be measured. Then, Graham scanning method is used to obtain the convex hull of the feature points after the two images are matched, thereby obtaining the length of the longest line segment on the convex hull. Finally, the basic principles of camera imaging and triangulation knowledge are used to calculate image depth.ResultThis proposed method is compared with another method, and results are provided in a table. The experiment is divided into two groups, mainly comparing the two methods from two aspects:measurement time and precision. The first set of experimental results show that the proposed method achieves good measurement results in a simple background environment. The error between the actual distance and the measured distance is 2.60%, and the time consumption of the measured distance is 1.577 s. The second set of experiments shows that when partial occlusion occurs in the scene, the error between the actual distance and the measured distance is 3.19%, and the time required to measure the distance is 1.689 s. By comparing the two sets of experimental data, we found that the method has improved the measurement accuracy and measurement time compared with the previous method, especially in reducing the image depth measurement time.ConclusionThrough research, the method estimates the image depth by moving the camera to obtain the corresponding line segment length on the two images, which avoids the complicated camera calibration process. This method improves the image matching algorithm and reduces the computational complexity, which is fast and accurate. The method of obtaining image depth information has certain research value and only needs to process the two pictures, where the hardware requirement is simple. The measurement process does not require a large amount of scene information, so the scope of application is wide. The method utilizes feature points on the image to perform image depth measurement, so the method is not constrained by partial occlusion of the measured object, and still has good robustness. However, as the method uses an image segmentation algorithm, the result of image segmentation greatly influences the accuracy of the measurement. If the captured image contains a complex background environment, obtaining accurate image segmentation results and ideal depth measurement results is difficult. Therefore, the directions of optimization and improvement of this method are adaptable to complex background environments.关键词:image depth;monocular vision;motion parallax;improved scale invariant feature transform (SIFT) algorithm;Graham scanning method20|44|1更新时间:2024-05-07 -

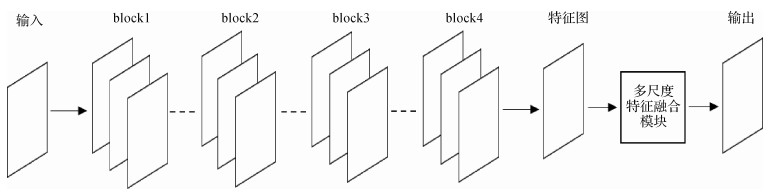

摘要:ObjectiveImage segmentation technology is one of the most difficult aspects of computer vision and image processing and is an indispensable step in the process of understanding and analyzing image information. The disadvantage of image segmentation technology is that the size and direction of the target in the image make the level of the image unpredictable. At the same time, the segmentation of images has a complex background, different brightness, and different textures is still problems in the image segmentation technology. The target semantic feature extraction effect directly influences the accuracy of image semantic segmentation. The image capturing device mounted on the robot has a variable spatial relationship with the target during the operation of the robot in the automated production line that segments the target by machine vision technology. When the image capturing device takes images from different distances and angles, the target has different scales in the image. The traditional single-scale feature extraction method has lower precision for semantic segmentation of the target. This study shows how to use the context information of the image to create the multiscale feature fusion module and develop the ability to extract rich target features and improve the segmentation performance of the network model.MethodThis paper proposes a method of workpiece target semantic segmentation based on multiscale feature fusion. The convolutional neural network is used to extract the multiscale local feature semantic information of the target, and the semantic information of different scales is pixel-fused so that the neural network fully captures context information of the image and obtains a better feature representation, thereby effectively achieving semantic segmentation of the workpiece target. The method uses the ResNet as the underlying network structure and combines the image gold tower theory to construct a multiscale feature fusion module. As the image pyramid is simply a change in image resolution, although the multiscale information representation of the image can be obtained, the output of the fourth block layer of the ResNet network is already a feature map with a small dimension. Reduced resolution of the feature map is not conducive to the feature response of the network model and tends to increase the amount of parameters calculated by the network model. Therefore, the resolution reduction operation in the original image pyramid is replaced in the form of the atrous convolution. The sensitivity field of the filter is effectively increased under a nonreduced resolution of the image, and the local feature information of the superior image can be fully obtained. In this study, a three-layer image pyramid is used, where the bottom layer image is the feature map of the Block4 layer output, the middle layer is a plurality of parallel atrous convolution layers with different sampling rates to extract local feature information of different scales, and the top layer is the fusion layer of the local feature information extracted by the middle layer.ResultThe method of this study is compared with the traditional single-scale feature extraction method through qualitative and quantitative experimental methods, and mean intersection over union (mIOU) is used as the evaluation index. Experiments show that the segmentation network model obtained by this method has more accurate segmentation ability for the targets in the test set. Compared with the traditional single-scale feature extraction network, the mIOU evaluation index of this method on the test set is improved by 4.84% compared with the network that also adopts the porous convolution strategy. The parallel structure proposed in this paper improves the mIOU evaluation index on the test set by 3.57%, compared with the network using the atrous spatial pyramid pooling strategy to improve the network semantic segmentation ability. The mIOU evaluation index of the method in the test set is also improved by 2.24%. When the test sample contains fewer types of targets and the target edges are clearer, more accurate segmentation results can be obtained. To verify that the method has certain generalization, this study uses the method to verify the dataset of the tennis court scene. The tennis court scene dataset includes nine categories of goals:tennis, rackets, inside the tennis court, venue lines, outside the tennis court, nets, people, tennis court fence, and sky. The size and scale of these types of targets are different, which is consistent with the multiscale feature extraction ideas proposed in this paper. Under the condition that the parameters set by the method are completely adopted and the network model has not optimized the parameter for the tennis court scene dataset in the mIOU evaluation index of the test set, the accuracy increased from 54.68% to 56.43%.ConclusionThis study introduces the labeling method of multi-workpiece datasets, and uses methods such as data expansion and definition of learning rate update to effectively prevent the overfitting phenomenon in network training and improve the basic performance of the network model. The value of the neural network depth and the value of the hyperparameter in the neural network training process are determined by comparing the experiments. At the same time, a multiscale feature fusion module is designed to extract multiscale semantic information of the target. The multiscale feature fusion enhances the ability of the neural network model to extract the target features, and the designed MsFFNet network model in more accurate in extracting the semantic features of the target. Therefore, the method can perform the semantic segmentation task of robot vision-based robotic grabbing target on the automated production line under the condition that the spatial position between the image capturing device and the target is variable. In this study, the network model determined by the specific dataset provides a reference value for the subsequent artifact detection. The next step will also focus on the generalization ability of the dataset of other industrial scenes.关键词:residual network;semantic segmentation;multiscale feature;deep learning;visual task31|47|4更新时间:2024-05-07

摘要:ObjectiveImage segmentation technology is one of the most difficult aspects of computer vision and image processing and is an indispensable step in the process of understanding and analyzing image information. The disadvantage of image segmentation technology is that the size and direction of the target in the image make the level of the image unpredictable. At the same time, the segmentation of images has a complex background, different brightness, and different textures is still problems in the image segmentation technology. The target semantic feature extraction effect directly influences the accuracy of image semantic segmentation. The image capturing device mounted on the robot has a variable spatial relationship with the target during the operation of the robot in the automated production line that segments the target by machine vision technology. When the image capturing device takes images from different distances and angles, the target has different scales in the image. The traditional single-scale feature extraction method has lower precision for semantic segmentation of the target. This study shows how to use the context information of the image to create the multiscale feature fusion module and develop the ability to extract rich target features and improve the segmentation performance of the network model.MethodThis paper proposes a method of workpiece target semantic segmentation based on multiscale feature fusion. The convolutional neural network is used to extract the multiscale local feature semantic information of the target, and the semantic information of different scales is pixel-fused so that the neural network fully captures context information of the image and obtains a better feature representation, thereby effectively achieving semantic segmentation of the workpiece target. The method uses the ResNet as the underlying network structure and combines the image gold tower theory to construct a multiscale feature fusion module. As the image pyramid is simply a change in image resolution, although the multiscale information representation of the image can be obtained, the output of the fourth block layer of the ResNet network is already a feature map with a small dimension. Reduced resolution of the feature map is not conducive to the feature response of the network model and tends to increase the amount of parameters calculated by the network model. Therefore, the resolution reduction operation in the original image pyramid is replaced in the form of the atrous convolution. The sensitivity field of the filter is effectively increased under a nonreduced resolution of the image, and the local feature information of the superior image can be fully obtained. In this study, a three-layer image pyramid is used, where the bottom layer image is the feature map of the Block4 layer output, the middle layer is a plurality of parallel atrous convolution layers with different sampling rates to extract local feature information of different scales, and the top layer is the fusion layer of the local feature information extracted by the middle layer.ResultThe method of this study is compared with the traditional single-scale feature extraction method through qualitative and quantitative experimental methods, and mean intersection over union (mIOU) is used as the evaluation index. Experiments show that the segmentation network model obtained by this method has more accurate segmentation ability for the targets in the test set. Compared with the traditional single-scale feature extraction network, the mIOU evaluation index of this method on the test set is improved by 4.84% compared with the network that also adopts the porous convolution strategy. The parallel structure proposed in this paper improves the mIOU evaluation index on the test set by 3.57%, compared with the network using the atrous spatial pyramid pooling strategy to improve the network semantic segmentation ability. The mIOU evaluation index of the method in the test set is also improved by 2.24%. When the test sample contains fewer types of targets and the target edges are clearer, more accurate segmentation results can be obtained. To verify that the method has certain generalization, this study uses the method to verify the dataset of the tennis court scene. The tennis court scene dataset includes nine categories of goals:tennis, rackets, inside the tennis court, venue lines, outside the tennis court, nets, people, tennis court fence, and sky. The size and scale of these types of targets are different, which is consistent with the multiscale feature extraction ideas proposed in this paper. Under the condition that the parameters set by the method are completely adopted and the network model has not optimized the parameter for the tennis court scene dataset in the mIOU evaluation index of the test set, the accuracy increased from 54.68% to 56.43%.ConclusionThis study introduces the labeling method of multi-workpiece datasets, and uses methods such as data expansion and definition of learning rate update to effectively prevent the overfitting phenomenon in network training and improve the basic performance of the network model. The value of the neural network depth and the value of the hyperparameter in the neural network training process are determined by comparing the experiments. At the same time, a multiscale feature fusion module is designed to extract multiscale semantic information of the target. The multiscale feature fusion enhances the ability of the neural network model to extract the target features, and the designed MsFFNet network model in more accurate in extracting the semantic features of the target. Therefore, the method can perform the semantic segmentation task of robot vision-based robotic grabbing target on the automated production line under the condition that the spatial position between the image capturing device and the target is variable. In this study, the network model determined by the specific dataset provides a reference value for the subsequent artifact detection. The next step will also focus on the generalization ability of the dataset of other industrial scenes.关键词:residual network;semantic segmentation;multiscale feature;deep learning;visual task31|47|4更新时间:2024-05-07 -

摘要:ObjectiveImage classification is one of the important research technologies in computer vision. The development of deep learning and convolutional neural networks (CNNs) has laid the technical foundation for image classification. In recent years, image classification methods based on deep CNN have become an important research topic. DenseNet is one of the widely applied deep CNNs in image classification, encouraging feature reusage and alleviating the vanishing gradient problem. However, this approach has obvious limitations. First, each layer simply combines the feature maps obtained from preceding layers by concatenating operation without considering the interdependencies between different channels. The network representation can be further improved by modeling feature channel correlation and realizing channel feature recalibration. Second, the correlation of the interlayer feature map is not explicitly modeled. Thus, adaptively learning the correlation coefficients by modeling the correlation of feature maps between the layers is important.MethodThe conventional DenseNet networks do not adequately consider the channel feature correlation and interlayer feature correlation. To address these limitations, multiple feature reweight DenseNet (MFR-DenseNet) combines channel feature reweight DenseNet (CFR-DenseNet) and inter-layer feature reweight DenseNet (ILFR-DenseNet) by ensemble learning method, thereby improving the representation power of the DenseNet by adaptively recalibrating the channel-wise feature responses and explicitly modeling the interdependencies between the features of different convolutional layers. However, MFR-DenseNet uses two independent parallel networks for image classification, which is not end-to-end training. The CFR-DenseNet and the ILFR-DenseNet models should be trained and saved in training. First, the models and weights are loaded, and the MFR-DenseNet needs multiple save and load. The training process is cumbersome. Second, the parameters and calculations are large, so the training takes a long time. In the test, the final prediction results of the MFR-DenseNet are obtained by taking an average of predictions from the two models. The parameters and test time are almost doubled compared with a single-channel feature reweight or interlayer feature reweight network. Therefore, the MFR-DenseNet has high requirements on the storage space and computing performance of the device in practical applications, thereby limiting its application. To address these limitations of MFR-DenseNet, this paper proposes an end-to-end dual feature reweight DenseNet (DFR-DenseNet) based on the soft attention mechanism. The network implements the channel feature reweight and interlayer feature reweight of DenseNet. First, the channel feature reweight and interlayer feature reweight method are integrated in DenseNet. By introducing a squeeze-and-excitation module (SEM) after each 3×3 convolutional layer, our method solves the problem of exploiting the channel dependencies. Each feature map of each layer in the SEM obtains a weight through a squeeze and excitation operation. The representation of the network can be improved by explicitly modeling the interdependencies between the channels. The output feature map of the convolutional layer is subjected to two squeeze excitation operations. Thus, the weight value of each layer can be obtained to achieve the reweight of the interlayer features. Then, DFR-DenseNet was constructed. The output feature map of each convolution layer completes the channel feature reweight and interlayer feature reweight through two channels. The concat and convolution operations were used to achieve the combination of two types of reweighted feature maps.ResultFirst, the DFR-DenseNet is compared with the serial fusion method and parallel-addition fusion method on the image classification dataset CIFAR-10, which proves that DFR-DenseNet is the most effective. Second, to demonstrate the advantage of the DFR-DenseNet, we performed different experiments on the image classification dataset CIFAR-10/100. To show the effectiveness of the method on the high-resolution dataset, we conducted the age classification experiment on the face dataset MORPH, and the age group classification comparison experiment was performed on the unconstrained Adience dataset. The image classification accuracy was significantly improved. The 40-layer DFR-DenseNet had a 4.69% error and outperformed the 40-layer DenseNet by 12% on CIFAR-10 with only 1.87% more parameters. The 64-layer DFR-DenseNet resulted in a 4.29% error on CIFAR-10 and outperformed the 64-layer DenseNet by 9.11%. On CIFAR-100, the 40-layer DFR-DenseNet and 64-layer DFR-DenseNet resulted in a 24.29% and 21.86% test error on the test set, and they outperformed the 40-layer DenseNet and 64-layer DenseNet by 5.56% and 5.41%, respectively. Age estimation from a single face image is an essential task in the field of human-computer interaction and computer vision, which has a wide range of practical applications. Age estimation consists of two categories:age classification and age regression. Adience is used for age group classification and obtained 58.79% accuracy. MORPH Album 2 is used for age regression. The 121-layer DFR-DenseNet had a 3.16 mean absolute error and outperformed the 121-layer DenseNet by 7.33% on the MORPH Album 2. Compared with the MFR-DenseNet, the DFR-DenseNet reduced the number of parameters by half. The test time of the DFR-DenseNet network was shortened to approximately 61% in the MFR-DenseNet test.ConclusionThe experimental results show that the end-to-end dual feature reweight DenseNet can enhance the learning ability of the network and improve the accuracy of image classification.关键词:dual feature reweight DenseNet(DFR-DenseNet);channel feature reweight;inter-layer feature reweight;image classification;end-to-end35|63|5更新时间:2024-05-07

摘要:ObjectiveImage classification is one of the important research technologies in computer vision. The development of deep learning and convolutional neural networks (CNNs) has laid the technical foundation for image classification. In recent years, image classification methods based on deep CNN have become an important research topic. DenseNet is one of the widely applied deep CNNs in image classification, encouraging feature reusage and alleviating the vanishing gradient problem. However, this approach has obvious limitations. First, each layer simply combines the feature maps obtained from preceding layers by concatenating operation without considering the interdependencies between different channels. The network representation can be further improved by modeling feature channel correlation and realizing channel feature recalibration. Second, the correlation of the interlayer feature map is not explicitly modeled. Thus, adaptively learning the correlation coefficients by modeling the correlation of feature maps between the layers is important.MethodThe conventional DenseNet networks do not adequately consider the channel feature correlation and interlayer feature correlation. To address these limitations, multiple feature reweight DenseNet (MFR-DenseNet) combines channel feature reweight DenseNet (CFR-DenseNet) and inter-layer feature reweight DenseNet (ILFR-DenseNet) by ensemble learning method, thereby improving the representation power of the DenseNet by adaptively recalibrating the channel-wise feature responses and explicitly modeling the interdependencies between the features of different convolutional layers. However, MFR-DenseNet uses two independent parallel networks for image classification, which is not end-to-end training. The CFR-DenseNet and the ILFR-DenseNet models should be trained and saved in training. First, the models and weights are loaded, and the MFR-DenseNet needs multiple save and load. The training process is cumbersome. Second, the parameters and calculations are large, so the training takes a long time. In the test, the final prediction results of the MFR-DenseNet are obtained by taking an average of predictions from the two models. The parameters and test time are almost doubled compared with a single-channel feature reweight or interlayer feature reweight network. Therefore, the MFR-DenseNet has high requirements on the storage space and computing performance of the device in practical applications, thereby limiting its application. To address these limitations of MFR-DenseNet, this paper proposes an end-to-end dual feature reweight DenseNet (DFR-DenseNet) based on the soft attention mechanism. The network implements the channel feature reweight and interlayer feature reweight of DenseNet. First, the channel feature reweight and interlayer feature reweight method are integrated in DenseNet. By introducing a squeeze-and-excitation module (SEM) after each 3×3 convolutional layer, our method solves the problem of exploiting the channel dependencies. Each feature map of each layer in the SEM obtains a weight through a squeeze and excitation operation. The representation of the network can be improved by explicitly modeling the interdependencies between the channels. The output feature map of the convolutional layer is subjected to two squeeze excitation operations. Thus, the weight value of each layer can be obtained to achieve the reweight of the interlayer features. Then, DFR-DenseNet was constructed. The output feature map of each convolution layer completes the channel feature reweight and interlayer feature reweight through two channels. The concat and convolution operations were used to achieve the combination of two types of reweighted feature maps.ResultFirst, the DFR-DenseNet is compared with the serial fusion method and parallel-addition fusion method on the image classification dataset CIFAR-10, which proves that DFR-DenseNet is the most effective. Second, to demonstrate the advantage of the DFR-DenseNet, we performed different experiments on the image classification dataset CIFAR-10/100. To show the effectiveness of the method on the high-resolution dataset, we conducted the age classification experiment on the face dataset MORPH, and the age group classification comparison experiment was performed on the unconstrained Adience dataset. The image classification accuracy was significantly improved. The 40-layer DFR-DenseNet had a 4.69% error and outperformed the 40-layer DenseNet by 12% on CIFAR-10 with only 1.87% more parameters. The 64-layer DFR-DenseNet resulted in a 4.29% error on CIFAR-10 and outperformed the 64-layer DenseNet by 9.11%. On CIFAR-100, the 40-layer DFR-DenseNet and 64-layer DFR-DenseNet resulted in a 24.29% and 21.86% test error on the test set, and they outperformed the 40-layer DenseNet and 64-layer DenseNet by 5.56% and 5.41%, respectively. Age estimation from a single face image is an essential task in the field of human-computer interaction and computer vision, which has a wide range of practical applications. Age estimation consists of two categories:age classification and age regression. Adience is used for age group classification and obtained 58.79% accuracy. MORPH Album 2 is used for age regression. The 121-layer DFR-DenseNet had a 3.16 mean absolute error and outperformed the 121-layer DenseNet by 7.33% on the MORPH Album 2. Compared with the MFR-DenseNet, the DFR-DenseNet reduced the number of parameters by half. The test time of the DFR-DenseNet network was shortened to approximately 61% in the MFR-DenseNet test.ConclusionThe experimental results show that the end-to-end dual feature reweight DenseNet can enhance the learning ability of the network and improve the accuracy of image classification.关键词:dual feature reweight DenseNet(DFR-DenseNet);channel feature reweight;inter-layer feature reweight;image classification;end-to-end35|63|5更新时间:2024-05-07 -