最新刊期

卷 25 , 期 2 , 2020

-

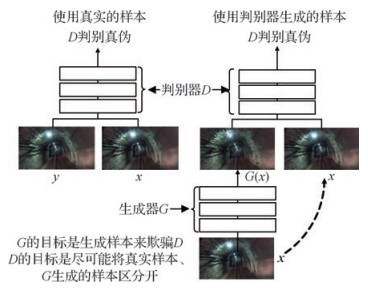

摘要:Brain tumors,abnormal cells growing in the human brain,are common neurological diseases that are extremely harmful to human health. Malignant brain tumors can lead to high mortality. Magnetic resonance imaging (MRI),a typical noninvasive imaging technology,can produce high-quality brain images without damage and skull artifacts,as well as provide comprehensive information to facilitate the diagnosis and treatment of brain tumors. Additionally,the segmentation of MRI brain tumors utilizes computer technology to segment and label tumors (necrosis,edema,and nonenhanced and enhanced tumors) and normal tissues automatically on multimodal brain images,which assists in their diagnosis and treatment. However,given the complexity of brain tissue structure,the diversity of spatial location,the shape and size of brain tumors,and various influence factors,such as field offset effect,volume effect,and equipment noise,during the processing of MRI brain images,automatically achieving accurate tumor segmentation results from MRI brain images has been challenging. With the continuous breakthroughs of deep learning technology in computer vision and medical image analysis,MRI brain tumor segmentation methods based on deep learning have also attracted wide attention in recent years. A series of important research results have been reported,illuminating the promising potential of deep learning methods for MRI brain tumor segmentation task. Therefore,this work aims to review deep learning-based MRI brain tumor segmentation methods,i.e.,the current mainstream of MRI brain tumor segmentation. Through an extensive study of the literature on MRI brain tumor segmentation problem,we comprehensively summarize and analyze the existing deep learning methods for MRI brain tumor segmentation. To provide a further understanding of this task,we first introduce a family of authoritative brain tumor segmentation databases,i.e.,BraTS (2012-2018) Databases,which run in conjunction with the Medical Image Computing and Computer Assisted Intervention (MICCAI) 2012-2018 Conferences. Several important evaluation metrics,including dice similarity coefficient,predictive positivity value,and sensitivity,are also briefly described. On the basis of the basic network architecture for brain tumor segmentation,we classify the existing deep learning-based MRI brain tumor segmentation methods into three categories,namely,convolutional neural network-,fully convolutional network-,and generative adversarial network-based MRI brain tumor segmentation methods. Convolutional neural network-based methods can be further divided into three sub-categories:single network-based,multinetwork-based,and traditional-method-combination-based approaches. On the basis of the three categories,we comprehensively describe and analyze the basic ideas,network architecture,and typical improvement schemes for each type of method. In addition,we compare the performance results of the representative methods achieved on the BraTS series datasets and summarize the comparative analysis results as well as the advantages and disadvantages of the representative methods. Finally,we discuss three possible future research directions.By reviewing the main work in this field,the existing deep learning methods for MRI brain tumor segmentation are examined well,and our threefold conclusion follows:1) Embedding advanced network architecture or introducing prior information of brain tumors into the deep segmentation network will achieve superior accuracy performance for each type of method. 2) Fully convolutional network-based MRI brain tumor segmentation methods can obtain improved balance between accuracy and efficiency. 3) Generative adversarial network-based MRI brain tumor segmentation methods,a novel and powerful semi-supervised method,has shown good potential for the extremely challenging construction of a large-scale MRI brain tumor segmentation dataset with fine labels. Three possible future research directions are recommended,namely,embedding numerous powerful feature representation modules (e.g.,squeeze-and-excitation block,matrix power normalization unit),constructing semi-supervised networks with prior medical knowledge (e.g.,constraint information,location,and size and shape information of brain tumors),and transferring networks from other image tasks (e.g.,promising detection networks of faster and masker region-based convolutional neural networks). MRI brain tumor segmentation is an important step in the diagnosis and treatment of brain tumors. This process can quickly obtain further accurate MRI brain tumor segmentation results through computer technology,which can effectively assist doctors in computing the location and size of tumors and formulating numerous reasonable treatment and rehabilitation strategies for patients with brain tumors. As a new development direction in recent years,deep learning-based MRI brain tumor segmentation has achieved significant performance improvement over traditional methods. As the mainstream in this field,this method will further promote the clinical diagnosis and treatment level of computer-aided MRI brain tumor segmentation technology.关键词:magnetic resonance imaging(MRI);brain tumor;artificial neural networks;deep learning;segmentation114|274|18更新时间:2024-05-07

摘要:Brain tumors,abnormal cells growing in the human brain,are common neurological diseases that are extremely harmful to human health. Malignant brain tumors can lead to high mortality. Magnetic resonance imaging (MRI),a typical noninvasive imaging technology,can produce high-quality brain images without damage and skull artifacts,as well as provide comprehensive information to facilitate the diagnosis and treatment of brain tumors. Additionally,the segmentation of MRI brain tumors utilizes computer technology to segment and label tumors (necrosis,edema,and nonenhanced and enhanced tumors) and normal tissues automatically on multimodal brain images,which assists in their diagnosis and treatment. However,given the complexity of brain tissue structure,the diversity of spatial location,the shape and size of brain tumors,and various influence factors,such as field offset effect,volume effect,and equipment noise,during the processing of MRI brain images,automatically achieving accurate tumor segmentation results from MRI brain images has been challenging. With the continuous breakthroughs of deep learning technology in computer vision and medical image analysis,MRI brain tumor segmentation methods based on deep learning have also attracted wide attention in recent years. A series of important research results have been reported,illuminating the promising potential of deep learning methods for MRI brain tumor segmentation task. Therefore,this work aims to review deep learning-based MRI brain tumor segmentation methods,i.e.,the current mainstream of MRI brain tumor segmentation. Through an extensive study of the literature on MRI brain tumor segmentation problem,we comprehensively summarize and analyze the existing deep learning methods for MRI brain tumor segmentation. To provide a further understanding of this task,we first introduce a family of authoritative brain tumor segmentation databases,i.e.,BraTS (2012-2018) Databases,which run in conjunction with the Medical Image Computing and Computer Assisted Intervention (MICCAI) 2012-2018 Conferences. Several important evaluation metrics,including dice similarity coefficient,predictive positivity value,and sensitivity,are also briefly described. On the basis of the basic network architecture for brain tumor segmentation,we classify the existing deep learning-based MRI brain tumor segmentation methods into three categories,namely,convolutional neural network-,fully convolutional network-,and generative adversarial network-based MRI brain tumor segmentation methods. Convolutional neural network-based methods can be further divided into three sub-categories:single network-based,multinetwork-based,and traditional-method-combination-based approaches. On the basis of the three categories,we comprehensively describe and analyze the basic ideas,network architecture,and typical improvement schemes for each type of method. In addition,we compare the performance results of the representative methods achieved on the BraTS series datasets and summarize the comparative analysis results as well as the advantages and disadvantages of the representative methods. Finally,we discuss three possible future research directions.By reviewing the main work in this field,the existing deep learning methods for MRI brain tumor segmentation are examined well,and our threefold conclusion follows:1) Embedding advanced network architecture or introducing prior information of brain tumors into the deep segmentation network will achieve superior accuracy performance for each type of method. 2) Fully convolutional network-based MRI brain tumor segmentation methods can obtain improved balance between accuracy and efficiency. 3) Generative adversarial network-based MRI brain tumor segmentation methods,a novel and powerful semi-supervised method,has shown good potential for the extremely challenging construction of a large-scale MRI brain tumor segmentation dataset with fine labels. Three possible future research directions are recommended,namely,embedding numerous powerful feature representation modules (e.g.,squeeze-and-excitation block,matrix power normalization unit),constructing semi-supervised networks with prior medical knowledge (e.g.,constraint information,location,and size and shape information of brain tumors),and transferring networks from other image tasks (e.g.,promising detection networks of faster and masker region-based convolutional neural networks). MRI brain tumor segmentation is an important step in the diagnosis and treatment of brain tumors. This process can quickly obtain further accurate MRI brain tumor segmentation results through computer technology,which can effectively assist doctors in computing the location and size of tumors and formulating numerous reasonable treatment and rehabilitation strategies for patients with brain tumors. As a new development direction in recent years,deep learning-based MRI brain tumor segmentation has achieved significant performance improvement over traditional methods. As the mainstream in this field,this method will further promote the clinical diagnosis and treatment level of computer-aided MRI brain tumor segmentation technology.关键词:magnetic resonance imaging(MRI);brain tumor;artificial neural networks;deep learning;segmentation114|274|18更新时间:2024-05-07

Review

-

摘要:ObjectiveThe image captured by the monitor will be affected by the ambiguity of the atmosphere and motion transformation of the target, resulting in the low resolution of the captured face image, which cannot be recognized by human or machine. Therefore, the clarity of face images must be urgently improved. The method of enhancing the resolution of face image by using super-resolution (SR) restoration technology has become an important means for solving this problem. Face SR reconstruction is the process of predicting high-resolution (HR) face images from one or more observed low-resolution (LR) face images, which is a typical pathological problem. As a domain-specific super-resolution task, we can use facial priori knowledge to improve the effect of super-resolution. We propose a deep end-to-end face SR reconstruction algorithm based on multitask joint learning method. Multitask learning algorithm is an inductive transfer mechanism, which can improve the generalization performance of backbone models by utilizing specific domain information hidden in training signals. Existing SR methods use different methods to fuse face priori information, which substantially improves the performance of face super-resolution algorithm. However, these networks generally use the method of the direct fusion of facial geometry information and image features to integrate the priori feature information, but they do not fully utilize the semantic information, such as facial landmark, gender, and facial expression. Moreover, at a large magnification, the priori features obtained by these methods are rough to reconstruct detailed facial edges and texture details. To solve this problem, we propose a face SR reconstruction algorithm based on multitask joint learning. MTFSR combines face SR with assistant tasks, such as facial feature point detection, gender classification, and facial expression recognition, by using multitask learning method to obtain the shared representation of facial features among related tasks, acquire rich facial prior knowledge, and optimize the performance of face SR algorithm.MethodFirst, a face SR reconstruction algorithm based on multitask joint learning is proposed. The model uses residual learning and symmetrical cross-layer connection to extract multilevel features. Local residual mapping improves the expressive capability of the network to enhance performance, solves gradient dissipation in network training, and reduces the number of convolution cores in the model through feature reuse. To reduce the parameters of the convolution kernel, the dimension transformation of the input of the residual block is performed by using the convolution kernel with 1×1 scale, which initially reduces and then increases the dimension. The network adopts the encoder-decoder structure. In the encoder structure, the dimension of feature space is gradually reduced, and the redundant information in the input image is discarded. The feature expression of the face image at the high-dimensional visual level is obtained. The visual feature is sent to the decoder through the cross-layer connection structure. The decoder cascades and fuses all levels of visual features in the unit to achieve accurate filtering of effective information. The deconvolution layer is used to restore the spatial dimension gradually and repair the details and texture features of the face. We design a joint training method for face multiattribute learning tasks:setting loss weights and loss thresholds on the basis of the learning difficulty of different tasks and avoiding the influence of subtasks on the learning of head tasks after fitting the training set to obtain considerable abundant face prior knowledge information. The perceptual loss function is used to measure the semantic gap between HR and SR images, and the output feature map of perceptual loss network is visually processed to demonstrate the effectiveness of perceptual loss in improving the reconstruction effect of facial semantic information. Finally, we enhance the dataset of face attributes and filter the data that are missing relevant attribute labels. The key point detection algorithm is used to re-extract the attributes of feature points. On this basis, joint multitask learning is conducted to obtain numerous realistic SR results of visual perception.ResultIn this experiment, a total of 35 000 face images are selected, and two sets of LR/HR face image data pairs with different resolution magnifications are produced via double cubic interpolation downsampling using×4 and×8 scales. The sizes of each pair of images are 322/1282 and 162/1282. The first 30 000 face images are used as training set, and the last 5 000 face images are used as test set. Six models are trained for each of the three cases:×4 and×8 resolution amplifications, whether to use multitask joint learning, and whether to use perceptual loss function. The single task face SR network using pixel-by-pixel loss is defined as STFSR-MSE, and the face SR network using pixel-by-pixel loss is defined as MTFSR-MSE. Face SR network based on multitask joint learning with sense perception loss is defined as MTFSR-Perce. Two objective evaluation criteria, namely, peak signal-to-noise ratio and structural similarity index, are used to test the experimental results. The effect of this algorithm is improved by 2.15 dB at the scale of×8 magnification, compared with the general SR MemNet algorithm, and approximately 1.2 dB at the scale of×8 magnification, compared with the face SR FSRNet algorithm. A joint training method for the multiattribute learning task of the face is designed. On the basis of the learning difficulty of different tasks, loss weights and loss thresholds are set to avoid the influence of subtasks on the learning of head services after the training set has been fitted and to obtain abundant face prior knowledge information.ConclusionExperimental data and results show that the proposed algorithm can further utilize the face prior knowledge and create further realistic and clear facial edges and texture details in visual perception.关键词:multi-task joint learning;image restoration;deep learning;super resolution;perception loss58|99|4更新时间:2024-05-07

摘要:ObjectiveThe image captured by the monitor will be affected by the ambiguity of the atmosphere and motion transformation of the target, resulting in the low resolution of the captured face image, which cannot be recognized by human or machine. Therefore, the clarity of face images must be urgently improved. The method of enhancing the resolution of face image by using super-resolution (SR) restoration technology has become an important means for solving this problem. Face SR reconstruction is the process of predicting high-resolution (HR) face images from one or more observed low-resolution (LR) face images, which is a typical pathological problem. As a domain-specific super-resolution task, we can use facial priori knowledge to improve the effect of super-resolution. We propose a deep end-to-end face SR reconstruction algorithm based on multitask joint learning method. Multitask learning algorithm is an inductive transfer mechanism, which can improve the generalization performance of backbone models by utilizing specific domain information hidden in training signals. Existing SR methods use different methods to fuse face priori information, which substantially improves the performance of face super-resolution algorithm. However, these networks generally use the method of the direct fusion of facial geometry information and image features to integrate the priori feature information, but they do not fully utilize the semantic information, such as facial landmark, gender, and facial expression. Moreover, at a large magnification, the priori features obtained by these methods are rough to reconstruct detailed facial edges and texture details. To solve this problem, we propose a face SR reconstruction algorithm based on multitask joint learning. MTFSR combines face SR with assistant tasks, such as facial feature point detection, gender classification, and facial expression recognition, by using multitask learning method to obtain the shared representation of facial features among related tasks, acquire rich facial prior knowledge, and optimize the performance of face SR algorithm.MethodFirst, a face SR reconstruction algorithm based on multitask joint learning is proposed. The model uses residual learning and symmetrical cross-layer connection to extract multilevel features. Local residual mapping improves the expressive capability of the network to enhance performance, solves gradient dissipation in network training, and reduces the number of convolution cores in the model through feature reuse. To reduce the parameters of the convolution kernel, the dimension transformation of the input of the residual block is performed by using the convolution kernel with 1×1 scale, which initially reduces and then increases the dimension. The network adopts the encoder-decoder structure. In the encoder structure, the dimension of feature space is gradually reduced, and the redundant information in the input image is discarded. The feature expression of the face image at the high-dimensional visual level is obtained. The visual feature is sent to the decoder through the cross-layer connection structure. The decoder cascades and fuses all levels of visual features in the unit to achieve accurate filtering of effective information. The deconvolution layer is used to restore the spatial dimension gradually and repair the details and texture features of the face. We design a joint training method for face multiattribute learning tasks:setting loss weights and loss thresholds on the basis of the learning difficulty of different tasks and avoiding the influence of subtasks on the learning of head tasks after fitting the training set to obtain considerable abundant face prior knowledge information. The perceptual loss function is used to measure the semantic gap between HR and SR images, and the output feature map of perceptual loss network is visually processed to demonstrate the effectiveness of perceptual loss in improving the reconstruction effect of facial semantic information. Finally, we enhance the dataset of face attributes and filter the data that are missing relevant attribute labels. The key point detection algorithm is used to re-extract the attributes of feature points. On this basis, joint multitask learning is conducted to obtain numerous realistic SR results of visual perception.ResultIn this experiment, a total of 35 000 face images are selected, and two sets of LR/HR face image data pairs with different resolution magnifications are produced via double cubic interpolation downsampling using×4 and×8 scales. The sizes of each pair of images are 322/1282 and 162/1282. The first 30 000 face images are used as training set, and the last 5 000 face images are used as test set. Six models are trained for each of the three cases:×4 and×8 resolution amplifications, whether to use multitask joint learning, and whether to use perceptual loss function. The single task face SR network using pixel-by-pixel loss is defined as STFSR-MSE, and the face SR network using pixel-by-pixel loss is defined as MTFSR-MSE. Face SR network based on multitask joint learning with sense perception loss is defined as MTFSR-Perce. Two objective evaluation criteria, namely, peak signal-to-noise ratio and structural similarity index, are used to test the experimental results. The effect of this algorithm is improved by 2.15 dB at the scale of×8 magnification, compared with the general SR MemNet algorithm, and approximately 1.2 dB at the scale of×8 magnification, compared with the face SR FSRNet algorithm. A joint training method for the multiattribute learning task of the face is designed. On the basis of the learning difficulty of different tasks, loss weights and loss thresholds are set to avoid the influence of subtasks on the learning of head services after the training set has been fitted and to obtain abundant face prior knowledge information.ConclusionExperimental data and results show that the proposed algorithm can further utilize the face prior knowledge and create further realistic and clear facial edges and texture details in visual perception.关键词:multi-task joint learning;image restoration;deep learning;super resolution;perception loss58|99|4更新时间:2024-05-07 -

摘要:ObjectiveThe style transfer of images has been a research hotspot in computer vision and image processing in recent years. The image style transfer technology can transfer the style of the style image to the content image, and the obtained result image contains the main content structure information of the content image and the style information of the style image, thereby satisfying people's artistic requirements for the image. The development of image style transfer can be divided into two phases. In the first phase, people often use non-photorealistic rendering methods to add artistic style to the design works. These methods only use the low-level features of the image for style transfer, and most of them have problems, such as poor visual effects and low operational efficiency. In the second phase, researchers have performed considerable meaningful work by introducing the achievements of deep learning to style transfer. In the framework of convolutional neural networks, Researchers proposed a classical image style transfer method, which uses convolutional neural networks to extract advanced features of style and content images, and obtained the stylized result image by minimizing the loss function. Compared with the traditional non-photorealistic rendering method, the convolutional neural network-based method does not require user intervention in the style transfer process, is applicable to any type of style image, and has good universality. However, the resulting image has uneven texture expression and increased noise, and the method is more complex than other traditional methods. To address these problems, we propose a new model of total variational style transfer based on correlation alignment from a detailed analysis of the traditional style transfer method.MethodIn this study, we design a style texture extraction method based on correlation alignment to make the style information evenly distributed on the resulting image. In addition, the total variational regularity is introduced to suppress the noise generated during the style transfer effectively, and a more efficient result image convolution layer selection strategy is adopted to improve the overall efficiency of the new model. We build a new model consisting of three VGG-19 networks. Only the cov4_3 convolutional layer of the VGG(visual geometry group)-style network is used to provide style information. Only the cov4_2 convolutional layer of the VGG content network is used to provide content information. For a given content image

摘要:ObjectiveThe style transfer of images has been a research hotspot in computer vision and image processing in recent years. The image style transfer technology can transfer the style of the style image to the content image, and the obtained result image contains the main content structure information of the content image and the style information of the style image, thereby satisfying people's artistic requirements for the image. The development of image style transfer can be divided into two phases. In the first phase, people often use non-photorealistic rendering methods to add artistic style to the design works. These methods only use the low-level features of the image for style transfer, and most of them have problems, such as poor visual effects and low operational efficiency. In the second phase, researchers have performed considerable meaningful work by introducing the achievements of deep learning to style transfer. In the framework of convolutional neural networks, Researchers proposed a classical image style transfer method, which uses convolutional neural networks to extract advanced features of style and content images, and obtained the stylized result image by minimizing the loss function. Compared with the traditional non-photorealistic rendering method, the convolutional neural network-based method does not require user intervention in the style transfer process, is applicable to any type of style image, and has good universality. However, the resulting image has uneven texture expression and increased noise, and the method is more complex than other traditional methods. To address these problems, we propose a new model of total variational style transfer based on correlation alignment from a detailed analysis of the traditional style transfer method.MethodIn this study, we design a style texture extraction method based on correlation alignment to make the style information evenly distributed on the resulting image. In addition, the total variational regularity is introduced to suppress the noise generated during the style transfer effectively, and a more efficient result image convolution layer selection strategy is adopted to improve the overall efficiency of the new model. We build a new model consisting of three VGG-19 networks. Only the cov4_3 convolutional layer of the VGG(visual geometry group)-style network is used to provide style information. Only the cov4_2 convolutional layer of the VGG content network is used to provide content information. For a given content image$\mathit{\boldsymbol{c}}$ $\mathit{\boldsymbol{s}}$ $\mathit{\boldsymbol{x}}$ $\mathit{\boldsymbol{c}}$ $\mathit{\boldsymbol{x}}$ 关键词:correlation alignment;total variation;style transfer;machine vision;convolutional neural network (CNN)31|33|7更新时间:2024-05-07 -

摘要:ObjectiveSemantic segmentation, a challenging task in computer vision, aims to assign corresponding semantic class labels to every pixel in an image. This process is widely applied into many fields, such as autonomous driving, obstacle detection, medical image analysis, 3D geometry, environment modeling, reconstruction of indoor environment, and 3D semantic segmentation. Despite the many achievements in semantic segmentation task, two challenges remain:1) the lack of rich multiscale information and 2) the loss of spatial information. Starting from capturing rich multiscale information and extracting affluent spatial information, a new semantic segmentation model is proposed, which can greatly improve the segmentation results.MethodThe new module is built on an encoder-decoder structure, which can effectively promote the fusion of high-level semantic information and low-level spatial information. The details of the entire architecture are elaborated as follows:First, in the encoder part, the ResNet-101 network is used as our backbone to capture feature maps. In ResNet-101 network, the last two blocks utilize atrous convolutions with rate=2 and rate=4, which can reduce the spatial resolution loss. A multiscale information fusion module is designed in the encoder part to capture feature maps with rich multiscale and discriminative information in the deep stage of the network. In this module, by applying expansion and stacking principle, Kronecker convolutions are arranged in parallel structure to expand the receptive field for extracting multiscale information. A global attention module is applied to highlight discriminative information selectively in the feature maps captured by Kronecker convolutions. Subsequently, a spatial information capturing module is introduced as a decoder part in the shallow stage of the network. The spatial information capturing module aims to supplement the affluent spatial information, which can compensate for the spatial resolution loss caused by the repeated combination of max-pooling and striding at consecutive layers in ResNet-101. Moreover, the spatial information-capturing module plays an important role in effectively enhancing the relationships between the widely separated spatial regions. The feature maps with rich multiscale and discriminative information captured by the multiscale information fusion module in the deep stage and the feature maps with affluent spatial information captured by the spatial information-capturing module will be fused to obtain a new feature map set, which is full of effective information. Afterward, a multikernel convolution block is utilized to refine these feature maps. In the multikernel convolution block, two convolutions are in parallel. The sizes of the two convolution kernels are 3×3 and 5×5. The feature maps refined by the multikernel convolution block will be fed to a Data-dependent Upsampling (DUpsampling) operator to obtain the final prediction feature maps. The reason for replacing the upsample operators with bilinear interpolation with DUpsampling is that DUpsampling not only can utilize the redundancy in the segmentation label space but also can effectively recover the pixel-wise prediction. We can safely downsample arbitrary low-level feature maps to the resolution of the lowest resolution of feature maps and then fuse these features to produce the final prediction.ResultTo prove the effectiveness of the proposals, extensive experiments are conducted on two public datasets:PASCAL VOC 2012 and Cityscapes. We first conduct several ablation studies on the PASCAL VOC 2012 dataset to evaluate the effectiveness of each module and then perform several contrast experiments on the PASCAL VOC 2012 and Cityscapes datasets with existing approaches, such as FCN (fully convolutional network), FRRN(full-resolution residual networks), DeepLabv2, CRF-RNN(conditional random fields as recurrent neural networks), DeconvNet, GCRF (Gaussion conditional random field network), DeepLabv2-CRF, Piecewise, Dilation10, DPN (deep parsing network), LRR (Laplacian reconstruction and refinement), and RefineNet models, to verify the effectiveness of the entire architecture. On the Cityscapes dataset, our model achieves 0.52%, 3.72%, and 4.42% mIoU improvement in performance compared with the RefineNet, DeepLabv2-CRF, and LRR models, respectively. On the PASCAL VOC 2012 dataset, our model achieves 6.23%, 7.43%, and 8.33% mIoU improvement in performance compared with the Piecewise, DPN, and GCRF models, respectively. Several examples of visualization results from our model experimented on the Cityscapes and PASCAL VOC 2012 datasets demonstrate the superiority of the proposals.ConclusionExperimental results show that our model outperforms several state-of-the-art saliency approaches and can dramatically improve the results of semantic segmentation. This model has great application value in many fields, such as medical image analysis, automatic driving, and unmanned aerial vehicle.关键词:semantic segmentation;Kronecker convolution;multiscale information;spatial information;attention mechanism;encoder-decoder structure;Cityscapes dataset;PASCAL VOC 2012 dataset53|73|5更新时间:2024-05-07

摘要:ObjectiveSemantic segmentation, a challenging task in computer vision, aims to assign corresponding semantic class labels to every pixel in an image. This process is widely applied into many fields, such as autonomous driving, obstacle detection, medical image analysis, 3D geometry, environment modeling, reconstruction of indoor environment, and 3D semantic segmentation. Despite the many achievements in semantic segmentation task, two challenges remain:1) the lack of rich multiscale information and 2) the loss of spatial information. Starting from capturing rich multiscale information and extracting affluent spatial information, a new semantic segmentation model is proposed, which can greatly improve the segmentation results.MethodThe new module is built on an encoder-decoder structure, which can effectively promote the fusion of high-level semantic information and low-level spatial information. The details of the entire architecture are elaborated as follows:First, in the encoder part, the ResNet-101 network is used as our backbone to capture feature maps. In ResNet-101 network, the last two blocks utilize atrous convolutions with rate=2 and rate=4, which can reduce the spatial resolution loss. A multiscale information fusion module is designed in the encoder part to capture feature maps with rich multiscale and discriminative information in the deep stage of the network. In this module, by applying expansion and stacking principle, Kronecker convolutions are arranged in parallel structure to expand the receptive field for extracting multiscale information. A global attention module is applied to highlight discriminative information selectively in the feature maps captured by Kronecker convolutions. Subsequently, a spatial information capturing module is introduced as a decoder part in the shallow stage of the network. The spatial information capturing module aims to supplement the affluent spatial information, which can compensate for the spatial resolution loss caused by the repeated combination of max-pooling and striding at consecutive layers in ResNet-101. Moreover, the spatial information-capturing module plays an important role in effectively enhancing the relationships between the widely separated spatial regions. The feature maps with rich multiscale and discriminative information captured by the multiscale information fusion module in the deep stage and the feature maps with affluent spatial information captured by the spatial information-capturing module will be fused to obtain a new feature map set, which is full of effective information. Afterward, a multikernel convolution block is utilized to refine these feature maps. In the multikernel convolution block, two convolutions are in parallel. The sizes of the two convolution kernels are 3×3 and 5×5. The feature maps refined by the multikernel convolution block will be fed to a Data-dependent Upsampling (DUpsampling) operator to obtain the final prediction feature maps. The reason for replacing the upsample operators with bilinear interpolation with DUpsampling is that DUpsampling not only can utilize the redundancy in the segmentation label space but also can effectively recover the pixel-wise prediction. We can safely downsample arbitrary low-level feature maps to the resolution of the lowest resolution of feature maps and then fuse these features to produce the final prediction.ResultTo prove the effectiveness of the proposals, extensive experiments are conducted on two public datasets:PASCAL VOC 2012 and Cityscapes. We first conduct several ablation studies on the PASCAL VOC 2012 dataset to evaluate the effectiveness of each module and then perform several contrast experiments on the PASCAL VOC 2012 and Cityscapes datasets with existing approaches, such as FCN (fully convolutional network), FRRN(full-resolution residual networks), DeepLabv2, CRF-RNN(conditional random fields as recurrent neural networks), DeconvNet, GCRF (Gaussion conditional random field network), DeepLabv2-CRF, Piecewise, Dilation10, DPN (deep parsing network), LRR (Laplacian reconstruction and refinement), and RefineNet models, to verify the effectiveness of the entire architecture. On the Cityscapes dataset, our model achieves 0.52%, 3.72%, and 4.42% mIoU improvement in performance compared with the RefineNet, DeepLabv2-CRF, and LRR models, respectively. On the PASCAL VOC 2012 dataset, our model achieves 6.23%, 7.43%, and 8.33% mIoU improvement in performance compared with the Piecewise, DPN, and GCRF models, respectively. Several examples of visualization results from our model experimented on the Cityscapes and PASCAL VOC 2012 datasets demonstrate the superiority of the proposals.ConclusionExperimental results show that our model outperforms several state-of-the-art saliency approaches and can dramatically improve the results of semantic segmentation. This model has great application value in many fields, such as medical image analysis, automatic driving, and unmanned aerial vehicle.关键词:semantic segmentation;Kronecker convolution;multiscale information;spatial information;attention mechanism;encoder-decoder structure;Cityscapes dataset;PASCAL VOC 2012 dataset53|73|5更新时间:2024-05-07

Image Processing and Coding

-

摘要:ObjectiveThe task of salient object detection is to detect the most salient and attention-attracting regions in an image. It can be divided depending on its usage into two different types:predicting human fixations and detecting salient objects. Unlike detecting large-size objects from images with simple backgrounds and high center bias, the detection of small targets under complex backgrounds remains challenging. The main characteristics are the diverse resolution of input images, complicated backgrounds, and scenes with multiple small targets. Moreover, the location of objects under this scenario lacks prior information. To cope with these challenges, the salient object detection models must be able to detect salient objects quickly and accurately in an image without losing the information of the original image and the target and must be able to maintain stable performance when processing images with different sizes. In recent years, superpixel-based salient object detection methods have performed well on several public benchmark datasets. However, the number and size of superpixel can hardly self-adapt to the variation of image resolution and target size when transferred to realistic applications, resulting in reduced performance. Excessive segmentation of superpixels also results in high time consumption. Therefore, superpixel-based methods are unsuitable for salient object detection under complex backgrounds. Despite its shortcomings in suppressing cluttered backgrounds, methods based on global color contrast can uniformly highlight salient objects in an image. In addition, this type of method is computationally efficient compared with most superpixel-based methods. A newly proposed Boolean map-based saliency method has been known for its simplicity, high performance, and high computational efficiency. This method utilizes the Gestalt principle of foreground-background segregation to compute saliency maps from Boolean maps. The advantage of this method is that the calculation of the saliency map is independent of the size of the image or the target; thus, it can maintain stable performance on input images with different resolutions. However, the results remain less than ideal when dealing with images with a complex background, especially those with multiple small targets. Considering these problems, this paper proposes a novel bottom-up salient object detection method that combines the advantages of Boolean map and grayscale rarity.MethodFirst, the input RGB image is converted into a grayscale image. Second, a set of Boolean maps is obtained by binarizing the grayscale image with equally spaced thresholds. The salient surrounded regions are extracted from the Boolean map. The saliency value of each salient region is assigned on the basis of its area. Third, the grayscale image is quantized at different levels, and its histogram is calculated. Afterward, the less frequent grayscale value in the histogram will be assigned a high saliency value to suppress several typical backgrounds, such as smog, cloud, and light vignetting. Finally, Boolean map and grayscale rarity saliencies are merged to obtain a final saliency map with full resolution, highlighted salient object, and clear contour. In the second step, instead of directly using the area of the salient region as the saliency value, the logarithmic value of the area is used to expand the difference between cluttered backgrounds and real salient objects. This strategy can efficiently suppress cluttered backgrounds, such as grass, car trail, and rock on the ground, and it can highlight the large salient object without excessively suppressing the small one. In the third step, when assigning saliency value to an individual pixel, not only the grayscale rarity but also the quantization coefficient is considered. The more the grayscale image is compressed, the greater the weight of the saliency value of its corresponding quantization level. In the final step, a simple linear multiplication strategy is used to fuse two different saliency maps.ResultThe experiment is divided into two parts:qualitative analysis and quantitative evaluation. In the first part, the stability and time consumption of our method and other classic methods are analyzed through computation on five images with multiple targets. These five images are downsampled from the same image; thus, they vary in resolution but are identical in content. We verify that most superpixel-based methods can hardly maintain stability when handling images with different resolutions. Several methods are good in large images, whereas others specialize in small images. In addition to instability, the time consumption of several methods on large-size images is unacceptable. Combined with time consumption and stability, the models that can maintain stability and have fast run speed are mainly pixel-based models, including Itti, LC (local contrast), HC(histogram-based contrasty), and our method. In the second part, all methods are quantitatively evaluated on three different datasets:the complex-background images, which are annotated by ourselves, the SED2 dataset, and the small-object images, which are from DUT-OMRON, ECSSD, ImgSal, and MSRA10K. First, our method obtains the highest F-measure value and smallest MAE (mean absolute error) score over the complex-background images and is only slightly lower than the DRFI (discriminative regional feature integration) and ASNet (attentive saliency network) method in terms of AUC (area under ROC curve) value. The AUC, F-measure, and MAE scores of our method are 0.910 2, 0.700 2, and 0.045 8, respectively. Second, our method outperforms six traditional methods in the SED2 dataset. Furthermore, on the small-object images from public datasets, the performance of our algorithm is second only to the ASNet method and has the highest F-measure value. On the basis of the visual comparison of different saliency maps, 1) superpixel-based methods tend to ignore the small objects, even though these objects have the same feature as the large objects; 2) methods based on color contrast can detect large and small objects in the image, but they also highlight the background; 3) our method can efficiently suppress the background and detect almost all objects, and the saliency map is the closest to the ground truth map.ConclusionIn this study, we propose a full-resolution salient object detection method that combines Boolean map and grayscale rarity. This method is robust to the size variation of salient objects and the diverse resolution of input images and can efficiently cope with the detection of small targets in complex backgrounds. Experimental results demonstrate that our method has the best comprehensive performance on our dataset and outperforms six traditional saliency models on the SED2 dataset. In addition, our method is computationally efficient on images with various sizes.关键词:salient object detection;Boolean map;grayscale rarity;small target;complex background44|15|3更新时间:2024-05-07

摘要:ObjectiveThe task of salient object detection is to detect the most salient and attention-attracting regions in an image. It can be divided depending on its usage into two different types:predicting human fixations and detecting salient objects. Unlike detecting large-size objects from images with simple backgrounds and high center bias, the detection of small targets under complex backgrounds remains challenging. The main characteristics are the diverse resolution of input images, complicated backgrounds, and scenes with multiple small targets. Moreover, the location of objects under this scenario lacks prior information. To cope with these challenges, the salient object detection models must be able to detect salient objects quickly and accurately in an image without losing the information of the original image and the target and must be able to maintain stable performance when processing images with different sizes. In recent years, superpixel-based salient object detection methods have performed well on several public benchmark datasets. However, the number and size of superpixel can hardly self-adapt to the variation of image resolution and target size when transferred to realistic applications, resulting in reduced performance. Excessive segmentation of superpixels also results in high time consumption. Therefore, superpixel-based methods are unsuitable for salient object detection under complex backgrounds. Despite its shortcomings in suppressing cluttered backgrounds, methods based on global color contrast can uniformly highlight salient objects in an image. In addition, this type of method is computationally efficient compared with most superpixel-based methods. A newly proposed Boolean map-based saliency method has been known for its simplicity, high performance, and high computational efficiency. This method utilizes the Gestalt principle of foreground-background segregation to compute saliency maps from Boolean maps. The advantage of this method is that the calculation of the saliency map is independent of the size of the image or the target; thus, it can maintain stable performance on input images with different resolutions. However, the results remain less than ideal when dealing with images with a complex background, especially those with multiple small targets. Considering these problems, this paper proposes a novel bottom-up salient object detection method that combines the advantages of Boolean map and grayscale rarity.MethodFirst, the input RGB image is converted into a grayscale image. Second, a set of Boolean maps is obtained by binarizing the grayscale image with equally spaced thresholds. The salient surrounded regions are extracted from the Boolean map. The saliency value of each salient region is assigned on the basis of its area. Third, the grayscale image is quantized at different levels, and its histogram is calculated. Afterward, the less frequent grayscale value in the histogram will be assigned a high saliency value to suppress several typical backgrounds, such as smog, cloud, and light vignetting. Finally, Boolean map and grayscale rarity saliencies are merged to obtain a final saliency map with full resolution, highlighted salient object, and clear contour. In the second step, instead of directly using the area of the salient region as the saliency value, the logarithmic value of the area is used to expand the difference between cluttered backgrounds and real salient objects. This strategy can efficiently suppress cluttered backgrounds, such as grass, car trail, and rock on the ground, and it can highlight the large salient object without excessively suppressing the small one. In the third step, when assigning saliency value to an individual pixel, not only the grayscale rarity but also the quantization coefficient is considered. The more the grayscale image is compressed, the greater the weight of the saliency value of its corresponding quantization level. In the final step, a simple linear multiplication strategy is used to fuse two different saliency maps.ResultThe experiment is divided into two parts:qualitative analysis and quantitative evaluation. In the first part, the stability and time consumption of our method and other classic methods are analyzed through computation on five images with multiple targets. These five images are downsampled from the same image; thus, they vary in resolution but are identical in content. We verify that most superpixel-based methods can hardly maintain stability when handling images with different resolutions. Several methods are good in large images, whereas others specialize in small images. In addition to instability, the time consumption of several methods on large-size images is unacceptable. Combined with time consumption and stability, the models that can maintain stability and have fast run speed are mainly pixel-based models, including Itti, LC (local contrast), HC(histogram-based contrasty), and our method. In the second part, all methods are quantitatively evaluated on three different datasets:the complex-background images, which are annotated by ourselves, the SED2 dataset, and the small-object images, which are from DUT-OMRON, ECSSD, ImgSal, and MSRA10K. First, our method obtains the highest F-measure value and smallest MAE (mean absolute error) score over the complex-background images and is only slightly lower than the DRFI (discriminative regional feature integration) and ASNet (attentive saliency network) method in terms of AUC (area under ROC curve) value. The AUC, F-measure, and MAE scores of our method are 0.910 2, 0.700 2, and 0.045 8, respectively. Second, our method outperforms six traditional methods in the SED2 dataset. Furthermore, on the small-object images from public datasets, the performance of our algorithm is second only to the ASNet method and has the highest F-measure value. On the basis of the visual comparison of different saliency maps, 1) superpixel-based methods tend to ignore the small objects, even though these objects have the same feature as the large objects; 2) methods based on color contrast can detect large and small objects in the image, but they also highlight the background; 3) our method can efficiently suppress the background and detect almost all objects, and the saliency map is the closest to the ground truth map.ConclusionIn this study, we propose a full-resolution salient object detection method that combines Boolean map and grayscale rarity. This method is robust to the size variation of salient objects and the diverse resolution of input images and can efficiently cope with the detection of small targets in complex backgrounds. Experimental results demonstrate that our method has the best comprehensive performance on our dataset and outperforms six traditional saliency models on the SED2 dataset. In addition, our method is computationally efficient on images with various sizes.关键词:salient object detection;Boolean map;grayscale rarity;small target;complex background44|15|3更新时间:2024-05-07 -

摘要:ObjectiveIn recent years, the frequent occurrence of large-scale mine accidents has led to many casualties and property losses. The mines' production and transportation should be promoted in the way of intelligence. When moving under the mine, the controller of a locomotive needs track information to detect the presence of pedestrians or obstacles in front of it. Then, the locomotive slows down or stops as soon as an emergency condition appears. Track detection, which uses image processing technology to identify the track area in a video or image and displays the specific position of the track, is a key technology for computer vision to achieve automatic driving downhole. Track detection algorithms based on traditional image processing can be classified into two categories. The first is a feature-based approach, which uses the difference between the edge and surrounding environment to extract the track region and obtains the specific track location in the image. However, this method relies heavily on the underlying features of the image and is easily interfered by the surrounding environment, which brings great challenges to the subsequent work and affects the final detection effect of the track. The second is a model-based strategy, which converts the track detection into a problem of solving the track model parameters and achieves the fitting of the track based on its shape in the local range. The track model often cannot adapt to multiple scenarios, and the approach lacks robustness and flexibility. Track detection results based on the convolutional neural network algorithm lack a detailed, unique characterization of the object and rely heavily on visual post-processing techniques. Therefore, we propose conditional generative adversarial net, a track detection algorithm combining multiscale information.MethodFirst, the multigranularity structure is used to decompose it into global and local parts in the generator network. The global part is responsible for low-resolution image generation, and the local part will combine with the global part to generate high-resolution images. Second, the multiscale shared convolution structure is adopted in the discriminator network. The primary features of the real and synthesized samples are extracted by sharing the convolution layer, the corresponding feature map is obtained, and different samples are sent to the multiscale discriminator to supervise the training of the generator further. Finally, the Monte Carlo search technique is introduced to search the intermediate state of the generator, and the result is sent to the discriminator for comparison.ResultThe proposed algorithm achieves an average pixel accuracy of 82.43% and a mean intersection over-union of 0.621 8. Moreover, the accuracy of detecting the track can reach 95.01%. For many different underground scenes, the track test results show good performance and superiority compared with the existing semantic segmentation algorithms.ConclusionThe proposed algorithm can be effectively applied to the complex underground environment and resolves the dilemma of algorithms existing in traditional image processing and convolutional neural network, thus effectively serving underground automatic driving. The algorithm has the following virtues. First, it generates high-resolution images by generative adversarial nets and addresses unstable training in generating edge features of high-resolution images. Second, the multitask learning mechanism is further conducive to discriminator identification, thereby effectively monitoring the results generated by the generator. Finally, the Monte Carlo search strategy is used to search the intermediate state of the generator, which is then feed it into the discriminator, thereby strengthening the constraints of the generator and enhancing the quality of the generated image. Experimental results show that our algorithm can achieve satisfactory results. In the future, we will focus on overcoming the issue of track line prediction under occlusion, expanding the datasets, and strengthening the speed, robustness, and practicality of the algorithm.关键词:track detection;conditional generative adversarial nets(CGAN);multi-scale information;Monte Carlo search;automatic driving downhole19|18|2更新时间:2024-05-07

摘要:ObjectiveIn recent years, the frequent occurrence of large-scale mine accidents has led to many casualties and property losses. The mines' production and transportation should be promoted in the way of intelligence. When moving under the mine, the controller of a locomotive needs track information to detect the presence of pedestrians or obstacles in front of it. Then, the locomotive slows down or stops as soon as an emergency condition appears. Track detection, which uses image processing technology to identify the track area in a video or image and displays the specific position of the track, is a key technology for computer vision to achieve automatic driving downhole. Track detection algorithms based on traditional image processing can be classified into two categories. The first is a feature-based approach, which uses the difference between the edge and surrounding environment to extract the track region and obtains the specific track location in the image. However, this method relies heavily on the underlying features of the image and is easily interfered by the surrounding environment, which brings great challenges to the subsequent work and affects the final detection effect of the track. The second is a model-based strategy, which converts the track detection into a problem of solving the track model parameters and achieves the fitting of the track based on its shape in the local range. The track model often cannot adapt to multiple scenarios, and the approach lacks robustness and flexibility. Track detection results based on the convolutional neural network algorithm lack a detailed, unique characterization of the object and rely heavily on visual post-processing techniques. Therefore, we propose conditional generative adversarial net, a track detection algorithm combining multiscale information.MethodFirst, the multigranularity structure is used to decompose it into global and local parts in the generator network. The global part is responsible for low-resolution image generation, and the local part will combine with the global part to generate high-resolution images. Second, the multiscale shared convolution structure is adopted in the discriminator network. The primary features of the real and synthesized samples are extracted by sharing the convolution layer, the corresponding feature map is obtained, and different samples are sent to the multiscale discriminator to supervise the training of the generator further. Finally, the Monte Carlo search technique is introduced to search the intermediate state of the generator, and the result is sent to the discriminator for comparison.ResultThe proposed algorithm achieves an average pixel accuracy of 82.43% and a mean intersection over-union of 0.621 8. Moreover, the accuracy of detecting the track can reach 95.01%. For many different underground scenes, the track test results show good performance and superiority compared with the existing semantic segmentation algorithms.ConclusionThe proposed algorithm can be effectively applied to the complex underground environment and resolves the dilemma of algorithms existing in traditional image processing and convolutional neural network, thus effectively serving underground automatic driving. The algorithm has the following virtues. First, it generates high-resolution images by generative adversarial nets and addresses unstable training in generating edge features of high-resolution images. Second, the multitask learning mechanism is further conducive to discriminator identification, thereby effectively monitoring the results generated by the generator. Finally, the Monte Carlo search strategy is used to search the intermediate state of the generator, which is then feed it into the discriminator, thereby strengthening the constraints of the generator and enhancing the quality of the generated image. Experimental results show that our algorithm can achieve satisfactory results. In the future, we will focus on overcoming the issue of track line prediction under occlusion, expanding the datasets, and strengthening the speed, robustness, and practicality of the algorithm.关键词:track detection;conditional generative adversarial nets(CGAN);multi-scale information;Monte Carlo search;automatic driving downhole19|18|2更新时间:2024-05-07 -

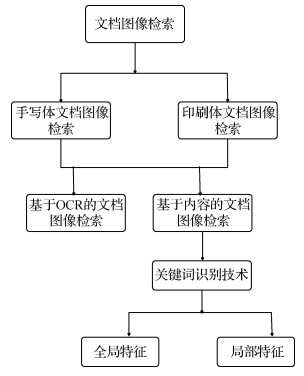

摘要:ObjectiveIn the 21st century, the rapid development of Internet information provides great convenience for people's lifestyles, but people also must face information redundancy when they go online because most information is now in text. The existence of forms emphasizes the importance of accurately and efficiently obtaining the information that users need. Moreover, with the acceleration of informationization, the number of electronic documents has risen sharply, making the efficient and fast retrieval of document images further urgent. In document image retrieval, traditional optical character recognition (OCR) technology has difficulty in achieving effective retrieval due to the quality of document images and fonts. As an alternative to OCR technology, word recognition technology does not require cumbersome OCR recognition and can directly search for keywords to achieve good results. In keyword extraction, local feature extraction has a more detailed and accurate description than global feature extraction. In terms of corner detection, this paper focuses on the serious clustering and slow computing speed of Harris algorithm.MethodIn the framework of word-spotting technology, an improved Harris image matching algorithm is proposed, which is used for document image retrieval for the first time. First, the original Harris algorithm uses the Gaussian function to smooth the window in the feature point extraction process of the image. When calculating the corner response value R, the differential operator is used as the directional derivative to calculate the number of multiplication operations, resulting in many computational algorithms, slow operation, and other issues. Given the deficiencies of the original Harris algorithm, FAST algorithm is used in the detection of corner points. 1) The gray values of the center pixel and surrounding 16 pixels are compared using the formula in the radius 3 field. 2) To improve the detection speed, the 0th and 8th pixel points on the circumference are first detected, and the two points on the other diameters are sequentially detected. 3) A difference between the gray values of 12 consecutive points and the p-point exceeding the threshold indicates a corner point. 4) After obtaining the primary corner, the Harris algorithm is used to remove the pseudo corner. Second, the original Harris algorithm sorts and compares the local maximum of the corner response function, establishes the response and coordinate matrices, records the local maximum and response coordinates, and compares the global maximum. At this point, all corner points have been recorded, but a case wherein multiple corner points coexist in the domain of a corner point, namely, "clustering" phenomenon, is likely. To address the serious clustering problem of the Harris algorithm, a nonmaximum value suppression method is adopted, which essentially searches for the local maximum and suppresses nonmaximum elements. When detecting the diagonal points, the local maximum is sorted from large to small, the suppression radius is set, a new response function matrix is established, and the corner points are extracted by continuously reducing the radius, thereby effectively avoiding Harris corner clustering. 1) The value of the corner response function of all pixels in the graph is calculated, the local maximum is searched for, and the pixel of the local maximum is recorded. 2) The local maximum ordering matrix and corresponding coordinate matrix are established, and the local maxima are sorted from large to small. 3) The suppression radius is set to the local maximum, a new matrix of response functions is established, and the corner points are extracted by continuously reducing the radius. 4) Whether the local maximum value is the maximum value within the suppression radius, that is, the desired corner point, is judged; if the condition is satisfied, then the local maximum value is added to the response function matrix. 5) The radius reduction is continued to extract the corner points. If the number of corner points is preset, then the process ends. Otherwise, step 4) is repeated.ResultExperimental results show that the accuracy rate is 98% and the recall rate is 87.5% without noise. Good experimental results are obtained under the condition of constant mean and continuous improvement of variance of Gaussian noise. Compared with the SIFT algorithm, the time is considerably improved, and the accuracy is increased.ConclusionStarting from the document image of the printed matter, FAST+Harris is used to search for keywords under the framework of keyword recognition technology. On the one hand, this method saves retrieval time and improves the real-time performance of the algorithm. On the other hand, it improves the aggregation of Harris. The cluster phenomenon improves the accuracy of corner detection. Compared with the SIFT algorithm, time is greatly improved, and good experimental results are achieved under the influence of different degrees of noise.关键词:Fast+Harris;feature extraction;brute force;corner detection;word spotting;print document image21|23|1更新时间:2024-05-07

摘要:ObjectiveIn the 21st century, the rapid development of Internet information provides great convenience for people's lifestyles, but people also must face information redundancy when they go online because most information is now in text. The existence of forms emphasizes the importance of accurately and efficiently obtaining the information that users need. Moreover, with the acceleration of informationization, the number of electronic documents has risen sharply, making the efficient and fast retrieval of document images further urgent. In document image retrieval, traditional optical character recognition (OCR) technology has difficulty in achieving effective retrieval due to the quality of document images and fonts. As an alternative to OCR technology, word recognition technology does not require cumbersome OCR recognition and can directly search for keywords to achieve good results. In keyword extraction, local feature extraction has a more detailed and accurate description than global feature extraction. In terms of corner detection, this paper focuses on the serious clustering and slow computing speed of Harris algorithm.MethodIn the framework of word-spotting technology, an improved Harris image matching algorithm is proposed, which is used for document image retrieval for the first time. First, the original Harris algorithm uses the Gaussian function to smooth the window in the feature point extraction process of the image. When calculating the corner response value R, the differential operator is used as the directional derivative to calculate the number of multiplication operations, resulting in many computational algorithms, slow operation, and other issues. Given the deficiencies of the original Harris algorithm, FAST algorithm is used in the detection of corner points. 1) The gray values of the center pixel and surrounding 16 pixels are compared using the formula in the radius 3 field. 2) To improve the detection speed, the 0th and 8th pixel points on the circumference are first detected, and the two points on the other diameters are sequentially detected. 3) A difference between the gray values of 12 consecutive points and the p-point exceeding the threshold indicates a corner point. 4) After obtaining the primary corner, the Harris algorithm is used to remove the pseudo corner. Second, the original Harris algorithm sorts and compares the local maximum of the corner response function, establishes the response and coordinate matrices, records the local maximum and response coordinates, and compares the global maximum. At this point, all corner points have been recorded, but a case wherein multiple corner points coexist in the domain of a corner point, namely, "clustering" phenomenon, is likely. To address the serious clustering problem of the Harris algorithm, a nonmaximum value suppression method is adopted, which essentially searches for the local maximum and suppresses nonmaximum elements. When detecting the diagonal points, the local maximum is sorted from large to small, the suppression radius is set, a new response function matrix is established, and the corner points are extracted by continuously reducing the radius, thereby effectively avoiding Harris corner clustering. 1) The value of the corner response function of all pixels in the graph is calculated, the local maximum is searched for, and the pixel of the local maximum is recorded. 2) The local maximum ordering matrix and corresponding coordinate matrix are established, and the local maxima are sorted from large to small. 3) The suppression radius is set to the local maximum, a new matrix of response functions is established, and the corner points are extracted by continuously reducing the radius. 4) Whether the local maximum value is the maximum value within the suppression radius, that is, the desired corner point, is judged; if the condition is satisfied, then the local maximum value is added to the response function matrix. 5) The radius reduction is continued to extract the corner points. If the number of corner points is preset, then the process ends. Otherwise, step 4) is repeated.ResultExperimental results show that the accuracy rate is 98% and the recall rate is 87.5% without noise. Good experimental results are obtained under the condition of constant mean and continuous improvement of variance of Gaussian noise. Compared with the SIFT algorithm, the time is considerably improved, and the accuracy is increased.ConclusionStarting from the document image of the printed matter, FAST+Harris is used to search for keywords under the framework of keyword recognition technology. On the one hand, this method saves retrieval time and improves the real-time performance of the algorithm. On the other hand, it improves the aggregation of Harris. The cluster phenomenon improves the accuracy of corner detection. Compared with the SIFT algorithm, time is greatly improved, and good experimental results are achieved under the influence of different degrees of noise.关键词:Fast+Harris;feature extraction;brute force;corner detection;word spotting;print document image21|23|1更新时间:2024-05-07 -

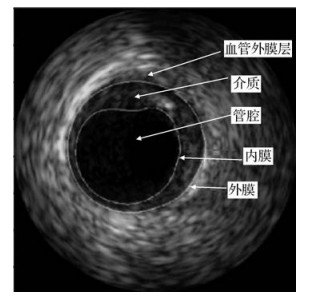

摘要:ObjectiveImage segmentation is based on the grayscale information of the image, and the homogenous regions with different properties of the image are divided using different methods without overlapping each other. When image segmentation is performed to obtain the region of interest, the image will inevitably have a certain degree of noise due to various factors, the noise will cause the image edge to be weakened, the false edge will be generated during segmentation, the segmentation curve will fall into the local minimum, and the evolution will stop. The situation, in turn, affects the accuracy of the edge recognition of the target to be segmented during image segmentation, and the segmentation result encounters difficulty in achieving the desired effect. The level set method based on active set is widely used in image segmentation, but it is still affected by noise. Thus, a new method is proposed to solve the noise problem.MethodSince given that the anisotropic diffusion model is applied to image segmentation, including denoising and maintaining the edge of the target to be segmented, a new model is proposed to fuse the anisotropic diffusion divergence field information into the DRLSE model to improve the efficiency and accuracy of the existing segmentation algorithm of the noise image based on the distance regularization level set (DRLSE) evolution model.The model can overcome the problems of the distance regularized level set model, such as slow convergence speed, easy to fall into the false boundary and leak from the weak edge. The improved model can accelerate the initial contour evolution to the edge of the target to be segmented when segmenting the noise image. The main improvement is to change the constant coefficient α of the control area term in the DRLSE evolution model to the variable weight coefficient α(

摘要:ObjectiveImage segmentation is based on the grayscale information of the image, and the homogenous regions with different properties of the image are divided using different methods without overlapping each other. When image segmentation is performed to obtain the region of interest, the image will inevitably have a certain degree of noise due to various factors, the noise will cause the image edge to be weakened, the false edge will be generated during segmentation, the segmentation curve will fall into the local minimum, and the evolution will stop. The situation, in turn, affects the accuracy of the edge recognition of the target to be segmented during image segmentation, and the segmentation result encounters difficulty in achieving the desired effect. The level set method based on active set is widely used in image segmentation, but it is still affected by noise. Thus, a new method is proposed to solve the noise problem.MethodSince given that the anisotropic diffusion model is applied to image segmentation, including denoising and maintaining the edge of the target to be segmented, a new model is proposed to fuse the anisotropic diffusion divergence field information into the DRLSE model to improve the efficiency and accuracy of the existing segmentation algorithm of the noise image based on the distance regularization level set (DRLSE) evolution model.The model can overcome the problems of the distance regularized level set model, such as slow convergence speed, easy to fall into the false boundary and leak from the weak edge. The improved model can accelerate the initial contour evolution to the edge of the target to be segmented when segmenting the noise image. The main improvement is to change the constant coefficient α of the control area term in the DRLSE evolution model to the variable weight coefficient α($I$ 关键词:image segmentation;noise;edge recognition;level set;anisotropic diffusion40|53|0更新时间:2024-05-07 -