最新刊期

卷 25 , 期 12 , 2020

-

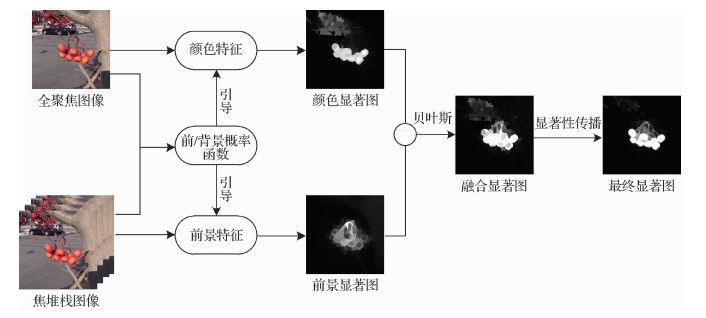

摘要:Saliency detection is an important task in the computer vision community, especially in visual tracking, image compression, and object recognition tasks. However, the extant saliency detection methods based on RGB or RGB depth (RGB-D) often suffer from problems related to complex backgrounds, illumination, occlusion, and other factors, thereby leading to their inferior detection performance. In this case, a solution for improving the robustness of saliency detection results is warranted. In recent years, commercial and industrial light field cameras based on micro-lens arrays inserted between the main lens and the photosensor have introduced a new method for solving the saliency detection problem. The light field not only records spatial information but also the directions of all incoming light rays. The spatial and angular information inherent in a light field implicitly contains the geometry and reflection characteristics of the observed scene, which can provide reliable prior information for saliency detection, such as background clues and depth information. For example, the digital refocus technique can divide the light field into focal slices that focus at different depths. The background clues can be obtained from the focused areas. The light field contains effective saliency object occlusion information. Depth information also be obtained from the light field in various ways. Therefore, using light fields offers many advantages in dealing with problems related to saliency detection. Although saliency detection based on light fields has received much attention in recent years, a deep understanding of this method is yet to be achieved. In this paper, we review the research progress on light field saliency detection to build a foundation for future studies on this topic. First, we briefly discuss light field imaging theory, light field cameras, and the existing light field datasets used for saliency detection and then point out the differences among various datasets. Second, we systematically review the extant algorithms and the latest progress in light filed saliency detection from the aspects of hand-crafted features, sparse coding, and deep learning. Saliency detection algorithms based on light field hand-crafted features are generally based on the idea of contrast. These algorithms detect salient regions by calculating the feature difference between each pixel and super pixel as well as between other pixels and other super pixels. Saliency detection based on sparse coding and deep learning follow the same idea of feature learning, that is, they use image feature coding or the outstanding feature representation abilities of convolution network to determine the salient regions. By analyzing the experimental data on four publicly available light field saliency detection datasets, we summarize the advantages and disadvantages of the existing light field saliency detection methods, summarize the recent progress in light-field-based saliency detection and point out the limitations in this field. Only a few light field datasets are presently available for saliency detection, and these datasets are all generated by light field cameras based on micro-lens array, which has a narrow baseline. Therefore, the effective utilization of various information present in a light field remains a challenge. While saliency detection algorithms based on light fields have been proposed in previous studies, saliency detection based on light fields warrant further study due to the complexity of real scenes.关键词:saliency detection;light field cameras;light field features;sparse coding;deep learning74|83|4更新时间:2024-05-07

摘要:Saliency detection is an important task in the computer vision community, especially in visual tracking, image compression, and object recognition tasks. However, the extant saliency detection methods based on RGB or RGB depth (RGB-D) often suffer from problems related to complex backgrounds, illumination, occlusion, and other factors, thereby leading to their inferior detection performance. In this case, a solution for improving the robustness of saliency detection results is warranted. In recent years, commercial and industrial light field cameras based on micro-lens arrays inserted between the main lens and the photosensor have introduced a new method for solving the saliency detection problem. The light field not only records spatial information but also the directions of all incoming light rays. The spatial and angular information inherent in a light field implicitly contains the geometry and reflection characteristics of the observed scene, which can provide reliable prior information for saliency detection, such as background clues and depth information. For example, the digital refocus technique can divide the light field into focal slices that focus at different depths. The background clues can be obtained from the focused areas. The light field contains effective saliency object occlusion information. Depth information also be obtained from the light field in various ways. Therefore, using light fields offers many advantages in dealing with problems related to saliency detection. Although saliency detection based on light fields has received much attention in recent years, a deep understanding of this method is yet to be achieved. In this paper, we review the research progress on light field saliency detection to build a foundation for future studies on this topic. First, we briefly discuss light field imaging theory, light field cameras, and the existing light field datasets used for saliency detection and then point out the differences among various datasets. Second, we systematically review the extant algorithms and the latest progress in light filed saliency detection from the aspects of hand-crafted features, sparse coding, and deep learning. Saliency detection algorithms based on light field hand-crafted features are generally based on the idea of contrast. These algorithms detect salient regions by calculating the feature difference between each pixel and super pixel as well as between other pixels and other super pixels. Saliency detection based on sparse coding and deep learning follow the same idea of feature learning, that is, they use image feature coding or the outstanding feature representation abilities of convolution network to determine the salient regions. By analyzing the experimental data on four publicly available light field saliency detection datasets, we summarize the advantages and disadvantages of the existing light field saliency detection methods, summarize the recent progress in light-field-based saliency detection and point out the limitations in this field. Only a few light field datasets are presently available for saliency detection, and these datasets are all generated by light field cameras based on micro-lens array, which has a narrow baseline. Therefore, the effective utilization of various information present in a light field remains a challenge. While saliency detection algorithms based on light fields have been proposed in previous studies, saliency detection based on light fields warrant further study due to the complexity of real scenes.关键词:saliency detection;light field cameras;light field features;sparse coding;deep learning74|83|4更新时间:2024-05-07

Review

-

摘要:ObjectiveRain lines adversely affect the visual quality of images collected from outdoors. Severe weather conditions, such as rain, fog, and haze, can affect the quality of these images and make them unusable. These degraded images may also drastically affect the performance of man's vision system. Given that rain is a common meteorological phenomenon, an algorithm that can remove rain from single image is of practical significance. Given that video-based de-raining methods obtain pixel information of the same location at different periods, removing rain from an individual image is more challenging because of less available information. Traditional de-raining methods mainly focus on rain map modeling and use mathematical optimization to detect and remove rain streaks, but the performance of such approach requires further improvement.MethodTo address the above problems, this paper establishes a convolution neural network for single image rain removal that is trained on a synthetic dataset. The contributions of this work are as follows. 1) To expand the neural receptive field of a convolution neural network that learns abstract feature representation of rain streaks and the ground truth, this work establishes a selective kernel network based on multi-scale convolution with different kernel for feature learning. To accomplish useful information fusion and selection, an external non-linear weight learning mechanism is developed to redistribute the weight for the corresponding channel's feature information from different convolution kernels. This mechanism enables the network to select the feature information of different receptive fields adaptively and enhance its expression ability and rain removal capability. 2) The existing rain map model shows some limitations at the training stage. Completing this model by adding a learnable refine factor that modifies each pixel in a rain streak image, can enhance the accuracy of the result and prevent background misjudgment. The range of the refining factor is also limited to reduce the mapping range of the network training process. 3) At the training stage the existing single image rain removal networks need to learn various types of image content, including rain streaks removal and background restoration, which will undoubtedly increase their burden. By using the novel idea of residual learning the proposed network can directly learn the rain streak map by using the input rain map. In this way, the mapping interval of the network learning process is reduced, the background of the original graph can be preserved, and loss of details can be prevented. The validity of the above arguments is tested by designing a comparison network with different modules. Specifically, based on general convolution, different modules are combined step by step, including the SK net, residual learning mechanism, and refine factor learning net. Single image rain removal network based on selective kernel convolution using residual refine factor (SKRF) is eventually designed. The residual learning mechanism is used to reduce the mapping interval, and the refined factor is used to enhance the rain streak map to improve the rain removal performance.ResultAn SKRF network, including the three subnets of SK net, refine factor net, and residual net, is designed in a rain removal experiment and tested on the open Rain12 test set. This network achieves a higher accuracy, peak signal to noise ratio(PSNR) (34.62), and structural similarity(SSIM) (0.970 6) compared with the existing methods. The SKRF network shows obvious advantages in removing rain from single image.ConclusionWe construct a convolution neural network based on SKRF to remove rain streaks from single image. A selective kernel convolution network is established to improve the expression ability of the proposed network via the adaptive adjustment mechanism of the size of the receptive field by the internal neurons. A rain map with different characteristics can be well learned, and the effect of rain removal can be improved. The residual learning mechanism can reduce the mapping interval of the network learning process and retain more details of the original image. In the modified rain map model, an additional refine factor is provided for the rain streak map, which can further reduce the mapping interval and reduce background misjudgment. This network not only removes the majority of the visible rain streaks but also retains the ground truth. In our feature work, we plan to extend this network to a wider range of image restoration tasks.关键词:single image rain removal;deep learning;selective kernel network(SK Net);refine factor (RF);residual learning40|23|4更新时间:2024-05-07

摘要:ObjectiveRain lines adversely affect the visual quality of images collected from outdoors. Severe weather conditions, such as rain, fog, and haze, can affect the quality of these images and make them unusable. These degraded images may also drastically affect the performance of man's vision system. Given that rain is a common meteorological phenomenon, an algorithm that can remove rain from single image is of practical significance. Given that video-based de-raining methods obtain pixel information of the same location at different periods, removing rain from an individual image is more challenging because of less available information. Traditional de-raining methods mainly focus on rain map modeling and use mathematical optimization to detect and remove rain streaks, but the performance of such approach requires further improvement.MethodTo address the above problems, this paper establishes a convolution neural network for single image rain removal that is trained on a synthetic dataset. The contributions of this work are as follows. 1) To expand the neural receptive field of a convolution neural network that learns abstract feature representation of rain streaks and the ground truth, this work establishes a selective kernel network based on multi-scale convolution with different kernel for feature learning. To accomplish useful information fusion and selection, an external non-linear weight learning mechanism is developed to redistribute the weight for the corresponding channel's feature information from different convolution kernels. This mechanism enables the network to select the feature information of different receptive fields adaptively and enhance its expression ability and rain removal capability. 2) The existing rain map model shows some limitations at the training stage. Completing this model by adding a learnable refine factor that modifies each pixel in a rain streak image, can enhance the accuracy of the result and prevent background misjudgment. The range of the refining factor is also limited to reduce the mapping range of the network training process. 3) At the training stage the existing single image rain removal networks need to learn various types of image content, including rain streaks removal and background restoration, which will undoubtedly increase their burden. By using the novel idea of residual learning the proposed network can directly learn the rain streak map by using the input rain map. In this way, the mapping interval of the network learning process is reduced, the background of the original graph can be preserved, and loss of details can be prevented. The validity of the above arguments is tested by designing a comparison network with different modules. Specifically, based on general convolution, different modules are combined step by step, including the SK net, residual learning mechanism, and refine factor learning net. Single image rain removal network based on selective kernel convolution using residual refine factor (SKRF) is eventually designed. The residual learning mechanism is used to reduce the mapping interval, and the refined factor is used to enhance the rain streak map to improve the rain removal performance.ResultAn SKRF network, including the three subnets of SK net, refine factor net, and residual net, is designed in a rain removal experiment and tested on the open Rain12 test set. This network achieves a higher accuracy, peak signal to noise ratio(PSNR) (34.62), and structural similarity(SSIM) (0.970 6) compared with the existing methods. The SKRF network shows obvious advantages in removing rain from single image.ConclusionWe construct a convolution neural network based on SKRF to remove rain streaks from single image. A selective kernel convolution network is established to improve the expression ability of the proposed network via the adaptive adjustment mechanism of the size of the receptive field by the internal neurons. A rain map with different characteristics can be well learned, and the effect of rain removal can be improved. The residual learning mechanism can reduce the mapping interval of the network learning process and retain more details of the original image. In the modified rain map model, an additional refine factor is provided for the rain streak map, which can further reduce the mapping interval and reduce background misjudgment. This network not only removes the majority of the visible rain streaks but also retains the ground truth. In our feature work, we plan to extend this network to a wider range of image restoration tasks.关键词:single image rain removal;deep learning;selective kernel network(SK Net);refine factor (RF);residual learning40|23|4更新时间:2024-05-07 -

摘要:ObjectiveRandom-valued impulse noise (RVIN) is a common cause of image degradation that is frequently observed in images captured by digital camera sensors. In addition to degrading image quality, this type of noise also leads to pixel failure and inaccurate storage location or transmission. The presence of impulse noise may also introduce difficulties in feature extraction, target tracking, image classification, and subsequent image processing and analysis works. For RVIN, the noise value of a corrupted pixels uniformly distributed between 0 and 255. In this case, detecting the RVIN is very difficult. The available local image statistics for RVIN detection, which are used to determine whether the center pixel of an image patch is corrupted by RVIN noise or not, have are latively weak description ability, thereby restricting their accuracy to some extent and affecting the restoration performance of subsequent switching RVIN denoising modules.MethodNine local image statistics, including eight neighbor rank-ordered logarithmic difference (ROLD) statistics and one min-imum orientation logarithmic difference (MOLD) statistics, were used to construct a highly sensitive RVIN noise-aware feature vector that can describe the RVIN likeness of the center pixel of a given patch. Based on this vector, RVIN noise-aware feature vectors extracted from numerous noisy patches, their corresponding noise labels were formed as a set of training pairs for a multi-layer perception (MLP) network, and the MLP-based RVIN detector was trained.ResultComparative experiments were performed to test the estimation accuracy and denoising effect of the proposed RVIN detector. The proposed detector was compared with several state-of-the-art image denoising methods, including progressive switching median filter(PSMF), ROLD-edge preserving regularization(ROLD-EPR), adaptive switching median(ASWM), robust outlyingness ratio nonlocal means(ROR-NLM), MLP-edge preserving regularization(MLP-EPR), convolutional neural network based(CNN-based), blind convolutional neural network(BCNN), and MLP neural network classifier(MLPNNC), to demonstrate its estimation accuracy. Two image sets were used in the experiments. One image set included the "Lena", "House", "Peppers", "Couple", "Hill", "Barbara", "Boat", "Man", "Cameraman", and "Monarch" images, whereas the other set contained 50 textured images that were randomly selected from the BSD database(unlike the noise detection model training set). For a fair comparison, all competing algorithms were implemented in the MATLAB 2017b environment on the same hardware platform. To verify the estimation accuracy of the proposed RVIN detector, we applied different RVIN noise ratios to images taken from commonly used image sets, applied the proposed detector to count the instances of error, false, and missed detections for a noisy image, and compared its performance with that of existing classical RVIN noise reduction algorithms. Usually, a higher rate of error detection indicates that more noise has been left undetected in an image, and a false detection can reduce the noise of normal non-distorted pixels during the noise reduction stage, which can lead to blurry images. The total number of errors represents the number of missed and false detections, whereas a smaller number of these detections corresponds to a lower algorithm detection error rate and a better image quality after noise reduction. Experimental results show that the proposed algorithm has a relatively balanced number of missed and false detections and ranks second among all compared algorithms in this respect, thereby offering a solid foundation for the subsequent noise reduction module. In the second image set, we combined the proposed RVIN detector with the generic iteratively reweighted annihilating filter(GIRAF) algorithm to form a RVIN noise reduction algorithm. To verify the effectiveness of the proposed detector, we applied different ratios of RVIN noise (i.e., 10%, 20%, 30%, 40%, 50%, and 60%) to 50 textured images and recorded the average peak signal-to-noise ratio (PSNR) of these images under each noise ratio. Experimental results show that the images restored by the proposed-GIRAF algorithm achieve the optimal PSNR under each noise ratio and that this algorithm greatly outperforms the Xu, Chen-GIRAF, and MLPNNC-GIRAF algorithms. The proposed-GIRAF algorithm also outperforms the second-best algorithm by 0.47 dB to 1.96 dB in terms of the average PSNR of its 50 images, thereby suggesting that the actual detection results of the proposed noise detector are the most effective for the subsequent noise reduction module. Experimental results also show that the proposed RVIN detector outperforms most of the existing detectors in terms of detection accuracy. As such, a switching RVIN removal method with an improved denoising performance can be obtained by combining the proposed RVIN detector with any inpainting algorithm.ConclusionExtensive experiments show that the estimation accuracy of the proposed MLP-based noise detector is robust across a wide range of noise ratios. When combined with the GIRAF algorithm, this detector significantly outperforms the traditional RVIN denoising algorithm in terms of denoising effect.关键词:image denoising;random-valued impulse noise (RVIN);local spatial structure;eight neighbor rank-ordered logarithmic difference (EN-ROLD);minimum orientation logarithmic difference (MOLD);multi-layer perception (MLP);detection accuracy29|22|0更新时间:2024-05-07

摘要:ObjectiveRandom-valued impulse noise (RVIN) is a common cause of image degradation that is frequently observed in images captured by digital camera sensors. In addition to degrading image quality, this type of noise also leads to pixel failure and inaccurate storage location or transmission. The presence of impulse noise may also introduce difficulties in feature extraction, target tracking, image classification, and subsequent image processing and analysis works. For RVIN, the noise value of a corrupted pixels uniformly distributed between 0 and 255. In this case, detecting the RVIN is very difficult. The available local image statistics for RVIN detection, which are used to determine whether the center pixel of an image patch is corrupted by RVIN noise or not, have are latively weak description ability, thereby restricting their accuracy to some extent and affecting the restoration performance of subsequent switching RVIN denoising modules.MethodNine local image statistics, including eight neighbor rank-ordered logarithmic difference (ROLD) statistics and one min-imum orientation logarithmic difference (MOLD) statistics, were used to construct a highly sensitive RVIN noise-aware feature vector that can describe the RVIN likeness of the center pixel of a given patch. Based on this vector, RVIN noise-aware feature vectors extracted from numerous noisy patches, their corresponding noise labels were formed as a set of training pairs for a multi-layer perception (MLP) network, and the MLP-based RVIN detector was trained.ResultComparative experiments were performed to test the estimation accuracy and denoising effect of the proposed RVIN detector. The proposed detector was compared with several state-of-the-art image denoising methods, including progressive switching median filter(PSMF), ROLD-edge preserving regularization(ROLD-EPR), adaptive switching median(ASWM), robust outlyingness ratio nonlocal means(ROR-NLM), MLP-edge preserving regularization(MLP-EPR), convolutional neural network based(CNN-based), blind convolutional neural network(BCNN), and MLP neural network classifier(MLPNNC), to demonstrate its estimation accuracy. Two image sets were used in the experiments. One image set included the "Lena", "House", "Peppers", "Couple", "Hill", "Barbara", "Boat", "Man", "Cameraman", and "Monarch" images, whereas the other set contained 50 textured images that were randomly selected from the BSD database(unlike the noise detection model training set). For a fair comparison, all competing algorithms were implemented in the MATLAB 2017b environment on the same hardware platform. To verify the estimation accuracy of the proposed RVIN detector, we applied different RVIN noise ratios to images taken from commonly used image sets, applied the proposed detector to count the instances of error, false, and missed detections for a noisy image, and compared its performance with that of existing classical RVIN noise reduction algorithms. Usually, a higher rate of error detection indicates that more noise has been left undetected in an image, and a false detection can reduce the noise of normal non-distorted pixels during the noise reduction stage, which can lead to blurry images. The total number of errors represents the number of missed and false detections, whereas a smaller number of these detections corresponds to a lower algorithm detection error rate and a better image quality after noise reduction. Experimental results show that the proposed algorithm has a relatively balanced number of missed and false detections and ranks second among all compared algorithms in this respect, thereby offering a solid foundation for the subsequent noise reduction module. In the second image set, we combined the proposed RVIN detector with the generic iteratively reweighted annihilating filter(GIRAF) algorithm to form a RVIN noise reduction algorithm. To verify the effectiveness of the proposed detector, we applied different ratios of RVIN noise (i.e., 10%, 20%, 30%, 40%, 50%, and 60%) to 50 textured images and recorded the average peak signal-to-noise ratio (PSNR) of these images under each noise ratio. Experimental results show that the images restored by the proposed-GIRAF algorithm achieve the optimal PSNR under each noise ratio and that this algorithm greatly outperforms the Xu, Chen-GIRAF, and MLPNNC-GIRAF algorithms. The proposed-GIRAF algorithm also outperforms the second-best algorithm by 0.47 dB to 1.96 dB in terms of the average PSNR of its 50 images, thereby suggesting that the actual detection results of the proposed noise detector are the most effective for the subsequent noise reduction module. Experimental results also show that the proposed RVIN detector outperforms most of the existing detectors in terms of detection accuracy. As such, a switching RVIN removal method with an improved denoising performance can be obtained by combining the proposed RVIN detector with any inpainting algorithm.ConclusionExtensive experiments show that the estimation accuracy of the proposed MLP-based noise detector is robust across a wide range of noise ratios. When combined with the GIRAF algorithm, this detector significantly outperforms the traditional RVIN denoising algorithm in terms of denoising effect.关键词:image denoising;random-valued impulse noise (RVIN);local spatial structure;eight neighbor rank-ordered logarithmic difference (EN-ROLD);minimum orientation logarithmic difference (MOLD);multi-layer perception (MLP);detection accuracy29|22|0更新时间:2024-05-07 -

摘要:ObjectiveImage inpainting is a hot research topic in computer vision. In recent years, this task has been considered a conditional pattern generation problem in deep learning that has received much attention from researchers. Compared with traditional algorithms, deep-learning-based image inpainting methods can be used in more extensive scenarios with better inpainting effects. Nevertheless, these methods have limitations. For instance, their image inpainting results need to be improved in terms of semantic rationality, structural coherence, and detail accuracy when processing the close association among global and local attributed images, especially when dealing with images involving a large defect area. This paper proposes a novel image inpainting model based on the fully convolutional neural network and the idea of generative adversarial network to solve the above problems. This model optimizes the network structure, loss constraints, and training strategies to obtain improved image inpainting effects.MethodFirst, this paper proposes a novel image inpainting network as a generator to repair defective images by using effective methods in the field of image processing. A network framework based on a fully convolutional neural network is then built in the form of an encoder-decoder. For instance, we replace part of convolutional layers in the network decoding stage with dilated convolution. We also apply dilated convolution superposition with multiple dilation rates to obtain a larger input image area compared with ordinary convolution in small-size feature graphs and then effectively increase the receptive field of the convolution kernel without increasing the calculation amount to develop a better understanding of images. We also set long-skip connections in the corresponding stage of encoding-decoding. This connection strengthens the structural information by transmitting low-level features to the decoding stage. The setting enhances the correlation among deep features and reduces the difficulties in network training. Second, we introduce structural similarity (SSIM) as the reconstruction loss of image inpainting. This image quality evaluation index is built from the perspective of the human visual perception system and differs from the common mean square error (MSE) loss per pixel. This index comprehensively evaluates via an experiment the similarity between two images in their brightness, contrast, and structure. Structural similarity, as the reconstruction loss of an image, can effectively improve the visual effects of image inpainting results. We use the improved global and local context discriminator as a two-way discriminator to determine the authenticity of the inpainting results. The global context discriminator guarantees the consistency of attributes between the image inpainting area and the entire image, whereas the local context discriminator improves the detailed performance of the image inpainting area. Combined with adversarial loss, this paper proposes a joint loss to improve the performance of the model and reduce the difficulties in its training. By drawing lessons from the training mode of generative adversarial networks, we presents a novel method to alternately train image inpainting network and image discriminative network, which obtains an ideal result. In practical applications, we only use image inpainting network to repair defective images.ResultTo verify the effectiveness of the proposed image inpainting model, we compare the image inpainting effect of this model with that of mainstream image inpainting algorithms on the CelebA-HQ dataset by using subjective perception and objective indicators. To achieve the best inpainting effect in controlled experiments, we use official versions of codes and examples. The image inpainting result is taken from loading pre-training files or online demos. We place the specific defect mask onto 50 randomly selected images as test cases and then apply different image inpainting algorithms to repair and collect statistics for the comparison. The CelebA-HQ dataset is a cropped and super-resolution reconstructed version of the CelebA dataset, which contains 30 000 high-resolution face images. The human face represents a special image that not only contains specific features but also an infinite amount of details. Therefore, face images can fully test the expressiveness of the image inpainting method. Considering the algorithm consistent attribute of the global and local images in the controlled experiment, experiment results show that the image inpainting model demonstrates some improvements in its semantic rationality, structural coherence, and detail performance compared with other algorithms. Subjectively, this model has a natural edge transition and a very detailed image inpainting area. Objectively, this model has a peak signal-to-noise ratio(PSNR), and SSIM of 31.30 dB and 90.58% on average, respective, both of which exceed those of mainstream deep learning-based image inpainting algorithms. To verify its generality, we test the image inpainting model on the Places2 dataset.ConclusionThis paper proposes a novel image inpainting model that shows improvements in terms of network structure, cost, training strategy, and image inpainting results. This model also provides a better understanding of the high-level semantics of images. Given its highly accurate context and details, the proposed model obtains better image inpainting results from human visual perception. We will continue to improve the effect of image inpainting and explore the conditional image inpainting task in the future. Our plan is to improve and optimize this model in terms of network structure and loss constraint to reduce losses in an image during the feature extraction process under a controllable network training setup. We shall also try to make the defect mask do more work with channel domain attention mechanism to further improve the quality of image inpainting. We also plan to analyze the relationship between image boundary structure and feature reconstruction. We aim to improve the convergence speed of network training and the quality of image inpainting by using an accurate and effective loss function. Furthermore, we would use human-computer interaction or presupposed condition to affect the results of image inpainting, which explores more practical values of the model.关键词:image inpainting;fully convolutional neural network;dilated convolution;skip connection;adversarial loss94|146|4更新时间:2024-05-07

摘要:ObjectiveImage inpainting is a hot research topic in computer vision. In recent years, this task has been considered a conditional pattern generation problem in deep learning that has received much attention from researchers. Compared with traditional algorithms, deep-learning-based image inpainting methods can be used in more extensive scenarios with better inpainting effects. Nevertheless, these methods have limitations. For instance, their image inpainting results need to be improved in terms of semantic rationality, structural coherence, and detail accuracy when processing the close association among global and local attributed images, especially when dealing with images involving a large defect area. This paper proposes a novel image inpainting model based on the fully convolutional neural network and the idea of generative adversarial network to solve the above problems. This model optimizes the network structure, loss constraints, and training strategies to obtain improved image inpainting effects.MethodFirst, this paper proposes a novel image inpainting network as a generator to repair defective images by using effective methods in the field of image processing. A network framework based on a fully convolutional neural network is then built in the form of an encoder-decoder. For instance, we replace part of convolutional layers in the network decoding stage with dilated convolution. We also apply dilated convolution superposition with multiple dilation rates to obtain a larger input image area compared with ordinary convolution in small-size feature graphs and then effectively increase the receptive field of the convolution kernel without increasing the calculation amount to develop a better understanding of images. We also set long-skip connections in the corresponding stage of encoding-decoding. This connection strengthens the structural information by transmitting low-level features to the decoding stage. The setting enhances the correlation among deep features and reduces the difficulties in network training. Second, we introduce structural similarity (SSIM) as the reconstruction loss of image inpainting. This image quality evaluation index is built from the perspective of the human visual perception system and differs from the common mean square error (MSE) loss per pixel. This index comprehensively evaluates via an experiment the similarity between two images in their brightness, contrast, and structure. Structural similarity, as the reconstruction loss of an image, can effectively improve the visual effects of image inpainting results. We use the improved global and local context discriminator as a two-way discriminator to determine the authenticity of the inpainting results. The global context discriminator guarantees the consistency of attributes between the image inpainting area and the entire image, whereas the local context discriminator improves the detailed performance of the image inpainting area. Combined with adversarial loss, this paper proposes a joint loss to improve the performance of the model and reduce the difficulties in its training. By drawing lessons from the training mode of generative adversarial networks, we presents a novel method to alternately train image inpainting network and image discriminative network, which obtains an ideal result. In practical applications, we only use image inpainting network to repair defective images.ResultTo verify the effectiveness of the proposed image inpainting model, we compare the image inpainting effect of this model with that of mainstream image inpainting algorithms on the CelebA-HQ dataset by using subjective perception and objective indicators. To achieve the best inpainting effect in controlled experiments, we use official versions of codes and examples. The image inpainting result is taken from loading pre-training files or online demos. We place the specific defect mask onto 50 randomly selected images as test cases and then apply different image inpainting algorithms to repair and collect statistics for the comparison. The CelebA-HQ dataset is a cropped and super-resolution reconstructed version of the CelebA dataset, which contains 30 000 high-resolution face images. The human face represents a special image that not only contains specific features but also an infinite amount of details. Therefore, face images can fully test the expressiveness of the image inpainting method. Considering the algorithm consistent attribute of the global and local images in the controlled experiment, experiment results show that the image inpainting model demonstrates some improvements in its semantic rationality, structural coherence, and detail performance compared with other algorithms. Subjectively, this model has a natural edge transition and a very detailed image inpainting area. Objectively, this model has a peak signal-to-noise ratio(PSNR), and SSIM of 31.30 dB and 90.58% on average, respective, both of which exceed those of mainstream deep learning-based image inpainting algorithms. To verify its generality, we test the image inpainting model on the Places2 dataset.ConclusionThis paper proposes a novel image inpainting model that shows improvements in terms of network structure, cost, training strategy, and image inpainting results. This model also provides a better understanding of the high-level semantics of images. Given its highly accurate context and details, the proposed model obtains better image inpainting results from human visual perception. We will continue to improve the effect of image inpainting and explore the conditional image inpainting task in the future. Our plan is to improve and optimize this model in terms of network structure and loss constraint to reduce losses in an image during the feature extraction process under a controllable network training setup. We shall also try to make the defect mask do more work with channel domain attention mechanism to further improve the quality of image inpainting. We also plan to analyze the relationship between image boundary structure and feature reconstruction. We aim to improve the convergence speed of network training and the quality of image inpainting by using an accurate and effective loss function. Furthermore, we would use human-computer interaction or presupposed condition to affect the results of image inpainting, which explores more practical values of the model.关键词:image inpainting;fully convolutional neural network;dilated convolution;skip connection;adversarial loss94|146|4更新时间:2024-05-07 -

摘要:ObjectiveHuman action recognition in videos aims to identify action categories by analyzing human action-related information and utilizing spatial and temporal cues. Research on human action recognition are crucial in the development of intelligent security, pedestrian monitoring, and clinical nursing; hence, this topic has become increasingly popular among researchers. The key point of improving the accuracy of human action recognition lies on how to construct distinctive features to describe human action categories effectively. Existing human action recognition methods fall into three categories:extracting visual features using deep learning networks, manually constructing image visual descriptors, and combining manual construction with deep learning networks. The methods that use deep learning networks normally operate convolution and pooling on small neighbor regions, thereby ignoring the connection among regions. By contrast, manual construction methods often have strong pertinence and poor adaptability to specific human actions, and its application scenarios are limited. Therefore, some researchers combine the idea of handmade features with deep learning computation. However, the existing methods still have problems in the effective utilization of the spatial and temporal information of human action, and the accuracy of human action recognition still needs to be improved. Considering the above problems, we research on how to design and construct distinguishable human action features and propose a new human action recognition method in which the key semantic information in the spatial domain of human action is extracted using a deep learning network and then connected and analyzed in the time domain.MethodHuman action videos usually record more than 24 frames per second; however, human poses do not change at this speed. In the computation of human action characteristics in videos, changes between consecutive video frames are usually minimal, and most human action information contained in the video is similar or repeated. To avoid redundant computations, we calculate the key frames of videos in accordance with the amplitude variation of the image content of interframes. Frames with repetitive content or slight changes are eliminated to avoid redundant calculation in the subsequent semantic information analysis and extraction. The calculated key frames contain evident changes of human body and human-related background and thus reveal sufficient human action information in videos for recognition. Then, to analyze and describe the spatial information of human action effectively, we design and construct a deep learning network to analyze the semantic information of images and extract the key semantic regions that can express important semantic information. The constructed network is denoted as Net1, which is trained by transfer learning and can use continuous convolutional layers to mine the semantic information of images. The output data of Net1 provides image regions, which contain various kinds of foreground semantic information and region scores, which represent the probability of containing foreground information. In addition, a nonmaximal suppression algorithm is used to eliminate areas that have too much overlap. Afterward, the key semantic regions are classified into person and nonperson regions, and then the position and proportion of person regions are used to distinguish the main person and the secondary persons. Moreover, object regions that have no relationship with the main person are eliminated, and only foreground regions that reveal human action-related semantic information are reserved. Afterward, a Siamese network is constructed to calculate the correlation of key semantic regions among frames and concatenate key semantic regions in the temporal domain. The proposed Siamese network is denoted as Net2, which has two inputs and one output; Net2 can be used to mine deeply and measure the similarity between two input image regions, and the output values are used to express the similarity. The constructed Net2 can concatenate the key semantic regions into a semantic region chain to ensure the time consistency of semantic information, and express human action change information in time domain more effectively. Moreover, we tailor the feature map of Net1 using the interpolation and scaling method, in order to obtain feature submaps of uniform size. That is, each semantic region chain corresponds to a feature matrix chain. Given that the length of each feature matrix chain is different, the maximum fusion method is used to fuse the feature matrix chain and obtain a single fused matrix, which reveals one kind of video semantic information. We stack the fused matrix from all feature matrix chains together and then design and train a classifier, which consists of two fully connected layers and a support vector machine. The output of the classifier is the final human action recognition result for videos.ResultThe UCF(University of Central Florida)50 dataset, a publicly available challenging human action recognition dataset, is used to verify the performance of our proposed human action recognition method. In this dataset, the average human action recognition accuracy of the proposed method is 94.3%, which is higher than that of state-of-the-are methods, such as that based on optical flow motion expression (76.9%), that based on a two-stream convolutional neural network (88.0%), and that based on SURF(speeded up robust features) descriptors and Fisher encoding (91.7%). In addition, the proposed crucial algorithms of the semantic region chain computation and the key semantic region correlation calculation are verified through a control experiment. Results reveal that the two crucial algorithms effectively improve the accuracy of human action recognition.ConclusionThe proposed human action recognition method, which uses semantic region extraction and concatenation, can effectively improve the accuracy of human action recognition in videos.关键词:human-machine interaction;deep learning network;key semantic information of human action;human action recognition;video key frame33|26|1更新时间:2024-05-07

摘要:ObjectiveHuman action recognition in videos aims to identify action categories by analyzing human action-related information and utilizing spatial and temporal cues. Research on human action recognition are crucial in the development of intelligent security, pedestrian monitoring, and clinical nursing; hence, this topic has become increasingly popular among researchers. The key point of improving the accuracy of human action recognition lies on how to construct distinctive features to describe human action categories effectively. Existing human action recognition methods fall into three categories:extracting visual features using deep learning networks, manually constructing image visual descriptors, and combining manual construction with deep learning networks. The methods that use deep learning networks normally operate convolution and pooling on small neighbor regions, thereby ignoring the connection among regions. By contrast, manual construction methods often have strong pertinence and poor adaptability to specific human actions, and its application scenarios are limited. Therefore, some researchers combine the idea of handmade features with deep learning computation. However, the existing methods still have problems in the effective utilization of the spatial and temporal information of human action, and the accuracy of human action recognition still needs to be improved. Considering the above problems, we research on how to design and construct distinguishable human action features and propose a new human action recognition method in which the key semantic information in the spatial domain of human action is extracted using a deep learning network and then connected and analyzed in the time domain.MethodHuman action videos usually record more than 24 frames per second; however, human poses do not change at this speed. In the computation of human action characteristics in videos, changes between consecutive video frames are usually minimal, and most human action information contained in the video is similar or repeated. To avoid redundant computations, we calculate the key frames of videos in accordance with the amplitude variation of the image content of interframes. Frames with repetitive content or slight changes are eliminated to avoid redundant calculation in the subsequent semantic information analysis and extraction. The calculated key frames contain evident changes of human body and human-related background and thus reveal sufficient human action information in videos for recognition. Then, to analyze and describe the spatial information of human action effectively, we design and construct a deep learning network to analyze the semantic information of images and extract the key semantic regions that can express important semantic information. The constructed network is denoted as Net1, which is trained by transfer learning and can use continuous convolutional layers to mine the semantic information of images. The output data of Net1 provides image regions, which contain various kinds of foreground semantic information and region scores, which represent the probability of containing foreground information. In addition, a nonmaximal suppression algorithm is used to eliminate areas that have too much overlap. Afterward, the key semantic regions are classified into person and nonperson regions, and then the position and proportion of person regions are used to distinguish the main person and the secondary persons. Moreover, object regions that have no relationship with the main person are eliminated, and only foreground regions that reveal human action-related semantic information are reserved. Afterward, a Siamese network is constructed to calculate the correlation of key semantic regions among frames and concatenate key semantic regions in the temporal domain. The proposed Siamese network is denoted as Net2, which has two inputs and one output; Net2 can be used to mine deeply and measure the similarity between two input image regions, and the output values are used to express the similarity. The constructed Net2 can concatenate the key semantic regions into a semantic region chain to ensure the time consistency of semantic information, and express human action change information in time domain more effectively. Moreover, we tailor the feature map of Net1 using the interpolation and scaling method, in order to obtain feature submaps of uniform size. That is, each semantic region chain corresponds to a feature matrix chain. Given that the length of each feature matrix chain is different, the maximum fusion method is used to fuse the feature matrix chain and obtain a single fused matrix, which reveals one kind of video semantic information. We stack the fused matrix from all feature matrix chains together and then design and train a classifier, which consists of two fully connected layers and a support vector machine. The output of the classifier is the final human action recognition result for videos.ResultThe UCF(University of Central Florida)50 dataset, a publicly available challenging human action recognition dataset, is used to verify the performance of our proposed human action recognition method. In this dataset, the average human action recognition accuracy of the proposed method is 94.3%, which is higher than that of state-of-the-are methods, such as that based on optical flow motion expression (76.9%), that based on a two-stream convolutional neural network (88.0%), and that based on SURF(speeded up robust features) descriptors and Fisher encoding (91.7%). In addition, the proposed crucial algorithms of the semantic region chain computation and the key semantic region correlation calculation are verified through a control experiment. Results reveal that the two crucial algorithms effectively improve the accuracy of human action recognition.ConclusionThe proposed human action recognition method, which uses semantic region extraction and concatenation, can effectively improve the accuracy of human action recognition in videos.关键词:human-machine interaction;deep learning network;key semantic information of human action;human action recognition;video key frame33|26|1更新时间:2024-05-07 -

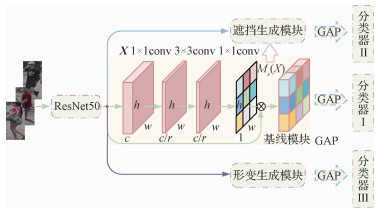

摘要:ObjectivePerson re-identification (re-ID) identifies a target person from a collection of images and shows great value in person retrieval and tracking from a collection of images captured by network cameras. Due to its important applications in public security and surveillance, person re-ID has attracted the attention of academic and industrial practitioner sat home and abroad. Although most existing re-ID methods have achieved significant progress, person re-ID continues to face two challenges resulting from the change of view in different surveillance cameras. First, pedestrians have a wide range of pose variations. Second, some people in public spaces are often occluded by various obstructions, such as bicycles or other people. These problems result in significant appearance changes and may introduce some distracting information. As a result, the same pedestrian captured by different cameras may look drastically different from each other and may prevent re-ID. One simple, effective method for addressing this problem is to obtain additional pedestrian samples. Using abundant practical scene images can help generate more post-variant and occluded samples, thereby helping re-ID systems achieve excellent robustness in complex situations. Some researchers have considered the representations of both the image and the key point-based pose as inputs to generate target poses and views via the generative adversarial networks (GAN) approach. However, GAN usually suffers from a convergence problem, and the generated target images usually have poor texture. In random erasing, a rectangle region is randomly selected from an image or feature map, and the original pixel value is discarded afterward to generate occluded examples. However, this approach only creates hard examples by spatially blocking the original image and (similar to the methods mentioned above) is very time consuming. To address these problems, we propose a person re-ID algorithm that generates hard deformation and occlusion samples.MethodWe use a deformable convolution module to simulate variations in pedestrian posture. The 2D offsets of regular grid sampling locations on the last feature map of the ResNet50 network are calculated by other branches that contain a multiple convolutional layer structure. These 2D offsets include the horizontal and vertical values X and Y. Afterward, these offsets are reapplied to the feature maps to produce new feature maps and deformable features via resampling. In this way, the network can change the posture of pedestrians in both horizontal and vertical directions and subsequently generate deformable features, thereby improving the ability of the network in dealing with deformed images. To address the occlusion problem, we generate spatial attention maps by using the spatial attention mechanism. We also apply other convolutional operations on the last feature map of the ResNet50 backbone to produce a spatial attention map that highlights the important spatial locations. Afterward, we mask out the most discriminative regions in the spatial attention map and retain only the low responses by using a fixed threshold value. The processed spatial attention map is then multiplied by the original features to produce the occluded features. In this way, we simulate the occluded pedestrian samples and further improve the ability of the network to adapt to other occluded samples. In the testing, we cascade two features with the original features as our final descriptors. We implement and train our network by using Pytorch and an NVIDIA TITAN GPU device, respectively. We set the batch size to 32 and rescaled all images to a fixed size of 256×128 pixels during the training and testing procedures. We also adopt a stochastic gradient descent (SGD) with a momentum of 0.9 and weight decay coefficient of 0.000 5 to update our network parameters. The initial learning rate is set to 0.04, which is further divided by 10 after 40 epochs (the training process has 60 epochs). We fix the reduction ratio and erasing threshold to 16 and 0.7 in all datasets, respectively. We adopt random flip as our data augmentation technique, and we use ResNet50 as our backbone model that contains parameters that are pre-trained on the ImageNet dataset. This model is also trained end-to-end. We adopt cumulative match characteristic (CMC) and mean average precision (mAP) to compare the re-ID performance of the proposed method with that of existing methods.ResultThe performance of our proposed method is evaluated on public large-scale datasets Market-1501, DukeMTMC-reID, and CUHK03. We use a uniform random seed to ensure the repeatability of the equity comparison and the results. In the Market-1501, DukeMTMC-reID, and CUHK03 (detected and labeled) datasets, the proposed method has obtained Rank-1 (represents the proportion of the queried people) values of 89.52%, 81.96%, 48.79%, and 50.29%, respectively, while its mAP values in these datasets reach 73.98%, 64.45%, 43.77%, and 45.57%, respectively. In the detected and labeled CUHK03 datasets, the proposed method shows 9.43%/8.74% and 8.72%/8.0% improvements in its Rank-1 and mAP values, respectively. These experimental results validate the competitive performance of this method for small and large datasets.ConclusionThe proposed person re-ID system based on the deformation and occlusion mechanisms can construct a highly recognizable model for extracting robust pedestrian features. This system maintains high recognition accuracy in complex application scenarios where occlusion and wide variations in pedestrian posture are observed. The proposed method can also effectively mitigate model overfitting in small-scale datasets (e.g., CUHK03 dataset), thereby improving its recognition rate.关键词:person re-identification;deformation;occlusion;spatial attention mechanism;robustness117|121|7更新时间:2024-05-07

摘要:ObjectivePerson re-identification (re-ID) identifies a target person from a collection of images and shows great value in person retrieval and tracking from a collection of images captured by network cameras. Due to its important applications in public security and surveillance, person re-ID has attracted the attention of academic and industrial practitioner sat home and abroad. Although most existing re-ID methods have achieved significant progress, person re-ID continues to face two challenges resulting from the change of view in different surveillance cameras. First, pedestrians have a wide range of pose variations. Second, some people in public spaces are often occluded by various obstructions, such as bicycles or other people. These problems result in significant appearance changes and may introduce some distracting information. As a result, the same pedestrian captured by different cameras may look drastically different from each other and may prevent re-ID. One simple, effective method for addressing this problem is to obtain additional pedestrian samples. Using abundant practical scene images can help generate more post-variant and occluded samples, thereby helping re-ID systems achieve excellent robustness in complex situations. Some researchers have considered the representations of both the image and the key point-based pose as inputs to generate target poses and views via the generative adversarial networks (GAN) approach. However, GAN usually suffers from a convergence problem, and the generated target images usually have poor texture. In random erasing, a rectangle region is randomly selected from an image or feature map, and the original pixel value is discarded afterward to generate occluded examples. However, this approach only creates hard examples by spatially blocking the original image and (similar to the methods mentioned above) is very time consuming. To address these problems, we propose a person re-ID algorithm that generates hard deformation and occlusion samples.MethodWe use a deformable convolution module to simulate variations in pedestrian posture. The 2D offsets of regular grid sampling locations on the last feature map of the ResNet50 network are calculated by other branches that contain a multiple convolutional layer structure. These 2D offsets include the horizontal and vertical values X and Y. Afterward, these offsets are reapplied to the feature maps to produce new feature maps and deformable features via resampling. In this way, the network can change the posture of pedestrians in both horizontal and vertical directions and subsequently generate deformable features, thereby improving the ability of the network in dealing with deformed images. To address the occlusion problem, we generate spatial attention maps by using the spatial attention mechanism. We also apply other convolutional operations on the last feature map of the ResNet50 backbone to produce a spatial attention map that highlights the important spatial locations. Afterward, we mask out the most discriminative regions in the spatial attention map and retain only the low responses by using a fixed threshold value. The processed spatial attention map is then multiplied by the original features to produce the occluded features. In this way, we simulate the occluded pedestrian samples and further improve the ability of the network to adapt to other occluded samples. In the testing, we cascade two features with the original features as our final descriptors. We implement and train our network by using Pytorch and an NVIDIA TITAN GPU device, respectively. We set the batch size to 32 and rescaled all images to a fixed size of 256×128 pixels during the training and testing procedures. We also adopt a stochastic gradient descent (SGD) with a momentum of 0.9 and weight decay coefficient of 0.000 5 to update our network parameters. The initial learning rate is set to 0.04, which is further divided by 10 after 40 epochs (the training process has 60 epochs). We fix the reduction ratio and erasing threshold to 16 and 0.7 in all datasets, respectively. We adopt random flip as our data augmentation technique, and we use ResNet50 as our backbone model that contains parameters that are pre-trained on the ImageNet dataset. This model is also trained end-to-end. We adopt cumulative match characteristic (CMC) and mean average precision (mAP) to compare the re-ID performance of the proposed method with that of existing methods.ResultThe performance of our proposed method is evaluated on public large-scale datasets Market-1501, DukeMTMC-reID, and CUHK03. We use a uniform random seed to ensure the repeatability of the equity comparison and the results. In the Market-1501, DukeMTMC-reID, and CUHK03 (detected and labeled) datasets, the proposed method has obtained Rank-1 (represents the proportion of the queried people) values of 89.52%, 81.96%, 48.79%, and 50.29%, respectively, while its mAP values in these datasets reach 73.98%, 64.45%, 43.77%, and 45.57%, respectively. In the detected and labeled CUHK03 datasets, the proposed method shows 9.43%/8.74% and 8.72%/8.0% improvements in its Rank-1 and mAP values, respectively. These experimental results validate the competitive performance of this method for small and large datasets.ConclusionThe proposed person re-ID system based on the deformation and occlusion mechanisms can construct a highly recognizable model for extracting robust pedestrian features. This system maintains high recognition accuracy in complex application scenarios where occlusion and wide variations in pedestrian posture are observed. The proposed method can also effectively mitigate model overfitting in small-scale datasets (e.g., CUHK03 dataset), thereby improving its recognition rate.关键词:person re-identification;deformation;occlusion;spatial attention mechanism;robustness117|121|7更新时间:2024-05-07

Image Processing and Coding

-

摘要:ObjectiveWith the rapid development of internet technology and the increasing popularity of video shooting equipment (e.g., digital cameras and smart phones), online video services have shown an explosive growth. Short videos have become indispensable sources of information for people in their daily production and life. Therefore, identifying how these people understand these videos is critical. Videos contain rich amounts of hidden information as these media can store more information compared with traditional ones, such as images and texts. Videos also show complexity in their space-time structure, content, temporal relevance, and event integrity. Given such complexities, behavior recognition research is presently facing challenges in extracting the time domain representation and features of videos. To address these difficulties, this study proposes a behavior recognition model based on multi-feature fusion.MethodThe proposed model is mainly composed of three parts, namely, the time domain fusion, two-way feature extraction, and feature modeling modules. The two- and three-frame fusion algorithms are initially adopted to compress the original data by extracting high- and low-frequency information from videos. This approach not only retains most information contained in these videos but also enhances the original dataset to facilitate the expression of original behavior information. Second, based on the design of a two-way feature extraction network, detailed features are extracted from videos through the positive input of the fused data to the network, whereas overall features are extracted through the reserve input of these data. A weighted fusion of these features is then achieved by using the common video descriptor, 3D ConvNets (3D convolutional neural networks) structure. Afterward, BiConvLSTM (bidirectional convolutional long short-term memory network) is used to further extract the local information of the fused features and to establish a model on the time axis to address the relatively long behavior intervals in some video sequences. Softmax is then applied to maximize the likelihood function and to classify the behavioral actions.ResultTo verify its effectiveness, the proposed algorithm was tested and analyzed on public datasets UCF101 and HMDB51. Results of a five-fold cross-validation show that this algorithm has average accuracies of 96.47% and 80.03%for these datasets, respectively. Comparative statistics for each type of behavior show that the classification accuracy of the proposed algorithm is approximately equal in almost all categories.ConclusionCompared with the available mainstream behavior recognition models, the proposed multi-feature model achieves higher recognition accuracy and is more universal, compact, simple, and efficient. The accuracy of this model is mainly improved via two- and three-frame fusions in the time domain to facilitate video information analysis and behavior information expression. The network is extracted by a two-way feature to efficiently determine the spatio-temporal features of videos. The BiConvLSTM network is then applied to further extract the features and establish a timing relationship.关键词:behavior recognition;two-way feature extraction network;3D convolutional neural networks (3D ConvNets);bidirectional convolutional long short-term memory network (BiConvLSTM);weighted fusion;high-frequency feature;low-frequency feature55|50|5更新时间:2024-05-07

摘要:ObjectiveWith the rapid development of internet technology and the increasing popularity of video shooting equipment (e.g., digital cameras and smart phones), online video services have shown an explosive growth. Short videos have become indispensable sources of information for people in their daily production and life. Therefore, identifying how these people understand these videos is critical. Videos contain rich amounts of hidden information as these media can store more information compared with traditional ones, such as images and texts. Videos also show complexity in their space-time structure, content, temporal relevance, and event integrity. Given such complexities, behavior recognition research is presently facing challenges in extracting the time domain representation and features of videos. To address these difficulties, this study proposes a behavior recognition model based on multi-feature fusion.MethodThe proposed model is mainly composed of three parts, namely, the time domain fusion, two-way feature extraction, and feature modeling modules. The two- and three-frame fusion algorithms are initially adopted to compress the original data by extracting high- and low-frequency information from videos. This approach not only retains most information contained in these videos but also enhances the original dataset to facilitate the expression of original behavior information. Second, based on the design of a two-way feature extraction network, detailed features are extracted from videos through the positive input of the fused data to the network, whereas overall features are extracted through the reserve input of these data. A weighted fusion of these features is then achieved by using the common video descriptor, 3D ConvNets (3D convolutional neural networks) structure. Afterward, BiConvLSTM (bidirectional convolutional long short-term memory network) is used to further extract the local information of the fused features and to establish a model on the time axis to address the relatively long behavior intervals in some video sequences. Softmax is then applied to maximize the likelihood function and to classify the behavioral actions.ResultTo verify its effectiveness, the proposed algorithm was tested and analyzed on public datasets UCF101 and HMDB51. Results of a five-fold cross-validation show that this algorithm has average accuracies of 96.47% and 80.03%for these datasets, respectively. Comparative statistics for each type of behavior show that the classification accuracy of the proposed algorithm is approximately equal in almost all categories.ConclusionCompared with the available mainstream behavior recognition models, the proposed multi-feature model achieves higher recognition accuracy and is more universal, compact, simple, and efficient. The accuracy of this model is mainly improved via two- and three-frame fusions in the time domain to facilitate video information analysis and behavior information expression. The network is extracted by a two-way feature to efficiently determine the spatio-temporal features of videos. The BiConvLSTM network is then applied to further extract the features and establish a timing relationship.关键词:behavior recognition;two-way feature extraction network;3D convolutional neural networks (3D ConvNets);bidirectional convolutional long short-term memory network (BiConvLSTM);weighted fusion;high-frequency feature;low-frequency feature55|50|5更新时间:2024-05-07 -

摘要:ObjectiveAlong with the wide usage of various digital image processing hardware and software and the continuous advancements in the field of computer vision, biometric recognition has been introduced to solve identification problems in people's daily lives and has been applied in the fields of finance, education, healthcare, and social security, among others. Compared with iris, palm print, and other biometric recognition technologies, face recognition has received the most attention due to its special characteristics (e.g., on-contact, imperceptible, and easy to promote). Given the wide usage of mobile Internet, cloud face recognition can achieve high recognition accuracy requires a large amount of face data to be uploaded to a third-party server. On the one hand, face images may reflect one's private information, such as gender, age, and health status. On the other hand, given that each person has unique facial features, hacking into face image databases may expose people to threats, including template and fake attacks. Therefore, how to boost the privacy and security of face images has become a core issue in the field of biometric recognition. Among the available biometric template protection methods, the transform-based method can simultaneously satisfy multiple criteria of biometric template protection and is presently considered the most typical cancelable biometric algorithm. The protected biometric template is obtained via anon-invertible transformation of the original biometric that is saved in a database. When this biometric template is attacked or threatened, a new feature template can be reissued to replace the previous template by modifying the external factors. To guarantee the security of the face recognition system and improve its recognition rate, this paper investigates a cancelable face recognition algorithm that integrates the structural features of the human face.MethodFirst, structural features are extracted from the original face image by using its gradient, local binary pattern, and local variance. By taking the original face images as real components and the extracted structural features as imaginary components, a complex matrix is built to represent the face image. To render the original face images and contour of their structural features invisible, the complex matrix is permuted by multiplying it by a random binary matrix. Afterward, complex 2D principal component analysis (C2DPCA) is performed to project a random permuted complex matrix into a new feature space. The 2DPCA result for the scrambled complex face matrix is theoretically deduced and verified to be the result of original complex face matrix 2DPCA multiplied by a random binary matrix. The resulting value does not change after scrambling given that only the row of the original 2DPCA is scrambled in the process. The nearest neighbor classifier based on Manhattan distance is then employed to calculate the recognition rate, that is, the distance between the tested face images and all training samples, and the training sample category that corresponds to the minimum distance is taken as the category.ResultThe experimental results obtained for the four face databases reveal that after scrambling the original face and structural feature images by using a random binary matrix, the human eye cannot detect useful information, and the scrambled results can be regenerated. Therefore, scrambling the random binary matrix can ensure the security of the proposed algorithm. Compared with three other algorithms, the fusion of a structural feature can effectively improve the recognition rate. Among the three structural features considered in this work, the variance feature obtains the highest recognition rate, which has increased by 4.9% on the Georgia Tech(GT) database, 2.25% on the Near Infrared(NIR) database, 2.25% on the Visible Light(VIS) database, and 1.98% on the YouTube MakeupYMU database. The employed random binary matrix does not affect the recognition rate, that is, the recognition rates of each database are the same before and after random scrambling. Given that the introduced random matrix is binary, the values do not change after random scrambling. The average testing time for the four face databases is within 1 millisecond.ConclusionA combination of the original face image with the structural features of the human face enriches the representation ability of face image information and helps improve facial recognition rate. A random permutation operation can also protect the privacy of the original face image. When the biometric template is leaked, resetting position 1 in the random binary matrix will re-scramble the complex face matrix and generate a new biometric template. The proposed algorithm also shows an excellent real-time performance and can meet the demands of practical application scenarios.关键词:cancelable face recognition;random binary matrix;two-dimensional principal component analysis(2DPCA);face structural feature;complex matrix18|19|1更新时间:2024-05-07