最新刊期

卷 25 , 期 10 , 2020

-

摘要:Deep learning can automatically learn from a large amount of data to obtain effective feature representations, thereby effectively improving the performance of various machine learning tasks. It has been widely used in various fields of medical imaging. Smart healthcare has become an important application area of deep learning, which is an effective approach to solve the following clinical problems: 1) given the limited medical resources, the experienced radiologists are not fully available, which cannot satisfy the fast development of the clinical requirement; 2) the lack of experienced radiologists, which cannot satisfy the fast increase of medical demand. At present, deep learning-based intelligent medical imaging systems are the typical scenarios in smart healthcare. This paper primarily reviews the applications of deep learning methods in various applications using four major clinical imaging techniques (i.e., X-ray, ultrasound, computed tomography(CT), and magnetic resonance imaging(MRI)). These works cover the whole pipeline of medical imaging, including reconstruction, detection, segmentation, registration, and computer-aided diagnosis (CAD). The reviews on medical image reconstruction focus on both MRI reconstruction and low-dose CT reconstruction on the basis of deep learning. Deep learning methods for MRI reconstruction can be divided into two categories: 1) data-driven end-to-end methods, 2) model-based methods. The low-dose CT reconstruction primarily introduces methods on the basis of convolutional neural networks and generative adversarial networks. In addition, deep learning methods for ultrasound imaging, medical image synthesis, and medical image super-resolution are reviewed. The reviews on lesion detection primarily focuses on the deep learning methods for lung lesions detection using CT, the deep learning detection model for tumor lesions, and the deep learning methods for the general lesion area detection. At present, deep learning has been widely used in medical image segmentation tasks, and its performance is significantly improved compared with traditional image segmentation methods. Most deep learning segmentation methods are typical data-driven machine learning models. We review supervised models, semi-supervised models, and self-supervised models with regard to the amount of labeled data and annotation. Medical images contain rich anatomical information, which enhances the performance of deep learning models with different supervision. Deep learning models incorporating prior knowledge are also reviewed. Medical image registration consistency is a difficult task in the field of medical image analysis. Deep learning has become a breakthrough to improve the performance of medical image registration. The end-to-end network structures produce high-precision registration results and have become a hotspot in the field of image registration. Compared with the conventional methods, the deep learning methods for medical image registration have a significant improvement in registration performance. According to the different supervision in the training procedure, this paper divides the deep learning methods for medical image registration into three modes: fully supervised methods, unsupervised methods, and weakly supervised methods. Computer-aided diagnosis is another application of deep learning in the field of medical imaging. This paper summarizes the deep learning methods on CAD with different supervision and the CAD works on the basis of multi-modality medical images. Notably, although deep learning methods have been applied in medical imaging, several challenges are still identified. For example, the small-sample size problem is common in medical imaging analysis. Advanced machine learning methods, including weakly supervised learning, transfer learning, few-shot learning, self-supervised learning, and increase learning, can help alleviate this problem. In addition, the data annotation of medical images is a problem that seriously restricts the extensive and in-depth application of deep learning, and extensive research on automatic data labeling must be carried out. Interpretability of the deep neural networks is also important in medical image analysis. Improving the interpretability of a deep neural network has always been a difficult point, and in-depth research must be carried out in this area. Furthermore, carrying out human-computer collaboration in medical care is important. The lightweight deep neural network is easy to deploy into portable medical devices, giving portable devices more powerful functions, which is also an important research direction. Deep learning has been successful in various tasks in medical imaging analysis. New methods must be developed for its further application in intelligent medical products.关键词:deep learning;medical imaging;image reconstruction;lesion detection;image segmentation;image registration;computer-aided diagnosis(CAD)1473|588|37更新时间:2024-05-07

摘要:Deep learning can automatically learn from a large amount of data to obtain effective feature representations, thereby effectively improving the performance of various machine learning tasks. It has been widely used in various fields of medical imaging. Smart healthcare has become an important application area of deep learning, which is an effective approach to solve the following clinical problems: 1) given the limited medical resources, the experienced radiologists are not fully available, which cannot satisfy the fast development of the clinical requirement; 2) the lack of experienced radiologists, which cannot satisfy the fast increase of medical demand. At present, deep learning-based intelligent medical imaging systems are the typical scenarios in smart healthcare. This paper primarily reviews the applications of deep learning methods in various applications using four major clinical imaging techniques (i.e., X-ray, ultrasound, computed tomography(CT), and magnetic resonance imaging(MRI)). These works cover the whole pipeline of medical imaging, including reconstruction, detection, segmentation, registration, and computer-aided diagnosis (CAD). The reviews on medical image reconstruction focus on both MRI reconstruction and low-dose CT reconstruction on the basis of deep learning. Deep learning methods for MRI reconstruction can be divided into two categories: 1) data-driven end-to-end methods, 2) model-based methods. The low-dose CT reconstruction primarily introduces methods on the basis of convolutional neural networks and generative adversarial networks. In addition, deep learning methods for ultrasound imaging, medical image synthesis, and medical image super-resolution are reviewed. The reviews on lesion detection primarily focuses on the deep learning methods for lung lesions detection using CT, the deep learning detection model for tumor lesions, and the deep learning methods for the general lesion area detection. At present, deep learning has been widely used in medical image segmentation tasks, and its performance is significantly improved compared with traditional image segmentation methods. Most deep learning segmentation methods are typical data-driven machine learning models. We review supervised models, semi-supervised models, and self-supervised models with regard to the amount of labeled data and annotation. Medical images contain rich anatomical information, which enhances the performance of deep learning models with different supervision. Deep learning models incorporating prior knowledge are also reviewed. Medical image registration consistency is a difficult task in the field of medical image analysis. Deep learning has become a breakthrough to improve the performance of medical image registration. The end-to-end network structures produce high-precision registration results and have become a hotspot in the field of image registration. Compared with the conventional methods, the deep learning methods for medical image registration have a significant improvement in registration performance. According to the different supervision in the training procedure, this paper divides the deep learning methods for medical image registration into three modes: fully supervised methods, unsupervised methods, and weakly supervised methods. Computer-aided diagnosis is another application of deep learning in the field of medical imaging. This paper summarizes the deep learning methods on CAD with different supervision and the CAD works on the basis of multi-modality medical images. Notably, although deep learning methods have been applied in medical imaging, several challenges are still identified. For example, the small-sample size problem is common in medical imaging analysis. Advanced machine learning methods, including weakly supervised learning, transfer learning, few-shot learning, self-supervised learning, and increase learning, can help alleviate this problem. In addition, the data annotation of medical images is a problem that seriously restricts the extensive and in-depth application of deep learning, and extensive research on automatic data labeling must be carried out. Interpretability of the deep neural networks is also important in medical image analysis. Improving the interpretability of a deep neural network has always been a difficult point, and in-depth research must be carried out in this area. Furthermore, carrying out human-computer collaboration in medical care is important. The lightweight deep neural network is easy to deploy into portable medical devices, giving portable devices more powerful functions, which is also an important research direction. Deep learning has been successful in various tasks in medical imaging analysis. New methods must be developed for its further application in intelligent medical products.关键词:deep learning;medical imaging;image reconstruction;lesion detection;image segmentation;image registration;computer-aided diagnosis(CAD)1473|588|37更新时间:2024-05-07 -

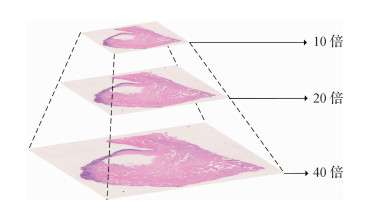

摘要:Histopathology is the gold standard for the clinical diagnosis of tumors and directly related to clinical treatment and prognosis. Its application inclinics has presented challenges in terms of the accuracy and efficiency of histopathological diagnosis, and pathological diagnosis is time consuming and requires pathologists to examine slides with a microscope for them to make reliable decisions. Moreover, the training period of a pathologist is long. In many parts of China, pathology departments are generally overworked due to the insufficient number of pathologists. Recently, deep learning has achieved great success in computer vision. The utilization of whole slide scanners enables the application of deep learning-based classification and segmentation methods to histopathological diagnosis, thereby improving efficiency and accuracy. In this paper, we first introduce the medical background of histopathology diagnosis. Then, we provide an overview of the primary datasets of histopathological diagnosis. We focus on introducing the datasets of three types of malignant tumors along with corresponding computer vision tasks. Breast cancer forms in the cells of breasts and is one of the most common cancer diagnosed in women. Early diagnosis can significantly improve survival rate and quality of life. Sentinel lymph node metastasis are visible when a cancer spreads. The diagnosis of lymph node metastasis is directly related to cancer staging and surgical plan decision. Colon cancer can be detected by colonoscopy biopsy, and early diagnosis requires pathologists to examine slides thoroughly for small malignancies.Computer-aided diagnosis can increase the efficiency of pathologists. Moreover, we propose three key technical problems: data storage and processing, model design and improvement, and learning with small amount or weakly labeled data. Then we review research progress related to tasks, including data preprocessing, classification, and segmentation and transfer learning and multiple instance learning. Pathology datasets are usually stored in a pyramidal tiled image format for fast loading and rescaling. The OpenSlide library provides high-performance pathology data reading, and the open-source softwareautomated slide analysis platform (ASAP) can be used in viewing and labeling these data. Trimming white backgrounds can reduce storage and calculation overhead to 82% on mainstream datasets. A stain normalization technology can eliminate color difference caused by slide production and scanning process. The classification of pathological image patches is the basic structure of whole slide classification and the backbone network of segmentation. Mainstream convolutional neural network models in the field of computer vision, including AlexNet, visual geometry group(VGG), GoogLeNet, and residual neural networks can reach satisfying results for pathological image patches. The patch sampling method can divide a whole slide image into smaller patches that can be processed by the mainstream convolutional neural network models. By aggregating the features of sampled patches through random forest or voting, a patch sampling method can be used in classifying or segmenting arbitrarily sized images. A migration learning technology based on neural network models pretrained on an ImageNet dataset is effective in alleviating the problem introduced by the small number of training samples in histopathological data. Fully convolutional network (FCN) represented by U-Net is a network designed for medical image segmentation tasks and are faster than convolutional neural networks with patch sampling methods. To utilize weakly labeled data, multiple instance learning (MIL) treats whole slide image as a bag of unlabeled pathological image patches. With bags labeled, MIL can be used for weakly supervised learning. Finally, this paper summarizes the main works surveyed and identifies challenges for future research. To make deep learning-based computer aided diagnosis clinically practical, researchers have to improve model accuracy, expand clinical application scenarios, and improve the interpretability of results.关键词:histopathology;deep learning;convolutional neural network;transfer learning;multiple instance learning205|277|9更新时间:2024-05-07

摘要:Histopathology is the gold standard for the clinical diagnosis of tumors and directly related to clinical treatment and prognosis. Its application inclinics has presented challenges in terms of the accuracy and efficiency of histopathological diagnosis, and pathological diagnosis is time consuming and requires pathologists to examine slides with a microscope for them to make reliable decisions. Moreover, the training period of a pathologist is long. In many parts of China, pathology departments are generally overworked due to the insufficient number of pathologists. Recently, deep learning has achieved great success in computer vision. The utilization of whole slide scanners enables the application of deep learning-based classification and segmentation methods to histopathological diagnosis, thereby improving efficiency and accuracy. In this paper, we first introduce the medical background of histopathology diagnosis. Then, we provide an overview of the primary datasets of histopathological diagnosis. We focus on introducing the datasets of three types of malignant tumors along with corresponding computer vision tasks. Breast cancer forms in the cells of breasts and is one of the most common cancer diagnosed in women. Early diagnosis can significantly improve survival rate and quality of life. Sentinel lymph node metastasis are visible when a cancer spreads. The diagnosis of lymph node metastasis is directly related to cancer staging and surgical plan decision. Colon cancer can be detected by colonoscopy biopsy, and early diagnosis requires pathologists to examine slides thoroughly for small malignancies.Computer-aided diagnosis can increase the efficiency of pathologists. Moreover, we propose three key technical problems: data storage and processing, model design and improvement, and learning with small amount or weakly labeled data. Then we review research progress related to tasks, including data preprocessing, classification, and segmentation and transfer learning and multiple instance learning. Pathology datasets are usually stored in a pyramidal tiled image format for fast loading and rescaling. The OpenSlide library provides high-performance pathology data reading, and the open-source softwareautomated slide analysis platform (ASAP) can be used in viewing and labeling these data. Trimming white backgrounds can reduce storage and calculation overhead to 82% on mainstream datasets. A stain normalization technology can eliminate color difference caused by slide production and scanning process. The classification of pathological image patches is the basic structure of whole slide classification and the backbone network of segmentation. Mainstream convolutional neural network models in the field of computer vision, including AlexNet, visual geometry group(VGG), GoogLeNet, and residual neural networks can reach satisfying results for pathological image patches. The patch sampling method can divide a whole slide image into smaller patches that can be processed by the mainstream convolutional neural network models. By aggregating the features of sampled patches through random forest or voting, a patch sampling method can be used in classifying or segmenting arbitrarily sized images. A migration learning technology based on neural network models pretrained on an ImageNet dataset is effective in alleviating the problem introduced by the small number of training samples in histopathological data. Fully convolutional network (FCN) represented by U-Net is a network designed for medical image segmentation tasks and are faster than convolutional neural networks with patch sampling methods. To utilize weakly labeled data, multiple instance learning (MIL) treats whole slide image as a bag of unlabeled pathological image patches. With bags labeled, MIL can be used for weakly supervised learning. Finally, this paper summarizes the main works surveyed and identifies challenges for future research. To make deep learning-based computer aided diagnosis clinically practical, researchers have to improve model accuracy, expand clinical application scenarios, and improve the interpretability of results.关键词:histopathology;deep learning;convolutional neural network;transfer learning;multiple instance learning205|277|9更新时间:2024-05-07 -

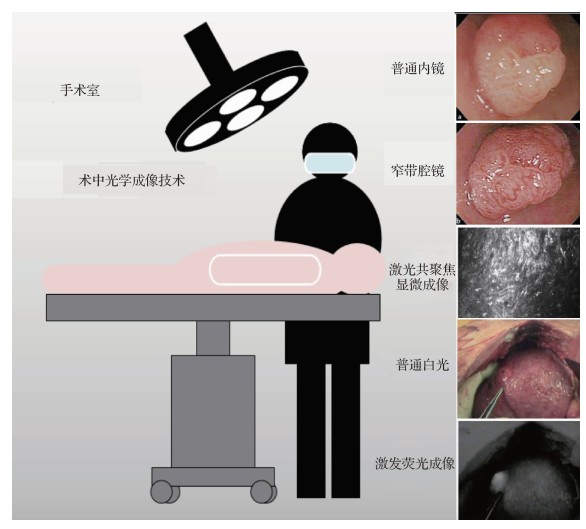

摘要:The rise of intraoperative optical imaging technologies provides a convenient and intuitive observation method for clinical surgery. Traditional intraoperative optical imaging methods include open optical and intraoperative endoscopic imaging. These methods ensure the smooth implementation of clinical surgery and promote the development of minimally invasive surgery. Subsequent methods include narrow-band endoscopic, intraoperative laser confocal microscopy, and near-infrared excited fluorescence imaging. Narrow-band endoscopic imaging uses a filter to filter out the broad-band spectrum emitted by the endoscope light source, leaving only the narrow-band spectrum for the diagnosis of various diseases of the digestive tract. The narrow-band spectrum is conducive to enhancing the image of the gastrointestinal mucosa vessels. In some lesions with microvascular changes, the narrow-band imaging system has evident advantages over ordinary endoscopy in distinguishing lesions. Narrow-band endoscopic imaging has also been widely used in the fields of otolaryngology, respiratory tract, gynecological endoscopy, and laparoscopic surgery in addition to the digestive tract. Intraoperative laser confocal microscopy is a new type of imaging method. It can realize superficial tissue imaging in vivo and provide pathological information by using the principle of excited fluorescence imaging. This imaging method has high clarity due to the application of confocal imaging and can be used for lesion positioning. Near-infrared excited fluorescence imaging uses excitation fluorescence imaging equipment combined with corresponding fluorescent contrast agents (such as ICG(indocyanine green), and methylene blue) to achieve intraoperative specific imaging of lesions, tissues, and organs in vivo. The basic principle is to stimulate the contrast agent accumulated in the tissue, the fluorescent contrast agent emits a fluorescent signal, and real-time imaging is realized by collecting the signals. In clinical research, the near-infrared fluorescence imaging technology is often used for lymphatic vessel tracing and accurate tumor resection. Contrast agents have different imaging spectral bands; hence, the corresponding near-infrared fluorescence imaging equipment is also developing to a multichannel imaging mode to image substantial contrast agents and label multiple tissues in the same field of view specifically during surgery. Multichannel near-infrared fluorescent surgical navigation equipment that has been gradually developed can realize simultaneous fluorescence imaging of multiple organs and tissues. These intraoperative optical imaging technologies can assist doctors in accurately locating tumors, rapidly distinguishing between benign and malignant tissues, and detecting small lesions. They have gained benefits in many clinical applications. However, optical imaging is susceptible to interference from ambient light, and optical signals are difficult to propagate in tissues without optical signal absorption and scattering. Intraoperative optical imaging technologies have the problems of limited imaging quality and superficial tissue imaging. In clinical research, intelligent analysis of preoperative imaging is fiercely developing, while information analysis of intraoperative imaging is still lacking of powerful analytical tools and analytical methods. The study of effective intraoperative optical imaging analysis algorithms needs further exploration. Machine learning is a tool developed with the age of computer information technology and is expected to provide an effective solution to the abovementioned problems. With the accumulation and explosion of data volume, deep learning, as a type of machine learning, is an end-to-end algorithm. It can gain the internal relationship among things autonomously through network training, establish an empirical model, and realize the function of traditional algorithms. Deep learning has shown enhanced results in the analysis and processing of natural images and is being continuously promoted and applied to various fields. Machine learning provides powerful technical means for intelligent analysis, image processing, and three-dimensional reconstruction, but the application research of using machine learning in intraoperative optical imaging is relatively few. The addition of machine learning is expected to break through the bottleneck and promote the development of intraoperative optical imaging technologies. This article focuses on intraoperative optical imaging technologies and investigates the application of machine learning in this field in recent years, including optimizing intraoperative optical imaging quality, assisting intelligent analysis of intraoperative optical imaging, and promoting three-dimensional modeling of intraoperative optical imaging. In the field of machine learning for intraoperative optical imaging optimization, existing research includes target detection of specific tissues, such as soft tissue segmentation and image fusion, and optimization of imaging effects, such as resolution enhancement of near-infrared fluorescence imaging during surgery and intraoperative endoscopic smoke removal. Furthermore, machine learning assists doctors in performing intraoperative optical imaging analysis, including the identification of benign and malignant tissues and the classification of lesion types and grades. Therefore, it can provide a timely reference value for the surgeon to judge the state of the patient during the clinical operation and before the pathological examination. In the field of intraoperative optical imaging reconstruction, machine learning can be combined with preoperative images (such as computed tomography and magnetic resonance imaging) to assist in intraoperative soft tissue reconstruction, or it can be based on intraoperative images for three-dimensional reconstruction. It can be used for localization, three-dimensional organ morphology reconstruction, and tracking of intraoperative tissues and surgical instruments. Thus, machine learning is expected to provide corresponding technical foundation for robotic surgery and augmented reality surgery in the future.This article summarizes and analyzes the application of machine learning in the field of intraoperative optical imaging and describes the application prospects of deep learning. As a review, it investigates the application research of machine learning in intraoperative optical imaging mainly from three aspects: intraoperative optical image optimization, intelligent analysis of optical imaging, and three-dimensional reconstruction. We also introduce related research and expected effects in the above fields. At the end of this article, the application of machine learning in the field of intraoperative optical imaging technologies is discussed, and the advantages and possible problems of machine-learning methods are analyzed. Furthermore, this article elaborates the possible future development direction of intraoperative optical imaging combined with machine learning, providing a broad view for subsequent research.关键词:intraoperative optical imaging;machine learning;imaging optimization;intelligent imaging analysis;3D modeling29|22|0更新时间:2024-05-07

摘要:The rise of intraoperative optical imaging technologies provides a convenient and intuitive observation method for clinical surgery. Traditional intraoperative optical imaging methods include open optical and intraoperative endoscopic imaging. These methods ensure the smooth implementation of clinical surgery and promote the development of minimally invasive surgery. Subsequent methods include narrow-band endoscopic, intraoperative laser confocal microscopy, and near-infrared excited fluorescence imaging. Narrow-band endoscopic imaging uses a filter to filter out the broad-band spectrum emitted by the endoscope light source, leaving only the narrow-band spectrum for the diagnosis of various diseases of the digestive tract. The narrow-band spectrum is conducive to enhancing the image of the gastrointestinal mucosa vessels. In some lesions with microvascular changes, the narrow-band imaging system has evident advantages over ordinary endoscopy in distinguishing lesions. Narrow-band endoscopic imaging has also been widely used in the fields of otolaryngology, respiratory tract, gynecological endoscopy, and laparoscopic surgery in addition to the digestive tract. Intraoperative laser confocal microscopy is a new type of imaging method. It can realize superficial tissue imaging in vivo and provide pathological information by using the principle of excited fluorescence imaging. This imaging method has high clarity due to the application of confocal imaging and can be used for lesion positioning. Near-infrared excited fluorescence imaging uses excitation fluorescence imaging equipment combined with corresponding fluorescent contrast agents (such as ICG(indocyanine green), and methylene blue) to achieve intraoperative specific imaging of lesions, tissues, and organs in vivo. The basic principle is to stimulate the contrast agent accumulated in the tissue, the fluorescent contrast agent emits a fluorescent signal, and real-time imaging is realized by collecting the signals. In clinical research, the near-infrared fluorescence imaging technology is often used for lymphatic vessel tracing and accurate tumor resection. Contrast agents have different imaging spectral bands; hence, the corresponding near-infrared fluorescence imaging equipment is also developing to a multichannel imaging mode to image substantial contrast agents and label multiple tissues in the same field of view specifically during surgery. Multichannel near-infrared fluorescent surgical navigation equipment that has been gradually developed can realize simultaneous fluorescence imaging of multiple organs and tissues. These intraoperative optical imaging technologies can assist doctors in accurately locating tumors, rapidly distinguishing between benign and malignant tissues, and detecting small lesions. They have gained benefits in many clinical applications. However, optical imaging is susceptible to interference from ambient light, and optical signals are difficult to propagate in tissues without optical signal absorption and scattering. Intraoperative optical imaging technologies have the problems of limited imaging quality and superficial tissue imaging. In clinical research, intelligent analysis of preoperative imaging is fiercely developing, while information analysis of intraoperative imaging is still lacking of powerful analytical tools and analytical methods. The study of effective intraoperative optical imaging analysis algorithms needs further exploration. Machine learning is a tool developed with the age of computer information technology and is expected to provide an effective solution to the abovementioned problems. With the accumulation and explosion of data volume, deep learning, as a type of machine learning, is an end-to-end algorithm. It can gain the internal relationship among things autonomously through network training, establish an empirical model, and realize the function of traditional algorithms. Deep learning has shown enhanced results in the analysis and processing of natural images and is being continuously promoted and applied to various fields. Machine learning provides powerful technical means for intelligent analysis, image processing, and three-dimensional reconstruction, but the application research of using machine learning in intraoperative optical imaging is relatively few. The addition of machine learning is expected to break through the bottleneck and promote the development of intraoperative optical imaging technologies. This article focuses on intraoperative optical imaging technologies and investigates the application of machine learning in this field in recent years, including optimizing intraoperative optical imaging quality, assisting intelligent analysis of intraoperative optical imaging, and promoting three-dimensional modeling of intraoperative optical imaging. In the field of machine learning for intraoperative optical imaging optimization, existing research includes target detection of specific tissues, such as soft tissue segmentation and image fusion, and optimization of imaging effects, such as resolution enhancement of near-infrared fluorescence imaging during surgery and intraoperative endoscopic smoke removal. Furthermore, machine learning assists doctors in performing intraoperative optical imaging analysis, including the identification of benign and malignant tissues and the classification of lesion types and grades. Therefore, it can provide a timely reference value for the surgeon to judge the state of the patient during the clinical operation and before the pathological examination. In the field of intraoperative optical imaging reconstruction, machine learning can be combined with preoperative images (such as computed tomography and magnetic resonance imaging) to assist in intraoperative soft tissue reconstruction, or it can be based on intraoperative images for three-dimensional reconstruction. It can be used for localization, three-dimensional organ morphology reconstruction, and tracking of intraoperative tissues and surgical instruments. Thus, machine learning is expected to provide corresponding technical foundation for robotic surgery and augmented reality surgery in the future.This article summarizes and analyzes the application of machine learning in the field of intraoperative optical imaging and describes the application prospects of deep learning. As a review, it investigates the application research of machine learning in intraoperative optical imaging mainly from three aspects: intraoperative optical image optimization, intelligent analysis of optical imaging, and three-dimensional reconstruction. We also introduce related research and expected effects in the above fields. At the end of this article, the application of machine learning in the field of intraoperative optical imaging technologies is discussed, and the advantages and possible problems of machine-learning methods are analyzed. Furthermore, this article elaborates the possible future development direction of intraoperative optical imaging combined with machine learning, providing a broad view for subsequent research.关键词:intraoperative optical imaging;machine learning;imaging optimization;intelligent imaging analysis;3D modeling29|22|0更新时间:2024-05-07

Review

-

摘要:Medical imaging is an important tool used for medical diagnosis and clinical decision support that enables clinicians to view the internal of human bodies. Medical image analysis, as an important part of healthcare artificial intelligence, provides fast, smart, and accurate decision supports for clinicians and radiologists. 3D computer vision is an emerging research area with the rapid development and popularization of 3D sensors (e.g., light detection and ranging (LIDAR), RGB-D cameras) and computer-aided design in game industry and smart manufacturing. In particular, we focus on the interface of medical image analysis and 3D computer vision called medical 3D computer vision. We introduce the research advances and challenges in medical 3D computer vision in three levels, namely, tasks (medical 3D computer vision tasks), data (data modalities and datasets), and representation (efficient and effective representation learning for 3D images). First, we introduce classification, segmentation, detection, registration, and reconstruction in medical 3D computer vision at the task level. Classification, such as malignancy stratification and symptom estimation, is an everyday task for clinicians and radiologists. Segmentation denotes assigning each voxel (pixel) a semantic label. Detection refers to localizing key objects from medical images. Segmentation and detection include organ segmentation/detection and lesion segmentation/detection. Registration, that is, calculating the spatial transformation from one image to another, plays an important role in medical imaging scenarios, such as spatially aligning multiple images from serial examination of a follow-up patient. Reconstruction is also a key task in medical imaging that aims at fast and accurate imaging results to reduce patients' costs. Second, we introduce the important data modalities in medical 3D computer vision, such as computed tomography (CT), magnetic resonance imaging (MRI), and positron emission tomography (PET), at the data level. The principle and clinical scenario of each imaging modality are briefly discussed. We then depict a comprehensive list of medical 3D image research datasets that cover classification, segmentation, detection, registration, and reconstruction tasks in CT, MRI, and graphics format (mesh). Third, we discuss the representation learning for medical 3D computer vision. 2D convolutional neural networks, 3D convolutional neural networks, and hybrid approaches are the commonly used methods for 3D representation learning. 2D approaches can benefiting from large-scale 2D pretraining, triplanar, and trislice 2D representation for 3D medical images, whereas they are generally weak in capturing large 3D contexts. 3D approaches are natively strong in 3D context. However, few publicly available 3D medical datasets are large and sufficiently diverse for universal 3D pretraining. For hybrid (2D + 3D) approaches, we introduce multistream and multistage approaches. Although they are empirically effective, the intrinsic disadvantages within the 2D/3D parts still exist. To address the small-data issues for medical 3D computer vision, we discuss the pretraining approaches for medical 3D images. Pretraining for 3D convolutional neural network(CNN) with videos is straightforward to implement. However, a significant domain gap is found between medical images and videos. Collecting massive medical datasets for pretraining is theoretically feasible. However, it only results in thousands of 3D medical image cases with tens of medical datasets, which is significantly smaller compared with natural 2D image datasets. Research efforts exploring unsupervised (self-supervised) learning to obtain the pretrained 3D models are reported. Although its results are extremely impressive, the model performance of up-to-date unsupervised learning is incomparable with that of fully supervised learning. The unsupervised representation learning from medical 3D images cannot leverage the power of massive 2D supervised learning datasets. We introduce several techniques for 2D-to-3D transfer learning, including inflated 3D(I3D), axial-coronal-sagittal(ACS) convolutions, and AlignShift. I3D enables 2D-to-3D transfer learning by inflating 2D convolution kernels into 3D, and ACS convolutions and AlignShift enable that by introducing novel operators that shuffle the features from 3D receptive fields into a 2D manner. Finally, we discuss several research challenges, problems, and directions for medical 3D computer vision. We first determine the anisotropy issue in medical 3D images, which can be a source of domain gap, that is, between thick- and thin-slice data. We then discuss the data privacy and information silos in medical images, which are important factors that lead to small-data issues in medical 3D computer vision. Federated learning is highlighted as a possible solution for information silos. However, numerous problems, such as how to develop efficient systems and algorithms for federated learning, how to deal with adversarial participators in federated learning, and how to deal with unaligned and missing data, are found. We determine the data imbalance and long tail issues in medical 3D computer vision. Efficient and effective learning of representation from the noisy, imbalanced, and long-tailed real-world data can be extremely challenging in practice because of the imbalanced and long-tailed distributions of real-world patients. We mention the automatic machine learning as a future direction of medical 3D computer vision. With end-to-end deep learning, the development and deployment of medical image application is inapplicable. However, excessive engineering staff need to be tuned for a new medical image task, such as design of deep neural networks, choices of data argumentation, how to preform data preprocessing, and how to tune the learning procedure. The tuning of these hyperparameters can be performed with a hand-crafted or intelligent system to reduce the research efforts by numerous researchers and engineers. Thus, medical 3D computer vision is an emerging research area. With increasing large-scale datasets, easy-to-use and reproducible methodology, and innovative tasks, medical 3D computer vision is an exciting research area that can facilitate healthcare into a novel level.关键词:medical image analysis;3D computer vision;deep learning;convolutional neural networks(CNN);pre-training158|180|2更新时间:2024-05-07

摘要:Medical imaging is an important tool used for medical diagnosis and clinical decision support that enables clinicians to view the internal of human bodies. Medical image analysis, as an important part of healthcare artificial intelligence, provides fast, smart, and accurate decision supports for clinicians and radiologists. 3D computer vision is an emerging research area with the rapid development and popularization of 3D sensors (e.g., light detection and ranging (LIDAR), RGB-D cameras) and computer-aided design in game industry and smart manufacturing. In particular, we focus on the interface of medical image analysis and 3D computer vision called medical 3D computer vision. We introduce the research advances and challenges in medical 3D computer vision in three levels, namely, tasks (medical 3D computer vision tasks), data (data modalities and datasets), and representation (efficient and effective representation learning for 3D images). First, we introduce classification, segmentation, detection, registration, and reconstruction in medical 3D computer vision at the task level. Classification, such as malignancy stratification and symptom estimation, is an everyday task for clinicians and radiologists. Segmentation denotes assigning each voxel (pixel) a semantic label. Detection refers to localizing key objects from medical images. Segmentation and detection include organ segmentation/detection and lesion segmentation/detection. Registration, that is, calculating the spatial transformation from one image to another, plays an important role in medical imaging scenarios, such as spatially aligning multiple images from serial examination of a follow-up patient. Reconstruction is also a key task in medical imaging that aims at fast and accurate imaging results to reduce patients' costs. Second, we introduce the important data modalities in medical 3D computer vision, such as computed tomography (CT), magnetic resonance imaging (MRI), and positron emission tomography (PET), at the data level. The principle and clinical scenario of each imaging modality are briefly discussed. We then depict a comprehensive list of medical 3D image research datasets that cover classification, segmentation, detection, registration, and reconstruction tasks in CT, MRI, and graphics format (mesh). Third, we discuss the representation learning for medical 3D computer vision. 2D convolutional neural networks, 3D convolutional neural networks, and hybrid approaches are the commonly used methods for 3D representation learning. 2D approaches can benefiting from large-scale 2D pretraining, triplanar, and trislice 2D representation for 3D medical images, whereas they are generally weak in capturing large 3D contexts. 3D approaches are natively strong in 3D context. However, few publicly available 3D medical datasets are large and sufficiently diverse for universal 3D pretraining. For hybrid (2D + 3D) approaches, we introduce multistream and multistage approaches. Although they are empirically effective, the intrinsic disadvantages within the 2D/3D parts still exist. To address the small-data issues for medical 3D computer vision, we discuss the pretraining approaches for medical 3D images. Pretraining for 3D convolutional neural network(CNN) with videos is straightforward to implement. However, a significant domain gap is found between medical images and videos. Collecting massive medical datasets for pretraining is theoretically feasible. However, it only results in thousands of 3D medical image cases with tens of medical datasets, which is significantly smaller compared with natural 2D image datasets. Research efforts exploring unsupervised (self-supervised) learning to obtain the pretrained 3D models are reported. Although its results are extremely impressive, the model performance of up-to-date unsupervised learning is incomparable with that of fully supervised learning. The unsupervised representation learning from medical 3D images cannot leverage the power of massive 2D supervised learning datasets. We introduce several techniques for 2D-to-3D transfer learning, including inflated 3D(I3D), axial-coronal-sagittal(ACS) convolutions, and AlignShift. I3D enables 2D-to-3D transfer learning by inflating 2D convolution kernels into 3D, and ACS convolutions and AlignShift enable that by introducing novel operators that shuffle the features from 3D receptive fields into a 2D manner. Finally, we discuss several research challenges, problems, and directions for medical 3D computer vision. We first determine the anisotropy issue in medical 3D images, which can be a source of domain gap, that is, between thick- and thin-slice data. We then discuss the data privacy and information silos in medical images, which are important factors that lead to small-data issues in medical 3D computer vision. Federated learning is highlighted as a possible solution for information silos. However, numerous problems, such as how to develop efficient systems and algorithms for federated learning, how to deal with adversarial participators in federated learning, and how to deal with unaligned and missing data, are found. We determine the data imbalance and long tail issues in medical 3D computer vision. Efficient and effective learning of representation from the noisy, imbalanced, and long-tailed real-world data can be extremely challenging in practice because of the imbalanced and long-tailed distributions of real-world patients. We mention the automatic machine learning as a future direction of medical 3D computer vision. With end-to-end deep learning, the development and deployment of medical image application is inapplicable. However, excessive engineering staff need to be tuned for a new medical image task, such as design of deep neural networks, choices of data argumentation, how to preform data preprocessing, and how to tune the learning procedure. The tuning of these hyperparameters can be performed with a hand-crafted or intelligent system to reduce the research efforts by numerous researchers and engineers. Thus, medical 3D computer vision is an emerging research area. With increasing large-scale datasets, easy-to-use and reproducible methodology, and innovative tasks, medical 3D computer vision is an exciting research area that can facilitate healthcare into a novel level.关键词:medical image analysis;3D computer vision;deep learning;convolutional neural networks(CNN);pre-training158|180|2更新时间:2024-05-07 -

摘要:A point cloud refers to a set of data points in a three-dimensional space. Each point is composed of a three-dimensional coordinate, with object's reflectivity, reflection intensity, distance from the point to the center of the scanner, horizontal angle, vertical angle, and deviation value. The point cloud is obtained by two methods, one is obtained by scanning the target object by the three-dimensional sensing device, such as LiDAR sensor and RGB-D camera, and the other is obtained by reconstruction from two-dimensional medical images. The point cloud can express the geometric position, shape, and scale of the target object. The point cloud has a wide range of applications in areas such as autonomous driving, robots, surveillance systems, surveying and mapping geography, virtual reality, and medicine, which has achieved remarkable results. Many researchers in the field of medical imaging have also devoted themselves to the research of medical image point cloud processing algorithms. Point cloud can intuitively simulate the three-dimensional structure of biological organs and tissues. With important application value in clinical medicine, the classification, segmentation, registration, and other tasks based on medical point cloud can help doctors make accurate diagnosis and treatment. The point cloud-based medical diagnosis has advantages and has the potential for future application in clinical screening diagnosis, personalized medical device-assisted design, and 3D printing. At this stage, deep learning algorithms have achieved remarkable results in tasks such as target detection, segmentation, and recognition. Deep learning algorithms gradually become efficient and popular in tasks such as target detection, segmentation, and recognition. Therefore, increasing point cloud processing algorithms are gradually extended from traditional algorithms to deep learning algorithms. This article reviews the research and progress of point cloud algorithms in the medical field. This review aims to summarize the current point cloud methods used in the medical field and focuses on 1) the characteristics, acquisition methods, and data conversion methods of medical point clouds; 2) traditional algorithms and deep learning algorithms in medical point cloud segmentation; and 3) the definition and significance of medical point cloud registration tasks. This review is based on feature or non-feature registration method. Finally, although the point cloud method has been applied in the medical field, the current application of the point cloud-based frontier method in the medical point cloud is still insufficient. Applying state-of-the-art algorithms to medical point clouds also requires continuous in-depth exploration and research. To date, medical point clouds have been used to assist doctors in completing some diagnostic tasks, but they are still in the process of continuous development and cannot replace the role of clinicians. The clinical application of medical point cloud has some limitations and challenges: 1) In the application research of point clouds in the medical field, the first task is to obtain point cloud data that can accurately characterize disease information. At present, point cloud data acquisition methods are relatively simple. In the future, high-quality point cloud imaging equipment can be combined to obtain accurate medical point cloud dataset. In applied research, the first task is to obtain point cloud data that can accurately characterize medical anatomical structure information. Considering that the morphological structure of human tissues and organs is relatively complex, most of the point cloud data of human organs are obtained by reconstructing medical images (such as (computed tomography(CT)) and (magnetic resonance imaging(MRI)). Therefore, such point clouds are sparsely distributed, with noise and errors. Obtaining accurate and dense medical point cloud datasets from medical images is an important subject to be studied. 2) In addition to facing the challenges of sparse reconstruction and data imbalance in point clouds, the difficulty of labeling medical point cloud data sets, the high cost of data integration, and the inevitable subjective labeling errors are the reasons why deep learning algorithms have not been widely used in the field of medical point clouds. The small amount of sample data and the imbalance of sample data may affect the accuracy of disease diagnosis. In the future, methods such as semi-supervised learning, active learning, and generating samples against the generated network can be used to improve learning accuracy. 3) A large number of medical point clouds are generated in the hospital but are not used to train and improve the diagnostic model. With the emergence of super-resolution algorithms and point cloud up-sampling networks, the prediction of sparse point clouds to dense point clouds based on medical image reconstruction will be an important means to construct high-quality medical point clouds. In the future, with the improvement of the quality and quantity of medical point cloud data sets, the research of medical point cloud processing algorithms will attract more researchers. Current research only focuses on model training and evaluation of specific data sets, which makes the universality of these algorithms challenging. The application and development of point cloud in medical images are currently a hot topic. Although point clouds have gradually penetrated into a considerable number of fields in medicine, the application of the current frontier methods of point cloud processing in medical point clouds is still insufficient. Research work using medical point cloud still needs to invest more research energy and attention.关键词:point clouds;medical applications;deep learning;segmentation;registration213|80|8更新时间:2024-05-07

摘要:A point cloud refers to a set of data points in a three-dimensional space. Each point is composed of a three-dimensional coordinate, with object's reflectivity, reflection intensity, distance from the point to the center of the scanner, horizontal angle, vertical angle, and deviation value. The point cloud is obtained by two methods, one is obtained by scanning the target object by the three-dimensional sensing device, such as LiDAR sensor and RGB-D camera, and the other is obtained by reconstruction from two-dimensional medical images. The point cloud can express the geometric position, shape, and scale of the target object. The point cloud has a wide range of applications in areas such as autonomous driving, robots, surveillance systems, surveying and mapping geography, virtual reality, and medicine, which has achieved remarkable results. Many researchers in the field of medical imaging have also devoted themselves to the research of medical image point cloud processing algorithms. Point cloud can intuitively simulate the three-dimensional structure of biological organs and tissues. With important application value in clinical medicine, the classification, segmentation, registration, and other tasks based on medical point cloud can help doctors make accurate diagnosis and treatment. The point cloud-based medical diagnosis has advantages and has the potential for future application in clinical screening diagnosis, personalized medical device-assisted design, and 3D printing. At this stage, deep learning algorithms have achieved remarkable results in tasks such as target detection, segmentation, and recognition. Deep learning algorithms gradually become efficient and popular in tasks such as target detection, segmentation, and recognition. Therefore, increasing point cloud processing algorithms are gradually extended from traditional algorithms to deep learning algorithms. This article reviews the research and progress of point cloud algorithms in the medical field. This review aims to summarize the current point cloud methods used in the medical field and focuses on 1) the characteristics, acquisition methods, and data conversion methods of medical point clouds; 2) traditional algorithms and deep learning algorithms in medical point cloud segmentation; and 3) the definition and significance of medical point cloud registration tasks. This review is based on feature or non-feature registration method. Finally, although the point cloud method has been applied in the medical field, the current application of the point cloud-based frontier method in the medical point cloud is still insufficient. Applying state-of-the-art algorithms to medical point clouds also requires continuous in-depth exploration and research. To date, medical point clouds have been used to assist doctors in completing some diagnostic tasks, but they are still in the process of continuous development and cannot replace the role of clinicians. The clinical application of medical point cloud has some limitations and challenges: 1) In the application research of point clouds in the medical field, the first task is to obtain point cloud data that can accurately characterize disease information. At present, point cloud data acquisition methods are relatively simple. In the future, high-quality point cloud imaging equipment can be combined to obtain accurate medical point cloud dataset. In applied research, the first task is to obtain point cloud data that can accurately characterize medical anatomical structure information. Considering that the morphological structure of human tissues and organs is relatively complex, most of the point cloud data of human organs are obtained by reconstructing medical images (such as (computed tomography(CT)) and (magnetic resonance imaging(MRI)). Therefore, such point clouds are sparsely distributed, with noise and errors. Obtaining accurate and dense medical point cloud datasets from medical images is an important subject to be studied. 2) In addition to facing the challenges of sparse reconstruction and data imbalance in point clouds, the difficulty of labeling medical point cloud data sets, the high cost of data integration, and the inevitable subjective labeling errors are the reasons why deep learning algorithms have not been widely used in the field of medical point clouds. The small amount of sample data and the imbalance of sample data may affect the accuracy of disease diagnosis. In the future, methods such as semi-supervised learning, active learning, and generating samples against the generated network can be used to improve learning accuracy. 3) A large number of medical point clouds are generated in the hospital but are not used to train and improve the diagnostic model. With the emergence of super-resolution algorithms and point cloud up-sampling networks, the prediction of sparse point clouds to dense point clouds based on medical image reconstruction will be an important means to construct high-quality medical point clouds. In the future, with the improvement of the quality and quantity of medical point cloud data sets, the research of medical point cloud processing algorithms will attract more researchers. Current research only focuses on model training and evaluation of specific data sets, which makes the universality of these algorithms challenging. The application and development of point cloud in medical images are currently a hot topic. Although point clouds have gradually penetrated into a considerable number of fields in medicine, the application of the current frontier methods of point cloud processing in medical point clouds is still insufficient. Research work using medical point cloud still needs to invest more research energy and attention.关键词:point clouds;medical applications;deep learning;segmentation;registration213|80|8更新时间:2024-05-07 -

摘要:Hepatocellular carcinoma is one of the most common malignant tumors of the digestive system in clinic. It ranks third after gastric cancer and lung cancer in the death ranking of malignant tumors. Computed tomography (CT) can well display the organs composed of soft tissue and show the lesions in the abdominal image. It has become a typical method for the diagnosis and treatment of liver diseases. It produces high-quality liver imaging that can provide comprehensive information for the diagnosis and treatment of liver tumors, alleviate the heavy workload of doctors, and have an important value for subsequent diagnosis and treatment. Segmentation of CT images of liver tumors is a crucial step in the diagnosis of liver cancer. In accordance with the maximum diameter, volume, and number of liver lesions, medical workers can give patients accurate diagnosis results and treatment plans conveniently and rapidly. However, the manual three-dimensional segmentation of liver tumors is time consuming and requires substantial work. Therefore, a method for automatically segmenting liver tumors is urgently needed. Many challenges occur in the segmentation of liver tumors. First, the CT image of a liver tumor shows the cross section of the human body, and the contrast of the liver and liver tumor tissue is inconsiderably different from that of the surrounding adjacent tissues (such as the stomach, pancreas, and heart). The segmentation by using grayscale differences is difficult. Second, the individual differences of patients result in diverse sizes and shapes of liver tumors. Third, CT images are susceptible to various external factors, such as noise, partial volume effects, and magnetic field bias. The interference of the shift makes the image blurry. Dealing with the effects of these factors in a timely manner is a great challenge for medical imaging researchers. Accurate segmentation can ensure that clinicians can make wise surgical treatment plans. With the rise of big data and artificial intelligence in recent years, assisted diagnosis of liver cancer based on deep learning has gradually become a popular research topic. Its combination with medicine can realize and predict the condition and assist diagnosis, which has great clinical significance. Segmentation methods for liver tumor CT images based on deep learning have also attracted wide attention in the past few years. From relevant literature in the field of liver tumor image segmentation, this paper mainly summarizes several commonly used segmentation methods for current liver tumor CT images based on deep learning, aiming to provide convenience to related researchers. We comprehensively summarize and analyze the deep learning methods for liver tumor CT images from three aspects: datasets, evaluation indicators and algorithms. First, we introduce common databases of liver tumors and analyze and compare them in terms of year, resolution, number of cases, slice thickness, pixel size, and voxel size to compare the segmentation methods for emerging liver tumors objectively. Second, several important evaluation indicators, such as Dice, relative volume difference, and volumetric overlap error, are also briefly introduced, analyzed, and compared to evaluate the effectiveness of each algorithm in the accuracy of liver tumor segmentation. On the basis of the previous work, we divide the deep learning segmentation methods for CT images of liver tumors into three categories, namely, liver tumor segmentation methods based on fully convolutional network (FCN), U-Net, and generative adversarial network (GAN). The segmentation methods based on FCN can be further divided into two- and three-dimensional methods in accordance with the dimension of the convolution kernel. The segmentation methods based on U-Net are divided into three subcategories, which are methods based on single network, methods based on multinetwork, and methods combined with traditional methods. Similarly, the segmentation methods based on GAN are divided into three subcategories, which are based on network architecture improvements, generator-based improvements, and other methods. The basic ideas, network architecture forms, improvement schemes, advantages, and disadvantages of various methods are emphasized, and the performance of these methods on typical datasets is compared. Lastly, the advantages, disadvantages, and application scope of the three methods are summarized and compared. The future research trends of liver tumor deep learning segmentation methods are analyzed. 1) The use of three-dimensional neural networks and network deepening is a future research direction in this field. 2) The use of multimodal liver images for segmentation and the combination of multiple different deep neural networks to extract deep information of images for improving the accuracy of liver tumor segmentation are also main research directions in this field. 3) To overcome the problem of lack or unavailability of data, some researchers have shifted the supervised field to a semi-supervised or unsupervised field. For example, GAN is combined with other higher-performance networks. This situation can be further studied in the future. In summary, accurate segmentation of liver tumors is a necessary step in liver disease diagnosis, surgical planning, and postoperative evaluation. Deep learning is superior to traditional segmentation methods when segmenting liver tumors, and the obtained images have higher sensitivity and specificity. This study hopes that clinicians can intuitively and clearly observe the anatomical structure of normal and diseased tissues through the increasingly mature liver tumor segmentation technologies. It provides a scientific basis for clinical diagnosis, surgical procedures, and biomedical research. The research and development of medical image segmentation technologies play an important role in the reform of the medical field and have great research value and significance.关键词:computed tomography(CT);liver tumor;deep learning;medical image segmentation;fully convolutional network(FCN);U-Net;generative adversarial network(GAN)235|78|9更新时间:2024-05-07

摘要:Hepatocellular carcinoma is one of the most common malignant tumors of the digestive system in clinic. It ranks third after gastric cancer and lung cancer in the death ranking of malignant tumors. Computed tomography (CT) can well display the organs composed of soft tissue and show the lesions in the abdominal image. It has become a typical method for the diagnosis and treatment of liver diseases. It produces high-quality liver imaging that can provide comprehensive information for the diagnosis and treatment of liver tumors, alleviate the heavy workload of doctors, and have an important value for subsequent diagnosis and treatment. Segmentation of CT images of liver tumors is a crucial step in the diagnosis of liver cancer. In accordance with the maximum diameter, volume, and number of liver lesions, medical workers can give patients accurate diagnosis results and treatment plans conveniently and rapidly. However, the manual three-dimensional segmentation of liver tumors is time consuming and requires substantial work. Therefore, a method for automatically segmenting liver tumors is urgently needed. Many challenges occur in the segmentation of liver tumors. First, the CT image of a liver tumor shows the cross section of the human body, and the contrast of the liver and liver tumor tissue is inconsiderably different from that of the surrounding adjacent tissues (such as the stomach, pancreas, and heart). The segmentation by using grayscale differences is difficult. Second, the individual differences of patients result in diverse sizes and shapes of liver tumors. Third, CT images are susceptible to various external factors, such as noise, partial volume effects, and magnetic field bias. The interference of the shift makes the image blurry. Dealing with the effects of these factors in a timely manner is a great challenge for medical imaging researchers. Accurate segmentation can ensure that clinicians can make wise surgical treatment plans. With the rise of big data and artificial intelligence in recent years, assisted diagnosis of liver cancer based on deep learning has gradually become a popular research topic. Its combination with medicine can realize and predict the condition and assist diagnosis, which has great clinical significance. Segmentation methods for liver tumor CT images based on deep learning have also attracted wide attention in the past few years. From relevant literature in the field of liver tumor image segmentation, this paper mainly summarizes several commonly used segmentation methods for current liver tumor CT images based on deep learning, aiming to provide convenience to related researchers. We comprehensively summarize and analyze the deep learning methods for liver tumor CT images from three aspects: datasets, evaluation indicators and algorithms. First, we introduce common databases of liver tumors and analyze and compare them in terms of year, resolution, number of cases, slice thickness, pixel size, and voxel size to compare the segmentation methods for emerging liver tumors objectively. Second, several important evaluation indicators, such as Dice, relative volume difference, and volumetric overlap error, are also briefly introduced, analyzed, and compared to evaluate the effectiveness of each algorithm in the accuracy of liver tumor segmentation. On the basis of the previous work, we divide the deep learning segmentation methods for CT images of liver tumors into three categories, namely, liver tumor segmentation methods based on fully convolutional network (FCN), U-Net, and generative adversarial network (GAN). The segmentation methods based on FCN can be further divided into two- and three-dimensional methods in accordance with the dimension of the convolution kernel. The segmentation methods based on U-Net are divided into three subcategories, which are methods based on single network, methods based on multinetwork, and methods combined with traditional methods. Similarly, the segmentation methods based on GAN are divided into three subcategories, which are based on network architecture improvements, generator-based improvements, and other methods. The basic ideas, network architecture forms, improvement schemes, advantages, and disadvantages of various methods are emphasized, and the performance of these methods on typical datasets is compared. Lastly, the advantages, disadvantages, and application scope of the three methods are summarized and compared. The future research trends of liver tumor deep learning segmentation methods are analyzed. 1) The use of three-dimensional neural networks and network deepening is a future research direction in this field. 2) The use of multimodal liver images for segmentation and the combination of multiple different deep neural networks to extract deep information of images for improving the accuracy of liver tumor segmentation are also main research directions in this field. 3) To overcome the problem of lack or unavailability of data, some researchers have shifted the supervised field to a semi-supervised or unsupervised field. For example, GAN is combined with other higher-performance networks. This situation can be further studied in the future. In summary, accurate segmentation of liver tumors is a necessary step in liver disease diagnosis, surgical planning, and postoperative evaluation. Deep learning is superior to traditional segmentation methods when segmenting liver tumors, and the obtained images have higher sensitivity and specificity. This study hopes that clinicians can intuitively and clearly observe the anatomical structure of normal and diseased tissues through the increasingly mature liver tumor segmentation technologies. It provides a scientific basis for clinical diagnosis, surgical procedures, and biomedical research. The research and development of medical image segmentation technologies play an important role in the reform of the medical field and have great research value and significance.关键词:computed tomography(CT);liver tumor;deep learning;medical image segmentation;fully convolutional network(FCN);U-Net;generative adversarial network(GAN)235|78|9更新时间:2024-05-07 - 摘要:Schizophrenia is a severe psychiatric disorder with abnormalities in brain structure and function. The early diagnosis and timely intervention can significantly improve the quality of life. However, no effective methods are available for the diagnosis and treatment of schizophrenia yet due to the complex pathology of this disease. Recently, deep learning methods have been widely used in medical imaging and have shown their great feature representation capability. The performance of deep learning methods has been demonstrated to be superior to that of traditional machine-learning methods. Researchers have attempted to use deep learning to assist the diagnosis of schizophrenia on the basis of magnetic resonance imaging (MRI) data. However, few surveys have systematically summarized the application of deep learning in the MRI-based diagnosis of schizophrenia. Therefore, MRI-based deep learning methods for the classification of schizophrenia patients and healthy controls were reviewed in this paper. First, commonly used deep learning models in the field of MRI-based diagnosis of schizophrenia were introduced. These models were classified into five categories: feed-forward neural network, recurrent neural network, convolutional neural network, unsupervised feature-learning models, and other deep models. The basic idea of the model in each category was introduced. Specifically, perceptron and its deeper expanding model, called multilayer perceptron, were briefly introduced in the review of feed-forward neural network. The most famous recurrent neural network model, named long short-term memory, was described. For the convolutional neural network, the common model components were reviewed. Unsupervised feature-learning models were further divided into two subcategories: stacked auto-encoder and deep belief network. The basic components of the model in each subcategory were described. We also reviewed two other deep models that cannot be classified into the categories described above, namely, capsule network and multi-grained cascade forest. Examples of deep learning studies used in the diagnosis of schizophrenia in each category were included to show the effectiveness of these models. Second, the deep learning methods for schizophrenia classification were divided on the basis of the data modality of MRI and reviewed in accordance with the following categories: structural MRI (sMRI)-, functional MRI (fMRI)-, and multimodal-based methods. Papers using MRI-based deep learning models for schizophrenia diagnosis were also summarized in a table. For sMRI-based methods, studies using 2D and 3D models and different methods for solving the requirement of large sample size were discussed. Resting-state fMRI- and task-fMRI-based methods were included in the fMRI-based category. In addition to different methods for solving limited sample size, studies using regional- or voxel-based functional activity features and functional connectivity-based features were introduced in this category. Some multimodal-based methods, which show better performance than most of unimodal-based approaches, were also described. Lastly, on the basis of existing studies, we concluded the main challenges and obstacles in the real application of these models in schizophrenia diagnosis. The first one refers to sample-related problems, including limited sample size and imbalanced sample class distribution. The second one is the lack of interpretability in deep learning models, which is a critical limitation for the application of deep learning models in real clinical condition. The last one is related to problems in multimodality analyses. Missing modality and the lack of effective multimodal feature fusion strategies would be the key problems to be solved. Future research directions lie in multisite and longitudinal data analyses and the construction of personalized precise models for the diagnosis of different subtypes of schizophrenia. Multisite data analyses would lead to a robust model and enable effective evaluation for deep learning models before the translation to clinical applications. Longitudinal data would provide the dynamically developmental patterns of the disease, leading to enhanced model performance. Personalized precise models are urgently needed to improve the accuracy of computer-aided diagnostic systems in consideration of the broad symptoms of schizophrenia and existing biased methods. In summary, this article reviewed the application of MRI-based deep learning models in the diagnosis of schizophrenia and provided guidance for future studies in this field.关键词:deep learning;schizophrenia;magnetic resonance imaging(MRI);diagnosis;classification68|92|0更新时间:2024-05-07

-