最新刊期

卷 25 , 期 1 , 2020

- 摘要:Deep learning models have been widely used in multimedia signal processing. They considerably improve the performance of signal processing tasks by introducing nonlinearities but lack analytical formulation of optimum and optimality conditions due to their black-box architectures. In recent years, analyzing the optimal formulation and approximating the deep learning models based on classical signal processing theory have been popular for multimedia, that is, transform/basis projection-based models. This paper presents and analyzes the mathematical models and their theoretical bounds for high-dimensional nonlinear and irregular structured methods based on the fundamental theories of signal processing. The main content includes structured sparse representation, frame-based deep networks, multilayer convolutional sparse coding, and graph signal processing. We begin with sparse representation models based on group and hierarchical sparsities with their optimization methods and subsequently analyze the deep/multilayer networks developed using semi-discrete frames and convolutional sparse coding. We also present graph signal processing models by extending classical signal processing to the non-Euclidean geometry. Recent advances in these topics achieved by domestic and foreign researchers are compared and discussed. Structured sparse representation introduces the mixed norms to formulate a group Lasso problem for structural information, which can be solved using proximal method or network flow optimization. Considering that structured sparse representation is still based on the linear projection onto dictionary atoms, frame-based deep networks are developed to extend the semi-discrete frames in multiscale geometric analysis. They inherit the scale and directional decomposition led by frame theory and introduce nonlinearities to guarantee deformation stability. Inspired by scattering networks, multilayer convolutional sparse coding introduces combined regularization into sparse representation to fit max pooling operation. Sparse representation of irregular multiscale structures can be achieved with the trained overcomplete dictionary in a recursive manner. Graph signal processing extends conventional signal processing into non-Euclidean spaces. When integrated with convolutional neural networks, graph neural networks learn complex relational networks and are desirable for data-driven large-scale high-dimensional irregular signal processing. This paper forecasts the future work of mathematical theories and models for multimedia signal processing. This research is useful for developing a generalized graph signal processing model for large-scale irregular multimedia signals by analyzing the mathematical properties and linkages of conventional signal processing and graph spectral model.关键词:structured sparse representation;frame-based deep convolutional network;multi-layer convolutional sparse coding;graph signal processing;multimedia signal processing25|33|1更新时间:2024-05-07

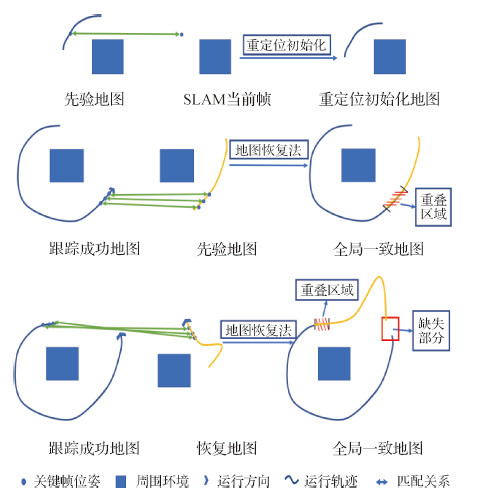

- 摘要:Traditional visual sensing is based on RGB optical and video imaging data and has achieved great success with the development of computer vision. However, traditional RGB optical imaging has limitations in spectral characterization, sampling effectiveness, measurement accuracy, and operating conditions. The new mechanism of visual sensing and new data processing technology have been developed rapidly recently, bringing considerable opportunities for improving sensing and cognitive capability. The developments are also endowed with important theoretical merits and offer a great chance for major application requirements. This report describes the development status and trends on visual sensing, including laser scanning, sonar, new dynamic imaging system, computational imaging, pose sensing, and other related fields. Researches on laser scanning are increasingly being conducted. In terms of algorithm developments for point cloud data processing, many domestic organizations and teams have reached international synchronization or leading level. Moreover, the application of point cloud data is more extensively shown by Chinese teams. However, at present, several foreign countries still show considerable advantages in hardware equipment, data acquisition, and pre-processing. In terms of event-based (i.e., dynamic vision sensor, DVS) imaging, domestic teams have focused on target classification, target recognition and tracking, stereo matching, and super resolution, achieving progress and breakthroughs. Hardware design and production technology of DVS are concentrated in foreign research institutes, and almost all these institutes have a research history of about 10 years. Few domestic institutions can independently produce DVS. Generally, although domestic DVS research started relatively late, the development in recent years has been very rapid. Moving target detection and underwater acoustic imaging for small static targets have always been the focus in the field of underwater information technology. Underwater acoustic imaging has the characteristics of military and civil applications. Domestically, high-tech research is mainly supported by civil sectors. For example, synthetic aperture sonar was developed under sustained national support. Substantial breakthroughs, such as in common mechanism, key technologies, and demonstration applications, are difficult to achieve in a short time. Therefore, sustained and stable support guarantees technological breakthroughs and industrialization. Learning-based visual positioning and 3D information processing have made remarkable progress, but many problems remain. In non-cooperative target pose imaging perception, many countries and organizations with advanced technology for space have carried out numerous investigations, and results from some of these endeavors have been successfully applied to space operations in practice. By contrast, visual measurement of non-cooperative targets started late in China. Related programs are under way, such as for rendezvous and docking of space non-cooperative targets and on-orbit service of space robots. However, most of the related investigations remain in the stage of theoretical research and ground experiment verification, and no mature engineering application is available. According to the literature survey, at present, in the field of visual sensing, domestic institutions and teams have made substantial progress in data processing and application. However, lags are observable, especially in the development of related hardware. Laser scanning imaging has a large amount of data and abundant information but lacks semantic information. Research has emerged in the frontiers of unmanned driving, virtual reality, and augmented reality. Wide applications are expected in the future, such as in the minimal description of massive 3D point cloud data and cross-dimensional structure description. DVS has a research history of over 10 years and has progressed in SLAM, tracking, reconfiguration, and other fields. The most evident advantages of DVS are in capturing high-speed moving objects and in high-efficiency and low-cost processing. Moreover, the real-time background filtering function of DVS has great prospects in unmanned driving and trajectory analysis, which will attract much attention for wide applications. The development of small-target detection technology in deep-sea area can be used in deep-sea resource development, protection of marine rights, search and rescue, and military applications. However, inadequacy in the sonar equipment for deep-sea small-target detection seriously restricts applications. Two new system imaging sonars, namely, high-speed imaging sonar based on frequency division multiple-input multiple-output and multi-static imaging sonar, are expected to improve the detection rate and recognition rate for underwater small targets. Robustness is critical for visual positioning and 3D information processing. Intelligent methods can solve the problems of visual positioning and 3D information processing. At present, the pose perception algorithm still shows low efficiency, is imperfect, and requires further investigation. Space operations have prerequisites, including relative pose of space non-cooperative target, reconstruction of 3D structure of target, and recognition of feature parts of target. The model information of the target itself can be totally or partly known. Thus, making full use of the priori information of the target model can greatly help solve the target position. Pose tracking based on 3D model to obtain the initial pose of a target is expected to be a future hotspot. In addition, in the tide of artificial intelligence, how to combine it with pose perception is worthy of exploration. Object position and attitude perception based on vision system are crucial for promoting the development of future space operation, including space close-range operation scenarios (e.g., target perception, docking, and capture), small autonomous aircraft, ground intelligent vehicles, and mobile robots. The prospects are given in this paper, which may provide a reference for researchers of related fields.关键词:visual sensing;laser scanning;synthetic aperture sonar;new dynamic imaging system;computational imaging;pose sensing61|54|2更新时间:2024-05-07

Review

-

摘要:ObjectiveImage compression, which aims to remove redundant information in an image, is a popular issue in image processing and computer vision. In recent years, image compression based on deep learning has attracted much attention of scholars in the field of image processing. Image compression using convolutional neural networks (CNNs) can be roughly divided into two categories. One is the image compression method based on the end-to-end convolutional network. The other category is CNNs combined with the traditional image compression method, which uses CNNs to deeply perceive the image content and obtains salient regions. High-quality coding is then applied to the salient regions, and lower-quality coding is used for non-significant regions to improve the visual quality of the compressed reconstructed images. However, in the latter method, the quality of the reconstructed image is often considerably affected because there is no effective perception of the image content information. In view of the effectiveness of image content perception, the influence of scale on image content detection is disregarded in several conventionally proposed salient region detection methods. Furthermore, the difference in size between the input image and the output saliency map is not considered, which limits the model's perception domain to the image. Consequently, several salient objects in the original image cannot be effectively perceived, which affects the reconstructed image's quality in the subsequent compression. A novel image compression method based on multi-scale depth feature salient region (MS-DFSR) detection is proposed in the current study to deal with this problem.MethodImproved CNNs are used to detect the depth features of multi-scale images. For multi-scale images, with the help of the scale space concept, a plurality of saliency maps is generated by inputting an image into the MS-DFSR model using a pyramid structure to complete the detection of multi-scale saliency regions. Scale selection, in the presence of an extremely large scale, causes the resulting salient area to become too divergent and loses salient meaning. Therefore, two scales are used in this work. The first one is the standard output scale of the network, and the second scale is the larger scale adopted in this work. The latter scale is used to effectively detect multiple salient objects in an image and perceive the image content effectively. For depth features' salient region detection, we replace the fully connected layer and the fourth max pooling layer with a global average pooling layer and an avg pooling layer in order to retain spatial location information on multiple salient objects in an image as much as possible. Then, the salient areas of different scales that are detected by MS-DFSR are obtained. To increase the perceived domain of an image and the perceived image content effectively, the size of the salient region map is adaptively adjusted according to the size of the input image by considering the difference between the input and output salient image sizes. Meanwhile, a Gaussian function is introduced to filter the salient region, retain the original image content information, and obtain a multi-scale fusion saliency region map. Finally, we complete image compression and reconstruction by combining the obtained multi-scale saliency region map with image coding methods. To protect the image's salient content and improve the reconstructed image's quality, the salient regions of an image are compressed using near-lossless and lossy compression methods, such as joint photographic experts' group (JPEG) and set partitioning in hierarchical trees (SPIHT), on the non-salient regions.ResultWe compare our model with three traditional compression methods, namely, JPEG, SPIHT, and run-length encoding (RLE) compression techniques. The experimental datasets include two public datasets, namely, Kodak PhotoCD and Pascal Voc. The quantitative evaluation metrics (higher is better) include the peak signal-to-noise ratio (PSNR), the structural similarity index measure (SSIM), and a modified PSNR metric based on HVS (PSNR-HVS). Experiment results show that our model outperforms all the other traditional methods on the Kodak PhotoCD and Pascal Voc datasets. The saliency map shows that our model can produce results that cover multiple salient objects and improve the effective perception of image content. We compare the image compression method based on MS-DFSR detection with the image compression method based on single-scale depth feature salient region (SS-DFSR) detection, and the validity of the MS-DFSR detection model is verified. Comparative experiments demonstrate that the proposed compression method improves image compression quality. The quality of the image reconstructed using the proposed compression method is higher than that using the JPEG image compression method. When the code rate is approximately 0.39 bpp on the Kodak PhotoCD dataset, PSNR is improved by 2.23 dB, SSIM by 0.024, and PSNR-HVS by 2.07. On the Pascal Voc dataset, PSNR, SSIM, and PSNR-HVS increase by 1.63 dB, 0.039, and 1.57, respectively. At the same time, when MS-DFSR is combined with SPIHT and RLE compression technology on the Kodak PhotoCD dataset, PSNR is increased by 1.85 dB and 1.98 dB, respectively. SSIM is improved by 0.006 and 0.023, respectively, and PSNR-HVS is increaseal by 1.90 and 1.88, respectively.ConclusionThe proposed image compression method using multi-scale depth features exhibits better performance than traditional image compression methods because the proposed method effectively reduces image content loss by improving the effectiveness of image content perception during the image compression process. Consequently, the quality of the reconstructed image can be improved significantly.关键词:image compression;multi-scale depth features;saliency region detection;convolutional neural networks (CNNs);peak signal to noise ratio (PSNR);structural similarity (SSIM)19|21|2更新时间:2024-05-07

摘要:ObjectiveImage compression, which aims to remove redundant information in an image, is a popular issue in image processing and computer vision. In recent years, image compression based on deep learning has attracted much attention of scholars in the field of image processing. Image compression using convolutional neural networks (CNNs) can be roughly divided into two categories. One is the image compression method based on the end-to-end convolutional network. The other category is CNNs combined with the traditional image compression method, which uses CNNs to deeply perceive the image content and obtains salient regions. High-quality coding is then applied to the salient regions, and lower-quality coding is used for non-significant regions to improve the visual quality of the compressed reconstructed images. However, in the latter method, the quality of the reconstructed image is often considerably affected because there is no effective perception of the image content information. In view of the effectiveness of image content perception, the influence of scale on image content detection is disregarded in several conventionally proposed salient region detection methods. Furthermore, the difference in size between the input image and the output saliency map is not considered, which limits the model's perception domain to the image. Consequently, several salient objects in the original image cannot be effectively perceived, which affects the reconstructed image's quality in the subsequent compression. A novel image compression method based on multi-scale depth feature salient region (MS-DFSR) detection is proposed in the current study to deal with this problem.MethodImproved CNNs are used to detect the depth features of multi-scale images. For multi-scale images, with the help of the scale space concept, a plurality of saliency maps is generated by inputting an image into the MS-DFSR model using a pyramid structure to complete the detection of multi-scale saliency regions. Scale selection, in the presence of an extremely large scale, causes the resulting salient area to become too divergent and loses salient meaning. Therefore, two scales are used in this work. The first one is the standard output scale of the network, and the second scale is the larger scale adopted in this work. The latter scale is used to effectively detect multiple salient objects in an image and perceive the image content effectively. For depth features' salient region detection, we replace the fully connected layer and the fourth max pooling layer with a global average pooling layer and an avg pooling layer in order to retain spatial location information on multiple salient objects in an image as much as possible. Then, the salient areas of different scales that are detected by MS-DFSR are obtained. To increase the perceived domain of an image and the perceived image content effectively, the size of the salient region map is adaptively adjusted according to the size of the input image by considering the difference between the input and output salient image sizes. Meanwhile, a Gaussian function is introduced to filter the salient region, retain the original image content information, and obtain a multi-scale fusion saliency region map. Finally, we complete image compression and reconstruction by combining the obtained multi-scale saliency region map with image coding methods. To protect the image's salient content and improve the reconstructed image's quality, the salient regions of an image are compressed using near-lossless and lossy compression methods, such as joint photographic experts' group (JPEG) and set partitioning in hierarchical trees (SPIHT), on the non-salient regions.ResultWe compare our model with three traditional compression methods, namely, JPEG, SPIHT, and run-length encoding (RLE) compression techniques. The experimental datasets include two public datasets, namely, Kodak PhotoCD and Pascal Voc. The quantitative evaluation metrics (higher is better) include the peak signal-to-noise ratio (PSNR), the structural similarity index measure (SSIM), and a modified PSNR metric based on HVS (PSNR-HVS). Experiment results show that our model outperforms all the other traditional methods on the Kodak PhotoCD and Pascal Voc datasets. The saliency map shows that our model can produce results that cover multiple salient objects and improve the effective perception of image content. We compare the image compression method based on MS-DFSR detection with the image compression method based on single-scale depth feature salient region (SS-DFSR) detection, and the validity of the MS-DFSR detection model is verified. Comparative experiments demonstrate that the proposed compression method improves image compression quality. The quality of the image reconstructed using the proposed compression method is higher than that using the JPEG image compression method. When the code rate is approximately 0.39 bpp on the Kodak PhotoCD dataset, PSNR is improved by 2.23 dB, SSIM by 0.024, and PSNR-HVS by 2.07. On the Pascal Voc dataset, PSNR, SSIM, and PSNR-HVS increase by 1.63 dB, 0.039, and 1.57, respectively. At the same time, when MS-DFSR is combined with SPIHT and RLE compression technology on the Kodak PhotoCD dataset, PSNR is increased by 1.85 dB and 1.98 dB, respectively. SSIM is improved by 0.006 and 0.023, respectively, and PSNR-HVS is increaseal by 1.90 and 1.88, respectively.ConclusionThe proposed image compression method using multi-scale depth features exhibits better performance than traditional image compression methods because the proposed method effectively reduces image content loss by improving the effectiveness of image content perception during the image compression process. Consequently, the quality of the reconstructed image can be improved significantly.关键词:image compression;multi-scale depth features;saliency region detection;convolutional neural networks (CNNs);peak signal to noise ratio (PSNR);structural similarity (SSIM)19|21|2更新时间:2024-05-07 -

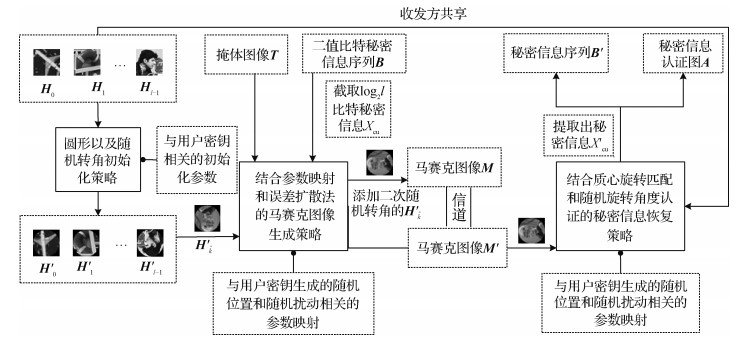

摘要:ObjectiveTraditional search-based coverless information hiding usually searches natural and unmodified carriers that contain appropriate secret vectors to transmit secret information. However, for natural images and texts, the ability to express irrelevant secret information is low. Thus, the hidden capacity is low. Search-based coverless information hiding cannot avoid the dense transmission of massive carriers that attract attacks easily and results in distorted secret information. Texture-based coverless information hiding usually divides sample texture image into several blocks, creating the mapping relationship between texture blocks and secret segments. Although such methods generated stego texture images by sample texture synthesis, they can only generate simple unnatural texture images. Mosaic-based information hiding can generate meaningful stego images, but in essence, such methods are modification-based information hiding, and they inevitably leave modification traces in carriers and have no authentication strategy. Therefore, verifying the authenticity of recovered secret information is impossible. Moreover, mosaic-based information hiding typically uses the LSB(least significant bit)-based modification embedding strategy for a large embedding capacity to embed the transformation parameters. The LSB-based embedding strategy is sensitive to attacks, and the embedded information is easily lost when suffering attacks. To address these problems, this study proposed a generative camouflage method combined with block rotation and photo mosaic.MethodIn the embedding, the proposed method transformed grayscale images into circle images with pseudo-random angles by user keys to construct photo mosaics and then generated random integer coordinate sequence to determine the hidden positions of secret bit strings. For each hidden position, a circle image related to user key and position was placed to express secret bits, and then the random angle related to user key and position was added for authentication. For each non-hidden position, a circle image similar to cover image pixel was set to conceal the secret image, and the added random perturbation angle precluded distinguishing hidden and non-hidden positions. The deviation caused by the placement of the circle image was scattered around unprocessed pixels by the error diffusion method to form the stego photo mosaic image. In the extraction, coordinates for all secret bits in the stego photo mosaic image were obtained by user key, and circle images expressing secret bits were fetched. The centroid of each circle image that expresses secret bits was normalized into the right half-axis and then identified by the strategy of centroid rotation matching. Each circle image index was found to extract the hidden secret bits by the minimum quadratic difference distance. To authenticate the correctness of each circle image fetched secret bits, the factual and theory angles were compared, where the minimum quadratic difference distance of the factual angle, and the theory angle was computed by the user key, and the fetched secret bits. If the factual angle equals the theory angle, then the extracted secret bits are correctly recovered.ResultThe proposed method regards each circle image as a hidden unit and only involves the rotation of circle images without any modification. The added random rotation angles also provide the security assurance for the specific user to extract secret information and conduct angle authentication. Only the user with the correct user key can eliminate the influence of random rotation angles and extract the correct secret information with its corresponding authentication information. Moreover, the proposed method uses photo mosaic to hide secret information. The method is robust in anti-attacks and has good authentication accuracy. At the same time, the proposed strategy relies entirely on the user's key and has high security. The experiments show that secret information can be completely recovered only by the correct key. Whether one or more user key parameters are changed, the changed user key parameters lead to the inaccurate extraction of secret information. In addition, in case of JPEG compression attacks with a quality factor of 50~80 and random rotation angle attacks, the embedded secret information can be completely recovered. Even with salt-and-pepper noise attacks of 8%~20%, the error rate(ER) of extracted information remains approximately 5%. The authentication success rates of the restored information are all above 80%.ConclusionThe proposed method uses randomly placed circle images to express secret information without any modification. The method can use photo mosaic to generate meaningful hidden carrier images and avoid the high cost of database creation and searching of the search-based coverless information hiding. The proposed method combines the parameter mapping and error diffusion mosaic image generation strategy to place stego circle images in their corresponding positions and then generate the stego mosaic image, avoiding the dense transmission of massive carriers in search-based coverless information hiding. Compared with the texture-based coverless information hiding, the proposed method can generate meaningful stego photo mosaic images, avoiding the generation of meaningless texture images in texture-based coverless information hiding. Even during attacks, each circle image expressed secret bits and its placement angle are not easily lost. The proposed method can extract secret information by centroid rotation matching and random rotation angle strategy based on user keys and improve the accuracy of secret information recognition.关键词:photo mosaic;coverless;generative hiding;centroid rotation matching;error diffusion;random angular authentication118|49|2更新时间:2024-05-07

摘要:ObjectiveTraditional search-based coverless information hiding usually searches natural and unmodified carriers that contain appropriate secret vectors to transmit secret information. However, for natural images and texts, the ability to express irrelevant secret information is low. Thus, the hidden capacity is low. Search-based coverless information hiding cannot avoid the dense transmission of massive carriers that attract attacks easily and results in distorted secret information. Texture-based coverless information hiding usually divides sample texture image into several blocks, creating the mapping relationship between texture blocks and secret segments. Although such methods generated stego texture images by sample texture synthesis, they can only generate simple unnatural texture images. Mosaic-based information hiding can generate meaningful stego images, but in essence, such methods are modification-based information hiding, and they inevitably leave modification traces in carriers and have no authentication strategy. Therefore, verifying the authenticity of recovered secret information is impossible. Moreover, mosaic-based information hiding typically uses the LSB(least significant bit)-based modification embedding strategy for a large embedding capacity to embed the transformation parameters. The LSB-based embedding strategy is sensitive to attacks, and the embedded information is easily lost when suffering attacks. To address these problems, this study proposed a generative camouflage method combined with block rotation and photo mosaic.MethodIn the embedding, the proposed method transformed grayscale images into circle images with pseudo-random angles by user keys to construct photo mosaics and then generated random integer coordinate sequence to determine the hidden positions of secret bit strings. For each hidden position, a circle image related to user key and position was placed to express secret bits, and then the random angle related to user key and position was added for authentication. For each non-hidden position, a circle image similar to cover image pixel was set to conceal the secret image, and the added random perturbation angle precluded distinguishing hidden and non-hidden positions. The deviation caused by the placement of the circle image was scattered around unprocessed pixels by the error diffusion method to form the stego photo mosaic image. In the extraction, coordinates for all secret bits in the stego photo mosaic image were obtained by user key, and circle images expressing secret bits were fetched. The centroid of each circle image that expresses secret bits was normalized into the right half-axis and then identified by the strategy of centroid rotation matching. Each circle image index was found to extract the hidden secret bits by the minimum quadratic difference distance. To authenticate the correctness of each circle image fetched secret bits, the factual and theory angles were compared, where the minimum quadratic difference distance of the factual angle, and the theory angle was computed by the user key, and the fetched secret bits. If the factual angle equals the theory angle, then the extracted secret bits are correctly recovered.ResultThe proposed method regards each circle image as a hidden unit and only involves the rotation of circle images without any modification. The added random rotation angles also provide the security assurance for the specific user to extract secret information and conduct angle authentication. Only the user with the correct user key can eliminate the influence of random rotation angles and extract the correct secret information with its corresponding authentication information. Moreover, the proposed method uses photo mosaic to hide secret information. The method is robust in anti-attacks and has good authentication accuracy. At the same time, the proposed strategy relies entirely on the user's key and has high security. The experiments show that secret information can be completely recovered only by the correct key. Whether one or more user key parameters are changed, the changed user key parameters lead to the inaccurate extraction of secret information. In addition, in case of JPEG compression attacks with a quality factor of 50~80 and random rotation angle attacks, the embedded secret information can be completely recovered. Even with salt-and-pepper noise attacks of 8%~20%, the error rate(ER) of extracted information remains approximately 5%. The authentication success rates of the restored information are all above 80%.ConclusionThe proposed method uses randomly placed circle images to express secret information without any modification. The method can use photo mosaic to generate meaningful hidden carrier images and avoid the high cost of database creation and searching of the search-based coverless information hiding. The proposed method combines the parameter mapping and error diffusion mosaic image generation strategy to place stego circle images in their corresponding positions and then generate the stego mosaic image, avoiding the dense transmission of massive carriers in search-based coverless information hiding. Compared with the texture-based coverless information hiding, the proposed method can generate meaningful stego photo mosaic images, avoiding the generation of meaningless texture images in texture-based coverless information hiding. Even during attacks, each circle image expressed secret bits and its placement angle are not easily lost. The proposed method can extract secret information by centroid rotation matching and random rotation angle strategy based on user keys and improve the accuracy of secret information recognition.关键词:photo mosaic;coverless;generative hiding;centroid rotation matching;error diffusion;random angular authentication118|49|2更新时间:2024-05-07 -

摘要:ObjectiveIn modern times, individuals and communities have devoted increasing attention to privacy problems. With the development of multidirectional technologies, the digital secret key exposes its insufficient in vulnerable to loss, easy to be stolen and difficult to remember. Although biometrics exhibits great advantages in identity recognition technology due to their uniqueness and stability, they also characterized by inaccuracy due to feature instability. Moreover, the security problem of the biometric template should be urgently addressed. Therefore, the biometric key generation and protection technology, which is a branch of biometric encryption technology, has emerged. This technology combines biometrics and cryptography and retains the properties of the biometrics and the secret key while ensuring the security of the biological data. Although the secret key is the most important factor in any cryptosystem, the security of the biometric data is as critical as the management of the keys for the biometric key. To construct a bio-key that can be used in data transmission and can keep a good balance between the ambiguity of the biometrics and accuracy of the cryptography, this study proposes a data encryption and decryption scheme using fingerprint bio-key combing with time stamp.MethodFirst, all minutiae from the fingerprint of the communication sender are extracted. The fingerprint feature-line set of the communication sender is then generated based on the relative information among the minutiae. A 2D coordinate model is generated and segmented by two segmentation metrics (horizontal and vertical). The size of the segmentation metrics can be adjusted through practical application. The feature lines in the set are individually mapped into the given 2D coordinate model to generate a 2D 0-1 matrix called fingerprint feature-line set matrix. The elements in this matrix are multiplied with a confidential random matrix stored by the sender to obtain a fingerprint bio-key matrix. The fingerprint bio-key is the bit string transformed from the fingerprint bio-key matrix, which is a connection of the individual lines in the matrix. The bit string is also protected by this random matrix in the proposed scheme. Then, a preset number, which is predefined by the two communicators, and time stamp are utilized to generate a 256-bit long SHA256 hash value. The hash value is processed to obtain an auxiliary bit-string, which is as long as the fingerprint bio-key, and do the xor(enclusive OR) operation with the fingerprint bio-key to obtain the auxiliary data, which is transmitted to the receiver and used for the fingerprint bio-key recovery. Finally, the fingerprint bio-key of the communication sender is used to encrypt the plain communication data. The encrypted cipher and auxiliary data, along with the SHA256 hash value of the confidential random matrix, are transmitted to the communication receiver in the final step of the encryption stage. At the decryption stage, the communication receiver should use the same preset number and the time stamp to generate the same auxiliary data to obtain the fingerprint bio-key of the communication sender so that the communication receiver can decrypt the cipher date and obtain the original plain data with the help of the sender's fingerprint bio-key. In addition, the authentication of the communication sender is illustrated. When the receiver requests the identity authentication of the sender, the communication sender should provide the regenerated fingerprint bio-key through the same key generation method and calculate the similarity value between the original and regenerated one. The authentication of the communication sender is successful only if the similarity value is not less than the predefined threshold. Moreover, the receiver should check the SHA256 hash value of the received bio-key, as well as the one provided by the sender, which means that only the two hash values are equal, the identity authentication of the sender is complete.ResultWe simulated the data encryption and decryption interfaces for the proposed scheme. Then, to prove the identity authentication function of the generated fingerprint bio-key, we tested the genuine acceptance rate (GAR) and the FAR (false acceptance rate) of the regenerated fingerprint bio-key for the genuine and impostors based on our fingerprint database. The test data proved that the fingerprint bio-key, which also served as a digital identity, was available and verifiable. In addition, the GAR of the regenerated fingerprint bio-key of the genuine reached 99.8%, whereas that of the impostor is close to 0.2%. Moreover, the proposed scheme can be applied in many different scenarios, such as instant communication and symmetric encryption algorithms (e.g., aclvanced encryption standard (AES) and SM4). The length of the fingerprint bio-key can be adjusted using different segmentation metrics.ConclusionThe proposed scheme utilizes a fingerprint key generation method to generate the unique fingerprint bio-key of the communication sender. In addition, the scheme determines the uniqueness and undeniability of every communication event and implements the vital conception "one secret key, one event" of the fingerprint bio-key recovery using time stamp. The revocability of the fingerprint bio-key was realized with the help of the confidential random matrix, which effectively ensures the security of the fingerprint data. Then, a detailed analysis of the availability and security of the proposed scheme is conducted. The innovations and advantages are also identified. However, these experiments are mainly based on the laboratory fingerprint database, so the influence of different feature extraction methods on our scheme is not investigated. Thus, further research is imminent.关键词:fingerprint bio-key;time stamp;preset number;hash value;random matrix;segmentation metric20|22|0更新时间:2024-05-07

摘要:ObjectiveIn modern times, individuals and communities have devoted increasing attention to privacy problems. With the development of multidirectional technologies, the digital secret key exposes its insufficient in vulnerable to loss, easy to be stolen and difficult to remember. Although biometrics exhibits great advantages in identity recognition technology due to their uniqueness and stability, they also characterized by inaccuracy due to feature instability. Moreover, the security problem of the biometric template should be urgently addressed. Therefore, the biometric key generation and protection technology, which is a branch of biometric encryption technology, has emerged. This technology combines biometrics and cryptography and retains the properties of the biometrics and the secret key while ensuring the security of the biological data. Although the secret key is the most important factor in any cryptosystem, the security of the biometric data is as critical as the management of the keys for the biometric key. To construct a bio-key that can be used in data transmission and can keep a good balance between the ambiguity of the biometrics and accuracy of the cryptography, this study proposes a data encryption and decryption scheme using fingerprint bio-key combing with time stamp.MethodFirst, all minutiae from the fingerprint of the communication sender are extracted. The fingerprint feature-line set of the communication sender is then generated based on the relative information among the minutiae. A 2D coordinate model is generated and segmented by two segmentation metrics (horizontal and vertical). The size of the segmentation metrics can be adjusted through practical application. The feature lines in the set are individually mapped into the given 2D coordinate model to generate a 2D 0-1 matrix called fingerprint feature-line set matrix. The elements in this matrix are multiplied with a confidential random matrix stored by the sender to obtain a fingerprint bio-key matrix. The fingerprint bio-key is the bit string transformed from the fingerprint bio-key matrix, which is a connection of the individual lines in the matrix. The bit string is also protected by this random matrix in the proposed scheme. Then, a preset number, which is predefined by the two communicators, and time stamp are utilized to generate a 256-bit long SHA256 hash value. The hash value is processed to obtain an auxiliary bit-string, which is as long as the fingerprint bio-key, and do the xor(enclusive OR) operation with the fingerprint bio-key to obtain the auxiliary data, which is transmitted to the receiver and used for the fingerprint bio-key recovery. Finally, the fingerprint bio-key of the communication sender is used to encrypt the plain communication data. The encrypted cipher and auxiliary data, along with the SHA256 hash value of the confidential random matrix, are transmitted to the communication receiver in the final step of the encryption stage. At the decryption stage, the communication receiver should use the same preset number and the time stamp to generate the same auxiliary data to obtain the fingerprint bio-key of the communication sender so that the communication receiver can decrypt the cipher date and obtain the original plain data with the help of the sender's fingerprint bio-key. In addition, the authentication of the communication sender is illustrated. When the receiver requests the identity authentication of the sender, the communication sender should provide the regenerated fingerprint bio-key through the same key generation method and calculate the similarity value between the original and regenerated one. The authentication of the communication sender is successful only if the similarity value is not less than the predefined threshold. Moreover, the receiver should check the SHA256 hash value of the received bio-key, as well as the one provided by the sender, which means that only the two hash values are equal, the identity authentication of the sender is complete.ResultWe simulated the data encryption and decryption interfaces for the proposed scheme. Then, to prove the identity authentication function of the generated fingerprint bio-key, we tested the genuine acceptance rate (GAR) and the FAR (false acceptance rate) of the regenerated fingerprint bio-key for the genuine and impostors based on our fingerprint database. The test data proved that the fingerprint bio-key, which also served as a digital identity, was available and verifiable. In addition, the GAR of the regenerated fingerprint bio-key of the genuine reached 99.8%, whereas that of the impostor is close to 0.2%. Moreover, the proposed scheme can be applied in many different scenarios, such as instant communication and symmetric encryption algorithms (e.g., aclvanced encryption standard (AES) and SM4). The length of the fingerprint bio-key can be adjusted using different segmentation metrics.ConclusionThe proposed scheme utilizes a fingerprint key generation method to generate the unique fingerprint bio-key of the communication sender. In addition, the scheme determines the uniqueness and undeniability of every communication event and implements the vital conception "one secret key, one event" of the fingerprint bio-key recovery using time stamp. The revocability of the fingerprint bio-key was realized with the help of the confidential random matrix, which effectively ensures the security of the fingerprint data. Then, a detailed analysis of the availability and security of the proposed scheme is conducted. The innovations and advantages are also identified. However, these experiments are mainly based on the laboratory fingerprint database, so the influence of different feature extraction methods on our scheme is not investigated. Thus, further research is imminent.关键词:fingerprint bio-key;time stamp;preset number;hash value;random matrix;segmentation metric20|22|0更新时间:2024-05-07 -

摘要:ObjectiveAs a powerful image compression method, set partition coding (SPC) method effectively uses the correlation between the wavelet coefficients to obtain a higher data compression ratio, and it has been widely used for all types of image compression. The SPC method uses the idea of successive quantization approximation of the wavelet coefficient set, such as set partition coding system (SPACS), which partitions the coefficient set step-by-step to find significant coefficients and code them. A significance map was used to decide whether a set is significant, based on which set will be partitioned. If a set is significant, then SPC will output a location bit "1" and the set will be partitioned into 4 subsets; otherwise, the set is prepared for the next set coding operation. If set partition operations are conformable to the distribution of the insignificant coefficients, then location bits and unnecessary bits will be decreased. When the coefficient set is sparse, the SPC method can use fewer bits to encode the image. However, with the decrease of the bit plane, the sparseness of the coefficient set decreases, and the SPC method will waste many location and unnecessary bits, especially for lossless compression. To increase the lossless encoding performance of the set SPC method, we construct a set partition coding method that embeds a generalized tree classifier named SPACS_C.MethodThe previous SPC methods process all coordinate sets with "test before partition, " thereby increasing the number of location and unnecessary bits when the correlation between data decreases. SPACS_C calculates the bit costs of two different coding ways called "test before partition" and "partition before test" for each coordinate set. Then, it chooses the one with lower bit cost to process the set. The process method used in SPACS_C takes advantage of the data characteristics in which the sparseness of bottom bit-planes decrease rapidly in wavelet transformed images. SPACS_C performs the coding process in the domain of the wavelet transform of the image. Daubechies (4, 4) integer wavelet transform is used in this study. The level of wavelet transform is determined by the image size. For instance, a level of 5 is recommended for an image with a spatial size of 512×512. Similar to SPACS, a general tree (GT) is used to simultaneously represent the tree and square sets in the wavelet domain. The processing flow of SPACS_C is as follows:1)Initialization. Let the threshold n be most significant digit of the maximum of the wavelet coefficient and the list of significant points (LSP) be an empty list. Add all GTs into the list of insignificant points (LIP), where all GTs that have descendants are added into the list of insignificant sets (LIS). 2)Sorting pass. Perform the significant test for every entry (

摘要:ObjectiveAs a powerful image compression method, set partition coding (SPC) method effectively uses the correlation between the wavelet coefficients to obtain a higher data compression ratio, and it has been widely used for all types of image compression. The SPC method uses the idea of successive quantization approximation of the wavelet coefficient set, such as set partition coding system (SPACS), which partitions the coefficient set step-by-step to find significant coefficients and code them. A significance map was used to decide whether a set is significant, based on which set will be partitioned. If a set is significant, then SPC will output a location bit "1" and the set will be partitioned into 4 subsets; otherwise, the set is prepared for the next set coding operation. If set partition operations are conformable to the distribution of the insignificant coefficients, then location bits and unnecessary bits will be decreased. When the coefficient set is sparse, the SPC method can use fewer bits to encode the image. However, with the decrease of the bit plane, the sparseness of the coefficient set decreases, and the SPC method will waste many location and unnecessary bits, especially for lossless compression. To increase the lossless encoding performance of the set SPC method, we construct a set partition coding method that embeds a generalized tree classifier named SPACS_C.MethodThe previous SPC methods process all coordinate sets with "test before partition, " thereby increasing the number of location and unnecessary bits when the correlation between data decreases. SPACS_C calculates the bit costs of two different coding ways called "test before partition" and "partition before test" for each coordinate set. Then, it chooses the one with lower bit cost to process the set. The process method used in SPACS_C takes advantage of the data characteristics in which the sparseness of bottom bit-planes decrease rapidly in wavelet transformed images. SPACS_C performs the coding process in the domain of the wavelet transform of the image. Daubechies (4, 4) integer wavelet transform is used in this study. The level of wavelet transform is determined by the image size. For instance, a level of 5 is recommended for an image with a spatial size of 512×512. Similar to SPACS, a general tree (GT) is used to simultaneously represent the tree and square sets in the wavelet domain. The processing flow of SPACS_C is as follows:1)Initialization. Let the threshold n be most significant digit of the maximum of the wavelet coefficient and the list of significant points (LSP) be an empty list. Add all GTs into the list of insignificant points (LIP), where all GTs that have descendants are added into the list of insignificant sets (LIS). 2)Sorting pass. Perform the significant test for every entry ($i,j$ $i,j$ $i,j$ $n$ $n$ $n$ $c$ $\mathit{\boldsymbol{c}}$ $c$ ${2^n}$ $c$ ${2^{n + 1}}$ $c$ ${2^n}$ 关键词:set partition coding(SPC);classifier;lossless encoding;location bit;unnecessary bit45|59|0更新时间:2024-05-07

Image Processing and Coding

-

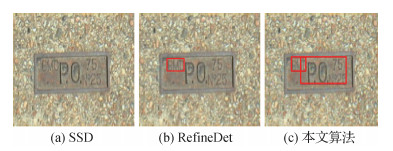

摘要:ObjectiveSteel plates are important raw materials in industry. In its manufacturing process, a variety of surface defects inevitably arise. These surface defects have a negative effect on the appearance and performance of the product; thus, detecting and controlling them in time is necessary. At present, an increasing number of iron and steel manufacturing enterprises use the machine vision method to detect and identify steel-plate surface defects automatically. The defect detection of the steel-plate surface based on machine vision collects the image of the steel-plate surface by using a charge-coupled device camera. By image denoising and enhancement, the defect image is segmented, the defect features are extracted, and the defect classification is conducted. In image acquisition, being disturbed by the on-site environment of the production line is unavoidable, as are the reflection of the steel plate, the illumination environment or the instability of the optical elements, often resulting in the non-uniform illumination of the image. If the image is not enhanced, great interference in the detection and recognition of small surface defects of the steel plate would occur. The common characteristics of small defects on the steel plate surface are non-uniform gray scale, low contrast between defects and background, obscure edge, diverse and small shape, and a small proportion of a defective area in the entire image, which is even mixed with noise. The contrast between the surface defect of the steel plate and its background is low. To conduct subsequent image analysis and defect recognition effectively, we need to conduct image enhancement processing to emphasize the surface defect information. The purpose of image enhancement is to make the original image clear or emphasize interesting features, thereby improving the overall contrast of the image and enhancing the local details of the image, which has good visual effect and rich information features. On this basis, the surface defect target is segmented from the background by image segmentation; thus, the feature of the defect can be extracted and recognized in the future.MethodLow-contrast image enhancement methods often include histogram equalization (HE), Retinex model, homomorphic filtering, and gray transform. The HE algorithm is widely used because of its simple principle and easy implementation, but it cannot adapt to the local contrast of the image of small defects on the surface of the steel plate. The Retinex model algorithm is also a common method for low-contrast image enhancement. Based on this model, single-scale Retinex (SSR) and multi-scale Retinex (MSR) algorithms have emerged. These series of algorithms have achieved good results, but the computational complexity is high. Homomorphic filtering can also enhance low-contrast images. This method avoids the distortion of image directly processed by Fourier transform (FFT), but it also has problems, such as over-enhancement and poor enhancement effect in high-light regions. In low-contrast image enhancement, if the local contrast of the image is enhanced in the spatial domain and the detailed information is enhanced by high-pass processing in frequency domain; the spatial and frequency characteristics of the image are considered at the same time. Compared with Fourier transform, wavelet transform (WT) is a localized analysis of space and frequency. It refines the signal step-by-step by scaling and translation operations, and it finally achieves time subdivision at high frequency and frequency subdivision at low frequency, focusing on arbitrary details of the signal. The wavelet-homomorphic filtering algorithm is used in image enhancement to eliminate non-uniform illumination. First, the image is decomposed by wavelet transform, and then the low-frequency coefficients of wavelet are modified by homomorphic filtering, while the high-pass filtering is applied to the high-frequency coefficients. Then, the low-frequency coefficients and high-frequency coefficients of the processed wavelet are reconstructed to obtain the enhanced image to eliminate non-uniform illumination. After image enhancement with wavelet transform and homomorphic filtering, the surface defect target is segmented to obtain the surface defect area of the steel plate. Many methods are used in image segmentation. The common classical methods are threshold segmentation based on gray histogram and edge detection. Given the low contrast of small defects on the surface of the steel plate, the distribution of gray histogram of the image does not have obvious peaks and valleys; thus, obtaining satisfactory results for image segmentation by using threshold method alone is difficult. The edge detection methods of Roberts, Sobel, and Prewitt operators are also poor for this type of small defects with low contrast, and canny edge detection operator remains widely studied and applied, but the segmentation effect is greatly affected by the threshold. On this basis, this study uses the Otsu-Canny algorithm in defect edge detection. In other words, the method of maximum inter-class variance (Otsu method) is used to determine the adaptive threshold for the Canny operator to perform edge detection.ResultIn this study, the algorithm is used to study the multiple types of low-contrast surface small defects on the strip surface, thereby effectively eliminating the non-uniform illumination. The Otsu algorithm or Canny operator cannot easily detect these defects effectively, and the correct detection rate of the Otsu-Canny algorithm in this study is 96%.ConclusionAfter image enhancement with wavelet-homomorphic filtering, the Otsu-Canny algorithm is used to detect the edges of small defects with multiple types and low contrast on the surface of the steel plate, and good results are obtained. Image enhancement and image segmentation should focus not only on the effect of processing but also on the real-time performance of the algorithm. In steel-plate surface defect detection based on machine vision, a real-time algorithm can be used for conventional surface defects. This algorithm is suitable for small surface defects with low contrast. To improve the processing speed, the parallel algorithm of a high-performance processor graphic processing unit can greatly improve the speed of image processing, thereby satisfying the effectiveness and real-time performance of the algorithm.关键词:machine vision;surface defects;low contrast;wavelet transform (WT);homomorphic filtering44|50|7更新时间:2024-05-07

摘要:ObjectiveSteel plates are important raw materials in industry. In its manufacturing process, a variety of surface defects inevitably arise. These surface defects have a negative effect on the appearance and performance of the product; thus, detecting and controlling them in time is necessary. At present, an increasing number of iron and steel manufacturing enterprises use the machine vision method to detect and identify steel-plate surface defects automatically. The defect detection of the steel-plate surface based on machine vision collects the image of the steel-plate surface by using a charge-coupled device camera. By image denoising and enhancement, the defect image is segmented, the defect features are extracted, and the defect classification is conducted. In image acquisition, being disturbed by the on-site environment of the production line is unavoidable, as are the reflection of the steel plate, the illumination environment or the instability of the optical elements, often resulting in the non-uniform illumination of the image. If the image is not enhanced, great interference in the detection and recognition of small surface defects of the steel plate would occur. The common characteristics of small defects on the steel plate surface are non-uniform gray scale, low contrast between defects and background, obscure edge, diverse and small shape, and a small proportion of a defective area in the entire image, which is even mixed with noise. The contrast between the surface defect of the steel plate and its background is low. To conduct subsequent image analysis and defect recognition effectively, we need to conduct image enhancement processing to emphasize the surface defect information. The purpose of image enhancement is to make the original image clear or emphasize interesting features, thereby improving the overall contrast of the image and enhancing the local details of the image, which has good visual effect and rich information features. On this basis, the surface defect target is segmented from the background by image segmentation; thus, the feature of the defect can be extracted and recognized in the future.MethodLow-contrast image enhancement methods often include histogram equalization (HE), Retinex model, homomorphic filtering, and gray transform. The HE algorithm is widely used because of its simple principle and easy implementation, but it cannot adapt to the local contrast of the image of small defects on the surface of the steel plate. The Retinex model algorithm is also a common method for low-contrast image enhancement. Based on this model, single-scale Retinex (SSR) and multi-scale Retinex (MSR) algorithms have emerged. These series of algorithms have achieved good results, but the computational complexity is high. Homomorphic filtering can also enhance low-contrast images. This method avoids the distortion of image directly processed by Fourier transform (FFT), but it also has problems, such as over-enhancement and poor enhancement effect in high-light regions. In low-contrast image enhancement, if the local contrast of the image is enhanced in the spatial domain and the detailed information is enhanced by high-pass processing in frequency domain; the spatial and frequency characteristics of the image are considered at the same time. Compared with Fourier transform, wavelet transform (WT) is a localized analysis of space and frequency. It refines the signal step-by-step by scaling and translation operations, and it finally achieves time subdivision at high frequency and frequency subdivision at low frequency, focusing on arbitrary details of the signal. The wavelet-homomorphic filtering algorithm is used in image enhancement to eliminate non-uniform illumination. First, the image is decomposed by wavelet transform, and then the low-frequency coefficients of wavelet are modified by homomorphic filtering, while the high-pass filtering is applied to the high-frequency coefficients. Then, the low-frequency coefficients and high-frequency coefficients of the processed wavelet are reconstructed to obtain the enhanced image to eliminate non-uniform illumination. After image enhancement with wavelet transform and homomorphic filtering, the surface defect target is segmented to obtain the surface defect area of the steel plate. Many methods are used in image segmentation. The common classical methods are threshold segmentation based on gray histogram and edge detection. Given the low contrast of small defects on the surface of the steel plate, the distribution of gray histogram of the image does not have obvious peaks and valleys; thus, obtaining satisfactory results for image segmentation by using threshold method alone is difficult. The edge detection methods of Roberts, Sobel, and Prewitt operators are also poor for this type of small defects with low contrast, and canny edge detection operator remains widely studied and applied, but the segmentation effect is greatly affected by the threshold. On this basis, this study uses the Otsu-Canny algorithm in defect edge detection. In other words, the method of maximum inter-class variance (Otsu method) is used to determine the adaptive threshold for the Canny operator to perform edge detection.ResultIn this study, the algorithm is used to study the multiple types of low-contrast surface small defects on the strip surface, thereby effectively eliminating the non-uniform illumination. The Otsu algorithm or Canny operator cannot easily detect these defects effectively, and the correct detection rate of the Otsu-Canny algorithm in this study is 96%.ConclusionAfter image enhancement with wavelet-homomorphic filtering, the Otsu-Canny algorithm is used to detect the edges of small defects with multiple types and low contrast on the surface of the steel plate, and good results are obtained. Image enhancement and image segmentation should focus not only on the effect of processing but also on the real-time performance of the algorithm. In steel-plate surface defect detection based on machine vision, a real-time algorithm can be used for conventional surface defects. This algorithm is suitable for small surface defects with low contrast. To improve the processing speed, the parallel algorithm of a high-performance processor graphic processing unit can greatly improve the speed of image processing, thereby satisfying the effectiveness and real-time performance of the algorithm.关键词:machine vision;surface defects;low contrast;wavelet transform (WT);homomorphic filtering44|50|7更新时间:2024-05-07 -

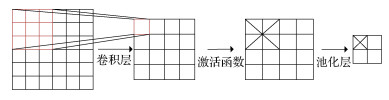

摘要:ObjectiveChinese ancient murals, as a type of painting on the wall, have a long history of 4000 years and are an indispensable part of Chinese ancient paintings. With the increasing abundance of digital mural images, classifying mural resources is becoming increasingly urgent. The core of mural image classification is how to construct the feature description of an object. In addition to expressing the object adequately, this description should be able to distinguish among different types of objects. However, ancient mural images have certain pluralism and subjectivity due to artificial drawing. Considering the subjective singularity and objective insufficiency of traditional mural image feature extraction, we propose a convolutional neural network based on classical AlexNet network model and feature fusion idea for the automatic classification of ancient mural images.MethodFirst, we define the optimizer as Adam with a learning rate of 0.001 through experiments. We then extract each convolution layer feature of AlexNet for classification. Through the comparison of running time and accuracy, we select the convolution layer to express mural features further. Second, we combine the idea of feature fusion and exchange the two convolution kernels to form channels 1 and 2. The convolution kernels of channel 1 are 11, 5, and 3, and those of channel 2 are 11, 3, and 5. The combination of this method constitutes a two-channel convolution feature extraction layer, which enables the model to utilize multilocal features fully. The overfitting phenomenon caused by numerous full-connection layers is considered. On the basis of the two-channel convolution feature extraction layer, we continue to compare the features of different full-connection layers and select further appropriate full-connection layer features to express mural images. Finally, a mural image classification model with a two-channel convolution layer and optimal full connection layer is presented. The proposed mural image classification model can be divided into three processes. 1) Mural image preprocessing. Given the lack of large mural datasets, we use image enhancement operations, such as zooming, brightness transformation, noise addition, and flipping, to enlarge the mural samples. An ancient mural image dataset, including Buddha, Bodhisattva, Buddhist disciples, secular figures, animals, plants, buildings, and auspicious cloud, is constructed. 2) Training stage of mural image classification model. The module has three stages. In the first stage, the model extracts the low-level features, such as the edge information of the trainset images. In the second stage, the two-channel network with different structures is used to abstract the features of the first stage. The features of the two channels are then obtained. In the last stage, the loss function training network model is constructed by fusing the features of the two channels. Feature fusion improves the robustness of the model and the capability of feature expression. 3) Training stage of mural image classification model. We use the network model with trained parameters to predict the classification results of test set samples. The classification accuracy, recall, and f1-score are obtained.ResultThrough the comparison of running time and accuracy, the comparative experimental results of different convolution layers show that in the AlexNet model, the third convolution layer is the most suitable network layer for this dataset. In addition, the accuracy rate will decrease if the number of layers is higher or lower than the number of layers in the paper. Similarly, the comparative experimental results of different full-connection layers show that the features of the three-layer full-connection layer are further stable and sufficient based on the two-channel convolution extraction layer. Therefore, a six-layer convolution neural network model, including a three-layer dual channel and three-layer full connection layer, is presented with five convolution layers in the two-channel model. The model achieves 85.39% accuracy on the constructed mural image dataset. Experimental results show that the accuracy of the model in most classes is the highest, and each evaluation index of the model is improved by approximately 5%, compared with the AlexNet model and several improved convolution neural network models. Compared with the classical model without pretraining, this model encounters increased difficulty in producing overfitting. Compared with the model with pretraining, the accuracy of the model is improved by approximately 1%~5%, and the cost is reduced in terms of hardware conditions, network structure, and memory consumption. These experimental data verify the validity of the model for the automatic classification of mural images.ConclusionConsidering the influence of network width and depth, ancient mural classification model with AlexNet model using feature fusion can fully express the rich details of mural images. This model has certain advantages and application value and can be further integrated into the mural classification-related model. However, this method is a shallow convolution neural network based on AlexNet, which fails to mine the high-level features of mural images fully. As a result, some images with similar low-level features, such as color and texture, cannot be classified correctly. Moreover, the running time of mural classification in this model is measured by hour, which consumes considerable resources and is inefficient. Therefore, we will combine deep models to express the high-level features of mural images in future work. We will also improve the efficiency of model training to make mural classification further effective and fast.关键词:mural classification;feature fusion;AlexNet model;convolution neural network (CNN);mural dataset73|0|3更新时间:2024-05-07

摘要:ObjectiveChinese ancient murals, as a type of painting on the wall, have a long history of 4000 years and are an indispensable part of Chinese ancient paintings. With the increasing abundance of digital mural images, classifying mural resources is becoming increasingly urgent. The core of mural image classification is how to construct the feature description of an object. In addition to expressing the object adequately, this description should be able to distinguish among different types of objects. However, ancient mural images have certain pluralism and subjectivity due to artificial drawing. Considering the subjective singularity and objective insufficiency of traditional mural image feature extraction, we propose a convolutional neural network based on classical AlexNet network model and feature fusion idea for the automatic classification of ancient mural images.MethodFirst, we define the optimizer as Adam with a learning rate of 0.001 through experiments. We then extract each convolution layer feature of AlexNet for classification. Through the comparison of running time and accuracy, we select the convolution layer to express mural features further. Second, we combine the idea of feature fusion and exchange the two convolution kernels to form channels 1 and 2. The convolution kernels of channel 1 are 11, 5, and 3, and those of channel 2 are 11, 3, and 5. The combination of this method constitutes a two-channel convolution feature extraction layer, which enables the model to utilize multilocal features fully. The overfitting phenomenon caused by numerous full-connection layers is considered. On the basis of the two-channel convolution feature extraction layer, we continue to compare the features of different full-connection layers and select further appropriate full-connection layer features to express mural images. Finally, a mural image classification model with a two-channel convolution layer and optimal full connection layer is presented. The proposed mural image classification model can be divided into three processes. 1) Mural image preprocessing. Given the lack of large mural datasets, we use image enhancement operations, such as zooming, brightness transformation, noise addition, and flipping, to enlarge the mural samples. An ancient mural image dataset, including Buddha, Bodhisattva, Buddhist disciples, secular figures, animals, plants, buildings, and auspicious cloud, is constructed. 2) Training stage of mural image classification model. The module has three stages. In the first stage, the model extracts the low-level features, such as the edge information of the trainset images. In the second stage, the two-channel network with different structures is used to abstract the features of the first stage. The features of the two channels are then obtained. In the last stage, the loss function training network model is constructed by fusing the features of the two channels. Feature fusion improves the robustness of the model and the capability of feature expression. 3) Training stage of mural image classification model. We use the network model with trained parameters to predict the classification results of test set samples. The classification accuracy, recall, and f1-score are obtained.ResultThrough the comparison of running time and accuracy, the comparative experimental results of different convolution layers show that in the AlexNet model, the third convolution layer is the most suitable network layer for this dataset. In addition, the accuracy rate will decrease if the number of layers is higher or lower than the number of layers in the paper. Similarly, the comparative experimental results of different full-connection layers show that the features of the three-layer full-connection layer are further stable and sufficient based on the two-channel convolution extraction layer. Therefore, a six-layer convolution neural network model, including a three-layer dual channel and three-layer full connection layer, is presented with five convolution layers in the two-channel model. The model achieves 85.39% accuracy on the constructed mural image dataset. Experimental results show that the accuracy of the model in most classes is the highest, and each evaluation index of the model is improved by approximately 5%, compared with the AlexNet model and several improved convolution neural network models. Compared with the classical model without pretraining, this model encounters increased difficulty in producing overfitting. Compared with the model with pretraining, the accuracy of the model is improved by approximately 1%~5%, and the cost is reduced in terms of hardware conditions, network structure, and memory consumption. These experimental data verify the validity of the model for the automatic classification of mural images.ConclusionConsidering the influence of network width and depth, ancient mural classification model with AlexNet model using feature fusion can fully express the rich details of mural images. This model has certain advantages and application value and can be further integrated into the mural classification-related model. However, this method is a shallow convolution neural network based on AlexNet, which fails to mine the high-level features of mural images fully. As a result, some images with similar low-level features, such as color and texture, cannot be classified correctly. Moreover, the running time of mural classification in this model is measured by hour, which consumes considerable resources and is inefficient. Therefore, we will combine deep models to express the high-level features of mural images in future work. We will also improve the efficiency of model training to make mural classification further effective and fast.关键词:mural classification;feature fusion;AlexNet model;convolution neural network (CNN);mural dataset73|0|3更新时间:2024-05-07 -