最新刊期

卷 24 , 期 9 , 2019

- 摘要:UVRSM (urban video real-scene map) has important significance for construction of urban monitoring system, the development of Internet map products, and the implementation of future "real-scene China construction" strategy given the ability of unified information expression in three-dimensional space and time dimension. This paper presents a preliminary exploration on the construction of UVRSM for the convenience of researchers in related fields. From the point of view of augmented virtual environment (AVE) technology, this work proposes to construct the UVRSM by integrating panoramic video and geographic three-dimensional models. To this end, the technologies and methods involved in panoramic camera calibration, panoramic video geo-registration, automatic video texture mapping, and real-time rendering are comprehensively discussed. Some valuable research ideas and schemes are given. The theory and method of camera calibration and image geo-registration suitable for traditional pinhole model need to be expanded according to the spherical projection model of panoramic camera. The LOD (level of detail) technology and strategy for large-scale 3D scene rendering suitable for static texture need to be innovated to obtain the characteristics of video transmission bandwidth limitation and high frame rate. The construction of UVRSM is a new topic and will strongly promote the development of Internet and AI (artificial intelliqence) frontier technology. This field is expected to bring trillions of market opportunities to UVRSM-related domains or industries.关键词:video real-scene map;3D map;virtual reality;panoramic camera calibration;video geo-registration;video texture mapping;real-time rendering63|64|4更新时间:2024-05-07

Scholar View

-

摘要:Optical remote sensing images are often affected by clouds or haze, and in most cases, auxiliary data are not available for removing haze from the original satellite images. Therefore, the single image-based haze removal method has become the necessary preprocessing technology. A variety of algorithms have been developed by different researchers, but systematic summary and comparative analysis are rare. This paper aims to systematically summarize the research progress of a single image-based haze removal algorithm and provide the basic theory, advantages, and disadvantages and applicable scenarios of typical algorithms. This paper first classifies and summarizes the current haze removal algorithms from three aspects (haze attenuation model, basic theory, and evaluation method), then analyzes the application scope and problems of current algorithms combined with specific application scenarios, and finally presents feasible solutions with respect to special problems. The imaging models used in the haze removal process are the additive, haze degradation, and illumination-reflection models. The additive model, which is simplified from radiation transfer equations, considers that the at-satellite radiance under haze or cloud conditions is the sum of different radiation components, including the constant path radiance, the surface reflected radiance, and spatially varying haze contribution. This model is adopted by the classical dark object subtraction (DOS) method and its various improved versions. The haze degradation model divides the observed light intensity into two components:direct attenuation describing the scene radiance and its decay in the atmosphere and airlight resulting from scattered light. Both components are correlated to the medium transmission that describes the portion of the light that is not scattered and reaches the sensor. Haze removal methods that rely on the dark channel prior (DCP) usually estimate the medium transmission through the haze degradation model. The illumination-reflection model abstracts the observed image as a product of the illumination component of the light source and the reflection component of the object. In the frequency domain, the haze or the cloud signal is mainly concentrated in the low-frequency region and can be suppressed through high-pass filtering. The widely used methods based on the illumination-reflection model include homomorphic filtering and wavelet decomposition. A haze removal procedure generally consists of two consecutive stages:haze detection and haze correction. Haze detection involves obtaining the precise spatial intensity of haze or thin clouds in an image, and haze correction is the process of removing haze influence depending on the estimated haze intensity. The distributions of haze usually vary dramatically in the spatial and temporal domains; as a result, the collection of detailed in situ measurements of haze conditions during the time of image acquisition is almost impossible in practical applications. Thus, single image-based haze removal methods have attracted increasing interest over the past decades. Existing methods retrieved in the literature fall into four common categories:DOS, frequency filtering, DCP, and image transformation-based approaches. DOS-based methods have evolved from the stage where they are suitable for homogeneous haze conditions only to the stage where they are able to compensate for spatially varying haze contaminations. The typical algorithms belong to the dense dark vegetation (DDV) technique and haze thickness map (HTM) method. The DDV technique depends on the empirical correlation between the reflectance of visible bands (usually blue and/or red) and that of a haze-transparent band (e.g., band 7 in the case of Landsat data) for DDV pixels. A DDV-based method would fail to work if a scene does not contain sufficient and evenly distributed vegetated pixels or the correlation of the DDV pixels is significantly different from the standard one. The HTM algorithm estimates haze intensity by searching dark targets within local neighboring blocks instead of searching in an entire scene. The HTM algorithm is feasible for satellite images with high spatial resolutions because pure dark pixels without mixing with bright targets are required in a small local region, but it is unable to handle scenes that have large areas of relatively bright surfaces. Frequency filtering-based approaches operate in the spatial frequency domain assuming that haze contamination is in a relatively low frequency compared with the changeable reflectance of surface covers and can thus be removed by applying a filtering process. Wavelet decomposition and homomorphic filtering are two representative approaches. The major obstacle in applying these methods is determining a cut-off frequency or choosing the wavelet basis. Current solutions rely on empirical criteria and are usually suitable for some special issues. DCP-based methods combine the haze degradation model and DCP, which originates from the statistics of outdoor haze-free images (i.e., in most non-sky patches of haze-free images, at least one color channel has very low intensity at some pixels). When applying DCP-based methods for haze removal in remote sensing images, improvements are required due to the different characteristics between natural scenes and satellite images. Image transformation-based haze removals are initially developed based on the tasseled cap transformation (TCT) because haze contamination seems to be the major contributor to the fourth component of TCT. Haze-optimized transformation (HOT) might be the most widely used transformation-based haze removal method, which supposes that digital numbers of red and blue bands are highly correlated for pixels within the clearest portions of a scene and that this relationship holds for all surface classes. Given that the algorithm relies on only two visible bands, which means that no haze-transparent band is needed, it can be applied to a broad range of satellite images (e.g., Landsat, MODIS, Sentinel-2, QuikBird, and IKONOS). Nevertheless, serious spurious HOT responses exist over non-vegetated areas (e.g., water bodies, snow cover, bare soil, and urban targets), leading to under-correction or overcorrection of these targets. A usual solution is to exclude sensitive land cover types from original HOT and then estimate HOT values for the excluded pixels through spatial inference. Another suggested strategy for addressing this issue is to fill the sinks and flatten the peaks in a HOT image. Other haze removal methods are also involved in band combination, mixed pixel decomposition, or machine learning techniques. For example, multi-scale residual convolutional neural network (MRCNN) is designed for haze removal of Landsat 8 OLI images. MRCNN is able to predict haze intensity by feeding into specific hazy image blocks after it automatically learns the mapping relations between hazy images and their associated haze transmission from sufficient training samples. As for algorithm analysis and evaluation, researchers are inclined to adopt subjective analysis or choose reference images to evaluate spectral consistency before and after haze removal. Recently, image quality indices have been utilized more frequently to evaluate the contrast, brightness, structural consistency, and fidelity of dehazed images. The existing algorithms are not suitable for all scenarios or images, and they face some problems. For instance, parameters are difficult to adjust adaptively, the model is sensitive to special land cover types, and outputted results are seriously distorted. The evaluation of different algorithms is mainly based on subjective comparative analysis, and building objective indicators according to application requirements has become the current research direction.关键词:remote sensing dehazing;dark object subtraction;dark channel prior;haze degradation model;image quality evaluation;haze optimized transformation;wavelet decomposition95|120|1更新时间:2024-05-07

摘要:Optical remote sensing images are often affected by clouds or haze, and in most cases, auxiliary data are not available for removing haze from the original satellite images. Therefore, the single image-based haze removal method has become the necessary preprocessing technology. A variety of algorithms have been developed by different researchers, but systematic summary and comparative analysis are rare. This paper aims to systematically summarize the research progress of a single image-based haze removal algorithm and provide the basic theory, advantages, and disadvantages and applicable scenarios of typical algorithms. This paper first classifies and summarizes the current haze removal algorithms from three aspects (haze attenuation model, basic theory, and evaluation method), then analyzes the application scope and problems of current algorithms combined with specific application scenarios, and finally presents feasible solutions with respect to special problems. The imaging models used in the haze removal process are the additive, haze degradation, and illumination-reflection models. The additive model, which is simplified from radiation transfer equations, considers that the at-satellite radiance under haze or cloud conditions is the sum of different radiation components, including the constant path radiance, the surface reflected radiance, and spatially varying haze contribution. This model is adopted by the classical dark object subtraction (DOS) method and its various improved versions. The haze degradation model divides the observed light intensity into two components:direct attenuation describing the scene radiance and its decay in the atmosphere and airlight resulting from scattered light. Both components are correlated to the medium transmission that describes the portion of the light that is not scattered and reaches the sensor. Haze removal methods that rely on the dark channel prior (DCP) usually estimate the medium transmission through the haze degradation model. The illumination-reflection model abstracts the observed image as a product of the illumination component of the light source and the reflection component of the object. In the frequency domain, the haze or the cloud signal is mainly concentrated in the low-frequency region and can be suppressed through high-pass filtering. The widely used methods based on the illumination-reflection model include homomorphic filtering and wavelet decomposition. A haze removal procedure generally consists of two consecutive stages:haze detection and haze correction. Haze detection involves obtaining the precise spatial intensity of haze or thin clouds in an image, and haze correction is the process of removing haze influence depending on the estimated haze intensity. The distributions of haze usually vary dramatically in the spatial and temporal domains; as a result, the collection of detailed in situ measurements of haze conditions during the time of image acquisition is almost impossible in practical applications. Thus, single image-based haze removal methods have attracted increasing interest over the past decades. Existing methods retrieved in the literature fall into four common categories:DOS, frequency filtering, DCP, and image transformation-based approaches. DOS-based methods have evolved from the stage where they are suitable for homogeneous haze conditions only to the stage where they are able to compensate for spatially varying haze contaminations. The typical algorithms belong to the dense dark vegetation (DDV) technique and haze thickness map (HTM) method. The DDV technique depends on the empirical correlation between the reflectance of visible bands (usually blue and/or red) and that of a haze-transparent band (e.g., band 7 in the case of Landsat data) for DDV pixels. A DDV-based method would fail to work if a scene does not contain sufficient and evenly distributed vegetated pixels or the correlation of the DDV pixels is significantly different from the standard one. The HTM algorithm estimates haze intensity by searching dark targets within local neighboring blocks instead of searching in an entire scene. The HTM algorithm is feasible for satellite images with high spatial resolutions because pure dark pixels without mixing with bright targets are required in a small local region, but it is unable to handle scenes that have large areas of relatively bright surfaces. Frequency filtering-based approaches operate in the spatial frequency domain assuming that haze contamination is in a relatively low frequency compared with the changeable reflectance of surface covers and can thus be removed by applying a filtering process. Wavelet decomposition and homomorphic filtering are two representative approaches. The major obstacle in applying these methods is determining a cut-off frequency or choosing the wavelet basis. Current solutions rely on empirical criteria and are usually suitable for some special issues. DCP-based methods combine the haze degradation model and DCP, which originates from the statistics of outdoor haze-free images (i.e., in most non-sky patches of haze-free images, at least one color channel has very low intensity at some pixels). When applying DCP-based methods for haze removal in remote sensing images, improvements are required due to the different characteristics between natural scenes and satellite images. Image transformation-based haze removals are initially developed based on the tasseled cap transformation (TCT) because haze contamination seems to be the major contributor to the fourth component of TCT. Haze-optimized transformation (HOT) might be the most widely used transformation-based haze removal method, which supposes that digital numbers of red and blue bands are highly correlated for pixels within the clearest portions of a scene and that this relationship holds for all surface classes. Given that the algorithm relies on only two visible bands, which means that no haze-transparent band is needed, it can be applied to a broad range of satellite images (e.g., Landsat, MODIS, Sentinel-2, QuikBird, and IKONOS). Nevertheless, serious spurious HOT responses exist over non-vegetated areas (e.g., water bodies, snow cover, bare soil, and urban targets), leading to under-correction or overcorrection of these targets. A usual solution is to exclude sensitive land cover types from original HOT and then estimate HOT values for the excluded pixels through spatial inference. Another suggested strategy for addressing this issue is to fill the sinks and flatten the peaks in a HOT image. Other haze removal methods are also involved in band combination, mixed pixel decomposition, or machine learning techniques. For example, multi-scale residual convolutional neural network (MRCNN) is designed for haze removal of Landsat 8 OLI images. MRCNN is able to predict haze intensity by feeding into specific hazy image blocks after it automatically learns the mapping relations between hazy images and their associated haze transmission from sufficient training samples. As for algorithm analysis and evaluation, researchers are inclined to adopt subjective analysis or choose reference images to evaluate spectral consistency before and after haze removal. Recently, image quality indices have been utilized more frequently to evaluate the contrast, brightness, structural consistency, and fidelity of dehazed images. The existing algorithms are not suitable for all scenarios or images, and they face some problems. For instance, parameters are difficult to adjust adaptively, the model is sensitive to special land cover types, and outputted results are seriously distorted. The evaluation of different algorithms is mainly based on subjective comparative analysis, and building objective indicators according to application requirements has become the current research direction.关键词:remote sensing dehazing;dark object subtraction;dark channel prior;haze degradation model;image quality evaluation;haze optimized transformation;wavelet decomposition95|120|1更新时间:2024-05-07

Review

-

摘要:ObjectiveDiffusion weight imaging (DWI), as a new medical imaging technique, transforms the diffusion motion of water molecules in tissues into grayscale or other parameter information of an image by applying multi-directional diffusion magnetic sensitive gradients under each diffusion sensitive gradient. This technique can be used for the auxiliary diagnosis of living heart myocardial fiber modeling, brain fiber, lesions of the central nervous system, liver fibrosis, and other diseases. With the popularization of telemedicine diagnostic technology, an increasing amount of DWI data are being used for remote diagnosis and scientific research. DWI images, which are originally stored and used on a single machine in a hospital, must be transmitted and used over the network. Many scholars have proposed many watermarking algorithms for protecting medical images, such as the reversible watermarking algorithm, robust reversible watermarking algorithm, and zero-watermarking algorithm. The advantage of the reversible algorithm is that it can be completely used for nondestructive image recovery. The robustness of the reversible watermarking algorithm is too weak to guarantee the existence of the reversible watermark when embedded images are attacked intentionally or unintentionally. Therefore, some researchers propose the robust reversible watermarking algorithm. The robust reversible watermarking algorithm could restore an original picture when no attack occurs and could draw embedded watermarking. It ensures that its robust reversible performance should carry additional information. Thus, it must consume a large amount of transmission bandwidth. Some robust reversible watermarks are constructed by dual watermarking, and they depend on one another's information to extract the watermarks. To protect medical images by other methods, some researchers use the zero-watermarking algorithm, which is different from the traditional method that embeds information into images. The zero-watermarking algorithm can retrieve internal features from data to build binary watermarking and then save it in a third-party application. When the image is used by other people without the license, we could use zero-watermarking to prove copyright. Thus, the zero-watermarking algorithm, as a non-embedded algorithm, cannot actively complete the protection of property information. The robust watermarking algorithm plays an irreplaceable role in ensuring that the medical image watermarking information has certain robustness. To prevent unauthorized DWI images from being used or tampered with, this study proposes a robust watermarking algorithm based on DWI images.MethodThe algorithm initially obtains specified slips by the maximum inter-class variance segmentation algorithm and area control threshold to ensure that the selected slice has a sufficient embedding area because the tip and the bottom of the heart are unsuitable for embedding. The foreground region with a diffusion gradient direction image is prepared for embedding. We obtain the low-frequency sub-band coefficient by using integer wavelet transform in the default region. Then, we count the low frequency and analyze the low sub-frequency coefficient by using the fixed step length; the low sub-frequency coefficient follows the characteristics of the coefficient of DWI images. The ratio relation of adjacent clusters in the histogram subject area is adjusted for watermark embedding. Finally, we propose to design the quantitative reversible relationship between apparent DWI coefficients, with diffusion tensor imaging (DTI) as the key. We use this key to encrypt a DWI image after embedding the watermark to effectively protect the copyright information of the DWI image.ResultThe algorithm can maintain its robustness and reduce the change in the DTI parameters in the experiment on robustness and changes in the parameters of DTI after embedding. The proposed algorithm also has excellent robustness in attack experiments, such as those involving Gaussian noise, contrast expansion, and small angle rotation. In the experiment on parameter change measurement before and after embedding, the algorithm is greatly reduced the volume of change in isotropic and fiber main direction of the myocardial fiber. In our proposed method, the main direction of the fiber is reduced by more than 100, and the average change of the mean diffusivity is reduced by more than 30 in the same database. In the visual quality of the algorithm, the peak signal-to-noise ratio is approximately 8 dB higher than that specified in the comparative literature.ConclusionAn embedded selection feedback mechanism is proposed to carry out the selection of watermark embedding according to actual embedding demands. Then, the statistical histogram of the sub-band coefficient is constructed by specifying the fixed step length according to the characteristics of the wavelet transform coefficient of the DWI image. Finally, the reversible key algorithm based on the quantitative relationship between DWI and DTI is constructed. Experiments show that this algorithm can be applied to the watermark embedding of dispersion weighted imaging and can satisfy fiber direction as little as possible.关键词:diffusion weight imaging (DWI);histogram;stable step;ration relationship;robust watermark24|46|6更新时间:2024-05-07

摘要:ObjectiveDiffusion weight imaging (DWI), as a new medical imaging technique, transforms the diffusion motion of water molecules in tissues into grayscale or other parameter information of an image by applying multi-directional diffusion magnetic sensitive gradients under each diffusion sensitive gradient. This technique can be used for the auxiliary diagnosis of living heart myocardial fiber modeling, brain fiber, lesions of the central nervous system, liver fibrosis, and other diseases. With the popularization of telemedicine diagnostic technology, an increasing amount of DWI data are being used for remote diagnosis and scientific research. DWI images, which are originally stored and used on a single machine in a hospital, must be transmitted and used over the network. Many scholars have proposed many watermarking algorithms for protecting medical images, such as the reversible watermarking algorithm, robust reversible watermarking algorithm, and zero-watermarking algorithm. The advantage of the reversible algorithm is that it can be completely used for nondestructive image recovery. The robustness of the reversible watermarking algorithm is too weak to guarantee the existence of the reversible watermark when embedded images are attacked intentionally or unintentionally. Therefore, some researchers propose the robust reversible watermarking algorithm. The robust reversible watermarking algorithm could restore an original picture when no attack occurs and could draw embedded watermarking. It ensures that its robust reversible performance should carry additional information. Thus, it must consume a large amount of transmission bandwidth. Some robust reversible watermarks are constructed by dual watermarking, and they depend on one another's information to extract the watermarks. To protect medical images by other methods, some researchers use the zero-watermarking algorithm, which is different from the traditional method that embeds information into images. The zero-watermarking algorithm can retrieve internal features from data to build binary watermarking and then save it in a third-party application. When the image is used by other people without the license, we could use zero-watermarking to prove copyright. Thus, the zero-watermarking algorithm, as a non-embedded algorithm, cannot actively complete the protection of property information. The robust watermarking algorithm plays an irreplaceable role in ensuring that the medical image watermarking information has certain robustness. To prevent unauthorized DWI images from being used or tampered with, this study proposes a robust watermarking algorithm based on DWI images.MethodThe algorithm initially obtains specified slips by the maximum inter-class variance segmentation algorithm and area control threshold to ensure that the selected slice has a sufficient embedding area because the tip and the bottom of the heart are unsuitable for embedding. The foreground region with a diffusion gradient direction image is prepared for embedding. We obtain the low-frequency sub-band coefficient by using integer wavelet transform in the default region. Then, we count the low frequency and analyze the low sub-frequency coefficient by using the fixed step length; the low sub-frequency coefficient follows the characteristics of the coefficient of DWI images. The ratio relation of adjacent clusters in the histogram subject area is adjusted for watermark embedding. Finally, we propose to design the quantitative reversible relationship between apparent DWI coefficients, with diffusion tensor imaging (DTI) as the key. We use this key to encrypt a DWI image after embedding the watermark to effectively protect the copyright information of the DWI image.ResultThe algorithm can maintain its robustness and reduce the change in the DTI parameters in the experiment on robustness and changes in the parameters of DTI after embedding. The proposed algorithm also has excellent robustness in attack experiments, such as those involving Gaussian noise, contrast expansion, and small angle rotation. In the experiment on parameter change measurement before and after embedding, the algorithm is greatly reduced the volume of change in isotropic and fiber main direction of the myocardial fiber. In our proposed method, the main direction of the fiber is reduced by more than 100, and the average change of the mean diffusivity is reduced by more than 30 in the same database. In the visual quality of the algorithm, the peak signal-to-noise ratio is approximately 8 dB higher than that specified in the comparative literature.ConclusionAn embedded selection feedback mechanism is proposed to carry out the selection of watermark embedding according to actual embedding demands. Then, the statistical histogram of the sub-band coefficient is constructed by specifying the fixed step length according to the characteristics of the wavelet transform coefficient of the DWI image. Finally, the reversible key algorithm based on the quantitative relationship between DWI and DTI is constructed. Experiments show that this algorithm can be applied to the watermark embedding of dispersion weighted imaging and can satisfy fiber direction as little as possible.关键词:diffusion weight imaging (DWI);histogram;stable step;ration relationship;robust watermark24|46|6更新时间:2024-05-07

Image Processing and Coding

-

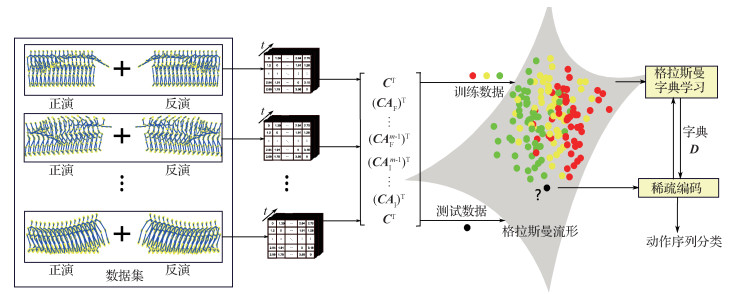

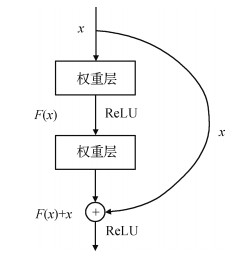

摘要:ObjectiveHuman action recognition has a very wide application prospect in fields such as video surveillance, human-computer interface, environment-assisted life, human-computer interaction, and intelligent driving. In image or video analysis, most of these tasks use color and texture cues in 2D images for recognition. However, due to occlusion, shadows, illumination changes, perspective changes, scale changes, intra-class variations, and similarities between classes, the recognition rate of human behavior is not ideal. In recent years, with the release of 3D depth cameras, such as Microsoft Kinect, 3D depth data can provide pictures of scene changes, thereby improving the recognition rates for the first three challenges of human recognition. In addition, 3D depth cameras provide powerful human motion capture technology, which can output the human skeleton of a 3D joint point position. Therefore, much attention has been paid to skeleton-based action recognition. The linear dynamical system (LDS) is the most common method for encoding spatio-temporal time-series data in various disciplines due to its simplicity and efficiency. A new method is proposed to obtain the parameters of a tensor-based LDS with forward and inverse action sequences to construct a complete observation matrix. The linear subspace of the observation matrix, which maps to a point on Grassmann manifold for human action recognition, is obtained. In this manner, an action can be expressed as a subspace spanned by columns of the matrix corresponding to a point on the Grassmann manifold. On the basis of such action, classification can be performed using dictionary learning and sparse coding.MethodConsidering the dynamics and persistence of human behavior, we do not vectorize the time series according to the general method but retain its own tensor characteristics, that is, we transform the high-dimensional vector into a low-dimensional subspace to analyze the factors affecting actions from various angles (modules). In this method, human skeletons are modeled using human joint points, which are initially extracted from a depth camera recording. To preserve the original spatio-temporal information of an action video and enhance the accuracy of human action recognition, we develop a time series of skeleton motions on the basis of the data in a three-order tensor and convert the skeleton into a two-order tensor. With this action representation, Tucker tensor decomposition methods are applied to obtain dimensionality reduction. Using the tensor-based LDS model with forward and inverse action sequence, we learn a parameter tuple (AF, AI, C), in which C represents the spatial appearance of skeleton information, AF describes the dynamics of the forward time series, and AI describes the dynamics of the inversion time series. We consider using an m-order observable matrix to approximate the extended observable matrix because human behavior has a limited duration and does not extend indefinitely in time. When

摘要:ObjectiveHuman action recognition has a very wide application prospect in fields such as video surveillance, human-computer interface, environment-assisted life, human-computer interaction, and intelligent driving. In image or video analysis, most of these tasks use color and texture cues in 2D images for recognition. However, due to occlusion, shadows, illumination changes, perspective changes, scale changes, intra-class variations, and similarities between classes, the recognition rate of human behavior is not ideal. In recent years, with the release of 3D depth cameras, such as Microsoft Kinect, 3D depth data can provide pictures of scene changes, thereby improving the recognition rates for the first three challenges of human recognition. In addition, 3D depth cameras provide powerful human motion capture technology, which can output the human skeleton of a 3D joint point position. Therefore, much attention has been paid to skeleton-based action recognition. The linear dynamical system (LDS) is the most common method for encoding spatio-temporal time-series data in various disciplines due to its simplicity and efficiency. A new method is proposed to obtain the parameters of a tensor-based LDS with forward and inverse action sequences to construct a complete observation matrix. The linear subspace of the observation matrix, which maps to a point on Grassmann manifold for human action recognition, is obtained. In this manner, an action can be expressed as a subspace spanned by columns of the matrix corresponding to a point on the Grassmann manifold. On the basis of such action, classification can be performed using dictionary learning and sparse coding.MethodConsidering the dynamics and persistence of human behavior, we do not vectorize the time series according to the general method but retain its own tensor characteristics, that is, we transform the high-dimensional vector into a low-dimensional subspace to analyze the factors affecting actions from various angles (modules). In this method, human skeletons are modeled using human joint points, which are initially extracted from a depth camera recording. To preserve the original spatio-temporal information of an action video and enhance the accuracy of human action recognition, we develop a time series of skeleton motions on the basis of the data in a three-order tensor and convert the skeleton into a two-order tensor. With this action representation, Tucker tensor decomposition methods are applied to obtain dimensionality reduction. Using the tensor-based LDS model with forward and inverse action sequence, we learn a parameter tuple (AF, AI, C), in which C represents the spatial appearance of skeleton information, AF describes the dynamics of the forward time series, and AI describes the dynamics of the inversion time series. We consider using an m-order observable matrix to approximate the extended observable matrix because human behavior has a limited duration and does not extend indefinitely in time. When$m$ $m$ 关键词:time series forward inversion;human behavior recognition;human skeleton;linear dynamic system(LDS);Grassmann manifold29|46|0更新时间:2024-05-07 -

摘要:ObjectiveThe comprehensive perception of traffic management through computer vision technology is particularly important in intelligent transportation systems (ITSs). As the core element of ITSs, vehicles are important objects of perception. Vehicle logos carry important information that benefits vehicle information collection, vehicle identification, and illegal vehicle tracking. As logos are distinctive features of any vehicle, their classification and recognition can also greatly narrow the scope of vehicle model recognition. However, traditional feature descriptor-based vehicle logo recognition methods have the following disadvantages. On the one hand, the number of features extracted is limited; thus, the characteristics of vehicle logos cannot be fully described. On the other hand, the extracted features are extremely complicated and highly dimensional. These traditional methods thus entail substantial computation time and are difficult to apply to actual vehicle identification-related systems. A vehicle logo recognition method based on local quantization of enhanced edge gradient features is proposed in this work to extract abundant vehicle logo image features while effectively reducing feature dimensions to improve recognition efficiency.MethodFirst, the characteristics of vehicle logo images are considered, and the edge gradient is used to represent vehicle logos. The gradient information of the vehicle logo images is extracted by calculating the gradient information of each pixel in each vehicle logo image and dividing the gradient information according to the gradient direction. The sum of the gradient magnitudes corresponding to different gradient directions in the neighborhood of each pixel is calculated to generate a gradient magnitude matrix of different directions. Then, the LTP (local tarnary patterns) descriptor is used to re-extract features from the gradient magnitude matrix. The LTP descriptor has stronger anti-noise capability than LBP (local binary patterns) and can generate features with greater robustness. The extracted features belonging to different direction gradients are concatenated, and the results serve as the final feature for each vehicle logo. Second, the

摘要:ObjectiveThe comprehensive perception of traffic management through computer vision technology is particularly important in intelligent transportation systems (ITSs). As the core element of ITSs, vehicles are important objects of perception. Vehicle logos carry important information that benefits vehicle information collection, vehicle identification, and illegal vehicle tracking. As logos are distinctive features of any vehicle, their classification and recognition can also greatly narrow the scope of vehicle model recognition. However, traditional feature descriptor-based vehicle logo recognition methods have the following disadvantages. On the one hand, the number of features extracted is limited; thus, the characteristics of vehicle logos cannot be fully described. On the other hand, the extracted features are extremely complicated and highly dimensional. These traditional methods thus entail substantial computation time and are difficult to apply to actual vehicle identification-related systems. A vehicle logo recognition method based on local quantization of enhanced edge gradient features is proposed in this work to extract abundant vehicle logo image features while effectively reducing feature dimensions to improve recognition efficiency.MethodFirst, the characteristics of vehicle logo images are considered, and the edge gradient is used to represent vehicle logos. The gradient information of the vehicle logo images is extracted by calculating the gradient information of each pixel in each vehicle logo image and dividing the gradient information according to the gradient direction. The sum of the gradient magnitudes corresponding to different gradient directions in the neighborhood of each pixel is calculated to generate a gradient magnitude matrix of different directions. Then, the LTP (local tarnary patterns) descriptor is used to re-extract features from the gradient magnitude matrix. The LTP descriptor has stronger anti-noise capability than LBP (local binary patterns) and can generate features with greater robustness. The extracted features belonging to different direction gradients are concatenated, and the results serve as the final feature for each vehicle logo. Second, the$K$ 关键词:vehicle logo recognition;gradient feature;multiple gradient direction;enhanced edge gradient feature;local quantization45|195|1更新时间:2024-05-07 -

摘要:ObjectiveIn the circulation process, the appearance quality of coins decreases due to wear. Thus, recycling coins with worn out appearance is necessary. Generally, the need for coins to be recycled is determined by evaluating the quality of their appearance. The year of coins is an important information to judge their appearance quality. Accurately identifying Euro coins in circulation requires detecting and identifying the year they were issued. However, due to the uncertainty of the position and posture of the Euro coin number, the non-normalization of size, the interference of other characters, and the diversity of number arrangement, one cannot easily realize the automatic detection, recognition, and interpretation of Euro coin year by using computer vision algorithms.MethodThe method of detecting and recognizing Euro coin year consists of two steps. First, we use Faster-RCNN (faster-region convolutional neural network) to detect the number. The model algorithm is mainly completed in the following four steps:the first step is to send the entire image to be detected into the convolution neural network to obtain the convolution feature map; the second step is to input the feature map into the RPN (region proposal network) to obtain multiple candidate regions of the target; the third step is to use the ROI (region of interest) pooling layer to extract the features of the candidate regions; the fourth step is to use the multi-task classifier to carry out position regression to obtain the precise position coordinates of the target. A self-built experimental platform is used to collect five large coins from 12 EU (European Union) countries. The five currencies are 2 Euros, 1 Euro, 50 Euro cents, 20 Euro cents, and 10 Euro cents. In the collection process, the coins are rotated at small angles continuously and then captured at various angles as far as possible. A total of 4 429 pictures are collected from different angles. The ranking order of the number of coin years can be interpreted using four methods. For a given coin image, the method to be used to interpret the year must be determined first. According to observation, the year arrangement of a certain currency value in a country is fixed. If we can predetermine the currency value and country of a coin, then the corresponding year interpretation rules can be determined. This problem can be solved because the image sizes of coins with different values vary significantly and the coin patterns of different countries are different. Second, the obtained digital candidate boxes are grouped into four categories by using $K$-means clustering algorithm, and the most confident candidate boxes are selected in each category. Finally, according to the predetermined year arrangement pattern of coins from different countries, the accurate year information can be obtained by an appropriate sorting algorithm.ResultOn a self-built experimental platform, 4 429 pictures are collected from five types of coins with large currency values from 12 EU countries. The training and test samples are divided at a 1:1 ratio. Experimental results show that the detection accuracy of the method is 89.62% and that the calculation time is approximately 215 ms; these values satisfy the accuracy and real-time requirements.ConclusionThe proposed algorithm offers good real-time performance, robustness, and precision and carries high practical application value. Although the detection accuracy of existing algorithms is close to 90%, they can still be improved from two aspects to solve existing error situations. One aspect is to improve the clustering algorithm to achieve compact clustering or clustering in accordance with the law of the year number distribution; doing so can prevent the misdetection of characters or symbols to a certain extent. The other aspect is to further improve the Faster-RCNN network model and the simplified processing algorithm of candidate boxes to improve the detection accuracy of closely arranged digital boxes.关键词:object detection;digital detection;year sorting;Euro coin;Faster-RCNN;$K$-means clustering12|4|0更新时间:2024-05-07

摘要:ObjectiveIn the circulation process, the appearance quality of coins decreases due to wear. Thus, recycling coins with worn out appearance is necessary. Generally, the need for coins to be recycled is determined by evaluating the quality of their appearance. The year of coins is an important information to judge their appearance quality. Accurately identifying Euro coins in circulation requires detecting and identifying the year they were issued. However, due to the uncertainty of the position and posture of the Euro coin number, the non-normalization of size, the interference of other characters, and the diversity of number arrangement, one cannot easily realize the automatic detection, recognition, and interpretation of Euro coin year by using computer vision algorithms.MethodThe method of detecting and recognizing Euro coin year consists of two steps. First, we use Faster-RCNN (faster-region convolutional neural network) to detect the number. The model algorithm is mainly completed in the following four steps:the first step is to send the entire image to be detected into the convolution neural network to obtain the convolution feature map; the second step is to input the feature map into the RPN (region proposal network) to obtain multiple candidate regions of the target; the third step is to use the ROI (region of interest) pooling layer to extract the features of the candidate regions; the fourth step is to use the multi-task classifier to carry out position regression to obtain the precise position coordinates of the target. A self-built experimental platform is used to collect five large coins from 12 EU (European Union) countries. The five currencies are 2 Euros, 1 Euro, 50 Euro cents, 20 Euro cents, and 10 Euro cents. In the collection process, the coins are rotated at small angles continuously and then captured at various angles as far as possible. A total of 4 429 pictures are collected from different angles. The ranking order of the number of coin years can be interpreted using four methods. For a given coin image, the method to be used to interpret the year must be determined first. According to observation, the year arrangement of a certain currency value in a country is fixed. If we can predetermine the currency value and country of a coin, then the corresponding year interpretation rules can be determined. This problem can be solved because the image sizes of coins with different values vary significantly and the coin patterns of different countries are different. Second, the obtained digital candidate boxes are grouped into four categories by using $K$-means clustering algorithm, and the most confident candidate boxes are selected in each category. Finally, according to the predetermined year arrangement pattern of coins from different countries, the accurate year information can be obtained by an appropriate sorting algorithm.ResultOn a self-built experimental platform, 4 429 pictures are collected from five types of coins with large currency values from 12 EU countries. The training and test samples are divided at a 1:1 ratio. Experimental results show that the detection accuracy of the method is 89.62% and that the calculation time is approximately 215 ms; these values satisfy the accuracy and real-time requirements.ConclusionThe proposed algorithm offers good real-time performance, robustness, and precision and carries high practical application value. Although the detection accuracy of existing algorithms is close to 90%, they can still be improved from two aspects to solve existing error situations. One aspect is to improve the clustering algorithm to achieve compact clustering or clustering in accordance with the law of the year number distribution; doing so can prevent the misdetection of characters or symbols to a certain extent. The other aspect is to further improve the Faster-RCNN network model and the simplified processing algorithm of candidate boxes to improve the detection accuracy of closely arranged digital boxes.关键词:object detection;digital detection;year sorting;Euro coin;Faster-RCNN;$K$-means clustering12|4|0更新时间:2024-05-07 -

摘要:ObjectivePedestrian detection is an important research topic in computer vision and plays a crucial role in all parts of life. The deformable part model (DPM) algorithm is a graphical model (Markov random field) that uses a series of parts and the spatial positional relationship of parts to represent an object. The DPM algorithm achieves superior detection accuracy in the field of pedestrian detection. However, because the DPM algorithm uses a sliding window strategy to search for objects in images, it handles a large number of candidate areas with low recall rates before constructing the feature pyramid. This property restricts the detection efficiency of the DPM algorithm. In view of this problem, this study proposes a novel model to improve the process of selecting candidate detection regions and puts forward the DPM algorithm integrated with a clustering algorithm with grid density and a selective search algorithm. Compared with the sliding window search method, the proposed model can provide fewer proposal windows and thus reduces computational complexity. Therefore, this study focuses on the advantages of the proposed model and the DPM algorithm to improve detection efficiency and accuracy.MethodThe proposed model contains three modules:the collection module of the coordinate points of moving targets, the generation module of frequently moving regions of targets, and the generation module of candidate detection windows. The modules are executed in series. Three frame difference methods and Gauss mixture models are used to detect moving targets in the first module. The centroid coordinates of each effective target are calculated by the obtained object contour, and a certain number of moving object coordinate points are collected and stored in the queue. In the second module, a G-cluster clustering algorithm based on grid structure and DBSCAN (density-based spatial clustering of applications with noise) clustering is proposed. The greatest advantage of the DBSCAN clustering algorithm is that it can find clusters of different sizes and shapes. However, this algorithm requires traversing every data point in the dataset, thereby leading to a high running time cost. Therefore, we draw lessons from the idea of the grid clustering algorithm based on the DBSCAN clustering algorithm. We develop a grid coordinate model and use the sliding window search method with an adaptive step size instead of neighborhood search to improve the method. This method can greatly reduce the number of data point searches and accelerate the speed of clustering. The specific experimental steps are as follows. The data points in the queue (QUE) are read, and the grid coordinate model is constructed. Frequently moving regions of targets are found by the sliding window strategy with an adaptive step size. Each region is dynamically adjusted with the aid of the moving object detection algorithm, and then the non-frequently moving regions are processed by masking to improve the effects of the candidate window generation module following the generation of frequently moving regions. Finally, the processed images are entered into the next module. In the third module, an improved selective search algorithm is introduced. As a mature image segmentation algorithm, the selective search algorithm can detect object proposals rapidly and effectively. It can also satisfy the accuracy and real-time requirements of pedestrian detection. Therefore, this thesis uses the selective search algorithm to extract a target from an image, and obtains a series of windows with high probability of complete target extraction. To further exclude the candidate windows with low credibility, we count and adjust the width-height ratio of the pedestrian contours in the INRIA dataset of public pedestrian image datasets. The range of the target width-height ratio is considered the condition for further screening candidate windows. Then, according to the coordinates of the final candidate detection window on the image, the corresponding features are extracted and entered into the classifier of the DPM algorithm. The final pedestrian detection window is obtained by classification.ResultExperiments on the PETS 2009 Bench-mark dataset were conducted to evaluate the performance of the proposed algorithm. Results indicated that compared with the sliding window search strategy, our algorithm effectively reduced some redundant windows. Moreover, the detection efficiency of the DPM algorithm showed improvement. The average precision of the proposed method increased by 1.71%, the LAMR (log-average miss rate) decreased by 2.2%, and speed increased by more than threefold.ConclusionTo deal with the problems of high computational complexity and high LAMR of the classical DPM algorithm, this study proposes a candidate field generation algorithm for pedestrian detection based on grid density clustering to improve the DPM model. This algorithm can realize effective candidate detection with a high recall rate, effectively improve the detection accuracy of the model, reduce the LAMR, and accelerate the detection speed. Furthermore, the proposed algorithm can effectively improve the pedestrian detection performance of the DPM model. However, the processing speed of the three-frame difference method and the Gauss model for background migration still requires improvement in the detection process, and further research is required for background migration processing.关键词:deformable part model;grid density;selective search;pedestrian detection;candidate window15|4|0更新时间:2024-05-07

摘要:ObjectivePedestrian detection is an important research topic in computer vision and plays a crucial role in all parts of life. The deformable part model (DPM) algorithm is a graphical model (Markov random field) that uses a series of parts and the spatial positional relationship of parts to represent an object. The DPM algorithm achieves superior detection accuracy in the field of pedestrian detection. However, because the DPM algorithm uses a sliding window strategy to search for objects in images, it handles a large number of candidate areas with low recall rates before constructing the feature pyramid. This property restricts the detection efficiency of the DPM algorithm. In view of this problem, this study proposes a novel model to improve the process of selecting candidate detection regions and puts forward the DPM algorithm integrated with a clustering algorithm with grid density and a selective search algorithm. Compared with the sliding window search method, the proposed model can provide fewer proposal windows and thus reduces computational complexity. Therefore, this study focuses on the advantages of the proposed model and the DPM algorithm to improve detection efficiency and accuracy.MethodThe proposed model contains three modules:the collection module of the coordinate points of moving targets, the generation module of frequently moving regions of targets, and the generation module of candidate detection windows. The modules are executed in series. Three frame difference methods and Gauss mixture models are used to detect moving targets in the first module. The centroid coordinates of each effective target are calculated by the obtained object contour, and a certain number of moving object coordinate points are collected and stored in the queue. In the second module, a G-cluster clustering algorithm based on grid structure and DBSCAN (density-based spatial clustering of applications with noise) clustering is proposed. The greatest advantage of the DBSCAN clustering algorithm is that it can find clusters of different sizes and shapes. However, this algorithm requires traversing every data point in the dataset, thereby leading to a high running time cost. Therefore, we draw lessons from the idea of the grid clustering algorithm based on the DBSCAN clustering algorithm. We develop a grid coordinate model and use the sliding window search method with an adaptive step size instead of neighborhood search to improve the method. This method can greatly reduce the number of data point searches and accelerate the speed of clustering. The specific experimental steps are as follows. The data points in the queue (QUE) are read, and the grid coordinate model is constructed. Frequently moving regions of targets are found by the sliding window strategy with an adaptive step size. Each region is dynamically adjusted with the aid of the moving object detection algorithm, and then the non-frequently moving regions are processed by masking to improve the effects of the candidate window generation module following the generation of frequently moving regions. Finally, the processed images are entered into the next module. In the third module, an improved selective search algorithm is introduced. As a mature image segmentation algorithm, the selective search algorithm can detect object proposals rapidly and effectively. It can also satisfy the accuracy and real-time requirements of pedestrian detection. Therefore, this thesis uses the selective search algorithm to extract a target from an image, and obtains a series of windows with high probability of complete target extraction. To further exclude the candidate windows with low credibility, we count and adjust the width-height ratio of the pedestrian contours in the INRIA dataset of public pedestrian image datasets. The range of the target width-height ratio is considered the condition for further screening candidate windows. Then, according to the coordinates of the final candidate detection window on the image, the corresponding features are extracted and entered into the classifier of the DPM algorithm. The final pedestrian detection window is obtained by classification.ResultExperiments on the PETS 2009 Bench-mark dataset were conducted to evaluate the performance of the proposed algorithm. Results indicated that compared with the sliding window search strategy, our algorithm effectively reduced some redundant windows. Moreover, the detection efficiency of the DPM algorithm showed improvement. The average precision of the proposed method increased by 1.71%, the LAMR (log-average miss rate) decreased by 2.2%, and speed increased by more than threefold.ConclusionTo deal with the problems of high computational complexity and high LAMR of the classical DPM algorithm, this study proposes a candidate field generation algorithm for pedestrian detection based on grid density clustering to improve the DPM model. This algorithm can realize effective candidate detection with a high recall rate, effectively improve the detection accuracy of the model, reduce the LAMR, and accelerate the detection speed. Furthermore, the proposed algorithm can effectively improve the pedestrian detection performance of the DPM model. However, the processing speed of the three-frame difference method and the Gauss model for background migration still requires improvement in the detection process, and further research is required for background migration processing.关键词:deformable part model;grid density;selective search;pedestrian detection;candidate window15|4|0更新时间:2024-05-07 -

摘要:ObjectiveSaliency detection, as a preprocessing component of computer vision, has received increasing attention in the areas of object relocation, scene classification, semantic segmentation, and visual tracking. Although object detection has been greatly developed, it remains challenging because of a series of realistic factors, such as background complexity and attention mechanism. In the past, many significant target detection methods have been developed. These methods are mainly divided into traditional methods and new methods based on deep learning. The traditional approach is to find significant targets through low-level manual features, such as contrast, color, and texture. These general techniques are proven effective in maintaining image structure and reducing computational effort. However, these low-level features cause difficulty in capturing high-level semantic knowledge about objects and their surroundings. Therefore, these low-level feature-based methods do not achieve excellent results when salient objects are stripped from the stacked background. The saliency detection method based on deep learning mainly seeks significant targets by automatically extracting advanced features. However, most of these advanced models focus on the nonlinear combination of advanced features extracted from the final convolutional layer. The boundaries of salient objects are often extremely blurry due to the lack of low-level visual information such as edges. In these jobs, convolutional neural network (CNN) features are applied directly to the model without any processing. The features extracted from the CNN are generally high in dimension and contain a large amount of noise, thereby reducing the utilization efficiency of CNN features and revealing an opposite effect. Sparse methods can effectively aggregate the salient objects in a feature map and eliminate some of the noise interference. Sparse self-encoding is a sparse method. A traditional saliency recognition method based on sparse self-encoding and image fusion, combined with background prior and contrast analysis and VGG (visual geometry group) saliency calculation, is proposed to solve these problems.MethodThe proposed algorithm is mainly composed of the following:traditional saliency map extraction, VGG feature extraction, sparse self-encoding, and saliency result optimization. The traditional method to be improved is selected, and the corresponding saliency map is calculated. In this experiment, we select four traditional methods with excellent results, namely, discriminative regional feature integration (DRFI), high-dimensional color transform (HDCT), regularized random walks ranking (RRWR), and contour-guided visual search (CGVS). Then, the VGG network is used to extract feature maps. The feature maps obtained by each pooled layer are sparsely self-encoded to obtain 25 sparse saliency feature maps. When a feature map is selected, excessive edge information and texture information are retained because the features extracted by the first three pooling layers are mainly low-level features, indicating duplicate effects with feature maps obtained by the conventional method; thus, the feature maps from low-level are not used. The comparison between the fourth and fifth feature maps shows that the feature information of the fifth pooling layer is excessively lost. After experimental verification, the fifth layer characteristic map exerts an interference effect. Thus, we use the feature map extracted from the fourth pooling layer. Then, these feature maps are placed into the sparse self-encoder to perform the sparse operation to obtain five feature maps. Each feature map is integrated with the corresponding saliency map obtained in the previous volume. Finally, the neural network performs the operation and calculates the final saliency map.ResultOur experiments involved four open datasets:DUT-OMRON, ECSSD, HKU-IS, and MSRA. Then, we obtained half of the images from the four datasets used in the experiment to form a training set and the remaining four test sets. The results obtained can be extremely credible. The following conclusions are drawn from the experiment. 1) The proposed model greatly improves the F value in the four datasets of the four methods, including an increase of 24.53% in the HKU-IS dataset of the DRFI method. 2) The MAE (mean absolute error) value has also been greatly reduced, the least of which is reduced by 12.78% for the ECSSD dataset of the CGVS method and the highest of which is reduced by nearly 50%. 3) The proposed model network has few layers, few parameters, and short calculation time. The training time is approximately 2 h, and the average test time of the image is approximately 0.2 s. On the contrary, Liu chooses an image saliency optimization scheme using adaptive fusion. The training time is approximately 47 h, and the average test time of the image is 56.95 s. The proposed model greatly improves the computational efficiency. 4) The proposed model achieves a significant improvement for the four datasets, especially the HKU-IS and MSRA datasets. These datasets contain difficult images, thereby confirming the effectiveness of the proposed method.ConclusionA low-level feature map based on traditional models, such as a texture and high-level feature map of a sparsely self-encoded VGG network, is proposed to optimize saliency results and greatly improve saliency target recognition. The traditional methods based on DRFI, HDCT, RRWR, and CGVS are tested in the publicly significant object detection datasets DUT-OMRON, ECSSD, HKU-IS, and MSRA, respectively. The obtained F value and MAE value are significantly improved, thereby confirming the effectiveness of the proposed method. Moreover, the method steps and network structure are simple and easy to understand, the training takes little time, and popular promotion can be easily obtained. The limitation of the study is that some of the extracted feature maps are missing. In practice, only the fourth layer of VGG maps is selected, and not all useful information is fully utilized.关键词:significant detection;visual geometry group (VGG);sparse self-encoding;image fusion;convolutional neural network (CNN)13|4|0更新时间:2024-05-07

摘要:ObjectiveSaliency detection, as a preprocessing component of computer vision, has received increasing attention in the areas of object relocation, scene classification, semantic segmentation, and visual tracking. Although object detection has been greatly developed, it remains challenging because of a series of realistic factors, such as background complexity and attention mechanism. In the past, many significant target detection methods have been developed. These methods are mainly divided into traditional methods and new methods based on deep learning. The traditional approach is to find significant targets through low-level manual features, such as contrast, color, and texture. These general techniques are proven effective in maintaining image structure and reducing computational effort. However, these low-level features cause difficulty in capturing high-level semantic knowledge about objects and their surroundings. Therefore, these low-level feature-based methods do not achieve excellent results when salient objects are stripped from the stacked background. The saliency detection method based on deep learning mainly seeks significant targets by automatically extracting advanced features. However, most of these advanced models focus on the nonlinear combination of advanced features extracted from the final convolutional layer. The boundaries of salient objects are often extremely blurry due to the lack of low-level visual information such as edges. In these jobs, convolutional neural network (CNN) features are applied directly to the model without any processing. The features extracted from the CNN are generally high in dimension and contain a large amount of noise, thereby reducing the utilization efficiency of CNN features and revealing an opposite effect. Sparse methods can effectively aggregate the salient objects in a feature map and eliminate some of the noise interference. Sparse self-encoding is a sparse method. A traditional saliency recognition method based on sparse self-encoding and image fusion, combined with background prior and contrast analysis and VGG (visual geometry group) saliency calculation, is proposed to solve these problems.MethodThe proposed algorithm is mainly composed of the following:traditional saliency map extraction, VGG feature extraction, sparse self-encoding, and saliency result optimization. The traditional method to be improved is selected, and the corresponding saliency map is calculated. In this experiment, we select four traditional methods with excellent results, namely, discriminative regional feature integration (DRFI), high-dimensional color transform (HDCT), regularized random walks ranking (RRWR), and contour-guided visual search (CGVS). Then, the VGG network is used to extract feature maps. The feature maps obtained by each pooled layer are sparsely self-encoded to obtain 25 sparse saliency feature maps. When a feature map is selected, excessive edge information and texture information are retained because the features extracted by the first three pooling layers are mainly low-level features, indicating duplicate effects with feature maps obtained by the conventional method; thus, the feature maps from low-level are not used. The comparison between the fourth and fifth feature maps shows that the feature information of the fifth pooling layer is excessively lost. After experimental verification, the fifth layer characteristic map exerts an interference effect. Thus, we use the feature map extracted from the fourth pooling layer. Then, these feature maps are placed into the sparse self-encoder to perform the sparse operation to obtain five feature maps. Each feature map is integrated with the corresponding saliency map obtained in the previous volume. Finally, the neural network performs the operation and calculates the final saliency map.ResultOur experiments involved four open datasets:DUT-OMRON, ECSSD, HKU-IS, and MSRA. Then, we obtained half of the images from the four datasets used in the experiment to form a training set and the remaining four test sets. The results obtained can be extremely credible. The following conclusions are drawn from the experiment. 1) The proposed model greatly improves the F value in the four datasets of the four methods, including an increase of 24.53% in the HKU-IS dataset of the DRFI method. 2) The MAE (mean absolute error) value has also been greatly reduced, the least of which is reduced by 12.78% for the ECSSD dataset of the CGVS method and the highest of which is reduced by nearly 50%. 3) The proposed model network has few layers, few parameters, and short calculation time. The training time is approximately 2 h, and the average test time of the image is approximately 0.2 s. On the contrary, Liu chooses an image saliency optimization scheme using adaptive fusion. The training time is approximately 47 h, and the average test time of the image is 56.95 s. The proposed model greatly improves the computational efficiency. 4) The proposed model achieves a significant improvement for the four datasets, especially the HKU-IS and MSRA datasets. These datasets contain difficult images, thereby confirming the effectiveness of the proposed method.ConclusionA low-level feature map based on traditional models, such as a texture and high-level feature map of a sparsely self-encoded VGG network, is proposed to optimize saliency results and greatly improve saliency target recognition. The traditional methods based on DRFI, HDCT, RRWR, and CGVS are tested in the publicly significant object detection datasets DUT-OMRON, ECSSD, HKU-IS, and MSRA, respectively. The obtained F value and MAE value are significantly improved, thereby confirming the effectiveness of the proposed method. Moreover, the method steps and network structure are simple and easy to understand, the training takes little time, and popular promotion can be easily obtained. The limitation of the study is that some of the extracted feature maps are missing. In practice, only the fourth layer of VGG maps is selected, and not all useful information is fully utilized.关键词:significant detection;visual geometry group (VGG);sparse self-encoding;image fusion;convolutional neural network (CNN)13|4|0更新时间:2024-05-07 -

摘要:ObjectiveThe leakage monitoring technology of high fill channels is key to the safe monitoring of the South-to-North Water Transfer Project. Aimed at addressing the current problem of leakage detection for high fill channels being easily affected by the environment and resulting in inaccurate judgment results, a model based on Gabor-support vector machine (SVM) is designed for damage monitoring in the cement slope of the high fill channel in the middle route of the South-to-North Water Transfer Project. The high fill channel is widely distributed in the middle route of the South-to-North Water Transfer Project. As a result of the high filling height, wide distribution range, and complex engineering geological conditions of the middle route of the South-to-North Water Transfer Project, the lining panel is cracked, the canal slope surface is damaged, and seepage occurs. Although the effect of leakage is small, the long channel still leads to a large leakage. Therefore, monitoring the seepage of the high fill channel is necessary to ensure its safe operation.MethodThe image of the high fill channel cement is preprocessed. In general, because the acquired image is affected by various noises, it needs to be preprocessed before extracting its features. These processing methods include image enhancement, median filtering, and grayscale processing. Then, Gabor wavelet is used to extract the texture features of the image, as well as the image convolution, commonly used amplitude, and phase to represent the texture features. The amplitude information reflects the energy spectrum of the image and is relatively stable. Therefore, amplitude information is selected as the extracted characteristic data. After analyzing the mean and variance eigenvalues of the amplitude, the image features of the variance are linearly separable. Therefore, the variance characteristic data of amplitude are considered as the characteristic data for classification and recognition. Under the scale and direction of different Gabor wavelets, the image characteristics of the extracted cement slope are analyzed to find the optimal scale and direction parameter group. The scale range is 17, and the direction range is 113. Different Gabor filters are obtained according to different directions and scales. Different Gabor filters are used to filter the high fill cement slope and thereby obtain different image features. Finally, according to the well-trained sample features, SVM is used to classify the damage degrees of cement slope, and the recognition results are refined with the following labels:normal, crack, fracture, and hole. At the same time, various feature extraction methods are studied to objectively reflect the recognition effects of Gabor-SVM. These methods include histogram-SVM, grayscale symbiosis matrix-SVM, and Canny edge detection algorithm-SVM.ResultExperimental results show that the damage recognition model of the cement surface based on Gabor-SVM tends to have a stable value when the small wave has the 6th scales and the 12th directions. The recognition rate of the normal slope image is generally good and stable, mostly distributed between 0.8 and 1.0. The recognition rate of the slope image under the crack category presents stable growth from low to high, but the overall recognition rate is low, with most values being in the range of 0.500.65. The recognition rate of the slope image of the hole type fluctuates greatly, and its recognition rate has a significant relationship with scale changes. For example, when the scale value is 1 or 2, the recognition rate is low. When the scale value is 3, 4, or 5, the recognition rate increases gradually. When the scale value is 6 or 7, the recognition rate decreases. The recognition rate is mostly distributed between 0.78 and 0.88. A certain relationship exists between the size of the slope image recognition rate of the fracture category and the scale. It has the characteristic of fluctuating growth from low to high and then to low, and the recognition rate is generally between 0.80 and 0.95. The normal, crack, hole, and fracture recognition rates are 0.98, 0.63, 0.88, and 0.90, respectively. The average recognition rate of the Gabor-SVM method is approximately 0.85. Compared with the average recognition rates of the other methods (approximately 0.50), that of the proposed method has better recognition ability.ConclusionThe damage recognition model based on Gabor-SVM has a slight recognition effect. The recognition effect peaks given 6 scales/12 directions. The average recognition rate of the Gabor-SVM method for the slope of the high fill channel cement is approximately 0.85. Meanwhile, the cement surface recognition effect of the crack category is unsatisfactory at 0.63. Thus, further research is required to provide technical support for finding the hidden dangers of high fill channels in the South-to-North Water Transfer Project.关键词:middle route of South-to-North Water Transfer Project;feature extraction of multiple scales and directions;damage recognition;Gabor wavelet;support vector machine (SVM) classification11|4|0更新时间:2024-05-07