最新刊期

卷 24 , 期 8 , 2019

- 摘要:As a representative technique for deep learning, the deep convolution neural network (DCNN) with strong feature learning and nonlinear mapping ability in the field of digital image processing offers a novel opportunity for image denoising research. DCNN-based denoising models show significant advantages over traditional methods in terms of their denoising effect and execution efficiency. However, most of the existing image denoising models are driven by data. Given their inherent restrictions, the denoising performance of these models can be further improved. To promote the development of existing image denoising technologies, some key challenges that restrict their further improvement must be analyzed and addressed. We first summarize the core ideas of traditional image denoising algorithms based on three types of prior knowledge of the natural image, namely, non-local self-similarity, sparsity, and low rank, and then analyze the advantages and disadvantages of these algorithms. Image denoising algorithms modeled with prior knowledge can flexibly deal with distorted images under different noise levels. Unfortunately, they demonstrate the following limitations:1) the limited hand-crafted image priors are not enough to describe all changes in the image structure, thereby limiting the denoising ability of these algorithms; 2) most of the traditional image denoising algorithms iteratively solve their objective functions, thereby resulting in a high computational complexity; and 3) the optimal solution of the objective function needs to adjust several parameters manually according to the actual situation. Based on the above problems, we point out that the technical advantage of the DCNN-based denoising model lies in its strong nonlinear approximation supported by a graphics processing unit. The inherent characteristics of the DCNN-based denoising model are then analyzed, the bottleneck problems that restrict their future development are presented, and the possible solutions (research directions) to these problems are discussed in detail. A thorough analysis reveals many bottleneck problems in data-driven DCNN-based denoising models that need to be solved, including:1) the small receptive field of the DCNN network that limits the range of image feature representation and the ability to fully utilize the priors contained in natural images; 2) the strong dependence of DCNN-based model parameters on the training dataset, that is, the optimal denoising effect can only be obtained if the distortion level of the observed image is close to that of the training images; and 3) the training set cannot be easily constructed and the denoising model cannot be easily trained to denoise an image if both the noise type and level of noisy image are unknown. To solve these problems, we expand the receptive field of the convolution kernel, weaken the dependency between the network parameters and the training set, or fully utilize the modeling ability of the DCNN network. Therefore, the bottlenecks of the existing DCNN denoising models can be addressed, and research on image denoising algorithms can move to a higher level. In this paper, the technical advantages and development bottlenecks of DCNN in the field of image denoising are summarized, and some future research directions for the image denoising method are proposed. This paper should be of interest to readers in the area of image denoising.关键词:review;image denoising;deep convolutional neural network(DCNN);bottleneck problem;receptive field;data dependencies;parameter space101|63|4更新时间:2024-05-07

Scholar View

-

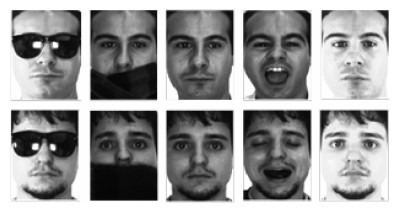

摘要:ObjectiveAs an important part of human biometrics, age information has extensive application prospects in the fields of security monitoring, human-computer interaction, and video retrieval. As an emerging biometric recognition technology, age estimation technology based on face image is an important research subject in the fields of computer vision and face analysis. With the fast development of deep learning, the face age estimation method based on deep convolutional neural network has become a research hotspot in these fields.MethodReal and apparent age estimation methods based on deep learning are reviewed based on extensive research and the latest achievements of relevant literature. The basic ideas and characteristics of various methods are analyzed. The research status, key technologies, and limitations based on various age estimation methods are summarized. The performance of various methods on common age estimation datasets is compared. Finally, existing major research problems are summarized and discussed, and potential future research directions are presented.ResultFace age estimation can be divided into real and apparent age estimation according to the subjectivity and objectivity of age labeling, and it can be divided into age group estimation and age value estimation according to the accuracy of age labeling. With the deep convolutional neural network (DCNN) becoming a hotspot in the field of computer vision, from 5-conv 3-fc's AlexNet 33 to 16-conv 3-fc's VGG-19 network and from 21-conv 1-fc's GoogleNet to thousands of layers of ResNets, the learning ability and the depth of the network have improved considerably. An increasing number of face age estimation researchers are focusing on face age estimation based on DCNN with powerful feature extraction and learning capabilities. According to different views, face age estimation methods based on deep learning can be roughly divided into three categories:regression model, multi-class classification, and rank model. Regression model uses regression analysis to achieve age estimation by establishing a functional model that characterizes the age variation of faces. Regression-based age estimation methods may be affected by overfitting due to the randomness in the aging process and the fuzzy mapping between the appearance of the face and its actual age. The age of a person can be easily divided into several age groups. Age group estimation under unconstrained conditions has become a current research topic, and the multi-classification model is the main means of achieving age group estimation because the regression-based age estimation model has difficulty achieving convergence. Moreover, age group classification can meet the needs of most practical applications. The age estimation model based on the rank model regards the age label as a data sequence and converts the age estimation problem into a problem in which the age to be estimated is greater or less than a certain age, thereby transforming the age estimation problem into a series of binary classification problems. Other technologies in the field of computer vision are applied in face age estimation. Although various deep learning-based face age estimation methods have achieved considerable progress, the performance of age estimation fails to meet the practical needs of unconstrained age estimation because current face age estimation research continues to face the following difficulties and challenges:1) insufficient prior knowledge introduced to face age estimation methods; 2) lack of face age estimation feature representation that considers global and local details; 3) the limitations of existing face age estimation datasets; and 4) multi-scale face age estimation problems in practical application environments.ConclusionDeep learning-based face age estimation methods have achieved considerable progress, but they perform poorly due to the complexity of actual application scenarios. A comprehensive review of the current deep learning-based face age estimation techniques is needed to help researchers solve existing problems. Age estimation techniques based on face images are expected to play an important role in the future with the continued efforts of researchers and the in-depth development of related technologies.关键词:face age estimation;deep learning;deep convolutional neural networks(DCNNs);real age;apparent age23|47|10更新时间:2024-05-07

摘要:ObjectiveAs an important part of human biometrics, age information has extensive application prospects in the fields of security monitoring, human-computer interaction, and video retrieval. As an emerging biometric recognition technology, age estimation technology based on face image is an important research subject in the fields of computer vision and face analysis. With the fast development of deep learning, the face age estimation method based on deep convolutional neural network has become a research hotspot in these fields.MethodReal and apparent age estimation methods based on deep learning are reviewed based on extensive research and the latest achievements of relevant literature. The basic ideas and characteristics of various methods are analyzed. The research status, key technologies, and limitations based on various age estimation methods are summarized. The performance of various methods on common age estimation datasets is compared. Finally, existing major research problems are summarized and discussed, and potential future research directions are presented.ResultFace age estimation can be divided into real and apparent age estimation according to the subjectivity and objectivity of age labeling, and it can be divided into age group estimation and age value estimation according to the accuracy of age labeling. With the deep convolutional neural network (DCNN) becoming a hotspot in the field of computer vision, from 5-conv 3-fc's AlexNet 33 to 16-conv 3-fc's VGG-19 network and from 21-conv 1-fc's GoogleNet to thousands of layers of ResNets, the learning ability and the depth of the network have improved considerably. An increasing number of face age estimation researchers are focusing on face age estimation based on DCNN with powerful feature extraction and learning capabilities. According to different views, face age estimation methods based on deep learning can be roughly divided into three categories:regression model, multi-class classification, and rank model. Regression model uses regression analysis to achieve age estimation by establishing a functional model that characterizes the age variation of faces. Regression-based age estimation methods may be affected by overfitting due to the randomness in the aging process and the fuzzy mapping between the appearance of the face and its actual age. The age of a person can be easily divided into several age groups. Age group estimation under unconstrained conditions has become a current research topic, and the multi-classification model is the main means of achieving age group estimation because the regression-based age estimation model has difficulty achieving convergence. Moreover, age group classification can meet the needs of most practical applications. The age estimation model based on the rank model regards the age label as a data sequence and converts the age estimation problem into a problem in which the age to be estimated is greater or less than a certain age, thereby transforming the age estimation problem into a series of binary classification problems. Other technologies in the field of computer vision are applied in face age estimation. Although various deep learning-based face age estimation methods have achieved considerable progress, the performance of age estimation fails to meet the practical needs of unconstrained age estimation because current face age estimation research continues to face the following difficulties and challenges:1) insufficient prior knowledge introduced to face age estimation methods; 2) lack of face age estimation feature representation that considers global and local details; 3) the limitations of existing face age estimation datasets; and 4) multi-scale face age estimation problems in practical application environments.ConclusionDeep learning-based face age estimation methods have achieved considerable progress, but they perform poorly due to the complexity of actual application scenarios. A comprehensive review of the current deep learning-based face age estimation techniques is needed to help researchers solve existing problems. Age estimation techniques based on face images are expected to play an important role in the future with the continued efforts of researchers and the in-depth development of related technologies.关键词:face age estimation;deep learning;deep convolutional neural networks(DCNNs);real age;apparent age23|47|10更新时间:2024-05-07 -

摘要:ObjectiveIn recent years, as an emerging biometrics technology, low-resolution palmprint recognition has attracted attention due to its potential for civilian applications. Many effective palmprint recognition methods have been proposed. These traditional methods can be roughly divided into categories, such as texture-based, line-based, subspace learning-based, correlation filter-based, local descriptor-based, and orientation coding-based. In the past decade, deep learning was the most important technique in the field of artificial intelligence, introducing performance breakthroughs in many fields such as speech recognition, natural language processing, computer vision, image and video analysis, and multimedia. In the field of biometrics, especially in face recognition, deep learning has become the most mainstream technology. However, research on deep learning-based palmprint recognition remains at the preliminary stage. Research on deep learning-based palmprint recognition is relatively rare, and in-depth analysis and discussion on deep learning-based palmprint recognition is scarce. In addition, most existing work on deep learning-based palmprint recognition exploited simple networks only. In palmprint databases, the palmprint images were usually captured in two different sessions. In traditional palmprint recognition work, the images captured in the first session were usually treated as the training data, and the images captured in the second session were typically used as the test data. However, in existing work on deep learning-based palmprint recognition, the images captured in the first and second sessions are exploited as the training data, which leads to a high recognition accuracy. In this study, we evaluate the performance of various convolutional neural networks (CNNs) in palmprint recognition to thoroughly investigate the problem of deep learning-based palmprint recognition.MethodWe systematically review the classic CNNs in recent years and analyze the structure of various networks and their underlying connections. Then, we perform a large-scale performance evaluation for palmprint recognition. First, we select eight typical CNN networks, namely, AlexNet, VGG, Inception_v3, ResNet, Inception_v4, Inception_ResNet_v2, DenseNet, and Xception, and evaluate these networks on five palmprint databases to determine the best network. We choose the pretrained model in ImageNet Large Scale Visual Recognition Challenge for training because training the CNN model in the case of insufficient data (the scale of the dataset is small) is time consuming and may lead to poor results. Second, we conduct evaluations by using six learning rates from large to small to analyze the impact on performance and obtain the suitable learning rate. Third, we compare the performance of VGG-16 and VGG-19 and ResNet18, ResNet34, and ResNet50 in the evaluation on different layer numbers of the network. Fourth, starting from a single training data, we gradually increase the data amount until the training data contains all the data of the first session to analyze the influence of different training data quantities on performance. Finally, the performance of CNNs is compared with that of several traditional methods, such as competitive code, ordinal code, RLOC, and LLDP.ResultExperimental results on eight CNNs with different structures show that ResNet18 outperforms other networks and can achieve 100% recognition rate on the PolyU M_B database. The performance of DenseNet121 is similar to that of ResNe18, and the performance of AlexNet is poor. To evaluate the learning rate, results show that 5×10-5 is suitable for the palmprint dataset used in this study. If the learning rate is too large, then the performance of these CNNs will be poor. In addition, the appropriate learning rate of the VGG network is 10-5. The performance evaluation of different numbers of network layers indicated that the recognition rate of VGG-16 and VGG-19 is similar. As the layer number of ResNet increases from 18 to 34 and to 50, the recognition rate gradually decreases. Generally speaking, more data involved in network training results in improved performance. In the early stage of the increase in the amount of data, the performance is significantly improved. A comparison of the performance of CNNs with that of traditional non-deep learning methods shows that the performance of CNNs is equivalent to that of non-deep learning methods on the PolyU M_B database. On other databases, the performance of CNNs is worse than that of traditional non-deep learning methods.ConclusionThis paper reviews the CNNs proposed in the literature and conducts a large-scale performance evaluation of palmprint recognition on five different palmprint databases under different network structures, learning rates, network layers, and training data amounts. Results show that ResNet is suitable for palmprint recognition and that 5×10-5 is an appropriate learning rate, which can help researchers engaged in deep learning and palmprint recognition. We also compared the performance of CNNs with that of four traditional methods. The overall performance of CNN is slightly worse than that of traditional methods, but we can still see the great potential of deep learning methods.关键词:biometrics;palmprint recognition;deep learning(DL);convolutional neural network(CNN);palmprint dataset;performance evaluation43|352|2更新时间:2024-05-07

摘要:ObjectiveIn recent years, as an emerging biometrics technology, low-resolution palmprint recognition has attracted attention due to its potential for civilian applications. Many effective palmprint recognition methods have been proposed. These traditional methods can be roughly divided into categories, such as texture-based, line-based, subspace learning-based, correlation filter-based, local descriptor-based, and orientation coding-based. In the past decade, deep learning was the most important technique in the field of artificial intelligence, introducing performance breakthroughs in many fields such as speech recognition, natural language processing, computer vision, image and video analysis, and multimedia. In the field of biometrics, especially in face recognition, deep learning has become the most mainstream technology. However, research on deep learning-based palmprint recognition remains at the preliminary stage. Research on deep learning-based palmprint recognition is relatively rare, and in-depth analysis and discussion on deep learning-based palmprint recognition is scarce. In addition, most existing work on deep learning-based palmprint recognition exploited simple networks only. In palmprint databases, the palmprint images were usually captured in two different sessions. In traditional palmprint recognition work, the images captured in the first session were usually treated as the training data, and the images captured in the second session were typically used as the test data. However, in existing work on deep learning-based palmprint recognition, the images captured in the first and second sessions are exploited as the training data, which leads to a high recognition accuracy. In this study, we evaluate the performance of various convolutional neural networks (CNNs) in palmprint recognition to thoroughly investigate the problem of deep learning-based palmprint recognition.MethodWe systematically review the classic CNNs in recent years and analyze the structure of various networks and their underlying connections. Then, we perform a large-scale performance evaluation for palmprint recognition. First, we select eight typical CNN networks, namely, AlexNet, VGG, Inception_v3, ResNet, Inception_v4, Inception_ResNet_v2, DenseNet, and Xception, and evaluate these networks on five palmprint databases to determine the best network. We choose the pretrained model in ImageNet Large Scale Visual Recognition Challenge for training because training the CNN model in the case of insufficient data (the scale of the dataset is small) is time consuming and may lead to poor results. Second, we conduct evaluations by using six learning rates from large to small to analyze the impact on performance and obtain the suitable learning rate. Third, we compare the performance of VGG-16 and VGG-19 and ResNet18, ResNet34, and ResNet50 in the evaluation on different layer numbers of the network. Fourth, starting from a single training data, we gradually increase the data amount until the training data contains all the data of the first session to analyze the influence of different training data quantities on performance. Finally, the performance of CNNs is compared with that of several traditional methods, such as competitive code, ordinal code, RLOC, and LLDP.ResultExperimental results on eight CNNs with different structures show that ResNet18 outperforms other networks and can achieve 100% recognition rate on the PolyU M_B database. The performance of DenseNet121 is similar to that of ResNe18, and the performance of AlexNet is poor. To evaluate the learning rate, results show that 5×10-5 is suitable for the palmprint dataset used in this study. If the learning rate is too large, then the performance of these CNNs will be poor. In addition, the appropriate learning rate of the VGG network is 10-5. The performance evaluation of different numbers of network layers indicated that the recognition rate of VGG-16 and VGG-19 is similar. As the layer number of ResNet increases from 18 to 34 and to 50, the recognition rate gradually decreases. Generally speaking, more data involved in network training results in improved performance. In the early stage of the increase in the amount of data, the performance is significantly improved. A comparison of the performance of CNNs with that of traditional non-deep learning methods shows that the performance of CNNs is equivalent to that of non-deep learning methods on the PolyU M_B database. On other databases, the performance of CNNs is worse than that of traditional non-deep learning methods.ConclusionThis paper reviews the CNNs proposed in the literature and conducts a large-scale performance evaluation of palmprint recognition on five different palmprint databases under different network structures, learning rates, network layers, and training data amounts. Results show that ResNet is suitable for palmprint recognition and that 5×10-5 is an appropriate learning rate, which can help researchers engaged in deep learning and palmprint recognition. We also compared the performance of CNNs with that of four traditional methods. The overall performance of CNN is slightly worse than that of traditional methods, but we can still see the great potential of deep learning methods.关键词:biometrics;palmprint recognition;deep learning(DL);convolutional neural network(CNN);palmprint dataset;performance evaluation43|352|2更新时间:2024-05-07

Review

-

摘要:ObjectiveImage colorization is the process of assigning color information to grayscale images and retains grayscale image texture information. The aim of colorization is to increase the visual appeal of an image. This technology is widely used in many areas, such as medical image illustrations, remote sensing images, and old black-and-white photos. Colorization methods have to main categories, namely, user-assisted and automatic colorization methods. User-assisted colorization methods require users to manually define a layer mask or mark color scribbles on a grayscale image. This method is time-consuming and cannot provide sufficient and desirable color scribbles. Automatic colorization methods can reduce user effort and transfer color from a sample color image. The color image is called the reference/source image, and the grayscale image to be colorized is called the target image. The primary difficulty of these automatic colorization methods is to accurately transfer colors and satisfy spatial consistency. Most of these approaches achieve spatial coherency by using weighted filter or global optimization algorithms during colorizing. However, these methods may result in oversmoothed colorization or blur color in edge regions.MethodWe use a grayscale image colorization approach based on a local adaptive weighted average filter. This proposed method considers local neighborhood pixel information and automatically adjusts the domain pixel weights to ensure correct color migration and clear boundary results. A reference image with similar contents as the target image is provided to achieve color transfer. The method includes the following steps:First, the class probability distribution and classification are obtained. Support vector machine (SVM) is adopted to calculate class probability based on feature descriptors, mean luminance, entropy, variance, and local binary pattern (LBP) and Gabor features. The probability results and classification are post-processed to enhance the spatial coherency combined with superpixels that are extracted based on improved simple linear iterative clustering (ISLIC). Second, the color candidate in the reference image is determined based on matching low-level features in the corresponding class. Thereafter, each pixel with high-confidence class probability is assigned a color from the candidate pixel by using an adaptive weight filter. The adaptive weight, which is defined by the class probability of small neighborhood pixels around the corresponding pixel, can improve local spatial consistency and avoid confusion colorization in the boundary region. Finally, the optimization-based colorization algorithm is used on the remaining unassigned pixels with low-confidence class probability.ResultThis paper analyzes single pixel-based, weighted average, and adaptive weighted average methods. Results demonstrate that the adaptive weighted average method is better than the other strategies. The colorized images illustrate that our method takes advantage of the other strategies and that it not only has high spatial consistence but also ensures the boundary detail information with high color discrimination. Compared with previous colorization methods, our method works well in colorization. Colorized images achieved by Gupta's method and Irony's method have obvious erroneous colors due to inaccurate matching or corresponding pixels. Charpiat's method produce oversmoothed color on the boundary regions. Images colorized by using Zhang's method are extracted by training more than a million color images and diversities of colors based on CNN. However, some unreliable and undistinguished colors appear on the boundary contours. The colorization results obtained by using a local adaptive weighted average filter ensures the correctness of color transfer and spatial consistence and avoids oversmoothing simultaneously in the edge areas. Thus, our proposed method performs better than the existing methods. The evaluation scores for experimental images using our method are higher than 3.5, especially in local areas, and the evaluation results are close to or higher than 4.0, which is greater than the results of those using the existing colorization method.ConclusionA new colorization approach using color reference images is presented in this paper. The proposed method combines SVM and ISLIC to determine the class probabilities and classifications with high spatial coherence for images. The corresponding pixels are matched based on the space features according to the same class label between the reference and the target images. A local adaptive weighted average filter is defined to transfer the chrominance from the source image to the grayscale image with high-confidence pixels to facilitate spatially coherent colorization and avoid oversmoothing. The colorized pixels are considered automatic scribbles to be spread across all pixels by using global optimization to obtain the final colorization result. Experimental results demonstrate that our proposed method can achieve satisfactory results and is competitive with the existing methods. However, several limitations are observed. First, this method is not fully automated, requiring some human intervention to provide class samples during the process of calculating class probability. Second, the selected space features are not optimal for all images, especially for images with complex textures and rich colors. We will focus on fully automatic operation and general features in our future work.关键词:colorization;color transfer;local spatial coherency;consistency;smooth16|31|4更新时间:2024-05-07

摘要:ObjectiveImage colorization is the process of assigning color information to grayscale images and retains grayscale image texture information. The aim of colorization is to increase the visual appeal of an image. This technology is widely used in many areas, such as medical image illustrations, remote sensing images, and old black-and-white photos. Colorization methods have to main categories, namely, user-assisted and automatic colorization methods. User-assisted colorization methods require users to manually define a layer mask or mark color scribbles on a grayscale image. This method is time-consuming and cannot provide sufficient and desirable color scribbles. Automatic colorization methods can reduce user effort and transfer color from a sample color image. The color image is called the reference/source image, and the grayscale image to be colorized is called the target image. The primary difficulty of these automatic colorization methods is to accurately transfer colors and satisfy spatial consistency. Most of these approaches achieve spatial coherency by using weighted filter or global optimization algorithms during colorizing. However, these methods may result in oversmoothed colorization or blur color in edge regions.MethodWe use a grayscale image colorization approach based on a local adaptive weighted average filter. This proposed method considers local neighborhood pixel information and automatically adjusts the domain pixel weights to ensure correct color migration and clear boundary results. A reference image with similar contents as the target image is provided to achieve color transfer. The method includes the following steps:First, the class probability distribution and classification are obtained. Support vector machine (SVM) is adopted to calculate class probability based on feature descriptors, mean luminance, entropy, variance, and local binary pattern (LBP) and Gabor features. The probability results and classification are post-processed to enhance the spatial coherency combined with superpixels that are extracted based on improved simple linear iterative clustering (ISLIC). Second, the color candidate in the reference image is determined based on matching low-level features in the corresponding class. Thereafter, each pixel with high-confidence class probability is assigned a color from the candidate pixel by using an adaptive weight filter. The adaptive weight, which is defined by the class probability of small neighborhood pixels around the corresponding pixel, can improve local spatial consistency and avoid confusion colorization in the boundary region. Finally, the optimization-based colorization algorithm is used on the remaining unassigned pixels with low-confidence class probability.ResultThis paper analyzes single pixel-based, weighted average, and adaptive weighted average methods. Results demonstrate that the adaptive weighted average method is better than the other strategies. The colorized images illustrate that our method takes advantage of the other strategies and that it not only has high spatial consistence but also ensures the boundary detail information with high color discrimination. Compared with previous colorization methods, our method works well in colorization. Colorized images achieved by Gupta's method and Irony's method have obvious erroneous colors due to inaccurate matching or corresponding pixels. Charpiat's method produce oversmoothed color on the boundary regions. Images colorized by using Zhang's method are extracted by training more than a million color images and diversities of colors based on CNN. However, some unreliable and undistinguished colors appear on the boundary contours. The colorization results obtained by using a local adaptive weighted average filter ensures the correctness of color transfer and spatial consistence and avoids oversmoothing simultaneously in the edge areas. Thus, our proposed method performs better than the existing methods. The evaluation scores for experimental images using our method are higher than 3.5, especially in local areas, and the evaluation results are close to or higher than 4.0, which is greater than the results of those using the existing colorization method.ConclusionA new colorization approach using color reference images is presented in this paper. The proposed method combines SVM and ISLIC to determine the class probabilities and classifications with high spatial coherence for images. The corresponding pixels are matched based on the space features according to the same class label between the reference and the target images. A local adaptive weighted average filter is defined to transfer the chrominance from the source image to the grayscale image with high-confidence pixels to facilitate spatially coherent colorization and avoid oversmoothing. The colorized pixels are considered automatic scribbles to be spread across all pixels by using global optimization to obtain the final colorization result. Experimental results demonstrate that our proposed method can achieve satisfactory results and is competitive with the existing methods. However, several limitations are observed. First, this method is not fully automated, requiring some human intervention to provide class samples during the process of calculating class probability. Second, the selected space features are not optimal for all images, especially for images with complex textures and rich colors. We will focus on fully automatic operation and general features in our future work.关键词:colorization;color transfer;local spatial coherency;consistency;smooth16|31|4更新时间:2024-05-07 -

摘要:ObjectiveImage super-resolution is an important branch of digital image processing and computer vision. This method has been widely used in video surveillance, medical imaging, and security and surveillance imaging in recent years. Super-resolution aims to reconstruct a high-resolution image from an observed degraded low-resolution one. Early methods include interpolation, neighborhood embedding, and sparse coding. Deep convolutional neural network has recently become a major research topic in the field of single image super-resolution reconstruction. This network can learn the mapping between high-and low-resolution images better than traditional learning-based methods. However, many deep learning-based methods present two evident drawbacks. First, most methods use chained stacking to create the network. Each layer of the network is only related to its previous layer, leading to weak inter-layer relationships. Second, the hierarchical features of the network are partially utilized. These shortcomings can lead to loss of high frequency components. A novel image super-resolution reconstruction method based on multi-staged fusion network is proposed to address these drawbacks. This method is used to improve the quality of image reconstruction.MethodNumerous studies have shown that feature re-usage can improve the capability of the network to extract and express features. Thus, our research is based on the idea of feature re-usage. We implemented this idea through the multipath connection, which includes two forms, namely, global multipath mode and local fusion unit. First, the proposed model uses an interpolated low-resolution image as input. The feature extraction network extracts shallow features as the mixture network's input. Mixture network consists of two parts. The first one is pixel encoding network, which is used to obtain structural feature information of the image. This network presents four weight layers, each consisting of 64 filters with a size of 1×1, which can guarantee that the feature map distribution will be protected. This process is similar to those of encoding and decoding pixels. The other one is multi-path feedforward network, which is used to extract the high-frequency components needed for reconstruction. This network is formed by staged feature fusion units connected by multi-path mode. Each fusion unit is composed of dense connection, residual learning, and feature selection layers. The dense connection layer is composed of four weight layers with 32 filters with a size of 3×3. This layer is used to improve the nonlinear mapping capability of the network and extract substantial high frequency information. The residual learning layer contains a 1×1 weight layer to alleviate the vanishing gradient problem. Feature selection layer uses a 1×1 weight layer to obtain effective features. Then, the multi-path mode is used to connect different units, which could enhance the relationship between the fusion units. This mode extracts substantial effective features and increases the utilization of hierarchical features. Both sub-networks output 64 feature-maps, fusing their output features as input of reconstructed network that includes a 1×1 weight layer. Therefore, the final residual image between low-and high-resolution images can be obtained. Finally, the reconstructed image can be obtained by combining the original low-resolution and residual images. In the training process, we select the rectified linear unit as the activation function to accelerate the training process and avoid gradient vanishing. For a weight layer with a filter size of 3×3, we pad one pixel to ensure that all feature-maps have the same size, which can improve the edge information of the reconstructed image. Furthermore, the initial learning rate is set to 0.1 and then decreased to half every 10 epochs, which can accelerate network convergence. We set mini-batch size of SGD and momentum parameter to 0.9. We use 291 images as the training set. In addition, we used data augmentation (rotation 90°, 180°, 270°, and vertical flip) to augment the training set, which could avoid the overfitting problems and increase sample diversity. The network is trained with multiple scale factors (×2, ×3, and×4) to ensure that it could be used to solve the reconstruction problem of different scale factors.ResultAll experiments are implemented under the PyTorch framework. We use four common benchmark sets (Set5, Set14, B100, and Urban100) to evaluate our model. Moreover, we use peak signal-to-noise ratio as evaluation criteria. The images of RGB space are converted to YCbCr space. The proposed algorithm only reconstructs the luminance channel Y because human vision is highly sensitive to the luminance channel. The Cb and Cr channels are reconstructed by using the interpolation method. Experimental results on four benchmark sets for scaling factor of four are 31.69 dB, 28.24 dB, 27.39 dB, and 25.46 dB, respectively. The proposed method shows better performance and visual effects than Bicubic, A+, SRCNN, VDSR, DRCN, and DRRN. In addition, we have validated the effectiveness of the proposed components, which includes multipath mode, staged fusion unit, and pixel coding network.ConclusionThe proposed network overcomes the shortcoming of the chain structure and extracts substantial high-frequency information by fully utilizing the hierarchical features. Moreover, such network simultaneously uses the structural feature information carried by the low-resolution image to complete the reconstruction together. Furthermore, techniques that include dense connection and residual learning are adopted to accelerate convergence and mitigate gradient problems during training. Extensive experiments show that the proposed method can reconstruct an image with more high-frequency details than other methods with the same preprocessing step. We will consider using the idea of recursive learning and increasing the number of training samples to optimize the model further in the subsequent work.关键词:convolutional neural network (CNN);super-resolution reconstructions;hierarchical features;staged feature fusion;multi-path mode64|118|9更新时间:2024-05-07

摘要:ObjectiveImage super-resolution is an important branch of digital image processing and computer vision. This method has been widely used in video surveillance, medical imaging, and security and surveillance imaging in recent years. Super-resolution aims to reconstruct a high-resolution image from an observed degraded low-resolution one. Early methods include interpolation, neighborhood embedding, and sparse coding. Deep convolutional neural network has recently become a major research topic in the field of single image super-resolution reconstruction. This network can learn the mapping between high-and low-resolution images better than traditional learning-based methods. However, many deep learning-based methods present two evident drawbacks. First, most methods use chained stacking to create the network. Each layer of the network is only related to its previous layer, leading to weak inter-layer relationships. Second, the hierarchical features of the network are partially utilized. These shortcomings can lead to loss of high frequency components. A novel image super-resolution reconstruction method based on multi-staged fusion network is proposed to address these drawbacks. This method is used to improve the quality of image reconstruction.MethodNumerous studies have shown that feature re-usage can improve the capability of the network to extract and express features. Thus, our research is based on the idea of feature re-usage. We implemented this idea through the multipath connection, which includes two forms, namely, global multipath mode and local fusion unit. First, the proposed model uses an interpolated low-resolution image as input. The feature extraction network extracts shallow features as the mixture network's input. Mixture network consists of two parts. The first one is pixel encoding network, which is used to obtain structural feature information of the image. This network presents four weight layers, each consisting of 64 filters with a size of 1×1, which can guarantee that the feature map distribution will be protected. This process is similar to those of encoding and decoding pixels. The other one is multi-path feedforward network, which is used to extract the high-frequency components needed for reconstruction. This network is formed by staged feature fusion units connected by multi-path mode. Each fusion unit is composed of dense connection, residual learning, and feature selection layers. The dense connection layer is composed of four weight layers with 32 filters with a size of 3×3. This layer is used to improve the nonlinear mapping capability of the network and extract substantial high frequency information. The residual learning layer contains a 1×1 weight layer to alleviate the vanishing gradient problem. Feature selection layer uses a 1×1 weight layer to obtain effective features. Then, the multi-path mode is used to connect different units, which could enhance the relationship between the fusion units. This mode extracts substantial effective features and increases the utilization of hierarchical features. Both sub-networks output 64 feature-maps, fusing their output features as input of reconstructed network that includes a 1×1 weight layer. Therefore, the final residual image between low-and high-resolution images can be obtained. Finally, the reconstructed image can be obtained by combining the original low-resolution and residual images. In the training process, we select the rectified linear unit as the activation function to accelerate the training process and avoid gradient vanishing. For a weight layer with a filter size of 3×3, we pad one pixel to ensure that all feature-maps have the same size, which can improve the edge information of the reconstructed image. Furthermore, the initial learning rate is set to 0.1 and then decreased to half every 10 epochs, which can accelerate network convergence. We set mini-batch size of SGD and momentum parameter to 0.9. We use 291 images as the training set. In addition, we used data augmentation (rotation 90°, 180°, 270°, and vertical flip) to augment the training set, which could avoid the overfitting problems and increase sample diversity. The network is trained with multiple scale factors (×2, ×3, and×4) to ensure that it could be used to solve the reconstruction problem of different scale factors.ResultAll experiments are implemented under the PyTorch framework. We use four common benchmark sets (Set5, Set14, B100, and Urban100) to evaluate our model. Moreover, we use peak signal-to-noise ratio as evaluation criteria. The images of RGB space are converted to YCbCr space. The proposed algorithm only reconstructs the luminance channel Y because human vision is highly sensitive to the luminance channel. The Cb and Cr channels are reconstructed by using the interpolation method. Experimental results on four benchmark sets for scaling factor of four are 31.69 dB, 28.24 dB, 27.39 dB, and 25.46 dB, respectively. The proposed method shows better performance and visual effects than Bicubic, A+, SRCNN, VDSR, DRCN, and DRRN. In addition, we have validated the effectiveness of the proposed components, which includes multipath mode, staged fusion unit, and pixel coding network.ConclusionThe proposed network overcomes the shortcoming of the chain structure and extracts substantial high-frequency information by fully utilizing the hierarchical features. Moreover, such network simultaneously uses the structural feature information carried by the low-resolution image to complete the reconstruction together. Furthermore, techniques that include dense connection and residual learning are adopted to accelerate convergence and mitigate gradient problems during training. Extensive experiments show that the proposed method can reconstruct an image with more high-frequency details than other methods with the same preprocessing step. We will consider using the idea of recursive learning and increasing the number of training samples to optimize the model further in the subsequent work.关键词:convolutional neural network (CNN);super-resolution reconstructions;hierarchical features;staged feature fusion;multi-path mode64|118|9更新时间:2024-05-07 -

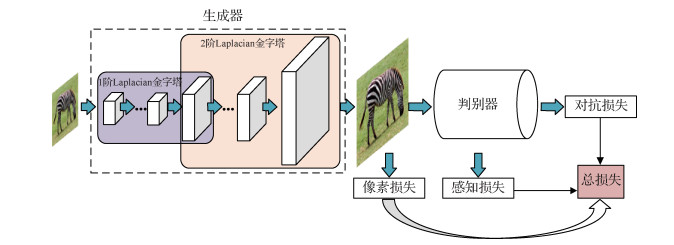

摘要:ObjectiveSingle image super-resolution (SISR) is a research hotspot in computer vision. SISR aims to reconstruct a high-resolution image from its low-resolution counterpart and is widely used in video surveillance, remote sensing image, and medical imaging. In recent years, many researchers have concentrated on convolutional SISR networking to the massive development of deep learning. They constructed shallow convolutional networks, which perform poorly in improving the quality of reconstructed images. However, these methods adopt mean square error as objective function to obtain a high evaluation index. As a result, they are unable to characterize good edge details, thereby failing to sufficiently infer plausible high frequency. To address this problem, we propose a novel generative adversarial network (GAN) for image super-resolution combining perceptual loss to further improve SR performance. This method outperforms state-of-the-art methods by a large margin in terms of peak signal-to-noise ratio and structure similarity, resulting in noticeable improvement of the reconstruction results.MethodSISR is inherently ill-posed because many solutions exist for any given low-resolution pixel. In other words, it is an underdetermined inverse problem that does not have a unique solution. Classical methods constrain the solution space by mitigating the prior information of a natural-scene image, thereby leading to unsatisfactory color analysis and context accuracy results with real high-resolution images. With its strong feature representation ability, CNN outperforms conventional methods. However, these forward CNNs for super-resolution are a single-path model that limits their reconstructive performance because they attempt to optimize the mean square error (MSE) in a pixelwise manner between the super-resolved image and the ground truth. Measuring pixel-wise difference cannot capture perceptual semantic well. Therefore, we propose a novel GAN for image super-resolution that integrates perceptual loss to boost visual performance. Our algorithm model consists of two modules:a generative subnetwork that is mainly composed of Laplacian feature pyramids and a pyramid that contains many dense residual blocks, which serve as the fundamental component. We introduce global residual learning in the identity branch of each residual unit to construct the dense residual block. Therefore, the full usage of all layers not only stabilizes the training process but also effectively preserves information flow through the network. As a result, the generative subnetwork can progressively extract different high-frequency scales of the reconstructed image. The other discriminative subnetwork is a type of forward CNN that introduces stride convolution and global average pooling to enlarge the receptive field and reduce spatial dimensions over a large image region to ensure efficient memory usage and fast inference. The discriminator estimates the probability that a generated high-resolution image came from the ground truth rather than the generative subnetwork by inspecting their feature maps and then feeds back the result to help the generator synthesize more perceptual high-frequency details. Finally, the algorithm model optimizes the objective function to complete the parameter updating.ResultAll experiments are implemented on the PyTorch framework. We train PSGAN (perceptual super-resolution using generative adversarial network) for 100 epochs by using 291 datasets. Following previous experiments, we transform all RGB images into YCbCr format and resolve the Y channel only because the human eye is most sensitive to this channel. We choose two standard datasets (Set5 and Set14) to verify the effectiveness of our proposed network compared with that of other state-of-the-art methods. For subjective visual evaluation, experiment results that the accuracy of all test samples is reasonable given that the perceptual quality difference between the original ground truth and our generated high-resolution image is not significant. Overall, PSGAN achieves superior clarity and barely shows a ripple effect. For objective evaluation, the average peak signal-to-noise ratio achieved by this method is 37.44 dB and 33.14 dB with scale factor 2 and 31.72 dB and 28.34 dB with scale factor 4 on Set5 and Set14, respectively. In the case of structure similarity measurement, the proposed approach obtains 0.961 4/0.892 4 on Set5 and 0.919 3/0.785 6 on Set14, respectively, which indicates PSGAN produces the best index results. In terms of perceptual measures, we calculate the FSIM of each method, and our PSGAN obtains 0.92/0.91 on Set5 and 0.92/0.88 on Set14, respectively. Experiment results demonstrate that our method improves the unsampled image quality by a large margin.ConclusionWe employ a compact and recurrent CNN that mainly consists of dense residual blocks to super-resolve high-resolution image progressively. Comprehensive experiments show that PSGAN achieves considerable improvement in quantitation and visual perception against other state-of-the-art methods. This algorithm provides stronger supervision for brightness consistency and texture recovery and can be applied for photorealistic super-resolution of natural-scene images.关键词:super-resolution reconstruction;deep learning;convolutional neural network(CNN);residual learning;generative adversarial network(GAN);perceptual loss17|4|4更新时间:2024-05-07

摘要:ObjectiveSingle image super-resolution (SISR) is a research hotspot in computer vision. SISR aims to reconstruct a high-resolution image from its low-resolution counterpart and is widely used in video surveillance, remote sensing image, and medical imaging. In recent years, many researchers have concentrated on convolutional SISR networking to the massive development of deep learning. They constructed shallow convolutional networks, which perform poorly in improving the quality of reconstructed images. However, these methods adopt mean square error as objective function to obtain a high evaluation index. As a result, they are unable to characterize good edge details, thereby failing to sufficiently infer plausible high frequency. To address this problem, we propose a novel generative adversarial network (GAN) for image super-resolution combining perceptual loss to further improve SR performance. This method outperforms state-of-the-art methods by a large margin in terms of peak signal-to-noise ratio and structure similarity, resulting in noticeable improvement of the reconstruction results.MethodSISR is inherently ill-posed because many solutions exist for any given low-resolution pixel. In other words, it is an underdetermined inverse problem that does not have a unique solution. Classical methods constrain the solution space by mitigating the prior information of a natural-scene image, thereby leading to unsatisfactory color analysis and context accuracy results with real high-resolution images. With its strong feature representation ability, CNN outperforms conventional methods. However, these forward CNNs for super-resolution are a single-path model that limits their reconstructive performance because they attempt to optimize the mean square error (MSE) in a pixelwise manner between the super-resolved image and the ground truth. Measuring pixel-wise difference cannot capture perceptual semantic well. Therefore, we propose a novel GAN for image super-resolution that integrates perceptual loss to boost visual performance. Our algorithm model consists of two modules:a generative subnetwork that is mainly composed of Laplacian feature pyramids and a pyramid that contains many dense residual blocks, which serve as the fundamental component. We introduce global residual learning in the identity branch of each residual unit to construct the dense residual block. Therefore, the full usage of all layers not only stabilizes the training process but also effectively preserves information flow through the network. As a result, the generative subnetwork can progressively extract different high-frequency scales of the reconstructed image. The other discriminative subnetwork is a type of forward CNN that introduces stride convolution and global average pooling to enlarge the receptive field and reduce spatial dimensions over a large image region to ensure efficient memory usage and fast inference. The discriminator estimates the probability that a generated high-resolution image came from the ground truth rather than the generative subnetwork by inspecting their feature maps and then feeds back the result to help the generator synthesize more perceptual high-frequency details. Finally, the algorithm model optimizes the objective function to complete the parameter updating.ResultAll experiments are implemented on the PyTorch framework. We train PSGAN (perceptual super-resolution using generative adversarial network) for 100 epochs by using 291 datasets. Following previous experiments, we transform all RGB images into YCbCr format and resolve the Y channel only because the human eye is most sensitive to this channel. We choose two standard datasets (Set5 and Set14) to verify the effectiveness of our proposed network compared with that of other state-of-the-art methods. For subjective visual evaluation, experiment results that the accuracy of all test samples is reasonable given that the perceptual quality difference between the original ground truth and our generated high-resolution image is not significant. Overall, PSGAN achieves superior clarity and barely shows a ripple effect. For objective evaluation, the average peak signal-to-noise ratio achieved by this method is 37.44 dB and 33.14 dB with scale factor 2 and 31.72 dB and 28.34 dB with scale factor 4 on Set5 and Set14, respectively. In the case of structure similarity measurement, the proposed approach obtains 0.961 4/0.892 4 on Set5 and 0.919 3/0.785 6 on Set14, respectively, which indicates PSGAN produces the best index results. In terms of perceptual measures, we calculate the FSIM of each method, and our PSGAN obtains 0.92/0.91 on Set5 and 0.92/0.88 on Set14, respectively. Experiment results demonstrate that our method improves the unsampled image quality by a large margin.ConclusionWe employ a compact and recurrent CNN that mainly consists of dense residual blocks to super-resolve high-resolution image progressively. Comprehensive experiments show that PSGAN achieves considerable improvement in quantitation and visual perception against other state-of-the-art methods. This algorithm provides stronger supervision for brightness consistency and texture recovery and can be applied for photorealistic super-resolution of natural-scene images.关键词:super-resolution reconstruction;deep learning;convolutional neural network(CNN);residual learning;generative adversarial network(GAN);perceptual loss17|4|4更新时间:2024-05-07 -

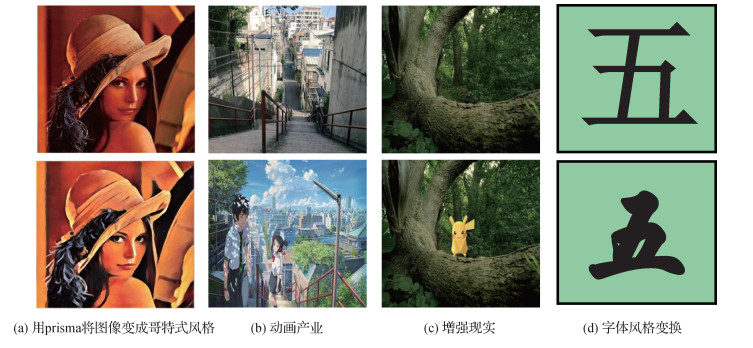

摘要:ObjectiveGatys et al. successfully used convolutional neural networks (CNNs) to render a content image in different styles in a process referred to as neural style transfer (NST). Their work was the first time deep learning demonstrated its ability in the field of style transfer. In the past, most related problems in style transfer were manually modeled, which was a time consuming and laborious process. The goal of traditional NST is to learn the mapping between two different styles of paired images. Cycle-consistent adversarial networks (CycleGAN) is the first method to apply the generative adversarial network (GAN) to image style transfer. This method has a good performance on unpaired training data but does not work well when the test image is different from the training images. Thus, instance style transfer was developed to address this problem. Instance style transfer is built on image segmentation and should be applied only on the object of interest. The main challenge is the transition between the object and a non-stylized background. Most studies on instance style transfer have focused on the CNN. In this paper, some of these methods are extended to CycleGAN, and some steps are improved based on actual conditions. We propose a method to achieve instance style transfer by combining fully convolutional network (FCN) with CycleGAN. A dataset is used to verify that training data are not the reason CycleGAN cannot work well on instance style transfer.MethodThis study is divided into two parts:The first part is to improve the performance of CycleGAN to make it work efficiently in instance style transfer. The second part is to verify the conjecture in the reference. In the first part, the FCN is utilized to obtain the semantic segmentation of input image

摘要:ObjectiveGatys et al. successfully used convolutional neural networks (CNNs) to render a content image in different styles in a process referred to as neural style transfer (NST). Their work was the first time deep learning demonstrated its ability in the field of style transfer. In the past, most related problems in style transfer were manually modeled, which was a time consuming and laborious process. The goal of traditional NST is to learn the mapping between two different styles of paired images. Cycle-consistent adversarial networks (CycleGAN) is the first method to apply the generative adversarial network (GAN) to image style transfer. This method has a good performance on unpaired training data but does not work well when the test image is different from the training images. Thus, instance style transfer was developed to address this problem. Instance style transfer is built on image segmentation and should be applied only on the object of interest. The main challenge is the transition between the object and a non-stylized background. Most studies on instance style transfer have focused on the CNN. In this paper, some of these methods are extended to CycleGAN, and some steps are improved based on actual conditions. We propose a method to achieve instance style transfer by combining fully convolutional network (FCN) with CycleGAN. A dataset is used to verify that training data are not the reason CycleGAN cannot work well on instance style transfer.MethodThis study is divided into two parts:The first part is to improve the performance of CycleGAN to make it work efficiently in instance style transfer. The second part is to verify the conjecture in the reference. In the first part, the FCN is utilized to obtain the semantic segmentation of input image$\mathit{\boldsymbol{X}}$ $\mathit{\boldsymbol{Y}}$ $\mathit{\boldsymbol{Z}}$ $\mathit{\boldsymbol{Z}}$ $\mathit{\boldsymbol{Y}}$ $\mathit{\boldsymbol{Y}}$ $\mathit{\boldsymbol{R}}$ $\mathit{\boldsymbol{Y}}$ $ \circ $ $\mathit{\boldsymbol{Z}}$ $\mathit{\boldsymbol{Z}}$ $\mathit{\boldsymbol{R}}$ $\mathit{\boldsymbol{X}}$ 关键词:deep learning;style transfer;cycle-consistent generative adversarial network (CycleGAN);semantic segmentation;fully convolutional network(FCN)21|4|9更新时间:2024-05-07 -

摘要:ObjectiveWith the rapid development of 3D scanner and growing requirements for 3D models in various applications, interest in developing high-quality 3D models is increasing. The unavoidable noise not only damages the quality of 3D models but also affects their appearance. The earliest mesh denoising algorithm is implemented by adjusting the positions of vertices; this process is called the one-step denoising method. Then, the two-step denoising framework that first filters the normal vector of the patch and then updates the vertex position according to the patch normal vector is proposed to improve the denoising effects. Both methods have their own advantages. More algorithms are being proposed as the denoising process matures. However, removing noise while preserving the structural features of the model remains a challenging problem that needs to be solved. Feature preserving methods for mesh denoising have recently become a hot topic in this research field. This paper proposes a three-step denoising framework to retain the feature information of 3D models in the process of denoising, which adds a preprocessing operation to better preserve the features of the mesh and maintain the mesh topology.MethodThe proposed method adds a preprocessing stage before the traditional denoising method and introduces the variational shape approximation (VSA) segmentation algorithm to extract the feature information of the model. On the basis of the combined filters, different features can be processed separately for mesh denoising. The VSA method is a mesh segmentation solution for 3D models, which can extract sharp features from the given meshes. This method performs better in extracting the structural features from the meshes with different noise. The VSA segmentation result also enables the mesh to reduce noise, and it can perform a noise-reducing operation on the entire model without losing the feature information. Specifically, each of the divided regions is locally reduced in noise and finally combined into a grid to obtain an initial low-level noise mesh input. The proposed method uses three major steps to denoise a given mesh. First, the VSA method is used to divide the mesh into several segments. For each segment, local Laplacian smoothing is introduced for the preprocessing of the mesh. Second, on the basis of the difference between the normal of two adjacent surfaces, a predefined feature pattern is used to match the boundaries of the partitions, and the feature boundaries are expanded to divide the model into feature and non-feature regions. In the non-feature region, the center plane normal is filtered by the weighted average neighborhood inner normal vector. For the feature region, the weighted one neighborhood uniform surface normal vector is used to filter the central plane normal. Third, on the basis of the filtered surface normal, the position of the vertex is updated in a non-iterative manner.ResultThe proposed method uses extracted information from noisy meshes to classify different feature segments. The feature information is extracted from the segmentation results based on the VSA method, which gives better results than other existing methods. In the case of moderate Gaussian noise, the results of our method are superior to the results of other methods in the models with sharp features. This method can maintain the characteristics of the model effectively, and the introduced noise reduction preprocessing has a good effect on the preservation of the topology of the nonuniform mesh. This method also has a good effect on other kinds of models, generating good denoising results in the experiment. Consistent with experimental observations obtained by calculating the average angle error of the original and the denoising models, the proposed method has a better denoising effect in visual and numerical aspects than the other method. In the experimental test, the denoising effect is improved by more than 15% compared with the other method.ConclusionThe proposed method can better maintain the characteristics of the model with sharp features than the other methods and has advantages in producing an overall denoising effect. For non-uniformly sampled meshes, this method has a better denoising effect while maintaining the original topology of the mesh. The proposed method obtains robust results for meshes with middle-level noise and can preserve the original feature information of the given meshes.关键词:geometric modeling;three-dimensional mesh denoising;variational shape approximation(VSA);geometric feature extraction;feature-preserving13|4|2更新时间:2024-05-07

摘要:ObjectiveWith the rapid development of 3D scanner and growing requirements for 3D models in various applications, interest in developing high-quality 3D models is increasing. The unavoidable noise not only damages the quality of 3D models but also affects their appearance. The earliest mesh denoising algorithm is implemented by adjusting the positions of vertices; this process is called the one-step denoising method. Then, the two-step denoising framework that first filters the normal vector of the patch and then updates the vertex position according to the patch normal vector is proposed to improve the denoising effects. Both methods have their own advantages. More algorithms are being proposed as the denoising process matures. However, removing noise while preserving the structural features of the model remains a challenging problem that needs to be solved. Feature preserving methods for mesh denoising have recently become a hot topic in this research field. This paper proposes a three-step denoising framework to retain the feature information of 3D models in the process of denoising, which adds a preprocessing operation to better preserve the features of the mesh and maintain the mesh topology.MethodThe proposed method adds a preprocessing stage before the traditional denoising method and introduces the variational shape approximation (VSA) segmentation algorithm to extract the feature information of the model. On the basis of the combined filters, different features can be processed separately for mesh denoising. The VSA method is a mesh segmentation solution for 3D models, which can extract sharp features from the given meshes. This method performs better in extracting the structural features from the meshes with different noise. The VSA segmentation result also enables the mesh to reduce noise, and it can perform a noise-reducing operation on the entire model without losing the feature information. Specifically, each of the divided regions is locally reduced in noise and finally combined into a grid to obtain an initial low-level noise mesh input. The proposed method uses three major steps to denoise a given mesh. First, the VSA method is used to divide the mesh into several segments. For each segment, local Laplacian smoothing is introduced for the preprocessing of the mesh. Second, on the basis of the difference between the normal of two adjacent surfaces, a predefined feature pattern is used to match the boundaries of the partitions, and the feature boundaries are expanded to divide the model into feature and non-feature regions. In the non-feature region, the center plane normal is filtered by the weighted average neighborhood inner normal vector. For the feature region, the weighted one neighborhood uniform surface normal vector is used to filter the central plane normal. Third, on the basis of the filtered surface normal, the position of the vertex is updated in a non-iterative manner.ResultThe proposed method uses extracted information from noisy meshes to classify different feature segments. The feature information is extracted from the segmentation results based on the VSA method, which gives better results than other existing methods. In the case of moderate Gaussian noise, the results of our method are superior to the results of other methods in the models with sharp features. This method can maintain the characteristics of the model effectively, and the introduced noise reduction preprocessing has a good effect on the preservation of the topology of the nonuniform mesh. This method also has a good effect on other kinds of models, generating good denoising results in the experiment. Consistent with experimental observations obtained by calculating the average angle error of the original and the denoising models, the proposed method has a better denoising effect in visual and numerical aspects than the other method. In the experimental test, the denoising effect is improved by more than 15% compared with the other method.ConclusionThe proposed method can better maintain the characteristics of the model with sharp features than the other methods and has advantages in producing an overall denoising effect. For non-uniformly sampled meshes, this method has a better denoising effect while maintaining the original topology of the mesh. The proposed method obtains robust results for meshes with middle-level noise and can preserve the original feature information of the given meshes.关键词:geometric modeling;three-dimensional mesh denoising;variational shape approximation(VSA);geometric feature extraction;feature-preserving13|4|2更新时间:2024-05-07

Image Processing and Coding

-

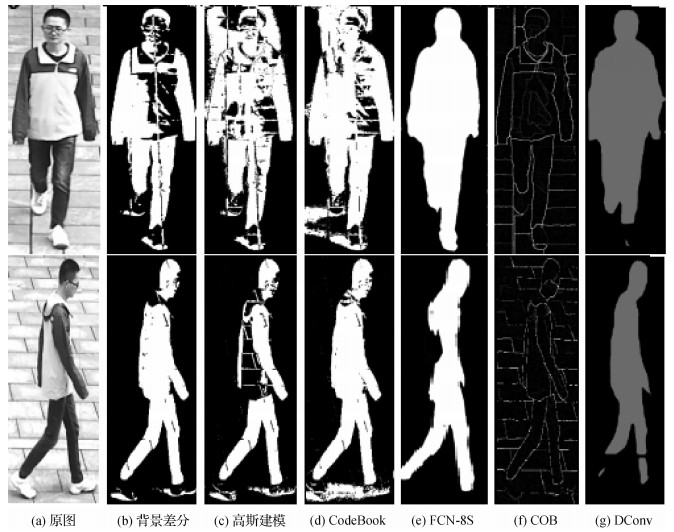

摘要:ObjectiveGait recognition has many advantages over DNA, fingerprint, iris, and 2D and 3D face recognition methods. For example, the observer does not need to cooperate in this method. In addition, the method can be performed at a relatively long distance and at a relatively lower image quality. Moreover, a person's gait is difficult to camouflage and hide. Therefore, gait recognition has become a research hotspot in recent years, and it is widely used in security, anti-terrorism, and medical applications, such as personal identification, treatment, and rehabilitation of abnormal leg and foot diseases. This paper proposes a novel gait human semantic segmentation method based on RPGNet (Region of Interest + Parts of Body Semantics + GaitNet) network to solve the problems of contour loss, human shadow, and long computing time caused by lighting, camera angles, and obstructions when gait recognition is performed by using a surveillance video in the field of anti-terrorism and security.MethodThis method is divided into the R (region of interest), P (parts of body semantics), and GNet (GaitNet) modules according to function. The R module obtains the area of interest of the gait body, which could improve computing efficiency and reduce image noise. First, the original image is processed by using the background subtraction method and translated into a binary image. Then, the image is operated by morphological processing methods, such as expansion, corrosion, and filtering. Second, we search the connected region of the human body in the graph and frame that area with a rectangular frame. Finally, we enlarge the length and width of the rectangular frame by a quarter and clip the image. Therefore, we obtain the connected regions of interest. The main function of the P module is to annotate gait body parts semantically by using LabelMe, an open-source image annotation tool. We train the human body according to its position. The semantics of the human body is defined as six parts:head, trunk, upper arm, lower arm, thigh, and lower leg. We map the semantics of the human body parts to six RGB information one by one. Then, we use LabelMe to annotate the image semantics captured by the camera, which generates the structure file of the image semantics annotation in XML format. Finally, the XML file and the original RGB image are imported into MATLAB to generate a human body part semantic annotation map. The GNet module designs a detailed semantic segmentation network model of the gait body. In the light of existing ResNet and RefineNet network models, we use ResNet model for reference to extract the high-level and the low-level semantics of the gait human body. The RefineNet network model is used to integrate low-level semantics with high-level semantics. Multi-resolution images generate fine low-level semantic feature maps and rough high-level semantic feature maps through residual network convolution units. Then, the feature maps are input into the fusion unit of multi-resolution feature map to generate the fused feature maps. Afterwards, the chained residual pools the fused feature maps to generate the fused pooled feature maps. Furthermore, the pooled feature maps of multi-resolution fusion are processed by output convolution. Thus, we obtain the semantically segmented feature maps. Finally, we use the softmax classifier to output the final gait semantics segmentation image by using bilinear interpolation. Through many experiments, we find that when the resolution is 1/8, 1/16, 1/32, and 1/64 of the original image, the semantics segmentation effect of the gait human body is better than that in other situations.ResultA test conducted on 1 380 images from the gait database shows that the proposed RPGNet method has a higher segmentation accuracy in local and global information processing compared with six human contour segmentation methods, especially at viewing angles of 0°, 45°, and 90°. In this study, we define the formula of segmentation accuracy

摘要:ObjectiveGait recognition has many advantages over DNA, fingerprint, iris, and 2D and 3D face recognition methods. For example, the observer does not need to cooperate in this method. In addition, the method can be performed at a relatively long distance and at a relatively lower image quality. Moreover, a person's gait is difficult to camouflage and hide. Therefore, gait recognition has become a research hotspot in recent years, and it is widely used in security, anti-terrorism, and medical applications, such as personal identification, treatment, and rehabilitation of abnormal leg and foot diseases. This paper proposes a novel gait human semantic segmentation method based on RPGNet (Region of Interest + Parts of Body Semantics + GaitNet) network to solve the problems of contour loss, human shadow, and long computing time caused by lighting, camera angles, and obstructions when gait recognition is performed by using a surveillance video in the field of anti-terrorism and security.MethodThis method is divided into the R (region of interest), P (parts of body semantics), and GNet (GaitNet) modules according to function. The R module obtains the area of interest of the gait body, which could improve computing efficiency and reduce image noise. First, the original image is processed by using the background subtraction method and translated into a binary image. Then, the image is operated by morphological processing methods, such as expansion, corrosion, and filtering. Second, we search the connected region of the human body in the graph and frame that area with a rectangular frame. Finally, we enlarge the length and width of the rectangular frame by a quarter and clip the image. Therefore, we obtain the connected regions of interest. The main function of the P module is to annotate gait body parts semantically by using LabelMe, an open-source image annotation tool. We train the human body according to its position. The semantics of the human body is defined as six parts:head, trunk, upper arm, lower arm, thigh, and lower leg. We map the semantics of the human body parts to six RGB information one by one. Then, we use LabelMe to annotate the image semantics captured by the camera, which generates the structure file of the image semantics annotation in XML format. Finally, the XML file and the original RGB image are imported into MATLAB to generate a human body part semantic annotation map. The GNet module designs a detailed semantic segmentation network model of the gait body. In the light of existing ResNet and RefineNet network models, we use ResNet model for reference to extract the high-level and the low-level semantics of the gait human body. The RefineNet network model is used to integrate low-level semantics with high-level semantics. Multi-resolution images generate fine low-level semantic feature maps and rough high-level semantic feature maps through residual network convolution units. Then, the feature maps are input into the fusion unit of multi-resolution feature map to generate the fused feature maps. Afterwards, the chained residual pools the fused feature maps to generate the fused pooled feature maps. Furthermore, the pooled feature maps of multi-resolution fusion are processed by output convolution. Thus, we obtain the semantically segmented feature maps. Finally, we use the softmax classifier to output the final gait semantics segmentation image by using bilinear interpolation. Through many experiments, we find that when the resolution is 1/8, 1/16, 1/32, and 1/64 of the original image, the semantics segmentation effect of the gait human body is better than that in other situations.ResultA test conducted on 1 380 images from the gait database shows that the proposed RPGNet method has a higher segmentation accuracy in local and global information processing compared with six human contour segmentation methods, especially at viewing angles of 0°, 45°, and 90°. In this study, we define the formula of segmentation accuracy$ρ$ $ρ$ 关键词:gait recognition;semantic segmentation;convolutional neural network(CNN);multi-covariate;human contour segmentation24|110|3更新时间:2024-05-07 -