最新刊期

卷 24 , 期 5 , 2019

-

摘要:ObjectiveThis work is the 24rd annual survey series of bibliographies on image engineering in China. This statistic and analysis study aims to capture the up-to-date development of image engineering in China, provide a targeted means of literature searching facility for readers working in related areas, and supply a useful recommendation for editors of journals and potential authors of papers.MethodConsidering the wide distribution of related publications in China, 747 references on image engineering research and technique are selected carefully from 2 863 research papers published in 148 issues of 15 Chinese journals. These 15 journals are considered important, in which papers concerning image engineering have high quality and are relatively concentrated. The selected references are initially classified into five categories (image processing, image analysis, image understanding, technique application, and survey) and then into 23 specialized classes in accordance with their main contents (similar to the last 13 years). Analysis and discussions about the statistics of the results of classifications by journal and category are also presented.ResultAnalysis on the statistics in 2018 shows that image analysis is receiving the most attention, in which the focuses are on object detection and recognition, image segmentation and edge detection, and object feature extraction and analysis. The studies and applications of image technology in various areas, such as remote sensing, radar, and mapping, are continuously active.ConclusionThis work shows a general and up-to-date picture of the various progresses, either for depth or for width, of image engineering in China in 2018.关键词:image engineering;image processing;image analysis;image understanding;technique application;literature survey;literature statistics;literature classification;bibliometrics160|183|0更新时间:2024-05-08

摘要:ObjectiveThis work is the 24rd annual survey series of bibliographies on image engineering in China. This statistic and analysis study aims to capture the up-to-date development of image engineering in China, provide a targeted means of literature searching facility for readers working in related areas, and supply a useful recommendation for editors of journals and potential authors of papers.MethodConsidering the wide distribution of related publications in China, 747 references on image engineering research and technique are selected carefully from 2 863 research papers published in 148 issues of 15 Chinese journals. These 15 journals are considered important, in which papers concerning image engineering have high quality and are relatively concentrated. The selected references are initially classified into five categories (image processing, image analysis, image understanding, technique application, and survey) and then into 23 specialized classes in accordance with their main contents (similar to the last 13 years). Analysis and discussions about the statistics of the results of classifications by journal and category are also presented.ResultAnalysis on the statistics in 2018 shows that image analysis is receiving the most attention, in which the focuses are on object detection and recognition, image segmentation and edge detection, and object feature extraction and analysis. The studies and applications of image technology in various areas, such as remote sensing, radar, and mapping, are continuously active.ConclusionThis work shows a general and up-to-date picture of the various progresses, either for depth or for width, of image engineering in China in 2018.关键词:image engineering;image processing;image analysis;image understanding;technique application;literature survey;literature statistics;literature classification;bibliometrics160|183|0更新时间:2024-05-08 -

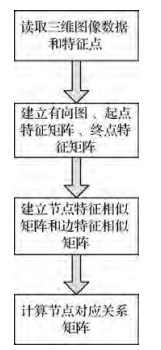

摘要:ObjectiveImage matching, the core task of computer vision, is the key of subsequent advanced image processing, such as object recognition, image mosaic, 3D reconstruction, visual location, and scene depth calculation. Although many excellent methods have been proposed by domestic and foreign scholars in this field in recent years, no comprehensive summary of image matching methods has been reported. On this basis, this study reviews these methods from three aspects, namely, locally invariant feature points, straight lines, and regions.MethodLocally invariant feature point matching first appeared in image matching development, such as Harris corner detector, features from accelerated segment test, and scale-invariant feature transform. The classical algorithms in this type of method are only briefly described in this paper. New methods, especially deep learning-based matching methods, including temporally invariant learned detector, Quad-networks, discriminative learning of deep convolutional feature point descriptors, and learned invariant feature transform (LIFT), are mainly introduced in recent years. Other methods, including bilateral functions for global motion modeling, grid-based motion statistics, and vector field consensus, are also introduced because the outer point culling method is often used to improve the accuracy of local invariant feature matching. Lines contain more scene and object structure information and are more suitable for matching image pairs with repeated texture information than local invariant feature points. Research on line matching should overcome various problems, such as inaccurate endpoint position, inconspicuous line segment, and segment fragmentation. The methods for solving such problems are line band descriptor, two-view line matching algorithm based on context and appearance, line matching leveraged by point correspondences, and new coplanar line point projection invariant. This paper introduces such methods from the perspective of problem solving process. Region matching is introduced from two aspects of region feature extraction and matching and template matching. Typical regional feature extraction and matching methods include maximally stable extremal regions, tree-based morse regions, template matching (including fast affine template matching), fast affine template matching for color images, deformable diversity similarity, occlusion aware template matching, and deep learning methods, such as MatchNet, L2-Net, PN-Net, and DeepCD. Medical image matching is an important application in the image matching field, which is significant for clinically precise diagnosis and treatment. This work introduces this type of method from the point of view of practical applications, such as fractional total variation-L1 and feature matching with learned nonlinear descriptors.ResultIn the analysis and comparison of multiple image matching algorithms, the CPU with two cores at 3.4 GHz and with graphics card NVIDIA GTX TITAN X GPU are selected as the experimental environment of the computer. The test datasets are the Technical University of Denmark dataset and Oxford University dataset Graf. This paper summarizes and compares these methods from three aspects, namely, local invariant feature points, straight lines, and regions. The comparison results of influential factors in feature matching methods, mismatched point removal methods, between hand-crafted and learn-based descriptors, and matching objects and the implementation forms of semantic matching methods are also presented. The corresponding papers and downloaded code addresses of such methods are provided, and the future research directions of image matching algorithms are prospected.ConclusionImage matching is the basis for subsequent advanced processing in the computer vision field. This method is widely used in medical image analysis, satellite image processing, remote sensing image processing, and computer vision. At present, further research is required on wide baseline and real-time matching.关键词:image matching;local invariant feature matching;line matching;region matching;semantic matching;deep learning277|178|0更新时间:2024-05-08

摘要:ObjectiveImage matching, the core task of computer vision, is the key of subsequent advanced image processing, such as object recognition, image mosaic, 3D reconstruction, visual location, and scene depth calculation. Although many excellent methods have been proposed by domestic and foreign scholars in this field in recent years, no comprehensive summary of image matching methods has been reported. On this basis, this study reviews these methods from three aspects, namely, locally invariant feature points, straight lines, and regions.MethodLocally invariant feature point matching first appeared in image matching development, such as Harris corner detector, features from accelerated segment test, and scale-invariant feature transform. The classical algorithms in this type of method are only briefly described in this paper. New methods, especially deep learning-based matching methods, including temporally invariant learned detector, Quad-networks, discriminative learning of deep convolutional feature point descriptors, and learned invariant feature transform (LIFT), are mainly introduced in recent years. Other methods, including bilateral functions for global motion modeling, grid-based motion statistics, and vector field consensus, are also introduced because the outer point culling method is often used to improve the accuracy of local invariant feature matching. Lines contain more scene and object structure information and are more suitable for matching image pairs with repeated texture information than local invariant feature points. Research on line matching should overcome various problems, such as inaccurate endpoint position, inconspicuous line segment, and segment fragmentation. The methods for solving such problems are line band descriptor, two-view line matching algorithm based on context and appearance, line matching leveraged by point correspondences, and new coplanar line point projection invariant. This paper introduces such methods from the perspective of problem solving process. Region matching is introduced from two aspects of region feature extraction and matching and template matching. Typical regional feature extraction and matching methods include maximally stable extremal regions, tree-based morse regions, template matching (including fast affine template matching), fast affine template matching for color images, deformable diversity similarity, occlusion aware template matching, and deep learning methods, such as MatchNet, L2-Net, PN-Net, and DeepCD. Medical image matching is an important application in the image matching field, which is significant for clinically precise diagnosis and treatment. This work introduces this type of method from the point of view of practical applications, such as fractional total variation-L1 and feature matching with learned nonlinear descriptors.ResultIn the analysis and comparison of multiple image matching algorithms, the CPU with two cores at 3.4 GHz and with graphics card NVIDIA GTX TITAN X GPU are selected as the experimental environment of the computer. The test datasets are the Technical University of Denmark dataset and Oxford University dataset Graf. This paper summarizes and compares these methods from three aspects, namely, local invariant feature points, straight lines, and regions. The comparison results of influential factors in feature matching methods, mismatched point removal methods, between hand-crafted and learn-based descriptors, and matching objects and the implementation forms of semantic matching methods are also presented. The corresponding papers and downloaded code addresses of such methods are provided, and the future research directions of image matching algorithms are prospected.ConclusionImage matching is the basis for subsequent advanced processing in the computer vision field. This method is widely used in medical image analysis, satellite image processing, remote sensing image processing, and computer vision. At present, further research is required on wide baseline and real-time matching.关键词:image matching;local invariant feature matching;line matching;region matching;semantic matching;deep learning277|178|0更新时间:2024-05-08

Review

-

摘要:ObjectiveAs a fundamental issue in the field of image processing, image inpainting has been widely studied in the past two decades. The goal of image inpainting is to reconstruct the original high-quality image from its corrupted observation. Notably, prior knowledge of images is important in image inpainting. Thus, designing an effective regularization to represent image priors is a critical task for image inpainting. The TV(total variation) model usually exploits local structures and high effectiveness to preserve image edges; thus, it has been found to be widely used in image inpainting. However, the regular term of the TV model is a first-order differential, which usually loses image details and tends to suffer from over-smooth effects owing to the piecewise constant assumption. Fortunately, fractional differential is capable of enhancing low-and intermediate-frequency signals and amplifying high-frequency signals moderately; thus, it is introduced into the TV model. However, the existing fractional TV model is limited with regard to its preservation of the information of images with texture and edge details. Furthermore, it does not fully use prior information such as known edges and textures.MethodTo address these problems, we propose a new fractional-order TV model that introduces texture structure information into a fractional TV model for image inpainting. A minimum value is used in the TV model to calculate the image gradient when solving the fractional model. Thus, the improved model is robust because it overcomes the problem of the model being non-differentiable at zero point. In this way, the weak texture information is effectively preserved. The improved model determines the texture direction of the region to be restored on the basis of the priors of the known region of the image and fully uses the texture information of the image to improve the accuracy of image inpainting.ResultThe Barbara and Lena images are selected as test images. The Barbara image presents a large weak texture area. By contrast, the Lena image includes few texture regions and a highly smooth area. Therefore, these two images are used for the experiment. To improve efficiency, we intercept the texture part of the original image and conduct many experiments by using differently sized templates and different orders of fractional differential. Then, the optimal parameters for different images, such as template size and order, can be obtained. The optimal parameters for the Barbara and Lena images are as follows. For the Barbara image, the optimal order is 0.1, and the optimal template size is 3×3 pixels; for the Lena image, the optimal order is 0.9, and the optimal template size is 5×5 pixels. The algorithm is compared with three algorithms with better restoration effects. Mean square error (MSE) and peak signal-to-noise ratio (PSNR) are introduced to evaluate the performance of the different methods. Experimental results indicate that the proposed algorithm achieves improved inpainting result. Unlike that in the TV model, the PSNR values after the restoration of the Barbara, Lena, and Rock images in the proposed method increase by 5.94%, 8.07%, and 3.85%, respectively; and the MSE values decrease by 48.66%, 65.89%, and 35%, respectively. Relative to the fractional TV model, the proposed method achieves PSNR values for the Barbara image, Lena image, and Rock image that increase by 4.17%, 8.59%, and 1.81%, respectively; its MSE values decrease by 37.90%, 68.00%, and 18.68%, respectively.ConclusionThe relationship between inpainting effect, template order, and template size is are demonstrated in experiments, thereby providing the basis for selecting optimal parameters. Although the optimal parameters of different types of images are different, the optimal inpainting order is generally between 0 and 1 because the smooth part of the image corresponds to the low-frequency part of the signal. The texture details of the image correspond to the intermediate-frequency part of the signal. Meanwhile, the TV algorithm is not ideal for the weak texture region. To enhance the gradient information of the region, we must improve the low-and intermediate-frequency parts. Therefore, choosing the order between 0 and 1 is recommended. Furthermore, although the optimal order varies with the type of the image, a weak texture region usually results in a small order. Theoretical analysis and experimental results show that the proposed model can effectively improve the accuracy of image restoration relative to the original TV model and fractional order TV model. The proposed model is suitable for inpainting images with weak texture and edge information. This model is an important extension of the TV model.关键词:image inpainting;fractional differential;weak texture;TV model;edge detail28|30|0更新时间:2024-05-08

摘要:ObjectiveAs a fundamental issue in the field of image processing, image inpainting has been widely studied in the past two decades. The goal of image inpainting is to reconstruct the original high-quality image from its corrupted observation. Notably, prior knowledge of images is important in image inpainting. Thus, designing an effective regularization to represent image priors is a critical task for image inpainting. The TV(total variation) model usually exploits local structures and high effectiveness to preserve image edges; thus, it has been found to be widely used in image inpainting. However, the regular term of the TV model is a first-order differential, which usually loses image details and tends to suffer from over-smooth effects owing to the piecewise constant assumption. Fortunately, fractional differential is capable of enhancing low-and intermediate-frequency signals and amplifying high-frequency signals moderately; thus, it is introduced into the TV model. However, the existing fractional TV model is limited with regard to its preservation of the information of images with texture and edge details. Furthermore, it does not fully use prior information such as known edges and textures.MethodTo address these problems, we propose a new fractional-order TV model that introduces texture structure information into a fractional TV model for image inpainting. A minimum value is used in the TV model to calculate the image gradient when solving the fractional model. Thus, the improved model is robust because it overcomes the problem of the model being non-differentiable at zero point. In this way, the weak texture information is effectively preserved. The improved model determines the texture direction of the region to be restored on the basis of the priors of the known region of the image and fully uses the texture information of the image to improve the accuracy of image inpainting.ResultThe Barbara and Lena images are selected as test images. The Barbara image presents a large weak texture area. By contrast, the Lena image includes few texture regions and a highly smooth area. Therefore, these two images are used for the experiment. To improve efficiency, we intercept the texture part of the original image and conduct many experiments by using differently sized templates and different orders of fractional differential. Then, the optimal parameters for different images, such as template size and order, can be obtained. The optimal parameters for the Barbara and Lena images are as follows. For the Barbara image, the optimal order is 0.1, and the optimal template size is 3×3 pixels; for the Lena image, the optimal order is 0.9, and the optimal template size is 5×5 pixels. The algorithm is compared with three algorithms with better restoration effects. Mean square error (MSE) and peak signal-to-noise ratio (PSNR) are introduced to evaluate the performance of the different methods. Experimental results indicate that the proposed algorithm achieves improved inpainting result. Unlike that in the TV model, the PSNR values after the restoration of the Barbara, Lena, and Rock images in the proposed method increase by 5.94%, 8.07%, and 3.85%, respectively; and the MSE values decrease by 48.66%, 65.89%, and 35%, respectively. Relative to the fractional TV model, the proposed method achieves PSNR values for the Barbara image, Lena image, and Rock image that increase by 4.17%, 8.59%, and 1.81%, respectively; its MSE values decrease by 37.90%, 68.00%, and 18.68%, respectively.ConclusionThe relationship between inpainting effect, template order, and template size is are demonstrated in experiments, thereby providing the basis for selecting optimal parameters. Although the optimal parameters of different types of images are different, the optimal inpainting order is generally between 0 and 1 because the smooth part of the image corresponds to the low-frequency part of the signal. The texture details of the image correspond to the intermediate-frequency part of the signal. Meanwhile, the TV algorithm is not ideal for the weak texture region. To enhance the gradient information of the region, we must improve the low-and intermediate-frequency parts. Therefore, choosing the order between 0 and 1 is recommended. Furthermore, although the optimal order varies with the type of the image, a weak texture region usually results in a small order. Theoretical analysis and experimental results show that the proposed model can effectively improve the accuracy of image restoration relative to the original TV model and fractional order TV model. The proposed model is suitable for inpainting images with weak texture and edge information. This model is an important extension of the TV model.关键词:image inpainting;fractional differential;weak texture;TV model;edge detail28|30|0更新时间:2024-05-08 -

摘要:ObjectiveIn recent years, the technique of intelligent video analysis has become an important research area in computer vision. Moving object detection is aimed at catching moving foreground in all types of surveillance environment and is thus an essential foundation for following video processing, including target tracking and object segmentation. Traditional methods often model the background in a color feature space and single pixel. The traditional color feature is easily disturbed by light and shadow. A single pixel cannot reflect the region spatial relation between pixels. To detect the moving foreground precisely in complex video sequences, including the illumination and dynamic background in time, we propose a moving detection method on the basis of the background modeling technique via region spatiogram in the color name space. Color names are linguistic labels that humans attach to colors. The learning of color names is achieved by the PLSA model. In fact, it conducts mapping from the RGB space to the robust 11-dimension CN space. The modeling background in the color name space addresses the illumination variation. A histogram is a zeroth-order tool for feature description that is robust to scale variation and rotation variation, whereas a second-order spatiogram contains the spatial mean and covariance for each histogram bin. Thus, the spatiogram retains extensive information about the geometry of patches and captures the global positions of pixels rather than their pairwise relationships. Therefore, using spatiogram in the color name space for background modeling is necessary.MethodA novel method for moving detection was proposed. At first, we mapped the RGB color space to a lower-dimensional color name space that is more robust. Then, we established spatiograms in the pixel local region characterized by the color name feature and recorded the spatial information of pixels in every bin. The background models of every pixel comprised K spatiograms. The spatiograms were given different weights according to the matching rates. The color name feature by dimension reduction enhanced the robustness of the models and the detection of timeliness. The spatial information introduced by the spatiograms enhanced the accuracy of the background model. To enhance the adaptivity of the models, the approach controlled the update of the model spatiograms and their weights by learning rate αb and αω. We conducted experiments on all video sequences from the standard test data CDnet (changedetection.net), which included different challenges, such as illumination variation, moving shadow, multi-model background, and so on. The parameters such as model size K; threshold TB, Tp; and learning rates αb, αω in the algorithm were determined through the analysis of comprehensive performance F1 and averaged false negative curves.ResultThe quantitative and qualitative analyses indicates that the proposed method can achieve expected results. The method can obtain outstanding effects in certain scenes, including illumination and multi-model background. Compared with ViBe, LOBSTER(loeal binary similarity segmenter), and DECOLOR(detecting contiguous outliers in the low-rank representation), the method enhances 0.65%, 3.86%, and 3.9% of the average comprehensive performance F1 of all scenes, respectively. Modeling for every pixel in its local region is concurrent. Thus, real-time detection is achieved with GPU parallel acceleration to improve time efficiency.ConclusionRobust color name spaces effectively address illumination variation. Multiple spatiogram models effectively match multi-model background, such as waving tree, water, and fountain. Therefore, the algorithm can segment moving foreground in complex video environment more accurately than existing methods. The algorithm is a real-time and effective detection algorithm that has certain practical value in intelligent video analysis.关键词:computer vision;intelligent video analysis;moving detection;background model;color names;spatiogram21|25|0更新时间:2024-05-08

摘要:ObjectiveIn recent years, the technique of intelligent video analysis has become an important research area in computer vision. Moving object detection is aimed at catching moving foreground in all types of surveillance environment and is thus an essential foundation for following video processing, including target tracking and object segmentation. Traditional methods often model the background in a color feature space and single pixel. The traditional color feature is easily disturbed by light and shadow. A single pixel cannot reflect the region spatial relation between pixels. To detect the moving foreground precisely in complex video sequences, including the illumination and dynamic background in time, we propose a moving detection method on the basis of the background modeling technique via region spatiogram in the color name space. Color names are linguistic labels that humans attach to colors. The learning of color names is achieved by the PLSA model. In fact, it conducts mapping from the RGB space to the robust 11-dimension CN space. The modeling background in the color name space addresses the illumination variation. A histogram is a zeroth-order tool for feature description that is robust to scale variation and rotation variation, whereas a second-order spatiogram contains the spatial mean and covariance for each histogram bin. Thus, the spatiogram retains extensive information about the geometry of patches and captures the global positions of pixels rather than their pairwise relationships. Therefore, using spatiogram in the color name space for background modeling is necessary.MethodA novel method for moving detection was proposed. At first, we mapped the RGB color space to a lower-dimensional color name space that is more robust. Then, we established spatiograms in the pixel local region characterized by the color name feature and recorded the spatial information of pixels in every bin. The background models of every pixel comprised K spatiograms. The spatiograms were given different weights according to the matching rates. The color name feature by dimension reduction enhanced the robustness of the models and the detection of timeliness. The spatial information introduced by the spatiograms enhanced the accuracy of the background model. To enhance the adaptivity of the models, the approach controlled the update of the model spatiograms and their weights by learning rate αb and αω. We conducted experiments on all video sequences from the standard test data CDnet (changedetection.net), which included different challenges, such as illumination variation, moving shadow, multi-model background, and so on. The parameters such as model size K; threshold TB, Tp; and learning rates αb, αω in the algorithm were determined through the analysis of comprehensive performance F1 and averaged false negative curves.ResultThe quantitative and qualitative analyses indicates that the proposed method can achieve expected results. The method can obtain outstanding effects in certain scenes, including illumination and multi-model background. Compared with ViBe, LOBSTER(loeal binary similarity segmenter), and DECOLOR(detecting contiguous outliers in the low-rank representation), the method enhances 0.65%, 3.86%, and 3.9% of the average comprehensive performance F1 of all scenes, respectively. Modeling for every pixel in its local region is concurrent. Thus, real-time detection is achieved with GPU parallel acceleration to improve time efficiency.ConclusionRobust color name spaces effectively address illumination variation. Multiple spatiogram models effectively match multi-model background, such as waving tree, water, and fountain. Therefore, the algorithm can segment moving foreground in complex video environment more accurately than existing methods. The algorithm is a real-time and effective detection algorithm that has certain practical value in intelligent video analysis.关键词:computer vision;intelligent video analysis;moving detection;background model;color names;spatiogram21|25|0更新时间:2024-05-08 -

摘要:ObjectiveGiven the development of digital video technology, especially the emergence of ultra-high definition (UHD) video technology, video compression faces enormous challenges. To solve the problem of voluminous data and to address the high-speed transmission requirements of UHD videos, the Joint Video Experts Team (JVET) is exploring future video coding (FVC) based on the high-efficiency video coding (HEVC) standard. FVC uses the hybrid coding framework of HEVC with new techniques. The compression efficiency of FVC is higher than that of HEVC; however, its coding complexity is extremely high. Therefore, reducing the complexity of FVC is of great significance. Among all the new techniques in FVC, the most effective but extremely time consuming one is the quad tree plus binary tree (QTBT) coding structure, which includes four partition modes, namely, quad tree split, vertical split, horizontal split, and no-split. The final split of coding units (CUs) is decided after trying all the partition modes and calculating the rate distortion cost. Thus, the complexity of the QTBT is extremely high. The existing HEVC-based fast coding method is no longer suitable for FVC because the QTBT coding structure and the recent work about low-complexity encoding methods are insufficient for FVC applications. To reduce the high complexity of FVC, the complexity of the QTBT structure should be considered. The traversal process of CU partition modes exhibits redundancy, and unnecessary attempts to achieve mode partition should be avoided. To optimize CUs' split process, we propose a random forest-based fast intra coding unit partition algorithm for FVC.MethodThe proposed algorithm is designed to optimize the QTBT structure in FVC. Compared with traditional statistical-based methods, the machine learning-based approach is more applicable because of the elaborate split modes of the QTBT structure. Among the methods of machine learning, random forest offers unique advantages. Random forest can handle the classification problem of multi-dimensional data and is strongly resistant to over-fitting and estimation. Furthermore, the approach performs well on classification issues and is suitable for CU splitting. Therefore, a fast algorithm based on random forest is proposed. The problem of distinguishing different split results of CUs is considered a classification problem, and random forest is used as the classifier. The image texture features and split results of the CU in the first frame of video sequences are first extracted. Image texture features have a strong correlation with split results and can thus be selected as the training data of the model. Various image texture features are used in the algorithm to achieve superior performance, and they are carefully selected by the calculation of feature importance. Specifically, the features finally used in the proposed algorithm are the width and height of the CU, Haar wavelet coefficients, angular second moment, entropy, contrast, inverse differential moment, and standard deviation. After the data collection process, four random forest models are established for different depths of CUs. CU depth can be represented as the joint depth of the quad tree and the binary tree, and this representative method is used to collect data in the algorithm. Then, the texture features and split results are set as multidimensional data, and they are separately trained online for each model. The training time is included in the entire encoding time and is relatively shorter than the encoding time. Finally, the trained models are used to predict the split results of the CUs of the remaining frames of the video sequences, thereby reducing the traversal of the partition modes and the time of rate distortion cost calculation. To ensure the algorithm's effectiveness, we test the accuracy of the models online by using different video sequences. The algorithm is implemented on the recently released JEM5.0 platform. A total of 22 test sequences of different contents and resolutions from class A1 to class E are tested under the common test condition, which is a full I-frame configuration mode with quantization parameters 22, 27, 32, and 37. The encoding performance of the algorithm is evaluated using the Bjontegaard delta bitrate (BDBR) and average amount of time saved between the proposed algorithm and the original platform.ResultExperimental results show that compared with the original platform's algorithm, the proposed algorithm can decrease the average encoding time by 44.1% with negligible coding performance loss, and the BDBR only increases by 2.6%. The approach can also save more than 20% of encoding time relative to state-of-the-art methods, with BDBR slightly increasing. This algorithm is suitable for various classes of video sequences with different resolutions and textures. Among all the sequences, the sequences with high resolution save more encoding time than other sequences do because of the online training time consumption. Furthermore, the coding performance of the proposed algorithm is stable, thereby proving the effectiveness of the models.ConclusionA random forest-based fast intra CU partition algorithm for FVC is proposed to reduce the complexity of the QTBT structure in FVC. By extracting the texture features of images, the algorithm establishes random forest models to predict the CU partitioning result while avoiding the unnecessary traversal of split modes to save encoding time. The proposed intra prediction coding algorithm can effectively reduce the complexity of FVC and maintain the encoding performance. The proposed algorithm is more suitable for video sequences with high resolution. Furthermore, the proposed algorithm should be optimized in the future to enhance time reduction and reduce coding performance loss. The possibilities of machine learning in FVC inter-prediction will also be explored in the future.关键词:video encoding;future video coding (FVC);fast intra prediction coding;machine learning;random forest20|70|0更新时间:2024-05-08

摘要:ObjectiveGiven the development of digital video technology, especially the emergence of ultra-high definition (UHD) video technology, video compression faces enormous challenges. To solve the problem of voluminous data and to address the high-speed transmission requirements of UHD videos, the Joint Video Experts Team (JVET) is exploring future video coding (FVC) based on the high-efficiency video coding (HEVC) standard. FVC uses the hybrid coding framework of HEVC with new techniques. The compression efficiency of FVC is higher than that of HEVC; however, its coding complexity is extremely high. Therefore, reducing the complexity of FVC is of great significance. Among all the new techniques in FVC, the most effective but extremely time consuming one is the quad tree plus binary tree (QTBT) coding structure, which includes four partition modes, namely, quad tree split, vertical split, horizontal split, and no-split. The final split of coding units (CUs) is decided after trying all the partition modes and calculating the rate distortion cost. Thus, the complexity of the QTBT is extremely high. The existing HEVC-based fast coding method is no longer suitable for FVC because the QTBT coding structure and the recent work about low-complexity encoding methods are insufficient for FVC applications. To reduce the high complexity of FVC, the complexity of the QTBT structure should be considered. The traversal process of CU partition modes exhibits redundancy, and unnecessary attempts to achieve mode partition should be avoided. To optimize CUs' split process, we propose a random forest-based fast intra coding unit partition algorithm for FVC.MethodThe proposed algorithm is designed to optimize the QTBT structure in FVC. Compared with traditional statistical-based methods, the machine learning-based approach is more applicable because of the elaborate split modes of the QTBT structure. Among the methods of machine learning, random forest offers unique advantages. Random forest can handle the classification problem of multi-dimensional data and is strongly resistant to over-fitting and estimation. Furthermore, the approach performs well on classification issues and is suitable for CU splitting. Therefore, a fast algorithm based on random forest is proposed. The problem of distinguishing different split results of CUs is considered a classification problem, and random forest is used as the classifier. The image texture features and split results of the CU in the first frame of video sequences are first extracted. Image texture features have a strong correlation with split results and can thus be selected as the training data of the model. Various image texture features are used in the algorithm to achieve superior performance, and they are carefully selected by the calculation of feature importance. Specifically, the features finally used in the proposed algorithm are the width and height of the CU, Haar wavelet coefficients, angular second moment, entropy, contrast, inverse differential moment, and standard deviation. After the data collection process, four random forest models are established for different depths of CUs. CU depth can be represented as the joint depth of the quad tree and the binary tree, and this representative method is used to collect data in the algorithm. Then, the texture features and split results are set as multidimensional data, and they are separately trained online for each model. The training time is included in the entire encoding time and is relatively shorter than the encoding time. Finally, the trained models are used to predict the split results of the CUs of the remaining frames of the video sequences, thereby reducing the traversal of the partition modes and the time of rate distortion cost calculation. To ensure the algorithm's effectiveness, we test the accuracy of the models online by using different video sequences. The algorithm is implemented on the recently released JEM5.0 platform. A total of 22 test sequences of different contents and resolutions from class A1 to class E are tested under the common test condition, which is a full I-frame configuration mode with quantization parameters 22, 27, 32, and 37. The encoding performance of the algorithm is evaluated using the Bjontegaard delta bitrate (BDBR) and average amount of time saved between the proposed algorithm and the original platform.ResultExperimental results show that compared with the original platform's algorithm, the proposed algorithm can decrease the average encoding time by 44.1% with negligible coding performance loss, and the BDBR only increases by 2.6%. The approach can also save more than 20% of encoding time relative to state-of-the-art methods, with BDBR slightly increasing. This algorithm is suitable for various classes of video sequences with different resolutions and textures. Among all the sequences, the sequences with high resolution save more encoding time than other sequences do because of the online training time consumption. Furthermore, the coding performance of the proposed algorithm is stable, thereby proving the effectiveness of the models.ConclusionA random forest-based fast intra CU partition algorithm for FVC is proposed to reduce the complexity of the QTBT structure in FVC. By extracting the texture features of images, the algorithm establishes random forest models to predict the CU partitioning result while avoiding the unnecessary traversal of split modes to save encoding time. The proposed intra prediction coding algorithm can effectively reduce the complexity of FVC and maintain the encoding performance. The proposed algorithm is more suitable for video sequences with high resolution. Furthermore, the proposed algorithm should be optimized in the future to enhance time reduction and reduce coding performance loss. The possibilities of machine learning in FVC inter-prediction will also be explored in the future.关键词:video encoding;future video coding (FVC);fast intra prediction coding;machine learning;random forest20|70|0更新时间:2024-05-08

Image Processing and Coding

-

摘要:ObjectiveCollective motion detection is fundamentally important for analyzing crowd behavior and has attracted considerable attention in artificial intelligence. The recognition and segmentation of collective behavior are important branches of computer vision and graphics, which are significant for public safety, intelligent traffic, and architectural design. The main task of recognizing collective behavior is to mine the coherent patterns consisting of highly coherent tracklets according to the extracted features from crowd motion in videos. However, existing works mostly have limitations due to the insufficient utilization of crowd properties and the arbitrary processing of individuals. Collective behavior involves local and global motion patterns, in which varying densities and arbitrary shapes are salient characteristics. The global coherent motion with complex interaction requires an accurate measurement of local coherency and analysis of global continuity. A further study demonstrates that the motion descriptor and similarity measurement remain limited to finding the latent relativity among tracklet points under the circumstances of perspective distortion and large spatial gap. In view of these shortcomings, we propose the density-based manifold collective clustering approach for detecting groups in crowd scenes.MethodOur background modeling is based on the clustering strategy and aims to recognize arbitrarily shaped clusters in coherent motion. Motion features are detected with the generalized Kanade-Lucas-Tomasi feature point tracker, which jointly combines the detecting and tracking stages with efficient computation. The corresponding algorithms mainly include manifold collective density definition, density-based manifold collective clustering, and hierarchical collectiveness merging process. In the first process, a new manifold distance metric is presented to express the intrinsic characteristics of moving individuals. A collective density with novelty is defined based on this topological structure to describe the collectiveness between an individual and its neighbors. This collective density represents the local density efficiently, reflects the global consistency, and is highly adaptive to reveal the underlying patterns of varying densities in coherent motion. We propose a novel collective clustering to find a local topological relationship that can precisely recognize the collectiveness relationship between points and their surroundings. Similar to the core idea of fast search and density peak cluster method, the cluster peak centers of subgroups are assumed to be characterized by a higher collective density calculated by collectiveness than their neighbors. The density-based manifold collective clustering approach is proposed to detect local and global coherent motions with arbitrary shapes and varying densities. Three salient properties must not be ignored:1) the accurate identification of outliers and exploration of manifold structure, 2) the automatic decision of group number without involving any arbitrary threshold, and 3) the capability of dealing with crowd scenes with varying densities. Thus, in the proposed method, the center of collective subgroups is characterized by two criteria:one is a higher collective density than its neighbors, and the other is a relatively large distance and inconsistent orientation from points with a higher density. From this view, the clustering method can determine the cluster centers automatically. In the recognition of global consistency part, inspired by the main ideology of the BIRCH clustering method, a hierarchical collectiveness merging strategy is developed to combine local motions and recognize global consistency. In this process, the collectiveness is used to capture the intracorrelations of subgroups, and local clusters are successfully combined into global coherent groups by merging the highly consistent pairwise subgroups iteratively.ResultCollective Motion Database is employed to evaluate the experimental performance, and four state-of-the-art group detection techniques are used for comparison, namely, coherent filtering, collective transition, measuring crowd collectiveness, and collective density clustering. Results show that in complex scenes under different conditions, the experimental results of the algorithm remain highly effective and robust. Compared with the traditional clustering methods and state-of-the-art algorithms, average difference (AD) and variance (VAR) are used as criteria to evaluate the algorithm performance. The AD rate of our proposed method is controlled within 0.81, and the VAR rate is under 0.99, which is approximately 6% lower than those of classical clustering methods. Compared with such classical methods, our method exhibits great improvement. Accurate and effective recognition results can be obtained in crowd scenes with complex manifold structures and arbitrary density conditions, which solve the shortcomings of the classical methods in this special scenario.ConclusionThis study proposes a cluster aggregation clustering algorithm based on manifold density for multiple complex real-world videos based on the manifold description and analysis of existing methods for identifying the lack of accuracy and stability of collective behavioral flow in manifold structures. Experiments on various real-world videos and comparisons with previous works validate that our method yields substantial boosts over state-of-the-art competitors. Therefore, the proposed algorithm can have a preferable adaptive performance in complex scenes with varying densities and arbitrary shape situations.关键词:coherent motion detection;manifold density clustering;collective manifold;collectiveness;motion consistency22|10|0更新时间:2024-05-08

摘要:ObjectiveCollective motion detection is fundamentally important for analyzing crowd behavior and has attracted considerable attention in artificial intelligence. The recognition and segmentation of collective behavior are important branches of computer vision and graphics, which are significant for public safety, intelligent traffic, and architectural design. The main task of recognizing collective behavior is to mine the coherent patterns consisting of highly coherent tracklets according to the extracted features from crowd motion in videos. However, existing works mostly have limitations due to the insufficient utilization of crowd properties and the arbitrary processing of individuals. Collective behavior involves local and global motion patterns, in which varying densities and arbitrary shapes are salient characteristics. The global coherent motion with complex interaction requires an accurate measurement of local coherency and analysis of global continuity. A further study demonstrates that the motion descriptor and similarity measurement remain limited to finding the latent relativity among tracklet points under the circumstances of perspective distortion and large spatial gap. In view of these shortcomings, we propose the density-based manifold collective clustering approach for detecting groups in crowd scenes.MethodOur background modeling is based on the clustering strategy and aims to recognize arbitrarily shaped clusters in coherent motion. Motion features are detected with the generalized Kanade-Lucas-Tomasi feature point tracker, which jointly combines the detecting and tracking stages with efficient computation. The corresponding algorithms mainly include manifold collective density definition, density-based manifold collective clustering, and hierarchical collectiveness merging process. In the first process, a new manifold distance metric is presented to express the intrinsic characteristics of moving individuals. A collective density with novelty is defined based on this topological structure to describe the collectiveness between an individual and its neighbors. This collective density represents the local density efficiently, reflects the global consistency, and is highly adaptive to reveal the underlying patterns of varying densities in coherent motion. We propose a novel collective clustering to find a local topological relationship that can precisely recognize the collectiveness relationship between points and their surroundings. Similar to the core idea of fast search and density peak cluster method, the cluster peak centers of subgroups are assumed to be characterized by a higher collective density calculated by collectiveness than their neighbors. The density-based manifold collective clustering approach is proposed to detect local and global coherent motions with arbitrary shapes and varying densities. Three salient properties must not be ignored:1) the accurate identification of outliers and exploration of manifold structure, 2) the automatic decision of group number without involving any arbitrary threshold, and 3) the capability of dealing with crowd scenes with varying densities. Thus, in the proposed method, the center of collective subgroups is characterized by two criteria:one is a higher collective density than its neighbors, and the other is a relatively large distance and inconsistent orientation from points with a higher density. From this view, the clustering method can determine the cluster centers automatically. In the recognition of global consistency part, inspired by the main ideology of the BIRCH clustering method, a hierarchical collectiveness merging strategy is developed to combine local motions and recognize global consistency. In this process, the collectiveness is used to capture the intracorrelations of subgroups, and local clusters are successfully combined into global coherent groups by merging the highly consistent pairwise subgroups iteratively.ResultCollective Motion Database is employed to evaluate the experimental performance, and four state-of-the-art group detection techniques are used for comparison, namely, coherent filtering, collective transition, measuring crowd collectiveness, and collective density clustering. Results show that in complex scenes under different conditions, the experimental results of the algorithm remain highly effective and robust. Compared with the traditional clustering methods and state-of-the-art algorithms, average difference (AD) and variance (VAR) are used as criteria to evaluate the algorithm performance. The AD rate of our proposed method is controlled within 0.81, and the VAR rate is under 0.99, which is approximately 6% lower than those of classical clustering methods. Compared with such classical methods, our method exhibits great improvement. Accurate and effective recognition results can be obtained in crowd scenes with complex manifold structures and arbitrary density conditions, which solve the shortcomings of the classical methods in this special scenario.ConclusionThis study proposes a cluster aggregation clustering algorithm based on manifold density for multiple complex real-world videos based on the manifold description and analysis of existing methods for identifying the lack of accuracy and stability of collective behavioral flow in manifold structures. Experiments on various real-world videos and comparisons with previous works validate that our method yields substantial boosts over state-of-the-art competitors. Therefore, the proposed algorithm can have a preferable adaptive performance in complex scenes with varying densities and arbitrary shape situations.关键词:coherent motion detection;manifold density clustering;collective manifold;collectiveness;motion consistency22|10|0更新时间:2024-05-08 -

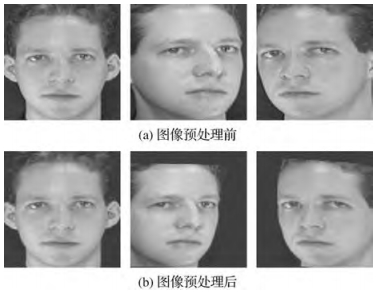

摘要:ObjectiveThe development and popularization of face recognition authentication technology in recent years has made the storage of a large number of face photos in third-party servers highly common. Face recognition plays an important role in clothing, food, housing, and various industries, and moves from theoretical research to practical application of the "blowout period". However, faces are relatively open features compared to irises and fingerprints, and many people post selfies on various social platforms. Not only can you get face photos easily through the Internet, but you can also use a variety of image processing tools to fake faces. Thus, the protection of the privacy of face information has become prominent. At present, the research content in the field of face recognition focuses on directly recognizing face images, and there is a problem of privacy leakage; or the face image is encrypted and decrypted, but the encryption and decryption operation has the disadvantage of high computational complexity.MethodTo solve the problem of the unevenness of the face in a scrambled photo due to camera angles, this study preprocesses the face image as follows. First, we determine whether a given image contains a face. If a face does exist, then we find the border that contains the complete face. Next, we must locate the key points such as the nose and eyes, align the face images on the basis of these key point positions, and normalize them to the same size following the key mechanism of vision. That is, the human eye consistently sees the center of the photo first and then gradually moves to the last four corners. Then, the key parts of the face (eyes, ears, mouth, and nose) are scrambled and blocked by Arnold transform for a random number of times. Second, to achieve face privacy protection and image recognition after scrambling, this study proposes a deep convolutional neural network based on block random scrambling, which does not include an additional layer. The network structure of the model is composed of four convolutional layers, three pooling layers, one fully connected layer, and a softmax regression layer. The convolution kernel sizes of the four convolutional layers are 6×6, 3×3, 3×3, and 2×2. In the training phase, the preprocessed samples are divided into training sets and test sets. At the beginning of training, the convolution kernel parameters are randomly initialized to a small value, and small random numbers are used to ensure that the network does not enter a saturated state due to excessive weights. The training process is divided into the forward propagation and backward propagation phases. After the input passes by the multiple convolutional layers and pooling layers, it is transferred to the output layer. In the process, the input is actually multiplied by each layer of the weight matrix, and a calculation is performed to obtain the output result. The difference between the actual output and the ideal output is calculated in the backward propagation phase, and the weight is adjusted in reverse on the basis of the minimization error method. The server side directly verifies and recognizes the scrambled face image by the deep neural network model. Prior to transmission or storage on the server, the preprocessed and randomized scrambled images are encrypted, and the key is saved to further improve security. Then, the color histogram of the image will show a straight line. When identification is necessary and if a legal key is available, it can be correctly restored to the previous state to perform the identification operation.ResultThis algorithm enables the server to not store the original face template throughout the entire process, thereby achieving effective scrambling protection of the original face image. Using the block random scrambling proposed in this paper, a higher recognition rate can be obtained. Further considering the security problem, the image after random scrambling is twice encrypted and the key is saved before being transmitted or stored in the server. The experiment uses this deep convolutional neural network to identify the ORL face database, and the final recognition accuracy rate reaches 97.62%. Concurrently, the effectiveness of the proposed method is verified by multiple sets of comparative experiments. The face of the original image before processing has a strong correlation with adjacent pixels. After the pixel position is scrambled, the pixel points of the key positions of the face have a uniform distribution trend on the whole image, and the correlation is obviously weakened. Thus, the algorithm has a good effect on hiding the pixel points of the face.ConclusionCompared with other methods that are used to manually extract features and methods based on decision trees and random forest for training recognition in the literature, the proposed method reduces the workload of manually extracting features and retains a higher recognition rate. From the experimental results, the Arnold random parameter scrambling on the block image effectively reduces the correlation of the ciphertext image, and still maintains a high recognition rate for deep neural network recognition. This paper also uses the chaotic map encryption method for secondary encryption. The results show that the correlation of ciphertext images is further reduced, which not only enhances the protection of face privacy, but also has strong robustness to the image recognition after scrambling transformation.关键词:face recognition authentication;convolutional neural network;Arnold transform;face alignment;face privacy protection22|9|0更新时间:2024-05-08

摘要:ObjectiveThe development and popularization of face recognition authentication technology in recent years has made the storage of a large number of face photos in third-party servers highly common. Face recognition plays an important role in clothing, food, housing, and various industries, and moves from theoretical research to practical application of the "blowout period". However, faces are relatively open features compared to irises and fingerprints, and many people post selfies on various social platforms. Not only can you get face photos easily through the Internet, but you can also use a variety of image processing tools to fake faces. Thus, the protection of the privacy of face information has become prominent. At present, the research content in the field of face recognition focuses on directly recognizing face images, and there is a problem of privacy leakage; or the face image is encrypted and decrypted, but the encryption and decryption operation has the disadvantage of high computational complexity.MethodTo solve the problem of the unevenness of the face in a scrambled photo due to camera angles, this study preprocesses the face image as follows. First, we determine whether a given image contains a face. If a face does exist, then we find the border that contains the complete face. Next, we must locate the key points such as the nose and eyes, align the face images on the basis of these key point positions, and normalize them to the same size following the key mechanism of vision. That is, the human eye consistently sees the center of the photo first and then gradually moves to the last four corners. Then, the key parts of the face (eyes, ears, mouth, and nose) are scrambled and blocked by Arnold transform for a random number of times. Second, to achieve face privacy protection and image recognition after scrambling, this study proposes a deep convolutional neural network based on block random scrambling, which does not include an additional layer. The network structure of the model is composed of four convolutional layers, three pooling layers, one fully connected layer, and a softmax regression layer. The convolution kernel sizes of the four convolutional layers are 6×6, 3×3, 3×3, and 2×2. In the training phase, the preprocessed samples are divided into training sets and test sets. At the beginning of training, the convolution kernel parameters are randomly initialized to a small value, and small random numbers are used to ensure that the network does not enter a saturated state due to excessive weights. The training process is divided into the forward propagation and backward propagation phases. After the input passes by the multiple convolutional layers and pooling layers, it is transferred to the output layer. In the process, the input is actually multiplied by each layer of the weight matrix, and a calculation is performed to obtain the output result. The difference between the actual output and the ideal output is calculated in the backward propagation phase, and the weight is adjusted in reverse on the basis of the minimization error method. The server side directly verifies and recognizes the scrambled face image by the deep neural network model. Prior to transmission or storage on the server, the preprocessed and randomized scrambled images are encrypted, and the key is saved to further improve security. Then, the color histogram of the image will show a straight line. When identification is necessary and if a legal key is available, it can be correctly restored to the previous state to perform the identification operation.ResultThis algorithm enables the server to not store the original face template throughout the entire process, thereby achieving effective scrambling protection of the original face image. Using the block random scrambling proposed in this paper, a higher recognition rate can be obtained. Further considering the security problem, the image after random scrambling is twice encrypted and the key is saved before being transmitted or stored in the server. The experiment uses this deep convolutional neural network to identify the ORL face database, and the final recognition accuracy rate reaches 97.62%. Concurrently, the effectiveness of the proposed method is verified by multiple sets of comparative experiments. The face of the original image before processing has a strong correlation with adjacent pixels. After the pixel position is scrambled, the pixel points of the key positions of the face have a uniform distribution trend on the whole image, and the correlation is obviously weakened. Thus, the algorithm has a good effect on hiding the pixel points of the face.ConclusionCompared with other methods that are used to manually extract features and methods based on decision trees and random forest for training recognition in the literature, the proposed method reduces the workload of manually extracting features and retains a higher recognition rate. From the experimental results, the Arnold random parameter scrambling on the block image effectively reduces the correlation of the ciphertext image, and still maintains a high recognition rate for deep neural network recognition. This paper also uses the chaotic map encryption method for secondary encryption. The results show that the correlation of ciphertext images is further reduced, which not only enhances the protection of face privacy, but also has strong robustness to the image recognition after scrambling transformation.关键词:face recognition authentication;convolutional neural network;Arnold transform;face alignment;face privacy protection22|9|0更新时间:2024-05-08 -

摘要:ObjectiveExpression is important in human-computer interaction. As a special expression, spontaneous expression features shorter duration and weaker intensity in comparison with traditional expressions. Spontaneous expressions can reveal a person's true emotions and present immense potential in detection, anti-detection, and medical diagnosis. Therefore, identifying the categories of spontaneous expression can make human-computer interaction smooth and fundamentally change the relationship between people and computers. Given that spontaneous expressions are difficult to be induced and collected, the scale of a spontaneous expression dataset is relatively small for training a new deep neural network. Only ten thousand spontaneous samples are present in each database. The convolutional neural network shows excellent performance and is thus widely used in a large number of scenes. For instance, the approach is better than the traditional feature extraction method in the aspect of improving the accuracy of discriminating the categories of spontaneous expression.MethodThis study proposes a method on the basis of different deep transfer network models for discriminating the categories of spontaneous expression. To preserve the characteristics of the original spontaneous expression, we do not use the technique of data enhancement to reduce the risk of convergence. At the same time, training samples, which comprise three-dimensional images that are composed of optical flow and grayscale images, are compared with the original RGB images. The three-dimensional image contains spatial information and temporal displacement information. In this study, we compare three network models with different samples. The first model is based on Alexnet that only changes the number of output layer neurons that is equal to the number of categories of spontaneous expression. Then, the network is fine-tuned to obtain the best training and testing results by fixing the parameters of different layers several times. The second model is based on InceptionV3. Two fully connected layers whose neuron numbers are equal to 512 and the number of spontaneous expression categories, respectively, are added to the output results. Thus, we only need to fine-tune the parameters of the two layers. Network depth increases with a reduction of the number of parameters due to the 3×3 convolution kernel replacing the 7×7 convolution kernel. The third model is based on Inception-ResNet-v2. Similar to the first model, we only change the number of output layer neurons. Finally, the isomorphic network model is proposed to identify the categories of spontaneous expression. The model is composed of two transfer learning networks of the same type that are trained by different samples and then takes the maximum as the final output. The isomorphic network makes decisions with high accuracy because the same output of the isomorphic network is infinitely close to the standard answer. From the perspective of probability, we take the maximum of different outputs as a prediction value.ResultExperimental results indicate that the proposed method exhibits excellent classification performance on different samples. The single network output clearly shows that the features extracted from RGB images are as effective as the features extracted from the three-dimensional images of optical flow. This result indicates that spatiotemporal features extracted by the optical flow method can be replaced by features that are extracted from the deep neural network. Simultaneously, the method shows that at a certain degree, features extracted from the neural network can replace the lost information and features, such as the temporal features of RGB images or color features of OF+ images. The high average accuracy of a single network indicates that it has good testing performance on each dataset. Networks with high complexity perform well because the samples of spontaneous expression can train the deep transfer learning network effectively. The proposed models achieve state-of-the-art performance and an average accuracy of over 96%. After analyzing the result of the isomorphic network model, we know that its expression is not better than that of a single network in some cases because a single network has a high confidence degree in discriminating the categories of spontaneous expression and thus, the isomorphic network cannot easily improve the average accuracy. The Titan Xp used for this research was donated by the NVIDIA Corporation.ConclusionCompared with traditional expression, spontaneous expression is able to change subtly and extract features in a difficult manner. In the study, different transfer learning networks are applied to discriminate the categories of spontaneous expression. Concurrently, the testing accuracies of different networks, which are trained by different kinds of samples, are compared. Experimental results show that in contrast to traditional methods, deep learning has obvious advantages in spontaneous expression feature extraction. The findings also prove that deep network can extract complete features from spontaneous expression and that it is robust on different databases because of its good testing results. In the future, we will extract spontaneous expressions directly from videos and identify the categories of spontaneous expression with high accuracy by removing distracting occurrences, such as blinking.关键词:spontaneous expression;transfer learning;classification;neural networks;isomorphic network15|9|0更新时间:2024-05-08

摘要:ObjectiveExpression is important in human-computer interaction. As a special expression, spontaneous expression features shorter duration and weaker intensity in comparison with traditional expressions. Spontaneous expressions can reveal a person's true emotions and present immense potential in detection, anti-detection, and medical diagnosis. Therefore, identifying the categories of spontaneous expression can make human-computer interaction smooth and fundamentally change the relationship between people and computers. Given that spontaneous expressions are difficult to be induced and collected, the scale of a spontaneous expression dataset is relatively small for training a new deep neural network. Only ten thousand spontaneous samples are present in each database. The convolutional neural network shows excellent performance and is thus widely used in a large number of scenes. For instance, the approach is better than the traditional feature extraction method in the aspect of improving the accuracy of discriminating the categories of spontaneous expression.MethodThis study proposes a method on the basis of different deep transfer network models for discriminating the categories of spontaneous expression. To preserve the characteristics of the original spontaneous expression, we do not use the technique of data enhancement to reduce the risk of convergence. At the same time, training samples, which comprise three-dimensional images that are composed of optical flow and grayscale images, are compared with the original RGB images. The three-dimensional image contains spatial information and temporal displacement information. In this study, we compare three network models with different samples. The first model is based on Alexnet that only changes the number of output layer neurons that is equal to the number of categories of spontaneous expression. Then, the network is fine-tuned to obtain the best training and testing results by fixing the parameters of different layers several times. The second model is based on InceptionV3. Two fully connected layers whose neuron numbers are equal to 512 and the number of spontaneous expression categories, respectively, are added to the output results. Thus, we only need to fine-tune the parameters of the two layers. Network depth increases with a reduction of the number of parameters due to the 3×3 convolution kernel replacing the 7×7 convolution kernel. The third model is based on Inception-ResNet-v2. Similar to the first model, we only change the number of output layer neurons. Finally, the isomorphic network model is proposed to identify the categories of spontaneous expression. The model is composed of two transfer learning networks of the same type that are trained by different samples and then takes the maximum as the final output. The isomorphic network makes decisions with high accuracy because the same output of the isomorphic network is infinitely close to the standard answer. From the perspective of probability, we take the maximum of different outputs as a prediction value.ResultExperimental results indicate that the proposed method exhibits excellent classification performance on different samples. The single network output clearly shows that the features extracted from RGB images are as effective as the features extracted from the three-dimensional images of optical flow. This result indicates that spatiotemporal features extracted by the optical flow method can be replaced by features that are extracted from the deep neural network. Simultaneously, the method shows that at a certain degree, features extracted from the neural network can replace the lost information and features, such as the temporal features of RGB images or color features of OF+ images. The high average accuracy of a single network indicates that it has good testing performance on each dataset. Networks with high complexity perform well because the samples of spontaneous expression can train the deep transfer learning network effectively. The proposed models achieve state-of-the-art performance and an average accuracy of over 96%. After analyzing the result of the isomorphic network model, we know that its expression is not better than that of a single network in some cases because a single network has a high confidence degree in discriminating the categories of spontaneous expression and thus, the isomorphic network cannot easily improve the average accuracy. The Titan Xp used for this research was donated by the NVIDIA Corporation.ConclusionCompared with traditional expression, spontaneous expression is able to change subtly and extract features in a difficult manner. In the study, different transfer learning networks are applied to discriminate the categories of spontaneous expression. Concurrently, the testing accuracies of different networks, which are trained by different kinds of samples, are compared. Experimental results show that in contrast to traditional methods, deep learning has obvious advantages in spontaneous expression feature extraction. The findings also prove that deep network can extract complete features from spontaneous expression and that it is robust on different databases because of its good testing results. In the future, we will extract spontaneous expressions directly from videos and identify the categories of spontaneous expression with high accuracy by removing distracting occurrences, such as blinking.关键词:spontaneous expression;transfer learning;classification;neural networks;isomorphic network15|9|0更新时间:2024-05-08 -