最新刊期

卷 24 , 期 4 , 2019

-

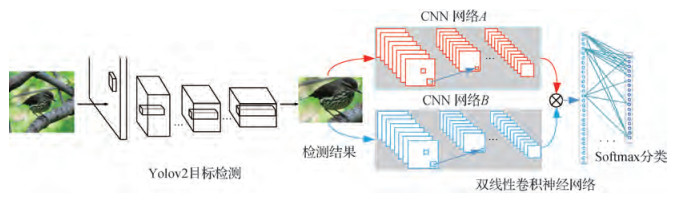

摘要:ObjectiveIn recent years, with the rapid progress of science and technology as well as the increasing demand of human life, people's research has shifted from the coarse-grained image classification to the fine-grained image classification. Fine-grained image classification is a hot research topic in the field of computer vision research in recent years. Its purpose is to provide a detailed subdivision of a large category, such as the distinction of bird species, car brand style, and dog breed. Nowadays, the fine-grained image classification has great application requirements. For example, in the field of ecological protection, the identification of different species of organisms is the key to ecological research. And in the field of botany, because of the variety and quantity of flowers as well as the similarity between different flowers which make the fine-grained image classification tasks more difficult. With the help of computer vision technology, we can realize low-cost fine-grained image classification tasks. However, the fine-grained classification often has smaller differences between classes and larger differences within classes. Thus, in comparison with the ordinary image classification, the task of the fine-grained image classification is more challenging. Moreover, the fine-grained image classification has much irrelevant information and background interference. Those problems would influence the network model to learn the actual discriminative characteristics and result in inferior classification performance in fine-grained image classification. Therefore, finding discriminative regions in the image is important for the improvement of fine-grained image classification performance. To solve this problem, a joint deep learning framework of focus and recognition is constructed for fine-grained image classification. This framework can remove the background in the image, highlight the target to be identified, and then automatically locate the discriminative area in the image. Thus, the deep convolutional neural networks can extract more useful and discriminative features, and the classification rate of fine-grained images can be improved naturally.MethodFirstly, the Yolov2 (you only look once v2) target detection algorithm can detect object in the image rapidly and eliminate the influence of background interference and unrelated information, and then the datasets, which include the detected target objects, are used to train the bilinear convolutional neural network. Finally, the final model can be used for fine-grained image classification. The Yolov2 algorithm is a further improvement of the Yolov1 target detection algorithm, and it is more precise for small object localization. It can automatically find the target in the picture to filter out most of the regions that do not contribute to image classification. Bilinear convolutional neural network is a special network for fine-grained image classification. Its characteristic is that it uses the two convolutional neural networks to extract the features of the same picture simultaneously, and the bilinear feature vector is obtained by the approaches of bilinear pooling. Finally, the bilinear feature vector is fed into the softmax network layer and the classification task is completed, we can get the final classification results. In addition, the advantage of the bilinear convolutional neural network is that it is not dependent on additional manual annotation information and it is an entire system which can complete end-to-end training. It only relies on the class label information. Therefore, it greatly reduces the difficulty and complexity of fine-grained image classification.ResultWe perform verification experiments on open standard fine-grained image library CUB-200-2011, Cars196, and Aircrafts100. We use the pre-trained target detection model of Yolov2 algorithm to detect these three datasets respectively, therefore, we can get the discriminative regions in the image for each datasets. Then, the bilinear convolutional neural network is trained by the processed datasets. Finally, our proposed bilinear convolutional neural network model can be used for the fine-grained image classification and achieves classification accuracy of 84.5%, 92%, and 88.4% on these three datasets. In comparison with the highest classification accuracy obtained by the same classification algorithm without discriminant information extraction, the classification accuracy of the three databases is improved by 0.4%, 0.7%, and 3.9%. Moreover, the recognition rate is also increased by 0.5%, 1.4%, and 4.5% compared with the same classification algorithm, which extracts features from two identical D(dence)-Net networks. We also compared with other fine-grained image classification algorithms, such as the Spatial Transformer Networks, which has a fine classification performance in fine-grained image classification and it is also an entire system and is only dependent on label information. For all that, the classification accuracy rate of ours is still 0.4 percentage points higher than the method of Spatial Transformer Networks on bird dataset.ConclusionIn this paper, an innovative method based on focused recognition network architecture is proposed to improve the recognition rate of the fine-grained image classification. And the experiment results show that our method positively affects the fine-grained image classification results, which uses the network architecture of focus and recognition to detect discriminative region in the image. It can filter out most of the area in the image which does not contribute to the classification of fine-grained images, thereby reducing the influence of background interference to the classification results. Thus, the bilinear convolutional neural network can learn more useful features, which are beneficial to the classification of fine-grained images. Finally, the recognition rate of the model of the fine-grained image classification can be improved effectively. Of course, we also compare with other fine-grained image classification algorithms on several datasets, which also strongly proves the effectiveness of our algorithm.关键词:fine-grained image classification;target detection;bilinear convolutional neural network;framework of focus and recognition;discrimination59|81|4更新时间:2024-05-07

摘要:ObjectiveIn recent years, with the rapid progress of science and technology as well as the increasing demand of human life, people's research has shifted from the coarse-grained image classification to the fine-grained image classification. Fine-grained image classification is a hot research topic in the field of computer vision research in recent years. Its purpose is to provide a detailed subdivision of a large category, such as the distinction of bird species, car brand style, and dog breed. Nowadays, the fine-grained image classification has great application requirements. For example, in the field of ecological protection, the identification of different species of organisms is the key to ecological research. And in the field of botany, because of the variety and quantity of flowers as well as the similarity between different flowers which make the fine-grained image classification tasks more difficult. With the help of computer vision technology, we can realize low-cost fine-grained image classification tasks. However, the fine-grained classification often has smaller differences between classes and larger differences within classes. Thus, in comparison with the ordinary image classification, the task of the fine-grained image classification is more challenging. Moreover, the fine-grained image classification has much irrelevant information and background interference. Those problems would influence the network model to learn the actual discriminative characteristics and result in inferior classification performance in fine-grained image classification. Therefore, finding discriminative regions in the image is important for the improvement of fine-grained image classification performance. To solve this problem, a joint deep learning framework of focus and recognition is constructed for fine-grained image classification. This framework can remove the background in the image, highlight the target to be identified, and then automatically locate the discriminative area in the image. Thus, the deep convolutional neural networks can extract more useful and discriminative features, and the classification rate of fine-grained images can be improved naturally.MethodFirstly, the Yolov2 (you only look once v2) target detection algorithm can detect object in the image rapidly and eliminate the influence of background interference and unrelated information, and then the datasets, which include the detected target objects, are used to train the bilinear convolutional neural network. Finally, the final model can be used for fine-grained image classification. The Yolov2 algorithm is a further improvement of the Yolov1 target detection algorithm, and it is more precise for small object localization. It can automatically find the target in the picture to filter out most of the regions that do not contribute to image classification. Bilinear convolutional neural network is a special network for fine-grained image classification. Its characteristic is that it uses the two convolutional neural networks to extract the features of the same picture simultaneously, and the bilinear feature vector is obtained by the approaches of bilinear pooling. Finally, the bilinear feature vector is fed into the softmax network layer and the classification task is completed, we can get the final classification results. In addition, the advantage of the bilinear convolutional neural network is that it is not dependent on additional manual annotation information and it is an entire system which can complete end-to-end training. It only relies on the class label information. Therefore, it greatly reduces the difficulty and complexity of fine-grained image classification.ResultWe perform verification experiments on open standard fine-grained image library CUB-200-2011, Cars196, and Aircrafts100. We use the pre-trained target detection model of Yolov2 algorithm to detect these three datasets respectively, therefore, we can get the discriminative regions in the image for each datasets. Then, the bilinear convolutional neural network is trained by the processed datasets. Finally, our proposed bilinear convolutional neural network model can be used for the fine-grained image classification and achieves classification accuracy of 84.5%, 92%, and 88.4% on these three datasets. In comparison with the highest classification accuracy obtained by the same classification algorithm without discriminant information extraction, the classification accuracy of the three databases is improved by 0.4%, 0.7%, and 3.9%. Moreover, the recognition rate is also increased by 0.5%, 1.4%, and 4.5% compared with the same classification algorithm, which extracts features from two identical D(dence)-Net networks. We also compared with other fine-grained image classification algorithms, such as the Spatial Transformer Networks, which has a fine classification performance in fine-grained image classification and it is also an entire system and is only dependent on label information. For all that, the classification accuracy rate of ours is still 0.4 percentage points higher than the method of Spatial Transformer Networks on bird dataset.ConclusionIn this paper, an innovative method based on focused recognition network architecture is proposed to improve the recognition rate of the fine-grained image classification. And the experiment results show that our method positively affects the fine-grained image classification results, which uses the network architecture of focus and recognition to detect discriminative region in the image. It can filter out most of the area in the image which does not contribute to the classification of fine-grained images, thereby reducing the influence of background interference to the classification results. Thus, the bilinear convolutional neural network can learn more useful features, which are beneficial to the classification of fine-grained images. Finally, the recognition rate of the model of the fine-grained image classification can be improved effectively. Of course, we also compare with other fine-grained image classification algorithms on several datasets, which also strongly proves the effectiveness of our algorithm.关键词:fine-grained image classification;target detection;bilinear convolutional neural network;framework of focus and recognition;discrimination59|81|4更新时间:2024-05-07 -

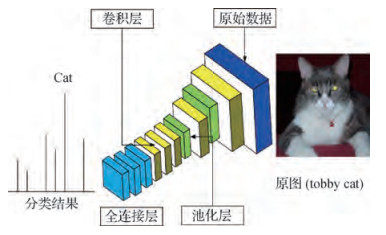

摘要:ObjectiveTraditional sparse representation classification methods have drawn extensive attention due to the improved sparse classification capacity by means of high-dimensional data. However, they ignore the information redundancy between the gallery and query sets, thereby leading to the uncertainty of final recognized results. To address this issue, we propose a novel method by jointly using a convolutional neural network (CNN) and a PCA(principal component analysis)-constrained optimization model to perform sparse representation-based classification (EPCNN-SRC).Method In this study, we present a new sparse learning strategy based on CNN and PCA-constrained optimization model to perform sparse classification. The two critical contributions of this work are as follows. First, we utilize LDA (linear discriminant analysis) to enhance further the discriminative capacity of the collaborative representation classification. Second, we obtain robust face features by using a deep CNN. Specifically, for designing a classification method, we reconstruct PCA coefficients of training samples, which are achieved via PCA-constrained optimization. The objective of the proposed classification method is to use PCA plus LDA hybrid constrained model to enhance the discriminatory capacity of SRC. In the first phase, the proposed method seeks to achieve a compressive linear representation of the test samples. Our design achieves an accurate reconstruction of the test sample using sample space and principle coefficient space. The second phase further improves the discriminative capability of the PCA coefficient in representing a test sample, thereby obtaining a competitive optimization model for face classification. Assuming that a given dataset with multiple images per subject exists, the samples in each subject are stacked as vectors. Hence, an interclass variant dictionary can be constructed by subtracting the natural image from other images of the same class for training data augmentation. Many different approaches have been proposed by researchers to construct a variation dictionary. However, the idea of setting a variation dictionary can be the same, that is, to augment the training set. The constructed interclass dictionary contains all types of important difference information, such as illumination, expression, and other differences that the error cannot represent. To improve the optimization efficiency, we project the training samples into the PCA space, in which a new sparse representation model with PCA-constrained optimization is designed. The formula is described in this study. The strength of the proposed strategies lies in successfully constructing some optimization solutions using quadratic optimization in downsized coefficient subspace, thereby enhancing the collaborative and discriminative capacity of the dictionary to reconstruct the input images. Most existing sparse or collaborative representation methods focus on the training data augmentation for effective optimization to alleviate the adverse effect of the small sample size problem. However, the original dictionary is commonly built on a high-dimensional subspace. A typical example is found in some famous collaborative representation-based methods, such as ESRC(extended sparse representation-based classifier). The abundant hybrid training atoms with high dimensionality may lead to time-consuming and uncertainty in the dataset. In our method, original and within-class variations of one subject can be approximated by a collaborative linear combination of the other subjects, thereby integrating the dimensionality reduction of the training samples and the hybrid optimization process. With the PCA-constrained model, the ESRC decomposes the original face structure of the training set into the orthogonal components known as eigenfaces, and the transformed axes can be established as a set of biases, which represent the variations among the different subjects. Thus, our method can remarkably reduce the computational complexity. Meanwhile, CNNs have been successfully used in a wide range of computer vision and pattern recognition applications and become the mainstream in face biometrics. A CNN trained on a large number of face images can extract robust textural features for face recognition across a variety of appearance variations, such as pose, expression, illumination, and occlusion. To further improve the accuracy of the proposed system, we apply state-of-the-art deep CNN features to our model to improve the accuracy. Here, the proposed classification method is based on CNN features, which are different from widely used nearest neighbor classifiers with cosine and Euclidean distances. In the data processing phase, we use the pretrained VGG16 model for feature extraction. The extracted features from the original input face image can obtain better performance than the traditional sparse representation method, which uses the raw pixel intensities for classification. Therefore, the robust sample features in the process of classification perform a crucial role.Result The designed method has achieved better performance than the traditional sparse representation methods. We repeat each experiment 20 times in every dataset and compute the average value as the final recognition rate. We design an experiment in four different face datasets to evaluate the robustness of the proposed method. Each face dataset contains different styles and numbers in express and pose change. Results are compared with some traditional related classification methods, such as ESRC, NN_CNN(nearest neighbor convolutional neural networks), CIRLRC (conventional and inverse representation-based linear regression classification), TPTSR(two-phase test sample sparse representation), SRICE (sparse representation using iterative class elimination), SRC(sparse representation-based classifier), CRC(collaborative representation based classification), and LRC(linear representation classification). All the methods are operated under the same experimental condition. To confirm the capability of the method to alleviate the adverse effect of the small sample size problem, some experiments are performed in a single sample. The results obtained from the AR, FERET, FRGC, and LFW datasets show that when each subject has only one sample, the proposed EPCNN-SRC achieves 96.92%, 96.15%, 86.94%, and 42.44% recognition rates, respectively, which are higher than that of other traditional methods. This finding has fully provided the effectiveness of the proposed method. In addition, when the test environment contains complex changes, the algorithm still shows good recognition, particularly in terms of time complexity, which is considerably lower than that of the traditional representation classification algorithm, and achieves the expected results.ConclusionIn this study, we propose EPCNN-SRC. Experiments in many datasets show that this algorithm, which applies iterative optimization strategy in feature space, not only effectively extracts the robust information features of the original samples but also combines norm and norm minimization to reduce the time cost of the representation classification algorithm. The key innovation of the proposed work is to accomplish face recognition using a novel dimensionality reduction optimization model, thereby resulting in robust SRC under appearance variations. The strength of the technique lies in successfully constructing a quadratic optimization in downsized coefficient solution subspace, thereby enhancing the discriminatory capacity of the dictionary to reconstruct input signals effectively. We believe that our promising results can encourage future works on synthesizing additional informative optimization structures and can improve this study for better SRC solutions.关键词:sparse representation;convolutional neural network;dimensionality reduction of features;PCA-constrained optimization;face recognition38|125|0更新时间:2024-05-07

摘要:ObjectiveTraditional sparse representation classification methods have drawn extensive attention due to the improved sparse classification capacity by means of high-dimensional data. However, they ignore the information redundancy between the gallery and query sets, thereby leading to the uncertainty of final recognized results. To address this issue, we propose a novel method by jointly using a convolutional neural network (CNN) and a PCA(principal component analysis)-constrained optimization model to perform sparse representation-based classification (EPCNN-SRC).Method In this study, we present a new sparse learning strategy based on CNN and PCA-constrained optimization model to perform sparse classification. The two critical contributions of this work are as follows. First, we utilize LDA (linear discriminant analysis) to enhance further the discriminative capacity of the collaborative representation classification. Second, we obtain robust face features by using a deep CNN. Specifically, for designing a classification method, we reconstruct PCA coefficients of training samples, which are achieved via PCA-constrained optimization. The objective of the proposed classification method is to use PCA plus LDA hybrid constrained model to enhance the discriminatory capacity of SRC. In the first phase, the proposed method seeks to achieve a compressive linear representation of the test samples. Our design achieves an accurate reconstruction of the test sample using sample space and principle coefficient space. The second phase further improves the discriminative capability of the PCA coefficient in representing a test sample, thereby obtaining a competitive optimization model for face classification. Assuming that a given dataset with multiple images per subject exists, the samples in each subject are stacked as vectors. Hence, an interclass variant dictionary can be constructed by subtracting the natural image from other images of the same class for training data augmentation. Many different approaches have been proposed by researchers to construct a variation dictionary. However, the idea of setting a variation dictionary can be the same, that is, to augment the training set. The constructed interclass dictionary contains all types of important difference information, such as illumination, expression, and other differences that the error cannot represent. To improve the optimization efficiency, we project the training samples into the PCA space, in which a new sparse representation model with PCA-constrained optimization is designed. The formula is described in this study. The strength of the proposed strategies lies in successfully constructing some optimization solutions using quadratic optimization in downsized coefficient subspace, thereby enhancing the collaborative and discriminative capacity of the dictionary to reconstruct the input images. Most existing sparse or collaborative representation methods focus on the training data augmentation for effective optimization to alleviate the adverse effect of the small sample size problem. However, the original dictionary is commonly built on a high-dimensional subspace. A typical example is found in some famous collaborative representation-based methods, such as ESRC(extended sparse representation-based classifier). The abundant hybrid training atoms with high dimensionality may lead to time-consuming and uncertainty in the dataset. In our method, original and within-class variations of one subject can be approximated by a collaborative linear combination of the other subjects, thereby integrating the dimensionality reduction of the training samples and the hybrid optimization process. With the PCA-constrained model, the ESRC decomposes the original face structure of the training set into the orthogonal components known as eigenfaces, and the transformed axes can be established as a set of biases, which represent the variations among the different subjects. Thus, our method can remarkably reduce the computational complexity. Meanwhile, CNNs have been successfully used in a wide range of computer vision and pattern recognition applications and become the mainstream in face biometrics. A CNN trained on a large number of face images can extract robust textural features for face recognition across a variety of appearance variations, such as pose, expression, illumination, and occlusion. To further improve the accuracy of the proposed system, we apply state-of-the-art deep CNN features to our model to improve the accuracy. Here, the proposed classification method is based on CNN features, which are different from widely used nearest neighbor classifiers with cosine and Euclidean distances. In the data processing phase, we use the pretrained VGG16 model for feature extraction. The extracted features from the original input face image can obtain better performance than the traditional sparse representation method, which uses the raw pixel intensities for classification. Therefore, the robust sample features in the process of classification perform a crucial role.Result The designed method has achieved better performance than the traditional sparse representation methods. We repeat each experiment 20 times in every dataset and compute the average value as the final recognition rate. We design an experiment in four different face datasets to evaluate the robustness of the proposed method. Each face dataset contains different styles and numbers in express and pose change. Results are compared with some traditional related classification methods, such as ESRC, NN_CNN(nearest neighbor convolutional neural networks), CIRLRC (conventional and inverse representation-based linear regression classification), TPTSR(two-phase test sample sparse representation), SRICE (sparse representation using iterative class elimination), SRC(sparse representation-based classifier), CRC(collaborative representation based classification), and LRC(linear representation classification). All the methods are operated under the same experimental condition. To confirm the capability of the method to alleviate the adverse effect of the small sample size problem, some experiments are performed in a single sample. The results obtained from the AR, FERET, FRGC, and LFW datasets show that when each subject has only one sample, the proposed EPCNN-SRC achieves 96.92%, 96.15%, 86.94%, and 42.44% recognition rates, respectively, which are higher than that of other traditional methods. This finding has fully provided the effectiveness of the proposed method. In addition, when the test environment contains complex changes, the algorithm still shows good recognition, particularly in terms of time complexity, which is considerably lower than that of the traditional representation classification algorithm, and achieves the expected results.ConclusionIn this study, we propose EPCNN-SRC. Experiments in many datasets show that this algorithm, which applies iterative optimization strategy in feature space, not only effectively extracts the robust information features of the original samples but also combines norm and norm minimization to reduce the time cost of the representation classification algorithm. The key innovation of the proposed work is to accomplish face recognition using a novel dimensionality reduction optimization model, thereby resulting in robust SRC under appearance variations. The strength of the technique lies in successfully constructing a quadratic optimization in downsized coefficient solution subspace, thereby enhancing the discriminatory capacity of the dictionary to reconstruct input signals effectively. We believe that our promising results can encourage future works on synthesizing additional informative optimization structures and can improve this study for better SRC solutions.关键词:sparse representation;convolutional neural network;dimensionality reduction of features;PCA-constrained optimization;face recognition38|125|0更新时间:2024-05-07 -

摘要:ObjectiveThe person re-identification task is of great value in multi-target tracking and the target retrieval of multi-cameras. Thus, it has received increasing attention in the field of computer vision and widespread interest among researchers at home and abroad in recent years. The differences in camera viewing angles and imaging quality lead to variations in pedestrian posture, image resolution, and illumination. These variations make the appearance of the same pedestrian in various surveillance videos considerably different. This difference, in turn, causes severe interference in person re-identification. To improve the recognition rate of person re-identification and solve the posture changing problem, this study proposes a person re-identification algorithm with region block segmentation and fusion on the basis of human body structure information.Method First, according to the distribution of the human body structure, a pedestrian image is divided into three local regions:the head part (the H region), the shoulder-knee part (the SK region), and the leg part (the L region). These local regions are enlarged to the original image size using a bilinear interpolation method, which can enhance the expression of the regions and fully use the region information. Second, according to the different roles of each local region in the recognition process, the Gaussian of Gaussian (GOG) feature is extracted from the H and the L regions. The GOG feature, the local maximal occurrence (LOMO) feature, and the kernel canonical correlation analysis (KCCA) feature are extracted from the SK region because the SK region contains the most abundant information of pedestrian images. Extracting numerous features in the SK region can increase the diversity of the region information and strengthen the role of the region in the re-identification process. Third, the interference block removal (IBR) algorithm is used to eliminate the invalid blocks in the image and fuse the similarities of the effective blocks. Given the differences in posture and viewpoint, some objects might appear in one image and be absent in another image of the same person captured by another camera. Such objects may cause large changes in the color and texture information of the pedestrian's corresponding body regions. These changes result in disturbances to the recognition process. The regions in which such objects are located are called interference blocks in this study. By observing the location of the interference blocks, we find that the interference blocks are distributed from the shoulder to the knee of pedestrians. Therefore, the IBR algorithm uses the image of the SK region. According to the human body structure distribution, the IBR algorithm horizontally divides the SK region into the chest part (h1 block), the lumbar part (h2 block), and the leg part (h3 block); and vertically divides the region into the left-arm part (v1 block), the torso part (v2 block), and the right-arm part (v3 block). Then, the GOG feature, LOMO feature, and KCCA feature are extracted from each block. The three features of each block are fed to the similarity measure function to obtain the three similarities between the corresponding blocks. The three similarities of the same block are merged to form the final similarity of the block. When the final similarities of the six block (h1, h2, h3, v1, v2, v3) pairs are calculated, the similarities of the three horizontal block (h1, h2, h3) pairs are compared to find the block with the smallest similarity, which is the interference block in the horizontal direction. The interference block in the vertical direction is found in the same manner. When the two interference blocks are removed, the influence of the interference block on the overall pedestrian similarity can be eliminated. After the interference blocks are removed, the similarities of the remaining four blocks are fused as the similarity of the SK region. Finally, the global similarity of the pedestrian image pair and the similarities of the three local regions (H, L, and SK) are combined to realize person re-identification.Result Many experiments are conducted on four benchmark datasets, namely, VIPeR, GRID, PRID450S, and CUHK01. The results of rank 1 (represents the proportion of queried people) for the four datasets are 62.85%, 30.56%, 71.82%, and 79.03%. The results of rank 5 are 86.17%, 51.20%, 91.16%, and 93.60%. The experimental results show the considerable improvement of recognition rates for the small and large datasets. Thus, the proposed algorithm offers practical application value.ConclusionExperimental results show that the proposed method can effectively express the image information of pedestrians. Furthermore, the proposed region block segmentation and fusion algorithm can remove useless and interference information in images as much as possible under the guidance of human body structure information. It can also preserve the effective information of pedestrians and use it effectively. This method can solve the differences in pedestrian appearance caused by changes in pedestrian posture to a certain extent and greatly improve recognition rates.关键词:person re-identification;human structure information;region block segmentation;interference block removal;region block fusion20|49|2更新时间:2024-05-07

摘要:ObjectiveThe person re-identification task is of great value in multi-target tracking and the target retrieval of multi-cameras. Thus, it has received increasing attention in the field of computer vision and widespread interest among researchers at home and abroad in recent years. The differences in camera viewing angles and imaging quality lead to variations in pedestrian posture, image resolution, and illumination. These variations make the appearance of the same pedestrian in various surveillance videos considerably different. This difference, in turn, causes severe interference in person re-identification. To improve the recognition rate of person re-identification and solve the posture changing problem, this study proposes a person re-identification algorithm with region block segmentation and fusion on the basis of human body structure information.Method First, according to the distribution of the human body structure, a pedestrian image is divided into three local regions:the head part (the H region), the shoulder-knee part (the SK region), and the leg part (the L region). These local regions are enlarged to the original image size using a bilinear interpolation method, which can enhance the expression of the regions and fully use the region information. Second, according to the different roles of each local region in the recognition process, the Gaussian of Gaussian (GOG) feature is extracted from the H and the L regions. The GOG feature, the local maximal occurrence (LOMO) feature, and the kernel canonical correlation analysis (KCCA) feature are extracted from the SK region because the SK region contains the most abundant information of pedestrian images. Extracting numerous features in the SK region can increase the diversity of the region information and strengthen the role of the region in the re-identification process. Third, the interference block removal (IBR) algorithm is used to eliminate the invalid blocks in the image and fuse the similarities of the effective blocks. Given the differences in posture and viewpoint, some objects might appear in one image and be absent in another image of the same person captured by another camera. Such objects may cause large changes in the color and texture information of the pedestrian's corresponding body regions. These changes result in disturbances to the recognition process. The regions in which such objects are located are called interference blocks in this study. By observing the location of the interference blocks, we find that the interference blocks are distributed from the shoulder to the knee of pedestrians. Therefore, the IBR algorithm uses the image of the SK region. According to the human body structure distribution, the IBR algorithm horizontally divides the SK region into the chest part (h1 block), the lumbar part (h2 block), and the leg part (h3 block); and vertically divides the region into the left-arm part (v1 block), the torso part (v2 block), and the right-arm part (v3 block). Then, the GOG feature, LOMO feature, and KCCA feature are extracted from each block. The three features of each block are fed to the similarity measure function to obtain the three similarities between the corresponding blocks. The three similarities of the same block are merged to form the final similarity of the block. When the final similarities of the six block (h1, h2, h3, v1, v2, v3) pairs are calculated, the similarities of the three horizontal block (h1, h2, h3) pairs are compared to find the block with the smallest similarity, which is the interference block in the horizontal direction. The interference block in the vertical direction is found in the same manner. When the two interference blocks are removed, the influence of the interference block on the overall pedestrian similarity can be eliminated. After the interference blocks are removed, the similarities of the remaining four blocks are fused as the similarity of the SK region. Finally, the global similarity of the pedestrian image pair and the similarities of the three local regions (H, L, and SK) are combined to realize person re-identification.Result Many experiments are conducted on four benchmark datasets, namely, VIPeR, GRID, PRID450S, and CUHK01. The results of rank 1 (represents the proportion of queried people) for the four datasets are 62.85%, 30.56%, 71.82%, and 79.03%. The results of rank 5 are 86.17%, 51.20%, 91.16%, and 93.60%. The experimental results show the considerable improvement of recognition rates for the small and large datasets. Thus, the proposed algorithm offers practical application value.ConclusionExperimental results show that the proposed method can effectively express the image information of pedestrians. Furthermore, the proposed region block segmentation and fusion algorithm can remove useless and interference information in images as much as possible under the guidance of human body structure information. It can also preserve the effective information of pedestrians and use it effectively. This method can solve the differences in pedestrian appearance caused by changes in pedestrian posture to a certain extent and greatly improve recognition rates.关键词:person re-identification;human structure information;region block segmentation;interference block removal;region block fusion20|49|2更新时间:2024-05-07 -

摘要:ObjectiveHuman body detection is a key subject of computer vision and has important research relevance in areas, such as intelligent video surveillance, unmanned driving, and intelligent robots. Head-shoulder detection is often used in embedded systems due to its strong anti-masking capabilities, attitude adaptability, and low computational requirements. Commonly used embedded head-shoulder detection methods mainly include motion detection and matching; however, these two methods have low detection accuracy and poor adaptability to different postures and human appearances. To improve the head-shoulder detection accuracy, an embedded real-time human head-shoulder detection method based on aggregated channel features (ACFs) is proposed.MethodA variety of pedestrian detection and human pose datasets, namely, Caltech Pedestrian dataset, INRIA Pedestrian dataset, and MPⅡ Human Pose dataset, are analyzed to generate human head-shoulder sample. Suitable samples in MPⅡ Human Pose dataset are filtered. Then, head-shoulder areas are clipped accurately on the basis of the positions of head and neck joints, and a human head-shoulder dataset with varied head-shoulder poses and perspectives, named MPⅡ-HS, is generated. The MPⅡ-HS dataset is used as positive training samples. Images from Caltech and INRIA Pedestrian datasets, which do not contain humans are used as negative training samples. AdaBoost algorithm with multiple stages, which consist of one channel of gradient amplitude, six channels of gradient direction, and three channels in YUV color space, is used to train a head-shoulder classifier for a 40×40 pixels image based on ACFs. The trained classifier is an enhanced decision tree composed of 4 096 binary decision trees with a maximum depth of five. The final score of the classifier is the sum of the scores of every binary decision tree. The classification will end early if the score sum reaches a lower threshold to speed up detection. Image feature pyramid is calculated based on fast feature pyramid algorithm. For the Linux ARM platform, multi-core parallel techniques and single-instruction multiple-data instruction set are used to accelerate the calculation of image feature pyramid. Finally, sliding-window detection is applied in multiple threads where each thread handles one row of detection windows. The trained head-shoulder image classifier identifies candidate head-shoulder targets in every detection window, and candidate detection results are merged via non-maximum suppression algorithm.ResultTo estimate the accuracy of the proposed head-shoulder detector, head-shoulder targets in the validation set of INRIA Pedestrian dataset are re-labeled and named as INRIA-HS. The trained head-shoulder image classifier is applied. The detection results are evaluated by miss rate (MR) and false positive per picture (FPPI) in the receiver operating characteristic curve. The log-average MS for head-shoulder targets with a height of ≥ 50 pixels in INRIA-HS dataset is 16.61%, that of MR is lower than 20%, whereas the FPPI is 0.1. In addition, the head-shoulder images of various poses and perspectives in different scenes are collected in actual scenes to verify the adaptability of the proposed classifier. Results show that the proposed classifier can detect multi-pose, multi-perspective, and occluded head-shoulder target under different illumination conditions. However, the receptive field of the proposed classifier is limited to the head-shoulder area; thus, some image areas similar to that of the head-shoulder but not similar to the human body may be misclassified as positive. Thus, the FPPI of the proposed head-shoulder detection is slightly higher than that of the ACF classifier trained for all human body detection. However, the proposed head-shoulder classifier is suitable for occluded human in indoor and crowded scenes. In the embedded platform with quad-core ARM Cortex-A53 with 1.4 GHz Raspberry Pi 3B, the proposed optimized head-shoulder detection program takes approximately 178 ms for a 640×480 pixels image. For a single detection window containing positive samples, the classification takes approximately 2 ms. The overall detection speed can satisfy the demands of real-time detection of video streams.ConclusionHuman head-shoulders are detected based on ACF. The generated head-shoulder dataset MPⅡ-HS has rich and varied head-shoulder samples with accurate annotations. The AdaBoost algorithm is used to learn the ACF of head-shoulder images. The trained head-shoulder image classifier has strong adaptability to different human poses or appearances. It benefits from the struct of classifier, and its hardware performance requirement is low. These advantages allow human head-shoulder detection accuracy possible on an embedded platform in a wide range.关键词:pedestrian detection;head-shoulder detection;embedded system;aggregated channel features;AdaBoost;machine learning27|56|0更新时间:2024-05-07

摘要:ObjectiveHuman body detection is a key subject of computer vision and has important research relevance in areas, such as intelligent video surveillance, unmanned driving, and intelligent robots. Head-shoulder detection is often used in embedded systems due to its strong anti-masking capabilities, attitude adaptability, and low computational requirements. Commonly used embedded head-shoulder detection methods mainly include motion detection and matching; however, these two methods have low detection accuracy and poor adaptability to different postures and human appearances. To improve the head-shoulder detection accuracy, an embedded real-time human head-shoulder detection method based on aggregated channel features (ACFs) is proposed.MethodA variety of pedestrian detection and human pose datasets, namely, Caltech Pedestrian dataset, INRIA Pedestrian dataset, and MPⅡ Human Pose dataset, are analyzed to generate human head-shoulder sample. Suitable samples in MPⅡ Human Pose dataset are filtered. Then, head-shoulder areas are clipped accurately on the basis of the positions of head and neck joints, and a human head-shoulder dataset with varied head-shoulder poses and perspectives, named MPⅡ-HS, is generated. The MPⅡ-HS dataset is used as positive training samples. Images from Caltech and INRIA Pedestrian datasets, which do not contain humans are used as negative training samples. AdaBoost algorithm with multiple stages, which consist of one channel of gradient amplitude, six channels of gradient direction, and three channels in YUV color space, is used to train a head-shoulder classifier for a 40×40 pixels image based on ACFs. The trained classifier is an enhanced decision tree composed of 4 096 binary decision trees with a maximum depth of five. The final score of the classifier is the sum of the scores of every binary decision tree. The classification will end early if the score sum reaches a lower threshold to speed up detection. Image feature pyramid is calculated based on fast feature pyramid algorithm. For the Linux ARM platform, multi-core parallel techniques and single-instruction multiple-data instruction set are used to accelerate the calculation of image feature pyramid. Finally, sliding-window detection is applied in multiple threads where each thread handles one row of detection windows. The trained head-shoulder image classifier identifies candidate head-shoulder targets in every detection window, and candidate detection results are merged via non-maximum suppression algorithm.ResultTo estimate the accuracy of the proposed head-shoulder detector, head-shoulder targets in the validation set of INRIA Pedestrian dataset are re-labeled and named as INRIA-HS. The trained head-shoulder image classifier is applied. The detection results are evaluated by miss rate (MR) and false positive per picture (FPPI) in the receiver operating characteristic curve. The log-average MS for head-shoulder targets with a height of ≥ 50 pixels in INRIA-HS dataset is 16.61%, that of MR is lower than 20%, whereas the FPPI is 0.1. In addition, the head-shoulder images of various poses and perspectives in different scenes are collected in actual scenes to verify the adaptability of the proposed classifier. Results show that the proposed classifier can detect multi-pose, multi-perspective, and occluded head-shoulder target under different illumination conditions. However, the receptive field of the proposed classifier is limited to the head-shoulder area; thus, some image areas similar to that of the head-shoulder but not similar to the human body may be misclassified as positive. Thus, the FPPI of the proposed head-shoulder detection is slightly higher than that of the ACF classifier trained for all human body detection. However, the proposed head-shoulder classifier is suitable for occluded human in indoor and crowded scenes. In the embedded platform with quad-core ARM Cortex-A53 with 1.4 GHz Raspberry Pi 3B, the proposed optimized head-shoulder detection program takes approximately 178 ms for a 640×480 pixels image. For a single detection window containing positive samples, the classification takes approximately 2 ms. The overall detection speed can satisfy the demands of real-time detection of video streams.ConclusionHuman head-shoulders are detected based on ACF. The generated head-shoulder dataset MPⅡ-HS has rich and varied head-shoulder samples with accurate annotations. The AdaBoost algorithm is used to learn the ACF of head-shoulder images. The trained head-shoulder image classifier has strong adaptability to different human poses or appearances. It benefits from the struct of classifier, and its hardware performance requirement is low. These advantages allow human head-shoulder detection accuracy possible on an embedded platform in a wide range.关键词:pedestrian detection;head-shoulder detection;embedded system;aggregated channel features;AdaBoost;machine learning27|56|0更新时间:2024-05-07 -

摘要:ObjectiveVisual tracking is a classical computer vision problem with many applications. In generic visual tracking, the task is to estimate the trajectory of a target in an image sequence, given only its initial location. Recently, traditional discriminative correlation filter-based approaches have been successfully applied to tracking problems. These methods learn a discriminative correlation filter from a set of training samples, which adopt a circular shift operator on the tracking target object (the only accurate positive sample) to obtain the training negative samples. These shifted patches are implicitly generated through the circulatory property of correlation in frequency domain and are used as negative examples for training the filter. All shifted patches are plagued by circular boundary effects and are not truly representative of negative patches in real-word scenes. Thus, the actual background information is not modeled during the total learning process and when the target object is similar to the background information, thereby leading to a drift. To improve the performance, a large number of training samples are collected, which results in the increase of computational complexity. Moreover, preferring the background is easy due to the online model update strategy, which causes drift. To resolve this problem, we construct a discriminative correlation filter-based target function with equation-constrained condition on the background-aware correlation filtering (BACF) visual object tracking algorithm, which is termed as the background-temporal-aware correlation filter (BTCF) visual object tracking. Our algorithm obtains the actual negative sample with the same size as the target object on the training set by multiplying the filter with a binary mask to suppress the background region. Moreover, it can learn a strong correlation filter-based discriminative classifier by only using the current frame information without online updating of the model.MethodIn this paper, the proposed BTCF model is convex and can be minimized to obtain the globally optimal solution. In order to further reduce the computational burden, we propose a new equation-constrained discriminative correlation filter-based objective function. This objective function satisfies the Eckstein-Bertsekas condition, therefore, it can be transformed into an unconstrained augmented Lagrange multiplier formula to converge to the global optimum solution. Then, two sub-problems with closed-form solution are gained by using the alternating direction multiplier method (ADMM). Every sub-problem is a smooth and convex function and is very easy to obtain the solution, therefore, each iteration of the sub-problem has a closed-form solution and is the global optimal solution of each sub-problem. Because of the convolution calculation in sub-problem two, it is difficult to solve the optimization problem, consequently, according to Parseval's theorem, we transform sub-problem two into Fourier domain to reduce computational complexity. The efficient ADMM based approach for learning our filter on multi-channel features, with computational cost of

摘要:ObjectiveVisual tracking is a classical computer vision problem with many applications. In generic visual tracking, the task is to estimate the trajectory of a target in an image sequence, given only its initial location. Recently, traditional discriminative correlation filter-based approaches have been successfully applied to tracking problems. These methods learn a discriminative correlation filter from a set of training samples, which adopt a circular shift operator on the tracking target object (the only accurate positive sample) to obtain the training negative samples. These shifted patches are implicitly generated through the circulatory property of correlation in frequency domain and are used as negative examples for training the filter. All shifted patches are plagued by circular boundary effects and are not truly representative of negative patches in real-word scenes. Thus, the actual background information is not modeled during the total learning process and when the target object is similar to the background information, thereby leading to a drift. To improve the performance, a large number of training samples are collected, which results in the increase of computational complexity. Moreover, preferring the background is easy due to the online model update strategy, which causes drift. To resolve this problem, we construct a discriminative correlation filter-based target function with equation-constrained condition on the background-aware correlation filtering (BACF) visual object tracking algorithm, which is termed as the background-temporal-aware correlation filter (BTCF) visual object tracking. Our algorithm obtains the actual negative sample with the same size as the target object on the training set by multiplying the filter with a binary mask to suppress the background region. Moreover, it can learn a strong correlation filter-based discriminative classifier by only using the current frame information without online updating of the model.MethodIn this paper, the proposed BTCF model is convex and can be minimized to obtain the globally optimal solution. In order to further reduce the computational burden, we propose a new equation-constrained discriminative correlation filter-based objective function. This objective function satisfies the Eckstein-Bertsekas condition, therefore, it can be transformed into an unconstrained augmented Lagrange multiplier formula to converge to the global optimum solution. Then, two sub-problems with closed-form solution are gained by using the alternating direction multiplier method (ADMM). Every sub-problem is a smooth and convex function and is very easy to obtain the solution, therefore, each iteration of the sub-problem has a closed-form solution and is the global optimal solution of each sub-problem. Because of the convolution calculation in sub-problem two, it is difficult to solve the optimization problem, consequently, according to Parseval's theorem, we transform sub-problem two into Fourier domain to reduce computational complexity. The efficient ADMM based approach for learning our filter on multi-channel features, with computational cost of${\rm{O}}\left({LKT\lg \left(T \right)} \right)$ $T$ $K$ $L$ 关键词:visual tracking;correlation filter;background-aware;temporal-aware;regularization;alternating direction multiplier method17|6|7更新时间:2024-05-07 -

摘要:ObjectiveThe problem of environmental pollution in China has become increasingly serious with the rapid development of society and economy. Creating a beautiful ecological environment is becoming an important issue in the current national planning. Environmental pollution problems affect the sustainable development of the country and society. As an important source of pollution, cement plants must be effectively counted and monitored. With the development of satellite remote sensing, high-resolution and good quality images become available. At the same time, deep learning has made great progress in the field of target detection, and many excellent deep convolutional network models, such as Faster R-CNN, YOLO, SSD, and Mask R-CNN, have been proposed recently. In the object detection task on satellite images, huge differences lie in the plant area scales, the structures of equipment composition, and the orientations of each cement plant. Thus, various cement plants are presented with various appearances due to the complex natural geographical surroundings. Overcoming the problem of cement plant target detection and recognition using traditional artificial image feature methods is difficult. However, deep learning has achieved excellent performance in the field of image target recognition. The application of the deep convolutional network may be a brilliant method to locate cement plants on satellite images.MethodA method of detecting and locating cement plant position using high-resolution satellite images was proposed based on the convolutional neural network of Faster R-CNN framework for image target detection. First, we used GoogleMap API to download Google Earth satellite images. We developed this high-resolution satellite image dataset of cement factory target using GoogleMap web API, which contains 464 cement plant locations, according to the Beijing-Tianjin-Hebei cement dataset given by the Satellite Environment Center, Ministry of Environmental protection. Through the training and testing datasets of the cement plant in Beijing-Tianjin-Hebei with three different feature extracting modules (namely, VGG(visual geometry group network), ZF, and ResNet), we compared the testing results among the three CNN models. Three methods, which include image haze removal using dark channel prior, data augmentation, and adding negative training samples, were introduced to solve the problems of overfitting and reduce high false positive rate because of insufficient amount of training data. We also verified the influence of different numbers of negative training samples on model training. We used these features to assist cement plant target detection, considering the characteristics of cement buildings with evident cylindrical cement tanks and heating reaction tower buildings.ResultThe visualized images of the convolutional feature map show that the identification of the deep convolution network for cement plant target detection is mainly based on special buildings in the plant area, such as cylindrical cement tanks, heating towers, and rectangular plants. The experimental results on the test set reveal that the ResNet achieves the best performance with the average accuracy rate of 74%. An optimization method by three methods is proposed to further enhance the detection of accurate rate and suppress the false positive rate. The precision of the promoted CNN model reached 94% in the augmented testing dataset, and the false positive rate was reduced to 14%. The true positive detection rate in the global cement plant dataset reached 96%, and the number of false detection of 10 000 random satellite images was reduced to 30 (0.3%). The actual cement plant target scan detection of the satellite images in the entire Shanghai area was conducted. To avoid dividing the cement plant into two image parts, we used the overlapping detection method, which also detected adjacent areas between satellite images. As a result, 11 out of 16 registered cement plants, and 17 unregistered cement plants are detected.ConclusionThe cement plant has special buildings of different shapes and background, even varying over time, thereby resulting in relatively difficult detection task. However, the method of cement plant detection on satellite images based on deep convolutional networks can automatically learn to extract effective features and can identify the position of the target in the image. In addition, some optimization methods, including image preprocessing, data augmentation, and adding negative samples to promote the performance of the model, are adopted to improve the model detection accuracy and solve the problem of few training data. The geographic latitude and longitude coordinates of cement targets are easy to obtain based on the result of the image object detection and its position information because the satellite image is geocoded. We can also estimate the cement plant area using information of detected boxes. In the model generalization capability test experiment, the proposed method achieves good performance in the detection and location tasks in the global cement dataset. The scanning results of the entire satellite image set in Shanghai indicate that the deep convolution network target detection method not only can detect most registered cement plants but also multiple unregistered cement plants. The method provides a reliable reference for monitoring environmental pollution sources. Furthermore, this model can be easily converted to detect other architectural targets using transfer learning techniques.关键词:high-resolution satellite image;target detection;convolutional neural network;deep learning;model optimization25|4|4更新时间:2024-05-07

摘要:ObjectiveThe problem of environmental pollution in China has become increasingly serious with the rapid development of society and economy. Creating a beautiful ecological environment is becoming an important issue in the current national planning. Environmental pollution problems affect the sustainable development of the country and society. As an important source of pollution, cement plants must be effectively counted and monitored. With the development of satellite remote sensing, high-resolution and good quality images become available. At the same time, deep learning has made great progress in the field of target detection, and many excellent deep convolutional network models, such as Faster R-CNN, YOLO, SSD, and Mask R-CNN, have been proposed recently. In the object detection task on satellite images, huge differences lie in the plant area scales, the structures of equipment composition, and the orientations of each cement plant. Thus, various cement plants are presented with various appearances due to the complex natural geographical surroundings. Overcoming the problem of cement plant target detection and recognition using traditional artificial image feature methods is difficult. However, deep learning has achieved excellent performance in the field of image target recognition. The application of the deep convolutional network may be a brilliant method to locate cement plants on satellite images.MethodA method of detecting and locating cement plant position using high-resolution satellite images was proposed based on the convolutional neural network of Faster R-CNN framework for image target detection. First, we used GoogleMap API to download Google Earth satellite images. We developed this high-resolution satellite image dataset of cement factory target using GoogleMap web API, which contains 464 cement plant locations, according to the Beijing-Tianjin-Hebei cement dataset given by the Satellite Environment Center, Ministry of Environmental protection. Through the training and testing datasets of the cement plant in Beijing-Tianjin-Hebei with three different feature extracting modules (namely, VGG(visual geometry group network), ZF, and ResNet), we compared the testing results among the three CNN models. Three methods, which include image haze removal using dark channel prior, data augmentation, and adding negative training samples, were introduced to solve the problems of overfitting and reduce high false positive rate because of insufficient amount of training data. We also verified the influence of different numbers of negative training samples on model training. We used these features to assist cement plant target detection, considering the characteristics of cement buildings with evident cylindrical cement tanks and heating reaction tower buildings.ResultThe visualized images of the convolutional feature map show that the identification of the deep convolution network for cement plant target detection is mainly based on special buildings in the plant area, such as cylindrical cement tanks, heating towers, and rectangular plants. The experimental results on the test set reveal that the ResNet achieves the best performance with the average accuracy rate of 74%. An optimization method by three methods is proposed to further enhance the detection of accurate rate and suppress the false positive rate. The precision of the promoted CNN model reached 94% in the augmented testing dataset, and the false positive rate was reduced to 14%. The true positive detection rate in the global cement plant dataset reached 96%, and the number of false detection of 10 000 random satellite images was reduced to 30 (0.3%). The actual cement plant target scan detection of the satellite images in the entire Shanghai area was conducted. To avoid dividing the cement plant into two image parts, we used the overlapping detection method, which also detected adjacent areas between satellite images. As a result, 11 out of 16 registered cement plants, and 17 unregistered cement plants are detected.ConclusionThe cement plant has special buildings of different shapes and background, even varying over time, thereby resulting in relatively difficult detection task. However, the method of cement plant detection on satellite images based on deep convolutional networks can automatically learn to extract effective features and can identify the position of the target in the image. In addition, some optimization methods, including image preprocessing, data augmentation, and adding negative samples to promote the performance of the model, are adopted to improve the model detection accuracy and solve the problem of few training data. The geographic latitude and longitude coordinates of cement targets are easy to obtain based on the result of the image object detection and its position information because the satellite image is geocoded. We can also estimate the cement plant area using information of detected boxes. In the model generalization capability test experiment, the proposed method achieves good performance in the detection and location tasks in the global cement dataset. The scanning results of the entire satellite image set in Shanghai indicate that the deep convolution network target detection method not only can detect most registered cement plants but also multiple unregistered cement plants. The method provides a reliable reference for monitoring environmental pollution sources. Furthermore, this model can be easily converted to detect other architectural targets using transfer learning techniques.关键词:high-resolution satellite image;target detection;convolutional neural network;deep learning;model optimization25|4|4更新时间:2024-05-07 -

摘要:ObjectiveIn view of the increasing number and diversity of minority clothing in the domains of multimedia, digital clothing, graphics, and images, understanding and recognizing minority clothing images automatically is essential. However, most previous works have used low-level features directly for classification and recognition, there by lacking local feature analysis and semantic annotation of clothing. The diversity of clothing colors and styles results in low recognition accuracy of minority clothing. Therefore, a minority clothing recognition method based on human detection and multitask learning was proposed for Yunnan minority clothing.MethodThe main idea of this work is to propose the

摘要:ObjectiveIn view of the increasing number and diversity of minority clothing in the domains of multimedia, digital clothing, graphics, and images, understanding and recognizing minority clothing images automatically is essential. However, most previous works have used low-level features directly for classification and recognition, there by lacking local feature analysis and semantic annotation of clothing. The diversity of clothing colors and styles results in low recognition accuracy of minority clothing. Therefore, a minority clothing recognition method based on human detection and multitask learning was proposed for Yunnan minority clothing.MethodThe main idea of this work is to propose the$k$ $k$ 关键词:minority clothing;image recognition;human detection;semantic attributes;multi-task learning13|4|8更新时间:2024-05-07 -

摘要:ObjectiveRapid and convenient product retrieval is the key for excellent user experience in online shopping. The application of keyword-based retrieval in commodity retrieval is ineffective because of problems, such as standardization of the description of goods and the differences in the understanding of the attributes of the goods by the users. In recent years, "search by image" has been increasingly used in e-commerce platforms. Retrieval technology is constantly improving, from text-based image retrieval to content-based image retrieval, and then to utilizing deep learning to achieve image retrieval. However, retrieval results are often unsatisfactory. These methods cannot rapidly and accurately retrieve results that satisfy people's expectations, thereby lacking excellent user experience. Therefore, a new method of commodity retrieval is proposed. From the features of the commodity design, the image feature is obtained using the complete picture information as well as the human cognition of the goods, which is introduced into the retrieval process of the commodity picture to obtain the desired results.MethodHuman cognition of commodities is a type of subconsciousness formed by human experience, which corresponds to the designers' norms. We can obtain results that are consistent with human cognitive retrieval results by studying the commodity design specifications and designing commodity features and then using these features for commodity retrieval. We select fashionable women's bags as the research object. Women's bags are a necessity and favorable to women; thus, bags have practical relevance to the study. Moreover, the design elements of women's bags are relatively independent and flexible. Thus, using traditional image retrieval methods is difficult to satisfy user's retrieval intentions. Therefore, studying similar searches of women's bags is necessary. The design features are decomposed into shape, color, and design element features based on the designers' specifications (such as tassel, chain, and zipper). A deep convolution neural network is used to construct classification models for the three features. The features of each picture are then extracted, and three feature sets are established for similarity comparison in retrieval. The shape, color, and design element picture sets are established to construct the feature models that correspond to shape, color, and local design elements, respectively. Each picture set must be marked in advance. The shape picture set is marked by 14 categories, including shell, Boston, and platinum bags. The color picture set is marked by 13 categories, including red, orange, and yellow. The design element picture set is marked by 11 categories, including strip closure, zipper decoration, and diamond grille. Adding a Hashing layer into the deep convolution neural network and extracting Hashing layer data as image features can provide feature binarization and simplify the calculation. At the same time, in the retrieval process, using the proposed Top3 within-class retrieval algorithm can reduce the algorithm complexity. Searching can be according to the classification features, namely, shape, color, and design elements, selected by users in real time. Thus, the retrieval results reflect the users' intention of commodity search. Given a picture of a fashion woman bag image to be retrieved, the corresponding classification model is called after the user selects the classification features. First, the classification of the image under a feature is recognized, and the image feature is then extracted. Subsequently, the Euclidean distance is calculated with all the images in Top3. Finally, the retrieval results are returned in order of similarity.ResultThe dataset is currently the only one dedicated to the search of fashionable women's bags. Notably, the design element picture set contains not only the overall picture of bags but also the segmented design element picture. The dataset and feature models are used for classification recognition and image retrieval experiments. Results show that the recognition accuracy of each model of the Top1 algorithm is less than 95%, whereas the recognition accuracy of the Top3 is more than 98.5%. Using Top3 within-class retrieval algorithm can speed up the retrieval and ensure the accuracy of the retrieval results as much as possible. At the same time, the use of Hashing method and Top3 within-class retrieval algorithm results in nearly 3.5 times faster retrieval speed and greatly improves the retrieval efficiency. When multiple features for commodity retrieval are used, the corresponding weights of color, shape, and design elements are 0.6, 0.2, and 0.2 respectively. These weights can be defined by the users in real time to reflect the changes of users' attention to different features during the retrieval process.ConclusionA method of commodity retrieval that is based on the commodity appearance design criterion and is combined with people's cognitive model, is proposed. In comparison with image-based retrieval tools, such as Taobao and Baidu, the retrieval results are more similar to the original image and more in line with people's expectations. At the same time, according to the user's preference, the proposed method can synthetically query according to single and multiple features, and the retrieval results are diversified. In addition, we use the global features of shape and color and the local feature of design elements to conduct a survey of online users' retrieval satisfaction. The survey results show that the user satisfaction of Taobao and Baidu pictures is similar. However, the user satisfaction of women's bag retrieval results obtained by the proposed method is remarkably higher than those of Taobao and Baidu pictures, which is more consistent with human cognition. The proposed method is suitable for the retrieval of fashionable women's bags and can be used for reference in the research of image-based retrieval methods for other goods. At present, for a given bag picture, the design elements are obtained by interactive manual segmentation in the process of similar bag retrieval. In future works, we can study the method of identifying the design elements of women's package to realize the automatic identification and segmentation of design elements, thereby improving the automation of women's package retrieval and the practical value of the proposed method.关键词:cognitive;design specification;online shopping;image retrieval;deep convolution neural network (DCNN);Hashing method13|4|0更新时间:2024-05-07