Latest Issue

Volume 24, Issue 2, 2019

Abstract

Objective: Clothing retrieval combines clothing detection, clothing classification, and feature learning, and plays an important role in clothing promotion and sales. Current clothing retrieval algorithms are mainly based on deep neural networks: they first learn high-dimensional features of clothing images and then compare these features across images to determine clothing similarity. Such algorithms usually suffer from a semantic gap. They cannot connect the learned features with semantic attributes such as color, texture, and style, which limits their interpretability. Consequently, they adapt poorly to other domains and often fail to retrieve clothing in new styles. Retrieval accuracy therefore needs to be improved, especially for cross-domain, multi-label clothing images. This study proposes a new clothing retrieval pipeline based on deep multi-label parsing and hashing, which increases cross-domain retrieval accuracy and reduces the dimensionality of the features output by the deep neural network.

Method: Based on the semantic representation of street-snap photos, we adopt and improve a fully convolutional network (FCN) to parse clothing at the pixel level. To overcome fragmented labels and noise, we apply conditional random fields (CRFs) to the FCN output as post-processing. In addition, a new image retrieval algorithm based on multi-task learning and hashing is proposed to address the semantic gap and the curse of dimensionality in clothing retrieval. A hashing algorithm maps the extracted high-dimensional feature vectors into a low-dimensional Hamming space while preserving their similarities, which addresses the curse of dimensionality in clothing retrieval and enables real-time performance.
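The hashing step described above can be illustrated with a minimal sketch. The abstract does not give the hash function, and the paper learns its codes with a multi-task network; the sketch instead swaps in random-hyperplane locality-sensitive hashing (the sign of random Gaussian projections), a data-independent technique that also maps high-dimensional features to compact binary codes while tending to preserve angular similarity. The function names, the 64-bit code length, and the random "deep features" are illustrative assumptions.

```python
import numpy as np


def make_projections(dim: int, n_bits: int, seed: int = 0) -> np.ndarray:
    """Random Gaussian hyperplanes; a stand-in for a learned hash layer (assumption)."""
    rng = np.random.default_rng(seed)
    return rng.standard_normal((dim, n_bits))


def hash_codes(features: np.ndarray, projections: np.ndarray) -> np.ndarray:
    """Map (n, dim) real-valued features to (n, n_bits) binary codes in {0, 1}."""
    return (features @ projections > 0).astype(np.uint8)


def hamming_rank(query_code: np.ndarray, db_codes: np.ndarray) -> np.ndarray:
    """Database indices sorted by Hamming distance to one query code, closest first."""
    distances = np.count_nonzero(db_codes != query_code, axis=1)
    return np.argsort(distances, kind="stable")


# Usage: a slightly perturbed copy of gallery item 42 should retrieve item 42 first.
rng = np.random.default_rng(1)
gallery = rng.standard_normal((1000, 128))  # hypothetical 128-d deep features
proj = make_projections(dim=128, n_bits=64)
gallery_codes = hash_codes(gallery, proj)
query = gallery[42] + 0.01 * rng.standard_normal(128)
ranking = hamming_rank(hash_codes(query[None, :], proj)[0], gallery_codes)
print(ranking[:5])
```

Each gallery item is stored as 64 bits instead of 128 floating-point values, and comparing two items reduces to counting differing bits, which is what makes approximate nearest neighbor search in Hamming space cheap in storage and time.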
Moreover, we reorganize the Consumer-to-Shop database for cross-scene clothing retrieval. The database is organized by shop and consumer photos so that the clothes under the same ID are similar. We also propose a clothing classification model and integrate it into a traditional clothing similarity model to overcome semantic drift. In summary, the proposed clothing retrieval model consists of two parts. The first is a semantic segmentation network for street-snap photos, which identifies the specific clothing items in an image. The second is a hashing model based on a multi-task network, which maps high-dimensional network features into a low-dimensional hash space.

Result: We modify the Clothing Co-Parsing dataset, build the Consumer-to-Shop dataset, and conduct clothing parsing experiments on the modified dataset. We find that the FCN may lose fine image details: after several up-sampling operations, the segmentation results show blurred edges and blocky color artifacts. To overcome these limitations, CRFs are used to correct the results. With CRF post-processing, many regions are assigned correct labels, and fragmented color blocks are replaced by smooth segmentation results that are easier to interpret visually. We then compare our method with three mainstream retrieval algorithms; the results show that our method achieves top-tier accuracy using hash features. Its top-5 accuracy is 1.31% higher than that of WTBI and 0.21% higher than that of DARN.

Conclusion: We propose a deep multi-label parsing and hashing retrieval network to improve the efficiency and accuracy of clothing retrieval. For clothing parsing, the modified FCN-CRF model gives the best subjective visual results among the compared methods and achieves superior running time. For clothing retrieval, approximate nearest neighbor search is employed, and a hashing algorithm compresses the high-dimensional features. At the same time, the clothing classification and clothing similarity models are trained jointly in a multi-task learning network to counter semantic drift during retrieval. Compared with other clothing retrieval methods, our method shows several advantages in multi-label clothing retrieval scenarios: it achieves the highest top-10 accuracy, reduces storage space, and improves retrieval efficiency.

Keywords: clothes retrieval; FCN; Hashing; multi-label parsing; multi-task learning
Updated: 2024-05-07
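The top-5 and top-10 accuracies reported in the clothing retrieval abstract above can be made concrete with a small sketch. It assumes the common Consumer-to-Shop convention that a query counts as a hit when its ground-truth shop item appears among the first k retrieved items; the abstract does not spell out the evaluation protocol, and the function name and toy data are illustrative.

```python
import numpy as np


def top_k_accuracy(ranked_ids: np.ndarray, true_ids: np.ndarray, k: int) -> float:
    """Fraction of queries whose ground-truth item ID is among the first k retrieved IDs.

    ranked_ids: (n_queries, n_retrieved) gallery item IDs, most similar first
    true_ids:   (n_queries,) ground-truth item ID for each query
    """
    hits = (ranked_ids[:, :k] == true_ids[:, None]).any(axis=1)
    return float(hits.mean())


ranked = np.array([[3, 1, 2], [0, 2, 1], [1, 0, 3]])
truth = np.array([1, 1, 2])
print([top_k_accuracy(ranked, truth, k) for k in (1, 2, 3)])  # [0.0, 0.333..., 0.666...]
```

Because a larger k can only add hits, top-k accuracy never decreases as k grows, which is why top-10 figures are at least as high as top-5 figures for the same method.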

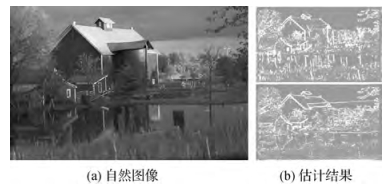

Abstract

Objective: Image dehazing reduces the degradation caused by low-visibility imaging environments, such as fog, haze, and sand, and improves the quality of the acquired image information. It mainly addresses blurred image features, low contrast, concentrated gray levels, and color distortion. Current image dehazing methods fall into two categories: image restoration and image enhancement. Restoration-based dehazing algorithms analyze the degradation mechanism of the image and use prior knowledge or assumptions to establish a physical degradation model, from which the clear image is restored in a targeted manner. Compared with enhancement algorithms, they are more targeted, remove blur more effectively, and preserve image information more completely, which makes them worth investigating. Therefore, an image dehazing algorithm based on a mixed prior and a weighted guided filter (MPWGF) is proposed to eliminate the prior blind zone and improve the sharpness of edge details in haze-free images.

Method: First, a new atmospheric light estimation method is proposed to reduce the limitations of existing estimators and exploit the advantages of the mixed prior. The positions of the brightest 0.1% of pixels in the dark channel map and in the depth map are extracted, and the two sets of coordinates are compared. A coordinate point is retained only if it appears in both maps and is otherwise discarded; the atmospheric light is then taken from the highest-brightness values in the original image at the remaining coordinate points. This method eliminates outliers to some extent and improves the accuracy of atmospheric light estimation. Then, the mixed prior theory is used to calculate the atmospheric transmission of the double-constraint region, which eliminates the prior blind zone to a certain extent.
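The atmospheric light estimation described above can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes an RGB image scaled to [0, 1], a depth map in which larger values mean farther (hazier) scene points, and a fallback to the dark-channel candidates when the two candidate sets do not overlap, a case the abstract does not address. The patch size, function names, and toy maps are illustrative; the usage example passes hand-made dark-channel and depth maps so that the outlier rejection is easy to follow.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def dark_channel(image: np.ndarray, patch: int = 15) -> np.ndarray:
    """Per-pixel minimum over RGB followed by a patch x patch minimum filter (odd patch)."""
    min_rgb = image.min(axis=2)
    r = patch // 2
    padded = np.pad(min_rgb, r, mode="edge")
    return sliding_window_view(padded, (patch, patch)).min(axis=(-2, -1))


def brightest_positions(values: np.ndarray, fraction: float) -> set:
    """Flat indices of the brightest `fraction` of pixels (at least one pixel)."""
    n = max(1, int(round(values.size * fraction)))
    return set(np.argsort(values, axis=None)[-n:].tolist())


def estimate_atmospheric_light(image, dark, depth, fraction=0.001):
    """Keep positions that are among the brightest in BOTH the dark channel and the
    depth map, then return the brightest original-image pixel among them."""
    dark_pos = brightest_positions(dark, fraction)
    candidates = dark_pos & brightest_positions(depth, fraction)
    if not candidates:  # assumption: fall back to the dark-channel candidates only
        candidates = dark_pos
    idx = np.fromiter(candidates, dtype=np.int64)
    flat = image.reshape(-1, image.shape[2])
    return flat[idx[np.argmax(flat[idx].sum(axis=1))]]


# Usage with toy maps: (9, 9) is a bright white object close to the camera.
dark = np.zeros((10, 10))
depth = np.zeros((10, 10))
image = np.full((10, 10, 3), 0.3)
dark[0, 0], dark[0, 1], dark[9, 9] = 0.95, 0.90, 0.99
depth[0, 0], depth[0, 1], depth[5, 5] = 1.00, 0.90, 0.80
image[0, 0], image[0, 1], image[9, 9] = (0.80, 0.85, 0.90), (0.70, 0.70, 0.70), (1.0, 1.0, 1.0)
A = estimate_atmospheric_light(image, dark, depth, fraction=0.03)
print(A)  # the pixel at (0, 0); the white object is rejected by the depth check
```

Using the dark channel alone would pick the white object at (9, 9), a classic failure case; requiring the candidate to also be far away in the depth map removes it.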
This theory improves the robustness of the dehazing algorithm. The dark channel prior (DCP) and the color attenuation prior (CAP) both have good recovery effects, and combining them can compensate for the prior blind zone. Therefore, an effective region segmentation method is proposed to divide hazy images into bright regions and foggy regions. According to the regional characteristics, DCP and CAP are used to obtain the atmospheric transmittance maps, which solves the prior blind zone problem, in which relying on a single prior estimation method reduces the robustness of the restoration algorithm. Finally, an adaptive guided filter is used to refine the transmission map and sharpen image edge details. The coarse transmittance map must be refined to eliminate the halo and block artifacts that appear locally in the restored image. Traditional transmittance map refinement algorithms preserve edges poorly and lose considerable detail. Thus, this study proposes an adaptive weighted guided filtering algorithm based on the traditional algorithm, which improves the edge details of the refined transmittance map by adding an adaptive weighting factor.

Result: Standard dehazing test images and foggy images captured by a small UAV are used in the experiments. The rationality of each improvement and the superiority of the overall algorithm are verified by comparing the restoration results of four algorithms that combine different steps. Experimental results show that the mixed prior theory reduces the distortion of DCP in bright regions and the deficiency of CAP in dense fog, and achieves better visual results. Weighted guided filtering reduces edge blurring and keeps edge details clear after restoration. Compared with the other algorithms, the proposed method yields better visual results and more evident edge details in haze-free images, and the comprehensive evaluation index increases considerably on average.

Conclusion: Theoretical analysis and experimental verification demonstrate the superiority of the proposed model for restoring hazy images. The main conclusions are as follows. The mixed prior theory alleviates the prior blind zone problem of DCP and CAP to a certain extent and improves dehazing. The adaptive guided filtering algorithm refines the transmittance map better and improves the edge sharpness of the dehazed image. Combining the mixed prior theory with the adaptive guided filtering algorithm improves the dehazing result further. Under the same conditions, the proposed algorithm restores images better than traditional dehazing algorithms. This study has limitations in the parameter settings of the region segmentation: the parameters are adjusted empirically and lack a solid theoretical basis. Parameter setting will be investigated in future work. MPWGF has broad application prospects in image restoration, artificial intelligence, photogrammetry, and other fields.

Keywords: image dehazing; mixed prior; double constraint region; guided filter; atmospheric light
Updated: 2024-05-07
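The region-wise use of the two priors and the transmission refinement described in the dehazing abstract above can be sketched as below. This is a hedged illustration under several assumptions: the bright-region mask comes from a hypothetical brightness threshold (the abstract does not give the segmentation rule), the CAP estimate is used in bright regions and the DCP estimate elsewhere (following the reported weakness of DCP in bright regions), and the refinement is the standard guided filter of He et al. The paper's adaptive weighting factor is not specified in the abstract and is not reproduced.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def box_mean(x: np.ndarray, r: int) -> np.ndarray:
    """Mean over a (2r+1) x (2r+1) window with edge padding; output has the input's shape."""
    padded = np.pad(x, r, mode="edge")
    return sliding_window_view(padded, (2 * r + 1, 2 * r + 1)).mean(axis=(-2, -1))


def guided_filter(guide: np.ndarray, src: np.ndarray, r: int = 8, eps: float = 1e-3) -> np.ndarray:
    """Standard (non-adaptive) guided filter: edge-preserving smoothing of src steered by guide."""
    mean_i, mean_p = box_mean(guide, r), box_mean(src, r)
    var_i = box_mean(guide * guide, r) - mean_i ** 2
    cov_ip = box_mean(guide * src, r) - mean_i * mean_p
    a = cov_ip / (var_i + eps)  # local linear model: q = a * guide + b in each window
    b = mean_p - a * mean_i
    return box_mean(a, r) * guide + box_mean(b, r)


def fuse_transmission(t_dcp, t_cap, gray, bright_threshold=0.8):
    """Use the CAP estimate in bright regions and the DCP estimate elsewhere.
    bright_threshold is a hypothetical parameter, not taken from the paper."""
    return np.where(gray > bright_threshold, t_cap, t_dcp)


# Usage with placeholder coarse estimates (not real DCP/CAP outputs).
rng = np.random.default_rng(0)
gray = rng.random((64, 64))
t_dcp = np.clip(1.0 - 0.9 * gray, 0.1, 1.0)
t_cap = np.full_like(gray, 0.6)
t_refined = guided_filter(gray, fuse_transmission(t_dcp, t_cap, gray), r=4, eps=1e-3)
```

The parameter `eps` controls how strongly edges of the guide are preserved: small values keep the filtered transmission close to the guide's local structure, while large values approach plain box smoothing.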

Abstract

Objective: Natural images generally consist of smooth regions separated by sharp edges, which leads to a heavy-tailed gradient distribution. Such gradient priors are commonly used for image deblurring. However, previous results show that existing parameter estimation methods cannot closely fit the texture variations across different image patches. This study presents an image deblurring algorithm that uses a locally adaptive sparse gradient model based on the block-wise stationary distribution of natural images.

Method: First, our method uses a generalized Gaussian distribution (GGD) to represent the heavy-tailed gradient statistics of the image. Second, an adaptive sparse gradient model is established to estimate the clean image by maximum a posteriori estimation. Rather than assigning a single gradient prior to the entire image, the model allows different patches, even within a single image, to follow different gradient distributions. Third, an alternating minimization algorithm based on variable splitting is employed to solve the optimization problem of the deblurring model. The problem is divided into two sub-problems, namely, the latent image $\boldsymbol{u}$ sub-problem and the $\boldsymbol{\omega}$ sub-problem …

Keywords: image deblurring; adaptive sparse gradient; statistical prior; distribution parameter estimation; image deconvolution
Updated: 2024-05-07
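Two ingredients of the deblurring method above can be sketched compactly. The first estimates a separate GGD shape parameter $\alpha$ for each patch by moment matching, using $(\mathbb{E}|x|)^2/\mathbb{E}[x^2] = \Gamma(2/\alpha)^2/\big(\Gamma(1/\alpha)\Gamma(3/\alpha)\big)$, which increases monotonically with $\alpha$. The second is the closed-form, FFT-based solution of a latent image ($\boldsymbol{u}$) sub-problem of the kind that arises in variable splitting, assuming periodic boundaries, a quadratic coupling weight `beta`, and auxiliary gradient variables `wx`, `wy` standing in for $\boldsymbol{\omega}$. The abstract states neither the paper's estimator nor its exact objective, so both are assumptions, and the $\boldsymbol{\omega}$ sub-problem (a per-pixel shrinkage that depends on the estimated shape) is omitted.

```python
import math
import numpy as np


def ggd_moment_ratio(alpha: float) -> float:
    """(E|x|)^2 / E[x^2] for a zero-mean generalized Gaussian with shape alpha."""
    return math.exp(2 * math.lgamma(2 / alpha) - math.lgamma(1 / alpha) - math.lgamma(3 / alpha))


def estimate_ggd_shape(samples, lo: float = 0.1, hi: float = 10.0, iters: int = 60) -> float:
    """Moment-matching estimate of the GGD shape; the ratio grows with alpha, so bisect."""
    x = np.asarray(samples, dtype=float).ravel()
    target = np.mean(np.abs(x)) ** 2 / max(np.mean(x ** 2), 1e-12)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if ggd_moment_ratio(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def patch_shape_map(image: np.ndarray, patch: int = 16) -> np.ndarray:
    """One GGD shape per non-overlapping patch of horizontal gradients (adaptive prior)."""
    gx = np.diff(image, axis=1)
    rows, cols = gx.shape[0] // patch, gx.shape[1] // patch
    return np.array([[estimate_ggd_shape(gx[i * patch:(i + 1) * patch, j * patch:(j + 1) * patch])
                      for j in range(cols)] for i in range(rows)])


def psf2otf(kernel: np.ndarray, shape) -> np.ndarray:
    """Zero-pad a kernel to `shape`, centre it at the origin, and take its 2-D FFT."""
    padded = np.zeros(shape)
    kh, kw = kernel.shape
    padded[:kh, :kw] = kernel
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.fft.fft2(padded)


def solve_u(g, kernel, wx, wy, beta):
    """argmin_u ||k*u - g||^2 + beta * (||Dx u - wx||^2 + ||Dy u - wy||^2), periodic boundaries."""
    K = psf2otf(kernel, g.shape)
    Dx = psf2otf(np.array([[1.0, -1.0]]), g.shape)
    Dy = psf2otf(np.array([[1.0], [-1.0]]), g.shape)
    num = np.conj(K) * np.fft.fft2(g) + beta * (np.conj(Dx) * np.fft.fft2(wx) + np.conj(Dy) * np.fft.fft2(wy))
    den = np.abs(K) ** 2 + beta * (np.abs(Dx) ** 2 + np.abs(Dy) ** 2)
    return np.real(np.fft.ifft2(num / den))


def apply_otf(otf: np.ndarray, img: np.ndarray) -> np.ndarray:
    """Circular convolution of img with the filter whose OTF is `otf`."""
    return np.real(np.fft.ifft2(otf * np.fft.fft2(img)))


# Usage: with a delta kernel and exact gradients, the u-step returns the image itself.
rng = np.random.default_rng(0)
u_true = rng.random((32, 32))
wx = apply_otf(psf2otf(np.array([[1.0, -1.0]]), u_true.shape), u_true)
wy = apply_otf(psf2otf(np.array([[1.0], [-1.0]]), u_true.shape), u_true)
u = solve_u(u_true, np.array([[1.0]]), wx, wy, beta=10.0)
```

The u-step is cheap because every operator in its normal equations is a convolution, so the linear system diagonalizes in the Fourier domain and is solved by one element-wise division.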

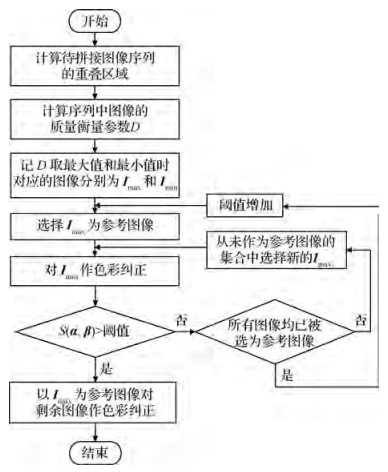

摘要:ObjectivePanorama scene reproduction, which is widely used in medicine, tourism, remote sensing, and photography, is a technique utilized to produce a large or wide-angle scene with multiple overlapping images. Color correction and image blending are the key issues in the generation of high-quality panoramas. The generation efficiency and quality are primarily determined by selecting a reference image for color correction and an image blending algorithm. To determine a reference image, state-of-the-art methods compare the similarity of all target images, which is computationally complex with poor real-time responsiveness. In addition, a contradiction exists between the quality and speed in image blending. Therefore, a high-quality panoramic image should be rapidly generated to reproduce panoramic scenes.MethodA key task for a panorama stitching technique is finding the optimal seams in the overlap region of the source image, merging them along the seam, and minimizing the seam artifact. This study presents an efficient method in selecting a reference image for color correction and a partition blending method that differentiates the overlapping area. The color and illumination of images are inconsistent due to the difference in camera equipment, shooting angle, and shooting time, which affect the visual quality of the panorama. Color correction is applied to reduce the color difference between images and accelerate the optimal seam search and image blending processes. Color correction uses a reference image to adjust the color style of other images. In other words, the reference image determines the quality of the final panorama. The panoramic image may suffer from blurriness, inappropriate brightness, and low contrast when the quality of the selected reference image is poor. 
The quality of an image is usually inversely proportional to the stability of an image; thus, a greedy strategy is adopted to determine the best reference image and reduce the computational complexity. The worst quality image is selected as the baseline, which is determined based on the relative standard deviation of the image pixels of adjacent images. The similarity between the original and corrected baselines is used to determine whether an input image is appropriate to be used as the reference image, such that the complexity for selecting a reference image is remarkably reduced while guaranteeing the need for color correction. An effective color correction method can achieve a smooth transition of the panoramic image. However, an unnatural transition along the seam is observed. Image blending is required to conceal the artifacts, in which Poisson and linear blending are usually used. Linear blending is simple and fast. However, the weakness of linear blending is that stitching artifacts may be visible after the blending. In comparison with linear blending, Poisson blending is more effective although it is more time-consuming than the former. Partition blending is proposed to solve the following problems. The overlapping region is divided into seam and non-seam regions, Poisson blending is performed in the seam region, and linear correction is conducted in the non-seam region to obtain a high-quality image. A simple point-light source is added to solve the light inconsistency generated by the aforementioned processes and improve the quality of the panorama.ResultSubjective and objective evaluations show interesting results for the proposed method. For the subjective evaluation, the methods can produce a panoramic scene with consistent color styles and can maintain the original details. For the objective evaluation, the structural similarity of the image after color correction is controlled between 0.85 and 0.99, the time complexity is reduced to O(

Abstract

Objective: Panoramic scene reproduction, which is widely used in medicine, tourism, remote sensing, and photography, produces a large or wide-angle scene from multiple overlapping images. Color correction and image blending are the key issues in generating high-quality panoramas, and generation efficiency and quality are primarily determined by the choice of reference image for color correction and of the image blending algorithm. To determine a reference image, state-of-the-art methods compare the similarity of all target images, which is computationally expensive and responds poorly in real time. In addition, image blending involves a trade-off between quality and speed. Therefore, a method is needed to generate high-quality panoramic images rapidly.

Method: A key task in panorama stitching is to find the optimal seams in the overlap regions of the source images, merge the images along the seams, and minimize seam artifacts. This study presents an efficient method for selecting a reference image for color correction and a partition blending method that treats different parts of the overlap region differently. Differences in camera equipment, shooting angle, and shooting time make the color and illumination of the images inconsistent, which degrades the visual quality of the panorama. Color correction reduces the color differences between images and accelerates the optimal seam search and image blending. It uses a reference image to adjust the color style of the other images; in other words, the reference image determines the quality of the final panorama. If the selected reference image is of poor quality, the panorama may suffer from blurriness, inappropriate brightness, and low contrast. The quality of an image is usually inversely related to its stability; thus, a greedy strategy is adopted to determine the best reference image with reduced computational complexity. The worst-quality image, determined from the relative standard deviation of the pixels of adjacent images, is selected as the baseline. The similarity between the original and color-corrected baselines is then used to decide whether an input image is suitable as the reference image, which remarkably reduces the complexity of reference image selection while still meeting the requirements of color correction. Effective color correction gives the panorama smooth transitions, but unnatural transitions can still appear along the seam. Image blending is required to conceal these artifacts, and Poisson blending and linear blending are the usual choices. Linear blending is simple and fast, but stitching artifacts may remain visible afterward; Poisson blending is more effective but more time-consuming. Partition blending is proposed to resolve this trade-off: the overlap region is divided into seam and non-seam regions, Poisson blending is performed in the seam region, and linear blending is applied in the non-seam region to obtain a high-quality image. Finally, a simple point light source is added to compensate for the illumination inconsistency introduced by the preceding steps and improve the quality of the panorama.

Result: Subjective and objective evaluations show favorable results for the proposed method. Subjectively, the method produces panoramic scenes with consistent color styles while preserving the original details. Objectively, the structural similarity of the images after color correction stays between 0.85 and 0.99, and the time complexity is reduced to O($n$) from O($n^2$) …

Keywords: panoramic scene reproduction; reference image; color correction; image blending; region division; local light
Updated: 2024-05-07
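The partition blending step described in the panorama abstract above can be sketched for two already aligned grayscale images on a shared canvas. Assumptions not taken from the paper: the seam is a straight vertical column (the paper searches for an optimal seam), the seam region is a fixed-width band around it, the linear blend is a left-to-right ramp across the whole overlap, and the Poisson equation in the band is solved with plain Jacobi iterations, using the linear-blend values on the band border as boundary conditions. Color correction and the point light source are not included, and all names are illustrative.

```python
import numpy as np


def partition_blend(left, right, x0, x1, seam, band, iters=500):
    """Linear blending in the non-seam part of the overlap [x0, x1), Poisson blending in
    the band of columns [seam - band, seam + band]. `left` is valid for columns < x1 and
    `right` for columns >= x0; both share the same (h, w) canvas shape."""
    left, right = np.asarray(left, dtype=float), np.asarray(right, dtype=float)
    c0, c1 = seam - band, seam + band + 1  # Poisson region columns [c0, c1)
    assert x0 < c0 and c1 < x1, "the seam band must lie strictly inside the overlap"
    out = left.copy()
    out[:, x1:] = right[:, x1:]

    # Non-seam region: linear ramp from `left` (weight 1) to `right` (weight 1).
    alpha = (np.arange(x0, x1) - x0 + 0.5) / (x1 - x0)
    out[:, x0:x1] = (1 - alpha) * left[:, x0:x1] + alpha * right[:, x0:x1]

    def laplacian(img):
        """4 * centre - 4-neighbour sum on the band's interior rows."""
        return (4 * img[1:-1, c0:c1] - img[:-2, c0:c1] - img[2:, c0:c1]
                - img[1:-1, c0 - 1:c1 - 1] - img[1:-1, c0 + 1:c1 + 1])

    # Guidance: Laplacian of `left` left of the seam, of `right` from the seam on.
    cols = np.arange(c0, c1)
    guidance = np.where(cols < seam, laplacian(left), laplacian(right))

    # Seam region: Jacobi iterations for 4 f_p - sum(f_q) = guidance_p, with the
    # linear-blend values on the band border and the top/bottom rows held fixed.
    f = out.copy()
    for _ in range(iters):
        neighbours = f[:-2, c0:c1] + f[2:, c0:c1] + f[1:-1, c0 - 1:c1 - 1] + f[1:-1, c0 + 1:c1 + 1]
        f[1:-1, c0:c1] = (neighbours + guidance) / 4
    return f


# Usage: two noisy canvases with different brightness, overlapping in columns 20..39.
rng = np.random.default_rng(0)
canvas_left = 0.2 + 0.01 * rng.standard_normal((40, 60))
canvas_right = 0.8 + 0.01 * rng.standard_normal((40, 60))
pano = partition_blend(canvas_left, canvas_right, x0=20, x1=40, seam=30, band=4)
```

Only the narrow band pays for the iterative Poisson solve, while the rest of the overlap uses the cheap linear ramp, which mirrors the quality-versus-speed split the abstract motivates.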

Image Processing and Coding

Abstract:
Objective: As an important part of face recognition, face detection has attracted considerable attention in computer vision and has been widely investigated. Face detection determines the location and size of human faces in an image. Traditional face detection methods perform poorly under multi-pose changes and incomplete facial features. Modern face detectors can easily detect near-frontal faces. Recent research has therefore focused on uncontrolled face detection, where factors such as multi-pose changes and incomplete facial features cause large visual variations in face appearance and can severely degrade detector robustness. A convolutional neural network (CNN) can automatically select facial features, rapidly discard large amounts of non-face background information, and achieve good detection results. However, a single CNN must perform three functions at once: facial feature extraction, feature dimension reduction to lower computational complexity, and feature classification. This leads to a complex network structure, limited detection speed, and overfitting. To solve these problems, this study presents a face detection method based on a two-layer cascaded convolutional neural network (TC_CNN).
Method: First, a two-layer CNN model is constructed. The first CNN extracts features from the face image, reduces their dimension with max pooling, and outputs multiple candidate face windows. Second, these windows are fed to the second CNN for fine feature extraction, and a new feature map is obtained by pooling. Finally, a fully connected layer classifies each window. A window classified as a face is returned as a detection; otherwise, it is discarded. Non-maximum suppression then selects the optimal detection window. The location of this window gives the size and position of the face in the input image, which completes the detection process. For training, 10 000 images from the Labeled Faces in the Wild dataset, covering near-frontal faces, multi-pose changes, and incomplete facial features, are used as positive samples, and 1 000 images are used as negative samples. For testing, the widely used FDDB benchmark is used to evaluate the model on four indexes: detection rate, false detection rate, missed detection rate, and detection time. TC_CNN is compared with established face detection algorithms, namely AdaBoost, fast LBP, NPD+AdaBoost, and SPP+CNN.
Result: Images with multi-pose changes and incomplete facial features are selected from the FDDB dataset for testing. TC_CNN reaches a detection rate of up to 96.39%, a false detection rate as low as 3.78%, and a detection time of 0.451 s. Its detection rate is 7.63% higher than that of the traditional cascade-based AdaBoost method, 3.57% higher than fast LBP, 0.50% higher than NPD+AdaBoost, and 6.04% higher than SPP+CNN. Its false detection rate is 2.44% lower than that of AdaBoost, 4.47% lower than fast LBP, 0.59% lower than NPD+AdaBoost, and 5.09% lower than SPP+CNN. Its detection efficiency is markedly higher than that of SPP+CNN and slightly higher than those of AdaBoost, fast LBP, and NPD+AdaBoost. Compared with current methods, TC_CNN therefore raises the detection rate while preserving efficiency. To verify robustness under multi-pose changes and incomplete facial features, representative FDDB images of these two cases are used in four groups of comparative experiments: multi-pose changes in single-face images, multi-pose changes in multi-face images, incomplete facial features in single-face images, and incomplete facial features in multi-face images. Under all four interference conditions, TC_CNN is more effective and robust than the four compared algorithms.
Conclusion: TC_CNN achieves accurate face detection under multi-pose changes and incomplete facial features. It obtains a high detection rate, effectively reduces the false detection rate, and shows good robustness and generalization. The method draws on the cascade concept of AdaBoost and cascades two CNNs. This avoids the complex structure that results when one network must extract, reduce, and classify features simultaneously, which easily causes overfitting and related problems. However, choosing the number of cascaded CNNs and their parameters remains difficult, which limits further gains in model performance and detection quality. Future work will determine the number and parameters of the cascaded CNNs to optimize the model and will attempt to locate the size and position of faces more accurately.
Keywords: face detection; convolutional neural network; ten-fold cross validation; two-layer cascaded convolutional neural network; max pooling
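
The non-maximum suppression step in the Method can be illustrated with a generic greedy NMS sketch. The box format (x1, y1, x2, y2), the helper names `iou` and `nms`, and the 0.3 overlap threshold are all assumptions for illustration. The abstract does not state how TC_CNN implements NMS or which threshold it uses.

```python
def iou(a, b):
    """Intersection-over-union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.3):
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # A window survives only if no higher-scoring kept window
        # overlaps it by more than the threshold.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


windows = [(10, 10, 60, 60), (12, 12, 62, 62), (100, 100, 150, 150)]
scores = [0.9, 0.8, 0.7]
print(nms(windows, scores))
```

The first two windows cover almost the same face, so only the higher-scoring one is kept. The distant third window survives as a separate detection.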

Abstract:
Objective: The texture captured in a 2D facial image differs from that of a 3D face surface, and 2D texture is strongly affected by variations in illumination and make-up. These issues make 3D local texture features important for face recognition. 3D texture, which reflects the repeatable patterns of a 3D facial surface, is a completely different concept from 2D texture. Owing to the flexibility of the 3D mesh, 3D texture can preserve the photometric information of an individual in addition to the geometric information. Therefore, two kinds of 3D texture, namely 3D geometric texture and 3D photometric texture, should be investigated.
Method: This study investigates a novel framework called mesh-LBP for representing 3D facial texture in detail. The focus is on improving this operator and on its statistics rather than on comparing final recognition rates with state-of-the-art methods. First, raw 3D facial data contain spikes, holes, and large background areas, so general preprocessing, including face detection, outlier removal, and hole filling, is performed before feature extraction and classification. Specifically, the facial surface is cropped with a common scheme: the points of the raw face model that lie within a sphere centered at the nose tip with a fixed radius are extracted as the detected facial area. An outlier is defined as a point whose number of neighboring points is below a threshold, and a mean filter smooths the facial surface once outliers are detected. Outlier removal usually leaves holes in the 3D facial data, so bicubic interpolation is used to fill them. Second, the original mesh-LBP operator is constructed, together with three improved operators based on thresholding schemes, called mesh-tLBP, mesh-MBP, and mesh-LTP. The mesh-tLBP adds a small threshold to the mesh-LBP comparison. The mesh-MBP replaces the value of the center facet with the mean value of its neighborhood. The mesh-LTP adds an extra coding unit to capture subtle code changes of mesh-LBP. The first two improvements make mesh-LBP more robust to noise and facial changes, whereas the last one improves its ability to capture facial details. Third, three statistical methods are used to form the final facial representation: the naïve holistic histogram, the spatially enhanced histogram, and the holistic coded image. The naïve holistic histogram applies no further processing and directly counts the frequency of the computed LBP patterns. The spatially enhanced histogram divides the 3D facial surface into blocks, computes a frequency histogram for each block, and concatenates the histograms into a description of the whole face. The holistic coded image uses the computed LBP patterns directly. Different faces yield different numbers of patterns, so the patterns are first normalized to the same size. Finally, 615 neutral scans under different illumination conditions from the CASIA3D face database are used as the training set. Recognition performance is evaluated with a simple minimum distance classifier on 615 scans with expression variations and 1 230 scans with pose variations.
Result: A comparison of the texture features of facial surfaces and common object surfaces shows that facial texture is completely different from ordinary texture: it is irregular and difficult to describe. In addition, 3D faces show smaller texture variations than 2D faces, which demonstrates the advantage of 3D data. Experiments on the two variants of mesh-LBP show that the mesh-LBP($\alpha_1$) … $\alpha_2$ … $\alpha_2$ …
Keywords: three-dimensional texture; mesh-LBP; threshold scheme; statistical method; three-dimensional face recognition
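
The abstract describes the thresholding variants only in words. The sketch below shows one plausible reading on a single ring of facets. It makes several assumptions: each facet carries a scalar value (for example a geometric or photometric quantity), the ring is already ordered, bits are weighted by powers of two, and mesh-LTP follows the usual local ternary pattern split into an upper and a lower binary code. It does not reproduce the original mesh-LBP ring construction or the $\alpha_1$/$\alpha_2$ weightings, and all function names are hypothetical.

```python
from collections import Counter


def tlbp_code(center, ring, t=0.0):
    """mesh-LBP (t = 0) or mesh-tLBP (t > 0) code of one central facet.

    ring holds the scalar values of the facets on the ordered ring around
    the central facet; bit k is set when ring[k] - center >= t.
    Assumption: bit k is weighted by 2**k.
    """
    return sum(1 << k for k, v in enumerate(ring) if v - center >= t)


def mbp_code(ring):
    """mesh-MBP sketch: compare the ring against its neighborhood mean."""
    mean = sum(ring) / len(ring)  # assumption: the center facet is excluded
    return tlbp_code(mean, ring)


def ltp_codes(center, ring, t):
    """mesh-LTP sketch: ternary coding split into (upper, lower) codes."""
    upper = sum(1 << k for k, v in enumerate(ring) if v - center >= t)
    lower = sum(1 << k for k, v in enumerate(ring) if v - center <= -t)
    return upper, lower


def holistic_histogram(codes, n_bins):
    """Naive holistic histogram: normalized frequency of each code."""
    counts = Counter(codes)
    total = len(codes)
    return [counts[b] / total for b in range(n_bins)]


ring = [0.6, 0.4, 0.52, 0.9, 0.1, 0.5]  # toy values for a 6-facet ring
print(tlbp_code(0.5, ring), tlbp_code(0.5, ring, t=0.05))
print(mbp_code(ring), ltp_codes(0.5, ring, 0.05))
```

In this toy ring, the threshold in mesh-tLBP removes the bits set only by tiny differences (0.52 and 0.5 versus 0.5). This illustrates the intended robustness to small noise.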

Abstract:
Objective: Facial expression recognition is widely applied in commerce, security, and medicine, and rapid, accurate identification of facial expressions is of great significance for research and applications. Traditional machine learning methods, such as support vector machine (SVM), principal component analysis (PCA), and local binary pattern (LBP), have been used to identify facial expressions. However, these methods require manual feature extraction. During this process, heavy human intervention may hide some features or deliberately amplify others, which reduces accuracy. In recent years, convolutional neural networks (CNNs) have been widely used in image recognition owing to their good self-learning and generalization capabilities. Nevertheless, neural network training still faces problems such as difficult facial expression feature extraction and long training times. This study presents an expression recognition method based on a parallel CNN to address these problems.
Method: First, a series of preprocessing operations is applied to the facial expression images. An AdaBoost cascade classifier detects the face in the original image to remove the complex background. The face image is then compensated for illumination: histogram equalization stretches the image nonlinearly and redistributes its pixel values. Finally, affine transformation aligns the face. This preprocessing removes complex background effects, compensates for lighting, and corrects the face angle, yielding more accurate face regions than the original image. Then, a CNN with two parallel convolution and pooling units, designed to extract subtle expressions, is built for the facial expression images. The parallel unit is the core unit of the CNN and comprises convolutional layers, pooling layers, and ReLU activations. It has three channels, each with a different number of convolutional layers, pooling layers, and ReLU activations. Each channel therefore extracts different image features, and the extracted features are then fused. The second parallel unit applies convolution and pooling to the features extracted by the first unit, which reduces the feature dimension and shortens CNN training. Finally, the merged features are sent to a softmax layer for expression classification.
Result: The CK+ and FER2013 expression datasets, after preprocessing and data augmentation, are each divided into 10 equal parts. Training and testing are performed on the 10 parts, and the final accuracy is the average of the 10 results. The proposed method markedly increases accuracy and reduces time compared with traditional machine learning methods, such as SVM, PCA, and LBP (alone or combined), and with classical CNNs, such as AlexNet and GoogLeNet. It achieves accuracies of 94.03% on CK+ and 65.6% on FER2013, with iteration times of 0.185 s and 0.101 s, respectively.
Conclusion: This study presents a new parallel CNN structure that extracts facial expression features through three different convolution and pooling paths. Each path combines convolutional and pooling layers differently and therefore extracts different image features. These features are combined and passed to the next layer for processing. The design offers a new concept for building CNNs that extends network breadth while controlling depth, and it can extract many expression features that are otherwise ignored or difficult to extract. The CK+ and FER2013 datasets differ greatly in quantity, size, and resolution. Experiments on both show that the model extracts precise and subtle features of facial expression images in a relatively short time while maintaining the recognition rate.
Keywords: expression recognition; deep learning; convolutional neural network (CNN); parallel processing; image classification
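
The histogram equalization step in the preprocessing can be sketched as textbook global equalization on a flat list of 8-bit gray levels. This is an assumed, generic formulation, and the function name `equalize_histogram` is hypothetical. The abstract does not say which equalization variant or illumination compensation the authors used.

```python
from collections import Counter


def equalize_histogram(pixels, levels=256):
    """Global histogram equalization of a flat list of gray levels.

    Each level v is mapped through the cumulative histogram (CDF):
        v' = round((cdf(v) - cdf_min) * (levels - 1) / (n - cdf_min))
    which spreads the occupied levels over the full [0, levels - 1] range.
    """
    if not pixels:
        return []
    n = len(pixels)
    counts = Counter(pixels)
    cdf, running = {}, 0
    for v in sorted(counts):  # accumulate counts in increasing gray level
        running += counts[v]
        cdf[v] = running
    cdf_min = cdf[min(counts)]
    denom = n - cdf_min
    if denom == 0:  # constant image: nothing to stretch
        return list(pixels)
    lut = {v: round((c - cdf_min) * (levels - 1) / denom) for v, c in cdf.items()}
    return [lut[p] for p in pixels]


dim_face = [100, 101, 102, 103]  # a low-contrast toy "image"
print(equalize_histogram(dim_face))
```

The four nearly identical gray levels are spread across the full 0 to 255 range. This is the nonlinear stretch that makes faces captured under different lighting more comparable.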

Abstract:
Objective: A new straight-line matching algorithm based on line band descriptors (LBDs) combined with multiple constraints is proposed. It addresses two typical problems of descriptor-based line matching algorithms. First, they make insufficient use of the information between matching lines, which provides effective geometric constraints. Second, the matches are vulnerable to low texture and scale changes in the images.
Method: Straight line segments extracted with the line segment detector (LSD) serve as matching primitives. A triangulation network built from SIFT matching points then serves as the constraint region for determining the candidate lines in the search image. After the candidates are selected, a support region for descriptor construction is built as follows. In the reference image, a rectangular support region is established with the target line segment as its central axis. The corresponding support region of each candidate segment in the search image is then determined with epipolar constraints. These constraints are computed from the endpoints of the target segment and the four corner points of its support region in the reference image. Affine transformation gives the support regions of the target and candidate segments the same size. Each support region is then divided into a set of bands of equal size, each as long as the line segment, and the LBD of the segment is computed from the information in each band. The descriptor is based on pixel gradient values in four directions, and a Gaussian function controls the weight coefficient of each band along the direction perpendicular to the segment. In this way, the LBDs of the target and candidate segments are constructed in sequence. The LBDs are then normalized to unit vectors to reduce the influence of nonlinear illumination changes, giving a 40-dimensional descriptor. The Euclidean distance between the target descriptor and each candidate descriptor serves as the similarity measure. The candidate that satisfies the nearest neighbor distance ratio (NNDR) criterion is taken as the matching line. The minimum Euclidean distance and NNDR thresholds directly affect matching performance, so they must be determined through extensive experiments. Finally, the angle between each matched line and its corresponding epipolar line is used as a constraint to verify the matches and determine the final corresponding lines.
Result: Three typical groups of close-range image pairs with viewing-angle, rotation, and scale changes form the experimental dataset. Compared with other line segment matching algorithms, the proposed algorithm performs better across these typical close-range image pairs. It obtains 1.06 to 1.41 times as many successful matches as other straight-line matching algorithms and improves matching accuracy by 2.4% to 11.6%. The proposed algorithm is more time-consuming. However, the number of matched lines, matching accuracy, and running time, taken together, show that it is robust and produces accurate, reliable matching results.
Conclusion: The constructed LBDs combined with multiple constraints are stable for line matching in close-range images with viewing-angle, rotation, and scale changes. They reduce the instability that other descriptors suffer in line matching because of numerous factors.
Keywords: multiple constraints; line band descriptor; straight line matching; nearest neighbor distance ratio; angle constraint
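
The final matching decision in the Method can be sketched as follows: unit-normalized descriptors, Euclidean distance, a minimum-distance threshold, and the nearest neighbor distance ratio test. The sketch uses short toy vectors instead of 40-dimensional LBDs and omits the epipolar angle check. Its thresholds (`max_dist=0.6`, `ratio=0.8`) are placeholder assumptions, because the source says these values must be tuned experimentally. The helper names `unit` and `match_line` are hypothetical.

```python
import math


def unit(vec):
    """Scale a descriptor to unit Euclidean length (zero vector unchanged)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else list(vec)


def match_line(target, candidates, max_dist=0.6, ratio=0.8):
    """Index of the candidate descriptor matching target, or None."""
    t = unit(target)
    # (distance, index) pairs sorted from nearest to farthest candidate.
    ranked = sorted((math.dist(t, unit(c)), i) for i, c in enumerate(candidates))
    if not ranked or ranked[0][0] > max_dist:
        return None  # even the nearest candidate is too far away
    if len(ranked) > 1 and ranked[0][0] >= ratio * ranked[1][0]:
        return None  # ambiguous: the second-nearest is almost as close
    return ranked[0][1]


target = [1.0, 0.0, 0.0]
candidates = [[0.9, 0.1, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(match_line(target, candidates))
```

The ratio test rejects a target whose two best candidates are nearly equidistant. This is how NNDR trades some recall for fewer false matches in repetitive or low-texture scenes.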

摘要:ObjectiveA new straight-line matching algorithm for line band descriptors (LBDs) combined with multiple constraints is proposed to solve the typical problems in many straight-line matching algorithms that use descriptors. Such problems include insufficient information utilization between matching straight lines, which are effective geometric constraints, and vulnerability of matching straight lines to the influence of low texture and scale change of images during the matching process.MethodStraight line segments are extracted by using a line segment detector method as matching elements, and a corresponding triangulation network established by using SIFT matching points is then used as the constraint region to determine the candidate lines in the searched image. After the candidate lines are selected, a region for band descriptor construction is constructed. The construction method is described as follows. A rectangular support region, in which the target straight line segment is the central axis in the region, is established in the reference image. Then, the corresponding support region of the candidate straight line segment in the searched image is determined based on epipolar constraints, which is calculated by the endpoints of the target straight line segment and four corner points of its support region in the reference image. The support regions of the target and candidate straight line segments are constructed with the same size by utilizing affine transformation. After completing the support regions of straight line segments, the regions are divided into a set of bands, where each band has the same size and the length of the band equals the length of the straight line segment, and the LBDs of straight line segment are obtained by calculating the information of each band in the support region. 
The descriptor is computed from pixel gradients in four directions, and the weight of each band along the direction perpendicular to the line in the support region is controlled by a Gaussian function. In this way, the LBDs of the target and candidate segments are constructed in turn. The resulting descriptor, a 40-dimensional vector, is normalized to unit length to reduce the influence of nonlinear illumination changes. The Euclidean distance between the descriptor of the target segment and that of each candidate segment serves as the similarity measure, and the candidate that satisfies the nearest neighbor distance ratio (NNDR) criterion on these distances is accepted as the matching line. The minimum Euclidean distance threshold and the NNDR threshold directly affect matching performance, so both must be determined through extensive experiments. Finally, the angle between each matched line and its corresponding epipolar line is used as a constraint to verify the matches and determine the final corresponding lines.
Result: Three typical groups of close-range image pairs with angle, rotation, and scale changes serve as the experimental dataset. Compared with other straight line segment matching algorithms, the proposed algorithm is better suited to these different typical close-range image pairs. The main results are as follows.
The proposed algorithm obtains 1.06 to 1.41 times as many correctly matched lines as the other straight-line matching algorithms and improves matching accuracy by 2.4% to 11.6%. Although it is more time-consuming, a combined assessment of the number of matched lines, matching accuracy, and running time shows that it is robust and yields accurate and reliable line matches.
Conclusion: The constructed LBDs combined with multiple constraints are stable for matching lines in close-range images with angle, rotation, and scale changes, and they reduce the instability that other descriptors show under these factors.
Keywords: multiple constraints; line band descriptor; straight line matching; nearest neighbor distance ratio; angle constraint
Updated: 2024-05-07
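The descriptor and matching steps from the Method section of this abstract can be illustrated with a simplified NumPy sketch. It assumes the support region has already been resampled into a line-aligned grid of `n_bands * band_width` rows (across the line) by the segment length in columns, with gradient components `d_perp` (perpendicular to the line) and `d_par` (along it). It also assumes five bands, each contributing the mean and standard deviation of four directional gradient sums, which yields a 40-dimensional vector. The Gaussian width, band width, and both thresholds are placeholder values, not the paper's, and the epipolar angle check is omitted.

```python
import numpy as np


def band_descriptor(d_perp, d_par, n_bands=5, band_width=7):
    """Simplified LBD-style descriptor for one line segment.

    d_perp, d_par: gradients perpendicular / parallel to the line, sampled on a
    line-aligned grid of shape (n_bands * band_width, segment_length).
    Returns a unit vector of length 8 * n_bands (40 for five bands).
    """
    rows = n_bands * band_width
    if d_perp.shape != d_par.shape or d_perp.shape[0] != rows:
        raise ValueError("gradient arrays must have n_bands * band_width rows")
    # Gaussian weight for each row, peaking at the line (the central row).
    offsets = np.arange(rows) - (rows - 1) / 2.0
    sigma = 0.5 * (rows - 1)  # assumed width
    weight = np.exp(-offsets**2 / (2.0 * sigma**2))[:, None]
    wp, wl = weight * d_perp, weight * d_par
    # Four directional sums per row: positive and negative parts of each component.
    row_sums = np.stack([
        np.clip(wp, 0, None).sum(axis=1), np.clip(-wp, 0, None).sum(axis=1),
        np.clip(wl, 0, None).sum(axis=1), np.clip(-wl, 0, None).sum(axis=1),
    ], axis=1)  # shape (rows, 4)
    # Each band contributes the mean and standard deviation of its rows.
    bands = row_sums.reshape(n_bands, band_width, 4)
    desc = np.concatenate([bands.mean(axis=1), bands.std(axis=1)], axis=1).ravel()
    # Unit normalization reduces the effect of illumination changes.
    norm = np.linalg.norm(desc)
    return desc / norm if norm > 0 else desc


def nndr_match(target, candidates, max_dist=0.8, ratio=0.8):
    """Index of the candidate accepted by the NNDR test, or None.

    The nearest candidate must lie within max_dist, and its distance must be
    less than ratio times the distance of the second-nearest candidate.
    """
    candidates = np.asarray(candidates, dtype=float)
    if candidates.size == 0:
        return None
    dist = np.linalg.norm(candidates - np.asarray(target, dtype=float), axis=1)
    order = np.argsort(dist)
    best = order[0]
    if dist[best] > max_dist:
        return None
    if len(dist) > 1 and dist[best] >= ratio * dist[order[1]]:
        return None
    return int(best)
```

In a full pipeline, `nndr_match` would be called once per target segment with the descriptors of the candidates inside its triangulation constraint region, and the surviving pairs would then be checked with the epipolar angle constraint.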

Image Analysis and Recognition


Abstract:
Objective: Point cloud object recognition frameworks generally consist of two stages: an offline stage that constructs a model library and an online stage that recognizes objects by nearest neighbor search. Traditional methods build the model library from global surfaces, which makes recognition sensitive to occlusion and inaccurate segmentation. This study focuses on the offline stage and presents a novel model library construction method.
Method: The proposed method simulates possible occlusions and adds point clouds with simulated occlusions to the model library to alleviate the influence of occlusion and inaccurate segmentation. First, a CAD model is placed at the center of an icosahedron, and multiple virtual cameras are used to capture partial point clouds of the model. For each partial point cloud, a local coordinate system is constructed by principal component analysis (PCA), and the point cloud is aligned with this coordinate system, which makes the method invariant to rigid transformations. Second, several direction vectors are derived from the local coordinate system, and each partial point cloud is split into multiple subparts according to its extent along each direction vector. These subparts simulate different degrees of occlusion and contain both global and local surfaces of the partial point cloud. Third, a simple clustering method extracts the largest cluster of each subpart, which also removes outlier points. The largest cluster is added to the model library only if it contains enough points, which reduces memory requirements and shortens the nearest neighbor search. Even after clusters with few points are removed, however, the library still contains redundant clusters with similar surfaces.
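The first offline steps described above (PCA alignment, occlusion-simulating subparts, and largest-cluster filtering) can be sketched with NumPy. The splitting rule, which keeps the part of the cloud within a fraction of its extent along each of the six local axis directions, as well as the clustering radius and the point-count threshold, are assumptions for illustration; `build_library_entries` is a hypothetical driver, and the virtual-camera rendering from the icosahedron is not shown.

```python
import numpy as np
from collections import deque


def pca_align(points):
    """Center a point cloud and express it in its PCA (local) frame."""
    centered = points - points.mean(axis=0)
    _, evecs = np.linalg.eigh(np.cov(centered.T))
    axes = evecs[:, ::-1].copy()   # principal axes, largest variance first
    if np.linalg.det(axes) < 0:    # keep a right-handed (proper) rotation
        axes[:, 2] *= -1
    # Each axis is only defined up to sign; a full method must fix the signs.
    return centered @ axes


def occlusion_subparts(aligned, fractions=(0.5, 0.75)):
    """Keep the points within a fraction of the cloud's extent along each of
    the six local directions (+/-x, +/-y, +/-z), mimicking partial occlusion."""
    subparts = []
    for axis in range(3):
        for sign in (1.0, -1.0):
            proj = sign * aligned[:, axis]
            lo, hi = proj.min(), proj.max()
            for f in fractions:
                subparts.append(aligned[proj <= lo + f * (hi - lo)])
    return subparts


def largest_cluster(points, radius):
    """Euclidean clustering by flood fill (O(n^2) memory); largest cluster."""
    if len(points) == 0:
        return points
    d2 = ((points[:, None, :] - points[None, :, :]) ** 2).sum(axis=-1)
    adjacent = d2 <= radius ** 2
    unvisited = np.ones(len(points), dtype=bool)
    best = []
    for seed in range(len(points)):
        if not unvisited[seed]:
            continue
        unvisited[seed] = False
        queue, members = deque([seed]), []
        while queue:
            i = queue.popleft()
            members.append(i)
            for j in np.flatnonzero(adjacent[i] & unvisited):
                unvisited[j] = False
                queue.append(j)
        if len(members) > len(best):
            best = members
    return points[best]


def build_library_entries(partial_cloud, radius=0.05, min_points=50):
    """Hypothetical driver: keep subparts whose largest cluster is big enough."""
    aligned = pca_align(partial_cloud)
    entries = []
    for sub in occlusion_subparts(aligned):
        cluster = largest_cluster(sub, radius)
        if len(cluster) >= min_points:
            entries.append(cluster)
    return entries
```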
Finally, an iterative closest point (ICP)-based algorithm removes point clouds with similar surfaces, further reducing memory requirements. As a result, each CAD model is described by only dozens of subparts.
Result: Experiments on two public datasets show that the proposed method improves recognition accuracy to varying degrees. On the UWAOR dataset, recognition performance improves markedly with five types of point cloud descriptors; in particular, the proposed method improves recognition performance by 0.208 with the GASD descriptor and by 0.173 with the ROPS descriptor (…)
Keywords: point cloud object recognition; offline stage; model library construction; point cloud feature extraction; global and local surfaces
Updated: 2024-05-07
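The ICP-based pruning of redundant library entries described in the Method section above can be sketched as follows. This is a minimal point-to-point ICP with brute-force nearest neighbors and an SVD (Kabsch) rigid fit, not the authors' implementation; the residual threshold `tol`, the iteration count, and the function names are hypothetical.

```python
import numpy as np


def best_rigid(src, dst):
    """Kabsch fit: rotation R and translation t minimizing ||R src + t - dst||."""
    cs, cd = src.mean(axis=0), dst.mean(axis=0)
    H = (src - cs).T @ (dst - cd)
    U, _, Vt = np.linalg.svd(H)
    # Correct for reflections so that R is a proper rotation.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    return R, cd - R @ cs


def icp_rmse(src, dst, iters=30):
    """Point-to-point ICP; RMS nearest-neighbor distance after alignment."""
    cur = np.asarray(src, dtype=float).copy()
    dst = np.asarray(dst, dtype=float)
    for _ in range(iters):
        d2 = ((cur[:, None, :] - dst[None, :, :]) ** 2).sum(axis=-1)
        nearest = dst[d2.argmin(axis=1)]      # current correspondences
        R, t = best_rigid(cur, nearest)
        cur = cur @ R.T + t                   # apply the rigid update
    d2 = ((cur[:, None, :] - dst[None, :, :]) ** 2).sum(axis=-1)
    return float(np.sqrt(d2.min(axis=1).mean()))


def deduplicate(clusters, tol):
    """Keep a cluster only if it differs (ICP residual > tol) from all kept ones."""
    kept = []
    for c in clusters:
        if all(icp_rmse(c, k) > tol for k in kept):
            kept.append(c)
    return kept
```

Because ICP only converges locally, this kind of check works best when the entries being compared are already expressed in similar local frames, as the PCA alignment step provides.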

Image Understanding and Computer Vision


Abstract:
Objective: Deep learning has been widely used in synthetic aperture radar (SAR) target recognition, and most studies address target recognition under the standard operating conditions (SOCs) of the MSTAR dataset. Recognizing target variants, such as the T72 subclasses, remains challenging because the differences among these targets are small. To preserve the input features of SAR images, this study designs a deep convolutional neural network (CNN) architecture for SAR target recognition with variants.
Method: The proposed network consists of one multiscale feature extraction module followed by several dense blocks and transition layers as proposed in DenseNet. The multiscale feature extraction module, placed at the bottom of the network, uses convolution kernels of sizes 1×1, 3×3, 5×5, 7×7, and 9×9 to extract rich spatial features. The 1×1 kernels preserve detailed information from the input image, while the larger kernels suppress the influence of speckle noise on the extracted features, since speckle noise is a major factor affecting recognition performance. To transfer information from the input image effectively and reuse the features learned by all layers, the later layers of the network are built from dense blocks and transition layers. After three dense blocks and transition layers, a full convolution layer transforms the learned features into vectors, and a softmax layer performs classification. Finally, the training datasets are augmented by translating the original images and adding speckle noise to them, and the model, implemented in TensorFlow, is trained on these samples.
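The data flow of the multiscale feature extraction module described above can be illustrated with a NumPy sketch. The paper implements the network in TensorFlow with learned weights; here random kernels stand in for trained ones, each scale uses a hypothetical `filters_per_scale` filters followed by a ReLU, and the branch outputs are concatenated along the channel axis. The ReLU and the concatenation are assumptions about how the branches are combined.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv2d_same(img, kernels):
    """Zero-padded 'same' 2-D cross-correlation of one channel with C kernels.

    img: (H, W); kernels: (C, k, k) with odd k. Returns (H, W, C).
    """
    k = kernels.shape[-1]
    padded = np.pad(img, k // 2)                   # zero padding keeps H x W
    windows = sliding_window_view(padded, (k, k))  # shape (H, W, k, k)
    return np.einsum("hwij,cij->hwc", windows, kernels)


def multiscale_block(img, sizes=(1, 3, 5, 7, 9), filters_per_scale=4, seed=0):
    """Apply parallel k x k convolutions and concatenate their feature maps."""
    rng = np.random.default_rng(seed)
    branches = []
    for k in sizes:
        kernels = rng.normal(scale=1.0 / k, size=(filters_per_scale, k, k))
        branches.append(np.maximum(conv2d_same(img, kernels), 0.0))  # ReLU
    return np.concatenate(branches, axis=-1)
```

With an 88×88 single-channel input and four filters per scale, the five branches produce an 88×88×20 feature map that would feed the first dense block.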
After the training datasets are augmented, the influence of input image resolution, target translation, and noise level on the recognition accuracy of the proposed network is evaluated, and the network's performance is compared with that of other deep learning models under SOCs.
Result: Input image resolution has a considerable influence on the recognition accuracy for the eight T72 subclasses, and accuracy improves considerably as the input resolution increases. Under SOC, by contrast, input resolution has minimal effect on recognition accuracy because the differences among the targets are large. The input size of the model is set to 88×88×1 so that target and shadow information is preserved during data augmentation. To verify the multiscale feature extraction module, different multiscale feature extraction strategies are tested; the proposed model achieves a classification accuracy of approximately 95.48% on the eight T72 subclasses. Beyond the SOC test samples, the classification accuracy of the model is also evaluated under target translation and different noise levels. The model achieves a recognition accuracy above 90% even when the target is displaced 16 pixels from the center of the original image. It still performs well at noise intensities of 0.5 and 1, but its accuracy declines markedly when the noise intensity exceeds 1. The average classification accuracies under target translation and under different noise levels reach 94.61% and 86.36%, respectively.
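The translation and speckle-noise augmentation described above can be sketched as follows. The sketch assumes 128×128 MSTAR image chips from which 88×88 patches are cropped around a shifted center. It also models speckle as multiplicative noise `img * (1 + n)`, with `n` drawn from a zero-mean Gaussian whose variance equals the noise intensity. The abstract does not define its noise model, so this choice and the function names are illustrative only.

```python
import numpy as np


def shifted_crop(img, out=88, max_shift=16, rng=None):
    """Crop an out x out patch whose center is shifted by up to max_shift pixels."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = img.shape
    dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
    # Clip so the patch always stays inside the image.
    top = int(np.clip(h // 2 + dy - out // 2, 0, h - out))
    left = int(np.clip(w // 2 + dx - out // 2, 0, w - out))
    return img[top:top + out, left:left + out]


def add_speckle(img, intensity, rng=None):
    """Multiplicative speckle (assumed model): img * (1 + n), n ~ N(0, intensity)."""
    rng = np.random.default_rng() if rng is None else rng
    noise = rng.normal(0.0, np.sqrt(intensity), size=img.shape)
    return img * (1.0 + noise)
```

For example, `add_speckle(shifted_crop(chip), 0.5)` would yield one translated, noisy 88×88 training sample at noise intensity 0.5.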
When the models are trained on the augmented datasets for 10-class target recognition under SOC (without and with variants), they achieve recognition accuracies of 99.38% (SOC1-10), 99.50% (SOC1-14), and 98.81% (SOC2), comparable to the performance of other deep models.
Conclusion: The proposed model makes use of the input information and the features of every convolutional layer, capturing the fine differences among targets in the images. It not only handles target recognition with variants but also achieves satisfactory recognition results under SOC.
Keywords: SAR target recognition; target variants; deep learning; multi-scale feature; DenseNet
Updated: 2024-05-07