Latest Issue

Volume 24, Issue 12, 2019


Abstract: Object tracking is a fundamental problem in computer vision that uses contextual information in a video or image sequence to predict and locate one or more targets. It is widely used in intelligent video surveillance, human-computer interaction, intelligent transportation, visual navigation, and many other areas. With the advent of the big data era and the emergence of deep learning methods, tracking performance has improved substantially. In this paper, we introduce the basic research framework of object tracking and review its history from the perspective of the observation model, and we argue that deep learning makes it possible to obtain a more robust observation model. We review the deep learning methods suited to object tracking in terms of deep discriminative models and deep generative models. We then classify and analyze existing deep object tracking methods from the perspectives of network structure, network function, and network training. In addition, we introduce several other families of deep object tracking methods, namely those based on the fusion of classification and regression, on reinforcement learning, on ensemble learning, and on meta-learning. We present the datasets commonly used for deep learning-based object tracking together with their evaluation methods. We also analyze and summarize the latest application scenarios of object tracking from the perspectives of mobile tracking systems and detection- and tracking-based systems. Finally, we analyze open problems in object tracking, including insufficient training data, real-time tracking, and long-term tracking, and we outline further research directions for deep object tracking.

Keywords: visual object tracking; deep neural network; correlation filter; deep Siamese network; reinforcement learning; generative adversarial network

Updated: 2024-05-07


Abstract: Depth estimation from a single image, a classical problem in computer vision, is important for scene reconstruction and for occlusion and illumination handling in augmented reality. This paper reviews the recent literature on single-image depth estimation and introduces the commonly used datasets and methods. By scene type, the datasets can be divided into indoor, outdoor, and virtual scenes. By mathematical model, monocular depth estimation methods can be divided into traditional machine learning-based methods and deep learning-based methods. Traditional machine learning-based methods use a Markov random field or conditional random field to model the depth relationships among the pixels of an image; within the maximum a posteriori (MAP) framework, depth is obtained by minimizing an energy function. Depending on whether the model contains parameters, traditional methods can be further divided into parametric and non-parametric learning methods. The former assume that the model contains unknown parameters, which are obtained during training. The latter infer depth by similarity retrieval over existing datasets, so no parameters need to be solved. In recent years, deep learning has advanced computer vision in many fields. The current state of research on deep learning-based monocular depth estimation in China and abroad is analyzed, together with the advantages and disadvantages of the methods. These methods are classified hierarchically in a bottom-up manner according to different classification criteria. Because the depth and semantics of an image are closely related, several works focus on multi-task joint learning. At the first level, single-image depth estimation methods are divided into single-task methods, which predict only depth, and multi-task methods, which predict depth and semantics simultaneously.
The second level contains absolute depth prediction methods and relative depth prediction methods. Absolute depth is the actual distance between an object in the scene and the camera, whereas relative depth concerns the relative distances between objects in the image. Given an arbitrary image, people are often better at judging the relative distances of objects in the scene. The third level consists of supervised regression methods, supervised classification methods, and unsupervised methods. For the single-image depth estimation task, most works focus on predicting absolute depth, and most early methods use a supervised regression model, in which the model regresses continuous depth values and the training data must contain depth labels. Based on the far-to-near ordering of depth in a scene, several studies have instead addressed depth estimation as a classification problem. Supervised learning methods require a depth label for each RGB image, and acquiring these labels usually requires a depth camera or radar. However, depth cameras have a limited range, and radar is expensive. Furthermore, the raw depth collected by a depth camera is usually sparse and does not precisely match the original image. Therefore, unsupervised depth estimation methods that do not need depth labels have become a research trend in recent years. The basic idea is to combine epipolar geometry, based on left-right consistency, with an autoencoder to obtain depth.

Keywords: machine learning; depth estimation; 3D reconstruction; deep learning

Updated: 2024-05-07
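The classification and unsupervised formulations above rest on two small pieces of arithmetic, sketched here as a hypothetical illustration rather than code from any reviewed method. The sketch assumes log-uniformly spaced depth bins (one common choice; the review does not specify a binning scheme) for turning depth regression into classification. It also uses the standard rectified-stereo relation, depth = focal length × baseline / disparity, which converts a disparity map predicted under left-right consistency into depth. All function names, the bin range, and the camera parameters are illustrative assumptions.

```python
import numpy as np


def log_depth_bins(d_min: float, d_max: float, k: int) -> np.ndarray:
    """Return k + 1 bin edges spaced uniformly in log-depth (assumed scheme)."""
    return np.exp(np.linspace(np.log(d_min), np.log(d_max), k + 1))


def depth_to_label(depth: np.ndarray, edges: np.ndarray) -> np.ndarray:
    """Map continuous depth values to class indices 0..k-1 for a classification loss."""
    # Only the interior edges are passed, so depths below edges[1] map to 0
    # and depths at or above edges[-2] map to k - 1.
    return np.digitize(depth, edges[1:-1])


def label_to_depth(labels: np.ndarray, edges: np.ndarray) -> np.ndarray:
    """Decode class indices to the geometric center of each log-spaced bin."""
    centers = np.sqrt(edges[:-1] * edges[1:])
    return centers[labels]


def disparity_to_depth(disparity: np.ndarray, focal_px: float,
                       baseline_m: float, eps: float = 1e-6) -> np.ndarray:
    """Rectified stereo: depth = focal * baseline / disparity (eps avoids divide-by-zero)."""
    return focal_px * baseline_m / np.maximum(disparity, eps)
```

For example, `log_depth_bins(1.0, 100.0, 2)` gives edges of approximately 1, 10, and 100, so a depth of 2 falls in class 0 and a depth of 50 in class 1. Log spacing gives nearby depths finer bins than distant ones.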

Abstract: Aerospace science and technology (S&T) is a direct indicator of comprehensive national power and S&T strength. Satellite remote sensing is one of the most direct and practical productive forces derived from aerospace S&T, and it comprises two broad stages: remote sensing data acquisition and dissemination, and data processing and information extraction. On the one hand, with the steady advancement of China's Civil Space Infrastructure, the capacity for satellite image acquisition has been greatly enhanced in both quality and quantity. On the other hand, the image processing platform is an essential piece of infrastructure for satellite remote sensing applications, and platform development is increasingly becoming an important factor constraining the application of satellite remote sensing and the development of spatial information-related business. This paper reviews the state of the art and analyzes future trends in the acquisition capacity and processing platforms of satellite remote sensing imagery. In terms of data acquisition and dissemination, international open remote sensing satellites and sensors, such as Terra/Aqua MODIS, Landsat, and Sentinel, have greatly broadened and deepened the applications of satellite remote sensing imagery. Open data sharing policies and standardized higher-level image products enable these public-good data to be used to analyze long-term geographical phenomena over large regions. Large commercial satellites, such as WorldView, Pleiades, and Radarsat, are operated by sizeable commercial remote sensing firms. Imagery from these large satellites helps promote the commercial value of satellite imagery in traditional industry applications, whereas small commercial satellite constellations, such as Flock, SkySat, and BlackSky, lower the barrier to more generalized, everyday applications. With the steady advancement of China's Civil Space Infrastructure, China's 27 civil remote sensing satellites in orbit can be broadly categorized into land, ocean, and atmosphere observation satellites.
Land observation satellites include the GaoFen, HuanJing, and ZiYuan series, whose onboard sensors acquire high-resolution visible and near-infrared, hyperspectral, thermal, and synthetic aperture radar (SAR) imagery. More than 30 small commercial satellites are in orbit, including the BeiJing, GaoJing, JiLin, and ZhuHai series. Despite substantial progress in China's capacity for remote sensing data acquisition and dissemination, distinct generation gaps remain, especially for new types of sensors such as polarization and electromagnetic monitoring sensors. Policy barriers, data standardization and quality, and data sharing models in the big data era are the main challenges for data sharing. Several efforts, including the Big Earth Data Science Engineering project, have been made to accelerate satellite remote sensing data sharing. In terms of satellite remote sensing processing platforms, well-known platforms such as ERDAS IMAGINE, ENVI, and PCI Geomatica lead development worldwide. Their leadership rests on four advantages. First, they are highly extensible, with flexible deployment environments, powerful secondary development capabilities, and seamless integration with GIS platforms. Second, they support the processing of multi-source and multi-format remote sensing data, where multi-source refers to optical, SAR, LiDAR, and hyperspectral data and multi-format refers to image, point cloud, and video data. Third, ERDAS IMAGINE and ENVI have begun to develop modules based on deep learning algorithms such as Faster R-CNN, motivated by the substantially higher accuracy of deep learning compared with traditional machine learning algorithms such as SVM. Finally, algorithms are integrated ever more tightly with hardware such as GPUs, DSPs, and FPGAs to keep improving data processing efficiency.
In China, common platforms such as IRSA, ImageInfo, Titan Image, and PIE focus mainly on satellite optical imagery processing, with limited support for multi-source data. Specialized software, including HypEYE and CAESAR, has been developed to meet the demands of hyperspectral and SAR image processing. Over the last 10 years, cloud computing technology has been introduced into remote sensing image processing platforms because it can provide one-stop geospatial services by integrating remote sensing data, information products, application software, and computing and storage resources. Google Earth Engine, Data Cube, ENVI Service Engine, and ERDAS APOLLO are successful examples, and several similar platforms are available in China. China's self-developed remote sensing image processing platforms are not yet competitive with their international counterparts, owing to a lack of independent innovation and of a sustainable profit model. Four evident trends can be observed in satellite remote sensing in the era of big data and artificial intelligence. First, small satellite constellations accelerate the industrialization and popularization of satellite remote sensing, although improving geometric and radiometric accuracy remains the bottleneck. Second, autonomous, intelligent satellites capable of adaptively optimizing imaging parameters and of thoroughly perceiving objects and the environment onboard are a future direction of remote sensing, and such intelligent satellites will be an essential component of collaborative unmanned systems. Third, the transformation from raw digital number (DN) values, which carry no semantic meaning, to semantic object information based on artificial intelligence techniques will greatly improve both the quantity and quality of the information provided by satellite remote sensing.
Finally, the integration of positioning, navigation, and timing, remote sensing, and communication platforms and signals will enhance remote sensing capability by providing application-oriented solutions.

Keywords: satellite remote sensing; image processing; cloud computing; remote sensing application

Updated: 2024-05-07

Review


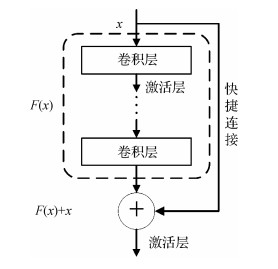

Abstract:

Objective: Color constancy is the ability of the human visual system to perceive an object as having a consistent color under varying illuminants. It is an important prerequisite for high-level tasks such as recognition, segmentation, and 3D vision. In computer vision, the goal of computational color constancy is to remove illuminant color casts and obtain accurate color representations of images. Illuminant estimation is therefore an important means of achieving computational color constancy. It is a difficult, underdetermined problem because the observed image color depends on unknown factors such as the scene illuminant and object reflectance. Illuminant estimation methods fall into two classes: statistics-based (or static) methods and learning-based methods. Statistics-based methods estimate the illuminant from statistical properties of the image (e.g., reflectance distributions). Learning-based methods learn a model from training images and then use it to estimate the illuminant. Convolutional neural networks (CNNs) have proven very effective for illuminant estimation, and CNN-based methods have achieved many competitive results. In this study, we propose a CNN-based illuminant estimation algorithm that uses deep residual learning to improve network accuracy and a patch selection network to overcome the color ambiguity of local patches.

Method: We uniformly sample local patches from the image, estimate the local illuminant of each patch individually, and combine the local illuminants into a global illuminant estimate for the entire image. We use a patch size of 64×64, which keeps the local illuminant estimates accurate and provides sufficient training inputs without data augmentation. The proposed approach includes two residual networks: the illuminant estimation net (IEN) and the patch selection net (PSN).
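The patch-based pipeline above can be sketched as follows. This is a hypothetical illustration under stated assumptions, not the authors' Caffe implementation. Patches are taken on a non-overlapping 64×64 grid. The per-patch estimator is a stand-in (a gray-world average), not the IEN. Local estimates are combined by a normalized mean. The abstract does not say how local illuminants are combined, so `combine_local_estimates` and its optional `keep` mask, which mimics discarding patches flagged by a selector such as PSN, are assumptions.

```python
import numpy as np


def sample_patches(img: np.ndarray, size: int = 64) -> np.ndarray:
    """Split an H x W x 3 image into non-overlapping size x size patches (row-major)."""
    h, w, c = img.shape
    rows, cols = h // size, w // size
    # Crop to a whole number of patches, then reshape into a patch grid.
    img = img[: rows * size, : cols * size]
    grid = img.reshape(rows, size, cols, size, c).swapaxes(1, 2)
    return grid.reshape(rows * cols, size, size, c)


def gray_world_estimate(patch: np.ndarray) -> np.ndarray:
    """Stand-in local estimator (gray world): mean RGB, normalized to unit length."""
    rgb = patch.reshape(-1, 3).mean(axis=0)
    return rgb / max(np.linalg.norm(rgb), 1e-12)


def combine_local_estimates(estimates: np.ndarray, keep=None) -> np.ndarray:
    """Average unit-length local estimates, optionally only over the kept patches."""
    if keep is not None:
        estimates = estimates[keep]  # boolean mask, e.g. patches a selector accepts
    mean = estimates.mean(axis=0)
    return mean / np.linalg.norm(mean)
```

Replacing `gray_world_estimate` with a learned per-patch regressor and deriving `keep` from a patch classifier would follow the structure the abstract describes. The averaging rule itself remains an assumption.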
IEN estimates the local illuminant of each image patch. To improve its accuracy, we deepen the network to extend the feature extraction hierarchy and use residual connections to preserve gradient propagation and ease the training of the deep network. IEN consists of stacked residual blocks built from 3×3 and 1×1 convolutional layers, batch normalization layers, and rectified linear unit (ReLU) layers, followed by one global average pooling layer and one fully connected layer. IEN is optimized with a Euclidean loss and stochastic gradient descent (SGD). PSN shares a similar architecture with IEN, except that it has an additional Softmax layer at the end of the network that serves as a classifier. PSN classifies image patches according to their illuminant estimation errors and is optimized with a cross-entropy loss and SGD. Based on the output of PSN, patches with large estimation errors are excluded, which improves the global illuminant estimate. Additionally, we preprocess the input image with the log-chrominance algorithm, which converts a three-channel RGB image into a two-channel log-chrominance image; this reduces the influence of image luminance and improves computational efficiency by reducing the amount of data by one third.

Result: We implement IEN and PSN with the Caffe framework. To evaluate our approach, we use two standard single-illuminant datasets: the NUS-8 dataset and the reprocessed ColorChecker dataset. Both datasets include indoor and outdoor images, and a Macbeth ColorChecker is placed in each scene to compute the ground-truth illuminant. The NUS-8 dataset contains 1,736 images captured with 8 different cameras, and the reprocessed ColorChecker dataset consists of 568 images from 2 cameras. Following previous studies, we report the mean, median, tri-mean, mean of the lowest 25%, and mean of the highest 25% of the angular errors, plus the 95th percentile for the reprocessed ColorChecker dataset. We divide the NUS-8 dataset into eight subsets, apply three-fold cross-validation to each subset individually, and report the geometric mean of each metric over the eight subsets. For the reprocessed ColorChecker dataset, we apply three-fold cross-validation directly. Experimental results show that the proposed approach is competitive with state-of-the-art methods. On the NUS-8 dataset, IEN achieves the best results among all compared methods, and PSN further improves the precision of the IEN results. On the reprocessed ColorChecker dataset, our results are comparable with those of other advanced methods. We also conduct ablation studies on the components of the approach. Comparing IEN with several shallower CNNs shows that deep residual learning effectively improves illuminant estimation accuracy. Compared with estimation on the original image, log-chrominance preprocessing reduces the illuminant estimation error by 10% to 15%, and PSN further reduces the global illuminant estimation error by 5% relative to using IEN alone. Finally, we measure the time cost of our method on a PC with an Intel i5 2.7 GHz CPU, 16 GB of memory, and an NVIDIA GeForce GTX 1080Ti GPU. Our code takes less than 1.4 s to estimate the illuminant of a 2K image, whose typical resolution is 2,048×1,080 pixels.

Conclusion: Experiments on the two single-illuminant datasets show that the proposed approach, comprising log-chrominance preprocessing, a deep residual network structure, and patch selection for global illuminant estimation, is reasonable and effective. The approach has high precision and robustness and can be widely used in image processing and computer vision systems that require color calibration.

Keywords: visual optics; color constancy; illuminant estimation; deep residual learning; log-chrominance

Updated: 2024-05-07
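The log-chrominance preprocessing and the evaluation metrics described above can be sketched as follows. This is a minimal illustration, not the authors' code. It assumes the log-chrominance convention u = log(G/R), v = log(G/B) used in convolutional color constancy; the abstract names the transform but not its exact form. The angular error is the standard angle between estimated and ground-truth RGB illuminant vectors. How many images count as the "lowest/highest 25%" differs between papers, so the `len(e) // 4` rule below is one assumed convention. Function names are illustrative.

```python
import numpy as np


def log_chroma(img: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """H x W x 3 RGB -> H x W x 2 log-chrominance, u = log(G/R), v = log(G/B)."""
    r, g, b = (np.maximum(img[..., i], eps) for i in range(3))
    # Scaling a pixel by any luminance factor leaves both ratios unchanged.
    return np.stack([np.log(g / r), np.log(g / b)], axis=-1)


def angular_error_deg(est: np.ndarray, gt: np.ndarray) -> np.ndarray:
    """Angle in degrees between estimated and ground-truth RGB illuminants (per row)."""
    est = est / np.linalg.norm(est, axis=-1, keepdims=True)
    gt = gt / np.linalg.norm(gt, axis=-1, keepdims=True)
    cos = np.clip(np.sum(est * gt, axis=-1), -1.0, 1.0)
    return np.degrees(np.arccos(cos))


def summarize(errors) -> dict:
    """Mean, median, tri-mean, best/worst 25% means, and 95th percentile of errors."""
    e = np.sort(np.asarray(errors, dtype=float))
    q1, q2, q3 = np.percentile(e, [25, 50, 75])
    k = max(1, len(e) // 4)  # size of each 25% tail (one possible convention)
    return {
        "mean": e.mean(),
        "median": q2,
        "trimean": (q1 + 2 * q2 + q3) / 4,
        "best25": e[:k].mean(),
        "worst25": e[-k:].mean(),
        "p95": np.percentile(e, 95),
    }


def geometric_mean(values) -> float:
    """Geometric mean, e.g. of one metric over several dataset subsets."""
    v = np.asarray(values, dtype=float)
    return float(np.exp(np.log(v).mean()))
```

Because each pixel goes from three values to two, the conversion removes one third of the data, which matches the efficiency argument in the abstract.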


Abstract:

Objective: Two typical approaches to coverless information hiding are currently available. The first is search-based coverless information hiding, which transmits secret information by querying a database for texts or images that contain it. The second is texture generation-based information hiding, which conveys secret information by generating a stego texture similar to a given image texture. Search-based information hiding has a small embedding capacity and a large search space, and it requires the intensive transmission of numerous carriers. Although each individual text or image is a normal, unmodified text or image, the dense transmission of carriers still arouses suspicion. Texture generation-based information hiding can be further divided into texture construction-based and texture synthesis-based information hiding. Directly generating a realistic natural texture is challenging. In texture synthesis-based information hiding, coded and non-coded blocks have apparent distinguishing features, and the mapping between secret information and coded blocks is fixed. As a result, such methods have low security and ignore both the degree of difference among coded blocks and classification errors under attack. To address these problems, this work proposes a generative information hiding method that combines difference clustering with minimum error texture synthesis.

Method: First, in the embedding process, sample blocks are randomly cropped from the sample texture image using a key. Then, the mean square errors (MSEs) between the kernel regions of the sample blocks and a random key template are calculated. These errors are divided into several categories by a difference mean clustering strategy, and in each category the sample block closest to the cluster center is selected as the coded sample block.
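The block-selection step above can be sketched as follows. This is a hypothetical illustration, not the authors' code. It assumes that the "kernel region" is the central square of each block and that the key seeds NumPy's random generator. It also substitutes plain one-dimensional k-means on the MSE values for the paper's difference mean clustering, whose exact rule the abstract does not give. The function names and parameters (`block`, `kernel`, `n_blocks`, `n_codes`) are illustrative.

```python
import numpy as np


def crop_blocks(texture, key, n_blocks, block):
    """Randomly crop n_blocks square blocks from a grayscale texture using a key."""
    rng = np.random.default_rng(key)  # the key seeds the generator (assumption)
    h, w = texture.shape
    ys = rng.integers(0, h - block + 1, n_blocks)
    xs = rng.integers(0, w - block + 1, n_blocks)
    return np.stack([texture[y:y + block, x:x + block] for y, x in zip(ys, xs)])


def kernel_mse(blocks, template, kernel):
    """MSE between the central kernel x kernel region of each block and the template."""
    s = (blocks.shape[1] - kernel) // 2
    core = blocks[:, s:s + kernel, s:s + kernel].astype(float)
    return ((core - template) ** 2).mean(axis=(1, 2))


def select_coded_blocks(mse, n_codes, iters=50):
    """1-D k-means on MSE values (stand-in clustering); in each cluster, return the
    index of the block whose MSE is closest to the cluster center."""
    centers = np.quantile(mse, np.linspace(0, 1, n_codes))  # spread initial centers
    for _ in range(iters):
        assign = np.argmin(np.abs(mse[:, None] - centers[None, :]), axis=1)
        new = np.array([mse[assign == c].mean() if np.any(assign == c) else centers[c]
                        for c in range(n_codes)])
        if np.allclose(new, centers):
            break
        centers = new
    assign = np.argmin(np.abs(mse[:, None] - centers[None, :]), axis=1)
    coded = []
    for c in np.argsort(centers):  # report categories from low to high MSE
        members = np.flatnonzero(assign == c)
        if members.size:
            coded.append(int(members[np.argmin(np.abs(mse[members] - centers[c]))]))
    return np.array(coded)
```

Because the crop positions depend only on the key, the receiver can regenerate the same blocks and the same coded set from the shared sample texture. This mirrors the extraction step described next.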
Second, a multiple mapping relationship among the decimal values of the secret information, the MD5 (message-digest algorithm 5) value of the secret information, random coordinates, and the coded sample blocks is established to obtain the coded sample block numbers. Finally, the coded sample blocks that represent the decimal values of the secret information are placed at random positions in a blank image. The nearest sample blocks are then selected to cover the secret information, and a stego texture image is generated by minimum error priority stitching, in which the stitching order is determined by the minimum difference among adjacent blocks and the line with the least difference error is always chosen as the stitching seam. In the extraction process, all stego blocks are cropped from the stego texture image using the key, and the same coded sample blocks are regenerated by applying difference mean clustering to the given sample image. The closest coded block number for each cropped stego block is identified by similarity comparison, and these block numbers are combined with the MD5 value of the secret information and the random coordinates to recover the binary secret information.

Result: The proposed method is tightly bound to the key and to the MD5 value of the secret information, which is a plaintext attribute. Recovery depends entirely on the key, the MD5 value, and the sample texture image: only the correct key, MD5 value, and sample texture image can completely recover the secret information, and a change in any one or more of the key variables or in the sample texture image results in errors. For example, the error bit rate (EBR) of the extracted secret information then approaches 0.5; that is, about half of the extracted bits are wrong, which corresponds to maximum uncertainty.
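The EBR used above is the fraction of extracted bits that differ from the embedded ones. The sketch below computes it and shows why a wrong key drives it toward 0.5. As an assumption for illustration, bits recovered with a wrong key are modeled as independent fair coin flips (random bits). The function name `error_bit_rate` is hypothetical.

```python
import numpy as np


def error_bit_rate(embedded, extracted) -> float:
    """Fraction of positions where the extracted bit differs from the embedded bit."""
    a = np.asarray(embedded, dtype=np.uint8)
    b = np.asarray(extracted, dtype=np.uint8)
    if a.shape != b.shape:
        raise ValueError("bit strings must have the same length")
    return float(np.mean(a != b))


rng = np.random.default_rng(0)
secret = rng.integers(0, 2, 100_000)

# Correct key: every bit is recovered, so the EBR is 0.
print(error_bit_rate(secret, secret))

# Wrong key, modeled as random guessing: the EBR lands close to 0.5.
guess = rng.integers(0, 2, secret.size)
print(error_bit_rate(secret, guess))
```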
Because of the minimum error priority, the proposed method yields a smaller cumulative pixel difference along the minimum error line than existing methods. The generated stego texture image also has better visual quality and is extremely sensitive to the key. The visual quality of the stego texture images degrades under salt-and-pepper noise, graffiti, and JPEG compression attacks. However, even under high-intensity salt-and-pepper noise and large-scale graffiti attacks, most of the embedded secret bits can be extracted accurately, and in some cases all of them. In the experimental samples, for JPEG compression attacks with quality factors from 50 to 70, the EBR of the recovered secret information is always 0, and the secret information is restored completely. For salt-and-pepper noise densities of 5% to 15%, the EBR is still 0, and the secret information is recovered completely. Even under high-intensity salt-and-pepper noise of 25% to 40%, the EBR of the extracted secret information remains very low, below 7%. Thus, the proposed method is highly tolerant of high-intensity salt-and-pepper noise and large-scale graffiti attacks, and it can also resist low-quality JPEG compression attacks.
Conclusion: The proposed method does not require many samples to build a large database. It avoids searching large data collections, so its computational cost is small. It embeds the secret information in a single carrier with high embedding capacity and produces high-quality textures to cover the secret information. The random key template and the multiple mapping relationship between random coordinates and coded sample blocks avoid a fixed mapping between secret information and coded sample blocks. Difference mean clustering also gives the coded sample blocks the largest inter-class difference.
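The robustness figures above are reported as error bit rates under simulated attacks. The following minimal sketch shows the two measurements. It assumes 8-bit grayscale images and the usual definition of salt-and-pepper noise density as the fraction of corrupted pixels; the function names are hypothetical.

```python
import numpy as np

def salt_and_pepper(img, density, seed=0):
    """Set a fraction `density` of pixels to 0 or 255 with equal probability."""
    rng = np.random.default_rng(seed)
    out = img.copy()
    mask = rng.random(img.shape) < density
    out[mask] = rng.choice(np.array([0, 255], dtype=img.dtype), size=int(mask.sum()))
    return out

def error_bit_rate(sent_bits, recovered_bits):
    """EBR: fraction of recovered bits that differ from the embedded bits."""
    sent = np.asarray(sent_bits)
    recovered = np.asarray(recovered_bits)
    return float(np.mean(sent != recovered))
```

An EBR close to 0.5, the value reported for a wrong key or sample image, is exactly what comparing the embedded bits with independent random guesses produces.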
Therefore, the recovery process is robust and depends entirely on the key, and the method's security is high. The stitching order is determined by the minimum difference among adjacent blocks, and the line with the least difference error, which covers the secret information with high quality, is selected for stitching.
Keywords: difference mean clustering; texture generation; information hiding; minimum error; image stitching; sample texture synthesis
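The abstract does not specify the multiple mapping relationship. The following is therefore only a hypothetical illustration of the general idea: a digit's coded block number depends on the MD5 value of the secret and on key-driven random coordinates, rather than being fixed. The modular-offset scheme, the coordinate range, and the function names are assumptions, not the authors' design. The caller would compute the digest as `hashlib.md5(secret).digest()`.

```python
import hashlib
import numpy as np

def digits_to_blocks(digits, md5_digest, key, n_blocks=10):
    """Hypothetical keyed mapping: decimal digit -> coded block number.

    The same digit maps to different blocks depending on its position, the MD5
    digest of the secret, and key-driven random coordinates.
    """
    coords = np.random.default_rng(key).integers(0, 256, size=(len(digits), 2))
    return [(d + md5_digest[i % 16] + int(x) + int(y)) % n_blocks
            for i, (d, (x, y)) in enumerate(zip(digits, coords))]

def blocks_to_digits(block_ids, md5_digest, key, n_blocks=10):
    """Inverse of digits_to_blocks; needs the same MD5 digest and key."""
    coords = np.random.default_rng(key).integers(0, 256, size=(len(block_ids), 2))
    return [(b - md5_digest[i % 16] - int(x) - int(y)) % n_blocks
            for i, (b, (x, y)) in enumerate(zip(block_ids, coords))]
```

The inverse works only when `n_blocks` equals the digit base (10 here). With a different digest or key, the offsets change and the recovered digits are scrambled.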

Abstract:
Objective: Low-illumination images are easily produced when photographs are taken under weak lighting or with devices that have a poor fill flash. Such images are difficult to recognize, so their quality needs to be improved. In the past, low-illumination image enhancement was dominated by histogram equalization (HE) and Retinex, but these methods do not easily produce the desired results. Their outputs often suffer from problems such as color distortion and blurred edges. A conditional generative adversarial network (CGAN)-based method is proposed to solve this poor visual perception problem. CGAN is an extension of the generative adversarial network (GAN) and is currently widely used in data generation tasks, including image de-raining, image resolution enhancement, and speech denoising. Unlike traditional low-illumination enhancement methods, which adjust a single image, this method achieves data-driven enhancement.
Method: This study uses an encoder-decoder convolutional neural network (CNN) as the generative model and a CNN with a classification function as the discriminative model. Together, the two models constitute a GAN. The model processes input images end to end, without manual parameter adjustment. Instead of synthetic image datasets, real-shot low-illumination images from a multi-exposure image dataset are used for training and testing. This dataset contains multi-exposure image sequences, including under- and over-exposed images, whose exposure is shifted by the exposure value (EV) setting of cameras or phones. Moreover, the dataset provides high-quality reference light images. During training, the reference light images are supplied to the GAN as conditions, and both models optimize their parameters according to these light images. As a result, the entire model becomes a CGAN.
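To make the EV shift concrete, the following minimal sketch models exposure compensation on a linear-intensity image. It is illustrative only: the dataset above is captured with real cameras, not synthesized. The sketch assumes intensities normalized to [0, 1] in linear (not gamma-encoded) space and treats EV as exposure-compensation stops, where each +1 doubles the captured light and each -1 halves it. The function name is hypothetical.

```python
import numpy as np

def shift_exposure(linear_img, delta_ev):
    """Simulate an exposure change of `delta_ev` stops on a linear image in [0, 1].

    +1 EV doubles the light and -1 EV halves it; values above the sensor's
    range are clipped, which is what over-exposure looks like.
    """
    return np.clip(linear_img * (2.0 ** delta_ev), 0.0, 1.0)
```

Shifting by -2 EV, for example, scales every linear intensity by 1/4. This shows why strongly under-exposed frames carry little signal relative to noise.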
The encoding path of the generative model downsamples the low-illumination images and processes them at different scales. After encoding, the decoding path restores the image size and reduces the distance between the outputs and the conditional light images. Through this convolutional processing, the generative model denoises and restores the low-illumination images, producing the enhanced images. The discriminative model distinguishes the enhanced images from the reference light images by comparing their differences: enhanced images are labeled false, and reference light images are labeled true. The discriminative model then feeds its result back to the generative model. Based on this feedback, the generative model optimizes its parameters to improve its enhancement capability, while the discriminative model optimizes its own parameters to improve its discrimination capability. After training on thousands of image pairs, the parameters of both models are optimized. Using the discriminative model to supervise the generative model, and exploiting the interplay between the two, yields a better enhancement effect. When the trained model is used to enhance low-illumination images, the discriminative model is no longer involved, and the result is obtained directly from the generative model. Furthermore, skip connections and batch normalization are integrated into the model. Skip connections pass shallow features to deep layers, bridging shallow and deep features and giving gradients a shorter path back to the shallow layers. Batch normalization effectively mitigates vanishing and exploding gradients. Both techniques enhance the processing capability of the model.
Result: The entire network model is compared with the generative model alone; they represent the CGAN and CNN approaches, respectively. The results show that the entire network model performs better than the generative model alone.
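The adversarial feedback loop described above is usually expressed as a pair of binary cross-entropy losses. The following minimal NumPy sketch computes those losses from discriminator output probabilities. It assumes the standard (non-saturating) GAN formulation, although the abstract does not state the exact loss. The optional L1 term and its weight are assumptions borrowed from common image-to-image CGAN practice, and the function names are hypothetical.

```python
import numpy as np

def bce(prob, target):
    """Binary cross-entropy averaged over a batch of probabilities."""
    eps = 1e-12
    prob = np.clip(prob, eps, 1 - eps)
    return float(-np.mean(target * np.log(prob) + (1 - target) * np.log(1 - prob)))

def discriminator_loss(d_real, d_fake):
    """Reference light images are labeled true (1), enhanced images false (0)."""
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))

def generator_loss(d_fake, enhanced=None, reference=None, l1_weight=0.0):
    """The generator wants D to label its enhanced images true; the optional
    L1 term (an assumption) pulls outputs toward the reference light image."""
    loss = bce(d_fake, np.ones_like(d_fake))
    if l1_weight and enhanced is not None:
        loss += l1_weight * float(np.mean(np.abs(enhanced - reference)))
    return loss
```

At equilibrium, D outputs 0.5 for every image, so the discriminator loss is 2 ln 2 and the pure adversarial generator loss is ln 2.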
This finding indicates that the discriminative model improves the generative model during training. Eight existing methods are then compared with the proposed method. In a subjective comparison, our method achieves better brightness, clarity, and color restoration. Using peak signal-to-noise ratio (PSNR), histogram similarity (HS), and structural similarity (SSIM) as objective metrics, our method achieves improvements of 0.7 dB, 3.9%, and 8.2%, respectively. The processing time of each method is also compared. With graphics processing unit (GPU) acceleration, the proposed method is much faster than the other methods, especially traditional central processing unit (CPU)-based methods, and it can meet the requirements of real-time applications. Furthermore, for low-illumination images that contain bright regions, our method does not further enhance these regions, whereas the other existing methods consistently over-enhance them.
Conclusion: A conditional generative adversarial network-based method for low-illumination image enhancement is proposed. Experimental results show that the proposed method is more effective than existing methods in both perceptual quality and speed.
Keywords: image processing; low-illumination image enhancement; convolutional neural network (CNN); conditional generative adversarial network (CGAN); deep learning
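PSNR, the first of the objective metrics above, has a standard closed form, PSNR = 10·log10(MAX²/MSE). The following is a minimal sketch for 8-bit images (MAX = 255). Histogram similarity and SSIM are omitted because the abstract does not specify which variants were used, and the function name is hypothetical.

```python
import numpy as np

def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two images of the same shape."""
    mse = np.mean((reference.astype(np.float64) - test.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))
```

An error of one gray level at every pixel (MSE = 1) gives about 48.13 dB. A 0.7 dB gain corresponds to roughly a 15% reduction in MSE.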

Image Processing and Coding

Abstract:
Objective: Infrared small-target detection is a key technology in precision guidance. It is crucial in aircraft infrared search and tracking systems, infrared imaging and guidance systems, and early warning systems for military installations. However, infrared small-target detection in complex backgrounds still faces challenges. First, because of the long imaging distance, the target is usually very dim and small and lacks concrete structure and texture information. Second, under strong background clutter and noise, such targets are often buried in the background with a low signal-to-clutter ratio. Hence, the problem remains difficult. Meanwhile, directly applying the existing joint low-rank constraint and sparse representation model suffers from low accuracy, a high false alarm rate, and slow detection. To solve these problems, a small-target detection method based on a multiscale infrared superpixel-image model is proposed.
Method: In prior literature, an infrared patch image is constructed by sliding a window over the image from top to bottom and left to right with a fixed step size. At each position, the gray values of the pixels in the window are rearranged into a column vector. The matrix composed of these column vectors is called the patch image. However, when the patch image is constructed this way, adjacent windows overlap substantially, resulting in a high degree of information redundancy. In addition, the constructed patch image has high dimensionality, which leads to a large amount of computation. To overcome these deficiencies, the original infrared image is segmented with a superpixel method to obtain superpixel images with no overlapping area. This approach makes full use of the local spatial correlation of the infrared image and avoids the computational burden caused by redundant information.
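The prior sliding-window construction, and the redundancy that overlap causes, can be sketched in a few lines. This minimal sketch assumes a single-channel image. The window size and step values in the usage note are illustrative, not taken from the paper. The superpixel segmentation itself (for example SLIC) is not reproduced, and the function names are hypothetical.

```python
import numpy as np

def patch_image(img, win, step):
    """Sliding-window patch image: each column is one vectorized window."""
    h, w = img.shape
    cols = [img[y:y + win, x:x + win].reshape(-1)
            for y in range(0, h - win + 1, step)
            for x in range(0, w - win + 1, step)]
    return np.stack(cols, axis=1)

def redundancy(img, win, step):
    """Average number of times each pixel is stored in the patch image."""
    return patch_image(img, win, step).size / img.size
```

With `win=50` and `step=10` on a 100×100 image, the patch image is 2500×36, and every pixel is stored nine times on average. A non-overlapping partition stores each pixel once, which is the redundancy the superpixel model avoids.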
Moreover, introducing multiscale theory and then merging the target images detected at different scales further improves the algorithm's robustness to targets of different sizes.
Result: First, experiments are conducted on many infrared small-target images with varying scenes and noise levels. In terms of subjective visual evaluation, the proposed method is robust to different scenes and noise. Further experiments evaluate two aspects: background suppression and detection speed. The signal-to-clutter ratio gain (SCRG) and the background suppression factor (BSF) are used as quantitative indicators of background suppression. The proposed method is compared with the Top-Hat, Max-Median, two-dimensional least mean square, local saliency map, infrared patch-image, and weighted infrared patch-image methods. It effectively eliminates various interferences, achieves superior background suppression, and accurately detects infrared small targets in complex backgrounds. Its background suppression factor is several tens of times that of the other methods, and the infrared superpixel-image model reduces detection time by at least 78.2% compared with similar methods.
Conclusion: In this study, superpixel image segmentation and multiscale theory are introduced into the joint low-rank constraint and sparse representation model. When applied to infrared small-target detection in complex backgrounds, the model achieves good background suppression and adapts well to different target sizes. In addition, detection at the different scales can be parallelized, which would accelerate the method.
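The two background-suppression indicators can be computed from a target mask and a background mask. The following minimal sketch uses the definitions common in the infrared small-target literature: SCR = |μt − μb|/σb, SCRG = SCRout/SCRin, and BSF = σin/σout of the background. The paper may compute background statistics over a local neighborhood rather than the whole image, so the masks here are assumptions. The function names are hypothetical.

```python
import numpy as np

def scr(img, target_mask, bg_mask):
    """Signal-to-clutter ratio: |mean(target) - mean(background)| / std(background)."""
    t, b = img[target_mask], img[bg_mask]
    return abs(t.mean() - b.mean()) / b.std()

def scrg(img_in, img_out, target_mask, bg_mask):
    """SCR gain: SCR after processing divided by SCR before processing."""
    return scr(img_out, target_mask, bg_mask) / scr(img_in, target_mask, bg_mask)

def bsf(img_in, img_out, bg_mask):
    """Background suppression factor: background std before / after processing.
    A perfectly flat output background gives inf (with a NumPy warning)."""
    return img_in[bg_mask].std() / img_out[bg_mask].std()
```

Uniformly scaling an image changes BSF but leaves SCRG at 1. This is why the two indicators are usually reported together.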
Our future work will focus on reducing the algorithm's complexity and designing a more flexible method for constructing an infrared patch image.
Keywords: small target detection; infrared image; low rank constraint; sparse representation; superpixel; multiscale
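The joint low-rank constraint and sparse representation model is commonly solved as robust PCA. In that formulation, the patch (or superpixel) image D is split into a low-rank background A and a sparse target component E. The following minimal sketch implements the inexact augmented Lagrange multiplier (IALM) solver. It assumes the standard formulation and the usual parameter choices (λ = 1/√max(m, n), ρ = 1.5); the paper's exact objective, weights, and solver may differ. The function name is hypothetical.

```python
import numpy as np

def rpca_ialm(D, lam=None, tol=1e-7, max_iter=500):
    """Split D into low-rank A (background) and sparse E (targets) by solving
    min ||A||_* + lam * ||E||_1  s.t.  D = A + E  with inexact ALM."""
    m, n = D.shape
    lam = 1.0 / np.sqrt(max(m, n)) if lam is None else lam
    norm_two = np.linalg.norm(D, 2)                 # largest singular value
    Y = D / max(norm_two, np.abs(D).max() / lam)    # dual variable initialization
    mu, rho = 1.25 / norm_two, 1.5
    mu_max = mu * 1e7
    A = np.zeros_like(D)
    E = np.zeros_like(D)
    d_norm = np.linalg.norm(D, "fro")
    for _ in range(max_iter):
        # Sparse part: elementwise soft thresholding.
        T = D - A + Y / mu
        E = np.sign(T) * np.maximum(np.abs(T) - lam / mu, 0.0)
        # Low-rank part: singular value thresholding.
        U, s, Vt = np.linalg.svd(D - E + Y / mu, full_matrices=False)
        A = (U * np.maximum(s - 1.0 / mu, 0.0)) @ Vt
        # Dual update and penalty increase.
        Z = D - A - E
        Y = Y + mu * Z
        mu = min(mu * rho, mu_max)
        if np.linalg.norm(Z, "fro") / d_norm < tol:
            break
    return A, E
```

In a detection pipeline, E would be mapped back to image form and thresholded to locate candidate targets. That step depends on how the patch or superpixel image is built and is not shown.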

Abstract:
Objective: The shoreline is not only the basis of video surveillance analysis in the water industry but also key to the autonomous navigation of unmanned surface vessels. Many scholars have proposed shoreline detection methods. However, many existing algorithms rely on traditional image recognition methods that segment images using several features of the water surface and the ground. Their parameters must be adjusted for each scene, which makes them unsuitable for complex scenes. Traditional detection methods cannot overcome the influence of factors such as water-surface ripples and reflections, and they adapt poorly because they cannot analyze multiple shoreline scenes simultaneously. In this study, a Deeplab v3+ network for shoreline segmentation is trained on complex shoreline scene images provided by the Chengdu River Chief's Office and on self-photographed images. We simplify the Deeplab v3+ network to improve segmentation performance and speed. Then, using the improved Deeplab v3+, we segment the water surface image and propose a method that extracts the shoreline from the segmented image, achieving automatic shoreline extraction.
Method: First, images of different waterfront scenes are collected for the training and validation sets. To further improve the generalization capability of the network, we apply the gamma function to 10 photos captured in different complex scenes to simulate different lighting conditions in the same scene. The resulting 20 processed images are added to the validation set to expand the sample. After comparative experiments on various semantic segmentation networks, the Deeplab v3+ network is selected for modification. Its Xception backbone is adjusted to reduce the number of network layers and increase speed, and a low-level feature is added to the decoder to increase the feature information.
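The gamma-based augmentation can be sketched as a per-pixel power law. This minimal sketch assumes 8-bit images and the common form out = 255·(in/255)^γ. The abstract does not give the γ values, so the two variants mentioned below, which would turn 10 photos into 20 images, are assumptions. The function name is hypothetical.

```python
import numpy as np

def gamma_adjust(img_u8, gamma):
    """Apply out = 255 * (in / 255) ** gamma to an 8-bit image.
    gamma > 1 darkens mid-tones; gamma < 1 brightens them."""
    normalized = img_u8.astype(np.float64) / 255.0
    return np.clip(np.round(255.0 * normalized ** gamma), 0, 255).astype(np.uint8)
```

For example, producing one darker and one brighter copy per photo, such as `gamma_adjust(photo, 1.5)` and `gamma_adjust(photo, 0.6)`, simulates dimmer and brighter lighting of the same scene while leaving pure black and pure white unchanged.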
Consequently, computation time is reduced without affecting the accuracy of the algorithm. A comparison between the modified and original networks indicates that the modified network improves computational efficiency while accuracy remains essentially unchanged. Then, according to the image information, we set the loss weight coefficients and visualization parameters and train the improved Deeplab v3+ on the data. Second, on the Linux operating system, test images are segmented with the trained PB (protocol buffer) model through the C++ interface of TensorFlow. Finally, the waterfront line is detected by applying an edge detection operator to the extracted water surface region. The extracted shoreline is then thickened and superimposed on the original image for convenient observation.
Result: Waterfront detection experiments are performed on a collection of water surface images with different illumination intensities, degrees of ripple, and shadows. Representative waterfront images are selected, and the results are compared with waterfront algorithms proposed by Iwahash et al., Bao et al., and Peng et al. Experimental results show that only the proposed algorithm fully handles complex land and water scenes and accurately detects clear and complete waterfront lines in different waterfront images. Furthermore, the algorithm runs at up to 8 frames/s, nearly five times faster than the other algorithms. Its accuracy of 93.98% is an improvement of nearly 20%.
Conclusion: The algorithm copes with severely irregular waterfront edges, large differences among waterfront scenes, and interference from light, ripples, reflections, and other factors in complex waterfront scenes. In practical applications, the algorithm extracts the shoreline automatically, without manual configuration or tuning.
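The final step, turning the segmented water region into a visible shoreline, can be sketched with simple binary morphology. This minimal sketch substitutes a morphological boundary (the water mask minus its erosion) for the unspecified edge detection operator and uses dilation to thicken the line. The function names are hypothetical. The pixel-accuracy helper shows one common way to score a segmentation and is not necessarily how the reported 93.98% was computed.

```python
import numpy as np

def erode4(mask):
    """Binary erosion with a 4-connected cross. Edge padding means the image
    border itself is not treated as a water/land boundary."""
    p = np.pad(mask, 1, mode="edge")
    return (p[1:-1, 1:-1] & p[:-2, 1:-1] & p[2:, 1:-1]
            & p[1:-1, :-2] & p[1:-1, 2:])

def dilate4(mask):
    """Binary dilation with a 4-connected cross."""
    p = np.pad(mask, 1, constant_values=False)
    return (p[1:-1, 1:-1] | p[:-2, 1:-1] | p[2:, 1:-1]
            | p[1:-1, :-2] | p[1:-1, 2:])

def shoreline(water_mask, thickness=1):
    """Water pixels that touch non-water, thickened by `thickness` dilations."""
    edge = water_mask & ~erode4(water_mask)
    for _ in range(thickness):
        edge = dilate4(edge)
    return edge

def pixel_accuracy(pred_mask, true_mask):
    """Fraction of pixels whose predicted label matches the ground truth."""
    return float(np.mean(pred_mask == true_mask))
```

The resulting boolean mask can be painted onto the original frame to produce the overlay described above.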
In addition, the accuracy and efficiency of waterfront image segmentation are improved, and a clear and complete waterfront line is detected for intelligent monitoring and analysis in the water conservancy industry. The algorithm requires a very large number of samples, but the current sample set is far from sufficient. In the future, more samples from different scenarios must be collected to enhance the generalization capability of the network and the applicability of the algorithm. Porting the method to Windows and improving its practicality are further research directions.
Keywords: water video surveillance; Deeplab v3+; waterfront image segmentation; edge detection; shoreline detection

Abstract:
Objective: Target tracking algorithms that are based on deep learning and use deep convolutional features are highly accurate, but they cannot track in real time and are therefore difficult to apply in practice. The deep convolutional features of convolutional neural networks (CNNs) contain high-level semantic information. Even when the target's appearance is severely disturbed by illumination variation, deformation, and other interference factors, deep convolutional features still discriminate the target accurately. Tracking algorithms based on correlation filtering are fast (up to several hundred frame/s) but less accurate. They use the histogram of oriented gradients (HOG), color names (CN), and color histograms as statistical features to calculate the correlation between two image patches, and the position with the highest correlation is taken as the predicted position. To balance real-time capability and accuracy, this study proposes a dual-model kernel correlation filtering algorithm that combines the accuracy of deep convolutional features with the speed of correlation filtering.
Method: An adaptive dual-feature model selection mechanism is proposed. The dual model consists of a main-feature model and an auxiliary-feature model. The main-feature model adopts a shallow texture feature, HOG, whose relatively low dimensionality allows fast computation. It is used for real-time tracking of video sequences with clear texture and contour features, and its correlation filter uses a Gaussian kernel function. The auxiliary-feature model employs CNN features containing deep semantic information.
When severe interference factors such as illumination variation, occlusion, and deformation occur in a video sequence, they cause low-confidence responses from the main-feature model, so the auxiliary-feature model with deep CNN features is used to determine and correct the target position. The correlation filter of the auxiliary-feature model uses a linear kernel function. The main- and auxiliary-feature models are updated individually and cooperate to produce a stable correlation filter and improve the computational efficiency of the algorithm. Because deep CNN features are very high-dimensional, which slows computation, we use principal component analysis (PCA) to reduce their dimensionality and ensure real-time performance. The dimension of the CNN features is reduced while preserving as much of the useful information of the original features as possible, which improves computing speed. This work also improves the accuracy of the tracking algorithm by optimizing the scale and the solution method.
Result: When the target's appearance changes severely, the confidence response value of the main-feature model becomes too low. In this case, the dual-feature model discrimination mechanism promptly calls the auxiliary-feature model to correct the target position in real time. Experiments show that the adaptive dual-feature model recognition mechanism is effective. We compare our algorithm with current advanced real-time tracking algorithms, such as SiamFC (fully-convolutional Siamese networks), MEEM (multiple experts using entropy minimization), SAMF (scale adaptive multiple features), DSST (discriminative scale space tracking), KCF (kernel correlation filter), Struck, and TLD (tracking-learning-detection).
The one-pass evaluation (OPE) results on the public OTB-2013 dataset show that the proposed algorithm ranks first in terms of distance precision rate. Compared with the KCF algorithm, the proposed algorithm improves the distance precision and overlap success rates by 25.2% and 25.6%, respectively, and its average speed reaches 38 frame/s. To demonstrate the performance of the proposed algorithm more concretely, we also compare it with the most advanced tracking algorithms based on deep convolutional features, such as VITAL, SANet, and CCOT; however, these algorithms cannot meet the real-time requirement and cannot be applied in practical situations.
Conclusion: This study proposes a new tracking model mechanism in which an auxiliary-feature model is added to the main-feature model. The auxiliary-feature model adjusts the optimal position of the target in real time according to changes in the confidence response of the main-feature model, preventing the main-feature model from drifting. PCA is introduced to reduce the dimensionality of the deep convolutional features and increase the speed of the algorithm. However, the proposed algorithm still has problems. First, environmental changes make the threshold for invoking the auxiliary model uncertain, and setting this threshold to a fixed value reduces the adaptability of the tracking algorithm. Second, the stability of the main-feature model's correlation filter directly affects the accuracy of the algorithm. Correlation filtering expands the sample set by assuming that the samples are periodic, but this periodicity assumption also introduces the boundary effect, which considerably reduces the accuracy of the filter. To improve accuracy, methods that eliminate the boundary effect should be introduced.
Examples of such methods include adding a spatial regularization term to the ridge regression solution or applying a mask matrix to highlight the target position. Results on the public OTB-2013 dataset show that the proposed algorithm outperforms current tracking algorithms in both tracking accuracy and real-time performance, and it adapts well to 10 different interference factors, such as motion blur, scale variation, and rotation.
Keywords: target tracking; adaptive feature; convolutional neural network (CNN); correlation filter; principal component analysis (PCA)
Updated: 2024-05-07
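The dual-model mechanism is described only in outline. The NumPy sketch below is one possible reading of it, built on the standard KCF formulation (kernel ridge regression over all cyclic shifts of a patch, solved in the Fourier domain): the main model uses a Gaussian kernel on low-dimensional features, the auxiliary model uses a linear kernel on PCA-reduced high-dimensional features, and the auxiliary model is consulted only when the peak of the main response falls below a threshold. Several details are assumptions not stated in the abstract: the peak response value as the confidence measure, the fixed threshold, the kernel bandwidth, the regularization weight `lam`, and the random arrays standing in for HOG and CNN features. Scale estimation, model updating, and windowing are omitted, and all function names are hypothetical.

```python
import numpy as np


def gaussian_labels(h, w, sigma=2.0):
    """Desired filter output: a Gaussian peak at (0, 0), wrapped circularly."""
    dy = np.minimum(np.arange(h), h - np.arange(h))
    dx = np.minimum(np.arange(w), w - np.arange(w))
    return np.exp(-0.5 * (dy[:, None] ** 2 + dx[None, :] ** 2) / sigma ** 2)


def cross_corr(x, z):
    """Circular cross-correlation of (H, W, C) arrays summed over channels:
    c[t] = sum_n x[n] * z[n + t]."""
    xf = np.fft.fft2(x, axes=(0, 1))
    zf = np.fft.fft2(z, axes=(0, 1))
    return np.real(np.fft.ifft2(np.sum(zf * np.conj(xf), axis=2)))


def linear_kernel(x, z):
    return cross_corr(x, z) / x.size


def gaussian_kernel(x, z, sigma=0.5):
    d2 = (np.sum(x ** 2) + np.sum(z ** 2) - 2.0 * cross_corr(x, z)) / x.size
    return np.exp(-np.maximum(d2, 0.0) / sigma ** 2)


def train(x, y, kernel, lam=1e-4):
    """Kernel ridge regression in the Fourier domain: alpha_hat = y_hat / (k_hat + lam)."""
    return np.fft.fft2(y) / (np.fft.fft2(kernel(x, x)) + lam)


def detect(alphaf, x, z, kernel):
    """Response map over all cyclic shifts of the search patch z."""
    return np.real(np.fft.ifft2(alphaf * np.fft.fft2(kernel(x, z))))


def peak_shift(resp):
    """Location of the response peak as a signed (dy, dx) displacement."""
    h, w = resp.shape
    dy, dx = np.unravel_index(np.argmax(resp), resp.shape)
    return (int(dy - h) if dy > h // 2 else int(dy),
            int(dx - w) if dx > w // 2 else int(dx))


def fit_pca(feat, n_components):
    """Channel-wise PCA (mean, basis) for (H, W, C) features such as CNN maps."""
    flat = feat.reshape(-1, feat.shape[2])
    mean = flat.mean(axis=0)
    _, _, vt = np.linalg.svd(flat - mean, full_matrices=False)
    return mean, vt[:n_components].T


def apply_pca(feat, mean, basis):
    h, w, c = feat.shape
    return ((feat.reshape(-1, c) - mean) @ basis).reshape(h, w, basis.shape[1])


def dual_model_step(main, aux, z_main, z_aux, threshold):
    """Trust the main (Gaussian-kernel) model unless its peak response is too low."""
    resp = detect(main["alphaf"], main["x"], z_main, gaussian_kernel)
    if resp.max() >= threshold:
        return peak_shift(resp), "main"
    resp = detect(aux["alphaf"], aux["x"], z_aux, linear_kernel)
    return peak_shift(resp), "aux"


rng = np.random.default_rng(0)
h, w = 32, 32
y = gaussian_labels(h, w)
x_main = rng.standard_normal((h, w, 4))    # stand-in for low-dimensional HOG features
x_deep = rng.standard_normal((h, w, 64))   # stand-in for high-dimensional CNN features
mean, basis = fit_pca(x_deep, 8)           # PCA basis learned on the training patch
x_aux = apply_pca(x_deep, mean, basis)
main = {"x": x_main, "alphaf": train(x_main, y, gaussian_kernel)}
aux = {"x": x_aux, "alphaf": train(x_aux, y, linear_kernel)}

# Search patches: the target has moved by (3, -5) pixels (cyclic shift).
z_main = np.roll(x_main, (3, -5), axis=(0, 1))
z_aux = apply_pca(np.roll(x_deep, (3, -5), axis=(0, 1)), mean, basis)
print(dual_model_step(main, aux, z_main, z_aux, threshold=0.3))  # -> ((3, -5), 'main')
print(dual_model_step(main, aux, z_main, z_aux, threshold=1.5))  # -> ((3, -5), 'aux')
```

Because the search patches here are exact cyclic shifts of the training patches, both models recover the (3, -5) displacement. In a real tracker the threshold decides which branch is trusted, which is where the fixed-threshold limitation noted in the conclusion comes in.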