最新刊期

卷 24 , 期 11 , 2019

-

摘要:Remote sensing image, which is an important data source in spatial analysis, records both spectral and spatial information of the scene. Therefore, it is widely utilized in areas such as terrain classification, change detection and object identification etc.. Classification is the most primary problem of remote sensing applications while the issues of large date redundancy and small training set are still the barrier of its widespread application and development. Deep learning is one kind of representational learning, which has led to significant advances in imaging technology. Traditional pattern recognition algorithm always be the thought of a strategy of "divide and conquer", which tends to be divided into feature extraction and feature selection, classifier design processes. Although this solution idea can decompose the problem into several controllable subproblems, at the same time, the optimum solution of these subproblems cannot converge to the global optimum, and even the best feature extraction methods cannot make sure the classification of boundary is prefect. Comparing with the hand-crafted feature extraction methods, the end-to-end optimization pattern of deep learning has brought a superior performance for the remote sensing classification. Unfortunately, deep learning usually requires big data, with respect to both volume and variety, while most remote sensing applications only have limited training data, of which a small subset is labeled. Herein, we provide the most comprehensive survey of state-of-the-art approaches in deep learning to combat this challenge over recent one or two years, and to enable researchers to explore its theory and development. This paper summarizes three kinds of methods to train the deep model under limited training data. The first topic is deep generative model, in which we explore the applications of the generative adversarial networks (GAN), variation autoencoders (VAE) and their derived structures in remote sensing classification and change detection, and the application fields, applicable data and characteristics of the generated model are summarized. The next is transfer learning, in which we review the approaches that network structures or the data features are transferred from one domain to anther domain. Although transfer learning provides a possible solution to make full use of the existing tag data, the ability of transfer learning is also limited. When the task and data distribution between two domains are very different, negative migration is easy to occur. Therefore, the mobility measurement standards in the field of remote sensing technology should be further studied. The last is the novel deep neural networks which is trained with semi-supervised learning or active learning strategies towards remote sensing classification. At the same time, the author enumerates some attempts made by scholars based on novel network structures in recent years. There are two main solutions to handle the full training of deep learning under the limited training data. One is to enhance the prior knowledge. Before the deep generative model appears, data enhancement often relies on simple image transformation and data augmentation. For multispectral data, simple data transformations, such as translation, rotation, scaling, shearing, or any combination of these, were carried out to expand the training samples. For hyperspectral data, data simulation based on physical models, such as spectrum simulation under different illumination of the same ground scene, label propagation driven by data or additional Gaussian white noise is adopted. These solutions rely heavily on the data itself or the assumptions of the physical environment. Different from traditional methods, deep generative methods and transfer learning have strong capability in the learning of prior knowledges. And in the most case, the combination of these two kinds approaches are used.The other is to extract the more effective time-spatio-spectral features towards classification using novel deep neural networks on the limited labelled data. Such methods build or select advanced network structures, which perform well in computer vision fields, and the training process combine such training strategies as semi-supervised learning, ensemble learning and active learning strategies. In the practical applications, these mentioned solutions are jointed to obtain the optimum performance. Due to the exploration of various models, deep learning has shown its superiority in remote sensing technology and the performance exceeds the shallow model in general. Nonetheless, the physics-based deep learning approach and high-powered practicality are still worth studying.关键词:remote sensing classification;deep learning;deep generative model;semi-supervised learning;transfer learning61|93|15更新时间:2024-05-07

摘要:Remote sensing image, which is an important data source in spatial analysis, records both spectral and spatial information of the scene. Therefore, it is widely utilized in areas such as terrain classification, change detection and object identification etc.. Classification is the most primary problem of remote sensing applications while the issues of large date redundancy and small training set are still the barrier of its widespread application and development. Deep learning is one kind of representational learning, which has led to significant advances in imaging technology. Traditional pattern recognition algorithm always be the thought of a strategy of "divide and conquer", which tends to be divided into feature extraction and feature selection, classifier design processes. Although this solution idea can decompose the problem into several controllable subproblems, at the same time, the optimum solution of these subproblems cannot converge to the global optimum, and even the best feature extraction methods cannot make sure the classification of boundary is prefect. Comparing with the hand-crafted feature extraction methods, the end-to-end optimization pattern of deep learning has brought a superior performance for the remote sensing classification. Unfortunately, deep learning usually requires big data, with respect to both volume and variety, while most remote sensing applications only have limited training data, of which a small subset is labeled. Herein, we provide the most comprehensive survey of state-of-the-art approaches in deep learning to combat this challenge over recent one or two years, and to enable researchers to explore its theory and development. This paper summarizes three kinds of methods to train the deep model under limited training data. The first topic is deep generative model, in which we explore the applications of the generative adversarial networks (GAN), variation autoencoders (VAE) and their derived structures in remote sensing classification and change detection, and the application fields, applicable data and characteristics of the generated model are summarized. The next is transfer learning, in which we review the approaches that network structures or the data features are transferred from one domain to anther domain. Although transfer learning provides a possible solution to make full use of the existing tag data, the ability of transfer learning is also limited. When the task and data distribution between two domains are very different, negative migration is easy to occur. Therefore, the mobility measurement standards in the field of remote sensing technology should be further studied. The last is the novel deep neural networks which is trained with semi-supervised learning or active learning strategies towards remote sensing classification. At the same time, the author enumerates some attempts made by scholars based on novel network structures in recent years. There are two main solutions to handle the full training of deep learning under the limited training data. One is to enhance the prior knowledge. Before the deep generative model appears, data enhancement often relies on simple image transformation and data augmentation. For multispectral data, simple data transformations, such as translation, rotation, scaling, shearing, or any combination of these, were carried out to expand the training samples. For hyperspectral data, data simulation based on physical models, such as spectrum simulation under different illumination of the same ground scene, label propagation driven by data or additional Gaussian white noise is adopted. These solutions rely heavily on the data itself or the assumptions of the physical environment. Different from traditional methods, deep generative methods and transfer learning have strong capability in the learning of prior knowledges. And in the most case, the combination of these two kinds approaches are used.The other is to extract the more effective time-spatio-spectral features towards classification using novel deep neural networks on the limited labelled data. Such methods build or select advanced network structures, which perform well in computer vision fields, and the training process combine such training strategies as semi-supervised learning, ensemble learning and active learning strategies. In the practical applications, these mentioned solutions are jointed to obtain the optimum performance. Due to the exploration of various models, deep learning has shown its superiority in remote sensing technology and the performance exceeds the shallow model in general. Nonetheless, the physics-based deep learning approach and high-powered practicality are still worth studying.关键词:remote sensing classification;deep learning;deep generative model;semi-supervised learning;transfer learning61|93|15更新时间:2024-05-07

Scholar View

-

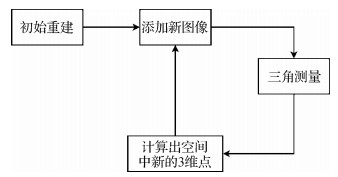

摘要:Light field imaging is an attractive technique for 3D visualization, especially in virtual and augmented reality application scenarios. This technique has also been applied to computer vision areas, such as depth estimation, 3D reconstruction, and object detection. However, light field data have put great pressure on cost-effective storage and transmission owing to the large data volume. The data format of light field is also relatively different from that of conventional images or videos. This difference has resulted in the inefficient compression of light field data by current coding tools designed for traditional images or videos. Thus, light field compression methods must be developed, especially from the perspective of cost-effective storage and transmission bandwidth. With the advancement of light field compression, various light field compression methods have been proposed. This study conducts a survey of related works on light field compression to provide a research foundation for later researchers who will focus on this topic. First, this study briefly introduces the fundamentals of light field and the four types of light field-capturing devices. The advantages and drawbacks of different types of capturing devices are presented accordingly. The influence of different capturing devices on light field data format is also described. Second, this work discusses the recent advances in JPEG Pleno, which is a standard framework for representing and signaling plenoptic modalities. JPEG Pleno was started in 2015 by the Joint Photographic Experts Group Committee. The term "pleno" is an abbreviation of "plenoptic, " which is a mathematical formulation to represent the information of a beam of light passing through an arbitrary point within a scene. JPEG Pleno proposes a light field-coding framework for the light field data acquired by a plenoptic camera or a high-density array of cameras. The JPEG Pleno light field encoder consists of three parts, with each part illustrated in detail. Lastly, on the basis of extensive literature research, the proposed light field compression methods are divided into three categories according to the characteristics of the coding algorithms, namely, transform, pseudo-sequence-based, and predictive coding approaches. We analyze and discuss the coding methods in each category. As for transform coding approaches, the coding performance is not better than those of the other two methods because transform coding approaches do not contain the prediction process. Although several transform methods can achieve good performance in terms of energy compaction, the decorrelation efficiency of transform methods is not as good as that of the hybrid coding framework that consists of prediction and transformation. As for pseudo-sequence-based coding approaches, the correlation in spatial or view domain is converted into temporal domain. Temporal correlation can be removed by inter-prediction techniques with the use of a well-developed video encoder, such as HEVC (high efficiency video coding) codec. The coding performance can be further improved because the disparity information is not used in the video encoder. As for the predictive coding approaches, they can be further divided into two methods: self-similarity-based coding methods, which were proposed in the last two years, and disparity prediction-based coding approaches. Self-similarity-based coding methods directly encode light field images by applying template-matching-based coding methods. However, the coding performance of this method is insufficient compared with that of disparity prediction-based coding approaches. The latter can achieve the best coding performance compared with other coding methods. JPEG Pleno applies such method to encode light field data. The advantages and shortcomings of existing light field-coding methods are elucidated on the basis of the preceding analysis, and possible promising directions for future research are suggested. First, light field video data sets to explore light field video coding are lacking. Second, the JPEG Pleno light field coding framework should be studied, and coding methods should be developed on the basis of this framework. Lastly, a few coding tools, such as depth estimation and view synthesis, should be improved. Light field compression is a popular research topic, and related research achievements, including standardization advances on JPEG Pleno, will attract increasing attention. Efficient compression of light field data remains a great challenge. Although many compression approaches are available for light field data, the coding performance still needs to be improved.关键词:light field;light field compression;light field imaging;JPEG Pleno;transform;pseudo-sequence53|62|2更新时间:2024-05-07

摘要:Light field imaging is an attractive technique for 3D visualization, especially in virtual and augmented reality application scenarios. This technique has also been applied to computer vision areas, such as depth estimation, 3D reconstruction, and object detection. However, light field data have put great pressure on cost-effective storage and transmission owing to the large data volume. The data format of light field is also relatively different from that of conventional images or videos. This difference has resulted in the inefficient compression of light field data by current coding tools designed for traditional images or videos. Thus, light field compression methods must be developed, especially from the perspective of cost-effective storage and transmission bandwidth. With the advancement of light field compression, various light field compression methods have been proposed. This study conducts a survey of related works on light field compression to provide a research foundation for later researchers who will focus on this topic. First, this study briefly introduces the fundamentals of light field and the four types of light field-capturing devices. The advantages and drawbacks of different types of capturing devices are presented accordingly. The influence of different capturing devices on light field data format is also described. Second, this work discusses the recent advances in JPEG Pleno, which is a standard framework for representing and signaling plenoptic modalities. JPEG Pleno was started in 2015 by the Joint Photographic Experts Group Committee. The term "pleno" is an abbreviation of "plenoptic, " which is a mathematical formulation to represent the information of a beam of light passing through an arbitrary point within a scene. JPEG Pleno proposes a light field-coding framework for the light field data acquired by a plenoptic camera or a high-density array of cameras. The JPEG Pleno light field encoder consists of three parts, with each part illustrated in detail. Lastly, on the basis of extensive literature research, the proposed light field compression methods are divided into three categories according to the characteristics of the coding algorithms, namely, transform, pseudo-sequence-based, and predictive coding approaches. We analyze and discuss the coding methods in each category. As for transform coding approaches, the coding performance is not better than those of the other two methods because transform coding approaches do not contain the prediction process. Although several transform methods can achieve good performance in terms of energy compaction, the decorrelation efficiency of transform methods is not as good as that of the hybrid coding framework that consists of prediction and transformation. As for pseudo-sequence-based coding approaches, the correlation in spatial or view domain is converted into temporal domain. Temporal correlation can be removed by inter-prediction techniques with the use of a well-developed video encoder, such as HEVC (high efficiency video coding) codec. The coding performance can be further improved because the disparity information is not used in the video encoder. As for the predictive coding approaches, they can be further divided into two methods: self-similarity-based coding methods, which were proposed in the last two years, and disparity prediction-based coding approaches. Self-similarity-based coding methods directly encode light field images by applying template-matching-based coding methods. However, the coding performance of this method is insufficient compared with that of disparity prediction-based coding approaches. The latter can achieve the best coding performance compared with other coding methods. JPEG Pleno applies such method to encode light field data. The advantages and shortcomings of existing light field-coding methods are elucidated on the basis of the preceding analysis, and possible promising directions for future research are suggested. First, light field video data sets to explore light field video coding are lacking. Second, the JPEG Pleno light field coding framework should be studied, and coding methods should be developed on the basis of this framework. Lastly, a few coding tools, such as depth estimation and view synthesis, should be improved. Light field compression is a popular research topic, and related research achievements, including standardization advances on JPEG Pleno, will attract increasing attention. Efficient compression of light field data remains a great challenge. Although many compression approaches are available for light field data, the coding performance still needs to be improved.关键词:light field;light field compression;light field imaging;JPEG Pleno;transform;pseudo-sequence53|62|2更新时间:2024-05-07 -

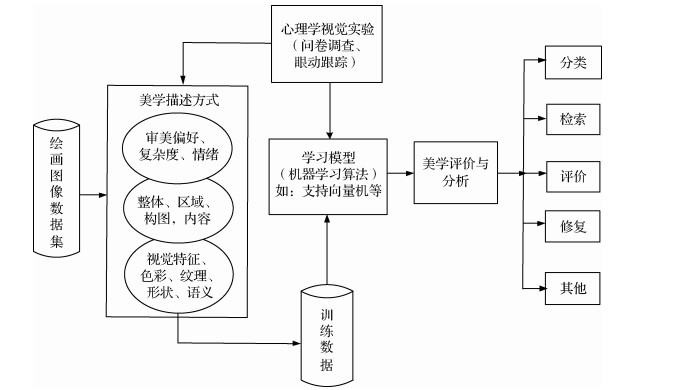

摘要:Aesthetics has been the subject of long-standing debates by philosophers and psychologists. In psychology, aesthetic experience is due to the interaction among perception, cognition, and emotion. This triad has been experimentally studied in the field of experimental aesthetics to obtain understanding of how aesthetic experience is related to the fundamental principles of human visual perception and brain processes. Recently, researchers in computer vision have gained interest in the topic, giving rise to the field of computational aesthetics. With computing hardware and methodology developing at a high pace, the modeling of perceptually relevant aspect of aesthetic stimuli has a huge potential. In the field of aesthetics, the image aesthetics is a popular issue in recent years. Image processing with computer scientists have, for a long time, attempted to solve image quality assessment and image semantics inference. The former deals primarily with the quantification of low-level perceptual degradation of an image, and the latter attempts to infer the content of an image and associate high level semantics to it in part or in whole. More recently, researchers have drawn ideas from the aforementioned methods to address more challenging problems such as associating pictures with aesthetics and emotions that they arouse in humans, with low-level image composition. Painting image is a kind of artistic work with emotion and aesthetics. By understanding the theme, the painter expresses his inner feelings in painting and passes them on to others. However, the artist's emotional communication is also affected and restricted by the audience's aesthetic ability. People's aesthetic and appreciation ability have a direct impact on the evaluation of painting. With the development and wide application of digital technology and network, people can obtain a large number of digital painting images through the Internet. Therefore, the aesthetic characteristics of painting images have become a research hotspot. At present, advanced image processing technology provides a theoretical basis and an effective method for aesthetic study of digital painting images and plays important roles in painting study and art protection. To better research the aesthetic characteristics of painting images, this study provides a comprehensive survey and analysis focusing on domestic and international research about painting aesthetics at present. Based on extensive literature research, this study first shows the different representation modes of Chinese and western paintings and analyzes the reason for such differences. This study also summarizes two methods of painting aesthetics, namely, experimental and computational aesthetics, and analyzes the correlation between the two methods. Experimental aesthetics provide abundant knowledge for computational aesthetics and also gains some quantitative information from computational aesthetics. Experimental aesthetics mainly study the specific attributes of works of art and can help us understand how aesthetic perception is related to human vision and how humans perceive the world. In the study of experimental aesthetics of painting images, researchers need to design aesthetic experiments for quantitative evaluation. The experiment mainly includes three steps: 1) preparing the sample set of painting images; 2) observing and evaluating the painting images by subjects; and 3) analyzing and studying the experimental results. The results of experimental aesthetics are mainly statistical data, such as the score of subjects to the painting image, the number and time of gaze, etc. These experimental data can be analyzed by statistical analytical methods, including variance analysis, correlation analysis, and principal component analysis, etc. Comparing with the subjective analysis in experimental aesthetics, the computational aesthetics of painting images are essentially objective, can avoid the influence of subjective will, and analyze detail features in the painting images. The purpose of the computational aesthetics research is to endow computer with the ability to assess the aesthetics value of images as human beings do. They mainly focus on the evaluation of image complexity (complex/uncomplicated), quality (high/low quality), visual preference (beautiful/not beautiful), and author or artistic style of the painting. Researchers can analyze a large number of painting images automatically by computational aesthetics. In the image classification model of computational aesthetics, data sets are generally divided into training and test data. Different capacities of data sets adopt different evaluation methods. Leave-One-Out is often used when the capacity is small, and K-fold cross-validation is used conversely. The evaluation indicators include precision, accuracy rate, recall rate, P-R (precision-recall) curve, F-measure, confusion matrix, and ROC (receiver operating characteristic) curve. This study sums up the painting database commonly used and briefly describes the sources, quantities, and characteristics of different databases. Based on the number of painting samples, the number of subjects, the quantification of aesthetic grade, and the eye movement indices, this study summarizes the status and development of experimental aesthetic research methods of Chinese and western painting. Based on classification category, features, number of painting samples, classification algorithm, and accuracy, this study presents several commonly machine learning algorithms of painting classification (including emotion, complexity, author and style etc.), and summarizes the research status and development of computational aesthetic research methods of Chinese and western painting.in detail. This study briefly reviews the commonly used evaluation methods in analyzing painting image aesthetics and evaluation indices. Finally, this study highlights the existing problems and challenges in the study of painting classification and affective analysis and discusses prospective solutions. Painting image aesthetics is an innovative and challenging research topic, which can be widely applied in the fields of classification of painting images, aesthetic evaluation of painting images, reconstruction and restoration of painting images, and historical and cultural research. Painting is a result of human creativity and pioneering civilization, so many excellent research ideas and methods have emerged. Through the comprehensive and systematic analysis of existing research, this article provides theoretical basis and exploration thoughts for future research on painting.关键词:Chinese and western paintings;experimental aesthetics;computational aesthetics;machine learning;evaluation method76|575|8更新时间:2024-05-07

摘要:Aesthetics has been the subject of long-standing debates by philosophers and psychologists. In psychology, aesthetic experience is due to the interaction among perception, cognition, and emotion. This triad has been experimentally studied in the field of experimental aesthetics to obtain understanding of how aesthetic experience is related to the fundamental principles of human visual perception and brain processes. Recently, researchers in computer vision have gained interest in the topic, giving rise to the field of computational aesthetics. With computing hardware and methodology developing at a high pace, the modeling of perceptually relevant aspect of aesthetic stimuli has a huge potential. In the field of aesthetics, the image aesthetics is a popular issue in recent years. Image processing with computer scientists have, for a long time, attempted to solve image quality assessment and image semantics inference. The former deals primarily with the quantification of low-level perceptual degradation of an image, and the latter attempts to infer the content of an image and associate high level semantics to it in part or in whole. More recently, researchers have drawn ideas from the aforementioned methods to address more challenging problems such as associating pictures with aesthetics and emotions that they arouse in humans, with low-level image composition. Painting image is a kind of artistic work with emotion and aesthetics. By understanding the theme, the painter expresses his inner feelings in painting and passes them on to others. However, the artist's emotional communication is also affected and restricted by the audience's aesthetic ability. People's aesthetic and appreciation ability have a direct impact on the evaluation of painting. With the development and wide application of digital technology and network, people can obtain a large number of digital painting images through the Internet. Therefore, the aesthetic characteristics of painting images have become a research hotspot. At present, advanced image processing technology provides a theoretical basis and an effective method for aesthetic study of digital painting images and plays important roles in painting study and art protection. To better research the aesthetic characteristics of painting images, this study provides a comprehensive survey and analysis focusing on domestic and international research about painting aesthetics at present. Based on extensive literature research, this study first shows the different representation modes of Chinese and western paintings and analyzes the reason for such differences. This study also summarizes two methods of painting aesthetics, namely, experimental and computational aesthetics, and analyzes the correlation between the two methods. Experimental aesthetics provide abundant knowledge for computational aesthetics and also gains some quantitative information from computational aesthetics. Experimental aesthetics mainly study the specific attributes of works of art and can help us understand how aesthetic perception is related to human vision and how humans perceive the world. In the study of experimental aesthetics of painting images, researchers need to design aesthetic experiments for quantitative evaluation. The experiment mainly includes three steps: 1) preparing the sample set of painting images; 2) observing and evaluating the painting images by subjects; and 3) analyzing and studying the experimental results. The results of experimental aesthetics are mainly statistical data, such as the score of subjects to the painting image, the number and time of gaze, etc. These experimental data can be analyzed by statistical analytical methods, including variance analysis, correlation analysis, and principal component analysis, etc. Comparing with the subjective analysis in experimental aesthetics, the computational aesthetics of painting images are essentially objective, can avoid the influence of subjective will, and analyze detail features in the painting images. The purpose of the computational aesthetics research is to endow computer with the ability to assess the aesthetics value of images as human beings do. They mainly focus on the evaluation of image complexity (complex/uncomplicated), quality (high/low quality), visual preference (beautiful/not beautiful), and author or artistic style of the painting. Researchers can analyze a large number of painting images automatically by computational aesthetics. In the image classification model of computational aesthetics, data sets are generally divided into training and test data. Different capacities of data sets adopt different evaluation methods. Leave-One-Out is often used when the capacity is small, and K-fold cross-validation is used conversely. The evaluation indicators include precision, accuracy rate, recall rate, P-R (precision-recall) curve, F-measure, confusion matrix, and ROC (receiver operating characteristic) curve. This study sums up the painting database commonly used and briefly describes the sources, quantities, and characteristics of different databases. Based on the number of painting samples, the number of subjects, the quantification of aesthetic grade, and the eye movement indices, this study summarizes the status and development of experimental aesthetic research methods of Chinese and western painting. Based on classification category, features, number of painting samples, classification algorithm, and accuracy, this study presents several commonly machine learning algorithms of painting classification (including emotion, complexity, author and style etc.), and summarizes the research status and development of computational aesthetic research methods of Chinese and western painting.in detail. This study briefly reviews the commonly used evaluation methods in analyzing painting image aesthetics and evaluation indices. Finally, this study highlights the existing problems and challenges in the study of painting classification and affective analysis and discusses prospective solutions. Painting image aesthetics is an innovative and challenging research topic, which can be widely applied in the fields of classification of painting images, aesthetic evaluation of painting images, reconstruction and restoration of painting images, and historical and cultural research. Painting is a result of human creativity and pioneering civilization, so many excellent research ideas and methods have emerged. Through the comprehensive and systematic analysis of existing research, this article provides theoretical basis and exploration thoughts for future research on painting.关键词:Chinese and western paintings;experimental aesthetics;computational aesthetics;machine learning;evaluation method76|575|8更新时间:2024-05-07

Review

-

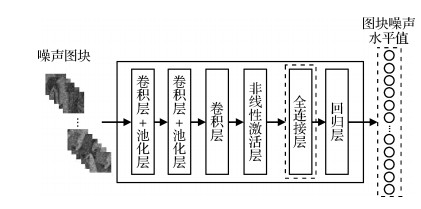

摘要:ObjectiveImage denoising is a fundamental but challenging problem in low-level vision and image processing. Most existing image-denoising methods can be classified as so-called non-blind approaches, which are assumed to work under the premise of the availability of noise level. Thus, their denoising performance highly depends on the accuracy of the noise level fed into them. In practice, however, noise level is always unknown beforehand. As a result, fast and accurate noise level estimation (NLE) is often necessary for blind image denoising. To date, training-based NLE methods using handcrafted features that reflect the distortion level of a noisy image, i.e., noise level-aware features (NLAFs), still suffer from the weak ability of feature description and the low accuracy of nonlinear mapping in NLAF extraction and noise level-mapping modules, respectively. To this end, an NLE algorithm that automatically extracts NLAFs with a convolutional neural network (CNN) and directly maps the NLAFs to their corresponding noise level using AdaBoost backpropagation (BP) neural network was proposed.MethodSubstantially clean images were first corrupted with Gaussian noise at different noise levels to form a set of noisy images. The noisy patches extracted from the noisy images and their corresponding noise levels were then fed into a CNN to train a CNN-based NLE model. However, the CNN-based NLE model directly used to obtain the noise level of a noisy image had poor estimation accuracy. The major reasons were as follows: 1) no strong correlation between most of the output values of the fully connected layer of the CNN-based NLE model and the noise levels, and 2) an inadequate nonlinear mapping ability of the regression layer used to predict noise level in the CNN-based NLE model. Therefore, the correlation between the output values of the fully connected layer and the ground truths was analyzed and then several outputs that had higher correlation coefficient with the ground-truth noise levels were selected as the NLAFs in the form of feature vector. With the support of the AdaBoost technique, multiple BP neural networks with relative weak mapping ability were combined to build a strong nonlinear mapping prediction model, i.e., enhanced BP network, and the obtained prediction model was used to map the extracted NLAFs to their corresponding noise level directly. In the prediction phase, given a noisy image to be denoised, several patches were first randomly extracted and then fed into the trained CNN-based NLE model. Next, several NLAFs were extracted from the fully connected layer of the CNN-based model. The extracted NLAFs were subsequently approximated to corresponding estimated noise levels via the enhanced BP neural network. Finally, the median value of the patch noise levels was taken as the final estimate of the entire image, which could effectively solve the over and underestimation problems and greatly improve the execution efficiency.ResultComparison experiments were conducted to test the validity of the proposed method from three aspects, namely, estimation accuracy, denoising effect, and execution efficiency. The proposed method was compared with several state-of-the-art NLE methods to demonstrate the estimation accuracy. The CNN-based NLE model used to automatically extract NLAFs in this work was also compared. These competing NLE methods were performed on two test image sets, namely, 1) 10 commonly used images, including Cameraman, House, Pepper, Monarch, Plane, Lena, Barbara, Couple, Man, and Boat; and 2) 50 textured images borrowed from the BSD database (different from training). For a fair comparison, all methods were implemented in the environment of MATLAB 2017b, which ran on Inter (R) Core (TM) i7-3770 CPU @ 3.4 GHz RAM 8 GB. For noisy images with different noise levels and texture structures, the estimation error between the noise levels estimated by the proposed method and the ground truths was less than 0.5, and the root mean square error between the noise levels estimated by the proposed method and the ground truths was less than 0.9 across different noise levels (i.e., 5, 15, 35, 55, 75, and 95). These results indicated satisfactory and robust estimation accuracy. In denoising comparison, noise levels different from ones used in the training phase, i.e., 7.5, 17.5, 37.5, 57.5, 77.5, and 97.5, were added to 10 commonly used clean images. The classic benchmark denoising algorithm, block matching and 3D filtering (BM3D), was adopted to restore noisy images of the test set. The peak signal-to-noise ratio results obtained by the BM3D algorithm fed into ground truths and estimated noise levels were nearly equal. The proposed NLE algorithm also had high execution efficiency and took only 13.9 ms to estimate the noise level for an image 512×512 pixels in size.ConclusionExperimental results demonstrate that the proposed NLE algorithm competes efficiently with the reference counterparts across different noise levels and image contents in terms of estimation accuracy and computational complexity. Unlike the previous training-based NLE algorithm with respect to NLAF extraction, the proposed algorithm is purely data-driven and does not rely on handcrafted features or other types of prior domain knowledge. These advantages make the proposed algorithm a preferable candidate for practical denoising. After the proposed NLE method is used as a preprocessing module, non-blind denoising algorithms can obtain good denoising performance when the noise level is required as the key parameter.关键词:noise level estimation (NLE);training-based approach;patch level;noise level-aware feature (NLAF);noise level mapping;median estimation scheme39|55|1更新时间:2024-05-07

摘要:ObjectiveImage denoising is a fundamental but challenging problem in low-level vision and image processing. Most existing image-denoising methods can be classified as so-called non-blind approaches, which are assumed to work under the premise of the availability of noise level. Thus, their denoising performance highly depends on the accuracy of the noise level fed into them. In practice, however, noise level is always unknown beforehand. As a result, fast and accurate noise level estimation (NLE) is often necessary for blind image denoising. To date, training-based NLE methods using handcrafted features that reflect the distortion level of a noisy image, i.e., noise level-aware features (NLAFs), still suffer from the weak ability of feature description and the low accuracy of nonlinear mapping in NLAF extraction and noise level-mapping modules, respectively. To this end, an NLE algorithm that automatically extracts NLAFs with a convolutional neural network (CNN) and directly maps the NLAFs to their corresponding noise level using AdaBoost backpropagation (BP) neural network was proposed.MethodSubstantially clean images were first corrupted with Gaussian noise at different noise levels to form a set of noisy images. The noisy patches extracted from the noisy images and their corresponding noise levels were then fed into a CNN to train a CNN-based NLE model. However, the CNN-based NLE model directly used to obtain the noise level of a noisy image had poor estimation accuracy. The major reasons were as follows: 1) no strong correlation between most of the output values of the fully connected layer of the CNN-based NLE model and the noise levels, and 2) an inadequate nonlinear mapping ability of the regression layer used to predict noise level in the CNN-based NLE model. Therefore, the correlation between the output values of the fully connected layer and the ground truths was analyzed and then several outputs that had higher correlation coefficient with the ground-truth noise levels were selected as the NLAFs in the form of feature vector. With the support of the AdaBoost technique, multiple BP neural networks with relative weak mapping ability were combined to build a strong nonlinear mapping prediction model, i.e., enhanced BP network, and the obtained prediction model was used to map the extracted NLAFs to their corresponding noise level directly. In the prediction phase, given a noisy image to be denoised, several patches were first randomly extracted and then fed into the trained CNN-based NLE model. Next, several NLAFs were extracted from the fully connected layer of the CNN-based model. The extracted NLAFs were subsequently approximated to corresponding estimated noise levels via the enhanced BP neural network. Finally, the median value of the patch noise levels was taken as the final estimate of the entire image, which could effectively solve the over and underestimation problems and greatly improve the execution efficiency.ResultComparison experiments were conducted to test the validity of the proposed method from three aspects, namely, estimation accuracy, denoising effect, and execution efficiency. The proposed method was compared with several state-of-the-art NLE methods to demonstrate the estimation accuracy. The CNN-based NLE model used to automatically extract NLAFs in this work was also compared. These competing NLE methods were performed on two test image sets, namely, 1) 10 commonly used images, including Cameraman, House, Pepper, Monarch, Plane, Lena, Barbara, Couple, Man, and Boat; and 2) 50 textured images borrowed from the BSD database (different from training). For a fair comparison, all methods were implemented in the environment of MATLAB 2017b, which ran on Inter (R) Core (TM) i7-3770 CPU @ 3.4 GHz RAM 8 GB. For noisy images with different noise levels and texture structures, the estimation error between the noise levels estimated by the proposed method and the ground truths was less than 0.5, and the root mean square error between the noise levels estimated by the proposed method and the ground truths was less than 0.9 across different noise levels (i.e., 5, 15, 35, 55, 75, and 95). These results indicated satisfactory and robust estimation accuracy. In denoising comparison, noise levels different from ones used in the training phase, i.e., 7.5, 17.5, 37.5, 57.5, 77.5, and 97.5, were added to 10 commonly used clean images. The classic benchmark denoising algorithm, block matching and 3D filtering (BM3D), was adopted to restore noisy images of the test set. The peak signal-to-noise ratio results obtained by the BM3D algorithm fed into ground truths and estimated noise levels were nearly equal. The proposed NLE algorithm also had high execution efficiency and took only 13.9 ms to estimate the noise level for an image 512×512 pixels in size.ConclusionExperimental results demonstrate that the proposed NLE algorithm competes efficiently with the reference counterparts across different noise levels and image contents in terms of estimation accuracy and computational complexity. Unlike the previous training-based NLE algorithm with respect to NLAF extraction, the proposed algorithm is purely data-driven and does not rely on handcrafted features or other types of prior domain knowledge. These advantages make the proposed algorithm a preferable candidate for practical denoising. After the proposed NLE method is used as a preprocessing module, non-blind denoising algorithms can obtain good denoising performance when the noise level is required as the key parameter.关键词:noise level estimation (NLE);training-based approach;patch level;noise level-aware feature (NLAF);noise level mapping;median estimation scheme39|55|1更新时间:2024-05-07 -

摘要:ObjectiveWith the development of high-density industrial economy, the air quality gradually declines and haze occurs frequently. Haze is an aerosol system formed by the interaction of human daily life and special climate. Large particles in the air could scatter and absorb light, resulting in image collected degradation, which seriously affects image post-analysis. In general, two kinds of algorithms, namely, physical model image defogging and image enhancement defogging, have been adopted to improve the effect of weather factors on image quality. The former is to construct atmospheric scattering model to compensate distortion and obtain a clear image. The common de-fogging algorithms based on the physical model of atmospheric scattering are dark channel prior de-fogging algorithm and polarization imaging de-fogging algorithm and so on. In the last few years, based on the atmospheric scattering model, experts from all over the world have studied the dark channel priori de-fogging. Based on the dark channel priori principle of image de-fogging, on the one hand, the algorithm cannot keep the edge information of the image in the region where the depth of field changes greatly, and a halo phenomenon occurs in the fog-free image; on the other hand, when the global atmospheric light value is close to the pixel luminance component of the foggy image, the color distortion will occur in the restored image. The latter is achieved by improving the quality of image details according to the characteristics of human vision. The representative algorithms include de-fogging algorithm based on histogram equalization, homomorphic filtering, wavelet transform, Retinex theory, and atmospheric modulation transfer function. The basic idea of histogram equalization-based de-fogging algorithm is to obtain uniform distribution of histogram and increase the contrast of the image. The homomorphic filtering based de-fogging algorithm divides the image into irradiating component and reflection component in the frequency domain and increases the contrast of the image by enhancing the high-frequency information of the image. The fog algorithm based on wavelet transform in time domain and frequency domain transformation locally can effectively extract information from the signal. The de-fogging algorithm based on Retinex theory describes color invariance because it has good effect on dynamic range compression, detail enhancement, color fidelity, and so on. The de-fogging algorithm based on the atmospheric modulation transfer function predicts the corresponding upflow transfer function and aerosol transfer function through the formula, obtains the atmospheric modulation transfer function from the product of the two, and then recovers the degraded image in the frequency domain. The attenuation caused by the atmospheric modulation function is compensated. The MSRCR algorithm considers the ratio between the trichromatic channels of the image, so the color distortion should be eliminated to enhance the local detail to a certainextent.However, the time complexity of the algorithm is high, and the operation is complex. However, the following problems persist: the high light region and the thick foggy areas of the images acquired under the fog condition, the inaccurate transmittance calculation often results in detail loss of restored image, halo phenomenon, and contrast and color that cannot meet human visual characteristics. This paper proposes an image defogging algorithm combining GF-MSRCR with dark channel prior.MethodWeighted quad tree method is adopted to fast search the minimum channel graph to obtain the global atmospheric light value. The GF-MSRCR algorithm is used to preliminarily estimate the transmittance for the image enhancement. According to the dark channel prior theory, the minimum channel graph is estimated again. The pixel fusion is operated on the two above results with a certain proportion to determine the transmittance estimation value, which is further modified by variation function and by median filtering to acquire the precise transmittance value. Finally, the atmospheric scattering model is used to restore the foggy image and obtain haze-removed image with complete contour and clear details after contrast and color correction.ResultA computer with a lab platform of Intel (R) Core (TM) i5-7300HQ CPU @ 2.50 GHz 8 GB RAM is used, and the lab environment is MATLAB R2015b under Windows 10. Four types of fog sky images including close-range, small part sky area, large sky area and white object scene are defogged. Theoretical and experimental results show that on one hand, more edge information and local details in the image could be preserved by the proposed algorithm; moreover, color could be restored with high fidelity. With the aid of modification of variation function on scene transmittance and the smooth and optimization of median filtering, the algorithm could accurately process bright areas, such as sky, and retain more edge details. After the restoration and adjustment of the close-range image, the contrast and hue are well restored. After the image restoration and adjustment in the small sky area, the visual effect is better. After image restoration and adjustment, the contrast and color of sky region are more natural. After the white object image is restored and adjusted, the clarity and color can satisfy the visual characteristics of the human eye. When the proposed algorithm is applied to an image, the subjective visual effect of fog removal becomes evident. Five evaluation indicators, namely, information entropy, contrast, structural similarity, average gradient, and running time, are used to compare the image defogging quality of different algorithms. In particular, the running time obviously decreases by 53.22% with increases in the information entropy by 7.87%, contrast by 21.95%, average gradient by 47.73%, and structural similarity by 15.58%. The algorithm shows good restoration results for scene images containing fog close shot, a small sky area, a large sky area, or white objects.ConclusionThe image dehazing algorithm fusing GF-MSRCR and dark channel prior could quickly and effectively retain image details, eliminate halo, and satisfy human visual characteristics. The algorithm possesses certain practicability and universality. Future research would capture fog images in more complex scenes and restore foggy images.关键词:weighted quad tree;GF-MSRCR;dark channel prior;image fusion;variation function;median filtering145|156|6更新时间:2024-05-07

摘要:ObjectiveWith the development of high-density industrial economy, the air quality gradually declines and haze occurs frequently. Haze is an aerosol system formed by the interaction of human daily life and special climate. Large particles in the air could scatter and absorb light, resulting in image collected degradation, which seriously affects image post-analysis. In general, two kinds of algorithms, namely, physical model image defogging and image enhancement defogging, have been adopted to improve the effect of weather factors on image quality. The former is to construct atmospheric scattering model to compensate distortion and obtain a clear image. The common de-fogging algorithms based on the physical model of atmospheric scattering are dark channel prior de-fogging algorithm and polarization imaging de-fogging algorithm and so on. In the last few years, based on the atmospheric scattering model, experts from all over the world have studied the dark channel priori de-fogging. Based on the dark channel priori principle of image de-fogging, on the one hand, the algorithm cannot keep the edge information of the image in the region where the depth of field changes greatly, and a halo phenomenon occurs in the fog-free image; on the other hand, when the global atmospheric light value is close to the pixel luminance component of the foggy image, the color distortion will occur in the restored image. The latter is achieved by improving the quality of image details according to the characteristics of human vision. The representative algorithms include de-fogging algorithm based on histogram equalization, homomorphic filtering, wavelet transform, Retinex theory, and atmospheric modulation transfer function. The basic idea of histogram equalization-based de-fogging algorithm is to obtain uniform distribution of histogram and increase the contrast of the image. The homomorphic filtering based de-fogging algorithm divides the image into irradiating component and reflection component in the frequency domain and increases the contrast of the image by enhancing the high-frequency information of the image. The fog algorithm based on wavelet transform in time domain and frequency domain transformation locally can effectively extract information from the signal. The de-fogging algorithm based on Retinex theory describes color invariance because it has good effect on dynamic range compression, detail enhancement, color fidelity, and so on. The de-fogging algorithm based on the atmospheric modulation transfer function predicts the corresponding upflow transfer function and aerosol transfer function through the formula, obtains the atmospheric modulation transfer function from the product of the two, and then recovers the degraded image in the frequency domain. The attenuation caused by the atmospheric modulation function is compensated. The MSRCR algorithm considers the ratio between the trichromatic channels of the image, so the color distortion should be eliminated to enhance the local detail to a certainextent.However, the time complexity of the algorithm is high, and the operation is complex. However, the following problems persist: the high light region and the thick foggy areas of the images acquired under the fog condition, the inaccurate transmittance calculation often results in detail loss of restored image, halo phenomenon, and contrast and color that cannot meet human visual characteristics. This paper proposes an image defogging algorithm combining GF-MSRCR with dark channel prior.MethodWeighted quad tree method is adopted to fast search the minimum channel graph to obtain the global atmospheric light value. The GF-MSRCR algorithm is used to preliminarily estimate the transmittance for the image enhancement. According to the dark channel prior theory, the minimum channel graph is estimated again. The pixel fusion is operated on the two above results with a certain proportion to determine the transmittance estimation value, which is further modified by variation function and by median filtering to acquire the precise transmittance value. Finally, the atmospheric scattering model is used to restore the foggy image and obtain haze-removed image with complete contour and clear details after contrast and color correction.ResultA computer with a lab platform of Intel (R) Core (TM) i5-7300HQ CPU @ 2.50 GHz 8 GB RAM is used, and the lab environment is MATLAB R2015b under Windows 10. Four types of fog sky images including close-range, small part sky area, large sky area and white object scene are defogged. Theoretical and experimental results show that on one hand, more edge information and local details in the image could be preserved by the proposed algorithm; moreover, color could be restored with high fidelity. With the aid of modification of variation function on scene transmittance and the smooth and optimization of median filtering, the algorithm could accurately process bright areas, such as sky, and retain more edge details. After the restoration and adjustment of the close-range image, the contrast and hue are well restored. After the image restoration and adjustment in the small sky area, the visual effect is better. After image restoration and adjustment, the contrast and color of sky region are more natural. After the white object image is restored and adjusted, the clarity and color can satisfy the visual characteristics of the human eye. When the proposed algorithm is applied to an image, the subjective visual effect of fog removal becomes evident. Five evaluation indicators, namely, information entropy, contrast, structural similarity, average gradient, and running time, are used to compare the image defogging quality of different algorithms. In particular, the running time obviously decreases by 53.22% with increases in the information entropy by 7.87%, contrast by 21.95%, average gradient by 47.73%, and structural similarity by 15.58%. The algorithm shows good restoration results for scene images containing fog close shot, a small sky area, a large sky area, or white objects.ConclusionThe image dehazing algorithm fusing GF-MSRCR and dark channel prior could quickly and effectively retain image details, eliminate halo, and satisfy human visual characteristics. The algorithm possesses certain practicability and universality. Future research would capture fog images in more complex scenes and restore foggy images.关键词:weighted quad tree;GF-MSRCR;dark channel prior;image fusion;variation function;median filtering145|156|6更新时间:2024-05-07

Image Processing and Coding

-

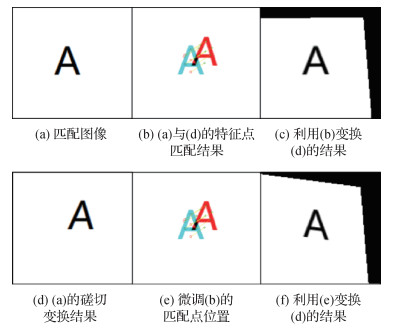

摘要:ObjectiveVisual target tracking is an important issue in machine vision. Its core tasks are to locate the target in a continuous video sequence and estimate the target's motion trajectory. This method has been widely used in many fields, such as human-computer interaction, security monitoring, automatic driving, navigation, and positioning. Through extensive research by domestic and foreign experts in recent years, visual target-tracking technology has gradually matured. However, tracking targets accurately in complex scenes, such as intense illumination change, occlusion, deformation, scale change, and background clutter, remains a challenging task. Visual target-tracking algorithms can be divided into two categories, namely, generative and discriminative tracking methods. Generative tracking converts the tracking problem into the nearest neighbor search task of the target model, constructs the target model by using a template or sparse representation in the subspace, and achieves target tracking by searching for the most similar region in the target model. Discriminant tracking treats the tracking problem as a binary classification problem.The target is separated from the background by training the classifier to achieve target tracking. Given that the generated visual target-tracking algorithm needs to construct a complex target appearance model, its computational complexity is high, and its algorithm has poor real-time performance. Discriminant tracking algorithm uses samples of the target and surrounding background to train a classifier online and achieves target tracking by detecting and tracking. Its classifier obtains considerable background information during training. Thus, this method can distinguish foreground and background better and its performance is generally better than that of the generative tracking method. Correlation-filtering algorithm is an algorithm with better performance than discriminant tracking algorithm. The traditional correlation-filtering algorithm introduces the concept of dense sampling and uses cyclically shifted samples of the base samples as training samples, which greatly improve the classification ability of the filter. The introduction of kernel strategy maps the linear regression problem of the ridge to the nonlinear space and uses the discrete Fourier transform to transform the time-domain calculation into the frequency-domain calculation, which greatly reduces algorithm complexity. Although traditional correlation-filtering algorithm has many advantages, it also has shortcomings.MethodFirst, this algorithm uses false negative samples generated by the cyclic shift to train a classifier, which limits the classifier's classification ability. Second, several incorrect samples (predicted target images) caused by occlusion are used to update the classifier when the target is seriously occluded. With an increase in occlusion time, the classifier will contain considerable noise information and gradually lose discrimination, which causes tracking failure.Aiming to address the above problems, this study proposes a long-term target-tracking algorithm based on a perceptual model. The algorithm introduces the background perceptual strategy to solve the problem of traditional correlation filtering lacking real negative samples and the occlusion-sensing strategy to effectively track the occluded target. The proposed algorithm first increases the number of training samples by enlarging the sampling area. A cropping matrix is then introduced into the algorithm to crop shifted samples and obtain complete and valid samples.This method overcomes the boundary effect problem caused by cyclically shifted samples. A classification pool is subsequently constructed by using the corresponding classifiers of a certain number of frames in the case of no occlusion. In the case of severe occlusion, the optimal classifier is finally selected from the classification pool by minimizing the energy function for redetection to achieve long-term target tracking.ResultThe performance of the proposed algorithm is evaluated by using a public data set. The proposed algorithm has a success rate of 0.990 and an accuracy of 0.988. These values are respectively 2.7% and 2.5% higher than those of the background-aware correlation filter algorithm. The overall success rate and accuracy of the proposed algorithm are considerably higher than those of other algorithms because of the introduction of background and occlusion perception strategies. The tracking accuracy for a single sequence is also higher. However, other algorithms have certain advantages in specific scenarios, and the proposed algorithm does not rank first in the accuracy and success rate of each sequence. The time complexity of the algorithm is slightly higher and the real-time performance is insufficient because of the introduction of perception module.ConclusionExperiments show that the proposed algorithm can accurately track a target under complex conditions, such as severe occlusion, scale change, and target deformation and has certain research value.关键词:target tracking;circular convolution;background perception;heavy occlusion;classification pool17|21|1更新时间:2024-05-07

摘要:ObjectiveVisual target tracking is an important issue in machine vision. Its core tasks are to locate the target in a continuous video sequence and estimate the target's motion trajectory. This method has been widely used in many fields, such as human-computer interaction, security monitoring, automatic driving, navigation, and positioning. Through extensive research by domestic and foreign experts in recent years, visual target-tracking technology has gradually matured. However, tracking targets accurately in complex scenes, such as intense illumination change, occlusion, deformation, scale change, and background clutter, remains a challenging task. Visual target-tracking algorithms can be divided into two categories, namely, generative and discriminative tracking methods. Generative tracking converts the tracking problem into the nearest neighbor search task of the target model, constructs the target model by using a template or sparse representation in the subspace, and achieves target tracking by searching for the most similar region in the target model. Discriminant tracking treats the tracking problem as a binary classification problem.The target is separated from the background by training the classifier to achieve target tracking. Given that the generated visual target-tracking algorithm needs to construct a complex target appearance model, its computational complexity is high, and its algorithm has poor real-time performance. Discriminant tracking algorithm uses samples of the target and surrounding background to train a classifier online and achieves target tracking by detecting and tracking. Its classifier obtains considerable background information during training. Thus, this method can distinguish foreground and background better and its performance is generally better than that of the generative tracking method. Correlation-filtering algorithm is an algorithm with better performance than discriminant tracking algorithm. The traditional correlation-filtering algorithm introduces the concept of dense sampling and uses cyclically shifted samples of the base samples as training samples, which greatly improve the classification ability of the filter. The introduction of kernel strategy maps the linear regression problem of the ridge to the nonlinear space and uses the discrete Fourier transform to transform the time-domain calculation into the frequency-domain calculation, which greatly reduces algorithm complexity. Although traditional correlation-filtering algorithm has many advantages, it also has shortcomings.MethodFirst, this algorithm uses false negative samples generated by the cyclic shift to train a classifier, which limits the classifier's classification ability. Second, several incorrect samples (predicted target images) caused by occlusion are used to update the classifier when the target is seriously occluded. With an increase in occlusion time, the classifier will contain considerable noise information and gradually lose discrimination, which causes tracking failure.Aiming to address the above problems, this study proposes a long-term target-tracking algorithm based on a perceptual model. The algorithm introduces the background perceptual strategy to solve the problem of traditional correlation filtering lacking real negative samples and the occlusion-sensing strategy to effectively track the occluded target. The proposed algorithm first increases the number of training samples by enlarging the sampling area. A cropping matrix is then introduced into the algorithm to crop shifted samples and obtain complete and valid samples.This method overcomes the boundary effect problem caused by cyclically shifted samples. A classification pool is subsequently constructed by using the corresponding classifiers of a certain number of frames in the case of no occlusion. In the case of severe occlusion, the optimal classifier is finally selected from the classification pool by minimizing the energy function for redetection to achieve long-term target tracking.ResultThe performance of the proposed algorithm is evaluated by using a public data set. The proposed algorithm has a success rate of 0.990 and an accuracy of 0.988. These values are respectively 2.7% and 2.5% higher than those of the background-aware correlation filter algorithm. The overall success rate and accuracy of the proposed algorithm are considerably higher than those of other algorithms because of the introduction of background and occlusion perception strategies. The tracking accuracy for a single sequence is also higher. However, other algorithms have certain advantages in specific scenarios, and the proposed algorithm does not rank first in the accuracy and success rate of each sequence. The time complexity of the algorithm is slightly higher and the real-time performance is insufficient because of the introduction of perception module.ConclusionExperiments show that the proposed algorithm can accurately track a target under complex conditions, such as severe occlusion, scale change, and target deformation and has certain research value.关键词:target tracking;circular convolution;background perception;heavy occlusion;classification pool17|21|1更新时间:2024-05-07 -

摘要:ObjectiveThe development and progress of science and technology have made it possible to obtain numerous images from imaging equipment, the Internet, or image databases and have increased people's requirements for image processing. Consequently, image-processing technology has been deeply, widely, and rapidly developed. Target detection is an important research content in the field of computer vision. Rapid and accurate positioning and recognition of specific targets in uncontrolled natural scenes are vital functional bases of many artificial intelligence application scenes. However, several major difficulties presently exist in the field of target detection. First, many small objects are widely distributed in visual scenes. The existence of these small objects challenges the agility and reliability of detection algorithms. Second, detection accuracy and speed are linked, and many technical bottle necks must be overcome to consider the performance of these two factors. Finally, large-scale model parameters are an important reason restricting the loading of deep network chips. The compression of model size while ensuring detection accuracy is a meaningful and urgent problem. Targets with simple background, sufficient illumination, and no occlusion are relatively easy to detect, whereas targets with mixed background and target, occlusion near the target, excessively weak illumination intensity, or diverse target posture are difficult to detect. In natural scene images, the quality of feature extraction is the key factor to determine the performance of target detection. Decades of research have resulted in a more robust detection algorithm. Deep learning technology in the field of computer vision has also achieved great breakthroughs in recent years. Target detection framework based on deep learning has become the mainstream, and two main branches of target detection algorithms based on candidate regions and regression have been derived. Most of the current detection algorithms use the powerful learning ability of convolutional neural networks (CNNs) to obtain the prior knowledge of the target and perform target detection according to such knowledge. The low-level features of convolutional neural networks are characterized by high resolution ratio, low abstract semantics, limited position information, and lack of representation of features. High-level features are characterized by high identification, low resolution ratio, and a weak ability to detect small-scale targets. Therefore, in this study, the semantic information of context is transmitted by combining high- and low-level feature graphs to make the semantic information complete and evenly distributed.MethodWhile balancing detection speed and accuracy, the multiscale feature graph fusion target detection algorithm in this study takes a single-shot multibox detector (SSD) network structure as the basic network and adds a feature fusion module to obtain feature graphs with rich semantic information and uniform distribution. The speech information of feature graphs on different levels is transmitted from top to bottom by feature fusion structure to reduce the semantic difference among feature graphs at different levels. The original SSD network is first used to extract a feature graph, which is then unified into 256 channels through a 1×1 convolution layer. The spatial resolution of the top-down feature maps is subsequently increased by deconvolution. Hence, the feature graph coming from two directions has the same spatial resolution. Feature graphs in both directions are then fused to obtain feature graphs with complete semantic information and uniform distribution by adding corresponding elements. The fused feature graph is convolved with a 3×3 convolution kernel to reduce the aliasing effect of the fused feature graph. A feature graph with strong semantic information is constructed according to the abovementioned steps, and the details of the original feature graph are retained. Lastly, the predicted bounding boxes are aggregated and non maximum suppression is used to achieve the final detection results.ResultKey problems in the practical application of target detection algorithms and difficult problems in related target detection are analyzed according to the research progress and task requirements of visual target detection-related technology. Current solutions are also given. The target detection algorithm based on multiscale feature graph fusion in this study can achieve good results when dealing with weak targets, multiple targets, messy background, occlusion, and other detection difficulties. Experimental tests are performed on PASCAL VOC 2007 and 2012 data sets. The mean average precision values of the proposed model are 78.9% and 76.7%, which are 1.4 and 0.9 percentage points higher than those of the classical SSD algorithm, respectively. In addition, the method in this paper improves by 8.3% mAP compared with the classical SSD model when detecting small-scale targets. Compared with the classical SSD model, the method proposed in this study significantly improves the detection effect when detecting small-scale targets.ConclusionThe multiscale feature graph fusion target detection algorithm proposed in this study uses convolutional neural network to extract convolutional features instead of the traditional manual feature extraction process, thereby expanding semantic information in a top-down manner and constructing a high-strength semantic feature graph. The model can be used to detect new scene images with strong visual task. In combination with the idea of deep learning convolutional neural network, the convolution feature is used to replace the traditional manual feature, thus avoiding the problem of feature selection in the traditional detection problem. The deep convolution feature has improved expressive ability. The target detection model of multiscale feature map fusion is finally obtained through repeated iteration training on the basis of the SSD network. The detection model has good detection effect for small-scale target detection tasks. While realizing end-to-end training of detection algorithm, the model also improves its robustness to various complex scenes and the accuracy of target detection. Therefore, accurate target detection is achieved. This study provides a general and concise way to solve the problem of small-scale target detection.关键词:computer vision;deep learning;convolutional neural network(CNN);target detection;multiscale characteristic map44|78|9更新时间:2024-05-07